Summary

Estimating depth from binocular disparity is extremely precise and the cue does not depend on statistical regularities in the environment. Thus, disparity is commonly regarded as the best visual cue for determining 3D layout. But depth from disparity is only precise near where one is looking; it is quite imprecise elsewhere [1-4]. To overcome this imprecision away from fixation, vision resorts to using other depth cues—e.g., linear perspective, familiar size, aerial perspective. But those cues depend on statistical regularities in the environment and are therefore not always reliable [5]. Depth from defocus blur relies on fewer assumptions and has the same geometric constraints as disparity [6], but different physiological constraints [7-14]. Hence, blur could in principle fill in the parts of visual space where disparity is imprecise [15]. We tested this possibility with a depth-discrimination experiment. We found that disparity was more precise near fixation and that blur was indeed more precise away from fixation. When both cues were available, observers relied on the more informative one. Blur appears to play an important, previously unrecognized [16,17] role in depth perception. Our findings lead to a new hypothesis about the evolution of slit-shaped pupils and have noteworthy implications for the design and implementation of stereo 3D viewing systems.

Results

Assume an observer fixates and focuses on a point at distance z0 (Figure 1A-C). Another point at z1 is imaged onto the two retinae. Horizontal disparity is the horizontal difference in the projected positions of that point and is determined by distances z0 and z1 and some eye parameters:

$$d = Is\left(\frac{1}{z_0} - \frac{1}{z_1}\right) \tag{1}$$

where d is in units of distance, I is inter-ocular distance, and s is the distance between the eye’s optical center and the retina. Using the small-angle approximation to convert into radians and rearranging:

$$\delta \approx \frac{d}{s} = I\left(\frac{1}{z_0} - \frac{1}{z_1}\right) \tag{2}$$
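As a quick numerical check on Equations 1 and 2, the Python sketch below evaluates the small-angle disparity δ ≈ I(1/z0 − 1/z1) and compares it with the exact difference in vergence angle for points straight ahead. The 6.2 cm interocular distance is an assumed typical value (the text does not give one), the 27.5 cm fixation distance is the one used in the experiment, and the function names are illustrative only.

```python
import math

RAD_TO_ARCMIN = 180 / math.pi * 60


def disparity_rad(z0: float, z1: float, iod: float) -> float:
    """Small-angle disparity (Equation 2): delta ~= I * (1/z0 - 1/z1).

    Distances in metres. Positive when z1 is farther than fixation.
    """
    return iod * (1.0 / z0 - 1.0 / z1)


def disparity_exact_rad(z0: float, z1: float, iod: float) -> float:
    """Exact difference in vergence angle for two points on the midline."""

    def vergence(z: float) -> float:
        return 2.0 * math.atan(iod / (2.0 * z))

    return vergence(z0) - vergence(z1)


IOD = 0.062  # assumed interocular distance (m); not stated in the text
Z0 = 0.275   # fixation distance used in the experiment (m)

for z1 in (0.295, 0.335, 0.395):
    approx = disparity_rad(Z0, z1, IOD) * RAD_TO_ARCMIN
    exact = disparity_exact_rad(Z0, z1, IOD) * RAD_TO_ARCMIN
    print(f"z1 = {z1:.3f} m: approx {approx:.1f} arcmin, exact {exact:.1f} arcmin")
```

Over this range the two values agree to within a couple of percent, so the small-angle form loses little accuracy.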

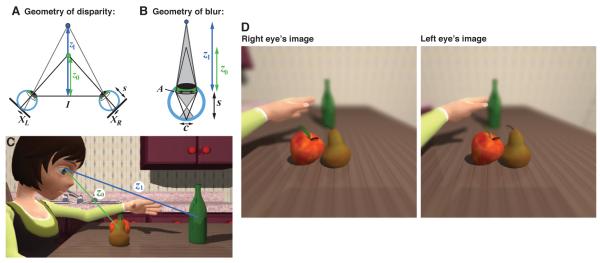

Figure 1.

Geometry of disparity and blur. A. Two eyes separated by interocular distance I fixate at a distance z0. The object at distance z1 projects to locations indicated by XL and XR on the two retinas. Disparity is XL - XR. B. An eye is focused at distance z0. Objects at other distances will be blurred on the retina. The object at distance z1 is blurred over a circular region with diameter c. The edges of the blur circle are geometrically analogous to the projections of z1 on the two retinas in A. C. Side view of a person fixating and focusing at an object at distance z0 while reaching for another object at distance z1. D. Cross-fusable stereo pair of the observer’s point of view in C. 3D models for C and D were created with AutoDesk Maya, using objects from [18] and The Andy Rig (http://studentpages.scad.edu/~jdoubl20/rigsScripts.html).

We define blur as the diameter of the circle over which the point at z1 is imaged at the retina. The blur-circle size in radians is determined by distances z0 and z1 and some eye parameters [6]:

$$\beta \approx A\left|\frac{1}{z_0} - \frac{1}{z_1}\right| \tag{3}$$

where A is pupil diameter. The analysis summarized by Equation 3 incorporates geometric blur due to defocus but not blur due to diffraction and higher-order aberrations [19]. Incorporating diffraction and aberrations would yield more blur, but only for object distances at or very close to the focal distance. We are most interested in blur caused by significant defocus, where geometric blur is the dominant source [20].
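A minimal sketch of Equation 3, assuming distances and pupil diameter in metres: it computes the geometric blur-circle diameter for a point at 39.5 cm (the farthest standard distance in the experiment) while focused at 27.5 cm, for a few pupil sizes. Only the 4.5 mm value corresponds to the artificial pupils used in the experiment; 3 and 6 mm are illustrative. The last lines show that equal dioptric steps in front of and behind the focal distance produce identical blur, which is the sign ambiguity discussed in the Discussion.

```python
import math

RAD_TO_ARCMIN = 180 / math.pi * 60


def blur_rad(z0: float, z1: float, pupil: float) -> float:
    """Geometric blur-circle diameter in radians (Equation 3).

    beta ~= A * |1/z0 - 1/z1|, with distances and pupil diameter in metres.
    Diffraction and higher-order aberrations are ignored, as in the text.
    """
    return pupil * abs(1.0 / z0 - 1.0 / z1)


Z0 = 0.275  # focal distance used in the experiment (m)
for pupil_mm in (3.0, 4.5, 6.0):  # 4.5 mm matches the artificial pupils; others illustrative
    b = blur_rad(Z0, 0.395, pupil_mm / 1000) * RAD_TO_ARCMIN
    print(f"A = {pupil_mm} mm: blur of a point at 39.5 cm = {b:.2f} arcmin")

# Defocus blur carries no sign: equal dioptric steps nearer and farther give equal blur.
near = 1.0 / (1.0 / Z0 + 0.5)
far = 1.0 / (1.0 / Z0 - 0.5)
print(math.isclose(blur_rad(Z0, near, 0.0045), blur_rad(Z0, far, 0.0045)))
```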

δ and β are proportional to the difference between the reciprocals of z0 and z1 (i.e., the difference in diopters). Disparity and blur have very similar dependencies on scene layout because both are based on triangulation: disparity derives from the different positions of the two eyes and blur from light rays entering different parts of the pupil. Combining Equations 2 and 3 yields the relationship between disparity and blur for z1:

$$\beta \approx \frac{A}{I}\,|\delta| \tag{4}$$

The ratio A/I is ~1/12 for typical steady-state viewing situations [6,21], so the magnitude of blur is generally much smaller than that of disparity. But this does not mean that depth estimation from blur is necessarily less precise than depth from disparity, because relative precision is also dependent on how the cues are processed physiologically.
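The proportionality in Equation 4 can be checked numerically. In this sketch the pupil diameter is set to I/12 to match the ratio cited above, and the 6.2 cm interocular distance is an assumption; blur computed directly from Equation 3 should equal (A/I)|δ| computed from Equation 2.

```python
import math

RAD_TO_ARCMIN = 180 / math.pi * 60


def disparity_rad(z0, z1, iod):
    """Equation 2: delta ~= I * (1/z0 - 1/z1)."""
    return iod * (1.0 / z0 - 1.0 / z1)


def blur_rad(z0, z1, pupil):
    """Equation 3: beta ~= A * |1/z0 - 1/z1|."""
    return pupil * abs(1.0 / z0 - 1.0 / z1)


def blur_from_disparity(delta, pupil, iod):
    """Equation 4: beta ~= (A / I) * |delta|."""
    return (pupil / iod) * abs(delta)


IOD = 0.062          # assumed interocular distance (m)
PUPIL = IOD / 12     # chosen so that A/I = 1/12, the typical ratio cited in the text
z0, z1 = 0.275, 0.395

delta = disparity_rad(z0, z1, IOD)
beta = blur_rad(z0, z1, PUPIL)
print(f"disparity {delta * RAD_TO_ARCMIN:.1f} arcmin, blur {beta * RAD_TO_ARCMIN:.1f} arcmin")
print(math.isclose(beta, blur_from_disparity(delta, PUPIL, IOD)))
```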

The just-noticeable change in disparity is very small (~10arcsec) at fixation, but increases dramatically in front of and behind fixation [1]. To reduce computational load, the visual cortex has many neurons with small receptive fields devoted to encoding small disparities (near fixation) and fewer neurons with large receptive fields for encoding large disparities (far from fixation) [7-8]. This strategy is manifest in the size-disparity correlation [10,11]. The just-noticeable change in blur does not increase rapidly with base blur [12]. Not much is known about how the visual system encodes blur, but models have been developed that rely on pooling the responses of spatial-frequency-selective filters or neurons [13,14]. One such model can, with few filters, achieve near-constant precision across a wide range of defocus levels [14]. Thus, the computational load for encoding changes in blur for different amounts of base blur may be relatively low, allowing the visual system to maintain roughly equal precision across a wide range of blurs. From these considerations, we hypothesize that depth from blur is more precise than depth from disparity for the parts of visual space in front of and behind where one is looking [15]. Such complementarity could be involved in conscious perception of depth and in programming of motor behavior such as eye movements and reaching.
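To connect angular thresholds with depth thresholds such as those plotted in Figure 2B, differentiate Equation 2: dδ/dz1 = I/z1², so near a distance z1 a disparity change Δδ corresponds to a depth change of roughly Δz ≈ z1²Δδ/I. Equation 3 gives the same form for blur, with A in place of I. The sketch applies this first-order conversion to the ~10 arcsec disparity threshold quoted above, assuming a 6.2 cm interocular distance; the 1 arcmin blur threshold is hypothetical and only illustrates the conversion. The conversion by itself does not capture how the angular thresholds change with base disparity or base blur.

```python
import math

ARCSEC = math.pi / (180 * 3600)  # one arcsecond in radians
ARCMIN = 60 * ARCSEC


def depth_jnd(z1: float, angular_jnd: float, baseline: float) -> float:
    """First-order depth increment at z1 that changes the cue by angular_jnd.

    baseline is the interocular distance I for disparity or the pupil
    diameter A for blur; both cues scale as baseline * (1/z0 - 1/z1).
    """
    return z1 ** 2 * angular_jnd / baseline


IOD = 0.062      # assumed interocular distance (m)
PUPIL = 0.0045   # artificial-pupil diameter used in the experiment (m)
z = 0.275        # fixation distance (m)

dz_disp = depth_jnd(z, 10 * ARCSEC, IOD)
print(f"disparity: {dz_disp * 1000:.3f} mm for a 10 arcsec threshold")

# Hypothetical blur threshold of 1 arcmin, only to show the same conversion for blur.
dz_blur = depth_jnd(z, 1 * ARCMIN, PUPIL)
print(f"blur: {dz_blur * 1000:.2f} mm for a hypothetical 1 arcmin threshold")
```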

We tested the complementarity hypothesis in a psychophysical experiment. Subjects indicated which of two stimuli appeared farther in three conditions: 1) blur alone (monocular viewing of stimuli whose focal distance varied); 2) disparity alone (binocular viewing of stimuli whose focal distance was the same); and 3) disparity and blur (binocular viewing of stimuli whose focal distance varied). To do this, we used a unique stereoscopic, volumetric display developed in our laboratory [22]. This display allows the presentation of correct focus cues over a range of distances; without it, the current study would not have been possible. In the apparatus, blur in the retinal image is created solely by the differences between the subject’s focus distance and the stimulus distance (i.e., not by rendering blurred images on a display screen).

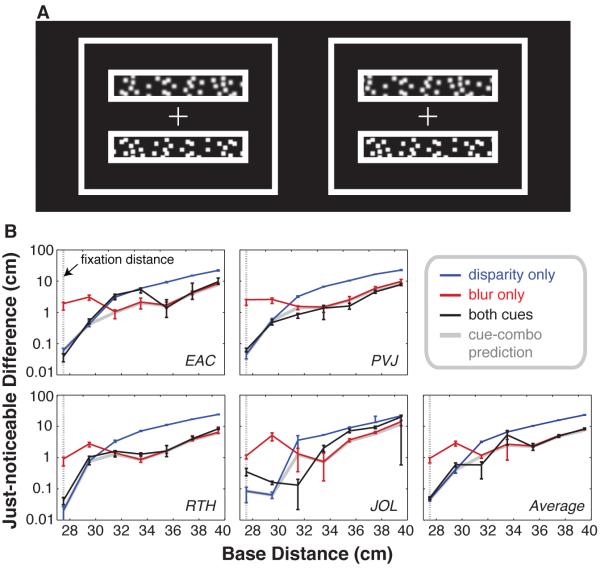

The stimulus and results are shown in Figure 2. Figure 2B plots the just-noticeable change in distance as a function of the distance of the nearer stimulus. The disparity-alone results confirm previous work showing that depth discrimination from disparity worsens very rapidly away from fixation [1]. The blur-alone results reveal that depth discrimination from blur is much poorer at fixation than depth from disparity, but does not worsen significantly with increasing distance from fixation. At greater distances, depth from blur was actually more precise than depth from disparity. When both cues were present, subjects generally based discrimination on the more precise of the two, thereby yielding much better depth discrimination than if they had relied exclusively on disparity. The experiment was not designed to determine whether subjects integrated the two cues optimally [23], but we nonetheless calculated what the two-cue discrimination thresholds would be if optimal integration occurred. A sign test yielded no significant difference between optimal and observed two-cue performance (p = 0.34), but we cannot definitively determine whether the results reflect optimal cue combination or cue switching. Although we did not formally measure discrimination for points nearer than fixation, pilot testing showed that blur plays a similar role in that region of visual space.
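The text does not spell out how the optimal prediction or the sign test was computed. The sketch below shows one standard way to do both, under stated assumptions: the prediction uses inverse-variance weighting as in [23], treating each single-cue JND as proportional to the standard deviation of an independent Gaussian estimate, and the sign test is an exact two-sided binomial test on the signs of observed-minus-predicted differences. All numbers in the example are hypothetical, not data from this study.

```python
import math


def optimal_two_cue_jnd(jnd_disparity: float, jnd_blur: float) -> float:
    """Two-cue JND predicted by inverse-variance (maximum-likelihood) integration.

    Assumes each single-cue JND is proportional to the standard deviation of an
    independent, unbiased Gaussian estimate, so 1/J^2 = 1/J_d^2 + 1/J_b^2.
    """
    return math.sqrt(jnd_disparity ** 2 * jnd_blur ** 2 / (jnd_disparity ** 2 + jnd_blur ** 2))


def sign_test_p(n_positive: int, n_negative: int) -> float:
    """Exact two-sided sign test; ties (zero differences) should be dropped first."""
    n = n_positive + n_negative
    k = min(n_positive, n_negative)
    tail = sum(math.comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2.0 * tail)


# Hypothetical single-cue JNDs (mm) at one pedestal; not data from the study.
predicted = optimal_two_cue_jnd(2.0, 1.0)
print(f"predicted two-cue JND: {predicted:.3f} mm")

# Hypothetical counts of pedestals where observed > predicted vs. observed < predicted.
print(f"sign test p = {sign_test_p(6, 3):.3f}")
```

The predicted two-cue JND is always smaller than either single-cue JND, which is why observed two-cue thresholds near the better single cue are compatible with either optimal combination or cue switching.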

Figure 2.

A. Cross-fusable stereo pair of an example stimulus. Observers fixated the central cross and reported whether the lower or upper patch was farther away. Blur has been added to simulate the appearance of a stimulus with disparity and focus cues available. B. Depth-discrimination thresholds plotted as just-noticeable differences for the disparity-only, blur-only, and combined-cue conditions. Subjects fixated and focused at a distance of 27.5cm (dotted line). Pedestal stimulus distances are indicated on the abscissae. The ordinates represent the just-noticeable difference in depth for each pedestal distance. Blue, red, and black lines represent performance using disparity only, blur only, and both blur and disparity, respectively. The gray line represents the predicted behavior if the visual system combined the cues optimally [23]. Disparity outperformed blur when the pedestal stimulus was within 3mm of fixation, while blur outperformed disparity at greater distances. Four of the panels show individual subject data and the fifth shows the across-subject average data. Error bars represent standard error.

Discussion

Here we consider the usefulness of blur in natural viewing and in the design of stereo 3D media, how blur can help guide motor behavior such as an upcoming eye movement, and how our theoretical and experimental results lead to a new hypothesis concerning the evolution of slit pupils.

Usefulness of Blur

We do not normally experience changes in the precision of depth estimates behind and in front of where we are looking. This uniformity is often achieved by using other depth cues to fill in the gaps left by disparity. But the usefulness of some cues is quite dependent on viewing situation. For example, the utility of perspective depends on geometric regularities in the world. In contrast, blur is nearly always informative [14,24]. Our results show that this generally available cue is indeed used to make depth estimation significantly more precise throughout visual space. This is surprising given that previous researchers argued that blur is not a very useful cue to depth [16,25]. Blur has traditionally been regarded as a weak cue for two reasons.

1) Defocus blur does not in any obvious way indicate the sign of a change in distance: i.e., whether an out-of-focus object is nearer or farther than an in-focus object. However, the visual system clearly solves the sign-ambiguity problem. For depth estimation, the system solves the problem by using other depth cues that do not provide metric depth information. For example, the blur of an occluding contour determines whether an adjoining blurred region is perceived as near or far [16,17]. Furthermore, perspective cues, which specify relative distance, provide disambiguating sign information, so blur plus perspective can be used to estimate absolute distance [6]. For driving accommodation in the correct direction, the system solves the sign-ambiguity problem using sign information contained in chromatic aberration [26], higher-order aberrations [20], and accommodative microfluctuations [27,28].

2) The relationship between distance and blur depends on pupil size (Equation 3). There is no evidence that people can measure their own pupil diameter, so the relationship between measured blur and specified distance is uncertain. But steady-state pupil size does not vary much under typical daylight conditions. Specifically, intra-subject pupil diameters vary over a range of 2.8mm for luminance levels between 0.40 and 1,600 cd/m² [29], yielding an uncertainty in the estimate of z0 of only 66% over a luminance range of 200,000%.
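Inverting Equation 3 shows how an error in the assumed pupil diameter propagates: if the true pupil is A_true and the visual system assumes A_assumed, the inferred dioptric distance |1/z0 − 1/z1| is scaled by A_true/A_assumed. This sketch illustrates that scaling with a hypothetical pupil range 2.8 mm wide centered on a hypothetical 4.4 mm assumed diameter; it is not an attempt to reproduce the 66% figure above, whose exact calculation is not given here.

```python
def inferred_defocus(blur_rad: float, assumed_pupil: float) -> float:
    """Dioptric distance |1/z0 - 1/z1| inferred by inverting Equation 3 with an assumed pupil."""
    return blur_rad / assumed_pupil


def scale_error(true_pupil: float, assumed_pupil: float) -> float:
    """Fractional error in inferred defocus when the assumed pupil differs from the true one.

    Because blur = A_true * D, inverting with A_assumed returns D * A_true / A_assumed.
    """
    return true_pupil / assumed_pupil - 1.0


true_defocus = 1.0  # dioptres (hypothetical)
assumed = 0.0044    # hypothetical assumed pupil diameter (m)
for true_pupil in (0.0030, 0.0044, 0.0058):  # hypothetical range, 2.8 mm wide
    blur = true_pupil * true_defocus
    est = inferred_defocus(blur, assumed)
    print(f"true A = {true_pupil * 1000:.1f} mm: inferred {est:.2f} D "
          f"({scale_error(true_pupil, assumed):+.0%})")
```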

Stereoscopic 3D media are becoming increasingly commonplace. Our work shows that disparity and depth-of-field blur have the same underlying geometry and therefore that blur is roughly a fixed proportion of disparity (Equation 4). Given that the two cues complement each other, stereo 3D media should be constructed with this natural relationship in mind. When the natural relationship is violated, the puppet-theater effect (characters perceived as too small because of too much blur) or the gigantism effect (characters seen as too large because of too little blur) may ensue [30].
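For stereo content, Equation 4 gives a simple consistency check: the natural blur for a rendered disparity δ is (A/I)|δ|, and the ratio of rendered to natural blur indicates the direction of any mismatch. The sketch below assumes viewer values of I = 6.2 cm and A = 4.5 mm, and the shot parameters are hypothetical. The text does not specify how large a mismatch must be before the puppet-theater or gigantism effect appears, so the code only reports the direction.

```python
import math


def natural_blur_rad(disparity_rad: float, pupil: float, iod: float) -> float:
    """Blur that Equation 4 associates with a given disparity: (A / I) * |delta|."""
    return (pupil / iod) * abs(disparity_rad)


def blur_scale(rendered_blur_rad: float, disparity_rad: float, pupil: float, iod: float) -> float:
    """Ratio of rendered to natural blur; > 1 means more blur than natural, < 1 less.

    Returns math.inf for nonzero blur at zero disparity, and 1.0 when both are zero.
    """
    natural = natural_blur_rad(disparity_rad, pupil, iod)
    if natural == 0.0:
        return 1.0 if rendered_blur_rad == 0.0 else math.inf
    return rendered_blur_rad / natural


IOD, PUPIL = 0.062, 0.0045  # assumed viewer parameters (m)
ARCMIN = math.pi / (180 * 60)

# Hypothetical shot: 40 arcmin of disparity rendered with 6 arcmin of depth-of-field blur.
ratio = blur_scale(6 * ARCMIN, 40 * ARCMIN, PUPIL, IOD)
if ratio > 1:
    print(f"{ratio:.2f}x natural blur: more blur than natural (puppet-theater risk)")
elif ratio < 1:
    print(f"{ratio:.2f}x natural blur: less blur than natural (gigantism risk)")
else:
    print("blur matches the natural relationship")
```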

Motor Behavior

We showed that depth from disparity is very precise near fixation, but quite imprecise in front of and behind fixation [1]. It is also known that the precision of depth from disparity falls dramatically with increasing retinal eccentricity: above and below fixation [31,32] and left and right of fixation [1,31]. Importantly, blur-discrimination thresholds do not increase significantly with retinal eccentricity [33], so it is quite likely that depth from blur is more precise than depth from disparity above and below and left and right of fixation as well. Thus, there is a small 3D volume surrounding the current fixation in which depth from disparity can be estimated quite precisely. Outside this volume, the visual system must rely on other cues to estimate 3D structure. Having shown that blur fills in the void behind and in front of fixation, we hypothesize that it also does so left and right and above and below fixation. Alternatively, the viewer can make an eye movement to move the volume of high precision to a region of interest. However, when determining the movement required to fixate a new point in space, distance must be estimated to determine whether the eyes need to converge or diverge and by how much. Because disparity is imprecise away from fixation, blur may provide very useful information for programming the upcoming vergence eye movement.

To guide other motor behavior such as reaching and grasping, metric distance must be estimated. Can distances z0 and z1 be estimated from disparity and blur? Absolute distances can indeed be estimated from disparity. An extra-retinal, eye-position signal is used to estimate the eyes’ vergence [34] and thereby estimate z0. Because inter-ocular distance I and eye length s are known, z1 can also be estimated. The problem can also be solved using vertical disparity [35]. But can z0 and z1 be estimated from blur? If the pupil diameter A is known approximately, one would only have to estimate the eye’s current focal distance z0. This distance could be estimated crudely from proprioceptive signals arising from structures controlling the focal power of the crystalline lens [36-39]. It could also be estimated from the eyes’ vergence if the eyes are fixated and focused on the same point at z0. Finally, blur can act as an absolute cue to distance when combined with depth cues that provide relative depth information [6].
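The chain of estimates in this paragraph can be sketched as follows, assuming the observer knows I and A exactly and fixates symmetrically on the midline: z0 from the vergence angle, z1 by inverting Equation 2, and z1 by inverting Equation 3. Inverting blur returns two candidates, one nearer and one farther than fixation, because Equation 3 contains an absolute value; some other cue has to choose between them. The parameter values and function names are assumptions for illustration.

```python
import math


def fixation_distance(vergence_rad: float, iod: float) -> float:
    """z0 from the vergence angle, for symmetric fixation on the midline."""
    return iod / (2.0 * math.tan(vergence_rad / 2.0))


def distance_from_disparity(z0: float, disparity_rad: float, iod: float) -> float:
    """z1 by inverting Equation 2: 1/z1 = 1/z0 - delta / I."""
    return 1.0 / (1.0 / z0 - disparity_rad / iod)


def distances_from_blur(z0: float, blur_rad: float, pupil: float) -> tuple[float, float]:
    """Both candidates for z1 consistent with Equation 3 (nearer, farther).

    Blur alone cannot tell them apart; another cue must supply the sign.
    """
    defocus = blur_rad / pupil  # |1/z0 - 1/z1| in dioptres
    nearer = 1.0 / (1.0 / z0 + defocus)
    far_inv = 1.0 / z0 - defocus
    farther = 1.0 / far_inv if far_inv > 0 else math.inf  # no physical farther solution
    return nearer, farther


IOD, PUPIL = 0.062, 0.0045  # assumed, and known to the observer in this sketch (m)
true_z0, true_z1 = 0.40, 0.60

vergence = 2.0 * math.atan(IOD / (2.0 * true_z0))
z0 = fixation_distance(vergence, IOD)
delta = IOD * (1.0 / true_z0 - 1.0 / true_z1)
beta = PUPIL * abs(1.0 / true_z0 - 1.0 / true_z1)
nearer, farther = distances_from_blur(z0, beta, PUPIL)

print(f"z0 from vergence: {z0:.3f} m")
print(f"z1 from disparity: {distance_from_disparity(z0, delta, IOD):.3f} m")
print(f"z1 candidates from blur: {nearer:.3f} m or {farther:.3f} m")
```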

Slit Pupils

The pupils of many species are circular when dilated, but slit-like when constricted. There have been three hypotheses about the utility of slit pupils: 1) they allow larger adjustments in pupil area with simple musculature, thereby enabling visual function by day and by night [40]; 2) they provide better image quality for contours perpendicular to the pupil’s long axis [41]; 3) they preserve chromatic-aberration correction in some lenses when the pupil is constricted [42,43]. As far as we know, slit or elliptical pupils are always either vertical or horizontal relative to the upright head. Species with vertical slits (listed in the Figure 3 caption) are all nocturnal predators, and nearly all of them hunt on the ground. Species with horizontal slits or ellipses (also listed in the caption) are all terrestrial grazers with laterally placed eyes. Hypotheses 1 and 3 do not explain why slits are always vertical or horizontal, nor why they are vertical in terrestrial predators and horizontal in terrestrial grazers. Hypothesis 2 predicts that pupils should be perpendicular to the horizon, but it has the effect of pupil diameter on visual resolution backwards [41]. Our results showing the importance of blur for depth discrimination lead to a new hypothesis.

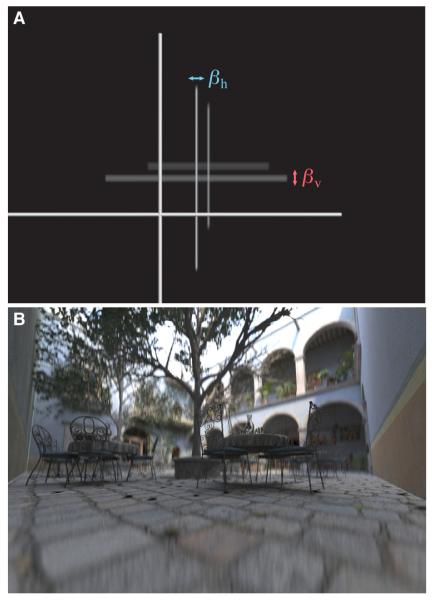

Figure 3.

Slit pupils and astigmatic depth of field. Species with vertical slits include the domestic cat, lynx, red fox, swift fox, bushbaby, loris, copperhead snake, gecko, and crocodile [42-44]. Species with horizontal slits or ellipses include the horse, sheep, goat, elk, reindeer, whitetail deer, and red deer [42-44]. A. The retinal image generated by an eye with a vertical-slit pupil. The vertical and horizontal dimensions of the pupil used for rendering are 5.5 and 1.1mm, respectively. The eye is focused on the left-most cross at a distance of 20cm. The other crosses are positioned at distances of 40 and 60cm. The horizontal limbs of the more distant crosses are more blurred than the vertical limbs. B. The retinal image generated by a natural scene. The pupil has the same aspect ratio as in A, but has been magnified by a factor of 10 to make the blur more noticeable in this small image. The eye is focused at a distance of 200cm. Note that the horizontal contours of distant objects are more blurred than the vertical contours. 3D model by Guillermo M. Leal Llaguno of Evolución Visual (http://www.evvisual.com), rendered using the Physically Based Rendering Toolkit (PBRT).

Consider a slit pupil with height Av and width Ah. With the eye focused at distance z0, the retinal images of the limbs of a cross at z1 would be blurred differently: the blur of the horizontal and vertical limbs would be determined by Av and Ah, respectively:

$$\beta_h \approx A_v\left|\frac{1}{z_0} - \frac{1}{z_1}\right| \tag{5}$$

$$\beta_v \approx A_h\left|\frac{1}{z_0} - \frac{1}{z_1}\right| \tag{6}$$

Combining the two equations:

$$\beta_h \approx \frac{A_v}{A_h}\,\beta_v \tag{7}$$

For vertical slits, Av > Ah, so βh > βv. For horizontal slits, Av < Ah, so βh < βv. Thus, such eyes have astigmatic depth of field. With vertical slits, depth of field is smaller (i.e., blur due to misaccommodation is greater) for horizontal than for vertical contours; with horizontal slits, the opposite obtains. Figure 3A illustrates this point by showing the retinal images associated with crosses at different distances for a vertical-slit pupil. We hypothesize that slit pupils provide an effective means for controlling the amount of light striking the retina by enabling large changes in pupil area while also providing short depth of field for contours of one orientation (horizontal contours for vertical slits), which is useful for estimating distances of those contours. We thus predict that animals with vertical-slit pupils are better able to utilize the blur of horizontal contours to estimate depth than the blur of vertical contours.
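Equations 5 to 7 can be evaluated with the pupil dimensions and distances from the Figure 3A caption (a 5.5 × 1.1 mm vertical slit, focus at 20 cm, crosses at 40 and 60 cm). In this sketch the horizontal limbs are blurred five times more than the vertical limbs at both distances, since the ratio βh/βv equals Av/Ah regardless of defocus. The function name is illustrative.

```python
import math

RAD_TO_ARCMIN = 180 / math.pi * 60


def slit_blur(z0: float, z1: float, a_v: float, a_h: float) -> tuple[float, float]:
    """Blur (radians) of horizontal and vertical limbs for a slit pupil.

    Horizontal limbs are smeared vertically, so their blur scales with the
    pupil height a_v (Equation 5); vertical limbs scale with the width a_h (Equation 6).
    """
    defocus = abs(1.0 / z0 - 1.0 / z1)  # dioptres
    return a_v * defocus, a_h * defocus  # (beta_h, beta_v)


# Pupil and distances from the Figure 3A caption: 5.5 x 1.1 mm vertical slit, focus at 20 cm.
A_V, A_H, Z0 = 0.0055, 0.0011, 0.20
for z1 in (0.40, 0.60):
    beta_h, beta_v = slit_blur(Z0, z1, A_V, A_H)
    print(f"z1 = {z1:.2f} m: horizontal limb {beta_h * RAD_TO_ARCMIN:.1f} arcmin, "
          f"vertical limb {beta_v * RAD_TO_ARCMIN:.1f} arcmin, ratio {beta_h / beta_v:.1f}")
```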

The ground is a common and important part of the visual environment for terrestrial predators and grazers. With the head upright, the ground is foreshortened vertically in the retinal images, which increases the prevalence of horizontal or nearly horizontal contours in those images [45]. The vertical slit of many terrestrial predators aligns the orientation of shorter depth of field (Equation 7) with horizontal contours, which should allow finer depth discrimination of contours on the ground. This seems advantageous for their ecological niche (Figure 3B). Another observation is consistent with this hypothesis: The eyes of some reptiles with vertical-slit pupils rotate about the line of sight when the head is pitched downward or upward, which keeps the pupil’s long axis roughly perpendicular to the ground [41]. What about terrestrial grazers with horizontal slits? The eyes of these species are laterally positioned in the head, so when they pitch the head downward to graze, their pupils are roughly vertical relative to the ground. Again, this arrangement aligns the orientation of the shorter depth of field with horizontal contours along the ground, which seems advantageous for their niche, at least while grazing.

There is another potential advantage of vertical-slit pupils for terrestrial predators. Most of these animals have forward-facing eyes and stereopsis (unlike terrestrial grazers, who have lateral-facing eyes and minimal stereopsis). Vertical contours are critical for the computation of horizontal disparity, which underlies stereopsis. A large depth of field for vertical contours aids the estimation of depth from disparity, while a small depth of field for horizontal contours aids depth from blur for the horizontal contours that are commonplace when viewing across the ground. This may be another sense in which disparity and blur are used in complementary fashion to perceive 3D layout.

Conclusions

We demonstrated through theoretical analysis and experimentation that blur provides greater depth precision than disparity away from where one is looking. These results are inconsistent with the previous view that blur is a weak, ordinal depth cue. They will aid the design of more effective stereoscopic 3D media, and also lead to a new hypothesis concerning the evolution of slit pupils.

Experimental Procedures

Subjects

Four subjects participated. RTH (28 years old) and EAC (23) were authors. PVJ (24) and JOL (70) were unaware of the experimental goals. Before formal data collection began, each subject was given 30 minutes of training in the experimental task with trial-by-trial feedback.

Apparatus

The experiments were conducted on a stereoscopic, multi-plane display that provides nearly correct focus cues [22]. The display contains four image planes per eye. The planes are separated by 0.6 diopters. Distances in-between planes are simulated by an interpolation algorithm that produces retinal images that are in most cases indistinguishable from real images [46-48]. The display allowed us to independently manipulate disparity and blur. The subjects’ eyes were fixed in focus at 27.5cm (the distance of the nearest image plane) by use of cycloplegia (i.e., temporary paralysis of accommodation) and ophthalmic lenses. Cycloplegia causes pupil dilation, so to mimic natural pupils we had subjects wear contact lenses with 4.5mm diameter apertures. Subject JOL was presbyopic (and therefore unable to accommodate), so his eyes were not cyclopleged and he viewed stimuli with natural pupils of 3.5mm diameter.
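From the numbers given here (four planes, 0.6 D apart, nearest plane at 27.5 cm), the plane distances can be worked out directly. The interpolation itself is described in [46-48]; the blending rule in this sketch, which splits a point's intensity between the two bracketing planes linearly in dioptric distance, is an assumption for illustration rather than a description of this display's software, and the function names are hypothetical.

```python
def plane_dioptres(nearest_m: float = 0.275, spacing_d: float = 0.6, n_planes: int = 4) -> list[float]:
    """Dioptric distances of the image planes, nearest first."""
    near_d = 1.0 / nearest_m
    return [near_d - i * spacing_d for i in range(n_planes)]


def blend_weights(z_m: float, planes_d: list[float]) -> list[float]:
    """Per-plane intensity weights for a point at z_m (assumed linear-in-dioptres rule).

    Points outside the plane range are clamped to the nearest or farthest plane.
    """
    d = 1.0 / z_m
    weights = [0.0] * len(planes_d)
    if d >= planes_d[0]:
        weights[0] = 1.0
        return weights
    if d <= planes_d[-1]:
        weights[-1] = 1.0
        return weights
    for i in range(len(planes_d) - 1):
        near_d, far_d = planes_d[i], planes_d[i + 1]
        if far_d <= d <= near_d:
            w_near = (d - far_d) / (near_d - far_d)
            weights[i], weights[i + 1] = w_near, 1.0 - w_near
            return weights
    raise ValueError("distance not bracketed by planes")  # unreachable for sorted planes


planes = plane_dioptres()
print("plane distances (cm):", [round(100 / p, 1) for p in planes])
for z_cm in (27.5, 31.5, 39.5):
    w = blend_weights(z_cm / 100, planes)
    print(f"{z_cm} cm ->", [round(x, 2) for x in w])
```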

Task & Stimulus

Stimuli were two rectangular patches of random-dot patterns (dot density = 4.2 dots/deg²). The patches were presented above and below a fixation cross and were partially occluded by a solid frame (400×200 arcmin) that bounded the stimulus region (see Figure 2A). The cross and frame were always presented at a distance of 27.5cm. We included the frame so that occlusion made clear that the stimuli were always farther than fixation. On each trial, the two random-dot stimuli were presented simultaneously for 250ms. One stimulus, the standard, was at a distance of 27.5, 29.5, 31.5, 33.5, 35.5, 37.5, or 39.5cm. The other stimulus, the test, was placed farther by an increment in distance chosen according to the method of constant stimuli. Regardless of distance, the stimuli had the same luminance and subtended the same visual angle at the eye. Subjects indicated with a key press which of the two stimuli appeared farther. The distance of the standard stimulus, the increment of the test stimulus, and which stimulus was above or below the fixation cross were randomized across trials. After a response was recorded, the next stimulus was presented after a delay of 500ms.
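The trial structure described above, using the method of constant stimuli, can be sketched as a shuffled list of every standard-by-increment pairing. The seven standard distances come from the text; the test increments and the number of repeats are hypothetical, since they are not reported here, and this sketch counterbalances the test position (above or below fixation) within each pairing rather than assigning it independently on each trial.

```python
import random

STANDARDS_CM = [27.5, 29.5, 31.5, 33.5, 35.5, 37.5, 39.5]  # standard distances from the text
INCREMENTS_CM = [0.5, 1.0, 2.0, 4.0, 8.0]  # hypothetical test increments; not given in the text


def make_trials(n_repeats: int, seed: int = 0) -> list[dict]:
    """Method of constant stimuli: every standard x increment pairing, repeated and shuffled.

    The test patch appears above fixation on half the repeats of each pairing
    and below on the rest (assumes n_repeats is even for exact balance).
    """
    rng = random.Random(seed)
    trials = []
    for standard in STANDARDS_CM:
        for inc in INCREMENTS_CM:
            for rep in range(n_repeats):
                trials.append({
                    "standard_cm": standard,
                    "test_cm": standard + inc,
                    "test_above": rep % 2 == 0,
                })
    rng.shuffle(trials)
    return trials


trials = make_trials(n_repeats=20)
print(len(trials), "trials; first:", trials[0])
```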

Conditions

Three experimental conditions were presented: disparity only, blur only, and disparity and blur together. Subjects viewed the stimuli binocularly in the disparity-only condition. In that case, the multi-plane feature of the display was disabled, so the disparity of the standard and test stimuli differed, but the focal distances were the same. Subjects viewed the stimuli monocularly in the blur-only condition. The multi-plane feature of the display was enabled, so the focal distances of the standard and test stimuli differed. Subjects viewed stimuli binocularly in the disparity-and-blur condition with the multi-plane feature enabled, so the standard and test stimuli differed in disparity and focal distance. Subject JOL frequently perceived the farther stimulus in the blur-only condition as blurrier rather than farther; in those cases, he responded by picking the blurrier stimulus.

Analysis

The psychometric data rose from ~50% to ~100% correct as the distance between the standard and test stimuli increased. We fit cumulative Gaussians to these data for each subject in each condition using a maximum-likelihood criterion [49]. The mean of the fitted function (the 75% point) was taken as the discrimination threshold for that subject and condition. Average data were obtained by fitting psychometric functions to the pooled data from all subjects in a given condition.
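A minimal sketch of the fitting step, assuming a two-alternative psychometric function p(x) = 0.5 + 0.5Φ((x − μ)/σ), whose mean μ is the 75% point. For simplicity it maximizes the binomial likelihood by brute-force grid search; this is a swapped-in stand-in, not the procedure of [49]. The synthetic counts are generated from hypothetical parameters to check that the fit recovers them.

```python
import math


def p_correct(x: float, mu: float, sigma: float) -> float:
    """2AFC cumulative Gaussian rising from 0.5 to 1.0; equals 0.75 at x = mu."""
    phi = 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))
    return 0.5 + 0.5 * phi


def neg_log_likelihood(mu, sigma, xs, n_correct, n_total):
    """Binomial negative log-likelihood of the observed counts."""
    nll = 0.0
    for x, k, n in zip(xs, n_correct, n_total):
        p = min(max(p_correct(x, mu, sigma), 1e-9), 1.0 - 1e-9)  # avoid log(0)
        nll -= k * math.log(p) + (n - k) * math.log(1.0 - p)
    return nll


def fit_threshold(xs, n_correct, n_total, n_mu=201, n_sigma=100):
    """Maximum-likelihood (mu, sigma) by brute-force grid search.

    mu ranges over the tested increments; sigma is log-spaced.
    """
    lo, hi = min(xs), max(xs)
    span = hi - lo
    mus = [lo + span * i / (n_mu - 1) for i in range(n_mu)]
    s_lo, s_hi = 0.01 * span, 2.0 * span
    sigmas = [s_lo * (s_hi / s_lo) ** (j / (n_sigma - 1)) for j in range(n_sigma)]
    _, mu, sigma = min(
        (neg_log_likelihood(m, s, xs, n_correct, n_total), m, s) for m in mus for s in sigmas
    )
    return mu, sigma


# Synthetic check: noiseless counts generated from mu = 1.0 cm, sigma = 0.5 cm (hypothetical).
xs = [0.2, 0.6, 1.0, 1.4, 1.8, 2.2]  # depth increments (cm)
n_total = [1000] * len(xs)
n_correct = [round(n * p_correct(x, 1.0, 0.5)) for x, n in zip(xs, n_total)]
mu_hat, sigma_hat = fit_threshold(xs, n_correct, n_total)
print(f"threshold (75% point): {mu_hat:.2f} cm, sigma: {sigma_hat:.2f} cm")
```

For the across-subject average described above, the same function can be applied to counts summed across subjects at each increment.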

Highlights.

Depth from disparity and depth from blur have analogous geometries

Disparity is very precise near fixation, but blur is more precise elsewhere

The two cues together allow more precise depth perception than either cue alone

Slit pupils cause asymmetric blur, aiding depth discrimination along the ground

Acknowledgments

We thank David Hoffman for his generous help with programming the experiment and Peter Vangorp for rendering the slit-pupil image in Figure 3B. The research was supported by NIH grant EY012851, an NSF graduate fellowship to the first author, and an NDSEG fellowship to the second author.


Contributor Information

Robert T. Held, Corresponding Author, Computer Science Division, University of California, Berkeley, Soda Hall, Mail Code 1776, Berkeley, CA 94720

Emily A. Cooper, Helen Wills Neuroscience Institute, University of California, Berkeley, 360 Minor Hall, Berkeley, CA 94720

Martin S. Banks, Vision Science Program, University of California, Berkeley, 360 Minor Hall, Berkeley, CA 94720

References

- 1. von Helmholtz H. Treatise on physiological optics. Third Edition III. Dover; New York: 1925.

- 2. Rogers BJ, Bradshaw MF. Vertical disparities, differential perspective and binocular stereopsis. Nature. 1993;361:253–255. doi: 10.1038/361253a0.

- 3. Blakemore C. The range and scope of binocular depth discrimination in man. Journal of Physiology. 1970;211:599–622. doi: 10.1113/jphysiol.1970.sp009296.

- 4. Bülthoff HH, Mallot HA. Integration of depth modules: Stereo and shading. JOSA. 1988;5(10):1749–1758. doi: 10.1364/josaa.5.001749.

- 5. Palmer SE. Vision science: Photons to phenomenology. First Edition MIT Press; Boston: 1999.

- 6. Held RT, Cooper EA, O’Brien JF, Banks MS. Using blur to affect perceived scale. ACM Transactions on Graphics. 2010;29(2):19–1. doi: 10.1145/1731047.1731057.

- 7. Ohzawa I, DeAngelis GC, Freeman RD. Stereoscopic depth discrimination in the visual cortex: Neurons ideally suited as disparity detectors. Science. 1990;249:1037–1041. doi: 10.1126/science.2396096.

- 8. Agarwal A, Blake A. Dense stereomatching over panum band. IEEE Transaction on Pattern Analysis and Machine Intelligence. 2010;32(3):416–430. doi: 10.1109/TPAMI.2008.298.

- 9. Poggio GF. Mechanisms of stereopsis in monkey visual cortex. Cerebral Cortex. 1995;5(3):193–204. doi: 10.1093/cercor/5.3.193.

- 10. Smallman HS, MacLeod DIA. Spatial scale interactions in stereo sensitivity and the neural representation of binocular disparity. Perception. 1997;26(8):977–994. doi: 10.1068/p260977.

- 11. Schor C, Wood I, Ogawa J. Binocular sensory fusion is limited by spatial resolution. Vision Research. 1983;24(7):661–665. doi: 10.1016/0042-6989(84)90207-4.

- 12. Walsh G, Charman WN. Visual sensitivity to temporal change in focus and its relevance to the accommodation response. Vision Research. 1988;28(11):1207–1221. doi: 10.1016/0042-6989(88)90037-5.

- 13. Watt RJ, Morgan MJ. Spatial filters and the localization of luminance changes in human vision. Vision Research. 1984;24(1):1387–1397. doi: 10.1016/0042-6989(84)90194-9.

- 14. Burge JD, Geisler WS. Optimal defocus estimation in individual natural images. PNAS. 2011;108(40):16849–16854. doi: 10.1073/pnas.1108491108.

- 15. Mather G. The use of image blur as a depth cue. Perception. 1997;26(9):1147–1158. doi: 10.1068/p261147.

- 16. Marshall JA, Burbeck CA, Ariely D, Rolland JP, Martin KE. Occlusion edge blur: A cue to relative visual depth. JOSA. 1996;13(4):681–688. doi: 10.1364/josaa.13.000681.

- 17. Palmer SE, Brooks JL. Edge-region grouping in depth perception and figure-ground organization. Journal of Experimental Psychology: Human Perception and Performance. 2008;34(6):1353–1371. doi: 10.1037/a0012729.

- 18. Birn J. Lighting & Rendering in Maya: Lights and Shadows. 2008 http://www.3drender.com.

- 19. Wilson BJ, Decker KE, Roorda A. Monochromatic aberrations provide an odd-error cue to focus direction. J. Opt Soc. Am. A. 2002;19:833–839. doi: 10.1364/josaa.19.000833.

- 20. Jacobs RJ, Smith G, Chan CDC. Effect of defocus on blur thresholds and on thresholds of perceived change in blur: Comparison of source and observer methods. Optometry and Vision Science. 1989;66:545–553. doi: 10.1097/00006324-198908000-00010.

- 21. Schechner YY, Kiryati N. Depth from defocus vs. stereo: How different really are they? International Journal of Computer Vision. 2000;29(2):141–162.

- 22. Love GD, Hoffman DM, Hands PJW, Gao J, Kirby AK, Banks MS. High-speed switchable lens enables the development of a volumetric stereoscopic display. Opt Express. 2009;17(18):15716–15725. doi: 10.1364/OE.17.015716.

- 23. Ernst MO, Banks MS. Humans integrate visual and haptic information in a statistically optimal fashion. Nature. 2002;415:429–433. doi: 10.1038/415429a.

- 24. Pentland AP. A new sense for depth of field. IEEE Transactions on Pattern Analysis and Machine Intelligence. 1987;9(4):523–531. doi: 10.1109/tpami.1987.4767940.

- 25. Mather G. Image blur as a pictorial cue. Proc. R. Soc. Lond. B. 1996;263:169–172. doi: 10.1098/rspb.1996.0027.

- 26. Fincham EF. The accommodation reflex and its stimulus. Brit. J. Ophthal. 1951;35:381–393. doi: 10.1136/bjo.35.7.381.

- 27. Campbell FW, Robson JG, Westheimer G. Fluctuations of accommodation under steady viewing conditions. J. Physiol. 1959;145:579–594. doi: 10.1113/jphysiol.1959.sp006164.

- 28. Smithline LM. Accommodative response to blur. J. Opt. Soc. Am. 1974;64:1512–1516. doi: 10.1364/josa.64.001512.

- 29. Spring K, Stiles WS. Variation of pupil size with change in the angle at which the light stimulus strikes the retina. British J. Ophthalmol. 1948;32(6):340–346. doi: 10.1136/bjo.32.6.340.

- 30. Yamanoue H, Okui M, Okano F. Geometric analysis of puppet-theater and cardboard effects in stereoscopic HDTV images. IEEE Transactions on Circuits and Systems for Video Technology. 2006;16(6):744–752.

- 31. Fendick M, Westheimer G. Effects of practice and the separation of test targets on foveal and peripheral stereoacuity. Vision Research. 1983;23(2):145–150. doi: 10.1016/0042-6989(83)90137-2.

- 32. Siderov J, Harwerth RS, Bedell HE. Stereopsis, cyclovergence, and the backwards tilt of the vertical horopter. Vision Research. 1999;39(7):1347–1357. doi: 10.1016/s0042-6989(98)00252-1.

- 33. Wang B, Ciuffreda KJ. Blur discrimination of the human eye in the near retinal periphery. Optometry & Vision Science. 2005;82:52–58.

- 34. Richards W, Miller JF. Convergence as a cue to depth. Perception & Psychophysics. 1969;5:317–320.

- 35. Rogers BJ, Bradshaw MF. Disparity scaling and the perception of frontoparallel surfaces. Perception. 1995;24:155–179. doi: 10.1068/p240155.

- 36. Flügel-Koch C, Neuhuber WL, Kaufman PL, Lütjen-Drecoll E. Morphologic indication for proprioception in the human ciliary muscle. Investigative Ophthalmology and Visual Science. 2009;50(12):5529–5536. doi: 10.1167/iovs.09-3783.

- 37. Mucke S, Manahilov V, Strang NC, Seidel D, Gray LS, Shahani U. Investigating the mechanisms that may underlie the reduction in contrast sensitivity during dynamic accommodation. Journal of Vision. 2010;10(5):5, 1–14. doi: 10.1167/10.5.5.

- 38. Mon-Williams M, Tresilian JR. Ordinal depth information from accommodation? Ergonomics. 2000;43(3):391–404. doi: 10.1080/001401300184486.

- 39. Fisher SK, Ciuffreda KJ. Accommodation and apparent distance. Perception. 1988;17(5):609–621. doi: 10.1068/p170609.

- 40. Walls GL. The vertebrate eye and its adaptive radiation. Cranbrook Institute; Bloomfield Hills, MI: 1942.

- 41. Heath JE, Northcutt RG, Barber RP. Rotational optokinesis in reptiles and its bearing on pupillary shape. J. Comparative Physiology A. 1969;62(1):75–85.

- 42. Malmström T, Kröger RHH. Pupil shapes and lens optics in the eyes of terrestrial vertebrates. J. Exp. Biol. 2006;209:18–25. doi: 10.1242/jeb.01959.

- 43. Land MF. Visual Optics: The Shapes of Pupils. Current Biology. 2006;16(5):167–168. doi: 10.1016/j.cub.2006.02.046.

- 44. Brischoux F, Pizzatto L, Shine R. Insights into the adaptive significance of vertical pupil shape in snakes. Journal of Evolutionary Biology. 2010;23(9):1878–1885. doi: 10.1111/j.1420-9101.2010.02046.x.

- 45. Witkin AP. Recovering surface shape and orientation from texture. Artificial Intelligence. 1981;17(1-3):17–45.

- 46. Akeley K, Watt SJ, Girshick AR, Banks MS. A stereo display prototype with multiple focal distances. ACM Trans. Graph. 2004;23(3):804–813.

- 47. Hoffman DM, Girshick AR, Akeley K, Banks MS. Vergence-accommodation conflicts hinder visual performance and cause visual fatigue. Journal of Vision. 2008;8(3):33–1. doi: 10.1167/8.3.33.

- 48. MacKenzie KJ, Hoffman DM, Watt SJ. Accommodation to multiple-focal-plane displays: Implications for improving stereoscopic displays and for accommodative control. Journal of Vision. 2010;10(8):1–18. doi: 10.1167/10.8.22.

- 49. Wichmann F, Hill N. The psychometric function: II. Bootstrap-based confidence intervals and sampling. Attention, Perception, & Psychophysics. 2001;63(8):1314–1329. doi: 10.3758/bf03194545.