Abstract

Sustained intervention effects are needed for positive health impacts in populations; however, few published examples illustrate methods for assessing sustainability in health promotion programs. This paper describes the methods for assessing sustainability of the Lifestyle Education for Activity Program (LEAP). LEAP was a comprehensive school-based intervention that targeted change in instructional practices and the school environment to promote physical activity (PA) in high school girls. Previous reports indicated that significantly more girls in the intervention compared with control schools reported engaging in vigorous PA, and positive long-term effects on vigorous PA also were observed for girls in schools that most fully implemented and maintained the intervention 3 years following the active intervention. In this paper, the seven steps used to assess sustainability in LEAP are presented; these steps provide a model for assessing sustainability in health promotion programs in other settings. Unique features of the LEAP sustainability model include assessing sustainability of changes in instructional practices and the environment, basing assessment on an essential element framework that defined complete and acceptable delivery at the beginning of the project, using multiple data sources to assess sustainability, and assessing implementation longitudinally.

Introduction

The Lifestyle Education for Activity Program (LEAP), a comprehensive school-based intervention, targeted change in instructional practices and the school environment to promote physical activity (PA) in high school girls. The development and implementation phases of LEAP, including descriptions of the intervention, program goals, implementation approach and implementation monitoring, have been reported previously and are summarized here. LEAP focused on promoting PA among high school girls as the primary outcome [1, 2] and on organizational change within the school as a secondary outcome [3, 4]. LEAP took place in school settings but was not curriculum based; rather, LEAP staff provided guidelines for changes in instructional practices [e.g. in physical education (PE)] and the school environment (e.g. a school-wide LEAP team) to promote PA. As reported previously, these guidelines included 10 required and 6 recommended essential elements, that is, key desirable characteristics of the school’s instructional practices and environment [3, 4]. As shown in boldface in Table I, the seven required instructional elements focused on PE instructional practices that promote activity in girls, and the three required environmental elements focused on creating a broader school environment that supports activity in girls.

Table I.

LEAP essential element framework during active intervention and follow-up phases

| Component | Essential elements during active intervention [2, 4] | Essential elements for follow-up sustainability assessment |
|---|---|---|
| School environment | **Support for PA promotion from the school administrator** | Support for PA promotion from the school administrator |
| | **Active school PA team** | Active school PA team |
| | **Messages promoting PA are prominent in the school** | Messages promoting PA are prominent in the school |
| | Faculty/staff health promotion provides adult modeling of PA | Faculty/staff health promotion provides adult modeling of PA |
| | Community agency involvement | |
| | Family involvement | |
| | Health education reinforces messages | |
| | School nurse involved in PA | |
| | PA opportunities outside of PE | |
| Instructional practice | **Gender-separated PE classes** | Gender-separated PE classes |
| | **Classes are fun** | Classes are fun |
| | **Classes are physically active** | Classes are physically active |
| | **Teaching methods are appropriate** | Teaching methods are appropriate |
| | **Behavioral skills are taught** | Behavioral skills are taught |
| | **Lifelong PA emphasized** | Lifelong PA emphasized |
| | **Non-competitive PA included in PE** | Non-competitive PA included in PE |

Bolded elements = required intervention elements; non-bolded elements = recommended intervention elements.

Each school was encouraged to achieve these essential elements in a manner that worked best given that school’s resources and culture. The LEAP implementation approach considered each school’s specific context, worked in an ongoing partnership with the schools, identified and supported a school-based ‘champion’ who led LEAP activities in each school, provided ongoing training and technical support and actively sought administrator support throughout the program. This approach was intended to improve program fit within each school and was consistent with factors known to enhance both program implementation and sustainability [5–10]. Thus, LEAP was a flexible, adaptive intervention in which LEAP staff worked in partnership with school staff to change instructional practices and the school environment to promote PA in high school girls [4]. The approach shares several qualities of sustainable programs [5–8]: a program that is easily described, is seen as beneficial and fits the organization’s mission and day-to-day practices; early involvement of stakeholders, with positive and trusting relationships and effective, ongoing communication; development of implementation skills and ongoing support; and administrative support sought throughout the project.

As previously reported, significantly more girls in the intervention schools than in the control schools reported engaging in vigorous PA (45% versus 36%); this difference was not explained by activity in PE (i.e. girls were active outside of PE) [1]. Furthermore, at the end of the active intervention, more girls reported vigorous PA in schools assessed as higher implementers than in lower-implementing and control schools (48%, 40% and 36%, respectively) [3]. Positive long-term effects on vigorous PA also were observed for girls in schools that most fully maintained the intervention 3 years following the active intervention [2]. Sustained intervention effects are needed for positive health impacts in populations; however, few published examples illustrate methods for assessing sustainability in health promotion programs. The purposes of this paper are to describe the methodology used to assess sustainability of the LEAP program at the school level 3 years after the termination of the active intervention study and to present a guide for assessing implementation and sustainability for programs in other settings.

The LEAP sustainability study was guided by seven steps that built upon methods for developing a plan to assess program implementation [11] and methods for assessing implementation [3]. Specifically, we defined the sustainability focus and framework, identified data sources and identified/developed data collection tools and procedures, developed criteria for evidence of implementation at follow-up, collected and organized data, applied criteria for evidence of implementation, applied sustainability criteria and used the sustainability information to understand study outcomes at follow-up. These seven steps are consistent with the framework for measuring persistence of implementation in health care settings recommended by Bowman et al. [12], which includes determining what to measure, when to measure it and how to measure it. The sustainability assessment methods are presented in this paper as an initial framework for assessing sustainability in other programs and settings. The seven steps for assessing sustainability are described and illustrated using the LEAP program. Steps 1–4, presented in the Methods section, address planning for sustainability data collection and collecting and organizing the data. Steps 5–7, presented in the Results section, address applying criteria for evidence of implementation at follow-up, applying criteria for sustained practice over time and using the study results.

Methods

Step 1: define sustainability focus. Define sustainability, sustainability goal and framework and time frame for sustainability assessment

Defining sustainability

LEAP sustainability was defined as maintenance or continued presence of the essential elements at follow-up. This definition is consistent with maintenance of program elements over time rather than institutionalization of an intact program or benefits realized from the program [5–7, 13–15]. LEAP emphasized changes in both instructional practices and the school environment; therefore, the definition further specified that evidence for implementation at follow-up must include both school instructional practices and the school environment (i.e. maintenance of LEAP-like instructional practices alone was not considered sufficient evidence of LEAP maintenance in the school).

The concept of sustainability or maintenance of essential elements at follow-up assumes implementation at earlier phases; that is, it is not possible to maintain an element that was not fully implemented initially. As reported previously, intervention schools were grouped into ‘higher’ and ‘lower’ implementing categories based on the degree to which each school implemented the essential elements at the end of the active intervention, resulting in seven ‘higher implementing’ and five ‘lower implementing’ schools [3]. The percentage of physically active girls in ‘lower implementing’ and control schools did not differ significantly [3], indicating that higher levels of implementation were needed to achieve the intended study effects. The sustainability study took place 3 years later and gathered evidence for the presence or absence of the same 10 required essential elements plus 1 additional environmental element (faculty/staff health promotion, which proved to be an important component of the PA-promoting environment at the end of the LEAP intervention [3]) in order to place schools into ‘implementing’ and ‘non-implementing’ categories at follow-up; these categories parallel ‘higher’ and ‘lower’ implementation at the end of the active intervention period. The LEAP sustainability framework therefore comprised 11 essential elements: the original 10 required elements plus faculty/staff health promotion. Accordingly, LEAP sustainability was operationally defined as evidence for implementation at two points in time: ‘higher implementation’ at the end of the active intervention and ‘implementation’ at the 3-year follow-up.

Sustainability framework and time frame

The LEAP sustainability goal was to identify schools that sustained LEAP intervention elements at follow-up (n = 11; one of the original 12 schools did not participate at follow-up). The sustainability framework was based on the LEAP process evaluation framework that defined complete and acceptable delivery of LEAP and that was used to assess implementation of the LEAP essential elements during the active phases of the project [4]. LEAP sustainability was assessed 3 years after the end of the active intervention.

Step 2: identify data sources and identify/develop data collection tools and procedures

Data sources

This step entails planning data collection methods, which include identifying data sources, data collection tools and data collection procedures. Our intent was to keep methods for assessing implementation at follow-up as similar as possible to those for assessing implementation at the end of the active intervention. Intervention-specific process evaluation methods and tools that had been available for implementation assessment in the original study [3] were not available at follow-up, so we drew on the same data sources, excluding intervention staff, who were no longer involved after termination of the active intervention. These sources were former LEAP team members (involved in school environment activities), former LEAP PE teachers (involved in changes in instructional practices), girls in ninth-grade PE (current students, not exposed to the active LEAP intervention) and observation of PE classes and the school environment. As shown in Table II, three data sources were used to assess instructional practices (observation, former LEAP PE teachers and ninth-grade girls) and three to assess the school environment (observation, former LEAP PE teachers and former LEAP team members).

Table II.

Data collection tools and methods with sample items used to assess “LEAP-like” elements at follow-up

| Component | Data source/tool | Sample items and probes |
|---|---|---|
| Instruction | PE/environment observation checklist | Cooperative activities are included; girls appear to be enjoying the activities; students are organized into small enduring groups; behavioral skills are taught (goal setting, overcoming obstacles, seeking/giving social support); most girls appear to be active for at least 50% of class time |
| | PE teacher interview | Thinking about your PE class now, what LEAP changes have remained? What has faded? Probes: kinds of activities, games or sports; cooperative and competitive activities; emphasis on lifetime activities; teaching methods and classroom management; involving students in selecting activities; encouraging girls to be active outside of PE; providing messages promoting PA |
| | Ninth-grade PE girls focus groups | What types of activities/active things are you doing/have you done in PE this year? Probes: What kinds of activities, games or sports are you doing [have you done]? How physically active have you been in PE this year? How active have other students in PE been? Tell me a little more about your PE class. Probes: Who decides what activities the class will do? How are students organized to do activities or play games or sports? Play games that are cooperative or competitive? Boys and girls or mostly/only girls? What kinds of things have you learned in PE this year? Sports, games, skills, rules? How to be active? How to set goals to be active? |
| Environment | PE/environment observation checklist | PA messages are evident (bulletin boards, posters, stall talkers, etc.) |
| | PE teacher interview | To what extent do you see any lasting effects in your school because of LEAP? How supportive is the principal for PE/PA today? |
| | LEAP team member interview | Describe how things are now in our school. Describe how things are now compared with during the LEAP intervention. Probes: PA opportunities, programs or events for students, teachers or staff; group in the school working together to promote and provide PA opportunities, programs or events; wellness activities for faculty/staff; family involvement; working with community agencies; principal support |

Data collection tools and methods

The same quantitative observation tool used in the active intervention phase, previously described [3], was used at follow-up to assess seven instructional practices in ninth-grade PE and the media environment in the school. Each item was rated 0 = no or none, 1 = sometimes, 2 = most of the time and 3 = all of the time (see Table II for sample items); the ratings for the observational scale items were summed to create a single index score. Observational data were collected by a single trained observer and analyzed using the same protocol used to assess implementation at the end of the LEAP intervention [3].
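The observational index described above can be sketched in Python as follows. This is a minimal illustration, not the study's analysis code: the only rules taken from the text are the 0–3 rating scale and the summation of item ratings into a single index score, while the item names and ratings in the usage example are hypothetical.

```python
# Rating scale of the observation checklist, as described in the text.
RATING_LABELS = {0: "no or none", 1: "sometimes", 2: "most of the time", 3: "all of the time"}


def observation_index(ratings: dict[str, int]) -> int:
    """Sum 0-3 item ratings into a single index score.

    `ratings` maps a (hypothetical) checklist item name to its rating.
    """
    for item, value in ratings.items():
        if value not in RATING_LABELS:
            raise ValueError(f"{item!r} has invalid rating {value}; expected 0-3")
    return sum(ratings.values())


def max_index(n_items: int) -> int:
    """Highest possible index score for a checklist with n_items items."""
    return 3 * n_items


# Hypothetical ratings from one observed ninth-grade PE class.
example = {
    "cooperative_activities": 2,
    "girls_enjoying_activities": 3,
    "small_enduring_groups": 1,
    "behavioral_skills_taught": 0,
    "most_girls_active_50pct": 2,
}
print(observation_index(example), "of", max_index(len(example)))  # 8 of 15
```

Validating each rating before summing keeps a mis-keyed value (for example, a 4) from silently inflating the index.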

Qualitative methods included interviews with PE teachers (former LEAP PE teachers) and staff (former LEAP team members) and focus groups with girls in current ninth-grade PE. We contacted teachers to set up interviews and focus groups using the LEAP contact list from the active study (see Step 4 for details). Interview and focus group questions did not use LEAP-specific intervention terminology (e.g. ‘LEAP PE’) and were designed to assess the presence or absence of LEAP-like practices and environmental factors at follow-up, based on the essential element framework. The LEAP PE teacher interview had 10 open-ended questions; questions 1–5 pertained to the active LEAP intervention and questions 6–10 pertained to current PE practices and school support for PA. Similarly, the LEAP team member interview had nine open-ended questions that asked respondents to reflect on activities during the active LEAP intervention and on current activities. The current ninth-grade PE focus groups had six open-ended questions: a warm-up question (What kind of things do you like to do in your spare time?), reaction to PE class (i.e. likes and dislikes), types of activities/level of activity, how PE is managed, activity level of youth and adults at school and projecting to activity in the future. Items 3–6 were designed to explore the presence or absence of the LEAP essential elements. Sample items from the interviews and focus groups are provided in Table II.

Step 3: develop criteria for evidence of implementation at follow-up

Criteria for evidence of implementation at follow-up

Determining implementation at follow-up required several steps—assessing the presence of essential elements using single data sources, assessing the presence of essential elements using multiple data sources and using data on all the essential elements to establish evidence of implementation for each school. For the single data sources, an essential element was considered to be present if it was observed ‘most’ or ‘all’ of the time (i.e. rated 2 or 3 on the observational checklist) or was identified in transcripts of focus groups or interviews by two independent coders.
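The two single-source rules above can be written as small predicates. This Python sketch is illustrative only; the element codes used in the example are hypothetical labels, and the coder-agreement rule is modeled as a set intersection under the assumption that each coder produces a set of element codes per transcript.

```python
# Ratings that count as 'most' (2) or 'all' (3) of the time on the 0-3 checklist.
PRESENT_RATINGS = frozenset({2, 3})


def present_in_observation(rating: int) -> bool:
    """Observation rule: an element is present if it was rated 2 or 3."""
    return rating in PRESENT_RATINGS


def present_in_transcript(coder_a: set[str], coder_b: set[str]) -> set[str]:
    """Qualitative rule: keep only elements that BOTH independent coders
    identified in the same focus group or interview transcript."""
    return coder_a & coder_b


# Hypothetical element codes assigned by two coders to one transcript.
coder_a = {"gender_separated_pe", "classes_fun", "lifelong_pa"}
coder_b = {"classes_fun", "lifelong_pa", "behavioral_skills"}
print(sorted(present_in_transcript(coder_a, coder_b)))  # ['classes_fun', 'lifelong_pa']
print([present_in_observation(r) for r in range(4)])    # [False, False, True, True]
```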

Considering multiple data sources, an instructional essential element was considered present in the school if at least two of the three data sources (observational checklist, focus groups, interviews) identified the element. Because fewer data sources captured each environmental essential element (the observational checklist, for example, assessed only the media environment), an environmental element was considered present if it was identified by at least one data source.
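The triangulation rule can be expressed as a per-category threshold on the number of sources showing evidence. In this minimal Python sketch the thresholds (two sources for instructional elements, one for environmental elements) come from the text; the source names in the example calls are hypothetical labels.

```python
# Minimum number of data sources that must show evidence for an element
# to count as present in a school (thresholds stated in the text).
MIN_SOURCES = {"instructional": 2, "environmental": 1}


def element_present(category: str, sources_with_evidence: set[str]) -> bool:
    """Combine single-source results into one school-level decision."""
    try:
        threshold = MIN_SOURCES[category]
    except KeyError:
        raise ValueError(f"unknown category: {category!r}") from None
    return len(sources_with_evidence) >= threshold


print(element_present("instructional", {"observation"}))                  # False
print(element_present("instructional", {"observation", "focus_groups"}))  # True
print(element_present("environmental", {"team_member_interview"}))        # True
```

Using a set of source names rather than a raw count prevents the same source from being counted twice.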

Based on the triangulation of data from multiple data sources, evidence of LEAP implementation at follow-up was determined for each school. A school was considered to have implemented LEAP at follow-up if ∼60% or more of the 11 essential elements (specifically, at least 7 of 11, or 64%) were present, including at least one essential element from each of the instructional and environmental categories. The ≥60% criterion was based on a review of previous implementation research indicating that implementation levels of about 60% can produce positive results and that implementation above 80% is rare [9]. Similarly, LEAP-like instructional practices were considered present in a school if a majority (4 of 7, or 57%) of the instructional essential elements were present, and a LEAP-like school environment was considered present if a majority (3 of 4, or 75%) of the environmental essential elements were present.
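The school-level criteria can be sketched as three predicates over per-category counts. This Python sketch encodes only the thresholds stated above (at least 7 of 11 with at least one element per category; 4 of 7 instructional; 3 of 4 environmental); the class and function names are hypothetical, and the example counts for schools B and D are taken from Table IV.

```python
from dataclasses import dataclass

N_ENVIRONMENTAL = 4  # environmental essential elements assessed at follow-up
N_INSTRUCTIONAL = 7  # instructional essential elements assessed at follow-up


@dataclass(frozen=True)
class SchoolCounts:
    environmental: int  # environmental elements present (0-4)
    instructional: int  # instructional elements present (0-7)

    def __post_init__(self) -> None:
        if not 0 <= self.environmental <= N_ENVIRONMENTAL:
            raise ValueError("environmental count out of range")
        if not 0 <= self.instructional <= N_INSTRUCTIONAL:
            raise ValueError("instructional count out of range")


def implementing(c: SchoolCounts) -> bool:
    """Overall rule: at least 7 of 11 elements, with at least one per category."""
    total = c.environmental + c.instructional
    return total >= 7 and c.environmental >= 1 and c.instructional >= 1


def leap_like_instruction(c: SchoolCounts) -> bool:
    return c.instructional >= 4  # majority: 4 of 7


def leap_like_environment(c: SchoolCounts) -> bool:
    return c.environmental >= 3  # majority: 3 of 4


# Counts for schools B and D as reported in Table IV.
for name, counts in {"B": SchoolCounts(2, 5), "D": SchoolCounts(0, 6)}.items():
    print(name, implementing(counts), leap_like_instruction(counts), leap_like_environment(counts))
# B True True False
# D False True False
```

Keeping the overall rule separate from the two category rules mirrors the text: a school such as B can meet the overall criterion without having a LEAP-like environment.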

Step 4: collect and organize data. (i) Collect data, (ii) synthesize/analyze data within each data collection tool and (iii) organize information into tables by school, essential element and data sources

A single trained process evaluator collected all data, including 32 observations at follow-up, with a minimum of two observations per school (schools are designated by the letters A–K). Focus groups were set up through the PE teachers. Interviews were set up by contacting former LEAP PE teachers and former LEAP team members. The evaluator interviewed 14 PE teachers (including teachers at former LEAP schools C, E and F who had not been involved in the LEAP intervention because of teacher turnover) and 18 former LEAP team members, all of whom had participated during the active LEAP intervention, and conducted 13 focus groups with current ninth-grade girls (total n = 89). Interviews and focus groups were recorded and transcribed. Two trained coders independently coded responses into tables using the LEAP essential elements as the code key; identification of an essential element theme required independent confirmation from both coders. Themes were organized into tables by school as evidence for the presence of LEAP-like elements. For example, if respondents at a school indicated that boys and girls participated in separate activities in PE, this was coded under ‘gender separation in PE’, one of the instructional essential elements, and provided evidence for the presence of this element at follow-up.

Results

Step 5: apply criteria for evidence of implementation at follow-up. (i) Apply criteria to assess essential element implementation for each data collection tool and considering multiple data sources and (ii) apply criteria to assess school-level LEAP implementation considering all intervention elements

We examined data from three sources to establish evidence for the instructional elements and from three sources to establish evidence for the environmental elements in each school. Results for the environmental and instructional essential elements, presenting evidence based on each data source, are shown in Table III and reveal evidence for some LEAP-like elements at follow-up in 10 of the 11 schools. These results are summarized in Table IV. Overall, five schools had 7–10 elements present (91%, 82%, 73%, 73% and 64%), five had 3–6 elements present (55%, 36%, 27%, 27% and 27%) and one school had no elements present at follow-up. Application of the criteria for overall school implementation (at least 7 of 11 elements present, including at least one environmental element) revealed that five schools (A, B, G, I and K) met the criteria for implementation at follow-up (Table IV). Six schools (A, B, D, G, I and K) met the criteria for instructional implementation at follow-up, and three (G, I and K) met the criteria for school environment implementation.
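The percentages and groupings summarized above follow directly from the per-school totals in Table IV. This Python sketch recomputes them as a consistency check; the totals are copied from the last row of Table IV, and the band labels are illustrative names, not terms from the study.

```python
# Total essential elements present per school at follow-up, copied from the
# 'Total elements each school' row of Table IV.
TOTALS = {"G": 10, "A": 9, "I": 8, "K": 8, "B": 7, "D": 6,
          "F": 4, "H": 3, "E": 3, "J": 3, "C": 0}
N_ELEMENTS = 11


def percent_present(n: int, total: int = N_ELEMENTS) -> int:
    """Whole-number percentage of essential elements present."""
    return round(100 * n / total)


def band(n: int) -> str:
    """Group schools in the way the Results text summarizes them."""
    if n >= 7:
        return "7 or more elements"
    if n >= 3:
        return "3 to 6 elements"
    return "no elements" if n == 0 else "1 to 2 elements"


bands: dict[str, list[str]] = {}
for school, n in TOTALS.items():
    bands.setdefault(band(n), []).append(f"{school} ({percent_present(n)}%)")

for label, schools in bands.items():
    print(f"{label}: n = {len(schools)} ({', '.join(schools)})")
```

Running it groups G, A, I, K and B in the top band, D, F, H, E and J in the middle band and C alone with no elements, matching the counts in the text.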

Table III.

Summary of data triangulation for establishing evidence of implementation at follow-up for environmental and instructional elements by school

| Schools (A–K) and data sourcesᵃ (1, 2, 3) |

| Environment essential element | A | B | C | D | E | F | G | H | I | J | K | |||||||||||||||||||||||

| 1 | 2 | 3 | 1 | 2 | 3 | 1 | 2 | 3 | 1 | 2 | 3 | 1 | 2 | 3 | 1 | 2 | 3 | 1 | 2 | 3 | 1 | 2 | 3 | 1 | 2 | 3 | 1 | 2 | 3 | 1 | 2 | 3 | ||

| 1. Support from administrator for PA | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | |||||||||||||||||||||||||

| 2. Active school PA team | ✓ | ✓ | ||||||||||||||||||||||||||||||||

| 3. Faculty/staff health promotion | ✓ | ✓ | ✓ | ✓ | ✓ | |||||||||||||||||||||||||||||

| 4. Messages promoting PA | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ||||||||||||||||||||||||

| Instruction essential element | Schools (A–K) and data sourcesᵃ (1, 3, 4) |

| A | B | C | D | E | F | G | H | I | J | K | ||||||||||||||||||||||||

| 1 | 3 | 4 | 1 | 3 | 4 | 1 | 3 | 4 | 1 | 3 | 4 | 1 | 3 | 4 | 1 | 3 | 4 | 1 | 3 | 4 | 1 | 3 | 4 | 1 | 3 | 4 | 1 | 3 | 4 | 1 | 3 | 4 | ||

| 5. Gender-separated PE classes | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | |||||||||||||||||||||||

| 6. Cooperative activities are included | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ||||||||||||||||||

| 7. Lifelong PA is emphasized | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | |||||||||||||

| 8. Classes are fun and enjoyable | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | |||||||||

| 9. Teaching methods are appropriate | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | |||||||||||||

| 10. Behavioral skills are taught | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | |||||||||||||||||||||

| 11. At least 50% of class is active | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | |||||||||||||||||||

✓ = evidence for presence of the indicated element for a given data source (one ✓ required for environmental elements; two ✓ required for instructional elements).

ᵃData sources: 1 = PE teacher interview; 2 = LEAP team member interview; 3 = ninth-grade PE observation; 4 = ninth-grade focus groups.

Table IV.

Overall school-level implementation at follow-up

| Essential element | G | A | I | K | B | D | F | H | E | J | C | Total |

| 1. Support for PA promotion from the school administrator | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | 6 | |||||

| 2. Active school PA team | ✓ | ✓ | 2 | |||||||||

| 3. Faculty health promotion provides adult modeling of PA | ✓ | ✓ | ✓ | ✓ | 4 | |||||||

| 4. Messages promoting PA are prominent in the school | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | 7 | ||||

| Total number of environmental elements and rating per schoolᵃ | 4/4 I | 2/4 N | 4/4 I | 3/4 I | 2/4 N | 0/4 N | 1/4 N | 0/4 N | 1/4 N | 2/4 N | 0/4 N | |

| 5. Gender-separated PE classes | ✓ | ✓ | ✓ | ✓ | 4 | |||||||

| 6. Cooperative activities are included | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | 6 | |||||

| 7. Lifelong PA is emphasized | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | 7 | |||

| 8. Classes are fun and enjoyable | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | 8 | |

| 9. Teaching methods are appropriate (e.g. emphasize small groups) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | 7 | ||||

| 10. Behavioral skills are taught | ✓ | ✓ | ✓ | 3 | ||||||||

| 11. At least 50% of class is active | ✓ | ✓ | ✓ | ✓ | 4 | |||||||

| Total number of instructional practice elements and rating per schoolᵇ | 6/7 I | 7/7 I | 4/7 I | 5/7 I | 5/7 I | 6/7 I | 3/7 N | 3/7 N | 2/7 N | 1/7 N | 0/7 N | |

| Total elements each school and final implementation rating | 10/11 I | 9/11 I | 8/11 I | 8/11 I | 7/11 I | 6/11 N | 4/11 N | 3/11 N | 3/11 N | 3/11 N | 0/11 N | |

✓ = evidence for presence of the indicated element for a given school (from Table III). Schools classified as ‘implementing’ at follow-up: G, A, I, K and B.

ᵃI = environment element implementation (evidence for at least 3/4 elements being implemented); N = not implementing (<3/4 being implemented).

ᵇI = instructional element implementation (evidence for at least 4/7 elements being implemented); N = not implementing (<4/7 being implemented).

Step 6: apply sustainability criteria. Assess sustainability of LEAP essential elements

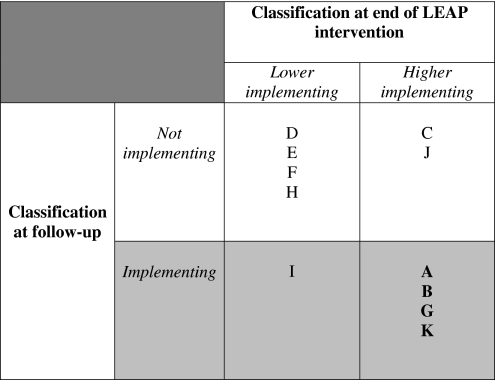

The criteria for sustainability required both higher implementation of LEAP essential elements at the end of the active intervention and implementation at follow-up. Of the 11 schools assessed at follow-up, six had been classified as ‘higher implementers’ at the end of the LEAP intervention [3], and in the study reported here, five schools were classified as ‘implementers’ at follow-up. As shown in Fig. 1, two ‘higher-implementing’ schools at the end of the intervention phase were not ‘implementers’ at follow-up, and one ‘lower-implementing’ school at the end of the intervention was an ‘implementer’ at follow-up. Therefore, four schools met the criteria for sustainability: ‘higher implementation’ at the end of the intervention and ‘implementation’ at follow-up.
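The sustainability classification amounts to a 2×2 cross-tabulation of end-of-intervention status against follow-up status. This Python sketch rebuilds that table from the counts given above; the function name is hypothetical, and the five lower-implementing schools are derived as 11 − 6 rather than reported directly.

```python
def cross_tab(n_higher: int, n_lower: int,
              higher_not_impl: int, lower_impl: int) -> dict[tuple[str, str], int]:
    """Build the end-of-intervention x follow-up 2x2 table from counts."""
    table = {
        ("higher", "implementing"): n_higher - higher_not_impl,
        ("higher", "not implementing"): higher_not_impl,
        ("lower", "implementing"): lower_impl,
        ("lower", "not implementing"): n_lower - lower_impl,
    }
    if min(table.values()) < 0:
        raise ValueError("counts are inconsistent")
    return table


# 11 schools at follow-up: six higher implementers, so 11 - 6 = 5 lower implementers.
table = cross_tab(n_higher=6, n_lower=5, higher_not_impl=2, lower_impl=1)
sustained = table[("higher", "implementing")]  # higher at end AND implementing at follow-up
followup_implementers = sustained + table[("lower", "implementing")]
print(sustained, followup_implementers)  # 4 5
```

Only the ‘higher, implementing’ cell counts as sustained; the lower-implementing school that was implementing at follow-up is an implementer but, by definition, not a sustainer.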

Fig. 1.

Continuation of LEAP essential elements at follow-up = ‘higher implementation’ of LEAP essential elements at end of active intervention and ‘implementation’ of LEAP essential elements at follow-up.

Step 7: use sustainability information. Use sustainability information descriptively and in outcome analyses

The follow-up implementation assessment revealed more evidence for instructional elements than environmental elements, particularly those related to how PE class was conducted. The instructional elements most likely to be present at follow-up were emphasis on fun (n = 8), lifelong PA (n = 7), girl-friendly teaching methods (n = 7) and cooperative activities (n = 6). Elements least likely to be retained were gender-separated PE (n = 4), half or more of class time spent in PA (n = 4) and teaching behavioral skills (n = 3). The most frequently implemented environmental elements were media messages promoting PA (n = 7) and administrative support for PA (n = 6); the least implemented were health promotion for staff (n = 4) and active team to assess, plan and promote PA in the school (n = 2).

These results (i.e. four former LEAP schools sustaining a substantial number of instructional and environmental elements at follow-up) were used in the outcome analysis models comparing vigorous PA in 12th-grade girls. As reported previously, these analyses revealed that significantly more 12th-grade girls (from the original LEAP cohort) in these four schools reported vigorous PA compared with girls in other former intervention and control schools [2], suggesting that sustained changes in organizational practices and environments can positively affect the PA of participants 3 years after termination of an active intervention.

Discussion

We found evidence for sustained comprehensive change in instructional practice and the school environment 3 years after the end of the active LEAP intervention in 4 of 11 former LEAP intervention schools. As reported previously, these sustained changes were related to a higher proportion of 12th-grade girls engaging in vigorous PA [2]. There was also evidence for implementation of LEAP-related instructional practices in six schools and of LEAP-related environmental changes in three schools, as well as evidence for some LEAP-related changes persisting in 10 of 11 schools. Overall, implementation at follow-up was higher for instructional than for environmental elements; this may be due in part to the greater degree of classroom teacher control over these elements relative to the school environment elements, which require cooperation of multiple stakeholders within the school. The instructional elements implemented most frequently were an emphasis on fun, lifelong PA, girl-friendly teaching methods and cooperative activities, whereas gender separation, higher activity levels and teaching behavioral skills were maintained less often at follow-up, similar to the pattern for implementation at the end of the intervention phase of the study. Environmental elements implemented most frequently at follow-up were messages and administrative support, whereas messages and the LEAP team were more commonly implemented at the end of the intervention.

It is difficult to compare these results with those of previous studies because few have assessed maintenance of health promotion intervention elements, fewer are specific to PA interventions for youth and none have focused entirely on changing environmental and instructional practice at the school level. For example, Scheirer [7] identified 17 sustainability studies conducted in a variety of settings and using a variety of definitions and methods, but only one program addressed PA and none were in school settings. Two studies have assessed sustainability of PA programs in school settings. A follow-up assessment of SPARK, a curriculum-based elementary school PE program, found evidence of long-term sustainability of organizational practices in PE [16]. Eighty-one percent of respondents reported using the SPARK program in a follow-up survey; however, the specific level of use was not assessed and the survey response rate was low (47%, 111/223). Furthermore, activity levels of children were not assessed.

CATCH also conducted a comprehensive follow-up study of the sustainability of instructional practices in PE and their impact on PA in children in intervention schools, in control schools exposed to the intervention and in unexposed control schools [17–19]. Results from these publications indicated sustained intervention activities in former CATCH PE intervention and control schools, which differed significantly from those in unexposed control schools. The amount of class time spent on CATCH activities was 33, 30 and 10% in former CATCH PE intervention schools, former CATCH control schools and unexposed control schools, respectively. The number of lessons taught (1.5 and 0.5) and adherence to the curriculum guide (1.5 and 1.2 on a scale of 1 = none to 5 = all of the time) were low in former intervention and comparison schools, respectively. Children maintained PA levels in PE at around the recommended 50% of class time in moderate-to-vigorous physical activity (MVPA); however, because of large secular trends, there were no differences among the three groups at follow-up (percent of time in MVPA: 50, 48 and 48% in former CATCH PE intervention schools, former CATCH control schools and unexposed control schools, respectively). Direct comparisons between LEAP and CATCH are difficult because of different settings (high versus elementary school), different foci (largely environmental change versus curricula), different methods for assessing sustainability and different approaches to summarizing sustainability (school-by-school versus element specific).

It is possible that the sustainability of LEAP in some schools was due in part to its unique intervention approach, designed to encourage appropriate organizational adaptation of the LEAP intervention, although we did not directly assess this. The LEAP approach was consistent with recommendations on working in partnership with community participants [20] and defining intervention fidelity based on a standardized process [21]. These recommendations pertaining to complex interventions in field settings are intended to facilitate implementation and sustainability.

Implementation at follow-up varied among the schools. Ideally, potential factors affecting implementation and sustainability would have been assessed in LEAP; however, we assessed only one potential influence, teacher turnover. None of the schools with PE teacher turnover (C, E and F) met the criteria for implementation at follow-up. These schools were also implementing at low levels at the end of the intervention, well before they experienced teacher turnover. This could suggest organizational issues underlying both implementation challenges and teacher turnover; however, we did not assess this. Nevertheless, both of these schools had evidence for LEAP-like elements at follow-up (three and four elements, respectively).

Unique features of the LEAP sustainability model include assessing sustainability of changes in instructional practices and the environment (versus curriculum activities), basing assessment on an essential element framework that defined complete and acceptable delivery at the beginning of the project, using multiple data sources to assess sustainability of the essential elements, assessing implementation longitudinally and assessing the impact of sustained instructional practice and environmental change on vigorous PA [2]. The approach used in LEAP is consistent with the emphasis on assessing implementation and maintenance in the RE-AIM model [22] and the four-step approach recommended by Durlak [23] that includes (i) defining active ingredients (LEAP Essential Elements), (ii) using good methods to measure implementation, (iii) monitoring implementation and (iv) relating implementation to outcomes. This model can be used to assess implementation and sustainability of changes introduced through other complex interventions in field settings. We also addressed several conceptual and methodological issues through this process that are relevant for assessing implementation and sustainability of activities in other settings. These include defining implementation and sustainability, identifying appropriate methods (data sources and tools), developing an approach to triangulating data from multiple sources and establishing criteria for evidence of implementation at follow-up.

The LEAP essential elements framework that defined complete and acceptable delivery for instruction and school environment greatly facilitated defining implementation during and following the LEAP intervention. Sustainability was defined based on evidence of implementation at both the end of the active intervention and 3 years following termination of the active intervention, as we believed this best reflected sustained practice. Other approaches could include outcomes such as institutionalization of an intact program or benefits realized from the program [5–7, 13–15]. A curriculum-based program, for example, would likely entail a different framework, sustainability focus and methodology [24].
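The two-time-point definition of sustainability used here can be written as a simple rule. The sketch below is illustrative only: the school labels and statuses are hypothetical, and each status is assumed to have already been determined with the element-level implementation criteria.

```python
from typing import Dict, Tuple


def sustained(implemented_at_end: bool, implemented_at_follow_up: bool) -> bool:
    """Sustained = evidence of implementation at the end of the active
    intervention AND at the 3-year follow-up."""
    return implemented_at_end and implemented_at_follow_up


# Hypothetical schools: (implemented at end of intervention, implemented at follow-up)
statuses: Dict[str, Tuple[bool, bool]] = {
    "school_1": (True, True),
    "school_2": (True, False),
    "school_3": (False, False),
}

for school, (at_end, at_follow_up) in statuses.items():
    label = "sustained" if sustained(at_end, at_follow_up) else "not sustained"
    print(f"{school}: {label}")
```

Under this rule, a school that met the implementation criteria only at follow-up would not be classified as sustained.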

Intervention-related data collection tools used to assess implementation during the LEAP study were not appropriate for the 3-year follow-up study, although we were able to use the same observational tool as in the initial study. Our strategy was to tap into the same data sources using qualitative methodology. This approach was facilitated by using the essential elements framework as the basis for coding interview and focus group narratives. Similarly, intervention-specific language was not appropriate at follow-up and was modified. Ideally, future work will entail the development of quantitative tools and methodology that can be used to assess implementation throughout the program lifecycle [7].

Multiple data sources are recommended for accurately assessing program implementation [25, 26], but there are few models for triangulating across multiple data sources to assess implementation. The approach used in this study was based on the implementation assessment in LEAP previously reported by Saunders et al. [3] and serves as a model for data triangulation to assess implementation with multiple data sources.

Defining criteria for evidence of implementation is an important process, and the specific definition will vary from program to program. In LEAP, this process required multiple steps. The initial level was defining evidence supporting implementation of each essential element based on a single data source. The next level involved considering multiple data sources (triangulation) to define school-level implementation for a given essential element. Finally, information about implementation of multiple essential elements was used to identify school-level implementation of the instructional and environmental components, as well as overall implementation. A recent review indicated that implementing ≥60% of program elements was associated with positive outcomes [9], which is similar to the criteria we used to assess implementation at follow-up, 3 years after the termination of the active intervention. In addition, criteria for evidence of implementation in other programs must consider the program framework and goals, the number and nature of program components and/or elements, and the number of data sources and types of data collection tools. The specific methodology for data collection is constrained by resources (e.g. availability and scheduling of skilled evaluation personnel) and practical considerations (e.g. potential disruption of organizational operations and intervention activities). Therefore, planning for implementation and sustainability assessment should ideally take place as part of proposal development.
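The multi-level process described above (single-source evidence, triangulation per element, then component-level and overall implementation) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the LEAP scoring procedure: the data layout, the element labels (I1–I7, E1–E3, mirroring the 7 instructional and 3 environmental required elements), the rule that an element needs evidence from at least two data sources, and the rule that overall implementation requires both components are all hypothetical. The 0.6 threshold simply mirrors the ≥60% figure from the review cited in the text.

```python
from typing import Dict

# Hypothetical layout: element label -> {data source -> was evidence found?}
ElementEvidence = Dict[str, Dict[str, bool]]


def element_implemented(sources: Dict[str, bool], min_sources: int = 2) -> bool:
    """Triangulation step: count an element as implemented when at least
    `min_sources` data sources show evidence for it (2 is an assumed value)."""
    return sum(sources.values()) >= min_sources


def component_implemented(elements: ElementEvidence, threshold: float = 0.6,
                          min_sources: int = 2) -> bool:
    """Component step: implemented when the share of triangulated elements
    reaches `threshold` (0.6 mirrors the >=60% figure from the cited review)."""
    if not elements:
        return False
    n_done = sum(element_implemented(s, min_sources) for s in elements.values())
    return n_done / len(elements) >= threshold


def school_implemented(instructional: ElementEvidence,
                       environmental: ElementEvidence) -> bool:
    """Overall step (assumed rule): both components must meet the criterion."""
    return component_implemented(instructional) and component_implemented(environmental)


# Illustrative data only: evidence from observation, interviews and focus groups.
strong = {"observation": True, "interview": True, "focus_group": False}
weak = {"observation": True, "interview": False, "focus_group": False}
instructional = {f"I{i}": (strong if i <= 5 else weak) for i in range(1, 8)}
environmental = {"E1": strong, "E2": weak, "E3": weak}

print(component_implemented(instructional))  # 5 of 7 elements -> True
print(component_implemented(environmental))  # 1 of 3 elements -> False
print(school_implemented(instructional, environmental))  # False
```

Keeping the triangulation rule and the threshold as parameters reflects the point made in the text that the specific criteria will vary with each program's framework, elements and data sources.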

Limitations of this study include the small sample and the use of newly developed measures. Future studies should assess implementation and sustainability in larger samples of organizations and should assess the validity and reliability of data collection instruments, including interrater reliability for observations and test–retest reliability for surveys and interviews. In addition, future studies should assess potential influences on implementation and sustainability, including contextual factors.

Funding

National Heart, Lung, and Blood Institute (NIH HL 57775).

Conflict of interest statement

None declared.

Acknowledgments

The authors thank Gaye Groover Christmus, MPH, for editing the manuscript.

References

1. Pate RR, Ward DS, Saunders RP, et al. Promotion of physical activity in high school girls: a randomized controlled trial. Am J Public Health. 2005;95:1582–7. doi: 10.2105/AJPH.2004.045807.

2. Pate RR, Saunders R, Dishman RK, et al. Long-term effects of a physical activity intervention in high school girls. Am J Prev Med. 2007;33:276–80. doi: 10.1016/j.amepre.2007.06.005.

3. Saunders RP, Ward DS, Felton GM, et al. Examining the link between program implementation and behavior outcomes in the lifestyle education for activity program (LEAP). Eval Program Plann. 2006;29:352–64. doi: 10.1016/j.evalprogplan.2006.08.006.

4. Ward DS, Saunders R, Felton GM, et al. Implementation of a school environment intervention to increase physical activity in high school girls. Health Educ Res. 2006;21:896–910. doi: 10.1093/her/cyl134.

5. Johnson K, Hays C, Center H, et al. Building capacity and sustainable prevention innovations: a sustainability planning model. Eval Program Plann. 2004;27:135–49.

6. Shediac-Rizkallah MC, Bone LR. Planning for the sustainability of community-based health programs: conceptual frameworks and future directions for research, practice, and policy. Health Educ Res. 1998;13:87–108. doi: 10.1093/her/13.1.87.

7. Scheirer MA. Is sustainability possible? A review and commentary on empirical studies of program sustainability. Am J Eval. 2005;26:320–47.

8. Greenhalgh T, Robert G, Macfarlane F, et al. Diffusion of innovations in service organizations: systematic review and recommendations. Milbank Q. 2004;82:581–629. doi: 10.1111/j.0887-378X.2004.00325.x.

9. Durlak JA, DuPre EP. Implementation matters: a review of research on the influence of implementation on program outcomes and the factors affecting implementation. Am J Community Psychol. 2008;41:317–50. doi: 10.1007/s10464-008-9165-0.

10. Pluye P, Potvin L, Denis J-L, et al. Program sustainability begins with the first events. Eval Program Plann. 2005;28:123–37.

11. Saunders RP, Evans MH, Joshi P. Developing a process-evaluation plan for assessing health promotion program implementation: a how-to guide. Health Promot Pract. 2005;6:134–47. doi: 10.1177/1524839904273387.

12. Bowman CC, Sobo EJ, Asch SM, et al. Measuring persistence of implementation: QUERI series. Implement Sci. 2008;3:21. doi: 10.1186/1748-5908-3-21.

13. Bartholomew LK, Parcel GS, Kok G, et al. Planning Health Promotion Programs: Intervention Mapping. 2nd edn. San Francisco, CA: Jossey-Bass; 2006.

14. Pluye P, Potvin L, Denis J-L. Making public health programs last: conceptualizing sustainability. Eval Program Plann. 2004;27:121–33.

15. Scheirer MA. Defining sustainability outcomes of health programs: illustrations from an on-line survey. Eval Program Plann. 2008;31:335–46. doi: 10.1016/j.evalprogplan.2008.08.004.

16. Dowda M, Sallis JF, McKenzie TL, et al. Evaluating the sustainability of SPARK physical education: a case study of translating research into practice. Res Q Exerc Sport. 2005;76:11–9. doi: 10.1080/02701367.2005.10599257.

17. Kelder SH, Mitchell PD, McKenzie TL, et al. Long-term implementation of the CATCH physical education program. Health Educ Behav. 2003;30:463–75. doi: 10.1177/1090198103253538.

18. McKenzie TL, Li D, Derby CA, et al. Maintenance of effects of the CATCH physical education program: results from the CATCH-ON study. Health Educ Behav. 2003;30:447–62. doi: 10.1177/1090198103253535.

19. Hoelscher DM, Feldman HA, Johnson CA, et al. School-based health education programs can be maintained over time: results from the CATCH Institutionalization study. Prev Med. 2004;38:594–606. doi: 10.1016/j.ypmed.2003.11.017.

20. Green L, Daniel M, Novick L. Partnerships and coalitions for community-based research. Public Health Rep. 2001;116(Suppl. 1):20–31. doi: 10.1093/phr/116.S1.20.

21. Hawe P, Shiell A, Riley T. Complex interventions: how “out of control” can a randomised controlled trial be? BMJ. 2004;328:1561–3. doi: 10.1136/bmj.328.7455.1561.

22. Klesges LM, Estabrooks PA, Dzewaltowski DA, et al. Beginning with the application in mind: designing and planning health behavior change interventions to enhance dissemination. Ann Behav Med. 2005;29(Suppl):66–75. doi: 10.1207/s15324796abm2902s_10.

23. Durlak JA. Why program implementation is important. In: Durlak J, Ferrari J, editors. Program Implementation in Preventive Trials. Philadelphia, PA: Haworth Press; 1998. pp. 5–18.

24. McGraw SA, Sellers DE, Stone EJ, et al. Measuring implementation of school programs and policies to promote healthy eating and physical activity among youth. Prev Med. 2000;31:S86–97.

25. Bouffard JA, Taxman FS, Silverman R. Improving process evaluations of correctional programs by using a comprehensive evaluation methodology. Eval Program Plann. 2003;26:149–61. doi: 10.1016/S0149-7189(03)00010-7.

26. Dusenbury L, Brannigan R, Falco M, et al. A review of research on fidelity of implementation: implications for drug abuse prevention in school settings. Health Educ Res. 2003;18:237–56. doi: 10.1093/her/18.2.237.