Abstract

Humans and other primates are adept at using the direction of another's gaze or head turn to infer where that individual is attending. Research in macaque neurophysiology suggests that anterior superior temporal sulcus (STS) contains a direction-sensitive code for such social attention cues. By contrast, most human functional magnetic resonance imaging (fMRI) studies report that posterior STS is responsive to social attention cues. It is unclear whether this functional discrepancy is caused by a species difference or by experimental design differences. Furthermore, social attention cues are dynamic in naturalistic social interaction, but most studies to date have been restricted to static displays. In order to address these issues, we used multivariate pattern analysis of fMRI data to test whether response patterns in human right STS distinguish between leftward and rightward dynamic head turns. Such head turn discrimination was observed in right anterior STS/superior temporal gyrus (STG). Response patterns in this region were also significantly more discriminable for head turn direction than for rotation direction in physically matched ellipsoid control stimuli. Our findings suggest a role for right anterior STS/STG in coding the direction of motion in dynamic social attention cues.

Keywords: face perception, fMRI, gaze, head, MVPA

Introduction

Humans and other primates share a remarkable ability to accurately perceive where other individuals are attending and use this information to change their own attentional state (Deaner and Platt 2003). Many higher order social cognitive processes depend on such gaze following behaviors (Frith and Frith 2008; Klein et al. 2009). Although changes to gaze direction and head view are inherently dynamic, to date the majority of human neuroimaging research has used static facial stimuli to study the neural representation of such social cues (Nummenmaa and Calder 2009). In view of macaque neurophysiology evidence that neurons responsive to dynamic head turns do not respond to static views of the same head (Hasselmo et al. 1989), it is vital to explore the neural coding of dynamic social stimuli. Here, we demonstrate that a region in human superior temporal sulcus (STS)/superior temporal gyrus (STG) contains a distributed representation of perceived head turn direction, thus supplying a necessary perceptual component to support a range of social behaviors.

Neurons in macaque anterior STS play a well-established role in representing the perceived direction of others' social attention cues, as conveyed by head orientation, gaze direction, and body posture (Perrett et al. 1982, 1992; Perrett, Smith, Potter, et al. 1985; Wachsmuth et al. 1994). However, these constitute only a minority of visually responsive STS neurons and are either spatially distributed (Hasselmo et al. 1989) or are organized into fine-grained patches well beyond the resolution of conventional functional MRI (fMRI; Perrett et al. 1984). This distributed representation poses a significant signal-to-noise challenge for attempts to study similar effects with human fMRI, where each voxel likely samples millions of neurons in ways that are only indirectly related to the neuronal spike trains commonly measured in macaque neurophysiology (Logothetis 2008; Kriegeskorte et al. 2009).

Unlike the typical anterior STS region identified by research in the macaque, most human fMRI studies report that social attention cues activate posterior STS and regions of adjacent STG and middle temporal gyrus (MTG; Hein and Knight 2008; Nummenmaa and Calder 2009). Similar posterior temporal regions are also more responsive to faces than to control stimuli (Andrews and Ewbank 2004; Fox et al. 2009). Most of these studies find that posterior STS is more responsive to averted than to direct gaze (Nummenmaa and Calder 2009), but the opposite pattern has also been observed (e.g., Pageler et al. 2003; Pelphrey et al. 2004). Furthermore, posterior STS responds more when an actor gazes away from a target than when the gaze direction is congruent with the target location (Pelphrey et al. 2003), suggesting that posterior STS is influenced by contextual effects, rather than by the direction of the social attention cue as such. Even in the absence of overt contextual manipulations, comparisons between direct and averted gaze may indirectly manipulate the engagement of approach/avoidance mechanisms and other higher order social cognitive functions associated with direct and averted gaze, such as theory of mind responses to eye contact (Emery 2000; Senju and Johnson 2009; Shepherd 2010). Thus, the litmus test for direction sensitivity is whether brain responses to different averted social attention cues can be distinguished in the absence of other contextual manipulations.

When such tests for direction sensitivity between different averted cues were carried out, one study found direction-sensitive fMRI adaptation to static images of gaze cues in right anterior, rather than posterior, STS (Calder et al. 2007). Another study that applied multivariate pattern analysis (MVPA) to a posterior STS region of interest (ROI) observed no distinction between different averted views of static heads (Natu et al. 2010) but did find that this ROI distinguished direct from averted head views across different head identities, suggesting an identity-invariant representation. These head view effects are consistent with the sensitivity for direct against averted gaze observed in previous univariate research (Nummenmaa and Calder 2009). Taken together, this literature suggests a broad role for posterior STS in representing social attention cues, but unlike the evidence from macaque anterior STS, there is little indication that posterior STS represents such cues in a direction-sensitive manner.

Outside the laboratory, cues to another's focus of attention are intrinsically dynamic in nature, but this issue has received limited attention in controlled experiments. There is initial evidence that a small subset of neurons in macaque anterior STS are tuned to dynamic changes in head turn direction (Perrett, Smith, Mistlin, et al. 1985; Hasselmo et al. 1989), but it remains unclear how the human brain codes such stimuli. In humans, posterior STS responds more to dynamic head turns than to both scrambled controls and static head views. However, neither anterior nor posterior STS has been found to show direction-sensitive coding of head turn direction, as measured by standard univariate fMRI (Lee et al. 2010). This absence of direction sensitivity is unsurprising, since neurons with such responses are unlikely to be clustered at a sufficiently large spatial scale to be detectable with univariate fMRI (Perrett et al. 1984; Hasselmo et al. 1989).

MVPA has recently been applied to detect representations thought to be coded in fine-grained patterns beyond the resolution of standard fMRI (Kamitani and Tong 2005; Haynes and Rees 2006; Shmuel et al. 2010). In the current study, we apply this method to determine whether distributed response patterns in the human STS region contain distinct direction-sensitive codes for observed head turns. If a classifier can use response patterns from the STS region to distinguish between leftward and rightward head turns, this would suggest that the underlying response patterns code head turn direction. However, leftward and rightward motion can also produce classification effects in regions without selectivity for social attention cues (Kamitani and Tong 2006). In order to avoid such confounding contributions of low-level motion, we included a set of rotating ellipsoid control videos. Previous work investigating head turn responses in macaque neurophysiology (Perrett, Smith, Mistlin, et al. 1985; Hasselmo et al. 1989) or direction-specific responses to static gaze (Calder et al. 2007) did not include such nonsocial controls, so an important aim of the current study was to establish that any direction-sensitive effects are specific to the social stimuli. Furthermore, we aimed to localize pattern effects to specific regions through the use of a searchlight algorithm that operated within the anatomically defined STS region. The STS region in this study included STG and MTG, in line with previous findings that social perception and gaze stimuli produce peaks that sometimes fall outside the STS proper (Allison et al. 2000; Nummenmaa and Calder 2009).

Materials and Methods

Participants

Twenty-one right-handed healthy volunteers with normal or corrected-to-normal vision participated in the study (12 males, mean age 29 years, age range 22–38). Volunteers provided informed consent as part of a protocol approved by the Cambridge Psychology Research Ethics Committee. Four volunteers were removed from further analysis: 2 due to poor performance on the behavioral task in the scanner (accuracy below 50%) and 2 due to fatigue and excessive head movements.

Experimental Design

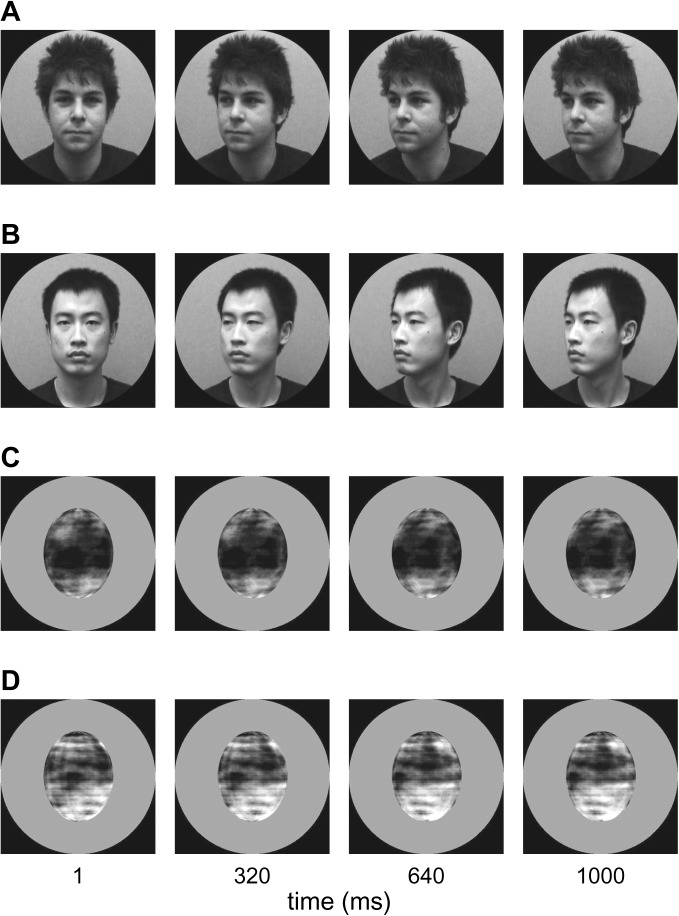

Volunteers viewed 1000-ms video clips of 45° leftward and rightward head turns and comparable ellipsoid rotations (Fig. 1; for example videos, see Supplementary Material). Volunteers were instructed to monitor the stimulus set for infrequent deviant response trials (1 of the 8 experimental videos, rotated 4° from the upright position) and responded to detected deviants with a button press. The deviant response trials were drawn from all experimental conditions, and the degree of rotation was chosen after behavioral pilot tests to produce an attentionally demanding task without ceiling effects.

Figure 1.

Example video frames for turning heads (A–B) and rotating ellipsoids (C–D). The stimuli were full color but are presented in grayscale for printing purposes (for full color stimuli, see Supplementary Videos). The videos were presented at 24 frames per second. All video frames are from leftward motion conditions. Rightward conditions were created through mirror reversal of the same video clips. The 2 ellipsoid identities (C–D) were created by Fourier-scrambling face textures from the first frame of the 2 head videos (A–B).

Two actors with matched head motion patterns were selected for the head turn videos. The ellipsoid control stimuli were rendered and animated in Matlab (Mathworks) and were texture mapped with the Fourier-scrambled face textures from the 2 head identities. The 2 motion directions were created by mirror reversing video clips with a single direction, thus ensuring that the stimulus set was physically matched across motion directions. This produced a total of 8 stimuli (2 heads, 2 ellipsoids, each rotating leftward or rightward), which were treated as individual conditions.
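The construction of the stimulus set can be sketched in a few lines. This is a minimal illustration only: the stimuli themselves were produced in Matlab, and the label strings and the frame representation (a list of pixel rows) are assumptions made for the example.

```python
from itertools import product

# Factor levels taken from the text; the label strings are illustrative.
STIMULUS_TYPES = ("head", "ellipsoid")
IDENTITIES = (1, 2)
DIRECTIONS = ("left", "right")


def mirror_frame(frame):
    """Mirror-reverse one frame, given as a list of pixel rows."""
    return [list(reversed(row)) for row in frame]


def mirror_clip(frames):
    """Mirror-reverse every frame, turning a leftward clip into a rightward one."""
    return [mirror_frame(frame) for frame in frames]


# 2 stimulus types x 2 identities x 2 directions = 8 conditions.
conditions = list(product(STIMULUS_TYPES, IDENTITIES, DIRECTIONS))
```

Because the rightward clip is an exact mirror image of the leftward clip, mirroring twice recovers the original frames, which is what guarantees that the two directions are physically matched.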

The stimuli were back-projected onto a screen in the scanner, which volunteers viewed via a tilted mirror. The stimuli were presented on a black background within a circular aperture (7° visual angle in diameter). The experiment was controlled using Matlab and the Psychophysics toolbox (Brainard 1997).

The experiment was divided into sets of 240 trials, each of which was independently randomized. Parameter estimates from each set formed an independent set of training examples for classification. The trials were presented within a rapid event-related design. Four volunteers completed a 6-set version of the experiment (approximately 40 min effective time) and 13 completed a 12-set version (80 min). Each set contained 240 trials: 80 null trials, where a fixation cross remained on the screen throughout the trial (1500 ms) and 160 experimental trials (80 heads, 80 ellipsoids), where each trial consisted of a video clip (1000 ms) followed by fixation (500 ms). Each condition was repeated 18 times in a set. Sixteen deviant response trials were randomly sampled from the experimental conditions and responses to these trials were modeled with a separate nuisance regressor of no interest. The trials within the set were presented in a pseudorandomized order, where repeats of the same trial were slightly clustered in order to increase design efficiency (Henson 2003). Every second set was followed by a 15-s rest period, which was cued by a text prompt on the screen. The scan acquisition continued during the rest periods, and volunteers were instructed to remain still.
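The trial counts above can be checked with simple arithmetic. The sketch below assumes that the 160 experimental trials per set comprise the 8 conditions repeated 18 times plus the 16 deviant response trials; this reading is an interpretation of the description rather than something stated explicitly, and the durations exclude rest periods.

```python
TRIAL_MS = 1500             # video (1000 ms) + fixation (500 ms), or one null trial
N_CONDITIONS = 8
REPEATS_PER_CONDITION = 18
N_DEVIANTS = 16
N_NULL = 80


def set_summary():
    """Trial counts and duration of one set under the assumptions above."""
    standard = N_CONDITIONS * REPEATS_PER_CONDITION
    experimental = standard + N_DEVIANTS   # assumed to include deviants
    total = experimental + N_NULL
    return {
        "standard": standard,
        "experimental": experimental,
        "total": total,
        "minutes": total * TRIAL_MS / 60000,
    }


summary = set_summary()
minutes_6_sets = 6 * summary["minutes"]
minutes_12_sets = 12 * summary["minutes"]
```

Under these assumptions one set lasts 6 min, so the 6-set and 12-set versions contain 36 and 72 min of trials, in line with the approximate effective times given above.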

Imaging Acquisition

Scanning was carried out at the MRC Cognition and Brain Sciences Unit, Cambridge, United Kingdom, using a 3-T TIM Trio Magnetic Resonance Imaging scanner (Siemens), with a head coil gradient set. Functional data were collected using high-resolution echo planar T2*-weighted imaging (EPI, 40 oblique axial slices, repetition time [TR] 2490 ms, echo time [TE] 30 ms, in-plane resolution 2 × 2 mm, slice thickness 2 mm plus a 25% slice gap, 192 × 192 mm field of view). The acquisition window was tilted up approximately 30° from the horizontal plane to provide complete coverage of the occipital and temporal lobes. Preliminary pilot tests suggested that the use of this high-resolution EPI sequence resulted in reduced signal dropout in the anterior STS region, relative to a standard resolution sequence (3 × 3 × 3.75 mm voxels). All volumes were collected in a single continuous run for each volunteer. The initial 6 volumes from each run were discarded to allow for T1 equilibration effects. T1-weighted structural images were also acquired (magnetization prepared rapid gradient echo, 1 mm isotropic voxels).

Imaging Analysis

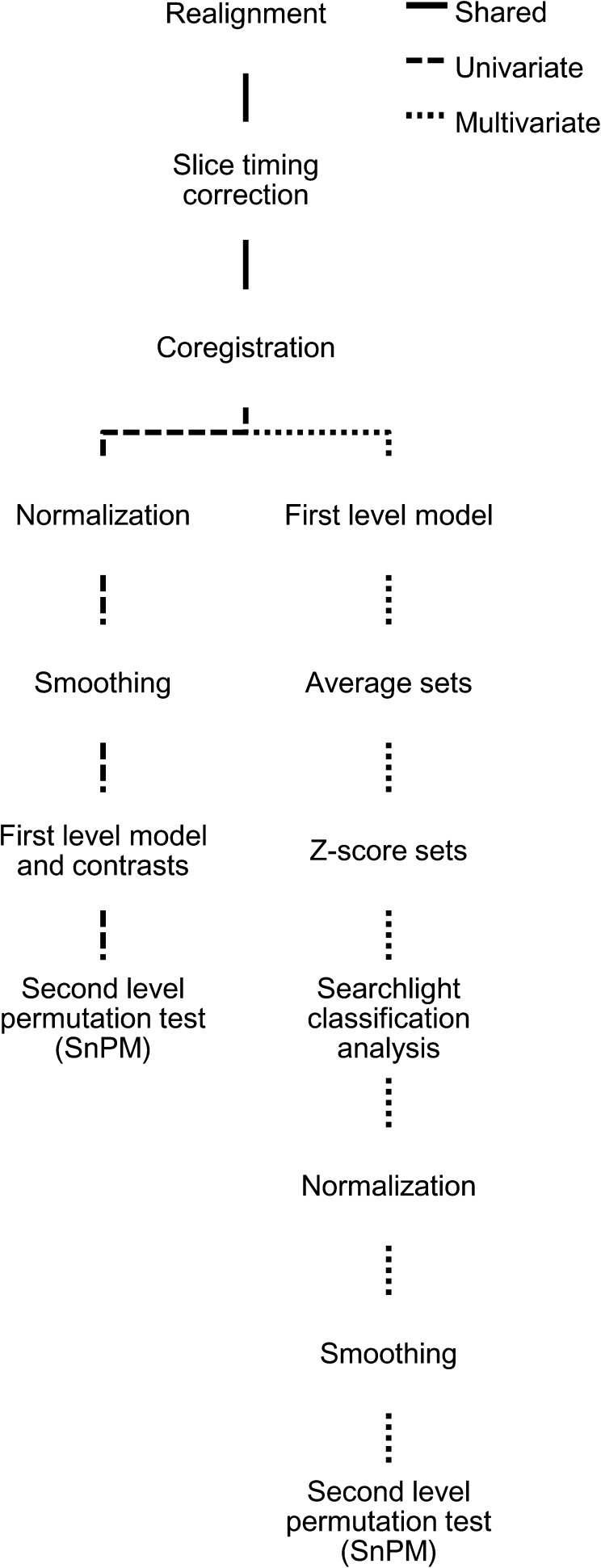

Imaging data were processed using statistical parametric mapping 5 (SPM5; www.fil.ion.ucl.ac.uk/spm). All functional volumes were realigned to the first nondiscarded volume, slice time corrected, and coregistered to the T1 structural volume. The processing pathways for univariate analysis and MVPA diverged after these common steps (Fig. 2).

Figure 2.

Processing pathways for fMRI analysis. All processing nodes take the result of the previous node in the hierarchy as input. With the exception of the searchlight classification analysis, all processing steps were implemented using standard SPM5 functionality.

Univariate analysis was carried out using standard processing steps in SPM5. Structural volumes were segmented into gray and white matter partitions and spatially normalized to the Montreal Neurological Institute (MNI) template using combined segmentation and normalization routines. Functional volumes were normalized according to the parameters of this transformation, smoothed (10-mm full width at half maximum Gaussian kernel, FWHM), and high pass filtered to remove low frequency drift (128-s cutoff period).

Subject-specific generalized linear models were used to analyze the data. The models included one regressor per condition and nuisance regressors for deviant response trials, volunteer responses to nondeviant trials, and for nulling scans that contained excessive noise or movement (Lemieux et al. 2007; Rowe et al. 2008; greater than 10 units intensity difference from the mean scaled image variance or more than 0.3 mm translational or 0.035 radians rotational movement relative to the previous volume). The volunteer-specific models included 0–135 such scan nulling regressors (mean 35). The experimental predictors were convolved with a canonical hemodynamic response function, and contrast images were generated based on the fitted responses. These contrast images were then entered into second-level permutation-based random effects models using statistical nonparametric mapping (SnPM; Nichols and Holmes 2002; 10 000 permutations, 10-mm FWHM variance smoothing).
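The movement part of the scan nulling criterion can be sketched as follows. This is an illustration under stated assumptions, not the code used in the study: it takes one row of six realignment parameters per volume (three translations in mm, three rotations in radians), applies the thresholds to each axis separately (the text does not say whether a per-axis or combined measure was used), and omits the intensity criterion. The function names are hypothetical.

```python
def flag_motion_volumes(params, max_trans_mm=0.3, max_rot_rad=0.035):
    """Return indices of volumes that moved too much relative to the previous one.

    `params` holds one row per volume: x, y, z (mm), then three rotations (rad).
    Thresholds are applied per axis, which is an assumption.
    """
    flagged = []
    for i in range(1, len(params)):
        deltas = [abs(cur - prev) for cur, prev in zip(params[i], params[i - 1])]
        if max(deltas[:3]) > max_trans_mm or max(deltas[3:6]) > max_rot_rad:
            flagged.append(i)
    return flagged


def nulling_regressors(flagged, n_volumes):
    """Build one indicator regressor (1 at the flagged volume, 0 elsewhere) per scan."""
    return [[1 if v == idx else 0 for v in range(n_volumes)] for idx in flagged]
```

Each indicator regressor absorbs the signal of a single flagged volume, so that the volume no longer contributes to the condition estimates.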

Multivariate pattern analyses were carried out using functional volumes that had been realigned and slice timing corrected but had not been spatially normalized to the MNI template (Fig. 2). Each volunteer's data were modeled using a generalized linear model with similar regressors as in the univariate analysis, with the exception that each set of trials was modeled using a separate set of regressors. Individual parameter volumes from the first half of the data set were then averaged pairwise with the corresponding volumes from the second half of the data set, thus reducing session effects at the expense of halving the number of training examples. This produced 3 or 6 final sets of examples to be used for classification, depending on the number of available sets before averaging. The example volumes were z-scored so that each voxel within a set had a mean of 0 and a standard deviation of 1 across examples in that set. Finally, each example was gray matter masked using the tissue probability maps generated by the segmentation processing stage.
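The pairwise averaging and z-scoring steps can be illustrated with a short sketch. It assumes that parameter estimates are held as nested lists, `sets[s][c]` being the voxel vector for condition `c` in set `s`, and that the standard deviation is the population form; neither detail is specified in the text, and the function names are hypothetical.

```python
from statistics import mean, pstdev


def average_halves(sets):
    """Average set k of the first half with set k of the second half.

    `sets[s][c]` is the parameter estimate vector (one value per voxel)
    for condition c in set s, with sets in acquisition order.
    """
    if len(sets) % 2:
        raise ValueError("expected an even number of sets")
    half = len(sets) // 2
    averaged = []
    for first, second in zip(sets[:half], sets[half:]):
        averaged.append([
            [(a + b) / 2 for a, b in zip(ex_a, ex_b)]
            for ex_a, ex_b in zip(first, second)
        ])
    return averaged


def zscore_set(examples):
    """Z-score each voxel across the examples of a single set."""
    columns = list(zip(*examples))
    means = [mean(col) for col in columns]
    sds = [pstdev(col) for col in columns]  # population SD: an assumption
    return [
        [(x - m) / s if s > 0 else 0.0 for x, m, s in zip(ex, means, sds)]
        for ex in examples
    ]
```

With 6 or 12 acquired sets, `average_halves` returns 3 or 6 sets, matching the numbers of final example sets given above.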

The resulting example volumes were used as input to a linear support vector machine classifier (as implemented in PyMVPA; Hanke et al. 2009). All MVPA used a searchlight algorithm (Kriegeskorte et al. 2006), in which classification is carried out within a spherical region (5 mm radius) that is moved through the volume. Leave-one-out cross-validated classification accuracy estimates (percent correct) were mapped back to the center of each searchlight, thus producing a classification accuracy map.
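The geometry of the searchlight and the cross-validation scheme can be sketched independently of the classifier. The example below is not the PyMVPA implementation used in the study. It assumes a native voxel grid of 2 × 2 × 2.5 mm (inferred from the 2-mm slices with a 25% gap), counts a voxel as inside the sphere when its center lies within 5 mm, and treats each set of examples as one cross-validation fold; the function names are hypothetical.

```python
def sphere_offsets(radius_mm=5.0, voxel_mm=(2.0, 2.0, 2.5)):
    """Voxel offsets (i, j, k) whose centers lie within radius_mm of the origin."""
    reach = [int(radius_mm // size) for size in voxel_mm]
    offsets = []
    for i in range(-reach[0], reach[0] + 1):
        for j in range(-reach[1], reach[1] + 1):
            for k in range(-reach[2], reach[2] + 1):
                dist_sq = sum((n * size) ** 2
                              for n, size in zip((i, j, k), voxel_mm))
                if dist_sq <= radius_mm ** 2:
                    offsets.append((i, j, k))
    return offsets


def leave_one_set_out(n_sets):
    """Return (training sets, held-out set) pairs, one per fold."""
    return [
        ([s for s in range(n_sets) if s != held_out], held_out)
        for held_out in range(n_sets)
    ]


offsets = sphere_offsets()
folds = leave_one_set_out(3)
```

Under these assumptions a full searchlight contains 49 voxels before gray matter masking. In each fold the classifier would be trained on the examples from the training sets and tested on the held-out set, and the mean accuracy across folds would be written to the voxel at the sphere center.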

The classification accuracy maps for each volunteer were normalized to MNI space, smoothed (10-mm FWHM), and entered into second-level nonparametric random effects models in SnPM. We used nonparametric tests because the discontinuous nature of the gray matter–masked data means that conventional familywise error (FWE) correction for multiple comparisons using random field theory in SPM5 would be inappropriate. We also wished to avoid making distributional assumptions about the first-level classification accuracy maps.
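The logic of permutation-based FWE correction can be illustrated with a simplified sign-flipping test. This sketch is not SnPM: it uses the group mean of accuracy minus chance as the test statistic rather than a variance-smoothed pseudo-t, draws random sign flips rather than enumerating them, and assumes a chance level of 50% for the two-way classification. The function name and defaults are hypothetical.

```python
import random


def signflip_fwe(maps, chance=50.0, n_perm=1000, seed=0):
    """FWE-corrected P values from a max-statistic sign-flipping test.

    `maps` holds one accuracy map (list of percent-correct values) per volunteer.
    """
    rng = random.Random(seed)
    diffs = [[value - chance for value in m] for m in maps]
    n_vox = len(diffs[0])

    def mean_map(signs):
        return [
            sum(s * d[v] for s, d in zip(signs, diffs)) / len(diffs)
            for v in range(n_vox)
        ]

    observed = mean_map([1] * len(diffs))
    # The observed labeling counts as one permutation of the null distribution.
    max_null = [max(observed)]
    for _ in range(n_perm - 1):
        signs = [rng.choice((-1, 1)) for _ in diffs]
        max_null.append(max(mean_map(signs)))
    return [sum(m >= obs for m in max_null) / n_perm for obs in observed]
```

Comparing each voxel against the distribution of the maximum statistic across voxels controls the familywise error rate without assuming a smooth, continuous statistic image, which is why this family of tests suits gray matter masked data.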

In line with the hypothesized site of our effects, we restricted our primary analysis to the right STS region, which was defined anatomically by the first author based on the mean T1 volume for the sample. In line with previous evidence that social perception and eye gaze effects in the STS region extend into STG and MTG (Allison et al. 2000; Nummenmaa and Calder 2009), the mask included these gyri, whilst leaving out voxels in inferior temporal sulcus (inferior) or lateral fissure (superior) (Supplementary Fig. 1). We report P values corrected for multiple comparisons (FWE, P < 0.05) within this ROI (5162 voxels, y −58 to 22 mm MNI). We also carried out an exploratory analysis in a mirror-reversed version of the STS mask to test for effects in left STS. The use of a mirror-reversed mask sacrifices some anatomical precision in left STS but preserves the same voxel count and spatial structure in both masks. Visual inspection of the relation between the left STS mask and the mean T1 volume suggested that the mask followed the anatomy of the sulcus in a comparable manner to the right STS mask. Finally, effects that survived correction for the full volume are also reported (FWE, P < 0.05). All analyses were restricted to a group gray matter mask, which was formed by the union of each volunteer's normalized individual gray matter mask. This mask ensured that we only considered effects in regions actually covered by the searchlight analysis.

Results

Behavioral Task

Volunteers were asked to detect the occasional 4° rotation of the video stimuli and were able to detect such deviant response trials adequately (mean accuracy 71%, standard error 4%). A repeated measures analysis of variance (ANOVA) of accuracy scores with the factors of stimulus type (head, ellipsoid) and motion direction (leftward, rightward) yielded no main effects and no interaction (F(1,16) < 2.4, P > 0.14 for all effects), suggesting that volunteers did not assign attention differently to the heads and ellipsoids or to the 2 motion directions.

Multivariate Pattern Analysis

Superior Temporal Sulcus

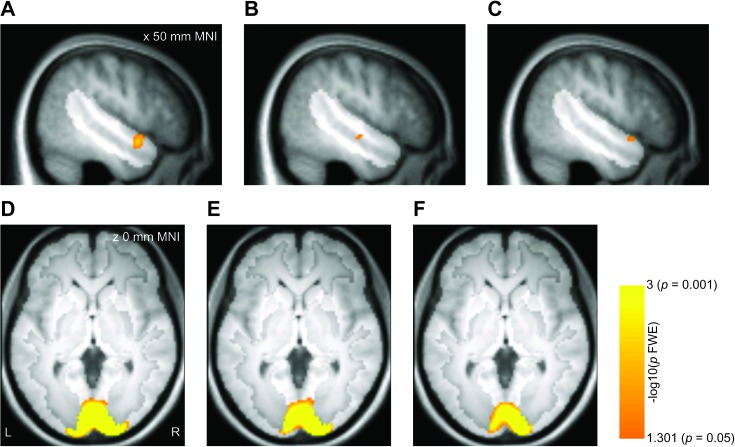

Our primary hypothesis was that the right anterior STS region distinguishes between leftward and rightward perceived head turns. In line with this prediction, a group analysis of the MVPA searchlight results for the right STS region showed that classification of head turn direction was significantly more accurate than expected by chance in a right anterior STS/STG site (P = 0.005 FWE, 50 4 −14 mm MNI, Fig. 3A; for individual subject results, see Supplementary Fig. 2). By comparison, left–right classification of rotation direction in the ellipsoid control stimuli exceeded chance in middle STS (P = 0.037 FWE, 50 −14 −10 mm MNI, Fig. 3B).

Figure 3.

Group results for MVPA, displayed on the mean T1 volume for the sample. Effects are displayed corrected for multiple comparisons within the right STS region (panels A–C; hypothesis-driven analysis, P < 0.05 FWE) or the full gray matter volume (panels D–F; exploratory analysis, P < 0.05 FWE). The highlighted portion of each panel shows the extent of the mask. (A) Classification of left–right head turns in the right STS/STG region. (B) Classification of left–right ellipsoid rotations in the right STS region. (C) Right STS regions where left–right classification of head turns was more accurate than classification of ellipsoid rotations. (D) Classification of left–right head turns in the full gray matter volume. (E) Gray matter regions where left–right classification of head turns was more accurate than classification of ellipsoid rotations. (F) Gray matter regions where the weights acquired by training the classifier on left–right head turns for one head identity generalized to left–right head turns in the other head identity.

The peaks of these head turn and ellipsoid rotation effects were approximately 18 mm apart and the activated regions did not overlap, which raises the question of how distinct the 2 effects are. We addressed this by computing the difference between the classification maps for head turn and ellipsoid rotation in each volunteer. These difference maps were entered into a group analysis, which showed that left–right classification was more accurate for head turns than for ellipsoid rotations in right anterior STS/STG (P = 0.027 FWE, 52 12 −12 mm MNI, Fig. 3C). This effect overlapped with the head turn classification effect (8 mm distance between peaks, 40% overlap), suggesting a common origin. No STS region showed significantly more accurate direction classification for ellipsoid rotations than for head turns.

We tested whether the left–right head turn codes were invariant to head identity by training the classifier on the left–right turns of one head and applying the learned weights to left–right turns of the other head. Left–right classification did not generalize across head identity at any site in right STS. Similarly, there was no significant left–right generalization across ellipsoid identities and no left–right generalization across stimulus type (head and ellipsoid).

We also carried out an exploratory analysis of effects in the left anatomically defined STS region. No left STS regions showed above-chance classification of observed head turn direction. However, a region in left anterior STS distinguished ellipsoid rotation direction with above-chance accuracy (P = 0.01 FWE, −56 −8 −16 mm MNI, Supplementary Fig. 3A). Direction classification accuracy was significantly higher for ellipsoid rotation than for head turns in a similar region (P = 0.041 FWE, −58 −4 −16 mm MNI, Supplementary Fig. 3B). No other classification effects were significant in this ROI.

Whole-Brain Analysis

Beyond our hypothesis-driven search within the anatomically defined right STS region, we also carried out an exploratory analysis within the full gray matter–masked volume to identify other effects of interest. Classification of left–right head turns exceeded chance in a region including calcarine sulcus and occipital pole (P < 0.001 FWE, 16 −96 0 mm MNI, Fig. 3D). This region is likely to include visual areas V1, V2, and V3, but in the absence of a retinotopic localizer, we use the general term early visual cortex to describe this region. Left–right ellipsoid classification did not produce significant effects in any region. Left–right classification was significantly more accurate for head turns than for ellipsoid rotations in a similar early visual region (P < 0.001 FWE, 14 −96 2 mm MNI, Fig. 3E). A similar region in early visual cortex also allowed left–right classification to generalize across head identities (P < 0.001 FWE, 14 −96 2 mm MNI, Fig. 3F) but not across stimulus types. No regions outside of early visual cortex showed significant effects for any of these comparisons.

Univariate Analysis

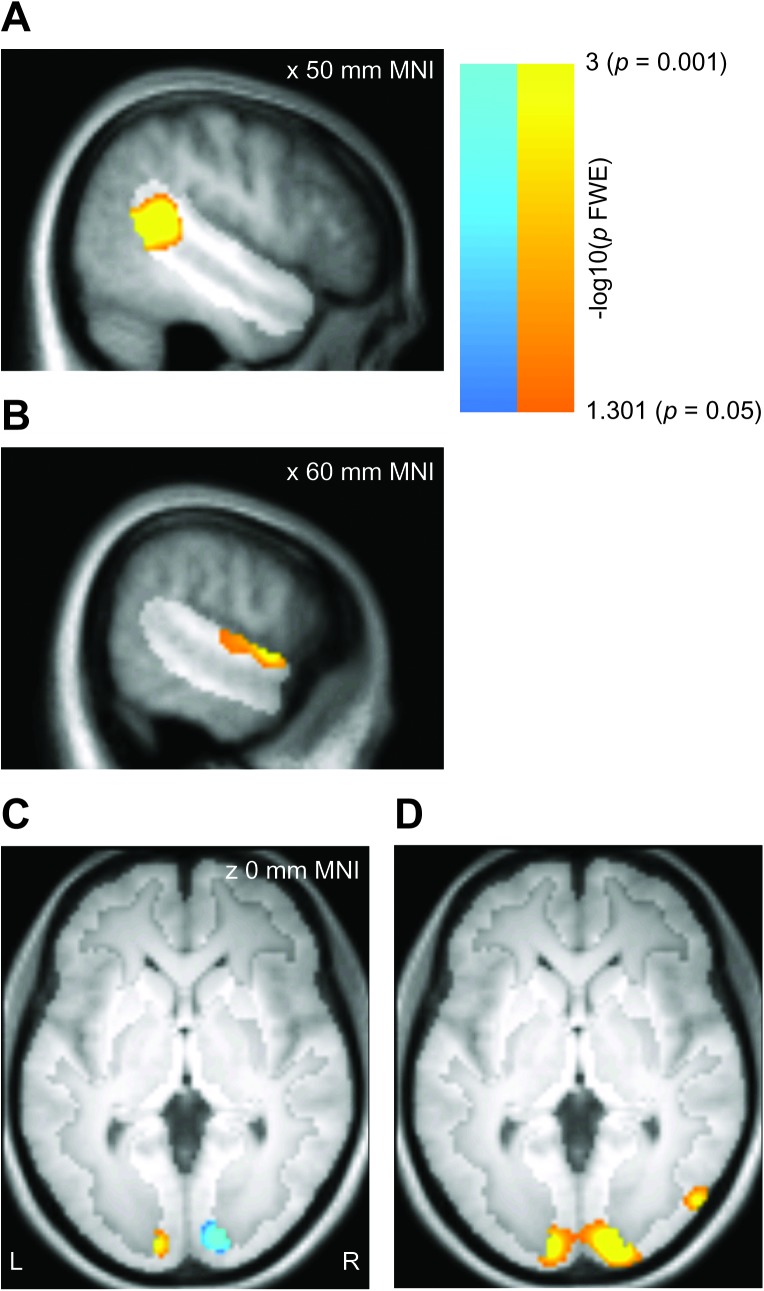

We used a conventional univariate analysis in SPM5 to address whether the observed classification effects could be attributed to large-scale response level differences between the conditions. To make comparisons between MVPA and univariate results simpler, the univariate analysis also used nonparametric permutation-based random effects analysis of group effects (SnPM, for details, see Materials and Methods). We also explored whether direction classification of head turns colocalized with greater univariate responses to heads than to ellipsoids.

Superior Temporal Sulcus

No regions inside the anatomically defined right STS ROI responded selectively to one head turn direction over the other or to one ellipsoid rotation direction over the other, suggesting that the left–right classification effects in this region did not co-occur with large-scale univariate direction sensitivity.

Collapsing across motion direction, right posterior STS responded significantly more to heads than to ellipsoids (P < 0.002 FWE, 48 −44 16 mm MNI, Fig. 4A), while a region in middle STG bordering on the edge of the ROI responded more to ellipsoids than to heads (P = 0.004 FWE, 60 0 0 mm MNI, Fig. 4B). Thus, univariate selectivity for heads over ellipsoids occurred in posterior STS, 57 mm from the left–right head turn classification peak in anterior STS/STG. The peaks for univariate selectivity for ellipsoids over heads and for left–right ellipsoid rotation classification were separated by 20 mm. Neither of the univariate effects overlapped with the classification effects.
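The distances between peaks quoted for the right STS region follow directly from the reported MNI coordinates. The short check below computes Euclidean distances in millimeters; the dictionary keys are illustrative labels, while the coordinates are those given in the text.

```python
from math import dist

# Peak coordinates (mm MNI) as reported for the right STS analyses.
PEAKS = {
    "head_classification": (50, 4, -14),
    "ellipsoid_classification": (50, -14, -10),
    "head_vs_ellipsoid_classification": (52, 12, -12),
    "heads_over_ellipsoids_univariate": (48, -44, 16),
    "ellipsoids_over_heads_univariate": (60, 0, 0),
}


def peak_distance(a, b):
    """Euclidean distance in mm between two named peaks."""
    return dist(PEAKS[a], PEAKS[b])


distances = {
    "head vs ellipsoid classification":
        peak_distance("head_classification", "ellipsoid_classification"),
    "head classification vs difference map":
        peak_distance("head_classification", "head_vs_ellipsoid_classification"),
    "head classification vs heads > ellipsoids":
        peak_distance("head_classification", "heads_over_ellipsoids_univariate"),
    "ellipsoid classification vs ellipsoids > heads":
        peak_distance("ellipsoid_classification", "ellipsoids_over_heads_univariate"),
}
```

Rounded to the nearest millimeter, these four distances are 18, 8, 57, and 20 mm, matching the values reported in the Results.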

Figure 4.

Group results for the univariate analysis, displayed on the mean T1 volume for the sample. Effects are displayed corrected for multiple comparisons within the right STS region (panels A–B; hypothesis-driven analysis, P < 0.05 FWE) or the full gray matter volume (panels C–D; exploratory analysis, P < 0.05 FWE). The highlighted portion of each panel shows the extent of the mask. (A) Greater univariate responses to heads than to ellipsoids in the right STS region. (B) Greater univariate responses to ellipsoids than to heads in the right STS region. (C) Gray matter regions with greater univariate responses to left than to right head turns (warm colors) or with greater univariate responses to right than to left head turns (cool colors). The effects do not overlap at any site. (D) Gray matter regions with greater univariate responses to heads than to ellipsoids.

Within the left STS ROI, a posterior region responded more to heads than to ellipsoids (P = 0.004 FWE, −52 −58 14 mm MNI, Supplementary Fig. 3C) and left middle STS responded more to ellipsoids than to heads (P = 0.014, −66 −18 −14 mm MNI, Supplementary Fig. 3D), mirroring the results obtained in the right STS region. No left STS regions responded preferentially to head turn or ellipsoid rotation in one direction relative to another. No other comparisons reported above were significant in the left STS analysis.

Whole-Brain Analysis

A univariate analysis of the gray matter–masked full volume revealed significant univariate selectivity for left over right head turns that was restricted to left early visual cortex (P < 0.001 FWE, −12 −94 0 mm MNI), and conversely, selectivity for right over left head turns restricted to right early visual cortex (P < 0.001 FWE, 14 −92 4 mm MNI, Fig. 4C). These effects almost completely overlapped the left–right head turn classification effect in early visual cortex (100% overlap for left over right, 91% overlap for right over left), suggesting that the classification effects co-occurred with large-scale univariate effects. Note that the laterality of these early visual effects is opposite to what would be expected for a stimulus that moves into the right and left visual hemifields, a point we return to below. No regions showed a preference for one ellipsoid rotation direction over the other in the whole-brain analysis.

A comparison of univariate responses to heads over ellipsoids and ellipsoids over heads revealed a network of activations (Supplementary Table 1). Of primary interest to the current study, bilateral early visual cortex responded more to heads than to ellipsoids (P < 0.001 FWE, 18 −96 −4 mm MNI, Fig. 4D), and this early visual effect overlapped the left–right head turn classification effect (91% overlap). Thus, the left–right head turn classification effects occurred in a region where we also observed univariate selectivity for head turn direction and preferential responses to heads over ellipsoids. Bilateral regions in posterior MTG also responded more to heads than to ellipsoids (right: P = 0.001 FWE, 52 −74 2 mm MNI; left: P = 0.001 FWE, −50 −72 14 mm MNI). These coordinates are close to those previously reported for motion area MT (Dumoulin et al. 2000; conversion from Talairach to MNI coordinates with tools from Evans et al. 2007). Because we did not include a specific localizer scan to distinguish MT from MST or other motion areas, we refer to this region as MT+. The MT+ regions showed no direction-sensitive responses in the univariate or classification analyses, even at reduced thresholds (P < 0.01, uncorrected).

Follow-up Experiments

The pattern of univariate effects in early visual cortex suggested that volunteers made eye movements during the experiment. If volunteers tracked the heads as they turned, this would have placed the stimulus primarily in the hemifield contralateral to the direction of motion, which could explain the ipsilateral univariate activations in early visual cortex. Eye tracking was not available when the main experiment was undertaken, so we carried out follow-up eye tracking and fMRI experiments with 3 principal aims: first, to test whether the head turns used in the main experiment elicit eye movements; second, to assess whether the eye movement effects could be removed with a revised experimental paradigm; and finally, to test whether the fMRI effects reported in the main text remained in the absence of statistically significant eye movement effects.

Follow-up Materials and Methods

Five volunteers from the final sample used in the main experiment returned to participate in additional experiments. Eye movements were monitored using a video-based infrared eye tracker (500 Hz acquisition outside the scanner, 50 Hz acquisition inside the scanner; Sensomotoric Instruments). We analyzed the change in horizontal fixation position at the end relative to the start of each trial using custom code developed in Matlab.
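The fixation measure can be sketched as a difference between the end and the start of a trial. The original analysis used custom Matlab code whose details are not given, so the version below is only an illustration: it assumes that start and end positions are estimated by averaging a fixed number of samples, and the `window` parameter and function name are hypothetical.

```python
from statistics import mean


def fixation_change(x_samples, window=10):
    """Horizontal fixation at the end of a trial minus that at the start.

    `x_samples` is the horizontal gaze position over one trial. Averaging
    `window` samples at each end is an assumption made to reduce noise.
    """
    if len(x_samples) < 2 * window:
        raise ValueError("trial is too short for the chosen window")
    return mean(x_samples[-window:]) - mean(x_samples[:window])
```

The sign of the result depends on the coordinate convention of the eye tracker, so it needs to be mapped onto leftward and rightward shifts before trials are compared across head turn directions.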

Imaging data were acquired and analyzed using the same parameters as in the main experiment, with the exception that no averaging of the first and second half of the experiment was carried out, since this would have yielded an unacceptably small number of observations for first-level statistics. Furthermore, each set was scanned in a separate run to allow recalibration of the eye tracker between sets. As in the main fMRI experiment, we used a searchlight analysis. We based single-volunteer inference on binomial tests at each voxel in the ROI.
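A binomial test of classification accuracy against chance can be written in a few lines. The sketch assumes a one-sided test (accuracy above chance) with a chance level of 0.5 for the two-way left–right classification; the text does not state whether the test was one- or two-sided, and the function name is hypothetical.

```python
from math import comb


def binomial_p_above_chance(n_correct, n_tests, chance=0.5):
    """One-sided P value: probability of at least n_correct hits under chance."""
    return sum(
        comb(n_tests, k) * chance ** k * (1 - chance) ** (n_tests - k)
        for k in range(n_correct, n_tests + 1)
    )
```

For example, 8 correct classifications out of 10 give P = 56/1024, or about 0.055, which illustrates how few test examples limit the smallest attainable P value at each voxel.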

Follow-up Eye Tracking with the Original Design

Five volunteers carried out an abbreviated version of the main experiment outside the scanner (3 sets, 540 trials), while their eye position was monitored. First-level ANOVAs revealed that each volunteer showed a significant stimulus type (head, ellipsoid) by motion direction (leftward, rightward) interaction (Supplementary Table 2). This interaction reflected consistent fixation shifts in the direction of the head turns, with nonsignificant or weaker fixation shifts in the direction of the ellipsoid rotations.

Follow-up Eye Tracking with Revised Design

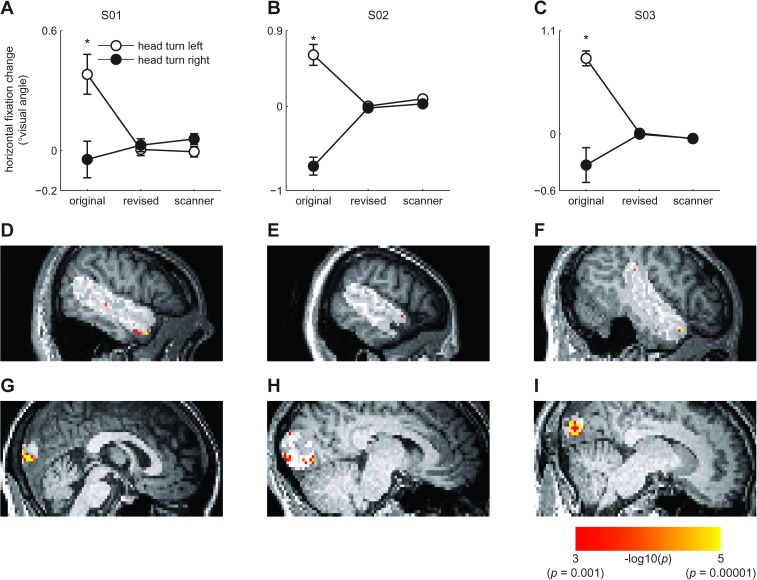

We carried out a second eye tracking experiment with a revised paradigm that included a fixation cross during the presentation of the video clips. Volunteers were also strongly instructed to maintain fixation at all times. We included only the head turn conditions in order to obtain a maximal number of trials for the head left–right comparison whilst minimizing volunteer fatigue. In this second experiment, the head turn left–right eye movement effect was reduced to nonsignificance in 4 of 5 volunteers (Fig. 5A–C).

Figure 5.

Follow-up eye tracking and fMRI experiments. (A–C) Mean horizontal fixation change plotted separately for the 3 volunteers selected for the final analysis in the revised fMRI experiment. Positive values reflect a leftward shift in fixation over the trial, while negative values reflect a rightward shift. The horizontal axis gives fixation performance in the original task, the revised task, and the revised task as measured during the fMRI experiment. The error bars give ±1 standard error of the mean. Comparisons with significant differences between the head turn directions are highlighted by asterisks (t-tests, P < 0.05). It can be seen that the revised design abolished the eye movement effect in these volunteers. (D–F) Left–right head turn classification results for the 3 volunteers in the final sample of the fMRI experiment. The volunteers are shown in the same order as in A–C. Results are overlaid on each volunteer's T1 volume and are masked to only include effects within the highlighted right STS region (P < 0.001, uncorrected). It can be seen that even in the absence of eye movement effects, anterior STS/STG codes head turn direction. (G–I) Results as in D–F but masked to show effects within a 20 mm radius of the peak early visual head turn classification effect from the main study. It can be seen that the effects in early visual cortex also remain when eye movements are controlled.

Follow-up fMRI Experiment with Revised Design

We tested whether our main classification findings in STS/STG and early visual cortex survived in the absence of eye movements by carrying out a second fMRI experiment with the revised experimental paradigm. We recruited the 4 volunteers who showed no significant eye movement effects in the eye tracking test outside the scanner. Volunteers completed a full 6-set version of the revised experiment (1080 trials; for details, see Materials and Methods), while their eye position was monitored. One of the 4 scanned volunteers showed a significant fixation shift in response to the head turns whilst being scanned (t = 8.72, P = 0.003). This volunteer was removed from further analysis.

Although the 3 remaining volunteers showed no significant eye movement effects (as observed in separate tests before and during scanning), left–right classification of head turns in the right anterior STS region was greater than chance in 2 volunteers (P < 0.05, Bonferroni FWE corrected for the right STS mask) and in the third at a reduced threshold (P < 0.001, uncorrected; Fig. 5D–F). The final volunteer also showed an effect in posterior STS (P < 0.05, FWE).

All 3 volunteers showed significant left–right head turn classification effects in early visual cortex (P < 0.05, Bonferroni FWE corrected for a 20 mm radius sphere centered on the peak head turn classification effect in the main experiment; Fig. 5G–I). However, unlike the main experiment, where this effect was accompanied by univariate response preferences for head turns in a direction ipsilateral to the visual hemifield (Supplementary Fig. 4A), we now observed preferentially contralateral responses to head turn direction (P < 0.001, uncorrected, Supplementary Fig. 4B). Thus, although the classification effects in early visual cortex were accompanied by univariate effects in the revised experiment as well, the laterality of these univariate effects was reversed.
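The small-volume correction used here restricts the Bonferroni correction to voxels inside a sphere around a peak coordinate. The following sketch shows how such a sphere could be defined on a voxel grid. The grid layout (axis-aligned, with voxel (i, j, k) centered at its index times the voxel size), the 2 mm voxel size, and the peak location are hypothetical simplifications and do not reproduce the image geometry of the study.

```python
def sphere_mask_voxels(center_mm, radius_mm, voxel_size_mm, grid_shape):
    """Return voxel indices whose centers lie within radius_mm of center_mm.

    Assumes an axis-aligned grid whose voxel (i, j, k) is centered at
    (i, j, k) * voxel_size_mm, a simplification of a real image affine.
    """
    selected = []
    for i in range(grid_shape[0]):
        for j in range(grid_shape[1]):
            for k in range(grid_shape[2]):
                pos = (
                    i * voxel_size_mm[0],
                    j * voxel_size_mm[1],
                    k * voxel_size_mm[2],
                )
                dist_sq = sum((p - c) ** 2 for p, c in zip(pos, center_mm))
                if dist_sq <= radius_mm ** 2:
                    selected.append((i, j, k))
    return selected


# Hypothetical 2 mm isotropic grid with a peak at 40 mm along each axis.
mask = sphere_mask_voxels((40, 40, 40), 20, (2, 2, 2), (41, 41, 41))
alpha_per_voxel = 0.05 / len(mask)  # Bonferroni threshold within the sphere
print(len(mask), alpha_per_voxel)
```

Because the corrected threshold scales with the number of voxels in the sphere, a smaller radius or coarser voxels would yield a more lenient per-voxel threshold.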

Discussion

Appropriate social behavior is dependent on accurately inferring where others are attending. In the visual domain, this inferential process is likely to involve direction-sensitive coding of social attention cues, such as head turns. In experiments, these stimuli are often abstracted to static views, which fails to capture their dynamic character in natural social interaction. Here, we demonstrate that response patterns in human right anterior STS/STG distinguish between leftward and rightward dynamic head turns. Furthermore, left–right head turns were significantly more discriminable in this region than left–right ellipsoid control stimuli. A similar analysis of the left STS region revealed no left–right classification of head turn direction at any site in the ROI.

The peak coordinates for left–right classification of head turn direction in the current study are in close proximity to the site of a previously reported direction-sensitive fMRI adaptation effect for static gaze (Calder et al. 2007; 16 mm distance between peaks). Considered collectively, these results suggest a general role for right anterior STS/STG in supplying higher order social cognitive processes with important information about the direction of another's attentional shifts, whether these are conveyed by static gaze in a front-facing head or dynamic head turns. Consistent with this social role, we also demonstrate that direction sensitivity does not extend to nonsocial control stimulus motion in this region. An important question is whether such direction-sensitive responses to dynamic and static social cues are driven by a single representation of the direction of another's social attention (Perrett et al. 1992) or whether dynamic information is coded separately, as indicated by the finding that STS neurons tuned to head turn motion do not respond to static head view displays (Perrett, Smith, Mistlin, et al. 1985; Hasselmo et al. 1989).

Neurons in macaque anterior STS are tuned to the direction of social attention cues (Hasselmo et al. 1989; Perrett et al. 1992). However, most human fMRI studies have reported gaze or head turn effects in posterior rather than anterior STS regions (Nummenmaa and Calder 2009). Our classification effects appear more consistent with the typical recording site in macaque anterior STS than with previous univariate fMRI effects in human posterior STS. Compared with standard univariate analysis, MVPA and fMRI adaptation techniques confer greater sensitivity (Haynes and Rees 2006). This increased sensitivity makes more rigorous comparisons possible, for instance between left and right averted social attention cues. Accordingly, we also observed greater consistency between human fMRI and single unit evidence from the macaque (see also Kamitani and Tong 2005, 2006; Calder et al. 2007). Known human–macaque discrepancies in the function of posterior STS and surrounding areas suggest that a simple correspondence between human and macaque may not apply to all high-level visual areas (Orban et al. 2004), but such a simple correspondence nevertheless offers a useful working model for the representation of social attention cues.

The pattern of results we observed in posterior and anterior portions of the right STS region also highlights how large-scale univariate response level differences can dissociate from multivariate classification performance (Haxby et al. 2001; Hanson et al. 2004; Hanson and Halchenko 2008). Similar to previous studies (Andrews and Ewbank 2004), we found that right posterior STS responded more to heads than to ellipsoids, while no such preferential responding was observed in anterior STS/STG. The left–right head turn classification effects showed the opposite pattern, with significant effects in anterior but not posterior regions. There are clear parallels between this pattern of effects and a recent report where face identity classification was possible in an anterior inferotemporal region, which did not respond preferentially to faces over places, while no such face identity effects appeared in the more posterior fusiform face area, even though this region responded more to faces than to places (Kriegeskorte et al. 2007). Face identity and head turn direction are both important dimensions for face processing, yet multivariate sensitivity for manipulations along these dimensions does not appear to colocalize with univariate selectivity for faces over other object categories. Although more systematic studies of these within- and between-category dissociations are needed before their theoretical implications for face perception can be fully considered, the current results indicate that studies where data analysis is restricted to functional ROIs defined by face selectivity are at risk of missing potentially important effects (Haxby et al. 2001; Friston et al. 2006).

Neurons with social attention responses in macaque STS are often invariant to the identity of the individual conveying the cue (Perrett et al. 1992). In this study, we observed no generalization between response patterns evoked by left–right head turns across the 2 identities. Although there is some initial evidence to suggest that STS neurons can code both head view and head identity (Perrett et al. 1984), it is in our view unlikely that the representation across STS is identity-specific. For instance, it has previously been shown that direct and averted static head views can be distinguished across identity in posterior STS (Natu et al. 2010). Given that separate training of left–right classification for each identity involves half as much data as compared with when this dimension is collapsed, it is more likely that our experiment was not sufficiently sensitive to detect any such identity-invariant head turn representations.

Our results suggest that the anterior STS region distinguishes the direction of perceived head turns. The follow-up eye-tracking experiment suggested that volunteers' eye movements tended to follow the direction of head turns, thus presenting a potential confound to the interpretation of our results. To rule out an eye movement account of our reported classification effects, we demonstrated in a revised fMRI experiment that a subset of volunteers from the main experiment showed significant left–right head turn classification in the right STS region, even though these volunteers showed no significant eye movement effects during pretests or whilst in the scanner. Thus, even though our main analysis is potentially limited by an eye movement confound, the head turn direction codes in the right anterior STS region remain when this confound is removed. The absence of prior reports of eye movement responses in the anterior STS region is also consistent with this interpretation (Grosbras et al. 2005; Bakola et al. 2007). By contrast, even minute eye movements elicit responses in early visual cortex (Dimigen et al. 2009), and an eye movement explanation would seem to account well for the pattern of ipsilateral univariate selectivity we observed in the main experiment, with leftward and rightward head turns producing responses in left and right early visual cortex, respectively. Notably, this ipsilateral pattern of effects reverted to the expected contralateral response preference in the univariate analysis of the follow-up experiment, even though left–right head turn classification in early visual cortex was significant in both the original and the follow-up experiments. These results suggest that the classification effects in the 2 data sets were driven by distinct large-scale univariate effects: a primarily eye movement-related response in the main experiment and a visually evoked response in the follow-up experiment.

The pervasive tendency for volunteers to follow social attention cues points to an intriguingly close link between action and perception in this system, which is worthy of further enquiry. Previous investigators found that static gaze cues also evoke small eye movements in the perceived gaze direction (Mansfield et al. 2003). Indeed, 2 of the 5 volunteers who were tested with eye tracking in the current study were unable to consistently suppress eye movements in response to the head turns, even in the presence of a fixation cross and strong instructions to maintain fixation. Although interesting in their own right, these eye movement effects also suggest that investigators who seek to isolate effects of perceived gaze direction would be well advised to monitor the volunteer's own gaze.

Previous studies have found that socially relevant motion engages MT (Puce et al. 1998; Watanabe et al. 2006). Consistent with this literature, we observed a univariate response preference for heads relative to ellipsoids in bilateral superior temporal regions likely corresponding to MT+. Despite this category preference for heads relative to ellipsoids, we obtained no evidence that response patterns in this region distinguish head turn direction. In previous studies that attempted to decode motion directions, direction sensitivity was weaker in MT than in earlier visual areas (Kamitani and Tong 2006; Seymour et al. 2009), which the authors attribute to MT's smaller anatomical size compared with earlier visual areas. Although neurophysiological data suggest considerable direction sensitivity in both MT and early visual cortex (Snowden et al. 1992), such response properties may interact with area size when measured with coarse-grained methods such as fMRI, thus producing apparently weaker or nonsignificant effects in smaller areas (Bartels et al. 2008). Note also that both the absence of a functional MT localizer and the use of weaker, more transient motion stimuli may have rendered our analysis less sensitive to direction-sensitive responses in MT+, compared with previous studies (Kamitani and Tong 2006; Seymour et al. 2009). Thus, we do not exclude the possibility that head turns produce direction-sensitive MT+ responses, although we were unable to find evidence for this.

In conclusion, we have presented evidence that response patterns in human right anterior STS/STG distinguish between leftward and rightward dynamic head turns. Such direction sensitivity was not detected for physically matched ellipsoid control stimuli. The anterior site of this effect is consistent with evidence from macaque neurophysiology (Perrett, Smith, Mistlin, et al. 1985; Hasselmo et al. 1989) but does not colocalize with regions showing greater univariate responses to heads than to ellipsoids. In this respect, multivariate pattern approaches show great promise in linking evidence from single neurons in the macaque to large-scale response patterns in human fMRI.

Supplementary Material

Supplementary material can be found at: http://www.cercor.oxfordjournals.org/

Funding

United Kingdom Medical Research Council (grant MC_US_A060_0017 to A.J.C.; MC_US_A060_0016 to J.B.R.); Wellcome Trust (WT077029 to J.B.R.).

Acknowledgments

We are grateful to Christian Schwarzbauer for help with developing the high-resolution EPI sequence used in this study. Conflict of Interest: None declared.

References

- Allison T, Puce A, McCarthy G. Social perception from visual cues: role of the STS region. Trends Cogn Sci. 2000;4:267–278. doi: 10.1016/s1364-6613(00)01501-1.

- Andrews T, Ewbank M. Distinct representations for facial identity and changeable aspects of faces in the human temporal lobe. Neuroimage. 2004;23:905–913. doi: 10.1016/j.neuroimage.2004.07.060.

- Bakola S, Gregoriou G, Moschovakis A, Raos V, Savaki H. Saccade-related information in the superior temporal motion complex: quantitative functional mapping in the monkey. J Neurosci. 2007;27:2224–2229. doi: 10.1523/JNEUROSCI.4224-06.2007.

- Bartels A, Logothetis NK, Moutoussis K. fMRI and its interpretations: an illustration on directional selectivity in area V5/MT. Trends Neurosci. 2008;31:444–453. doi: 10.1016/j.tins.2008.06.004.

- Brainard D. The psychophysics toolbox. Spat Vis. 1997;10:433–436.

- Calder AJ, Beaver JD, Winston JS, Dolan RJ, Jenkins R, Eger E, Henson RNA. Separate coding of different gaze directions in the superior temporal sulcus and inferior parietal lobule. Curr Biol. 2007;17:20–25. doi: 10.1016/j.cub.2006.10.052.

- Deaner R, Platt M. Reflexive social attention in monkeys and humans. Curr Biol. 2003;13:1609–1613. doi: 10.1016/j.cub.2003.08.025.

- Dimigen O, Valsecchi M, Sommer W, Kliegl R. Human microsaccade-related visual brain responses. J Neurosci. 2009;29:12321–12331. doi: 10.1523/JNEUROSCI.0911-09.2009.

- Dumoulin SO, Bittar RG, Kabani NJ, Baker CL, Le Goualher G, Pike GB, Evans AC. A new anatomical landmark for reliable identification of human area V5/MT: a quantitative analysis of sulcal patterning. Cereb Cortex. 2000;10:454–463. doi: 10.1093/cercor/10.5.454.

- Emery NJ. The eyes have it: the neuroethology, function and evolution of social gaze. Neurosci Biobehav Rev. 2000;24:581–604. doi: 10.1016/s0149-7634(00)00025-7.

- Evans A, Zilles K, Lancaster J, Martinez M, Mazziotta J, Fox P, Tordesillas-Gutierrez D, Salinas F. Bias between MNI and Talairach coordinates analyzed using the ICBM-152 brain template. Hum Brain Mapp. 2007;28:1194–1205. doi: 10.1002/hbm.20345.

- Fox C, Iaria G, Barton J. Defining the face processing network: optimization of the functional localizer in fMRI. Hum Brain Mapp. 2009;30:1637–1651. doi: 10.1002/hbm.20630.

- Friston K, Rotshtein P, Geng J, Sterzer P, Henson R. A critique of functional localisers. Neuroimage. 2006;30:1077–1087. doi: 10.1016/j.neuroimage.2005.08.012.

- Frith C, Frith U. Implicit and explicit processes in social cognition. Neuron. 2008;60:503–510. doi: 10.1016/j.neuron.2008.10.032.

- Grosbras M-H, Laird A, Paus T. Cortical regions involved in eye movements, shifts of attention, and gaze perception. Hum Brain Mapp. 2005;25:140–154. doi: 10.1002/hbm.20145.

- Hanke M, Halchenko Y, Sederberg P, Olivetti E, Fründ I, Rieger J, Herrmann C, Haxby J, Hanson SJ, Pollmann S. PyMVPA: a unifying approach to the analysis of neuroscientific data. Front Neuroinformatics. 2009;3:1–13. doi: 10.3389/neuro.11.003.2009.

- Hanson SJ, Halchenko Y. Brain reading using full brain support vector machines for object recognition: there is no “face” identification area. Neural Comput. 2008;20:486–503. doi: 10.1162/neco.2007.09-06-340.

- Hanson SJ, Matsuka T, Haxby JV. Combinatorial codes in ventral temporal lobe for object recognition: Haxby (2001) revisited: is there a “face” area? Neuroimage. 2004;23:156–166. doi: 10.1016/j.neuroimage.2004.05.020.

- Hasselmo M, Rolls E, Baylis G, Nalwa V. Object-centered encoding by face-selective neurons in the cortex in the superior temporal sulcus of the monkey. Exp Brain Res. 1989;75:417–429. doi: 10.1007/BF00247948.

- Haxby J, Gobbini M, Furey M, Ishai A, Schouten J, Pietrini P. Distributed and overlapping representations of faces and objects in ventral temporal cortex. Science. 2001;293:2425–2430. doi: 10.1126/science.1063736.

- Haynes J, Rees G. Decoding mental states from brain activity in humans. Nat Rev Neurosci. 2006;7:523–534. doi: 10.1038/nrn1931.

- Hein G, Knight R. Superior temporal sulcus—it's my area: or is it? J Cogn Neurosci. 2008;20:2125–2136. doi: 10.1162/jocn.2008.20148.

- Henson RNA. Analysis of fMRI timeseries: linear time-invariant models, event-related fMRI and optimal experimental design. In: Frackowiak RSJ, Friston KJ, Frith CD, Dolan RJ, Price CJ, editors. Human brain function. New York: Academic Press; 2003. pp. 793–822.

- Kamitani Y, Tong F. Decoding the visual and subjective contents of the human brain. Nat Neurosci. 2005;8:679–685. doi: 10.1038/nn1444.

- Kamitani Y, Tong F. Decoding seen and attended motion directions from activity in the human visual cortex. Curr Biol. 2006;16:1096–1102. doi: 10.1016/j.cub.2006.04.003.

- Klein J, Shepherd S, Platt M. Social attention and the brain. Curr Biol. 2009;19:R958–R962. doi: 10.1016/j.cub.2009.08.010.

- Kriegeskorte N, Bandettini P, Cusack R. How does an fMRI voxel sample the neuronal activity pattern: compact-kernel or complex-spatiotemporal filter? Neuroimage. 2009;49:1965–1976. doi: 10.1016/j.neuroimage.2009.09.059.

- Kriegeskorte N, Formisano E, Sorger B, Goebel R. Individual faces elicit distinct response patterns in human anterior temporal cortex. Proc Natl Acad Sci U S A. 2007;104:20600–20605. doi: 10.1073/pnas.0705654104.

- Kriegeskorte N, Goebel R, Bandettini P. Information-based functional brain mapping. Proc Natl Acad Sci U S A. 2006;103:3863–3868. doi: 10.1073/pnas.0600244103.

- Lee LC, Andrews T, Johnson SJ, Woods W, Gouws A, Green GGR, Young AW. Neural responses to rigidly moving faces displaying shifts in social attention investigated with fMRI and MEG. Neuropsychologia. 2010;48:477–490. doi: 10.1016/j.neuropsychologia.2009.10.005.

- Lemieux L, Salek-Haddadi A, Lund T, Laufs H, Carmichael D. Modelling large motion events in fMRI studies of patients with epilepsy. Magn Reson Imaging. 2007;25:894–901. doi: 10.1016/j.mri.2007.03.009.

- Logothetis N. What we can do and what we cannot do with fMRI. Nature. 2008;453:869–878. doi: 10.1038/nature06976.

- Mansfield EP, Farroni T, Johnson MH. Does gaze perception facilitate overt orienting? Vis Cogn. 2003;10:7–14.

- Natu VS, Jiang F, Narvekar A, Keshvari S, Blanz V, O'Toole AJ. Dissociable neural patterns of facial identity across changes in viewpoint. J Cogn Neurosci. 2010;22:1570–1582. doi: 10.1162/jocn.2009.21312.

- Nichols T, Holmes A. Nonparametric permutation tests for functional neuroimaging: a primer with examples. Hum Brain Mapp. 2002;15:1–25. doi: 10.1002/hbm.1058.

- Nummenmaa L, Calder A. Neural mechanisms of social attention. Trends Cogn Sci. 2009;13:135–143. doi: 10.1016/j.tics.2008.12.006.

- Orban GA, Van Essen D, Vanduffel W. Comparative mapping of higher visual areas in monkeys and humans. Trends Cogn Sci. 2004;8:315–324. doi: 10.1016/j.tics.2004.05.009.

- Pageler NM, Menon V, Merin NM, Eliez S, Brown WE, Reiss AL. Effect of head orientation on gaze processing in fusiform gyrus and superior temporal sulcus. Neuroimage. 2003;20:318–329. doi: 10.1016/s1053-8119(03)00229-5.

- Pelphrey K, Singerman J, Allison T, McCarthy G. Brain activation evoked by perception of gaze shifts: the influence of context. Neuropsychologia. 2003;41:156–170. doi: 10.1016/s0028-3932(02)00146-x.

- Pelphrey K, Viola R, McCarthy G. When strangers pass: processing of mutual and averted social gaze in the superior temporal sulcus. Psychol Sci. 2004;15:598–603. doi: 10.1111/j.0956-7976.2004.00726.x.

- Perrett DI, Hietanen J, Oram M, Benson P. Organization and functions of cells responsive to faces in the temporal cortex. Philos Trans R Soc Lond B Biol Sci. 1992;335:23–30. doi: 10.1098/rstb.1992.0003.

- Perrett DI, Rolls E, Caan W. Visual neurones responsive to faces in the monkey temporal cortex. Exp Brain Res. 1982;47:329–342. doi: 10.1007/BF00239352.

- Perrett DI, Smith P, Potter D, Mistlin A, Head A, Milner A, Jeeves M. Visual cells in the temporal cortex sensitive to face view and gaze direction. Proc R Soc Lond B Biol Sci. 1985;223:293–317. doi: 10.1098/rspb.1985.0003.

- Perrett DI, Smith PA, Mistlin AJ, Chitty AJ, Head AS, Potter DD, Broennimann R, Milner AD, Jeeves MA. Visual analysis of body movements by neurones in the temporal cortex of the macaque monkey: a preliminary report. Behav Brain Res. 1985;16:153–170. doi: 10.1016/0166-4328(85)90089-0.

- Perrett DI, Smith PA, Potter DD, Mistlin AJ, Head AS, Milner AD, Jeeves MA. Neurones responsive to faces in the temporal cortex: studies of functional organization, sensitivity to identity and relation to perception. Hum Neurobiol. 1984;3:197–208.

- Puce A, Allison T, Bentin S, Gore JC, McCarthy G. Temporal cortex activation in humans viewing eye and mouth movements. J Neurosci. 1998;18:2188–2199. doi: 10.1523/JNEUROSCI.18-06-02188.1998.

- Rowe J, Eckstein D, Braver T, Owen A. How does reward expectation influence cognition in the human brain? J Cogn Neurosci. 2008;20:1980–1992. doi: 10.1162/jocn.2008.20140.

- Senju A, Johnson M. The eye contact effect: mechanisms and development. Trends Cogn Sci. 2009;13:127–134. doi: 10.1016/j.tics.2008.11.009.

- Seymour K, Clifford C, Logothetis N, Bartels A. The coding of color, motion, and their conjunction in the human visual cortex. Curr Biol. 2009;19:177–183. doi: 10.1016/j.cub.2008.12.050.

- Shepherd SV. Following gaze: gaze-following behavior as a window into social cognition. Front Integr Neurosci. 2010;4:1–5. doi: 10.3389/fnint.2010.00005.

- Shmuel A, Chaimow D, Raddatz G, Ugurbil K, Yacoub E. Mechanisms underlying decoding at 7 T: ocular dominance columns, broad structures, and macroscopic blood vessels in V1 convey information on the stimulated eye. Neuroimage. 2010;49:1957–1964. doi: 10.1016/j.neuroimage.2009.08.040.

- Snowden R, Treue S, Andersen R. The response of neurons in areas V1 and MT of the alert rhesus monkey to moving random dot patterns. Exp Brain Res. 1992;88:389–400. doi: 10.1007/BF02259114.

- Wachsmuth E, Oram M, Perrett DI. Recognition of objects and their component parts: responses of single units in the temporal cortex of the macaque. Cereb Cortex. 1994;4:509–522. doi: 10.1093/cercor/4.5.509.

- Watanabe S, Kakigi R, Miki K, Puce A. Human MT/V5 activity on viewing eye gaze changes in others: a magnetoencephalographic study. Brain Res. 2006;1092:152–160. doi: 10.1016/j.brainres.2006.03.091.
