Abstract

Multisensory integration has often been characterized as an automatic process. Recent findings suggest that multisensory integration can occur across various stages of stimulus processing that are linked to, and can be modulated by, attention. Stimulus-driven, bottom-up mechanisms induced by cross-modal interactions can automatically capture attention towards multisensory events, particularly when competition to focus elsewhere is relatively low. Conversely, top-down attention can facilitate the integration of multisensory inputs and lead to a spread of attention across sensory modalities. These findings point to a more intimate and multifaceted interplay between attention and multisensory integration than was previously thought. We review developments in our understanding of the interactions between attention and multisensory processing, and propose a framework that unifies previous, apparently discordant findings.

Bidirectional Influences of Multisensory Integration and Attention

Our brains are continually inundated with stimulus input arriving through our various sensory pathways. The processes involved in synthesizing and organizing this multisensory deluge of inputs are fundamental to effective perception and cognitive functioning. Although the combination of information across the senses has been investigated since psychology became an experimental discipline [1], the last decade has seen a sharp increase of interest in this question. Within this context, the issue of how attentional shifts in one modality can affect orienting in other modalities has inspired a great deal of this recent research [2], but, perhaps surprisingly, the role that attention plays during multisensory integration itself has been largely overlooked.

Multisensory integration generally refers to the set of processes by which information arriving from different sensory modalities (e.g., vision, audition, touch) interacts and influences processing in the other modality, including how these sensory inputs are combined together to yield a unified perceptual experience of multisensory events (Box 1). Seminal studies investigating the response patterns of single neurons [3-5] in anesthetized animals identified a number of stimulus-driven factors, most notably the temporal and spatial concordance of cross-sensory inputs, as major determinants for multisensory integration [3, 6] (see refs [3, 7, 8] for a detailed discussion). This work has inspired theoretical and empirical research on multisensory integration across a variety of species (including humans), brain structures (including cortical and subcortical networks) [9-14], and methodological perspectives [2, 15-19].

Box 1: Multisensory Integration.

In non-human single-cell recording studies, multisensory integration has been commonly characterized in terms of the firing pattern of neurons that are responsive to concurrent input from more than one sensory modality [23, 80]. Multisensory responses in these particular neurons (i.e., when input in both modalities is present) are typically strongest when the cross-modal inputs are spatially aligned, presented in approximate temporal synchrony, and evoke relatively weak responses to unisensory presentations. Interestingly, response depression has also been described in this context, following spatially misaligned inputs. As such, multisensory integration reflects relative modulations in efficacy with which sensory inputs are processed and can compete for processing resources [23]. Neurons exhibiting these properties have been most extensively studied in the superior colliculus, a midbrain structure that contains multisensory convergence zones [4, 5] and is involved in the reflexive orienting of attention, both covert and overt, towards salient stimuli.

In keeping with animal physiology, human behavioral, electrophysiological, and neuroimaging studies have shown that spatiotemporally concordant inputs to multiple modalities often result in non-linear (super- or sub-additive) brain potentials and faster response latencies [8, 26]. In addition, several other aspects of multisensory integration have been identified in human research. Some of these aspects were originally hinted at by the modality appropriateness hypothesis [29] and the unity assumption [30, 31]. The modality appropriateness hypothesis posits that, in the case of bimodal stimulation, the sensory system with the higher acuity with respect to the relevant task plays a dominant role in the outcome of multisensory integration [32-36]. For example, the visual system usually dominates audiovisual spatial processes because it has a higher spatial acuity than the auditory system, whereas the auditory system tends to impart more cross-modal influence in terms of temporal analysis. Recent approaches based on Bayesian integration have allowed this idea to be formalized within a computational framework [15, 16]. The unity assumption, on the other hand, relates to the degree to which an observer infers (not necessarily consciously) that two sensory inputs refer to a single unitary distal object or event [29, 31]. Both non-cognitive factors (temporal and spatial coincidence) and cognitive factors (prior knowledge and expectations) are thought to contribute to this process [30, 31, 66, 81].
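As an illustration of how the modality appropriateness idea can be expressed computationally, the sketch below implements the standard reliability-weighted (maximum-likelihood) combination of two Gaussian cues. It is a minimal sketch under stated assumptions, not the specific models of refs [15, 16]: the function name `combine_estimates`, its parameters, and the example numbers are all hypothetical and chosen only for illustration.

```python
def combine_estimates(mu_v, sigma_v, mu_a, sigma_a):
    """Reliability-weighted combination of a visual and an auditory estimate.

    Assumes each cue is an independent Gaussian estimate of the same quantity
    (an illustrative assumption, not a claim about refs [15, 16]).
    Returns (combined mean, combined standard deviation, visual weight).
    """
    if sigma_v <= 0 or sigma_a <= 0:
        raise ValueError("standard deviations must be positive")
    r_v = 1.0 / sigma_v ** 2  # reliability = inverse variance of the visual cue
    r_a = 1.0 / sigma_a ** 2  # reliability of the auditory cue
    w_v = r_v / (r_v + r_a)   # the more reliable cue receives the larger weight
    mu = w_v * mu_v + (1.0 - w_v) * mu_a
    sigma = (1.0 / (r_v + r_a)) ** 0.5  # never larger than either input sigma
    return mu, sigma, w_v


# Hypothetical spatial example: a precise visual location (0 deg, sigma 1 deg)
# paired with an imprecise auditory location (10 deg, sigma 3 deg).
mu, sigma, w_v = combine_estimates(0.0, 1.0, 10.0, 3.0)
print(round(mu, 3), round(sigma, 3), round(w_v, 3))
```

With these made-up values the visual cue receives a weight of 0.9, so the combined location estimate sits close to the visual position, which is the pattern expected when vision has the higher spatial acuity. Swapping the two standard deviations would shift the dominance to audition, as is typically described for temporal judgments.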

Other behavioral studies in humans have focused on multisensory illusions that result from incongruency across the sensory modalities (i.e., cross-modal conflict [82]). The ventriloquist phenomenon refers to the well-known illusory displacement of a sound toward the position of a spatially disparate visual stimulus [33, 83, 84], a dramatic effect that has been applied in a wide range of settings, within and beyond purely scientific enquiry [85]. Another example is the McGurk effect [53], an illusion that occurs when speech sounds do not match the simultaneously seen lip movements of the speaker, leading to the perception of a phoneme that differs from both the auditory and visual inputs. These illusions underscore the strong tendency to bind auditory and visual information, a tendency that under normal (congruent) circumstances helps to reduce stimulus ambiguity.

The question within the scope of this review is how these stimulus-driven multisensory interactions interface with attentional mechanisms during the processes that lead to perception. Attention is a relatively broad cognitive concept that includes a set of mechanisms that determine how particular sensory input, perceptual objects, trains of thought, or courses of action are selected for further processing from an array of concurrent possible stimuli, objects, thoughts and actions (Box 2) [20]. Selection can occur in a top-down fashion, based on an item's relevance to the goals and intentions of the observer, or in a bottom-up fashion, whereby particularly salient stimuli can drive shifts of attention without voluntary control [21]. Interestingly, temporally and spatially aligned sensory inputs in different modalities have a higher likelihood to be favored for further processing, and thus to capture an individual's attention, than do stimuli that are not aligned [22-25]. This suggests that attention tends to orient more easily towards sensory input that possesses multisensory properties. Indeed, recent studies have shown that multisensory bottom-up processes can lead to a capture of attention [24]. From these findings, one might infer that the output of multisensory integration precedes attentional selection, and thus that it operates in a pre-attentional and largely automatic fashion.

Box 2: Attention.

Attention is an essential cognitive function that allows humans and other animals to continually and dynamically select particularly relevant stimuli from all the available information present in the external or internal environment, so that greater neural resources can be devoted to their processing. One relatively straightforward framework encapsulating many aspects of attention is that of biased competition [86]. According to this framework, attention is considered to be the process whereby the competing neural representations of stimulus inputs are arbitrated, either due to the greater intrinsic salience of certain stimuli or because they match better to the internal goals of the individual. Thus, attention may be oriented in a top-down fashion [87, 88], in which a selective biasing of the processing of events and objects that match the observer's goals is induced. In contrast, bottom-up, or stimulus-driven, attentional control refers to a mostly automatic mechanism in which salient events in the environment tend to summon processing resources, relatively independently of higher level goals or expectations [21, 89].

A fronto-parietal network of brain areas has been shown to be involved in allocating and controlling the direction of top-down attention by sending control signals that modulate the sensitivity of neurons in sensory brain regions [90-94]. Stimulus-driven attention employs various parts of the same network, appearing to operate in concert with subcortical networks that include the superior colliculus [92, 95] and regions in the right temporal-parietal junction [92].

It is still not well understood how top-down attention is controlled or allocated across the sensory modalities. Considerable overlap can be found in brain areas responsible for the top-down orienting of visual [91] and auditory [96] attention. In addition, attending to a specific location in one modality also affects the processing of stimuli presented at that location when presented in another sensory modality, suggesting that spatial attention may tend to be directed in a supramodal, or at least modality-coordinated fashion [56, 59, 62, 97, 98]. These multisensory links indicate that there is flexible attentional deployment across sensory modalities [99], making it unlikely that there are completely independent control mechanisms for each modality. It has been proposed that top-down spatial selective attention operates by increasing the sensitivity of neurons responsive to the attended stimulus feature [100, 101]. This mechanism might explain why attentional orienting can be operated in parallel across modalities, whereas attentional resolving - that is, the processing of relevant information within each modality - may be carried out more or less independently within each modality [102-104].

Complementing these findings, however, are behavioral, electrophysiological [8, 26, 27] and neuroimaging studies [28] in humans that have identified several higher level factors, such as voluntarily oriented spatial attention and semantic congruency, that can robustly influence how integration of information across the senses occurs (see Box 1) [29-31]. Some of these factors were originally hinted at by the modality appropriateness hypothesis [29]. This hypothesis posits that in the case of bimodal stimulation, the sensory system with the higher acuity with respect to the most critical aspects of a task plays a predominant role on how multisensory inputs are integrated [32-36] – e.g., visual for spatial characteristics, auditory for temporal aspects (see Box 1). Additional factors are related to the unity assumption [30, 31], which corresponds to the degree to which observers infer (consciously or not) that two sensory inputs originate from a single common cause [29, 31] (see Box 1). These, and other findings showing robust influences of cognitive factors on multisensory integration suggest a more flexible process than previously thought, whereby attention can affect the effectiveness of multisensory integration in a top-down fashion [2, 37-45].

How can these apparently opposing ideas regarding the interplay between attention and multisensory integration (i.e., bottom-up, highly automatic, multisensory integration processes that can perhaps capture attention vs. top-down attentional mechanisms that can modulate multisensory processing) have evolved in the literature? One possible reason is that studies investigating the role of top-down influences of attention on multisensory integration and studies investigating the bottom-up influence of multisensory integration on the orienting of attention have been conducted more or less independently from each other. This disconnection may thus have led to opposing, seemingly mutually exclusive conclusions. Considering recent findings from the fields of cognitive psychology and cognitive neuroscience, we propose a framework aimed at unifying these seemingly discordant findings (Figure 1).

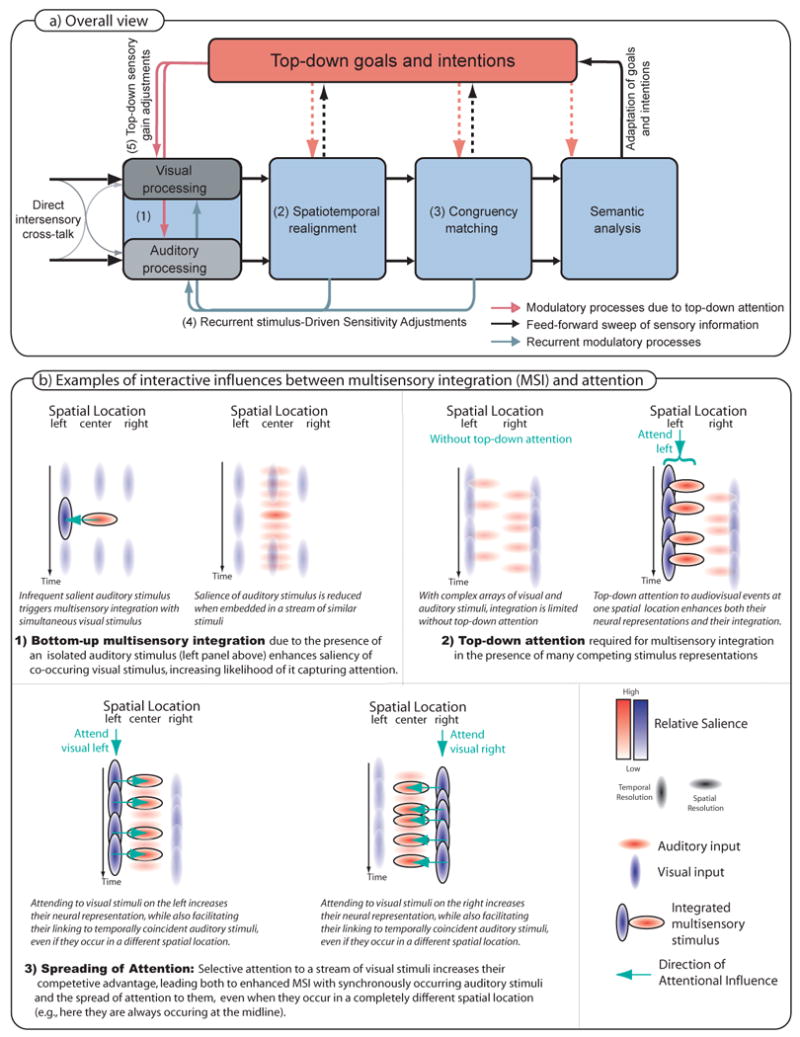

Figure 1. A framework for the interactions between multisensory integration and attention.

(a) Sequence of Processing Steps. Inputs from the sense organs are thought to interact cross-modally at multiple phases of the processing pathways, including at very early stages [8]. Stimuli can be integrated automatically if a number of conditions are satisfied: (1) if the initial saliency of one of the stimuli is at or above a critical threshold, preprocessing stages will attempt to spatio-temporally realign this stimulus with one of lesser salience [(2); spatio-temporal realignment]. The stimulus stream will then be monitored for congruence in stimulus patterns of the matched streams [(3); congruency detection]. If the realignment and/or congruency matching processes succeed, the neural responsiveness of brain areas in charge of processing the input streams will be increased [(4); recurrent stimulus-driven sensitivity adjustments] to sustain the integration process. If stimuli cannot be realigned or when incongruency is detected, the sensory gain would tend to be decreased. Note that we consider these potential gain adjustments to be mainly stimulus driven, and therefore a reflection of the bottom-up part of the interaction between multisensory integration and attention. If none of the stimuli is of sufficient saliency, top-down attention may be necessary to set up an initial selection of to-be-integrated stimuli [(5); top-down sensory gain adjustments]. The resulting boost in sensory sensitivity due to a top-down gain manipulation might then be sufficient to initiate the processes of spatio-temporal alignment and congruency matching that would otherwise not have occurred. In addition, top-down attention can modulate processing at essentially all of these stages of multisensory processing and integration.
(b) Three examples of interactive influences between multisensory integration and attention: (1) bottom-up multisensory integration, which can then drive a shift of attention; (2) the need for top-down attention for multisensory integration in the presence of many competing stimulus representations; (3) the spreading of attention across space and modality (visual to auditory).
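The processing sequence in Figure 1a can be read as a simple decision flow. The toy sketch below spells out that flow in code to make the ordering of the steps explicit. It is only an illustration under loud assumptions: the `Stimulus` class, all threshold and gain constants, and the rule that both realignment and congruency must succeed are hypothetical simplifications, not quantities or claims taken from the framework itself.

```python
from dataclasses import dataclass


@dataclass
class Stimulus:
    salience: float      # arbitrary units (hypothetical scale)
    onset_ms: float      # onset time in milliseconds
    location_deg: float  # azimuth in degrees
    pattern: str         # label used here as a stand-in for stimulus pattern


# All constants below are invented for illustration only.
SALIENCE_THRESHOLD = 0.5
MAX_ONSET_GAP_MS = 100.0
MAX_SPATIAL_GAP_DEG = 15.0
TOP_DOWN_GAIN = 2.0


def integration_outcome(a, b, top_down_attention=False):
    """Return a label describing the outcome for one cross-modal stimulus pair."""
    # Step (5): top-down attention boosts sensory gain before selection.
    gain = TOP_DOWN_GAIN if top_down_attention else 1.0
    # Step (1): at least one stimulus must reach the saliency threshold.
    if max(a.salience, b.salience) * gain < SALIENCE_THRESHOLD:
        return "no integration"
    # Step (2): spatio-temporal realignment is attempted.
    realigned = (abs(a.onset_ms - b.onset_ms) <= MAX_ONSET_GAP_MS
                 and abs(a.location_deg - b.location_deg) <= MAX_SPATIAL_GAP_DEG)
    # Step (3): congruency detection on the matched streams.
    congruent = a.pattern == b.pattern
    # Step (4): stimulus-driven gain adjustment (simplified to require both).
    return "gain increased" if realigned and congruent else "gain decreased"


tone = Stimulus(salience=0.9, onset_ms=0.0, location_deg=0.0, pattern="x")
flash = Stimulus(salience=0.2, onset_ms=10.0, location_deg=5.0, pattern="x")
print(integration_outcome(tone, flash))  # salient tone: bottom-up route
```

In this toy version, a pair of weak stimuli is not integrated unless `top_down_attention=True` lifts one of them above threshold, which mirrors case 2 of Figure 1b in a deliberately simplified way.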

A Framework for Interactions between Attention and Multisensory Integration

As noted above, and as reviewed in the sections to follow, the evidence in favor of the existence of bidirectional influences between attention and multisensory integration is considerable. Under certain circumstances the co-occurrence of stimuli in different modalities can lead preattentively and automatically to multisensory integration in a bottom-up fashion, which then makes the resulting event more likely to capture attention and thus capitalize on available processing resources [24]. In other situations, however, top-down directed attention can influence integration processes for selected stimulus combinations in the environment [40, 46]. Based on the pattern of findings in the literature, we propose a framework in which a key factor that determines the nature and directionality of these interactions is the stimulus complexity of the environment, and particularly the ongoing competition between the stimulus components in it.

More specifically, we propose (Figure 1a) that multisensory integration can, and will tend to, occur more or less pre-attentively in a scene when the amount of competition between stimuli is low. For example, under circumstances in which the stimulation in a task-irrelevant sensory modality is sparse (Figure 1b, case 1), the stimuli in that modality will tend to be intrinsically more salient simply because they occur infrequently. Because of their increased salience, these stimuli will tend to enhance the perceptual processing of corresponding (i.e., spatially and/or temporally aligned) sensory events in a concurrently active task-relevant modality. This stimulus-driven (i.e., bottom-up) cross-modal effect will tend to capture attention and processing resources, thereby facilitating attentional selection and/or attentional “resolving” (i.e., discrimination; see Box 2) of stimuli in the task-relevant modality. This multisensory-based enhancement can occur even in a context where attentional selection in the task-relevant sensory modality is challenging, such as in a difficult visual search task [24]. In contrast, when multiple stimuli within each modality are competing for processing resources, and thus the saliency of individual stimuli within the potentially facilitating modality is low, top-down selective attention is likely to be necessary in order for multisensory integration processes between the appropriately associated stimulus events to take place [41, 42, 46] (Figure 1b, case 2).

We focus below on three main forms in which these interactions between multisensory integration and attention can manifest themselves: (1) the stimulus-driven influences of multisensory integration on attention; (2) the influence of top-down directed attention on multisensory integration; and (3) the spreading of attention across modalities, which contains aspects of both top-down and bottom-up mechanisms.

Stimulus-driven Influences of Multisensory Integration on Attention

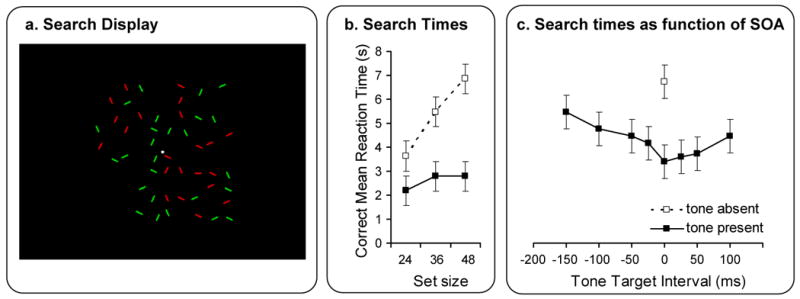

A clear demonstration of the possible involvement of stimulus-driven multisensory integration on attentional selection was recently provided using a difficult visual search task [24]. In this study visual targets were presented amongst an array of similar distractor stimuli (see Figure 2). Search times for the visual target increased with an increasing number of display items (distractors), as has typically been found in demanding unisensory visual search tasks [47, 48]. In these displays, targets and distractors changed color at random moments and irrelevantly with respect to the task. However, when a brief, spatially uninformative sound was presented concurrently with a target color change, search times became much faster and relatively independent of the number of distractor items, providing evidence that the auditory co-occurrence made the visual target pop out from the background elements.

Figure 2. Multisensory integration mechanisms affecting visual attention in a bottom-up driven fashion.

(a) Cluttered displays containing a variable number of short line segments were presented. Display elements continuously changed color from green to red (or vice versa) at random moments and locations. A short tone pip could be presented simultaneously with the color change of the target element. Participants were required to detect and report the orientation of the target element, consisting of a horizontal or vertical line amongst ±45° tilted line distractors. (b) In the absence of a sound, search times increased linearly with the number of distractor items in the display (white squares). In contrast, when the sound was present, search times became much shorter and independent of set size, indicating that the target stimulus popped out of the background (black squares). (c) Search times as a function of the relative stimulus onset asynchrony (SOA) between the color change of the target and the onset of the sound. Negative SOAs indicate that the tone preceded the visual target event, and positive SOAs indicate that the target event preceded the tone. (Data are from the condition with set size fixed at 48 elements.) Adapted, with permission, from ref [24].
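The contrast in panel (b) between search times that grow with set size and search times that stay flat is commonly summarized as a search slope (milliseconds per display item). The sketch below shows one way such a slope could be computed with an ordinary least-squares fit. The function name `search_slope` is hypothetical, and the numbers in the usage example are invented for illustration; they are not data from ref [24].

```python
def search_slope(set_sizes, search_times_ms):
    """Least-squares slope of search time against set size, in ms per item."""
    n = len(set_sizes)
    if n < 2 or n != len(search_times_ms):
        raise ValueError("need two or more paired observations")
    mean_x = sum(set_sizes) / n
    mean_y = sum(search_times_ms) / n
    sxx = sum((x - mean_x) ** 2 for x in set_sizes)
    if sxx == 0:
        raise ValueError("set sizes must not all be identical")
    sxy = sum((x - mean_x) * (y - mean_y)
              for x, y in zip(set_sizes, search_times_ms))
    return sxy / sxx


# Invented numbers, for illustration only (not taken from ref [24]).
set_sizes = [10, 20, 30]
no_sound_ms = [1000, 1500, 2000]    # search time grows with set size
with_sound_ms = [800, 800, 800]     # search time flat across set sizes

slope_no_sound = search_slope(set_sizes, no_sound_ms)
slope_with_sound = search_slope(set_sizes, with_sound_ms)
print(slope_no_sound, slope_with_sound)
```

A steep positive slope is the usual signature of effortful, item-by-item search, whereas a slope near zero is what "pop-out" refers to in this context.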

At first glance these findings might seem to contradict those of another visual-search study, in which auditory stimuli failed to facilitate the detection of a uniquely corresponding (temporally matched) visual event amongst visual distractors [49]. In that study, however, not only the visual stimuli but also the accessory acoustic events changed at relatively high frequencies, and were thus all of low saliency. Taken together, these two findings suggest that a co-occurring sound is likely to make a visual target pop out only when the sound is relatively rare and therefore a salient event by itself [50]. This conclusion is also consistent with data from another study, which showed that a sound can affect the processing of a synchronized visual input when it is a single salient event, but not when it is part of an ongoing stream [51].

The framework we are proposing here accounts for such bottom-up multisensory integration mechanisms because a relatively salient stimulus presented in one modality can elicit a neural response that is strong enough to be automatically linked to a weaker neural response to a stimulus in another modality (Figure 1b, case 1). We hypothesize that multisensory processes triggered by temporally aligned auditory input can affect the strength of the representation of the co-occurring visual stimulus, making it pop out of a background of competing stimuli. In contrast, when multiple stimuli are competing for processing resources, their neural representations may require top-down attention for the relevant cross-modally corresponding stimuli to be readily integrated and their processing effectively facilitated.

Influence of Top-Down Directed Attention on Multisensory Processing

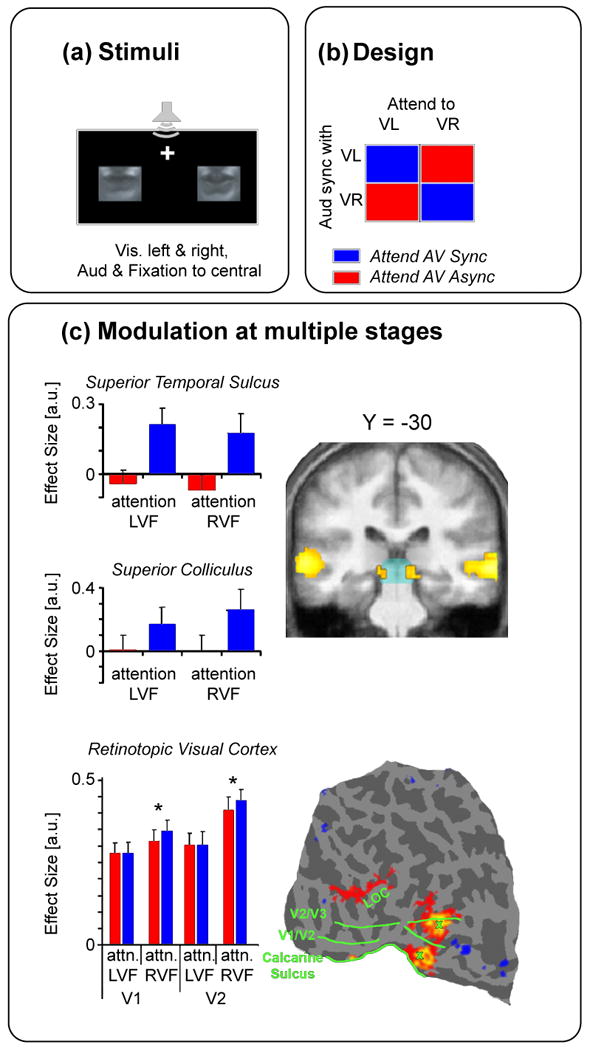

As mentioned above, recent studies have provided evidence for the influence of top-down attention on multisensory integration processes. In a recent EEG study, for example, it was shown that spatial attention can strongly influence multiple stages of multisensory processing, beginning as early as 80 ms post-stimulus [40]. Complementarily, a recent fMRI study [39] showed increased activity in multiple brain areas, including the superior temporal sulcus, striate visual cortex, extrastriate visual cortex, and the superior colliculus, when attention was directed to visual lip movements that matched an auditory spoken sentence, compared to when attention was directed to simultaneously presented but non-matching lip movements (Figure 3). Thus, these results again provide evidence for the ability of top-down attention to influence multisensory integration processes. They are also consistent with EEG data from another study showing that allocating visual attention towards irrelevant lip movements in the visual scene interferes with the recognition of audiovisual speech signals from an attended speaker [52]. Together, these results demonstrate that top-down attention can modulate multisensory processing, leading to either facilitation (in the case of rhythmically congruent inputs) or interference (in the case of rhythmically incongruent inputs).

Figure 3. Effects of top-down spatial attention on audiovisual speech perception.

(a) Layout of the experiment. Sounds were presented from one central location, while two laterally presented visual streams of lip movements were played. One of these streams was congruent with the auditory speech signals while the other stream was incongruent. (b) Attention was selectively oriented to either the left or the right visual stream, either of which could, in turn, be congruent or incongruent with the auditory speech stimuli. (c) Attending to the congruent stimuli resulted in increases in activation in several brain areas that are typically associated with multisensory integration. These areas included the superior temporal sulcus and superior colliculus (central panel), as well as large parts of the retinotopically organized visual areas V1 and V2. Adapted, with permission, from ref [39].

Further evidence for the impact of top-down attention on multisensory processing derives from studies using audiovisual speech illusion paradigms [42-44]. For instance, diverting attention to a secondary task reduces susceptibility to the McGurk effect [53], whereby an auditory phoneme dubbed onto incongruent visual lip movements leads to an illusory auditory percept (see Box 1). Interestingly, this reduced susceptibility to the McGurk effect due to the allocation of processing resources to another task was observed regardless of the sensory modality (visual, auditory, or tactile) in which the secondary task was performed [42, 43]. This result suggests that a cognitive manipulation that diverts limited processing resources away from the input that a perceptual event provides to one sensory modality can also reduce the integration of that input with the input from other sensory modalities [54]. Thus, when competition between different stimulus sequences is relatively low, we propose that audiovisual speech stimuli may integrate relatively automatically. However, when a secondary task is introduced, involving a diversion of attention from the event, competition ensues between the neural resources devoted to the secondary task and those resources necessary for processing the audiovisual speech stimuli. This, in turn, may reduce the automaticity of the congruency detection and, consequently, of the multisensory integration process.

It is important to distinguish the top-down attentional effects described above from some earlier behavioral [55-59] and electrophysiological [60-62] work on supramodal attention effects. In particular, earlier studies showed that top-down spatial attention in one modality not only induces enhanced processing of stimuli in that modality at the attended location, but also of stimuli in other (task-irrelevant) modalities at that location; for example, attending visually to a location in space results in the processing of auditory stimuli at that location also being enhanced. Other studies have shown that salient stimuli in one modality in a particular location can capture spatial attention and affect the processing of stimuli in another modality that occur shortly afterward at that same location [56, 59]. These latter findings suggest that spatial attention tends to be coordinated across modalities, both when voluntarily directed and when induced in a bottom-up cueing fashion. In both cases, however, the stimuli in the different modalities do not need to be presented concurrently to be enhanced; rather, they just need to occur in the spatially attended location. Thus, although such effects reflect fundamental mechanisms by which top-down factors modulate processing across modalities, they contrast with the studies mentioned above in which top-down attention modulates the actual integration of multisensory inputs that occur aligned in time [63].

Cross-modal Spreading of Attention

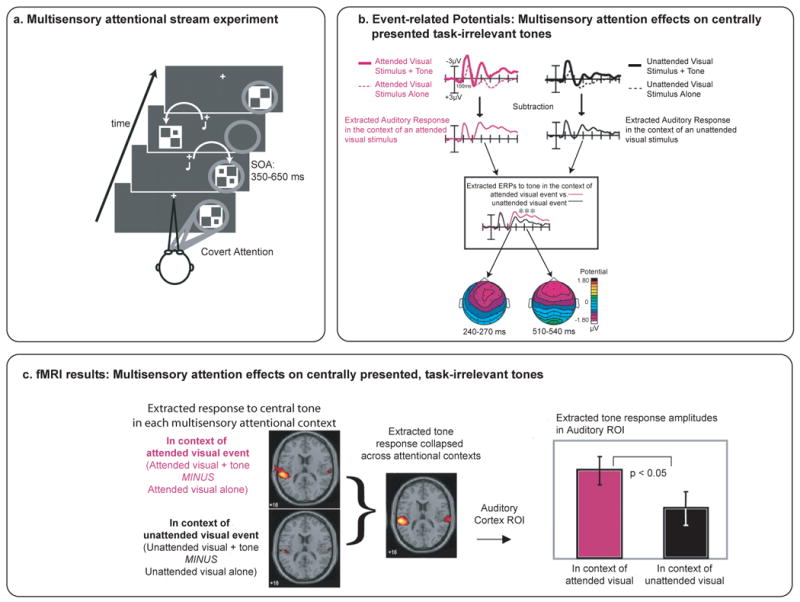

Another way that attention can interactively influence the processing of stimulus inputs in different sensory modalities that do occur at the same time has been termed cross-modal spreading of attention, which has been found to occur even when the visual and auditory stimuli arise from different spatial locations [37]. More specifically, it has been shown that a task-irrelevant, centrally presented, auditory stimulus can elicit a different brain response when paired in time with an attended versus an unattended visual stimulus presented laterally (Figure 4) [37]. This effect appeared as a prolonged, negative-polarity, fronto-central ERP deflection, beginning at ∼200 ms post-stimulus and lasting for hundreds of milliseconds, as well as a corresponding enhancement of fMRI activity in auditory cortex. The ERP effect resembled an activation known as the “late processing negativity,” a hallmark of the enhanced processing of attended unisensory auditory inputs [64]. This auditory enhanced processing effect was particularly striking because it was induced solely by whether a simultaneously occurring visual stimulus in a different spatial location was attended versus unattended. Such a result is thus consistent with a spread of attention across modalities and space to the temporally coincident auditory stimulus, occurring via a systems-level cascade of top-down and bottom-up influences. This cascade begins with top-down visuospatial attention that determines which stimulus in the visual modality is to be selectively processed. Then, presumably by means of an automatic (bottom-up) linking mechanism derived from the temporal coincidence of the multisensory components, attention spreads across modalities to encompass the auditory component, despite it being completely task-irrelevant and not even in the same spatial location.

Figure 4. Spreading of attention across a multisensory object.

(a) Experimental Design. Visual stimuli were flashed successively, and in random order, at left and right hemifields. On half of the trials, a task-irrelevant central tone was presented synchronously with the lateral visual stimulus. Participants were instructed to visually attend selectively to only one of the two locations. (b) ERP subtraction procedure and key results. ERPs elicited by attended visual stimuli that occurred alone were subtracted from ERPs elicited by attended visual stimuli that were accompanied by a tone, yielding the extracted ERP response to the central tones when they occurred in the context of an attended (lateral) visual stimulus. A similar subtraction procedure was applied to unattended-visual trials in order to yield the extracted ERP response to the central tones when they occurred in the context of an unattended (lateral) visual stimulus. An overlay of these two extracted ERPs showed that tones presented in the context of an attended visual stimulus elicited a prolonged negativity over fronto-central scalp areas beginning at around 200 ms post-stimulus. This effect resembles the late auditory processing negativity, which is a hallmark neural effect elicited during intramodal auditory attention. (c) fMRI results from the same paradigm. These results, extracted using an analogous contrast logic, showed that auditory stimuli presented in the context of an attended visual stimulus yielded an increase in brain activity in the auditory cortex, compared to the activation elicited by the same tone when it was presented in the context of an unattended visual stimulus. The enhanced processing of task-irrelevant auditory stimuli that occur simultaneously with an attended visual stimulus, even one occurring in a different location, suggests that the visual attention has spread across the components of the multisensory object to encompass the auditory part. Adapted, with permission, from ref [37]. Copyright © 2005, The National Academy of Sciences.
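The subtraction logic described in panel (b) can be written out as a short sketch. The code below treats each ERP as a plain list of voltages at matching time points and computes the two extracted tone responses and their difference. The function names and the toy waveforms are hypothetical and serve only to make the arithmetic explicit; they are not the analysis pipeline or data of ref [37].

```python
def subtract(erp_a, erp_b):
    """Point-by-point difference between two ERPs sampled at the same times."""
    if len(erp_a) != len(erp_b):
        raise ValueError("ERPs must have the same number of samples")
    return [a - b for a, b in zip(erp_a, erp_b)]


def extracted_tone_responses(av_att, v_att, av_unatt, v_unatt):
    """Return (tone response in attended context, in unattended context, difference)."""
    # Attended visual + tone minus attended visual alone.
    tone_att = subtract(av_att, v_att)
    # Unattended visual + tone minus unattended visual alone.
    tone_unatt = subtract(av_unatt, v_unatt)
    # Attention-related difference between the two extracted tone responses.
    return tone_att, tone_unatt, subtract(tone_att, tone_unatt)


# Toy two-sample waveforms in microvolts (invented, for illustration only).
av_att, v_att = [1.0, -2.0], [1.0, 0.5]
av_unatt, v_unatt = [0.5, -1.0], [0.5, 0.0]

tone_att, tone_unatt, effect = extracted_tone_responses(
    av_att, v_att, av_unatt, v_unatt)
print(tone_att, tone_unatt, effect)
```

A negative value in `effect` at a given sample corresponds, in this toy setting, to the tone response being more negative when the accompanying visual stimulus was attended, which is the direction of the effect described in the caption.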

Variants of this effect have recently been observed in other studies [38, 41, 65]. Moreover, a distinction has been made between the object-based multisensory spread of attention that occurs when stimuli are associated simply due to their co-occurrence in time, and a representation-based spread of attention for naturally associated stimulus pairs [38]. On the other hand, in some circumstances a task-irrelevant auditory stimulus that is semantically or representationally in conflict with a task-relevant visual stimulus can serve as an attention capturing distractor and increase attentional spread [66, 67]. Regardless, the currently available data suggest that the spatial-temporal linking of visual and auditory stimulation is initially organized at an early processing stage [37, 41, 68], but that higher-level cognitive representations can also modulate the cross-modal spreading of attention [38, 66, 67, 69].

In some ways, the spread of attention [37] can be considered to be a reversed version of the sound-induced (i.e., bottom-up) visual pop-out effect described earlier [24]. In the spread-of-attention case [37], it is visuospatial attention that determines what becomes more salient in the auditory world (Figure 1b, case 3). In the sound-induced visual pop-out case [24], an auditory stimulus determines what becomes more salient in the visual world (Figure 1b, case 1). Regardless, stimuli in one modality, or attention to stimuli in one modality, selectively influence the processing of co-occurring stimuli in another modality. Thus, these studies underscore the multifaceted, and bidirectional, ways by which attention and multisensory processing can interact [70].

Concluding remarks and future directions

While it is generally acknowledged that multisensory integration processes can influence the bottom-up orienting of attention to salient stimuli [23], the role of top-down attention and the interplay between bottom-up and top-down mechanisms during multisensory integration processes remain a matter of ongoing debate. On the one hand, there is relatively little effect of top-down attention on multisensory integration under conditions of low competition between stimuli [33]. On the other hand, robust top-down effects on multisensory integration processes have been observed under higher degrees of competition between successive or concurrent inputs to different modalities [40, 46]. Accordingly, we suggest that the degree of competition between neural representations of stimulus inputs is a major determinant for the necessity of top-down attention, thereby helping to explain the apparently contradictory findings in the literature.

As such, we identify several important directions and challenges for future research (see Box 3). One promising direction will be to develop multisensory attention paradigms that systematically modulate the degree of competition between stimuli within an experiment, for instance by manipulating perceptual load [71] or stimulus delivery rates, while simultaneously manipulating top-down attentional factors. Such an approach would likely be helpful for determining more precisely which aspects of multisensory integration may be affected by the ongoing interplay between sensory input competition and top-down attentional influence, and the mechanisms by which these interactions occur.
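
As a purely illustrative sketch of such a paradigm, the code below builds a fully crossed, randomized trial list in which the degree of stimulus competition and the top-down attention instruction are manipulated within the same experiment. The factor levels and all names (`Trial`, `build_trial_list`, "low_load", and so on) are hypothetical placeholders chosen here; they are not taken from any of the studies reviewed.

```python
import itertools
import random
from typing import List, NamedTuple, Sequence


class Trial(NamedTuple):
    competition: str  # e.g., perceptual load or stimulus delivery rate
    attention: str    # top-down attention instruction
    stimulus: str     # unisensory or multisensory stimulus type


def build_trial_list(
    competition_levels: Sequence[str],
    attention_conditions: Sequence[str],
    stimulus_types: Sequence[str],
    repeats: int = 1,
    seed: int = 0,
) -> List[Trial]:
    """Cross all factor levels, repeat each cell, and shuffle reproducibly."""
    cells = [
        Trial(c, a, s)
        for c, a, s in itertools.product(
            competition_levels, attention_conditions, stimulus_types
        )
    ]
    trials = cells * repeats
    random.Random(seed).shuffle(trials)  # fixed seed gives a repeatable order
    return trials


# Hypothetical 2 x 2 x 3 design with 10 repetitions per cell.
trials = build_trial_list(
    competition_levels=["low_load", "high_load"],
    attention_conditions=["attend_visual", "attend_auditory"],
    stimulus_types=["visual", "auditory", "audiovisual"],
    repeats=10,
)
```

Crossing the factors within one session, rather than comparing across studies, is what would allow the interaction between competition and top-down attention to be estimated directly.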

Box 3: Questions for Future Research.

What is the effect of stimulus competition on the need for top-down attention in multisensory integration?

Is there a differential influence of spatial and non-spatial attention on multisensory integration, and if so, which stimulus properties are affected?

What are the neural mechanisms by which competition influences multisensory integration and how can attention influence these mechanisms?

Does the complexity of a perceptual task influence the requirement of attention in multisensory integration, or is the attentional requirement only a function of the complexity of the stimulus input array, regardless of the task?

What are the circumstances and mechanisms that control whether the processing of a task-relevant stimulus in one modality is enhanced versus diminished by a task-irrelevant conflicting stimulus in a second modality?

What is the relationship between the spread of attention and sound-induced visual pop-out effects?

What role does attention play in cross-modal processing deficits in populations with neuropsychological or psychiatric disorders, such as autism and schizophrenia?

How does the relationship between attention and multisensory integration change across the lifespan?

Another challenge lies in more fully and precisely delineating the circumstances in which attention affects multisensory integration, and the mechanisms by which it does so. For instance, top-down visuospatial attention can have a strong impact on the processing of concurrent auditory stimuli, as shown by the visual-to-auditory spreading-of-attention effect. However, it appears that spatial attention in the auditory modality has a much less pronounced effect on the integration of visual stimuli that are not presented at the same location. Auditory inputs, and attention to them, can nevertheless affect the processing of visual stimuli, particularly in terms of their temporal characteristics. This tendency for temporal dominance of the auditory modality, presumably due to the importance of temporal processing in audition, is reflected by the sound-induced double-flash illusion [44] (see Box 1), as well as by other data suggesting that auditory attention tends to temporally align the processing of concurrent visual stimuli [72].

We note that the vast majority of studies on multisensory processing and attention reviewed here have used audio-visual stimulus material. While vision and audition have by far been the most frequently investigated senses in multisensory research, it will be important in the future to delineate which aspects of the findings and framework presented here can be generalized to other combinations of sensory modalities (e.g., those involving touch, smell, taste, or proprioception).

Finally, research on the relationship between multisensory processing and attention may also have critical implications for our understanding of multisensory integration deficits in neurological and psychiatric populations, such as in those with hemispatial neglect, schizophrenia [73], and autism [74, 75], as well as multisensory integration decrements in the aging population [76, 77]. For example, many clinical symptoms in schizophrenia seem to be the result of a pathological connectivity between cortical networks, including between sensory processing networks, during cognitive tasks [78]. It is therefore possible, perhaps even likely, that an altered interplay between attention and multisensory processes will be found in this group of patients [79]. Thus, the study of these interactions in clinical populations is important for the understanding of deficits in these populations, as well as for advancing our understanding of the underlying neural mechanisms.

We live in a multisensory world in which we are continually deluged with stimulus input through multiple sensory pathways. For effective cognitive functioning, we must continually select and appropriately integrate those inputs that are the most relevant to our behavioral goals from moment to moment. Thus, the dynamic and bidirectional interplay between attentional selection and multisensory processing is fundamental to successful behavior. This review has aimed at organizing the present state of the research in this important area of cognitive science, and at developing a conceptual framework that we hope will be helpful for future research.

Acknowledgments

We wish to thank Erik van der Burg, Scott Fairhall, and Emiliano Macaluso for providing figure material for this paper, and Sarah Donohue and Matthijs Noordzij for helpful comments on an earlier version. The effort for this work was supported by funding from the Institute for Behavioral Research at the University of Twente to D.T., by a grant from the German Research Foundation (SE 1859/1-1) to D.S., by grants from the MICINN (SEJ2007-64103/PSIC - CDS2007-00012) and DIUE (SRG2009-092) to S.S.-F., and by grants from the U.S. NIH (RO1-NS-051048) and NSF (#0524031) to M.G.W.

Glossary

- Attentional Orienting

Attention involves mechanisms whereby processing resources are preferentially allocated toward particular locations, features, or objects. Attentional orienting refers to the process responsible for moving the focus of attention from one location, feature, or object to another. Orienting can occur covertly, that is, in the absence of movements of the sensory receptor surfaces (e.g., eyes, ears), as well as overtly, where the shift is accompanied by a reorienting of the sensory receptors (e.g., by a head turn) to the newly attended location or object.

- Bottom-up

A form of stimulus-driven selection that is mainly determined by the ability of sensory events in the environment to summon processing resources. This type of selection is invoked relatively independently of voluntary control; rather, stimulus salience (see entry below) is the driving factor. Particularly salient stimuli (e.g., sudden motion in an otherwise still visual scene or loud sounds in an otherwise quiet room), or other stimuli for which an individual has a low detection threshold (e.g., one's own name), attract attention in a bottom-up fashion.

- McGurk effect

An audiovisual illusion in which an auditory phoneme (e.g., /b/) dubbed onto incongruent visual lip movements (e.g., [g]) tends to lead to illusory auditory percepts that are typically intermediate between the actual visual and auditory inputs (e.g., /d/), completely dominated by the visual input (e.g., /g/), or a combination of the two (e.g., /bg/). The McGurk effect occurs in the context of isolated syllables, words, or even whole sentences.

- Modality Appropriateness

A framework of multisensory integration based on the observation that some stimulus characteristics are processed more accurately in one sensory modality than in another. For instance, vision in general has a higher spatial resolution than audition, whereas audition has a higher temporal resolution than vision. According to this framework, information from visual stimuli tends to dominate the perceptual outcome of the spatial characteristics of audiovisual events (sometimes causing a shift of the apparent location of an auditory stimulus toward the location of the visual event). Conversely, the perceived temporal characteristics of an audiovisual event tend to be dominated by those of the auditory component.

- Pop-out

A perceptual phenomenon whereby a stimulus with a particularly distinctive feature relative to its surrounding background triggers quick attentional orienting and leads to rapid detection. It is often used to describe the fact that finding such particularly distinctive objects within a visual display is highly efficient and not affected by the number of distractor elements in the scene. High stimulus salience (see entry below) leads to pop-out.

- Resolving

The process of extracting relevant information from an attended stimulus.

- Retinotopic

Spatial organization of a group of neurons based on a topographical arrangement whereby their responses map stimulus locations in the retina in a more or less orderly fashion across a brain area. In a retinotopically organized brain area, neurons involved in processing adjacent parts of the visual field are also located adjacently. This organization is most clearly seen in early (i.e., lower-level) areas of the visual pathway, but many higher-order cortical areas involved in processing visual information also show rough retinotopic organization.

- Salience

A characteristic of an object or event that makes it stand out from its context. Visual objects are said to be highly salient when they have a particularly distinctive feature with respect to the neighboring items and the background, or if they occur suddenly. For example, a bright light spot within an otherwise empty, dark context is highly salient. Salient stimuli are more likely to capture attention (see Bottom-up entry).

- Sound-induced double-flash illusion

An audiovisual illusion in which a single flash of light, presented concurrently with a train of several (two or three) short tone-pips, is perceived as two (or more) flashes. This phenomenon is an example of the tendency of auditory stimuli to dominate the perception of the temporal characteristics of an audiovisual event.

- Stimulus congruence

The match of one or more features across two stimuli, stimulus components, or stimulus modalities. Congruence can be defined in terms of temporal characteristics, spatial characteristics, or higher-level informational content (such as semantics). In audiovisual speech perception, congruence typically refers to whether a sequence of auditory speech sounds matches the lip movements being concurrently presented. Incongruence underlies some multisensory phenomena, such as the McGurk effect and the ventriloquism effect (see Box 1).

- Stimulus-driven

A defining feature of bottom-up processing (see entry above); refers to a process that is triggered or dominated by current sensory input.

- Top-down

A mode of attentional orienting whereby processing resources are allocated according to internal goals or states of the observer. It is often used to refer to selective processing and attentional orienting triggered in a voluntary fashion.

- Ventriloquism effect

An audiovisual illusion in which the apparent location of an auditory stimulus is perceived as closer to the location of a concurrent visual cue than is actually the case.

References

- 1. Urbantschitsch V. Über den Einfluss einer Sinneserregung auf die übrigen Sinnesempfindungen. Arch gesch Psych. 1880;42:155–175.

- 2. Spence C, Driver J. Cross-Modal Space and Cross-Modal Attention. Oxford University Press; 2004.

- 3. Stein B, Meredith MA. The merging of the senses. MIT Press; 1993.

- 4. Wallace MT, et al. Multisensory integration in the superior colliculus of the alert cat. J Neurophysiol. 1998;80:1006–1010. doi: 10.1152/jn.1998.80.2.1006.

- 5. Meredith MA. On the neuronal basis for multisensory convergence: A brief overview. Cogn Brain Res. 2002;14:31–40. doi: 10.1016/s0926-6410(02)00059-9.

- 6. Welch RB, Warren DH. Intersensory Interactions. In: Kauffman KR, Thomas JP, editors. Handbook of Perception and Human Performance Volume 1: Sensory Processes and Perception. Wiley; 1986. pp. 1–36.

- 7. Holmes NP. The principle of inverse effectiveness in multisensory integration: some statistical considerations. Brain Topogr. 2009;21:168–176. doi: 10.1007/s10548-009-0097-2.

- 8. Giard MH, Peronnet F. Auditory-visual integration during multimodal object recognition in humans: A behavioral and electrophysiological study. J Cogn Neurosci. 1999;11:473–490. doi: 10.1162/089892999563544.

- 9. Foxe JJ, Schroeder CE. The case for feedforward multisensory convergence during early cortical processing. NeuroReport. 2005;16:419–423. doi: 10.1097/00001756-200504040-00001.

- 10. Schroeder CE, Foxe J. Multisensory contributions to low-level, ‘unisensory’ processing. Curr Opin Neurobiol. 2005;15:454–458. doi: 10.1016/j.conb.2005.06.008.

- 11. Lakatos P, et al. Neuronal Oscillations and Multisensory Interaction in Primary Auditory Cortex. Neuron. 2007;53:279–292. doi: 10.1016/j.neuron.2006.12.011.

- 12. Angelaki DE, et al. Multisensory integration: psychophysics, neurophysiology, and computation. Curr Opin Neurobiol. 2009;19:452–458. doi: 10.1016/j.conb.2009.06.008.

- 13. Driver J, Noesselt T. Multisensory Interplay Reveals Crossmodal Influences on ‘Sensory-Specific’ Brain Regions, Neural Responses, and Judgments. Neuron. 2008;57:11–23. doi: 10.1016/j.neuron.2007.12.013.

- 14. Kayser C, Logothetis NK. Do early sensory cortices integrate cross-modal information? Brain Struct Funct. 2007;212:121–132. doi: 10.1007/s00429-007-0154-0.

- 15. Ernst MO, Bülthoff HH. Merging the senses into a robust percept. Trends Cogn Sci. 2004;8:162–169. doi: 10.1016/j.tics.2004.02.002.

- 16. Ernst MO, Banks MS. Humans integrate visual and haptic information in a statistically optimal fashion. Nature. 2002;415:429–433. doi: 10.1038/415429a.

- 17. Calvert GA. Crossmodal processing in the human brain: Insights from functional neuroimaging studies. Cereb Cortex. 2001;11:1110–1123. doi: 10.1093/cercor/11.12.1110.

- 18. Beauchamp MS. Statistical criteria in fMRI studies of multisensory integration. Neuroinformatics. 2005;3:93–113. doi: 10.1385/NI:3:2:093.

- 19. Colonius H, Diederich A. Multisensory interaction in saccadic reaction time: A time-window-of-integration model. J Cogn Neurosci. 2004;16:1000–1009. doi: 10.1162/0898929041502733.

- 20. Pashler H. The Psychology of Attention. Cambridge University Press; 1999.

- 21. Theeuwes J. Exogenous and Endogenous Control of Attention: the Effect of Visual Onsets and Offsets. Percept Psychophys. 1991;49:83–90. doi: 10.3758/bf03211619.

- 22. Driver J. Enhancement of selective listening by illusory mislocation of speech sounds due to lip-reading. Nature. 1996;381:66–68. doi: 10.1038/381066a0.

- 23. Stein BE, et al. Multisensory Integration in Single Neurons of the Midbrain. In: Calvert G, et al., editors. The Handbook of Multisensory Processes. MIT Press; 2005. pp. 243–264.

- 24. Van der Burg E, et al. Pip and Pop: Nonspatial Auditory Signals Improve Spatial Visual Search. J Exp Psychol Hum Percept Perform. 2008;34:1053–1065. doi: 10.1037/0096-1523.34.5.1053.

- 25. Van der Burg E, et al. Poke and pop: Tactile-visual synchrony increases visual saliency. Neurosci Lett. 2009;450:60–64. doi: 10.1016/j.neulet.2008.11.002.

- 26. Molholm S, et al. Multisensory auditory-visual interactions during early sensory processing in humans: A high-density electrical mapping study. Brain Res Cogn Brain Res. 2002;14:115–128. doi: 10.1016/s0926-6410(02)00066-6.

- 27. Fort A, et al. Dynamics of cortico-subcortical cross-modal operations involved in audio-visual object detection in humans. Cereb Cortex. 2002;12:1031–1039. doi: 10.1093/cercor/12.10.1031.

- 28. Calvert GA, et al. Evidence from functional magnetic resonance imaging of crossmodal binding in the human heteromodal cortex. Curr Biol. 2000;10:649–657. doi: 10.1016/s0960-9822(00)00513-3.

- 29. Welch RB, Warren DH. Immediate perceptual response to intersensory discrepancy. Psychol Bull. 1980;88:638–667.

- 30. Vatakis A, Spence C. Crossmodal binding: Evaluating the “unity assumption” using audiovisual speech stimuli. Percept Psychophys. 2007;69:744–756. doi: 10.3758/bf03193776.

- 31. Welch RB. Meaning, attention, and the “unity assumption” in the intersensory bias of spatial and temporal perceptions. In: A G, editor. Cognitive contributions to the perception of spatial and temporal events. Elsevier; 1999. pp. 371–387.

- 32. Navarra J, et al. Assessing the role of attention in the audiovisual integration of speech. Information Fusion. 2010;11:4–11.

- 33. Bertelson P, et al. The ventriloquist effect does not depend on the direction of deliberate visual attention. Percept Psychophys. 2000;62:321–332. doi: 10.3758/bf03205552.

- 34. Vroomen J, et al. The ventriloquist effect does not depend on the direction of automatic visual attention. Percept Psychophys. 2001;63:651–659. doi: 10.3758/bf03194427.

- 35. Stekelenburg JJ, et al. Illusory sound shifts induced by the ventriloquist illusion evoke the mismatch negativity. Neurosci Lett. 2004;357:163–166. doi: 10.1016/j.neulet.2003.12.085.

- 36. Bonath B, et al. Neural Basis of the Ventriloquist Illusion. Curr Biol. 2007;17:1697–1703. doi: 10.1016/j.cub.2007.08.050.

- 37. Busse L, et al. The spread of attention across modalities and space in a multisensory object. Proc Nat Acad Sci U S A. 2005;102:18751–18756. doi: 10.1073/pnas.0507704102.

- 38. Fiebelkorn IC, et al. Dual mechanisms for the cross-sensory spread of attention: How much do learned associations matter? Cereb Cortex. 2010;20:109–120. doi: 10.1093/cercor/bhp083.

- 39. Fairhall SL, Macaluso E. Spatial attention can modulate audiovisual integration at multiple cortical and subcortical sites. Eur J Neurosci. 2009;29:1247–1257. doi: 10.1111/j.1460-9568.2009.06688.x.

- 40. Talsma D, Woldorff MG. Selective attention and multisensory integration: Multiple phases of effects on the evoked brain activity. J Cogn Neurosci. 2005;17:1098–1114. doi: 10.1162/0898929054475172.

- 41. Talsma D, et al. Selective attention and audiovisual integration: Is attending to both modalities a prerequisite for early integration? Cereb Cortex. 2007;17:679–690. doi: 10.1093/cercor/bhk016.

- 42. Alsius A, et al. Audiovisual integration of speech falters under high attention demands. Curr Biol. 2005;15:839–843. doi: 10.1016/j.cub.2005.03.046.

- 43. Alsius A, et al. Attention to touch weakens audiovisual speech integration. Exp Brain Res. 2007;183:399–404. doi: 10.1007/s00221-007-1110-1.

- 44. Mishra J, et al. Effect of Attention on Early Cortical Processes Associated with the Sound-Induced Extra Flash Illusion. J Cogn Neurosci. 2010;22:1714–1729. doi: 10.1162/jocn.2009.21295.

- 45. Soto-Faraco S, Alsius A. Conscious access to the unisensory components of a cross-modal illusion. NeuroReport. 2007;18:347–350. doi: 10.1097/WNR.0b013e32801776f9.

- 46. Van Ee R, et al. Multisensory congruency as a mechanism for attentional control over perceptual selection. J Neurosci. 2009;29:11641–11649. doi: 10.1523/JNEUROSCI.0873-09.2009.

- 47. Treisman AM, Gelade G. A feature-integration theory of attention. Cogn Psychol. 1980;12:97–136. doi: 10.1016/0010-0285(80)90005-5.

- 48. Wolfe JM. Moving towards solutions to some enduring controversies in visual search. Trends Cogn Sci. 2003;7:70–76. doi: 10.1016/s1364-6613(02)00024-4.

- 49. Fujisaki W, et al. Visual search for a target changing in synchrony with an auditory signal. Proc R Soc B Biol Sci. 2006;273:865–874. doi: 10.1098/rspb.2005.3327.

- 50. Van der Burg E, et al. Efficient visual search from synchronized auditory signals requires transient audiovisual events. PLoS ONE. 2010;5:E10664. doi: 10.1371/journal.pone.0010664.

- 51. Vroomen J, De Gelder B. Sound enhances visual perception: Cross-modal effects of auditory organization on vision. J Exp Psychol Hum Percept Perform. 2000;26:1583–1590. doi: 10.1037//0096-1523.26.5.1583.

- 52. Senkowski D, et al. Look who's talking: The deployment of visuospatial attention during multisensory speech processing under noisy environmental conditions. NeuroImage. 2008;43:379–387. doi: 10.1016/j.neuroimage.2008.06.046.

- 53. McGurk H, MacDonald J. Hearing lips and seeing voices. Nature. 1976;264:746–748. doi: 10.1038/264746a0.

- 54. Hugenschmidt CE, et al. Suppression of multisensory integration by modality-specific attention in aging. NeuroReport. 2009;20:349–353. doi: 10.1097/WNR.0b013e328323ab07.

- 55. Santangelo V, et al. Interactions between voluntary and stimulus-driven spatial attention mechanisms across sensory modalities. J Cogn Neurosci. 2009;21:2384–2397. doi: 10.1162/jocn.2008.21178.

- 56. Spence C, Driver J. Audiovisual Links in Endogenous Covert Spatial Attention. J Exp Psychol Hum Percept Perform. 1996;22:1005–1030. doi: 10.1037//0096-1523.22.4.1005.

- 57. Spence C, Driver J. Audiovisual links in exogenous covert spatial orienting. Percept Psychophys. 1997;59:1–22. doi: 10.3758/bf03206843.

- 58. Spence C, et al. Cross-modal selective attention: On the difficulty of ignoring sounds at the locus of visual attention. Percept Psychophys. 2000;62:410–424. doi: 10.3758/bf03205560.

- 59. Koelewijn T, et al. Auditory and Visual Capture During Focused Visual Attention. J Exp Psychol Hum Percept Perform. 2009;35:1303–1315. doi: 10.1037/a0013901.

- 60. Teder-Sälejärvi WA, et al. Intra-modal and cross-modal spatial attention to auditory and visual stimuli. An event-related brain potential study. Brain Res Cogn Brain Res. 1999;8:327–343. doi: 10.1016/s0926-6410(99)00037-3.

- 61. Eimer M, Schröger E. Effects of intermodal attention and cross-modal attention in spatial attention. Psychophysiology. 1998;35:313–327. doi: 10.1017/s004857729897086x.

- 62. Hillyard SA, et al. Event-related brain potentials and selective attention to different modalities. In: Reinoso-Suarez F, Aimone-Marsan F, editors. Cortical Integration. Raven Press; 1984. pp. 395–413.

- 63. McDonald JJ, et al. Multisensory integration and crossmodal attention effects in the human brain. Science. 2001;292:1791. doi: 10.1126/science.292.5523.1791a.

- 64. Näätänen R. Processing negativity: An evoked-potential reflection. Psychol Bull. 1982;92:605–640. doi: 10.1037/0033-2909.92.3.605.

- 65. Degerman A, et al. Human brain activity associated with audiovisual perception and attention. NeuroImage. 2007;34:1683–1691. doi: 10.1016/j.neuroimage.2006.11.019.

- 66. Zimmer U, et al. The electrophysiological time course and interaction of stimulus conflict and the multisensory spread of attention. Eur J Neurosci. 2010;31:1744–1754. doi: 10.1111/j.1460-9568.2010.07229.x.

- 67. Zimmer U, et al. Multisensory conflict modulates the spread of visual attention across a multisensory object. NeuroImage. 2010. doi: 10.1016/j.neuroimage.2010.04.245.

- 68. Molholm S, et al. Object-based attention is multisensory: Co-activation of an object's representation in ignored sensory modalities. Eur J Neurosci. 2007;26:499–509. doi: 10.1111/j.1460-9568.2007.05668.x.

- 69. Iordanescu L, et al. Characteristic sounds facilitate visual search. Psychon Bull Rev. 2008;15:548–554. doi: 10.3758/pbr.15.3.548.

- 70. Soto-Faraco S, Alsius A. Deconstructing the McGurk-MacDonald Illusion. J Exp Psychol Hum Percept Perform. 2009;35:580–587. doi: 10.1037/a0013483.

- 71. Lavie N. Perceptual Load as a Necessary Condition for Selective Attention. J Exp Psychol Hum Percept Perform. 1995;21:451–468. doi: 10.1037//0096-1523.21.3.451.

- 72. Talsma D, et al. Intermodal attention affects the processing of the temporal alignment of audiovisual stimuli. Exp Brain Res. 2009;198:313–328. doi: 10.1007/s00221-009-1858-6.

- 73. Ross LA, et al. Impaired multisensory processing in schizophrenia: Deficits in the visual enhancement of speech comprehension under noisy environmental conditions. Schizophr Res. 2007;97:173–183. doi: 10.1016/j.schres.2007.08.008.

- 74. Magnée MJCM, et al. Atypical processing of fearful face-voice pairs in Pervasive Developmental Disorder: An ERP study. Clin Neurophysiol. 2008;119:2004–2010. doi: 10.1016/j.clinph.2008.05.005.

- 75. Magnée MJCM, et al. Audiovisual speech integration in pervasive developmental disorder: Evidence from event-related potentials. J Child Psychol Psychiatry Allied Discip. 2008;49:995–1000. doi: 10.1111/j.1469-7610.2008.01902.x.

- 76. Hugenschmidt CE, et al. Preservation of crossmodal selective attention in healthy aging. Exp Brain Res. 2009;198:273–285. doi: 10.1007/s00221-009-1816-3.

- 77. Peiffer AM, et al. Aging and the interaction of sensory cortical function and structure. Hum Brain Mapp. 2009;30:228–240. doi: 10.1002/hbm.20497.

- 78. Jardri R, et al. Neural functional organization of hallucinations in schizophrenia: Multisensory dissolution of pathological emergence in consciousness. Conscious Cogn. 2009;18:449–457. doi: 10.1016/j.concog.2008.12.009.

- 79. Foxe J, Molholm S. Ten Years at the Multisensory Forum: Musings on the Evolution of a Field. Brain Topogr. 2009;21:149–154. doi: 10.1007/s10548-009-0102-9.

- 80.Meredith MA, Stein BE. Interactions among converging sensory inputs in the superior colliculus. Science. 1983;221:389–391. doi: 10.1126/science.6867718. [DOI] [PubMed] [Google Scholar]

- 81.Laurienti PJ, et al. On the use of superadditivity as a metric for characterizing multisensory integration in functional neuroimaging studies. Exp Brain Res. 2005;166:289–297. doi: 10.1007/s00221-005-2370-2. [DOI] [PubMed] [Google Scholar]

- 82.Bertelson P. Starting from the ventriloquist: The perception of multimodal events. In: Sabourin M, Fergus C, editors. Advances in Psychological Science vol 2: Biological and Cognitive Aspects. Psychology Press; 1998. pp. 419–439. [Google Scholar]

- 83.Pick HL, et al. Sensory Conflict in Judgements of Spatial Direction. Percept Psychophysiol. 1969;6:203–205. [Google Scholar]

- 84.Platt BB, Warren DH. Auditory Localization: The importance of Eye-Movements and a textured visual environment. Percept Psychophysiol. 1972;12:245–248. [Google Scholar]

- 85.Connor S. Dumbstruck: A cultural history of Ventriloquism. Oxford University Press; 2000. [Google Scholar]

- 86.Desimone R, Duncan J. Neural mechanisms of selective visual attention. Annu Rev Neurosci. 1995;18:193–222. doi: 10.1146/annurev.ne.18.030195.001205. [DOI] [PubMed] [Google Scholar]

- 87.Serences JT, Boynton GM. Feature-Based Attentional Modulations in the Absence of Direct Visual Stimulation. Neuron. 2007;55:301–312. doi: 10.1016/j.neuron.2007.06.015. [DOI] [PubMed] [Google Scholar]

- 88.Theeuwes J, et al. On the Time Course of Top-Down and Bottom-Up Control of Visual Attention. In: Monsel S, Driver J, editors. Attention and Performance. Vol. 18. MIT Press; 2000. pp. 105–124. [Google Scholar]

- 89.Yantis S, Jonides J. Abrupt Visual Onsets and Selective Attention -Evidence from Visual-Search. J Exp Psychol Hum Percept Perform. 1984;10:601–621. doi: 10.1037//0096-1523.10.5.601. [DOI] [PubMed] [Google Scholar]

- 90.Yantis S, Serences JT. Cortical mechanisms of space-based and object-based attentional control. Curr Opin in Neurobiol. 2003;13:187–193. doi: 10.1016/s0959-4388(03)00033-3. [DOI] [PubMed] [Google Scholar]

- 91.Woldorff MG, et al. Functional Parcellation of Attentional Control Regions of the Brain. J Cogn Neurosci. 2004;16:149–165. doi: 10.1162/089892904322755638. [DOI] [PubMed] [Google Scholar]

- 92.Corbetta M, Shulman GL. Control of goal-directed and stimulus-driven attention in the brain. Nat Rev Neurosci. 2002;3:201–215. doi: 10.1038/nrn755. [DOI] [PubMed] [Google Scholar]

- 93.Moore T, et al. Visuomotor origins of covert spatial attention. Neuron. 2003;40:671–683. doi: 10.1016/s0896-6273(03)00716-5. [DOI] [PubMed] [Google Scholar]

- 94.Grent-'t-Jong T, Woldorff MG. Timing and sequence of brain activity in top-down control of visual-spatial attention. PLoS Biol. 2007;5:0114–0126. doi: 10.1371/journal.pbio.0050012. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 95.Kim YH, et al. The large-scale neural network for spatial attention displays multifunctional overlap but differential asymmetry. NeuroImage. 1999;9:269–277. doi: 10.1006/nimg.1999.0408.

- 96.Wu CT, et al. The neural circuitry underlying the executive control of auditory spatial attention. Brain Res. 2007;1134:187–198. doi: 10.1016/j.brainres.2006.11.088.

- 97.Eimer M, Driver J. Crossmodal links in endogenous and exogenous spatial attention: Evidence from event-related brain potential studies. Neurosci Biobehav Rev. 2001;25:497–511. doi: 10.1016/s0149-7634(01)00029-x.

- 98.McDonald JJ, et al. Neural substrates of perceptual enhancement by cross-modal spatial attention. J Cogn Neurosci. 2003;15:10–19. doi: 10.1162/089892903321107783.

- 99.Eimer M, et al. Cross-modal links in endogenous spatial attention are mediated by common external locations: Evidence from event-related brain potentials. Exp Brain Res. 2001;139:398–411. doi: 10.1007/s002210100773.

- 100.Hillyard SA, et al. Sensory gain control (amplification) as a mechanism of selective attention: Electrophysiological and neuroimaging evidence. Philos Trans R Soc Lond B Biol Sci. 1998;353:1257–1270. doi: 10.1098/rstb.1998.0281.

- 101.Khayat PS, et al. Visual information transfer across eye movements in the monkey. Vision Res. 2004;44:2901–2917. doi: 10.1016/j.visres.2004.06.018.

- 102.Talsma D, et al. Attentional capacity for processing concurrent stimuli is larger across sensory modalities than within a modality. Psychophysiology. 2006;43:541–549. doi: 10.1111/j.1469-8986.2006.00452.x.

- 103.Klemen J, et al. Perceptual load interacts with stimulus processing across sensory modalities. Eur J Neurosci. 2009;29:2426–2434. doi: 10.1111/j.1460-9568.2009.06774.x.

- 104.de Jong R, et al. Dynamic crossmodal links revealed by steady-state responses in auditory-visual divided attention. Int J Psychophysiol. 2010;75:3–15. doi: 10.1016/j.ijpsycho.2009.09.013.