The emergence of evidence-based medicine (EBM) has been regarded as one of the most significant advances in medicine over the past century. However, many plastic surgeons do not incorporate EBM into their daily practice, effectively denying patients the most appropriate treatment and, at the same time, possibly squandering already scarce health care resources. Levels of evidence (LOE) were described more than 30 years ago and have been increasingly used by surgical journals not only to emphasize the importance of proper study design, but also to help readers appraise the literature and to encourage researchers to produce high-quality evidence. Given the large amount of health care spending reportedly wasted each year in Canada and around the world, it is incumbent on clinicians and researchers to publish work with a high LOE. This review briefly discusses the history of EBM and the benefits and limitations of the LOE, and provides recommendations to improve the quality of the articles published in the Canadian Journal of Plastic Surgery.

Keywords: Evidence; Levels of evidence, quality; Literature review; Plastic surgery; Research methodology

Abstract

The levels of evidence (LOE) table has been increasingly used by many surgical journals and societies to emphasize the importance of proper study design. Since their origin, LOE have evolved to account for multiple study designs and to consider the rigour not only of the study type but also of multiple aspects of its design. The use of LOE helps readers appraise the literature while encouraging clinical researchers to produce high-quality evidence. The current article discusses the benefits and limitations of the LOE, as well as the LOE of articles published in the Canadian Journal of Plastic Surgery (CJPS). Along with an assessment of the LOE in the CJPS, the authors provide recommendations to improve the quality and readability of articles published in the CJPS.

Abstract

Many surgical journals and societies increasingly use the levels of evidence (LOE) table to emphasize the importance of sound study methodology. Since its creation, the LOE has evolved to take into account multiple study designs, the rigour of the study type and the rigour of multiple aspects of the methodology. Using the LOE helps readers evaluate the literature while encouraging clinical researchers to produce high-quality evidence. The present article discusses the advantages and limitations of the LOE, as well as the LOE of articles published in the Canadian Journal of Plastic Surgery (CJPS). In addition to assessing the LOE in the CJPS, the authors make recommendations to improve the quality and readability of articles published in the CJPS.

In 2007, the British Medical Journal polled its members to list the top 15 advances in medicine over the past 150 years. Among the top 15 were the introduction of sanitation, antibiotics and vaccines, as well as evidence-based medicine (EBM) (1).

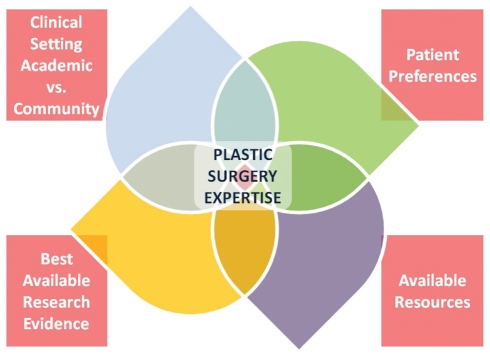

The definition of EBM can be summarized as the integration of best research evidence with clinical expertise and patient values (2). EBM also considers the setting, circumstances and available resources (3). Figure 1 presents the variables that we as surgeons need to consider when making treatment decisions for our patients.

Figure 1) Variables to consider when making treatment decisions. vs Versus

Unfortunately, only a minority of plastic surgeons incorporate EBM into their daily practice. One may ask: so what? Generally speaking, if we do not adopt EBM, we ignore one of medicine's most important advances. Regardless of specialty, no surgeon would suggest withholding antibiotics in the presence of an established infection, or abandoning water sanitation practices. We hope this analogy makes the point clear.

On a practical note, if each of us performs interventions in our daily practices without considering the best available evidence, we may be offering our patients inferior treatments and/or squandering scarce health care resources. Cumulatively, it has been estimated that 25% of health care dollars are wasted in Canada (4). This is comparable with reports of up to 50% of health care money wasted in the United States (5), and 20% to 40% worldwide (6).

HISTORY OF EBM AND THE LEVELS OF EVIDENCE

The term ‘EBM’ was coined in 1990 by Dr Gordon Guyatt, an internist and epidemiologist, during his tenure as Program Director of McMaster University’s Internal Medicine Program (Hamilton, Ontario) (7). The origins of the EBM movement, however, can be credited to Dr David Sackett. He is the ‘founding father’ of the first Department of Clinical Epidemiology & Biostatistics, established in 1966 as part of McMaster’s new School of Medicine. During the late 1970s, Dr Sackett and a group of clinical epidemiologists created a series of articles advising clinicians on how to read clinical journals. The series appeared in the Canadian Medical Association Journal in 1981 (3). Sackett and his colleagues proposed the term ‘critical appraisal’ to describe the basic rules of evidence presented in the series (8).

Levels of evidence (LOE) were described for the first time in a report by the Canadian Task Force on the Periodic Health Examination (CTFPHE) in 1979 (9). The CTFPHE was established in September 1976 by the Conference of Deputy Ministers of Health of the 10 Canadian provinces. From 1976 to 1979, it developed a methodology for weighing scientific evidence to make recommendations for or against a particular treatment or intervention, including preventive manoeuvres in the periodic health examination of asymptomatic patients. The authors of the CTFPHE developed an evidence-based rating system (Table 1). Since then, this system has been expanded and made more detailed. For example, in a 1989 article on the LOE for antithrombotic agents (10), Sackett proposed the system shown in Table 2.

TABLE 1.

Canadian Task Force on the Periodic Health Examination’s levels of evidence

| Level | Type of evidence |
|---|---|
| I | Properly randomized RCT |
| II.1 | Properly conducted cohort or case-control study |
| II.2 | Time series or dramatic results from uncontrolled studies |
| III | Expert opinion |

Adapted from reference 9. RCT Randomized controlled trial

TABLE 2.

Sackett’s levels of evidence

| Level | Type of evidence |
|---|---|
| I | Large RCTs with clear-cut results |
| II | Small RCTs with uncertain results |
| III | Nonrandomized studies with contemporaneous controls |
| IV | Nonrandomized studies with historical controls |
| V | Studies without controls (ie, case series or reports) |

Adapted from reference 10. RCT Randomized controlled trial

These early LOE systems placed the randomized controlled trial (RCT) at the top of the hierarchy of evidence. Their intent is to alert readers that properly conducted studies with a higher LOE have more systematic protection against bias than studies with a lower LOE. In other words, we should generally regard the results of an RCT more highly than the results of a comparable observational study. Under these systems, expert opinion (which is still prevalent at plastic surgery meetings and symposia) is the lowest level of evidence and should guide decision making only when no higher-level evidence is available. A number of variations of these systems have since been adopted, somewhat belatedly, by various societies and journals. Because the questions we pose in clinical practice vary, the LOE have been modified to account for different types of clinical questions, including diagnosis and prognosis as well as therapy. The American Society of Plastic Surgeons and Plastic and Reconstructive Surgery have recently adopted the LOE presented in Table 3 (11); the Journal of Bone and Joint Surgery [Am] uses the LOE presented in Table 4.

There are several purposes to instituting an 'official' LOE. Plastic and Reconstructive Surgery and the Journal of Bone and Joint Surgery [Am], for example, both require authors to declare the LOE of their research when submitting manuscripts for publication. The authors' stated LOE is then peer reviewed and indicated on the first page of the published article. Stating the LOE enables readers to put the results of a study into perspective and to consider the inherent limitations of its design. This requirement also emphasizes the need for authors and readers to better understand the principles of research methodology and to recognize the benefits and limitations of particular study designs.

TABLE 3.

American Society of Plastic Surgeons levels of evidence for therapeutic studies

| Level | Qualifying studies |
|---|---|
| I | High-quality RCTs with adequate power or a systematic review of high-quality RCTs with adequate power |
| II | Lesser-quality RCTs, prospective cohort studies or a systematic review of these studies |
| III | Retrospective comparative studies, case-control, or systematic review of these studies |
| IV | Case series |
| V | Expert opinion |

Adapted from reference 11. RCT Randomized controlled trial
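To make the mechanics of a design-based scale concrete, the Python sketch below maps a therapeutic study design to its level in Table 3. It is a simplification under stated assumptions: the design labels and the function name `asps_therapeutic_level` are hypothetical, the judgments 'high quality' and 'adequate power' are reduced to yes/no flags that in practice require critical appraisal, and a systematic review is handled by passing the design of the studies it pools, since Table 3 assigns a review the level of those studies.

```python
# Hypothetical lookup for the ASPS therapeutic-study scale (Table 3).
# Design labels and the quality/power flags are illustrative assumptions.

def asps_therapeutic_level(design: str, high_quality: bool = False,
                           adequately_powered: bool = False) -> str:
    """Return the Table 3 level (I to V) for a therapeutic study design.

    For a systematic review, pass the design of the pooled studies:
    Table 3 gives a review the level of the studies it summarizes.
    """
    design = design.lower()
    if design == "rct":
        # Level I requires a high-quality, adequately powered RCT;
        # otherwise the trial is a "lesser-quality RCT" (level II).
        return "I" if high_quality and adequately_powered else "II"
    levels = {
        "prospective cohort": "II",
        "retrospective comparative": "III",
        "case-control": "III",
        "case series": "IV",
        "expert opinion": "V",
    }
    if design not in levels:
        raise ValueError(f"design not covered by Table 3: {design!r}")
    return levels[design]

print(asps_therapeutic_level("rct", high_quality=True, adequately_powered=True))  # I
print(asps_therapeutic_level("rct", high_quality=True))  # II
print(asps_therapeutic_level("case series"))  # IV
```

The quality flags are what separate level I from level II for an RCT; as the text notes, a scale like this is only as good as the appraisal that sets those flags.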

TABLE 4.

The Journal of Bone and Joint Surgery’s levels of evidence for the primary research question in therapeutic studies

| Level | Type of studies |
|---|---|
| I | High-quality RCTs with a narrow confidence interval or a systematic review of these studies with homogeneous results |
| II | Lesser-quality RCTs, prospective comparative studies or a systematic review of Level II studies or of Level I studies with inconsistent (heterogeneous) results |
| III | Case-control studies, retrospective comparative studies or a systematic review of Level III studies |
| IV | Case series |
| V | Expert opinion |

Adapted from reference 37. RCT Randomized controlled trial

Although increasing emphasis is being placed on publishing higher-level evidence, all study designs play an important role in the medical and surgical literature. Consider case series. Because they lack a comparison group, they cannot establish cause-and-effect relationships between treatment and outcome. A case series is therefore not an appropriate design to demonstrate that one technique is superior to another, but it is an appropriate means of reporting novel techniques. Lower-level studies thus have a place in the literature, provided their limitations are recognized.

The most comprehensive LOE system, produced by the Oxford Centre for Evidence-Based Medicine, was originally released in 2000 (12). Its main advantage over other systems is that it allows a study's LOE to be upgraded or downgraded according to its methodological robustness, and it provides an explanatory guide to how this is done.

Most plastic surgeons use research findings to guide clinical practice. It is important to understand, however, that even a study with a high LOE does not by itself compel surgeons to adopt a new intervention or to abandon a procedure in favour of an alternative. An informed decision depends heavily on the quality of the clinical research, not only on its LOE, and on how well its findings apply to our own patients. For example, we may identify a well-designed RCT with impeccable methodology comparing pyrocarbon versus silicone arthroplasty of the proximal interphalangeal joint in elderly women with rheumatoid arthritis. Suppose the investigators found that pyrocarbon arthroplasty produced better range of motion and pain control than silicone arthroplasty three months postoperatively. Should we recommend pyrocarbon arthroplasty to all of our patients? What if the patients we see in our hand clinics are young construction workers? How do we as surgeons move from specific evidence to clinical application? Where does judgement fit in?

Clinical guidelines are different from LOE. They are a synthesis or summary of the best available evidence that weighs the benefits and risks of an intervention (13). To interpret a recommendation from the literature, a plastic surgeon must understand the type and quality of the evidence on which it was based (14). The Grading of Recommendations Assessment, Development and Evaluation (GRADE) system (15) is an approach to grading the quality of evidence and the strength of recommendations in guidelines. GRADE offers a standardized, transparent approach to making clinical judgments. Adopted by the Cochrane Collaboration and UpToDate, among other organizations (16), it reduces uncertainty in interpreting a recommendation. GRADE rates the quality of evidence on four levels (high, moderate, low and very low) and classifies each recommendation as 'strong' or 'weak'. Practically speaking, a strong recommendation indicates that the benefits of a treatment clearly outweigh its potential harms, at least until evidence to the contrary becomes available. This is a 'green light' to alter your practice. A weak recommendation indicates that this balance is close, and surgeons must weigh the specific clinical situation, alternative treatments and patient preferences more carefully before proceeding. Very low-quality evidence is a 'yellow light': we should proceed with caution and consider our options carefully. For the reader, the practical plastic surgeon, this process is meant to provide a transparent and uncomplicated framework for multifaceted clinical decision making.

In this age of EBM, clinical guidelines are understandably growing in popularity, although they remain sparse in the plastic surgery literature. As evidence mounts, guidelines will become pivotal to the understanding and treatment of various patient populations, helping to structure decisions that support high-quality care, quality of life and economic efficiency. The Appraisal of Guidelines for Research & Evaluation (AGREE-II) instrument (17) was developed to evaluate guidelines. It is now recognized as a standard in guideline appraisal (18) and has already been applied to numerous cancer guidelines (17,19). Perhaps most appealing is its clarity and ease of use, even for 'novices' in research methodology.

Status of plastic surgery literature

The LOE of the plastic surgery literature was assessed in a recent article by Loiselle et al (20). Although the publication of articles with a higher LOE improved somewhat from 1983 to 2003, the sad truth is that the vast majority of publications were level IV or V, including case series, case reports and expert opinion. In contrast, the LOE of published reports has improved dramatically in some specialties. For example, Hanzlik et al (21) reviewed the LOE in the Journal of Bone and Joint Surgery [Am] from 1975 to 2005 and found a significant trend toward a higher LOE, with the combined percentage of level I, II and III studies increasing from 17% to 52%.

In a recent review of the aesthetic surgery literature, Chuback et al (22) assessed the LOE of articles published from 2000 to 2010 in the journals with the highest impact factors. They found that level I evidence was the least represented and that the level I studies they identified were plagued by methodological limitations. This supports a previous report by Chang et al (23) showing that the aesthetic surgery literature is dominated by uncontrolled case series, case reports and expert opinion.

HOW TO SEPARATE THE WHEAT FROM THE CHAFF

While LOE scales are useful for both the 'doers' and 'users' of clinical research, they have limitations. 'Doers' want to publish work with a high LOE, and investigators may claim a higher LOE for their work than it actually merits. Simple LOE scales also do not account for the variable quality of studies within a particular level. For example, an RCT may be classified as level I on the sole criterion that patients were randomly allocated to treatment. This classification ignores other important methodological criteria such as loss to follow-up, blinding, allocation concealment and power analysis. If methodological weaknesses are identified, such an RCT can be downgraded to level II. Bhandari et al (24) evaluated the quality of RCTs published in the Journal of Bone and Joint Surgery [Am] from 1988 to 2000 using the Detsky quality index, in which articles scoring greater than 75 were deemed to be of high quality; 60% of the studies scored less than 75. Recent studies examining the methodological quality of RCTs in plastic surgery have also identified problems with the method of randomization (25,26), allocation concealment (25,27), blinding (25–27) and power analysis (25,26).

To help 'users' of clinical research appraise the articles they read, several instruments have been developed to standardize the reporting of different study designs: Quality of Reporting of Meta-analyses (QUOROM) for meta-analyses (28), Meta-analysis Of Observational Studies in Epidemiology (MOOSE) for meta-analyses of observational studies (29), Consolidated Standards of Reporting Trials (CONSORT) for randomized controlled trials (30), Strengthening the Reporting of Observational Studies in Epidemiology (STROBE) for observational studies (31) and AGREE-II for guidelines (17). Specialty-specific guidance has also been published on reporting case series, a common but lower-level design (32).

It is critical that studies be reported in a standardized, objective manner. In any field, one key to success is repetition; while this lesson should be intuitive to surgeons, it has been neglected in the surgical literature. Consistent reporting will improve the methodological rigour of clinical research and make it easier to compare studies and conduct meta-analyses. It will also help the plastic surgery community to critically appraise published work and become increasingly familiar with research methodology. Several resources discuss how to evaluate and appraise the surgical literature. One is the Surgical Outcomes Research Centre (SOURCE) at McMaster University, which has been publishing a series of articles in the Canadian Journal of Surgery that help surgeons appraise the different study designs reported in the surgical literature (33) and thus decide whether to adopt the findings of a particular study for their patients. Other resources include the "Evidence-Based Plastic Surgery: Design, Measurement, and Evaluation" issue of Clinics in Plastic Surgery (34) and the "Evidence-Based Medicine: How-To Articles" collection in Plastic and Reconstructive Surgery (35).
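The downgrading of a methodologically weak RCT described above can be sketched as a nominal level adjusted by an appraisal checklist. This is a simplified illustration, not the Oxford or ASPS procedure: the checklist fields mirror the criteria named in the text (loss to follow-up, blinding, allocation concealment, power analysis), while the `Appraisal` class, the one-level downgrade rule and the 20% loss-to-follow-up cut-off are hypothetical assumptions chosen only for the example.

```python
from dataclasses import dataclass

# Numeric scale: 1 (highest) to 5 (lowest), as in Tables 2 to 4.
LOWEST_LEVEL = 5

@dataclass
class Appraisal:
    """Hypothetical appraisal checklist for a randomized trial."""
    loss_to_follow_up: float       # fraction of patients lost (0.0 to 1.0)
    blinded: bool
    allocation_concealed: bool
    power_analysis_reported: bool

def weaknesses(a: Appraisal, max_loss: float = 0.20) -> list[str]:
    """List the methodological weaknesses found (max_loss is an assumed cut-off)."""
    found = []
    if a.loss_to_follow_up > max_loss:
        found.append("loss to follow-up")
    if not a.blinded:
        found.append("no blinding")
    if not a.allocation_concealed:
        found.append("no allocation concealment")
    if not a.power_analysis_reported:
        found.append("no power analysis")
    return found

def appraised_level(nominal_level: int, a: Appraisal) -> int:
    """Downgrade the nominal level by one if any weakness is found."""
    if weaknesses(a):
        return min(nominal_level + 1, LOWEST_LEVEL)
    return nominal_level

trial = Appraisal(loss_to_follow_up=0.25, blinded=True,
                  allocation_concealed=False, power_analysis_reported=True)
print(weaknesses(trial))          # ['loss to follow-up', 'no allocation concealment']
print(appraised_level(1, trial))  # 2
```

A real appraisal weighs each weakness rather than applying a single rule, which is precisely the within-level variation that simple LOE scales miss.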

LOE in the Canadian Journal of Plastic Surgery

In preparing the present article, we reviewed the LOE of articles published in the Canadian Journal of Plastic Surgery over the past five years. Articles listed as 'original articles', 'clinical reviews', 'clinical studies', 'ideas and innovations', 'surgical tips' and 'special topics' from Spring 2007 to Autumn 2011 were considered. All abstracts were reviewed first, followed by full-text review and critical appraisal where there was ambiguity. The Oxford Centre for Evidence-Based Medicine rating scale (March 2009) (12) was used to categorize articles by level of evidence (Table 5), and the study design of each article was also documented (Table 6). As Table 5 shows, high-level evidence is lacking; this is not surprising, as it is typical of the plastic surgery literature as a whole.

TABLE 5.

Levels of evidence of articles published in the Canadian Journal of Plastic Surgery (2007 to 2011)

| Level of evidence* | Studies, n |
|---|---|
| 1A | 0 |
| 1B | 2 |
| 1C | 0 |
| 2A | 1 |
| 2B | 6 |
| 2C | 3 |
| 3A | 0 |
| 3B | 3 |
| 4 | 117 |
| 5 | 40 |

*Oxford Centre for Evidence-Based Medicine rating scale (12), in which 1A represents the highest level of evidence and 5 the lowest
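The skew in Table 5 can be quantified with a few lines of arithmetic. The Python sketch below uses only the counts reported in Tables 5 and 6 (the variable names are illustrative); it confirms that both tables cover the same 172 articles and shows that levels 4 and 5 account for roughly nine in ten of them.

```python
# Article counts per Oxford level, copied from Table 5.
table5 = {
    "1A": 0, "1B": 2, "1C": 0,
    "2A": 1, "2B": 6, "2C": 3,
    "3A": 0, "3B": 3,
    "4": 117, "5": 40,
}

total = sum(table5.values())
low = table5["4"] + table5["5"]   # case series, case reports, expert opinion
high = total - low                 # levels 1 to 3

print(total)                               # 172
print(low, f"{100 * low / total:.1f}%")    # 157 91.3%
print(high, f"{100 * high / total:.1f}%")  # 15 8.7%

# Cross-check: the study-design counts in Table 6 cover the same articles.
table6_total = 1 + 2 + 1 + 0 + 6 + 3 + 3 + 116 + 39 + 1
print(table6_total == total)  # True
```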

TABLE 6.

Study design of articles published in the Canadian Journal of Plastic Surgery (2007 to 2011)

| Study design | Studies, n |
|---|---|
| Decision analysis | 1 |
| Economic analysis | 2 |
| Systematic review | 1 |
| Randomized controlled trial | 0 |
| Cohort study | 6 |
| Case-control study | 3 |
| Outcomes study, ecological study | 3 |
| Case report/series, other descriptive study | 116 |
| Expert opinion, nonsystematic review | 39 |
| Bench research | 1 |

Why aim for higher level of evidence in our publications?

Wood et al (36) examined the importance of allocation concealment in RCTs. Reviewing 102 meta-analyses that included 804 trials, they found that intervention effect estimates were exaggerated by 17% in trials with inadequate or unclear allocation concealment compared with trials with adequate concealment. Less methodologically robust trials thus introduce bias, and with it uncertainty about the true treatment effect. In plastic surgery, where most published evidence is level IV or V (20,22), the introduction of bias is a real possibility. A recent publication from our group provides guidance to improve the reporting and quality of case series, which remain the most common study design in our specialty (32).
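To see what a 17% exaggeration can mean in practice, the sketch below works through a hypothetical case. It rests on an assumption about how the figure is expressed: 'exaggerated by 17%' is read as a ratio of odds ratios (inadequately versus adequately concealed trials) of 0.83, with odds ratios below 1 favouring the intervention. The odds ratio of 0.90 is invented purely for illustration, and the function names are hypothetical.

```python
# Assumption: "exaggerated by 17%" is read as a ratio of odds ratios (ROR),
# OR_inadequate / OR_adequate = 0.83. Odds ratios below 1 favour the treatment.
ROR_INADEQUATE_CONCEALMENT = 0.83

def biased_odds_ratio(adequate_or: float,
                      ror: float = ROR_INADEQUATE_CONCEALMENT) -> float:
    """Odds ratio expected from a trial with inadequate or unclear allocation
    concealment, given the odds ratio an adequately concealed trial reports."""
    return adequate_or * ror

def odds_reduction_pct(odds_ratio: float) -> float:
    """Percentage reduction in the odds of the outcome implied by an odds ratio."""
    return (1 - odds_ratio) * 100

adequate = 0.90                     # hypothetical, adequately concealed trial
inadequate = biased_odds_ratio(adequate)
print(f"{inadequate:.3f}")                       # 0.747
print(f"{odds_reduction_pct(adequate):.1f}%")    # 10.0%
print(f"{odds_reduction_pct(inadequate):.1f}%")  # 25.3%
```

Under these assumptions, a modest 10% reduction in the odds of an outcome would appear as a reduction of about 25% in a poorly concealed trial, enough to change how a surgeon reads the result.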

Moving forward: Recommendation

We believe that the LOE is a useful tool that should be applied to the Canadian Journal of Plastic Surgery. LOE encourage the 'users' and 'doers' of clinical research to frame the importance of their work in the context of the study design used, and encourage authors to strive to publish work with a higher LOE. Additionally, authors should be encouraged to apply one of the previously mentioned checklists to ensure that all of the information readers need to appraise the rigour of a study design is available.

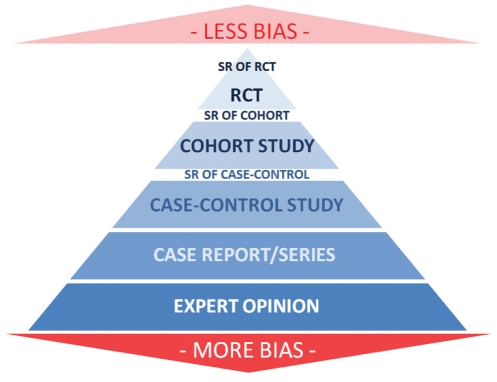

Starting with the Summer issue of the Journal, contributing authors will be required to state the LOE of their submitted work, where applicable, at the bottom of their abstract. The stated LOE should correspond to the hierarchy shown in the pyramid diagram (Figure 2). The numerical LOE will be based on the Oxford levels of evidence (12), and we recommend that contributors use this system. The editor may alter the stated LOE based on the reviewers' judgment of the evidence.

Figure 2) Hierarchy of evidence. RCT Randomized controlled trial; SR Systematic review

REFERENCES

- 1. Godlee F. Milestones on the long road to knowledge. BMJ. 2007;334(Suppl 1):S2–S3. doi: 10.1136/bmj.39062.570856.94.

- 2. Sackett DL, Straus SE, Richardson WS, et al. Evidence-Based Medicine: How to Practice and Teach EBM. 2nd edn. Edinburgh: Churchill Livingstone; 2000.

- 3. Guyatt G, Rennie D, editors. Users' Guides to the Medical Literature: A Manual for Evidence-Based Clinical Practice. Chicago: American Medical Association; 2002.

- 4. Organisation for Economic Cooperation and Development (OECD). Health Care Systems: Efficiency and Policy Settings. Paris: OECD Publishing; 2010.

- 5. PricewaterhouseCoopers' Health Research Institute. The Price of Excess: Identifying Waste in Healthcare Spending. 2009. <www.pwc.com/us/en/healthcare/publications/the-price-of-excess.jhtml> (Accessed December 19, 2011).

- 6. World Health Organization. The world health report – Health systems financing: The path to universal coverage. Geneva: World Health Organization; 2010. <www.who.int/whr/2010/en/index.html> (Accessed January 26, 2012).

- 7. Guyatt GH. Evidence-based medicine. ACP J Club. 1991;114(Suppl 2):A-16.

- 8. Department of Clinical Epidemiology & Biostatistics, McMaster University Health Sciences Centre. How to read clinical journals. I: Why to read them and how to start reading them critically. CMAJ. 1981;124:555–8.

- 9. Canadian Task Force on the Periodic Health Examination. The periodic health examination. CMAJ. 1979;121:1193–254.

- 10. Sackett DL. Rules of evidence and clinical recommendations on the use of antithrombotic agents. Chest. 1989;95(Suppl 2):2S–4S.

- 11. American Society of Plastic Surgeons. ASPS Evidence Rating Scales. <www.plasticsurgery.org/Documents/medical-professionals/health-policy/evidence-practice/ASPS-Rating-Scale-March-2011.pdf> (Accessed November 29, 2011).

- 12. Howick J, Chalmers I, Glasziou P, et al. Explanation of the 2011 Oxford Centre for Evidence-Based Medicine (OCEBM) Levels of Evidence (Background Document). Oxford Centre for Evidence-Based Medicine. <www.cebm.net/index.aspx?o=5653> (Accessed November 29, 2011).

- 13. Shekelle PG, Woolf SH, Eccles M, Grimshaw J. Clinical guidelines: Developing guidelines. BMJ. 1999;318:593–6. doi: 10.1136/bmj.318.7183.593.

- 14. Schünemann HJ, Vist GE, Jaeschke R, Kunz R, Cook DJ, Guyatt GH. Grading recommendations. In: Guyatt G, Rennie D, Meade MO, Cook D, editors. Users' Guides to the Medical Literature: A Manual for Evidence-Based Clinical Practice. 2nd edn. New York: McGraw-Hill; 2008. pp. 679–701.

- 15. Atkins D, Best D, Briss PA, et al. Grading quality of evidence and strength of recommendations – GRADE Working Group. BMJ. 2004;328:1490. doi: 10.1136/bmj.328.7454.1490.

- 16. Guyatt GH, Vist G, Falck-Ytter Y, Kunz R, Magrini N, Schünemann HJ. An emerging consensus on grading recommendations? ACP J Club. 2006;144:A8–A9.

- 17. Brouwers MC, Kho ME, Browman G, et al. AGREE II: Advancing guideline development, reporting and evaluation in health care. CMAJ. 2010;182:E839–42. doi: 10.1503/cmaj.090449.

- 18. Vlayen J, Aertgeerts B, Hannes K, Sermeus W, Ramaekers D. A systematic review of appraisal tools for clinical practice guidelines: Multiple similarities and one common deficit. Int J Qual Health Care. 2005;17:235–42. doi: 10.1093/intqhc/mzi027.

- 19. Brouwers MC, De Vito C, Bahirathan L, et al. Effective interventions to facilitate the uptake of breast, cervical and colorectal cancer screening: An implementation guideline. Implement Sci. 2011;6:112. doi: 10.1186/1748-5908-6-112.

- 20. Loiselle F, Mahabir RC, Harrop AR. Levels of evidence in plastic surgery research over 20 years. Plast Reconstr Surg. 2008;121:207e–211e. doi: 10.1097/01.prs.0000304600.23129.d3.

- 21. Hanzlik S, Mahabir RC, Baynosa RC, Khiabani KT. Levels of evidence in research published in the Journal of Bone and Joint Surgery (American Volume) over the last thirty years. J Bone Joint Surg Am. 2009;91:425–8. doi: 10.2106/JBJS.H.00108.

- 22. Chuback JE, Yarascavitch BA, Eaves F III, Thoma A, Bhandari M. Evidence in the aesthetic surgical literature over the past decade: How far have we come? Plast Reconstr Surg. 2012;129:126e–34e. doi: 10.1097/PRS.0b013e3182362bca.

- 23. Chang EY, Pannucci CJ, Wilkins EG. Quality of clinical studies in aesthetic surgery journals: A 10-year review. Aesthet Surg J. 2009;29:144–7. doi: 10.1016/j.asj.2008.12.007.

- 24. Bhandari M, Richards RR, Sprague S, Schemitsch E. The quality of reporting of randomized trials in the Journal of Bone and Joint Surgery [Am] from 1988–2000. J Bone Joint Surg Am. 2002;84:388–96. doi: 10.2106/00004623-200203000-00009.

- 25. Karri V. Randomised clinical trials in plastic surgery: Survey of output and quality of reporting. J Plast Reconstr Aesthet Surg. 2006;59:787–96. doi: 10.1016/j.bjps.2005.11.027.

- 26. McCarthy JE, Chatterjee A, McKelvey TG, Jatzen EM, Kerrigan CL. A detailed analysis of Level I evidence (randomized controlled trials and meta-analyses) in five plastic surgery journals to date: 1978 to 2009. Plast Reconstr Surg. 2010;126:1774–8. doi: 10.1097/PRS.0b013e3181efa201.

- 27. Momeni A, Becker A, Antes G, Diener M, Bluemle A, Stark BG. Evidence-based plastic surgery: Controlled trials in three plastic surgery journals (1990 to 2005). Ann Plast Surg. 2009;62:293–6. doi: 10.1097/SAP.0b013e31818015ff.

- 28. Moher D, Cook DJ, Eastwood S, Olkin I, Rennie D, Stroup DF. Improving the quality of reports of meta-analyses of randomized controlled trials: The QUOROM statement. Quality of Reporting of Meta-analyses. Lancet. 1999;354:1896–900. doi: 10.1016/s0140-6736(99)04149-5.

- 29. Stroup DF, Berlin JA, Morton SC, et al. Meta-analysis of observational studies in epidemiology: A proposal for reporting. Meta-analysis Of Observational Studies in Epidemiology (MOOSE) group. JAMA. 2000;283:2008–12. doi: 10.1001/jama.283.15.2008.

- 30. Moher D, Schulz KF, Altman D; CONSORT Group (Consolidated Standards of Reporting Trials). The CONSORT statement: Revised recommendations for improving the quality of reports of parallel-group randomized trials. JAMA. 2001;285:1987–91. doi: 10.1001/jama.285.15.1987.

- 31. von Elm E, Altman DG, Egger M, Pocock SJ, Gøtzsche PC, Vandenbroucke JP. Strengthening the Reporting of Observational Studies in Epidemiology (STROBE) statement: Guidelines for reporting observational studies. BMJ. 2007;335:806–8. doi: 10.1136/bmj.39335.541782.AD.

- 32. Coroneos CJ, Ignacy TA, Thoma A. Designing and reporting case series in plastic surgery. Plast Reconstr Surg. 2011;128:361e–368e. doi: 10.1097/PRS.0b013e318221f2ec.

- 33. Surgical Outcomes Research Centre (SOURCE). Surgical Outcomes Research Centre. <http://fhs.mcmaster.ca/source/index.html> (Accessed November 29, 2011).

- 34. Thoma A, editor. Evidence-Based Plastic Surgery: Design, Measurement, and Evaluation. Vol 35(2). Toronto: Elsevier Canada; 2008. pp. 189–316.

- 35. Plastic and Reconstructive Surgery. Evidence-Based Medicine: How-To Articles. <http://journals.lww.com/plasreconsurg/pages/collectiondetails.aspx?TopicalCollectionId=24> (Accessed November 29, 2011).

- 36. Wood L, Egger M, Gluud LL, et al. Empirical evidence of bias in treatment effect estimates in controlled trials with different interventions and outcomes: Meta-epidemiological study. BMJ. 2008;336:601–5. doi: 10.1136/bmj.39465.451748.AD.

- 37. Journal of Bone and Joint Surgery. Levels of Evidence for the Primary Research Question in Therapeutic Studies. <www.jbjs.org/public/instructionsauthors.aspx#LevelsEvidence> (Accessed December 18, 2011).