Abstract

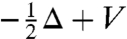

The spectral bound, s(αA + βV), of a combination of a resolvent positive linear operator A and an operator of multiplication V, was shown by Kato to be convex in  . Kato's result is shown here to imply, through an elementary “dual convexity” lemma, that s(αA + βV) is also convex in α > 0, and notably, ∂s(αA + βV)/∂α ≤ s(A). Diffusions typically have s(A) ≤ 0, so that for diffusions with spatially heterogeneous growth or decay rates, greater mixing reduces growth. Models of the evolution of dispersal in particular have found this result when A is a Laplacian or second-order elliptic operator, or a nonlocal diffusion operator, implying selection for reduced dispersal. These cases are shown here to be part of a single, broadly general, “reduction” phenomenon.

. Kato's result is shown here to imply, through an elementary “dual convexity” lemma, that s(αA + βV) is also convex in α > 0, and notably, ∂s(αA + βV)/∂α ≤ s(A). Diffusions typically have s(A) ≤ 0, so that for diffusions with spatially heterogeneous growth or decay rates, greater mixing reduces growth. Models of the evolution of dispersal in particular have found this result when A is a Laplacian or second-order elliptic operator, or a nonlocal diffusion operator, implying selection for reduced dispersal. These cases are shown here to be part of a single, broadly general, “reduction” phenomenon.

Keywords: perturbation theory, positive semigroup, reduction principle, non-self-adjoint, Schrödinger operator

The main result to be shown here is that the growth bound, ω(αA + V), of a positive semigroup generated by αA + V changes with positive scalar α at a rate less than or equal to ω(A), where A is also a generator, and V is an operator of multiplication. Movement of a reactant in a heterogeneous environment is often of this form, where V represents the local growth or decay rate, and α represents the rate of mixing. Lossless mixing means ω(A) = 0, while lossy mixing means ω(A) < 0, so this result implies that greater mixing reduces the reactant’s asymptotic growth rate, or increases its asymptotic decay rate. Decreased growth or increased decay are familiar results when A is a diffusion operator, so what is new here is the generality shown for this phenomenon. At the root of this result is a theorem by Kingman on the “superconvexity” of the spectral radius of nonnegative matrices (1). The logical route progresses from Kingman through Cohen (2) to Kato (3). The historical route begins in population genetics.

In early theoretical work to understand the evolution of genetic systems, Feldman, colleagues, and others kept finding a common result from each model they examined (4–14)—be they models for the evolution of recombination, or of mutation, or of dispersal. Evolution favored reduced levels of these processes in populations near equilibrium under constant environments, and this result was called the Reduction Principle (11).

These results were found for finite-dimensional models. But the same reduction result has also been found in models for the evolution of unconditional dispersal in continuous space, in which matrices are replaced by linear operators. This finding raises the question of whether this common result, discovered in such a diversity of models, reflects a single mathematical phenomenon. Here, the question is answered affirmatively.

The mathematical underpinnings of the reduction principle for finite-dimensional models were discovered by Sam Karlin (15, 16) [although he did not realize it, and he had earlier proposed an alternate to the reduction principle—the mean fitness principle (17), which was found to have counterexamples (18)]. Karlin wanted to understand the effect of population subdivision on the maintenance of genetic variation. Genetic variation is preserved if an allele has a positive growth rate when it is rare, protecting it from extinction. The dynamics of a rare allele are approximately linear, and of the form

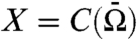

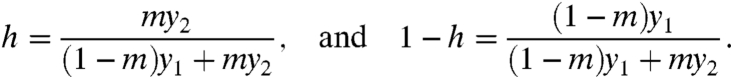

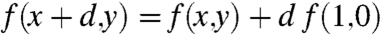

| [1] |

where x(t) is a vector of the rare allele’s frequency at time t among different population subdivisions, α is the rate of dispersal between subdivisions, P is the stochastic matrix representing the pattern of dispersal, and D is a diagonal matrix of the growth rates of the allele in each subdivision. The allele is protected from extinction if its asymptotic growth rate when rare is greater than 1. This asymptotic growth rate is the spectral radius,

$$r(A) := \max\{|\lambda| : \lambda \in \sigma(A)\}, \tag{2}$$

where σ(A) is the set of eigenvalues of matrix A.

Karlin discovered that for M(α)≔[(1 - α)I + αP], the spectral radius, r(M(α)D), is a decreasing function of the dispersal rate α, for an arbitrary strongly connected dispersal pattern:

Theorem 1. (Karlin's Theorem 5.2)

[(16), pp. 194–196] Let P be an arbitrary nonnegative irreducible stochastic matrix. Consider the family of matrices

$$M(\alpha) = (1-\alpha) I + \alpha P.$$

Then for any diagonal matrix D with positive terms on the diagonal, the spectral radius

$$r(\alpha) = r(M(\alpha) D)$$

is decreasing as α increases (strictly provided D ≠ dI).

Karlin’s Theorem 5.2 means that greater mixing between subdivisions produces lower r(M(α)D), and if it goes below 1, the allele will go extinct. While this theorem was motivated by the issue of genetic diversity in a subdivided population, its form applies generally to situations where differential growth is combined with mixing. D could just as well represent the investment returns on different assets and P a pattern of portfolio rebalancing. Or D could represent the decay rates of reactant in different parts of a reactor, and P a pattern of stirring within the reactor. In a very general interpretation, Theorem 5.2 means that greater mixing reduces growth and hastens decay.
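As a numerical illustration of Theorem 1 (a sketch only, not part of Karlin's argument), the following computes r(M(α)D) by power iteration for one small example. The matrices P and D, the helper names `spectral_radius` and `karlin_matrix`, and the iteration count are all assumptions chosen for the illustration.

```python
def spectral_radius(M, iters=5000):
    """Power iteration; assumes M is nonnegative and primitive."""
    n = len(M)
    x = [1.0 / n] * n                      # positive start vector with sum 1
    r = 0.0
    for _ in range(iters):
        y = [sum(M[i][j] * x[j] for j in range(n)) for i in range(n)]
        r = sum(y)                         # eigenvalue estimate, because sum(x) == 1
        x = [v / r for v in y]
    return r


def karlin_matrix(alpha, P, d):
    """Return M(alpha) D = [(1 - alpha) I + alpha P] D, with D = diag(d)."""
    n = len(P)
    return [[((1.0 - alpha) * (i == j) + alpha * P[i][j]) * d[j]
             for j in range(n)] for i in range(n)]


# Hypothetical example data: each column of P sums to 1 (so e^T P = e^T).
P = [[0.6, 0.1, 0.3],
     [0.3, 0.7, 0.2],
     [0.1, 0.2, 0.5]]
d = [1.5, 1.0, 0.6]                        # heterogeneous growth rates, D != dI

alphas = [k / 10 for k in range(1, 10)]    # 0.1, 0.2, ..., 0.9
radii = [spectral_radius(karlin_matrix(a, P, d)) for a in alphas]

for a, r in zip(alphas, radii):
    print(f"alpha = {a:.1f}   r(M(alpha) D) = {r:.6f}")
```

For this example the printed values should fall as α rises, staying between the smallest and largest growth rates on the diagonal of D.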

If the dispersal rate α is not an extrinsic parameter, but is a variable which is itself controlled by a gene, then a gene which decreases α will have a growth advantage over its competitor alleles. The action of such modifier genes produces a process that will reduce the rates of dispersal in a population. Therefore, Theorem 5.2 also means that differential growth selects for reduced mixing.

In the evolutionary context, the generality of the mixing pattern P in Karlin’s Theorem 5.2 makes it applicable to other kinds of “mixing” besides dispersal. The pattern matrix P can just as well refer to the pattern of mutations between genotypes, and then α refers to the mutation rate. Or P can represent the pattern of transmission when two loci recombine, and then α represents the recombination rate. The early models for the evolution of recombination and mutation that exhibited the reduction principle in fact had the same form as Eq. 1 for the dynamics of a rare modifier allele. Once this commonality of form was recognized (19–21), it was clear that Karlin’s theorem explained the repeated appearance of the reduction result in the different contexts, and generalized the result to a whole class of genetic transmission patterns beyond the special cases that had been analyzed.

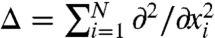

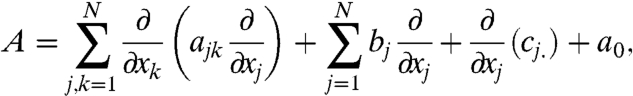

The dynamics of movement in space have long been modeled by infinite-dimensional models, where space is continuous and the concentrations of a quantity at each point are represented as a function. The dynamics of change in the concentration are modeled as diffusions, where the Laplacian or elliptic differential operator or nonlocal integral operator takes the place of the matrix P in the finite-dimensional case. When the substance grows or decays at rates that are a function of its location, the system is often referred to as a reaction diffusion. In reaction-diffusion models for the evolution of dispersal, the reduction principle again makes its appearance (22) (23, Lemma 5.2) (24, Lemma 2.1) (25). In nonlocal diffusion models, again the reduction principle appears (26). This repeated occurrence points to the possibility of an underlying mathematical unity.

Here, a broad characterization of this “reduction phenomenon” is established by generalizing Karlin’s theorem to linear operators. The reduction results previously found for various linear operators are, therefore, seen to be special cases of a general phenomenon.

This result is actually implicit in Kato’s generalization (3) of Cohen’s theorem (2) on the convexity of the spectral bound of essentially nonnegative matrices with respect to the diagonal elements of the matrix. It is educed from Kato’s theorem here by means of an elementary “dual convexity” lemma.

Kato’s goal in ref. 3 was to generalize, from matrices to linear operators, Cohen’s convexity result (2):

Theorem 2. (Cohen)

(2) Let D be a diagonal real n × n matrix. Let A be an essentially nonnegative n × n matrix. Then s(A + D) is a convex function of D.

Here, s(A + D) is the spectral bound—the largest real part of any eigenvalue of A + D. A synonym for the spectral bound used in the matrix literature is the spectral abscissa (27, 28). When the spectral bound is an eigenvalue, it is also referred to as the principal eigenvalue (29), dominant eigenvalue (30), dominant root (31), Perron-Frobenius eigenvalue (32), or Perron root (33). “Essentially nonnegative” means that the off-diagonal elements are nonnegative. Synonyms include “quasi-positive” (34), “Metzler,” “Metzler-Leontief,” “ML” (32), and “cooperative” (35).
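A small numerical check can make Cohen's theorem concrete. The sketch below uses the closed-form eigenvalues of a 2 × 2 essentially nonnegative matrix (real, because the off-diagonal product is nonnegative) and tests midpoint convexity of s(A + D) over a few diagonal perturbations. The matrix A, the perturbation grid, and the function names are assumptions made for the example.

```python
import math


def spectral_bound_2x2(a, b, c, d):
    """Spectral bound of [[a, b], [c, d]] when b*c >= 0, so both eigenvalues are real."""
    return 0.5 * (a + d) + 0.5 * math.sqrt((a - d) ** 2 + 4.0 * b * c)


# Hypothetical essentially nonnegative matrix A (off-diagonal entries >= 0).
A = (-1.0, 1.0, 2.0, -3.0)                 # (a11, a12, a21, a22)


def s_of(D):
    """s(A + D) for the diagonal perturbation D = diag(D[0], D[1])."""
    a, b, c, d = A
    return spectral_bound_2x2(a + D[0], b, c, d + D[1])


# Midpoint convexity: s(A + (D1 + D2)/2) <= [s(A + D1) + s(A + D2)] / 2.
grid = [(-2.0, 1.0), (0.0, 0.0), (1.5, -0.5), (3.0, 4.0), (-1.0, -4.0)]
gaps = []
for D1 in grid:
    for D2 in grid:
        mid = ((D1[0] + D2[0]) / 2, (D1[1] + D2[1]) / 2)
        gaps.append(0.5 * (s_of(D1) + s_of(D2)) - s_of(mid))

print("smallest convexity gap:", min(gaps))
```

A nonnegative smallest gap (up to rounding) is what convexity in D predicts; the check is illustrative and does not replace the proof.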

Cohen’s proof relied upon the following theorem of Kingman:

Theorem 3. (Kingman)

(1) Let A be an n × n matrix whose elements, Aij(θ), are nonnegative functions of the real variable θ, such that they are “superconvex,” i.e., for each i, j, either log Aij(θ) is convex in θ [Aij(θ) is log convex], or Aij(θ) = 0 for all θ. Then the spectral radius of A is also superconvex in θ.

Kato generalized Cohen’s result to linear operators by first generalizing Kingman’s theorem. Before presenting Kato’s theorem, some terminology needs to be introduced:

X represents an ordered Banach space or its complexification.

X+ represents the proper, closed, positive cone of X, assumed to be generating and normal (see ref. 3).

B(X) represents the set of all bounded linear operators A: X → X.

A is a positive operator if AX+⊂X+.

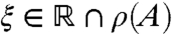

The resolvent of A is R(ξ,A) ≔ (ξ − A)⁻¹, the operator inverse of ξ − A, ξ ∈ ℂ.

The resolvent set ρ(A) ⊂ ℂ consists of those values of ξ for which ξ − A is invertible.

The spectrum of A∈B(X), σ(A), is the complement of the resolvent set, ρ(A).

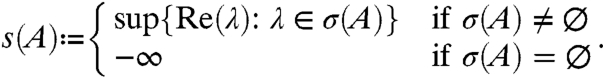

The spectral bound of closed linear operator A, not necessarily bounded, is

|

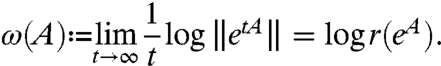

The type (growth bound) of an infinitesimal generator, A, of a strongly continuous (C0) semigroup, {etA: t > 0}, is

|

Generally, -∞ ≤ s(A) ≤ ω(A) < ∞, but conditions for s(A) = ω(A) or s(A) < ω(A) are part of a more involved theory for the asymptotic growth of semigroups (see refs. 36–38).

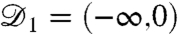

Definition 1:

Operator A is resolvent positive if there is ξ0 such that (ξ0,∞)⊂ρ(A) and R(ξ,A) is positive for all ξ > ξ0 (39).

The relationship of the resolvent positive property to other familiar operator properties includes the following list of key results:

If A generates a C0-semigroup Tt, then Tt is positive for all t≥0 if and only if A is resolvent positive (ref. 38, p. 188).

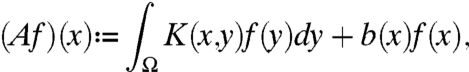

If A is a resolvent positive operator defined densely on X = C(S), the Banach space of continuous complex-valued functions on compact space S, then A generates a positive C0-semigroup [(38), Theorem 3.11.9].

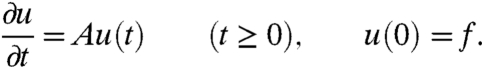

- If A is resolvent positive and its domain, D(A)⊂X, is dense in X, then for every f∈D(A²), there exists a unique solution, u(t)∈D(A) for all t≥0, u∈C¹([0,∞),X), to the Cauchy problem (39, Theorem 7.1)

$$u'(t) = A u(t) \quad (t \ge 0), \qquad u(0) = f.$$

- If A is resolvent positive then: s(A) < ∞; if σ(A) is nonempty, i.e., -∞ < s(A), then s(A)∈σ(A); and if a real ξ∈ρ(A) yields R(ξ,A)≥0 then ξ > s(A) (3) (38, Proposition 3.11.2).

- Differential operators higher than second order are never resolvent positive (40, Corollary 2.3) (41).

- Well-known examples of resolvent positive operators include the following (for details see the sample references):

Kato’s generalization of Cohen’s theorem is as follows:

Theorem 4. (Generalized Cohen’s Theorem)

(3) Consider X = C(S) (continuous functions on a compact Hausdorff space S) or X = Lp(S), 1 ≤ p < ∞, on a measure space S, or more generally, let X be the intersection of two Lp-spaces with different p’s and different weight functions. Let A: X → X be a linear operator which is resolvent positive. Let V be an operator of multiplication on X represented by a real-valued function v, where v∈C(S) for X = C(S), or v∈L∞(S) for the other cases. Then s(A + V) is a convex function of V. If in particular A is a generator of a C0 semigroup, then both s(A + V) and ω(A + V) are convex in V.

Kato’s theorem is further generalized to Banach lattices by Arendt and Batty (51) as follows:

Theorem 5. (Generalized Kato’s Theorem)

[(51), Theorem 3.5] Let A be the generator of a positive semigroup on a Banach lattice X, and let Z(X) refer to the “center” of X. Then the functions V↦s(A + V) and V↦ω(A + V) from Z(X) into [-∞,∞) are convex.

Results

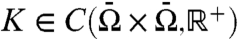

Theorem 6. (Generalized Karlin’s Theorem)

Let A be a resolvent positive linear operator, and V be an operator of multiplication, under the same assumptions as Theorem 4 (or let A and V be as in Theorem 5). Then for α > 0 :

s(αA + V) is convex in α;

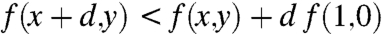

∀ d > 0, or

∀ d > 0;

In particular, when s(A) = 0 then s(αA + V) is nonincreasing in α (the reduction phenomenon), and when s(A) < 0 then s(αA + V) is strictly decreasing in α;

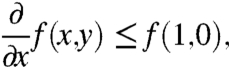

except possibly at a countable number of points α, where the one-sided derivatives exist but differ:

[3]

[4] If A is a generator of a C0-semigroup, then the above relations on s(αA + V) also apply to the type ω(αA + V).

Proof:

We consider the general form

$$\phi(\alpha, \beta) := s(\alpha A + \beta V), \tag{5}$$

where α > 0, β ∈ ℝ. Because the spectrum scales with the operator, ϕ(cα,cβ) = cϕ(α,β) for c > 0. Kato (3) explicitly shows that ϕ(1,β) is convex in β (which he points out is equivalent to varying V). Kato's result is shown to imply the properties claimed for s(αA + V) = ϕ(α,1) with respect to variation in α, by Lemma 1, to follow.
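The finite-dimensional case of Theorem 6 can be spot-checked numerically. The sketch below assumes a hypothetical 2 × 2 essentially nonnegative A and diagonal V, uses the closed-form spectral bound (valid because the off-diagonal product is nonnegative), and tests items 1 and 2 on a grid of α and d values. The matrices, grids, and names are assumptions for illustration only.

```python
import math


def spectral_bound_2x2(a, b, c, d):
    """Spectral bound of [[a, b], [c, d]] when b*c >= 0, so both eigenvalues are real."""
    return 0.5 * (a + d) + 0.5 * math.sqrt((a - d) ** 2 + 4.0 * b * c)


A = (-1.0, 1.0, 2.0, -3.0)                 # hypothetical A, entries (a11, a12, a21, a22)
V = (1.0, -0.5)                            # hypothetical multiplication operator diag(V)
s_A = spectral_bound_2x2(*A)               # s(A), negative for this A


def s_mix(alpha):
    """s(alpha*A + V) for alpha > 0."""
    a, b, c, d = A
    return spectral_bound_2x2(alpha * a + V[0], alpha * b, alpha * c, alpha * d + V[1])


alphas = [0.25 * k for k in range(1, 21)]  # 0.25, 0.5, ..., 5.0
steps = [0.1, 0.5, 1.0, 3.0]

# Item 2: s((alpha + d)A + V) <= s(alpha*A + V) + d*s(A).
slack = min(s_mix(a) + d * s_A - s_mix(a + d) for a in alphas for d in steps)

# Item 1: midpoint convexity of s(alpha*A + V) in alpha.
convexity_gap = min(0.5 * (s_mix(a1) + s_mix(a2)) - s_mix(0.5 * (a1 + a2))
                    for a1 in alphas for a2 in alphas)

print("s(A) =", s_A)
print("smallest slack in item 2:", slack)
print("smallest convexity gap:", convexity_gap)
```

Because s(A) < 0 in this example, item 3 predicts that s(αA + V) is strictly decreasing along the α grid.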

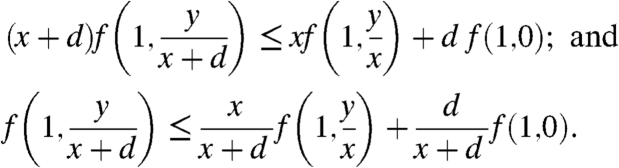

Lemma 1. (Dual Convexity)

Let

and

. Let

have the following properties:

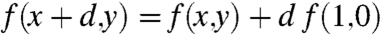

[6]

[7] Then:

f(x,y) is convex in x;

, either

For y ≠ 0, if f(x,y) is strictly convex in y, then f(x,y) is strictly convex in x, and

; or

.

.

,

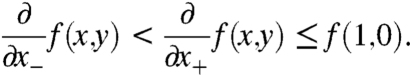

except possibly at a countable number of points x, where the one-sided derivatives exist but differ:

The lemma holds if we substitute

or

or both.

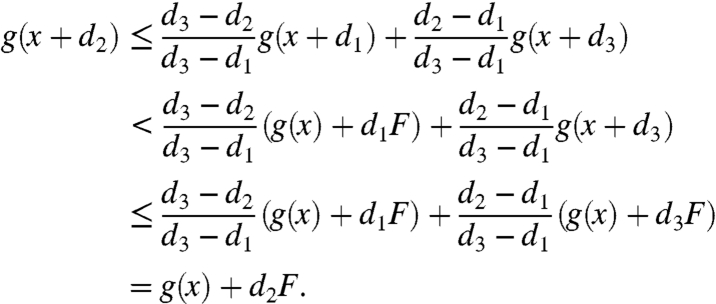

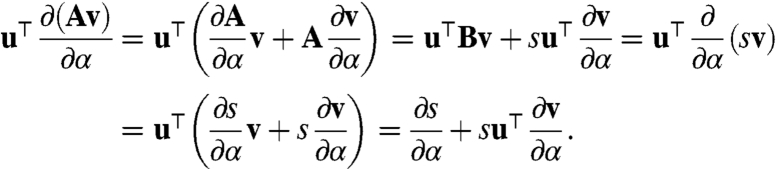

Proof:

f(x,y) is convex in x.

The relation f(αx,αy) = αf(x,y) (f is homogeneous of degree one) allows a set of rescalings that transform convexity in y into convexity in x. It is perhaps worth noting that this relation is actually a homomorphism, which can be put into a more familiar form by defining a product x⋆y ≔ f(x,y), and function ψ(x) ≔ αx, which gives ψ(x)⋆ψ(y) = ψ(x⋆y).

For the case y = 0, Eq. 6 gives f(αx,0) = αf(x,0), so f is trivially convex in x.

For y ≠ 0, the following derivations have the constraints y, y1,

, y, y1, y2 ≠ 0, and 0 < m < 1, so that {y,y1,y2,m,1 - m,(1 - m)y1 + my2} are nonzero and their ratios and reciprocals are always defined, and ratios of yi terms always positive. These constraints keep the arguments of f within their domains throughout the rescalings.

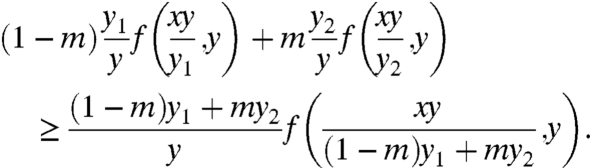

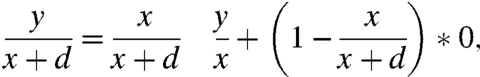

Convexity of f(x,y) in y givesfor m∈(0,1), y1 ≠ y2. Applying [6] to [8], with respective substitutions α = y1/y, α = y2/y, and α = [(1 - m)y1 + my2]/y in the three f terms, yields:

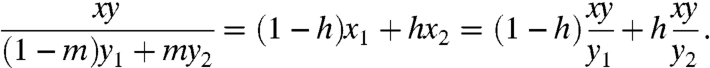

[8] Let x1≔xy/y1 and x2≔xy/y2 represent the rescaled first arguments for f on the left side of [9] (so x, x1,

[9] ). We try the ansatz that x1 and x2 can be combined convexly to yield the third rescaled argument on the right side of [9]:

The ansatz has solution

Note that h∈(0,1) is assured because y1 and y2 have the same sign, y1 ≠ y2, and m∈(0,1).

After dividing both sides by ϕ > 0,

which is convexity in x [for each (x1,x2,h),∃(y1,y2,m)]. The case of strict convexity follows by substituting > for ≥ throughout.

[10] Either

, or

.

If y = 0, then case 2b in Lemma 1 holds by [6]:For y ≠ 0, the strategy will be to show first that

. Next, it is shown that if

for any

, then it is true for all

.

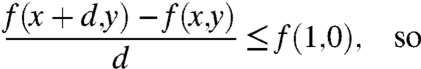

The steps are shown here only for x,, but they are readily applied to

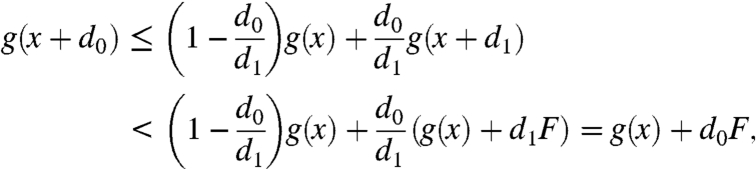

. By [6], for x, d > 0, the following are equivalent:

[11] Because 0 < d/(x + d), x/(x + d) < 1, the second arguments for f in [12] are related by convex combination,

[12] so [12] is just a statement of the convexity of f(x,y) in y, as hypothesized. Strict convexity of f(x,y) in y replaces ≤ with < throughout [11] and [12], yielding case 2a in Lemma 1.

We shall see that convexity then prevents f(x + d,y) from ever returning to the line

[13] for d > 0.

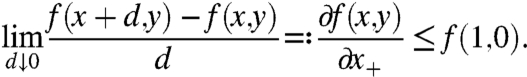

For each y, ∂f(x,y)/∂x ≤ f(1,0), except possibly at a countable number of points x, where the one-sided derivatives exist but differ: ∂f(x,y)/∂x⁻ < ∂f(x,y)/∂x⁺ ≤ f(1,0).

Rearrangement of [11] gives

$$\frac{f(x + d, y) - f(x, y)}{d} \le f(1, 0). \tag{15}$$

For y = 0, equality holds in [15] for all d > 0. For y ≠ 0, because f(x,y) is convex in x on the open interval (0,∞), the left-sided and right-sided derivatives always exist, and differ at most at a countable number of points, at which the right-sided derivative,

$$\frac{\partial f(x, y)}{\partial x^+} = \lim_{d \to 0^+} \frac{f(x + d, y) - f(x, y)}{d} \le f(1, 0), \tag{16}$$

is greater than the left-sided derivative [(52) Proposition 17, pp. 113–114].

A concavity version of the lemma may be trivially produced by reversal of the convexity inequalities.
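To see the lemma at work on concrete functions, the sketch below tests conclusions 1 and 2 for two functions that satisfy its hypotheses: the Euclidean norm f(x,y) = √(x² + y²), with f(1,0) = 1, and the perspective function f(x,y) = y²/x, with f(1,0) = 0. Both examples, the sample grids, and the tolerance are assumptions chosen for illustration; neither function appears in the text.

```python
import math


def f_norm(x, y):
    """f(x, y) = sqrt(x^2 + y^2): homogeneous of degree one, convex in y; f(1, 0) = 1."""
    return math.hypot(x, y)


def f_persp(x, y):
    """f(x, y) = y^2 / x for x > 0: homogeneous of degree one, convex in y; f(1, 0) = 0."""
    return y * y / x


xs = [0.5, 1.0, 2.0, 4.0]
ys = [0.0, 0.3, 1.0, 5.0]
ds = [0.1, 1.0, 10.0]
tol = 1e-12                                # allowance for floating-point rounding

results = {}
for name, f in (("norm", f_norm), ("perspective", f_persp)):
    # Conclusion 2: f(x + d, y) <= f(x, y) + d * f(1, 0).
    bound_ok = all(f(x + d, y) <= f(x, y) + d * f(1.0, 0.0) + tol
                   for x in xs for y in ys for d in ds)
    # Conclusion 1: midpoint convexity in x.
    convex_ok = all(f(0.5 * (x1 + x2), y) <= 0.5 * (f(x1, y) + f(x2, y)) + tol
                    for x1 in xs for x2 in xs for y in ys)
    results[name] = (bound_ok, convex_ok)

print(results)
```

For the norm, conclusion 2 is the triangle inequality; for the perspective function it says that y²/x does not increase with x.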

Remark 1:

It would be clearly desirable to characterize the conditions for strict convexity in Kato’s theorem, so that by Lemma 1, one would obtain strict convexity in Theorem 6, item 1, and strict monotonicity in items 3 and 4. Item 2 is the best that can be offered in the way of strict inequality without strict convexity. But the problem is more technical and is deferred to elsewhere.

It is reasonable, nevertheless, to conjecture that the properties which produce strict convexity in the matrix case (ref. 53, Theorem 4.1) (ref. 54, Theorem 1.1) extend to their Banach space versions: i.e., for α > 0, when resolvent positive operator A is irreducible (ref. 55, p. 250) (ref. 51, p. 41), then s(αA + βV) is strictly convex in β if and only if V is not a constant scalar.

The conjectured sharpening of Theorem 6 to strict inequality would have application to continuous-space models for the evolution of dispersal, and show populations to be invadable by less-dispersing organisms when they experience spatially heterogeneous growth rates (a “selection potential” as defined in ref. 20). This invasibility result is a key element of the Reduction Principle, and is first stated generally for finite matrix models in refs. 19, pp. 118, 126, 137, 195, 199 and 20, Results 2, 3. The invasibility result's primary implication is that for a population to be non-invadable, it must experience no spatial heterogeneity of growth rates where it has positive measure, and this points toward ideal free distributions (defined to be those which spatially equalize the growth rates when this is possible), as the evolutionarily stable states. For reviews and recent developments, see refs. 56–60.

A Third Proof of Karlin’s Theorem 5.2.

Karlin’s proof is based on the Donsker-Varadhan variational formula for the spectral radius (62). Kirkland, et al. (56) recently discovered another proof using entirely structural methods. A third distinct proof of Karlin’s theorem is seen as follows by application of Lemma 1 to Cohen’s theorem, combined with Friedland’s equality condition [(53), Theorem 4.1] (see also refs. 53 and 62 for other proof methods).

The expression in Karlin’s Theorem 5.2 can be put into the form used in Theorem 6:

$$M(\alpha) D = [(1 - \alpha) I + \alpha P]\, D = \alpha (P - I) D + D = \alpha A + \beta D,$$

where A = (P - I)D, α∈(0,1), and β = 1. Because M(α)D is a nonnegative matrix when α∈(0,1), by Perron-Frobenius theory its spectral bound s(M(α)D) equals its spectral radius r(M(α)D).

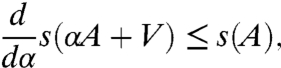

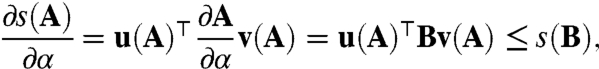

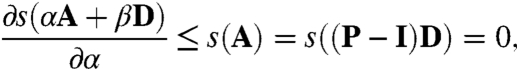

Cohen’s theorem gives that s(αA + βD) is convex in β, and thus by Lemma 1, s(αA + βD) is convex in α > 0 and

$$\frac{\partial}{\partial \alpha}\, s(\alpha A + \beta D) \le s(A) = s((P - I) D) = 0, \tag{17}$$

the right identity seen because e⊤(P - I)D = (e⊤ - e⊤)D = 0, where e is the vector of ones, and e⊤ is its transpose.

Strict convexity in β is shown by Friedland (ref. 53, Theorem 4.1) to occur when P is irreducible and D ≠ cI, for any c > 0. Strict convexity in β implies, by Lemma 1, that r(M(α)D) = s(M(α)D) is strictly convex and decreasing in α.
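The two algebraic facts used in this proof, the decomposition M(α)D = αA + D with A = (P − I)D and the identity e⊤(P − I)D = 0, can be confirmed on a small example. The matrices P and D and the value of α below are hypothetical, chosen only so that each column of P sums to 1.

```python
# Hypothetical example data: each column of P sums to 1 (so e^T P = e^T).
P = [[0.6, 0.1, 0.3],
     [0.3, 0.7, 0.2],
     [0.1, 0.2, 0.5]]
d = [1.5, 1.0, 0.6]                        # diagonal of D
n = len(P)
alpha = 0.3

# A = (P - I) D and M(alpha) D = [(1 - alpha) I + alpha P] D, with D = diag(d).
A = [[(P[i][j] - (i == j)) * d[j] for j in range(n)] for i in range(n)]
MD = [[((1.0 - alpha) * (i == j) + alpha * P[i][j]) * d[j]
       for j in range(n)] for i in range(n)]

# Largest entrywise difference between M(alpha) D and alpha*A + D.
max_diff = max(abs(MD[i][j] - (alpha * A[i][j] + d[j] * (i == j)))
               for i in range(n) for j in range(n))

# Column sums of A, i.e. the entries of e^T (P - I) D.
col_sums = [sum(A[i][j] for i in range(n)) for j in range(n)]

print("max |M(alpha)D - (alpha*A + D)| =", max_diff)
print("e^T A =", col_sums)
```

Both quantities should vanish up to rounding, which is what makes 0 an eigenvalue of A with the positive left eigenvector e⊤.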

Remark 2:

The core of Kirkland, et al.’s (56) proof of Theorem 1 is their Lemma 4.1, which can be expressed as

$$e^\top A\, [u(A) \circ v(A)] \ge s(A),$$

with equality only when e⊤A = s(A)e⊤, where u(A)⊤ and v(A) are the left and right eigenvectors of A associated with the Perron root s(A), and u∘v is the Schur-Hadamard (elementwise) product.

Kirkland, et al.’s (56) result, except for the equality condition, is a special case of ref. 63, Theorem 3.2.5, that x⊤Ay≥s(A) for any x,y≥0 with x∘y = u(A)∘v(A). Obtaining the equality condition requires an approach such as the one that Kirkland, et al.’s (56) distinct proof provides.

Remark 3:

Schreiber and Lloyd-Smith [(64) Appendix B, Lemma 1] followed the reverse path and extended Kirkland, et al.’s result on s(M(α)D) to the form s(αA + D), where A is essentially nonnegative and D any diagonal matrix.

Remark 4:

Kato (3) notes that the Donsker-Varadhan formula provides another route besides Kingman’s theorem to his generalization of Cohen’s theorem (but with more restrictive conditions). Indeed, Friedland (53) uses the Donsker-Varadhan formula to prove Cohen’s theorem augmented by strict convexity. The dual convexity relationship shown here between Cohen’s and Karlin’s theorems means that both routes of proof apply as well to Karlin’s theorem. Given these parallels, the relationship between the theorem of Kingman and the theorem of Donsker and Varadhan invites deeper study.

Lemma 1 combined with Cohen’s theorem can also be used to give a new proof of an inequality of Lindqvist, the special case considered in ref. 65, Theorem 2, pp. 260–261.

Theorem 7. (Lindqvist)

[(65), Theorem 2, subcase ] Let A be an irreducible n × n real matrix such that (i) Aij≥0 for i ≠ j, and (ii) The left and right eigenvectors of A, u(A)⊤ and v(A), associated with eigenvalue s(A), satisfy u(A)⊤v(A) = 1. Let D be an n × n real diagonal matrix. Then

[18]

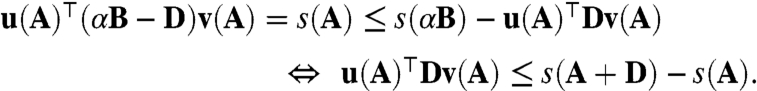

Proof:

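Because A is an irreducible essentially nonnegative matrix, s(A) is an eigenvalue of multiplicity 1. Consider the representation A = αB - D, where B is essentially nonnegative and α > 0. Write s ≡ s(A). Because A is irreducible, it has unique u ≡ u(A) and v ≡ v(A) given u⊤v = e⊤v = 1, and all the derivatives exist (28) in the following derivation [(66), Sec. 9.1.1]:

$$u^\top \frac{\partial (A v)}{\partial \alpha} = u^\top B v + u^\top A \frac{\partial v}{\partial \alpha} = u^\top B v + s\, u^\top \frac{\partial v}{\partial \alpha}, \qquad u^\top \frac{\partial (s v)}{\partial \alpha} = \frac{\partial s}{\partial \alpha} + s\, u^\top \frac{\partial v}{\partial \alpha}.$$

Because Av = sv, the two expressions are equal. Cancellation of terms su⊤∂v/∂α gives

$$\frac{\partial s}{\partial \alpha} = u^\top B v \le s(B),$$

the inequality derived as in Eq. 17. Scaling by α, subtracting D, and substituting αB = A + D, we get

$$s(A) = u^\top A v = u^\top (\alpha B - D) v \le s(\alpha B) - u^\top D v = s(A + D) - u^\top D v.$$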
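Lindqvist's inequality, in the form s(A + D) ≥ s(A) + u(A)⊤Dv(A), can be spot-checked for a 2 × 2 matrix, where the Perron eigenvectors have a closed form. The sketch below assumes a hypothetical irreducible A with positive off-diagonal entries and a handful of diagonal matrices D; the helper name `perron_data_2x2` and all numerical values are assumptions for illustration.

```python
import math


def perron_data_2x2(a, b, c, d):
    """Spectral bound s and eigenvectors u, v of [[a, b], [c, d]] with b, c > 0,
    normalized so that sum(v) == 1 and u . v == 1."""
    s = 0.5 * (a + d) + 0.5 * math.sqrt((a - d) ** 2 + 4.0 * b * c)
    v = [b, s - a]                          # right eigenvector: A v = s v
    u = [c, s - a]                          # left eigenvector: u^T A = s u^T
    total = v[0] + v[1]
    v = [vi / total for vi in v]
    scale = u[0] * v[0] + u[1] * v[1]
    u = [ui / scale for ui in u]
    return s, u, v


a, b, c, d = -1.0, 1.0, 2.0, -3.0           # hypothetical irreducible A
s_A, u, v = perron_data_2x2(a, b, c, d)

gaps = []
for D in [(-2.0, 1.0), (0.5, 0.5), (1.5, -0.5), (3.0, 4.0), (-1.0, -4.0)]:
    s_AD, _, _ = perron_data_2x2(a + D[0], b, c, d + D[1])
    tangent = s_A + u[0] * D[0] * v[0] + u[1] * D[1] * v[1]   # s(A) + u^T D v
    gaps.append(s_AD - tangent)

print("s(A + D) - [s(A) + u^T D v] for each D:", gaps)
```

Each gap should be nonnegative up to rounding, and zero for the scalar case D = 0.5 I, where the bound is attained.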

A Key Open Problem.

In some physical systems, and in biological applications especially, there may be multiple, independently varied operators acting on a quantity [e.g., diffusion with independent advection (ref. 67, eq. 2.9)], or the variation may not scale the mixing process uniformly [e.g., conditional dispersal (68, 69)], so that variation is not of the form αA + V but rather αA + B, where B is a linear operator other than an operator of multiplication. Examples are known where departures from reduction occur; i.e., ds(αA + B)/dα > s(A). Results for the form αA + B have been obtained for symmetrizable finite matrices in models of multilocus mutation (70), and dispersal in random environments (71). Some results for Banach space models have also been obtained (67–69, 72–77).

A key open problem, then, is to find necessary or sufficient conditions on Banach space operators, B, such that ∂s(αA + βB)/∂α ≤ s(A) (which may depend on A, β/α, domain, and boundary conditions). A sufficient condition is that s(αA + βB) be convex in β, by Lemma 1. Thus, the dual problem is to ask: for which B is s(αA + βB) convex in β? Some results towards this problem are in ref. 78. Kato obtained Theorem 4 with operators of multiplication, V, because the family of operators eβV is semigroup-superconvex in β (definition in ref. 3), but this semigroup-superconvexity approach faces the challenge that, “It is in general difficult to find a nontrivial semigroup-superconvex family B(h)” (3).

Acknowledgments.

I thank Prof. Shmuel Friedland for introducing me to the papers of Cohen, and for inviting me to speak on the early state of this work at the 16th International Linear Algebra Society Meeting in Pisa; Prof. Mustapha Mokhtar-Kharroubi and anonymous reviewers for pointing out an error in a definition in the first version; anonymous reviewers for helpful suggestions; Laura Marie Herrmann for assistance with the literature search; and Arendt and Batty (51) for guiding me to Kato (3).

Footnotes

The author declares no conflict of interest.

This article is a PNAS Direct Submission.

References

- 1.Kingman JFC. A convexity property of positive matrices. Q J Math. 1961;12:283–284. [Google Scholar]

- 2.Cohen JE. Convexity of the dominant eigenvalue of an essentially nonnegative matrix. P Am Math Soc. 1981;81:657–658. [Google Scholar]

- 3.Kato T. Superconvexity of the spectral radius, and convexity of the spectral bound and the type. Mathematische Zeitschrift. 1982;180:265–273. [Google Scholar]

- 4.Gadgil M. Dispersal: population consequences and evolution. Ecology. 1971;52:253–261. [Google Scholar]

- 5.Feldman MW. Selection for linkage modification: I. Random mating populations. Theor Popul Biol. 1972;3:324–346. doi: 10.1016/0040-5809(72)90007-x. [DOI] [PubMed] [Google Scholar]

- 6.Balkau B, Feldman MW. Selection for migration modification. Genetics. 1973;74:171–174. doi: 10.1093/genetics/74.1.171. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Karlin S, McGregor J. Towards a theory of the evolution of modifier genes. Theor Popul Biol. 1974;5:59–103. doi: 10.1016/0040-5809(74)90052-5. [DOI] [PubMed] [Google Scholar]

- 8.Feldman MW, Krakauer J. Genetic modification and modifier polymorphisms. In: Karlin S, Nevo E, editors. Population Genetics and Ecology. New York: Academic Press; 1976. pp. 547–583. [Google Scholar]

- 9.Teague R. A result on the selection of recombination altering mechanisms. J Theor Biol. 1976;59:25–32. doi: 10.1016/s0022-5193(76)80022-7. [DOI] [PubMed] [Google Scholar]

- 10.Teague R. A model of migration modification. Theor Popul Biol. 1977;12:86–94. doi: 10.1016/0040-5809(77)90036-3. [DOI] [PubMed] [Google Scholar]

- 11.Feldman MW, Christiansen FB, Brooks LD. Evolution of recombination in a constant environment. Proc Nat'l Acad Sci USA. 1980;77:4838–4841. doi: 10.1073/pnas.77.8.4838. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Holt R. Population dynamics in two-patch environments: some anomalous consequences of an optimal habitat distribution. Theor Popul Biol. 1985;28:181–208. [Google Scholar]

- 13.Feldman MW, Liberman U. An evolutionary reduction principle for genetic modifiers. Proc Nat'l Acad Sci USA. 1986;83:4824–4827. doi: 10.1073/pnas.83.13.4824. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.McPeek M, Holt R. The evolution of dispersal in spatially and temporally varying environments. Am Nat. 1992;140:1010–1027. [Google Scholar]

- 15.Karlin S. Population subdivision and selection migration interaction. In: Karlin S, Nevo E, editors. Population Genetics and Ecology. New York: Academic Press; 1976. pp. 616–657. [Google Scholar]

- 16.Karlin S. Classifications of selection--migration structures and conditions for a protected polymorphism. In: Hecht MK, Wallace B, Prance GT, editors. Evolutionary Biology. Vol. 14. NY: Plenum Publishing Corporation; 1982. pp. 61–204. [Google Scholar]

- 17.Karlin S, McGregor J. The evolutionary development of modifier genes. Proc Nat'l Acad Sci USA. 1972;69:3611–3614. doi: 10.1073/pnas.69.12.3611. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Karlin S, Carmelli D. Numerical studies on two-loci selection models with general viabilities. Theor Popul Biol. 1975;7:399–421. doi: 10.1016/0040-5809(75)90026-x. [DOI] [PubMed] [Google Scholar]

- 19.Altenberg L. A Generalization of Theory on the Evolution of Modifier Genes. Stanford University: ProQuest; 1984. Ph.D.dissertation. [Google Scholar]

- 20.Altenberg L, Feldman MW. Selection, generalized transmission, and the evolution of modifier genes. I. The reduction principle. Genetics. 1987;117:559–572. doi: 10.1093/genetics/117.3.559. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Altenberg L. The evolutionary reduction principle for linear variation in genetic transmission. B Math Biol. 2009;71:1264–1284. doi: 10.1007/s11538-009-9401-2. [DOI] [PubMed] [Google Scholar]

- 22.Hastings A. Can spatial variation alone lead to selection for dispersal? Theor Popul Biol. 1983;24:244–251. [Google Scholar]

- 23.Hutson V, López-Gómez J, Mischaikow K, Vickers G. Limit behavior for a competing species problem with diffusion. In: Agarwal RP, editor. Dynamical Systems and Applications. Singapore: World Scientific; 1995. pp. 343–358. [Google Scholar]

- 24.Dockery J, Hutson V, Mischaikow K, Pernarowski M. The evolution of slow dispersal rates: a reaction diffusion model. J Math Biol. 1998;37:61–83. [Google Scholar]

- 25.Cantrell R, Cosner C, Lou Y. Evolution of dispersal in heterogeneous landscapes. In: Cantrell R, Cosner C, Ruan S, editors. Spatial Ecology. London: Chapman & Hall/CRC Press; 2010. pp. 213–229. [Google Scholar]

- 26.Hutson V, Martinez S, Mischaikow K, Vickers G. The evolution of dispersal. J Math Biol. 2003;47:483–517. doi: 10.1007/s00285-003-0210-1. [DOI] [PubMed] [Google Scholar]

- 27.Lozinskiy S. On an estimate of the spectral radius and spectral abscissa of a matrix. Linear Algebra Appl. 1969;2:117–125. [Google Scholar]

- 28.Deutsch E, Neumann M. On the first and second order derivatives of the Perron vector. Linear Algebra Appl. 1985;71:57–76. [Google Scholar]

- 29.Keilson J. A review of transient behavior in regular diffusion and birth-death processes. J Appl Probab. 1964;1:247–266. [Google Scholar]

- 30.Horn RA, Johnson CR. Matrix Analysis. Cambridge: Cambridge University Press; 1985. [Google Scholar]

- 31.Gantmacher F. Applications of the Theory of Matrices. NY: Interscience; 1959. [Google Scholar]

- 32.Seneta E. Non-negative Matrices and Markov Chains. New York: Springer-Verlag; 1981. [Google Scholar]

- 33.Bellman R. On an iterative procedure for obtaining the Perron root of a positive matrix. P Am Math Soc. 1955;6:719–725. [Google Scholar]

- 34.Hadeler K, Thieme H. Monotone dependence of the spectral bound on the transition rates in linear compartment models. J Math Biol. 2008;57:697–712. doi: 10.1007/s00285-008-0185-z. [DOI] [PubMed] [Google Scholar]

- 35.Birindelli I, Mitidieri E, Sweers G. The existence of the principal eigenvalue for cooperative elliptic systems in a general domain. Diff Equat. 1999;35:326–334. [Google Scholar]

- 36.Nagel R, editor. One-parameter Semigroups of Positive Operators, Lecture Notes in Mathematics. Vol. 1184. Berlin: Springer-Verlag; 1986. [Google Scholar]

- 37.Van Neerven J. The Asymptotic Behaviour of Semigroups of Linear Operators. Vol. 88. Switzerland: Birkhäuser, Basel; 1996. (Operator Theory Advances and Applications). [Google Scholar]

- 38.Arendt W, Batty C, Hieber M, Neubrander F. Vector-valued Laplace Transforms and Cauchy Problems, Monographs in Mathematics. 2nd Ed. Vol. 96. Basel: Birkhäuser; 2011. [Google Scholar]

- 39.Arendt W. Resolvent positive operators. P Lond Math Soc. 1987;3:321–349. [Google Scholar]

- 40. Arendt W, Batty C, Robinson D. Positive semigroups generated by elliptic operators on Lie groups. J Operat Theor. 1990;23:369–407.

- 41. Ulm M. The interval of resolvent-positivity for the biharmonic operator. P Am Math Soc. 1999;127:481–490.

- 42. Simon B. Schrödinger semigroups. B Am Math Soc. 1982;7:447–526.

- 43. Voigt J. Absorption semigroups, their generators, and Schrödinger semigroups. J Funct Anal. 1986;67:167–205.

- 44. Mokhtar-Kharroubi M. On Schrödinger semigroups and related topics. J Funct Anal. 2009;256:1998–2025.

- 45. Ouhabaz EM. Analysis of Heat Equations on Domains, London Mathematical Society Monograph Series. Vol. 31. Princeton, NJ: Princeton University Press; 2005.

- 46. Donsker M, Varadhan S. On the principal eigenvalue of second-order elliptic differential operators. Commun Pur Appl Math. 1976;29:595–621.

- 47. Berestycki H, Nirenberg L, Varadhan S. The principal eigenvalue and maximum principle for second-order elliptic operators in general domains. Commun Pur Appl Math. 1994;47:47–92.

- 48. Grinfeld M, Hines G, Hutson V, Mischaikow K, Vickers G. Non-local dispersal. Differential and Integral Equations (Athens). 2005;18:1299–1320.

- 49. Bates P, Zhao G. Existence, uniqueness and stability of the stationary solution to a nonlocal evolution equation arising in population dispersal. J Math Anal Appl. 2007;332:428–440.

- 50. Chabi M, Latrach K. On singular mono-energetic transport equations in slab geometry. Math Method Appl Sci. 2002;25:1121–1147.

- 51. Arendt W, Batty CJK. Principal eigenvalues and perturbation. Operator Theory: Advances and Applications. 1995;75:39–55.

- 52. Royden HL. Real Analysis. 3rd Ed. New York: Macmillan; 1988.

- 53. Friedland S. Convex spectral functions. Linear Multilinear A. 1981;9:299–316.

- 54. Nussbaum RD. Convexity and log convexity for the spectral radius. Linear Algebra Appl. 1986;73:59–122.

- 55. Greiner G, Voigt J, Wolff M. On the spectral bound of the generator of semigroups of positive operators. J Operat Theor. 1981;5:245–256.

- 56. Kirkland S, Li CK, Schreiber SJ. On the evolution of dispersal in patchy landscapes. SIAM J Appl Math. 2006;66:1366–1382.

- 57. Cantrell R, Cosner C, DeAngelis D, Padron V. The ideal free distribution as an evolutionarily stable strategy. Journal of Biological Dynamics. 2007;1:249–271. doi: 10.1080/17513750701450227.

- 58. Cantrell R, Cosner C, Lou Y. Evolution of dispersal and the ideal free distribution. Math Biosci Eng. 2010;7:17–36. doi: 10.3934/mbe.2010.7.17.

- 59. Averill I, Lou Y, Munther D. On several conjectures from evolution of dispersal. Journal of Biological Dynamics. 2011. doi: 10.1080/17513758.2010.529169.

- 60. Cosner C, Dávila J, Martínez S. Evolutionary stability of ideal free nonlocal dispersal. Journal of Biological Dynamics. 2011. doi: 10.1080/17513758.2011.588341.

- 61. Donsker MD, Varadhan SRS. On a variational formula for the principal eigenvalue for operators with maximum principle. Proc Nat'l Acad Sci USA. 1975;72:780–783. doi: 10.1073/pnas.72.3.780.

- 62. O’Cinneide C. Markov additive processes and Perron-Frobenius eigenvalue inequalities. The Annals of Probability. 2000;28:1230–1258.

- 63. Bapat RB, Raghavan TES. Nonnegative Matrices and Applications. Cambridge, United Kingdom: Cambridge University Press; 1997.

- 64. Schreiber SJ, Lloyd-Smith JO. Invasion dynamics in spatially heterogeneous environments. Am Nat. 2009;174:490–505. doi: 10.1086/605405.

- 65. Lindqvist BH. On comparison of the Perron-Frobenius eigenvalues of two ML-matrices. Linear Algebra Appl. 2002;353:257–266.

- 66. Caswell H. Matrix Population Models. 2nd Ed. MA: Sinauer Associates; 2000. p. 722.

- 67. Belgacem F, Cosner C. The effects of dispersal along environmental gradients on the dynamics of populations in heterogeneous environments. Canadian Applied Mathematics Quarterly. 1995;3:379–397.

- 68. Hambrock R. Evolution of conditional dispersal: a reaction-diffusion-advection approach. Ph.D. thesis. Columbus, OH: Ohio State University; 2007.

- 69. Hambrock R, Lou Y. The evolution of conditional dispersal strategies in spatially heterogeneous habitats. B Math Biol. 2009;71:1793–1817. doi: 10.1007/s11538-009-9425-7.

- 70. Altenberg L. An evolutionary reduction principle for mutation rates at multiple loci. B Math Biol. 2011;73:1227–1270. doi: 10.1007/s11538-010-9557-9.

- 71. Altenberg L. The evolution of dispersal in random environments and the principle of partial control. Ecological Monographs. 2012. http://arxiv.org/abs/1106.4388.

- 72. Lou Y. Some challenging mathematical problems in evolution of dispersal and population dynamics. Lect Notes Math. 2008:171–205.

- 73. Chen X, Hambrock R, Lou Y. Evolution of conditional dispersal: a reaction-diffusion-advection model. J Math Biol. 2008;57:361–386. doi: 10.1007/s00285-008-0166-2.

- 74. Bezuglyy A. Reaction-diffusion-advection models for single and multiple species. Ph.D. dissertation. Columbus, OH: Ohio State University; 2009.

- 75. Godoy T, Gossez J, Paczka S. On the asymptotic behavior of the principal eigenvalues of some elliptic problems. Ann Mat Pur Appl. 2010;189:497–521.

- 76. Bezuglyy A, Lou Y. Reaction-diffusion models with large advection coefficients. Appl Anal. 2010;89:983–1004.

- 77. Kao C, Lou Y, Shen W. Evolution of mixed dispersal in periodic environments. Discrete and Continuous Dynamical Systems B. 2012;17. In press.

- 78. Webb G. Convexity of the growth bound of C₀-semigroups of operators. In: Goldstein G, Goldstein J, editors. Semigroups of Linear Operators and Applications. Dordrecht: Kluwer; 1993. pp. 259–270.