Abstract

Attention is known to affect the response properties of sensory neurons in visual cortex. These effects have traditionally been classified into two categories: 1) changes in the gain (overall amplitude) of the response; and 2) changes in the tuning (selectivity) of the response. We performed an extensive series of behavioral measurements using psychophysical reverse correlation to understand whether and how these neuronal changes are reflected at the level of our perceptual experience. This question has been addressed before, but by different laboratories using different attentional manipulations and stimuli/tasks that are not directly comparable, making it difficult to extract a comprehensive and coherent picture from the existing literature. Our results demonstrate that the effect of attention on response gain (not necessarily associated with a change in tuning) is relatively nonspecific: it occurred in all the conditions we tested, including when attention was directed to a feature orthogonal to the primary feature for the assigned task. Sensory tuning, however, was affected primarily by feature-based attention and only to a limited extent by spatially directed attention, in line with existing evidence from the electrophysiological and behavioral literature.

Keywords: motion and orientation processing, tuning curve, noise image classification

Visual attention has been shown to affect the response characteristics of cortical neurons in diverse ways. A common finding in electrophysiological studies on awake monkeys is that attention to spatial locations or features increases response gain at the attended location or for the attended feature, with little (Spitzer et al. 1988) or no change in stimulus selectivity (Treue and Martinez-Trujillo 1999). Response enhancement has been demonstrated for various visual features such as orientation (McAdams and Maunsell 1999; Motter 1993) and direction of motion (Treue and Maunsell 1996; Treue and Martinez-Trujillo 1999; Recanzone and Wurtz 2000), as well as for spatial attention (Moran and Desimone 1985; Connor et al. 1996, 1997; Luck et al. 1997; Seidemann and Newsome 1999). A less common finding is that selectivity at the level of neuronal populations can be modified by directing attention to specific visual features, such as motion direction (Martinez-Trujillo and Treue 2004). Finally, although the relationship between the BOLD signal and neuronal activity remains difficult to interpret, two human functional MRI studies have reported evidence for an increase in both gain and selectivity when attending to a spatial location (Fischer and Whitney 2009) or to objects (Murray and Wojciulik 2004).

How do these neuronal effects translate to the level of perceptual processing? We currently do not have a satisfactory answer to this question, primarily because different (sometimes conflicting) results have been reported by different groups using different methods (see discussion). This leaves us with a mixed picture in which it is often difficult to tell whether the reported differences are perceptually relevant or, for example, a consequence of specific methodological choices. Our goal was to study the effect of attentional deployment across a wide range of experimental manipulations while retaining the same underlying logic and methodological structure for the different experiments, so that results could be meaningfully compared across conditions. We studied attention directed to specific spatial locations and features for both motion and orientation processing using a psychophysical variant of reverse correlation (Ahumada 2002; Neri and Levi 2006), which allowed us to gauge potential changes in both amplitude and selectivity from fully characterized tuning curves. We also measured internal noise and the effect (or lack thereof) of attention on this quantity; this additional measurement was motivated both by existing electrophysiological studies (Cohen and Maunsell 2009; Mitchell et al. 2009; Cohen and Maunsell 2011) and by its potential relevance to amplitude changes in the tuning curve (Ahumada 2002; see discussion).

Changes in amplitude are easily summarized: all the attentional manipulations we studied increased amplitude when attention was deployed. For example, cueing observers to a specific spatial location increased the amplitude of the perceptual filter for that location. Similarly, cueing observers to a specific motion direction increased the amplitude of the perceptual filter for directions similar to the cued value. Changes in tuning followed a more complex pattern that cannot be summarized easily. The most robust effect was observed for feature-based attention: cueing to a specific motion direction sharpened the perceptual filter centered on that direction. However, this result did not generalize to orientation (as opposed to motion direction), and some degree of retuning (albeit less robust) was also observed for spatial attention. We conclude that the term “attention” as it is currently used in the literature refers to a highly heterogeneous class of phenomena, the characteristics of which can vary substantially depending on the specific context in which the system is operating.

METHODS

Observers and general protocol.

Twenty-four observers took part in the experiments reported here; we refer to them as S1, S2, and so on. Of these, 10 collected data for a given condition (e.g., feature-based attention). All were naive [and paid for their time at the rate of 7 GBP (Great British Pound) per hour] except for author A. Paltoglou (S4). Because 24 distinguishable symbols could not be rendered in the figures, we could not assign a unique symbol to each observer. Instead, we used the same 10 symbols in all plots: ✶, ◆, ●, ★, ▶, ◀, ▲, ▼, ■, ×. In the sequence in which they are listed, these symbols refer to the following participants for the five different experimental conditions (detailed below): S3–4, S8–9, S14, S20 for the 2-alternative forced-choice (2AFC) feature-based attention experiments (Figs. 2, 3, A and B, and 4); S1, S3–4, S6, S14–18, S20 for the 4AFC feature-based (Figs. 1, D–F, 2, 3, C and D, and 4) and spatial attention experiments (Figs. 5, A–C, 6, A and B, 7, A and B, and 8, A–C); S1, S10, S12–14, S19, S21–24 for the color experiments (attention directed to orthogonal feature, see Figs. 5, D–F, 6, C and D, 7, C and D, and 8, D–F); and S1–3, S5, S7, S11, S14, S19, S21, S24 for the orientation-based attention experiments (Figs. 5, G–I, 6, C and D, 7, E and F, and 8, D–F).

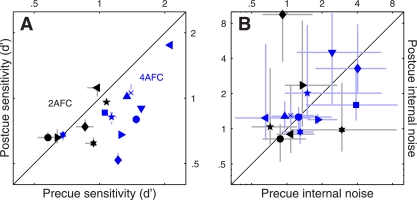

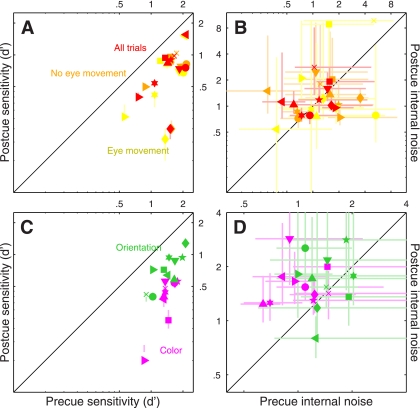

Fig. 2.

Performance metrics for feature-based attention. Sensitivity (A) and internal noise (B) are plotted for both 2AFC (black symbols) and 4AFC (blue symbols) experiments, for precue trials on the x-axis vs. postcue trials on the y-axis. Different symbols refer to different observers. Error bars show ± 1 SE. Please see methods for details on how internal noise was estimated.

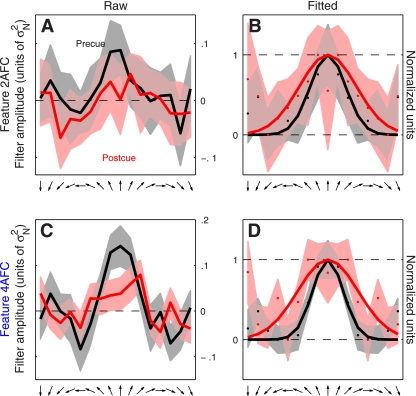

Fig. 3.

Directional tuning functions for feature-based attention. Raw data are plotted in the left column for both 2AFC (A) and 4AFC (C) versions of the experiment. The same data are plotted in the right column after symmetrically averaging the traces around the center and rescaling them to range between 0 and 1. Solid curves in B and D show best-fit raised Gaussian functions (see methods). Shading shows ± 1 SE. The tuning curve has been arbitrarily centered on the upward direction, but it refers to all directions reported by the observer (see methods). Filter amplitude in A and C is in units of noise variance $\sigma_N^2$.

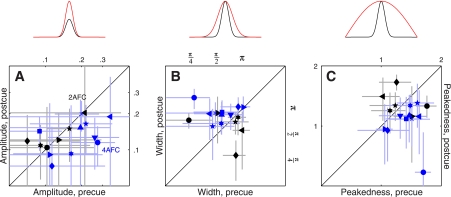

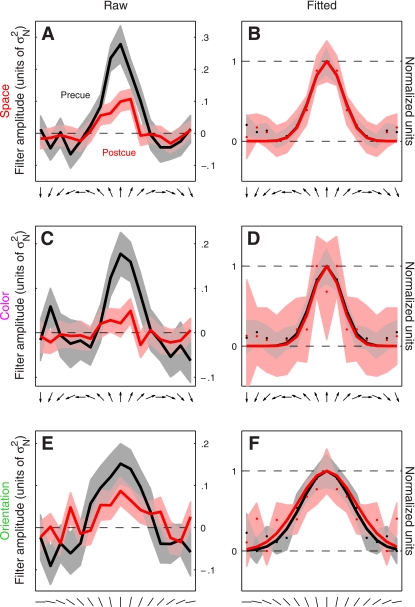

Fig. 4.

Amplitude and tuning changes for feature-based attention. Amplitude of the tuning curve is plotted in A for both 2AFC (black symbols) and 4AFC (blue symbols) experiments, for precue trials on the x-axis vs. postcue trials on the y-axis (same plotting conventions as Fig. 2). A, top: shows how the tuning curve would be modified by an amplitude change. B and C: plot of 2 metrics derived from the raised Gaussian fit (see Fig. 3, B and D, and methods), which we have termed width and peakedness. B and C, top: show how the tuning curve would be modified by changes in these 2 parameters.

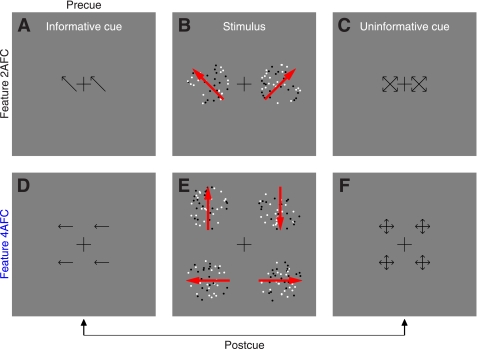

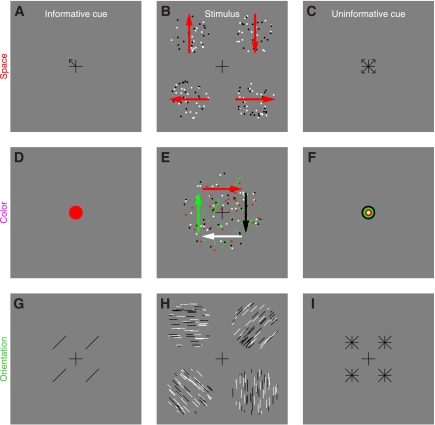

Fig. 1.

Stimuli for studying the effect of feature-based attention on directional tuning. In the 2-alternative forced-choice (2AFC) version of the experiment (A–C), observers saw an informative cue (A) specifying 1 of 4 possible diagonal directions, a stimulus (B) containing 2 patches to the sides of fixation, and an uninformative cue (C) pointing to all 4 possible directions. Each patch contained both “signal” and “noise” dots. Signal dots were fixed in number and all moved in 1 of the 4 possible diagonal directions. Signal direction was always different for the 2 patches, and 1 patch always took the direction indicated by the informative cue. In this example, the signal directions for the 2 patches are indicated by red arrows (not visible in the actual stimulus). Observers were asked to indicate which patch (left or right) moved globally in the cued direction. The 4AFC version of the experiment (D–F) was similar, except the stimulus contained 4 patches (E) and the 4 possible directions were cardinal (up, down, left, and right). Because signal dots in the 4 patches all took different directions, all 4 possible directions were represented in every stimulus but at different spatial locations. For each experiment, we mixed 2 types of trials within the same block: precue trials and postcue trials. On precue trials, the informative cue appeared before the stimulus and the uninformative cue after the stimulus. On postcue trials, the uninformative cue appeared before the stimulus and the informative cue after the stimulus.

Fig. 5.

Stimuli for studying the effect of spatial attention (A–C), attention directed to an orthogonal feature (D–F), and orientation-based attention (G–I). For the spatial attention experiments (A–C), the main stimulus (B) was identical to the one used in the 4AFC feature-based attention experiments (Fig. 1E); however, observers were asked to make a different judgment. The informative cue (A) specified the spatial location of 1 of the 4 patches, and observers were asked to report the direction (out of the 4 possible cardinal ones) for that patch. The logic of the color-based attention experiments (D–F) was similar, except the 4 different groups of moving dots were not labeled by spatial location (i.e., which patch they belonged to) but rather by color, and all appeared within the same central patch (E). The informative cue (D) specified 1 of the 4 possible colors, and observers were asked to report the direction of the moving dots that carried that color. Notice that the cued feature, i.e., color, is not the feature that is being mapped for tuning (direction). The logic of the orientation-based experiments was identical to the 4AFC feature-based experiments depicted in Fig. 1, D–F, except we used oriented lines instead of moving dots (H) and the informative cue (G) specified 1 of 4 possible orientations. For clarity, the example stimulus in H shows patches with 100% signal elements, but in the actual stimulus some of the lines within each patch were randomly oriented (see methods).

Fig. 6.

Performance metrics for spatial, color-based, and orientation-based attention. Sensitivity is plotted at left; internal noise is plotted at right. Plotting conventions are similar to those used in Fig. 2. A and B: data for spatial attention; red symbols show data from all trials, yellow and orange symbols from trials on which observers did or did not move their eyes, respectively (see methods for how this distinction was drawn). C and D: data for the color-based attention experiment (magenta) and the orientation-based experiment (green).

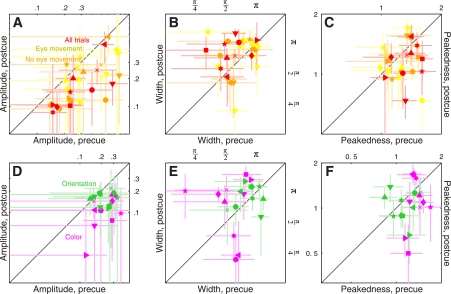

Fig. 7.

Tuning functions for spatial, color-based, and orientation-based attention. Same plotting conventions as Fig. 3. A and B: data for spatial attention. C and D: data for color-based attention. E and F: data for orientation-based attention. Notice that the x-axes in E and F refer to orientation, not motion direction.

Fig. 8.

Amplitude and tuning changes for spatial, color-based, and orientation-based attention. Plotting conventions are similar to those used in Fig. 4. A–C: data for spatial attention; red symbols show data from all trials, yellow and orange symbols from trials on which observers did or did not move their eyes, respectively (see methods for how this distinction was drawn). D–F: data for the color-based attention experiment (magenta) and the orientation-based experiment (green).

Each block consisted of 100 trials, and participants were tested over several sessions until they had completed between 5K and 10K trials for each experiment (except for the 2AFC experiments, for which we collected fewer trials). We collected the following numbers of trials (mean ± SD across 10 observers) for the five different experimental conditions: 6.8K ± 3.8K for 2AFC feature-based attention, 11K ± 2.3K for 4AFC feature-based attention, 11.4K ± 3.2K for spatial attention, 9.3K ± 1.2K for color-based attention, and 8.9K ± 1.3K for orientation-based attention.

Observers in all experiments were required to fixate a central black cross (each arm roughly 78 arcmin long at the adopted viewing distance of 57 cm) against a grey background (25 cd/m²); the cross remained on the screen at all times. The observer's response triggered the next trial after a random delay uniformly distributed between 200 and 1,200 ms. This delay was followed by the precue (lasting 200 ms), a blank interval of 700 ms, the stimulus (lasting 350 ms), a blank interval of 700 ms, and the postcue (lasting 200 ms). Observers were asked to discriminate the direction of moving dots or the orientation of static segments (see the more detailed stimulus descriptions below). On approximately half the trials (randomly chosen), the precue was informative and the postcue was uninformative for performing this task; on the remaining trials, the postcue was informative and the precue was uninformative. These two conditions were therefore mixed within the same block. The informative cue specified the particular direction/color/position/orientation the observer should attend to; the uninformative cue contained all four (or 2 in the 2AFC experiments) possible directions/colors/positions/orientations of the target.
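The durations above imply an onset asynchrony of 900 ms between precue and stimulus, and of 1,050 ms between stimulus onset and postcue onset. The sketch below lays out one trial's event schedule under two assumptions not stated in the text: each trial is independently a precue or postcue trial with probability 0.5, and time is measured from the observer's previous response. The names `SEQUENCE` and `trial_schedule` are hypothetical, not the authors' code.

```python
import random

# Event durations (ms) in the order given in the text.
SEQUENCE = [
    ("precue", 200),
    ("blank", 700),
    ("stimulus", 350),
    ("blank", 700),
    ("postcue", 200),
]

def trial_schedule(rng):
    """Return (informative_cue, events) for one trial.

    events is a list of (label, onset_ms, offset_ms), with time 0 at the
    observer's response on the previous trial.
    """
    # Assumption: an independent coin flip per trial decides which cue is
    # informative ("approximately half the trials, randomly chosen").
    informative_cue = "precue" if rng.random() < 0.5 else "postcue"
    t = rng.uniform(200, 1200)  # random delay after the previous response
    events = []
    for label, duration in SEQUENCE:
        events.append((label, t, t + duration))
        t += duration
    return informative_cue, events

rng = random.Random(1)
cue, events = trial_schedule(rng)
# Onsets of the non-blank events, keyed by label.
onsets = {label: on for label, on, _ in events if label != "blank"}
```

Whichever cue is informative, the temporal structure of the trial is identical, which is what allows precue and postcue trials to be interleaved within a block.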

Observers were not required to make a speeded response. We selected signal strength (defined for each stimulus below) individually for each observer to target threshold performance; before signal strength was set to the threshold regime, observers were allowed to familiarize themselves with the task using a 100% signal stimulus for a few blocks. The ratio between the number of signal elements and the mean number of noise elements was (mean ± SD across observers): 0.6 ± 0.4 for 2AFC feature-based attention, 0.6 ± 0.3 for 4AFC feature-based attention, 0.5 ± 0.2 for spatial attention, 0.8 ± 0.2 for color-based attention, and 0.3 ± 0.1 for orientation-based attention. Observers received trial-by-trial feedback (correct/incorrect) as well as a summary at the end of each block indicating the percent correct for that block and the average across all blocks up to that point.

Stimulus for 2AFC feature-based attention.

Black (0 cd/m²) and white (50 cd/m²) dots (diameter 9 arcmin) moved at a speed of 2.5°/s within two apertures (diameter 4°) to the left and to the right of fixation (Fig. 1, A–C); each aperture was centered 4° from fixation (Fig. 1B). Dots within each aperture could move in 1 of 16 directions around the clock (in steps of 22.5°). A fixed number of dots, which we refer to as “signal” dots, all moved in a specific direction. The remaining dots were “noise” dots and could take any of the 16 directions. The number of noise dots moving in a specific direction was determined by a Gaussian process with mean 2 dots and SD 2/3 dots. An important detail of our design is that the random process used to select the number of dots for a given direction was independent of the process used to select the number of dots for the other directions, so the power delivered by the stimulus at each direction was independent of the other directions. This also means that the total number of dots within the aperture was not constant from trial to trial but depended on the specific samples generated by the random process for the different directions. In previous experiments (e.g., Neri and Levi 2008a), we allowed different random numbers of dots for each motion direction but fixed the total number of dots in the stimulus; this constraint meant that the different directions were not sampled completely independently, a potential issue that we have rectified with the present protocol. Signal dots in a given aperture could take one of the four diagonal directions (45, 135, 225, and 315°, indicated by red arrows in Fig. 1B; the red arrows were not visible in the actual stimulus), and the two directions selected for the two apertures presented on a given trial were never the same. The informative cue (Fig. 1A) was an arrow (72 arcmin long) pointing in the direction of motion that the observer should attend to (the target direction); this direction always matched the direction of the signal dots in one of the two apertures. The uninformative cue (Fig. 1C) consisted of four arrows pointing in the four possible directions that signal dots could take. To avoid confusion with the left-right judgment that observers were required to make (i.e., choose the aperture to the left or to the right), we always presented two replicas of each cue (whether informative or uninformative) on both sides of fixation (centered 2° from fixation). Observers pressed a button to indicate which patch (left vs. right) contained the global direction that matched the arrow in the informative cue. Notice that this task cannot be performed without relying on the informative cue, because both patches contained the same number of signal dots and the same average number of noise dots.
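The key design choice, drawing the noise count for each of the 16 directions independently, can be illustrated with a minimal sketch of one 2AFC trial. Rounding each Gaussian draw to a whole number of dots and clipping at zero are our assumptions (the text does not say how fractional or negative draws were handled), and the function names and the example signal count are hypothetical.

```python
import random

N_DIRECTIONS = 16                      # 360 / 16 = 22.5 deg steps
NOISE_MEAN, NOISE_SD = 2.0, 2.0 / 3.0  # noise dots per direction
DIAGONALS = [2, 6, 10, 14]             # bin indices of 45, 135, 225, 315 deg

def noise_counts(rng):
    """One independent Gaussian draw per direction (no fixed total).
    Rounding to whole dots and clipping at zero are assumptions."""
    return [max(0, round(rng.gauss(NOISE_MEAN, NOISE_SD)))
            for _ in range(N_DIRECTIONS)]

def make_patch(signal_index, n_signal, rng):
    """Return (noise, total) dot counts per direction for one aperture."""
    noise = noise_counts(rng)
    total = list(noise)
    total[signal_index] += n_signal   # signal dots all share one direction
    return noise, total

def make_2afc_trial(n_signal, rng):
    """Two apertures with different diagonal signal directions; one is cued."""
    left_dir, right_dir = rng.sample(DIAGONALS, 2)  # never the same direction
    cued_dir = rng.choice([left_dir, right_dir])
    return {
        "cued": cued_dir,
        "left": (left_dir,) + make_patch(left_dir, n_signal, rng),
        "right": (right_dir,) + make_patch(right_dir, n_signal, rng),
    }

rng = random.Random(0)
trial = make_2afc_trial(n_signal=10, rng=rng)  # 10 is an illustrative value
```

Keeping the noise counts separate from the totals matters later: only the noise component enters the reverse-correlation estimate of the tuning function.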

Stimulus for 4AFC feature-based attention.

This was an extension of the 2AFC stimulus detailed above, except that we presented four patches (as opposed to 2, see Fig. 1E). All four possible directions for the signal dots were therefore represented on each trial, randomly assigned to the four different patches. To avoid confusion with the response observers were asked to make (see below), we chose the four cardinal directions (0, 90, 180, and 270°) as possible target directions (indicated by red arrows in Fig. 1E). Informative and uninformative cues were modified from the 2AFC protocol to suit the 4AFC version as depicted in Fig. 1, D (informative) and F (uninformative). All remaining stimulus details were as in the 2AFC protocol. In the 4AFC version of the experiment, observers pressed one of four buttons to report which patch (upper-left, upper-right, lower-left, or lower-right) contained the global direction indicated by the informative cue. Three observers performed the task while we recorded their eye movements (Eyelink 4.31). Head position was stabilized using a chin-and-forehead rest. At the end of each block, observers were told how many times they had moved their eyes away from fixation, defined as eye position exceeding a circular window of 1.3° radius (1/3 of stimulus eccentricity) centered on fixation. On some blocks, participants were also given trial-by-trial feedback indicating whether they had broken fixation on that trial.
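The fixation criterion amounts to a circular-window test on gaze position. A minimal sketch, assuming gaze samples have already been converted to degrees of visual angle relative to the fixation cross; which portion of each trial was checked is not specified in the text, so the function simply tests every sample it is given. Names are hypothetical.

```python
import math

FIXATION_RADIUS_DEG = 1.3  # 1/3 of the 4 deg stimulus eccentricity

def broke_fixation(samples_deg, radius=FIXATION_RADIUS_DEG):
    """True if any (x, y) gaze sample, in degrees relative to the
    fixation cross, falls outside the circular fixation window."""
    return any(math.hypot(x, y) > radius for x, y in samples_deg)

def count_breaks(trials):
    """Number of trials (lists of gaze samples) with a fixation break,
    i.e. the count reported to the observer at the end of a block."""
    return sum(broke_fixation(samples) for samples in trials)
```

A radial (circular) window treats deviations in all directions equally, which suits a display with four patches placed symmetrically around fixation.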

Stimulus for spatial attention.

This experiment was very similar to the 4AFC feature-based attention experiment detailed above in that the stimulus (Fig. 5B) was identical. What differed was the judgment that observers were asked to make, and therefore the cues they were shown. The informative cue (Fig. 5A) consisted of an arrow pointing toward one aperture, while the uninformative cue (Fig. 5C) consisted of four arrows, each pointing to one of the four apertures. Observers were required to report the global motion direction (out of four possible choices for the four cardinal directions) carried by the dots within the cued aperture. The same 10 observers who participated in the 4AFC feature-based attention experiment also participated in this experiment. Half of them were tested on the 4AFC feature-based attention experiment first, and the other half on this experiment first. All participants were tested while their eye movements were recorded with the Eyelink eye tracker.

Stimulus for attention directed to orthogonal color feature.

All moving dots were presented within a single large aperture (8.4°) centered on fixation (Fig. 5E). We chose this configuration because pilot experiments showed that the task was impossible (chance performance) with the four-patch configuration. Each dot could take one of four colors: red (8.5 cd/m²), green (40 cd/m²), light grey (43 cd/m²), or dark grey (5 cd/m²). The logic of this experiment was very similar to that of the spatial attention experiment detailed above, except that dots were labeled by color rather than by spatial location. The informative cue (Fig. 5D) was a disk centered on fixation that specified the cued color. The uninformative cue (Fig. 5F) contained all four colors in concentric circles. Observers pressed a button to indicate the global direction of the dots that had the same color as the cue.

Stimulus for orientation-based attention.

This experiment was very similar to the 4AFC feature-based attention experiment detailed above, except that the moving dots were replaced by static lines of different orientations (Fig. 5H). Line length was 6 arcmin. There were 16 possible orientations (spanning half the circle). The informative cue (Fig. 5G) consisted of one line oriented along the cued orientation. To avoid confusion with the response, this cue was replicated four times and placed next to each of the four apertures (see Fig. 5G). The uninformative cue (Fig. 5I) consisted of four lines all centered at the same location in a star shape, thereby spanning all four possible target orientations (vertical, horizontal, and the two 45° diagonals); it was again replicated four times around fixation. Observers pressed a button to indicate which of the four patches contained the global orientation that matched the cued orientation.
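Unlike motion direction, orientation is periodic over 180°, so the 16 orientation bins wrap at 180° rather than 360°, and any realignment of orientation noise must use that period. A minimal sketch, assuming the 16 orientations are evenly spaced (11.25° apart; the text only says they span half the circle) and labeling vertical as 0°, both of which are our assumptions; function names are hypothetical.

```python
N_ORI = 16
STEP_DEG = 180.0 / N_ORI  # 11.25 deg, assuming even spacing over half the circle

def ori_index(deg):
    """Nearest of the 16 orientation bins; 0 and 180 deg are the same orientation."""
    return round((deg % 180.0) / STEP_DEG) % N_ORI

def ori_difference(a, b):
    """Signed orientation difference a - b in degrees, wrapped into [-90, 90)."""
    d = (a - b) % 180.0
    return d - 180.0 if d >= 90.0 else d

# The four possible target orientations (0, 45, 90, 135 deg under our labeling).
TARGET_BINS = [ori_index(a) for a in (0, 45, 90, 135)]
```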

Internal noise.

We estimated internal noise (Figs. 2B and 6, B and D) using standard procedures (Burgess and Colborne 1988; Neri 2010a), which we only summarize briefly here. Our methodology relies on the established signal detection theory (SDT) model (Green and Swets 1966); the advantage of adopting this general theoretical framework is that it offers a unified account of different experimental conditions and therefore makes it possible to draw direct comparisons (Neri 2010a). The SDT model is defined within the space of the “internal response,” i.e., the response of the system (e.g., visual cortex) to the input stimulus, regardless of the front-end process that maps the stimulus onto a response. This process may consist of a bank of motion or orientation detectors, but its details are not relevant because the SDT formulation bypasses this stage.

For our nAFC task, we assume that the internal response before the addition of internal noise follows a normal distribution for each of the n − 1 nontarget stimuli and a normal distribution with mean $d_{in}$ for the target stimulus. A Gaussian noise source with SD σ is added to each response; only this noise source differs across repeated presentations of the same stimulus, and it represents internal noise. On each trial, the model selects the stimulus associated with the largest response. Different values of $d_{in}$ and σ produce different percentages of correct responses and different percentages of identical responses to repeated presentations (%agreement). We selected the values of $d_{in}$ and σ that minimized the mean-square error between the predicted and observed values of percent correct and percent agreement. Percent agreement is estimated by performing a double-pass experiment in which the same set of stimuli is presented twice and the percentage of identical responses to the two passes is computed (Burgess and Colborne 1988).
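The fitting procedure can be sketched by simulating the model directly. This is a minimal illustration, not the authors' implementation, under stated assumptions: the external (pre-internal-noise) responses have unit variance, alternative 0 is the target, percent correct is pooled over both passes, the same random draws are reused for every parameter pair (common random numbers), and a coarse grid search stands in for whatever optimizer was actually used. All function names are hypothetical.

```python
import random

def make_samples(n_trials, n_alt, seed=0):
    """Draw all random numbers once so every (d_in, sigma) pair is
    evaluated on the same simulated trials (common random numbers)."""
    rng = random.Random(seed)
    draw = lambda: [[rng.gauss(0.0, 1.0) for _ in range(n_alt)]
                    for _ in range(n_trials)]
    return draw(), draw(), draw()  # external, internal pass 1, internal pass 2

def predict(d_in, sigma, samples):
    """Return (proportion correct, proportion agreement) for the model."""
    ext, z1, z2 = samples
    correct = agree = 0
    for e, a, b in zip(ext, z1, z2):
        base = [e[0] + d_in] + e[1:]  # target response shifted by d_in
        # Same external response on both passes; only internal noise differs.
        r1 = [x + sigma * y for x, y in zip(base, a)]
        r2 = [x + sigma * y for x, y in zip(base, b)]
        c1 = max(range(len(r1)), key=r1.__getitem__)  # choose largest response
        c2 = max(range(len(r2)), key=r2.__getitem__)
        correct += (c1 == 0) + (c2 == 0)
        agree += (c1 == c2)
    return correct / (2 * len(ext)), agree / len(ext)

def fit_internal_noise(pc_obs, pa_obs, samples, d_grid, s_grid):
    """Grid search for the (d_in, sigma) pair minimizing squared error."""
    best = None
    for d in d_grid:
        for s in s_grid:
            pc, pa = predict(d, s, samples)
            err = (pc - pc_obs) ** 2 + (pa - pa_obs) ** 2
            if best is None or err < best[0]:
                best = (err, d, s)
    return best[1], best[2]

samples = make_samples(n_trials=1000, n_alt=4, seed=0)
# Stand-in "observed" values; real values would come from double-pass data.
pc_obs, pa_obs = predict(1.5, 1.0, samples)
d_grid = [0.25 * k for k in range(13)]     # 0 .. 3
s_grid = [0.25 * k for k in range(1, 13)]  # 0.25 .. 3
d_hat, s_hat = fit_internal_noise(pc_obs, pa_obs, samples, d_grid, s_grid)
```

Two constraints are needed because percent correct alone cannot separate $d_{in}$ from σ: a larger signal with more internal noise can give the same accuracy. Percent agreement breaks this tie, since it decreases as internal noise grows and equals 1 when σ = 0.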

We performed a series of double-pass experiments consisting of 100-trial blocks (as in the main experiment). Observers were not aware of any difference with respect to blocks in the main experiment. In double-pass blocks, the last 50 trials presented the same stimuli as the first 50 trials, but in randomly permuted order. We collected the following mean numbers of double-pass trials for the five experimental conditions (detailed above): 800 for 2AFC feature-based attention, 1K for 4AFC feature-based attention, 600 for spatial attention, 600 for color-based attention, and 400 for orientation-based attention.
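Because the second pass is shuffled, agreement must be computed by matching each repeated stimulus to its own first-pass response rather than by trial position. A minimal sketch of one block, with stimuli identified by integer IDs; the function names are hypothetical.

```python
import random

def double_pass_order(n_unique, rng):
    """Trial order for one double-pass block: stimuli 0..n_unique-1 in the
    first half, then the same stimuli again in a random permutation."""
    first = list(range(n_unique))
    second = first[:]
    rng.shuffle(second)
    return first + second

def percent_agreement(order, responses):
    """Proportion of repeated stimuli that received the same response
    on both passes (matched by stimulus ID, not by trial position)."""
    half = len(order) // 2
    first_response = {order[i]: responses[i] for i in range(half)}
    same = sum(first_response[order[i]] == responses[i]
               for i in range(half, len(order)))
    return same / half

rng = random.Random(3)
order = double_pass_order(50, rng)  # one 100-trial block
```

A purely deterministic observer, whose response depends only on the stimulus, would score 100% agreement; internal noise pulls agreement below that ceiling.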

Estimation of tuning functions.

Because our stimuli contained noisy fluctuations (in the form of dots moving in random directions or segments taking random orientations), they effectively perturbed the observer's visual system with a source of external noise under direct experimental control. These controlled perturbations affect the specific psychophysical choice that the observer makes on a given trial. By establishing a link between trial-to-trial psychophysical choices and the specific noise samples presented on those trials, we can infer which features of the input noise affected the observer (Figs. 3 and 7), in the form of a descriptor variously termed a classified noise image (Ahumada 2002), a perceptual filter (Neri 2011), or a perceptive field (Neri and Levi 2006). For the experiments reported here, the descriptor is a tuning function defined along the axis of motion direction (e.g., Fig. 3) or segment orientation (Fig. 7, E and F). This methodology is termed noise image classification in the vision literature (Ahumada 2002), and it amounts to a form of psychophysical reverse correlation (Neri and Levi 2006; Murray 2011). In its most basic formulation, this approach relies on a linear observer model (Murray 2011), which severely limits its applicability to human vision, a process that is often highly nonlinear (Murray et al. 2002; Tjan and Nandy 2006). However, the methodology can be extended to incorporate nonlinear treatments (Tjan and Nandy 2006; Neri 2010b), which can be particularly useful in specific contexts (Neri 2010b).

In line with previous work (Neri 2004), we used only the noise sample associated with the stimulus selected by the observer on a given trial to compute the perceptual filter. This procedure was necessary because in the 4AFC experiments observers did not express an unambiguous judgment about the three patches that had not been selected (either by the cue or by the observer). Consider the 4AFC feature-based task: observers were asked to indicate which patch moved in a cued direction (or was oriented along a cued orientation). By selecting a specific patch, they express a judgment about that patch, from which we can infer that they perceived it to move in the specified direction. However, this does not tell us which directions they perceived for the other patches; lacking this information, we cannot use those patches to reconstruct the perceptual filter because (as detailed below) we must be able to realign each noise sample to the perceived direction associated with that sample. A similar issue arises in the spatial attention experiment: observers reported their perceived direction for one specific patch only, so we cannot infer how the remaining patches were perceived.

On each trial, we realigned the noise sample associated with the cued patch, or with the patch selected by the observer, to the direction/orientation reported by the observer for that patch. By realignment we mean that the directional axis over which the noise distribution was defined was recentered on the direction reported by the observer on that trial for that noise sample. In the feature-based attention experiments, this direction was always the cued direction, because observers were asked to choose the patch that matched the cued direction, thereby implicitly reporting the direction of the chosen patch. In the spatial attention experiment, it was the direction explicitly reported by the observer. Following realignment, we simply averaged all noise samples from incorrect trials (and subtracted the expected value) to obtain the tuning functions plotted in Figs. 3 and 7. We relied only on incorrect trials (i.e., false-alarm noise samples) and not on correct trials (i.e., hit noise samples) because the former, but not the latter, are known to reflect the perceptual filter with minimal distortion from potential nonlinearities (Neri and Heeger 2002; Neri 2010b). We obtained qualitatively similar (albeit noisier) results when correct trials were included.
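The realign-and-average step can be sketched for the 16-bin direction axis. Assumptions: each trial supplies the 16 noise-dot counts of the relevant patch (signal dots excluded), the reported direction as a bin index, and a correctness flag; the expected value subtracted per bin is taken to be the mean noise count of 2 dots; and bin 8 is used as the arbitrary center of the realigned axis. All names are hypothetical.

```python
EXPECTED_NOISE = 2.0  # assumed expected noise dots per direction bin
N_DIR = 16
CENTER = N_DIR // 2   # arbitrary center bin of the realigned axis

def realign(noise, reported_index):
    """Circularly shift a 16-bin noise sample so that the reported
    direction lands at CENTER."""
    shift = CENTER - reported_index
    return [noise[(i - shift) % N_DIR] for i in range(N_DIR)]

def tuning_function(trials):
    """trials: iterable of (noise_counts, reported_index, correct).
    Average realigned noise over incorrect trials only, then subtract
    the expected value, giving one estimate per realigned direction."""
    acc = [0.0] * N_DIR
    n = 0
    for noise, reported, correct in trials:
        if correct:
            continue  # only false-alarm samples enter the estimate
        for i, v in enumerate(realign(noise, reported)):
            acc[i] += v
        n += 1
    if n == 0:
        raise ValueError("no incorrect trials to average")
    return [a / n - EXPECTED_NOISE for a in acc]
```

On an incorrect trial the observer chose a patch whose signal was not in the reported direction, so any excess of noise dots near the reported direction in that patch reveals which directions drove the wrong choice.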

Gain and tuning metrics.

Before applying any metric to the tuning function (Figs. 4 and 8), we averaged it symmetrically around its center point to increase the signal-to-noise ratio of the measurement. This operation assumes symmetry around the perceived direction/orientation, an assumption we regard as entirely plausible. Our measure of gain (plotted in Fig. 4A) was simply the maximum value of the tuning function. To estimate tuning, we first rescaled the tuning function so that its maximum value was 1 and its minimum value 0 (see Fig. 3B for examples). We then minimized the mean-square error between the rescaled tuning function and the function $G(\sigma)^p$, where G is a Gaussian function with SD σ and mean equal to the center of the tuning function, constrained to range between 0 and 1. This function has two free parameters, σ and p, which we refer to as width and peakedness in Figs. 4 and 8.
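The gain and tuning metrics can be sketched as follows. One interpretive point needs flagging: if G were an ordinary unit-peak Gaussian, then $G^p = \exp(-p x^2 / 2\sigma^2)$ would itself be a Gaussian with SD $\sigma/\sqrt{p}$, and width and peakedness could not be separated. The sketch therefore assumes that "constrained to range between 0 and 1" means G is rescaled to span exactly [0, 1] over the sampled offsets (0 to 180°) before exponentiation; this is our reading, not a confirmed detail. Even so, for narrow tuning the rescaling has little effect and σ and p remain nearly interchangeable. A grid search stands in for the unspecified optimizer, and all names are hypothetical.

```python
import math

STEP_DEG = 22.5
OFFSETS = [k * STEP_DEG for k in range(9)]  # 0, 22.5, ..., 180 deg from center

def symmetric_average(f):
    """Fold a 16-bin tuning function centered at index 8 into 9 values at
    OFFSETS by averaging the bins at +k and -k steps from the center."""
    c = len(f) // 2
    half = [f[c]]
    for k in range(1, c):
        half.append(0.5 * (f[c + k] + f[c - k]))
    half.append(f[0])  # +180 and -180 deg are the same bin
    return half

def gain(half):
    """Gain metric: maximum of the symmetrized tuning function."""
    return max(half)

def rescale(half):
    """Map the tuning function onto [0, 1] (fails for a perfectly flat curve)."""
    lo, hi = min(half), max(half)
    return [(v - lo) / (hi - lo) for v in half]

def raised_gaussian(sigma, p, xs=OFFSETS):
    """G(sigma)**p, with G rescaled to span exactly [0, 1] over xs (assumption)."""
    g = [math.exp(-x * x / (2.0 * sigma * sigma)) for x in xs]
    g_min = g[-1]  # G decreases with distance, so the last offset is its minimum
    return [((v - g_min) / (1.0 - g_min)) ** p for v in g]

def fit_width_peakedness(half, sigmas, ps):
    """Grid search for the (sigma, p) pair minimizing mean-square error."""
    target = rescale(half)
    best = None
    for s in sigmas:
        for p in ps:
            model = raised_gaussian(s, p)
            mse = sum((a - b) ** 2 for a, b in zip(model, target)) / len(target)
            if best is None or mse < best[0]:
                best = (mse, s, p)
    return best[1], best[2]
```

Because the fit is applied after rescaling to [0, 1], width and peakedness are insensitive to overall amplitude, which is captured separately by the gain metric.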

RESULTS

Feature-based attention: performance metrics.

We manipulated attention to the direction of a moving stimulus by cueing observers to a specific motion direction before the appearance of the stimulus: observers saw an informative cue containing two arrows pointing in the attended direction (Fig. 1A); the attended direction could be one of four prespecified directions (oriented diagonally, see methods). This cue was followed by a stimulus consisting of two patches of moving dots (Fig. 1B); a proportion of the dots in each patch (“signal” dots) moved in one of the four possible directions, and the direction selected for one of the two patches always matched the previously cued direction. Observers were asked to choose whether the patch on the left or the patch on the right contained dots moving in the cued direction. The stimulus was followed by an uninformative cue (Fig. 1C). We refer to this type of trial as a “precue” trial.

Throughout each block of trials, observers were randomly presented with either a precue trial or a “postcue” trial. Postcue trials consisted of the same three elements used for precue trials: the stimulus and two cues, one informative and one uninformative. On postcue trials, however, the informative cue appeared after the stimulus, while the uninformative cue appeared before it. In the postcue condition, observers were therefore unable to allocate attention to the cued direction before stimulus presentation. We selected a relatively long interval (700 ms) between cue and stimulus for two main reasons: 1) to exclude any visual interference (e.g., masking) between the two; and 2) to ensure that observers had sufficient time to deploy attention to the cued feature. Regarding the latter point, we emphasize that the type of attentional deployment studied here is endogenously generated, so it is not restricted to the relatively short timescale reported for exogenous (stimulus-driven) attention (Nakayama and Mackeben 1989; Liu et al. 2007; Neri and Heeger 2002).

It is essential to establish whether the precue/postcue manipulation detailed above actually resulted in differential deployment of attention on the part of the human observers: it is conceivable that observers might adopt the same strategy on both precue and postcue trials, given that both trial types offer the same overall information (notice, however, that the informative cue cannot be ignored altogether: performance would then be at chance). Figure 2A plots sensitivity (d') on precue trials (x-axis) vs. sensitivity on postcue trials (y-axis) for this 2AFC task (black symbols): the six observers we tested (each indicated by a different symbol) performed equally well on both trial types (black points fall on the unity line; a two-tailed paired t-test for precue vs. postcue was not significant at P = 0.47). Although it remains possible that the cue manipulation had some effect on our observers (we consider this possibility later in the article), we have no firm evidence that this was the case for the 2AFC task. We therefore modified the protocol into a 4AFC task (see below).
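Sensitivity is reported as d' throughout. The exact conversion is specified in methods; as a minimal sketch, assuming an unbiased observer whose m decision variables are independent unit-variance Gaussians (target mean d', non-targets mean 0) and who chooses the largest one, proportion correct in an m-alternative task can be mapped onto d' by numerically inverting the standard m-AFC integral. The function names are hypothetical.

```python
import numpy as np
from scipy import integrate, optimize, stats


def pc_from_dprime(d, m):
    """Proportion correct in m-AFC for an unbiased max-rule observer.

    Target response ~ N(d, 1), each of the m - 1 non-target responses ~ N(0, 1);
    the observer is correct when the target response is the largest.
    """
    integrand = lambda x: stats.norm.pdf(x - d) * stats.norm.cdf(x) ** (m - 1)
    # The integrand is bounded by a unit Gaussian centred on d, so +/- 8 SD suffices.
    value, _ = integrate.quad(integrand, d - 8.0, d + 8.0)
    return value


def dprime_from_pc(pc, m):
    """Numerically invert pc_from_dprime; pc must lie strictly between 1/m and 1."""
    if not (1.0 / m < pc < 1.0):
        raise ValueError("pc must lie strictly between chance (1/m) and 1")
    return optimize.brentq(lambda d: pc_from_dprime(d, m) - pc, 0.0, 10.0)


# The same proportion correct implies a larger d' in 4AFC than in 2AFC.
print(dprime_from_pc(0.7, 2), dprime_from_pc(0.7, 4))
```

For m = 2 this reduces to the familiar d' = √2·Φ⁻¹(Pc); for m = 4 a given proportion correct corresponds to a larger d', because chance performance is lower.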

In the 4AFC version of the experiment, observers saw four (rather than two) patches of moving dots (Fig. 1E). Each patch contained signal dots moving in one of the four prespecified directions, one direction per patch. As in the 2AFC version of the experiment, observers saw both an informative and an uninformative cue on every trial (Fig. 1, D and F); they were asked to select the spatial location of the patch that contained signal dots moving in the cued direction. Figure 2A shows that the 4AFC version of the experiment resulted in clear sensitivity differences between precue and postcue trials for the 10 observers we tested (blue symbols fall below the unity line at P = 0.001). This result provides a measurable indication that our cue manipulation was effective in inducing different attentional strategies on the part of the observers.
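To make the trial structure concrete, the sketch below generates hypothetical trial descriptions for the 2AFC and 4AFC feature-based tasks. It assumes diagonal directions of 45, 135, 225, and 315 deg and, for the 2AFC case, that the non-cued patch carries one of the three remaining directions; both are assumptions, and the function and field names are illustrative only. It encodes the event order and the 700 ms cue-stimulus interval stated above, not stimulus durations.

```python
import random

# Assumed diagonal motion directions (degrees); the exact values are given in methods.
DIRECTIONS = (45, 135, 225, 315)
CUE_STIMULUS_INTERVAL_MS = 700  # cue-stimulus interval stated in the text


def make_trial(rng, n_patches=4):
    """Return a hypothetical trial description for the feature-based task.

    Precue and postcue trials are interleaved at random; both contain one
    informative and one uninformative cue, presented in opposite order.
    """
    condition = rng.choice(("precue", "postcue"))
    cued = rng.choice(DIRECTIONS)
    if n_patches == 4:
        # 4AFC: each patch carries a different one of the four directions.
        patch_dirs = rng.sample(DIRECTIONS, 4)
    elif n_patches == 2:
        # 2AFC: one patch matches the cue; the other direction is assumed
        # to be drawn from the remaining three.
        other = rng.choice([d for d in DIRECTIONS if d != cued])
        patch_dirs = [cued, other]
        rng.shuffle(patch_dirs)
    else:
        raise ValueError("n_patches must be 2 or 4")
    if condition == "precue":
        events = ["informative cue", "stimulus", "uninformative cue"]
    else:
        events = ["uninformative cue", "stimulus", "informative cue"]
    return {
        "condition": condition,
        "cued_direction": cued,
        "patch_directions": patch_dirs,
        "target_patch": patch_dirs.index(cued),  # index of the correct response
        "events": events,
        "cue_stimulus_interval_ms": CUE_STIMULUS_INTERVAL_MS,
    }


rng = random.Random(0)
trial = make_trial(rng, n_patches=4)
```

Keeping both cues on every trial, and only swapping their order, means precue and postcue trials differ solely in when the task-relevant information arrives.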

In a series of separate experiments, we exploited a double-pass methodology to estimate the intensity of each observer's internal noise for both precue and postcue conditions (see methods). Our interest in measuring internal noise was motivated by two factors: 1) recent electrophysiological work demonstrating that interneuronal correlation is reduced by attentional deployment (Cohen and Maunsell 2009, 2011; Mitchell et al. 2009); it is conceivable that there may be a relationship between interneuronal correlation and behavioral response reliability, the latter being closely related to the concept of internal noise; and 2) the theoretically expected inverse relationship between amplitude of the sensory tuning curve measured using reverse correlation (see next paragraph) and internal noise (Ahumada 2002); to draw meaningful conclusions about potential changes in amplitude it is necessary to ensure that they are not simply the consequence of differences in internal noise. Estimates for both 2AFC and 4AFC experiments, plotted in Fig. 2B, fall within the overall range expected from previous work (Neri 2010a); however, we were unable to measure any significant difference between precue and postcue conditions [points fall on the unity line at P = 0.43 for black (2AFC) symbols and P = 0.92 for blue (4AFC) symbols].
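The double-pass logic can be illustrated with a standard signal-detection model (an assumption here; methods give the procedure actually used, and the 4AFC case requires a multi-alternative extension). Each trial is presented twice with the same external noise sample; the decision variable is signal plus external noise (SD 1, repeated across passes) plus internal noise (SD σ_int, drawn afresh on each pass). Internal noise lowers the agreement between the two passes, so the observed pair of proportion correct (pc) and proportion agreement (pa) constrains σ_int, expressed in units of the external noise SD. The sketch computes the predicted agreement in closed form, solves for σ_int, and checks the estimate on a simulated observer; all names are hypothetical.

```python
import numpy as np
from scipy import integrate, optimize, stats


def bivariate_cdf(h, rho):
    """P(X < h, Y < h) for a standard bivariate normal with correlation rho.

    Computed as a 1-D integral over X, using Y | X = x ~ N(rho * x, 1 - rho**2).
    """
    s = np.sqrt(1.0 - rho ** 2)
    f = lambda x: stats.norm.pdf(x) * stats.norm.cdf((h - rho * x) / s)
    value, _ = integrate.quad(f, -np.inf, h)
    return value


def predicted_agreement(pc, sigma_int):
    """Double-pass agreement for a binary-decision observer.

    Decision variable = signal + external noise (SD 1, identical on both passes)
    + internal noise (SD sigma_int, drawn independently on each pass).
    """
    k = stats.norm.ppf(pc)               # signal divided by total noise SD
    rho = 1.0 / (1.0 + sigma_int ** 2)   # correlation between the two passes
    return bivariate_cdf(k, rho) + bivariate_cdf(-k, rho)


def estimate_internal_noise(pc, pa, lo=0.05, hi=10.0):
    """Internal-to-external noise ratio that reproduces the observed (pc, pa) pair."""
    return optimize.brentq(lambda s: predicted_agreement(pc, s) - pa, lo, hi)


# Simulated observer with a known internal noise ratio of 1.0.
rng = np.random.default_rng(0)
n_trials, signal, true_sigma = 400_000, 1.0, 1.0
external = rng.normal(size=n_trials)   # the same noise sample is replayed on pass 2
pass1 = signal + external + true_sigma * rng.normal(size=n_trials) > 0
pass2 = signal + external + true_sigma * rng.normal(size=n_trials) > 0
pc = 0.5 * (pass1.mean() + pass2.mean())
pa = np.mean(pass1 == pass2)
sigma_hat = estimate_internal_noise(pc, pa)
```

Predicted agreement falls monotonically from 1 (no internal noise) towards pc² + (1 − pc)² (internal noise dominates), which is what makes the inversion well defined.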

Feature-based attention: sensory tuning.

As mentioned previously, each patch of moving dots contained a fixed proportion of signal dots moving in the same specified direction. The remaining dots, termed “noise” dots, moved in random directions for every instance of each patch; this source of external noise affected the choice that observers made on individual trials. We can study the distribution of motion directions sampled by the noise dots contained within the patch selected by the observers; we expect that this distribution will return an image of the motion “filter” that observers relied on in making their selection. This procedure is now well established in the psychophysical literature and is often termed noise image classification (Ahumada 2002); it represents a psychophysical variant of reverse correlation (Neri and Levi 2006; see methods). For the specific application we are presently interested in, this method allows us to derive a full directional tuning curve corresponding to the motion sensor employed by individual observers to process the motion signals delivered by the visual stimulus (Neri and Levi 2008b).
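A minimal simulation of this noise image classification, assuming a hypothetical observer who sums the output of a von Mises directional template over all dots in each patch, adds Gaussian internal noise, and picks the patch with the largest response. The tuning curve is estimated as the mean histogram of noise-dot directions (relative to the cued direction) in the chosen patch minus the mean histogram in the non-chosen patches; this is one common variant of the estimator, and the exact procedure used here is described in methods. Dot counts, bin width, and template concentration are illustrative values.

```python
import numpy as np

rng = np.random.default_rng(1)
n_trials, n_patches, n_noise, n_signal = 20_000, 4, 40, 4   # hypothetical values
bin_width = 15.0
n_bins = int(360 / bin_width)
kappa = 2.0  # concentration of the hypothetical von Mises template


def template(theta_deg):
    """Hypothetical directional template, peaking at 0 deg (the cued direction)."""
    return np.exp(kappa * (np.cos(np.deg2rad(theta_deg)) - 1.0))


# Noise-dot directions, expressed relative to the cued direction.
noise = rng.uniform(-180.0, 180.0, size=(n_trials, n_patches, n_noise))
# Per-patch histograms of noise-dot directions (trials x patches x bins).
bins = np.minimum(((noise + 180.0) // bin_width).astype(int), n_bins - 1)
hists = np.zeros((n_trials, n_patches, n_bins))
np.add.at(hists, (np.arange(n_trials)[:, None, None],
                  np.arange(n_patches)[None, :, None], bins), 1.0)

# Signal directions relative to the cue: patch 0 is the target.
signal_dirs = np.array([0.0, 90.0, 180.0, 270.0])
response = template(noise).sum(axis=2) + n_signal * template(signal_dirs)
response += rng.normal(size=(n_trials, n_patches))   # internal noise
choice = np.argmax(response, axis=1)

# Tuning curve: mean histogram of the chosen patch minus the mean of the others.
rows = np.arange(n_trials)
chosen = hists[rows, choice]
others = (hists.sum(axis=1) - chosen) / (n_patches - 1)
tuning = (chosen - others).mean(axis=0)
centres = -180.0 + bin_width * (np.arange(n_bins) + 0.5)
```

Because every patch contains the same number of noise dots, the estimated curve sums to zero across bins; its positive lobe around 0 deg recovers the shape of the simulated template.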

Examples of such directional tuning curves are shown in Fig. 3 (pooled across observers) for both the 2AFC experiment (A and B) and the 4AFC experiment (C and D). It is apparent from cursory inspection of the raw data (A and C) that the precue condition (black traces) was associated with a tuning curve of larger amplitude compared with the postcue condition (red traces). Furthermore, the postcue tuning curve appears broader and less peaked at target direction (indicated by upward-pointing arrow on the x-axis). To evaluate this type of potential effect on the shape of the tuning curve more clearly we proceeded as follows: 1) because there is no logical reason to expect that directions away from the target direction would have different effects depending on whether they deviated clockwise or anticlockwise, we reduced measurement noise by symmetrically averaging the tuning curve around target direction (second column in Fig. 3); 2) we factored out overall gain differences between the two curves by rescaling each curve along the y-axis to range between its minimum (set to 0) and its maximum (set to 1) values (indicated by horizontal dashed lines in Fig. 3, B and D); and 3) we fitted each curve using a raised Gaussian function (solid lines in Fig. 3, B and D). The result of applying this analysis to the 2AFC data set is shown in Fig. 3B (observer average); the corresponding result for the 4AFC data set is shown in Fig. 3D. For both experiments there appears to be a change in the width of the tuning curve whereby the postcue condition (red traces) returns broader tuning.
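The three analysis steps (symmetric folding, min-max rescaling, curve fitting), together with the amplitude metric (the maximum of each curve), can be sketched as follows. The functional form is an assumption: a baseline plus a Gaussian-like bump, b + a·exp(−(|x|/w)^p), whose width w and exponent p stand in for the width and peakedness parameters defined in methods. In this parameterization a smaller p gives a more cusp-like peak, so p would need to be mapped onto the paper's peakedness convention. Taking the amplitude from the folded curve is also an assumption.

```python
import numpy as np
from scipy.optimize import curve_fit


def fold(directions, curve):
    """Average the curve at +x and -x (0 and 180 deg have a single sample)."""
    directions = np.asarray(directions, float)
    offsets = np.unique(np.abs(directions))
    folded = np.array([curve[np.abs(directions) == d].mean() for d in offsets])
    return offsets, folded


def rescale(curve):
    """Map the curve linearly onto [0, 1] (minimum -> 0, maximum -> 1)."""
    return (curve - curve.min()) / (curve.max() - curve.min())


def raised_gaussian(x, baseline, gain, width, exponent):
    """Assumed tuning model: baseline + gain * exp(-(|x| / width) ** exponent)."""
    return baseline + gain * np.exp(-(np.abs(x) / width) ** exponent)


def tuning_metrics(directions, curve):
    """Return (amplitude, width, exponent) for one tuning curve."""
    offsets, folded = fold(directions, curve)
    amplitude = folded.max()   # assumed: maximum of the folded, unscaled curve
    params, _ = curve_fit(raised_gaussian, offsets, rescale(folded),
                          p0=[0.0, 1.0, 45.0, 2.0],
                          bounds=([-0.5, 0.0, 1.0, 0.5], [0.5, 2.0, 180.0, 10.0]))
    return amplitude, params[2], params[3]


# Noise-free synthetic curve sampled every 15 deg around the target direction.
directions = np.arange(-180, 180, 15)
curve = 0.3 + 1.5 * np.exp(-(np.abs(directions) / 40.0) ** 2)
amplitude, width, exponent = tuning_metrics(directions, curve)
```

Rescaling before fitting removes overall gain, so the fitted width and exponent reflect only the shape of the curve, while amplitude is read off before rescaling.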

It is not possible to draw firm conclusions from qualitative inspection of aggregate data such as those shown in Fig. 3. Nor is it possible to rely on inspection of perceptual filters for individual observers: these show significant variability [expected from previous work (Meese et al. 2005)] and, like the aggregate data, allow only qualitative considerations. We therefore performed additional analyses that captured relevant aspects of the perceptual filters (see below) and quantified each aspect using a single value for each observer. These values could then be subjected to simple population statistics (two-tailed t-tests) to confirm or reject specific hypotheses about the overall shape of the filters. Our conclusions are therefore based on individual observer data, not on the aggregate observer (which is used solely for visualization purposes). This distinction is important because there is no generally accepted procedure for generating an average filter from individual images for different observers (see Neri and Levi 2008b for a detailed discussion of this issue).
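Each per-observer metric yields one precue and one postcue value per observer, and points falling on the unity line correspond to a mean paired difference of zero. A minimal sketch of the two-tailed paired t-test using SciPy's `scipy.stats.ttest_rel`; the widths below are hypothetical values, not data from this study, and `paired_test` is an illustrative name.

```python
import numpy as np
from scipy import stats


def paired_test(precue, postcue):
    """Two-tailed paired t-test across observers (one value per observer)."""
    precue = np.asarray(precue, float)
    postcue = np.asarray(postcue, float)
    result = stats.ttest_rel(precue, postcue)   # two-sided by default
    return result.statistic, result.pvalue


# Hypothetical tuning widths (deg) for six observers; not data from this study.
width_pre = [38.0, 42.0, 35.0, 47.0, 40.0, 44.0]
width_post = [45.0, 44.0, 41.0, 52.0, 43.0, 50.0]
t_stat, p_value = paired_test(width_pre, width_post)
```

A negative t statistic here means the precue values lie below the postcue values, i.e., points fall above the unity line when precue is plotted on the x-axis.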

We relied on three metrics designed to capture three possible ways in which a tuning curve may change: 1) amplitude change (see cartoon above Fig. 4A); 2) width change (Fig. 4B, top); and 3) change in peakedness (Fig. 4C, top). We measured amplitude by simply taking the maximum value of each curve; the other two parameters were obtained directly from the raised Gaussian fit to the rescaled curves (see methods).

The results of this analysis for the 2AFC data set did not return any significant difference between precue and postcue conditions: no change in amplitude (black points in Fig. 4A fall on the unity line at P = 0.5), no change in width (black points in Fig. 4B fall on the unity line at P = 0.76) and no change in peakedness (black points in Fig. 4C fall on the unity line at P = 0.75). This result highlights the importance of carrying out individual observer analysis: inspection of the aggregate tuning curves (Fig. 3, A and B) seemed to suggest that all three characteristics were affected by the cueing manipulation, but this suggestion did not survive a more quantitative analysis that probes data structure at the level of individual participants. We conclude from this analysis and from the sensitivity results detailed previously (Fig. 2A) that, if there was any effect of attention for the 2AFC data set, this effect was too small to survive quantitative inspection.

The results were substantially different for the 4AFC data set, indicated by blue symbols in Fig. 4. All three characteristics differed between precue and postcue conditions: amplitude was larger for the precue condition than for the postcue condition (blue points in Fig. 4A fall below the unity line at P = 0.02), width was larger for the postcue condition (blue points in Fig. 4B fall above the unity line at P = 0.01), and the precue tuning curves presented a more pronounced peak than the postcue curves (blue points in Fig. 4C fall below the unity line at P = 0.01). Overall, the results of this analysis for the 4AFC data set confirmed the observations made from inspection of the aggregate data (Fig. 3, C and D).

It is possible that the same effects were operating in the 2AFC experiments, as suggested by cursory inspection of the aggregate data (notice the similarity between Fig. 3, A and B, and Fig. 3, C and D), and that our data set simply lacked sufficient power to measure them (see above). We cannot rule out this interpretation; however, we emphasize that the lack of a significant effect of the attentional manipulation on sensitivity for the 2AFC data set (black points in Fig. 2A) is unlikely to result from lack of statistical power. We had sufficient data from each observer to estimate sensitivity with good accuracy (see error bars in Fig. 2A), and adding four more observers (to match the 10 used in the 4AFC experiments) would be unlikely to alter our conclusions, because the six observers who participated in the 2AFC experiments show no tendency to depart from the null result (black points in Fig. 2A fall squarely on the unity line). In contrast, the attentional effect on sensitivity in the 4AFC experiments survives even if we randomly remove 4 of the 10 observers (at P = 0.05). Lacking evidence of a clear effect on sensitivity in the 2AFC data set, any effect on the perceptual filters that we may have missed due to lack of power would be difficult to interpret satisfactorily. We therefore decided to perform all the other experiments in this study using 4AFC protocols. A different but related concern is that the lack of significant effects in the 2AFC protocol may have derived from the different memory demands imposed by the two tasks; we take up this possibility in discussion.
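The robustness check for the 4AFC sensitivity effect can be made deterministic by enumerating every subset of 6 out of 10 observers (210 subsets) instead of drawing random subsets, and recording the paired two-tailed p-value for each. The d' values below are hypothetical, chosen only to illustrate the procedure; the analysis reported above used random removal, and `subset_pvalues` is an illustrative name.

```python
from itertools import combinations

import numpy as np
from scipy import stats

# Hypothetical d' values for ten observers; not data from this study.
precue = np.array([1.9, 2.1, 1.7, 2.4, 1.8, 2.2, 2.0, 1.6, 2.3, 1.95])
postcue = precue - np.array([0.5, 0.6, 0.4, 0.7, 0.5, 0.55, 0.45, 0.6, 0.65, 0.5])


def subset_pvalues(pre, post, n_keep):
    """Paired two-tailed p-value for every subset of n_keep observers."""
    pvals = []
    for idx in combinations(range(len(pre)), n_keep):
        idx = list(idx)
        pvals.append(stats.ttest_rel(pre[idx], post[idx]).pvalue)
    return np.array(pvals)


pvals = subset_pvalues(precue, postcue, n_keep=6)   # remove 4 of 10 observers
print(f"{pvals.size} subsets, largest p = {pvals.max():.3g}")
```

Reporting the largest p-value across all subsets gives a worst-case summary, whereas random removal gives a typical-case one.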

Spatial attention: performance metrics and sensory tuning.

Attention can be directed to specific visual features, as prompted by the cue used in the experiments described previously, but it can also be directed to specific spatial locations. There are indications in the literature that these two types of attentional deployment may differ in important respects (Ling et al. 2009); we therefore conducted additional experiments to establish whether the effects we measured for feature-based cues would also be obtained using spatial cues. Our goal in designing these additional experiments was specifically to ensure that the conditions of the two sets of experiments would be comparable; for this reason we used an identical visual stimulus but modified the cue and the task (see below).

In the spatial version of the experiment, the informative cue consisted of an arrow pointing to one of the four possible patch positions around fixation (Fig. 5A). Observers were asked to report the direction of the cued patch as a choice out of the four prespecified directions that were used for the feature-based experiment. We emphasize that the stimulus (Fig. 5B) was identical; only the task (and associated cue) changed: in the feature-based version of the experiment observers were asked to indicate which patch moved in the cued direction, whereas in the spatial version of the experiment they were asked to indicate the direction of the cued patch. Both tasks conformed to a 4AFC protocol.

The spatial cueing manipulation was effective in inducing differential deployment of attention on precue and postcue trials: sensitivity was significantly higher for the former, as shown by the red symbols in Fig. 6A (points fall below the unity line at P = 0.0005). For the spatial experiments, we monitored eye position during each trial, because it is conceivable that spatially cueing observers to a specific patch may prompt them to move their eyes towards that patch (although they were specifically instructed not to do so and to maintain fixation on the central marker at all times). Yellow and orange symbols in Fig. 6A show sensitivity computed from trials on which observers did or did not move their eyes, respectively (see methods for how this distinction was drawn); in both cases the pattern is very similar to that obtained from all trials (P = 10⁻³ and P = 10⁻⁴, respectively). In line with the results from the feature-based experiments, the internal noise measurements (Fig. 6B) did not show any difference between precue and postcue conditions: points fall on the unity line regardless of whether they come from all trials (P = 0.45), eye-movement trials (P = 0.47), or no-eye-movement trials (P = 0.79).

Figure 7, A and B, shows tuning curves for the spatial attention experiment. The two curves differ in amplitude (Fig. 7A), but the aggregate data show no apparent difference in tuning between precue and postcue conditions (Fig. 7B). When we applied the same quantitative analysis used for the feature-based experiments, however, we found that at the level of individual observers there was a small but detectable change in tuning: tuning width was larger on postcue trials than on precue trials (red points in Fig. 8B fall above the unity line at P = 0.03), and peakedness was larger on precue trials (red points in Fig. 8C fall below the unity line at P = 0.03). These effects seemed smaller than those we measured for the feature-based experiments but were measurable. The change in amplitude was clear (red points in Fig. 8A fall below the unity line at P = 0.01).

Attention directed to orthogonal feature.

A previous study of the potential effect of feature-based attention on directional tuning reported no change in tuning width under attentional deployment (Murray et al. 2003); this previous result may appear to contradict the results we detailed above for feature-based attention, but there is an important difference between the way feature-based attention was defined in the previous study and ours. In our study, feature-based attention was directed to the feature that was manipulated by the noisy perturbation and for which we measured sensory tuning, i.e., motion direction. In the study by Murray et al. (2003), feature-based attention was directed to a feature (the color of the dots) that was not manipulated by the noisy perturbation in the stimulus; this feature was effectively orthogonal to the feature for which the authors measured sensory tuning. We were interested in determining whether these two types of feature-based attention may be associated with different effects on sensory tuning, as indicated by the different results obtained in our study and in the previous study by Murray et al. (2003).

For this experiment, we presented all dots within a large aperture centered on fixation (Fig. 5E), so that their spatial location would be irrelevant for grouping them into separate sets. Instead, dots could be grouped by color into one of four groups: black, white, red, and green (see Fig. 5E). Signal dots within each group moved in one of the four prespecified directions; observers were asked to report the direction (out of the four possible ones) of the group indicated by an informative color cue. This task is similar to the spatial attention task, except that the task-relevant dot group is defined by a cued feature (its color) rather than by its spatial location (see Fig. 5, D and F, for examples of informative and uninformative cues in this experiment).

The cueing manipulation was very effective: sensitivity was much higher on precue trials than on postcue trials, as clearly demonstrated by the magenta symbols in Fig. 6C (points fall below the unity line at P = 10⁻⁷). Perhaps surprisingly, we also observed a significant difference in the estimated amount of internal noise: this quantity was larger on postcue trials (magenta points fall above the unity line in Fig. 6D at P = 0.01). More specifically, internal noise was near 1 on precue trials but reached 1.5 on postcue trials. This result is noteworthy because we did not observe any difference in internal noise for the experiments detailed previously.

Figure 7, C and D, shows tuning curves for this orthogonal feature-based experiment. The two curves clearly differ in amplitude (Fig. 7C), but there is no apparent difference in tuning between precue and postcue conditions (Fig. 7D). The quantitative analysis on individual observers confirmed these observations: despite a marked change in amplitude between precue and postcue conditions (magenta points fall below the unity line in Fig. 8D at P = 0.001), there was no difference in either width (magenta points fall on the unity line in Fig. 8E at P = 0.12) or peakedness (magenta points fall on the unity line in Fig. 8F at P = 0.14). As we discuss in more detail in the discussion, these results are consistent with those reported by Murray and collaborators (Murray et al. 2003). It is also relevant in this context that the reduction in amplitude on postcue trials may reflect the higher internal noise we measured for this condition (Ahumada 2002).
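The expected inverse relation between filter amplitude and internal noise (Ahumada 2002) can be checked numerically. The sketch assumes a simplified 2AFC linear template observer (not the 4AFC directional observer of these experiments) with unit external noise and a unit-norm template; the classification image is the mean noise in the chosen interval minus that in the unchosen interval, and its amplitude is taken as its projection onto the template. For this observer the expected amplitude has a closed form, 4/sd·φ(s/sd) with sd = √(2 + 2σ_int²), which the simulation should match. Template shape and signal strength are hypothetical.

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(2)
x = np.arange(-8, 8)
template = np.exp(-(x / 3.0) ** 2)
template /= np.linalg.norm(template)   # unit norm: template output to noise has SD 1
signal_strength = 1.5                  # hypothetical


def simulated_amplitude(sigma_int, n_trials=50_000):
    """Classification-image amplitude (projection onto the template) in 2AFC."""
    noise = rng.normal(size=(n_trials, 2, x.size))
    stimulus = noise.copy()
    stimulus[:, 0, :] += signal_strength * template      # interval 0 holds the target
    response = stimulus @ template + sigma_int * rng.normal(size=(n_trials, 2))
    choice = np.argmax(response, axis=1)
    rows = np.arange(n_trials)
    image = (noise[rows, choice] - noise[rows, 1 - choice]).mean(axis=0)
    return image @ template


def predicted_amplitude(sigma_int):
    """Closed form: E[u * sign(s + u + e)] = 2 var(u) / sd * pdf(s / sd), var(u) = 2."""
    sd = np.sqrt(2.0 + 2.0 * sigma_int ** 2)   # SD of the interval difference
    return 4.0 / sd * stats.norm.pdf(signal_strength / sd)


for sigma in (0.2, 1.0, 2.0):
    print(sigma, simulated_amplitude(sigma), predicted_amplitude(sigma))
```

Because amplitude shrinks as internal noise grows, an amplitude difference between conditions can only be attributed to attention once internal noise has been shown to be comparable.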

Effects of feature-based attention on sensory tuning for orientation.

A relevant question is whether the results detailed previously can be generalized to other sensory dimensions. We have demonstrated that feature-based attention can sharpen sensory tuning for processing the direction of moving objects; can we then extrapolate this result to state that feature-based attention sharpens sensory tuning for processing other visual features of an object? We tested the applicability (or lack thereof) of this type of generalization by characterizing sensory tuning for another important visual attribute of objects: their orientation. This experiment was conceptually identical to the feature-based attention experiments with moving dots, except the stimulus consisted of oriented (static) segments (Fig. 5H). Each of the four patches presented around fixation contained signal segments of one of four prespecified orientations; observers were asked to report which patch contained orientation signals corresponding to the orientation indicated by the informative cue (Fig. 5G). We derived sensory tuning for orientation by applying reverse correlation to the noise segments, the orientation of which was perturbed randomly.

As for the previous 4AFC experiments, we were able to confirm that the cueing manipulation was effective: sensitivity was higher on precue trials than on postcue trials, as clearly demonstrated by the green symbols in Fig. 6C (points fall below the unity line at P = 10⁻⁵). We did not observe a difference in the estimated amount of internal noise (green points fall on the unity line at P = 0.17). With respect to these two metrics, the results for orientation processing are similar to those we reported earlier for motion direction in the 4AFC experiment (Fig. 2).

Figure 7, E and F, shows tuning curves for orientation tuning. The two curves appear to differ in amplitude (Fig. 7E) but not in tuning (Fig. 7F) between precue and postcue conditions. The quantitative analysis on individual observers confirmed that, despite a significant change in amplitude between the two conditions (green points fall below the unity line in Fig. 8D at P = 0.02), there was no difference in either width (green points fall on the unity line in Fig. 8E at P = 0.52) or peakedness (green points fall on the unity line in Fig. 8F at P = 0.46). This result indicates that the effect of attention may depend not only on whether it is directed to a visual attribute (feature-based) or to a spatial location but also on which visual attribute is being considered (see discussion).

DISCUSSION

Summary of results.

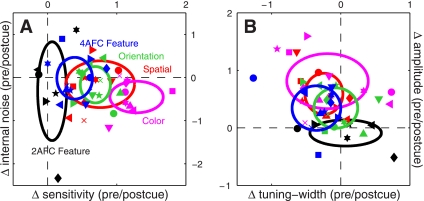

Figure 9 presents a compact view of all the experiments detailed in the results section. Figure 9A plots the change in sensitivity between precue and postcue conditions on the x-axis: for this metric a value of 0 (vertical dashed line) indicates no change, positive values indicate greater sensitivity on precue trials, and negative values indicate greater sensitivity on postcue trials. Except for the 2AFC feature-based experiments (black symbols), all points fall to the right of the vertical dashed line, demonstrating that sensitivity was higher on precue trials across all the 4AFC experiments we ran. The y-axis plots a similar metric for internal noise: in this case there was no difference between precue and postcue conditions (points fall on the horizontal dashed line) for all experiments except the feature-based condition in which attention was directed to an orthogonal feature (magenta symbols fall below the horizontal dashed line).

Fig. 9.

Summary of the entire data set (461K trials). A: change in sensitivity between precue and postcue conditions (x-axis), computed as the logarithm of the ratio between d' on precue trials and d' on postcue trials (log-ratio). This quantity is equal to 0 when the two d' values are equal; zero points are marked by dashed lines. The y-axis plots the same calculation for internal noise. B: the same calculation for amplitude of the tuning functions (y-axis) and for a composite index of tuning (x-axis). The composite index of tuning was obtained by averaging the log-ratio of the width estimate and minus the log-ratio of the peakedness estimate. The negative sign is necessary because width and peakedness relate to tuning sharpness in opposite directions: a sharper tuning function has smaller width and/or larger peakedness. Negative values of the resulting composite index (points falling to the left of the vertical dashed line in B) correspond to sharper tuning functions on precue trials; positive values (points falling to the right of the vertical dashed line in B) correspond to sharper tuning on postcue trials. Black symbols refer to 2AFC feature-based attention experiments, blue to 4AFC feature-based attention experiments, red to spatial attention experiments, magenta to color-based attention experiments, and green to orientation-based attention experiments. Ovals are centered on mean values, with width and height showing SD across observers.
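The quantities plotted in Fig. 9 reduce to a few lines of arithmetic. A minimal sketch, assuming the natural logarithm (the legend does not state the base, which affects scale but not sign); the function names and single-observer values are hypothetical.

```python
import numpy as np


def log_ratio(precue, postcue):
    """log(precue / postcue): 0 when equal, > 0 when the precue value is larger."""
    return np.log(np.asarray(precue, float) / np.asarray(postcue, float))


def composite_tuning_index(width_pre, width_post, peak_pre, peak_post):
    """Mean of the width log-ratio and minus the peakedness log-ratio.

    Negative values indicate sharper tuning on precue trials (smaller width
    and/or larger peakedness); positive values indicate sharper postcue tuning.
    """
    return 0.5 * (log_ratio(width_pre, width_post) - log_ratio(peak_pre, peak_post))


# Hypothetical single-observer values, for illustration only.
dprime_change = log_ratio(1.8, 1.2)                            # x-axis of Fig. 9A
tuning_change = composite_tuning_index(30.0, 40.0, 2.0, 1.5)   # x-axis of Fig. 9B
```

Because each term is a log-ratio, swapping the precue and postcue values simply flips the sign of the index, which is why the zero lines in Fig. 9 separate precue from postcue advantages.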

Figure 9B plots the change in tuning (composite index) on the x-axis vs. the change in amplitude on the y-axis; the composite index combines the width and peakedness measurements presented previously (see methods). All 4AFC experiments showed a change in amplitude (colored points fall above the horizontal dashed line), but only two experiments resulted in a measurable change in tuning: the feature-based attention experiment in which attention was directed to the probed feature (blue symbols fall to the left of the vertical dashed line) and the spatial attention experiment (red symbols). In both cases, attention sharpened sensory tuning (sharper tuning for the precue condition), but the effect was clearer for the feature-based experiments (notice that in Fig. 9B all blue points fall to the left of the vertical dashed line, whereas some red points fall to the right). The amplitude changes reported on the y-axis are not attributable to differences in internal noise because, in general, there was no measurable change in this quantity (as discussed in the previous paragraph; see y-axis in Fig. 9A). This is an important observation because filter amplitude is theoretically expected to depend on internal noise (Ahumada 2002), so we would not be in a position to draw firm conclusions about amplitude had we not measured internal noise.

Potential role of memory limitations.

It may be argued that the difference between precue and postcue conditions pertained not to attention but to memory. Consider, for example, the spatial attention experiments. On precue trials, observers know before seeing the stimulus that only one patch is relevant, and therefore only the perceived direction of that patch needs to be remembered when generating their final response. On postcue trials, they do not know which patch they will have to evaluate, so in a sense they are asked to remember the perceived direction of all four patches to perform effectively once the informative cue appears after the stimulus. The memory requirement on postcue trials, but not on precue trials, therefore approaches the storage limit for humans (Cowan 2001). Is it possible that the effects we observed on sensory tuning are a consequence of a memory bottleneck? This possibility is unlikely because all 4AFC protocols we used imposed similar memory requirements, yet we observed retuning only in some experiments (e.g., direction-based attention) and not in others (e.g., color-based and orientation-based attention). This was true even when the same visual feature (motion direction) was being mapped (compare results from experiments where attention was directed to motion direction in Fig. 3, C and D, vs. those in which attention was directed to an orthogonal feature in Fig. 7, C and D) or when stimulus characteristics remained unchanged [compare the smaller retuning effect in the spatial (Fig. 7, A and B) vs. feature (Fig. 3, C and D) attention experiments]. It is therefore unlikely that our results in the 4AFC experiments reflect the role of memory as opposed to attention.

We cannot rule out a memory-based interpretation for the difference between 2AFC and 4AFC variants of the feature-based attention experiments (Figs. 2, 3, and 4). In the 2AFC experiments, observers were effectively asked to retain two items in working memory, as opposed to four in the 4AFC variant; this difference is substantial for a system with a four-item bottleneck (Cowan 2001). We are not in a position to address this issue effectively because we only collected data for one experiment using a 2AFC protocol. Further experiments will be necessary to clarify the exact reasons behind the differences we observed between the two protocols. In this context, it is also important to recognize that there is a reasonable attention-based explanation for the observed difference, relating to the possibility that the 4AFC protocol imposed more demands on the attentional system by requiring that attention be deployed to a larger number of items: it is conceivable that the residual attention directed to the postcue item (as a fraction of that directed to the precue item) may be smaller in the 4AFC protocol than in the 2AFC protocol, leading to a larger attentional effect in the former. In other words, although we cannot exclude a memory-based interpretation (and can only partially exclude an interpretation based on lack of statistical power, see relevant subsection in results), our data are not inconsistent with an interpretation based on the notion of attentional deployment.

The above discussion brings up a methodological question: why did we opt for this specific precue/postcue paradigm (Fig. 1), given its potential memory implications that may weaken the specificity of our conclusions in relation to visual attention? In particular, we cannot completely exclude selective, attention-like effects of the informative postcue on the mnemonic representation of the visual stimulus (Coltheart 1980; Orenstein and Holding 1987); this potential confound may have been at least partly avoided by not having a postcue at all. This was not an option, however, because of several problems associated with this design choice that we briefly discuss below.

First, in the absence of an informative postcue, it is unclear how the task should be specified. Consider, for example, the feature-based experiment, in which observers had to indicate which patch moved in the cued direction. All patches contained signal dots moving in one of four directions; without a cue to one of these directions, no patch is defined as the target patch. One way to circumvent this problem would have been to choose one specific direction (say, upwards) from the start and inform observers at the beginning of each block that this would be the target direction. This design, however, would render the precue irrelevant, and no manipulation of feature attention would be possible. Alternatively, we could have redesigned our stimuli so that only one patch contained signal dots moving in the target direction: on cued trials observers would be told which direction that is, and on uncued trials they would not know but would still have to choose the patch containing some coherent motion signal. The problem with this design is that it does not enforce the use of a directional detector: on both cued and uncued trials, the observer could detect the target patch by relying on a motion coherence (nondirectional) detector (effectively ignoring the cue). Because our goal was to map the tuning of a putative directional detector, a design that does not enforce reliance on such detectors would severely complicate any interpretation of our results.

The list of design issues is long; after considering all possibilities, we settled on the specific design depicted in Fig. 1 because it satisfies a number of important requirements, not least that the number of alternative forced choices must be the same between precue and postcue conditions in order for the derived perceptual filters to be comparable. For example, it is possible to show that the amplitude of the perceptual filter may depend on the number of choices in the AFC design (unpublished theoretical observations); for this reason, in previous work on spatial cueing where we used different AFC designs (2AFC for cued vs. 8AFC for uncued), we were not in a position to compare amplitude directly (Neri 2004).

Potential relations to existing electrophysiological literature.

Linking psychophysical results to the properties of single neurons is a daunting enterprise with several potential pitfalls, but it represents the final goal of any satisfactory account of attention. In this section, we make a brief speculative attempt to interpret our results in relation to the known properties of single neurons in visual cortex as relayed to us by electrophysiological studies on this topic, with the understanding that our approach is far less relevant to establishing this link than simultaneous psychophysics and electrophysiology in awake behaving animals (e.g., Cohen and Maunsell 2011). In other words, our results have direct implications for human behavior and can be firmly interpreted only within this context. Outside such context they remain speculative.

Earlier single-unit studies suggested that both spatial (McAdams and Maunsell 1999) and feature (Treue and Martinez-Trujillo 1999) attention lead to response enhancement (scaling) with negligible tuning changes. This notion was confirmed for spatial attention in subsequent studies using different visual features (e.g., Cook and Maunsell 2004), but it now appears to require substantial revision in relation to feature attention: more recent studies indicate that feature attention can lead to measurable changes in the tuning of single neurons and/or neuronal populations (Martinez-Trujillo and Treue 2004; Khayat et al. 2010). There is no evidence to suggest that different features, such as motion and orientation, are affected differently by attention at the neuronal level [existing studies (McAdams and Maunsell 1999; Cook and Maunsell 2004) would suggest similarities], and we are not aware of any electrophysiological study on the potential effect of attention directed to an orthogonal feature.

Our results are broadly consistent with the literature summarized above: we observed robust tuning changes only for feature-based attention, in line with recent single-unit studies (Martinez-Trujillo and Treue 2004; Khayat et al. 2010). There is one additional point of potential contact between our measurements and those from single neurons: the effect of attention on intrinsic noise. In general, we found that this quantity was not affected by attention, except for attention directed to an orthogonal feature (Fig. 5, D–F); the effect for this condition seemed convincing (virtually all magenta points in Fig. 8D fall above the unity line). We can try to relate this result, at least qualitatively, to recent measurements of interneuronal correlation (Cohen and Maunsell 2009; Mitchell et al. 2009; Cohen and Maunsell 2011). Given the highly speculative nature of this exercise, it seems sensible to limit our discussion to the overall direction of the effect: interneuronal correlation is reduced by attentional deployment (Cohen and Maunsell 2009; Mitchell et al. 2009), while we found that internal noise was reduced by attention. At first, these two findings may appear inconsistent: if interneuronal correlation is reduced, one may expect more variable responses across neurons and therefore more overall internal variability. However, the link between interneuronal correlation and the intrinsic variability of the read-out code depends on the origin of the correlation: current thinking ascribes most interneuronal correlation to ongoing cortical activity (Mitchell et al. 2009), the suppression of which may lead to a more efficient (less noisy) code (Cohen and Maunsell 2011). This effect would be consistent with lower behavioral internal noise in the presence of attention (as we report here for one type of attentional manipulation).
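A textbook relation helps show why weaker interneuronal correlation need not imply a noisier read-out. If a hypothetical decoder simply averages N neurons with equal variance σ² and uniform pairwise correlation ρ, the variance of the average is σ²[1 + (N - 1)ρ]/N, which decreases as ρ decreases. The sketch below assumes this simple averaging read-out and homogeneous correlations, and checks the formula against a shared-plus-private noise simulation; it is an illustration only, not a model of the mechanism proposed by Cohen and Maunsell (2011).

```python
import numpy as np

def pooled_variance(n_neurons, single_var, rho):
    """Variance of the mean of n neurons with equal variance and uniform correlation rho."""
    return single_var * (1.0 + (n_neurons - 1) * rho) / n_neurons

def simulate_pooled_variance(n_neurons, rho, n_samples=20000, seed=0):
    """Monte Carlo check with unit-variance neurons sharing one common noise source."""
    rng = np.random.default_rng(seed)
    shared = rng.standard_normal((n_samples, 1))            # common fluctuation (all neurons)
    private = rng.standard_normal((n_samples, n_neurons))   # independent fluctuation per neuron
    responses = np.sqrt(rho) * shared + np.sqrt(1.0 - rho) * private  # pairwise corr = rho
    return responses.mean(axis=1).var()

# Lowering the correlation from 0.2 to 0.05 lowers the variance of the pooled signal.
for rho in (0.2, 0.05):
    print(rho, pooled_variance(100, 1.0, rho), simulate_pooled_variance(100, rho))
```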

Relations to previous psychophysical literature.

Various studies have examined how sensory tuning may be affected by attentional deployment (examples are discussed below); none of them, however, was in a position to compare results across a wide range of conditions in experiments that were directly comparable in both experimental design and the method used to estimate sensory tuning. In this respect, our study offers a unique opportunity to examine the effect of attention in a comprehensive and cohesive context.

We draw a distinction among existing studies on this topic based on whether they relied on what we will refer to as threshold-based techniques (e.g., contrast sensitivity, masking) or on reverse-correlation techniques (like the current study). Although this distinction is to some extent arbitrary, it is motivated by the observation that threshold-based studies have often reached contradictory conclusions on the topic of interest here, whereas results obtained using psychophysical reverse correlation have all been consistent across different stimuli, tasks, and laboratories (see Neri and Levi 2006 and further discussion below).

The threshold-based literature has not always been consistent with the psychophysical reverse correlation literature, nor has it been consistent with itself. As an example of the latter inconsistency, Yeshurun and Carrasco (1998) reported that spatial cueing sharpened spatial resolution, i.e., that it modified sensory tuning for an orientation task. This result is at odds with results from other laboratories: for example, Baldassi and Verghese (2005) reported that spatial cueing increases sensitivity to all orientations uniformly, without changes in tuning. More recently, Ling et al. (2009) reported that spatial attention does not modify sensory tuning, in line with Baldassi and Verghese (2005) but not with Yeshurun and Carrasco (1998). We have chosen to regard the article by Ling et al. (2009) as representative of the currently accepted knowledge emerging from the threshold-based literature. According to this view, sensory tuning is modified by feature-based attention but not by spatial attention; a similar distinction has been drawn by Baldassi and Verghese (2005) (see below for a more detailed discussion of this study). The former result, retuning under feature-based attention, is in line with evidence of tuning-curve narrowing. Our results are in overall agreement with this notion, although we did measure a detectable (i.e., statistically significant) effect of spatial attention too.

The psychophysical reverse correlation literature has consistently reported no retuning following attentional deployment. Using different stimuli and tasks, Eckstein et al. (2002), Murray et al. (2003), Neri (2004), and Busse et al. (2008) found no sensory retuning in the presence of attention. These results may seem to contradict those reported here, as the present study falls within the same methodological category. However, a closer look at those previous studies and their relation to ours shows that, on the contrary, our results are in complete agreement with them. To see this, first note that the attentional manipulation for which we found retuning, i.e., feature-based attention where the attended feature is the feature relevant to the task (blue symbols in Fig. 4), has never been studied before in the reverse correlation literature. Murray et al. (2003) measured directional tuning curves with and without feature-based attention, but the attended feature (the color of the moving dots) was not the task-relevant feature (motion direction); their experiments are therefore more directly comparable to our experiments with color-labeled dots (Fig. 5H), for which we found no retuning (Fig. 7D), in agreement with those authors. Busse et al. (2008) also measured directional tuning curves with and without attention, but their attentional manipulation involved a central fixation task unrelated to the main task; once again, the conditions of their experiments are more similar to those of our experiments with color-labeled dots than to those probing attention directed to the task-relevant feature (Fig. 1). As in the case of Murray et al. (2003), our results are therefore consistent with those reported by Busse et al. (2008).

With regard to spatial attention, we found some retuning, although it was not as robust as that observed for feature-based attention. Eckstein et al. (2002) measured spatial filters for detecting a bright Gaussian blob with and without spatially directed attention and found no change in spatial tuning with attention. Their primary measurement (a spatial filter) was very different from ours (tuning curves for direction/orientation), so it is difficult to relate the two sets of experiments. Perhaps more similar to our current experiments were those reported by Neri (2004), who measured orientation tuning curves with and without spatial attention and found no retuning. There may seem to be an inconsistency here with our results, but the critical difference is that in our experiments on spatial attention we measured directional tuning, not orientation tuning as in Neri (2004). As we have demonstrated here for feature-based attention, retuning may be observed for motion direction (Fig. 3D) but not for orientation (Fig. 7F) under almost identical experimental conditions. It is therefore likely that the small effect of spatial attention on directional tuning we report here is specific to motion direction and may not extend to the dimensions of space and/or orientation. Furthermore, our ability to resolve an effect of spatial attention where previous studies did not may have resulted from a substantial difference in data mass: we tested 10 observers per condition, each collecting 10K trials on average per condition (the total number of trials collected for this study was close to half a million). These figures should be compared with four observers (12.5K trials per observer) in the case of Eckstein et al. (2002) and four observers (3.4K trials per observer) in the case of Neri (2004). Finally, although these studies all relied on some variant of psychophysical reverse correlation, the stimuli and specific methodologies used in each study differed substantially from one another, making direct comparisons difficult.
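The data-mass comparison can be made explicit with a few lines of arithmetic. The sketch takes the quoted observer and trial counts at face value (for the present study the count is per condition; for the earlier studies it is per observer as quoted) and assumes, as a rough approximation that ignores between-observer variability, that the standard error of a pooled filter estimate scales as 1/sqrt(N).

```python
import math

# (observers, trials per observer) as quoted in the text.
DATASETS = {
    "present study": (10, 10_000),
    "Eckstein et al. (2002)": (4, 12_500),
    "Neri (2004)": (4, 3_400),
}

def total_trials(observers, trials_per_observer):
    return observers * trials_per_observer

def relative_standard_error(n_trials, reference_trials):
    """Standard error relative to a reference dataset, assuming SE scales as 1/sqrt(N)."""
    return math.sqrt(reference_trials / n_trials)

reference = total_trials(*DATASETS["present study"])
for name, (observers, per_observer) in DATASETS.items():
    n = total_trials(observers, per_observer)
    print(f"{name}: {n} trials, relative SE {relative_standard_error(n, reference):.2f}")
```

Under this assumption, the pooled estimates from the earlier datasets would carry roughly 1.4 and 2.7 times the standard error of the present per-condition estimate.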

Notwithstanding the above comments, there are areas where our results differ significantly from earlier reports and that will require clarification. An example is provided by Baldassi and Verghese (2005), who used pattern masking to measure orientation tuning when attending to line orientation and spatial location. They reported that spatial attention increases sensitivity to all orientations, i.e., an increase in response gain with no change in tuning. This result is consistent with those we report here (Fig. 7B). However, these authors also reported that feature-based attention, where the attended feature was orientation itself, selectively increased sensitivity for the target orientation, a result that would seem to indicate retuning. We were not able to measure any retuning for feature-based attention in the case of orientation filters (see Fig. 7F and Fig. 8, E and F). There are many potential explanations for this discrepancy with Baldassi and Verghese (2005), not least the fact that we used completely different methodologies/stimuli and that our primary measurements (perceptual filters) are not readily comparable to theirs (sensitivity thresholds). However, this inconsistency does point to the need for further experiments to clarify the role of feature-based attention in the perception of oriented elements.

Quirky feature in the data.

We alert readers to a puzzling feature of our results for which we do not have a ready explanation. Consider the experimental protocol we used to study feature-based attention, depicted in Fig. 1, D–F, and compare it to the protocol we used to study spatial attention, depicted in Fig. 5, A–C. The stimulus was identical in these two protocols (compare Fig. 1E with Fig. 5B); the only difference lay in how observers were asked to report on the direction of a specific patch: in the feature-based experiment, they were asked to indicate which patch moved in a specified direction; in the spatial experiment, they were asked to indicate the direction of the patch at a specified location. On postcue trials, observers did not know which direction/location would be specified for the purpose of generating their response, so in principle they had to encode the direction of all 4 patches in both experiments. In other words, it may be reasonable to expect that on postcue trials observers processed the stimulus in very similar ways in the two experiments. Yet our tuning measurements differed: the directional tuning curve from postcue trials (red trace) in the feature-based experiments (Fig. 3D) was broader than that from postcue trials in the spatial attention experiments (Fig. 3B). This result may suggest that the shape of the tuning curve depends on the specific way in which observers are asked to report on the stimulus: for example, they may adopt different strategies for encoding the stimulus depending on the task they know they will be asked to perform. This interpretation remains speculative, as we do not have enough data to reach firm conclusions regarding this feature of our data set.

Conclusions.

The phenomenon we term attention affects sensory processing in both aspecific and specific ways, where by specific we mean that a given effect (whether involving gain or tuning changes) pertains to one kind of attentional manipulation (e.g., spatial attention) and not others (e.g., feature-based attention). It boosts the overall gain of sensory tuning (without affecting its bandwidth) across the board, without specificity for visual attribute and/or attentional manipulation. It can also retune sensory processing by sharpening the underlying perceptual filter; however, this effect is highly specific and only observed for certain visual attributes (here motion direction) and attentional manipulations (feature-based attention directed to the task-relevant feature).
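The distinction between a gain change (amplitude scaled, bandwidth unchanged) and retuning (bandwidth reduced) can be illustrated with a hypothetical Gaussian tuning curve over motion direction. The Gaussian shape, the 40 deg and 30 deg bandwidths, and the half-width-at-half-height metric are illustrative assumptions, not the fitting procedure used in this study.

```python
import numpy as np

def gaussian_tuning(direction_deg, amplitude, bandwidth_deg):
    """Hypothetical Gaussian tuning curve centred on 0 deg, with circular wrapping."""
    offset = (direction_deg + 180.0) % 360.0 - 180.0   # wrap offsets to [-180, 180)
    return amplitude * np.exp(-0.5 * (offset / bandwidth_deg) ** 2)

def half_width_half_height(direction_deg, curve):
    """Half of the angular span over which the curve is at least half its peak."""
    above = direction_deg[curve >= curve.max() / 2.0]
    return (above.max() - above.min()) / 2.0

directions = np.arange(-180.0, 180.0, 1.0)
baseline = gaussian_tuning(directions, amplitude=1.0, bandwidth_deg=40.0)
gain_change = gaussian_tuning(directions, amplitude=2.0, bandwidth_deg=40.0)  # scaled only
sharpened = gaussian_tuning(directions, amplitude=1.0, bandwidth_deg=30.0)    # narrower

for label, curve in [("baseline", baseline), ("gain", gain_change), ("sharpened", sharpened)]:
    print(label, curve.max(), half_width_half_height(directions, curve))
```

Doubling the amplitude leaves the half-width untouched, whereas reducing the bandwidth narrows it; this is the sense in which gain and tuning effects are separable in the summary above.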

It is possible that all the effects reported here, spanning different visual attributes and attentional manipulations, may be captured by a single model. For example, Reynolds and Heeger (2009) have recently formulated a model of visual attention that is potentially able to account for a wide range of relevant results from single-unit electrophysiology. This model is unlikely to explain some of our results (for example the unexpected finding detailed in the previous section) and would need to be extended in some respects (for example to incorporate the effect of attention directed to an orthogonal feature), but it may offer important insights for interpreting the effects reported here. As a specific example, it may explain the observed difference between motion and orientation experiments (compare Fig. 3D with Fig. 7F): for consistency we adopted comparable stimulus sizes for the two sets of experiments (Figs. 1E and 5H), but the receptive field size of motion-sensitive MT neurons is typically larger than the receptive field size of orientation-selective V1 neurons (Albright and Desimone 1987); the normalization model of attention predicts that the effect of attentional deployment should depend on the ratio between stimulus and receptive field size (Reynolds and Heeger 2009), potentially explaining the difference we report here between directional tuning and orientation tuning.
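A heavily simplified, space-only sketch can convey why a normalization account predicts that attentional modulation depends on stimulus size. It follows the general structure R = A·E / (S + σ), where E is the stimulus drive, A an attention field, and S a suppressive drive obtained by pooling A·E over a broader kernel. All widths, gains, and the semi-saturation constant are hypothetical; this is an illustrative toy, not the implementation of Reynolds and Heeger (2009).

```python
import numpy as np

def gaussian(x, center, width):
    return np.exp(-0.5 * ((x - center) / width) ** 2)

def normalized_response(stim_width, attn_gain, attn_width=2.0, rf_width=1.0,
                        supp_width=4.0, contrast=1.0, sigma=0.01):
    """Response at x = 0 of a 1-D normalization toy: R = A*E / (S + sigma)."""
    x = np.linspace(-20.0, 20.0, 801)                      # spatial positions (arbitrary units)
    stimulus = contrast * (np.abs(x) <= stim_width / 2.0)  # uniform patch of given width
    rf = gaussian(x, 0.0, rf_width)
    rf /= rf.sum()
    drive = np.convolve(stimulus, rf, mode="same")         # stimulus drive E
    attention = 1.0 + (attn_gain - 1.0) * gaussian(x, 0.0, attn_width)  # attention field A
    excitatory = attention * drive
    pool = gaussian(x, 0.0, supp_width)
    pool /= pool.sum()
    suppressive = np.convolve(excitatory, pool, mode="same")  # suppressive drive S
    response = excitatory / (suppressive + sigma)
    return response[x.size // 2]                           # unit centred at x = 0

def attentional_modulation(stim_width, attn_gain=2.0):
    """Ratio of attended to unattended response for a given stimulus width."""
    return normalized_response(stim_width, attn_gain) / normalized_response(stim_width, 1.0)

for width in (1.0, 10.0):
    print(width, attentional_modulation(width))
```

In this toy, the attended-to-unattended ratio changes with stimulus width relative to the fixed receptive-field, attention-field, and suppressive-pool widths, which is the qualitative dependence invoked above.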

Whichever modeling/conceptual framework is adopted, our empirical results offer a comprehensive view of how different types of visual attention impact sensory processing across a range of tasks and stimuli that are sufficiently similar to be naturally integrated within the context of a unified theoretical account. This useful information will need to be accommodated in future efforts to understand the role of attentional deployment in human vision.

GRANTS

Support was provided by the Royal Society of London and the Medical Research Council.

DISCLOSURES

No conflicts of interest, financial or otherwise, are declared by the author(s).

ACKNOWLEDGMENTS

We thank three anonymous reviewers for useful comments.

REFERENCES