Abstract

Recognizing other individuals by integrating different sensory modalities is a crucial ability of social animals, including humans. Although cross-modal individual recognition has been demonstrated in mammals, the extent of its use by birds remains unknown. Herein, we report the first evidence of cross-modal recognition of group members by a highly social bird, the large-billed crow (Corvus macrorhynchos). A cross-modal expectancy violation paradigm was used to test whether crows were sensitive to identity congruence between visual presentation of a group member and the subsequent playback of a contact call. Crows looked more rapidly and for a longer duration when the visual and auditory stimuli were incongruent than when congruent. Moreover, these responses were not observed with non-group member stimuli. These results indicate that crows spontaneously associate visual and auditory information of group members but not of non-group members, which is a demonstration of cross-modal audiovisual recognition of group members in birds.

Keywords: cross-modal, individual recognition, expectancy violation, social classification

1. Introduction

The recognition of individuals is a fundamental prerequisite for social animals living in complex societies, as it allows them to maintain cooperation, classify individuals by social class (i.e. mate, kin, rank and group membership) and recognize relationships between third-party individuals [1]. Indeed, many social animals, both vertebrates and invertebrates, have been reported to distinguish conspecific individuals in various social contexts [2]. Most past studies have documented the ability of animals to use class-level recognition to distinguish biologically significant individuals (e.g. mates and territorial neighbours) from others. However, only minimal evidence has supported the existence of ‘true’ individual recognition, which involves a representation that associates multiple cues within or between modalities (e.g. voice and appearance) with a specific individual [3–7].

Recently, cross-modal individual recognition has been demonstrated in non-human primates [3–5] and in non-primate mammals such as dogs (Canis familiaris) [6] and horses (Equus caballus) [7] by using an expectancy violation paradigm. This paradigm is a powerful method for verifying a subject's spontaneous association of multiple cues related to a given target, and it rests on the following prediction. If a subject spontaneously forms an association between multiple cues related to a target (e.g. a person's face and voice), the subject should respond strongly when the memorized association is unexpectedly violated (in other words, when cues of different modalities are incongruent). The studies described above successfully adopted this paradigm for use in animal behaviour experiments and demonstrated cross-modal recognition of individuals such as conspecific group members [5,7] and heterospecific human caretakers [4–6]. In contrast to these recent advances in mammals, studies in birds have demonstrated only class-level recognition, defined as the ability to discriminate specific individuals, such as mates and territorial neighbours, from others [8,9]. The extent of cross-modal individual recognition in birds therefore remains unknown.

Corvids are ideal subjects for the study of cross-modal individual recognition as they form complex fission–fusion societies where individual recognition is crucial for inter-individual competition and cooperation [10–13]. A number of studies have revealed the extraordinary socio-cognitive skills of corvids, such as recognizing relationships between third parties [11,14] and attributing knowledge to conspecific rivals [15–17]. These studies suggest the involvement of individual recognition as an underlying mechanism for their sophisticated cognitive skills. To date, however, no evidence of cross-modal individual recognition has been provided and thus our understanding of the underlying mechanisms is still incomplete.

In this study, we examined audiovisual cross-modal individual recognition by the large-billed crow (Corvus macrorhynchos) using an expectancy violation paradigm. Large-billed crows were recently reported to discriminate familiar conspecifics not only visually [12,18] but also by auditory cues, based on contact calls that differ acoustically between individuals [19]. To investigate whether crows cross-modally recognize group members using audiovisual cues, the looking responses of subject birds into a small opening and towards the visual and auditory stimuli were compared for congruent and incongruent conditions. Under the congruent condition, visual and auditory cues were matched in terms of identity, whereas under the incongruent condition, these were non-matched. Using this approach, we tested the following prediction. If the crows could spontaneously associate the appearance and call of a group member, they should respond more strongly when the visual presentation of a group member was followed by playback of the call of a different member than when both stimuli were from the same member.

Individual recognition is generally thought to develop when repeated social interactions allow an individual to associate multiple cues of another individual [2]. Surprisingly, however, no past studies have investigated the role of learning through social interaction in cross-modal individual recognition in animals. If cross-modal individual recognition develops through regular social contact, expectancy violation is predicted to be effective for familiar conspecifics such as group members but not for unfamiliar non-group members, because the opportunities for regular social interaction that exist between group members are not available with unfamiliar non-group members. In this study, we examined this prediction by comparing the effects of expectancy violation arising from two categories of stimulus birds: familiar group members and unfamiliar non-group members.

2. Material and methods

(a). Animals

Eleven large-billed crows (four females and seven males) served in this study (table 1). Of these, seven (two females and five males, group 1) were caught in Tokyo as fledglings from 2004 to 2008, and thereafter hand-reared by N.K., and group-housed in an outdoor aviary (10 × 10 × 3 m) for more than 1 year before the experiment. Of the other four crows, two females (group 2) and two males (group 3) were caught in 2009 and 2010, respectively, as free-floating juveniles (1–2 years of age) in Tsukuba city, and group-housed in separate outdoor aviaries (1.8 × 2.4 × 1.8 m) for more than two months before the experiment. The crows had free access to food (dog food, eggs and dried fruits) and water in the aviaries. Tokyo and Tsukuba city are geographically separated by more than 40 km. Therefore, the crows in group 1 and the other two groups had no opportunities to interact socially with each other before the experiment, which ensured that the crows in group 1 were familiar with other members of their group but not with the conspecifics in groups 2 and 3. Crows in groups 2 and 3 participated in the experiment only as stimuli. One male in group 1 (Gah-ko) served only as a subject because in our pilot study, this individual vocalized often even after a piece of meat was given. The other birds in group 1 served as both subjects and stimuli (table 1). The Environmental Bureau of Tokyo Metropolitan Government gave permission for the crows to be caught (permission no. 286–6).

Table 1.

Group composition and stimulus pairs presented to each subject. The initial letters of crows' names identify the stimulus pairs. Note that birds of the familiar stimulus pairs 1 and 2 were different between subjects, as the subjects were not tested for their own pairs.

| individual | sex | age (years) | group | group member stimulus pair 1 | group member stimulus pair 2 | non-group member stimulus pair 1 | non-group member stimulus pair 2 |
|---|---|---|---|---|---|---|---|
| Oto | M | 6 | 1 | N, Y | Ta, S | J, L | F, Te |
| Uta | M | 6 | 1 | N, Y | Ta, S | J, L | F, Te |
| Gah-ko | M | 5 | 1 | N, Y | Ta, S | J, L | F, Te |
| Yamato | M | 6 | 1 | O, U | Ta, S | J, L | F, Te |
| Naka | M | 3 | 1 | O, U | Ta, S | J, L | F, Te |
| Sakura | F | 2 | 1 | N, Y | O, U | J, L | F, Te |
| Takeru | F | 5 | 1 | N, Y | O, U | J, L | — |
| Jenny | F | 2 | 2 | — | — | — | — |
| Lunac | F | 2 | 2 | — | — | — | — |
| Fujisan | M | 1 | 3 | — | — | — | — |
| Tetsuo | M | 1 | 3 | — | — | — | — |

(b). Set-up

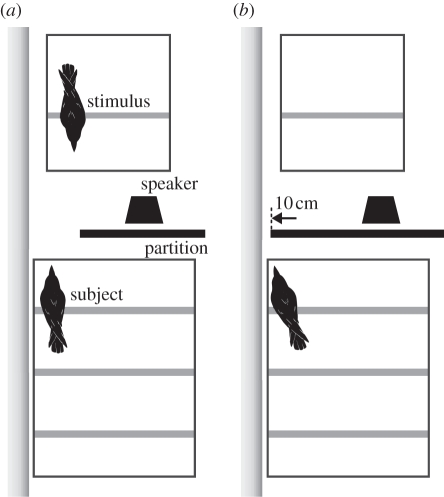

To conduct the experiment under the expectancy violation paradigm, we aligned two cages to face each other. One cage was for the subject (70 × 106 × 76 cm), while the other was for the stimulus (46 × 75 × 54 cm). The cages were separated by 80 cm (figure 1). A black, sliding partition was inserted 20 cm in front of the subject cage, which allowed for a large (30 cm; figure 1a) or a small (10 cm; figure 1b) opening between the partition and the wall. The large opening allowed the subject to see the stimulus, whereas the small opening did not. A piece of meat was placed in the stimulus cage so that the crow serving as the visual stimulus would concentrate on it and not vocalize. A loudspeaker (MS-76, Elecom) used for playback of the pre-recorded calls (see below) was placed behind the partition in a position that could not be seen by the subject. The behaviours of the subject and the stimulus were monitored from another room and video-recorded (BDZ-L70, Sony) for offline analysis.

Figure 1.

Diagram of the experimental set-up. The subject (a) could see the stimulus cage through the 30 cm opening during the visual stimulus presentation but (b) could not see through the 10 cm opening after visual presentation and during the subsequent auditory stimulus presentation.

(c). Auditory stimuli and playback procedure

Contact ‘ka’ calls were used as the auditory cue for individual recognition because our previous study showed that this type of call acoustically encoded individuality and that crows were able to discriminate between individuals according to their ka calls [19]. Prior to this experiment, ka calls were recorded using a linear PCM recorder (PCM-D50, Sony) with a 48 kHz/16 bit sampling resolution. For each individual, the recorded sample with the best signal-to-noise ratio was used as the stimulus. Mean duration (±s.e.) of the calls was 0.35 ± 0.021 s.

Each subject participated in at most one trial per day under one of four experimental conditions. A single trial consisted of a 60 s visual presentation of the stimulus followed by two playbacks of a stimulus call separated by a 7 s interval. At the beginning of each trial, N.K. sequentially introduced the subject and the stimulus crows into their cages using dog carry boxes. Subsequently, the trial was started by creating a 30 cm opening between the partition and the wall for a 60 s visual presentation of the stimulus (figure 1a). Closing the opening to 10 cm terminated the visual presentation, at which time the stimulus was immediately returned to the aviary. Approximately 30 s after the visual presentation had ended, the same call stimulus was played twice at a 7 s interval. The trial was terminated 30 s after the second playback. To avoid habituation to the playback, trials for any individual bird were separated by 4 day intervals. If the stimulus bird vocalized during the presentation, we had planned to immediately terminate the trial and recommence it 4 days later; however, no such cases occurred. Playback of the calls was at 85 dB SPL and 24 kHz/16 bits resolution.
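The timing of a single trial can be laid out as a nominal schedule. The sketch below is only an illustration of the procedure described above: the function and class names are hypothetical, the 30 s gap before the first playback is treated as exact although the text gives it as approximate, and the 7 s interval is assumed to run from the onset of the first playback to the onset of the second.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    time_s: float  # seconds from the start of the visual presentation
    label: str


def trial_timeline(visual_s=60.0, gap_s=30.0, playback_interval_s=7.0, post_s=30.0):
    """Return the nominal event schedule of one trial (illustrative only)."""
    first_playback = visual_s + gap_s  # gap is "approximately 30 s" in the text
    second_playback = first_playback + playback_interval_s  # onset-to-onset (assumed)
    return [
        Event(0.0, "open partition to 30 cm: visual presentation starts"),
        Event(visual_s, "close partition to 10 cm: visual presentation ends"),
        Event(first_playback, "first playback of the call"),
        Event(second_playback, "second playback of the call"),
        Event(second_playback + post_s, "trial ends"),
    ]


for event in trial_timeline():
    print(f"{event.time_s:6.1f} s  {event.label}")
```

Under these assumptions a trial lasts a little over 2 min from the opening of the partition to its end.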

During the visual presentation, no interaction occurred in any trial between the stimulus bird and the subject. Stimulus birds remained silent while eating pieces of meat and none was attracted to the subjects. The subjects, on the other hand, spent most of the time (48.2 ± 0.94 s, mean ± s.e.) in front of the opening, and this time did not differ statistically between group member and non-group member conditions. However, the subjects vocalized slightly more under non-group member conditions (i.e. four subjects vocalized over a total of 16 trials) than under group member conditions (i.e. two subjects vocalized over a total of seven trials). It is noted, again, that the stimulus birds did not respond to the vocalizations of the subjects.

(d). Experimental design

Subjects were tested under four trial conditions that differed in congruency (congruent or incongruent) between the visual and auditory stimulus identities, and in familiarity (group member or non-group member) of the subject with the stimulus bird. Five stimulus pairs were prepared (table 1), three of which were group member stimuli (from group 1) and two of which were non-group member stimuli (from groups 2 and 3). Birds were randomly assigned to matched-sex pairs, and to matched-age pairs as far as possible, so that a mismatch in sex or age between the visual and auditory stimuli could not itself produce an expectancy violation.

For each stimulus pair, four different visual–auditory sequences were possible using combinations of two congruency conditions (i.e. bird A visual followed by bird A auditory (Avis–Aaud) and Bvis–Baud as congruent, and Avis–Baud and Bvis–Aaud as incongruent). Each subject underwent each sequence only once. Therefore, each subject underwent a total of 16 trials, which consisted of four stimulus-pair blocks of four stimulus sequences each, excluding the subject's own stimulus pair. The order of the four stimulus sequences was quasi-randomized within a block but counterbalanced in congruency between blocks for a given subject. Also, the order of stimulus pairs was quasi-randomized between blocks for each subject but counterbalanced in familiarity between subjects. One female, Takeru, participated in only three blocks as she refused to enter the experimental room in the final block. Thus, for this subject, only the data from two group member stimulus pairs and one non-group member stimulus pair were used for the analysis.
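The trial structure described above can be enumerated directly from table 1. The following is a minimal sketch, not the authors' procedure: the names are hypothetical, the pair assignments are transcribed from table 1, and the quasi-randomized and counterbalanced ordering of trials is deliberately not modelled.

```python
from itertools import product

# Stimulus pairs from table 1, keyed by the initials used there.
PAIR_FAMILIARITY = {
    ("N", "Y"): "group member",
    ("Ta", "S"): "group member",
    ("O", "U"): "group member",
    ("J", "L"): "non-group member",
    ("F", "Te"): "non-group member",
}

# Pairs presented to each subject (table 1); Takeru completed only three blocks.
SUBJECT_PAIRS = {
    "Oto": [("N", "Y"), ("Ta", "S"), ("J", "L"), ("F", "Te")],
    "Uta": [("N", "Y"), ("Ta", "S"), ("J", "L"), ("F", "Te")],
    "Gah-ko": [("N", "Y"), ("Ta", "S"), ("J", "L"), ("F", "Te")],
    "Yamato": [("O", "U"), ("Ta", "S"), ("J", "L"), ("F", "Te")],
    "Naka": [("O", "U"), ("Ta", "S"), ("J", "L"), ("F", "Te")],
    "Sakura": [("N", "Y"), ("O", "U"), ("J", "L"), ("F", "Te")],
    "Takeru": [("N", "Y"), ("O", "U"), ("J", "L")],
}


def sequences_for_pair(pair):
    """Return the four visual-auditory sequences for one stimulus pair."""
    return [
        {"visual": v, "auditory": a,
         "congruency": "congruent" if v == a else "incongruent"}
        for v, a in product(pair, repeat=2)
    ]


def trials_for_subject(subject):
    """Return every trial (one block of four sequences per pair) for a subject."""
    return [
        {"subject": subject, "familiarity": PAIR_FAMILIARITY[pair], **seq}
        for pair in SUBJECT_PAIRS[subject]
        for seq in sequences_for_pair(pair)
    ]


all_trials = [t for s in SUBJECT_PAIRS for t in trials_for_subject(s)]
counts = {}
for t in all_trials:
    key = (t["familiarity"], t["congruency"])
    counts[key] = counts.get(key, 0) + 1

print(len(all_trials))
print(counts)
```

Counting trials this way gives a quick check that every familiarity by congruency cell is balanced within each block, and that the total agrees with the number of trials scored by the second observer (54 trials, stated to be 50 per cent).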

(e). Analysis of behaviour

To examine the effects of congruency and familiarity on the subjects' responses, N.K. measured the total looking durations and the latencies of the subjects' first looks using a frame-by-frame analysis (30 frames s⁻¹). For each frame, a subject's looking behaviour was characterized by whether it met two criteria: (i) the subject was perched on the northwest corner and faced the stimulus cage, and (ii) the subject's head inclined or stretched through the small opening. For each trial, we examined the 30 s period after the first playback. A second observer, who did not know the experimental conditions and the subjects' identities, characterized the subject's behaviour in 50 per cent of the trials (54 trials) according to the above criteria. Inter-observer agreement was high (Cohen's κ = 0.92) and thus the observation was highly reliable.
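As an illustration of how the two response measures and the agreement statistic can be derived from frame-by-frame scores, the sketch below assumes that each frame has been coded as a boolean (looking or not looking). The function names are hypothetical, the values for a trial without any look follow the convention given in the statistics section (0 s duration, 30 s latency), and the unit over which the two observers are compared is an assumption, since the text does not specify it.

```python
FPS = 30       # frames per second of the video analysis
WINDOW_S = 30  # analysis window after the first playback, in seconds


def looking_measures(frames, fps=FPS, window_s=WINDOW_S):
    """Return (total looking duration, latency of first look) in seconds.

    `frames` holds one boolean per video frame, True when both looking
    criteria are met. Frames beyond the analysis window are ignored.
    """
    frames = list(frames)[: fps * window_s]
    n_looking = sum(frames)
    if n_looking == 0:
        # No look in the window: duration 0 s, latency set to the window length.
        return 0.0, float(window_s)
    return n_looking / fps, frames.index(True) / fps


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two equally long sequences of categorical scores."""
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    categories = set(rater_a) | set(rater_b)
    expected = sum(
        (list(rater_a).count(c) / n) * (list(rater_b).count(c) / n)
        for c in categories
    )
    if expected == 1.0:
        return 1.0  # both raters used a single identical category
    return (observed - expected) / (1.0 - expected)


# Toy trial: the subject looks from frame 45 to frame 104 (60 frames).
toy_frames = [45 <= i < 105 for i in range(FPS * WINDOW_S)]
print(looking_measures(toy_frames))

# Toy agreement check on four scores from two observers.
print(cohens_kappa([True, True, False, False], [True, True, False, True]))
```

The toy inputs are invented for the example and are not data from the experiment.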

(f). Statistics

We examined the effects of congruency and familiarity of the stimulus birds, as well as the sex and age of the birds whose calls were played back, on the subject's responses by using generalized linear mixed models (GLMMs) that allowed us to treat the repeated measurements of subjects and stimulus pairs as random effects [20]. The total looking duration and the first-look latency were set as response variables, and the congruency, familiarity, sex, age and their second-order interactions as fixed factors. We first put all fixed factors into the model and then performed a stepwise reduction procedure to eliminate variables based on the small-is-better criterion for the Bayesian information criterion (BIC). Significance of the fixed factors and the interactions in the best-fitted model was determined with a log-likelihood test between the two models in which a focal factor (or interaction) was included and excluded. If there was a significant interaction in the best-fitted model, two additional stratified GLMMs were evaluated to examine the effect of each factor separately, conditioned on the other factor. Negative binomial error distribution with a logit-link function was used for the looking duration and normal error distribution with an identity link function for the latency of the first look. For a trial in which a subject showed no looking behaviour, the duration and the latency were taken to be 0 and 30 s, respectively. All statistical tests were conducted by using the free software program R v. 2.12.1 (R Development Core Team) with statistical packages ‘glmmADMB’ for negative binomial error with a logit-link function and ‘lme4’ for normal error with an identity link function.
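The two model-comparison quantities named above can be written out in a few lines. This is a generic sketch of the standard definitions, not the authors' R analysis: the function names are hypothetical, the log-likelihoods and parameter counts are invented placeholder values, the number of observations (108) is the trial total implied by the design, and the test is restricted to a focal term that adds a single parameter (1 degree of freedom).

```python
import math


def bic(log_likelihood, n_params, n_obs):
    """Bayesian information criterion; smaller values indicate a better model."""
    return n_params * math.log(n_obs) - 2.0 * log_likelihood


def likelihood_ratio_test_1df(loglik_with, loglik_without):
    """Compare nested models that differ by one parameter.

    Returns the test statistic and its p-value from a chi-squared
    distribution with 1 degree of freedom, using the identity
    P(X > x) = erfc(sqrt(x / 2)).
    """
    stat = max(2.0 * (loglik_with - loglik_without), 0.0)
    return stat, math.erfc(math.sqrt(stat / 2.0))


# Placeholder values for illustration only (not results from the study).
N_OBS = 108
loglik_with, k_with = -150.0, 6        # model including the focal term
loglik_without, k_without = -154.0, 5  # model excluding the focal term

print(bic(loglik_with, k_with, N_OBS), bic(loglik_without, k_without, N_OBS))
print(likelihood_ratio_test_1df(loglik_with, loglik_without))
```

In a stepwise reduction, a term would be dropped whenever the model without it has the smaller BIC; what counts as the number of observations in a mixed model is itself a modelling choice, so the value used here should be read as one reasonable option.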

3. Results

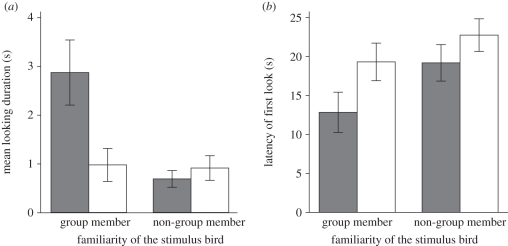

Subject crows looked for a longer duration under the incongruent condition when the stimulus was a group member (figure 2a and table 2). Model selection yielded a best-fitted GLMM that included familiarity, congruency and the interaction between familiarity and congruency. In this final model, the interaction between congruency and familiarity was significant (p = 0.0049, Wald Z = 2.81, d.f. = 105), but the main effects of congruency (p = 0.26, Wald Z = −1.11, d.f. = 105) and familiarity (p = 0.18, Wald Z = 1.32, d.f. = 105) were not. To determine the trial conditions producing the significant interaction between familiarity and congruency, we performed two additional GLMMs that separately examined the effect of the congruency factor under group member and non-group member conditions. The GLMMs revealed a significant effect under the group member condition (p = 0.0078, Wald Z = 2.66, d.f. = 54) but not under the non-group member condition (p = 0.22, Wald Z = −1.23, d.f. = 50), indicating a significantly longer looking duration under the condition with incongruent group member stimuli than under the other trial conditions.
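The p-values reported with the Wald Z statistics can be checked against the standard normal distribution. The sketch below is a reader's consistency check rather than part of the original analysis; the function name and the dictionary are hypothetical, and the Z and p values are copied from the paragraph above.

```python
import math


def two_sided_p_from_z(z):
    """Two-sided p-value of a Wald Z statistic under the standard normal."""
    return math.erfc(abs(z) / math.sqrt(2.0))


# (Wald Z, reported p) pairs from the looking-duration analysis.
REPORTED = {
    "congruency x familiarity": (2.81, 0.0049),
    "congruency": (-1.11, 0.26),
    "familiarity": (1.32, 0.18),
    "congruency, group member": (2.66, 0.0078),
    "congruency, non-group member": (-1.23, 0.22),
}

for term, (z, p_reported) in REPORTED.items():
    p = two_sided_p_from_z(z)
    print(f"{term}: Z = {z:+.2f}, recomputed p = {p:.4f}, reported p = {p_reported}")
```

The recomputed values agree with the reported ones to within about 0.01; small differences in the last digit are presumably due to the Z values having been rounded to two decimals.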

Figure 2.

(a) Looking duration and (b) latency of the first look. The crows looked towards the opening for a longer duration and with a shorter latency period under the incongruent group member stimulus condition than under other conditions. The error bars indicate standard errors. Grey bars, incongruent; white bars, congruent.

Table 2.

Mean values (±s.e.) of total looking duration and latency of the first look under four conditions.

| stimulus | total looking duration (s), incongruent | total looking duration (s), congruent | latency of first look (s), incongruent | latency of first look (s), congruent |
|---|---|---|---|---|
| group member | 2.9 ± 0.7 | 1.0 ± 0.3 | 12.8 ± 2.6 | 19.3 ± 2.4 |
| non-group member | 0.7 ± 0.2 | 0.9 ± 0.3 | 19.2 ± 2.3 | 22.7 ± 2.1 |

Subject crows showed looking behaviour with shorter latencies under the incongruent condition with group member stimuli than under the other trial conditions (figure 2b and table 2). Model selection yielded a best-fitted GLMM that included congruency and familiarity but not sex, age or any interactions. In this final model, significant effects were found for both the congruency (p = 0.0072, t = −2.74, d.f. = 105) and familiarity (p = 0.0083, t = −2.68, d.f. = 105) factors, indicating that the latency of the first look was shortest under the incongruent condition with group member stimuli.

4. Discussion

In this study, we examined the audiovisual cross-modal individual recognition of large-billed crows by using an expectancy violation paradigm. The crows looked through the opening for a longer time and with a shorter latency when the visual and auditory stimuli came from birds familiar to them but were incongruent in identity than when they were congruent. Interestingly, no such behavioural patterns were observed when the stimulus was unfamiliar. Neither the sex nor the age of the auditory-stimulus birds affected the looking behaviour of the subjects. These results indicate that large-billed crows cross-modally recognize group members, but not non-group members. Our findings provide the first evidence for cross-modal individual recognition in birds.

We hypothesized in the introduction that crows should recognize group members because regular contact provides the opportunities to learn the associations between these members' vocalizations and their appearances. In this study, the subjects had no exposure to the individual cues of unfamiliar non-group members before the experiment, and therefore such recognition was not possible. The finding that crows responded to the incongruent condition for group members but not for non-group members supports this hypothesis and suggests that regular social contact among group members could play a crucial role in the development of cross-modal individual recognition. However, the present study did not demonstrate the learning process by which cross-modal individual recognition arose among group members. To elucidate the mechanisms underlying the association of cross-modal cues related to specific individuals, future studies should examine how social interactions affect the formation of cross-modal individual recognition among initially unfamiliar non-group members.

The subjects' weak responses to non-group member stimuli cannot be accounted for by habituation owing to the use of only one call per stimulus bird throughout the experiment. If this were the case, the subjects would have been expected to respond similarly to both group and non-group member stimuli under the incongruent condition; however, this did not occur. Therefore, the differential responses to the stimuli between group and non-group members showed that crows did not habituate to the call stimuli during the experiment, but rather inferred the identities of the group member stimulus birds.

The model analyses found no effect of the sex of the played-back calls on subjects' responses. This indicates that the sex of the individuals of played-back calls did not contribute to the differential responses of the subjects in this experiment. However, this does not necessarily mean that crows are insensitive to sex in their communications. Previous studies in birds revealed that social interactions differed depending on sex combinations—for example, higher aggression between males in large-billed crows, as well as other corvids [12,13,21], and strong responses to contact calls of opposite-sex individuals in orange-fronted conures (Aratinga canicularis) [22]. These sex effects on social interactions suggest that responses of subjects to the played-back calls might be influenced by the combination of the stimulus and the subject sexes. However, the model analyses in our study included the sex of stimulus birds but not of subjects, because of the small subject population and the biased sex ratio (i.e. only two females), making it difficult to reliably examine this possibility. Further investigation of the effects of sex on individual recognition-based communication is necessary in order to understand how crows classify other individuals based on their sex.

The differential responses of the subjects to group members and non-group members might be explained by age differences between these stimulus groups. The birds from group 1 used for the group member stimulus were adult (i.e. over 3 years old), except for one female (Sakura), while those of groups 2 and 3, used for the non-group member stimulus, were juveniles. If the subjects' responses simply depended on the ages of the auditory-stimulus birds, the fixed factor of age should have remained in the final model. However, this was not the case. Similar to the argument above, if we were to consider the ages of both the subject and the stimulus bird, the age effect on the subjects' responses might be observed. In this study, however, only one juvenile (a female, Sakura) was included in the subject population, which made it difficult to verify the age effects. The role of age, including developmental stage (i.e. adult versus juvenile), in the social communication of the large-billed crows has been unclear, as has the manner in which individual birds determine the ages of others. Given their complex society consisting of heterogeneous social classes and relationships, however, it is possible that young crows are more sensitive to adult individuals than to younger ones, as suggested by recent findings in Campbell's monkeys (Cercopithecus campbelli) [23]. Further studies should investigate the ability of crows to discern the ages of conspecifics, as well as the cues to do so, for instance, the acoustic structures.

Another possible explanation for the present result is that the crows responded to a violation of the expected relationship between the physical properties of the visual stimulus, such as body size, and the acoustic characteristics of the ka call. A recent study reported that domestic dogs could relate auditory information such as the formant frequencies of growl vocalizations to the body size of conspecifics [24]. However, if after seeing the stimulus birds the subject crows expected certain acoustic properties that accorded with a general assessment rule (as was the case for dogs), without considering the identity of the stimulus conspecifics, the different responses found under the congruent and incongruent conditions for group member stimuli should have generalized to the non-group member stimuli. This was not the case, however, as longer looking with a shorter latency under the incongruent condition was observed only for the group member stimuli. Therefore, the crows' responses did not follow such a general rule of visual–auditory assessment.

A number of past studies have shown that corvids have highly developed socio-cognitive skills that suggest the capacity for individual recognition. However, no clear evidence of individual recognition has yet been provided. Our study on corvids clearly demonstrated audiovisual cross-modal individual recognition based on learning. The present findings provide greater insight regarding the mechanisms underlying the extraordinary socio-cognitive skills of corvids. Use of an expectancy violation paradigm, as in this study, allows us to further explore the cognitive abilities of birds to categorize social classes (e.g. sex and age) and relationships. Such social categorization based on individual recognition probably underpins the complex forms of inter-individual competition and cooperation seen in corvids [10–17,25]. The present study serves as a very important foundation for future studies that will expand our understanding of the phylogeny-independent evolution of cognitive ability in close association with social ecology [25,26].

Acknowledgements

The experiments adhered to the Japanese national regulations for animal welfare and were approved by the Animal Care and Use Committee of Keio University (no. 08 005).

This study was funded by KAKENHI grants to N.K. (for JSPS Fellows, no. 217525) and to E.-I.I. (for Young Scientists (B), no. 19700248, no. 23700319), the Sasakawa Scientific Research Grant from The Japan Science Society (no. 23-619) to N.K., and a Global COE Programme grant (D-09) to S.W. We thank Dr Wataru Kitamura for his advice on statistics. We also thank two anonymous referees for their valuable comments.

References

- 1. Alexander R. D. 1974. The evolution of social behavior. Ann. Rev. Ecol. Syst. 5, 325–383. (doi:10.1146/annurev.es.05.110174.001545)

- 2. Tibbetts E. A., Dale J. 2007. Individual recognition: it is good to be different. Trends Ecol. Evol. 22, 529–537. (doi:10.1016/j.tree.2007.09.001)

- 3. Kojima S., Izumi A., Ceugniet M. 2003. Identification of vocalizers by pant hoots, pant grunts and screams in a chimpanzee. Primates 44, 225–230. (doi:10.1007/s10329-002-0014-8)

- 4. Adachi I., Fujita K. 2007. Cross-modal representation of human caretakers in squirrel monkeys. Behav. Proc. 74, 27–32. (doi:10.1016/j.beproc.2006.09.004)

- 5. Sliwa J., Duhamel J. R., Pascalis O., Wirth S. 2011. Spontaneous voice–face identity matching by rhesus monkeys for familiar conspecifics and humans. Proc. Natl Acad. Sci. USA 108, 1735–1740. (doi:10.1073/pnas.1008169108)

- 6. Adachi I., Kuwahata H., Fujita K. 2007. Dogs recall their owner's face upon hearing the owner's voice. Anim. Cogn. 10, 17–21. (doi:10.1007/s10071-006-0025-8)

- 7. Proops L., McComb K., Reby D. 2009. Cross-modal individual recognition in domestic horses (Equus caballus). Proc. Natl Acad. Sci. USA 106, 947–951. (doi:10.1073/pnas.0809127105)

- 8. Beer C. G. 1970. Individual recognition of voice in the social behaviour of birds. Adv. Study Anim. Behav. 3, 27–74. (doi:10.1016/S0065-3454(08)60154-0)

- 9. Falls J. B. 1982. Individual recognition by sounds in birds. In Acoustic communication in birds, vol. 2 (eds Kroodsma D. E., Miller E. H.), pp. 237–278. New York, NY: Academic Press.

- 10. Clayton N. S., Emery N. J. 2007. The social life of corvids. Curr. Biol. 17, R652–R656. (doi:10.1016/j.cub.2007.05.070)

- 11. Emery N. J., Seed A. M., von Bayern A. M. P., Clayton N. S. 2007. Cognitive adaptations of social bonding in birds. Phil. Trans. R. Soc. B 362, 489–505. (doi:10.1098/rstb.2006.1991)

- 12. Izawa E.-I., Watanabe S. 2008. Formation of linear dominance relationship in captive jungle crows (Corvus macrorhynchos): implications for individual recognition. Behav. Proc. 78, 44–52. (doi:10.1016/j.beproc.2007.12.010)

- 13. Fraser O. N., Bugnyar T. 2010. The quality of social relationships in ravens. Anim. Behav. 79, 927–933. (doi:10.1016/j.anbehav.2010.01.008)

- 14. Paz-y-Miño C. G., Bond A. B., Kamil A. C., Balda R. P. 2004. Pinyon jays use transitive inference to predict social dominance. Nature 430, 778–781. (doi:10.1038/nature02723)

- 15. Bugnyar T. 2005. Ravens, Corvus corax, differentiate between knowledgeable and ignorant competitors. Proc. R. Soc. B 272, 1641–1646. (doi:10.1098/rspb.2005.3144)

- 16. Dally J. M., Emery N. J., Clayton N. S. 2006. Food-caching western scrub-jays keep track of who was watching when. Science 312, 1662–1665. (doi:10.1126/science.1126539)

- 17. Bugnyar T. 2011. Knower–guesser differentiation in ravens: others' viewpoints matter. Proc. R. Soc. B 278, 634–640. (doi:10.1098/rspb.2010.1514)

- 18. Nishizawa K., Izawa E.-I., Watanabe S. 2011. Neural-activity mapping of memory-based dominance in the crow: neural networks integrating individual discrimination and social behaviour control. Neuroscience 197, 307–319. (doi:10.1016/j.neuroscience.2011.09.001)

- 19. Kondo N., Izawa E.-I., Watanabe S. 2010. Perceptual mechanism for vocal individual recognition in crows: contact call signature and discrimination. Behaviour 147, 1051–1072. (doi:10.1163/000579510X505427)

- 20. Faraway J. J. 2005. Extending the linear model with R: generalized linear, mixed effects and nonparametric regression models. Boca Raton, FL: Chapman & Hall/CRC.

- 21. Chiarati E., Canestrari D., Vera R., Marcos J. M., Baglione V. 2010. Linear and stable dominance hierarchies in cooperative carrion crows. Ethology 116, 346–356. (doi:10.1111/j.1439-0310.2010.01741.x)

- 22. Balsby T. J. S., Scarl J. C. 2008. Sex-specific responses to vocal convergence and divergence of contact calls in orange-fronted conures (Aratinga canicularis). Proc. R. Soc. B 275, 2147–2154. (doi:10.1098/rspb.2008.0517)

- 23. Lemasson A., Gandon E., Hausberger M. 2010. Attention to elders' voice in non-human primates. Biol. Lett. 6, 325–328. (doi:10.1098/rsbl.2009.0875)

- 24. Taylor A. M., Reby D., McComb K. 2011. Cross modal perception of body size in domestic dogs (Canis familiaris). PLoS ONE 6, e17069. (doi:10.1371/journal.pone.0017069)

- 25. Emery N. J., Clayton N. S. 2004. The mentality of crows: convergent evolution of intelligence in corvids and apes. Science 306, 1903–1907. (doi:10.1126/science.1098410)

- 26. de Waal F. B. M., Tyack P. L. 2003. Animal social complexity: intelligence, culture, and individualized societies. Cambridge, MA: Harvard University Press.