Abstract

Background

Residents and interns are recognized as important clinical teachers and mentors. Resident-as-teacher training programs are known to improve resident attitudes and perceptions toward teaching, as well as their theoretical knowledge, skills, and teaching behavior. The effect of resident-as-teacher programs on learning outcomes of medical students, however, remains unknown. An intervention cohort study was conducted to prospectively investigate the effects of a teacher-training workshop on teaching behavior of participating interns and on the clerkship learning outcomes of instructed fourth-year medical students.

Methods

The House Officer-as-Teacher Training Workshop was implemented in November 2009 over 1.5 days and attended by all 34 interns from one teaching hospital. Subsequently, between February and August 2010, 124 fourth-year medical students rated the observable teaching behavior of interns during 6-week general surgery clerkships at this intervention hospital as well as at 2 comparable hospitals serving as control sites. Ratings were collected using an anonymous 15-item Intern Clinical Teaching Effectiveness Instrument. Student achievement of clerkship learning outcomes during this period was evaluated using a validated and centralized objective structured clinical examination.

Results

Medical students completed 101 intern clinical teaching effectiveness instruments. Intern teaching behavior at the intervention hospital was found to be significantly more positive, compared with observed behavior at the control hospitals. Objective structured clinical examination results, however, did not demonstrate any significant intersite differences in student achievement of general surgery clerkship learning outcomes.

Conclusions

The House Officer-as-Teacher Training Workshop noticeably improved teaching behavior of surgical interns during general surgery clerkships. This improvement did not, however, translate into improved achievement of clerkship learning outcomes by medical students during the study period.

Background

The role of the resident teacher has taken on significant meaning in the past 40 years.1 It is now understood that medical students attribute one-third of their clinical education to teaching from interns and residents2,3 and consider them to be important role models and mentors.4–6 Correspondingly, interns and residents report spending up to 25% of their time supervising, teaching, and evaluating medical students and junior colleagues7 and feel a strong responsibility to teach.8,9 Residents not only find clinical teaching enjoyable but also consider it an important component of their own experience and education.10,11

Resident-as-Teacher (RaT) programs have emerged as a means of improving resident teaching skills and have a number of positive effects,12–18 including improved attitudes and perceptions toward clinical teaching and improved teaching skills and behavior observable by medical students, peers, and senior clinicians. The effect of RaT programs on learning outcomes of medical students, however, has not yet been investigated, to our knowledge, leaving a considerable gap in our understanding of their overall effect.1

For this reason, an interventional cohort study was designed to determine whether a teacher training workshop would improve the teaching behavior of interns (postgraduate year-1 house officers) and whether improved teaching leads to improved achievement of clerkship learning outcomes by the medical students under the interns' supervision. Preliminary evaluation of the workshop's effect found improvement in participants' attitudes and perceptions toward clinical teaching with increases in self-reported awareness of clinical teaching and confidence as clinical teachers.19

Methods

Workshop Curriculum and Implementation

The House Officer-as-Teacher (HOT) Training Workshop curriculum was developed in 2009 as a collaborative project between Harvard Medical School and the University of Auckland (Auckland, New Zealand). Aspects of this new curriculum were adopted from the curriculum of a RaT program commissioned by the Society for Academic Emergency Medicine (Des Plaines, IL) for American emergency medicine residents.20

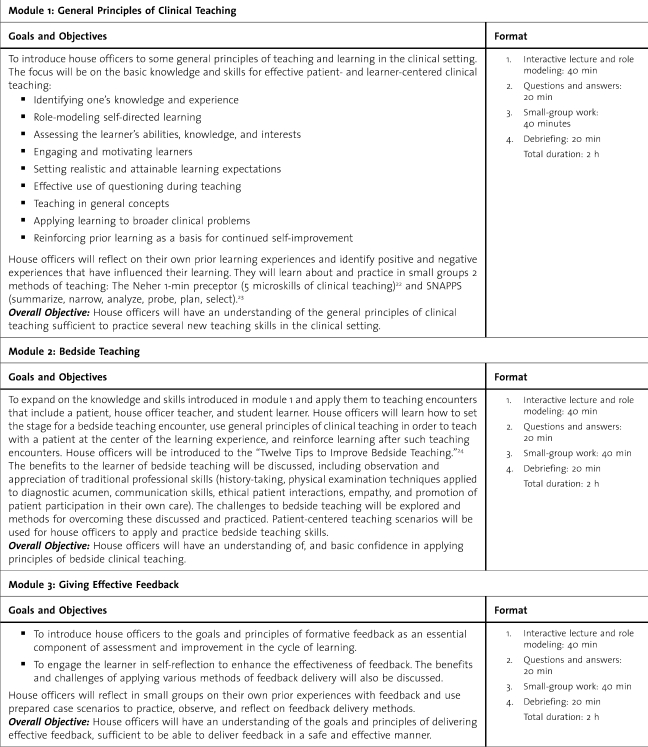

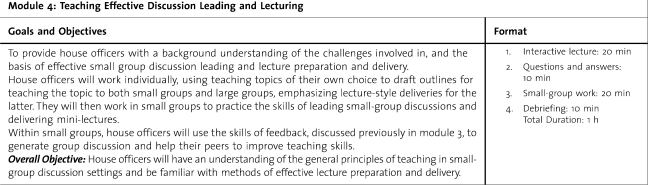

New Zealand medical graduates do not receive formal teacher training during or after medical school, so the workshop curriculum was designed to introduce basic principles of clinical teaching to newly graduated interns who have no previous teaching experience. The workshop duration was 1.5 days. Table 1 outlines the learning objectives and instructional format of the workshop's 4 modules.

TABLE 1.

The House Officer-as-Teacher (HOT) Training Workshop Curriculum

In November 2009, the HOT workshop was implemented as a pilot during the compulsory intern-orientation week at a teaching hospital in Auckland, New Zealand, and the attendance rate by interns was 100%. It was led by a chief workshop facilitator (S.F.) and 4 trained co-facilitators. On day 1, interns were introduced to the basic principles of clinical teaching and to the theoretical aspects of each learning module through a combination of short interactive lectures and large group discussions. Interns then had the opportunity to apply demonstrated teaching principles and methods to standardized clinical teaching scenarios constructed by workshop facilitators. Interns were divided into small groups of 6 or 7, and each intern had opportunities to role-play the clinical teacher and the medical student during reenactment of the scenarios. Workshop facilitators, attached to each group, provided encouragement, reinforcement of principles from the workshop curriculum, and timely feedback and ensured teaching exercises were conducted in a safe and supportive environment.

Day 2 of the workshop involved applying clinical teaching skills to simulated bedside-teaching scenarios. These scenarios used trained professional actors playing standardized patients and third-year medical students volunteering as real-life learners. Divided again into small groups to facilitate peer learning, the interns took turns role-playing the clinical teacher in these bedside-teaching scenarios. Each scenario was limited to 7 minutes to simulate the realistic time constraints of everyday clinical practice. Within the allocated time, interns had to address the patient's medical and nonmedical concerns and engage the student in a constructive learning discussion while navigating challenges when the needs of a patient and student clash. To further enrich the learning experience, interns were digitally recorded to allow for self-evaluation and self-reflection. Consistent with activities on day 1, interns again received peer and facilitator feedback while concurrently engaged in delivering feedback to fellow participants.

Study Design

This interventional cohort study was designed to investigate the effect of a teacher training workshop on the teaching behavior of interns and the clerkship learning outcomes of medical students who interacted with these interns. The intervention cohort was made up of all 34 interns from an Auckland, New Zealand, teaching hospital where the pilot HOT workshop was implemented, whereas the control cohort consisted of 70 interns from 2 other teaching hospitals in the same city. The 3 hospitals were similar in size and facilities and offered comparable clerkship learning opportunities for medical students. Medical students from the University of Auckland are assigned to all 3 teaching sites for the 6-week fourth-year general surgery clerkship. The clinician-student ratio at each hospital is similar, and assignment is based on student preference and position availability. Student work schedules were also similar at each hospital because all learning activities during the clerkship are standardized across teaching sites.21

Assessment of Workshop Impact

To compare the teaching behavior of interns from the 2 study cohorts, fourth-year medical students were asked to rate the effectiveness of intern-led teaching at all 3 teaching hospitals between February and August 2010. At the completion of their general surgery clerkships, 124 fourth-year students were asked to anonymously complete a 15-item Intern Clinical Teaching Effectiveness Instrument (Box 1), rating the effectiveness of observable teaching behavior displayed by the interns with whom they interacted. Participation by the medical students was not compulsory.

Box 1 The Intern Clinical Teaching Effectiveness Instrument

The interns establish a good learning environment (approachable, enthusiastic, etc).

The interns stimulate me to learn independently.

The interns allow me autonomy appropriate to my level/experience/competence.

The interns organize time to allow for both teaching and patient care.

The interns offer regular feedback (both positive and negative).

The interns clearly specify what I am expected to know and do during the attachment.

The interns adjust teaching to my needs (experience, competence, interest, etc).

The interns ask questions that promote learning (clarifications, probes, reflective questions, etc).

The interns give clear explanations/reasons for opinions, advice, actions, etc.

The interns adjust teaching to diverse settings (bedside, operating room, emergency room, etc).

The interns coach me on my clinical/technical skills (history-taking, examination, procedural, etc).

The interns incorporate research data and/or practice guidelines into teaching.

The interns teach diagnostic skills (clinical reasoning, selection/interpretation of tests, etc).

The interns teach effective patient and/or family communication skills.

The interns teach principles of cost-appropriate care (resource utilization, etc).

The Intern Clinical Teaching Effectiveness Instrument was adapted by the investigators from a validated tool originally developed by Copeland and Hewson.25 This instrument was constructed to evaluate the effectiveness of teaching in a wide variety of clinical settings. Each of the instrument's items is a detailed statement describing a specific teaching skill, and those skills are rated using a 5-point Likert scale. Modifications were made so that it would be appropriate for use with New Zealand–trained interns. These included removing the terms "Socratic questions" and "view box" and replacing the term "interview" with "history-taking." Comparison of teacher ratings was first made on a hospital-to-hospital basis to investigate for intersite differences. Ratings from the 2 control hospitals were then combined into one inclusive control group and compared with ratings from the intervention hospital.

A centralized, surgical objective structured clinical examination (OSCE) was used to measure student achievement of clerkship learning objectives during the study period. This clerkship assessment was attended by all fourth-year medical students in 2010. The OSCE is made up of 11 written stations and 4 clinical skills stations. Written stations entail short-answer questions about a clinical scenario, and clinical skills stations involve demonstration of history-taking and physical examination skills under direct examiner observation. The OSCE is graded on 230 marks (110 marks from the written component; 120 marks from clinical skills component).

The external validity and internal reliability of the surgical OSCE have previously been confirmed,21 giving it credibility as an accurate measure of student learning achievements during the fourth-year general surgery clerkship. Furthermore, OSCE scores from 2005 to 2008 have been statistically similar across all 3 teaching sites.21 For the current study, student OSCE results from the intervention hospital in 2010 were compared with those from the control hospitals to determine whether implementation of the HOT workshop advanced intern teaching skills, leading to improved student learning at the intervention site.

A priori power analysis was performed using historic OSCE grades (mean = 172; SD = 17) to give study investigators an indication of sample size sufficiency. For this study to detect a minimally significant difference of 5% in OSCE scores (effect size of 0.5) between the 2 study arms with significance criterion equal to 0.05 and power of 0.95, 16 medical students would be required in each arm. The study received ethics approval from the University of Auckland Human Participants Ethics Committee.
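The relationship between the historic OSCE grades and the quoted effect size can be illustrated with a short calculation. The Python sketch below is illustrative only: it assumes the 5% difference is taken relative to the historic mean, that the effect size is a standardized mean difference, and that a two-sided, two-sample comparison with a normal approximation is used. None of these choices is stated in the text, and the function names are hypothetical.

```python
import math
from statistics import NormalDist


def standardized_effect_size(mean_difference, sd):
    """Standardized mean difference: raw difference divided by the SD."""
    return mean_difference / sd


def n_per_arm(effect_size, alpha=0.05, power=0.95):
    """Approximate sample size per arm for a two-sided, two-sample
    comparison of means, using the normal approximation
    n = 2 * (z_{1-alpha/2} + z_{power})^2 / d^2 (an assumed method)."""
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_power = z.inv_cdf(power)
    return math.ceil(2 * (z_alpha + z_power) ** 2 / effect_size ** 2)


# Historic OSCE grades reported in the text.
historic_mean, historic_sd = 172.0, 17.0

# Assumption: "5%" is interpreted as 5% of the historic mean (8.6 marks).
difference = 0.05 * historic_mean
d = standardized_effect_size(difference, historic_sd)

print(f"difference = {difference:.1f} marks, effect size = {d:.2f}")
print(f"approximate n per arm = {n_per_arm(d)}")
```

With these inputs the standardized difference is about 0.5, in line with the value quoted above. The per-arm figure returned by this particular approximation is considerably larger than 16, so the original calculation presumably rested on inputs or software settings that are not described here.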

Statistical Analysis

Intern-led teaching ratings collected using the Intern Clinical Teaching Effectiveness Instrument were compared using a multivariate analysis of variance (MANOVA) test after exploratory factor analysis was performed using the maximum-likelihood method. The MANOVA with a post hoc Scheffé test was used to compare the ratings on a hospital-to-hospital basis. Internal reliability of this data set was established by calculating the Cronbach α coefficient. Student OSCE results were compared using independent-samples t tests. All statistical tests were performed using the Predictive Analytics SoftWare (PASW) Statistics program, version 18.0 (SPSS Inc., Chicago, IL).
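As a reminder of what the Cronbach α coefficient measures, the sketch below computes it from a respondents-by-items matrix of ratings using the standard variance formula. The ratings are made-up illustrative values, not study data, and the function name is hypothetical; the study itself used a commercial statistics package.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of rows, where each row holds one
    respondent's ratings across all k items.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    """
    k = len(responses[0])
    item_columns = list(zip(*responses))          # one tuple per item
    item_variance_sum = sum(variance(col) for col in item_columns)
    total_scores = [sum(row) for row in responses]
    return k / (k - 1) * (1 - item_variance_sum / variance(total_scores))


# Hypothetical 5-point Likert ratings: 5 respondents x 3 items.
example_ratings = [
    [4, 5, 4],
    [3, 3, 2],
    [5, 5, 5],
    [2, 3, 2],
    [4, 4, 3],
]

alpha = cronbach_alpha(example_ratings)
print(f"Cronbach alpha = {alpha:.3f}")
```

Values close to 1 indicate that the items vary together across respondents, which is the sense in which a high coefficient supports internal consistency.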

Results

The HOT workshop was attended by 34 interns, and with the exception of 3 interns, participants were all graduates of New Zealand's 2 medical schools. There were 22 men and 12 women, and their ages ranged from 23 to 42 years.

A total of 101 Intern Clinical Teaching Effectiveness Instruments were collected from 124 medical students during the 7-month study period (response rate of 81%). A Cronbach α coefficient of 0.95 confirmed the instrument's internal consistency, and maximum-likelihood factor analysis demonstrated that 58% of the total variance among the instrument items could be explained by a single underlying factor. All instrument items were found to contribute meaningfully to this analysis (Box 2).

Box 2 Factor Matrix: Effectiveness of Intern Clinical Teaching

| Instrument Item: "The Interns…" | Factor 1 |
| --- | --- |
| Adjusted teaching to my learning needs | 0.851 |
| Teach effective communication skills | 0.836 |
| Teach diagnostic skills including clinical reasoning | 0.811 |
| Adjust teaching to diverse settings | 0.797 |
| Organize time to allow for teaching | 0.796 |
| Ask questions to promote learning | 0.783 |
| Give clear explanations/reasons | 0.763 |
| Offer regular feedback | 0.756 |
| Coach clinical/technical skills | 0.743 |
| Stimulate independent learning | 0.742 |
| Establish a good learning environment | 0.718 |
| Clearly specify learning expectations | 0.707 |
| Incorporate research data and/or practice guidelines into teaching | 0.696 |
| Teach principles of cost-appropriate health care | 0.695 |
| Allow appropriate autonomy | 0.653 |

There were 99 fully completed sets of teacher ratings, and significant differences were found between those collected at the intervention hospital and those at the control hospitals (Wilks λ = 0.603, F(15, 83) = 2.662, P = .002). Table 2 shows that ratings for all items differed significantly between the intervention hospital and the control hospitals, with effect sizes ranging from medium to high (0.47–1.20). There were no significant differences between the 2 control hospitals.

TABLE 2.

MANOVA Analysis of Intern Clinical Teaching Effectiveness Instrument Ratings: Intervention Site Versus Control Sites

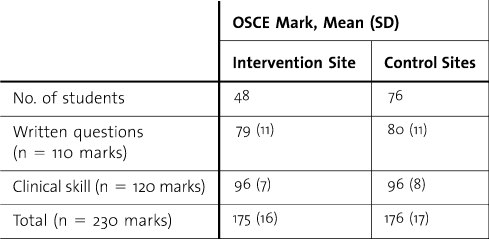
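The text does not state which effect-size measure was used for the item ratings. One common choice for comparing two groups is Cohen's d with a pooled standard deviation, sketched below under that assumption. The ratings are made-up illustrative values, not study data, and the function name is hypothetical.

```python
import math
from statistics import mean, variance


def cohens_d(group_a, group_b):
    """Cohen's d: difference in means divided by the pooled SD
    (assumed measure; the source does not specify one)."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_variance = (
        (n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)
    ) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / math.sqrt(pooled_variance)


# Hypothetical 5-point ratings for a single instrument item.
intervention_ratings = [4, 5, 4, 5, 4]
control_ratings = [3, 4, 3, 4, 3]

d_item = cohens_d(intervention_ratings, control_ratings)
print(f"Cohen's d = {d_item:.2f}")
```

By the usual rule of thumb, values near 0.5 are read as medium effects and values of 0.8 or more as large, which matches the "medium to high" description of the reported range.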

Surgical OSCE grades of the same 124 medical students demonstrated no statistically significant interhospital differences. There were also no differences when grades from the intervention hospital were compared with combined grades from the 2 control hospitals (Table 3).

TABLE 3.

Fourth-Year General Surgery Clerkship Objective Structured Clinical Examination (OSCE) Results, February to August 2010

Discussion

To our knowledge, the current study is the first to formally investigate the effect of an RaT program on medical student learning outcomes, and no immediate improvements were demonstrated despite observable progress in teacher behavior. The effectiveness of teachers is commonly evaluated by students in tertiary and professional educational institutions, including medical schools and teaching hospitals. In general, these teacher ratings are reliable and comparable with other measures, such as teacher self-evaluations, peer ratings, and student learning outcomes.26 The psychometric properties of various clinical teacher rating instruments have been described,27 and their reported benefits include provision of consistent feedback to teachers and program directors and facilitation of research to investigate variables affecting teaching effectiveness. These rating instruments have been extensively used in the assessment of RaT programs.1

The Intern Clinical Teaching Effectiveness Instrument was modified from a tool developed by Copeland and Hewson25 with psychometric properties that include reliability, content validity, and criterion validity. This instrument accurately discriminated between interns who had attended the HOT workshop and those who had not, and its validity was highlighted by the comparison analysis that matched the largest effect sizes to instrument items describing teaching skills emphasized during the HOT workshop.

A recent systematic review1 identified 4 other studies that investigated the effect of RaT programs on interns. Of these, 2 had similar follow-up durations to the current study.14,28 Edwards et al14 described the effect of an RaT workshop on student evaluation of intern teaching skills at Louisiana State University School of Medicine. Using the Clinical Teaching Assessment Form,29 third-year medical students rated 9 aspects of intern-led teaching. Interns who had attended the workshop received significantly higher scores for 4 of the items than did those who had not attended. These items described the interns' knowledge, organizational skills, demonstration of clinical skills, and overall teaching effectiveness.

Putting aside potential criticisms of the HOT workshop's curriculum and delivery methods, there remain several additional considerations. Which confounding factors could have contributed to student OSCE grades? Can intern teacher-training programs immediately improve student learning outcomes? And, if improvements were attainable, was the OSCE the most appropriate method of evaluation?

A number of potential confounding factors need to be considered. These are either intrinsic to student participants or extrinsic, that is, related to intern teachers and the clinical teaching environments. Although medical students were not randomized to the participating hospitals, study investigators felt confident that the cohorts were matched based on previous data.21 Potential confounding factors related to intern teachers include differences in their clinical knowledge and skills and in their prior teaching experience. Unfortunately, the first factor is difficult to exclude. As for the second, although New Zealand medical graduates do not routinely receive recognized training in clinical teaching during or after medical school, senior medical students may gain ad hoc teaching experience before internship.

Because all 3 teaching hospitals are in the same city and are of similar size, study investigators were assured that the clinical workload, patient demographics, available specialties, and clinician-student ratio at these sites were comparable. They were, however, unable to control for interhospital differences in effectiveness of student teaching conducted by more senior clinicians.

Consideration should also be given to the possibility that RaT programs for interns may not necessarily bring about immediate changes to student learning. Like RaT programs, faculty development programs for senior clinicians also improve teaching skills and increase student satisfaction.30 It is rational to believe that improving the pedagogic skills of clinicians promotes learning by medical students. However, there is only limited evidence suggesting that the effectiveness of clinical teaching perceived by students can positively affect their performance and learning outcomes.30–32 Medical students are characteristically individuals with profound capacity to adapt and self-motivate, likely possessing the ability to achieve assessed learning outcomes, regardless of the teaching they receive. Even the best clinical teachers may, therefore, have only a minor role in student learning.

Furthermore, the study findings may be explained by a possible mismatch between the clinical skills and knowledge taught to students by surgical interns and those examined by the surgical OSCE, which is based on the clerkship's formal curriculum. Residents and interns teach a significant proportion of the “informal” clinical curriculum, where skills and knowledge are focused on the day-to-day aspects of patient management (procedural skills, patient communication, task prioritization, among others).33 A thorough understanding of the teaching contributions made by residents and interns is needed before effectiveness of their teaching can be truly determined.

RaT programs are likely to have medium- and long-term effects, which remain unexplored. Previous research has highlighted the effect of resident-initiated bedside teaching, skills coaching, and role modeling on self-evaluated "preparedness to practice" of final-year medical students.34–36 Mentoring by residents and interns is also known to influence future career choices of medical students, particularly attracting them to surgical specialties.37–39 How RaT programs influence these subsequent student outcomes has yet to be documented.

Conclusions

The HOT workshop improved reported clinical teaching behavior by attending interns. However, there were no demonstrable improvements in measured clerkship learning outcomes by the medical students supervised by the interns. Before further research can be conducted to investigate the effectiveness of resident- and intern-led teaching, the contributions made to the clerkship learning environment by these clinical teachers must be better defined and coupled with an understanding of how to accurately and reliably evaluate their effect on student learning.

Footnotes

Andrew G. Hill, MBChB, MD, FRACS, FACS, is Associate Professor at South Auckland Clinical School, Faculty of Medical and Health Sciences, University of Auckland, Middlemore Hospital, Otahuhu, Auckland, New Zealand; Sanket Srinivasa, MBChB, is Research Fellow at South Auckland Clinical School, Faculty of Medical and Health Sciences, University of Auckland, New Zealand; Susan J. Hawken, MBChB, FRNZCGP, MHSc(Hons), is Senior Lecturer of Department of Psychological Medicine at School of Medicine, Faculty of Medical and Health Sciences, University of Auckland, New Zealand; Mark Barrow, MSc, EdD, is Associate Dean of Education at Deanery, Faculty of Medical and Health Sciences, University of Auckland, New Zealand; Susan E. Farrell, MD, EdM, is Assistant Professor at the Center for Teaching and Learning, Harvard Medical School, Harvard University; John Hattie, BA, DipEd, MA(Hons), PhD, is Honorary Professor of Education at University of Auckland, New Zealand; and Tzu-Chieh Yu, MBChB, is Research Fellow at South Auckland Clinical School, Faculty of Medical and Health Sciences, University of Auckland, New Zealand.

Funding: The authors report no external funding source for this study.

The authors wish to acknowledge the study participants and thank the South Auckland Clinical School, School of Medicine, University of Auckland, for their assistance and support.

Items were rated on a 5-point Likert scale (1 = never/poor, 2 = seldom/mediocre, 3 = sometimes/good, 4 = often/very good, 5 = always/superb).

References

- 1. Hill AG, Yu TC, Barrow M, Hattie J. A systematic review of resident-as-teacher programmes. Med Educ. 2009;43(12):1129–1140. doi: 10.1111/j.1365-2923.2009.03523.x.

- 2. Barrow M. Medical students' opinions of the house officer as teacher. J Med Educ. 1966;41(8):807–810. doi: 10.1097/00001888-196608000-00010.

- 3. Bing-You RG, Sproul MS. Medical students' perceptions of themselves and residents as teachers. Med Teach. 1992;14(2–3):133–138. doi: 10.3109/01421599209079479.

- 4. Remmen R, Denekens J, Scherpbier A, Hermann I, van der Vleuten C, Roven PV, et al. An evaluation study of the didactic quality of clerkships. Med Educ. 2000;34(6):460–464. doi: 10.1046/j.1365-2923.2000.00570.x.

- 5. De SK, Henke PK, Ailawadi G, Dimick JB, Colletti LM. Attending, house officer, and medical student perceptions about teaching in the third-year medical school general surgery clerkship. J Am Coll Surg. 2004;199(6):932–942. doi: 10.1016/j.jamcollsurg.2004.08.025.

- 6. Whittaker LD, Jr, Estes NC, Ash J, Meyer LE. The value of resident teaching to improve student perceptions of surgery clerkships and surgical career choices. Am J Surg. 2006;191(3):320–324. doi: 10.1016/j.amjsurg.2005.10.029.

- 7. Brown RS. House staff attitudes toward teaching. J Med Educ. 1970;45(3):156–159. doi: 10.1097/00001888-197003000-00005.

- 8. Busari JO, Prince KJ, Scherpbier AJ, Van Der Vleuten CP, Essed GG. How residents perceive their teaching role in the clinical setting: a qualitative study. Med Teach. 2002;24(1):57–61. doi: 10.1080/00034980120103496.

- 9. Wilkerson L, Lesky L, Medio FJ. The resident as teacher during work rounds. J Med Educ. 1986;61(10):823–829. doi: 10.1097/00001888-198610000-00007.

- 10. Apter A, Metzger R, Glassroth J. Residents' perceptions of their roles as teachers. J Med Educ. 1988;63(12):900–905. doi: 10.1097/00001888-198812000-00003.

- 11. Sheets KJ, Hankin FM, Schwenk TL. Preparing surgery house officers for their teaching role. Am J Surg. 1991;161(4):443–449. doi: 10.1016/0002-9610(91)91109-v.

- 12. Lawson BK, Harvill LM. The evaluation of a training program for improving residents' teaching skills. J Med Educ. 1980;55(12):1000–1005. doi: 10.1097/00001888-198012000-00003.

- 13. Jewett LS, Greenberg LW, Goldberg RM. Teaching residents how to teach: a one-year study. J Med Educ. 1982;57(5):361–366. doi: 10.1097/00001888-198205000-00002.

- 14. Edwards JC, Kissling GE, Plauche WC, Marier RL. Evaluation of a teaching skills improvement programme for residents. Med Educ. 1988;22(6):514–517. doi: 10.1111/j.1365-2923.1988.tb00796.x.

- 15. Greenberg LW, Goldberg RM, Jewett LS. Teaching in the clinical setting: factors influencing residents' perceptions, confidence and behaviour. Med Educ. 1984;18(5):360–365. doi: 10.1111/j.1365-2923.1984.tb01283.x.

- 16. Bing-You RG, Greenberg LW. Training residents in clinical teaching skills: a resident-managed program. Med Teach. 1990;12(3–4):305–309. doi: 10.3109/01421599009006635.

- 17. Dunnington GL, DaRosa D. A prospective randomized trial of a residents-as-teachers training program. Acad Med. 1998;73(6):696–700. doi: 10.1097/00001888-199806000-00017.

- 18. Morrison EH, Rucker L, Boker JR, Gabbert CC, Hubbell FA, Hitchcock MA, et al. The effect of a 13-hour curriculum to improve residents' teaching skills: a randomized trial. Ann Intern Med. 2004;141(4):257–263. doi: 10.7326/0003-4819-141-4-200408170-00005.

- 19. Yu TC, Srinivasa S, Steff K, Vitas MR, Hawken SJ, Farrell SE, et al. House officer-as-teacher workshops: changing attitudes and perceptions towards clinical teaching. Focus Health Prof Educ. 2010;12(2):86–96.

- 20. Farrell SE, Pacella C, Egan D, Hogan V, Wang E, Bhatia K, et al. Resident-as-teacher: a suggested curriculum for emergency medicine. Acad Emerg Med. 2006;13(6):677–679. doi: 10.1197/j.aem.2005.12.014.

- 21. Yu T-CW, Wheeler BR, Hill AG. Effectiveness of standardized clerkship teaching across multiple sites. J Surg Res. 2011;168(1):e17–23. doi: 10.1016/j.jss.2009.09.035.

- 22. Neher JO, Gordon KC, Meyer B, Stevens N. A five-step "microskills" model of clinical teaching. J Am Board Fam Pract. 1992;5(4):419–424.

- 23. Wolpaw TM, Wolpaw DR, Papp KK. SNAPPS: a learner-centered model for outpatient education. Acad Med. 2003;78(9):893–898. doi: 10.1097/00001888-200309000-00010.

- 24. Ramani S. Twelve tips to improve bedside teaching. Med Teach. 2003;25(2):112–115. doi: 10.1080/0142159031000092463.

- 25. Copeland HL, Hewson MG. Developing and testing an instrument to measure the effectiveness of clinical teaching in an academic medical center. Acad Med. 2000;75(2):161–166. doi: 10.1097/00001888-200002000-00015.

- 26. Pritchard RD, Watson MD, Kelly K, Paquin AR. Helping Teachers Teach Well: A New System for Measuring and Improving Teaching Effectiveness in Higher Education. San Francisco, CA: New Lexington Press; 1998.

- 27. Beckman TJ, Ghosh AK, Cook DA, Erwin PJ, Mandrekar JN. How reliable are assessments of clinical teaching? a review of the published instruments. J Gen Intern Med. 2004;19(9):971–977. doi: 10.1111/j.1525-1497.2004.40066.x.

- 28. Litzelman DK, Stratos GA, Skeff KM. The effect of a clinical teaching retreat on residents' teaching skills. Acad Med. 1994;69(5):433–434. doi: 10.1097/00001888-199405000-00060.

- 29. Irby D, Rakestraw P. Evaluating clinical teaching in medicine. J Med Educ. 1981;56(3):181–186. doi: 10.1097/00001888-198103000-00004.

- 30. Steinert Y, Mann K, Centeno A, Dolmans D, Spencer J, Gelula M, et al. A systematic review of faculty development initiatives designed to improve teaching effectiveness in medical education: BEME guide no. 8. Med Teach. 2006;28(6):497–526. doi: 10.1080/01421590600902976.

- 31. Roop SA, Pangaro L. Effect of clinical teaching on student performance during a medicine clerkship. Am J Med. 2001;110(3):205–209. doi: 10.1016/s0002-9343(00)00672-0.

- 32. Marriott DJ, Litzelman DK. Students' global assessments of clinical teachers: a reliable and valid measure of teaching effectiveness. Acad Med. 1998;73(10)(suppl):S72–S74. doi: 10.1097/00001888-199810000-00050.

- 33. Tremonti LP, Biddle WB. Teaching behaviors of residents and faculty members. J Med Educ. 1982;57(11):854–859. doi: 10.1097/00001888-198211000-00006.

- 34. Gome JJ, Paltridge D, Inder WJ. Review of intern preparedness and education experiences in general medicine. Intern Med J. 2008;38(4):249–253. doi: 10.1111/j.1445-5994.2007.01502.x.

- 35. Cave J, Woolf K, Jones A, Dacre J. Easing the transition from student to doctor: how can medical schools help prepare their graduates for starting work. Med Teach. 2009;31(5):403–408. doi: 10.1080/01421590802348127.

- 36. Sheehan D, Wilkinson TJ, Billett S. Interns' participation and learning in clinical environments in a New Zealand hospital. Acad Med. 2005;80(3):302–308. doi: 10.1097/00001888-200503000-00022.

- 37. Ek EW, Ek ET, Mackay SD. Undergraduate experience of surgical teaching and its influence on career choice. ANZ J Surg. 2005;75(8):713–718. doi: 10.1111/j.1445-2197.2005.03500.x.

- 38. McCord JH, McDonald R, Sippel RS, Leverson G, Mahvi DM, Weber SM. Surgical career choices: the vital impact of mentoring. J Surg Res. 2009;155(1):136–141. doi: 10.1016/j.jss.2008.06.048.

- 39. Musunuru S, Lewis B, Rikkers LF, Chen H. Effective surgical residents strongly influence medical students to pursue surgical careers. J Am Coll Surg. 2007;204(1):164–167. doi: 10.1016/j.jamcollsurg.2006.08.029.