Abstract

Introduction

The direct fundoscopic examination is an important clinical skill, yet it is difficult to teach and competency is difficult to assess. Currently there is no defined proficiency assessment for this physical examination. The objective of this study was to assess the feasibility of a simulation model for evaluating the fundoscopic skills of residents.

Methods

Emergency medicine and ophthalmology residents participated in simulation sessions using a commercially available eye simulator that was modified with customized slides. The slides were designed to provide a quantifiable measure of visualization in addition to a more traditional descriptive outcome. To assess feasibility, we measured ease of use, time to perform the examination, and user satisfaction.

Results

The simulation could be completed in a timely fashion (mean time per slide, 61–95 seconds), and performance on this task did not differ significantly between emergency medicine and ophthalmology residents. Residents expressed an interest in fundoscopy through simulation but found this model technically challenging.

Conclusions

This simulation model has potential as a means of training and testing fundoscopy. However, user satisfaction was low, and the model needs further refinement.

Introduction

Proficiency in physical examination is a key component of accurate diagnosis. Despite the recognized importance of these skills, medical education has been criticized for a perceived decline in the physical examination skills of recent graduates.1 Direct ophthalmoscopy is frequently mentioned in discussions of this attrition of physical examination skills.1–4 While indirect ophthalmoscopy has largely replaced direct examination in the specialized practice of ophthalmologists, the ability to perform a fundoscopic examination on a nondilated eye remains important in general practice.

Multiple techniques have been used to teach and evaluate this examination skill, but currently there is no defined competency assessment. Some simulation models exist but are limited by low fidelity or by not having been designed with competency assessment in mind.5–7 The objective of this study was to assess the feasibility of a new model for competency assessment in fundoscopy.

Methods

Study Design

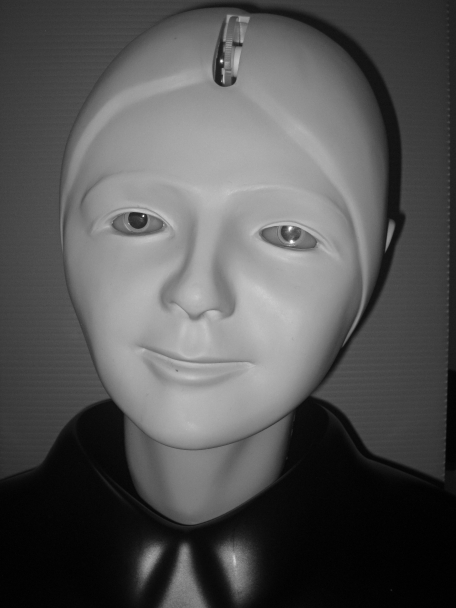

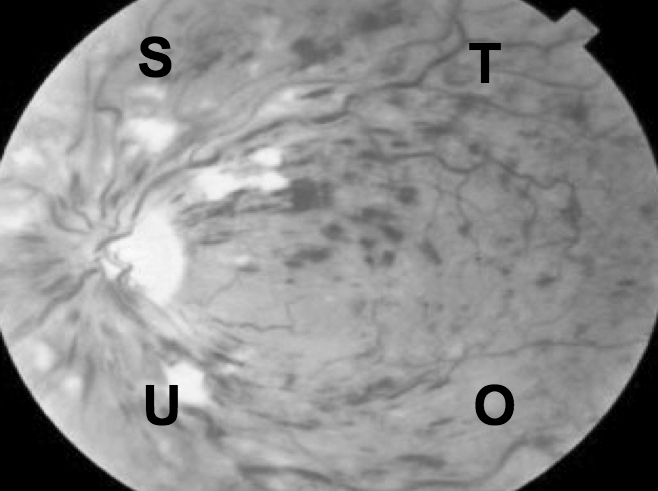

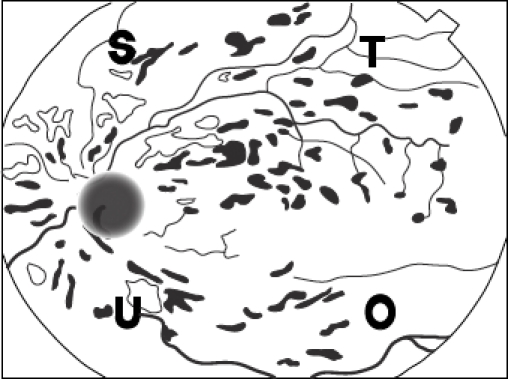

An existing product, the EYE Exam Simulator (Kyoto Kagaku Co; figure 1), was modified for our study. The life-sized mannequin head has an adjustable pupil and allows visualization of the fundus with a standard ophthalmoscope. The “fundus” is created by a set of removable 35-mm slides with a reflective backing. Building on the methodology of Bradley,7 we created slides that allow an observer to quantify the participant's visualization of the fundus. We chose fundoscopic pictures of common emergency diagnoses (including normal fundus, central retinal artery occlusion, central retinal vein occlusion, papilledema, and cytomegalovirus retinitis) and added letters to each corner of the image so that raters would be able to verify that participants obtained a complete view of the fundus (figure 2).

FIGURE 1.

“EYE Exam Simulator” by Kyoto Kagaku Co, Ltd

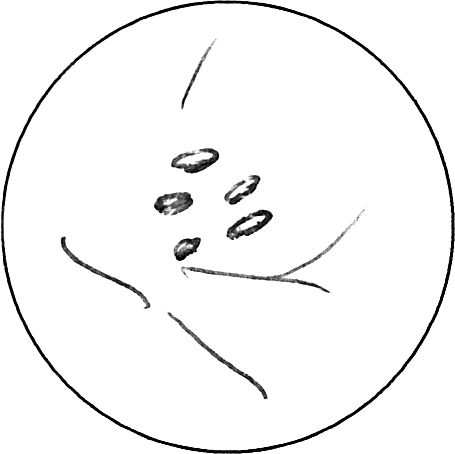

FIGURE 2.

Sample Slide: Central Retinal Vein Occlusion (CRVO) Modified With Letters Used for Markers in Corners

Image provided by The New York Eye and Ear Infirmary Robert Bendheim Digital Atlas of Ophthalmology, www.nyee.edu.

Selection of Participants

The population eligible for the study included emergency medicine (EM) residents (postgraduate year 1–4 [PGY-1–4]) and ophthalmology residents (PGY-2–4) at Northwestern University during 2009–2010. PGY-1 ophthalmology residents, who were completing a preliminary year, were excluded. Participants were recruited via e-mail in the summer of 2009. Data collection was performed one-on-one; written consent was waived, but participants were read an oral consent script. The Institutional Review Board granted exempt status to this study.

Participants provided demographic data and information on their previous experience, attempted direct visualization of the fundus with a handheld ophthalmoscope, and were instructed to draw everything they visualized and to record the pathology seen. They were not informed that there were markers on the slides. Finally, participants completed a brief survey about their impressions of the model.

Outcome Measures

The primary outcome of this study was the feasibility of this model as a quantifiable assessment of fundoscopic skills. To evaluate feasibility, we examined residents' opinions of the model, the time to perform the task, and residents' ability to recognize both the markers and the pathology.

Primary Data Analysis

All data were de-identified. Two blinded raters, trained to use a coding scheme, independently viewed each drawing and rated it for similarity to a prototype picture (1 = poor, 5 = excellent) (figures 3 and 4). A rating of 1 represented no attempt at a drawing or a “scribble” that was unrecognizable as any anatomic feature.
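As a rough illustration of how agreement between the two raters can be quantified, the following Python sketch computes unweighted Cohen's kappa for two raters who score the same drawings on the 1–5 scale. It is a minimal sketch under stated assumptions: the text does not say whether a weighted kappa was used, so the unweighted form is assumed here, and the function name and example scores are hypothetical rather than study data (the study's own analysis was performed in Stata 9).

```python
from collections import Counter
from typing import Sequence


def cohen_kappa(rater_a: Sequence[int], rater_b: Sequence[int]) -> float:
    """Unweighted Cohen's kappa for two raters scoring the same items.

    Hypothetical helper for illustration; scores are assumed to be
    integer ratings such as the 1-5 similarity scale.
    """
    if len(rater_a) != len(rater_b) or len(rater_a) == 0:
        raise ValueError("both raters must score the same, non-empty set of items")
    n = len(rater_a)
    # Observed agreement: fraction of items given identical scores.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of each rater's marginal frequency per score.
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    p_expected = sum(counts_a[score] * counts_b[score] for score in counts_a) / (n * n)
    if p_expected == 1.0:
        # Both raters used one identical score for every item; kappa is undefined.
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (p_observed - p_expected) / (1 - p_expected)
```

For instance, two hypothetical raters who agree on 4 of 6 drawings and share the same score distribution obtain a kappa of 5/11 (about 0.45), lower than the raw 67% agreement because part of that agreement is expected by chance alone.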

FIGURE 3.

Index Drawing of CRVO

FIGURE 4.

Sample Student Drawing of CRVO

Means, standard deviations (SD), and Student t-test results were calculated for normally distributed data. Medians (interquartile ranges) and the Mann-Whitney U test were used for survey data and completeness of visualization, for which normality could not be assumed. Pearson χ² tests were used for categorical data, including recognition of pathology on slides. Interrater reliability was calculated using the Cohen kappa coefficient. All statistical analyses were performed using Stata 9 software (Stata Corp, College Station, TX).
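The Pearson χ² comparison of a categorical outcome, such as whether pathology was recognized in each specialty, reduces to a closed-form statistic for a 2×2 table. The Python sketch below assumes a 2×2 layout and no continuity correction; the function name and the counts in the example are hypothetical and are not study data.

```python
import math
from typing import Tuple


def pearson_chi2_2x2(a: int, b: int, c: int, d: int) -> Tuple[float, float]:
    """Pearson chi-square test for a 2x2 table, without continuity correction.

    Hypothetical layout assumed here:
                    recognized   not recognized
        group 1         a              b
        group 2         c              d

    Returns (chi-square statistic, p-value for 1 degree of freedom).
    """
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    if 0 in (row1, row2, col1, col2):
        raise ValueError("all row and column totals must be positive")
    # Closed form for a 2x2 table; equals the sum of (observed - expected)^2 / expected.
    chi2 = n * (a * d - b * c) ** 2 / (row1 * row2 * col1 * col2)
    # With 1 degree of freedom, chi2 = Z^2, so the upper-tail p-value is erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value
```

For a hypothetical table of 20/10 versus 10/20, `pearson_chi2_2x2(20, 10, 10, 20)` returns χ² = 20/3 (about 6.67) with p of about 0.01. When expected cell counts are very small, the χ² approximation becomes unreliable and an exact test is the usual alternative.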

Results

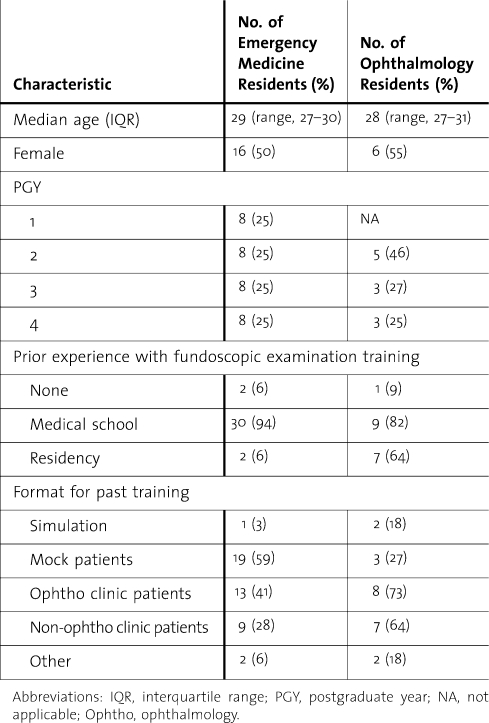

Thirty-two of 46 (70%) EM and 11 of 12 (92%) ophthalmology residents completed the evaluation. Most participants had previous training in fundoscopy; however, only 7% had used a fundoscopic simulator (table 1).

TABLE 1.

Characteristics of Participants

The mean number of markers identified was 0.82 of 4. Marker identification improved progressively over the course of the experiment, from 11.6% of markers identified on Slide 1 to 22.8% on Slide 4 (N = 172 markers, P = .006). Post hoc analysis showed no significant differences between specialties or training levels.

Pathology was correctly identified on only 6 of a possible 215 slide views (5 slides × 43 residents; 2.8%). The mean time spent per slide ranged from 61.4 to 68.3 seconds for EM residents and from 68.4 to 95.6 seconds for ophthalmology residents. Ophthalmology residents tended to spend more time per slide, and this difference was statistically significant for 2 slides (Slide 3, P = .03; Slide 5, P = .01).

The drawing analysis revealed generally low scores but no difference between groups. EM residents had an average score of 1.13 (range, 1–1.9), and ophthalmology residents had an average score of 1.16 (range, 1–1.6). Scores this close to 1 indicate that many participants did not attempt a drawing and that attempted drawings were of poor quality. Agreement between the raters was good (kappa coefficient = 0.6).

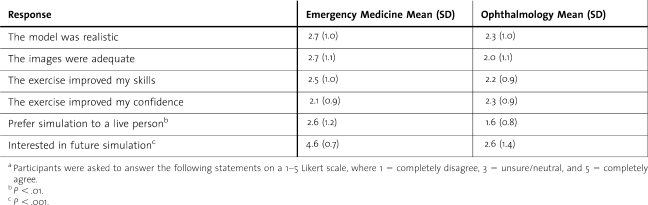

Table 2 displays the results of the participants' assessment of the model. EM residents preferred simulation to live patients for learning this skill (P = .01) and were more interested in future simulation training (P < .001).

TABLE 2.

Survey Results Data

Discussion

Dr L. F. Herbert wrote, “examination of the eye is not stressed and often it is forgotten unless the eye is posing an obvious and immediate problem.”2 The advent of innovative technologies has eroded the perceived utility of some physical examination skills1; however, in an eye emergency, there is no proven replacement for direct ophthalmoscopy. When surveyed, most physicians recognize both the importance of this examination skill and their lack of proficiency in performing it.1,3,8 In fact, performing a nondilated fundoscopic examination received the lowest self-confidence rating of all examination skills.1 Similarly, our EM participants reported low self-confidence in performing fundoscopy.

Although practicing fundoscopy on fellow learners is realistic, an observer has no way to verify what the student visualizes. Previous simulation models have made an important first step toward objectively assessing ophthalmoscopic skills but have been limited by nonanatomic design.5–7,9 The EYE Exam Simulator avoids some of the shortcomings of earlier simulated eyes (backlighting, wider field of vision, nonanatomic pupil size, and lack of a red reflex).

Unfortunately, in our study, most participants did not identify the markers or the pathology. Because there is no gold standard for this competency, we cannot determine whether the poor performance reflected a low skill level or was attributable to the model. Not informing participants about the markers on the slides may have affected performance; however, we believe this is unlikely, because nearly 90% of participants identified markers on one or more slides. Participants infrequently attempted to draw the pathology, and when drawings were made, it was difficult for raters to distinguish an errant scribble from an intentional drawing of a blood vessel. A small number of residents successfully completed both marker and pathology identification, demonstrating that both tasks were achievable. We believe that the low rates of drawing and of pathology identification were linked and were likely attributable to poor light penetration of the slides (see limitations).

We assessed differences between EM and ophthalmology residents. Survey data support the hypothesis that ophthalmology residents are more comfortable with fundoscopy at baseline. Interestingly, they did not identify more markers or recognize more pathology. This may be because ophthalmology residents prefer indirect ophthalmoscopy and receive most of their training in that modality. However, the lack of a difference between the two groups could also reflect an inadequate model or insufficient statistical power.

To assess the feasibility of this model, we sought feedback from participants. Overall, the feedback was neutral and did not indicate support for training with this model, although EM residents indicated interest in future simulation training. The lack of enthusiasm for our model, while frustrating, highlights the challenge of assessing these skills. One lesson learned in developing the model is that pilot testing for deficiencies before full study enrollment could have improved the fidelity of the simulation.

Our study and the model have several limitations. The sample size was small, and the images used to create the slides were generally darker than the images commercially produced by Kyoto Kagaku Co, which may have decreased the visibility of landmarks. In addition, the eccentric placement of the markers may have limited participants' ability to see all of them. For readers considering a similar design, we recommend creating customized slides with greater light penetration and placing the slide markers more centrally.

Conclusion

Fundoscopy on our simulated model could be performed in a brief period of time, and the model is adaptable with respect to pathology. Unfortunately, many residents found the images dark, the markers placed too eccentrically, and the lens of the model too reflective. We believe that the model requires further refinement and comparative testing against other models before a final determination about its usefulness is made. Because the slides were simple and inexpensive to produce, educators could readily tailor this model to their learners' needs. We hope our experience will help inform future efforts to create a fundoscopic model that allows objective assessment of this skill.

Footnotes

All authors are at the Feinberg School of Medicine, Northwestern University. Danielle M. McCarthy, MD, is a Clinical Instructor, Department of Emergency Medicine; Heather R. Leonard, MD, is a Resident, Department of Emergency Medicine; and John A. Vozenilek, MD, is an Associate Professor, Department of Emergency Medicine and Director of the Simulation Technology and Immersive Learning Center.

Funding: The authors report no external funding source for this study.

References

1. Wu EH, Fagan MJ, Reinert SE, Diaz JA. Self-confidence in and perceived utility of the physical examination: a comparison of medical students, residents, and faculty internists. J Gen Intern Med. 2007;22:1725–1730. doi: 10.1007/s11606-007-0409-8.

2. Herbert LF. Looking Back (and Forth) Reflections of an Old-Fashioned Doctor. Macon, GA: Mercer University Press; 2003. Requiem for an ophthalmoscope; pp. 60–62.

3. Roberts E, Morgan R, King D, Clerkin L. Funduscopy: a forgotten art. Postgrad Med J. 1999;75:282–284. doi: 10.1136/pgmj.75.883.282.

4. Jacobs DS. Teaching doctors about the eye: trends in the education of medical students and primary care residents. Surv Ophthalmol. 1998;42:383–389. doi: 10.1016/s0039-6257(97)00121-5.

5. Penta FB, Kofman S. The effectiveness of simulation devices in teaching selected skills of physical diagnosis. J Med Educ. 1973;48:442–445. doi: 10.1097/00001888-197305000-00005.

6. Chung KD, Watzke RC. A simple device for teaching direct ophthalmoscopy to primary care practitioners. Am J Ophthalmol. 2004;138:501–502. doi: 10.1016/j.ajo.2004.04.009.

7. Bradley P. A simple eye model to objectively assess ophthalmoscopic skills of medical students. Med Educ. 1999;33:592–595. doi: 10.1046/j.1365-2923.1999.00370.x.

8. Gupta RR, Lam WC. Medical students' self-confidence in performing direct ophthalmoscopy in clinical training. Can J Ophthalmol. 2006;41:169–174. doi: 10.1139/I06-004.

9. Levy A, Churchill AJ. Training and testing competence in direct ophthalmoscopy. Med Educ. 2003;37:483–484. doi: 10.1046/j.1365-2923.2003.01502_13.x.