Abstract

Although nonnegative matrix factorization (NMF) favors a sparse and part-based representation of nonnegative data, there is no guarantee for this behavior. Several authors have proposed NMF methods which enforce sparseness by constraining or penalizing the $\ell^1$-norm of the factor matrices. On the other hand, little work has been done using a more natural sparseness measure, the $\ell^0$-pseudo-norm. In this paper, we propose a framework for approximate NMF which constrains the $\ell^0$-norm of the basis matrix, or the coefficient matrix, respectively. For this purpose, techniques for unconstrained NMF can be easily incorporated, such as multiplicative update rules, or the alternating nonnegative least-squares scheme. In experiments we demonstrate the benefits of our methods, which compare to, or outperform, existing approaches.

Keywords: NMF, Sparse coding, Nonnegative least squares

1. Introduction

Nonnegative matrix factorization (NMF) aims to factorize a nonnegative matrix $\mathbf{X}$ into a product of nonnegative matrices $\mathbf{W}$ and $\mathbf{H}$. We can distinguish between exact NMF, i.e. $\mathbf{X} = \mathbf{W}\mathbf{H}$, and approximate NMF, i.e. $\mathbf{X} \approx \mathbf{W}\mathbf{H}$. For approximate NMF, which seems more relevant for practical applications, one needs to define a divergence measure between the data $\mathbf{X}$ and its reconstruction $\mathbf{W}\mathbf{H}$, such as the Frobenius norm or the generalized Kullback–Leibler divergence [1]. Approaches using more generalized measures, such as Bregman divergences or the $\alpha$-divergence, can be found in [2,3]. In this paper, we focus on approximate NMF using the Frobenius norm as objective. Let the dimensions of $\mathbf{X}$, $\mathbf{W}$ and $\mathbf{H}$ be $D \times N$, $D \times K$, and $K \times N$, respectively. When the columns of $\mathbf{X}$ are multidimensional measurements of some process, the columns of $\mathbf{W}$ gain the interpretation of basis vectors, while $\mathbf{H}$ contains the corresponding weights. The number of basis vectors (or inner approximation rank) $K$ is typically assumed to be $K \ll \min(D, N)$. Hence, NMF is typically used as a compressive technique.

Originally proposed by Paatero and Tapper under the term positive matrix factorization [4], NMF became widely known due to the work of Lee and Seung [5,1]. One reason for its popularity is that the multiplicative update rules proposed in [1] are easy to implement. Furthermore, since these algorithms rely only on matrix multiplication and element-wise multiplication, they are fast on systems with well-tuned linear algebra methods. The main reason for its popularity, however, is that NMF tends to return a sparse and part-based representation of its input data, which makes its application interesting in areas such as computer vision [5], speech and audio processing [6–9], document clustering [10], to name but a few. This naturally occurring sparseness gives NMF a special status compared to other matrix factorization methods such as principal/independent component analysis or k-means clustering.

However, sparsity in NMF occurs as a by-product of the nonnegativity constraints, rather than being a design objective of its own. Various authors have proposed modified NMF algorithms which explicitly enforce sparseness. These methods usually penalize [11,12] or constrain [13] the $\ell^1$-norm of $\mathbf{H}$ or $\mathbf{W}$, which is known to yield a sparse representation [14,15]. An explanation for the sparseness-inducing nature of the $\ell^1$-norm is that it can be interpreted as a convex relaxation of the $\ell^0$-(pseudo)-norm, i.e. the number of non-zero entries in a vector. Indeed, the $\ell^0$-norm is a more intuitive sparseness measure, which allows one to specify a certain number of non-zero entries, while similar statements cannot be made via the $\ell^1$-norm. Introducing the non-convex $\ell^0$-norm as a constraint function typically renders a problem NP-hard, requiring exhaustive combinatorial search. However, Vavasis [16] has shown that NMF is NP-hard per se, so we have to accept (most probably) that any algorithm for NMF is suboptimal. Hence, a heuristic method for NMF with $\ell^0$-constraints might be just as appropriate and efficient as $\ell^1$-sparse NMF.

Little work is concerned with $\ell^0$-sparse NMF. The K-SVD algorithm [17] aims to find an overcomplete dictionary $\mathbf{W}$ for sparse representation of a set of training signals $\mathbf{X}$. The algorithm minimizes the approximation error between data $\mathbf{X}$ and its reconstruction $\mathbf{W}\mathbf{H}$, where $\ell^0$-constraints are imposed on the columns of $\mathbf{H}$. The nonnegative version of K-SVD [18] additionally constrains all matrices to be nonnegative. Hence, nonnegative K-SVD can be interpreted as NMF with $\ell^0$-sparseness constraints on the columns of $\mathbf{H}$. Probabilistic sparse matrix factorization (PSMF) [19] is closely related to K-SVD; however, no nonnegative PSMF has been proposed so far. Morup et al. [20] proposed an approximate NMF algorithm which constrains the $\ell^0$-norm of the $\mathbf{H}$ columns, using a nonnegative version of least angle regression and selection [21]. For the $\mathbf{W}$ update, they used the normalization-invariant update rule described in [12]. In [22], a method for NMF was described which penalizes a smoothed $\ell^0$-norm of the matrix $\mathbf{H}$. Using this smooth approximation of the $\ell^0$-norm, they were able to derive multiplicative update rules, similar to those in [1]. More details about prior art concerned with $\ell^0$-sparse NMF can be found in Section 2.4.

In this paper, we propose two generic strategies to compute an approximate NMF with $\ell^0$-constraints on the columns of $\mathbf{W}$ or $\mathbf{H}$, respectively. For NMF with $\ell^0$-constraints on $\mathbf{H}$, the key challenge is to find a good approximate solution for the nonnegative sparse coding problem, which is NP-hard in general [23]. For sparse coding without nonnegativity constraints, a popular approximation algorithm is orthogonal matching pursuit (OMP) [24], due to its simplicity and theoretical guarantees [25,26]. Here, we show a close relation between OMP and the active-set algorithm for nonnegative least squares (NNLS) [27], which, to the best of our knowledge, has been overlooked in the literature so far. As a consequence, we propose a simple modification of NNLS, called sparse NNLS (sNNLS), which represents a natural integration of nonnegativity constraints in OMP. Furthermore, we propose an algorithm called reverse sparse NNLS (rsNNLS), which uses a reversed matching pursuit principle. This algorithm shows the best performance of all sparse coders in our experiments, and competes with or outperforms nonnegative basis pursuit (NNBP). Note that basis pursuit usually delivers better results than algorithms from the matching pursuit family, while requiring more computational resources [28,25]. For the second stage in our framework, which updates $\mathbf{W}$ and the non-zero coefficients in $\mathbf{H}$, we show that the standard multiplicative update rules [1] can be used without any modification. Also, we propose a sparseness-maintaining active-set algorithm for NNLS, which allows us to apply an alternating least-squares scheme for $\ell^0$-sparse NMF.

Furthermore, we propose an algorithm for NMF with $\ell^0$-sparse columns in $\mathbf{W}$. As far as we know, no method exists for this problem so far. The proposed algorithm follows a similar approach as $\ell^1$-sparse NMF [13], projecting the columns of $\mathbf{W}$ onto the closest vectors with desired sparseness after each update step. In experiments, the algorithm runs much faster than the $\ell^1$-sparse method. Furthermore, the results of the proposed method are significantly sparser in terms of the $\ell^0$-norm while achieving the same reconstruction quality.

Throughout the paper, we use the following notation. Upper-case boldface letters denote matrices. For sets we use Fraktur letters, e.g. $\mathfrak{P}$. A lower-case boldface letter denotes a column vector. A lower-case boldface letter with a subscript index denotes a specific column of the matrix denoted by the same upper-case letter, e.g. $\mathbf{x}_i$ is the $i$th column of matrix $\mathbf{X}$. Lower-case letters with subscripts denote specific entries of a vector or a matrix, e.g. $x_i$ is the $i$th entry of $\mathbf{x}$ and $x_{i,j}$ is the entry in the $i$th row and the $j$th column of $\mathbf{X}$. A matrix symbol subscripted with a set symbol denotes the submatrix consisting of the columns which are indexed by the elements of the set, e.g. $\mathbf{W}_{\mathfrak{P}}$ is the sub-matrix of $\mathbf{W}$ containing the columns indexed by $\mathfrak{P}$. Similarly, a vector subscripted by a set symbol is the sub-vector containing the entries indexed by the set. With $\|\cdot\|_p$, $p \geq 1$, we denote the $\ell^p$-norm: $\|\mathbf{x}\|_p = \left(\sum_i |x_i|^p\right)^{1/p}$. Further, $\|\cdot\|_0$ denotes the $\ell^0$-pseudo-norm, i.e. the number of non-zero entries in the argument. The Frobenius norm is defined as $\|\mathbf{X}\|_F = \left(\sum_{i,j} |x_{i,j}|^2\right)^{1/2}$.

The paper is organized as follows. In Section 2 we discuss NMF techniques related to our work. We present our framework for $\ell^0$-sparse NMF in Section 3. Experiments are presented in Section 4, and Section 5 concludes the paper.

2. Related work

Let us formalize NMF as the following optimization problem:

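$$\min_{\mathbf{W},\mathbf{H}} \ \|\mathbf{X} - \mathbf{W}\mathbf{H}\|_F \quad \text{s.t.} \quad \mathbf{W} \geq 0,\ \mathbf{H} \geq 0, \tag{1}$$

where $\geq$ denotes the element-wise greater-or-equal operator.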

2.1. NMF via multiplicative updates

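Lee and Seung [1] showed that $\|\mathbf{X} - \mathbf{W}\mathbf{H}\|_F$ is nonincreasing under the multiplicative update rules

$$\mathbf{H} \leftarrow \mathbf{H} \otimes \frac{\mathbf{W}^{T}\mathbf{X}}{\mathbf{W}^{T}\mathbf{W}\mathbf{H}} \tag{2}$$

and

$$\mathbf{W} \leftarrow \mathbf{W} \otimes \frac{\mathbf{X}\mathbf{H}^{T}}{\mathbf{W}\mathbf{H}\mathbf{H}^{T}}, \tag{3}$$

where $\otimes$ and $/$ denote element-wise multiplication and division, respectively. Obviously, these update rules preserve nonnegativity of $\mathbf{W}$ and $\mathbf{H}$, given that $\mathbf{X}$ is element-wise nonnegative.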
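The two update rules can be sketched in a few lines of plain Python. This is a minimal illustration rather than the authors' implementation: the helper names (`matmul`, `scale`, `multiplicative_step`), the matrix sizes, and the small constant `eps` added to the denominators to avoid division by zero are all assumptions made for the example.

```python
import random


def matmul(A, B):
    """Product of two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def transpose(A):
    return [list(col) for col in zip(*A)]


def frobenius_error(X, W, H):
    """Frobenius norm of the residual X - WH."""
    R = matmul(W, H)
    return sum((x - r) ** 2 for xs, rs in zip(X, R) for x, r in zip(xs, rs)) ** 0.5


def scale(A, num, den, eps):
    """Element-wise A * num / (den + eps)."""
    return [[a * n / (d + eps) for a, n, d in zip(ar, nr, dr)]
            for ar, nr, dr in zip(A, num, den)]


def multiplicative_step(X, W, H, eps=1e-12):
    """Apply update (2) to H, then update (3) to W (eps is an assumed safeguard)."""
    Wt = transpose(W)
    H = scale(H, matmul(Wt, X), matmul(matmul(Wt, W), H), eps)
    Ht = transpose(H)
    W = scale(W, matmul(X, Ht), matmul(W, matmul(H, Ht)), eps)
    return W, H


random.seed(0)
D, N, K = 6, 8, 3                      # illustrative sizes
X = [[random.random() for _ in range(N)] for _ in range(D)]
W = [[random.random() for _ in range(K)] for _ in range(D)]
H = [[random.random() for _ in range(N)] for _ in range(K)]

errors = [frobenius_error(X, W, H)]
for _ in range(50):
    W, H = multiplicative_step(X, W, H)
    errors.append(frobenius_error(X, W, H))
print(errors[0], errors[-1])
```

The recorded `errors` list makes the nonincreasing behavior of the objective directly observable on a small random problem.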

2.2. NMF via alternating nonnegative least squares

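Paatero and Tapper [4] originally suggested to solve (1) alternately for $\mathbf{W}$ and $\mathbf{H}$, i.e. to iterate the following two steps:

$$\mathbf{H} \leftarrow \arg\min_{\mathbf{H}} \|\mathbf{X} - \mathbf{W}\mathbf{H}\|_F \quad \text{s.t.} \quad \mathbf{H} \geq 0,$$

$$\mathbf{W} \leftarrow \arg\min_{\mathbf{W}} \|\mathbf{X} - \mathbf{W}\mathbf{H}\|_F \quad \text{s.t.} \quad \mathbf{W} \geq 0.$$

Note that $\|\mathbf{X} - \mathbf{W}\mathbf{H}\|_F$ is convex in either $\mathbf{W}$ or $\mathbf{H}$, respectively, but non-convex in $\mathbf{W}$ and $\mathbf{H}$ jointly. An optimal solution for each sub-problem is found by solving a nonnegatively constrained least-squares problem (NNLS) with multiple right-hand sides (i.e. one for each column of $\mathbf{X}$). Consequently, this scheme is called alternating nonnegative least squares (ANLS). For this purpose, we can use the well-known active-set algorithm by Lawson and Hanson [27], which for convenience is shown in Algorithm 1. The symbol $\dagger$ denotes the pseudo-inverse.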

Algorithm 1

Active-set NNLS [27].

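1: $\mathfrak{Z} = \{1, \dots, K\}$, $\mathfrak{P} = \emptyset$
2: $\mathbf{h} = \mathbf{0}$
3: $\mathbf{r} = \mathbf{x}$
4: $\mathbf{a} = \mathbf{W}^{T}\mathbf{r}$
5: while $|\mathfrak{Z}| > 0$ and $\exists\, i \in \mathfrak{Z}: a_i > 0$ do
6:   $i^* = \arg\max_i a_i$
7:   $\mathfrak{Z} \leftarrow \mathfrak{Z} \setminus \{i^*\}$
8:   $\mathfrak{P} \leftarrow \mathfrak{P} \cup \{i^*\}$
9:   $\mathbf{z}_{\mathfrak{P}} = \mathbf{W}_{\mathfrak{P}}^{\dagger}\,\mathbf{x}$
10:   $\mathbf{z}_{\mathfrak{Z}} = \mathbf{0}$
11:   while $\exists\, j \in \mathfrak{P}: z_j \leq 0$ do
12:     $\alpha = \min_{k \in \mathfrak{P},\, z_k \leq 0} \; h_k / (h_k - z_k)$
13:     $\mathbf{h} \leftarrow \mathbf{h} + \alpha\,(\mathbf{z} - \mathbf{h})$
14:     $\mathfrak{Z} \leftarrow \{i \mid h_i = 0\}$
15:     $\mathfrak{P} \leftarrow \{i \mid h_i > 0\}$
16:     $\mathbf{z}_{\mathfrak{P}} = \mathbf{W}_{\mathfrak{P}}^{\dagger}\,\mathbf{x}$
17:     $\mathbf{z}_{\mathfrak{Z}} = \mathbf{0}$
18:   end while
19:   $\mathbf{h} \leftarrow \mathbf{z}$
20:   $\mathbf{r} = \mathbf{x} - \mathbf{W}\mathbf{h}$
21:   $\mathbf{a} = \mathbf{W}^{T}\mathbf{r}$
22: end while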

This algorithm solves NNLS for a single right-hand side, i.e. it returns $\arg\min_{\mathbf{h}} \|\mathbf{x} - \mathbf{W}\mathbf{h}\|_2$, s.t. $\mathbf{h} \geq 0$, for an arbitrary vector $\mathbf{x}$. The active set $\mathfrak{Z}$ and the in-active set $\mathfrak{P}$ contain disjointly the indices of the $\mathbf{h}$ entries. Entries with indices in $\mathfrak{Z}$ are held at zero (i.e. the nonnegativity constraints are active), while entries with indices in $\mathfrak{P}$ have positive values. In the outer loop of Algorithm 1, indices are moved from $\mathfrak{Z}$ to $\mathfrak{P}$, until an optimal solution is obtained (cf. [27] for details). The inner loop (steps 11–18) corrects the tentative, possibly infeasible (i.e. negative) solution $\mathbf{z}$ to a feasible one. Note that $i^*$ is guaranteed to be an element of $\mathfrak{Z}$ in step 6, since the residual is orthogonal to every column in $\mathbf{W}_{\mathfrak{P}}$, and hence $\mathbf{a}_{\mathfrak{P}} = \mathbf{0}$. Lawson and Hanson [27] showed that the outer loop has to terminate eventually, since the residual error strictly decreases in each iteration, which implies that no in-active set $\mathfrak{P}$ is considered twice. Unfortunately, there is no polynomial runtime guarantee for NNLS. However, they report that the algorithm typically behaves well in practice.

For ANLS, one can apply Algorithm 1 to all columns of $\mathbf{X}$ independently, in order to solve for $\mathbf{H}$. However, a more efficient variant of NNLS with multiple right-hand sides was proposed in [29]. This algorithm executes Algorithm 1 quasi-parallel for all columns in $\mathbf{X}$, and solves step 16 (least squares) jointly for all columns sharing the same in-active set $\mathfrak{P}$. Kim and Park [30] applied this more efficient variant to ANLS-NMF. Alternatively, NNLS can be solved using numerical approaches, such as the projected gradient algorithm proposed in [31]. For the remainder of the paper, we use the notation $\mathbf{h} = \mathrm{NNLS}(\mathbf{x}, \mathbf{W})$ to state that $\mathbf{x}$ is approximated by $\mathbf{W}\mathbf{h}$ in NNLS sense. Similarly, $\mathbf{H} = \mathrm{NNLS}(\mathbf{X}, \mathbf{W})$ denotes the solution of an NNLS problem with multiple right-hand sides.

2.3. Sparse NMF

Various extensions have been proposed in order to incorporate sparsity in NMF, where sparseness is typically measured via some function of the $\ell^1$-norm. Hoyer [11] proposed an algorithm to minimize an objective of the form $\|\mathbf{X} - \mathbf{W}\mathbf{H}\|_F^2 + \lambda \sum_{i,j} h_{i,j}$, which penalizes the $\ell^1$-norm of the coefficient matrix $\mathbf{H}$. Eggert and Koerner [12] used the same objective, but proposed an alternative update which implicitly normalizes the columns of $\mathbf{W}$ to unit length. Furthermore, Hoyer [13] defined the following sparseness function for an arbitrary vector $\mathbf{x}$:

$$\mathrm{sparseness}(\mathbf{x}) = \frac{\sqrt{D} - \|\mathbf{x}\|_1 / \|\mathbf{x}\|_2}{\sqrt{D} - 1}, \tag{4}$$

where $D$ is the dimensionality of $\mathbf{x}$. Indeed, $\mathrm{sparseness}(\mathbf{x})$ is 0 if all entries of $\mathbf{x}$ are non-zero and their absolute values are all equal, and 1 when only one entry is non-zero. For all other $\mathbf{x}$, the function smoothly interpolates between these extreme cases. Hoyer provided an NMF algorithm which constrains the sparseness of the columns of $\mathbf{W}$, the rows of $\mathbf{H}$, or both, to any desired sparseness value according to (4). There are further approaches which aim to achieve a part-based and sparse representation, such as local NMF [32] and non-smooth NMF [33].
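The behavior of the sparseness function (4) at its two extremes can be checked with a short sketch. The function name `hoyer_sparseness` and the test vectors are illustrative choices, and the sketch assumes a non-zero input vector with at least two entries.

```python
import math


def hoyer_sparseness(x):
    """Sparseness measure (4) for a non-zero vector x with at least two entries."""
    d = len(x)
    l1 = sum(abs(v) for v in x)
    l2 = math.sqrt(sum(v * v for v in x))
    return (math.sqrt(d) - l1 / l2) / (math.sqrt(d) - 1.0)


dense = hoyer_sparseness([2.0, 2.0, 2.0, 2.0])    # all entries equal
single = hoyer_sparseness([0.0, 0.0, 5.0, 0.0])   # exactly one non-zero entry
mixed = hoyer_sparseness([1.0, 0.0, 3.0, 0.0])    # strictly between the extremes
print(dense, single, mixed)
```

Note that the measure depends only on the ratio of the $\ell^1$-norm to the $\ell^2$-norm, so it is invariant to rescaling of the vector but does not directly count non-zero entries.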

2.4. Prior art for $\ell^0$-sparse NMF

As mentioned in the Introduction, relatively few approaches exist for $\ell^0$-sparse NMF. The K-SVD algorithm [17] aims to minimize $\|\mathbf{X} - \mathbf{W}\mathbf{H}\|_F$ subject to $\|\mathbf{h}_i\|_0 \leq L$ for all columns $i$, where $L$ is the maximal number of non-zero coefficients per column of $\mathbf{H}$. Hence, the nonnegative version of K-SVD (NNK-SVD) [18] can be considered as an NMF algorithm with $\ell^0$-sparseness constraints on the columns of $\mathbf{H}$. However, the sparse coding stage in nonnegative K-SVD is rather an ad hoc solution, using an approximate version of nonnegative basis pursuit [28]. For the $\mathbf{W}$ update stage, the K-SVD dictionary update is modified by simply truncating negative values to zero after each iteration of an SVD approximation.

In [20], an algorithm for NMF with $\ell^0$-sparseness constraints on the $\mathbf{H}$ columns was proposed. For the sparse coding stage, they used a nonnegative version of least angle regression and selection (LARS) [21], called NLARS. This algorithm returns a so-called solution path of an $\ell^1$-regularized objective of the form $\|\mathbf{x} - \mathbf{W}\mathbf{h}\|_2^2 + \lambda \|\mathbf{h}\|_1$ using an active-set algorithm, i.e. it returns several solution vectors with varying $\lambda$. For a specific column $\mathbf{x}$ out of $\mathbf{X}$, one takes the solution with the desired $\ell^0$-norm (when there are several such vectors, one selects the solution with the smallest regularization parameter $\lambda$). Repeating this for each column of $\mathbf{X}$, one obtains a nonnegative coding matrix $\mathbf{H}$ with $\ell^0$-sparseness on its columns. To update $\mathbf{W}$, the authors used the self-normalizing multiplicative update rule described in [12].

In [22], an objective is considered which adds to the reconstruction error a smoothed $\ell^0$ penalty on $\mathbf{H}$, weighted by a trade-off parameter. As the smoothing parameter tends to zero, this second term converges to the trade-off parameter times the number of non-zero entries in $\mathbf{H}$. Contrary to the first two approaches, which constrain the $\ell^0$-norm, this method calculates an NMF which penalizes the smoothed $\ell^0$-norm, where the penalization strength is controlled by the trade-off parameter. Therefore, the latter approach proceeds similarly to the NMF methods which penalize the $\ell^1$-norm [11,12]. However, note that a trade-off parameter for a penalization term is generally not easy to choose, while $\ell^0$-sparseness constraints have an immediate meaning.

3. Sparse NMF with $\ell^0$-constraints

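We now introduce our methods for NMF with $\ell^0$-constraints on the columns of $\mathbf{W}$ and $\mathbf{H}$, respectively. Formally, we consider the problems

$$\min_{\mathbf{W},\mathbf{H}} \ \|\mathbf{X} - \mathbf{W}\mathbf{H}\|_F \quad \text{s.t.} \quad \mathbf{W} \geq 0,\ \mathbf{H} \geq 0,\ \|\mathbf{h}_i\|_0 \leq L \ \ \forall i \tag{5}$$

and

$$\min_{\mathbf{W},\mathbf{H}} \ \|\mathbf{X} - \mathbf{W}\mathbf{H}\|_F \quad \text{s.t.} \quad \mathbf{W} \geq 0,\ \mathbf{H} \geq 0,\ \|\mathbf{w}_i\|_0 \leq L \ \ \forall i. \tag{6}$$

We refer to problems (5) and (6) as NMF$\ell^0$-H and NMF$\ell^0$-W, respectively. Parameter $L$ is the maximal allowed number of non-zero entries in $\mathbf{h}_i$ or $\mathbf{w}_i$.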

For NMF$\ell^0$-H, the sparseness constraints imply that each column in $\mathbf{X}$ is represented by a conical combination of at most $L$ nonnegative basis vectors. When we interpret the columns of $\mathbf{W}$ as features, this means that each data sample is represented by at most $L$ features, where typically $L \ll K$. Nevertheless, if the reconstruction error is small, this implies that the extracted features are important to some extent. Furthermore, as noted in the Introduction, for unconstrained NMF it is typically assumed that $K \ll \min(D, N)$. We do not have to make this restriction for NMF$\ell^0$-H, and can even choose $K > D$, i.e. we can allow an overcomplete basis matrix $\mathbf{W}$ (however, we require $K < N$).

For NMF$\ell^0$-W, the sparseness constraints enforce basis vectors with limited support. If, for example, the columns of $\mathbf{X}$ contain image data, sparseness constraints on $\mathbf{W}$ encourage a part-based representation.

NMF algorithms usually proceed in a two-stage iterative manner, i.e. they alternately update $\mathbf{W}$ and $\mathbf{H}$ (cf. Section 2). We apply the same principle to NMF$\ell^0$-H and NMF$\ell^0$-W, where we take care that the sparseness constraints are maintained.

3.1. NMF$\ell^0$-H

A generic alternating update scheme for NMF$\ell^0$-H is illustrated in Algorithm 2. In the first stage, the sparse coding stage, we aim to solve the nonnegative sparse coding problem. Unfortunately, the sparse coding problem is NP-hard [23], and an approximation is required. We discuss several approaches in Section 3.1.1. In the second stage we aim to enhance the basis matrix $\mathbf{W}$. Here we allow non-zero values in $\mathbf{H}$ to be adapted during this step, but we do not allow zero values to become non-zero, i.e. we require that the sparse structure of $\mathbf{H}$ is maintained. We will see in Section 3.1.2 that all NMF techniques discussed so far can be used for this purpose. Depending on the methods used for each stage, we can derive different algorithms from the generic scheme. Note that NNK-SVD [18] and the $\ell^0$-constrained NMF proposed by Morup et al. [20] also follow this framework.

Algorithm 2

NMF$\ell^0$-H.

1: Initialize $\mathbf{W}$ randomly
2: for each iteration $i$ do
3:   Nonnegative Sparse Coding: Sparsely encode data $\mathbf{X}$, using fixed basis matrix $\mathbf{W}$, resulting in a sparse, nonnegative matrix $\mathbf{H}$.
4:   Basis Matrix Update: Enhance basis matrix $\mathbf{W}$ and coding matrix $\mathbf{H}$, maintaining the sparse structure of $\mathbf{H}$.
5: end for

3.1.1. Nonnegative sparse coding

The nonnegative sparse coding problem is formulated as

$$\min_{\mathbf{H}} \ \|\mathbf{X} - \mathbf{W}\mathbf{H}\|_F \quad \text{s.t.} \quad \mathbf{H} \geq 0,\ \|\mathbf{h}_i\|_0 \leq L \ \ \forall i. \tag{7}$$

Without loss of generality, we assume that the columns of $\mathbf{W}$ are normalized to unit length. A well-known and simple sparse coding technique without nonnegativity constraints is orthogonal matching pursuit (OMP) [24], which is shown in Algorithm 3. OMP is a popular technique, due to its simplicity, its low computational complexity, and its theoretical optimality bounds [25,26].

Algorithm 3

Orthogonal matching pursuit (OMP).

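1: $\mathbf{r} \leftarrow \mathbf{x}$
2: $\mathbf{h} \leftarrow \mathbf{0}$
3: $\mathfrak{P} \leftarrow \emptyset$
4: for $l = 1 : L$ do
5:   $\mathbf{a} = \mathbf{W}^{T}\mathbf{r}$
6:   $i^* = \arg\max_i |a_i|$
7:   $\mathfrak{P} \leftarrow \mathfrak{P} \cup \{i^*\}$
8:   $\mathbf{h}_{\mathfrak{P}} \leftarrow \mathbf{W}_{\mathfrak{P}}^{\dagger}\,\mathbf{x}$
9:   $\mathbf{r} \leftarrow \mathbf{x} - \mathbf{W}_{\mathfrak{P}}\,\mathbf{h}_{\mathfrak{P}}$
10: end for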

In each iteration, OMP selects the basis vector which reduces the residual most (steps 6 and 7). After each selection step, OMP projects the data vector into the space spanned by the basis vectors selected so far (step 8).

Several authors proposed nonnegative variants of OMP [26,34,35], all of which replace step 6 with $i^* = \arg\max_i a_i$, i.e. the absolute value function is removed in order to select a basis vector with a positive coefficient. The second point where nonnegativity can be violated is step 8, the projection step. Bruckstein et al. [26] used NNLS [27] (Algorithm 1) instead of ordinary least squares, in order to maintain nonnegativity. Since this variant is very slow, we used the multiplicative NMF update rule for $\mathbf{H}$ (cf. (2)), in order to approximate NNLS [35]. Yang et al. [34] left step 8 unchanged, which violates nonnegativity in general.

However, there is a more natural approach for nonnegative OMP: note that the body of the outer loop of the active-set algorithm for NNLS (Algorithm 1) and OMP (Algorithm 3) perform identical computations, except that OMP selects $i^* = \arg\max_i |a_i|$, while NNLS selects $i^* = \arg\max_i a_i$, exactly as in the nonnegative OMP variants proposed so far. NNLS additionally contains the inner loop (steps 11–18) to correct a possibly negative solution. Hence, a straightforward modification for nonnegative sparse coding, which we call sNNLS (which equally well can be called nonnegative OMP), is simply to stop NNLS as soon as $L$ basis vectors have been selected (i.e. as soon as $|\mathfrak{P}| = L$ in Algorithm 1). Note that NNLS can also terminate before it selects $L$ basis vectors. In this case, however, the solution is guaranteed to be optimal [27]. Hence, sNNLS is guaranteed to find either a nonnegative solution with exactly $L$ positive coefficients, or an optimal nonnegative solution with fewer than $L$ positive coefficients. Note further that OMP and NNLS have been proposed in distinct communities, namely sparse representation versus nonnegatively constrained least squares. It seems that this connection has been missed so far. The computational complexity of sNNLS is upper bounded by that of the original NNLS algorithm [27] (see Section 2.2), since it only contains an additional stopping criterion ($|\mathfrak{P}| = L$).

We further propose a variant of matching pursuit, which does not only apply to the nonnegative framework, but generally to the matching pursuit principle. We call this variant reverse matching pursuit: instead of adding basis vectors to an initially empty set, we remove basis vectors from an optimal, non-sparse solution. For nonnegative sparse coding, this method is illustrated in Algorithm 4, to which we refer as reverse sparse NNLS (rsNNLS). The algorithm starts with an optimal, non-sparse solution in step 1. While the $\ell^0$-norm of the solution is greater than $L$, the smallest entry in the solution vector is set to zero and its index is moved from the in-active set $\mathfrak{P}$ to the active set $\mathfrak{Z}$ (steps 4–7). Subsequently, the data vector is approximated in NNLS sense by the remaining basis vectors in $\mathfrak{P}$, using steps 9–19 of Algorithm 1 (inner correction loop), where possibly additional basis vectors are removed from $\mathfrak{P}$. For simplicity, Algorithm 4 is shown for a single data vector $\mathbf{x}$ and corresponding output vector $\mathbf{h}$. An implementation for data matrices $\mathbf{X}$, using the fast combinatorial NNLS algorithm [29], is straightforward. As for sNNLS, the computational complexity of rsNNLS is not worse than for active-set NNLS [27] (Section 2.2), since in each iteration one index is irreversibly removed from $\mathfrak{P}$. The NNLS algorithm (step 1) needs at least the same number of iterations to build the non-sparse solution. Hence, rsNNLS needs at most twice as many operations as NNLS.

Algorithm 4

Reverse sparse NNLS (rsNNLS).

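1: $\mathbf{h} \leftarrow \arg\min_{\mathbf{h} \geq 0} \|\mathbf{x} - \mathbf{W}\mathbf{h}\|_2$ (NNLS, Algorithm 1)
2: $\mathfrak{Z} = \{i \mid h_i = 0\}$, $\mathfrak{P} = \{i \mid h_i > 0\}$
3: while $\|\mathbf{h}\|_0 > L$ do
4:   $j = \arg\min_{i \in \mathfrak{P}} h_i$
5:   $h_j \leftarrow 0$
6:   $\mathfrak{Z} \leftarrow \mathfrak{Z} \cup \{j\}$
7:   $\mathfrak{P} \leftarrow \mathfrak{P} \setminus \{j\}$
8:   Perform steps 9–19 of Algorithm 1.
9: end while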
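The forward (sNNLS) and reverse (rsNNLS) strategies can be sketched side by side in plain Python. This is an illustrative sketch under several assumptions, not the authors' implementation: the function names are hypothetical, the pseudo-inverse step is replaced by solving the normal equations with a small Gaussian elimination (which assumes the selected columns are linearly independent), and the tolerance `tol` and the iteration guard are added for numerical safety.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def solve(A, b):
    """Solve the square system A z = b by Gaussian elimination with pivoting."""
    n = len(b)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for k in range(c, n + 1):
                M[r][k] -= f * M[c][k]
    z = [0.0] * n
    for r in reversed(range(n)):
        z[r] = (M[r][n] - sum(M[r][k] * z[k] for k in range(r + 1, n))) / M[r][r]
    return z


def correct(x, cols, h, P, tol):
    """Least squares on the columns in P, followed by the inner correction loop."""
    def lsq(P):
        z = [0.0] * len(cols)
        if P:
            G = [[dot(cols[i], cols[j]) for j in P] for i in P]
            for i, zi in zip(P, solve(G, [dot(cols[i], x) for i in P])):
                z[i] = zi
        return z
    z = lsq(P)
    while any(z[j] <= 0 for j in P):             # tentative solution infeasible
        alpha = min(h[j] / (h[j] - z[j]) if h[j] > z[j] else 0.0
                    for j in P if z[j] <= 0)
        h = [hi + alpha * (zi - hi) for hi, zi in zip(h, z)]
        P = [j for j in P if h[j] > tol]         # drop indices that hit zero
        z = lsq(P)
    return z, P


def snnls(x, cols, L, tol=1e-10):
    """Active-set NNLS, stopped as soon as L basis vectors are selected."""
    K = len(cols)
    h, P = [0.0] * K, []
    for _ in range(3 * K):                       # assumed guard against cycling
        if len(P) >= L:
            break                                # sparseness stopping criterion
        r = [xd - sum(cols[i][d] * h[i] for i in P) for d, xd in enumerate(x)]
        Z = [i for i in range(K) if i not in P]
        a = {i: dot(cols[i], r) for i in Z}
        if not Z or max(a.values()) <= tol:
            break                                # optimal NNLS solution reached
        P = P + [max(Z, key=a.get)]
        h, P = correct(x, cols, h, P, tol)
    return h


def rsnnls(x, cols, L, tol=1e-10):
    """Reverse sparse NNLS: prune an optimal NNLS solution down to L non-zeros."""
    h = snnls(x, cols, len(cols) + 1, tol)       # full NNLS: the L-stop never fires
    P = [i for i, v in enumerate(h) if v > 0]
    while len(P) > L:
        j = min(P, key=lambda i: h[i])           # smallest positive coefficient
        h[j] = 0.0
        P.remove(j)
        h, P = correct(x, cols, h, P, tol)
    return h


cols = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]   # toy basis (columns of W)
x = [3.0, -1.0, 2.0]
h_forward = snnls(x, cols, 1)
h_reverse = rsnnls(x, cols, 1)
print(h_forward, h_reverse)
```

Here `cols` holds the basis vectors as a list of columns. Sharing the `correct` helper between both coders mirrors the observation in the text that rsNNLS reuses the inner correction loop of the active-set algorithm.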

The second main approach for sparse coding without nonnegativity constraints is basis pursuit (BP) [28], which relaxes the $\ell^0$-norm with the convex $\ell^1$-norm. As for matching pursuit, there exist theoretical optimality guarantees and error bounds for BP [36,15]. Typically, BP produces better results than OMP [28,25]. Nonnegative BP (NNBP) can be formulated as

$$\min_{\mathbf{h}} \ \|\mathbf{x} - \mathbf{W}\mathbf{h}\|_2^2 + \lambda \|\mathbf{h}\|_1 \quad \text{s.t.} \quad \mathbf{h} \geq 0, \tag{8}$$

where $\lambda$ controls the trade-off between reconstruction quality and sparseness. Alternatively, we can formulate NNBP as

$$\min_{\mathbf{h}} \ \|\mathbf{h}\|_1 \quad \text{s.t.} \quad \|\mathbf{x} - \mathbf{W}\mathbf{h}\|_2 \leq \epsilon,\ \mathbf{h} \geq 0, \tag{9}$$

where $\epsilon$ is the desired maximal reconstruction error. Formulation (9) is more convenient than (8), since the parameter $\epsilon$ is more intuitive and easier to choose than $\lambda$. Both (8) and (9) are convex optimization problems and can readily be solved [37]. To obtain an $\ell^0$-sparse solution from a solution of (8) or (9), we select the $L$ basis vectors with the largest coefficients in $\mathbf{h}$ and calculate new, optimal coefficients for these basis vectors, using NNLS. All other coefficients are set to zero. The whole procedure is repeated for each column in $\mathbf{X}$, in order to obtain a coding matrix $\mathbf{H}$ for problem (7). NNK-SVD follows this approach for nonnegative sparse coding, using the algorithm described in [11] as approximation for problem (8).

3.1.2. Enhancing the basis matrix

Once we have obtained a sparse encoding matrix $\mathbf{H}$, we aim to enhance the basis matrix $\mathbf{W}$ (step 4 in Algorithm 2). We also allow the non-zero values in $\mathbf{H}$ to vary, but require that the coding scheme is maintained, i.e. that the “zeros” in $\mathbf{H}$ have to remain zero during the enhancement stage.

For this purpose, we can use a technique for unconstrained NMF as discussed in Section 2. Note that the multiplicative update rules (2) and (3) can be applied without any modification, since they consist of element-wise multiplications of the old factors with some update term. Hence, if an entry in $\mathbf{H}$ is zero before the update, it remains zero after the update. Moreover, the multiplicative updates do not increase $\|\mathbf{X} - \mathbf{W}\mathbf{H}\|_F$, and typically reduce the objective. Therefore, step 4 in Algorithm 2 can be implemented by executing (2) and (3) for several iterations.
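The zero-preserving property can be checked on a toy example. The sketch below applies one multiplicative update of the coding matrix to a hand-written sparse $\mathbf{H}$ and compares the support before and after; the helper names, the numbers, and the small constant `eps` in the denominator are assumptions made for illustration.

```python
def matmul(A, B):
    """Product of two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def update_H(X, W, H, eps=1e-12):
    """Multiplicative update (2); eps is an assumed guard against division by zero."""
    Wt = [list(col) for col in zip(*W)]
    num = matmul(Wt, X)
    den = matmul(matmul(Wt, W), H)
    return [[h * n / (d + eps) for h, n, d in zip(hr, nr, dr)]
            for hr, nr, dr in zip(H, num, den)]


X = [[1.0, 2.0, 0.5],
     [0.5, 1.0, 2.0]]
W = [[1.0, 0.2],
     [0.3, 1.0]]
H = [[0.7, 0.0, 0.4],      # sparse code, e.g. from the sparse coding stage
     [0.0, 0.9, 0.0]]

H_new = update_H(X, W, H)
support_before = [[h > 0 for h in row] for row in H]
support_after = [[h > 0 for h in row] for row in H_new]
print(support_before == support_after)
```

Because every entry is only rescaled by a nonnegative factor, no explicit masking of the zero entries is needed in this variant of the update stage.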

We can also perform an update according to ANLS. Since there are no constraints on the basis matrix, we can proceed exactly as in Section 2.2 in order to update $\mathbf{W}$. To update $\mathbf{H}$, we have to make some minor modifications of the fast combinatorial NNLS algorithm [29], in order to maintain the sparse structure of $\mathbf{H}$. Let $\bar{\mathfrak{Z}}$ be the set of indices depicting the zero-entries in $\mathbf{H}$ after the sparse coding stage. We have to take care that these entries remain zero during the active-set algorithm, i.e. we simply do not allow an entry whose index is in $\bar{\mathfrak{Z}}$ to be moved to the in-active set. The convergence criterion of the algorithm (cf. [27]) has to be evaluated considering only entries whose indices are not in $\bar{\mathfrak{Z}}$. Similarly, we can modify numerical approaches for NNLS such as the projected gradient algorithm [31]. Generally, the $\mathbf{W}$ and $\mathbf{H}$ matrices of the previous iteration should be used as initial solution for each ANLS iteration, which significantly enhances the overall speed of NMF$\ell^0$-H.

3.2. NMF$\ell^0$-W

We now address problem NMF$\ell^0$-W (6), where we again follow a two-stage iterative approach, as illustrated in Algorithm 5. We first calculate an optimal solution for the basis matrix without sparseness constraints (with fixed $\mathbf{H}$) in step 3. Next, we project the basis vectors onto the closest nonnegative vector in Euclidean space satisfying the desired $\ell^0$-sparseness. This step is easy, since we simply have to delete all entries except the $L$ largest ones. Step 7 enhances $\mathbf{H}$, where the non-zero entries of $\mathbf{W}$ can also be adapted, while maintaining the sparse structure of $\mathbf{W}$. As in Section 3.1.2, we can use the multiplicative update rules due to their sparseness-maintaining property. Alternatively, we can also use the ANLS scheme, similarly to Section 3.1.2. Our framework is inspired by Hoyer's $\ell^1$-sparse NMF [13], which uses gradient descent to minimize the Frobenius norm. After each gradient step, that algorithm projects the basis vectors onto the closest vector with desired $\ell^1$-sparseness (cf. (4)).

Algorithm 5

NMF$\ell^0$-W.

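1: Initialize $\mathbf{H}$ randomly
2: for each iteration $i$ do
3:   $\mathbf{W} \leftarrow \arg\min_{\mathbf{W} \geq 0} \|\mathbf{X} - \mathbf{W}\mathbf{H}\|_F$
4:   for $j = 1 : K$ do
5:     Set the $D - L$ smallest values in $\mathbf{w}_j$ to zero
6:   end for
7:   Coding Matrix Update: Enhance the coding matrix $\mathbf{H}$ and basis matrix $\mathbf{W}$, maintaining the sparse structure of $\mathbf{W}$.
8: end for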
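The projection step of this scheme, keeping the $L$ largest entries of each basis vector, can be sketched as follows. The function names and the small example matrix are illustrative assumptions; the matrix is stored as a list of rows, so the projection is applied column by column.

```python
def project_l0(w, L):
    """Keep the L largest entries of a nonnegative vector w and zero the rest."""
    keep = set(sorted(range(len(w)), key=lambda i: w[i], reverse=True)[:L])
    return [v if i in keep else 0.0 for i, v in enumerate(w)]


def project_columns(W, L):
    """Apply the projection to every column of W (W is a list of rows)."""
    cols = [project_l0(list(c), L) for c in zip(*W)]
    return [list(r) for r in zip(*cols)]


W = [[0.2, 0.7],
     [0.9, 0.1],
     [0.1, 0.4],
     [0.5, 0.3]]
W_sparse = project_columns(W, 2)
print(W_sparse)
```

For a nonnegative vector, zeroing the smallest entries removes the least possible squared mass, which is why this simple selection gives the closest $L$-sparse vector in the Euclidean sense.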

4. Experiments

4.1. Nonnegative sparse coding

In this section we empirically compare the nonnegative sparse coding techniques discussed in this paper. To be able to evaluate the quality of the sparse coders, we created synthetic sparse data as follows. We considered random overcomplete basis matrices with D=100 dimensions and a varying number $K$ of basis vectors. For each $K$, we generated 10 random basis matrices using isotropic Gaussian noise; then the sign was discarded and each vector was normalized to unit length. Further, for each basis matrix, we generated “true” sparse encoding matrices $\mathbf{H}$ with varying sparseness factors $L$, i.e. we varied the sparseness from L=5 (very sparse) to L=50 (rather dense). The values of the non-zero entries in $\mathbf{H}$ were the absolute values of samples from a Gaussian distribution with standard deviation 10. The sparse synthetic data is generated using $\mathbf{X} = \mathbf{W}\mathbf{H}$. We executed NMP [35], NNBP, sNNLS, rsNNLS and NLARS [20] on each data set. For NNBP we used formulation (9), where $\epsilon$ was chosen such that a prescribed target SNR was achieved, and we used an interior point solver [38] for optimization. All algorithms were executed on a quad-core processor (3.2 GHz, 12 GB memory).
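The data generation procedure can be sketched with the standard library alone. D=100, L=5 and the standard deviation 10 follow the description above, while the values of `K` and `N`, the random seed, and all function names are illustrative assumptions.

```python
import random


def random_basis(D, K, rng):
    """K basis vectors: |Gaussian| entries, each vector scaled to unit length."""
    cols = []
    for _ in range(K):
        c = [abs(rng.gauss(0.0, 1.0)) for _ in range(D)]
        norm = sum(v * v for v in c) ** 0.5
        cols.append([v / norm for v in c])
    return cols


def random_sparse_code(K, L, rng, std=10.0):
    """Coefficient vector with exactly L non-zero entries, drawn as |N(0, std^2)|."""
    h = [0.0] * K
    for i in rng.sample(range(K), L):
        h[i] = abs(rng.gauss(0.0, std))
    return h


def synthesize(cols, h):
    """Data vector x = W h, with W given by its columns."""
    return [sum(c[d] * hi for c, hi in zip(cols, h) if hi != 0.0)
            for d in range(len(cols[0]))]


rng = random.Random(0)
D, K, L, N = 100, 200, 5, 20      # K and N are illustrative choices
cols = random_basis(D, K, rng)
codes = [random_sparse_code(K, L, rng) for _ in range(N)]
data = [synthesize(cols, h) for h in codes]
print(len(data), len(data[0]))
```

Since the true support of every code vector is known, the fraction of correctly identified basis vectors can be computed by comparing the support of a coder's output with the support of the corresponding entry of `codes`.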

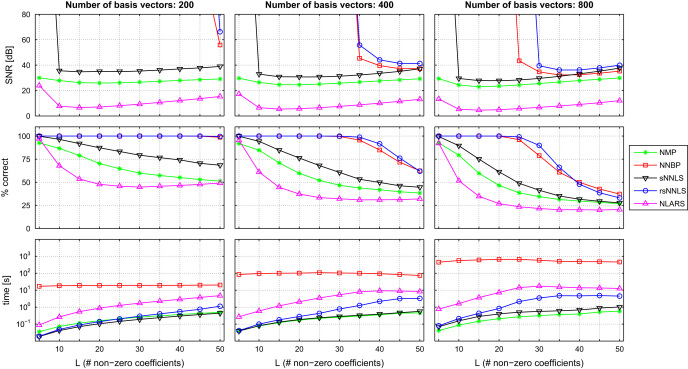

Fig. 1 shows the performance of the sparse coders in terms of reconstruction quality (SNR), percentage of correctly identified basis vectors, and runtime, averaged over the 10 independent data sets per combination of $K$ and $L$, where the SNR was averaged in the linear domain (i.e. arithmetic mean). Curve parts outside the plot area correspond to SNR values larger than 120 dB (which we considered as perfect reconstruction). NLARS clearly shows the worst performance, while requiring the most computational time after NNBP. sNNLS performs consistently better than NMP, while being approximately as efficient in terms of computational requirements. The proposed rsNNLS clearly shows the best performance, except that it selects slightly fewer correct basis vectors than NNBP for K=800 at some sparseness factors $L$. Note that NNBP requires by far the most computational time, and that the effort for NNBP depends heavily on the number of basis vectors $K$. rsNNLS is orders of magnitude faster than NNBP.
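Averaging SNR values in the linear domain differs from averaging the dB values directly, which the following sketch illustrates. The SNR definition used here (signal energy divided by the energy of the reconstruction error, in dB) is an assumption, since the exact formula is not recoverable from the text, and the function names are hypothetical.

```python
import math


def snr_db(x, x_hat):
    """SNR in dB: signal energy over residual energy (assumed definition)."""
    signal = sum(v * v for v in x)
    noise = sum((v - w) ** 2 for v, w in zip(x, x_hat))
    return 10.0 * math.log10(signal / noise)


def mean_snr_db(snrs_db):
    """Average SNR values in the linear domain and report the mean in dB."""
    linear = [10.0 ** (s / 10.0) for s in snrs_db]
    return 10.0 * math.log10(sum(linear) / len(linear))


print(round(mean_snr_db([10.0, 30.0]), 2))   # arithmetic mean of the ratios 10 and 1000
```

For the two values 10 dB and 30 dB, the linear-domain mean is about 27 dB rather than the 20 dB obtained by averaging the dB values, so runs with high SNR dominate the reported average.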

Fig. 1.

Results of sparse coders, applied to sparse synthetic data. All results are shown as a function of the number of non-zero coefficients L (sparseness factor), and are averaged over 10 independent data sets. First row: reconstruction quality in terms of SNR. Markers outside the plot area correspond to perfect reconstruction (SNR > 120 dB). Second row: percentage of correctly identified basis vectors. Third row: runtime on logarithmic scale. Abbreviations: nonnegative matching pursuit (NMP) [35], nonnegative basis pursuit (NNBP) [28,18], sparse nonnegative least squares (sNNLS) (this paper), reverse sparse nonnegative least squares (rsNNLS) (this paper), nonnegative least angle regression and selection (NLARS) [20].

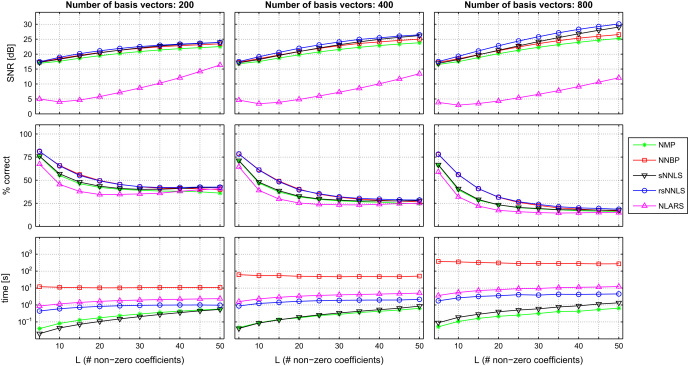

We repeated the experiment, where we added positive, uniformly distributed noise to the synthetic sparse data, such that SNR = 10 dB. Note that the choice of $\epsilon$ for NNBP is now more difficult: too small an $\epsilon$ renders problem (9) infeasible, while too large an $\epsilon$ delivers poor results. Therefore, for each column in $\mathbf{X}$, we initially selected $\epsilon$ according to a target SNR. For the case where problem (9) turned out to be infeasible, we relaxed the SNR constraint step by step until a feasible problem was obtained. The results of the experiment with noisy data are shown in Fig. 2. As expected, all sparse coders achieve a lower reconstruction quality and identify fewer correct basis vectors than in the noise-free case. The proposed rsNNLS shows the best performance for noisy data.

Fig. 2.

Results of sparse coders, applied to sparse synthetic data, contaminated with noise (SNR=10 dB). All results are shown as a function of L and are averaged over 10 independent data sets. First row: reconstruction quality in terms of SNR. Second row: percentage of correctly identified basis vectors. Third row: runtime on logarithmic scale. Abbreviations: nonnegative matching pursuit (NMP) [35], nonnegative basis pursuit (NNBP) [28,18], sparse nonnegative least squares (sNNLS) (this paper), reverse sparse nonnegative least squares (rsNNLS) (this paper), nonnegative least angle regression and selection (NLARS) [20].

4.2. NMF$\ell^0$-H applied to spectrogram data

In this section, we compare methods for the update stage in NMF$\ell^0$-H (Algorithm 2). As data we used a magnitude spectrogram of 2 min of speech from the database by Cooke et al. [39]. The speech was sampled at 8 kHz and a 512-point FFT with an overlap of 256 samples was used. The data matrix finally had dimensions 257×3749. We executed NMF$\ell^0$-H for 200 iterations, where rsNNLS was used as sparse coder. We compared the update method from NNK-SVD [18], the multiplicative update rules [1] (Section 3.1.2), and ANLS using the sparseness-maintaining active-set algorithm (Section 3.1.2). Note that these methods are difficult to compare, since one update iteration of ANLS fully optimizes first $\mathbf{W}$, then $\mathbf{H}$, while one iteration of NNK-SVD or the multiplicative rules only reduces the objective, which is significantly faster. Therefore, we proceeded as follows: we executed 10 (inner) ANLS iterations per (outer) NMF$\ell^0$-H iteration, and recorded the required time. Then we executed the versions using NNK-SVD and the multiplicative updates, respectively, where both were allowed to update $\mathbf{W}$ and $\mathbf{H}$ for the same amount of time as ANLS in each outer iteration. Since NNK-SVD updates the columns of $\mathbf{W}$ separately [18], we let NNK-SVD update each column for a fraction $1/K$ of the ANLS time.

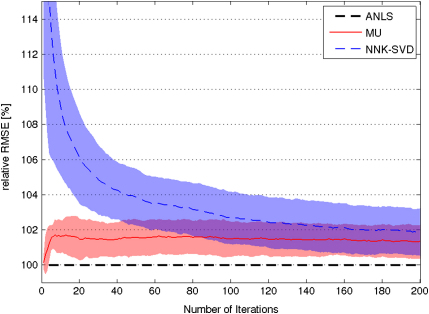

For the number of basis vectors $K$ and the sparseness value $L$, we used each combination of several values. Since the error depends strongly on $K$ and $L$, we calculated the root mean square error (RMSE) of each update method relative to the error of the ANLS method, and averaged over $K$ and $L$. Here, $i$ denotes the iteration number of NMF$\ell^0$-H, and the error of the method under test is computed from its factor matrices in iteration $i$, for parameters $K$ and $L$. Similarly, the reference error is computed from the factor matrices of the ANLS update method. Fig. 3 shows the averaged relative RMSE for each update method, as a function of $i$. The ANLS approach shows the best performance. The multiplicative update rules are a constant factor worse than ANLS, but perform better than NNK-SVD. After 200 iterations, the multiplicative update rules and NNK-SVD achieve an average error which is approximately 1.35% and 1.9% worse than the ANLS error, respectively. NNK-SVD converges more slowly than the other update methods in the first 50 iterations.

Fig. 3.

Averaged relative RMSE (compared to ANLS update) of multiplicative update rules (MU) and nonnegative K-SVD (NNK-SVD) over number of NMF$\ell^0$-H iterations. The shaded bars correspond to standard deviation.

4.3. NMFℓ₀-W applied to face images

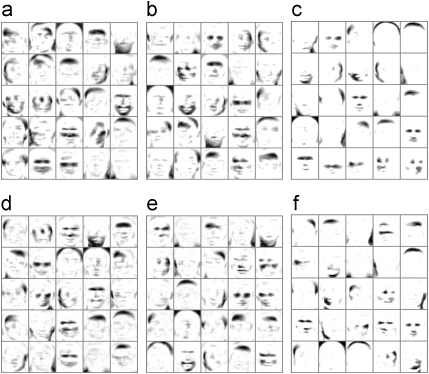

Lee and Seung [5] reported that NMF returns a part-based representation when applied to a database of face images. However, as noted by Hoyer [13], this effect does not occur when the face images are not aligned, as in the ORL database [40]. In this case, the representation is rather global and holistic. In order to enforce a part-based representation, Hoyer constrained the basis vectors to be sparse. Similarly, we applied NMFℓ₀-W to the ORL database, where we constrained the basis vectors to have sparseness values L corresponding to 33%, 25% and 10% of the total pixel number per image (denoted as sparseness classes a, b and c, respectively). As in [13], we trained 25 basis vectors per sparseness value. We executed the algorithm for 30 iterations, using 10 ANLS coding matrix updates per iteration. In order to compare our results to the sparseness-constrained NMF of Hoyer [13], we calculated the average sparseness of our basis vectors in the sense of [13] (using (4)). We then executed the method of [13] on the same data, where we required its basis vectors to have the same sparseness value as our NMFℓ₀-W basis vectors. The method of [13] was executed for 2500 iterations, which were necessary for convergence. Fig. 4 shows the resulting basis vectors returned by NMFℓ₀-W and by the method of [13], where dark pixels indicate high values and white pixels indicate low values.
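For reference, the sparseness measure of [13] for a non-zero vector x with n > 1 entries is (√n − ‖x‖₁/‖x‖₂)/(√n − 1). The sketch below assumes that this is the quantity referred to via (4); the function name `hoyer_sparseness` and the toy basis matrix are illustrative only.

```python
import numpy as np

def hoyer_sparseness(x):
    """Sparseness of [13]: 1 for a single non-zero entry, 0 for equal-magnitude entries."""
    x = np.asarray(x, dtype=float).ravel()
    n = x.size
    l1 = np.abs(x).sum()
    l2 = np.sqrt((x ** 2).sum())
    return (np.sqrt(n) - l1 / l2) / (np.sqrt(n) - 1)

# toy basis matrix: each column is one basis vector
W = np.array([[1.0, 0.0],
              [0.0, 1.0],
              [0.0, 1.0],
              [0.0, 1.0]])
per_column = [hoyer_sparseness(W[:, k]) for k in range(W.shape[1])]
avg = float(np.mean(per_column))
print(per_column, avg)
```

Note that this measure depends on the magnitudes of the entries, whereas the number of non-zero pixels does not, so two sets of basis vectors can agree in one measure and differ in the other.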

Fig. 4.

Top: basis images trained by NMFℓ₀-W. Bottom: basis images trained by NMF [13]. Sparseness: 33% (a), 25% (b), 10% (c), 52.4% (d), 43% (e), 18.6% (f) of non-zero pixels.

We see that the results are qualitatively similar, and that the representation becomes more local when sparseness is increased (from left to right). We repeated this experiment 10 times and measured, for both methods, the ℓ₀-sparseness (in % of non-zero pixels), the sparseness in the sense of [13], the SNR and the runtime. The averaged results are shown in Table 1, where the SNR was averaged in the linear domain.
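Averaging in the linear domain means that the dB values are converted to power ratios before the mean is taken. A minimal sketch follows; the SNR definition used in `snr_db` (signal energy over reconstruction-error energy) is an assumption, and both function names are hypothetical.

```python
import numpy as np

def snr_db(X, X_hat):
    """Reconstruction SNR in dB: signal energy over error energy (assumed definition)."""
    return 10.0 * np.log10(np.sum(X ** 2) / np.sum((X - X_hat) ** 2))

def mean_snr_db(snrs_db):
    """Average dB values in the linear (power-ratio) domain, then convert back to dB."""
    linear = 10.0 ** (np.asarray(snrs_db, dtype=float) / 10.0)
    return 10.0 * np.log10(linear.mean())

print(mean_snr_db([10.0, 20.0]))  # about 17.40 dB, not the arithmetic mean of 15 dB
```

The linear-domain mean is dominated by the runs with higher SNR, so it is never smaller than the arithmetic mean of the dB values.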

Table 1.

Comparison of ℓ₀-sparseness (in percent of non-zero pixels), sparseness in the sense of [13] (cf. (4)), reconstruction quality in terms of SNR, and runtime for NMF [13] and NMFℓ₀-W. SNR⁎ denotes the SNR value for NMF [13] when the same ℓ₀-sparseness as for NMFℓ₀-W is enforced.

| Method | ℓ₀-sparseness (%) | Sparseness of [13] (cf. (4)) | SNR (dB) | SNR⁎ (dB) | Time (s) |
|---|---|---|---|---|---|
| NMF [13] | 52.4 | 0.55 | 15.09 | 14.55 | 2440 |
| NMFℓ₀-W | 33 | 0.55 | 15.07 | – | 186 |
| NMF [13] | 43 | 0.6 | 14.96 | 14.31 | 2495 |
| NMFℓ₀-W | 25 | 0.6 | 14.94 | – | 164 |
| NMF [13] | 18.6 | 0.73 | 14.31 | 13.52 | 2598 |
| NMFℓ₀-W | 10 | 0.73 | 14.33 | – | 50 |

We see that the two methods achieve practically the same reconstruction quality, while NMFℓ₀-W uses a significantly lower number of non-zero pixels. One might ask whether the larger portion of non-zeros in the basis vectors of NMF [13] stems from over-counting entries which are extremely small, yet numerically non-zero. Therefore, Table 1 additionally contains the column SNR⁎, which denotes the SNR value for NMF [13] when the target ℓ₀-sparseness is enforced, i.e. all but the 33%, 25% and 10% of pixels with the largest values are set to zero. We see that when a strict ℓ₀-constraint is required, NMFℓ₀-W achieves a significantly better SNR (at least 0.5 dB in this example). Furthermore, NMFℓ₀-W is more than an order of magnitude faster than NMF [13] in this setup.
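The enforcement step behind the SNR⁎ column, keeping only the largest entries of each basis vector, can be sketched as follows. This is an illustrative sketch: `keep_largest` is a hypothetical helper, the random matrix stands in for trained basis vectors, and the text does not state whether the coefficient matrix is re-estimated after thresholding, so only the projection itself is shown.

```python
import numpy as np

def keep_largest(W, L):
    """Zero all but the L largest entries in each column of a nonnegative W (1 <= L <= rows)."""
    W_sparse = np.zeros_like(W)
    # row indices of the L largest entries per column
    idx = np.argpartition(W, -L, axis=0)[-L:, :]
    cols = np.arange(W.shape[1])
    W_sparse[idx, cols] = W[idx, cols]
    return W_sparse

rng = np.random.default_rng(0)
W = rng.random((100, 25))        # 25 toy basis vectors with 100 "pixels" each
W33 = keep_largest(W, L=33)      # keep 33% of the pixels per basis vector
print(np.count_nonzero(W33, axis=0))
```

If the discarded entries were only numerically non-zero, this projection would leave the reconstruction almost unchanged; a noticeable SNR drop indicates that the discarded entries carried real signal energy.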

5. Conclusion

We proposed a generic alternating update scheme for ℓ₀-sparse NMF, which naturally incorporates existing approaches for unconstrained NMF, such as the classic multiplicative update rules or the ANLS scheme. For the key problem in NMFℓ₀-H, the nonnegative sparse coding problem, we proposed sNNLS, whose interpretation is twofold: it can be regarded as sparse nonnegative least-squares or as nonnegative orthogonal matching pursuit. From the matching-pursuit perspective, sNNLS represents a natural implementation of nonnegativity constraints in OMP. We further proposed the simple but remarkably well-performing rsNNLS, which competes with or outperforms nonnegative basis pursuit. Generally, NMF is a powerful technique with a large number of potential applications. NMFℓ₀-H aims to find a (possibly overcomplete) representation using sparsely activated basis vectors. NMFℓ₀-W encourages a part-based representation, which might be particularly interesting for applications in computer vision.

Acknowledgment

This work was supported by the Austrian Science Fund (Project number P22488-N23). The authors would like to thank Sebastian Tschiatschek for his support concerning the Ipopt library and for his useful comments on this paper.

Biographies

Robert Peharz received his MSc degree in 2010. He is currently pursuing his PhD studies at the SPSC Lab, Graz University of Technology. His research interests include nonnegative matrix factorization, sparse coding, machine learning and graphical models, with applications to signal processing, audio engineering and computer vision.

Franz Pernkopf received his PhD degree in 2002. In 2002 he received the Erwin Schrödinger Fellowship. From 2004 to 2006, he was Research Associate in the Department of Electrical Engineering at the University of Washington. He received the young investigator award of the province of Styria in 2010. Currently, he is Associate Professor at the SPSC Lab, Graz University of Technology, leading the Intelligent Systems research group. His research interests include machine learning, discriminative learning and graphical models, with applications to image and speech processing.

Footnotes

He actually has shown that the decision problem, whether an exact NMF of a certain rank exists or not, is NP-hard. An optimal algorithm for approximate NMF can be used to solve the decision problem. Hence NP-hardness follows also for approximate NMF.

To solve for W, one transposes X and H and executes the algorithm for each column of Xᵀ.

We used the MATLAB implementation available under http://www2.imm.dtu.dk/pubdb/views/edoc_download.php/5523/zip/imm5523.zip.

For NNBP we used a C++ implementation [38], while all other algorithms were implemented in MATLAB. We also tried an implementation using the MATLAB optimization toolbox for NNBP, which was slower by a factor of 3–4.

References

- 1. Lee D.D., Seung H.S. Algorithms for non-negative matrix factorization. NIPS. 2001; pp. 556–562.

- 2. Dhillon I.S., Sra S. Generalized nonnegative matrix approximations with Bregman divergences. NIPS. 2005; pp. 283–290.

- 3. Cichocki A., Lee H., Kim Y.D., Choi S. Non-negative matrix factorization with α-divergence. Pattern Recognition Lett. 2008;29(9):1433–1440.

- 4. Paatero P., Tapper U. Positive matrix factorization: a non-negative factor model with optimal utilization of error estimates of data values. Environmetrics. 1994;5:111–126.

- 5. Lee D.D., Seung H.S. Learning the parts of objects by nonnegative matrix factorization. Nature. 1999;401:788–791. doi: 10.1038/44565.

- 6. Smaragdis P., Brown J. Non-negative matrix factorization for polyphonic music transcription. IEEE Workshop on Applications of Signal Processing to Audio and Acoustics. 2003; pp. 177–180.

- 7. Virtanen T. Monaural sound source separation by nonnegative matrix factorization with temporal continuity and sparseness criteria. IEEE Trans. Audio Speech Lang. Process. 2007;15(3):1066–1074.

- 8. Févotte C., Bertin N., Durrieu J.L. Nonnegative matrix factorization with the Itakura–Saito divergence: with application to music analysis. Neural Comput. 2009;21(3):793–830. doi: 10.1162/neco.2008.04-08-771.

- 9. Peharz R., Stark M., Pernkopf F. A factorial sparse coder model for single channel source separation. Proceedings of Interspeech. 2010; pp. 386–389.

- 10. Xu W., Liu X., Gong Y. Document clustering based on non-negative matrix factorization. Proceedings of ACM SIGIR'03. 2003; pp. 267–273.

- 11. Hoyer P.O. Non-negative sparse coding. Proceedings of Neural Networks for Signal Processing. 2002; pp. 557–565.

- 12. Eggert J., Koerner E. Sparse coding and NMF. International Joint Conference on Neural Networks. 2004; pp. 2529–2533.

- 13. Hoyer P.O. Non-negative matrix factorization with sparseness constraints. J. Mach. Learn. Res. 2004;5:1457–1469.

- 14. Donoho D., Elad M. Optimally sparse representation in general (nonorthogonal) dictionaries via ℓ₁ minimization. Proc. Natl. Acad. Sci. 2003;100(5):2197–2202. doi: 10.1073/pnas.0437847100.

- 15. Tropp J. Just relax: convex programming methods for identifying sparse signals. IEEE Trans. Inf. Theory. 2006;52:1030–1051.

- 16. Vavasis S. On the complexity of nonnegative matrix factorization. SIAM J. Optim. 2009;20(3):1364–1377.

- 17. Aharon M., Elad M., Bruckstein A. K-SVD: an algorithm for designing overcomplete dictionaries for sparse representation. IEEE Trans. Signal Process. 2006;54:4311–4322.

- 18. Aharon M., Elad M., Bruckstein A. K-SVD and its non-negative variant for dictionary design. Proceedings of the SPIE Conference, Curvelet, Directional, and Sparse Representations II, vol. 5914. 2005; pp. 11.1–11.13.

- 19. Dueck D., Frey B. Probabilistic sparse matrix factorization. Technical Report PSI TR 2004-023, Department of Computer Science, University of Toronto; 2004.

- 20. Morup M., Madsen K., Hansen L. Approximate L0 constrained non-negative matrix and tensor factorization. Proceedings of ISCAS. 2008; pp. 1328–1331.

- 21. Efron B., Hastie T., Johnstone I., Tibshirani R. Least angle regression. Ann. Stat. 2004;32:407–499.

- 22. Yang Z., Chen X., Zhou G., Xie S. Spectral unmixing using nonnegative matrix factorization with smoothed L0 norm constraint. Proceedings of SPIE. 2009.

- 23. Davis G., Mallat S., Avellaneda M. Adaptive greedy approximations. J. Constr. Approx. 1997;13:57–98.

- 24. Pati Y.C., Rezaiifar R., Krishnaprasad P.S. Orthogonal matching pursuit: recursive function approximation with applications to wavelet decomposition. Proceedings of 27th Asilomar Conference on Signals, Systems and Computers. 1993; pp. 40–44.

- 25. Tropp J. Greed is good: algorithmic results for sparse approximation. IEEE Trans. Inf. Theory. 2004;50(10):2231–2242.

- 26. Bruckstein A.M., Elad M., Zibulevsky M. On the uniqueness of nonnegative sparse solutions to underdetermined systems of equations. IEEE Trans. Inf. Theory. 2008;54:4813–4820.

- 27. Lawson C., Hanson R. Solving Least Squares Problems. Prentice-Hall; 1974.

- 28. Chen S., Donoho D., Saunders M. Atomic decomposition by basis pursuit. SIAM J. Sci. Comput. 1998;20(1):33–61.

- 29. Van Benthem M., Keenan M. Fast algorithm for the solution of large-scale non-negativity-constrained least-squares problems. J. Chemometrics. 2004;18:441–450.

- 30. Kim H., Park H. Non-negative matrix factorization based on alternating non-negativity constrained least squares and active set method. SIAM J. Matrix Anal. Appl. 2008;30:713–730.

- 31. Lin C.J. Projected gradient methods for nonnegative matrix factorization. Neural Comput. 2007;19:2756–2779. doi: 10.1162/neco.2007.19.10.2756.

- 32. Li S., Hou X., Zhang H., Cheng Q. Learning spatially localized, parts-based representation. Proceedings of the Conference on Computer Vision and Pattern Recognition (CVPR). 2001.

- 33. Pascual-Montano A., Carazo J., Kochi K., Lehmann D., Pascual-Marqui R. Nonsmooth nonnegative matrix factorization (NSNMF). Transactions on Pattern Analysis and Machine Intelligence. 2006.

- 34. Yang A.Y., Maji S., Hong K., Yan P., Sastry S.S. Distributed compression and fusion of nonnegative sparse signals for multiple-view object recognition. International Conference on Information Fusion. 2009.

- 35. Peharz R., Stark M., Pernkopf F. Sparse nonnegative matrix factorization using ℓ₀-constraints. Proceedings of MLSP. 2010; pp. 83–88.

- 36. Donoho D.L., Tanner J. Sparse nonnegative solutions of underdetermined linear equations by linear programming. Proc. Natl. Acad. Sci. 2005;102(27):9446–9451. doi: 10.1073/pnas.0502269102.

- 37. Boyd S., Vandenberghe L. Convex Optimization. Cambridge University Press; 2004.

- 38. Wächter A., Biegler L.T. On the implementation of an interior-point filter line-search algorithm for large-scale nonlinear programming. Math. Programming. 2006;106:25–57.

- 39. Cooke M.P., Barker J., Cunningham S.P., Shao X. An audio-visual corpus for speech perception and automatic speech recognition. J. Acoust. Soc. Am. 2006;120:2421–2424. doi: 10.1121/1.2229005.

- 40. Samaria F.S., Harter A. Parameterisation of a stochastic model for human face identification. Proceedings of the 2nd IEEE Workshop on Applications of Computer Vision. 1994; pp. 138–142.