Abstract

The typical objective of research is to identify cause-and-effect relationships. As with any research design, there are strengths and weaknesses involved in trying to achieve this objective. Some study designs are stronger than others in attempting to establish cause-and-effect associations. The task of establishing cause-and-effect relationships is challenging (Hill, 1965), and any study that does not include experimentation, that is, manipulation of exposure to a variable, is inhibited from drawing causal inferences (Heath, 1995). Statistical significance, likewise, is based on probability rather than certainty. This article focuses on a particular research design, namely, the ecological study, and attempts to serve as a reminder that the design has its place in the realm of various research designs.

Keywords: Epidemiologic research designs, Ecological studies, Case control studies, Environment and public health

BACKGROUND

Recently, this author had a paper, which incorporated an ecological study design, rejected at Dose Response. Some of the comments challenged the validity of the ecological design and its corresponding level of uncertainty. The purpose of this brief note is to provide a reminder that most, if not all, research has an element of uncertainty, and that ecological studies, like other types of studies, have a legitimate place in the realm of research designs.

Early example of an ecological study

A famous case of early epidemiology in action is John Snow's investigation of the cholera epidemic in London in the mid-1800s. Snow used a map of cholera cases and pinpointed a contaminated source of water (Friis and Sellers, 2004). This case is considered to contain the necessary ingredients of modern epidemiology, namely, the “organization of observations, a natural experiment, and a quantitative approach” (Lilienfeld and Lilienfeld, 1980 cited in Friis and Sellers, 2004). In this author’s view, Snow’s method essentially had an ecological study design, since ecological studies also organize observations, utilize natural experimentation (by comparing exposed versus unexposed groups), and quantify the data. It is doubtful that Snow knew the specific exposure level of any specific individual, yet his important ecological observation in this case helped to pinpoint a source of trouble. Curiously, Snow’s ecological observations are not referred to as an ecological study, at least in a standard text on epidemiology (Friis and Sellers, 2004). Freedman (2005) is an example of an author who indicates that Snow’s study indeed had an ecological design.

Compared to case control studies

In the case of the ecological study design, the obvious weakness is that data are based on groups rather than individuals. In other words, the ecological study provides group exposure and group response without knowing what any individual exposure-response was. This weakness is often contrasted with the strength of the case-control design, where individual exposures are known. However, in the case of radon, as Cohen (2000) indicates, the radon level in an individual house is likely to change more than the average for all houses in a region. Consequently, ecological studies may actually be more accurate than case control studies in estimating exposures, at least in regard to radon. Cohen (1990) also notes that the large amount of data available in the ecological design reduces the effect of confounders to a level comparable to that of a case control study that accounts for known exposures to confounders. In addition, the case control study design has its own set of weaknesses, similar to those of the ecological study design: case control studies often have flaws pertaining to bias, matching, and confounding (Mann, 2003).
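One way to make the averaging argument concrete is the following sketch. It is not taken from Cohen; it assumes, purely for illustration, that each of the $n$ houses in a region has a measured radon level $x_i$ that fluctuates around its long-term value with the same variance $\sigma^2$, and that these fluctuations are independent across houses.

```latex
\bar{x} = \frac{1}{n}\sum_{i=1}^{n} x_i ,
\qquad
\operatorname{Var}(\bar{x}) = \frac{\sigma^{2}}{n} ,
\qquad
\operatorname{SD}(\bar{x}) = \frac{\sigma}{\sqrt{n}} .
```

Under these assumptions, a regional average over 100 houses fluctuates one tenth as much as the reading for a single house. If the fluctuations are correlated between houses (seasonal effects, for example), the reduction would be smaller.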

When the attempt is made to apply to individuals conclusions drawn from group level data, the condition of ecological fallacy is the result (DeAngelis, 1990). However, if the application is kept to the group and not the individual, by definition (DeAngelis, 1990), the condition of ecological fallacy would not exist even though ecological design was used. Schwartz likewise points out the problems with the concept of “ecological fallacy.” Still, methods are available to help minimize what is known as “ecological fallacy” (Wakefield and Shaddick, 2006; Salway and Wakefield, 2007).
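The distinction between group-level and individual-level conclusions can be illustrated with a small constructed example. The sketch below uses entirely hypothetical exposure and response values for nine individuals in three regions (the region names, numbers, and helper functions are assumptions made for illustration, not data from any cited study). It compares the correlation computed from regional averages with the correlation inside each region.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def mean(values):
    """Arithmetic mean of a non-empty list."""
    return sum(values) / len(values)


# Hypothetical (exposure, response) pairs for individuals in three regions.
regions = {
    "A": [(1, 3), (2, 2), (3, 1)],
    "B": [(4, 6), (5, 5), (6, 4)],
    "C": [(7, 9), (8, 8), (9, 7)],
}

# Ecological view: one average exposure and one average response per region.
group_exposure = [mean([e for e, _ in people]) for people in regions.values()]
group_response = [mean([r for _, r in people]) for people in regions.values()]
group_r = pearson(group_exposure, group_response)

# Individual view: correlation among the individuals inside each region.
within_r = {
    name: pearson([e for e, _ in people], [r for _, r in people])
    for name, people in regions.items()
}

print("correlation of regional averages:", group_r)
print("correlation within each region:", within_r)
```

In this constructed data the regional averages are perfectly positively correlated, while within every region the individual values are perfectly negatively correlated. The group-level statement is correct as a statement about regions; the fallacy arises only if it is read as a statement about the individuals inside them.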

DeAngelis (1990), citing Ibraham (1985), ranks study designs from weakest to strongest in regard to causal implications (Table 1). Case control designs are often mentioned as being acceptable for studies on exposures to, say, natural background radiation and radon (Cohen, 2000). However, in view of the hierarchy of designs shown in Table 1, the case control design is not that far from the ecological study design in strength of causal relationships.

TABLE 1.

Study designs ranked from weakest to strongest. Adapted from DeAngelis (1990). Ecological studies, as with case-control studies, are not the strongest but they are not the weakest either.

| Rank | Study design |
| --- | --- |
| 1. | Anecdotes |
| 2. | Clinical hunches |
| 3. | Case history |
| 4. | Time series |
| 5. | Ecological studies |
| 6. | Cross-sectional |
| 7. | Case-control |
| 8. | Before and after, with controls |
| 9. | Historical cohort |
| 10. | Prospective cohort |
| 11. | Clinical randomized trials |
| 12. | Community randomized trials |

A sampling of ecological study usage

A search of three fairly well-known journals that contain papers on epidemiology was performed in August 2010 using the keywords “ecological study” in “abstract and title” and checking the “phrase” box. The journals searched were: a) International Journal of Epidemiology (IJE), b) American Journal of Public Health (AJPH), and c) American Journal of Epidemiology (AJE). In IJE, 17 publications were returned, 16 of which pertained to clinical studies, e.g., on: 1) cancer and heart disease mortality in regard to soy product intake by a researcher from the Gifu University of Medicine, Gifu, Japan (Nagata, 2000); 2) leprosy in Brazil by researchers at universities in Brazil and Germany (Kerr-Pontes et al. 2004); and 3) plague in Vietnam by various international researchers, e.g., from the Institute of Hygiene and Epidemiology of Tay Nguyen in Dak Lak, Vietnam (Pham et al. 2009). In AJPH, 10 publications were returned, nine of which pertained to clinical studies, e.g., on: 1) breast cancer in Connecticut by researchers from the Centers for Disease Control and Prevention (CDC) (Hahn and Moolgavkar, 1989); 2) tuberculosis in California by researchers from various institutions such as the University of California, Berkeley, School of Public Health (Myers et al. 2006); and 3) stroke mortality by researchers from the Division of Epidemiology, School of Public Health, University of Minnesota in Minneapolis (Jacobs et al. 1992). Curiously, no returns were observed for AJE.

It seems that by publishing their ecological studies in reputable journals, these researchers from reputable institutions are implying that the ecological study is an acceptable research design and is part of the overall research design “pie.”

Immunizations

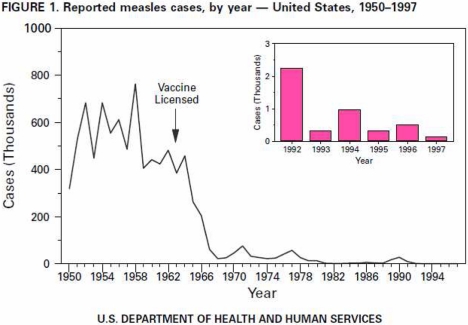

The U.S. Department of Health and Human Services’ website shows various charts from population data purportedly showing the efficacy of immunizations against various diseases (U.S. Department of Health and Human Services, 2010). This website states that the charts “show the impact of immunizations in the United States in reducing the number of cases of, or deaths from, vaccine-preventable diseases...” (U.S. Department of Health and Human Services, 2010). With the exception perhaps of the polio chart, which states that cases from 1975 – 1997 are “consultant verified” (U.S. Department of Health and Human Services, 2010), these charts appear to be based on population data rather than data from known individuals. Consequently, the charts would be considered data derived from an ecological study design.

A measles chart from the Centers for Disease Control and Prevention (1998), as cited in an epidemiology textbook by Friis and Sellers (2004), is shown in Figure 1. Neither the textbook (Friis and Sellers, 2004) nor the CDC report mentions whether any particular individuals were exposed to measles, or whether any particular individuals actually received the vaccine; instead, information about populations is provided. Consequently, this chart would also seem to qualify as ecological data, purportedly showing a measles vaccination dose-response.

FIGURE 1.

Measles cases by year from CDC (1998). The ecological data purportedly shows efficacy of measles vaccine.

If the ecological study design is acceptable in demonstrating the efficacy of immunizations, then it (the ecological study design) should likewise be acceptable in demonstrating other dose-response relationships such as radiation hormesis. The old saying, “What’s good for the goose is good for the gander” seems applicable here.

CONCLUSION

As long as the reader realizes the limitation of the study design, then he or she should be able to decide on what level of certainty to place on its findings. Ecological studies, as with other study designs, including case-control designs, have limitations. The ecological study design is a valid method for showing dose-response relationships, and at a minimum, provides a solid foundation on which continued investigation can take place.

REFERENCES

- Centers for Disease Control and Prevention. Measles – United States, 1997. MMWR Weekly. 1998;47(14):273. Cited in Friis and Sellers (2004).

- Cohen B. Ecological versus case-control studies for testing a linear-no threshold dose-response relationship. International Journal of Epidemiology. 1990;19(3):680–684. doi: 10.1093/ije/19.3.680.

- Cohen B. Updates and extensions to tests of the linear-no-threshold theory. Technology. 2000;7:657–672.

- DeAngelis C. An introduction to clinical research. New York: Oxford University Press; 1990.

- Freedman DA. Statistical models: Theory and practice. New York, NY: Cambridge University Press; 2005. Excerpt available from: http://assets.cambridge.org/97805218/54832/excerpt/9780521854832_excerpt.pdf.

- Friis RH, Sellers TA. Epidemiology for public health practice. Boston: Jones and Bartlett; 2004.

- Hahn RA, Moolgavkar SH. Nulliparity, decade of first birth, and breast cancer in Connecticut cohorts, 1855 to 1945: an ecological study. American Journal of Public Health. 1989;79:1503–1507. doi: 10.2105/ajph.79.11.1503.

- Heath D. An introduction to experimental design and statistics for biology. Boca Raton: CRC Press; 1995. p. 17.

- Hill AB. The environment and disease: association or causation. Proceedings of the Royal Society of Medicine. 1965;58:295–300.

- Ibraham MA. Epidemiology and health policy. Rockville, MD: Aspen Systems; 1985.

- Jacobs DR, McGovern PG, Blackburn H. The US decline in stroke mortality: what does ecological analysis tell us? American Journal of Public Health. 1992;82:1596–1599. doi: 10.2105/ajph.82.12.1596.

- Kerr-Pontes LRS, Montenegro ACD, Barreto ML, Werneck GL, Feldmeier H. Inequality and leprosy in Northeast Brazil: an ecological study. International Journal of Epidemiology. 2004;33:262–269. doi: 10.1093/ije/dyh002.

- Lilienfeld AM, Lilienfeld DE. Foundations of epidemiology. 2nd ed. New York: Oxford University Press; 1980. Cited in: Friis RH, Sellers TA. Epidemiology for public health practice. Boston: Jones and Bartlett; 2004. p. 26.

- Mann CJ. Observational research methods. Research design II: Cohort, cross sectional, and case-control studies. Emergency Medicine Journal. 2003;20:54–60. doi: 10.1136/emj.20.1.54.

- Myers WP, Westenhouse JL, Flood J, Riley LW. An ecological study of tuberculosis transmission in California. American Journal of Public Health. 2006;96:685–690. doi: 10.2105/AJPH.2004.048132.

- Nagata C. Ecological study of the association between soy product intake and mortality from cancer and heart disease in Japan. International Journal of Epidemiology. 2000;29:832–836. doi: 10.1093/ije/29.5.832.

- Pham HV, Dang DT, Minh NNT, Nguyen ND, Nguyen TV. Correlates of environmental factors and human plague: an ecological study in Vietnam. International Journal of Epidemiology. 2009;38:1634–1641. doi: 10.1093/ije/dyp244.

- Salway R, Wakefield J. A hybrid model for reducing ecological bias. Biostatistics. 2007;9(1):1–17. doi: 10.1093/biostatistics/kxm022.

- U.S. Department of Health and Human Services. Effectiveness of immunizations. 2010. [Cited 2010 Dec 15]. Available from: http://www.hhs.gov/nvpo/concepts/intro6.htm.

- Wakefield J, Shaddick G. Health-exposure modeling and the ecological fallacy. Biostatistics. 2006;7(3):438–455. doi: 10.1093/biostatistics/kxj017.