Abstract

Objective

Clinical summarization, the process by which relevant patient information is electronically summarized and presented at the point of care, is increasingly important given the growing volume of clinical data in electronic health record systems (EHRs). There is a paucity of research on electronic clinical summarization, including on the summarization capabilities of currently available EHR systems.

Methods

We compared different aspects of the general clinical summary screens of twelve EHR systems using a previously described conceptual model: AORTIS (Aggregation, Organization, Reduction and Transformation, Interpretation, and Synthesis).

Results

We found wide variation in the EHRs’ summarization capabilities: all systems were capable of simple aggregation and organization of limited clinical content, but only one demonstrated the ability to synthesize information from the data.

Conclusion

Improvement of the clinical summary screen functionality of currently available EHRs is necessary. Further research should identify strategies and methods for creating easy-to-use, well-designed clinical summary screens that aggregate, organize and reduce all pertinent patient information and that provide clinical interpretation and synthesis as required.

Keywords: Electronic health records, clinical summarization, user interface

Introduction

The use of electronic health records (EHRs) has enhanced our ability to collect large amounts of patient-specific health information over long periods of time. With the impending widespread adoption of EHRs, along with the creation of community and statewide Health Information Exchange systems (HIEs) [1], an immense amount of electronically available clinical data describing all aspects of a patient’s care will be available to every clinician at every patient encounter. Clinicians will be responsible for reviewing and acting on all of these data [2]. However, the average amount of time that clinicians spend interacting with their patients has decreased [3–5], and clinicians and patients already complain that a large percentage of the physician-patient encounter is now spent interacting with the EHR [3–5]. When using the EHR, clinicians often need to find and interpret relevant information from various sources in a timely manner. EHR systems must therefore provide powerful clinical decision support features that complement this important part of medical decision making, rather than hinder efficient patient-centered care [6].

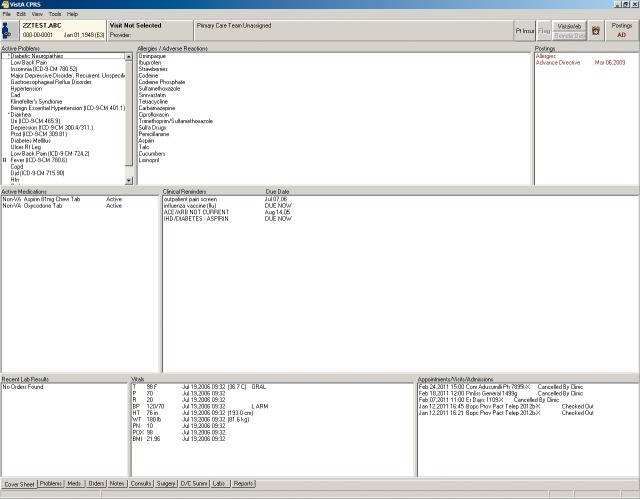

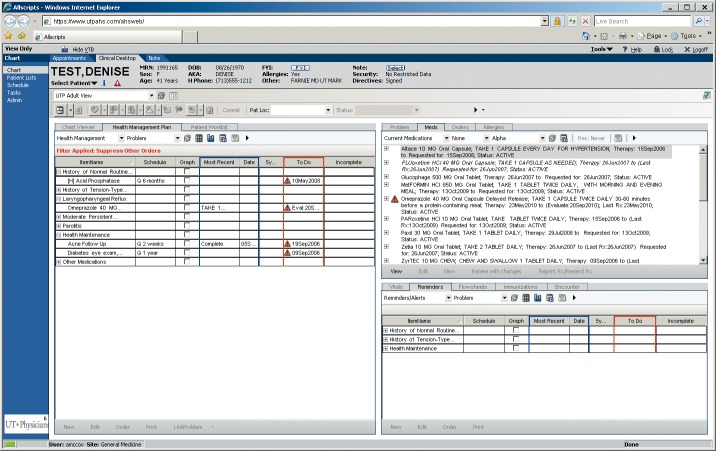

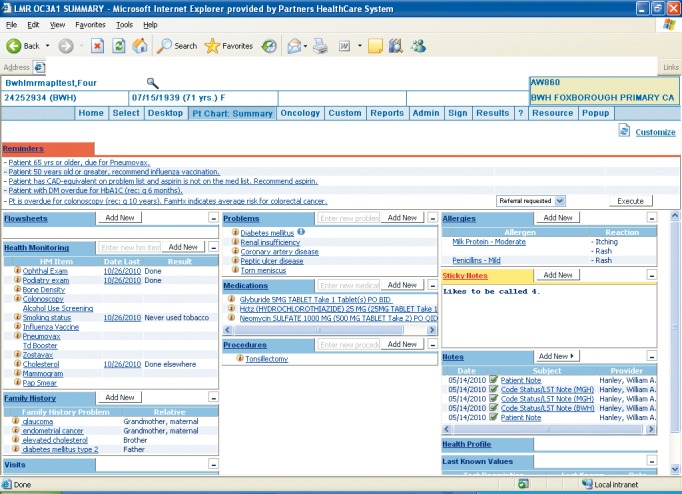

It is now widely accepted that the adoption of an EHR will improve processes of care, including documentation and retrieval of medical information, information exchange between disparate systems, and reduction of error [7]. However, barriers to EHR adoption and subsequent dissatisfaction with implemented technology still exist [8]. Studies of the failure of health information technology to deliver on its promises have identified unintended consequences of its use [9]. Despite the obvious clinical support that electronic aggregation of clinical data promises, it may add to these unintended consequences: large amounts of longitudinal health data may, in fact, hinder point-of-care information retrieval and decision-making. In addition, structured templates that render clinical notes meaningless and difficult to read and interpret may proliferate [10]. Unstructured narrative text, together with the complexity of navigating a multifaceted electronic record to identify useful information, can lead to information overload [9]. Many EHR systems offer some form of summary screen that provides a limited overview of an individual patient’s chart. These summary screens have been found to be minimally utilized in processes of care [11]. Figures 1, 2 and 3 depict examples of these summary screens.

Fig. 1.

Screen print of Partners HealthCare System’s Longitudinal Medical Record (LMR) clinical summary screen

Fig. 2.

Screen print of the Veterans Affairs Health System’s Computerized Patient Record System (CPRS) clinical summary screen

Fig. 3.

Screen print of the UTHealth Practice Plan’s Allscripts clinical summary screen

Despite the existence of summary screens in many EHRs, there are minimal standards that determine which data elements should be included (defined nationally by the National Institute of Standards and Technology as containing at least diagnostic test results, the problem list, the medication list, and the medication allergy list) and how the information should be summarized [12, 13]. In particular, “Clinical Summary” standards have been described for after-visit summaries [12] and for care-transition summaries in the United Kingdom [14], but no standards exist for problem-oriented clinical summaries for healthcare providers [15]. We have developed methods for generating the knowledge required to determine, in real time, which data elements are relevant to include in a problem-oriented summary screen [16, 17]. A description of the common summarization capabilities of various EHR systems provides a necessary starting point for formal evaluation and for redesign that will support the cognitive needs of clinicians [18]. To characterize the current implementation of this type of clinical decision support, we compared the extent of the clinical summarization capabilities of various EHR systems in use, with attention to the clinical content available in their general clinical summary screens.

Background

There is a paucity of research on electronic clinical summarization, including on whether current EHR systems have these capabilities. Summarization of medical information by clinicians has been studied in limited domains of clinical care, including handoffs [19, 20], creation of discharge summaries [21], and medical education [22, 23]. Automatically generated clinical summaries are promising because they could provide easy access to important data and could potentially be customized to clinicians’ needs for individualized patient care [24].

We briefly describe previous work in electronic clinical summarization. In the neonatal intensive care setting, Law et al., in a 2005 study of forty neonatal ICU staff, found that textual summaries generated by experts led to better choices of appropriate responses than data presented as trend graphs [25]. This finding was later supported by a 2008 study of thirty-five neonatal ICU staff, which also found that human-generated summary information was superior to computer-generated summaries [26]. An example of a relatively widely adopted summary system is the National Health Service’s Summary Care Record in England, which aggregates information about medications, allergies and adverse reactions and is intended for use in emergency and unscheduled visits. While no direct evidence of improved safety was found using this summary, Greenhalgh et al. described a small positive impact on preventing medication errors [27]. Data from sources other than the health record have been assessed as potentially useful sources of summary information [28], but the majority of research has concentrated on extracting data from the electronic health record, in particular from textual data. Van Vleck et al. found that physicians relied heavily on textual data contained in notes when generating summaries [24]; Elhadad et al. found that physicians preferred personalized summaries, tailored to patient characteristics, over generic summaries [29]. Afantenos et al. present a detailed evaluation of the potential of summarization technology in the medical domain, based on an examination of the state of the art and of existing medical document types and summarization applications [30]. A cognitive analysis of summarization performed by Reichert et al. on eight nephrologists confirmed that a large amount of time was spent reviewing textual data and identified several different strategies used by physicians when summarizing relevant information. The authors also identified three primary goals that guided physicians in the summarization process: identifying relevant information, validating it through a more detailed review of the data, and ascertaining the current status of the problem or disease state [31].

In summary, it is clear that computer-generated clinical summaries

1. can be created in limited domains,
2. are useful and satisfying to clinicians, and
3. may improve quality and safety of care.

To our knowledge, the only conceptual model of the clinical summarization process was described by Feblowitz et al. [32], who identified the need for a model that would:

1. provide a framework applicable to various types of clinical summaries;
2. lay a foundation for methods used to analyze clinical summaries;
3. facilitate future standardization and translation of human-generated clinical summaries into electronic form; and
4. promote and extend future research on clinical summarization.

This model consists of five distinct stages: Aggregation, Organization, Reduction and Transformation, Interpretation, and Synthesis (AORTIS). In brief, the five stages of the AORTIS model are as follows:

Stage 1: Aggregation

Aggregation is simply the collection of clinical data from various electronic sources across multiple databases or health networks: for example, medication lists from the pharmacy, test results from the laboratory, progress notes from multiple providers, and radiology images from the Picture Archiving and Communication System (PACS). These data may come from different parts of an integrated EHR or from multiple community-based EHRs connected by a Health Information Exchange (HIE).
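
As a minimal illustration of aggregation, the Python sketch below pools items from several source feeds into one list without sorting, filtering, or interpreting them. The `ClinicalItem` record, the feed names, and the sample values are hypothetical assumptions for illustration, not the data model of any EHR examined here.

```python
from dataclasses import dataclass
from datetime import date
from itertools import chain


@dataclass(frozen=True)
class ClinicalItem:
    """One clinical data element (hypothetical record layout)."""
    source: str    # originating system, e.g. "pharmacy" or "laboratory"
    kind: str      # content type, e.g. "medication" or "lab"
    name: str
    value: str
    recorded: date


def aggregate(*feeds):
    """Collect items from several source feeds into one list.

    Aggregation only gathers data: items keep their source labels and
    appear in the order the feeds were read, with nothing sorted,
    removed, or interpreted.
    """
    return list(chain.from_iterable(feeds))


# Hypothetical feeds standing in for separate departmental systems.
pharmacy = [ClinicalItem("pharmacy", "medication", "metformin", "500 mg twice daily", date(2011, 3, 1))]
laboratory = [ClinicalItem("laboratory", "lab", "hemoglobin A1c", "7.9 %", date(2011, 2, 20))]
notes = [ClinicalItem("clinic notes", "note", "progress note", "signed", date(2011, 3, 1))]

chart = aggregate(pharmacy, laboratory, notes)
```

In practice the feeds would be interfaces to separate systems or to an HIE; plain lists keep the sketch self-contained.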

Stage 2: Organization

Organization is the arrangement of data according to some specified underlying principle without condensing, altering, or interpreting it. Within the EHR, this arrangement occurs concurrently with aggregation, unlike in a paper chart, where the process is more visible and time-consuming. Common organization operations are grouping (e.g., by data type or originating service), sorting (e.g., by date or alphabetically) and prioritizing (e.g., by urgency or specialty).
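
The grouping, sorting and prioritizing operations can be sketched as follows, under the assumption that aggregated items are simple tuples. The display priority (medications before labs) and the sample data are hypothetical; the point is that every input item survives unchanged, only its position differs.

```python
from collections import defaultdict
from datetime import date

# Hypothetical aggregated items: (content_type, name, value, date_recorded).
items = [
    ("lab", "potassium", "4.1 mmol/L", date(2011, 1, 5)),
    ("medication", "lisinopril", "10 mg daily", date(2010, 11, 2)),
    ("lab", "creatinine", "1.0 mg/dL", date(2011, 2, 9)),
    ("medication", "metformin", "500 mg twice daily", date(2011, 2, 9)),
]


def organize(items, type_priority=("medication", "lab")):
    """Group items by content type, order the groups by an assumed
    priority, and sort each group newest-first.

    Nothing is condensed or altered: every input item appears exactly once.
    """
    groups = defaultdict(list)
    for item in items:
        groups[item[0]].append(item)

    def rank(kind):
        # Types missing from the priority list go last.
        return type_priority.index(kind) if kind in type_priority else len(type_priority)

    return {
        kind: sorted(groups[kind], key=lambda item: item[3], reverse=True)
        for kind in sorted(groups, key=rank)
    }


summary = organize(items)
```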

Stage 3: Reduction & Transformation

Reduction is the process of filtering salient information, without modifying it, to decrease the amount of information presented (e.g., displaying only the most recent values, values from a single location, or values attributed to one provider or specialty). Transformation is the process of altering how the data are viewed or presented in order to facilitate understanding (e.g., graphing data over time). Another form of data reduction involves a mathematical transformation, such as calculating descriptive statistics like the mean, median, mode, percentile rankings, maxima, or minima.
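
The two kinds of reduction described above can be contrasted in a short sketch: filtering that keeps one reading unmodified, and a mathematical transformation into descriptive statistics. The blood pressure series is invented for illustration.

```python
from datetime import date
from statistics import mean, median

# Hypothetical systolic blood pressure readings (mmHg) with measurement dates.
readings = [
    (date(2010, 6, 1), 142),
    (date(2010, 9, 14), 138),
    (date(2011, 1, 20), 131),
    (date(2011, 3, 2), 127),
]


def most_recent(readings):
    """Reduction by filtering: keep only the latest reading, unmodified."""
    return max(readings, key=lambda reading: reading[0])


def describe(readings):
    """Reduction by mathematical transformation: summary statistics."""
    values = [value for _, value in readings]
    return {
        "mean": mean(values),
        "median": median(values),
        "min": min(values),
        "max": max(values),
    }


latest = most_recent(readings)  # (date(2011, 3, 2), 127)
stats = describe(readings)      # mean 134.5, median 134.5, min 127, max 142
```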

Stage 4: Interpretation

Interpretation is the context-based analysis of relevant data through the application of general clinical knowledge or rules. For example, selecting abnormal lab results to include in a patient handoff summary requires interpretation because a clinician or computer program must be able to identify which results are abnormal. In general, interpretation requires access to a clinical knowledge base and is a necessary step to produce knowledge-rich abstracts of clinical information.
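
A minimal sketch of interpretation, assuming a tiny knowledge base of reference ranges: results are flagged as low, high or normal, and a pair of readings is turned into a trend arrow similar to those some systems display for vital signs. The ranges below are illustrative assumptions; real ranges are laboratory-specific and would come from a clinical knowledge base.

```python
# Hypothetical reference ranges for illustration only; real ranges are
# laboratory-specific and would come from a clinical knowledge base.
REFERENCE_RANGES = {
    "potassium": (3.5, 5.0),    # mmol/L
    "sodium": (135.0, 145.0),   # mmol/L
}


def flag(test, value):
    """Interpret one result against its reference range: 'L', 'H', or 'N'."""
    low, high = REFERENCE_RANGES[test]
    if value < low:
        return "L"
    if value > high:
        return "H"
    return "N"


def trend_arrow(previous, current, tolerance=0.0):
    """Interpret the change between two readings as an up, down, or flat arrow."""
    if current > previous + tolerance:
        return "↑"
    if current < previous - tolerance:
        return "↓"
    return "→"


# Select only abnormal results, as a handoff summary might.
results = [("potassium", 5.6), ("sodium", 139.0)]
abnormal = [(test, value, flag(test, value)) for test, value in results
            if flag(test, value) != "N"]
```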

Stage 5: Synthesis

Synthesis is the combination of two or more patient-specific clinical data elements along with general medical knowledge to yield more meaningful information or suggest action. Following knowledge-based interpretation, clinical information can be understood in relation to other parts of the medical record and can be viewed with respect to a specific patient problem.
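
Synthesis can be illustrated with a rule that combines several patient-specific elements (problem list, age, medications, immunizations) with general knowledge to suggest an action. The rules below are modelled loosely on the LMR recommendations reported in the Results; they are simplified, hypothetical assumptions, not clinical guidance.

```python
from dataclasses import dataclass, field

# Illustrative rule knowledge (hypothetical and simplified, not guidance).
CAD_OR_EQUIVALENT = {"coronary artery disease", "diabetes mellitus"}


@dataclass
class Patient:
    age: int
    problems: set = field(default_factory=set)
    medications: set = field(default_factory=set)
    immunizations: set = field(default_factory=set)


def synthesize(patient):
    """Combine several patient-specific data elements with rule knowledge
    to suggest actions for the summary screen."""
    suggestions = []
    if patient.problems & CAD_OR_EQUIVALENT and "aspirin" not in patient.medications:
        suggestions.append("Consider aspirin: CAD or CAD equivalent on problem list")
    if patient.age > 65 and "pneumococcal" not in patient.immunizations:
        suggestions.append("Pneumococcal vaccination due: age over 65")
    return suggestions


advice = synthesize(Patient(age=70, problems={"diabetes mellitus", "hypertension"}))
```

Unlike interpretation of a single value, each rule here needs at least two data elements at once, which is what distinguishes synthesis in the AORTIS model.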

Methods

We chose twelve EHR systems and their general clinical summary screens for comparison. These systems were chosen based on convenience (i.e., those to which our colleagues had access), as many commercially available EHR systems are not publicly available for comparison. A general clinical summary screen is a designated part of the EHR that displays a concise view of clinical information; such screens are usually called summary screens, overview screens, or face sheets. For each system, we inspected screenshots of the general clinical summary screen. Figures 1, 2, and 3 depict the reviewed screens for three of these systems. A complete listing of the EHR systems chosen is included in Table 1.

Table 1.

Examined clinical summary screens

| EHR Product | Version | Implementation Site | Type of system |
|---|---|---|---|
| Partners LMR | Fall 2010 | Partners HealthCare System, MA | Locally developed |
| Allscripts Enterprise | v11.2.0 | UTHealth Practice Plan, TX | Commercially available |
| CPRS | v1.0.27.90 | VA Houston, TX | Freely available |
| GE Centricity | 2008 version | University of Medicine & Dentistry, NJ | Commercially available |
| OCW | v1.9.802 | Ochsner Clinic, LA | Locally developed |
| StarPanel | N/A | Vanderbilt Practice Plan, TN | Locally developed |
| Springcharts | v1.6.0_20 | Web Demo | Commercially available |
| OpenMRS | v1.7.1 | Web Demo | Open source; freely available; disease-specific (HIV/AIDS) |
| Cerner | v2010.01 | Stony Brook, NY | Commercially available |
| ClinicStation | v3.7.1 | MD Anderson, TX | Locally developed; disease-specific (cancer) |
| NextGen | Early 2008 version | Mid-Valley Independent Physicians Association, OR | Commercially available |
| Epic | v2009 IU7 | Harris County Hospital District | Commercially available |

LMR - Longitudinal Medical Record; CPRS - Computerized Patient Record System; OCW - Ochsner Clinical Work Station; MRS - Medical Record System.

A clinician (AL) and an informatics expert (ABM) independently reviewed all the systems to determine which summary elements were included. We used Cohen’s kappa to measure inter-rater agreement. For components on which the reviewers disagreed, a third reviewer (DFS) examined the screen to reach consensus.
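
For reference, Cohen’s kappa compares the observed agreement p_o with the agreement p_e expected by chance from each rater’s marginal label frequencies: κ = (p_o − p_e) / (1 − p_e). The Python sketch below computes it for two raters; the ten present/absent judgments are invented for illustration and are not the study’s data.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who labelled the same items."""
    if not rater_a or len(rater_a) != len(rater_b):
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    # Observed agreement: share of items given the same label by both raters.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement from each rater's marginal label frequencies.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if p_e == 1:
        # Both raters used one identical label throughout; kappa is
        # undefined, and 1.0 is returned by convention here.
        return 1.0
    return (p_o - p_e) / (1 - p_e)


# Hypothetical present (1) / absent (0) judgments for ten screen elements.
reviewer_1 = [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]
reviewer_2 = [1, 1, 1, 1, 0, 0, 0, 0, 0, 1]
kappa = cohens_kappa(reviewer_1, reviewer_2)  # approximately 0.6
```

Here the reviewers agree on 8 of 10 items (p_o = 0.8) and chance agreement is 0.5, so κ = 0.3 / 0.5 = 0.6.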

Currently, there are no standard data elements defined for such clinical summaries. Different clinical scenarios (e.g., inpatient versus outpatient summarization needs) and different clinical user profiles (e.g., nurse versus physician) may drive a variety of tasks that require the use of these clinical summaries. Hence, we did not perform a formal needs analysis or narrow our examination to the summarization needs of a handful of clinical tasks. Instead, we examined each system’s summarization capabilities for a variety of content types determined to be important through the authors’ experience implementing similar summary tools (AW, DFS), informal observation and interviews of clinical summarization in ambulatory clinics using the Rapid Assessment methods described by McMullen et al. [33], and review of the literature (Table 2). Almost all clinical content types shown by each summary screen are included in the table. We also reviewed each screen for inclusion of other elements, such as alerts, custom reminders, diagnostics or imaging, directives, dynamic links, immunizations, insurance, procedures, referrals, and task lists. We used the conceptual model described by Feblowitz et al. to frame our comparison [32].

Table 2.

Content types evaluated for inclusion in summary screens

| Content Type | Example |
|---|---|
| Vitals | Current and past temperature, blood pressure, pulse, respiratory rate |
| Medications | Previously or actively prescribed medications |
| Visit Schedule | Past, current, or future scheduled appointments |
| Patient Information | Current patient demographics, picture, or other identifying information |
| Allergies | Medication allergies documented for the patient |
| Problem List | Previous or active clinical problems, diagnoses, or medical conditions |
| Health Care Maintenance | Reminders for vaccinations or cancer screening |
| Labs | Recent clinical laboratory test results |

In addition to the components of the AORTIS model, we performed a preliminary assessment of each EHR for apparent aspects of the graphical user interface relevant to the clinical summary screen, namely the specific interface functions that enable summarization and the display of data on the screen. The features included:

1. The ability to graph information.
2. The use of color to emphasize the importance of specific information.
3. The specific layout of the user interface, including the following categories:
   a) Tabbed: use of tabbed screens to display/hide data
   b) Modular views: use of multiple tiled frames that allow different data elements to be seen at the same time
   c) Collapsible/expandable screens: use of layered frames that allow different data elements to be displayed/hidden
   d) Custom content: the ability to insert customized content relevant to the clinician on the summary screens
   e) Scrolling: the ability to scroll within frames to display information
4. The ability to link to information within the chart.
5. The ability to link to clinical reference information outside of the application.

Results

Our findings are summarized in Table 3. Inter-rater agreement across all summarization capabilities was moderate (kappa = 0.68). There was wide variation in clinical summarization capabilities across the systems. Two of the studied systems catered to disease-specific populations (Open MRS for HIV/AIDS and Clinic Station for cancer). All the systems examined appeared to aggregate, organize or reduce most of the clinical content, corresponding to the lower tiers of the AORTIS model. When clinical data were reduced, more recent information was preferentially displayed.

Table 3.

Clinical summarization capabilities of the examined electronic health record systems

| Category | Characteristic | CPRS | Allscripts | Next Gen | LMR | OCW | Centricity | Clinic Station | Star Panel | Spring Charts | Cerner | Open MRS | Epic |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Content | Vitals | A O R | A O | A O T | Absent | Absent | A O I | A O | A O | Absent | A O R | Absent | Absent |
| | Medications | A O R | A O R | A O R | A O R | A | A O R I | A O | A O | A O R | A O | A R | A R |
| | Visit Schedule | A O R | A O | A O | A O | Absent | Absent | A O | Absent | Absent | A O | Absent | Absent |
| | Patient Information | A O R | A O R | A O R | A O R | A O R | A O R | A O R | A R | A O | A O | A | A O R |
| | Allergies | A | A O | A | A O | A | A | A O | Absent | A | A O | A | A |
| | Problem List | A R | A O R | A | A O R | A | A R | A | A O R | A O R | A O R | A R | A |
| | Health Care Maintenance | R | A | Absent | A O I | Absent | Absent | Absent | A O | A O R | A O | A O R I | A O R I |
| | Labs | A O R | A O | A O | Absent | Absent | A O | A O | Absent | Absent | A O R | Absent | Absent |
| Other Content | | Directives | Custom Reminders; Diagnostics/Imaging; Immunization; Procedures | Custom Reminders; Dynamic Links; Immunization; Procedures | Alerts; Insurance; Task List | Directives; Insurance; Procedures | Diagnostics/Imaging; Directives; Dynamic Links | Alerts | Referrals | Custom Reminders; Diagnostics/Imaging; Immunization; Procedures | Custom Reminders; Insurance; Procedures; Referrals | Custom Reminders | |
| Structure | Graph | Absent | Absent | Present | Absent | Absent | Absent | Absent | Absent | Absent | Absent | Absent | Absent |
| | Color-Emphasis | Present | Present | Absent | Present | Absent | Present | Absent | Present | Present | Present | Absent | Absent |
| | Custom Layout | MV; Sc | Tb; CoE; Sc | MV; Cu; Sc | MV; CoE; Cu; Sc | MV; Sc | MV; Sc | Tb; CoE; Cu; Sc | Tb; MV; Sc | MV; Sc | Tb; MV; CoE; Sc | MV | MV |
| | Chart Specific Information | Present | Present | Present | Present | Present | Present | Present | Present | Present | Present | Present | Present |
| | Reference Information | Absent | Absent | Absent | Present | Absent | Absent | Absent | Present | Absent | Absent | Absent | Absent |

A-Aggregation; O-Organization; R-Reduction; T-Transformation; I-Interpretation; S-Synthesis; Tb-Tabbed; MV-Modular Views; CoE-Collapsible/Expandable; Cu-Custom content; Sc-Scroll

Only one system (NextGen) clearly presented transformed vital signs data (i.e., data whose view or presentation was altered to facilitate understanding) within the summary screen, using graphs. Other systems may offer other options for creating graphs or other visual representations of selected data, but these were not apparent from the summary screen. Four of the studied EHR systems were able to interpret information (i.e., analyze data through the application of general clinical knowledge or rules) for various types of clinical content: Cerner and Centricity for vital signs, where arrows designate trends in temperature or pulse rate, and Spring Charts and LMR for health care maintenance reminders, which were specified based on patient information. Only one system (LMR) combined information to synthesize recommendations for further action on the summary screen (for example, recommending aspirin when a coronary artery disease equivalent such as diabetes mellitus was present on the problem list, and recommending pneumococcal vaccination for patients over 65 years of age).

Two systems, LMR and Star Panel, had data fields contextually linked to specific data within the chart as well as to specific reference information. Three of the studied EHR systems (LMR, NextGen and Clinic Station) allowed clinicians to customize the clinical content presented to them, but only one system (LMR) allowed the presented data to be edited directly from the clinical summary screen. Scrolling and tabbed screens were commonly used to display information that did not fit within the default screen size. Most systems displayed the summary information in modular views (boxes or windows) to separate the content displayed.

Discussion

The Institute of Medicine’s recent report, “Health Information Technology and Patient Safety: Building Safer Systems for Better Care”, correctly recognized that many EHR vendors restrict access to screenshots of their products [34]. If we are to collectively develop the next generation of safe and effective EHRs, we must be able to review, compare, and comment on the features and functions of all EHRs. Our findings, while not an exhaustive analysis of all currently available EHR systems, suggest that although most of the EHR systems studied share some similarities, they vary widely not only in the content and presentation of information but also in the ability and extent of summarization that may support clinical decision making. Our results emphasize that electronic clinical summary screens often lack customizability and have only a limited ability to extract contextually linked, patient-specific information. They commonly rely on less sophisticated summarization techniques, such as aggregation and organization, rather than on the more active clinical decision support provided by interpreting information with clinical rules and synthesizing recommendations for further action. Our study is limited by its observational nature and by our inability to interact directly with each system’s capabilities. However, because a general clinical summary screen is inherently a clinical “snapshot”, our approach does highlight aspects of the summary screens that are vague or not easily discoverable from the interface. An obvious next step in our research would be a detailed interactive usability comparison of each screen.

Clinicians are often presented with large amounts of aggregated data from a variety of sources, in both paper and electronic form, and must process this information in a manner that is not only conducive to medical decision-making but also transparent to subsequent processes of care, for example, communicating relevant information when referring a patient to a colleague. Inadequate attention to the clinical summarization process can lead to various failures due to overlooked information, including missed allergy information, inadvertent medication errors, and missed or delayed diagnoses, all of which can lead to adverse patient outcomes [35]. From a user’s perspective, this could lead to information overload, dissatisfaction with the electronic health record, the subsequent adoption of unsafe workarounds, and resistance to the adoption of otherwise potentially useful technology [36–38]. Therefore, the way that relevant information is summarized and presented to the clinician in an EHR is of increasing importance. Each component of the AORTIS model has significant safety implications; thus, we recommend that EHRs strive to incorporate each component into their summary screens. The higher levels of this model (i.e., transformation, interpretation and synthesis) are superior methods for displaying pertinent information, but the components chosen to display data should be tailored to the information needs of the clinician. In particular, we encourage EHR designers and developers to include more graphical, transformation-type elements (e.g., sparklines) in their summary screens [39].
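
Sparklines are small, word-sized graphics. As a rough text-only approximation, the sketch below maps a numeric series onto eight Unicode block characters scaled to the series’ own minimum and maximum. The function name, the scaling choice and the sample series are assumptions for illustration; a production summary screen would more likely render a small line chart.

```python
BARS = "▁▂▃▄▅▆▇█"  # eight block heights, lowest to highest


def sparkline(values):
    """Render a non-empty numeric series as a one-line block sparkline."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A flat series has no range to scale; draw it at the lowest height.
        return BARS[0] * len(values)
    steps = len(BARS) - 1
    return "".join(BARS[round((v - lo) / (hi - lo) * steps)] for v in values)


# Hypothetical systolic blood pressure series (mmHg), oldest to newest.
print(sparkline([142, 138, 131, 127]))  # █▆▃▁
```

Scaling to the series’ own range makes the trend visible at a glance but hides absolute values, so such a glyph would normally sit next to the most recent reading rather than replace it.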

The authors also recommend that vendors openly participate in collaborative research, working together with informaticians, clinicians and patient safety researchers in order to create safe and relevant clinical summary screens. The planned next steps in our study include a more formal naturalistic observation and artifact analysis to supplement our understanding about the nature and context of use of these clinical summary screens. We are also currently exploring the development of clinical knowledge bases that would allow clinical summary screen developers to organize a patient’s data by clinical condition. In other words, the clinician would be able to review all of a patient’s medications that were being used to treat a particular condition along with relevant laboratory test results required to monitor either the condition or its treatment.
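
A minimal sketch of such a problem-oriented view, assuming a small hand-written knowledge base that maps medications and laboratory tests to the problems they treat or monitor. The mappings and the function name are hypothetical illustrations and do not reproduce the knowledge bases under development.

```python
# Hypothetical knowledge base: which problem each medication treats and
# which problem each laboratory test helps monitor (illustrative only).
MED_TO_PROBLEM = {
    "metformin": "diabetes mellitus",
    "insulin glargine": "diabetes mellitus",
    "lisinopril": "hypertension",
}
LAB_TO_PROBLEM = {
    "hemoglobin A1c": "diabetes mellitus",
    "potassium": "hypertension",  # monitors ACE-inhibitor treatment
}


def problem_oriented_view(problems, medications, labs):
    """Organize medications and lab results under the problems they relate to.

    Items the knowledge base cannot link to an active problem are returned
    separately rather than dropped, so nothing disappears from the summary.
    """
    view = {problem: {"medications": [], "labs": []} for problem in problems}
    unlinked = []
    for med in medications:
        problem = MED_TO_PROBLEM.get(med)
        if problem in view:
            view[problem]["medications"].append(med)
        else:
            unlinked.append(med)
    for lab, value in labs:
        problem = LAB_TO_PROBLEM.get(lab)
        if problem in view:
            view[problem]["labs"].append((lab, value))
        else:
            unlinked.append(lab)
    return view, unlinked


view, unlinked = problem_oriented_view(
    problems=["diabetes mellitus", "hypertension"],
    medications=["metformin", "lisinopril", "atorvastatin"],
    labs=[("hemoglobin A1c", "7.9 %"), ("potassium", "4.1 mmol/L")],
)
```

Keeping unlinked items visible is a deliberate design choice in this sketch: an incomplete knowledge base should not silently hide data from the clinician.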

Conclusion

Improvement of the clinical summary screen functionality of currently available EHRs is necessary. It is imperative that EHR developers create new standard clinical summarization features, functions, and displays if clinicians are to achieve the anticipated benefits of state-of-the-art EHRs. Further research should identify strategies and methods for creating easy-to-use, well-designed clinical summary screens that aggregate, organize and reduce all pertinent patient information and that provide clinical interpretation and synthesis as required.

Conflicts of Interest

The authors declare that they have no conflicts of interest in the research.

Ethics

No human subjects were involved in the study.

Acknowledgements

We thank Toby Samo, Chief Medical Officer at Allscripts, for permission to use a screenshot of their summary screen. The authors would like to acknowledge Adolph Trudeau, Laura Fochtmann, Dario Giuse, Jacob McCoy, David Beck and others who provided information about the studied EHR systems and screens. This project was supported in part by Grant No. 10510592 for Patient-Centered Cognitive Support under the Strategic Health IT Advanced Research Projects Program (SHARP) from the Office of the National Coordinator for Health Information Technology and in part by the Houston VA HSR&D Center of Excellence (HFP90–020).

References

- 1.Adler-Milstein J, DesRoches CM, Jha AK. Health information exchange among US hospitals. Am J Manag Care 2011; 17(11): 761–768 [PubMed] [Google Scholar]

- 2.Sittig DF, Singh H. Legal, ethical, and financial dilemmas in electronic health record adoption and use. Pediatrics 2011; 127 : e1042-e1047 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Gilchrist V, McCord G, Schrop SL, King BD, McCormick KF, Oprandi AM, et al. Physician activities during time out of the examination room. Ann Fam Med 2005; 3: 494–499 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Laxmisan A, Hakimzada F, Sayan OR, Green RA, Zhang J, Patel VL. The multitasking clinician: decision-making and cognitive demand during and after team handoffs in emergency care. Int J Med Inform 2007; 76: 801–811 [DOI] [PubMed] [Google Scholar]

- 5.Weigl M, Muller A, Zupanc A, Angerer P. Participant observation of time allocation, direct patient contact and simultaneous activities in hospital physicians. BMC Health Serv Res 2009; 9: 110. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Sittig DF, Wright A, Osheroff JA, Middleton B, Teich JM, Ash JS, et al. Grand challenges in clinical decision support. J Biomed Inform 2008; 41: 387–392 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Gaylin DS, Moiduddin A, Mohamoud S, Lundeen K, Kelly JA. Public Attitudes about health information technology, and its relationship to health care quality, costs, and privacy. Health Serv Res 2011 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Boonstra A, Broekhuis M. Barriers to the acceptance of electronic medical records by physicians from systematic review to taxonomy and interventions. BMC Health Serv Res 2010; 10: 231. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Ash JS, Berg M, Coiera E. Some unintended consequences of information technology in health care: the nature of patient care information system-related errors. J Am Med Inform Assoc 2004; 11: 104–112 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Weir CR, Nebeker JR. Critical issues in an electronic documentation system. AMIA Annu Symp Proc 2007: 786–790 [PMC free article] [PubMed] [Google Scholar]

- 11.Staggers N, Clark L, Blaz JW, Kapsandoy S. Why patient summaries in electronic health records do not provide the cognitive support necessary for nurses’ handoffs on medical and surgical units: insights from interviews and observations. Health Informatics J 2011; 17(3): 209–223 [DOI] [PubMed] [Google Scholar]

- 12.NIST Health IT Standards and Testing [Internet] Clinical Summaries; Available from: http://healthcare. nist.gov/

- 13.HL7 Implementation Guide CDA Release 2 – Continuity of Care Document (CCD) Ann Arbor, Mich: Health Level Seven, Inc; Jan,2007 [Google Scholar]

- 14.Wright A, Chen ES, Maloney FL. An automated technique for identifying associations between medications, laboratory results and problems. J Biomed Inform 2010; 43(6): 891–901 [DOI] [PubMed] [Google Scholar]

- 15.Sittig DF, Singh H. Rights and responsibilities of electronic health record users. CMAJ 2012(in press) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.McCoy AB, Wright A, Laxmisan A, Singh H, Sittig DF. A prototype knowledge base and SMART app to facilitate organization of patient medications by clinical problems. AMIA Annu Symp Proc 2011: 888–894 [PMC free article] [PubMed] [Google Scholar]

- 17.Greenhalgh T, Wood GW, Bratan T, Stramer K, Hinder S. Patients’ attitudes to the summary care record and HealthSpace: qualitative study. BMJ 2008; 336(7656): 1290–1295 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Wright A, Sittig DF, Ash JS, Feblowitz J, Meltzer S, McMullen C, Guappone K, Carpenter J, Richardson J, Simonaitis L, Evans RS, Nichol WP, Middleton B. Development and evaluation of a comprehensive clinical decision support taxonomy: comparison of front-end tools in commercial and internally developed electronic health record systems. J Am Med Inform Assoc 2011; 18(3): 232–242 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Petersen LA, Orav EJ, Teich JM, O'Neil AC, Brennan TA. Using a computerized sign-out program to improve continuity of inpatient care and prevent adverse events. Jt Comm J Qual Improv 1998; 24: 77–87 [DOI] [PubMed] [Google Scholar]

- 20.Patterson ES, Roth EM, Woods DD, Chow R, Gomes JO. Handoff strategies in settings with high consequences for failure: lessons for health care operations. Int J Qual Health Care 2004; 16(2): 125–132

- 21.van Walraven C, Rokosh E. What is necessary for high-quality discharge summaries? Am J Med Qual 1999; 14: 160–169

- 22.Green EH, Hershman W, DeCherrie L, Greenwald J, Torres-Finnerty N, Wahi-Gururaj S. Developing and implementing universal guidelines for oral patient presentation skills. Teach Learn Med 2005; 17: 263–267

- 23.Onishi H. Role of case presentation for teaching and learning activities. Kaohsiung J Med Sci 2008; 24: 356–360

- 24.Van Vleck TT, Stein DM, Stetson PD, Johnson SB. Assessing data relevance for automated generation of a clinical summary. AMIA Annu Symp Proc 2007: 761–765

- 25.Law AS, Freer Y, Hunter J, Logie RH, McIntosh N, Quinn J. A comparison of graphical and textual presentations of time series data to support medical decision making in the neonatal intensive care unit. J Clin Monit Comput 2005; 19: 183–194

- 26.Hunter J, Freer Y, Gatt A, Logie R, McIntosh N, van der Meulen M, et al. Summarising complex ICU data in natural language. AMIA Annu Symp Proc 2008: 323–327

- 27.Greenhalgh T, Stramer K, Bratan T, Byrne E, Russell J, Potts HW. Adoption and non-adoption of a shared electronic summary record in England: a mixed-method case study. BMJ 2010; 340: c3111

- 28.Brennecke T, Michalowski L, Bergmann J, Bott OJ, Elwert A, Haux R, et al. On feasibility and benefits of patient care summaries based on claims data. Stud Health Technol Inform 2006; 124: 265–270

- 29.Elhadad N, McKeown K, Kaufman D, Jordan D. Facilitating physicians' access to information via tailored text summarization. AMIA Annu Symp Proc 2005: 226–230

- 30.Afantenos S, Karkaletsis V, Stamatopoulos P. Summarization from medical documents: a survey. Artif Intell Med 2005; 33: 157–177

- 31.Reichert D, Kaufman D, Bloxham B, Chase H, Elhadad N. Cognitive analysis of the summarization of longitudinal patient records. AMIA Annu Symp Proc 2010; 2010: 667–671

- 32.Feblowitz JC, Wright A, Singh H, Samal L, Sittig DF. Summarization of clinical information: a conceptual model. J Biomed Inform 2011; 44(4): 688–699

- 33.McMullen CK, Ash JS, Sittig DF, Bunce A, Guappone K, Dykstra R, Carpenter J, Richardson J, Wright A. Rapid assessment of clinical information systems in the healthcare setting: an efficient method for time-pressed evaluation. Methods Inf Med 2011; 50: 299–307

- 34.Institute of Medicine. Health IT and Patient Safety: Building Safer Systems for Better Care. Washington, DC: The National Academies Press; 2012. Available at: http://iom.edu/Reports/2011/Health-IT-and-Patient-Safety-Building-Safer-Systems-for-Better-Care.aspx (accessed December 16, 2011)

- 35.Singh H, Thomas EJ, Sittig DF, Wilson L, Espadas D, Khan MM, Petersen LA. Notification of abnormal lab test results in an electronic medical record: do any safety concerns remain? Am J Med 2010; 123(3): 238–244

- 36.Ash JS, Sittig DF, Campbell EM, Guappone KP, Dykstra RH. Some unintended consequences of clinical decision support systems. AMIA Annu Symp Proc 2007: 26–30

- 37.Schattner A. Angst-driven medicine? QJM 2009; 102: 75–78

- 38.Gandhi TK, Sittig DF, Franklin M, Sussman AJ, Fairchild DG, Bates DW. Communication breakdown in the outpatient referral process. J Gen Intern Med 2000; 15: 6

- 39.Thomas P, Powsner S. Data presentation for quality improvement. AMIA Annu Symp Proc 2005: 1134