Abstract

Background

In-hospital mortality measures, which are widely used to assess hospital quality, are not based on a standardized follow-up period and may systematically favor hospitals with shorter lengths of stay (LOS).

Objective

To assess the agreement between performance measures of U.S. hospitals using risk-standardized in-hospital and 30-day mortality rates.

Design

Observational study.

Setting

U.S. non-federal acute care hospitals with at least 30 admissions in 2004–2006 for acute myocardial infarction (AMI), heart failure (HF), or pneumonia (assessed separately for each condition).

Patients

Medicare fee-for-service patients admitted for AMI, HF, and pneumonia from 2004–2006.

Measurements

The primary outcomes are in-hospital and 30-day risk-standardized mortality rates.

Results

There were 718,508 AMI admissions to 3,135 hospitals, 1,315,845 HF admissions to 4,209 hospitals, and 1,415,237 pneumonia admissions to 4,498 hospitals. Hospital-level mean patient LOS varied across hospitals for each condition, ranging (min–max) from 2.3–13.7 days for AMI, 3.5–11.9 days for HF, and 3.8–14.8 days for pneumonia. The mean risk-standardized mortality rate (RSMR) differences (30-day minus in-hospital) were 5.3% (SD=1.3) for AMI, 6.0% (SD=1.3) for HF, and 5.7% (SD=1.4) for pneumonia, with wide distributions across hospitals. Hospital performance classifications differed between the in-hospital and 30-day models for 257 hospitals (8.2%) for AMI, 456 (10.8%) for HF, and 662 (14.7%) for pneumonia. Hospital mean LOS was positively correlated with in-hospital RSMR for all three conditions.

Limitations

Our study uses Medicare claims data for risk adjustment.

Conclusions

In-hospital mortality measures provide a different assessment of hospital performance than 30-day mortality and are biased in favor of hospitals with shorter LOS.

INTRODUCTION

The increasing use of mortality rates to assess hospital quality has intensified their importance. A key question confronting policy makers is whether measures that assess only in-hospital deaths are adequate for public reporting. Because the length of time patients are hospitalized for a particular condition or procedure varies across hospitals, in-hospital measures that have differential follow-up have the potential for bias (1). The measures may systematically favor hospitals with early transfers to other facilities and shorter lengths of stay (LOS), regardless of whether these approaches to patient management lead to better patient outcomes. Nevertheless, both in-hospital measures and those with a fixed follow-up period, such as 30 days, are currently widely used (2, 3).

Earlier research suggests cause for concern. Prior studies that compared results of in-hospital and 30-day mortality measures showed differences in mortality trends (4) and hospitals’ relative performance (5–7). Moreover, to avoid biased results, national quality measurement guidelines advocate that hospital quality measures assess patient outcomes over a standardized period of time (8, 9).

Nevertheless, many public and private quality programs continue to use measures focused on in-hospital outcomes. For example, the Agency for Healthcare Research and Quality (AHRQ) has developed 15 in-hospital mortality indicators (for seven medical conditions and eight surgical procedures) (2), and nine states publicly report a subset of these (3). CMS, which currently reports 30-day mortality for AMI, HF, and pneumonia on its public website, Hospital Compare, also plans to report AHRQ in-hospital mortality rates for hip fracture, abdominal aortic aneurysm, and a composite of medical conditions (10).

Guidelines notwithstanding, in-hospital mortality measures continue to be used for practical and conceptual reasons. Data for in-hospital assessments of mortality are readily available to any institution, whereas assessment periods extending beyond the hospitalization require linking identified patient data to a comprehensive death index or conducting resource-intensive patient follow-up, and often entail delays. In addition, some argue that hospitals should be assessed only on events that occur within their walls (5). Any standard follow-up period extending beyond discharge may be affected by factors that hospitals do not fully control, such as patient adherence after discharge. Conversely, hospitals have a clear responsibility for discharge planning and are a major influence on the post-discharge course, so a 30-day measure can better capture the quality of the entire episode of care.

Mortality measures with shorter observation periods (e.g., in-hospital or 7-day measures) may better capture certain elements of care quality, such as medication errors and hospital safety practices. But death, whether it occurs in the hospital or after discharge, is a critical outcome for patients, and for common conditions the death rate is often high in the early weeks after discharge; as LOS has fallen over time, a growing share of these early deaths has occurred after discharge. For example, from 1993–94 to 2005–06, as mean hospital LOS for HF fell from 8.6 to 6.4 days, mean in-hospital death rates dropped from 8.2% to 4.5%, while post-discharge death rates within 30 days of admission rose from 4.4% to 6.3% (11). Thus, in-hospital rates suggested that hospitals were improving dramatically, whereas from a patient’s perspective much less progress had been made.
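The arithmetic behind this example can be made explicit. The short sketch below assumes that the in-hospital and post-discharge rates cited from reference 11 share the same denominator (all admissions), so that they add up to the 30-day rate; under that assumption, 30-day mortality fell from 12.6% to 10.8%, a relative decline of about 14%, compared with about 45% for in-hospital mortality.

```python
# Worked arithmetic for the heart failure example (rates in %, from reference 11).
# Assumption: in-hospital and post-discharge rates share the same denominator
# (all admissions), so their sum is the 30-day mortality rate.
periods = {
    "1993-94": {"in_hospital": 8.2, "post_discharge": 4.4},
    "2005-06": {"in_hospital": 4.5, "post_discharge": 6.3},
}


def thirty_day(rates):
    """30-day mortality (%) as in-hospital plus post-discharge deaths."""
    return rates["in_hospital"] + rates["post_discharge"]


def relative_decline(before, after):
    """Relative decline (%) from `before` to `after`."""
    return 100.0 * (before - after) / before


early, late = periods["1993-94"], periods["2005-06"]
in_hosp_decline = relative_decline(early["in_hospital"], late["in_hospital"])
thirty_day_decline = relative_decline(thirty_day(early), thirty_day(late))

print(f"30-day mortality: {thirty_day(early):.1f}% -> {thirty_day(late):.1f}%")
print(f"Relative decline, in-hospital: {in_hosp_decline:.0f}%")
print(f"Relative decline, 30-day:      {thirty_day_decline:.0f}%")
```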

Although the brevity of the observation period is an important limitation of in-hospital measures, the primary concern is that their variable period of observation may distort quality assessment. The studies recommending against the use of in-hospital deaths as hospital performance measures are more than 15 years old, and changes in practice patterns and statistical methods since then may have either diminished or exacerbated this concern. As new policies are implemented to drive system innovation, a better understanding of the differences between in-hospital and 30-day hospital measures is needed to guide measurement choices and the interpretation of measure results. Accordingly, we used national data from the Centers for Medicare & Medicaid Services (CMS) to compare in-hospital risk-standardized mortality rates (RSMRs) with 30-day RSMRs for profiling the quality of care provided by U.S. hospitals for AMI, HF, and pneumonia.

METHODS

Study Cohort

We included admissions to non-federal acute care hospitals of Medicare patients aged 65 years and older who were discharged between January 1, 2004, and December 31, 2006, and who had a complete claims history in the 12 months before admission. We created three separate cohorts (AMI, HF, and pneumonia) based on the International Classification of Diseases, Ninth Revision, Clinical Modification (ICD-9-CM) principal discharge diagnosis codes used to define the cohorts for CMS’ publicly reported 30-day RSMRs for these conditions (12). We excluded patients who stayed in the hospital one day or less and were discharged alive, were discharged against medical advice, had used Medicare hospice services before admission, or had unclear mortality status (conflicting dates of death in hospital claims and enrollment sources). For HF and pneumonia patients with multiple hospitalizations during the study period, we randomly selected one admission per patient per year for inclusion. For patients transferred from one acute care hospital to another, we linked the hospitalizations into a single episode of care, included the first admission in the transfer sequence, and assigned the outcome to that admission. Finally, after applying the other exclusions, we limited each condition's sample to hospitals with at least 30 admissions for that condition over the 3-year period.
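A minimal sketch of the exclusion and sampling steps follows. The record layout (`Admission` and its fields), the reading of "one day or less," the use of the discharge year for per-year sampling, and the condition labels are illustrative assumptions rather than the CMS specification; linking transfer chains into single episodes is omitted.

```python
import random
from collections import defaultdict
from dataclasses import dataclass
from datetime import date


@dataclass
class Admission:
    # Hypothetical record layout; field names are illustrative, not CMS variable names.
    patient_id: str
    hospital_id: str
    admit_date: date
    discharge_date: date
    died_in_hospital: bool = False
    against_medical_advice: bool = False
    prior_hospice: bool = False
    conflicting_death_date: bool = False


def passes_exclusions(a: Admission) -> bool:
    """Apply the patient-level exclusions listed in the text."""
    # Assumption: "one day or less" means discharge_date - admit_date <= 1 day.
    short_live_stay = (not a.died_in_hospital) and (a.discharge_date - a.admit_date).days <= 1
    return not (short_live_stay or a.against_medical_advice
                or a.prior_hospice or a.conflicting_death_date)


def one_per_patient_year(admissions, rng):
    """Randomly keep one admission per patient per calendar year."""
    # Assumption: "year" is the calendar year of discharge.
    groups = defaultdict(list)
    for a in admissions:
        groups[(a.patient_id, a.discharge_date.year)].append(a)
    return [rng.choice(group) for group in groups.values()]


def build_cohort(admissions, condition, min_cases=30, seed=0):
    """Exclusions, then random sampling (HF and pneumonia only), then the hospital volume floor."""
    kept = [a for a in admissions if passes_exclusions(a)]
    if condition in ("HF", "pneumonia"):
        kept = one_per_patient_year(kept, random.Random(seed))
    volume = defaultdict(int)
    for a in kept:
        volume[a.hospital_id] += 1
    # The 30-case floor is applied after all other exclusions, as in the text.
    return [a for a in kept if volume[a.hospital_id] >= min_cases]
```

Applying the volume floor last matters: a hospital can fall below 30 cases only after its excluded and de-duplicated admissions are removed.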

Data Sources

Index admission and in-hospital comorbidity data were obtained from Medicare’s Standard Analytic File (SAF). Comorbidities were assessed using Part A inpatient and outpatient claims and Part B physician office claims in the 12 months before admission. Enrollment, hospice, and post-discharge mortality status were obtained from Medicare’s enrollment database.

Outcomes

The patient outcomes were in-hospital and 30-day all-cause mortality. In-hospital mortality was defined as occurring if the patient’s in-hospital record indicated a discharge status of dead regardless of cause and length of stay. Deaths occurring after patients were transferred were not counted as in-hospital deaths. Thirty-day mortality was defined as occurring if the patient died within 30 days of admission regardless of cause or venue.
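The two outcome definitions can be sketched as follows. The `discharge_status` value `"dead"` and the inclusive 30-day window are assumptions for illustration. The sketch makes the difference concrete: a patient who dies in the hospital on day 40 counts as an in-hospital death but not a 30-day death, while a patient who dies at home on day 20 counts only as a 30-day death.

```python
from datetime import date
from typing import Optional


def in_hospital_death(discharge_status: str) -> bool:
    """In-hospital death: the index hospital's discharge status is 'dead'.

    Deaths after transfer to another facility are not counted here.
    """
    return discharge_status == "dead"


def thirty_day_death(admit_date: date, death_date: Optional[date], window_days: int = 30) -> bool:
    """All-cause death in any setting within `window_days` of admission.

    Assumption: day 30 after admission is inclusive.
    """
    if death_date is None:
        return False
    return 0 <= (death_date - admit_date).days <= window_days
```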

In-hospital and 30-day Risk-Standardized Mortality Rates

We used methods endorsed by the National Quality Forum (NQF) and applied by CMS to estimate in-hospital and 30-day RSMRs for AMI, HF, and pneumonia (13, 14). We estimated hospital-level 30-day risk-standardized all-cause mortality rates (30-day RSMRs) for each condition using CMS’ hierarchical generalized linear models, which are described in detail elsewhere (13–18). Briefly, the models simultaneously use two levels (patient and hospital) to account for the variance in patient outcomes within and between hospitals. At the first level, the log-odds of mortality for a patient treated in a particular hospital is modeled as a function of age, gender, 21 to 29 clinical covariates (depending on the condition), and a hospital-specific intercept. At the second level, the hospital-specific intercepts are modeled as an overall mean plus a random error term. The inclusion of hospital-specific intercepts accommodates clustering of outcomes for patients treated within the same hospital (19). The second-level error term was assumed to be normally distributed with mean 0 and unknown variance.
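In notation, the two-level model described above can be written as follows. The symbols are chosen here for illustration: $Y_{ij}=1$ if patient $i$ at hospital $j$ died (in the hospital or within 30 days, depending on the model), $\mathbf{Z}_{ij}$ collects age, gender, and the condition-specific clinical covariates, $\alpha_j$ is the hospital-specific intercept, $\mu$ the overall mean, and $\tau^2$ the between-hospital variance.

```latex
\begin{aligned}
\operatorname{logit}\Pr(Y_{ij}=1 \mid \mathbf{Z}_{ij}) &= \alpha_j + \boldsymbol{\beta}^{\top}\mathbf{Z}_{ij}, \\
\alpha_j &= \mu + \omega_j, \qquad \omega_j \sim N(0,\tau^{2}).
\end{aligned}
```

The same structure is fit twice per condition; only the definition of $Y_{ij}$ changes.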

We fit the hierarchical models separately for each condition using in-hospital mortality as the outcome and then repeated the estimation using 30-day mortality.

We next calculated the risk-standardized mortality rates, defined as the ratio of the number of “predicted” to “expected” deaths, multiplied by the patient-level raw mortality rate for the dataset (expressed as a percent). The expected number of deaths for each hospital was estimated by applying the estimated regression coefficients to the patient characteristics of each of the hospital’s patients, adding the average of the hospital-specific intercepts, and after transformation, summing over all patients in the hospital to obtain the count. The predicted number of deaths was calculated by applying the estimated regression coefficients to the patient characteristics of each of the hospital’s patients, adding the hospital-specific intercept (representing baseline mortality risk at the specific hospital), and after transformation, summing over all patients in the hospital to get a predicted count.
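The predicted-to-expected calculation can be sketched in Python. The inputs (each patient's covariate contribution $\boldsymbol{\beta}^{\top}\mathbf{Z}$, the hospital's intercept, and the average intercept) are assumed to come from an already fitted model; the function names are hypothetical, and the sketch does not fit the hierarchical model itself.

```python
import math


def inv_logit(x: float) -> float:
    """Convert a log-odds value to a probability."""
    return 1.0 / (1.0 + math.exp(-x))


def rsmr(covariate_terms, hospital_intercept, mean_intercept, raw_rate_pct):
    """Risk-standardized mortality rate (%) for one hospital.

    covariate_terms: beta'Z for each of the hospital's patients (covariate part only).
    hospital_intercept: the hospital-specific intercept estimate.
    mean_intercept: the average of the hospital-specific intercepts.
    raw_rate_pct: patient-level crude mortality rate (%) for the whole dataset.
    """
    # "Predicted" deaths use the hospital's own intercept.
    predicted = sum(inv_logit(hospital_intercept + xb) for xb in covariate_terms)
    # "Expected" deaths use the average intercept, i.e., an average hospital.
    expected = sum(inv_logit(mean_intercept + xb) for xb in covariate_terms)
    return predicted / expected * raw_rate_pct


# Hypothetical beta'Z values for one hospital's patients.
patients = [-0.4, 0.3, 1.1]
print(rsmr(patients, hospital_intercept=-2.2, mean_intercept=-2.5, raw_rate_pct=16.1))
```

A hospital whose intercept exceeds the average has more predicted than expected deaths, so its RSMR lies above the dataset's crude rate; an average hospital's RSMR equals the crude rate.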

Statistical Analysis

For each hospital and condition, we calculated patient volume, hospital-level mean LOS (defined as the discharge date minus the admission date, plus 1 day), and the percentage of patients transferred out to another acute care hospital. For each hospital and condition, we also calculated the proportion of deaths within 30 days of admission that occurred in the hospital versus after discharge.
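A sketch of these hospital-level summaries, assuming a hypothetical per-admission record (`Stay`) for one hospital and condition. LOS follows the definition above; 30-day deaths not recorded as in-hospital deaths at the index hospital, including deaths after transfer, are counted as post-discharge deaths.

```python
from dataclasses import dataclass
from datetime import date
from statistics import mean


@dataclass
class Stay:
    # Hypothetical per-admission record for one hospital and condition.
    admit_date: date
    discharge_date: date
    transferred_out: bool
    died_in_hospital: bool
    died_within_30_days: bool


def length_of_stay(s: Stay) -> int:
    """LOS as defined in the text: discharge date minus admission date, plus 1 day."""
    return (s.discharge_date - s.admit_date).days + 1


def hospital_summary(stays):
    """Volume, mean LOS, transfer-out rate, and post-discharge share of 30-day deaths."""
    deaths_30 = [s for s in stays if s.died_within_30_days]
    post_discharge = [s for s in deaths_30 if not s.died_in_hospital]
    return {
        "volume": len(stays),
        "mean_los_days": mean(length_of_stay(s) for s in stays),
        "transfer_out_pct": 100.0 * sum(s.transferred_out for s in stays) / len(stays),
        "pct_30day_deaths_post_discharge": (
            100.0 * len(post_discharge) / len(deaths_30) if deaths_30 else None
        ),
    }
```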

We calculated the difference between each hospital's 30-day and in-hospital RSMRs (30-day RSMR – in-hospital RSMR) and summarized the empirical distribution of these differences. If the difference were the same at every hospital, the two measures would rank hospitals identically; a wide range of differences therefore indicates that quality assessments based on the in-hospital and 30-day measures diverge.
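A minimal sketch of the difference summary, assuming the two RSMR lists are aligned by hospital (the function name is hypothetical):

```python
from statistics import mean, stdev


def difference_summary(rsmr_30day, rsmr_in_hospital):
    """Summarize per-hospital differences (30-day RSMR minus in-hospital RSMR).

    Both inputs are RSMRs in percent, listed in the same hospital order.
    """
    if len(rsmr_30day) != len(rsmr_in_hospital):
        raise ValueError("RSMR lists must be aligned by hospital")
    diffs = [d30 - dih for d30, dih in zip(rsmr_30day, rsmr_in_hospital)]
    return {"mean": mean(diffs), "sd": stdev(diffs), "min": min(diffs), "max": max(diffs)}
```

The mean difference alone is uninformative about agreement: a constant shift (SD of zero) would leave hospitals' rank order unchanged, so it is the spread of the differences that signals divergent assessments.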

To compare how the two measures categorize hospital performance, we classified hospitals into three performance categories by their in-hospital and 30-day RSMRs, using the approach CMS employs for reporting 30-day RSMRs on the Hospital Compare website (20). Specifically, we used bootstrapping to construct an empirical 95% interval estimate for each RSMR. If a hospital’s interval estimate was entirely below the national mean mortality rate for the condition, we classified the hospital as having better-than-expected mortality; if the interval estimate was entirely above the national mean, we classified it as having worse-than-expected mortality; and if the interval estimate included the national mean, we classified it as “as expected.” Because the 30-day models are based on a standard follow-up period, we treated the 30-day classification as the gold standard and calculated the differences in classification between the 30-day and in-hospital measures, as well as the sensitivity and specificity of the in-hospital measures for identifying better (versus as expected or worse) and worse (versus as expected or better) hospitals.
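The classification rule and the sensitivity/specificity calculation can be sketched as follows. The bootstrap itself is not shown; the interval bounds are assumed to be given, and the function names are hypothetical. As a check, the sketch rebuilds the AMI cross-classification from Table 2a and reproduces the AMI values reported in the Results (38.7% and 98.3% for better hospitals; 61.7% and 96.8% for worse hospitals).

```python
from collections import Counter


def classify(lower, upper, national_mean):
    """Assign a performance category from a hospital's 95% interval estimate."""
    if upper < national_mean:
        return "better"
    if lower > national_mean:
        return "worse"
    return "as expected"


def sensitivity_specificity(pairs, category):
    """Sensitivity and specificity of the in-hospital classification for `category`,
    treating the 30-day classification as the reference standard.

    pairs: iterable of (in_hospital_class, thirty_day_class) tuples.
    """
    counts = Counter((in_hosp == category, ref == category) for in_hosp, ref in pairs)
    tp, fn = counts[(True, True)], counts[(False, True)]
    fp, tn = counts[(True, False)], counts[(False, False)]
    return tp / (tp + fn), tn / (tn + fp)


# Cross-classification counts from Table 2a (AMI): (in-hospital, 30-day) -> hospitals.
AMI_TABLE = {
    ("better", "better"): 48, ("better", "as expected"): 51, ("better", "worse"): 0,
    ("as expected", "better"): 76, ("as expected", "as expected"): 2780,
    ("as expected", "worse"): 31,
    ("worse", "better"): 0, ("worse", "as expected"): 99, ("worse", "worse"): 50,
}
ami_pairs = [cell for cell, n in AMI_TABLE.items() for _ in range(n)]

for category in ("better", "worse"):
    sens, spec = sensitivity_specificity(ami_pairs, category)
    print(f"{category}: sensitivity {100 * sens:.1f}%, specificity {100 * spec:.1f}%")
```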

To assess differences in how the in-hospital and 30-day measures characterize variation in hospital performance, we also quantified the between-hospital variation in in-hospital and 30-day rates. The between-hospital variation is the variation that remains after accounting for patient risk factors and number of cases; assuming all key risk factors are accounted for, this residual variation provides a quantitative measure of differences in quality (21). To characterize its clinical importance, we estimated the odds ratio of death for a patient treated at a hospital one standard deviation above the national mortality rate relative to a hospital one standard deviation below it. If there were no differences in hospital quality after adjusting for patient factors and number of cases, this odds ratio would be 1. We computed these odds ratios for each RSMR and condition and compared their magnitudes between the in-hospital and 30-day models.
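One common way to express between-hospital variation as an odds ratio is sketched below. It assumes, as in the model above, that hospital intercepts are normal with standard deviation $\tau$ on the log-odds scale; hospitals one SD above and one SD below the mean then differ by $2\tau$ in log-odds, giving an odds ratio of $e^{2\tau}$. The text does not state this formula explicitly, so treat it as an assumption; the function names are hypothetical.

```python
import math


def between_hospital_odds_ratio(tau: float) -> float:
    """Odds ratio of death at a hospital one SD above vs. one SD below the mean,
    given the between-hospital SD `tau` of the random intercepts (log-odds scale)."""
    return math.exp(2.0 * tau)


def tau_from_odds_ratio(odds_ratio: float) -> float:
    """Invert the relationship to recover the implied between-hospital SD."""
    return math.log(odds_ratio) / 2.0


# If Table 3 were computed this way, the pneumonia odds ratios would imply
# tau of roughly 0.37 (in-hospital) and 0.26 (30-day).
for label, odds_ratio in (("in-hospital", 2.11), ("30-day", 1.68)):
    print(f"pneumonia {label}: implied tau = {tau_from_odds_ratio(odds_ratio):.3f}")
```

With no between-hospital variation ($\tau = 0$) the odds ratio is exactly 1, matching the null case described above.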

We also examined the association of LOS with in-hospital RSMRs. For each condition, we calculated each hospital's mean LOS and fit a linear regression model, weighted by hospital volume, with in-hospital RSMR as the outcome and mean LOS as the only predictor. Similarly, we fit a regression model of in-hospital RSMR as a function of hospitals’ rates of transferring patients with the relevant condition to another acute care facility (transfer-out rates). Because pneumonia and HF have low mean transfer-out rates (<2.0%), we fit this model only for AMI.
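The volume-weighted regression with a single predictor has a closed form, sketched below without external libraries. Here `x` would be hospital mean LOS, `y` the in-hospital RSMR, and `w` hospital volume; the function name is hypothetical, and standard errors and p-values (which the study obtained from SAS) are not computed.

```python
def weighted_linear_fit(x, y, w):
    """Weighted least squares for y = intercept + slope * x. Returns (intercept, slope)."""
    sw = sum(w)
    # Weighted means of the predictor and the outcome.
    xbar = sum(wi * xi for wi, xi in zip(w, x)) / sw
    ybar = sum(wi * yi for wi, yi in zip(w, y)) / sw
    sxx = sum(wi * (xi - xbar) ** 2 for wi, xi in zip(w, x))
    if sxx == 0:
        raise ValueError("predictor has no variation")
    sxy = sum(wi * (xi - xbar) * (yi - ybar) for wi, xi, yi in zip(w, x, y))
    slope = sxy / sxx
    return ybar - slope * xbar, slope


# Hypothetical hospitals: mean LOS (days), in-hospital RSMR (%), volume.
intercept, slope = weighted_linear_fit([5.0, 7.0, 9.0], [4.5, 5.5, 6.5], [100, 300, 50])
print(f"RSMR = {intercept:.2f} + {slope:.2f} x LOS")
```

Weighting by volume gives large hospitals, whose RSMRs are estimated more precisely, more influence on the fitted slope.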

All statistical tests were 2-sided, with a significance level of 0.05. All analyses were conducted using SAS version 9.2 (SAS Institute Inc., Cary, North Carolina), and hierarchical models were fitted using the GLIMMIX procedure.

The Yale University Institutional Review Board approved this study.

Role of the Funding Source

The analyses upon which this publication is based were performed under Contract Number HHSM-500-2008-0025I Task Order T0001, entitled “Measure & Instrument Development and Support (MIDS)-Development and Re-evaluation of the CMS Hospital Outcomes and Efficiency Measures,” funded by the Centers for Medicare & Medicaid Services, an agency of the U.S. Department of Health and Human Services. The Centers for Medicare & Medicaid Services reviewed and approved the use of its data for this work and approved submission of the manuscript. The content of this publication does not necessarily reflect the views or policies of the Department of Health and Human Services, nor does mention of trade names, commercial products, or organizations imply endorsement by the U.S. Government. The authors assume full responsibility for the accuracy and completeness of the ideas presented. In addition, the project was supported by Award Number U01HL105270 from the National Heart, Lung, and Blood Institute. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Heart, Lung, and Blood Institute or the National Institutes of Health.

RESULTS

Hospital Sample

There were 718,508 AMI admissions to 3,135 hospitals; 1,315,845 HF admissions to 4,209 hospitals; and 1,415,237 pneumonia admissions to 4,498 hospitals during the 3-year study period (Table 1). Hospital-level mean patient LOS varied across hospitals for each condition, ranging (min–max) from 2.3–13.7 days for AMI, 3.5–11.9 days for HF, and 3.8–14.8 days for pneumonia (Table 1; Figure 1a–c). The percentage of patients transferred to another acute care facility was highest for AMI and also varied widely across hospitals; mean hospital transfer-out rates (range) for AMI, HF, and pneumonia were 10.4% (0.0–80.6), 1.3% (0.0–19.4), and 0.7% (0.0–52.5), respectively (Table 1). For all conditions, the proportion of deaths within 30 days of admission that occurred after discharge varied by hospital; the mean hospital proportions were 34.3% (interquartile range [IQR], 25.7–41.7) for AMI, 54.9% (IQR, 47.7–63.3) for HF, and 50.3% (IQR, 41.9–58.6) for pneumonia (Table 1).

Table 1.

Hospital Sample and Results

| | AMI | HF | Pneumonia |
|---|---|---|---|
| Number of hospitals | 3,135 | 4,209 | 4,498 |
| Total admissions (2004–2006) | 718,508 | 1,315,845 | 1,415,237 |
| Volume (admissions per hospital) | | | |
| Mean (SD) | 229.2 (242.1) | 312.6 (318.0) | 314.6 (276.3) |
| Median | 147 | 204 | 233 |
| Range | 30–2,291 | 30–3,669 | 30–3,913 |
| Hospital transfer-out rate (%) | | | |
| Mean (SD) | 10.4 (14.6) | 1.3 (1.7) | 0.7 (1.3) |
| Range | 0–80.6 | 0–19.4 | 0–52.5 |
| LOS (days) | | | |
| Mean (SD) | 7.1 (1.5) | 6.8 (1.1) | 7.1 (1.2) |
| Range | 2.3–13.7 | 3.5–11.9 | 3.8–14.8 |
| In-hospital RSMR (%) | | | |
| Mean (SD) | 10.8 (1.8) | 5.2 (1.2) | 6.4 (1.9) |
| Range | 6.5–19.3 | 2.4–12.3 | 2.5–23.1 |
| 30-day RSMR (%) | | | |
| Mean (SD) | 16.1 (1.8) | 11.2 (1.7) | 12.2 (2.1) |
| Range | 10.8–23.5 | 5.8–19.7 | 6.9–28.4 |
| Percentage of 30-day deaths occurring after discharge (%) | | | |
| Mean (SD) | 34.2 (12.4) | 54.9 (13.1) | 50.3 (13.6) |
| IQR | 25.7–41.7 | 47.4–63.3 | 41.9–58.6 |
| 30-day RSMR (%) − in-hospital RSMR (%) | | | |
| Mean (SD) | 5.3 (1.3) | 6.0 (1.3) | 5.7 (1.4) |
| Range | 1.3–11.0 | 1.4–11.2 | −0.4 to 12.1 |

Abbreviations: AMI, acute myocardial infarction; HF, heart failure; IQR, interquartile range; LOS, length of stay; RSMR, risk-standardized mortality rate; SD, standard deviation.

Figure 1.

Figure 1a: Acute Myocardial Infarction Hospital Mean Length of Stay

Figure 1b: Heart Failure Hospital Mean Length of Stay

Figure 1c: Pneumonia Hospital Mean Length of Stay

30-Day and In-Hospital RSMR Differences

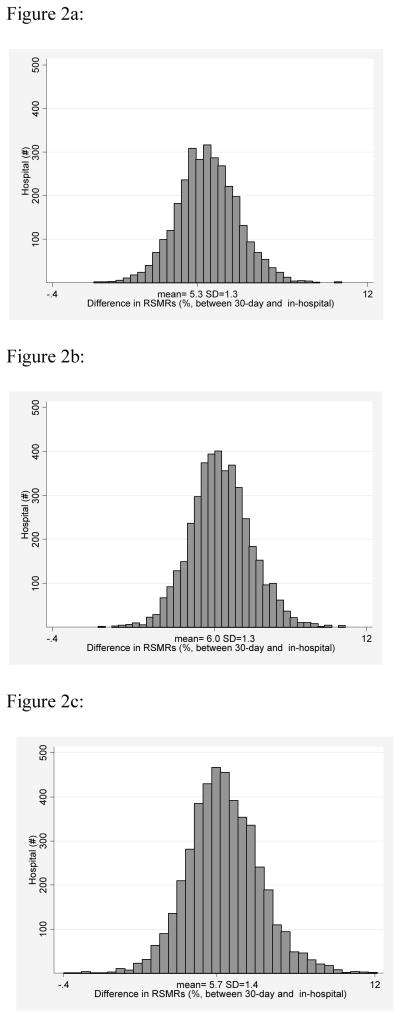

The mean in-hospital RSMR for AMI was 10.8% (SD=1.8), whereas the mean 30-day RSMR was 16.1% (SD=1.8). Similarly, the mean in-hospital and 30-day RSMRs were 5.2% (SD=1.2) and 11.2% (SD=1.7) for HF, and 6.4% (SD=1.9) and 12.2% (SD=2.1) for pneumonia. The mean RSMR differences (30-day RSMR minus in-hospital RSMR) were 5.3% (SD=1.3) for AMI, 6.0% (SD=1.3) for HF, and 5.7% (SD=1.4) for pneumonia, with a wide range across hospitals for all 3 conditions (min–max: 1.3%–11.0% for AMI; 1.4%–11.2% for HF; −0.4% to 12.1% for pneumonia) (Figure 2a–c; Table 1).

Figure 2.

Figure 2a: Distribution of (30-Day) – (In-Hospital) Risk-Standardized Mortality Rates for Acute Myocardial Infarction

Figure 2b: Distribution of (30-Day) – (In-Hospital) Risk-Standardized Mortality Rates for Heart Failure

Figure 2c: Distribution of (30-Day) – (In-Hospital) Risk-Standardized Mortality Rates for Pneumonia

Hospital Performance Categories

Classifying hospital performance using the in-hospital and 30-day models resulted in different performance assessments for many hospitals (Tables 2a–c). The in-hospital models produced different performance classifications for 257 hospitals (8.2%) for AMI, 456 (10.8%) for HF, and 662 (14.7%) for pneumonia. For all conditions, more hospitals shifted to less favorable performance categories than to more favorable categories when the in-hospital measure was used instead of the 30-day measure.

Table 2.

Hospital classification comparison*

Table 2a. Acute Myocardial Infarction Hospital Classifications

| In-hospital classification | 30-day Better, n (%) | 30-day As Expected, n (%) | 30-day Worse, n (%) | Total, n (%) |
|---|---|---|---|---|
| Better | 48 (1.5%) | 51 (1.6%) | 0 (0.0%) | 99 (3.2%) |
| As Expected | 76 (2.4%) | 2,780 (88.7%) | 31 (1.0%) | 2,887 (92.1%) |
| Worse | 0 (0.0%) | 99 (3.2%) | 50 (1.6%) | 149 (4.8%) |
| Total | 124 (4.0%) | 2,930 (93.5%) | 81 (2.6%) | 3,135 (100.0%) |

Table 2b. Heart Failure Hospital Classifications

| In-hospital classification | 30-day Better, n (%) | 30-day As Expected, n (%) | 30-day Worse, n (%) | Total, n (%) |
|---|---|---|---|---|
| Better | 78 (1.9%) | 59 (1.4%) | 0 (0.0%) | 137 (3.3%) |
| As Expected | 145 (3.4%) | 3,573 (84.9%) | 100 (2.4%) | 3,818 (90.7%) |
| Worse | 4 (0.1%) | 148 (3.5%) | 102 (2.4%) | 254 (6.0%) |
| Total | 227 (5.4%) | 3,780 (89.8%) | 202 (4.8%) | 4,209 (100.0%) |

Table 2c. Pneumonia Hospital Classifications

| In-hospital classification | 30-day Better, n (%) | 30-day As Expected, n (%) | 30-day Worse, n (%) | Total, n (%) |
|---|---|---|---|---|
| Better | 136 (3.0%) | 107 (2.4%) | 0 (0.0%) | 243 (5.4%) |
| As Expected | 178 (4.0%) | 3,457 (76.9%) | 122 (2.7%) | 3,757 (83.5%) |
| Worse | 2 (0.0%) | 253 (5.6%) | 243 (5.4%) | 498 (11.1%) |
| Total | 316 (7.0%) | 3,817 (84.9%) | 365 (8.1%) | 4,498 (100.0%) |

*Approach to classification: Better, 95% interval estimate was entirely below the national average mortality rate; As Expected, 95% interval included the national average; Worse, 95% interval was entirely above the national average.

The sensitivity and specificity, respectively, of the in-hospital measure for identifying better hospitals were 38.7% and 98.3% for AMI; 34.4% and 98.5% for HF; and 43.0% and 97.3% for pneumonia. The sensitivity and specificity, respectively, for identifying higher-than-expected mortality (worse) hospitals were 61.7% and 96.8% for AMI; 50.5% and 96.2% for HF, and 66.6% and 93.8% for pneumonia.

Measured Hospital Variation

The in-hospital models showed greater between-hospital variation in mortality than the 30-day models for all conditions. As a result, the estimated odds ratios of death for a patient treated at a hospital one standard deviation below average quality relative to a hospital one standard deviation above average quality were larger for the in-hospital measures (Table 3). For example, for pneumonia the odds ratio was 2.1 for in-hospital death and 1.7 for 30-day death.

Table 3.

Estimated Mortality Odds Ratios (95% Confidence Intervals) for a Hospital One Standard Deviation Above vs. One Standard Deviation Below the National Mean Mortality

| Condition | In-Hospital Models | 30-Day Models |
|---|---|---|
| Acute Myocardial Infarction | 1.66 (1.62–1.70) | 1.48 (1.45–1.51) |
| Heart Failure | 1.85 (1.80–1.90) | 1.58 (1.56–1.61) |
| Pneumonia | 2.11 (2.06–2.17) | 1.68 (1.67–1.72) |

Association of LOS and Transfer-Out Rate on RSMR

For the in-hospital measures, hospital mean LOS was positively correlated with RSMR for all three conditions, and the association was strongest for pneumonia (Table 4). Each 1-day increase in hospital mean LOS was associated with an absolute increase in in-hospital RSMR of 0.33 percentage points for AMI, 0.30 for HF, and 0.62 for pneumonia (p < 0.0001 for all conditions). For in-hospital AMI RSMR, each 1-percentage-point increase in the hospital transfer-out rate was associated with a 0.02-percentage-point decrease in RSMR (Table 4).

Table 4.

Regression of LOS and Transfer Rate on In-Hospital RSMRs*

| | AMI Coefficient (SE) | HF Coefficient (SE) | Pneumonia Coefficient (SE) |
|---|---|---|---|
| LOS vs. in-hospital RSMR (%) | | | |
| Intercept | 8.77 (0.01) | 3.48 (0.01) | 2.62 (0.01) |
| Hospital mean LOS (days) | 0.33 (0.00) | 0.30 (0.00) | 0.62 (0.00) |
| Transfer-out rate vs. in-hospital RSMR (%) | | | |
| Intercept | 0.11 (0.00) | | |
| Transfer-out rate | −0.02 (0.00) | | |

*All p-values < 0.0001.
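To make the size of these associations concrete, the sketch below plugs the Table 4 LOS-model coefficients into the fitted lines for two illustrative hospital mean LOS values, 5 and 9 days (both within the observed range for every condition). The chosen LOS values and function name are ours; these are fitted associations, not causal effects.

```python
# Intercepts and slopes copied from Table 4 (in-hospital RSMR, %, regressed on hospital mean LOS).
LOS_MODEL = {"AMI": (8.77, 0.33), "HF": (3.48, 0.30), "Pneumonia": (2.62, 0.62)}


def fitted_in_hospital_rsmr(condition: str, mean_los_days: float) -> float:
    """Fitted in-hospital RSMR (%) at a given hospital mean LOS."""
    intercept, slope = LOS_MODEL[condition]
    return intercept + slope * mean_los_days


for condition in LOS_MODEL:
    short_stay = fitted_in_hospital_rsmr(condition, 5.0)
    long_stay = fitted_in_hospital_rsmr(condition, 9.0)
    print(f"{condition}: {short_stay:.2f}% at 5 days vs. {long_stay:.2f}% at 9 days "
          f"({long_stay - short_stay:+.2f} percentage points)")
```

For pneumonia, a 4-day difference in mean LOS corresponds to roughly 2.5 percentage points of in-hospital RSMR, a sizable gap relative to the condition's mean in-hospital RSMR of 6.4%.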

DISCUSSION

The use of in-hospital mortality, a measure that observes patients for variable lengths of time depending on their LOS and transfer status, results in a different assessment of hospital quality than an assessment that observes patients for a standardized period of 30 days. We found that the differences between in-hospital and 30-day rates varied widely across hospitals, indicating that in-hospital measures would favor some hospitals over others. Consistent with that finding, many hospitals were assigned a different performance category by the in-hospital measure than by the 30-day measure. For every condition, the in-hospital measures identified fewer than half of the hospitals classified as better by the 30-day measures (sensitivity 34.4%–43.0%) but more than half of those classified as worse (sensitivity 50.5%–66.6%).

In-hospital mortality measures were associated with more between-hospital variation than 30-day measures. This result is expected: differences in LOS and transfer practices across hospitals produce differences in how long patients are observed for death, and this variation in the observation period adds to the variation in observed in-hospital mortality rates. By including variability attributable to non-constant follow-up periods, the in-hospital models overstate the variation attributable to quality differences.

Because patients at hospitals with longer lengths of stay are, on average, observed for longer periods, hospital mean LOS was positively associated with in-hospital RSMRs. Hospital transfer-out rates for AMI were negatively associated with in-hospital RSMRs. These findings support the conclusion that, compared with measures that use a standardized 30-day follow-up period, in-hospital rates favor hospitals with shorter LOS and higher transfer-out rates.
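A toy model illustrates the mechanism. It is not the study's analysis and rests on simplifying assumptions: every hospital has the same constant daily risk of death (so true quality is identical), that risk is unchanged by discharge, there are no transfers, and every patient at a hospital stays exactly that hospital's LOS. The daily risk value is hypothetical.

```python
DAILY_RISK = 0.005  # hypothetical constant daily probability of death, identical at every hospital


def cumulative_mortality(days: int, daily_risk: float = DAILY_RISK) -> float:
    """Probability of dying within `days` under a constant daily risk."""
    return 1.0 - (1.0 - daily_risk) ** days


for los in (4, 7, 10):
    in_hosp = 100 * cumulative_mortality(los)  # observation ends at discharge
    thirty = 100 * cumulative_mortality(30)    # fixed 30-day window for everyone
    print(f"LOS {los:2d} days: in-hospital {in_hosp:.1f}%, 30-day {thirty:.1f}%")
```

Even with identical quality, in-hospital mortality rises with LOS while 30-day mortality stays constant, which is the pattern of bias described above.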

Policy and Research Implications

Our findings are consistent with and extend prior studies (5–7), and they have direct relevance for current policy. The NQF has endorsed both types of measures as appropriate for public reporting of hospital quality, facilitating their use (22). Our work suggests a need to reassess the use of in-hospital measures in national profiling efforts, given the differences in hospital classifications between the CMS 30-day RSMRs and in-hospital RSMRs calculated with the same methods. In-hospital measure results reflect variation in the period of time patients are observed for the outcome as well as variation in quality. As a result, they favor hospitals with shorter LOS and early transfers and overstate variation in quality. Outcome measures designed to assess quality should not be biased by these differences.

The use of mortality measures (and outcome measures more generally) with standardized observation periods will become more important as we seek to assess health care innovations promoted by the Affordable Care Act. Although the associations of hospital LOS and transfer-out rates with in-hospital RSMRs in our study were modest, our results argue against using in-hospital measures to judge the success of these innovations. As accountable care organizations and other care delivery strategies evolve, LOS and referral patterns across acute care hospitals are likely to diverge further, and measures used to assess care quality should be neutral to these factors.

Advantages and Limitations

Our study has several advantages. We used a recent national sample of admissions that reflects the diversity of hospitals likely to be found in any large hospital profiling effort. We applied NQF-endorsed hierarchical models that account for clustering, and we used CMS’s current approach to national performance classification, demonstrating the relevance of our findings to current practice. A limitation of our study is the use of Medicare claims data for risk adjustment. However, the 30-day models for all three conditions have been shown to produce hospital rankings that are highly correlated with those based on data sources with richer clinical information (13, 14, 18).

Conclusions

Using in-hospital mortality measures to profile the quality of care for three common medical conditions across U.S. hospitals leads to substantial differences in hospital performance classifications compared with 30-day mortality measures. The greater variation and the changes in classification seen with the in-hospital measures reflect, in part, variation in how long patients were observed for the outcome. In-hospital measures favor hospitals with shorter mean LOS and higher transfer-out rates. As the nation increases its use of outcome measures to assess and reimburse for quality and to evaluate system innovations, outcome measures with standardized follow-up periods that are unaffected by variation in LOS or transfer patterns should be considered in preference to in-hospital measures. Building national databases of key outcomes such as mortality that can be readily linked to patient data would make measures with standardized outcome periods more feasible and timely.

Acknowledgments

We thank Shantal Savage for her assistance with the preparation of this manuscript.

References

1. Piantadosi S. Clinical trials: a methodologic perspective. New York: John Wiley and Sons, Inc; 1997.
2. Agency for Healthcare Research and Quality. Inpatient Quality Indicators Overview - AHRQ Quality Indicators. 2006.
3. Agency for Healthcare Research and Quality. Health Care Report Card Compendium.
4. Baker DW, Einstadter D, Thomas CL, Husak SS, Gordon NH, Cebul RD. Mortality trends during a program that publicly reported hospital performance. Med Care. 2002;40(10):879–90. doi: 10.1097/00005650-200210000-00006.
5. Jencks SF, Williams DK, Kay TL. Assessing hospital-associated deaths from discharge data. The role of length of stay and comorbidities. JAMA. 1988;260(15):2240–6.
6. Rosenthal GE, Baker DW, Norris DG, Way LE, Harper DL, Snow RJ. Relationships between in-hospital and 30-day standardized hospital mortality: implications for profiling hospitals. Health Serv Res. 2000;34(7):1449–68.
7. Chassin MR, Park RE, Lohr KN, Keesey J, Brook RH. Differences among hospitals in Medicare patient mortality. Health Serv Res. 1989;24(1):1–31.
8. Centers for Medicare and Medicaid Services. Measure Management System Blueprint. Version 7.0.
9. Krumholz HM, Brindis RG, Brush JE, Cohen DJ, Epstein AJ, Furie K, et al. Standards for statistical models used for public reporting of health outcomes: an American Heart Association Scientific Statement from the Quality of Care and Outcomes Research Interdisciplinary Writing Group: cosponsored by the Council on Epidemiology and Prevention and the Stroke Council. Endorsed by the American College of Cardiology Foundation. Circulation. 2006;113(3):456–62. doi: 10.1161/CIRCULATIONAHA.105.170769.
10. Medicare Program; Hospital Inpatient Prospective Payment Systems for Acute Care Hospitals and the Long Term Care Hospital Prospective Payment System Changes and FY2011 Rates; Provider Agreements and Supplier Approvals; and Hospital Conditions of Participation for Rehabilitation and Respiratory Care Services; Medicaid Program: Accreditation for Providers of Inpatient Psychiatric Services; Final Rule. Federal Register. 2010;75(157):50188.
11. Bueno H, Ross JS, Wang Y, Chen J, Vidan MT, Normand SL, et al. Trends in length of stay and short-term outcomes among Medicare patients hospitalized for heart failure, 1993–2006. JAMA. 2010;303(21):2141–7. doi: 10.1001/jama.2010.748.
12. Grosso LM, Schreiner GC, Wang Y, Grady JN, Wang Y, Duffy CO, et al. Measures Maintenance Technical Report: Acute Myocardial Infarction, Heart Failure, and Pneumonia 30-Day Risk-Standardized Mortality Measures. 2009:1–47. Available at: http://www.qualitynet.org/dcs/BlobServer?blobkey=id&blobnocache=true&blobwhere=1228881531318&blobheader=multipart%2Foctet-stream&blobheadername1=Content-Disposition&blobheadervalue1=attachment%3Bfilename%3DMortMeasMainTechRpt_092809.pdf&blobcol=urldata&blobtable=MungoBlobs. Accessed September 14, 2011.
13. Krumholz HM, Wang Y, Mattera JA, Wang Y, Han LF, Ingber MJ, et al. An administrative claims model suitable for profiling hospital performance based on 30-day mortality rates among patients with heart failure. Circulation. 2006;113(13):1693–701. doi: 10.1161/CIRCULATIONAHA.105.611194.
14. Krumholz HM, Wang Y, Mattera JA, Wang Y, Han LF, Ingber MJ, et al. An administrative claims model suitable for profiling hospital performance based on 30-day mortality rates among patients with an acute myocardial infarction. Circulation. 2006;113(13):1683–92. doi: 10.1161/CIRCULATIONAHA.105.611186.
15. Krumholz HM, Normand S-LT, Bratzler DW, Mattera JA, Rich AS, Wang Y, et al. Risk-Adjustment Methodology for Hospital Monitoring/Surveillance and Public Reporting Supplement #1: 30-Day Mortality Model for Pneumonia. 2006:1–48. Available at: http://www.qualitynet.org/dcs/BlobServer?blobkey=id&blobnocache=true&blobwhere=1228861744769&blobheader=multipart%2Foctet-stream&blobheadername1=Content-Disposition&blobheadervalue1=attachment%3Bfilename%3DYaleCMS_PN_Report%2C0.pdf&blobcol=urldata&blobtable=MungoBlobs. Accessed September 14, 2011.
16. Bhat KR, Drye EE, Krumholz HM, Normand S-LT, Schreiner GC, Wang Y, et al. Acute Myocardial Infarction, Heart Failure, and Pneumonia Mortality Measures Maintenance Technical Report. 2008:1–80. Available at: http://www.qualitynet.org/dcs/BlobServer?blobkey=id&blobnocache=true&blobwhere=1228873653578&blobheader=multipart%2Foctet-stream&blobheadername1=Content-Disposition&blobheadervalue1=attachment%3Bfilename%3DMortMeasMaint_TechnicalReport_March+31+08_FINAL.pdf&blobcol=urldata&blobtable=MungoBlobs. Accessed September 14, 2011.
17. Krumholz HM, Normand S-LT, Galusha DH, Mattera JA, Rich AS, Wang Y, et al. Risk-Adjustment Models for AMI and HF 30-Day Mortality. 2007:1–118. Available at: http://www.qualitynet.org/dcs/BlobServer?blobkey=id&blobnocache=true&blobwhere=1228861777994&blobheader=multipart%2Foctet-stream&blobheadername1=Content-Disposition&blobheadervalue1=attachment%3Bfilename%3DYale_AMI-HF_Report_7-13-05%2C0.pdf&blobcol=urldata&blobtable=MungoBlobs. Accessed September 14, 2011.
18. Lindenauer PK, Bernheim SM, Grady JN, Lin Z, Wang Y, Merrill AR, et al. The performance of US hospitals as reflected in risk-standardized 30-day mortality and readmission rates for Medicare beneficiaries with pneumonia. J Hosp Med. 2010;5(6):E12–8. doi: 10.1002/jhm.822.
19. Normand S, Shahian DM. Statistical and clinical aspects of hospital outcomes profiling. Statistical Science. 2007;22(2):206–29. (see p. 214)
20. CMS. Hospital Compare - A quality tool for adults, including people with Medicare. http://www.hospitalcompare.hhs.gov.
21. Iezzoni LI. Risk adjustment for measuring health care outcomes. 3rd ed. Chicago: Health Administration Press; 2003.
22. National Quality Forum. NQF Endorsed Standards. 2010 Jan 1. http://www.qualityforum.org/Measures_List.aspx.