Abstract

Two studies examined response to varying amounts of time in reading intervention for two cohorts of first-grade students demonstrating low levels of reading after previous intervention. Students were assigned to one of three groups that received (a) a single dose of intervention, (b) a double dose of intervention, or (c) no intervention. Examination of individual student response to intervention indicated that more students in the treatment groups demonstrated accelerated learning over time than students in the comparison condition. Students’ responses to the single-dose and double-dose interventions were similar over time. Students in all conditions demonstrated particular difficulties with gains in reading fluency. Implications for future research and practice within response to intervention models are provided.

Keywords: learning disabilities, response to intervention, reading intervention

In the past 30 years, reading intervention research has demonstrated repeatedly that when students at risk for reading difficulties are identified early and provided with appropriate interventions, many students acquire the necessary skills to become successful readers (Foorman, Francis, Fletcher, Schatschneider, & Mehta, 1998; Torgesen et al., 1999; Vellutino et al., 1996). Research examining effective reading instruction for beginning readers is abundant. Nonetheless, few areas of instruction in education have been as controversial over time as reading instruction. Many have viewed the lack of consensus concerning reading instruction as restricting advances in teacher preparation and, ultimately, the quality of reading instruction received by schoolchildren. As a result, Congress mandated a synthesis of the research on reading instruction to summarize findings and influence practice. The outcome was the National Reading Panel (NRP; 2000) report synthesizing the converging evidence of effective instruction in teaching beginning readers. The report identified five critical components of reading instruction necessary for young readers to successfully gain reading skill and reach the ultimate goal of reading fluently and comprehending text: (a) phonological awareness, (b) phonics, (c) fluency, (d) vocabulary, and (e) comprehension. Several other summaries of research on effective reading instruction and effective intervention for students with reading difficulties or disabilities concur: explicit instruction in the components of reading, including word decoding, fluency, and comprehension, may be necessary for these students to achieve success in reading (Biancarosa & Snow, 2004; Donovan & Cross, 2002; NRP, 2000; Rand Reading Study Group, 2002; Snow, Burns, & Griffith, 1998; Swanson, 1999; Vaughn, Gersten, & Chard, 2000).

Using this body of knowledge to design effective instruction for students in Grades K–3 may help to reduce the incidence of reading difficulties (Torgesen, 2000). In fact, instruction for elementary students may be improving; the most recent National Assessment of Educational Progress report suggests that reading scores for fourth-grade students have increased, with overall scores higher than in any previous assessment year (National Center for Education Statistics, 2005).

Yet despite the wealth of current knowledge in the area of beginning reading instruction, nearly every research study conducted has demonstrated that some students with reading difficulties continue to struggle, even after receiving effective and intensive interventions that have resulted in overall gains for the majority of students. These students are sometimes referred to as “nonresponders” or “treatment resisters.” In recent years, it has become increasingly common for authors to report the number or percentage of nonresponders to the reported interventions. In a synthesis of 23 studies, the range of students not responding to reading interventions varied from 8% to 80% (Al Otaiba & Fuchs, 2002). In general, profiles of these students indicate deficits in phonological processing and rapid naming ability (Al Otaiba & Fuchs, 2002; Torgesen, Wagner, & Rashotte, 1997; Wolf & Bowers, 1999), though there is evidence these variables actually may provide low predictive value and may be codependent (Hammill, 2004; Swanson, Trainin, Necoechea, & Hammill, 2003). Not surprisingly, studies implementing explicit instructional methods yielded stronger outcomes and resulted in fewer students identified as not responding. It has been estimated that 2% to 6% of students continue to struggle with reading, even when generally effective reading interventions are implemented (Torgesen, 2000). Students demonstrating these persistent difficulties in learning to read may require a different amount or type of intervention and likely require special education.

The recent reauthorization of the Individuals With Disabilities Education Act (2004) included examination of the extent to which students respond to intervention as one option for identifying students with learning disabilities. The response-to-intervention (RTI) approach identifies students with learning disabilities in reading by examining their response to previous reading instruction. Students who have received generally effective reading instruction and intervention but continue to make insufficient progress may be referred for special education. The rationale is that documented insufficient progress indicates that more specialized instruction or special education is warranted for these students (Barnett, Daly, Jones, & Lentz, 2004). RTI holds promise for providing early intervention and more appropriate identification of students with reading difficulties and learning disabilities.

As RTI is implemented, examination of effective instructional techniques for students demonstrating minimal response to effective interventions becomes paramount. Although we know much about early intervention for many students at risk for reading difficulties, the question remains: What instruction is needed to assist students who have demonstrated low response to a typically effective intervention? In this study, we sought to examine student response to varying amounts of reading intervention for students who had demonstrated low levels of reading after receiving previous intervention.

Interventions for Low Responders

Several studies have taken a preliminary look at the effects of additional interventions for low responders (Berninger et al., 2002; McMaster, Fuchs, Fuchs, & Compton, 2005; Vadasy, Sanders, Peyton, & Jenkins, 2002; Vellutino et al., 1996). Researchers report that student response to the additional interventions has been mixed. Berninger et al. (2002) provided a one-on-one intervention to second-grade students who were very low responders to an intervention provided in first grade. The authors reported that 9 of the 48 students did not demonstrate growth on measures of word reading. Vadasy et al. (2002) also provided continued intervention for students who were low responders. Overall, results indicated that student response was not significantly improved after the additional intervention. The only exception was word identification, where 88% of the students did demonstrate growth.

McMaster et al. (2005) and Vellutino et al. (1996) also reported few significant gains from additional intervention for students whose initial response to intervention was low. McMaster et al. randomly assigned low responders to one of three groups for additional intervention: continued peer-assisted learning strategies (PALS), modified PALS, or one-on-one tutoring. Although no statistically significant differences were found among the three treatments, approximately 80% of the students in PALS and modified PALS remained low responders (performance level and slope more than .50 SD below those of average students) after the additional 13 weeks of intervention, compared with 50% of students in the tutoring condition.

Vellutino et al. (1996) used student progress in an initial intervention to identify students making very good growth, good growth, low growth, and very low growth (study results also presented in Vellutino & Scanlon, 2002). First-grade students demonstrating initial low response (below 40th percentile) continued with an additional 8 to 10 weeks of intervention in second grade. In general, the growth rates of the low-responding students receiving the additional intervention were the same as the responders (no longer receiving intervention) during the 2nd year of the study; however, the identified growth groups remained distinct on outcome measures after the second intervention. Although many students demonstrated continued very low response after the additional 8 to 10 weeks of intervention, the authors report that several students did reach average levels following the continued intervention.

One difficulty in comparing student response across each of the studies described above is the variation in the identification of students as low responders and their resulting success in additional interventions. However, it is clear from the results that there are some students who demonstrated persistent difficulties in learning to read and that these difficulties were not easily remedied through additional weeks in intervention. In addition to providing more weeks of intervention, two of the studies decreased group size in the second intervention (Berninger et al., 2002; McMaster et al., 2005). Three of the studies also changed instruction in the second intervention (Berninger et al., 2002; McMaster et al., 2005; Vadasy et al., 2002). These adaptations may have provided more intense intervention for the students demonstrating previous low response. Indeed, Torgesen (2000) suggested that students not responding to generally effective interventions may need more intensive intervention. In an effort to more directly examine this hypothesis, a few researchers have investigated reading outcomes for students with reading difficulties when varying levels of intervention are provided. In these studies, intensity has been defined as decreasing group size for instruction and/or increasing the amount of time in instruction.

Increasing Intervention Intensity

One study of group size assigned second-grade students at risk for reading problems to various group sizes to determine effects on reading (Vaughn, Linan-Thompson, Kouzekanani, et al., 2003). Students received the same intervention and were randomly assigned to one of three conditions: (a) a group of 10 students, (b) a group of 3 students, or (c) one-on-one instruction. Results indicated that students who received instruction in groups of 3 or one-on-one made considerably more gains on comprehension measures than students taught in groups of 10. Students receiving one-on-one instruction demonstrated significantly higher gains in fluency and phonological awareness than students in groups of 10. There were no significant differences between the students taught in groups of 3 and the students who received one-on-one instruction, indicating that the increased intensity of providing one-on-one instruction may not be necessary to improve student outcomes. A meta-analysis of one-on-one tutoring interventions found similar results in that one-on-one instruction yielded no different outcomes than small-group interventions (Elbaum, Vaughn, Hughes, & Moody, 2000). However, neither study examined grouping results specifically for students demonstrating low response to previous intervention. Whether instruction provided in groups of 3 or one-on-one can further increase outcomes for these lowest responding students has yet to be specifically examined.

Increasing the intensity of intervention by increasing its duration has also been examined. In the meta-analysis of one-on-one instruction described earlier (Elbaum et al., 2000), the authors split the study samples into (a) interventions of 20 weeks or less and (b) interventions longer than 20 weeks. Interventions of 20 weeks or less yielded higher effects, suggesting that students may make their largest gains early in intervention. Although student progress is still evident in longer interventions, the sizeable gains made in the shorter period suggest that providing longer interventions may not substantially increase intervention intensity.

A more specific examination of the effect of duration on intervention outcomes can be found in a study of second-grade students with reading difficulties who participated in a reading intervention of 10, 20, or 30 weeks (Vaughn, Linan-Thompson, & Hickman, 2003). Students not meeting exit criteria after each 10-week intervention continued in the intervention for an additional 10 weeks. All 10 of the students who exited after 10 weeks of instruction, as well as 10 of 12 students who exited after 20 weeks, continued to make gains in reading fluency with classroom reading instruction only. An additional 10 students met exit criteria after 30 weeks of instruction, but their progress was not followed after exit. This study, together with the meta-analysis, provides evidence that interventions of up to 20 weeks can allow many students to make substantial gains in their reading outcomes.

Another way to increase time or intensity in an intervention is to increase the number of sessions or hours of instruction a student spends in intervention over the same number of days (e.g., 2 hours per day for 10 weeks vs. 1 hour per day for 10 weeks). Although the effects of this type of intervention intensity have not been studied specifically, most interventions occur for between 20 and 50 minutes per day. One of the most intense interventions can be found in a study by Torgesen et al. (2001). In this study, 8- to 10-year-old students with reading disabilities were provided with a reading intervention for two 50-minute sessions per day over 8 to 9 weeks. The 67.5 hours of instruction yielded substantial improvements in word reading and comprehension that were maintained over the next 2 years of follow-up. The results of this study suggest that more instruction in a short period of time may benefit students with severe reading disabilities. However, the study was not designed specifically to investigate whether the increased time in intervention significantly improved outcomes over interventions of less time per day.

Little is known about appropriate interventions for students who do not make adequate progress in reading interventions that are typically effective. Although evidence suggests that increasing the intensity of effective instruction (e.g., use of smaller groups, more time spent in intervention) may have positive effects on student outcomes (Torgesen et al., 2001; Vaughn, Linan-Thompson, & Hickman, 2003), the relative effectiveness of various levels of intervention intensity for students who demonstrate low response to previous interventions requires further investigation. That is, far less is known about the intensity levels necessary for the most at-risk students to succeed.

In order to begin to address this need, we conducted two studies with students who were low responders. In Study 1, we examined the individual student response for low responders after receiving either a single dose of intervention (30-minute daily sessions of continued intervention) or no research intervention. In Study 2, we examined the individual student response for low responders after receiving a double dose of intervention (two 30-minute daily sessions of continued intervention) or no research intervention.

Specifically, two research questions were addressed:

What are the effects of a single dose of intervention (one 30-minute daily session of continued treatment during a 13-week period), compared with no research intervention, for first-grade students who demonstrated insufficient response to previous intervention? (Study 1)

What are the effects of a double dose of intervention (two 30-minute daily sessions of continued treatment during a 13-week period), compared with no research intervention, for first-grade students who demonstrated insufficient response to previous intervention? (Study 2)

Method

Study 1 and Study 2 were conducted in successive school years in the same schools with two nonoverlapping samples of first-grade students. As part of a larger study, first-grade students who were identified as at risk for reading difficulties had been randomly assigned to treatment and comparison groups, and students in the treatment group were provided intervention in the fall of first grade (daily, 30-minute sessions for 13 weeks; approximately 50 sessions). Each year, the students demonstrating insufficient response after this fall intervention period (low responders) qualified for that year's study. During the spring semester of first grade, low responders assigned to the treatment group continued to receive the same intervention, in one 30-minute session daily (Study 1) or two 30-minute sessions daily (Study 2). Low responders in the comparison group remained in the comparison condition for the spring semester. Descriptions of these students and the criteria for low response follow.

Larger Study

Students participating in Study 1 and Study 2 were selected from a larger study examining RTI in a three-tiered model (Vaughn et al., 2004; Vaughn, Wanzek, Woodruff, & Thompson, 2007). The larger study implements three tiers or levels of instruction: Tier 1 (core reading instruction with screening three times a year for all students and progress monitoring more frequently for students at risk for reading problems), Tier 2 (intervention and progress monitoring for students who are struggling), and Tier 3 (intensive interventions for students for whom the Tier 2 intervention was insufficient). As part of this larger study, we are examining the patterns of response to each of these tiers and the characteristics of students and teachers that differentiate response to various tiers of instruction.

In relation to the current study, all first-grade teachers in the district participated in professional development throughout 2 school years to enhance core reading instruction. In addition, students at risk for reading difficulties were identified in fall and winter using screening instruments. The students identified as at risk in the fall of first grade were randomly assigned to treatment and comparison groups. Students in the treatment group received the Tier 2 intervention with progress monitoring (daily 30-minute sessions for 13 weeks; approximately 25 hours of intervention). Students in the comparison group received the services typically provided by their schools. Following the fall intervention, all students were again screened for risk. We selected participants for the current study during this winter screening period. Thirty-eight percent of the students in the treatment group receiving the fall intervention qualified for Study 1, compared with 50% of the students in the comparison group. The following year, 31% of the students in the treatment group receiving the fall intervention demonstrated low response and qualified for Study 2, compared with 43% of the students in the comparison group. The procedures for selecting these participants are described below. No Tier 3 interventions occurred in first grade.

Participants

The two studies took place in 2 consecutive years in six elementary schools in one southwestern school district participating in a large-scale investigation of multi-tiered instruction. The schools are high-poverty Title I schools with a high percentage of minority students. Each year, a cohort of students was selected who (a) were enrolled in any of the district’s 25 first-grade classrooms, (b) were identified as at risk for reading difficulties in the fall and randomly assigned to either the treatment or comparison groups, and (c) did not meet exit criteria in December after the 13-week fall intervention period (low responders). For students to be considered low responders to the intervention, we established a priori exit criteria defined as (a) scores below 30 correct sounds per minute on the Nonsense Word Fluency (NWF) subtest of the Dynamic Indicators of Basic Early Literacy Skills (DIBELS; Good & Kaminski, 2002) and below 20 words per minute on the DIBELS Oral Reading Fluency (ORF) or (b) a score below 8 words per minute on the DIBELS ORF. These criteria relate to the established midyear benchmarks for the measures.
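The a priori exit criteria amount to a simple two-part decision rule. The sketch below encodes that rule as we read it; treating "below" as a strict less-than comparison and the function name `is_low_responder` are our assumptions rather than details given by the authors.

```python
def is_low_responder(nwf_cls: float, orf_wcpm: float) -> bool:
    """Apply the December exit criteria (a sketch; "below" read as strict <).

    nwf_cls:  DIBELS Nonsense Word Fluency, correct sounds per minute
    orf_wcpm: DIBELS Oral Reading Fluency, words per minute
    """
    # Criterion (a): below 30 on NWF AND below 20 on ORF
    low_on_both = nwf_cls < 30 and orf_wcpm < 20
    # Criterion (b): below 8 on ORF, whatever the NWF score
    very_low_orf = orf_wcpm < 8
    return low_on_both or very_low_orf


if __name__ == "__main__":
    # Hypothetical December scores, for illustration only
    for nwf, orf in [(25, 15), (45, 15), (45, 5), (30, 19)]:
        print(nwf, orf, is_low_responder(nwf, orf))
```

Note that criterion (b) makes the NWF score irrelevant once ORF falls below 8, so a student with strong letter-sound fluency can still qualify as a low responder.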

There were 57 students (23 in the treatment group and 34 in the comparison group) who qualified as low responders for Study 1 (Year 1). During the spring intervention, 7 of these students (12% of the sample) moved out of the district, leaving a final sample of 21 students in the treatment group and 29 students in the comparison group. The final sample for Study 1 thus consists of students who were low responders to the fall-of-first-grade intervention provided as part of the large-scale study investigating multi-tiered instruction. Because a smaller proportion of the treatment group than of the comparison group qualified as low responders (38% vs. 50%), proportionally more students in the treatment condition responded to the fall-of-first-grade intervention. Study 1 examines the continued intervention response of these low responders during the spring of first grade.

The participants for Study 2 (Year 2) were selected using the same criteria as for Study 1. There were 40 students (16 in the treatment group and 24 in the comparison group) who qualified for Study 2. Four students (10% of the sample) moved out of the district, leaving a final sample of 36 students (14 treatment and 22 comparison). As in Study 1, a smaller proportion of the treatment group than of the comparison group qualified as low responders (31% vs. 43%), indicating that proportionally more students in the treatment condition responded to the fall-of-first-grade intervention provided as part of the large-scale study. Study 2 examines the continued intervention response of these low responders during the spring of first grade.
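The sample counts reported for the two studies can be checked with a few lines of arithmetic. This minimal sketch recomputes each study's qualifying and final samples and the share of students who moved out of the district; the `SampleFlow` class is purely illustrative, and the numbers are the ones given in the text.

```python
from dataclasses import dataclass


@dataclass
class SampleFlow:
    """Qualifying and final low-responder counts for one study (illustrative)."""
    study: str
    qualified_treatment: int
    qualified_comparison: int
    final_treatment: int
    final_comparison: int

    @property
    def qualified(self) -> int:
        return self.qualified_treatment + self.qualified_comparison

    @property
    def final(self) -> int:
        return self.final_treatment + self.final_comparison

    @property
    def attrition_rate(self) -> float:
        # Share of qualifying low responders who moved out during the spring
        return (self.qualified - self.final) / self.qualified


STUDIES = [
    SampleFlow("Study 1", 23, 34, 21, 29),
    SampleFlow("Study 2", 16, 24, 14, 22),
]

if __name__ == "__main__":
    for s in STUDIES:
        print(f"{s.study}: {s.qualified} qualified, {s.final} retained, "
              f"{s.qualified - s.final} moved ({s.attrition_rate:.1%})")
```

Running it prints 57 qualified and 50 retained for Study 1 (7 moved, 12.3%) and 40 qualified and 36 retained for Study 2 (4 moved, 10.0%), consistent with the rounded figures in the text.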

Demographic information for the participants in Studies 1 and 2 is provided in Tables 1 and 2, respectively. In addition, student scores on the Peabody Picture Vocabulary Test–Revised (PPVT-R; Dunn & Dunn, 1997) provide an indication of the students’ below-average level in receptive vocabulary for both Study 1 and Study 2 (Table 3).

Table 1.

Study 1: Participant Demographic Information

| Group | N | Male | Female | Hispanic | Non-Hispanic | Free or Reduced-Price Lunch | Disability Identified |
|---|---|---|---|---|---|---|---|
| All first-grade students in district | 507 | 270 (53.3%) | 237 (46.7%) | 335 (66.1%) | 172 (33.9%) | 385 (75.9%) | 58 (11.4%) |
| Single-dose treatment | 21 | 15 (71.4%) | 6 (28.6%) | 16 (76.2%) | 5 (23.8%) | 19 (90.5%) | 5 (23.8%) |
| Comparison | 29 | 17 (58.6%) | 12 (41.4%) | 20 (69.0%) | 9 (31.0%) | 26 (89.7%) | 11 (37.9%) |
| Single-dose treatment vs. comparison (Fisher’s exact probability) | 50 | Gender: .265 | | Ethnicity: .407 | | .654 | .228 |

Table 2.

Study 2: Participant Demographic Information

| Group | N | Male | Female | Hispanic | Non-Hispanic | Free or Reduced-Price Lunch | Disability Identified |
|---|---|---|---|---|---|---|---|
| All first-grade students in district | 500 | 252 (50.4%) | 248 (49.6%) | 346 (69.2%) | 154 (30.8%) | 369 (73.8%) | 51 (10.2%) |
| Double-dose treatment | 14 | 8 (57.1%) | 6 (42.9%) | 10 (71.4%) | 4 (28.6%) | 14 (100%) | 9 (64.3%) |
| Comparison | 22 | 12 (54.5%) | 10 (45.5%) | 13 (59.1%) | 9 (40.9%) | 13 (59.1%) | 3 (13.6%) |
| Double-dose treatment vs. comparison (Fisher’s exact probability) | 36 | Gender: .577 | | Ethnicity: .349 | | .005 | .003 |
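The group comparisons in Tables 1 and 2 rest on Fisher's exact test for 2 × 2 tables. The sketch below computes it from the hypergeometric distribution using only the standard library; the function names are our own. The authors do not state whether the reported probabilities are one- or two-sided, so the sketch returns both. As one check, the one-sided value for the free-or-reduced-price lunch comparison in Table 2 (14 of 14 treatment students vs. 13 of 22 comparison students) is about .0053, which rounds to the reported .005.

```python
from math import comb
from typing import Tuple


def hypergeom_pmf(k: int, row1: int, col1: int, n: int) -> float:
    """P(top-left cell = k) given the table margins (hypergeometric)."""
    return comb(col1, k) * comb(n - col1, row1 - k) / comb(n, row1)


def fisher_exact(a: int, b: int, c: int, d: int) -> Tuple[float, float]:
    """Fisher's exact test for the 2 x 2 table [[a, b], [c, d]].

    Returns (one-sided p for a cell count at least as large as `a`,
             two-sided p summing every table no more likely than the observed one).
    """
    row1, col1, n = a + b, a + c, a + b + c + d
    # Feasible values of the top-left cell given fixed margins
    lo, hi = max(0, row1 - (n - col1)), min(row1, col1)
    probs = {k: hypergeom_pmf(k, row1, col1, n) for k in range(lo, hi + 1)}
    p_obs = probs[a]
    one_sided = sum(p for k, p in probs.items() if k >= a)
    # Small relative tolerance so equally likely tables are not lost to rounding
    two_sided = sum(p for p in probs.values() if p <= p_obs * (1 + 1e-9))
    return one_sided, min(two_sided, 1.0)


if __name__ == "__main__":
    # Table 2, free or reduced-price lunch: rows = treatment, comparison
    print(fisher_exact(14, 0, 13, 9))
```

If SciPy is available, `scipy.stats.fisher_exact` with its `alternative` argument offers the same test and can serve as a cross-check.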

Table 3.

Peabody Picture Vocabulary Test–Revised Standard Mean Scores

| Group | M | SD | t | p |
|---|---|---|---|---|
| Study 1 | | | | |
| Research intervention (n = 21) | 85.10 | 10.93 | 0.619 | .539 |
| No research intervention (n = 29) | 83.38 | 8.67 | | |
| Study 2 | | | | |
| Research intervention (n = 14) | 78.71 | 11.26 | 2.18 | .036 |
| No research intervention (n = 22) | 87.86 | 12.89 | | |
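The t values in Table 3 can be recomputed from the reported means, standard deviations, and group sizes. The sketch below assumes a pooled-variance (Student's) independent-samples t test, which the text does not state explicitly; under that assumption it gives t of about 0.62 (df = 48) for Study 1 and about −2.18 (df = 34) for Study 2, matching the tabled magnitudes within rounding.

```python
from math import sqrt
from typing import Tuple


def pooled_t(m1: float, s1: float, n1: int,
             m2: float, s2: float, n2: int) -> Tuple[float, int]:
    """Independent-samples t with pooled variance, from summary statistics."""
    df = n1 + n2 - 2
    # Pooled variance: SDs weighted by their degrees of freedom
    sp2 = ((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / df
    # Standard error of the difference between means
    se = sqrt(sp2 * (1 / n1 + 1 / n2))
    return (m1 - m2) / se, df


if __name__ == "__main__":
    print(pooled_t(85.10, 10.93, 21, 83.38, 8.67, 29))  # Study 1
    print(pooled_t(78.71, 11.26, 14, 87.86, 12.89, 22))  # Study 2
```

The sketch stops at t and df; `scipy.stats.ttest_ind_from_stats` (with `equal_var=True`) computes the same statistic and also returns the two-sided p value.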

Procedures

The procedures for each study were identical. First-grade students who received intervention in the fall were assessed with the DIBELS NWF and ORF measures (Good & Kaminski, 2002) and the Woodcock Reading Mastery Test–Revised (WRMT-R) Word Identification, Word Attack, and Passage Comprehension subtests (Woodcock, 1987) in December at the conclusion of the fall intervention. Students not meeting exit criteria were identified for each study. Treatment students were divided within school into homogeneous instructional groups of 4 to 5 students, based on their NWF pretest scores. Intervention was implemented 5 days a week for approximately 50 days (range = 47–55 days) beginning in late January and continuing through the 1st week in May. Participants were posttested the week immediately following intervention (the 2nd week in May) on DIBELS ORF and the WRMT-R subtests of Word Identification, Word Attack, and Passage Comprehension.
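The text says treatment students were grouped within school into homogeneous groups of 4 to 5 based on NWF pretest scores, but not how the cut points were chosen. One plausible procedure, offered only as a hypothetical sketch (the function `form_groups` and the input tuple format are our inventions), is to rank students by NWF within each school and split the ranking into consecutive groups of nearly equal size.

```python
from math import ceil
from typing import Dict, List, Tuple


def form_groups(students: List[Tuple[str, str, int]],
                max_size: int = 5) -> Dict[str, List[List[str]]]:
    """Split students into skill-homogeneous groups within each school.

    students: (student_id, school, nwf_pretest) tuples, a hypothetical format.
    """
    by_school: Dict[str, List[Tuple[str, int]]] = {}
    for sid, school, nwf in students:
        by_school.setdefault(school, []).append((sid, nwf))

    groups: Dict[str, List[List[str]]] = {}
    for school, roster in by_school.items():
        roster.sort(key=lambda r: r[1])          # lowest NWF first
        n_groups = ceil(len(roster) / max_size)  # fewest groups of size <= max_size
        base, extra = divmod(len(roster), n_groups)
        start, school_groups = 0, []
        for g in range(n_groups):
            size = base + (1 if g < extra else 0)  # sizes differ by at most 1
            school_groups.append([sid for sid, _ in roster[start:start + size]])
            start += size
        groups[school] = school_groups
    return groups


if __name__ == "__main__":
    demo = [(f"s{i}", "A", nwf)
            for i, nwf in enumerate([12, 3, 25, 7, 18, 9, 1, 22, 15])]
    print(form_groups(demo))
```

With nine students in a school, this yields one group of 5 and one of 4. Very small rosters (for example, 6 students) would produce groups of 3, so in practice some judgment would still be needed.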

Measures

Woodcock Reading Mastery Test–Revised (Woodcock, 1987)

The WRMT-R is a battery of individually administered tests of reading. Three subtests, Word Identification, Word Attack, and Passage Comprehension, were administered to assess students’ basic reading skills. The WRMT-R provides two alternate, equivalent forms. Form G was used at pretest and Form H was used at posttest.

The Word Identification subtest requires students to read words in isolation. The Word Attack subtest measures students’ ability to decode nonsense words such as ift, laip, and vunhip. The Passage Comprehension subtest is a cloze measure requiring students to read sentences silently and supply missing words. Split-half reliability coefficients for first grade are reported as .98, .94, and .94 for Word Identification, Word Attack, and Passage Comprehension, respectively. Concurrent validity coefficients for the WRMT-R subtests are reported to range from .63 to .82 when compared with the Total Reading Score of the Woodcock-Johnson Psycho-Educational Battery (Woodcock & Johnson, 1977).

Curriculum-based measure: Dynamic Indicators of Basic Early Literacy Skills (Good & Kaminski, 2002)

Two DIBELS measures were individually administered: (a) the NWF subtest was given at pretest for screening and for progress-monitoring purposes, and (b) the ORF subtest was administered at pretest, at posttest, and for progress monitoring. The NWF assesses students’ fluent knowledge of letter–sound correspondences and their ability to blend sounds into words. Alternate-form reliability estimates for the NWF are .83, and its predictive validity for oral reading fluency in May of first grade is reported as .82. The ORF evaluates students’ oral reading on 1-minute timed reading samples, recording the number of words read correctly per minute for each passage. Test–retest coefficients for this measure range from .92 to .97. Alternate forms were administered at pretest, at posttest, and during progress monitoring. Implementation validity checks for administration of the DIBELS were conducted during each test administration period using forms included in the DIBELS Administration and Scoring Guide.

Tutors

The interventions in each study were provided by trained graduate students and research associates. Seven tutors provided the intervention in Study 1. Four of the tutors were female. One tutor was Hispanic and the others were Caucasian. All tutors held at least a bachelor’s degree; five of the tutors also held a master’s degree. Four of the tutors were certified teachers. In Study 2, five tutors provided the intervention (three female). Two tutors were Hispanic and three were Caucasian. All of the tutors held at least a bachelor’s degree; four of the tutors in Study 2 also held a master’s degree. Four of the tutors were certified teachers. Two tutors provided intervention in both Study 1 and Study 2.

Training for tutors

All tutors participated in 15 hours of training (five 3-hour sessions) during a 1-month period prior to the start of the intervention. Training addressed instructional techniques for the critical components of intervention for at-risk first graders: phonemic awareness, phonics and word recognition, fluency, vocabulary, and comprehension. Training also covered effective instructional techniques, including explicit instruction, quick pacing, error correction, and scaffolding. Tutors received training in lesson planning, progress monitoring, and group management techniques. Between training sessions, tutors prepared full sets of lesson plans to use in simulation practice sessions. Trainers provided feedback on written lessons and practice sessions to each tutor. Throughout the intervention, each tutor was observed at least once a week and given feedback on implementation. In addition, weekly meetings were held to address tutor needs, student progress, and additional implementation issues.

Materials

Tutors received sets of sequenced lessons incorporating each of the intervention components to guide their instruction. Materials were scripted; tutors included all key elements of the script in a standardized format but did not necessarily read the script verbatim. With the assistance of a researcher, tutors matched appropriate lessons to the reading skills of students in their intervention groups. All materials necessary to implement the lessons were provided to tutors.

Description of Interventions

Treatment

Treatment students received either a single dose of intervention with one 30-minute daily session (Study 1) or a double dose of intervention with two 30-minute daily sessions (Study 2) during the spring semester of first grade. Treatment students participating in each study were provided the same intervention with the only difference being that students in Study 2 received twice as much time in intervention (approximately 50 hours for Study 2 compared to approximately 25 hours for Study 1) during the same number of weeks.
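The approximate dose figures follow from the number of intervention days and the session length. This short sketch (the helper name is ours) reproduces the roughly 25 and 50 hours for the single and double doses, using the 50-day typical length and the 47–55 day range reported in the Procedures section.

```python
SESSION_MINUTES = 30  # length of each intervention session


def intervention_hours(days: int, sessions_per_day: int) -> float:
    """Total instructional hours for a given number of days and daily sessions."""
    return days * sessions_per_day * SESSION_MINUTES / 60


if __name__ == "__main__":
    for label, per_day in [("Single dose (Study 1)", 1), ("Double dose (Study 2)", 2)]:
        typical = intervention_hours(50, per_day)
        low, high = intervention_hours(47, per_day), intervention_hours(55, per_day)
        print(f"{label}: ~{typical:g} h (range {low:g}-{high:g} h)")
```

This prints about 25 hours (range 23.5 to 27.5) for the single dose and about 50 hours (range 47 to 55) for the double dose, so the two conditions differ in total time while covering the same calendar weeks.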

Students received the intervention in small groups of 4 to 5 students in a separate classroom at the school. The following elements were included in a standard protocol intervention for Studies 1 and 2.

Phonics and word recognition (15 minutes)

Phonics and word-recognition instruction was provided each day. Instruction included letter names, letter sounds (building from individual letter sounds to letter combinations), reading and spelling regular and irregular words, word family patterns (e.g., fin, tin, bin), and word building (e.g., work, works, worked, working).

Fluency (5 minutes)

Daily fluency exercises addressed improving reading speed and accuracy. Each activity addressed one of three skill areas: (a) letter names and sounds, (b) word reading, or (c) passage reading.

Passage reading and comprehension (10 minutes)

Students read short passages incorporating sounds and words previously taught through phonics and word recognition activities. The passages progressed from 3 to 4 words to more than 40 words, according to student skill level. Appropriate comprehension questions integrating literal and inferential thinking followed each passage. Tutors taught students strategies for locating answers or clues to answers for the comprehension questions.

Students participating in the treatments (Study 1 and Study 2) received no additional reading interventions during the school day beyond the classroom instruction. The only exception was students with Individualized Education Programs (IEPs) who continued to receive identified services.

Comparison

Data were collected through teacher interviews to describe any school reading interventions that students in the comparison group received. In Study 1, 10 students in the comparison group did not receive any additional reading instruction beyond classroom instruction. The other 19 students received intervention ranging from 30 to 700 minutes per week. These additional reading services were provided throughout the school year for all but 1 student, who received instruction for only 1 month. In Study 2, 12 students in the comparison group did not receive additional reading instruction beyond classroom instruction. The other 10 students received intervention ranging from 75 to 300 minutes per week. Three of these students began receiving additional instructional support in November and continued throughout the school year; the remaining students received the additional reading instruction throughout the entire school year.

School personnel reported that interventions provided to comparison students included practice with letter sounds and blends as well as reading and spelling sight words. Students read aloud from books and engaged in discussion of books or wrote sentences to describe the story. Books were decodable or leveled readers. In addition, fluency and comprehension practice was provided through rereading texts and introducing new vocabulary. Trade books occasionally were used for fluency and comprehension activities. Students also spent some time on independent reading and test preparation.

Progress Monitoring

Progress-monitoring data were collected to help teachers of students in the treatment and comparison groups plan instruction. Tutors formally monitored the progress of each student in the treatment groups weekly using alternate forms of the ORF measure. A second measure, NWF, was also used to monitor the progress of students scoring below the NWF benchmark of 50 sounds per minute. Tutors recorded students’ scores each week, assessed progress toward the spring goals of 40 words per minute on ORF and 50 sounds per minute on NWF, and made instructional adjustments as needed. In addition, informal progress monitoring occurred each day of the intervention: tutors recorded each instructional element as missing (the student was unable to accomplish the skill), emerging (the student accomplished the skill with teacher prompts), or mastered (the student accomplished the skill without teacher assistance for 3 consecutive days). Tutors used the formal and informal progress-monitoring records to plan daily lessons.
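The daily mastery rule described above can be expressed as a small classification function. This is a minimal sketch, not the tutors’ actual record-keeping procedure: the three-way daily coding (`Observation`), the function name `skill_status`, and the choice to judge status from the most recent days are all assumptions made for illustration.

```python
from enum import Enum


class Observation(Enum):
    """One day's record for one instructional element (hypothetical coding)."""
    UNABLE = "unable"            # student could not accomplish the skill
    PROMPTED = "prompted"        # accomplished it only with teacher prompts
    INDEPENDENT = "independent"  # accomplished it without teacher assistance


def skill_status(daily_log: list[Observation], mastery_days: int = 3) -> str:
    """Classify an element as 'missing', 'emerging', or 'mastered'.

    Assumption: status is judged from the most recent records, and
    'mastered' requires the last `mastery_days` days to be independent.
    """
    if not daily_log:
        return "missing"
    recent = daily_log[-mastery_days:]
    if len(recent) == mastery_days and all(o is Observation.INDEPENDENT for o in recent):
        return "mastered"
    # Success on the latest day, whether prompted or independent but short
    # of the consecutive-day criterion, is treated as emerging.
    if daily_log[-1] in (Observation.PROMPTED, Observation.INDEPENDENT):
        return "emerging"
    return "missing"


# Usage with a hypothetical four-day log for one element.
log = [Observation.PROMPTED, Observation.INDEPENDENT,
       Observation.INDEPENDENT, Observation.INDEPENDENT]
print(skill_status(log))
```

Keeping the consecutive-day count as a parameter makes it easy to see how a stricter or looser mastery criterion would change which elements a tutor carries into the next lesson.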

Classroom teachers also monitored the progress of students in the comparison group on ORF and NWF; each teacher did so three to five times during the intervention period in each study.

Fidelity of Implementation

Tutors were observed weekly and given feedback. Each tutor also kept daily logs of lesson components completed, student mastery levels, and difficulties. Implementation validity checklists were completed for each tutor once a month to ensure fidelity and consistency of implementation across tutors. The occurrence and nonoccurrence of major treatment components were evaluated. Instructional time for each component was recorded and rated as mostly instructional, often interrupted, or mostly managerial on the basis of the tutor’s use of behaviors that maximize student engagement. Quality of instruction was recorded as a composite score that included the occurrence of the treatment components, the appropriateness of instruction and materials, and the rating for instructional time. Quality scores are on a Likert-type scale ranging from 1 (low) to 3 (high).

Table 4 provides quality of instruction ratings (fidelity) for each tutor in Studies 1 and 2. Overall, fidelity in Study 1 was high (quality scores of 2.5 or higher in nearly all areas). The exception was a mean score of 1.67 for Tutor 13 on fluency, which resulted from the tutor’s inability to complete the fluency activity during one observation because of a behavior difficulty. Weekly observations indicated that this tutor completed fluency instruction consistently throughout the intervention. In Study 2, fidelity was generally high for phonics and word recognition and for fluency, but mean scores below 2.5 in passage reading and comprehension suggested that these elements were not always fully completed. In all cases, the lower scores in passage reading and comprehension were due to off-task behavior and difficulties with instructional pacing. Tutors in Study 2 also reported difficulties with student fatigue during the second session of the intervention.

Table 4.

Fidelity of Implementation: Mean Quality of Implementation

| Tutor | Study | Phonics and Word Recognition | Fluency | Passage Reading and Comprehension |
|---|---|---|---|---|
| 2 | 1 | 3.00 | 3.00 | 2.67 |
| 4 | 1 | 2.83 | 3.00 | 2.67 |
| 7 | 1 | 2.83 | 3.00 | 3.00 |
| 8 | 1 | 2.67 | 2.67 | 2.67 |
| 11 | 1 | 3.00 | 2.67 | 2.67 |
| 12 | 1 | 2.50 | 3.00 | 2.67 |
| 13 | 1 | 2.50 | 1.67 | 2.67 |
| 7 | 2 | 3.00 | 3.00 | 3.00 |
| 11 | 2 | 2.50 | 2.33 | 2.00 |
| 16 | 2 | 3.00 | 2.67 | 2.33 |
| 17 | 2 | 2.50 | 2.67 | 2.00 |
| 20 | 2 | 2.50 | 2.67 | 2.33 |

Note: Values are mean quality-of-instruction ratings by intervention area, on a scale from 1 (low) to 3 (high).
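As a quick check on the fidelity summary, the Table 4 ratings can be scanned for any mean below 2.5. The sketch below assumes that 2.5 is the criterion for high fidelity and that a score of exactly 2.5 meets it; the names `TABLE_4`, `AREAS`, and `below_criterion` are hypothetical.

```python
# Mean quality ratings transcribed from Table 4:
# (tutor, study) -> (phonics/word recognition, fluency, passage reading/comprehension)
TABLE_4 = {
    (2, 1): (3.00, 3.00, 2.67),
    (4, 1): (2.83, 3.00, 2.67),
    (7, 1): (2.83, 3.00, 3.00),
    (8, 1): (2.67, 2.67, 2.67),
    (11, 1): (3.00, 2.67, 2.67),
    (12, 1): (2.50, 3.00, 2.67),
    (13, 1): (2.50, 1.67, 2.67),
    (7, 2): (3.00, 3.00, 3.00),
    (11, 2): (2.50, 2.33, 2.00),
    (16, 2): (3.00, 2.67, 2.33),
    (17, 2): (2.50, 2.67, 2.00),
    (20, 2): (2.50, 2.67, 2.33),
}
AREAS = ("Phonics and Word Recognition", "Fluency", "Passage Reading and Comprehension")


def below_criterion(table, criterion=2.5):
    """Return sorted (study, tutor, area, score) tuples for ratings under the criterion."""
    flagged = []
    for (tutor, study), scores in table.items():
        for area, score in zip(AREAS, scores):
            if score < criterion:
                flagged.append((study, tutor, area, score))
    return sorted(flagged)


for row in below_criterion(TABLE_4):
    print(row)
```

Run against the table, the only flagged Study 1 rating is Tutor 13’s fluency score; the Study 2 flags are Tutor 11’s fluency score and the passage reading and comprehension scores of Tutors 11, 16, 17, and 20. No phonics and word recognition rating falls below 2.5 in either study.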

Results

Each research question was addressed using statistical and descriptive analyses. First, a series of analyses of covariance (ANCOVAs) was conducted for each study to test for differences between group means at posttest. Then, to provide further information on individual student response to intervention, similarities and differences in students’ responses across groups are described for each outcome measure.

Analysis of Pretest Data

Means and standard deviations for treatment and comparison groups for outcome measures are presented in Tables 5 through 8. The t test for independent samples showed no statistically significant differences between the treatment and comparison groups in Study 1 (single dose) on pretest measures of ORF, Word Identification, Word Attack, and Passage Comprehension. The two groups did differ statistically on the pretest measure of NWF (t = 2.203; p = .032) in favor of the treatment group. No statistically significant differences between the treatment and comparison groups in Study 2 (double dose) were found on pretest measures.
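The pretest comparisons use the independent-samples t test. The sketch below computes the pooled-variance form of t from summary statistics; whether the original analysis pooled variances is not stated, so treat that as an assumption. The Study 1 group sizes (21 treatment, 29 comparison) are inferred from the participant counts reported elsewhere in this article, and `pooled_t` is a hypothetical helper name.

```python
import math


def pooled_t(m1: float, sd1: float, n1: int,
             m2: float, sd2: float, n2: int) -> tuple[float, int]:
    """Independent-samples t statistic with pooled variance; returns (t, df)."""
    df = n1 + n2 - 2
    # Pooled variance weights each group's variance by its degrees of freedom.
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (m1 - m2) / se, df


# Study 1 ORF pretest means and SDs (Table 8); group sizes are inferred.
t, df = pooled_t(5.62, 3.20, 21, 5.17, 4.12, 29)
print(f"t({df}) = {t:.2f}")
```

Applied to the rounded Study 1 ORF pretest statistics, this gives t of roughly 0.42 with 48 degrees of freedom, consistent with the nonsignificant difference reported above. Recomputing from rounded summaries will not exactly reproduce values computed from raw scores.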

Table 5.

WRMT-R Word Identification Pretest and Posttest Standard Scores

| Group | Study 1 Pretest M (SD) | Study 1 Posttest M (SD) | Study 2 Pretest M (SD) | Study 2 Posttest M (SD) |
|---|---|---|---|---|
| Treatment | 97.81 (8.47) | 95.86 (9.17) | 93.00 (10.96) | 94.86 (11.75) |
| Comparison | 98.38 (11.01) | 95.93 (10.56) | 98.68 (6.90) | 96.45 (8.30) |

Note: WRMT-R = Woodcock Reading Mastery Test–Revised (Woodcock, 1987).

Table 8.

DIBELS Oral Reading Fluency Pretest and Posttest Scores (in words per minute)

| Group | Study 1 Pretest M (SD) | Study 1 Posttest M (SD) | Study 2 Pretest M (SD) | Study 2 Posttest M (SD) |
|---|---|---|---|---|
| Treatment | 5.62 (3.20) | 16.19 (7.81) | 4.21 (4.58) | 13.93 (8.32) |
| Comparison | 5.17 (4.12) | 18.62 (13.24) | 5.82 (2.84) | 14.27 (6.13) |

Note: DIBELS = Dynamic Indicators of Basic Early Literacy Skills (Good & Kaminski, 2002).

Analysis of Treatment Effects

An ANCOVA was performed to compare the treatment and comparison groups on each of the dependent measures in each study. Two covariates were used for each comparison: (a) pretest of the dependent variable and (b) NWF pretest.

The assumptions of normality and homogeneity of variance were not fully met for Study 1. No statistically significant differences were found between the Study 1 single-dose treatment and comparison groups on any of the dependent measures.

In Study 2, no statistically significant differences were found between groups on Word Identification, Passage Comprehension, or ORF. The groups differed significantly at posttest on Word Attack (F = 5.199; p = .029). However, the group differences discussed earlier in free or reduced-price lunch participation, disability status, and PPVT-R standard scores indicate that the groups may have differed on uncontrolled variables. The ANCOVA results for Study 2 should therefore be interpreted with caution.

Student Response to Intervention

Study 1 and Study 2 were designed to examine response to continued intervention among students demonstrating low response to previous intervention. Given the difficulties in interpreting the ANCOVA results, particularly for Study 2, descriptive information was compiled to examine individual students’ responses to the intervention. Standardized mean effect sizes were calculated to examine change from pretest to posttest in each group: the mean pretest-to-posttest difference was divided by the standard deviation of the difference scores (Howell, 1992). The effect sizes are provided in Table 9. In addition, patterns of individual student response to intervention were examined for each outcome measure.
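The effect size described above can be computed from paired scores as follows. This is a sketch assuming the denominator is the sample standard deviation of each student’s posttest-minus-pretest difference (a statistic often labeled d_z); the function name `paired_effect_size` is hypothetical, and the example scores are invented.

```python
import statistics


def paired_effect_size(pre: list[float], post: list[float]) -> float:
    """Mean pre-to-post difference divided by the SD of the difference scores."""
    if len(pre) != len(post):
        raise ValueError("pre and post must contain paired scores")
    diffs = [b - a for a, b in zip(pre, post)]
    # statistics.stdev is the sample (n - 1) standard deviation.
    return statistics.mean(diffs) / statistics.stdev(diffs)


# Hypothetical ORF scores (words per minute) for five students.
pre = [4, 6, 3, 8, 5]
post = [15, 14, 12, 22, 16]
print(round(paired_effect_size(pre, post), 2))
```

Because the denominator shrinks as pretest and posttest scores become more strongly correlated, these values are not directly comparable with effect sizes standardized by a pretest or pooled standard deviation. As a consistency check, the Study 1 treatment group’s Word Identification means in Table 5 differ by −1.95 points and Table 9 reports an effect size of −.27, implying a difference-score standard deviation of about 7.2 points.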

Table 9.

Pretest to Posttest Standardized Mean Difference Effect Sizes

| Group | Oral Reading Fluency, Study 1 | Oral Reading Fluency, Study 2 | Word Identification, Study 1 | Word Identification, Study 2 | Word Attack, Study 1 | Word Attack, Study 2 | Passage Comprehension, Study 1 | Passage Comprehension, Study 2 |
|---|---|---|---|---|---|---|---|---|
| Treatment | 1.76 | 1.66 | −.27 | .24 | .06 | .02 | .66 | .20 |
| Comparison | 1.25 | 1.55 | −.36 | −.39 | −.09 | −.56 | .07 | .37 |

Study 1

Treatment group: Single dose

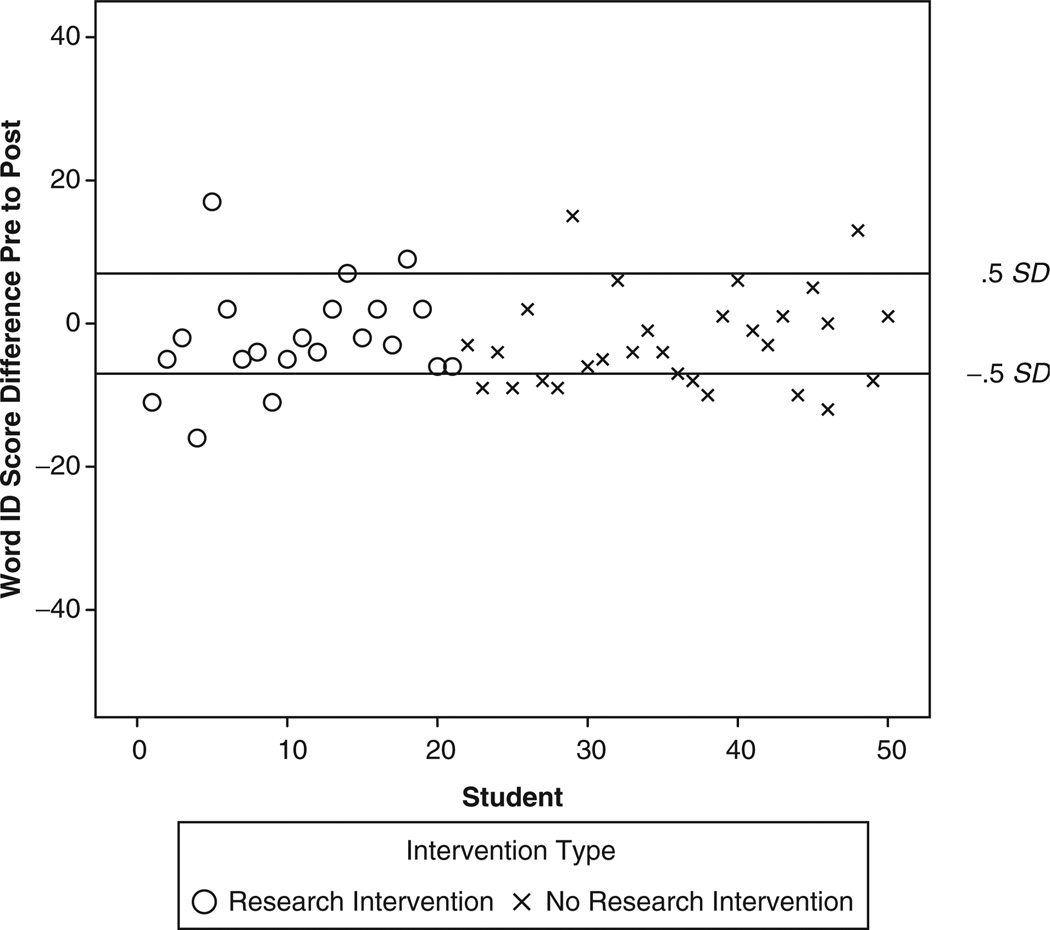

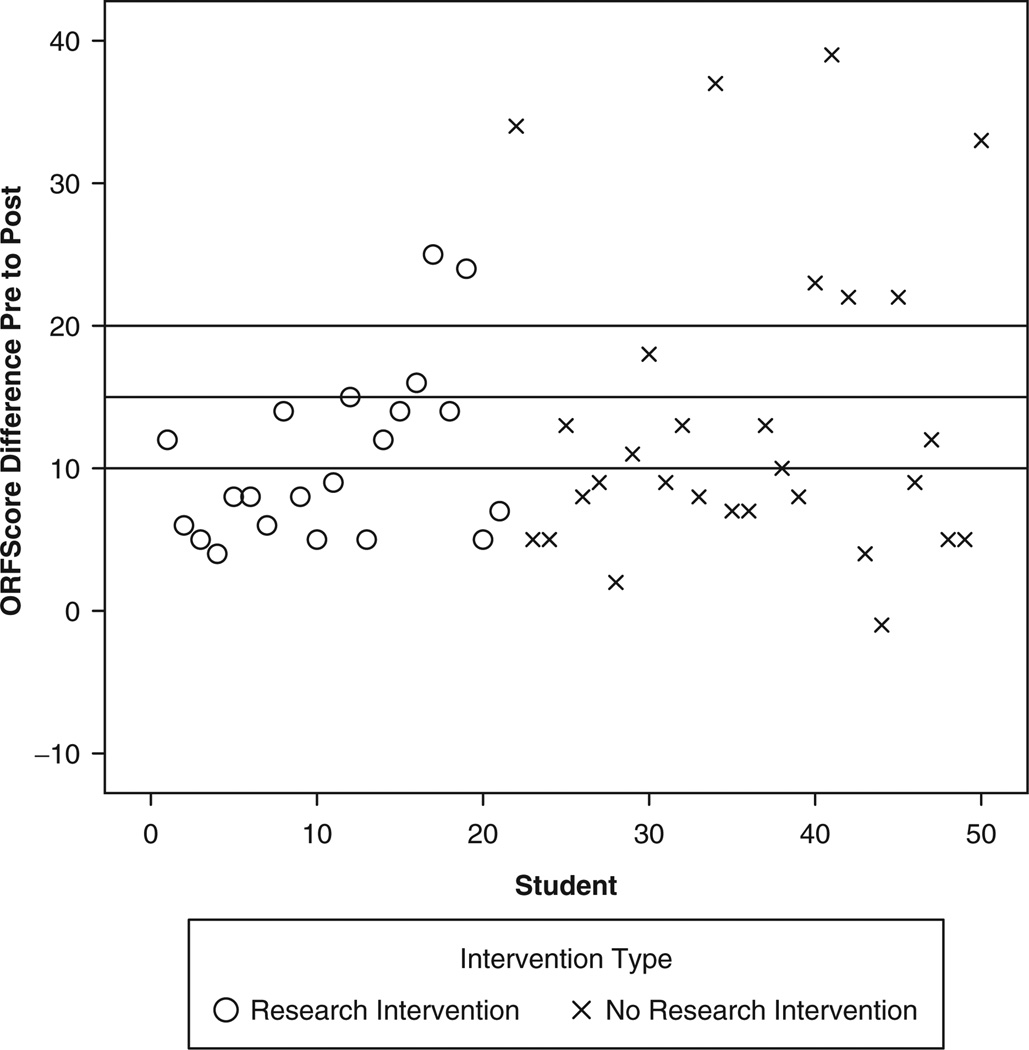

Individual student responses over time indicated that 3 (14%) students achieved an increase of at least half a standard deviation in Word Identification, and 7 (33%) students achieved this gain in Word Attack, as did 7 (33%) in Passage Comprehension. Of these students, 1 student achieved more than a full standard deviation increase in Word Identification, 3 (14%) students achieved an increase of at least 1 standard deviation in Word Attack, and 4 students achieved this gain in Passage Comprehension. Conversely, 3 (14%) students decreased by half a standard deviation or more on Word Identification, and 5 (24%) students showed this decrease on Word Attack, but no students showed this decrease on Passage Comprehension. On ORF, 9 (43%) students increased by at least 10 words per minute. Only 4 of these students (19% of the total group) increased by 15 words per minute or more, and 2 (10% of the total group) gained more than 20 words per minute. None of the students reached the exit criterion, the end-of-first-grade benchmark of 40 words read correctly per minute.

Comparison group

Examination of individual responses in Study 1 demonstrated that the largest gains in words read correctly per minute were made by students in the comparison group. Specifically, 14 (48%) students gained 10 or more words per minute over time, and 7 of these students (24% of the total group) gained 20 or more words per minute. In addition, 3 students in the comparison group achieved the end-of-first-grade benchmark of 40 or more words read correctly per minute by the end of the intervention.

Individual student responses on the WRMT-R subtests were less positive for students in the comparison group. For Word Identification, 2 (7%) students gained at least half a standard deviation, whereas 10 (34%) students decreased by half a standard deviation or more. Similarly, 7 (24%) students gained at least half a standard deviation on Word Attack, and 14 (48%) students decreased by half a standard deviation or more. On Passage Comprehension, 6 (21%) students increased by at least half a standard deviation, and 7 (24%) students decreased by half a standard deviation or more. Of the students who made gains, 1 student gained a full standard deviation on Word Identification, 4 students (14% of the total group) gained a full standard deviation on Word Attack, and 3 students (10% of the total group) made similar gains on Passage Comprehension. Two students decreased by half a standard deviation or more on every standardized measure; in contrast, no student in the single-dose treatment group showed this consistent decrease.

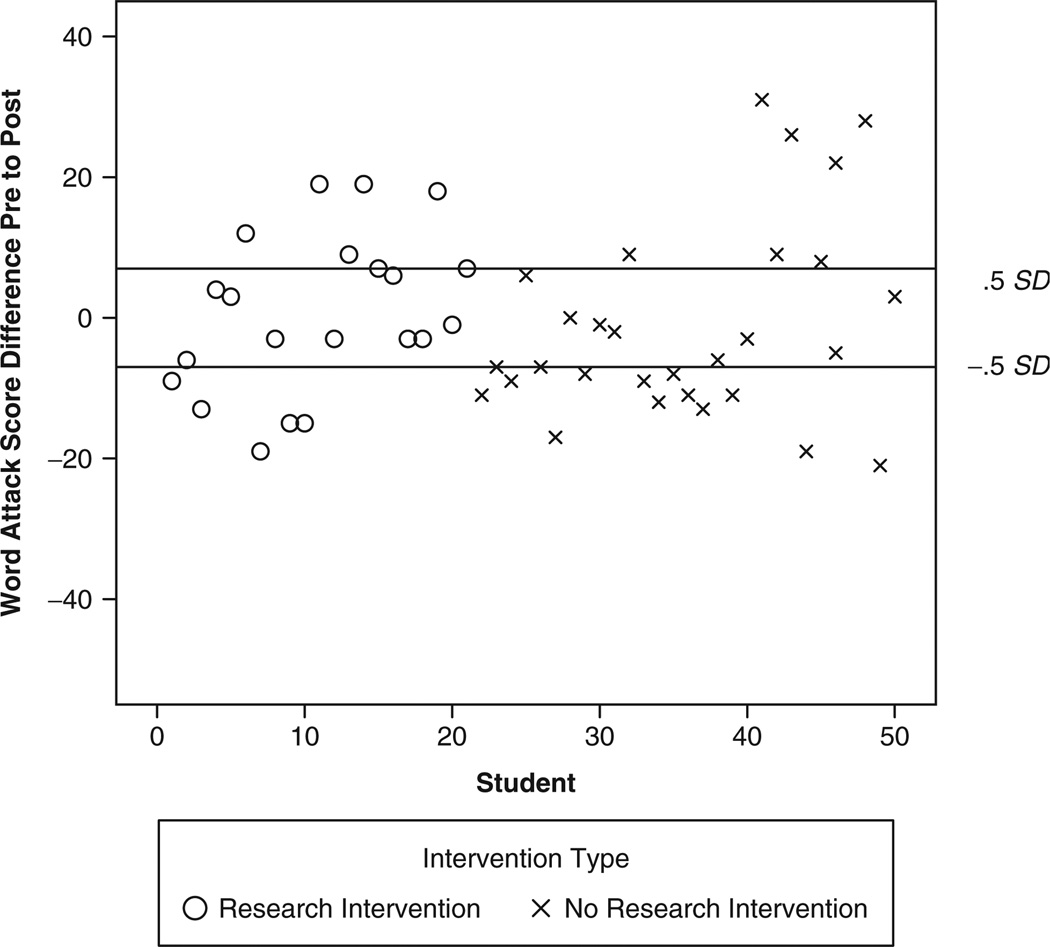

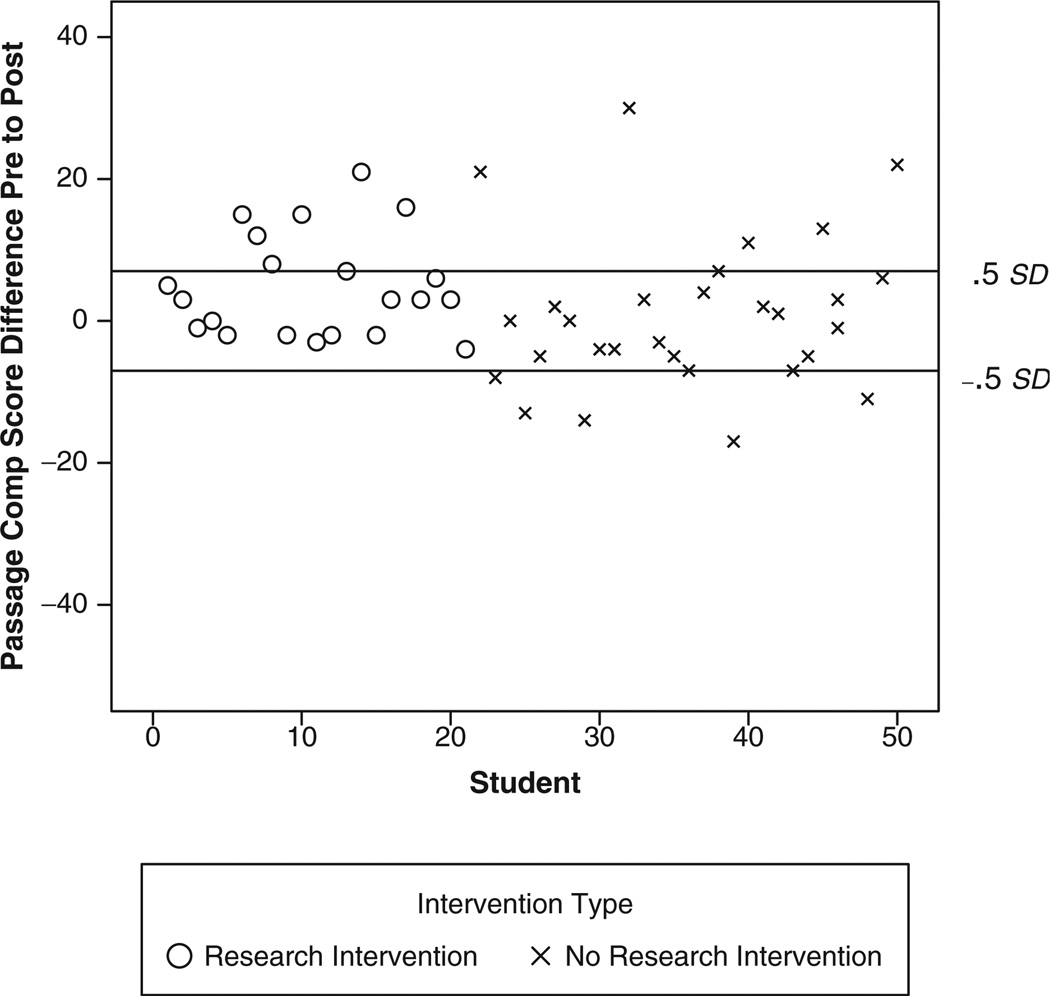

Overall, the descriptive information on individual student response suggests that more students in the comparison group lost ground over time on the standardized measures and more students in the single-dose treatment group accelerated their learning on these measures. In reading fluency, however, more students in the comparison group made substantial gains, and some even reached grade-level expectations. Figures 1 through 4 show individual student response over time for each outcome measure. Each student’s gain or loss from pretest to posttest is plotted (each x or o represents 1 student in the treatment or comparison group, respectively). For each WRMT-R subtest, lines mark a standard score gain and a standard score loss of half a standard deviation between pretest and posttest. For the ORF measure, lines mark gains of 10, 15, and 20 words per minute.
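The cut points used in the figures, and the counts reported in the text, can be tallied with a few lines of code. This sketch assumes that the half and full standard deviation thresholds refer to the standard score metric’s standard deviation of 15 points (so half a standard deviation is 7.5 points) and that a change exactly at a threshold counts as meeting it. The names `tally_changes`, `orf_gains_met`, and `percent` are hypothetical, and the example scores are invented.

```python
def tally_changes(score_pairs, sd=15.0):
    """Count pretest-to-posttest standard score changes against SD-based cut points.

    As we read the text, full-SD gainers are reported as a subset of the
    half-SD gainers ("Of these students, ..."), so they are counted in both.
    """
    counts = {"gain_half_sd": 0, "gain_full_sd": 0, "loss_half_sd": 0}
    for pre, post in score_pairs:
        change = post - pre
        if change >= 0.5 * sd:
            counts["gain_half_sd"] += 1
        if change >= sd:
            counts["gain_full_sd"] += 1
        if change <= -0.5 * sd:
            counts["loss_half_sd"] += 1
    return counts


def orf_gains_met(pre_wpm, post_wpm, cuts=(10, 15, 20)):
    """Return the ORF gain cut points (words per minute) a student reached."""
    gain = post_wpm - pre_wpm
    return [cut for cut in cuts if gain >= cut]


def percent(count, group_size):
    """Whole-number percentage of the group, as reported in the text."""
    return round(100 * count / group_size)


# Hypothetical WRMT-R standard scores (pretest, posttest) for four students.
example = [(90, 98), (100, 116), (95, 87), (100, 101)]
print(tally_changes(example))
print(orf_gains_met(5, 21))
print(percent(3, 21))
```

Percentages are computed against the whole group (21 single-dose students in Study 1, for example), which is why the text reports subgroups such as “19% of the total group.”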

Figure 1.

Study 1: Individual Student Response on Word Identification

Figure 4.

Study 1: Individual Student Response on Oral Reading Fluency

Study 2

Treatment group: Double dose

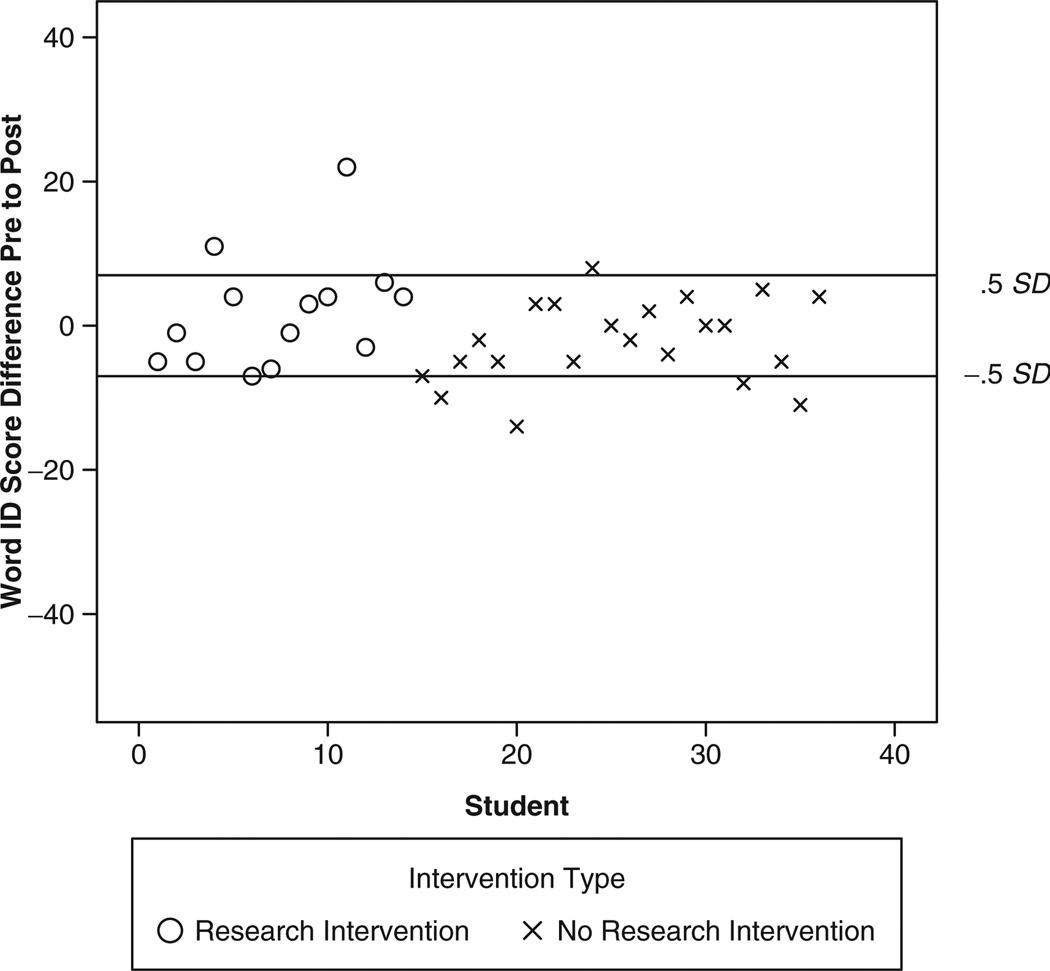

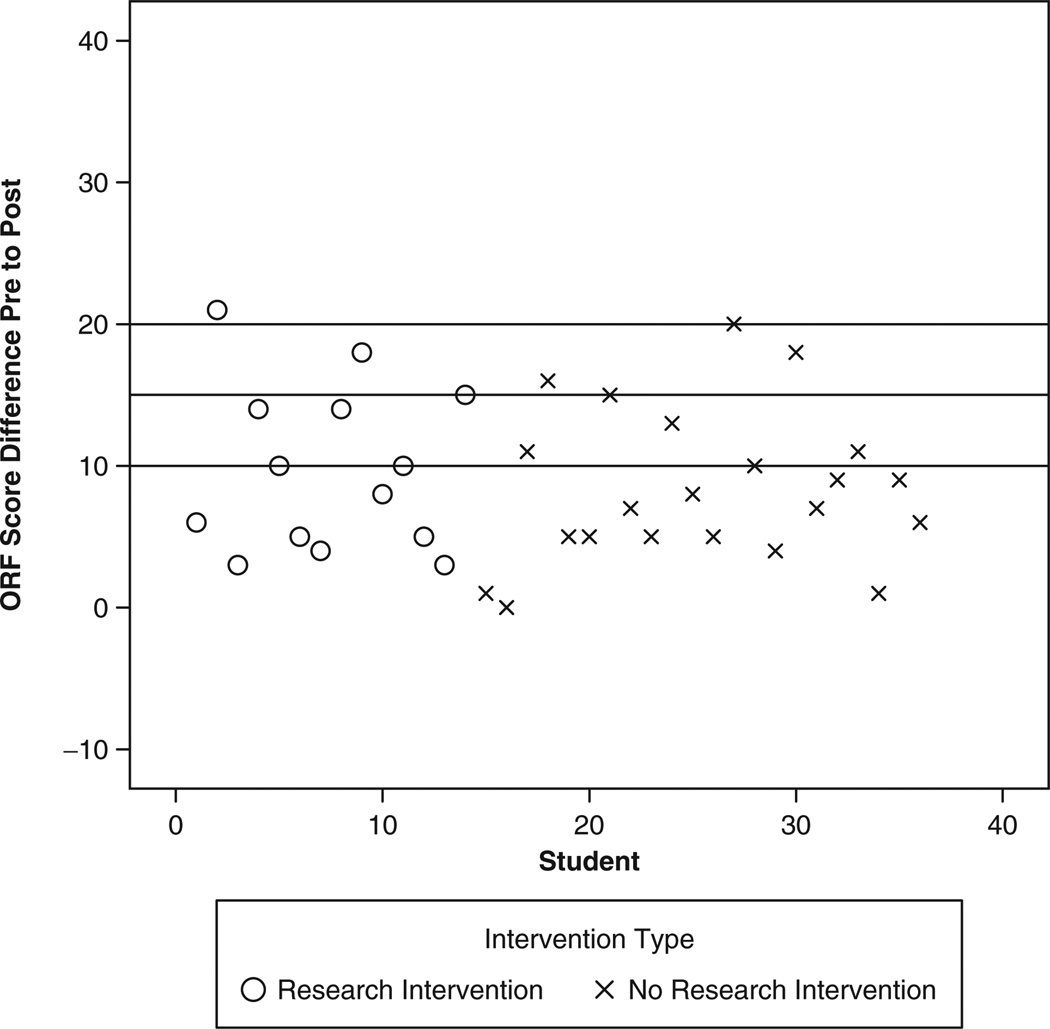

Individual student responses over time indicated that 2 (14%) students achieved an increase of at least half a standard deviation in Word Identification, 3 (21%) students achieved this gain in Word Attack, and 6 (43%) students achieved it in Passage Comprehension. Of these students, 1 student on each measure achieved an increase of more than a full standard deviation. A decrease of half a standard deviation or more occurred for 1 student on Word Identification, 5 (36%) students on Word Attack, and 2 (14%) students on Passage Comprehension. On ORF, 7 (50%) students increased by at least 10 words per minute. Only 3 of these students (21% of the total group) increased by 15 words per minute or more, and 1 student gained more than 20 words per minute. All of the students remained well below the end-of-first-grade benchmark of 40 words per minute (also the exit criterion), indicating their continued risk.

Comparison group

Individual student responses indicated that fewer students in the comparison group than in the double-dose treatment group made substantial progress. One student gained more than half a standard deviation in Word Identification, whereas 5 (23%) students decreased by half a standard deviation or more on this measure. Similarly, 3 (14%) students gained at least half a standard deviation in Word Attack, but 10 (45%) students decreased by half a standard deviation or more. The largest number of comparison students increasing by half a standard deviation or more (8 students, or 36%) was seen on Passage Comprehension; notably, 5 of these students (23% of the total group) gained 1 standard deviation or more. Only 2 (9%) students decreased by half a standard deviation or more on Passage Comprehension.

As in the treatment group, comparison students’ responses on ORF were low: 8 students (36%) gained 10 or more words read correctly per minute, and 4 of these students (18% of the total group) gained more than 15 words read correctly per minute. Given that the average first-grade gain from January to May is approximately 20 words per minute, these gains indicate that students were falling further behind. As in the double-dose treatment group, all students in the comparison group remained substantially below the exit criterion, the end-of-first-grade benchmark of 40 words read correctly per minute.

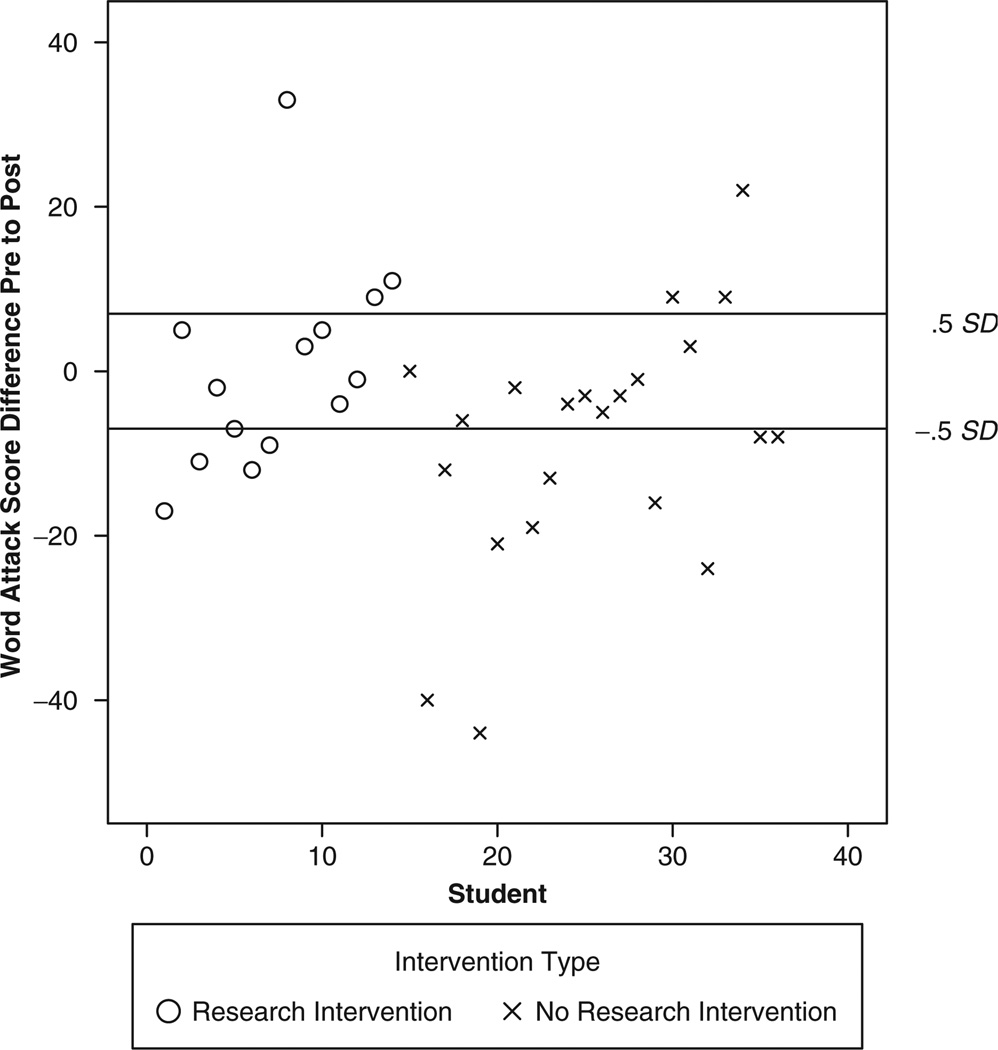

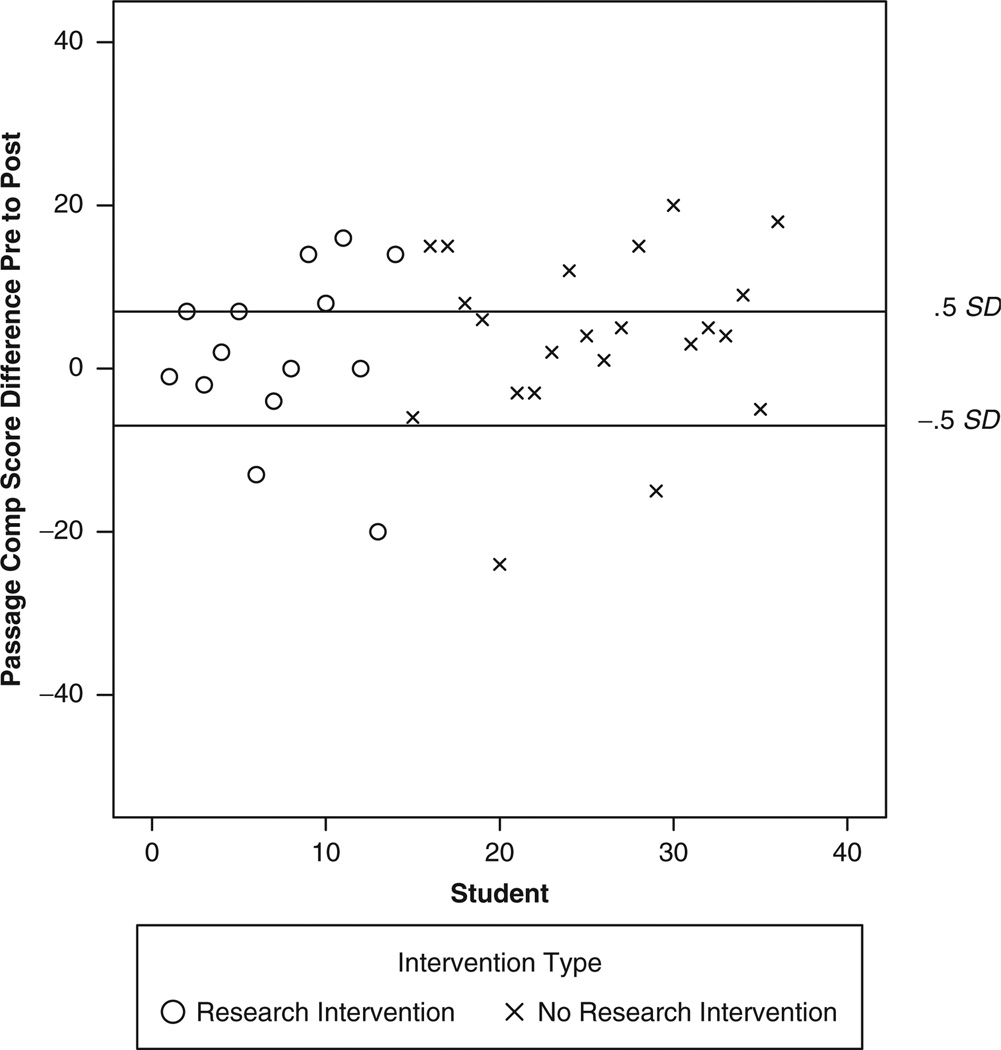

Overall, a larger percentage of students in the double-dose treatment group than in the comparison group demonstrated gains in Word Identification, Word Attack, and ORF. Although similar numbers of students in both groups gained half a standard deviation or more on Passage Comprehension, more students in the comparison group gained 1 standard deviation or more. Figures 5 through 8 show individual student responses over time for Study 2.

Figure 5.

Study 2: Individual Student Response on Word Identification

Figure 8.

Study 2: Individual Student Response on Oral Reading Fluency

Discussion and Implications

The purpose of these two studies was to examine individual student response to various levels of intervention intensity among students who had demonstrated low response to previous interventions. Students demonstrating low response to fall intervention received either a single dose or a double dose of continued intervention, or they continued in the comparison group. Examination of data for students in the treatment conditions (single dose and double dose) revealed few differences in their responses. In short, increasing the intensity of the intervention by double dosing students in the spring of first grade did not seem to increase the number of students responding to intervention. However, results indicated that in each study more students in the treatment group than in the comparison group demonstrated gains from pretest to posttest on standardized measures of Word Identification, Word Attack, and Passage Comprehension.

Examination of students’ responses provides preliminary information related to student outcomes, but caution should be exercised in generalizing results to other students. These descriptive analyses do not take into account measurement error. As a result, individual student posttest scores may be influenced by error variance inherent in the measure rather than by true changes in student outcomes. However, the descriptive findings are reported here to further explore individual student response patterns and for the purpose of generating hypotheses for future studies. Furthermore, in special education, the examination of students’ responses over time is a valuable way to determine the effectiveness of interventions for individual students and to develop alternative treatments.

We think that the context in which these two intervention studies were conducted may have influenced the findings. All students (single-dose treatment, double-dose treatment, and comparison) were receiving relatively effective classroom reading instruction with progress monitoring as part of the large-scale study from which the participants for these two intervention studies were selected. The classroom reading program and its effects are described in detail elsewhere (Vaughn et al., in press); briefly, the program consisted of ongoing professional development (25 hours per year) with occasional in-class support for all first-grade teachers, along with ongoing progress monitoring. Within this context, most first-grade students had a reasonably good opportunity to learn to read. Thus, when students in the treatment groups demonstrated risk status in December, after receiving effective classroom instruction and 25 hours of additional intervention in relatively small groups (four to six students), the likelihood that these students were truly “nonresponders” is quite high. In our view, these students were the very-difficult-to-teach youngsters that a response to intervention model seeks to identify and consider for special education.

Examining students’ responses to the interventions provided in this study informs decision making about the identification of students with learning disabilities within a response to intervention model. As previous research has also indicated (Al Otaiba & Fuchs, 2006; McMaster et al., 2005; Vadasy et al., 2002; Vellutino et al., 1996), we think the response to intervention seen in this study indicates that some low-responding students will continue to be challenging to teach. Given what we currently know from the two studies presented here and from previous studies, students whose response to intervention has been relatively low are likely to require very intensive and ongoing interventions over time, and their response to these interventions is likely to be slow.

To further compare the outcomes of students demonstrating low response, we calculated the per-hour student gain from the findings in these two studies as well as findings reported in previous research that examined continued interventions for low responders. Table 10 displays the calculated standard score gain per hour of intervention for the two studies described in this article as well as for three previously conducted studies reporting standard score outcomes for the WRMT-R (Berninger et al., 2002; Vadasy et al., 2002; Vaughn, Linan-Thompson, & Hickman, 2003). Although these previous studies provided the continued intervention in second grade, the gains reported in two of the studies are similar to the gains realized in the two studies described in this article. The consistent results across studies provide evidence that students demonstrating significant reading difficulties may need different instruction than other at-risk readers. Berninger et al. (2002) described much stronger results for a relatively short intervention (8 hours over 4 months); however, low responders in the study were selected from a previous intervention of relatively low intensity (approximately 10 hours). Each of the other studies provided 25 or more hours of intervention before selecting low responders, suggesting that the participants may have had more persistent difficulties.
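The per-hour figures in Table 10 can be reproduced, at least for this article’s Word Identification results, by dividing the change in group mean standard score by the hours of intervention. The sketch assumes this simple definition (the exact computation is not stated), uses the treatment-group means from Table 5, and the function name `gain_per_hour` is hypothetical.

```python
def gain_per_hour(pre_mean: float, post_mean: float, hours: float) -> float:
    """Change in mean standard score divided by hours of intervention."""
    return (post_mean - pre_mean) / hours


# Treatment-group Word Identification means from Table 5.
study1 = gain_per_hour(97.81, 95.86, 25.0)  # single dose, 25 hours
study2 = gain_per_hour(93.00, 94.86, 50.0)  # double dose, 50 hours
print(f"{study1:.2f} {study2:.2f}")
```

The two results round to −.08 and .04, matching the Word Identification entries for Wanzek & Vaughn (2005), Studies 1 and 2, in Table 10. A per-hour metric of this kind assumes gains accrue roughly linearly with time, so it is most useful for rough comparisons across studies of different lengths.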

Table 10.

Gains per Hour for Low Responders in Intervention

| Study | Grade Level | No. Hours Intervention | WRMT-R Subtest | Standard Score Gain per Hour of Intervention |
|---|---|---|---|---|
| Berninger et al. (2002) | 2 | 8.0 | Word ID | .66 |
| | | | Word Attack | .83 |
| Vadasy, Sanders, Peyton, & Jenkins (2002) | 2 | M = 39.4 | Word ID | −.01 |
| | | | Word Attack | −.12 |
| Vaughn, Linan-Thompson, & Hickman (2003), Group 1ᵃ | 2 | 87.5 | Word Attack | .06 |
| | | | Pass. Comp. | .09 |
| Vaughn, Linan-Thompson, & Hickman (2003), Group 2ᵇ | 2 | 87.5 | Word Attack | .06 |
| | | | Pass. Comp. | .07 |
| Wanzek & Vaughn (2005), Study 1 | 1 | 25.0 | Word ID | −.08 |
| | | | Word Attack | .03 |
| | | | Pass. Comp. | .19 |
| Wanzek & Vaughn (2005), Study 2 | 1 | 50.0 | Word ID | .04 |
| | | | Word Attack | .004 |
| | | | Pass. Comp. | .04 |

Note: WRMT-R = Woodcock Reading Mastery Test–Revised (Woodcock, 1987). Word ID = Word Identification; Pass. Comp. = Passage Comprehension. Vellutino and colleagues (1996) and McMaster, Fuchs, Fuchs, and Compton (2005) also provided intervention to students with previous low response. However, Vellutino et al. did not administer the WRMT-R as an outcome measure, and McMaster et al. did not provide standard scores. Thus, the results of these two studies are not compared here.

ᵃ Students did not respond to 20 weeks of intervention but exited after 30 weeks of intervention.

ᵇ Students did not respond after 30 weeks of intervention.

We are not suggesting that students demonstrating insufficient response to previous intervention will not make progress but are merely indicating that these students may need more specialized instruction for long periods of time to demonstrate significantly improved outcomes. However, the actual practices required to improve performance are largely unknown. We know a great deal more about how to teach students who are struggling readers and respond to intensive small-group instruction than we do about those students (fewer in number) whose response to these interventions has been minimal.

Perhaps the most consistent finding for students in this study as well as other intervention studies is the relatively low outcomes for fluency (e.g., Lovett, Steinbach, & Frijters, 2000; McMaster et al., 2005; Torgesen et al., 2001). The participants in both our single- and double-dose treatments had particular difficulties making gains in fluency. End-of-the-year scores for the large majority of treatment students were well below the expected benchmark of 40 words per minute. Though this difficulty in affecting fluency outcomes for struggling readers is consistent with previous research, we think that future research might investigate factors to further explain consistently low fluency. For example, students in the treatment groups were successful at daily mastery during word-reading instruction but struggled significantly with fluent reading of connected text during the lesson. The daily word-reading instruction proceeded from regular words, where students applied phonics skills they had learned, to irregular word reading, where students had to read words by “sight.” During word-reading instruction, students did not practice reading regular and irregular words mixed together as they are in connected text. In effect, students always knew the correct strategy to use during word-reading instruction (phonics or sight reading). This scaffold may explain the ease with which the students read words in isolation and their subsequent struggles in text reading when this scaffold was not present. Research investigating this hypothesis is warranted. We also hypothesize that providing more opportunity for reading connected text might have been associated with improved outcomes. As students demonstrated strength in word reading and weakness in text reading, students may have profited from adjusting the emphasis to more text reading.

Another factor that may be related to the lack of improved student response after increased time in intervention is the age of the students. To accommodate school scheduling, students in the double-dose treatment received their two 30-minute sessions consecutively, that is, during a 60-minute block with a 1- to 2-minute stretch break between sessions. Throughout the 13-week intervention, tutors reported difficulties with student fatigue, group management, and increased problem behavior during the second 30-minute session. As reported earlier, the lower fidelity ratings for passage reading and comprehension in Study 2 are consistent with this difficulty. To increase the duration of intervention effectively for young students, it may be necessary to distribute intervention time across the day (e.g., one 30-minute session in the morning and one in the afternoon). Of course, it may also be that for students with very extreme reading difficulties, perhaps reading disabilities, it is unrealistic to expect that 50 hours of instruction would distinguish them from other very low responders who received virtually the same instruction for 25 hours.

The lack of differences in individual student response between the single- and double-dose groups may also be related to other aspects of intervention intensity. It may be that additional time is not sufficient to increase intensity for low-responding students and that reduction in group size is needed (Elbaum et al., 2000). An increase in instructional time might have been effective if combined with smaller instructional group sizes (e.g., decreasing group size to 2 or 3 students). Smaller groups might also reduce some of the group management and behavior difficulties discussed earlier.

Additionally, more intense instructional routines may be needed to improve reading for these students who demonstrate the most significant reading difficulties. As a result of the enhanced classroom instruction provided by the larger study, confidence is high that the students in these studies had received effective instruction in their classrooms in addition to the interventions implemented. The students qualifying for the treatment groups each year represented approximately 3% to 4% of the first graders in the district. Although the elements of the intervention employed in the treatment have been shown effective in previous research, the previous research included students who were receiving effective instruction or intervention for the first time—thus increasing the number of students who responded positively and quickly to treatment. Perhaps when only the lowest readers (students who have not responded to previous intervention) are identified and selected from the larger sample of all at-risk students, as they were in this study, the intervention techniques are less powerful. In other words, interventions that have demonstrated effectiveness for “most” students at risk for reading difficulties may be inadequate for the specific group of students who do not respond initially to these interventions. Students demonstrating initial insufficient response may need a different intervention.

Limitations of the Study

When effective instruction is in place, only a small percentage of students are unable to make sufficient progress in reading. The participants for the studies reported here were identified from pools of approximately 500 first-grade students per year. Yet after effective instruction, only 50 students qualified for Study 1 (single dose) and 36 students for Study 2 (double dose). Thus, studying these low responders creates limitations in study design. Nevertheless, the findings related to student response for students with severe reading difficulties provide critical information that can be used to design future research.

A limitation related to the realities of the school setting is the additional reading instruction students in the comparison group received. Because of budget cuts the schools faced prior to the beginning of this study, there was variability between schools in the amount of additional instruction provided by the school for the comparison group. The amount of time students in the treatment condition were provided intervention was controlled across both studies; however, students in the comparison group received interventions in their schools that ranged from 0 to 450 minutes per week. As a result, a few comparison students received substantially more time in intervention than students in the treatment groups, particularly in Study 1. Interestingly, an examination of students’ responses in the comparison group did not suggest they were related to whether or not they received intervention. All patterns of response discussed in the results occurred for students who received additional reading services and students who did not receive additional reading services.

Recommendations for Future Research

This study provides preliminary information regarding student response to varying levels of intervention intensity. Further research is needed to examine effective interventions for students demonstrating low response to current intervention techniques. There is already evidence that increased duration (e.g., 20 weeks vs. 10 weeks) can have positive effects for at-risk students (Vaughn, Linan-Thompson, & Hickman, 2003). Research is needed to compare the two types of time intensity (increased duration and increased session time) to determine whether more time in intervention can improve outcomes for the lowest readers. Studies examining these time-intensity factors can provide guidance on the levels of intensity needed to improve outcomes for all students. Relatedly, examining different ways of increasing intensity, such as time, group size, or a combination of the two, may show how intensity factors affect response specifically for students demonstrating low response to intervention.

This study suggests that more of the same intervention was not beneficial for these students who demonstrated previous low response to intervention. To this end, further examination of instructional techniques specifically for these students is needed. Researchers conducting studies on reading interventions generally have used standard protocols of instruction (e.g., Lovett et al., 1994; Torgesen et al., 2001; Vellutino et al., 1996; Wise, Ring, & Olson, 1999), as were used for the instruction in the current studies. Standard protocol interventions provide clearly specified interventions to all participants. Although the materials and instruction are matched to the students’ current level, the emphasis and procedures for implementing the instruction are similar for all students receiving the intervention. Another type of approach is the individualized approach. When individualized intervention is used, emphasis is placed on designing an intervention in response to the differentiated needs of students. The emphasis of instruction may change frequently throughout the intervention period to match changes in individual student needs. Although this individualized approach has been used in practice (e.g., Ikeda, Tilly, Stumme, Volmer, & Allison, 1996; Marston, Muyskens, Lau, & Canter, 2003) and is often referred to conceptually in the field of special education, little data are available to support many of the implementations (Fuchs, Mock, Morgan, & Young, 2003).

One notable exception is a recent study by Mathes et al. (2005), which implemented two interventions: (a) proactive reading, derived from the model of direct instruction (Carnine, Silbert, Kame’enui, & Tarver, 2004) and using a more standardized approach with a specific scope and sequence, and (b) responsive reading, following the model of cognitive strategy instruction and using a more individualized approach with daily objectives determined by student need. Mathes et al. identified at-risk first-grade students and randomly assigned them to one of three conditions: (a) enhanced classroom instruction and proactive reading, (b) enhanced classroom instruction and responsive reading, or (c) enhanced classroom instruction only. Although students in both intervention conditions had better outcomes than students receiving enhanced classroom instruction only, the two interventions differed significantly only on the Word Attack measure, in favor of proactive reading. However, Mathes et al. did not specifically examine the effects of these interventions for students with previous low response to intervention. Further studies comparing standard intervention protocols with individualized interventions designed to provide more differentiated instruction may provide valuable information about effective instruction for students with significant reading difficulties. Such investigations are vital to provide researchers and practitioners with much-needed information on effective intervention for students with learning disabilities.

Figure 2.

Study 1: Individual Student Response on Word Attack

Figure 3.

Study 1: Individual Student Response on Passage Comprehension

Figure 6.

Study 2: Individual Student Response on Word Attack

Figure 7.

Study 2: Individual Student Response on Passage Comprehension

Table 6.

WRMT-R Word Attack Pretest and Posttest Standard Scores

| Group | Study 1 Pretest M (SD) | Study 1 Posttest M (SD) | Study 2 Pretest M (SD) | Study 2 Posttest M (SD) |
|---|---|---|---|---|
| Treatment | 96.24 (12.86) | 96.90 (13.29) | 101.29 (12.45) | 101.50 (12.01) |
| Comparison | 96.55 (12.24) | 95.24 (12.34) | 104.09 (11.97) | 95.64 (13.62) |

Note: WRMT-R = Woodcock Reading Mastery Test–Revised (Woodcock, 1987).

Table 7.

WRMT-R Passage Comprehension Pretest and Posttest Standard Scores

| Group | Study 1 Pretest M (SD) | Study 1 Posttest M (SD) | Study 2 Pretest M (SD) | Study 2 Posttest M (SD) |
|---|---|---|---|---|
| Treatment | 82.57 (9.74) | 87.38 (7.69) | 82.64 (4.70) | 84.64 (10.95) |
| Comparison | 87.28 (10.08) | 88.00 (8.86) | 86.77 (10.90) | 90.68 (7.96) |

Note: WRMT-R = Woodcock Reading Mastery Test–Revised (Woodcock, 1987).

Biographies

Jeanne Wanzek, PhD, is an assistant professor in special education at Florida State University and on the research faculty at the Florida Center for Reading Research. Her research interests include learning disabilities, reading, effective instruction, and response to intervention.

Sharon Vaughn, PhD, is the H. E. Hartfelder/Southland Corporation Regents Chair in Human Development at the University of Texas at Austin. She is currently the principal investigator or co–principal investigator on several research grants investigating effective interventions for students with reading difficulties and students who are English language learners.

Contributor Information

Jeanne Wanzek, Florida State University.

Sharon Vaughn, University of Texas at Austin.

References

- Al Otaiba S, Fuchs D. Characteristics of children who are unresponsive to early literacy intervention: A review of the literature. Remedial and Special Education. 2002;23:300–316.

- Al Otaiba S, Fuchs D. Who are the young children for whom best practices in reading are ineffective? An experimental and longitudinal study. Journal of Learning Disabilities. 2006;39:414–431. doi: 10.1177/00222194060390050401.

- Barnett DW, Daly EJ, Jones KM, Lentz FE. Response to intervention: Empirically based special service decisions from single-case designs of increasing and decreasing intensity. Journal of Special Education. 2004;38:66–79.

- Berninger VW, Abbott RD, Vermeulen K, Ogier S, Brooksher R, Zook D, et al. Comparison of faster and slower responders to early intervention in reading: Differentiating features of their language profiles. Learning Disability Quarterly. 2002;25:59–76.

- Biancarosa G, Snow CE. Reading Next: A vision for action and research in middle and high school literacy. A report to Carnegie Corporation of New York. Washington, DC: Alliance for Excellent Education; 2004.

- Carnine DW, Silbert J, Kame’enui EJ, Tarver SG. Direct instruction reading. 4th ed. Upper Saddle River, NJ: Merrill/Prentice Hall; 2004.

- Donovan MS, Cross CT. Minority students in special and gifted education. Washington, DC: National Academy Press; 2002.

- Dunn LM, Dunn LM. Peabody picture vocabulary test. 3rd ed. Circle Pines, MN: American Guidance Service; 1997.

- Elbaum B, Vaughn S, Hughes MT, Moody SW. How effective are one-to-one tutoring programs in reading for elementary students at-risk for reading failure? A meta-analysis of the intervention research. Journal of Educational Psychology. 2000;92:605–619.

- Foorman BR, Francis DJ, Fletcher JM, Schatschneider C, Mehta P. The role of instruction in learning to read: Preventing reading failure in at-risk children. Journal of Educational Psychology. 1998;90:37–55.

- Fuchs D, Mock D, Morgan PL, Young CL. Responsiveness-to-intervention: Definitions, evidence, and implications for the learning disabilities construct. Learning Disabilities Research and Practice. 2003;18:157–171.

- Good RH, Kaminski RA. Dynamic Indicators of Basic Early Literacy Skills. 6th ed. Eugene, OR: Institute for the Development of Educational Achievement; 2002.

- Hammill D. What we know about correlates of reading. Exceptional Children. 2004;70:453–468.

- Howell DC. Statistical methods for psychology. Boston: PWS-Kent; 1992.

- Ikeda MJ, Tilly WD III, Stumme J, Volmer L, Allison R. Agency-wide implementation of problem solving consultation: Foundations, current implementation, and future directions. School Psychology Quarterly. 1996;11:228–243.

- Individuals With Disabilities Education Act, 20 U.S.C. 1400 et seq.; 2004.

- Lovett M, Borden L, DeLuca T, Lacerenza L, Benson N, Brackstone D. Treating core deficits of developmental dyslexia: Evidence of transfer of learning after phonologically- and strategy-based reading training programs. Developmental Psychology. 1994;30:805–822.

- Lovett MW, Steinbach KA, Frijters JC. Remediating the core deficits of developmental reading disability: A double-deficit perspective. Journal of Learning Disabilities. 2000;33:334–358. doi: 10.1177/002221940003300406.

- Marston D, Muyskens P, Lau M, Canter A. Problem-solving model for decision-making with high-incidence disabilities: The Minneapolis experience. Learning Disabilities Research and Practice. 2003;18(3):186–200.

- Mathes PG, Denton CA, Fletcher JM, Anthony JL, Francis DJ, Schatschneider C. The effects of theoretically different instruction and student characteristics on the skills of struggling readers. Reading Research Quarterly. 2005;40:148–182.

- McMaster KL, Fuchs D, Fuchs LS, Compton DL. Responding to nonresponders: An experimental field trial of identification and intervention methods. Exceptional Children. 2005;71:445–463.

- National Center for Education Statistics. National assessment of educational progress. Washington, DC: Author; 2005. Retrieved August 11, 2005, from http://nces.ed.gov/nationsreportcard/ltt/results2004/natreadinginfo.asp.

- National Reading Panel. Teaching children to read: An evidence-based assessment of the scientific research literature on reading and its implications for reading instruction: Reports of the subgroups. Bethesda, MD: National Institute of Child Health and Human Development; 2000.

- Rand Reading Study Group. Reading for understanding. Santa Monica, CA: Rand Corporation; 2002. Available from http://www.rand.org/multi/achievementforall/

- Snow CE, Burns MS, Griffin P. Preventing reading difficulties in young children. Washington, DC: National Academies Press; 1998.

- Swanson HL. Reading research for students with LD: A meta-analysis of intervention outcomes. Journal of Learning Disabilities. 1999;32:504–532. doi: 10.1177/002221949903200605.

- Swanson HL, Trainin G, Necoechea DM, Hammill DD. Rapid naming, phonological awareness, and reading: A meta-analysis of the correlation evidence. Review of Educational Research. 2003;73:407–440.

- Torgesen JK. Individual differences in response to early interventions in reading: The lingering problem of treatment resisters. Learning Disabilities Research and Practice. 2000;15:55–64.

- Torgesen JK, Alexander AW, Wagner RK, Rashotte CA, Voeller KKS, Conway T. Intensive remedial instruction for children with severe reading disabilities: Immediate and long-term outcomes from two instructional approaches. Journal of Learning Disabilities. 2001;34:33–58. doi: 10.1177/002221940103400104.

- Torgesen JK, Wagner RK, Rashotte CA. Contributions of phonological awareness and rapid automatic naming ability to the growth of word-reading skills in second- to fifth-grade children. Scientific Studies of Reading. 1997;1:161–185.

- Torgesen JK, Wagner RK, Rashotte CA, Rose E, Lindamood P, Conway T, et al. Preventing reading failure in young children with phonological processing disabilities: Group and individual responses to instruction. Journal of Educational Psychology. 1999;91:579–593.

- Vadasy PF, Sanders EA, Peyton JA, Jenkins JR. Timing and intensity of tutoring: A closer look at the conditions for effective early literacy tutoring. Learning Disabilities Research and Practice. 2002;17:227–241.

- Vaughn S, Gersten R, Chard DJ. The underlying message in LD intervention research: Findings from research syntheses. Exceptional Children. 2000;67:99–114.

- Vaughn S, Linan-Thompson S, Elbaum B, Wanzek J, Rodriguez KT, Cavanaugh CL, et al. Centers for implementing K-3 behavior and reading intervention models preventing reading difficulties: A three-tiered intervention model. Unpublished report. University of Texas Center for Reading and Language Arts; 2004.

- Vaughn S, Linan-Thompson S, Hickman P. Response to instruction as a means of identifying students with reading/learning disabilities. Exceptional Children. 2003;69:391–409.

- Vaughn S, Linan-Thompson S, Kouzekanani K, Bryant DP, Dickson S, Blozis SA. Reading instruction grouping for students with reading difficulties. Remedial and Special Education. 2003;24:301–315.

- Vaughn S, Linan-Thompson S, Murray CS, Woodruff T, Wanzek J, Scammacca N, et al. High and low responders to early reading intervention: Response to intervention. Exceptional Children. (in press).

- Vaughn S, Wanzek J, Woodruff AL, Linan-Thompson S. A three-tier model for preventing reading difficulties and early identification of students with reading disabilities. In: Haager DH, Vaughn S, Klingner JK, editors. Validated reading practices for three tiers of intervention. Baltimore: Brookes; 2007. pp. 11–27.

- Vellutino FR, Scanlon DM. The interactive strategies approach to reading intervention. Contemporary Educational Psychology. 2002;27:573–635.

- Vellutino FR, Scanlon DM, Sipay ER, Small SG, Pratt A, Chen R, et al. Cognitive profiles of difficult-to-remediate and readily remediated poor readers: Early intervention as a vehicle for distinguishing between cognitive and experiential deficits as basic causes of specific reading disability. Journal of Educational Psychology. 1996;88:601–638.

- Wise BW, Ring J, Olson RK. Training phonological awareness with and without explicit attention to articulation. Journal of Experimental Child Psychology. 1999;72:271–304. doi: 10.1006/jecp.1999.2490.

- Wolf M, Bowers PG. The double-deficit hypothesis for the developmental dyslexias. Journal of Educational Psychology. 1999;91:415–438.

- Woodcock RW. Woodcock Reading Mastery Test–Revised. Circle Pines, MN: American Guidance Service; 1987.

- Woodcock RW, Johnson MB. Woodcock-Johnson Psycho-Educational Battery. Allen, TX: DLM Teaching Resources; 1977.