Abstract

Accurately reading the body language of others may be vital for navigating the social world, and this ability may be influenced by factors such as our gender, personality characteristics and neurocognitive processes. This fMRI study examined the brain activation of 26 healthy individuals (14 women and 12 men) while they judged the action performed or the emotion felt by stick figure characters appearing in different postures. In both tasks, participants activated areas associated with visual representation of the body, motion processing and emotion recognition. Behaviorally, participants judged the physical actions of the characters more easily than their emotional states. Neurally, participants showed more activation in areas associated with emotion processing in the emotion detection task, whereas they showed more activation in visual, spatial and action-related areas in the physical action task. Gender differences emerged in brain responses, such that men showed greater activation than women in the left dorsal premotor cortex in both tasks. Finally, participants higher in self-reported empathy demonstrated greater activation in areas associated with self-referential processing and emotion interpretation. These results suggest that empathy levels and the participant's sex may affect neural responses to emotional body language.

Keywords: fMRI, body language, posture, emotion, gender, empathy

INTRODUCTION

Human beings are continually engaged in observing and interpreting the actions, emotions and intentions of others. Nonverbal cues, such as body posture, provide vital input in such interpretations. Posture has been found to be a particularly powerful tool in both expressing and recognizing emotion (Bianchi-Berthouze et al., 2006), and the body language portrayed by a posture can serve as a rich source of information that can reveal the goals, intentions and emotions of others. Unlike face processing, which demands a closer look at the features of a face, body language allows us to make social interpretations configurally and from a distance (e.g. Reed et al., 2003, 2006). Such analysis helps us to adjust our own behavior to match the demands of the interpersonal environment, which may confer substantial social benefit. Thus, it is of little surprise that the ability to engage in configural body processing seems to develop as early as 3 months of age (Gliga and Dehaene-Lambertz, 2005). Neurologically, the representation of body language and its emotional components appears to rely on a diverse network of brain areas that process different types of body-relevant information. One useful framework for understanding the neural correlates of body language interpretation is provided by de Gelder (2006), who describes three coordinated networks that decode: (i) the visuomotor aspects of body language, (ii) the reflex-like emotional response to body language and (iii) the proprioceptive (both emotional and physical) response to body language.

The visual representation of the body has been associated with the extrastriate body area (EBA), located in the lateral occipitotemporal cortex (Downing et al., 2001, 2006a,b; Pourtois et al., 2007), and the fusiform body area (FBA) (Peelen and Downing, 2005; Schwarzlose et al., 2005). While the EBA has commonly been found to respond to body parts, the FBA may help to form a holistic interpretation of the body (Taylor et al., 2007). The responses of the EBA and FBA to body parts have also been found to extend to the interpretation of emotional body language, where their activity was correlated with amygdala activation (Peelen et al., 2007).

The emotional processing of body postures is thought to occur rapidly and automatically (de Gelder and Hadjikhani, 2006), although most of this research has focused on the interpretation of fearful body language. In fearful body language, the areas that are implicated in this reflex-like emotional interpretation are similar to those thought to be involved in automatic affective face processing (Johnson, 2005; Vuilleumier, 2005), and they include the subcortical structures of the superior colliculus, the pulvinar nucleus, the striatum and the amygdala (de Gelder, 2006). However, nonfear emotions may activate regions other than the amygdala. For example, happy body language was found to elicit increased activation of the visual cortex and not the amygdala (de Gelder et al., 2004). Areas such as the anterior insula, medial prefrontal cortex (MPFC), and anterior cingulate cortex (ACC) may also be expected to respond to emotional body language, as these areas have been found to be activated in various emotional tasks (for a review see Phan et al., 2004) and may also be a part of the proprioceptive response to emotional body language.

The translation of the perceived body language into a motor or proprioceptive script, which allows the perceiving agent to make sense of the action, may be accomplished by another network, the mirror neuron system (MNS). The MNS, discovered in nonhuman primates, consists of specific neurons that fire both when a monkey performs an action and when it observes another individual performing the same action (Gallese et al., 1996; Rizzolatti et al., 1996). In humans, the MNS primarily involves the inferior frontal gyrus (IFG) and the inferior parietal lobule (IPL) (Iacoboni et al., 1999; Decety et al., 2002). However, the MNS may also be assisted by supporting areas, such as the superior temporal sulcus (STS), that provide visuomotor congruence during observation and imitation (Molenberghs et al., 2010) but are not formally part of the MNS. Although there is some debate about whether an equivalent of the MNS exists in humans (see Hickok, 2009; Turella et al., 2009), the general consensus is that the mirror neuron system (i.e. the IFG and IPL) and the supporting STS respond selectively to biological motion and engage in visuomotor action planning. For example, the STS and the parietal lobe (along with the premotor cortex) have been found to be involved in the perception of the body and body movements (for a review see Allison et al., 2000), whereas the IFG and IPL have been found to be involved in the understanding, planning and execution of motor actions (Rizzolatti and Craighero, 2004).

Taken together, the three networks proposed by de Gelder (2006) may allow static snapshots of body images to be combined with motion, proprioceptive and affective information processed by other brain areas. Nevertheless, the specific brain regions that are activated by body language may vary according to individual differences. Indeed, differences in personality and gender have been associated with behavioral differences in interpreting emotions (see Hamann and Canli, 2004 for a review) and have often been accompanied by distinct neural responses in emotion-related brain regions. For example, while women showed more activation than men in the left anterior insula when viewing emotionally aversive stimuli (Mériau et al., 2009), men showed more activation than women in the right anterior insula when viewing emotional faces, scenes and words (Naliboff et al., 2003; Lee et al., 2005). These gender differences may partly reflect a third variable, such as empathy, that mediates the relation between gender and the pattern of neural activation. For example, Singer and colleagues (2004) measured their participants' empathy and the activation in emotion-related brain regions while the participants witnessed a loved one experiencing pain. They found the degree of empathy to be positively correlated with activation in the left anterior insula and the rostral ACC. Similarly, Hooker and colleagues (2010) found that self-rated empathy was related to more activation in the left IFG, right STS, left somatosensory cortex and bilateral middle temporal gyri when participants watched emotionally charged animations. These results suggest that the degree of empathic reaction may be related to the amount of activation in certain emotion-related brain regions.

The primary goal of the present study was to examine the activation and coordination of the aforementioned networks while participants interpreted actions and emotions from body postures. An additional goal was to investigate the effects of gender and self-reported inclination toward empathy on how the body postures were interpreted. In line with previous research (e.g. de Gelder, 2006), we hypothesized that the interpretation of body postures would activate brain areas associated with visual body processing (EBA and FBA), emotional processing (anterior insula, MPFC, striatum and ACC) and motor/proprioceptive processing [the MNS and the supplementary motor area (SMA)]. In addition, we hypothesized that brain activation in response to body postures may systematically differ according to gender and/or self-reported empathizing, especially in brain regions implicated in previous research, such as the anterior insula.

METHOD

Participants

Twenty-six right-handed, healthy university students (mean age: 21.0 years; age range: 18.5–35.8 years) participated in this functional magnetic resonance imaging (fMRI) study. The participants (14 women and 12 men) were recruited through a screening questionnaire administered in Introduction to Psychology classes of the Department of Psychology at the University of Alabama at Birmingham (UAB). Individuals were not recruited if they had any MRI contraindications, were taking psychotropic medications, had claustrophobia or had hearing problems. No participants reported a developmental cognitive disorder, anxiety disorder, schizophrenia or obsessive compulsive disorder. Each participant signed an informed consent form approved by the UAB Institutional Review Board.

Stimuli and experimental task

The stimuli consisted of stick figure characters (created using Pivot Stickfigure Animator) in different postures, drawn in white on a black background. All stick figures were static line drawings, drawn to look as if the character was engaged in an action (see Appendix A for the stick figure stimuli depicting actions and emotions). Stick figure depictions were chosen over photographs of human action because: (i) stick figures are extremely common and convincing representations of human actions in the real world (e.g. road signs and bathroom signs) and (ii) stick figures do not convey the race, gender or facial features of the character, which might bias how viewers interpret a given action or emotion.

The experiment used a blocked design with two experimental conditions (physical action or emotional interpretation of the stick figure posture) and a fixation baseline (Figure 1). In both experimental conditions, each trial consisted of a stick figure character presented at the center of the screen with three one-word response options. The participants' task was to view the character's posture and choose, from three alternatives (A, B or C), the option that best described the action or emotion the character was portraying. In the emotion task, participants chose the option that best represented how the character was feeling (i.e. sad, happy, scared, upset, relaxed, confused, excited, tired and pained); in the physical action task, they chose the option that best represented which physical action the character was performing (i.e. running, swimming, pushing, cartwheel, handstand, sitting, stretching and throwing). The response options for the physical action task included two action words and one emotional state word, while the response options for the emotion task included two emotional state words and one action word. Of the three options, one was the correct answer, one was a distractor from the same category (an emotion word in the emotion condition and a physical action word in the action condition) and the third was a distractor from the other category. All stimuli were pilot tested on a separate group of students from an Introduction to Psychology course to confirm that the stick figure character's emotion or action was interpreted consistently. Only stimuli that showed above 80% agreement were used in the experiment, which left nine emotional stimuli and nine action stimuli.
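The option structure and the pilot criterion described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' stimulus code: the word pools are taken from the lists above, but the random choice of distractors, the shuffling of options into positions A, B and C, and the helper names `make_options` and `passes_pilot` are hypothetical. The pilot filter applies the "above 80%" criterion literally, so a stimulus with exactly 80% agreement is excluded.

```python
import random

# Response-word pools as listed in the Method section.
EMOTIONS = ["sad", "happy", "scared", "upset", "relaxed",
            "confused", "excited", "tired", "pained"]
ACTIONS = ["running", "swimming", "pushing", "cartwheel",
           "handstand", "sitting", "stretching", "throwing"]


def make_options(correct, task, rng):
    """Build the A/B/C options for one trial: the correct word, a distractor
    from the same category and a distractor from the other category."""
    same, other = (EMOTIONS, ACTIONS) if task == "emotion" else (ACTIONS, EMOTIONS)
    same_distractor = rng.choice([w for w in same if w != correct])
    cross_distractor = rng.choice(other)
    options = [correct, same_distractor, cross_distractor]
    rng.shuffle(options)  # random answer position (an assumption of this sketch)
    return dict(zip("ABC", options))


def passes_pilot(pilot_labels, intended, threshold=0.80):
    """Keep a stimulus only if MORE than `threshold` of pilot raters
    chose the intended label."""
    agreement = sum(label == intended for label in pilot_labels) / len(pilot_labels)
    return agreement > threshold


rng = random.Random(0)
print(make_options("sad", "emotion", rng))
print(passes_pilot(["sad"] * 9 + ["tired"], "sad"))      # → True (90% agreement)
print(passes_pilot(["sad"] * 8 + ["tired"] * 2, "sad"))  # → False (exactly 80%)
```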

Fig. 1.

Example of a sample trial of the physical action task and the emotion task, and the order of presentation of the blocks in the experiment. Participants were asked what the character was doing (action) and what the character was feeling (emotion). Participants chose their answer from three alternative words, one of which best described the figure.

Prior to the fMRI scan, each participant practiced the task on a laptop. The practice stimuli consisted of six unique practice trials (three physical actions and three emotions). In the scanner, the stimuli were presented with the stimulus presentation software E-Prime 1.2 (Psychology Software Tools, Pittsburgh, PA, USA). An IFIS interface (Integrated Functional Imaging System, Invivo Corporation, Orlando, FL, USA) was used to present the visual stimuli onto a screen behind the participant while in the scanner. Each experimental block lasted 18 s (three trials per block, each lasting 6 s). There was a 6-s break at the end of each action block and before each emotion block. In all, there were three physical action blocks, three emotion blocks and three fixation baselines. The order of the action and emotion blocks was not counterbalanced. The fixation baselines lasted 24 s and were presented at the beginning of the experiment and after every two blocks. Participants responded on a fiber optic button response system by pressing the left index finger to select response A, the right index finger to select response B and the right middle finger to select response C. In both experimental conditions, the stick figure remained on the screen for the entire 6 s, during which the participant was asked to respond.
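To make the timing concrete, the run can be laid out as a list of (condition, onset, duration) events and turned into boxcar regressors. This is a hypothetical reconstruction: the exact block order appears only in Figure 1, so the sketch assumes that each cycle runs fixation, action block, a single 6-s break, then emotion block, and it assumes one scan per second (TR = 1000 ms, as in the imaging parameters). Under those assumptions the run lasts 198 s. The function names are illustrative.

```python
# Hypothetical run timeline. Assumed order per cycle: fixation (24 s),
# action block (18 s), 6-s break, emotion block (18 s), repeated 3 times.
def build_schedule(n_cycles=3, fix_s=24, block_s=18, break_s=6):
    events, t = [], 0
    for _ in range(n_cycles):
        for name, dur in (("fixation", fix_s), ("action", block_s),
                          ("break", break_s), ("emotion", block_s)):
            events.append((name, t, dur))  # (condition, onset in s, duration in s)
            t += dur
    return events, t


def boxcar(events, condition, n_scans, tr_s=1.0):
    """1.0 for scans acquired during `condition`, 0.0 otherwise."""
    reg = [0.0] * n_scans
    for name, onset, dur in events:
        if name == condition:
            for i in range(n_scans):
                if onset <= i * tr_s < onset + dur:
                    reg[i] = 1.0
    return reg


events, total_s = build_schedule()
print(total_s)                                  # → 198
print(sum(boxcar(events, "emotion", total_s)))  # → 54.0
```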

Measures

The Empathy Quotient (EQ; Baron-Cohen and Wheelwright, 2004) is a 60-item self-report measure that assesses an individual's inclination toward empathy. Participants indicate whether they strongly agree, slightly agree, slightly disagree or strongly disagree with each statement (e.g. 'I really enjoy caring for other people'). The EQ has previously been shown to have good psychometric properties, with low kurtosis (−0.32), low skewness (0.28) and high internal consistency (Cronbach's α = 0.86; Wakabayashi et al., 2007). Forty of the 60 items are scored (the remaining 20 are filler items), each contributing 0, 1 or 2 points, so scores range from zero (low empathy) to a maximum of 80 (high empathy).
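Scoring can be sketched as follows, assuming the published convention that empathy-keyed items earn 2 points for a strong and 1 point for a mild empathic response, with filler items unscored. The item keys in the example are hypothetical placeholders, not the real EQ key. Cronbach's α, the internal-consistency statistic cited above, is computed with the standard formula α = k/(k − 1) × (1 − Σ item variances / variance of total scores).

```python
from statistics import variance

# Points for an item keyed in the empathic "agree" direction, and for one keyed
# in the "disagree" direction. The real EQ item keys are NOT reproduced here.
AGREE_KEYED = {"strongly agree": 2, "slightly agree": 1,
               "slightly disagree": 0, "strongly disagree": 0}
DISAGREE_KEYED = {"strongly agree": 0, "slightly agree": 0,
                  "slightly disagree": 1, "strongly disagree": 2}


def score_eq(responses, keys):
    """responses: {item_id: answer}; keys: {item_id: "+", "-" or None (filler)}."""
    total = 0
    for item, answer in responses.items():
        key = keys.get(item)
        if key == "+":
            total += AGREE_KEYED[answer]
        elif key == "-":
            total += DISAGREE_KEYED[answer]
        # filler items (key None) contribute nothing
    return total


def cronbach_alpha(rows):
    """rows: one list of k item scores per participant."""
    k = len(rows[0])
    item_var = sum(variance(col) for col in zip(*rows))
    total_var = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - item_var / total_var)


keys = {"q1": "+", "q2": "-", "q3": None}  # hypothetical keying
answers = {"q1": "strongly agree", "q2": "slightly disagree", "q3": "strongly agree"}
print(score_eq(answers, keys))  # → 3
```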

Imaging parameters

All fMRI scans were acquired on a Siemens 3.0 T Allegra head-only scanner (Siemens Medical Inc., Erlangen, Germany) at the Civitan International Research Center of the University of Alabama at Birmingham. For structural imaging, initial high-resolution T1-weighted scans were acquired using a 160-slice three-dimensional (3D) MPRAGE volume scan with TR = 200 ms, TE = 3.34 ms, flip angle = 7°, FOV = 25.6 cm, 256 × 256 matrix size and 1-mm slice thickness. The stimuli were rear-projected onto a translucent plastic screen, and participants viewed the screen through a mirror attached to the head coil. For functional imaging, a single-shot gradient-recalled echo-planar pulse sequence was used, which offered the advantage of rapid image acquisition (TR = 1000 ms, TE = 30 ms, flip angle = 60°). Seventeen adjacent oblique axial slices were acquired in an interleaved sequence with 5-mm slice thickness, 1-mm slice gap, a 24 × 24 cm field of view (FOV) and a 64 × 64 matrix, resulting in an in-plane resolution of 3.75 × 3.75 mm (voxel size 3.75 × 3.75 × 5 mm).
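The derived functional geometry follows from simple arithmetic on the listed parameters: 240 mm / 64 = 3.75 mm in-plane, and 17 slices of 5 mm separated by 16 gaps of 1 mm span 101 mm. The slice-timing part is an assumption: the text states only that slices were interleaved, so the sketch assumes an ascending order that acquires the odd slices first and spaces slices evenly across the TR. Per-slice acquisition times of this kind are the input to slice-timing correction.

```python
def epi_geometry(fov_mm=240.0, matrix=64, thickness_mm=5.0, gap_mm=1.0, n_slices=17):
    """In-plane voxel size and total slab coverage for the functional scans."""
    in_plane_mm = fov_mm / matrix
    coverage_mm = n_slices * thickness_mm + (n_slices - 1) * gap_mm
    return in_plane_mm, coverage_mm


def interleaved_slice_times(n_slices=17, tr_ms=1000.0):
    """Acquisition time (ms from volume start) of each slice, ASSUMING an
    ascending interleaved order (odd slices first) spread evenly over the TR."""
    order = list(range(1, n_slices + 1, 2)) + list(range(2, n_slices + 1, 2))
    slice_dt = tr_ms / n_slices
    return {s: pos * slice_dt for pos, s in enumerate(order)}


print(epi_geometry())        # → (3.75, 101.0)
times = interleaved_slice_times()
print(round(times[2], 1))    # → 529.4 (slice 2 is the 10th slice acquired)
```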

fMRI data analysis

To examine the distribution of activation, the data were analyzed using Statistical Parametric Mapping (SPM8) software (Wellcome Department of Cognitive Neurology, London, UK). Images were corrected for slice acquisition timing, motion-corrected, normalized to the Montreal Neurological Institute (MNI) template, resampled to 2 × 2 × 2 mm voxels and smoothed with an 8-mm Gaussian kernel to decrease spatial noise. For first-level analyses, whole-brain statistical analyses were performed on individual data by using the general linear model (GLM) as implemented in SPM8 (Friston et al., 1995) with emotion, action and fixation as regressors. Four within-subject contrasts were examined: (i) emotion–fixation, (ii) action–fixation, (iii) emotion–action and (iv) action–emotion.
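A minimal NumPy sketch of a first-level GLM of this kind is shown below. It approximates SPM's canonical hemodynamic response with a double-gamma function (shape parameters 6 and 16, undershoot ratio 1/6) and fits ordinary least squares. Unlike SPM8, it applies no high-pass filter or autocorrelation model, and it treats fixation as the implicit baseline rather than as a separate regressor, so the four contrast vectors are written for the columns [emotion, action, constant]. The function names are hypothetical.

```python
import math

import numpy as np


def canonical_hrf(tr_s=1.0, length_s=32.0):
    """Double-gamma HRF resembling SPM's canonical form: a unit-scale gamma
    with shape 6 minus a gamma with shape 16 scaled by 1/6."""
    t = np.arange(0.0, length_s, tr_s)

    def gamma_pdf(x, shape):
        return x ** (shape - 1) * np.exp(-x) / math.gamma(shape)

    h = gamma_pdf(t, 6) - gamma_pdf(t, 16) / 6.0
    return h / h.sum()


def design_matrix(boxcars, tr_s=1.0):
    """Convolve each condition's boxcar with the HRF and append a constant."""
    hrf = canonical_hrf(tr_s)
    cols = [np.convolve(np.asarray(b, float), hrf)[: len(b)] for b in boxcars]
    cols.append(np.ones(len(boxcars[0])))
    return np.column_stack(cols)


def contrast_t(y, X, c):
    """OLS fit y = X @ beta + e; return the contrast estimate c @ beta and its t."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    dof = X.shape[0] - np.linalg.matrix_rank(X)
    sigma2 = resid @ resid / dof
    c = np.asarray(c, float)
    se = np.sqrt(sigma2 * c @ np.linalg.pinv(X.T @ X) @ c)
    return c @ beta, (c @ beta) / se


# Columns: [emotion, action, constant]; fixation is the implicit baseline here.
CONTRASTS = {
    "emotion-fixation": [1, 0, 0],
    "action-fixation": [0, 1, 0],
    "emotion-action": [1, -1, 0],
    "action-emotion": [-1, 1, 0],
}
```

For one voxel's time series `y`, `contrast_t(y, design_matrix([emotion_boxcar, action_boxcar]), CONTRASTS["emotion-action"])` returns the effect size and t-value that a contrast image would store at that voxel.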

For the second-level (group) analyses, two types of analyses were conducted, in line with their distinct goals. First, our goal was to analyze the task-minus-fixation conditions (emotion–fixation and action–fixation) using a region-of-interest (ROI) analysis, in order to compare our results directly with past findings while reducing the problem of multiple comparisons. Anatomical ROIs were therefore defined using the WFU PickAtlas toolbox (Maldjian et al., 2003). Twelve bilateral ROI masks were selected a priori based on past research (e.g. de Gelder, 2006): the IFG, the IPL, the striatum (caudate and putamen), the MPFC, the STS, the anterior insula, the amygdala, the ACC, the EBA, the FBA, the superior colliculus and the pulvinar aspect of the thalamus. For areas not anatomically defined in the WFU PickAtlas toolbox, we used the MNI coordinates of regions implicated in past research; these coordinate-defined ROI masks are listed in Table 1. Statistical significance of activation in each ROI was determined for the emotion–fixation and action–fixation contrasts by deriving statistical parametric maps from the resulting paired t-value associated with each voxel, using a family-wise error (FWE) rate of 0.05.

Table 1.

MNI coordinates of ROI masks that were not anatomically defined by WFU pickatlas

| Region | x | y | z | Radius (mm) | Reference |
|---|---|---|---|---|---|
| Left EBA | −46 | −70 | 0.65 | 8 | (Downing et al., 2001) |
| Right EBA | 46 | −70 | 0.65 | 8 | (Downing et al., 2001) |
| Left FBA | −40 | −44 | −24 | 5 | (Peelen and Downing, 2005) |
| Right FBA | 40 | −44 | −24 | 5 | (Peelen and Downing, 2005) |
| L/R Sup Colliculus | −2 | −28 | −16 | 10 | (Bushara et al., 2001) |

These ROIs were selected from previous studies in addition to regions anatomically defined by WFU pickatlas and were used in our ROI analyses.
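Spherical masks like those in Table 1 can be sketched as the set of grid voxels whose centers fall within the stated radius of the listed MNI coordinate. The sketch assumes a 2-mm grid with a voxel center at 0 mm on every axis, matching the 2-mm resampling of the normalized images; the toolbox that actually built the masks may treat boundary voxels differently, and `sphere_roi` is a hypothetical name. With a 5-mm radius, the left FBA sphere contains 81 voxels.

```python
import math


def sphere_roi(center_mm, radius_mm, voxel_mm=2.0):
    """MNI coordinates (mm) of grid voxels whose centers lie within
    `radius_mm` of `center_mm`. Assumes voxel centers at integer multiples
    of `voxel_mm` on each axis."""
    def axis(c):
        lo = math.ceil((c - radius_mm) / voxel_mm)
        hi = math.floor((c + radius_mm) / voxel_mm)
        return [i * voxel_mm for i in range(lo, hi + 1)]

    cx, cy, cz = center_mm
    return [(x, y, z)
            for x in axis(cx) for y in axis(cy) for z in axis(cz)
            if (x - cx) ** 2 + (y - cy) ** 2 + (z - cz) ** 2 <= radius_mm ** 2]


left_fba = sphere_roi((-40, -44, -24), 5)
print(len(left_fba))  # → 81
```

Averaging each participant's contrast values over the returned coordinates gives one ROI summary value per participant and contrast.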

Second, in the emotion–action and action–emotion group-level contrasts, our goal was to explore which areas of activation would differ between the two conditions. To our knowledge, these contrasts have not been analyzed in previous research, and the regions involved could lie outside the ROI masks used in the first group-level analysis. Therefore, whole-brain statistical analyses were performed on individual data using the GLM as implemented in SPM8, and significant voxels were identified using a nonmasked paired t-statistic on a voxel-by-voxel basis. Separate regressors were created for the emotion and action conditions by convolving a boxcar function with the standard hemodynamic response function as specified in SPM. Statistical maps were superimposed on normalized T1-weighted images. Activation was considered significant at an uncorrected height threshold of P < 0.001 combined with an extent threshold of 40 voxels for direct contrasts between conditions and groups. The 40-voxel extent threshold was derived from Monte Carlo simulations with AlphaSim (Ward, 2000), run through the Analysis of Functional NeuroImages software (AFNI; Cox, 1996), as the minimum cluster size at which the combined height and extent thresholds are equivalent to a family-wise error corrected threshold of P < 0.05.
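The logic of a Monte Carlo extent threshold can be sketched on a toy grid: simulate smoothed Gaussian noise, threshold it at the height threshold (z ≈ 3.09, one-sided P < 0.001), record the largest surviving cluster, and choose the smallest cluster size that the null maximum reaches in at most 5% of simulations. This is a simplified illustration of the idea behind AlphaSim, not its implementation: the 24 × 24 × 24 grid, the smoothness of 4 voxels (8 mm on a 2-mm grid, ignoring intrinsic smoothness), face connectivity, zero-padded edges and the iteration count are all assumptions, so the sketch will not reproduce the study's 40-voxel value.

```python
from collections import deque

import numpy as np

# Face-connected (6-neighbour) adjacency; AlphaSim offers other definitions.
NEIGHBOURS = ((1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1))


def max_cluster_size(mask):
    """Size of the largest face-connected cluster of True voxels in a 3-D mask."""
    seen = np.zeros(mask.shape, dtype=bool)
    best = 0
    for start in zip(*np.nonzero(mask)):
        if seen[start]:
            continue
        seen[start] = True
        queue, size = deque([start]), 0
        while queue:  # breadth-first flood fill of one cluster
            i, j, k = queue.popleft()
            size += 1
            for di, dj, dk in NEIGHBOURS:
                n = (i + di, j + dj, k + dk)
                inside = all(0 <= n[a] < mask.shape[a] for a in range(3))
                if inside and mask[n] and not seen[n]:
                    seen[n] = True
                    queue.append(n)
        best = max(best, size)
    return best


def smooth(volume, fwhm_vox):
    """Separable Gaussian smoothing with zero-padded edges
    (each axis must be at least as long as the kernel)."""
    sigma = fwhm_vox / (2.0 * np.sqrt(2.0 * np.log(2.0)))
    half = int(np.ceil(3 * sigma))
    x = np.arange(-half, half + 1)
    kernel = np.exp(-0.5 * (x / sigma) ** 2)
    kernel /= kernel.sum()
    for axis in range(3):
        volume = np.apply_along_axis(np.convolve, axis, volume, kernel, mode="same")
    return volume


def cluster_extent_threshold(shape=(24, 24, 24), fwhm_vox=4.0, z_height=3.09,
                             n_iter=100, alpha=0.05, seed=0):
    """Smallest k such that the largest null cluster reaches k voxels in at
    most `alpha` of the simulations."""
    rng = np.random.default_rng(seed)
    maxima = []
    for _ in range(n_iter):
        noise = smooth(rng.standard_normal(shape), fwhm_vox)
        noise /= noise.std()  # re-standardise after smoothing
        maxima.append(max_cluster_size(noise > z_height))
    maxima = np.array(maxima)
    k = 1
    while np.mean(maxima >= k) > alpha:
        k += 1
    return k
```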

To examine task-related sex differences in brain activation, participants were divided into men (N = 12) and women (N = 14), and GLM-based two-sample t-tests compared brain activation between men and women for each of the four contrasts (emotion–fixation, action–fixation, emotion–action and action–emotion). Significance was assessed with the same uncorrected height threshold (P < 0.001) and 40-voxel extent threshold.

In addition to the GLM-based activation analyses, we used SPM8 multiple regression analyses to examine activation in the emotion–fixation and action–fixation contrasts as a function of self-reported empathy scores (EQ; Baron-Cohen and Wheelwright, 2004), again using an uncorrected height threshold of P < 0.001 and an extent threshold of 40 voxels.
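At each voxel, a regression of this kind amounts to fitting activation = intercept + slope × EQ across participants and testing the slope with a t-statistic on n − 2 degrees of freedom. The vectorized sketch below assumes one first-level contrast estimate per participant and voxel; the function name is hypothetical, and SPM8 performs the equivalent fit within its own GLM machinery, including masking.

```python
import numpy as np


def eq_regression(eq, betas):
    """Regress per-voxel contrast estimates on EQ scores.

    eq:    (n_subjects,) EQ scores
    betas: (n_subjects, n_voxels) first-level contrast estimates
    Returns the EQ slope and its t-statistic for every voxel.
    """
    eq = np.asarray(eq, dtype=float)
    betas = np.asarray(betas, dtype=float)
    X = np.column_stack([np.ones(eq.size), eq])       # intercept + EQ
    coef, *_ = np.linalg.lstsq(X, betas, rcond=None)  # shape (2, n_voxels)
    resid = betas - X @ coef
    dof = eq.size - 2
    sigma2 = (resid ** 2).sum(axis=0) / dof           # residual variance per voxel
    se_slope = np.sqrt(sigma2 * np.linalg.inv(X.T @ X)[1, 1])
    return coef[1], coef[1] / se_slope
```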

RESULTS

This fMRI study examined the brain activation of healthy individuals as they made emotion or action judgments about static stick figure characters in different postures. The main results are as follows: (i) participants were faster and more accurate in judging the characters' physical actions than in judging their emotional states; (ii) consistent with past research, participants activated brain regions associated with visual representation and motion processing in both tasks, although the brain regions associated with emotional interpretation differed slightly from those reported previously, likely because of the types of emotions used in the present study; (iii) participants showed more activation in brain regions associated with emotion processing in the emotion detection task, whereas they recruited visual, spatial and action-related areas in the physical action task; (iv) men and women differed in their patterns of brain activation during both emotion and action detection and (v) self-reported empathizing scores significantly predicted brain activation in a number of regions associated with self-reference and emotion perception.

Behavioral results

To examine the effects of task condition and gender on performance, we conducted two separate 2 (emotion vs action task) × 2 (women vs men) mixed ANOVAs, one with reaction time and the other with accuracy as the dependent variable. Task condition was a within-subjects variable, whereas gender was a between-subjects variable. Participants were more accurate in judging the physical actions of the stick figure characters (M = 94%, s.d. = 11%) than in judging their emotional states (M = 88%, s.d. = 15%), F(1, 24) = 16.75, P < 0.001. Similarly, participants were significantly faster in judging the physical actions of the characters (M = 2048 ms, s.d. = 303 ms) than in judging their emotional states (M = 2634 ms, s.d. = 328 ms), F(1, 24) = 123.79, P < 0.001. These results suggest that judging physical actions may have been slightly easier for the participants than judging emotional states. There were no significant gender differences in accuracy, F(1, 24) = 1.03, P = 0.32, or reaction time, F(1, 24) = 0.02, P = 0.90. Furthermore, there were no significant interactions between task condition and gender [accuracy: F(1, 24) = 0.002, P = 0.97; reaction time: F(1, 24) = 0.14, P = 0.71]. The women in our sample (M = 48.71, s.d. = 11.04) did not have higher empathy scores than the men (M = 46.83, s.d. = 9.77), t(24) = 0.46, P = 0.65. This differs from past research linking women to greater levels of empathizing (e.g. Nettle, 2007). Pearson correlation analyses examining possible relations between task performance and EQ scores revealed no significant correlations.
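The gender comparison of EQ scores can be checked from the reported summary statistics alone. The sketch below uses a pooled-variance (Student's) two-sample t-test, which matches the reported 24 degrees of freedom for 14 women and 12 men; it assumes equal variances and uses only the means, standard deviations and group sizes given above.

```python
import math


def pooled_t(m1, s1, n1, m2, s2, n2):
    """Student's two-sample t from summary statistics (equal-variance form)."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (m1 - m2) / se, df


# Women vs men, EQ scores as reported above.
t, df = pooled_t(48.71, 11.04, 14, 46.83, 9.77, 12)
print(f"t({df}) = {t:.2f}")  # → t(24) = 0.46
```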

Brain activation results

Participants showed an overall similar pattern of activation when they made judgments about the emotions and the actions of stick figure characters. In both tasks compared to the fixation baseline, ROI analyses indicated significant activation in the bilateral EBA, the bilateral FBA, the bilateral IFG, the bilateral IPL, the bilateral striatum (caudate and putamen), the MPFC (bilateral in the emotion detection condition but only the right MPFC in the action detection condition), the STS (right in the emotion detection condition but left in the action detection condition), the bilateral superior colliculus, the bilateral pulvinar aspect of the thalamus and the bilateral anterior insula (FWE, P < 0.05). However, contrary to past findings (e.g. de Gelder, 2006), emotional body language interpretation (compared to baseline) did not activate the amygdala or the ACC (FWE, P < 0.05).

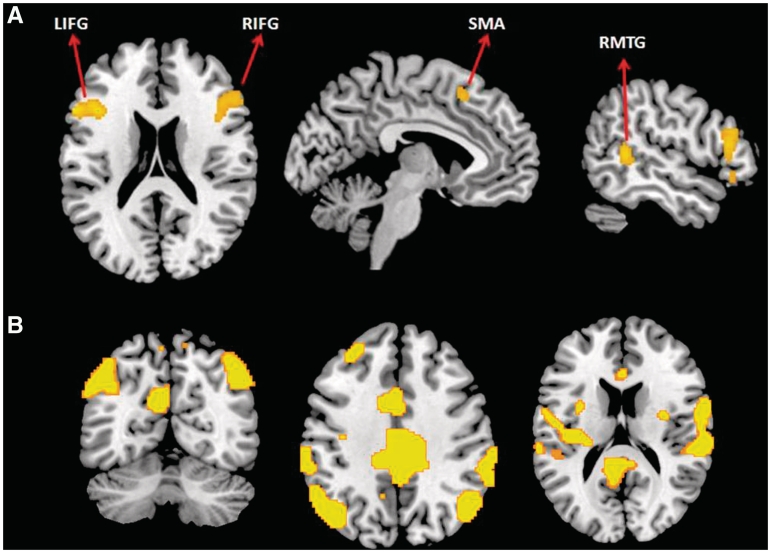

When detecting emotions from the stick figure characters' postures (emotion vs action contrast), participants showed reliably greater activation in the bilateral IFG (pars triangularis), the right middle temporal gyrus (MTG) and the left SMA (P < 0.001, k = 40) (Table 2 and Figure 2), areas that have been associated with emotion processing (Morris et al., 1998; Johnstone et al., 2006). To rule out the possibility that the bilateral IFG and SMA activation merely reflected slower responding in the emotion condition, we correlated the reaction time difference between conditions (emotion RT − action RT) with activation and found no significant correlations (P < 0.001, k = 40). The bilateral IFG and SMA activation may therefore reflect mirror neuron activity that helps the participant simulate the posture of a given stick figure to infer the corresponding emotion. In contrast, when recognizing physical actions (compared with judging emotions), participants showed greater activation in the bilateral middle cingulate cortex (MCC), left superior medial frontal and orbitofrontal (OFC) cortices, bilateral angular gyri, bilateral STS, left middle frontal gyrus, right superior frontal gyrus (SFG), left precuneus and right superior parietal lobule (P < 0.001, k = 40).

Table 2.

Areas of peak activation in the action vs emotion and emotion vs action contrasts

| Region | x | y | z | Voxels | t |
|---|---|---|---|---|---|
| Emotion vs action | | | | | |
| L inf frontal (triangularis) | −40 | 18 | 24 | 1005 | 5.47 |
| R inf frontal (triangularis) | 42 | 14 | 24 | 555 | 4.78 |
| R Mid Temporal | 54 | −46 | 6 | 160 | 4.74 |
| L Supp Motor Area | −6 | 18 | 52 | 50 | 3.56 |
| Action vs emotion | | | | | |
| L/R Mid cingulate | 0 | −28 | 50 | 6949 | 6.88 |
| L Sup Medial Frontal | 0 | 62 | 0 | 410 | 6.28 |
| L Angular Gyrus | −40 | −72 | 42 | 1414 | 6.16 |
| R Angular Gyrus | 44 | −66 | 46 | 539 | 5.88 |
| R Sup Temporal Sulcus | 64 | 4 | 14 | 2807 | 5.8 |
| L Sup Orbitofrontal | −28 | 58 | −2 | 123 | 5.47 |
| L Sup Temporal Sulcus | −54 | −2 | 2 | 2127 | 5.32 |
| L Mid Frontal Gyrus | −34 | 28 | 44 | 158 | 4.52 |
| R Sup Frontal | 24 | 36 | 44 | 42 | 3.94 |
| L Precuneus | −2 | −74 | 48 | 71 | 3.91 |
| R Sup Parietal | 16 | −76 | 52 | 59 | 3.85 |

The threshold for significant activation was P < 0.001 with a spatial extent of at least 40 voxels determined by Monte Carlo simulation. Region labels apply to the entire extent of the cluster. t-value scores and MNI coordinates are for the peak activated voxel in each cluster only.

Fig. 2.

(A) Increased activation in bilateral inferior frontal gyri, right middle temporal and left supplementary motor areas during emotion recognition from body postures (emotion vs action contrast); (B) increased activation in bilateral parietal, temporal and in cingulate areas during action recognition (action vs emotion contrast) (P < 0.001, uncorrected with an extent threshold of 40 voxels determined by Monte Carlo simulation).

Gender differences in brain activation

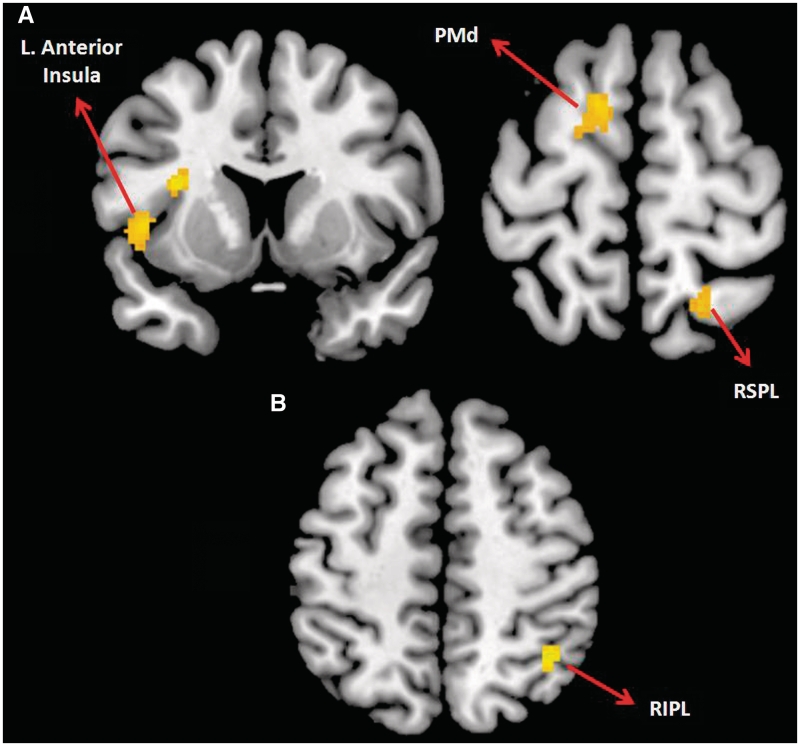

In addition to the overall group analysis, the data were analyzed for gender differences, which revealed different patterns of activation in men and women. While judging the physical actions of the characters (compared to baseline), men showed significantly greater activation than women in the left dorsal premotor cortex (PMd) (P < 0.001, k = 40). By contrast, women did not show significantly greater activation than men in any brain region while judging the physical actions of the characters (P < 0.001, k = 40).

When recognizing the emotions of the characters (compared to the fixation baseline), men showed reliably greater activation than the women in the left PMd, the left anterior insula/left anterior STS, and the right superior parietal lobule (P < 0.001, k = 40) (Figure 3). On the other hand, women showed greater activation in the right IPL while detecting emotions of the character.

Fig. 3.

(A) Increased activation in men compared to women during emotion recognition in left anterior insula, left dorsal premotor cortex and right superior parietal lobule; (B) increased activation in women, relative to men, in the right inferior parietal lobule during emotion recognition (P < 0.001 uncorrected with an extent threshold of 40 voxels determined by Monte Carlo simulation).

Multiple regression results

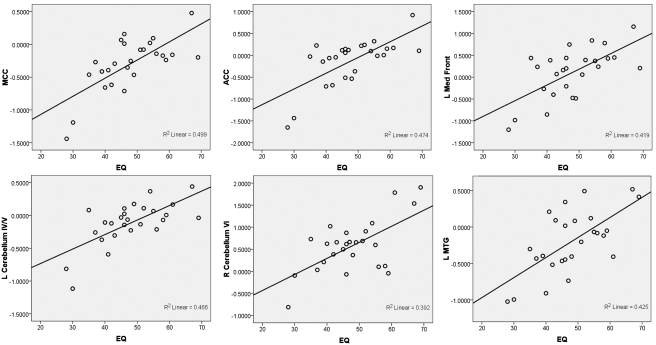

A multiple linear regression analysis was conducted to predict functional activation from EQ scores. When detecting the emotion of the character (compared to baseline), participants' self-reported empathy scores predicted activation in the bilateral MCC and ACC, the left superior medial frontal cortex, the left MTG, the bilateral precuneus, the left cerebellum (area IV/V) and the right cerebellum (area VI), with higher empathy scores associated with increased activation in these regions (P < 0.001, k = 40) (Figure 4). When detecting the action of the character (compared to baseline), participants' self-reported empathy scores predicted activation in the bilateral middle frontal gyri, the left anterior insula, the SFG (bilateral superior medial frontal gyrus and the right superior frontal gyrus) and the right medial orbitofrontal gyrus, with higher empathy scores associated with increased activation in these regions.

Fig. 4.

Scatter plots showing correlations between empathy quotient (EQ) scores and brain activation in six ROIs: middle cingulate cortex, anterior cingulate cortex, left medial frontal gyrus, left cerebellum area IV/V, right cerebellum area IV/V, and left middle temporal gyrus.

DISCUSSION

Human beings are experts at using subtle visual and spatial cues to make judgments about the emotional and mental states of others. In social communication, nonverbal cues (posture, gesture, etc.) play a significant role, especially in communicating affect. Recent research has shown posture to be a crucial element in conveying certain emotions (anger, frustration, boredom, etc.) (Coulson, 2004). Our study set out to examine the neural substrates underlying such functions and to further investigate possible individual differences in the ability to read and interpret body language. The main results of our study can be summarized in three categories: (i) behavioral and brain activation differences during the interpretation of action and emotion from body language, (ii) gender differences in brain activation and (iii) individual differences in self-rated empathizing that account for patterns of brain activation. Each of these three categories of results is discussed in more detail below.

Interpreting actions and emotions from body language

Consistent with the predictions of de Gelder's (2006) action interpretation framework, this study found significant activation in brain areas associated with visual representation (the EBA and FBA), with motion processing (IFG, IPL, STS) and with emotion processing (anterior insula, MPFC, striatum, superior colliculus and pulvinar) when interpreting the actions and emotions of stick figures. However, the current study differed from de Gelder's framework in that we did not find significant activation of the amygdala or the ACC in the emotion vs fixation contrast. This difference may be attributed to the variety of emotions portrayed by the stick figure characters in our study (sadness, happiness, anger, etc.), whereas de Gelder and colleagues have typically used fear stimuli in their studies (Hadjikhani and de Gelder, 2003; Stekelenburg and de Gelder, 2004; Meeren et al., 2005). Past research has indicated that fear recognition may occur more rapidly and automatically than the recognition of other emotions (Yang et al., 2007), suggesting that the emotion task we used may have required more effortful interpretation compared to that of previous studies.

To investigate further why the amygdala was not significantly recruited in this task, we examined the slice orientation, the number of slices and the quality of the amygdala signal in our data. The slice prescription in this study covered the amygdala reasonably well. In addition, the temporal signal-to-noise ratio (tSNR) extracted from the left and right amygdala in the raw data of individual participants ranged from 40 to 70, comparable to that in cortical structures. Furthermore, we checked the amygdala response in different contrasts at liberal statistical thresholds but did not find any significant activation. It is possible that the relatively short time periods dedicated to the experimental conditions limited our ability to detect amygdala activation. Nevertheless, our results highlight the role of the anterior insula and MPFC in tasks that require interpreting a wider range of emotions, as predicted by Phan and colleagues (2004).
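For readers less familiar with the metric, the tSNR of a voxel is conventionally the mean of its time series divided by the standard deviation of that time series over time. The sketch below illustrates only that standard definition; it is not necessarily the procedure used in this study. The function names (`tsnr`, `roi_tsnr`), the use of the sample standard deviation without detrending, and the choice to summarize a region by averaging voxelwise tSNR values are assumptions made for illustration, and the numbers are made up.

```python
import statistics
from typing import Sequence


def tsnr(timeseries: Sequence[float]) -> float:
    """Temporal SNR of one voxel: mean signal over time / standard deviation over time.

    Assumption: sample standard deviation (n - 1 denominator) and no detrending;
    real pipelines often detrend and motion-correct the data first.
    """
    if len(timeseries) < 2:
        raise ValueError("need at least two time points")
    sd = statistics.stdev(timeseries)
    if sd == 0:
        raise ValueError("zero temporal variance: tSNR is undefined")
    return statistics.mean(timeseries) / sd


def roi_tsnr(voxel_timeseries: Sequence[Sequence[float]]) -> float:
    """Hypothetical region summary: the mean of the voxelwise tSNR values.

    Computing tSNR on the region-averaged time series instead would typically
    give a higher value, because averaging across voxels tends to reduce noise.
    """
    if not voxel_timeseries:
        raise ValueError("region contains no voxels")
    return statistics.mean(tsnr(ts) for ts in voxel_timeseries)


# Toy signal values for two hypothetical voxels (not data from this study).
voxel_a = [1000.0, 1010.0, 990.0, 1005.0, 995.0]
voxel_b = [800.0, 812.0, 788.0, 806.0, 794.0]
print(round(tsnr(voxel_a), 1))
print(round(roi_tsnr([voxel_a, voxel_b]), 1))
```

Because tSNR is a ratio, it is unitless, which is what allows values from the amygdala to be compared directly with values from other structures.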

When the two tasks were compared, the emotion interpretation task elicited greater activation than the action interpretation task in the bilateral IFG, the right MTG and the left SMA, regions implicated in emotion-related tasks as well as in tasks that require an understanding of mental states. For example, the right MTG has been shown to respond to emotional faces (especially when happy faces are contrasted with fearful faces) (Morris et al., 1998) and to emotional prosody (especially when happy voices are contrasted with angry voices) (Johnstone et al., 2006), suggesting that it may play a key role in interpreting such emotions. Furthermore, the IFG and SMA activation in the emotion task may have been associated with simulation (Gallese and Goldman, 1998; Blakemore and Decety, 2001). For instance, van de Riet and colleagues (2009) found that emotional body language activated the IFG and striatum, suggesting that observing the emotional body language of others may engage our own motor planning. However, future research is needed to determine whether interpreting emotion from body language leads to automatic motor planning and emotional contagion.

In contrast, compared to the emotion interpretation task, the action interpretation task elicited greater activation in a diverse set of brain regions thought to be involved in motion processing, self-referential processing and visual body processing (the bilateral MCC, left MPFC and OFC, bilateral angular gyri, bilateral STS, left MFG, right SFG and bilateral precuneus). The STS activation may be associated with perceiving the motion of the character in a given action (Allison et al., 2000), and the role of the precuneus in visuospatial imagery has been widely documented (Suchan et al., 2002; Knauff et al., 2003; Malouin et al., 2003; Wenderoth et al., 2005). More generally, many of the brain regions recruited during the action recognition task have been found to be active during motor planning and execution (Chouinard and Paus, 2006), production of both rhythmic and discrete arm movements (Schaal et al., 2004), movement imitation (Iacoboni et al., 1999), motor imagery (Binkofski et al., 2000), biological motion perception (Peelen et al., 2006) and action observation (Grèzes and Decety, 2001).

Gender differences in brain activation

While judging both the physical actions and the emotions of the characters, men showed greater activation than women in the left dorsal premotor cortex (PMd). This may suggest a gender difference in decoding strategy, whereby men may have analyzed the motor states of the characters more than women did: the dorsal premotor cortex is thought to contain reference frames centered on body parts, such as the hand (Caminiti et al., 1991; Crammond and Kalaska, 1994; Shen and Alexander, 1997; Johnson et al., 1999). Men also showed greater activation in the left anterior insula and the right superior parietal lobule while judging the emotional states of the characters. In contrast, women showed more activation than men in the right IPL, an area associated with analyzing intentions (Desmurget et al., 2009). Past studies have found that men show more activation than women in the right anterior insula when viewing emotional faces, scenes and words (Naliboff et al., 2003; Lee et al., 2005); in the present study, however, this difference occurred in the left anterior insula. Using a think-aloud protocol, Lee and colleagues found that sex differences in insula activation might be due to men recalling past experiences when evaluating the emotion of the present stimulus. The left anterior insula has also been implicated in successful emotional recall (Smith et al., 2005), which suggests that the men in our study may have relied on emotional recall more than the women did. It should be noted that although these results may point to strategic differences between the two groups, evidence specifically targeting such differences is needed.

Empathy and brain response to body posture

Our results also indicated a relation between one's disposition toward empathizing with others and the neural responses to the perceived emotion and action of a stick figure character. When participants judged emotions, self-reported empathizing significantly predicted activation of the bilateral ACC, bilateral MCC, bilateral precuneus, left MTG, left MPFC and cerebellum. Many of these areas (i.e. ACC, MTG, MPFC and cerebellum) are thought to be associated with emotional empathy or theory of mind (Farrow et al., 2001; Morrison et al., 2004; Singer et al., 2004; Völlm et al., 2006; Hooker et al., 2010). Additionally, past research suggests that areas such as the MCC (Singer et al., 2004; Jackson et al., 2006; Tomlin et al., 2006; Lamm et al., 2007) and precuneus (Vogeley et al., 2001) are involved in self-referential processing and perspective taking, which may be enhanced in persons with higher levels of empathy. Similarly, Chakrabarti et al. (2006) found that more empathic individuals tended to show activation in brain areas involved in theory of mind, self-other processing and simulation across a host of different emotions, although they examined responses to specific emotions rather than to a conglomerate of emotions. Although the exact brain regions differ between the present study and that of Chakrabarti and colleagues, this congruence of results provides converging evidence that more empathic individuals may engage in increased emotional contagion, self-other processing and theory-of-mind decoding when viewing emotional stimuli.
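The statement that self-reported empathizing "predicted" activation refers to a between-participant regression of activation estimates on empathy scores. As a hedged illustration only, the sketch below fits an ordinary least-squares line relating one region's per-participant contrast estimates to questionnaire scores (for example, Empathy Quotient scores; Baron-Cohen and Wheelwright, 2004) and returns the slope's t statistic, which has n − 2 degrees of freedom. The function name `regress_on_empathy` and the toy numbers are hypothetical, and the voxelwise procedure, covariates and thresholds actually used in this study may differ.

```python
import math
from typing import Sequence, Tuple


def regress_on_empathy(
    empathy_scores: Sequence[float], contrast_estimates: Sequence[float]
) -> Tuple[float, float, float]:
    """Simple OLS fit: contrast_estimate ~ intercept + slope * empathy_score.

    Returns (slope, intercept, t) where t = slope / SE(slope), with df = n - 2.
    Hypothetical helper for a single region or voxel; no covariates are modeled.
    """
    n = len(empathy_scores)
    if n != len(contrast_estimates):
        raise ValueError("one empathy score per contrast estimate is required")
    if n < 3:
        raise ValueError("need at least three participants for a t statistic")
    mean_x = sum(empathy_scores) / n
    mean_y = sum(contrast_estimates) / n
    sxx = sum((x - mean_x) ** 2 for x in empathy_scores)
    if sxx == 0:
        raise ValueError("empathy scores have no variance")
    sxy = sum((x - mean_x) * (y - mean_y)
              for x, y in zip(empathy_scores, contrast_estimates))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # Residual sum of squares around the fitted line.
    rss = sum((y - (intercept + slope * x)) ** 2
              for x, y in zip(empathy_scores, contrast_estimates))
    se_slope = math.sqrt(rss / (n - 2) / sxx)
    if se_slope == 0:
        raise ValueError("perfect fit: t statistic is undefined")
    return slope, intercept, slope / se_slope


# Toy values for four hypothetical participants (not data from this study).
slope, intercept, t = regress_on_empathy([1.0, 2.0, 3.0, 4.0], [2.0, 3.0, 5.0, 6.0])
print(f"slope={slope:.2f}, intercept={intercept:.2f}, t({4 - 2})={t:.2f}")
```

In a whole-brain analysis, a fit like this would be repeated at every voxel, which is why a multiple-comparison correction (such as the AlphaSim procedure described by Ward, 2000) is needed before a region is reported.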

Similarly, when participants judged the physical action of the character, self-reported empathizing significantly predicted activation in the bilateral MPFC, right SFG, right medial OFC, left anterior insula and bilateral MFG, areas also associated with emotional empathy or theory of mind (Gallagher et al., 2000; Farrow et al., 2001; Singer et al., 2004; Völlm et al., 2006). Overall, these results point to a relationship between empathic disposition and the brain response to body language. This activation may indicate that individuals with higher empathy levels are more likely to engage in theory-of-mind and emotional interpretation when interpreting both the action and the emotion portrayed by stick figures. These results resemble those of the neutral face processing condition of Chakrabarti et al. (2006), in which empathy was also correlated with activation of the superior frontal gyrus, middle frontal gyrus and cingulate gyrus. Given the nature and definition of empathy, it is plausible that more empathic individuals are more inclined to try to understand and relate to the stick figure stimuli; however, future research is needed to confirm this possibility.

CONCLUSIONS

In summary, this study found that interpreting actions and emotions from body language seems to rely on a network of brain areas specialized for visual, motor and emotional processing. The study also found evidence for gender differences, possibly suggesting that although men and women are equally accurate in identifying the action or emotion portrayed, they might think about body language in slightly different ways; future research should characterize these differences directly. Furthermore, participants with higher empathy scores demonstrated more activation in brain areas typically found to be involved in self-awareness, theory of mind and emotional engagement. Future research should examine whether more empathic individuals are more likely to engage in perspective taking when decoding the body language of another. Overall, the ability to interpret body language is a powerful tool that allows us to understand and react to our social environment. Our findings suggest that men and women, as well as individuals higher and lower in empathy, may differ in how they neurally process body language. Future research should explore the nuances of such differences in response to different emotions and stimuli.

SUPPLEMENTARY DATA

Supplementary data are available at SCAN online.

Conflict of Interest

None declared.


Acknowledgments

The authors would like to thank Christopher Klein, Elizabeth Blum, Kathy Pearson and Hrishikesh Deshpande for their help at different stages of this study. The authors also would like to thank Marie Moore for her comments on an earlier version of the manuscript.

This research was supported by Psychology Department faculty start-up funds and the McNulty-Civitan Scientist Award (to R.K.).

REFERENCES

- Allison T, Puce A, McCarthy G. Social perception from visual cues: role of the STS region. Trends in Cognitive Sciences. 2000;4:267–78. doi: 10.1016/s1364-6613(00)01501-1.

- Baron-Cohen S, Wheelwright S. The empathy quotient: an investigation of adults with Asperger syndrome or high functioning autism, and normal sex differences. Journal of Autism and Developmental Disorders. 2004;34:163–75. doi: 10.1023/b:jadd.0000022607.19833.00.

- Bianchi-Berthouze N, Cairns P, Cox A, Jennett C, Kim W. On posture as a modality for expressing and recognizing emotions. Presented at the Emotion and HCI Workshop at BCS HCI; September 2006; London, UK.

- Binkofski F, Amunts K, Stephan KM, et al. Broca's region subserves imagery of motion: a combined cytoarchitectonic and fMRI study. Human Brain Mapping. 2000;11(4):273–85. doi: 10.1002/1097-0193(200012)11:4<273::AID-HBM40>3.0.CO;2-0.

- Blakemore SJ, Decety J. From the perception of action to the understanding of intention. Nature Reviews Neuroscience. 2001;2:561–7. doi: 10.1038/35086023.

- Caminiti R, Johnson PB, Galli C, Ferraina S, Burnod Y. Making arm movements within different parts of space: the premotor and motor cortical representation of a coordinate system for reaching to visual targets. Journal of Neuroscience. 1991;11:1182–97. doi: 10.1523/JNEUROSCI.11-05-01182.1991.

- Chakrabarti B, Bullmore E, Baron-Cohen S. Empathizing with basic emotions: common and discrete neural substrates. Social Neuroscience. 2006;1:364–84. doi: 10.1080/17470910601041317.

- Chouinard PA, Paus T. The primary motor and premotor areas of the human cerebral cortex. Neuroscientist. 2006;12:143–52. doi: 10.1177/1073858405284255.

- Coulson M. Attributing emotion to static body postures: recognition accuracy, confusions, and viewpoint dependence. Journal of Nonverbal Behavior. 2004;28:117–39.

- Cox RW. AFNI: software for analysis and visualization of functional magnetic resonance neuroimages. Computers and Biomedical Research. 1996;29:162–73. doi: 10.1006/cbmr.1996.0014.

- Crammond DJ, Kalaska JF. Modulation of preparatory neuronal activity in dorsal premotor cortex due to stimulus–response compatibility. Journal of Neurophysiology. 1994;71:1281–4. doi: 10.1152/jn.1994.71.3.1281.

- Decety J, Chaminade T, Grèzes J, Meltzoff A. A PET exploration of the neural mechanisms involved in reciprocal imitation. NeuroImage. 2002;15:265–72. doi: 10.1006/nimg.2001.0938.

- de Gelder B. Towards the neurobiology of emotional body language. Nature Reviews Neuroscience. 2006;7:242–9. doi: 10.1038/nrn1872.

- de Gelder B, Hadjikhani N. Non-conscious recognition of emotional body language. Neuroreport. 2006;17:583–6. doi: 10.1097/00001756-200604240-00006.

- de Gelder B, Snyder J, Greve D, Gerard G, Hadjikhani N. Fear fosters flight: a mechanism for fear contagion when perceiving emotion expressed by a whole body. Proceedings of the National Academy of Sciences of the United States of America. 2004;101:16701–6. doi: 10.1073/pnas.0407042101.

- Desmurget M, Reilly KT, Richard N, Szathmari A, Mottolese C, Sirigu A. Movement intention after parietal cortex stimulation in humans. Science. 2009;324:811–3. doi: 10.1126/science.1169896.

- Downing P, Chan A, Peelen M, Dodds C, Kanwisher N. Domain specificity in visual cortex. Cerebral Cortex. 2006a;16(10):1453–61. doi: 10.1093/cercor/bhj086.

- Downing P, Jiang Y, Shuman M, Kanwisher N. A cortical area selective for visual processing of the human body. Science. 2001;293:2470–3. doi: 10.1126/science.1063414.

- Downing P, Peelen M, Wiggett A, Tew B. The role of the extrastriate body area in action perception. Social Neuroscience. 2006b;1:52–62. doi: 10.1080/17470910600668854.

- Farrow TF, Zheng Y, Wilkinson ID, et al. Investigating the functional anatomy of empathy and forgiveness. Neuroreport. 2001;12:2433–8. doi: 10.1097/00001756-200108080-00029.

- Friston KJ, Holmes AP, Worsley KJ, Poline JB, Frith CD, Frackowiak RSJ. Statistical parametric maps in functional imaging: a general linear approach. Human Brain Mapping. 1995;2:189–210.

- Gallagher H, Happé F, Brunswick N, Fletcher P, Frith U, Frith C. Reading the mind in cartoons and stories: an fMRI study of 'theory of mind' in verbal and nonverbal tasks. Neuropsychologia. 2000;38:11–21. doi: 10.1016/s0028-3932(99)00053-6.

- Gallese V, Fadiga L, Fogassi L, Rizzolatti G. Action recognition in the premotor cortex. Brain. 1996;119:593–609. doi: 10.1093/brain/119.2.593.

- Gallese V, Goldman A. Mirror neurons and the simulation theory of mind-reading. Trends in Cognitive Sciences. 1998;2:493–501. doi: 10.1016/s1364-6613(98)01262-5.

- Gliga T, Dehaene-Lambertz G. Structural encoding of body and face in human infants and adults. Journal of Cognitive Neuroscience. 2005;17:1328–40. doi: 10.1162/0898929055002481.

- Grèzes J, Decety J. Functional anatomy of execution, mental simulation, observation, and verb generation of actions: a meta-analysis. Human Brain Mapping. 2001;12(1):1–19. doi: 10.1002/1097-0193(200101)12:1<1::AID-HBM10>3.0.CO;2-V.

- Hadjikhani N, de Gelder B. Seeing fearful body expressions activates the fusiform cortex and amygdala. Current Biology. 2003;13:2201–5. doi: 10.1016/j.cub.2003.11.049.

- Hamann S, Canli T. Individual differences in emotion processing. Current Opinion in Neurobiology. 2004;14:233–8. doi: 10.1016/j.conb.2004.03.010.

- Hickok G. The functional neuroanatomy of language. Physics of Life Reviews. 2009;6:121–43. doi: 10.1016/j.plrev.2009.06.001.

- Hooker C, Verosky S, Germine L, Knight R, D'Esposito M. Neural activity during social signal perception correlates with self-reported empathy. Brain Research. 2010;1308:100–13. doi: 10.1016/j.brainres.2009.10.006.

- Iacoboni M, Woods RP, Brass M, Bekkering H, Mazziotta JC, Rizzolatti G. Cortical mechanisms of human imitation. Science. 1999;286:2526–8. doi: 10.1126/science.286.5449.2526.

- Johnson M. Subcortical face processing. Nature Reviews Neuroscience. 2005;6(10):766–86. doi: 10.1038/nrn1766.

- Johnson MT, Coltz JD, Hagen MC, Ebner TJ. Visuomotor processing as reflected in the directional discharge of premotor and primary motor cortex neurons. Journal of Neurophysiology. 1999;81:875–94. doi: 10.1152/jn.1999.81.2.875.

- Johnstone T, van Reekum CM, Oakes TR, Davidson RJ. The voice of emotion: an FMRI study of neural responses to angry and happy vocal expressions. Social Cognitive and Affective Neuroscience. 2006;1:242–9. doi: 10.1093/scan/nsl027.

- Knauff M, Fangmeier T, Ruff CC, Johnson-Laird PN. Reasoning, models, and images: behavioral measures and cortical activity. Journal of Cognitive Neuroscience. 2003;15:559–73. doi: 10.1162/089892903321662949.

- Lamm C, Batson C, Decety J. The neural substrate of human empathy: effects of perspective-taking and cognitive appraisal. Journal of Cognitive Neuroscience. 2007;19:42–58. doi: 10.1162/jocn.2007.19.1.42.

- Lee T, Liu H, Chan C, Fang S, Gao J. Neural activities associated with emotion recognition observed in men and women. Molecular Psychiatry. 2005;10(5):450–5. doi: 10.1038/sj.mp.4001595.

- Maldjian JA, Laurienti PJ, Burdette JH, Kraft RA. An automated method for neuroanatomic and cytoarchitectonic atlas-based interrogation of fMRI data sets. NeuroImage. 2003;19:1233–9. doi: 10.1016/s1053-8119(03)00169-1.

- Malouin F, Richards CL, Jackson PL, Dumas F, Doyon J. Brain activations during motor imagery of locomotor-related tasks: a PET study. Human Brain Mapping. 2003;19:47–62. doi: 10.1002/hbm.10103.

- Meeren HK, van Heijnsbergen CC, de Gelder B. Rapid perceptual integration of facial expression and emotional body language. Proceedings of the National Academy of Sciences of the United States of America. 2005;102:16518–23. doi: 10.1073/pnas.0507650102.

- Mériau K, Wartenburger I, Kazzer P, et al. Insular activity during passive viewing of aversive stimuli reflects individual differences in state negative affect. Brain and Cognition. 2009;69:73–80. doi: 10.1016/j.bandc.2008.05.006.

- Molenberghs P, Brander C, Mattingley JB, Cunnington R. The role of the superior temporal sulcus and the mirror neuron system in imitation. Human Brain Mapping. 2010;31:1316–26. doi: 10.1002/hbm.20938.

- Morris JS, Friston KJ, Büchel C, et al. A neuromodulatory role for the human amygdala in processing emotional facial expressions. Brain. 1998;121:47–57. doi: 10.1093/brain/121.1.47.

- Morrison I, Lloyd D, di Pellegrino G, Roberts N. Vicarious responses to pain in anterior cingulate cortex: is empathy a multisensory issue? Cognitive, Affective, & Behavioral Neuroscience. 2004;4:270–8. doi: 10.3758/cabn.4.2.270.

- Naliboff BD, Berman S, Chang L, et al. Sex-related differences in IBS patients: central processing of visceral stimuli. Gastroenterology. 2003;124:1738–47. doi: 10.1016/s0016-5085(03)00400-1.

- Nettle D. Empathizing and systemizing: what are they, and what do they contribute to our understanding of psychological sex differences? British Journal of Psychology. 2007;98:237–55. doi: 10.1348/000712606X117612.

- Peelen M, Atkinson A, Andersson F, Vuilleumier P. Emotional modulation of body-selective visual areas. Social Cognitive and Affective Neuroscience. 2007;2:274–83. doi: 10.1093/scan/nsm023.

- Peelen M, Downing P. Within-subject reproducibility of category-specific visual activation with functional MRI. Human Brain Mapping. 2005;25:402–8. doi: 10.1002/hbm.20116.

- Peelen MV, Wiggett AJ, Downing PE. Patterns of fMRI activity dissociate overlapping functional brain areas that respond to biological motion. Neuron. 2006;49:815–22. doi: 10.1016/j.neuron.2006.02.004.

- Phan K, Wager T, Taylor S, Liberzon I. Functional neuroimaging studies of human emotions. CNS Spectrums. 2004;9:258–66. doi: 10.1017/s1092852900009196.

- Pourtois G, Peelen M, Spinelli L, Seeck M, Vuilleumier P. Direct intracranial recording of body-selective responses in human extrastriate visual cortex. Neuropsychologia. 2007;45:2621–5. doi: 10.1016/j.neuropsychologia.2007.04.005.

- Reed C, Stone V, Bozova S, Tanaka J. The body-inversion effect. Psychological Science. 2003;14:302–8. doi: 10.1111/1467-9280.14431.

- Reed C, Stone V, Grubb J, McGoldrick J. Turning configural processing upside down: part and whole body postures. Journal of Experimental Psychology: Human Perception and Performance. 2006;32:73–87. doi: 10.1037/0096-1523.32.1.73.

- Rizzolatti G, Craighero L. The mirror-neuron system. Annual Review of Neuroscience. 2004;27:169–92. doi: 10.1146/annurev.neuro.27.070203.144230.

- Rizzolatti G, Fadiga L, Gallese V, Fogassi L. Premotor cortex and the recognition of motor actions. Cognitive Brain Research. 1996;3:131–41. doi: 10.1016/0926-6410(95)00038-0.

- Schaal S, Sternad D, Osu R, Kawato M. Rhythmic arm movement is not discrete. Nature Neuroscience. 2004;7:1137–44. doi: 10.1038/nn1322.

- Schwarzlose R, Baker C, Kanwisher N. Separate face and body selectivity on the fusiform gyrus. Journal of Neuroscience. 2005;25:11055–9. doi: 10.1523/JNEUROSCI.2621-05.2005.

- Shen L, Alexander GE. Preferential representation of instructed target location versus limb trajectory in dorsal premotor area. Journal of Neurophysiology. 1997;77:1195–212. doi: 10.1152/jn.1997.77.3.1195.

- Singer T, Seymour B, O'Doherty J, Kaube H, Dolan R, Frith C. Empathy for pain involves the affective but not sensory components of pain. Science. 2004;303:1157–62. doi: 10.1126/science.1093535.

- Smith A, Henson R, Rugg M, Dolan R. Modulation of retrieval processing reflects accuracy of emotional source memory. Learning & Memory. 2005;12:472–9. doi: 10.1101/lm.84305.

- Stekelenburg JJ, de Gelder B. The neural correlates of perceiving human bodies: an ERP study on the body-inversion effect. Neuroreport. 2004;15:777–80. doi: 10.1097/00001756-200404090-00007.

- Suchan B, Yágüez L, Wunderlich G, et al. Hemispheric dissociation of visuo-spatial processing and visual rotation. Behavioural Brain Research. 2002;136:533–44. doi: 10.1016/s0166-4328(02)00204-8.

- Taylor J, Wiggett A, Downing P. Functional MRI analysis of body and body part representations in the extrastriate and fusiform body areas. Journal of Neurophysiology. 2007;98:1626–33. doi: 10.1152/jn.00012.2007.

- Tomlin D, Kayali M, King-Casas B, Anen C, Camerer CF, Quartz SR, Montague P. Agent-specific responses in the cingulate cortex during economic exchanges. Science. 2006;312:1047–50. doi: 10.1126/science.1125596.

- Turella L, Pierno AC, Tubaldi F, Castiello U. Mirror neurons in humans: consisting or confounding evidence? Brain and Language. 2009;108:10–21. doi: 10.1016/j.bandl.2007.11.002.

- van de Riet WA, Grèzes J, de Gelder B. Specific and common brain regions involved in the perception of faces and bodies and the representation of their emotional expressions. Social Neuroscience. 2009;4:101–20. doi: 10.1080/17470910701865367.

- Vogeley K, Bussfeld P, Newen A, Herrmann S, Happé F, Falkai P, Maier W, Shah NJ, Fink GR, Zilles K. Mind reading: neural mechanisms of theory of mind and self-perspective. NeuroImage. 2001;14:170–81. doi: 10.1006/nimg.2001.0789.

- Völlm BA, Taylor ANW, Richardson P, et al. Neuronal correlates of theory of mind and empathy: a functional magnetic resonance imaging study in a nonverbal task. NeuroImage. 2006;29:90–8. doi: 10.1016/j.neuroimage.2005.07.022.

- Vuilleumier P. How brains beware: neural mechanisms of emotional attention. Trends in Cognitive Sciences. 2005;9:585–94. doi: 10.1016/j.tics.2005.10.011.

- Wakabayashi A, Baron-Cohen S, Uchiyama T, Yoshida Y, Kuroda M, Wheelwright S. Empathizing and systemizing in adults with and without autism spectrum conditions: cross-cultural stability. Journal of Autism and Developmental Disorders. 2007;37:1823–32. doi: 10.1007/s10803-006-0316-6.

- Ward BD. Simultaneous inference for fMRI data. 2000. Available at http://homepage.usask.ca/~ges125/fMRI/AFNIdoc/AlphaSim.pdf (date last accessed March 23, 2011).

- Wenderoth N, Debaere F, Sunaert S, Swinnen SP. The role of anterior cingulate cortex and precuneus in the coordination of motor behavior. European Journal of Neuroscience. 2005;22:235–46. doi: 10.1111/j.1460-9568.2005.04176.x.

- Yang E, Zald D, Blake R. Fearful expressions gain preferential access to awareness during continuous flash suppression. Emotion. 2007;7:882–6. doi: 10.1037/1528-3542.7.4.882.
