Abstract

Significant needs exist for increased and better substance abuse treatment services in our nation’s prisons. The TCU Organizational Readiness for Change (ORC) survey has been widely used in community-based treatment programs and evidence is accumulating for relationships between readiness for change and implementation of new clinical practices. Results of organizational surveys of correctional counselors from 12 programs in two states are compared with samples of community-based counselors. Correctional counselors perceived strong needs for new evidence-based practices but, compared to community counselors, reported fewer resources and less favorable organizational climates. These results have important implications for successfully implementing new practices.

Keywords: Correctional programs, organizational readiness for change, substance abuse treatment services, implementation research, program needs

INTRODUCTION

The need for effective substance abuse treatment in prison settings continues to grow. Belenko and Peugh (2005) recently estimated that 70% of offenders need some level of services, yet the level of service available falls far short of the need, with only 10% to 15% of offenders receiving treatment in prison (Mumola, 1999). In a nationally representative survey of correctional institutions, Taxman, Perdoni, and Harrison (2007) reported that although most agencies report offering services, few clinical services are offered and less than a quarter of prisoners have daily access to those services. Taxman et al. conclude that drug treatment services and correctional programs for offenders are not appropriate to meet the needs of that population. These findings are of particular concern given evidence on the effectiveness of prison-based treatment (e.g., Knight, Simpson, & Hiller, 1999; Martin, Butzin, Saum, & Inciardi, 1999; Pearson & Lipton, 1999; Wexler, Melnick, Lowe, & Peters, 1999) and because prison-based treatment appears to be cost-effective (Griffith, Hiller, Knight, & Simpson, 1999; McCollister et al., 2003).

There is some argument that findings on in-prison treatment effectiveness represent well-funded, stable programs and may not be true for prison-based programs in general (Farabee et al., 1999). According to the data reported by Taxman et al. (2007), expanded clinical services are needed to meet demands. To make the most effective use of increasingly scarce service dollars, it is important that services that are added, or even those that are being maintained, be based on well-established, evidence-based practices. Friedmann, Taxman, and Henderson (2007) reported that treatment directors in correctional settings use about half of a list of accepted evidence-based practices (EBPs) and that prisons tended to use fewer EBPs than did jails and community correction programs. Correctional programs that offered more EBPs were more likely to be accredited and network-connected with community programs, and to have more training resources and leadership with a high regard for the value of substance abuse treatment and an understanding of EBPs. It is critical that efforts to improve services or add new services or practices be conducted in ways that increase the probability that those services and practices will be successfully adopted, implemented, and sustained. As budgets for treatment continue to decline and state shortfalls require cuts in services to help balance budgets, paying attention to the context of organizational change becomes essential.

Significant barriers to adoption of new EBPs exist for almost all programs that attempt change. Bartholomew, Joe, Rowan-Szal, and Simpson (2007) reported that lack of time, not enough training, and lack of resources were the most frequently cited barriers to adoption of workshop materials in trainings for community counselors and directors. Low tolerance for change also interfered with adoption of new materials (Simpson, Joe, & Rowan-Szal, 2007). While there are significant barriers to change in any organization, correctional settings have their own unique issues (Farabee et al., 1999). Correctional settings must balance security and treatment concerns, with security often taking precedence. Treatment regimens can be interrupted by lockdowns and routine security activities. Discipline for non-compliance is often based on correctional rather than therapeutic responses and may be counter-therapeutic. Treatment for many offenders is often coerced, which has implications for the therapeutic process (e.g., client motivation and engagement). While involuntary treatment can often be effective, it may require different approaches to increase client readiness. Many prisons are based in rural areas, which may limit the pool of available, qualified clinical staff. Counselors who have experience in community-based programs may not be as effective in prison because of problems with overfamiliarization and resistance to custody regulations that can be encountered in prison settings (Farabee et al., 1999).

Organizational Readiness for Change

There is clearly a need for increased levels of services and more effective services in correctional settings. This is exacerbated by shrinking budgets available for treatment and special barriers to change that exist for prison-based programs. Thus it is critical that efforts for adding or improving services be conducted in a context that is optimal for adopting, implementing, and sustaining change. Attempting to implement new processes in a context that is not conducive to change can seriously impact chances of success unless deficiencies are identified and addressed. Increasing emphasis has been placed on the context of organizational change efforts and on increasing the capacity of programs to provide evidence-based treatments (Brown & Flynn, 2002; Flynn & Brown, in press). A number of observers have described the process of transferring new innovations into clinical practice and the organizational characteristics necessary for successful implementation (Aarons, 2006; Fixsen, Naoom, Blasé, Friedman, & Wallace, 2005; Flynn & Simpson, 2009; Klein & Sorra, 1996; Roman & Johnson, 2002; Simpson & Flynn, 2007).

More specifically, the TCU Program Change Model (Flynn & Simpson, 2009; Simpson, 2002; Simpson & Flynn, 2007) describes organizational change as a dynamic, step-based process. It has been developed to improve clinical practices by implementing new initiatives in a sequential manner. Steps include exposure to new evidence-based interventions in training workshops, adopting the new practices (by individuals or groups), which involves decision-making and action, implementing the innovation by expanding the adoption to regular use, and finally sustaining the implementation to become standard practice. Thus, the process begins with a consideration of program needs and resources, structural and functional characteristics, and general readiness to embrace change (Simpson, 2009). Guidelines for conducting program self-evaluations and developing action plans are discussed by Simpson and Dansereau (2007). Integrated models for identifying needed clinical practices and implementing them via the program change model are discussed by Lehman, Simpson, Knight, and Flynn (in press).

This model shows that preparation for change is a critical feature. Surveys of staff needs and functioning provide diagnostic information regarding staff readiness and ability to accept planned changes. Programs need to “know” themselves to adapt to changing environments, and to survive and improve. This is especially critical in times of budget shortfalls, service cutbacks, and program closures. Settings where communication, cohesion, trust, and tolerance for change are lacking will have a much more difficult time surviving and thriving.

Assessing organizational readiness for change

Organizational assessments provide agencies with pertinent information about the health of their organization, and identify organizational barriers to implementation as well as specific clinical needs. Effective innovation implementation results depend on organizational infrastructure – the level of training, experience, and focus of staff – which can be identified through ratings of program needs, clarity of mission, internal functioning, and professional attributes. Deploying practical innovations in an atmosphere of confidence and acceptance requires diagnostic tools to identify staff perceptions that can affect each element of the innovation process.

The ORC survey (Greener, Joe, Simpson, Rowan-Szal, & Lehman, 2007; Lehman, Greener, & Simpson, 2002) includes 25 scales organized under four major domains: (1) program needs/pressures for change; (2) staff attributes; (3) institutional resources; and (4) organizational climate. The first two domains of the ORC – program needs/pressures for change and staff attributes – are particularly relevant for assessing service needs and organizational readiness for implementation (preparation), and the third and fourth domains – involving program resources and climate – represent influences on maintenance of innovations.

To date, the ORC has been administered to over 5,000 substance abuse treatment staff in more than 650 organizations (including work in England and Italy) representing a variety of substance abuse, social, medical, and mental health settings in the U.S. in both community and correctional settings. ORC scores have been shown to be associated with higher ratings of and satisfaction with training, greater openness to innovations (Fuller et al., 2007; Saldana, Chapman, Henggeler, & Rowland, 2007), greater utilization of innovations following training (Simpson & Flynn, 2007), and better client functioning (Broome, Flynn, Knight, & Simpson, 2007; Greener et al., 2007; Lehman et al., 2002). Staff who were more likely to value growth and change, and programs with a more positive and supportive climate (e.g., a clearer mission, higher cohesion, autonomy, and communication, and lower stress), were more likely to report higher utilization of training, although these factors were not strongly related to exposure to training (e.g., more staff exposed to more training opportunities). Programs with more resources available offered more training opportunities (Lehman, Knight, Joe, & Flynn, 2009). In a sample of substance abuse (SA) counselors from eight different correctional institutions in a southwestern state (Garner, Knight, & Simpson, 2007), counselor burnout was found to be associated with more program needs, poorer staffing resources, lower capacity for growth and adaptability, poor clarity of mission, and higher stress levels.

Objectives

To date, most of the research on and use of the ORC has involved community-based substance abuse treatment programs (although the ORC has also been used in a variety of other human service settings). Few data have been published on organizational readiness in correctional settings and how it compares to community programs. Given the high levels of unmet treatment needs in correctional settings, the additional barriers in implementing new practices in correctional settings, and current threats to budgets and programs, it is important to examine organizational functioning and readiness in these settings. Thus, the objectives of the present study are to–

Examine psychometric properties of the ORC in correctional settings;

Describe the staff, program, and training needs of SA counselors in correctional settings; and

Describe organizational readiness for change in terms of staff attributes, institutional resources, and organizational climate for correctional programs in comparison to these domains for community residential and outpatient programs.

METHODS

Samples

Data were collected from counselors in prison-based substance abuse treatment programs and from counselors in residential/inpatient and outpatient community-based SA programs. A total of 165 counselors from 12 correctional programs in two states (one in the Midwest and one in the South) were included in the correctional sample. The correctional programs included all of those in the two states that provided substance abuse treatment services run by private vendors. SA treatment directors at the correctional facilities were contacted for participation and then survey packets were sent for distribution to staff. Participants returned completed surveys directly to research offices. The correctional surveys were conducted in 2009 and 2010. Informed consent documents were included with all of the surveys. A passive consent procedure, approved by the University IRB, was used in which completing and returning the survey indicated consent. Respondents who did not wish to participate could simply return a blank survey or throw their survey away.

Community programs were from three different samples including programs from several Midwestern states and two southern states. Community programs from states represented by the Prairielands Addiction Technology Transfer Center (PATTC) were recruited to participate in workshops sponsored by the PATTC on implementing evidence-based practices. Several months before the workshops, the directors of the participating programs were contacted and asked to have staff complete ORC surveys. Surveys were sent to the program to be distributed to counseling staff and completed surveys were then mailed directly back to research offices at TCU. A similar procedure was used to administer counselor surveys in a southern state. Workshops were sponsored by the state drug and alcohol office and participating programs were contacted several months before the workshops to arrange survey administration. Packets of surveys were sent to the programs for distribution to counselors, who then returned completed surveys by mail to research offices. Surveys in another southern state were coordinated through the state drug and alcohol office. Counselors at participating programs were provided access to an online version of the ORC. The community samples were collected between 2000 and 2004. Informed consent procedures were similar to those described above.

The community samples were grouped into residential/inpatient and outpatient programs. Outpatient methadone programs were not included because the level of counseling services and corresponding resources often differ from other types of treatment modalities. Programs from the community samples associated with hospitals or universities, or located in criminal justice settings, also were eliminated from the sample. The community residential sample included 256 counselors from 61 different programs and the community outpatient sample included 267 counselors from 76 different programs.

Feedback reports were prepared for all participating programs in the different samples. These reports showed profiles based on scale scores aggregated to the program level with comparison 25th and 75th percentile profiles from larger samples of similar programs.

Measures

The Organizational Readiness for Change (ORC; Greener et al., 2007; Lehman et al., 2002) survey was administered to counseling staff at the correctional and community programs. The ORC includes four major domains (21 scales, 125 items), including Needs/Pressures for Change (Program Needs, Staff Needs, Training Needs, Pressures for Change); Institutional Resources (Office, Staff, Training, Equipment/Computers, Internet, Supervision); Staff Attributes (Growth, Efficacy, Influence, Adaptability, Satisfaction); and Organizational Climate (Mission, Cohesion, Autonomy, Communication, Stress, and Openness to Change). The ORC-D4 (see http://www.ibr.tcu.edu/pubs/datacoll/ADCforms.html#OrganizationalAssessments) was administered to the correctional programs. An earlier version, the ORC-S, was administered to the community programs. The ORC-S differed from the ORC-D4 in that it did not include the Supervision and Satisfaction scales and the items for the Needs/Pressures for Change scales were different (with the exception of four items in the Staff Needs scale). Items in the Needs scales (Staff Needs, Program Needs, and Training Needs) have been updated to include areas found to be more relevant to counselors based on previous survey results.

Response categories for the items in the ORC were on a 5-point Strongly Disagree (1) to Strongly Agree (5) scale. Scale scores were computed by taking the average score of the items in the scale (and after reflecting scores on items worded in the opposite direction from the scale construct). Scale scores were then multiplied by 10 to obtain a range of 10 to 50 (with a score of 30 indicating neither agree nor disagree on average). Thus scale scores above 30 indicated at least some average agreement on the concept measured by the scale, and scale scores below 30 indicated at least some disagreement on average.
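The scoring rule described above can be illustrated with a minimal sketch. The item identifiers, the example ratings, and the function name `orc_scale_score` are hypothetical and are not taken from the ORC scoring materials; the sketch only assumes that reflecting a reverse-worded item on a 1 to 5 scale means replacing a rating r with 6 − r.

```python
from statistics import mean

def orc_scale_score(responses, reversed_items=frozenset()):
    """Return a 10-50 scale score from item ratings on a 1-5 agreement scale.

    responses: dict mapping a (hypothetical) item id to a rating from 1 to 5.
    reversed_items: ids of items worded opposite to the scale construct.
    """
    # Reflect reverse-worded items so that a higher rating always means
    # more of the construct (assumed reflection: 6 - rating).
    reflected = [
        6 - rating if item in reversed_items else rating
        for item, rating in responses.items()
    ]
    # Average the items, then multiply by 10 to obtain the 10-50 range.
    return 10 * mean(reflected)

# Hypothetical three-item scale in which "q3" is reverse-worded.
example = {"q1": 4, "q2": 5, "q3": 2}
score = orc_scale_score(example, reversed_items={"q3"})
print(round(score, 1))  # prints 43.3
```

A respondent who answers every item at the neutral midpoint (3) receives a score of 30 under this rule, which matches the interpretation of 30 as neither agreement nor disagreement on average.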

For the items in the Needs/Pressures domain, responses for individual items are presented rather than scale scores to demonstrate the level of agreement with specific needs and pressures. For these items, the percent of staff who agreed (Agree or Strongly Agree) that the item was a need or pressure for change is reported.
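As a brief illustration of the percent-agree statistic, the following sketch counts ratings of Agree (4) or Strongly Agree (5) for a single item. The ratings and the function name `percent_agree` are hypothetical and serve only to show the arithmetic.

```python
def percent_agree(ratings):
    """Percent of ratings that are Agree (4) or Strongly Agree (5)."""
    if not ratings:
        raise ValueError("no ratings supplied")
    agreeing = sum(1 for rating in ratings if rating >= 4)
    return 100 * agreeing / len(ratings)

# Hypothetical ratings from ten counselors on one needs item.
ratings = [5, 4, 3, 2, 4, 1, 5, 4, 3, 4]
print(round(percent_agree(ratings), 1))  # prints 60.0
```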

In addition to the ORC items, the survey also included a section on demographics and background and structural information about the organization. A Program Information Form was also completed by the community programs that enabled classification of modality and setting.

Analyses

Perceptions of institutional resources, staff attributes, and organizational climate were compared among correctional counselors and counselors from residential/inpatient and outpatient community treatment programs using SAS Proc Mixed. Because counselors from the same program cannot be considered independent in their perceptions of organizational characteristics, an analytical approach that took into account the nesting of counselors within programs was needed. Proc Mixed provided results for an overall ANOVA-type comparison among the three groups, as well as pairwise comparisons when the overall statistic was significant, while accounting for the nesting within programs. In addition, effect sizes (Cohen’s d; Cohen, 1988) for the differences between means on the ORC scales were computed (the difference between means divided by the pooled standard deviation).
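The effect size computation can be sketched as follows. The text specifies only that the difference between means was divided by the pooled standard deviation; the particular pooling formula used here (sample variances weighted by their degrees of freedom) is an assumption, and the scores are hypothetical.

```python
from math import sqrt
from statistics import mean, variance

def cohens_d(group_a, group_b):
    """Cohen's d: difference between means divided by the pooled SD."""
    n_a, n_b = len(group_a), len(group_b)
    # Assumed pooling: sample variances weighted by degrees of freedom.
    pooled_var = (
        (n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)
    ) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / sqrt(pooled_var)

# Hypothetical scale scores for two small groups of counselors.
community = [30, 32, 34]
correctional = [26, 28, 30]
print(cohens_d(community, correctional))  # prints 2.0
```

Note that this sketch ignores the nesting of counselors within programs; it illustrates only the descriptive effect size, not the mixed-model tests.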

RESULTS

Sample descriptions

The three samples included a total of 688 counselors (165 correctional, 256 community residential, and 267 community outpatient) from 149 programs (although many of the programs in the community samples had multiple locations or units). Demographic and background characteristics are presented in Table 1. Counselors in all three groups averaged around 45 years of age and just under two-thirds were female (around 65% to 67%). The majority of counselors were White (56% for residential to 66% for outpatient). Community residential counselors included a higher percentage of Black counselors (28%) than did correctional (10%) or community outpatient (14%). Community outpatient counselors were most likely to have a college or advanced degree (60%) compared to less than half of correctional (49%) and residential (44%) counselors. More than half of each of the three groups carried some form of certification (ranging from 56% for correctional to 62% for residential counselors). However, correctional counselors who were not yet certified were more likely than community counselors to be in an internship program (34% compared to 15% and 18%).

Table 1.

Demographics and Background

| Characteristic | Correctional | Community Residential | Community Outpatient | prob |
|---|---|---|---|---|
| Number of Programs | 12 | 61 | 76 | |
| Number of Counselors | 165 | 256 | 267 | |
| Average age | 46.2 | 45.5 | 45.1 | |
| Gender | | | | |
| Female | 66.9% | 64.8% | 66.7% | |
| Race/Ethnicity | | | | |
| White | 61.6% | 55.9% | 66.2% | |
| Black | 10.4%A | 28.0%B | 13.9%A | <.001 |
| Hispanic | 17.1% | 11.4% | 15.0% | |
| Education | | | | |
| College or advanced degree | 48.5%AB | 44.2%B | 59.5%A | .006 |
| Certification | | | | |
| Not certified | 9.8% | 20.1% | 26.4% | |
| Certified | 56.1% | 62.2% | 58.9% | |
| Intern | 34.2%A | 17.7%B | 14.7%B | .004 |
| Years Experience in SA | | | | |
| 3 or more years | 49.4%A | 67.9%B | 64.9%B | .013 |
| Years on job | | | | |
| 3 or more years | 23.9% | 34.5% | 36.4% | |
| Client load | | | | |
| 20 or more clients | 70.6%A | 9.0%B | 48.5%A | <.001 |

Note: Values with different superscripts significantly differed at p < .05 in post hoc pairwise comparisons.

Correctional counselors were less likely than community residential or community outpatient counselors to have at least 3 years of experience in substance abuse treatment (49% compared to 68% and 65%, respectively). Correctional counselors were also less likely to report being in their current job for at least 3 years (24% compared to 35% and 36%), although the differences were not statistically significant. Finally, correctional counselors were more likely to report having a client load of 20 or more clients (71%) compared to community outpatient (49%) or community residential (9%) counselors.

Program, staff and training needs

Correctional staff perceptions of staff needs, program needs, and training needs are shown in Table 2. This table shows the percent agreeing with the item (sum of Agree and Strongly Agree) for each item under the three areas. Items within each area are sorted by level of agreement by correctional staff; items in which there is strongest agreement that it is a need are listed first. For clinical staff needs, more than 50% of correctional staff agreed that nine of the ten included items were needs. Counselors reported the strongest agreement that identifying and using evidence-based practices was a need (70%) followed by improving behavioral management of clients (67%). More than half of correctional counselors in the sample also agreed that staff needed guidance for improving cognitive focus of clients during group counseling, rapport with clients, client thinking and problem-solving skills, and increasing program participation, as well as using client assessments to guide clinical care and program decisions, to document client improvements, and to identify client needs.

Table 2.

Staff, Program, and Training Needs for Correctional Counselors

| Item | % Agree |
|---|---|
| Clinical staff at your program needs guidance in– | |
| 10. identifying and using evidence-based practices. | 69.8% |
| 8. improving behavioral management of clients. | 66.7% |
| 9. improving cognitive focus of clients during group counseling. | 59.0% |
| 7. improving client thinking and problem solving skills. | 58.9% |
| 5. increasing program participation by clients. | 56.8% |
| 2. using client assessments to guide clinical care and program decisions. | 53.7% |
| 3. using client assessments to document client improvements. | 52.1% |
| 6. improving rapport with clients. | 51.9% |
| 1. assessing client needs. | 50.9% |
| 4. matching client needs with services. | 48.5% |
| Your organization needs guidance in– | |
| 17. improving communications among staff. | 68.3% |
| 16. improving relations among staff. | 62.2% |
| 19. improving billing/financial/accounting procedures. | 61.1% |
| 13. assigning or clarifying staff roles. | 50.6% |
| 12. setting specific goals for improving services. | 50.3% |
| 18. improving record keeping and information systems. | 50.0% |
| 14. establishing accurate job descriptions for staff. | 43.9% |
| 15. evaluating staff performance. | 42.1% |
| 11. defining its mission. | 23.8% |
| You need more training for– | |
| 22. new methods/developments in your area of responsibility. | 55.8% |
| 23. new equipment or procedures being used or planned. | 54.5% |
| 25. new laws or regulations you need to know about. | 53.3% |
| 24. maintaining/obtaining certification or other credentials. | 49.1% |
| 26. management or supervisory responsibilities. | 45.7% |
| 21. specialized computer applications (e.g., data systems). | 38.2% |
| 20. basic computer skills/programs. | 26.1% |

Several of the items on staff needs were also included on the community program surveys. Correctional counselors were more likely than community residential and outpatient counselors to agree that they needed guidance in using client assessments to guide clinical care and program decisions (54% compared to 32% and 38%, respectively), in assessing client needs (51% compared to 27% and 28%), and in matching client needs with services (49% compared to 36% and 37%). In addition, correctional counselors and community outpatient counselors were more likely than community residential counselors to agree they needed guidance in increasing program participation by clients (57% and 58% compared to 38%). All comparisons were significant at p < .05 based on overall F-tests from SAS Proc Mixed.

In terms of organizational needs, correctional staff perceived the most important needs to include improving communications among staff (68%), improving relations among staff (62%), and improving billing/financial/accounting procedures (61%). Half of counselors agreed that clarifying staff roles, setting specific goals, and improving record keeping were important needs. Establishing accurate job descriptions, evaluating staff performance and defining the organization’s mission were less likely to be perceived as important needs.

More than half of the correctional counselors agreed that they need more training for new methods/developments in their area of responsibility, for new equipment or procedures being used or planned, and for new laws or regulations they need to know about. Just under half of correctional counselors agreed that maintaining or obtaining certification and management/supervisory responsibilities were important training needs.

Correctional counselors viewed pressures for change (not shown in the table) as coming primarily from internal sources, including program management (62%) and other staff (62%). Less than 40% of correctional counselors viewed pressures as coming from external sources such as funding agencies, accreditation or licensing authorities, board members, or community groups. Community residential and outpatient counselors also were most likely to view pressures for change as coming from program managers (over 60% agreement), but were less likely than correctional counselors to agree that pressures for change came from other staff members (54% and 42%). However, more than 50% of counselors from community programs agreed that funding agencies and accreditation or licensing authorities were also significant sources of pressures for change.

ORC scale reliabilities

A list of domains and brief descriptions of each of the scales within the four domains for the ORC are included in Table 3. Scale reliabilities (Cronbach’s alpha) computed for the correctional sample and two community samples are also reported. The Staff Needs, Program Needs, Training Needs, Satisfaction, and Supervision scales are new and reliabilities have not previously been reported. Results for these scales in Table 3 show acceptable reliabilities with alphas above .80 for all of the scales except Satisfaction, which had an alpha of .78. Generally, the scale reliabilities reported here for the correctional sample are similar to reliabilities for the two community samples and to those reported by Lehman et al. (2002) for community samples, with a few exceptions. Alphas for Equipment, Internet, and Autonomy were below .50 in the correctional sample (although alphas for Autonomy in the two community samples were also relatively low at .51 and .58).
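For readers less familiar with the statistic, a minimal sketch of Cronbach’s alpha follows, using the standard formula α = k/(k − 1) × (1 − Σ item variances / variance of total scores). The response matrix is hypothetical, and this is an illustration of the formula rather than the software used for the analyses reported here.

```python
from statistics import variance

def cronbach_alpha(item_scores):
    """Cronbach's alpha for a list of respondents' item ratings.

    item_scores: list of rows, one row per respondent, one rating per item.
    """
    k = len(item_scores[0])                      # number of items
    columns = list(zip(*item_scores))            # ratings grouped by item
    item_var_sum = sum(variance(col) for col in columns)
    total_var = variance([sum(row) for row in item_scores])
    return k / (k - 1) * (1 - item_var_sum / total_var)

# Hypothetical ratings: four respondents, three items.
responses = [
    [4, 5, 4],
    [2, 3, 2],
    [5, 4, 5],
    [3, 3, 4],
]
alpha = cronbach_alpha(responses)
print(round(alpha, 3))  # prints 0.875
```

When items within a scale are weakly or negatively intercorrelated, the variance of the total score shrinks relative to the sum of the item variances, and alpha falls accordingly.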

Table 3.

Organizational Readiness for Change Scales with Reliabilities (Cronbach’s alpha)

| Scale | Correctional | Community Residential | Community Outpatient |
|---|---|---|---|
| Program Needs/Pressures for Change | | | |
| Treatment Staff Needs -- perceptions about program needs with respect to improving client functioning. | .91 | na | na |
| Program Needs -- perceptions about program strengths, weaknesses, and issues that need attention. | .88 | na | na |
| Training Needs -- perceptions of training needs in several technical and knowledge areas. | .81 | na | na |
| Pressure for Change -- perceptions about pressures from internal (e.g., target constituency, staff, or leadership) or external (e.g., regulatory and funding) sources. | .68 | .71 | .70 |
| Staff Attributes | | | |
| Growth -- extent to which staff members value and use opportunities for professional growth | .69 | .65 | .68 |
| Efficacy -- staff confidence in their own professional skills and performance | .81 | .69 | .77 |
| Influence -- an index of staff interactions, sharing, and mutual support | .82 | .80 | .79 |
| Adaptability -- ability of staff to adapt effectively to new ideas and change. | .65 | .57 | .74 |
| Satisfaction -- general satisfaction with job and work environment | .78 | na | na |
| Institutional Resources | | | |
| Offices -- adequacy of office equipment and physical space available | .68 | .57 | .65 |
| Staffing -- adequacy of staff assigned to do the work | .64 | .71 | .70 |
| Training Resources -- emphasis and scheduling for staff training and education | .55 | .59 | .59 |
| Equipment -- adequacy and use of computerized systems and equipment | .43 | .56 | .72 |
| Internet -- staff access and use of e-mail and the internet for professional communications, networking, and obtaining work-related information. | .42 | .78 | .74 |
| Supervision -- staff confidence in agency leaders and perceptions of co-involvement in the decision making process | .86 | na | na |
| Organizational Climate | | | |
| Mission -- captures staff awareness of agency mission and clarity of its goals | .81 | .74 | .78 |
| Cohesion -- workgroup trust and cooperation | .84 | .89 | .90 |
| Autonomy -- freedom and latitude staff members have in doing their jobs | .47 | .51 | .58 |
| Communication -- adequacy of information networks between staff and between staff and management. | .86 | .80 | .81 |
| Stress -- perceived strain, stress, and role overload. | .83 | .76 | .81 |
| Change -- staff attitudes about agency openness and efforts in keeping up with changes that are needed. | .68 | .71 | .70 |

The alphas for Equipment and Internet in the correctional sample were particularly low and were lower than those in the two community samples. For the Internet scale in particular, the low reliability is partially a reflection of the very low means on the items in the scale and thus low variance. It should be noted that scales in the Resources domain were developed more as brief checklists of critical resource categories that could be summed together as an index. The low reliabilities for Equipment and Internet for correctional programs are possibly a reflection of programs having to choose a limited set of resources in these categories. When different programs choose different resources (e.g., one program might have staff email access but very limited internet access and another program might have more available internet access but low use of the internet for accessing drug treatment information), intercorrelations among the specific resources could be low and may even be negative, resulting in low reliabilities.

Staff attributes, institutional resources and organizational climate

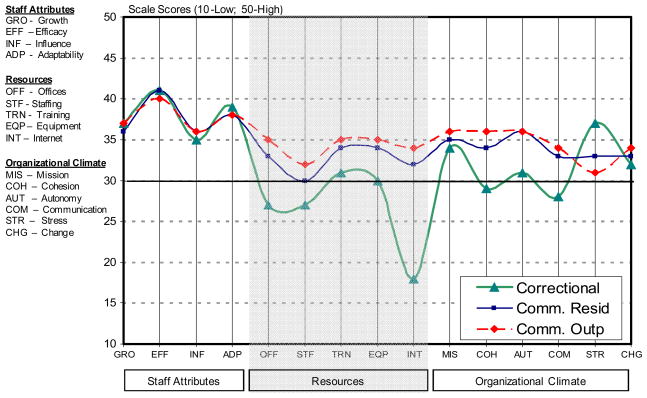

Figure 1 shows profiles for correctional, community outpatient, and community residential counselors for ORC scales under the staff attributes, institutional resources, and organizational climate domains. Overall, the profiles for the community outpatient and community residential counselors tend to be similar across the three domains, although residential counselors tend to have slightly lower scores on resources and report slightly lower average cohesion and slightly higher stress levels than do community outpatient counselors. The most striking differences shown in the profiles are that correctional counselors reported significantly lower scores on resources and on organizational climate scales including cohesion, autonomy, and communication, and higher scores on stress. Correctional and community counselors have nearly identical profiles on the staff attribute scales.

Figure 1.

Comparisons of ORC mean scores for correctional and community residential/outpatient treatment counselors.

The three counselor samples were compared on each scale score using SAS PROC MIXED, which accounts for the nesting of counselors within programs. Statistical tests on the four Staff Attributes scales indicated that there were no significant differences among the three groups on any of the scales. As shown in Figure 1, staff perceptions of their attributes, including Growth, Efficacy, Influence, and Adaptability, were all very positive, with mean scores above 35 for each of the four scales.
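Why nesting matters can be shown with a short sketch. The Python code below is not the mixed-model analysis reported here; it is a simplified illustration that estimates an intraclass correlation (ICC) from a balanced one-way layout and converts it to a design effect, which indicates how much counselors within the same program resemble one another and how far that similarity reduces the effective sample size. The function names and the program scores are hypothetical.

```python
from statistics import mean


def icc_oneway(groups):
    """ICC(1) from a one-way ANOVA layout; assumes equal-sized groups."""
    n_groups = len(groups)
    size = len(groups[0])
    grand_mean = mean(score for group in groups for score in group)
    group_means = [mean(group) for group in groups]
    # Mean square between programs and mean square within programs.
    ms_between = size * sum((m - grand_mean) ** 2 for m in group_means) / (n_groups - 1)
    ms_within = sum(
        (score - m) ** 2 for group, m in zip(groups, group_means) for score in group
    ) / (n_groups * (size - 1))
    return (ms_between - ms_within) / (ms_between + (size - 1) * ms_within)


def design_effect(icc, cluster_size):
    """Variance inflation from clustering: 1 + (m - 1) * ICC."""
    return 1 + (cluster_size - 1) * icc


# Hypothetical scale scores for three programs with three counselors each.
programs = [[30, 34, 32], [40, 38, 42], [24, 28, 26]]

icc = icc_oneway(programs)
deff = design_effect(icc, cluster_size=3)
print(f"ICC: {icc:.3f}, design effect: {deff:.2f}")
```

When the ICC is well above zero, as in this made-up example, treating counselors as independent observations would overstate the precision of group comparisons, which is the problem a model with a program-level random effect is meant to address.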

Overall differences were statistically significant for all of the Institutional Resource scales (Offices, p < .001; Staffing, p = .006; Training, p = .013; Equipment, p = .002; Internet, p < .001). Pairwise comparisons showed that perceptions of resources did not differ significantly (all p-values > .05) between community residential and community outpatient counselors, but that perceptions of resources were significantly lower for correctional counselors for all of the resource scales. For correctional counselors, perceptions of office, staffing and internet resources were low, with means below the scale mid-point of 30. Internet resources were particularly low for correctional counselors with a scale mean below 20. Perceptions of training and equipment resources for correctional counselors were somewhat better although mean scale scores were very near the scale midpoint of 30 and were significantly lower than those for community counselors.

Effect sizes for the differences between each pair of counselor groups were computed. For the five Resource scales, effect sizes for the difference between community outpatient and community residential counselors were small, ranging from 0.15 for Staffing to 0.22 for Offices. Effect sizes for the differences between correctional and community counselors were much larger. For comparisons between correctional and community outpatient counselors, effect sizes ranged from 0.54 for Training to 1.74 for Internet access. For the differences between correctional and community residential counselors, effect sizes ranged from 0.36 for Training to 1.55 for Internet access. Cohen (1988) has described effect sizes of .5 or greater as representing large effects.
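The text does not state the exact formula used for these effect sizes. As a minimal sketch, the Python code below assumes the common standardized mean difference (Cohen's d) with a pooled standard deviation. The function name `cohens_d` and the two score lists are hypothetical and are not drawn from the study data.

```python
from math import sqrt
from statistics import mean, variance


def cohens_d(group_a, group_b):
    """Standardized mean difference using the pooled standard deviation."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_variance = (
        (n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)
    ) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / sqrt(pooled_variance)


# Hypothetical scale scores for two small groups of counselors.
community_scores = [38, 42, 35, 40, 45]
correctional_scores = [22, 18, 25, 15, 20]

d_example = cohens_d(community_scores, correctional_scores)
print(f"Cohen's d: {d_example:.2f}")
```

A positive value indicates a higher mean in the first group; swapping the argument order reverses the sign, which is why a scale such as Stress can carry a negative effect size when the remaining scales are positive.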

Statistical tests for the six Climate scales indicated that there were significant between-group differences on all of the scales (p-values < .001 for all of the scales with the exception of Mission, which had p = .041). Pairwise comparisons indicated that community residential and outpatient counselors differed significantly only for stress levels, but that correctional counselors differed significantly from community outpatient counselors on all of the Climate scales and differed significantly from community residential counselors on all of the Climate scales except for Mission and Change. That is, correctional counselors on average rated their cohesion, autonomy, and communication as significantly lower and their stress levels higher than did community residential and outpatient counselors. Correctional counselors reported mean scores on Cohesion, Autonomy, and Communication near the scale midpoint, whereas community outpatient and residential counselors had scores considerably above the midpoint, in the more positive range. An average Stress score above 35 indicated relatively higher stress levels for correctional counselors.

Effect sizes were also computed for the differences between each pair of groups. Similar to the Resource scales, effect sizes for differences between community residential and community outpatient counselors were relatively small, ranging from .05 for Autonomy to .25 for Stress. Effect sizes for differences between correctional and community outpatient programs were larger, ranging from .39 and .40 for Change and Mission to .85 for Autonomy. Effect sizes for the difference between correctional and community residential counselors were small for Change (.22) and Mission (.24), but were in the large range for Stress (−.45), Cohesion (.48), Communication (.53), and Autonomy (.80).

DISCUSSION

There is a great need for increasing the quantity and quality of treatment programs in correctional settings. Taxman et al. (2007) estimated that only about a quarter of offenders receive daily treatment services in prison even though up to 70% of offenders are in need of such services. Farabee et al. (1999) described a number of barriers to effective treatment in prisons, including inadequate assessments that did not ensure that offenders most in need of services actually received them; difficulty recruiting, training, and retaining qualified staff; and frequent conflict between therapeutic and security concerns.

These issues run concurrently with an emphasis at national and state levels on improving treatment outcomes by implementing more effective, evidence-based interventions and with increased attention to models of program change that describe the process of adopting, implementing, and sustaining these new practices. In light of these trends, the TCU Program Change model has described a step-based process for improving clinical practices by implementing the innovations in a sequential manner. A measurement system, including the ORC survey, has been developed to help assess important elements of this process.

The data presented in this paper represent one of the first attempts to describe organizational readiness factors in correctional treatment settings, especially as compared to community-based programs. The results demonstrate a strong need for new evidence-based practices, with a large majority of correctional treatment counselors reporting that they need guidance in identifying and using evidence-based practices, for improving behavioral management of clients, for improving their cognitive focus and thinking and problem-solving skills, and for using client assessments to guide clinical care and document client improvements. Counselors also strongly support help for improving organizational climate factors such as communication, staff relations, and clarifying staff roles. Correctional staff also recognize a need for more training on new developments in their areas of responsibility, for new equipment or procedures being used, and on new laws or regulations that affect their jobs.

Although correctional staff recognize strong needs in these areas, they also report significant deficiencies in areas that are likely to interfere with successful implementation and adoption of new evidence-based practices. Correctional staff report significantly lower levels of important resources, especially compared to counselors in community-based programs. In fact, large effect sizes further highlight these differences. Even apart from these deficiencies, scale means for correctional programs show a definite lack of Office and Staffing resources with scores below the scale midpoints of 30, and an even greater lack of Internet resources with its mean score below 20.

Previous research has highlighted the importance of adequate resources for successful program change and successful adoption of new EBPs (Bartholomew et al., 2007; Garner et al., 2007; Lehman et al., 2009; Simpson et al., 2007). The lack of resources in correctional programs highlights an important area of concern for improving clinical services especially in a time of tightening budgets. Internet resources are a particular area of concern. The lack of adequate internet accessibility is a function of security concerns in many facilities. Many staff simply do not have internet or email access inside prison walls. It is a significant barrier to successful implementation because of the lack of electronic educational opportunities to learn about new developments in the field and the increasing use of the web as a dissemination platform (e.g., ATTCs, NIDA/SAMHSA blending products, TCU manuals and forms).

In addition to inadequate resources, counselors in correctional facilities also reported significantly lower scores on important organizational climate dimensions. Of particular concern are low cohesion, autonomy, and communication, and high stress levels. Attempts to implement new clinical practices in programs whose staff are not cohesive, have little autonomy to act independently, do not communicate well with each other or with management, and have high stress levels are going to be much more difficult than in programs with better organizational climates. These results point to the importance of self-diagnosis for programs and highlight the need for interventions to improve climate areas such as communication and cohesion before attempting to implement complex new clinical practices, in order to improve the chances of successful and sustainable implementation.

According to Farabee et al. (1999), correctional programs often have a difficult time recruiting and retaining qualified staff. Examination of demographic patterns of the correctional and the community samples partially supports this contention. Just under half of the counselors in the correctional sample had three or more years of experience in the drug treatment field compared to over 60% for counselors in community-based programs. Correctional counselors also tended to have less time on the job. However, corrections-based counselors were slightly more likely to have a college or advanced degree than were community residential counselors, although less likely than outpatient counselors. On a positive note, correctional counselors who were not certified were more likely than non-certified counselors in community programs to be in internship programs, which include higher levels of supervision.

Limitations

Several caveats about the results of this study are warranted. First, the samples of both correctional and community programs, and counselors within participating programs, are not necessarily representative of all community or correctional programs. The community programs were located in the Midwest, the South and other parts of the country were not represented. The correctional programs represent two different states. Programs in other parts of the country may have different characteristics. Many of the community programs in the sample participated in training workshops and thus may differ from programs that did not have the resources or motivation to attend such workshops. Because of the method used for distributing staff surveys, we do not have data on the return rate of the surveys and thus do not know how representative the counselors who completed surveys were of their specific programs. And finally, the community-based counselors were surveyed several years prior to the correctional surveys. Thus, some of the results could be influenced by factors related to the different time points.

Conclusions

In one of the first examinations of organizational readiness for change in a sample of corrections-based substance abuse treatment programs, a number of organizational obstacles were identified that can interfere with improving treatment by implementing new evidence-based practices. These obstacles include staff with less experience and high case loads, inadequate resources, and weak organizational climates that can present critical barriers to successful program change. In times of shrinking budgets for treatment services, careful planning and assessment of the organizational context is a requisite when attempting program changes to maximize opportunities for success.

In light of these findings, future research should focus on additional organizational contexts of correctional programs. For example, the correctional programs included in this paper were from two different states and all the programs had treatment services provided by external vendors. Needs, resources, and organizational climate might be different in programs from other states or regions or in those with other types of treatment delivery systems. The contribution of staff characteristics to the differences in perceptions between correctional and community programs should be examined. And finally, methods of implementing new practices in correctional settings need to be studied to determine effective approaches which might be different than those approaches used in community settings due to the differences in barriers to change between the two settings.

Acknowledgments

This program of research has been funded primarily under a series of grants to Texas Christian University (R37DA13093, D. D. Simpson, Principal Investigator, and R01DA025885, W. E. K. Lehman, Principal Investigator) from the National Institute on Drug Abuse, National Institutes of Health (NIDA/NIH). The research protocol was approved by the Missouri Department of Corrections and Texas Department of Criminal Justice, and was conducted in cooperation with Gateway Foundation. Interpretations and conclusions, however, are entirely those of the authors and do not necessarily represent the position of the NIDA, NIH, or Department of Health and Human Services. TCU Short Forms and related user guides are available from the IBR Website at www.ibr.tcu.edu. Assessment forms, intervention manuals, and other addiction treatment resources can be downloaded and used without cost in non-profit applications.

References

- Aarons GA. Transformational and transactional leadership: Association with attitudes toward evidence-based practice. Psychiatric Services. 2006;57(8):1162–1168. doi: 10.1176/appi.ps.57.8.1162.

- Bartholomew NG, Joe GW, Rowan-Szal GA, Simpson DD. Counselor assessments of training and adoption barriers. Journal of Substance Abuse Treatment. 2007;33:192–199. doi: 10.1016/j.jsat.2007.01.005.

- Belenko S, Peugh J. Estimating drug treatment needs among state prison inmates. Drug and Alcohol Dependence. 2005;77:269–281. doi: 10.1016/j.drugalcdep.2004.08.023.

- Broome KM, Flynn PM, Knight DK, Simpson DD. Program structure, staff perceptions, and client engagement in treatment. Journal of Substance Abuse Treatment. 2007;33(2):149–158. doi: 10.1016/j.jsat.2006.12.030.

- Brown BS, Flynn PM. The federal role in drug abuse technology transfer: A history and perspective. Journal of Substance Abuse Treatment. 2002;22(4):245–257. doi: 10.1016/s0740-5472(02)00228-3.

- Cohen J. Statistical power analysis for the behavioral sciences. 2nd ed. New Jersey: Lawrence Erlbaum; 1988.

- Farabee D, Prendergast M, Cartier J, Wexler H, Knight K, Anglin MD. Barriers to implementing effective drug treatment programs. The Prison Journal. 1999;79:150–162.

- Fixsen DL, Naoom SF, Blasé KA, Friedman RM, Wallace F. Implementation research: A synthesis of the literature. Tampa, FL: University of South Florida, Louis de la Parte Florida Mental Health Institute, The National Implementation Research Network; 2005. (FMHI Publication #231)

- Flynn PM, Brown BS. Implementation research: Issues and prospects. Addictive Behaviors. In press. doi: 10.1016/j.addbeh.2010.12.020.

- Flynn PM, Simpson DD. Adoption and implementation of evidence-based treatment. In: Miller PM, editor. Evidence-based addiction treatment. San Diego, CA: Elsevier; 2009. pp. 419–437.

- Friedmann PD, Taxman FS, Henderson CE. Evidence-based treatment practices for drug-involved adults in the criminal justice system. Journal of Substance Abuse Treatment. 2007;32:267–277. doi: 10.1016/j.jsat.2006.12.020.

- Fuller BE, Rieckmann T, Nunes EV, Miller M, Arfken C, Edmundson E, McCarty D. Organizational Readiness for Change and opinions toward treatment innovations. Journal of Substance Abuse Treatment. 2007;33(2):183–192. doi: 10.1016/j.jsat.2006.12.026.

- Garner BR, Knight K, Simpson DD. Burnout among corrections-based drug treatment staff. International Journal of Offender Therapy and Comparative Criminology. 2007;51:510–522. doi: 10.1177/0306624X06298708.

- Greener JM, Joe GW, Simpson DD, Rowan-Szal GA, Lehman WEK. Influence of organizational functioning on client engagement in treatment. Journal of Substance Abuse Treatment. 2007;33:139–148. doi: 10.1016/j.jsat.2006.12.025.

- Griffith JD, Hiller ML, Knight K, Simpson DD. A cost-effectiveness analysis of in-prison therapeutic community treatment and risk classification. The Prison Journal. 1999;79:352–368.

- Klein KJ, Sorra JS. The challenge of innovation implementation. Academy of Management Review. 1996;21(4):1055–1080.

- Knight K, Simpson DD, Hiller ML. Three-year reincarceration outcomes for in-prison therapeutic community treatment in Texas. The Prison Journal. 1999;79:337–351.

- Lehman WEK, Greener JM, Simpson DD. Assessing organizational readiness for change. Journal of Substance Abuse Treatment. 2002;22(4):197–209. doi: 10.1016/s0740-5472(02)00233-7.

- Lehman WEK, Knight DK, Joe GW, Flynn PM. Program resources and utilization of training in outpatient substance abuse treatment programs. Poster presentation at the Addiction Health Services Research annual meeting; San Francisco, CA. 2009 Oct.

- Lehman WEK, Simpson DD, Knight DK, Flynn PM. Integration of treatment innovation planning and implementation: Strategic process models and organizational challenges. Psychology of Addictive Behaviors. In press. doi: 10.1037/a0022682.

- Martin SS, Butzin CA, Saum CA, Inciardi JA. Three-year outcomes of therapeutic community treatment for drug-involved offenders in Delaware. The Prison Journal. 1999;79:294–320.

- McCollister KE, French MT, Prendergast M, Wexler H, Sacks S, Hall E. Is in-prison treatment enough? A cost-effectiveness analysis of prison-based treatment and aftercare services for substance-abusing offenders. Law and Policy. 2003;25:63–82.

- Mumola CJ. Substance abuse and treatment, state and federal prisoners, 1997 (Bureau of Justice Statistics Special Report; NCJ-172871). Washington, DC: U.S. Department of Justice, Office of Justice Programs; 1999.

- Pearson FS, Lipton DS. A meta-analytic review of the effectiveness of corrections-based treatments for drug abuse. The Prison Journal. 1999;79:384–410.

- Roman PM, Johnson JA. Adoption and implementation of new technologies in substance abuse treatment. Journal of Substance Abuse Treatment. 2002;22(4):211–218. doi: 10.1016/s0740-5472(02)00241-6.

- Saldana L, Chapman JE, Henggeler SW, Rowland MD. Organizational readiness for change in adolescent programs: Criterion validity. Journal of Substance Abuse Treatment. 2007;33(2):159–169. doi: 10.1016/j.jsat.2006.12.029.

- Simpson DD. A conceptual framework for transferring research to practice. Journal of Substance Abuse Treatment. 2002;22:171–182. doi: 10.1016/s0740-5472(02)00231-3.

- Simpson DD. Organizational readiness for stage-based dynamics of innovation implementation. Research on Social Work Practice. 2009;19:541–551.

- Simpson DD, Dansereau DF. Assessing organizational functioning as a step toward innovation. Science & Practice Perspectives. 2007;3(2):20–28. doi: 10.1151/spp073220.

- Simpson DD, Flynn PM. Moving innovations into treatment: A stage-based approach to program change. Journal of Substance Abuse Treatment. 2007;33(2):111–120. doi: 10.1016/j.jsat.2006.12.023.

- Simpson DD, Joe GW, Rowan-Szal GA. Linking the elements of change: Program and client responses to innovation. Journal of Substance Abuse Treatment. 2007;33:201–210. doi: 10.1016/j.jsat.2006.12.022.

- Taxman FS, Perdoni ML, Harrison LD. Drug treatment services for adult offenders: The state of the state. Journal of Substance Abuse Treatment. 2007;32:239–254. doi: 10.1016/j.jsat.2006.12.019.

- Wexler HK, Melnick G, Lowe L, Peters J. Three-year reincarceration outcomes for Amity in-prison therapeutic community and aftercare in California. The Prison Journal. 1999;79:321–336.