Abstract

The “ABCD” mnemonic to assist non-experts’ diagnosis of melanoma is widely promoted; however, there are good reasons to be sceptical about public education strategies based on analytical, rule-based approaches such as the ABCD (i.e. Asymmetry, Border Irregularity, Colour Uniformity and Diameter). Evidence suggests that accurate diagnosis of skin lesions is achieved predominantly through non-analytical pattern recognition (via training examples) and not by rule-based algorithms. If the ABCD criteria are to function as a useful public education tool, untrained novices must be able to use them reliably, with low inter-observer and intra-diagnosis variation but maximal inter-diagnosis differences. The three subjective properties (the ABCs of the ABCD) were investigated experimentally: 33 laypersons scored 40 randomly selected lesions (10 lesions × 4 diagnoses: benign naevi, dysplastic naevi, melanomas, seborrhoeic keratoses) for the three properties on visual analogue scales. The results (n = 3,960 scores) suggest that novices cannot use the ABCs reliably to discern benign from malignant lesions.

Keywords: ABCD, non-analytical reasoning, pattern recognition, skin cancer, melanoma, dermatology diagnosis

The “ABCD” rule to aid in the diagnosis of early malignant melanoma has recently celebrated its 25th anniversary (1). This mnemonic was introduced in 1985 to aid non-experts’ macroscopic diagnosis of pigmented lesions, and over the years it has been promoted widely in an attempt to facilitate the earlier detection of melanomas (2). In the 25 years since its inception, the use of the ABCD has moved from assisting non-expert physicians’ diagnosis, through educating the public in intensive clinician-led programmes, to being at the heart of most general public education strategies, with the criteria described on the public websites of the American Academy of Dermatology (AAD) (3), British Association of Dermatologists (4), Australasian College of Dermatologists (5) and European Academy of Dermatology and Venereology (EADV) (6). Although the fundamental four-part ABCD mnemonic has been widely adopted, there has been surprisingly little apparent validation of its utility as a general public education strategy.

Given that lay individuals first identify the majority of melanomas and are also responsible for the largest proportion of the delays in its diagnosis, reliable and accurate information is essential to assist them in early detection (7-10). The importance of accurate patient information has recently been further highlighted because, thus far, the numerous strategies initiated to improve screening and the early detection of melanoma have not resulted in a substantial reduction in the proportion of tumours with prognostically unfavourable thickness (11). There is now mounting evidence that calls into question the use of the ABCD rule as a general public education strategy (12, 13). The majority of studies cited as evidence for the mnemonic’s adoption have involved either clinician-performed assessments (14-18) or intensive lay education (19, 20), and, as we detail below, extrapolating their findings to general public health promotion is methodologically limited by two factors: the roles of experience and of prior examples.

The role of experience

Since the late 1980s the cognitive processes involved in dermatological diagnosis have been under investigation (21-25), most notably in the laboratory of Geoff Norman and colleagues. At the risk of some simplification, the processes involved in diagnosis can be viewed either as explicit and based on conscious analytical reasoning or, alternatively, as implicit and holistic, and hidden from the conscious view of the diagnostician. Dermatologists appear to use predominantly the latter, non-analytical reasoning, derived through experience of prior examples, to identify skin lesions. This overall pattern recognition cannot be unlearnt and thus has a carry-over effect on any attempt at analytical rule application (26-30). In addition, study designs in which experts are asked to explain their diagnoses inherently over-emphasize algorithmic reasoning by promoting intentional rather than incidental analytical processing (31). It has even been suggested that experienced clinicians make a diagnosis intuitively, then alter their analytical assessment to fit their preconceptions about the relationship between these features (such as the ABCD) and the diagnosis (such as melanoma) (31, 32). Certainly, the only prospective study of dermatologists’ recognition patterns confirmed that, whilst analytical criteria (the ABCD rules) could be used to predict malignancy, they were not how dermatologists actually arrived at the correct diagnosis; instead, the experts seemed to use overall or differential pattern recognition (the “ugly duckling” sign (33)) (23).

The role of prior examples

The exact number of prior examples required to alter analytical assessments significantly is unknown, but we do know that these carry-over effects do not apply only to seasoned clinicians; novices have been shown to exhibit this bias after only a few prior examples (27, 28, 34). We do not fully understand how novices naturally assess skin lesions visually, but unless this is substantially different from the rest of human visual perception it is unlikely to be by analytical methods. If, as is likely, overall pattern recognition plays even a small role, the biasing effect of prior examples needs to be controlled for when assessing the analytical ABCD criteria. In the few studies where intensive ABCD education has been shown to benefit lay individuals, overall pattern recognition was not controlled for (19, 20). It is therefore unclear how much of the positive effect can be attributed to the novices’ ability to discriminate the true analytical ABCD criteria rather than to their ability to use overall pattern recognition “learnt” from the example lesions used to demonstrate the analytical criteria during their education. Thus far, the only randomized controlled trial testing the ABCD in lay hands showed that it decreased diagnostic accuracy and was less effective than a pattern recognition education strategy (12).

In this context, any screening or diagnostic tool would ideally meet three criteria: inter-observer variability should be minimal; variation within a diagnostic class should be small; and differences between diagnostic classes should be large. In the present study we set out to examine these three criteria experimentally by assessing the three subjective analytical properties (the ABCs) of the ABCD rule.
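The three criteria can be made operational in more than one way. As a minimal sketch, assuming a hypothetical layout in which `scores[s][l]` is subject s's 0–10 VAS score for lesion l and `lesion_class[l]` is that lesion's diagnosis, one could summarize inter-observer variability as the median per-lesion interquartile range (IQR) across subjects, intra-diagnosis variation as the IQR of the lesion medians within each class, and the inter-diagnosis difference as the range of the class medians. These particular summaries are illustrative choices, not the analysis reported in this study.

```python
from statistics import median, quantiles


def iqr(values):
    """Interquartile range with linear interpolation (equivalent to R's default quantile type 7)."""
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    return q3 - q1


def screening_properties(scores, lesion_class):
    """Return (inter-observer, intra-diagnosis per class, inter-diagnosis) summaries.

    scores[s][l]: hypothetical VAS score (0-10) given by subject s to lesion l.
    lesion_class[l]: diagnostic class of lesion l.
    """
    n_lesions = len(lesion_class)
    # One "column" per lesion: the scores every subject gave that lesion.
    per_lesion = [[row[l] for row in scores] for l in range(n_lesions)]
    classes = sorted(set(lesion_class))

    # 1. Inter-observer variability: typical spread of scores for a single lesion.
    inter_observer = median([iqr(col) for col in per_lesion])

    # 2. Intra-diagnosis variation: spread of lesion medians within each class.
    lesion_medians = [median(col) for col in per_lesion]
    intra_diagnosis = {
        c: iqr([m for m, k in zip(lesion_medians, lesion_class) if k == c])
        for c in classes
    }

    # 3. Inter-diagnosis difference: gap between the highest and lowest class median.
    class_medians = [
        median([x for col, k in zip(per_lesion, lesion_class) if k == c for x in col])
        for c in classes
    ]
    inter_diagnosis = max(class_medians) - min(class_medians)
    return inter_observer, intra_diagnosis, inter_diagnosis
```

A tool suited to lay screening would give small values for the first two summaries and a large value for the third. Note that `quantiles` needs at least two values per group.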

METHODS

Study image selection

Forty digital images of pigmented skin lesions were selected randomly from 878 relevant images in the University of Edinburgh Department of Dermatology’s image database. The Department’s image library (comprising over 3,500 images) has been prospectively collected for ongoing dermato-informatics research investigating semi-automated diagnostic systems and non-expert education. The 40 images selected for this experiment were stratified so that there were 10 images from each of the following four diagnostic classes: benign naevi, dysplastic naevi (defined as lesions with histological atypia), melanomas and seborrhoeic keratoses. All the images had been collected using the same controlled, fixed-distance photographic set-up: Canon (Canon UK Ltd, Reigate, Surrey, UK) EOS 350D 8.1MP cameras, a Sigma (Sigma Imaging UK Ltd, Welwyn Garden City, Hertfordshire, UK) 70 mm f2.8 macro lens and a Sigma EM-140 DG Ring Flash at a distance of 50 cm. Each lesion was cropped from the original digital photograph to the same resolution (600 × 450 pixels) and displayed at a 1:1 ratio (approximately equivalent to seven times magnification from the original 50 cm capturing distance) in the custom-built experimental software.

Software design and implementation

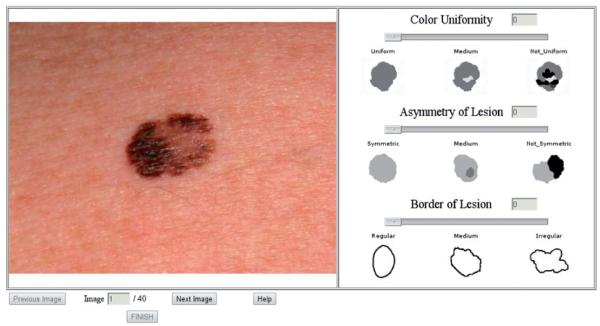

A purpose-built programme was created to allow the three subjective criteria of the ABCD rule (Asymmetry, Border Irregularity and Colour Uniformity) to be tested remotely over the internet, to limit any investigator-related interaction bias. Diameter was felt not to be suitable for assessment: because the images were magnified, testing it would have required placing a scale marker representing 6 mm next to each lesion and asking each participant which was longer, the lesion or the marker, which would not have been instructive. The programme was written in JavaScript and PHP. After entering their demographic details, the participants were given a set of instructions explaining how to use the ABC criteria and the software. The instructions were based on the verbal descriptors of the ABC criteria taken from the website of the AAD (3). After confirming that they had read and understood the instructions, the subjects assessed each of the 40 images in turn for asymmetry, border irregularity and colour uniformity on a 10-point visual analogue scale (VAS). A screen shot of the software can be seen in Fig. 1. At any stage the subjects could click on a “Help” button to redisplay the instructions and verbal descriptors of the ABC.

Fig. 1.

A screen “snapshot” of the purpose-built software used to record the 33 subjects’ assessments of the three ABC properties. The subjects scored each of the three properties on the 10-point visual analogue scales that were displayed to the right of the image, by moving the slider to the desired level.

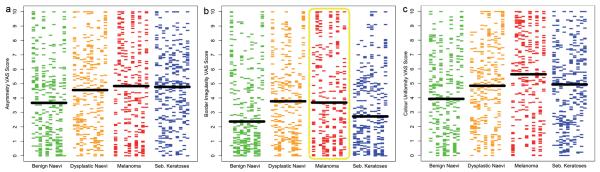

To minimize any order (lead) bias or fatigue effect, the software randomized the order in which each subject assessed the 40 lesions, so that the order differed between participants. In addition to the verbal descriptions of the three properties, visual anchor points were integrated into the software at the mid-point and at both ends of each rating scale to further reduce inter-rater differences. These anchor points were taken from the ABCDE patient guidelines on the SkinCancerNet “Melanoma: What it Looks Like” webpage produced in conjunction with the AAD (35). As we were interested in the lay public’s ability to discriminate the three properties analytically, and not in their ability to use innate non-analytical reasoning to match or distinguish lesions from real-life examples, only the caricatured diagrams from the SkinCancerNet website were used as the high-end anchor points, rather than example pictures of melanomas (Fig. 2). The mid- and low-end anchor points were computer-generated to complete the VAS.

Fig. 2.

A screen “snapshot” taken from the SkinCancerNet website (35), demonstrating the caricatured images that we used as the anchor points for the visual analogue scales in our software. The pictures on the right were used as they demonstrate the analytical criteria of the ABC, but without facilitating any non-analytical pattern recognition that could have developed if the “real-life” images had been used.
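The per-participant randomization of lesion order described above could be sketched as follows. The study software was written in JavaScript and PHP; this Python version, and the choice to seed each shuffle with a participant ID so that every order is reproducible, are assumptions made for illustration only.

```python
import random

N_LESIONS = 40
N_SUBJECTS = 33


def presentation_order(participant_id, n_lesions=N_LESIONS):
    """Return a random permutation of lesion indices 0..n_lesions-1 for one participant.

    Seeding the generator with the (hypothetical) participant ID makes each
    order reproducible while still differing between participants.
    """
    rng = random.Random(participant_id)
    order = list(range(n_lesions))
    rng.shuffle(order)  # in-place Fisher-Yates shuffle
    return order


# One independent order per subject (IDs 1..33 are hypothetical labels).
orders = {pid: presentation_order(pid) for pid in range(1, N_SUBJECTS + 1)}
```

Because each lesion appears early for some participants and late for others, any position or fatigue effect is spread across lesions rather than concentrated on particular ones.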

Subjects

An open e-mail request containing the URL link to the study was distributed to MSc students of the University of Edinburgh’s School of Informatics, inviting them to undertake the study themselves and to forward the invitation to non-medical acquaintances who might also be willing to participate. A total of 33 lay subjects agreed to participate without remuneration: 21 males and 12 females (64% male), with a mean age of 34 years (range 17–62 years). None of the subjects had a personal history of skin cancer.

Statistics

The subjects’ responses were recorded automatically by the programme and then exported into “R for Mac OS” for graphing and statistical analysis (36).

Ethics

NHS Lothian research ethics committee granted permission for the collection and use of the images, and additional permission for the use of students in this research was granted through the University of Edinburgh’s “Committee for the use of student volunteers”.

RESULTS

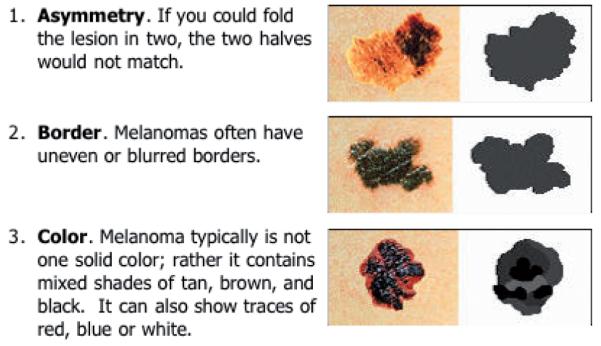

The full results of all 3,960 analytical VAS scores attributed in the study (33 subjects × 40 lesions × 3 “ABC” properties) are graphically displayed in Fig. 3a-c, with each property presented in a separate plot. At first glance these plots may seem complicated, but they have the virtue of presenting every data point from the experiment, so that the variability in scoring can be assessed intuitively. In explanation: each horizontal coloured bar represents an individual subject’s score for a specific lesion, with each of the 40 lesions displayed in its own column across the x-axis. These 40 lesion columns are coloured according to their diagnostic classes (green = the 10 benign naevi, orange = the 10 dysplastic naevi, red = the 10 melanomas, blue = the 10 seborrhoeic keratoses). The overall median score for each diagnostic class is shown by the large horizontal black bar straddling each of the four coloured series of 10 columns.

Fig. 3.

The full results of all 3,960 comparisons undertaken are split according to the ABC properties assessed into three plots (a: asymmetry; b: border irregularity; c: colour uniformity). Each horizontal bar represents an individual’s score. The 40 lesions assessed are displayed in columns across the x-axis, grouped by their diagnostic classes (green = benign naevi, orange = dysplastic naevi, red = melanomas, blue = seborrhoeic keratoses). The median score for each diagnostic class is shown by the large horizontal black bar.

The inter-person variability in assessing each of the three ABC properties for any single lesion is represented by the vertical spread of a single column along the y-axis. Within a specific diagnostic class, the variability in scores is shown by the differences in vertical spread between the 10 columns of the same colour. The variability between the four diagnostic classes can be appreciated from the differences in the overall distributions of the four coloured groups, and is further reinforced by the differences in their median scores, indicated by the horizontal black bars. The results are further summarized in Table I.
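The structure of Fig. 3 follows directly from the design: 33 subjects × 40 lesions × 3 properties gives 3,960 scores. A minimal sketch of one possible long-format layout is shown below; the field names and class labels are hypothetical, and scores are left empty rather than invented. The records are grouped into one panel per property and one 33-score column per lesion, keyed by class so that columns can be coloured by diagnosis.

```python
from itertools import product

SUBJECTS = range(1, 34)  # 33 lay subjects (hypothetical IDs)
CLASSES = ["benign naevus", "dysplastic naevus", "melanoma", "seborrhoeic keratosis"]
LESIONS = [(cls, k) for cls in CLASSES for k in range(1, 11)]  # 10 lesions per class
PROPERTIES = ["asymmetry", "border irregularity", "colour uniformity"]

# One record per (subject, lesion, property); "score" would hold the 0-10 VAS value.
records = [
    {"subject": s, "class": cls, "lesion": k, "property": p, "score": None}
    for s, (cls, k), p in product(SUBJECTS, LESIONS, PROPERTIES)
]

# Fig. 3 layout: one panel per property, one column of subject scores per lesion,
# keyed by (class, lesion) so columns can be grouped and coloured by class.
panels = {p: {} for p in PROPERTIES}
for r in records:
    panels[r["property"]].setdefault((r["class"], r["lesion"]), []).append(r["score"])

print(len(records))  # prints 3960
```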

Table I. Medians, interquartile ranges and 90th percentiles of the ‘ABC’ visual analogue scale (VAS) scores for each diagnostic class.

| Lesion class | Benign naevi | Dysplastic naevi | Melanomas | Seborrhoeic keratoses |
|---|---|---|---|---|
| Asymmetry VAS scores (0 = symmetrical, 10 = asymmetrical) | | | | |
| Median | 3.66 | 4.55 | 4.83 | 4.77 |
| IQR | 4.96 | 4.88 | 5.94 | 4.07 |
| 90th percentile | 8.93 | 8.97 | 9.52 | 8.09 |
| Border irregularity VAS scores (0 = regular, 10 = irregular) | | | | |
| Median | 2.37 | 3.77 | 3.68 | 2.72 |
| IQR | 4.61 | 5.05 | 6.46 | 4.08 |
| 90th percentile | 8.27 | 8.93 | 9.78 | 7.52 |
| Colour uniformity VAS scores (0 = single uniform colour, 10 = multiple or non-uniform colour distribution) | | | | |
| Median | 3.92 | 4.83 | 5.63 | 4.92 |
| IQR | 5.00 | 4.88 | 5.59 | 4.23 |
| 90th percentile | 8.26 | 9.00 | 9.59 | 8.34 |

IQR: interquartile range.
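Assuming each cell of Table I pools the scores for one property and one class (33 subjects × 10 lesions = 330 scores), the three reported statistics could be computed as in the sketch below. It assumes linear-interpolation quantiles (`method="inclusive"` in Python's `statistics.quantiles`, which corresponds to R's default `quantile()` type 7); the exact quantile definition used for the table is not stated, so other definitions may give slightly different values.

```python
from statistics import median, quantiles


def class_summary(scores):
    """Median, IQR and 90th percentile of a pool of VAS scores (one class, one property)."""
    q1, _, q3 = quantiles(scores, n=4, method="inclusive")  # quartile cut points
    p90 = quantiles(scores, n=10, method="inclusive")[8]    # 9th decile cut point
    return {"median": median(scores), "IQR": q3 - q1, "p90": p90}
```

For example, `class_summary(list(range(11)))` returns `{'median': 5, 'IQR': 5.0, 'p90': 9.0}`.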

Whilst it is possible to appreciate the small, albeit statistically significant (Kruskal–Wallis test, p < 0.0001), differences between the four diagnostic groups’ scores, what is far more striking is the substantial spread in the scores attributed to the same lesion by the 33 subjects, and the further variation in scoring between the 10 lesions within the same diagnostic class, for all three of the subjective ABC properties. Additional analysis shows that a similarly substantial variation exists within the 10 scores that each individual attributed to the lesions within the same diagnostic class (data not shown).
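For readers who want to reproduce this kind of between-group comparison, the following is a minimal, dependency-free sketch of the Kruskal–Wallis H test with the usual tie correction and its chi-square approximation. The closed-form p-value is valid only for exactly four groups (3 degrees of freedom), and, like any pooled test, the sketch treats every score as an independent observation. If SciPy is available, `scipy.stats.kruskal` computes the same tie-corrected statistic.

```python
import math


def average_ranks(values):
    """1-based ranks, with tied values sharing the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # sorted positions i..j are tied
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def kruskal_wallis_4(groups):
    """Tie-corrected Kruskal-Wallis H and its chi-square p-value for exactly 4 groups."""
    if len(groups) != 4 or any(len(g) == 0 for g in groups):
        raise ValueError("expects exactly 4 non-empty groups (df = 3)")
    pooled = [x for g in groups for x in g]
    n = len(pooled)
    ranks = average_ranks(pooled)

    # H = 12 / (N(N+1)) * sum(R_i^2 / n_i) - 3(N+1), with R_i the rank sum of group i.
    total, start = 0.0, 0
    for g in groups:
        r_sum = sum(ranks[start:start + len(g)])
        total += r_sum ** 2 / len(g)
        start += len(g)
    h = 12.0 / (n * (n + 1)) * total - 3 * (n + 1)

    # Tie correction: divide by 1 - sum(t^3 - t) / (N^3 - N) over tied groups of size t.
    counts = {}
    for x in pooled:
        counts[x] = counts.get(x, 0) + 1
    correction = 1 - sum(t ** 3 - t for t in counts.values()) / (n ** 3 - n)
    if correction == 0:
        raise ValueError("all values are identical")
    h = max(h / correction, 0.0)  # guard against tiny negative rounding error

    # Chi-square survival function with 3 degrees of freedom (closed form).
    p = math.erfc(math.sqrt(h / 2)) + math.sqrt(2 * h / math.pi) * math.exp(-h / 2)
    return h, p
```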

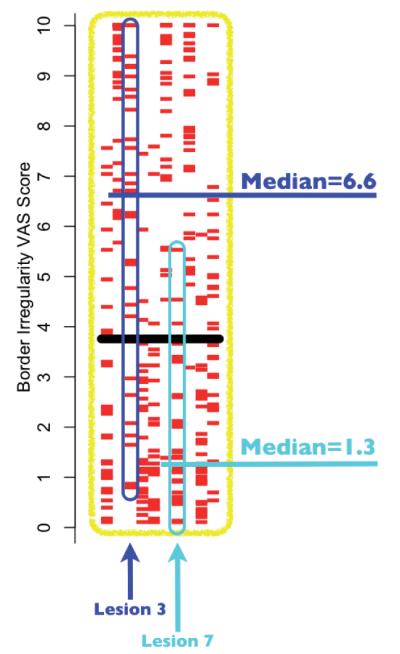

The inter-person and intra-class variations are better appreciated through specific examples (see Fig. 4, an enlarged view of the highlighted section of Fig. 3b). In Fig. 4, lesion 3 (blue arrow/highlights), which had the largest inter-person variation (interquartile range (IQR) = 4.86) of the 10 lesions in the melanoma class, received “border irregularity” scores from the 33 subjects ranging from 0.7 to 10, with a median of 6.6; lesion 7 (cyan arrow/highlights), which had the least inter-person variation (IQR = 1.93), received scores ranging from 0 to 5.5, with a median of 1.3.

Fig. 4.

An enlarged display of the highlighted section of Fig. 3b, showing all the border irregularity visual analogue scale (VAS) scores for the 10 melanoma lesions. The lesions with the highest variation (lesion 3, interquartile range (IQR)=4.86) and lowest variation (lesion 7, IQR=1.93) are further highlighted, in blue and cyan, respectively, to demonstrate the large spread of scores attributed to lesions within the same diagnostic class. For lesion 3 it can be seen that the range of scores attributed by the 33 subjects was 0.7–10, with a median of 6.6, and for lesion 7 the range was 0–5.5, with a median of 1.3.
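The per-lesion figures quoted for lesions 3 and 7 (range, median and IQR of the 33 subjects' scores), and the selection of the most and least variable lesions within a class, can be sketched as follows. The input mapping from a lesion label to its list of scores is a hypothetical structure, and the same linear-interpolation quartiles as R's default are assumed.

```python
from statistics import median, quantiles


def lesion_spread(scores_by_lesion):
    """Summarise each lesion's scores across subjects and order lesions by IQR.

    scores_by_lesion maps a (hypothetical) lesion label to the list of scores
    all subjects gave it for one property.
    """
    summary = {}
    for lesion, scores in scores_by_lesion.items():
        q1, _, q3 = quantiles(scores, n=4, method="inclusive")
        summary[lesion] = {
            "min": min(scores),
            "max": max(scores),
            "median": median(scores),
            "IQR": q3 - q1,
        }
    # ranked[0] has the least inter-person variation, ranked[-1] the most.
    ranked = sorted(summary, key=lambda lesion: summary[lesion]["IQR"])
    return summary, ranked
```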

DISCUSSION

Our principal motivation for the current work was the observation that the original rationale and justification for the ABCD approach had slipped from the primary target group of physicians to the lay public, with little supporting evidence to justify this transfer. In the absence of empirical evidence of effectiveness, there are at least two theoretical reasons to be suspicious of this approach. First, there is an increasing body of evidence that experts are not necessarily able to state the basis of their own expertise explicitly in a way that is simply transferable (31, 32). Second, previous testing of the ABCD had not controlled for prior exposure (14-20), so earlier accounts of its utility may have reflected prior experience and knowledge rather than the use of the criteria themselves (26-30).

Other relevant considerations are that, whereas experts may be able to apply the criteria to appropriate subclasses of lesions (i.e. melanocytic naevi and melanomas), available evidence suggests that distinguishing primary melanocytic lesions from mimics (such as seborrhoeic keratoses) requires considerable expertise (37-39). Finally, the only large-scale randomized controlled trial (RCT) in this area comparing the ABCD approach with approaches based on pattern recognition provided little support for the use of the ABCD criteria (12).

The approach we took was an experimental one attempting to delineate the characteristics of the ABCD rules on a test series of lesions. Our rationale was that for the ABCD system to be capable of guiding diagnosis, it would have to have certain statistical properties: different diagnostic groups needed to score differently, and variance between persons for the same lesion and within diagnostic groups needed to be small. Within the limits of our test situation and the images randomly chosen, it is self-evident that these criteria were not met. Different people judged the same lesion very differently, and although the medians of different diagnostic groups differed, the overlap was considerable. Looking at Fig. 3, it is difficult to imagine being able to choose any criteria based on ABCs that would usefully discriminate suspicious from banal lesions.

There are limitations to our approach. First, our subjects were not chosen at random, and were highly educated, computer literate, and probably above average at abstract and analytical reasoning. We do not consider this a reason to doubt the generalizability of our conclusions. Second, we included not just primary melanocytic lesions but also mimics, such as seborrhoeic keratoses. The justification for this is simply that non-experts and many physicians are not able to distinguish reliably between these classes of lesions. In practice the ABCD criteria are applied (incorrectly) to various diagnostic classes, and we needed to account for this. Third, as the subjects undertook the experimental task remotely over the internet, we were unable to assess the “effort” they applied to their scoring. However, because we randomized the order in which each subject assessed the 40 lesions, any fatigue effect would have been minimized. Indeed, close inspection of the data shows that, whilst there is substantial overlap in scoring between (and within) the diagnostic groups, the subjects’ scoring was not random; individual lesions each had (to varying degrees) distinct scoring patterns.

We cannot say whether the results might have been different if subjects had undergone intense education in the use of the ABCD approach. In practice, however, the promulgation of the ABCD criteria via websites and patient leaflets provides little opportunity for such intense education. We also suggest that previous studies of the ABCD approach have been methodologically compromised by a failure to control for prior exposure during the teaching phase. Training persons in the use of the ABCD inevitably means exposure to test images: during this exposure, albeit minimal, pattern recognition skills will develop, and it is a mistake to believe that any change in performance between pre- and post-test is due to the ABCD system rather than to other learning. Drawing any other conclusion requires a degree of experimental control that has been lacking in prior work.

We also acknowledge the multiple modifications to the basic ABCD mnemonic that have been suggested over the years to “improve” its functionality (40-47), and accept that it is now commonplace to use the ABCDE criteria (although we note that a wide variety of “E”s have been suggested: evolving, enlarging, elevated, erythema, expert). However, in light of further evidence that untrained novices cannot use the analytical ABC criteria effectively, should the public education message not be simplified to include only “evolving” (i.e. change)? Such a simplification has previously been suggested by Weinstock (47, 48), and change has independently been found to be the most important predictor of melanoma among patient-observed features (13).

Finally, given the changing epidemiology of malignant melanoma and the importance of early presentation by patients and early diagnosis by physicians, we stress that our criticisms of the ABCD approach are not meant to disparage attempts to develop alternative strategies. In this respect we note the work of Grob and co-workers (12), who have used approaches based on fostering pattern recognition skills in laypersons. Our own work has also suggested that the use of images and a structured database may enhance the diagnostic skills of laypersons, although in the context of malignant melanoma such systems need far more testing before being promoted as clinically useful (49, 50).

ACKNOWLEDGEMENTS

The work was supported by The Wellcome Trust (reference 083928/Z/07/Z) and the Foundation for Skin Research (Edinburgh). We are also grateful for the advice and assistance given by Karen Robertson and Yvonne Bisset (Department of Dermatology, University of Edinburgh) regarding the photographic capture and preparation of the digital images. We also recognize the contribution of Nikolaos Laskaris (School of Informatics, University of Edinburgh), who undertook some of the preliminary programming as part of his MSc thesis (51).

Footnotes

The authors declare no conflicts of interest.

REFERENCES

- 1. Rigel DS, Russak J, Friedman R. The evolution of melanoma diagnosis: 25 years beyond the ABCDs. CA Cancer J Clin. 2010;60:301–316. doi: 10.3322/caac.20074.

- 2. Friedman RJ, Rigel DS, Kopf AW. Early detection of malignant melanoma: the role of physician examination and self-examination of the skin. CA Cancer J Clin. 1985;35:130–151. doi: 10.3322/canjclin.35.3.130.

- 3. American Academy of Dermatology. ABCDs of melanoma detection. 2010 [cited 2010 Nov 15]. Available from: http://www.aad.org/public/exams/abcde.html.

- 4. British Association of Dermatologists. Sun awareness - mole checking. 2010 [cited 2010 Nov 15]. Available from: http://www.bad.org.uk/site/719/default.aspx.

- 5. Australasian College of Dermatologists. A-Z of skin-moles & melanoma. 2010 [cited 2010 Nov 15]. Available from: http://www.dermcoll.asn.au/public/a-z_of_skin-moles_melanoma.asp.

- 6. European Academy of Dermatology and Venereology. Moles and malignant melanoma patient information leaflet. 2010 [cited 2010 Nov 15]. Available from: http://www.eadv.org/patient-corner/leaflets/eadv-leaflets/moles-and-malignant-melanoma-patient-information-leaflet.

- 7. Koh HK, Miller DR, Geller AC, Clapp RW, Mercer MB, Lew RA. Who discovers melanoma? Patterns from a population-based survey. J Am Acad Dermatol. 1992;26:914–919. doi: 10.1016/0190-9622(92)70132-y.

- 8. Temoshok L, DiClemente RJ, Sweet DM, Blois MS, Sagebiel RW. Factors related to patient delay in seeking medical attention for cutaneous malignant melanoma. Cancer. 1984;54:3048–3053. doi: 10.1002/1097-0142(19841215)54:12<3048::aid-cncr2820541239>3.0.co;2-m.

- 9. Richard MA, Grob JJ, Avril MF, Delaunay M, Gouvernet J, Wolkenstein P, et al. Delays in diagnosis and melanoma prognosis (I): the role of patients. Int J Cancer. 2000;89:271–279.

- 10. Richard MA, Grob JJ, Avril MF, Delaunay M, Gouvernet J, Wolkenstein P, et al. Delays in diagnosis and melanoma prognosis (II): the role of doctors. Int J Cancer. 2000;89:280–285. doi: 10.1002/1097-0215(20000520)89:3<280::aid-ijc11>3.0.co;2-2.

- 11. Criscione VD, Weinstock MA. Melanoma thickness trends in the United States, 1988-2006. J Invest Dermatol. 2010;130:793–797. doi: 10.1038/jid.2009.328.

- 12. Girardi S, Gaudy C, Gouvernet J, Teston J, Richard M, Grob J. Superiority of a cognitive education with photographs over ABCD criteria in the education of the general population to the early detection of melanoma: a randomized study. Int J Cancer. 2006;118:2276–2280. doi: 10.1002/ijc.21351.

- 13. Liu W, Hill D, Gibbs AF, Tempany M, Howe C, Borland R, et al. What features do patients notice that help to distinguish between benign pigmented lesions and melanomas?: the ABCD(E) rule versus the seven-point checklist. Melanoma Res. 2005;15:549–554. doi: 10.1097/00008390-200512000-00011.

- 14. McGovern TW, Litaker MS. Clinical predictors of malignant pigmented lesions. A comparison of the Glasgow seven-point checklist and the American Cancer Society’s ABCDs of pigmented lesions. J Dermatol Surg Oncol. 1992;18:22–26. doi: 10.1111/j.1524-4725.1992.tb03296.x.

- 15. Healsmith MF, Bourke JF, Osborne JE, Graham-Brown RA. An evaluation of the revised seven-point checklist for the early diagnosis of cutaneous malignant melanoma. Br J Dermatol. 1994;130:48–50. doi: 10.1111/j.1365-2133.1994.tb06881.x.

- 16. Thomas L, Tranchand P, Berard F, Secchi T, Colin C, Moulin G. Semiological value of ABCDE criteria in the diagnosis of cutaneous pigmented tumors. Dermatology. 1998;197:11–17. doi: 10.1159/000017969.

- 17. Barnhill RL, Roush GC, Ernstoff MS, Kirkwood JM. Interclinician agreement on the recognition of selected gross morphologic features of pigmented lesions. Studies of melanocytic nevi V. J Am Acad Dermatol. 1992;26:185–190. doi: 10.1016/0190-9622(92)70023-9.

- 18. Gunasti S, Mulayim MK, Fettahloglu B, Yucel A, Burgut R, Sertdemir Y, et al. Interrater agreement in rating of pigmented skin lesions for border irregularity. Melanoma Res. 2008;18:284–288. doi: 10.1097/CMR.0b013e328307c25a.

- 19. Bränström R, Hedblad MA, Krakau I, Ullén H. Laypersons’ perceptual discrimination of pigmented skin lesions. J Am Acad Dermatol. 2002;46:667–673. doi: 10.1067/mjd.2002.120463.

- 20. Robinson JK, Turrisi R. Skills training to learn discrimination of ABCDE criteria by those at risk of developing melanoma. Arch Dermatol. 2006;142:447–452. doi: 10.1001/archderm.142.4.447.

- 21. Norman GR, Rosenthal D, Brooks LR, Allen SW, Muzzin LJ. The development of expertise in dermatology. Arch Dermatol. 1989;125:1063–1068.

- 22. Norman G, Brooks LR. The non-analytical basis of clinical reasoning. Adv Health Sci Educ Theory Pract. 1997;2:173–184. doi: 10.1023/A:1009784330364.

- 23. Gachon J, Beaulieu P, Sei JF, Gouvernet J, Claudel JP, Lemaitre M, et al. First prospective study of the recognition process of melanoma in dermatological practice. Arch Dermatol. 2005;141:434–438. doi: 10.1001/archderm.141.4.434.

- 24. Norman G, Young M, Brooks L. Non-analytical models of clinical reasoning: the role of experience. Med Educ. 2007;41:1140–1145. doi: 10.1111/j.1365-2923.2007.02914.x.

- 25. Norman G. Dual processing and diagnostic errors. Adv Health Sci Educ. 2009;14(Suppl 1):37–49. doi: 10.1007/s10459-009-9179-x.

- 26. Ross BH. Remindings and their effects in learning a cognitive skill. Cogn Psychol. 1984;16:371–416. doi: 10.1016/0010-0285(84)90014-8.

- 27. Allen SW, Brooks LR, Norman GR, Rosenthal D. Effect of prior examples on rule-based diagnostic performance. Res Med Educ. 1988;27:9–14.

- 28. Allen SW, Norman GR, Brooks LR. Experimental studies of learning dermatologic diagnosis: the impact of examples. Teach Learn Med. 1992;4:35–44.

- 29. Regehr G, Cline J, Norman GR, Brooks L. Effect of processing strategy on diagnostic skill in dermatology. Acad Med. 1994;69:S34–36. doi: 10.1097/00001888-199410000-00034.

- 30. Kulatunga-Moruzi C, Brooks LR, Norman G. Using comprehensive feature lists to bias medical diagnosis. J Exp Psychol Learn Mem Cogn. 2004;30:563–572. doi: 10.1037/0278-7393.30.3.563.

- 31. McLaughlin K, Rikers RM, Schmidt HG. Is analytic information processing a feature of expertise in medicine? Adv Health Sci Educ Theory Pract. 2008;13:123–128. doi: 10.1007/s10459-007-9080-4.

- 32. Norman G. Building on experience - the development of clinical reasoning. N Engl J Med. 2006;355:2251–2252. doi: 10.1056/NEJMe068134.

- 33. Grob JJ, Bonerandi JJ. The ‘ugly duckling’ sign: identification of the common characteristics of nevi in an individual as a basis for melanoma screening. Arch Dermatol. 1998;134:103–104. doi: 10.1001/archderm.134.1.103-a.

- 34. Norman G, Brooks L, Colle C, Hatala R. The benefit of diagnostic hypotheses in clinical reasoning: experimental study of an instructional intervention for forward and backward reasoning. Cogn Instr. 1999;17:433–448.

- 35. SkinCancerNet (“A comprehensive online skin cancer information resource developed by the AAD”). Melanoma - what it looks like. 2010 [cited 2010 Nov 15]. Available from: http://www.skincarephysicians.com/skincancernet/melanoma.html.

- 36. R Development Core Team. R: a language and environment for statistical computing. R Foundation for Statistical Computing; Vienna, Austria: 2009. Available from: http://www.R-project.org.

- 37. MacKenzie-Wood AR, Milton GW, de Launey JW. Melanoma: accuracy of clinical diagnosis. Australas J Dermatol. 1998;39:31–33. doi: 10.1111/j.1440-0960.1998.tb01238.x.

- 38. Marks R, Jolley D, McCormack C, Dorevitch AP. Who removes pigmented skin lesions? J Am Acad Dermatol. 1997;36:721–726. doi: 10.1016/s0190-9622(97)80324-6.

- 39. Duque MI, Jordan JR, Fleischer AB Jr, Williford PM, Feldman SR, Teuschler H, et al. Frequency of seborrheic keratosis biopsies in the United States: a benchmark of skin lesion care quality and cost effectiveness. Dermatol Surg. 2003;29:796–801. doi: 10.1046/j.1524-4725.2003.29211.x.

- 40. Rigel DS, Friedman RJ. The rationale of the ABCDs of early melanoma. J Am Acad Dermatol. 1993;29:1060–1061. doi: 10.1016/s0190-9622(08)82059-2.

- 41. Abbasi NR, Shaw HM, Rigel DS, Friedman RJ, McCarthy WH, Osman I, et al. Early diagnosis of cutaneous melanoma: revisiting the ABCD criteria. JAMA. 2004;292:2771–2776. doi: 10.1001/jama.292.22.2771.

- 42. Rigel DS, Friedman RJ, Kopf AW, Polsky D. ABCDE - an evolving concept in the early detection of melanoma. Arch Dermatol. 2005;141:1032–1034. doi: 10.1001/archderm.141.8.1032.

- 43. Zaharna M, Brodell RT. It’s time for a “change” in our approach to early detection of malignant melanoma. Clin Dermatol. 2003;21:456–458. doi: 10.1016/s0738-081x(03)00058-0.

- 44. Moynihan GD. The 3 Cs of melanoma: time for a change? J Am Acad Dermatol. 1994;30:510–511. doi: 10.1016/s0190-9622(08)81963-9.

- 45. Marghoob AA, Slade J, Kopf AW, Rigel DS, Friedman RJ, Perelman RO. The ABCDs of melanoma: why change? J Am Acad Dermatol. 1995;32:682–684. doi: 10.1016/s0190-9622(05)80002-7.

- 46. Fox GN. ABCD-EFG for diagnosis of melanoma. Clin Exp Dermatol. 2005;30:707. doi: 10.1111/j.1365-2230.2005.01857.x.

- 47. Weinstock MA. ABCD, ABCDE, and ABCCCDEEEEFNU. Arch Dermatol. 2006;142:528. doi: 10.1001/archderm.142.4.528-a.

- 48. Weinstock MA. Cutaneous melanoma: public health approach to early detection. Dermatol Ther. 2006;19:26–31. doi: 10.1111/j.1529-8019.2005.00053.x.

- 49. Brown N, Robertson K, Bisset Y, Rees J. Using a structured image database, how well can novices assign skin lesion images to the correct diagnostic grouping? J Invest Dermatol. 2009;129:2509–2512. doi: 10.1038/jid.2009.75.

- 50. Aldridge RB, Glodzik D, Ballerini L, Fisher RB, Rees JL. The utility of non-rule based visual matching as a strategy to allow novices to achieve skin lesion diagnosis. Acta Derm Venereol. In press. doi: 10.2340/00015555-1049.

- 51. Laskaris N, Ballerini L, Fisher RB, Aldridge B, Rees J. Fuzzy description of skin lesions. In: Manning DJ, Abbey CK, editors. Medical imaging 2010: image perception, observer performance, and technology assessment. Proceedings of SPIE Vol 7627; San Diego, CA. Bellingham, WA: SPIE; 2010. pp. 762717-1–762717-10.