Abstract

Behavior may be generated on the basis of many different kinds of learned contingencies. For instance, responses could be guided by the direct association between a stimulus and response, or by sequential stimulus-stimulus relationships (as in model-based reinforcement learning or goal-directed actions). However, the neural architecture underlying sequential predictive learning is not well-understood, in part because it is difficult to isolate its effect on choice behavior. To track such learning more directly, we examined reaction times (RTs) in a probabilistic sequential picture identification task. We used computational learning models to isolate trial-by-trial effects of two distinct learning processes in behavior, and used these as signatures to analyze the separate neural substrates of each process. RTs were best explained via the combination of two delta rule learning processes with different learning rates. To examine neural manifestations of these learning processes, we used functional magnetic resonance imaging to seek correlates of timeseries related to expectancy or surprise. We observed such correlates in two regions, hippocampus and striatum. By estimating the learning rates best explaining each signal, we verified that they were uniquely associated with one of the two distinct processes identified behaviorally. These differential correlates suggest that complementary anticipatory functions drive each region's effect on behavior. Our results provide novel insights as to the quantitative computational distinctions between medial temporal and basal ganglia learning networks and enable experiments that exploit trial-by-trial measurement of the unique contributions of both hippocampus and striatum to response behavior.

Keywords: hippocampus, striatum, associative learning, model-based fMRI

Introduction

Although a behavior may appear to stem from unitary processes, a primary thrust of cognitive neuroscience has been to fractionate its neural causes. In the case of memory, it is established that different forms are subserved by separate systems (Knowlton et al. 1994, 1996a,b; Packard et al. 1989); similarly, different networks relying on distinct representations appear to support distinct strategies for learned decision making (Dickinson and Balleine 2002; Niv et al. 2006; Bornstein and Daw 2011).

In particular, whereas much work has examined the brain's mechanisms for “model-free” reinforcement learning (RL) of action policies (Houk et al. 1995; Montague et al. 1996), decisions may also be evaluated by anticipating their consequences using learned predictive associations among non-rewarding states or events, as for locations in a cognitive map (Doya 1999; Daw et al. 2005; Rangel et al. 2008; Redish et al. 2008; Fermin et al. 2010). The psychological, computational, and neural processes supporting such “model-based” RL are comparatively poorly understood and under intensive current study (Balleine et al. 2008; Daw et al. 2011; McDannald et al. 2011; Simon and Daw 2011). However, in RL tasks, it has been challenging to disentangle the contributions of either strategy to choices (both, after all, ultimately seek reward). Here, we extended methods previously used for the study of RL to examine more directly the learning of sequential, nonrewarded predictive representations, an important subcomponent of model-based RL.

We used reaction times (RTs) as a trial-by-trial index of predictive learning in a serial reaction time task requiring human subjects to identify images presented in a probabilistic sequence. Predictive learning was evidenced by facilitated reaction times for more probable images (Bahrick 1954; Harrison et al. 2006; Bestmann et al. 2008; den Ouden et al. 2010).

The trial-by-trial pattern by which RTs depended on experience was consistent with learning by a delta rule: a gradually decaying influence, on average, of images observed on previous trials (cf. Corrado et al. 2005; Lau & Glimcher 2005). A key feature of such a process is the learning rate: how much weight the system places on new information, relative to previous experience. Suggestively, RTs were best explained as resulting from a combination of two such processes, each with a different learning rate. Such multiplicity might reflect multiple predictive representations supporting the behavior, e.g. response-response sequencing (procedural learning) and stimulus-stimulus associations (relational learning). Of these two sorts of hypothetical representations, only the second might support model-based RL. While our experimental design cannot explicitly distinguish between the two, we can use our behavioral signatures to uniquely identify neural correlates in regions suggestive of one type of mapping or the other.

We reasoned that if two distinct neural systems underlay this apparently dual-process estimation, then a computational analysis of fMRI data could dissociate their activity via this parameter (Gläscher and Büchel 2005). We used a computational model of learning to analyze the fMRI data (O'Doherty et al. 2007), seeking correlates of predictive learning and estimating the implied learning rates. Studies using related tasks have identified a distributed network of regions, including hippocampus and striatum, involved in contingency estimation (Strange et al. 2005; Harrison et al. 2006). Here, we decompose this apparently unitary network into separable subnetworks, each displaying a learning rate that corresponds uniquely to one of those estimated behaviorally. This approach allows us to dissociate the individual contributions of hippocampus and striatum to trial-by-trial response behavior, and, consequently, measure neural activity reflecting sequential predictive learning specific to each of these structures.

Methods

Participants

Twenty right-handed individuals (nine female; ages 18 to 32, mean 25) participated in the study. All had normal or corrected-to-normal vision. Participants received a fixed fee, unrelated to performance, for their participation. Participants were recruited from the New York University community as well as the surrounding area and gave informed consent in accordance with procedures approved by the New York University Committee on Activities Involving Human Subjects.

Exclusion criteria

Data from two participants were excluded due to failure to demonstrate learning of the sequential contingencies embedded in the task. Failure to learn was identified when a regression model with only nuisance regressors (the “constant” model) proved a statistically better explanation of participant reaction times than any of the other models considered here, each of which includes regressors of interest specifying the estimated conditional probability of images (see Analysis, below). Statistical superiority was measured by the Bayesian Information Criterion (BIC; Schwarz 1978), used to correct likelihood scores when comparing models with different numbers of parameters.
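As a rough illustration of this exclusion rule, the sketch below computes the BIC from a maximized log likelihood and flags a participant when the nuisance-only model scores best. Only the BIC formula itself is standard; the function names, the tuple layout, and any numbers passed in are hypothetical and are not taken from the study.

```python
import math

def bic(log_likelihood, n_params, n_obs):
    """Bayesian Information Criterion; lower values indicate a better model."""
    return n_params * math.log(n_obs) - 2.0 * log_likelihood

def fails_to_learn(constant_model, learning_models, n_obs):
    """Return True if the nuisance-only model has the lowest BIC.

    Each model is a (log_likelihood, n_params) tuple (hypothetical layout).
    """
    constant_bic = bic(constant_model[0], constant_model[1], n_obs)
    return all(constant_bic < bic(ll, k, n_obs) for ll, k in learning_models)
```

Under this sketch, a participant would be excluded only when every learning model is penalized enough by its extra parameters to lose to the constant model.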

Task design

Participants performed a Serial Reaction Time (SRT) task in which they observed a sequence of image presentations and were instructed solely to respond to each image using a pre-trained keypress assigned to that image. The stimulus set consisted of four grayscale photographs of natural landscapes that were matched for size, contrast and luminance (Wittmann et al. 2008). Each participant viewed the same four images. During behavioral training, the keys corresponded to the innermost fingers on the home keys of a standard USA-layout keyboard (D, F, J, K). For the MRI sessions, the same finger keys were used on two MR-compatible button boxes (Figure 1a). Participants were instructed to learn the responses as linking a finger and an image, rather than a key and an image (e.g., left index finger, rather than 'F'). The mappings between the four images and four keys were one-to-one, pseudorandomly generated for each participant prior to their training session, trained to criterion prior to the fMRI session, and maintained constant during the experiment. The experiment was controlled by a script written in Matlab (Mathworks, Natick MA), using the Psychophysics Toolbox (Brainard 1997).

Figure 1.

Task design. (A) Training. Participants were first trained to deterministically associate each of four buttons with one of the stimulus images. Training proceeded until participants reached a fixed accuracy criterion. The associations between stimuli and responses were not varied during the course of the task. (B) Test. Images were presented one at a time for a fixed 2000ms, regardless of the keypress response. At the first correct keypress, a gray bounding box appeared around the image and remained displayed for 300ms, or until the end of the fixed trial time, whichever was shorter. Reaction time was recorded to the first keypress. (C) Transition structure. Successive images were chosen according to a first-order transition structure, the existence of which was not instructed to the participants. This structure changed abruptly at two points during the task, unaligned to rest periods and with no visual or other notification.

At each trial, one of the pictures was presented in the center of the screen, where it remained for 2 seconds, plus or minus a small, pseudorandom amount of jitter time, up to 220ms in increments of 55ms. Participants were instructed to continue pressing keys until they responded correctly or ran out of time. Correct responses triggered a gray bounding box which appeared around the image for the lesser of 300ms or the remaining trial time (Figure 1b). Thus, each image presentation occurred for the programmed amount of time, regardless of participant response. The inter-trial interval consisted of 220ms of blank screen.

The training phase of the experiment was conducted outside of the scanner, seated upright, with responses provided on a standard PC keyboard. During this phase, participants were trained to a criterion level of accuracy, defined as 75 correct first responses out of at most the previous 100 presentations.
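The training criterion can be read as a sliding-window check over first responses. The following is a minimal sketch of that reading, not the experiment's actual control script (which was written in Matlab); the function name and argument names are assumptions.

```python
from collections import deque

def trials_to_criterion(first_response_correct, needed=75, window=100):
    """Return the 1-based trial at which criterion is first met, or None.

    Criterion: at least `needed` correct first responses among the most
    recent `window` presentations (fewer presentations early in training).
    """
    recent = deque(maxlen=window)  # automatically drops the oldest trial
    for trial, correct in enumerate(first_response_correct, start=1):
        recent.append(bool(correct))
        if sum(recent) >= needed:
            return trial
    return None
```

Because the window holds at most 100 presentations, a participant who answers every trial correctly would reach criterion after 75 trials under this reading.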

A second practice session of 150 presentations was conducted inside the scanner, to ensure that participants attained reasonable comfort and proficiency with the magnet-safe button boxes used to collect responses. Neuroimaging data were not collected during this practice session. The finger-to-image response mappings generated for the training session were preserved for the scanner session.

The test phase of the scanning session proceeded with four blocks of 249 presentations. The first three blocks were followed by a rest period of participant-controlled length. During the rest period the participants were presented with a color image of a natural scene not among the study set, and a text reminder to pause and rest before beginning the next run. Scan blocks after the first were initiated manually by the operator only after the participant pressed any of the relevant keys twice to alert the operator that they were prepared to continue the task. Total experiment time -- inclusive of training, practice, and test -- was approximately 1.5 hours.

Stimulus sequence

For training, the sequence of images was selected pseudorandomly according to a uniform distribution. Participants were instructed to emphasize learning the mappings between image and finger, disregarding speed of response in favor of correctly identifying the image on the screen.

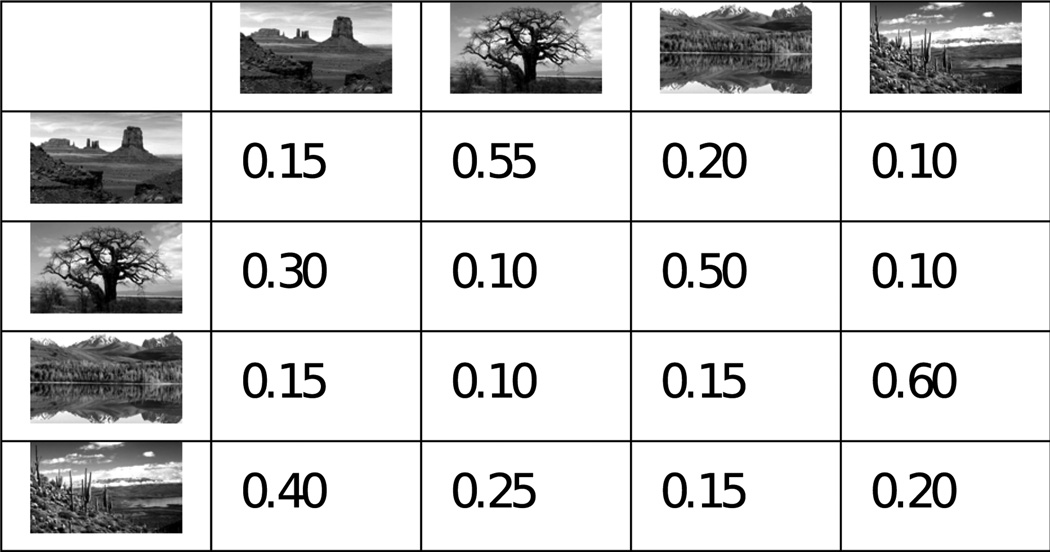

During the test phase of the experiment, the sequence of images was generated pseudorandomly according to a first-order Markov process, meaning that the probability of viewing a particular image was solely dependent on the identity of the previous image (Figure 1c). Thus, the statistical structure of the sequence is fully specified by a 4×4 array containing the conditional probabilities that each picture would be followed by the others. Self-transitions were allowed. Participants were not informed of the existence of sequential structure in the task design.
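To make the first-order Markov structure concrete, here is a minimal sketch of drawing such a sequence from a 4×4 conditional probability array. The matrix values, the seed, and the uniform choice of the first image are illustrative assumptions; the matrices actually used were generated by the procedure described in the supplemental material.

```python
import random

def sample_sequence(transition, n_trials, seed=0):
    """Draw image indices from a first-order Markov chain.

    transition[i][j] is P(next image = j | current image = i); rows sum to 1.
    """
    rng = random.Random(seed)
    n_images = len(transition)
    current = rng.randrange(n_images)  # assumed: first image chosen uniformly
    sequence = [current]
    for _ in range(n_trials - 1):
        # The next image depends only on the identity of the current one.
        current = rng.choices(range(n_images), weights=transition[current])[0]
        sequence.append(current)
    return sequence

# Hypothetical matrix; self-transitions (the diagonal) are allowed.
EXAMPLE_MATRIX = [
    [0.1, 0.6, 0.2, 0.1],
    [0.3, 0.1, 0.1, 0.5],
    [0.4, 0.2, 0.3, 0.1],
    [0.2, 0.1, 0.4, 0.3],
]
sequence = sample_sequence(EXAMPLE_MATRIX, n_trials=249)
```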

In order to encourage continual learning, and to sample responses across a wide range of conditional probabilities, the transition matrices were changed twice, at evenly-spaced intervals during the experimental session. The program offered no explicit indication of the shift to a different transition matrix, nor were changes of matrix aligned with the onset of rest periods. Time to first keypress was recorded and used as our dependent behavioral variable. Participants were not informed that their reaction times were being recorded, and no information was provided as to overall accuracy or speed either during or after the experiment. Trials on which the first keypress was incorrect were discarded from behavioral analysis.

Three transition matrices were generated pseudorandomly for each subject, in a manner designed to balance two priorities: a) to equalize the overall presentation frequencies for each image over the long- and medium-term, while b) examining response properties across a wide sample of conditional image transition probabilities. Detailed information on the procedure used to generate matrices satisfying these constraints is available in the supplemental material (Transition Matrix Generation).

Analysis

We employed a series of multiple linear regressions to investigate whether reaction times reflected learning of the stimulus-stimulus conditional probabilities, and, subsequently, to examine the form of this learning. In particular, each participant's trial-by-trial RTs for correct identifications were regressed on explanatory variables including the estimated conditional probability of the picture currently being viewed given its predecessor, and the entropy of the distribution of conditional probabilities leading to this picture – defined, in separate models (described below), in a number of different ways representing different accounts of learning – together with several effects of no interest. Trials on which the first keypress was not correct were excluded from behavioral analysis. Effects of no interest included stimulus-self transitions, image-identity effects, and a linear effect of trial number. These effects were identical across models – thus, each model-based analysis was uniquely identified by a proposed form for conditional probability estimates.

In our initial analysis, the conditional probabilities (and pursuant entropies) were specified as the ground-truth contingencies -- the contingencies actually encoded in the transition matrix. Having established that RT reflected such learning by demonstrating a significant correlation with these asymptotic values (Figure 2a) of probability (though not entropy, see Results), subsequent analyses used computational models to generate a timeseries of probability estimates such as would be produced by different learning rules with the same experience history as the participant (see Computational Models). These rules involved additional free parameters controlling the learning process (e.g., learning rates), which were jointly estimated together with the regression weights by maximum likelihood. For behavioral analysis, models were fit and parameters estimated separately for each participant. At the group level, regression weights were tested for significance using a t-test on the individual estimates across participants (Holmes and Friston 1998).

Figure 2.

Sequential learning. (A) Despite the fact that they were unaware of task structure, participant reaction times reflected the probabilities as designed: response time was commensurately lower as conditional likelihood of the image increased. (B) An analysis of the influence of prior responses on reaction time on the current trial shows a decaying effect of previous experience, with significant contributions from the seven most recent presentations of the current image. Reaction time for a given image-image transition was lowered by more recent experience with that transition; this effect showed an exponential relationship between recency of experience and reaction time. This pattern excludes models that do not incorporate forgetting of past experience. □ = p < 0.05. Error bars are SEM. (C) Model comparison. Individual log Bayes factors in favor of a model using two learning processes, versus a single process. The two-process model is decisively favored for 14 of 18 subjects, and was a significantly better fit across the population (summed log Bayes factor 145, p < 5e−5 by likelihood ratio test).

To generate regressors for fMRI analysis (below) we refit the behavioral model to estimate a single set of the parameters that optimized the reaction time likelihoods aggregated over all participants (i.e., treating the behavioral parameters as fixed effects). This approach allowed us separately to characterize baseline learning-related activity and individual variation in neurally implied learning rates relative to this common baseline. For the former, in our experience (Daw et al. 2006; Schoenberg et al. 2007, 2010; Gershman et al. 2009; Daw 2010; Daw et al. 2011), enforcing common model parameters provides a simple regularization that improves the reliability of population-level neural results. To capture individual between-subjects variation in the learning rate parameter, over this baseline, we add, as an additional random effect across participants, the partial derivatives of the regressors of interest with respect to the learning rate (see Learning rate analysis, below).

Computational models

First, to investigate the contribution of past experience to expectation about the current stimulus, while making relatively few assumptions about the form of this dependence, we entered the past events themselves as explanatory variables in the analysis (Lau and Glimcher 2005; Corrado et al. 2005). In particular, we used a similar multiple linear regression to the above, but replaced the conditional probability regressor with ten regressors, each a timeseries of binary indicator variables. If I(t) is the image displayed on trial t, then the indicator variables at trial t represented, for each of the ten most recent presentations of the preceding image I(t-1), whether on that presentation it had or had not been followed by image I(t). The logic for this assignment is that the expectation of image I(t), conditional on having viewed image I(t-1), should depend on previous experience with how often image I(t) has in the past followed the predecessor image I(t-1). Because error-driven learning algorithms such as the Rescorla-Wagner (1972) model predict that the coefficients for these indicators should decline exponentially with the number of image presentations into the past (Bayer and Glimcher 2005), we fit exponential functions to the regression weights.
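The construction of the lagged indicator regressors can be sketched as follows. This is one reading of the description above, with two assumptions flagged in the comments: lags for which no history exists yet are filled with 0, and the function and variable names are hypothetical.

```python
def lag_indicators(sequence, n_lags=10):
    """For each trial t >= 1, return n_lags binary indicators.

    Entry k-1 is 1 if the k-th most recent earlier presentation of image
    I(t-1) was followed by image I(t), else 0. Lags with no history yet are
    filled with 0 (an assumption; such trials could instead be dropped).
    """
    successors = {}  # image -> list of images that followed it, oldest first
    rows = []
    for t in range(1, len(sequence)):
        prev, cur = sequence[t - 1], sequence[t]
        history = successors.get(prev, [])
        recent = history[::-1][:n_lags]  # most recent presentation first
        row = [1 if nxt == cur else 0 for nxt in recent]
        row += [0] * (n_lags - len(row))
        rows.append(row)
        # Record the current transition only after building this trial's row.
        successors.setdefault(prev, []).append(cur)
    return rows
```

Each returned row would supply one trial's values for the ten indicator regressors; the regression weight at lag k then measures how much the k-th most recent experience with the predecessor image influences the current RT.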

Following up on the results of this analysis, we considered a more constrained learning rule – again, of the form proposed by Rescorla and Wagner (1972; see also Gläscher et al. 2010) -- which updates entries in a 4×4 stimulus-stimulus conditional probability matrix in light of each trial's experience. The appropriate estimate from this matrix at each step was then used as an explanatory variable for the reaction times in place of the ground-truth probabilities or binary indicator regressors. Formally, at each trial the transition matrix was updated according to the following rule, for each image i:

| [1] |

where I(t) is the identity of the image observed at trial t and α is a free learning-rate parameter. This rule preserves the normalization of the estimated conditional distribution.
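A delta rule of this kind is commonly written as P(i | I(t-1)) ← P(i | I(t-1)) + α[δ − P(i | I(t-1))], with δ equal to 1 when i = I(t) and 0 otherwise. The sketch below implements that common form, which may differ in notation from Equation 1; the uniform starting matrix and the function names are assumptions.

```python
def delta_rule_update(matrix, prev_image, cur_image, alpha):
    """Update row prev_image of a conditional probability matrix in place.

    Each entry moves a fraction alpha toward 1 (observed image) or 0 (others),
    so the row still sums to 1 after the update.
    """
    row = matrix[prev_image]
    for i in range(len(row)):
        target = 1.0 if i == cur_image else 0.0
        row[i] += alpha * (target - row[i])

def run_learner(sequence, alpha, n_images=4):
    """Return P(I(t) | I(t-1)) as estimated just before each trial t >= 1."""
    # Assumed initialization: every transition starts out equally likely.
    matrix = [[1.0 / n_images] * n_images for _ in range(n_images)]
    estimates = []
    for t in range(1, len(sequence)):
        prev, cur = sequence[t - 1], sequence[t]
        estimates.append(matrix[prev][cur])  # prediction made before feedback
        delta_rule_update(matrix, prev, cur, alpha)
    return estimates, matrix
```

Because every entry in the updated row shrinks by the factor (1 − α) and the observed entry gains α, the row total stays at 1, which is the normalization property noted in the text.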

In addition, we examined the possibility that behavior may reflect the contributions of two parallel learning processes, by examining the fit of a transition matrix resulting from a weighted combination of two Rescorla-Wagner processes, each with a different value of the learning rate parameter α. Each process updated its matrix as above; however, the behaviorally expressed estimate of conditional probability was computed by combining the outputs of the two processes in a weighted average, with the weight π a free parameter:

| [2] |
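The weighted combination can be sketched as a convex mixture of the two processes' per-trial estimates. Which process receives weight π and which receives 1 − π is an assumption here, as are the function and argument names.

```python
def combine_estimates(p_slow, p_fast, weight):
    """Weighted average of two per-trial probability estimates.

    weight (pi in the text) is applied to the first process and 1 - weight
    to the second; the assignment of pi to a process is an assumption.
    """
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must lie in [0, 1]")
    return [weight * s + (1.0 - weight) * f for s, f in zip(p_slow, p_fast)]
```

Since each input is a probability and the weights sum to 1, the combined estimate also lies between 0 and 1.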

Since the models considered here differ in the number of free parameters, we compared their fit to the reaction time data using Bayes factors (Kass and Raftery 1995; the ratio of posterior probabilities of the model given the data) to correct for the number of free parameters fit. We approximated the log Bayes factor using the difference between scores assigned to each model using the Laplace approximation to the model evidence, assuming a uniform prior probability for values of the free parameters between zero and one. In participants for whom the Laplace approximation was not estimable for any model (due to a non-positive definite value of the Hessian of the likelihood function with respect to parameters) the BIC was used for all models. Model comparisons were computed both per-individual, and on the log Bayes factors aggregated across the population.

Finally, to evaluate the relative contribution of each process to explaining RTs, we report standardized regression coefficients for the slow and fast conditional probability estimates, e.g.

| [3] |
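For reference, a standardized regression coefficient is conventionally the raw weight rescaled by the ratio of the predictor's standard deviation to the outcome's. The sketch below uses that conventional definition, which may not match the exact form of Equation 3; all names are hypothetical.

```python
import statistics

def standardized_coefficient(beta, predictor, outcome):
    """Rescale a raw regression weight to standard-deviation units.

    Conventional definition: beta * sd(predictor) / sd(outcome).
    """
    return beta * statistics.stdev(predictor) / statistics.stdev(outcome)
```

Expressed this way, the weights for the slow and fast estimates are on a common scale and can be compared directly.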

fMRI Methods

Acquisition

Imaging was performed on the 3T Siemens Allegra head-only scanner at the NYU Center for Brain Imaging (CBI), using a Nova Medical (Wakefield, MA) NM011 head coil. For functional imaging, 33 T2*-weighted axial slices of 3mm thickness and 3mm in-plane resolution were acquired using a gradient-echo EPI sequence (TR=2.0s). Acquisition was tilted in an oblique orientation at 30° to the AC-PC line, consistent with previous efforts to minimize signal loss in orbitofrontal cortex and medial temporal lobe (e.g., Hampton et al. 2006). This prescription obtained coverage from the base of the orbitofrontal cortex and medial temporal lobes to the superior border of the dorsal anterior cingulate cortex. Four scans of 300 acquisitions each were collected, with the first four volumes (eight seconds) discarded to allow for T1 equilibration effects. We also obtained a T1-weighted, high-resolution anatomical image (MPRAGE, 1×1×1 mm) for normalization and localizing functional activations.

Imaging analysis

Preprocessing and data analysis were performed using Statistical Parametric Mapping software version 5 (SPM5; Wellcome Department of Imaging Neuroscience, London UK), and version 8 for final multiple comparison correction. EPI images were realigned to the first volume to compensate for participant motion, coregistered to a higher-resolution field map and to the anatomical image, and, to facilitate group analysis, spatially normalized to atlas space using a transformation estimated by warping the subject's anatomical image to match a template (SPM5 segment and normalize). In order to ensure that the original sampling resolution was preserved in the normalized space, images were resampled to 2×2×2 mm voxels in the normalized space. Finally, they were smoothed using a 6mm full-width at half-maximum Gaussian filter. For statistical analysis, data were scaled to their global mean intensity and high-pass filtered with a cutoff period of 128s. Volumes on which instantaneous motion was greater than 0.25mm in any direction were excluded from analysis. Data from two participants were excluded due to excessive motion on a large percentage of volumes.

Statistical analysis

Statistical analyses of functional timeseries were conducted using general linear models, and coefficient estimates from each individual were used to compute random-effects group statistics. Delta-function onsets were specified at the beginning of each stimulus presentation, and -- to control for lateralization effects -- duplicate nuisance onsets were specified for presentations on which right-handed responses were required. This had the effect of mean-correcting these trials separately. All further regressors were defined as parametric modulators over the initial, two-handed stimulus presentation onsets. All regressors were convolved with SPM's canonical hemodynamic response function (HRF).

The remaining regressors were constructed as follows. First, to control for nonspecific effects of reaction time (which, as demonstrated by our behavioral results, was correlated with our primary regressor of interest, the conditional probability), each trial's reaction time was entered into the design matrix as a parametric nuisance effect. We took advantage of the serial orthogonalization implicit in SPM's parametric regressor construction by placing this regressor first in the set of parametric modulators. As a result, all subsequent regressors, including all regressors of interest, were orthogonalized against this variable, ensuring that it accounted for any shared variance. We next included regressors of interest specifying the conditional probability and conditional entropy associated with the current image, and their partial derivatives with respect to the learning rate (see Learning rate analysis below). Finally, variance due to the effects of missed trials (those in which the participant did not press any keys in the allotted time) and error trials was modeled with additional nuisance regressors, entered last in orthogonalization priority.
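The effect of serial orthogonalization can be illustrated with a Gram-Schmidt pass over mean-centered regressors. This is a conceptual sketch of the idea, not SPM's implementation; the helper names are assumptions.

```python
def mean_center(values):
    """Subtract the mean so that the regressor sums to zero."""
    mean = sum(values) / len(values)
    return [v - mean for v in values]

def dot(a, b):
    """Inner product of two equal-length lists."""
    return sum(x * y for x, y in zip(a, b))

def serially_orthogonalize(modulators):
    """Gram-Schmidt over mean-centered regressors, in the order given.

    Each regressor keeps only the variance not explained by earlier ones,
    so shared variance is credited to whichever regressor comes first.
    """
    result = []
    for column in modulators:
        residual = mean_center(column)
        for earlier in result:
            norm = dot(earlier, earlier)
            if norm > 0.0:  # skip constant (all-zero after centering) columns
                scale = dot(residual, earlier) / norm
                residual = [r - scale * e for r, e in zip(residual, earlier)]
        result.append(residual)
    return result
```

Placing reaction time first in the list means that the probability and entropy regressors returned after it carry only variance that reaction time cannot account for.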

Our regressors of interest were derived from the timeseries of transition matrices estimated by the best-fitting behavioral model, the two-learning rate model of Equations 1 and 2. In particular, we include the probability of the image I(t) displayed at each trial t, conditional on its predecessor – P(I(t) | I(t-1)), and in addition the entropy of the distribution over the subsequent stimulus, given the image I(t) currently viewed:

| [4] |

(Here I(t) denotes the image actually displayed on trial t, but the sum is over all four possible identities of the as-yet-unrevealed subsequent image, I(t+1)). Whereas the conditional probability measures how “surprising” the current stimulus is, this quantity, which we refer to as the “forward entropy,” measures the “expected surprise” for the next stimulus conditional on the current one, i.e. the uniformity of the conditional probability distribution. Because of the temporal dissociation between probability of the current stimulus and entropy of the distribution of ensuing stimuli, there was no inherent confound between these two regressors. However, as the entropy regressor was orthogonalized against probability, any shared variance was attributed to conditional probability.
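The forward entropy corresponds to the Shannon entropy of the row of the estimated transition matrix indexed by the current image, H = −Σ_i P(i | I(t)) log P(i | I(t)). The sketch below assumes natural logarithms (the base used in the original analysis is not stated here) and uses hypothetical names.

```python
import math

def forward_entropy(matrix, current_image):
    """Shannon entropy (in nats) of the estimated next-image distribution.

    matrix[current_image][i] is the estimated P(next image = i | current image).
    Zero-probability entries contribute nothing to the sum.
    """
    return -sum(p * math.log(p) for p in matrix[current_image] if p > 0.0)
```

A uniform row gives the maximum value, log 4 for four images, while a row that places all probability on one successor gives zero, matching the description of entropy as the uniformity of the conditional distribution.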

In all analyses, unless otherwise stated, activations are reported for areas where we had a prior anatomical hypothesis at a threshold of p < 0.05, corrected for family-wise error (FWE) in a small volume defined by constructing an anatomical mask, comprising the regions of a priori interest, over the population average of normalized structural images. These are, bilaterally, hippocampus as defined by the Automated Anatomical Labeling atlas (Tzourio-Mazoyer et al. 2002), and anterior ventral striatum (caudate and putamen), defined (after Drevets et al. 2001) by taking the portion of the relevant AAL masks below the ventral-most extent of the lateral ventricles (in MNI coordinates, Z<1), and anterior to the anterior commissure (Y>5). This area corresponds, functionally, to the regions most often observed to reflect learning-related update signals in fMRI studies of reward learning tasks (O'Doherty et al. 2003; McClure et al. 2004; Delgado et al. 2000; Knutson et al. 2001). Activations outside regions of prior interest are reported if they exceed a threshold of p < 0.05, whole-brain corrected for FWE. All voxel locations are reported in Montreal Neurological Institute coordinates, and results are displayed overlaid on the average over participants’ normalized anatomical scans.

Learning rate analysis

In the best-fitting behavioral model, the learned transition matrix arises from two modeled learning processes, each with a free parameter for its learning rate. We used three separate fMRI analyses (and, reported in supplemental material, two more alternative specifications) to investigate this multiplicity of potential effects. First, seeking correlates for each of these two subprocesses hypothesized on the basis of the behavior separately, we conducted two GLM analyses, each using as regressors of interest the probability and entropy regressors constructed from one of the single learning rates identified from the behavior. These two analyses were conducted in separate GLMs due to correlation between regressors generated using different values of the learning rate parameter. Our third GLM addressed the problem of correlation between signals more directly with a single design that formally investigated the possibility that learning rates expressed across regions of the brain differed from one another. To this end, we employed a GLM that included additional regressors quantifying the effect of changes in the modeled learning rate on the regressors of interest. A detailed description of this analysis is available in the supplementary methods (Learning rate analysis).

Results

Behavioral Results

Participants performed a Serial Reaction Time (SRT) task, in which they were instructed to label each of a continuous sequence of image stimuli (Figure 1b) according to a predetermined one-to-one mapping of each of four keys to each of four natural scene images (Wittmann et al. 2008). Participants were first trained to map fingerpress responses to images (Figure 1a) at a criterion level of performance (75 correct out of at most the 100 preceding trials). During this training phase, the mean number of trials to criterion was 102.4 (standard deviation 45.6). The mean time to correct response was 889.9ms (standard deviation 443.2ms).

The main experiment consisted of a sequence of image labeling trials. Images on each trial were selected according to a first-order Markov process; that is, with a conditional probability determined by the identity of the previous image (see Methods). Participants were not instructed about the existence of sequential structure in the task. During the testing phase, errors – defined as trials in which the first keypress did not correspond to the presented image – were few (mean 2.6%, standard deviation 1.5%), while the mean time to correct response fell relative to training, to 692.7ms (standard deviation 268ms).

Ground-truth probability

Figure 2a shows the relationship between reaction time (RT) on correct trials and the ground-truth conditional probability of the image being identified, across the population. Here, for each participant, RTs were first corrected for the mean RT and a number of nuisance effects – estimated using a linear regression containing only these effects as explanatory variables and computing the residual RTs. Of the nuisance regressors, only the self-transition effect was significant across the population (p < 1e-7; all others p > 0.15).

The impression that RTs are faster for conditionally more probable images is confirmed by repeating the regressions with the ground-truth conditional probability included as an additional explanatory variable. Across participants (that is, treating the regression weight as a random effect that might vary between individuals), the regression weight for this quantity was indeed significantly negative (one-sample t-test, p < 2e-7; mean effect size 0.76 ms RT per percentage conditional probability) and, at an individual level, reached significance (at p < 0.05) for 15 of 20 participants. This analysis contained a second regressor of interest: the entropy of the conditional distribution leading to the current image. Although entropy has previously been shown to impact RTs (Strange et al., 2005), the conditional entropy effect did not reach significance here either across the population (p > 0.2), or for any participant individually, and thus was discarded from further behavioral analyses.

This analysis indicates that participant responses were prepared in a manner reflecting some approximation of the programmed transition probabilities. As the probabilities were not instructed, we inferred that these quantities were estimated incrementally by learning from experience. The remainder of our analysis of behavior attempted to characterize the nature of this learning (den Ouden et al. 2010).

Learning analysis

In order to test the general structure of a learning model, we first examined the contribution of past experience to current expectations, while making few assumptions about the form of this dependence. To this end, we employed a regression model in which previous events were explicitly included as explanatory variables for RT (Lau and Glimcher 2005). In particular, for each trial, in which some image Y was presented having been preceded by some image X, we included explanatory variables corresponding to each of the last ten previous presentations of X, defined as 1 if that presentation was also followed by Y, and 0 otherwise. The resulting regression weights measure to what extent the RT to Y following X is affected by recent experience with the image pair X→Y, relative to other pairs X→Z. In Figure 2b, the fit regression weights, up to one more than the most remote to reach significance, are averaged across participants and plotted as a function of the lag into the past, counted as the number of presentations of X.
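The construction of these explanatory variables can be sketched as follows. This is a minimal illustration rather than the analysis code used in the study: the function name is hypothetical, and the treatment of trials with fewer than ten earlier presentations of X (marked here as None) is an assumption, since the text does not specify it.

```python
def lagged_pair_regressors(sequence, n_lags=10):
    """Build one row of lagged indicators per trial (starting at the second image).

    For a trial showing image Y after image X, entry lag-1 of the row is
    1 if the lag-th most recent earlier presentation of X was also followed
    by Y, 0 if it was followed by a different image, and None if X had not
    yet been presented that many times (an assumed convention).
    """
    successors = {}  # image X -> successors observed after X, oldest first
    rows = []
    for t in range(1, len(sequence)):
        x, y = sequence[t - 1], sequence[t]
        past = successors.get(x, [])
        row = []
        for lag in range(1, n_lags + 1):
            if lag <= len(past):
                row.append(1 if past[-lag] == y else 0)
            else:
                row.append(None)
        rows.append(row)
        # Record the current transition only after building the row, so the
        # regressors for a trial never include that trial's own outcome.
        successors.setdefault(x, []).append(y)
    return rows


rows = lagged_pair_regressors([0, 1, 0, 1, 0, 2], n_lags=2)
```

Counting lags in presentations of X, rather than in trials, matches the horizontal axis described for Figure 2b.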

Consistent with experience-driven learning of conditional probabilities, recent experiences with the image pair X→Y predict faster responding. This dependence appears to decay rapidly, though it continues to contribute to current expectations for several presentations: regression weights are significantly non-zero across subjects through roughly the seventh previous observation of X (one sample t-tests: at lag 5 p=0.11, all others from 1 to 7 p<0.04; at lag 8 p>0.8). Since any image occurs, in expectation, every four trials, this suggests that the experience of a transition has a detectable effect over an average window of some sixteen to twenty-eight trials (that is, four to seven presentations of X).

This regression characterizes the form of reaction times' dependence on past experiences as a weighted running average, here appearing reasonably exponential. Exponentially decaying weights are characteristic (Bayer and Glimcher 2005; Lau and Glimcher 2005; Corrado et al. 2005) of an error-driven learning procedure for estimating conditional probabilities (Rescorla and Wagner 1976; here, Equation 1 in Methods), with the free learning rate parameter, α, determining the time constant (1 - α) of the decay. Thus, the learning rate is equivalent to a 'forgetting', or 'decay' rate (Rubin et al. 1996; Rubin et al. 1999). The same equations also characterize the average decay behavior for models that update at varying rates or only sporadically (Behrens et al. 2007). However, other sorts of learning rules predict qualitatively different weightings. For instance, because of exchangeability, ideal Bayesian estimation of a static transition matrix (Harrison et al. 2006) or indeed a simple all-trials running average predict equal coefficients at all time lags. On the basis of these results, we do not consider such models further (though the superiority of the Rescorla-Wagner model was also verified by directly comparing these models' fits to the RTs; analyses not reported).
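The link between error-driven updating and exponentially decaying weights can be checked numerically. The sketch below assumes the standard delta-rule form P ← P + α(o − P) for a single transition estimate, where o is 1 if the transition occurred and 0 otherwise; Equation 1 in Methods is not reproduced here, so this form, the function names, and the example values are assumptions made for illustration.

```python
def delta_rule(outcomes, alpha, p0=0.0):
    """Apply the assumed delta rule once per outcome and return the final estimate."""
    p = p0
    for o in outcomes:
        p += alpha * (o - p)
    return p


def exponential_average(outcomes, alpha, p0=0.0):
    """Same estimate written as an explicit weighted sum over past outcomes.

    The outcome seen `lag` presentations ago receives weight
    alpha * (1 - alpha) ** (lag - 1), and the starting value p0 is
    discounted by (1 - alpha) ** n.
    """
    n = len(outcomes)
    total = (1 - alpha) ** n * p0
    for lag, o in enumerate(reversed(outcomes), start=1):
        total += alpha * (1 - alpha) ** (lag - 1) * o
    return total


outcomes = [1, 0, 0, 1, 1, 0, 1]  # arbitrary example history
incremental = delta_rule(outcomes, alpha=0.3, p0=0.25)
weighted = exponential_average(outcomes, alpha=0.3, p0=0.25)
```

The two computations agree up to floating-point error, which is why exponentially decaying lagged regression weights are read as a signature of this kind of learning rule.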

However, in the domain of decision making, it has previously been noted that the effects of lagged experiences on choices are better described by the weighted sum of two exponentials with different time constants, a pattern that was suggested to result from the superposition of two underlying processes learning at different rates (Corrado et al. 2005). This is also true of the averaged regression weights in Figure 2b (likelihood ratio test comparing one- and two-exponential fits, p=0.0024).

Altogether, then, the form of the regression weights suggests reaction times superimpose conditional probability estimates learned using two error-driven learning processes with different decays. To verify that this appearance did not arise from the averaging over subjects in Figure 2b, and to quantify the hypothesis directly in terms of its fit to RTs (rather than parameter estimates from an intermediate analysis), we considered the fit of one- and two-learning rate Rescorla-Wagner models to each participant's RT data, essentially equivalent to refitting the regression model while constraining each participant's weights to follow a one- or two-exponential form.

Figure 2c shows the difference in log Bayes factor between these two models. The two-learning rate model was favored over the one-learning rate model for 17 of 18 participants. Aggregated over subjects, the two-learning rate model was favored by a log Bayes Factor of 145. The conditional probabilities learned by the two-learning rate Rescorla-Wagner model also explained the RTs considerably better than the 'ground truth' programmed probabilities (log Bayes factor 308.9 aggregated, and favoring the two process model for 17 of 18 participants individually). For this two-learning rate model, the mean effect size implied by the regression weights was 1.06 ms RT per percentage point of combined conditional probability. Finally, we measured the relative contribution of each probability estimate to reaction time, by computing each effect's regression coefficients normalized for variance in each probability timeseries. The resulting standardized coefficients were −0.075 for the slow regressor, and −0.071 for the fast regressor (both means across the population, with S.E.M. of 0.01). Thus, the probabilities learned by slower and faster learning rates appear to contribute roughly equally to explaining reaction times.

For the purpose of conducting fMRI analyses measuring individual variations in neurally implied learning rate estimates relative to a common baseline (see Methods for an in-depth justification of this approach), we re-estimated the two-process model parameters as single, fixed effects across participants. The best-fit learning-rate parameters were 0.5499 and 0.0138, weighted at 0.4018 to the slow parameter. Additionally, we computed the population median of the random-effects learning rates (0.6054 and 0.008) and weight (0.35 to the slow parameter), and observed that they did not significantly differ from the best-fit fixed-effects values (all p > 0.1).
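A two-learning-rate model of this kind can be sketched as two transition matrices updated in parallel and then mixed. In the sketch below, the learning rates and weight are the fixed-effects values reported above; everything else is an assumption made for illustration, including the uniform initial estimates, the update of a whole row toward the observed successor, the linear mixing of the two estimates, and the function names.

```python
def update_row(matrix, prev_image, image, alpha):
    """Delta-rule update of the row for prev_image, in place.

    The observed successor moves toward 1 and all other successors
    toward 0, so a row that sums to 1 keeps summing to 1.
    """
    row = matrix[prev_image]
    for j in range(len(row)):
        target = 1.0 if j == image else 0.0
        row[j] += alpha * (target - row[j])


def two_rate_probabilities(sequence, n_images, alpha_fast, alpha_slow, w_slow):
    """Return the mixed predicted probability of each observed image."""
    uniform = 1.0 / n_images  # assumed starting point
    fast = [[uniform] * n_images for _ in range(n_images)]
    slow = [[uniform] * n_images for _ in range(n_images)]
    combined = []
    for t in range(1, len(sequence)):
        x, y = sequence[t - 1], sequence[t]
        # Prediction is read out before learning from the current trial.
        combined.append(w_slow * slow[x][y] + (1 - w_slow) * fast[x][y])
        update_row(fast, x, y, alpha_fast)
        update_row(slow, x, y, alpha_slow)
    return combined


# Fixed-effects values from the text; the alternating sequence is a toy input.
combined = two_rate_probabilities(
    [0, 1] * 20, n_images=4,
    alpha_fast=0.5499, alpha_slow=0.0138, w_slow=0.4018,
)
```

On this toy input the fast estimate saturates within a few presentations while the slow estimate keeps rising, which is the qualitative difference the two-exponential pattern in Figure 2b is taken to reflect.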

Together, these data suggest that participants learn predictions of conditional probability from experience by an error-driven learning process, and that the data are best explained by the superposition of two such processes learning in parallel.

fMRI Results

We next sought signatures in neural activity of the two learning processes suggested by our behavioral analyses. On the basis of previous work on multiple systems involved in sequential learning (e.g., Poldrack et al. 2001; Nomura et al. 2007), we focused on the hippocampus and the anterior ventral striatum as areas of prior anatomical interest. We hypothesized that activity in these two areas might reflect learning at different rates, matching the two processes we inferred from behavior (Gläscher and Büchel 2005).

We used a strategy of model-based fMRI analysis (Gläscher and O'Doherty 2010), analogous to an approach often used with reward-related learning. In short, we exploit the fact that the models we fit to behavior define internal variables – here, the learned transition matrices – hypothesized to underlie behavior – here, the RTs. The timeseries of these variables from the fit behavioral models may serve as signatures for ongoing neural processes related to the computations and, thus, provide quantitative tests for the hypothesized dynamics of these processes. This approach appears well-suited to our study, in which the behavioral results suggest two parallel learning processes specifying distinct timeseries of values for these internal variables. Analogous work in RL tasks often seeks both anticipatory (future value) and reactive (prediction error) measures of reward prediction; here, we define analogous regressors for stimulus predictions using the forward entropy (cf. Strange et al. 2005, Harrison et al. 2006) of the predicted stimulus distribution, and the conditional probability of an observed stimulus. We adopt the latter regressor rather than its log (a traditional measure of surprise in information-theoretic work), because of its relationship to the prediction error (Eq. 1 and Gläscher et al. 2010).
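The two regressors can be read directly off a learned transition matrix. The following sketch is illustrative only: the function names are hypothetical, and the use of base-2 logarithms (entropy in bits) is an assumption, as the text does not state the units.

```python
import math


def forward_entropy(row):
    """Shannon entropy, in bits, of the predicted distribution over the next image.

    `row` is the learned transition row for the image currently on screen.
    Zero-probability entries contribute nothing, by the usual convention.
    """
    return -sum(p * math.log2(p) for p in row if p > 0)


def conditional_probability(matrix, prev_image, image):
    """Learned probability of the image just shown, given the preceding image."""
    return matrix[prev_image][image]


flat = forward_entropy([0.25, 0.25, 0.25, 0.25])    # maximal uncertainty
peaked = forward_entropy([0.97, 0.01, 0.01, 0.01])  # one strongly expected successor
```

Forward entropy looks ahead from the current image and is therefore anticipatory, whereas the conditional probability is evaluated once the image has appeared and is therefore reactive.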

However, simply seeking the correlates of these timeseries in a GLM (Tanaka et al. 2004; Gläscher and Büchel 2005) is statistically inefficient because the versions of our variables of interest calculated at different values of α are highly correlated (average correlation across participants between slow and fast LR processes: R=0.52 for probability, 0.31 for entropy). We instead separately studied the regressors from the slow and fast LR processes, in distinct GLMs, to form an initial impression of how this activity may fractionate according to processes operating at different learning rates.

Next, to formally answer questions about whether the learning process that best explains BOLD activity differs between areas, we measured the degree to which BOLD in a region reflects a value of the learning rate parameter α that was different from the one tested. In particular, we first identified areas with learning-related activity by assuming a value of the decay rate intermediate to those observed behaviorally, and then analyzed residual activity explained by additional, orthogonal regressors representing how the modeled signal would change if the parameter that produced these regressors was increased or decreased.

Forward entropy

Our primary analysis sought regions where activity suggested anticipatory processing, operationalized by the uncertainty about the identity of the next stimulus conditional on the current one (forward entropy; cf. Strange et al. 2005, Harrison et al. 2006). BOLD signals correlating with this timeseries may reflect spreading activation among (anticipatory retrieval of) multiple representations in an autoassociative memory network, or, similarly, simulation of future events using a forward model (Niv et al. 2006).

When the regressors were computed according to the slow LR process, correlates emerged in the hippocampus. Activity correlated with the slow process is illustrated in Figure 3c. In particular, a cluster of significantly correlating activity was identified in left anterior hippocampus [−26, −10, −18; peak FWE p < 0.02 small-volume corrected for family-wise error due to multiple comparisons over our mask of a-priori regions of interest, cluster FWE p < 0.04].

Figure 3.

Areas where the BOLD signal correlated with the entropy over the distribution of upcoming stimuli, generated at each of our analyzed learning rates. Images are thresholded at p < 0.001, uncorrected, for display purposes. Row A shows activation observed in the fast process GLM, with clusters of negative correlation in ventral striatum and anterior insula. Row B shows activation observed in the slow process GLM, a positively correlated cluster in anterior hippocampus. The activation visible in posterior parahippocampal cortex did not survive correction for multiple comparisons.

For regressors computed according to the fast LR process, we observed no activity positively correlated with forward entropy at our threshold. However, a significant cluster of negatively correlated activity – possibly reflecting a lower degree of response preparation for the upcoming trial – was identified in right putamen [18, 6, −6; peak marginal at p < 0.06 SVC for FWE, but cluster FWE p < 0.04].

Outside our areas of interest, correlates of fast LR forward entropy were also observed in left anterior insula [−40, 14, −4; peak p < 0.05 when corrected for FWE over a mask of the whole brain] and inferior frontal gyrus [36, 16, −4; peak p < 0.05 by whole-brain FWE correction; not shown]. A complete list of clusters correlating with forward entropy can be found in Supplementary Tables S1 and S2.

Together, these results suggest that anticipatory activity reflecting learned transition contingencies is visible across a number of brain regions. The distinct difference in activations observable in the SPMs from each process further suggests that distinct networks which include either hippocampus or striatum might be associated, respectively, with slow and fast LR estimates of sequential contingencies, and further correlated in opposing directions with the same measure, forward entropy, extracted from each process. In supplemental materials, we also report results from analyses using probability and entropy regressors derived from the combined process rather than either separately. Correlates there do not reach similar levels of statistical significance, consistent with our interpretation that activity is related to either process separately (Supplemental Results).

However, it is important to stress that an apparent difference between thresholded statistical maps does not constitute formal demonstration that a difference exists (“the imager's fallacy”; Henson 2005). Further, the results presented thus far do not directly compare the two processes in a single GLM. Supplementary Figure S1 shows that qualitatively similar activations were observed only at a lower statistical threshold (as expected due to correlation between the regressors), when regressors from both processes were estimated within a single GLM. We report a different strategy to allow a more direct and statistically powerful investigation of this issue under Divergent learning rates, below.

Conditional probability

Next we sought regions with reactive rather than anticipatory activity, specifically those where BOLD signal correlated with the probability of the presently viewed image, conditional on the identity of the preceding image. Such activity might reflect the degree of expectation or response preparation, or (in the case of negative correlations) surprise or prediction error, which is decreasing in the predicted probability of the observed image (Gläscher et al. 2010). Note that although the conditional probability was shown to correlate with RT in the behavioral analyses, above, RT effects do not confound the fMRI analyses presented here, as all regressors of interest were first orthogonalized against RT.

When regressors were computed from the fast LR process, activation correlating with this timeseries was observed in right putamen [18, 14, −4; p < 0.04 FWE corrected over small volume] (Figure 4b).

Figure 4.

Areas where the BOLD signal correlated with the conditional probability of the current stimulus, generated at each of our analyzed learning rates. Images are thresholded at p < 0.001, uncorrected, for display purposes. Using the fast process GLM, significant activation was observed in ventral striatum. No clusters significantly correlated with conditional probability were observed in the slow process GLM.

In the slow LR process, no activity correlating with conditional probability was observed in our regions of interest, and those clusters above our observation threshold did not survive whole-brain correction.

A complete list of clusters correlating with conditional probability can be found in Supplementary Table S3.

Divergent learning rates

Our results to this point are suggestive of distinct neural processes operating at different learning rates, but we have not yet provided statistical evidence explicitly supporting the claim that these rates are different. We now quantitatively evaluate the claims that (a) activity in these regions is best explained by different rates, and (b) each of these rates is uniquely consistent with one of the two rates identified in our behavioral analysis.

Here, again, we consider timeseries drawn from a single process learning at a single rate, and pose questions about neural activity, relative to that rate. The dependence of the modeled learning-related activity (conditional probability or entropy timeseries) on the learning rate parameter is nonlinear. We adopt a linear approximation to this dependence so as to pose statistical questions about the learning rate that would best explain the neural activity in terms of a standard random-effects general linear model (GLM). Specifically, having generated regressors for our variables of interest according to a baseline learning rate (the midpoint of the behavioral rates), we estimated weights for additional regressors, representing the change in each variable that would result from a change in learning rate – formally, this is the partial derivative, with respect to learning rate, of the variable (Friston et al. 1998). A positive beta value estimated for this regressor in a given voxel would imply that the activity in that voxel is best explained by a timeseries generated using a higher learning rate than the one used as a baseline, and a negative beta value would imply a lower value for this best-fit learning rate. For a detailed description of the analysis, see Learning rate derivatives in Supplementary Methods.
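For a single transition estimate, the derivative regressor can be computed alongside the estimate itself by differentiating the update rule. The sketch below assumes the delta-rule form P ← P + α(o − P) and a starting value that does not depend on α; it covers only the probability timeseries, not the entropy timeseries, and is not the procedure given in Supplementary Methods. The function name and example values are hypothetical.

```python
def estimate_and_derivative(outcomes, alpha, p0=0.25):
    """Track the estimate P and its partial derivative dP/d(alpha) trial by trial.

    Differentiating P_new = P + alpha * (o - P) with respect to alpha gives
    dP_new = (1 - alpha) * dP + (o - P), using P from before the update.
    """
    p, dp = p0, 0.0  # p0 is fixed, so its derivative starts at zero
    trace = []
    for o in outcomes:
        dp = (1 - alpha) * dp + (o - p)  # must use the pre-update p
        p = p + alpha * (o - p)
        trace.append((p, dp))
    return trace


outcomes = [1, 0, 1, 1, 0, 0, 1, 1]  # arbitrary example history
alpha0 = 0.28                        # illustrative baseline, not the study's value
trace = estimate_and_derivative(outcomes, alpha0)
```

A finite-difference comparison (recomputing the estimate at slightly higher and lower α) is a convenient sanity check that the recursion is the intended derivative.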

To allow comparison between regions, we first identified voxels displaying learning activity in either region. For this, we performed a whole-brain regression using regressors of interest generated from a baseline learning rate parameter set to the midpoint of the fast and slow rate values identified in behavior, so chosen to have a symmetric chance of detecting activity related to either putative process. We then selected the peak active voxels in our two a priori regions of interest for the regressors that elicited significant activity in our previous analyses – forward entropy in hippocampus and conditional probability and forward entropy in ventral striatum – and examined the beta weights estimated for the corresponding derivative regressors. Before testing, these were scaled by the main effect of the regressor of interest in order to produce an estimate with units of learning rate.
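The logic of this scaling step can be written out under a first-order approximation. The notation below is introduced for illustration and is an assumption: x_t(α) is the modeled timeseries at learning rate α, α_0 is the baseline rate, β_0 and β_1 are the estimated weights on the main regressor and its derivative, and "scaled by the main effect" is read as division by β_0. The exact procedure is given in Supplementary Methods and is not reproduced here.

```latex
\begin{align}
x_t(\alpha) &\approx x_t(\alpha_0)
  + (\alpha - \alpha_0)\,
    \left.\frac{\partial x_t}{\partial \alpha}\right|_{\alpha_0} \\
y_t &= \beta_0\, x_t(\alpha_0)
  + \beta_1 \left.\frac{\partial x_t}{\partial \alpha}\right|_{\alpha_0}
  + \varepsilon_t
  \;\approx\; \beta_0\, x_t\!\left(\alpha_0 + \frac{\beta_1}{\beta_0}\right)
  + \varepsilon_t \\
\alpha_{\mathrm{BOLD}} &\approx \alpha_0 + \frac{\beta_1}{\beta_0}
\end{align}
```

On this reading, the ratio β_1/β_0 has units of learning rate, and its sign indicates whether the best-fitting rate lies above or below the baseline.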

Figure 5 displays the pattern of results. Comparing weights between areas, the activity in hippocampus related to forward entropy was best explained by a smaller learning rate, as assessed by the partial derivative regressor, than that in striatum related either to entropy (paired two-sample, two-tailed t-test across subjects, p=0.023) or probability (p=0.0251). These tests support the conclusion that activity in each region is described by incremental learning processes with distinctly different dynamics.

Figure 5.

Comparison of learning rates implied by activity in our primary regions of interest. These values were computed by first identifying voxels in our a priori regions of interest (hippocampus and ventral striatum) which were maximally responsive to our model regressors (probability and entropy) when generated at the midpoint of our behaviorally-obtained learning rates (black dotted line), then estimating best-fitting learning rates by deviations from this baseline (see Supplementary Methods). Bars represent the average implied learning rate across subjects, at a single voxel for each combination of region and regressor: left hippocampus (−26, −14, 24) and right ventral striatum (entropy 18, 16, −6; probability 20, 6, −2). Error bars represent the positive and negative confidence intervals, across subjects.

To clarify the unique association of each region with our behaviorally obtained learning rates, we additionally compared the parameter implied by the responses measured in each neural structure to the fast and slow rates identified behaviorally, and also to the average used to select voxels, α0. Compared to α0, the implied αBOLD in hippocampus was significantly lower (one-sample, two-tailed t-tests across subjects, p=0.049), while activity in striatum implied a value that trended towards significantly higher than α0 for entropy (p=0.058), though not for probability (p=0.37). The rate implied by activity in striatum was significantly higher than the slow rate for entropy (p < 5e-8) and probability (p=0.007); neither was significantly different from the fast rate (entropy, p=0.53; probability, p=0.86). Symmetrically, the αBOLD estimated for hippocampus was significantly lower than the fast rate (p=0.017) but not significantly different from the slow rate (p=0.56).

Taken together, these results suggest that learning-related activity in the hippocampus and striatum was, respectively, consistent with the slow and fast LR processes hypothesized on the basis of our behavioral model fits.

Discussion

We provide evidence that learned expectations expressed in serial response behavior are comprised of dissociable contributions from anatomically distinct networks learning at different rates. Effects of serial expectation on RTs (Bahrick 1954) and BOLD responses (Huettel et al. 2002; Harrison et al. 2006; Schendan et al. 2003) are well-established; we exploit these effects to study how participants learned expectations trial-by-trial. Thus our approach parallels recent work in reward learning and decision making (O'Doherty et al. 2003; Barraclough et al. 2004; Samejima et al. 2005; Lohrenz et al. 2007), but we apply these methods to investigate behavior that results from sequential contingency learning, while minimizing the influence of reinforcement. Since feedback about correctness was downplayed and the response behavior was well practiced and maintained near ceiling, the reaction time effects and associated neural modulations are likely to reflect fluctuating serial contingency predictions and are not explicable in terms of differential reward expectations. Such behavior appears well described by a weighted combination of two error-driven learning processes.

It is possible that the two-process nature of our results may be rooted in the fact that a serial reaction time task confounds both response-response and stimulus-stimulus sequencing. For instance, the long-lasting effects of transitions on hippocampal activity might reflect learning there of stimulus-stimulus predictive relations – a key building block for model-based RL (Gläscher et al. 2010) – while the faster decaying effects in striatum might reflect more transitory learning of response-response biases, with both affecting RTs. Directly testing this suggestion would require a different task that separately manipulated these two sorts of contingencies.

Similar distinctions in the timescale over which expectations are drawn have been observed between disparate brain structures processing common information – e.g., between subcortical and cortical association structures (Gläscher and Büchel 2005), and between different parts of sensory cortex (Hassan et al. 2008), and even between different neurons within an area (Bernacchia et al. 2011). However, this dissociation has not previously been drawn between hippocampus and striatum, and in none of these cases were the neural timescales linked to dissociable effects on behavior (see Kable and Glimcher 2007, for a discussion of the import of connecting putatively separate neural processes to distinct behavioral influences). In contrast, the most prominent issue addressed using SRT tasks like ours has been the status of learning as explicit or implicit (e.g., Nissen et al. 1989). In examining this distinction, researchers have explored the proposal that multiple processes underlie learning. A recent model (Keele et al. 2003) proposes that sequential learning involves the parallel operation of two cognitive systems, each constructing distinct representations: a multidimensional representation in one, entrained by the unidimensional representations of the other. Though it is unclear whether this hierarchical arrangement results in different timescales of integration (which would be reflected by different learning rates), the proposal that these systems correspond to ventral and dorsal networks, containing hippocampus and basal ganglia, respectively, is broadly consistent with our results. Even closer to our conclusions, Davis and Staddon (1990) advance a dual-system, two-learning-rate architecture to explain pigeons’ choices on a reversal learning task, which display both long- and short-timescale dependencies on experience.

Finally, neuropsychological and animal lesion studies have repeatedly observed that removal or damage to the hippocampus does not impair gross sequential RT effects in similar tasks (e.g., Curran 1997). Our results are consistent with these observations, in that either system's predictions may encourage responses, though our model predicts a quantitative difference in trial-by-trial adjustments in reaction times. Crucially, a recent pair of studies on a serial reaction time task resembling the one employed in the present experiment observed that lesions to the dorsal hippocampus of rodents actually improved performance (Eckart et al. 2011) – i.e., producing a steeper learning curve – while striatal lesions severely diminished the rate of learning (Eckart et al. 2010); a pattern consistent with slow and fast contingency learning in hippocampus and striatum, respectively.

Model-based simulation

One interpretation of the hippocampal activity in the present study is that it is driven by retrieval, which is ubiquitous, rather than encoding, which (as we discuss below) may be rare. For instance, if hippocampus retrieves likely subsequent pictures at each step, then more widespread activation would occur on trials with higher forward entropy, i.e., those in which activation spreads more evenly among more potential successors.

The suggestion that our hippocampal BOLD effects reflect preparatory “prefetching” of the anticipated next elements in the sequence coincides with observations of “preplay” activity in this structure in rodents (Ferbinteanu and Shapiro 2003; Johnson and Redish 2007; Diba and Buzsáki 2007). Such activity has been suggested to support decision making by evaluating the anticipated consequences of candidate actions, a strategy formalized by model-based reinforcement learning (Doya 1999; Daw et al. 2005; Niv et al. 2006; Johnson and Redish 2005; Rangel et al. 2008; Balleine et al. 2008; Doll et al. 2009) and also known as constructive episodic simulation (Tulving & Thompson 1971; Schacter and Addis 2007). Our results indicate that signals reflecting this activity may be parametrically modulated by a measure of the associative complexity of the trace being constructed or simulated.

The model-based approach contrasts with the model-free algorithms for reinforcement learning prominently associated with striatum and its dopaminergic afferents (Houk et al. 1995; Schultz et al. 1997). Note that, due to the minimal involvement of rewards (or errors) in our task, our striatal results are unlikely to relate to the reward prediction errors posited by these models (O'Doherty et al. 2003; McClure et al. 2004; Delgado et al. 2000; Knutson et al. 2001; Hare et al. 2008), but could relate to other non-reward correlates in striatum (Zink et al. 2006; Wittmann et al. 2008).

Arbitration between multiple systems in learning and control

Lesions in rodents support a dissociation between decision strategies along the lines of model-based vs model-free learning, supported by distinct networks neurally (Balleine and Dickinson 1998; Corbit and Balleine 2000; Gerlai 1998; Balleine et al. 2008). However, the neural basis of the dissociation is so far less crisply apparent in humans, and some work even seems to suggest overlapping substrates (Valentin et al. 2007; Frank et al. 2009; Tricomi et al. 2010; Gläscher et al. 2010; Daw and Simon 2011; Daw et al. 2011), perhaps because model-based and model-free evaluations of reward expectancy (and thus their anticipated BOLD correlates) are typically quite similar. To the extent to which our two processes do indeed map differentially to stimulus-stimulus and response-response associations, our study suggests an additional difference between the systems, in their timescale of learning. This difference may allow their predictions in a reinforcement learning context to be more easily distinguished. Thus, a potentially fruitful avenue for further research is to use the tools provided herein to identify the use of either system's learned predictions in the service of reward-guided decision making.

The nature of competition or collaboration between these systems in the control of behavior has been a topic of much empirical (Poldrack et al. 2001) and theoretical (Daw et al. 2005) inquiry. Our results suggest that activity in both hippocampus and striatum is mediated by the uncertainty (i.e. entropy) about anticipated ensuing stimuli, and that this activity may differently drive fluctuating signals in each area – positively in hippocampus, negatively in striatum. Such activity may reflect differential engagement of either system under different conditions of uncertainty (Daw et al. 2005). In a traditional response or choice task with fixed contingencies, the overall trend would be towards sharper expectations (lower uncertainty) over time, giving rise to a decrease in hippocampal and commensurate increase in striatal activity. Indeed, such a pattern bears strong similarity to that repeatedly observed in probabilistic association learning tasks (Poldrack et al. 2001; Poldrack and Packard 2003).

Learning rates and associative representations

So far, we have stressed differences in the timescales over which neural activity reflects past events. However, in the error-driven learning model (Equation 1), as indeed in estimation more generally, encoding is also forgetting. Thus a long-timescale dependence of learned predictions on events (slow decay, high 1 - α) goes hand in hand with slow, incremental encoding (a small learning rate α). Viewed from this perspective, perhaps the most surprising aspect of our data is that we measure faster learning rates in striatum than hippocampus, given the traditional association of hippocampus with fast, single-shot episodic encoding (McClelland et al. 1995), and striatum with more incremental procedural learning (Knowlton et al. 1996a).

There are at least two possible explanations for this finding. One possibility is that a fundamental hippocampal function in learning relations (Cohen and Eichenbaum 1993) comprises not only relating events occurring simultaneously in an episode – a fast-timescale encoding – but also discovering event relations obtaining stochastically between temporally separated events (Shohamy and Wagner 2009; Hales and Brewer 2010), as with state transition contingencies in model-based RL. If so, different tasks or task variants might induce learning over different timescales, depending on the relations involved (Komorowski et al. 2009). In particular, learning a probabilistic transition structure, like imputing the equivalence relationships in Shohamy and Wagner's (2009) acquired equivalence task, requires integrating events across time rather than within an episode.

A second interpretation of the hippocampal result, suggested by the episodic learning literature, is that the slow learning rate we measure for representations in hippocampus reflects not continual, incremental updating, but instead the average rate of learning over trials in which associations might be formed rapidly but only sporadically. In particular, the Rescorla-Wagner equation also describes the expected update for the predictions under sporadic encoding (e.g., on a given trial, probability α of fully re-encoding a categorical stimulus-stimulus link, with no learning otherwise), or more generally some in-between process where α modulates over some fraction of trials. For simplicity and to enable comparing many sorts of learning in a single framework, we operationalized the distinction between the systems in terms of a nominally constant learning rate. If updates are sporadic or step sizes time-varying, the fit parameter value will instead characterize their average rate (Behrens et al. 2007). Our learning rule thus comprises (at least in expectation) a spectrum of possibilities between incremental learning of a probabilistic relation and sporadic encoding of a categorical relation, the latter similar to discrete state space models that have previously been applied to hippocampal function (Law et al. 2005; Prerau et al. 2008; Wirth et al. 2009).
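The equivalence in expectation can be made explicit for the simplest sporadic scheme. The notation is introduced here for illustration and is an assumption: P_n is the estimate after the n-th presentation, o_n is 1 if the transition occurred and 0 otherwise, and on each presentation the link is fully re-encoded with probability α and left unchanged otherwise.

```latex
\begin{align}
P_n &=
  \begin{cases}
    o_n     & \text{with probability } \alpha \\
    P_{n-1} & \text{with probability } 1 - \alpha
  \end{cases} \\
\mathbb{E}\left[P_n \mid P_{n-1}\right]
  &= \alpha\, o_n + (1 - \alpha)\, P_{n-1}
   = P_{n-1} + \alpha \left(o_n - P_{n-1}\right)
\end{align}
```

The right-hand side has the same form as an incremental error-driven update with learning rate α, so a small fitted α is compatible with either slow graded updating or infrequent all-or-none encoding.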

This hypothesis that our task produces sporadic hippocampal encoding is supported by work suggesting that hippocampus forms associations preferentially at the detection of sufficiently large deviations from expected input (Tulving et al. 1996; Bakker et al. 2008; Lisman and Grace 2005), or when task demands enhance the motivational salience of expectancy violations (Duncan et al. 2009).

This interpretation of the current result is difficult to test directly using the present data set and methods, particularly because it is technically challenging to test the fit of such a model absent a specific hypothesis about which trials may have encouraged encoding or not. A more direct approach would be to manipulate factors expected to impact the tendency to form new episodes (Behrens et al. 2007; Bakker et al. 2008; Duncan et al. 2009; Wilson et al. 2010; Nassar et al. 2010), and seek an effect on the measured hippocampal learning rate. For instance, in environments with largely stationary associative structure, as in our task, learning may appear “slow” on average. However, tasks with more frequent changes may produce a correspondingly larger value of the hippocampal learning rate. This prediction parallels previous work demonstrating that humans (Behrens et al. 2007; Speekenbrink and Shanks 2010) and animals (Dayan et al. 2000; Courville et al. 2006; Preuschoff and Bossaerts 2007) modulate their learning rates in response to the volatility of changes in associational structure. The exact response of each individual system to environmental volatility is a potentially fruitful avenue for future research.

Representations in the model-based system

The question of whether sequential predictions are categorical or graded bears directly on how they would support decisions. In computational RL, state-state world models are probabilistic (to support exact computations of expected future value in stochastic Markov decision tasks), but more psychological accounts of deliberative processing, to which model-based RL might correspond, often take its representations to be rule-based or categorical, in contrast to more graded representations learned in an incremental fashion by (model-free) procedural systems (Knowlton 2002; Maddox and Ashby 2004). A similar binary view is taken in “state space” models (Law et al. 2005) that have been applied to hippocampal associative representations.

However, the above considerations notwithstanding, the extreme case of a process in which hippocampus learns only categorical predictive associations seems unlikely to explain our observations, because neural activity here is seen to relate to forward entropy (see also Strange et al. 2005; Harrison et al. 2006). Although the Rescorla/Wagner rule describes the expected timecourse for the stimulus predictions even under sporadic encoding, the timecourse of their entropy in this case is not the same as the entropy of the expected predictions, since the entropy is a nonlinear function of the predictions. More concretely, if the neural representation over the next stimulus were always fully categorical (i.e., as a probability distribution, deterministic, though undergoing sporadic stepwise changes) then the implied entropy would be always minimal and never modulate, unlike the hippocampal signal we detect. To be sure, it is possible that our observations could be produced by a process learning graded predictions via sporadic yet still incremental changes, at a learning rate a multiple of that measured here.
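The nonlinearity argument can be made concrete with a short numerical sketch. It assumes, purely for illustration, a two-outcome prediction (so entropy is the binary entropy in bits) and a hypothetical population of ten representations; the function names are likewise illustrative and are not part of the analysis reported here.

```python
import math


def binary_entropy(p):
    """Entropy (in bits) of a two-outcome prediction with probability p."""
    if p <= 0.0 or p >= 1.0:
        return 0.0  # a deterministic prediction carries no uncertainty
    return -(p * math.log2(p) + (1 - p) * math.log2(1 - p))


def entropy_of_mean(predictions):
    """Entropy of the averaged (expected) prediction."""
    return binary_entropy(sum(predictions) / len(predictions))


def mean_of_entropies(predictions):
    """Average of the entropies of the individual predictions."""
    return sum(binary_entropy(p) for p in predictions) / len(predictions)


if __name__ == "__main__":
    # Hypothetical categorical representations: 8 of 10 encode the link as
    # certain, 2 as absent, so the expected prediction is 0.8.
    categorical = [1.0] * 8 + [0.0] * 2
    # Hypothetical graded representations with the same expected prediction.
    graded = [0.8] * 10

    print(entropy_of_mean(categorical), mean_of_entropies(categorical))
    print(entropy_of_mean(graded), mean_of_entropies(graded))
```

In this sketch both populations share the same expected prediction, and hence the same entropy of the expectation, but only the graded population has nonzero entropy at the level of the individual representation. A signal that tracks forward entropy is therefore informative about which kind of representation is present.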

Either way, our data invite an interpretation in which hippocampal representations reflect the statistics of the environment in graded form, a view more conducive to model-based RL and also consistent with research on its involvement in learning of statistical task structure (Gluck and Myers 1993; Courville et al. 2004; Gershman and Niv 2009).

Supplementary Material

To observe the preferential activation of each region despite the correlation between our regressors of interest when generated at different learning rates, this GLM was run with orthogonalization turned off for all regressors. The regressors of interest from both the fast and slow processes were entered into a single GLM. The entropy results shown here reflect patterns of activity similar to those in the main text (Figure 3a and b), albeit at a less stringent statistical threshold.

Row A shows activation observed in correlation with the conditional probability regressor from the combined process GLM, highlighting clusters observed in the body of the caudate. Row B shows activation observed in correlation with the forward entropy regressor, in particular a cluster of negative correlation in anterior ventral striatum.

Acknowledgements

The authors are grateful to Todd Gureckis, David Heeger, Rich Ivry, Michael Landy, Brian McElree, Wendy Suzuki, Daphna Shohamy and Klaas Stephan for helpful conversations, and Samuel Gershman, Jian Li, and Dylan Simon for valuable technical assistance.

Grants: This work was funded by a McKnight Scholar Award, NIMH grant 1R01MH087882-01, part of the CRCNS program, Human Frontiers Science Program Grant RGP0036/2009-C, and a NARSAD Young Investigator Award.

Contributor Information

Aaron M. Bornstein, New York University, 4 Washington Pl. Suite 888, New York, NY 10003 USA

Nathaniel D. Daw, New York University, 4 Washington Pl. Suite 888, New York, NY 10003 USA

References

- Bahrick HP. Incidental learning under two incentive conditions. Journal of Experimental Psychology. 1954;47(3):170–172. doi: 10.1037/h0053619.

- Bakker A, Kirwan CB, Miller M, Stark CEL. Pattern separation in the human hippocampal CA3 and dentate gyrus. Science. 2008;319(5870):1640–1642. doi: 10.1126/science.1152882.

- Balleine BW, Dickinson A. Goal-directed instrumental action: contingency and incentive learning and their cortical substrates. Neuropharmacology. 1998;37(4):407–419. doi: 10.1016/s0028-3908(98)00033-1.

- Balleine BW, Daw ND, O'Doherty JP. Multiple forms of value learning and the function of dopamine. In: Glimcher PW, Camerer C, Poldrack RA, Fehr E, editors. Neuroeconomics: Decision Making and the Brain. Academic Press; 2008.

- Bar M. Visual Objects in Context. Nature Reviews Neuroscience. 2004;5(8):617–629. doi: 10.1038/nrn1476.

- Barraclough DJ, Conroy ML, Lee D. Prefrontal cortex and decision making in a mixed-strategy game. Nature Neuroscience. 2004;7(4):404–410. doi: 10.1038/nn1209.

- Bayer HM, Glimcher PW. Midbrain dopamine neurons encode a quantitative reward prediction error signal. Neuron. 2005;47(1):129–141. doi: 10.1016/j.neuron.2005.05.020.

- Behrens TE, Woolrich MW, Walton ME, Rushworth MF. Learning the value of information in an uncertain world. Nature Neuroscience. 2007;10(9):1214–1221. doi: 10.1038/nn1954.

- Bernacchia A, Seo H, Lee D, Wang X-J. A reservoir of time constants for memory traces in cortical neurons. Nature Neuroscience. 2011;14(3):366–372. doi: 10.1038/nn.2752.

- Bornstein AM, Daw ND. Multiplicity of control in the basal ganglia: Computational roles of striatal subregions. Current Opinion in Neurobiology. 2011;21(3):374–380. doi: 10.1016/j.conb.2011.02.009.

- Brainard DH. The Psychophysics Toolbox. Spatial vision. 1997;10(4):433–436.

- Cardinal RN, Parkinson JA, Hall J, Everitt BJ. Emotion and motivation: The role of the amygdala, ventral striatum, and prefrontal cortex. Neuroscience and Biobehavioral Reviews. 2002;26(3):321–352. doi: 10.1016/s0149-7634(02)00007-6.

- Cohen NJ, Eichenbaum H. Memory, Amnesia, and the Hippocampal System. Cambridge, MA: MIT Press; 1993.

- Corbit LH, Balleine BW. The role of the hippocampus in instrumental conditioning. Journal of Neuroscience. 2000;20(11):4233. doi: 10.1523/JNEUROSCI.20-11-04233.2000.

- Corkin S. What’s new with the amnesic patient H.M.? Nature Reviews Neuroscience. 2002;3(2):153–160. doi: 10.1038/nrn726.

- Corrado GS, Sugrue LP, Sebastian Seung H, Newsome WT. Linear-Nonlinear-Poisson Models of Primate Choice Dynamics. Journal of the Experimental Analysis of Behavior. 2005;84(3):581–617. doi: 10.1901/jeab.2005.23-05.

- Courville AC, Daw ND, Touretzky DS. Bayesian theories of conditioning in a changing world. Trends in Cognitive Sciences. 2006;10(7):294–300. doi: 10.1016/j.tics.2006.05.004.

- Curran T. Higher-Order Associative Learning in Amnesia: Evidence from the Serial Reaction Time Task. Journal of Cognitive Neuroscience. 1997;9(4):522–533. doi: 10.1162/jocn.1997.9.4.522.

- Davis DG, Staddon JER. Memory for Reward in Probabilistic Choice. Behaviour. 1990;114(1–4)

- Daw ND, Niv Y, Dayan P. Uncertainty-based competition between prefrontal and dorsolateral striatal systems for behavioral control. Nature Neuroscience. 2005;8(12):1704–1711. doi: 10.1038/nn1560.

- Daw ND, O’Doherty JP, Dayan P, Seymour B, Dolan RJ. Cortical substrates for exploratory decisions in humans. Nature. 2006;441(7095):876–879. doi: 10.1038/nature04766.

- Daw ND. Trial-by-trial data analysis using computational models. In: Phelps EA, Robbins TW, Delgado M, editors. Affect, Learning and Decision Making, Attention and Performance XXIII. Oxford University Press; 2010.

- Daw ND, Gershman SJ, Seymour B, Dayan P, Dolan RJ. Model-Based Influences on Humans’ Choices and Striatal Prediction Errors. Neuron. 2011;69(6):1204–1215. doi: 10.1016/j.neuron.2011.02.027.

- Dayan P, Kakade S, Montague PR. Learning and selective attention. Nature Neuroscience. 2000;3:1218–1223. doi: 10.1038/81504.

- Delgado MR, Nystrom L, Fissel C, Noll D, Fiez J. Tracking the Hemodynamic Responses to Reward and Punishment in the Striatum. Journal of Neurophysiology. 2000;84(6):3072–3077. doi: 10.1152/jn.2000.84.6.3072.

- Diba K, Buzsáki G. Forward and reverse hippocampal place-cell sequences during ripples. Nature Neuroscience. 2007;10:1241–1242. doi: 10.1038/nn1961.

- Dickinson A, Balleine BW. The role of learning in the operation of motivational systems. Stevens handbook of experimental psychology vol. 3: Learning, motivation and emotion. 2002:497–533.

- Doeller CF, King JA, Burgess N. Parallel striatal and hippocampal systems for landmarks and boundaries in spatial memory. Proceedings of the National Academy of Sciences of the United States of America. 2008;105(15):5915–5920. doi: 10.1073/pnas.0801489105.

- Doll BB, Jacobs WJ, Sanfey AG, Frank MJ. Instructional control of reinforcement learning: a behavioral and neurocomputational investigation. Brain Research. 2009;1299:74–94. doi: 10.1016/j.brainres.2009.07.007.

- Doya K. What are the computations of the cerebellum, the basal ganglia and the cerebral cortex? Neural Networks. 1999;12:961–974. doi: 10.1016/s0893-6080(99)00046-5.

- Drevets WC, Gautier C, Price JC, Kupfer DJ, Kinahan PE, Grace AA, Price JL, Mathis CA. Amphetamine-induced dopamine release in human ventral striatum correlates with euphoria. Biological Psychiatry. 2001;49:81–89. doi: 10.1016/s0006-3223(00)01038-6.

- Duncan K, Curtis C, Davachi L. Distinct memory signatures in the hippocampus: Intentional States distinguish match and mismatch enhancement signals. Journal of Neuroscience. 2009;29(1):131. doi: 10.1523/JNEUROSCI.2998-08.2009.

- Eichenbaum H. Hippocampus: Cognitive Processes and Neural Representations that Underlie Declarative Memory. Neuron. 2004;44:109–120. doi: 10.1016/j.neuron.2004.08.028.

- Epstein R, Kanwisher N. A cortical representation of the local visual environment. Nature. 1998;392(6676):598–601. doi: 10.1038/33402.

- Ferbinteanu J, Shapiro ML. Prospective and retrospective memory coding in the hippocampus. Neuron. 2003;40(6):1227–1239. doi: 10.1016/s0896-6273(03)00752-9.

- Fermin A, Yoshida T, Ito M, Yoshimoto J, Doya K. Evidence for model-based action planning in a sequential finger movement task. J Motor Behav. 2010;42:371–379. doi: 10.1080/00222895.2010.526467.

- Foerde K, Knowlton BJ, Poldrack RA. Modulation of competing memory systems by distraction. Proc. Natl. Acad. Sci. 2006;103:11778–11783. doi: 10.1073/pnas.0602659103.

- Frank MJ, Doll BB, Oas-Terpstra J, Moreno F. Prefrontal and striatal dopaminergic genes predict individual differences in exploration and exploitation. Nature Neuroscience. 2009;12(8):1062–1068. doi: 10.1038/nn.2342.

- Friston KJ, Josephs O, Rees G, Turner R. Nonlinear Event-Related Responses in fMRI. Magnetic Resonance in Medicine. 1998;39:41–52. doi: 10.1002/mrm.1910390109.

- Gallistel CR, Fairhurst S, Balsam PD. The learning curve: Implications of a quantitative analysis. Proc Natl Acad Sci. 2004;101:13124–13131. doi: 10.1073/pnas.0404965101.

- Gershman SJ, Pesaran B, Daw ND. Human reinforcement learning subdivides structured action spaces by learning effector-specific values. Journal of Neuroscience. 2009;29(43):13524–13531. doi: 10.1523/JNEUROSCI.2469-09.2009.

- Gläscher J, Büchel C. Formal learning theory dissociates brain regions with different temporal integration. Neuron. 2005;47(2):295–306. doi: 10.1016/j.neuron.2005.06.008.