ABSTRACT

BACKGROUND

Physician organizations (POs)—independent practice associations and medical groups—located in lower socioeconomic status (SES) areas may score poorly in pay-for-performance (P4P) programs.

OBJECTIVE

To examine the association between PO location and P4P performance.

DESIGN

Cross-sectional study; Integrated Healthcare Association’s (IHA’s) P4P Program, the largest non-governmental, multi-payer program for POs in the U.S.

PARTICIPANTS

160 POs participating in 2009.

MAIN MEASURES

We measured PO SES using established methods that involved geo-coding 11,718 practice sites within 160 POs to their respective census tracts and weighting tract-specific SES according to the number of primary care physicians at each site. P4P performance was defined by IHA’s program and was a composite mainly representing clinical quality, but also including measures of patient experience, information technology and registry use.

KEY RESULTS

The area-based PO SES measure ranged from −11 to +11 (mean 0, SD 5), and the IHA P4P performance score ranged from 23 to 86 (mean 69, SD 15). In bivariate analysis, there was a significant positive relationship between PO SES and P4P performance (p < 0.001). In multivariate analysis, a one standard deviation increase in PO SES was associated with a 44% increase (relative risk 1.44, 95%CI, 1.22-1.71) in the likelihood of a PO being ranked in the top two quintiles of performance (p < 0.001).

CONCLUSIONS

Physician organizations’ performance scores in a major P4P program vary by the SES of the areas in which their practice sites are located. P4P programs that do not account for this are likely to pay higher bonuses to POs in higher SES areas, thus increasing the resource gap between these POs and POs in lower SES areas, which may increase disparities in the care they provide.

KEY WORDS: physician organizations, independent practice associations, medical groups, pay-for-performance, quality, disparities

INTRODUCTION

Pay-for-performance (P4P) incentives continue to be the key strategy by which stakeholders align financial rewards with quality healthcare.1,2 Not only is the use of P4P incentives common, but recent health legislation mandates the continued use of these incentives. Increasingly, public and private health plans are experimenting with tying payment rewards to quality healthcare through “value-based purchasing” efforts, “alternative quality contracts,” and accountable care organizations.2–4 Understanding how P4P programs work is important for shaping payment policy as health reform continues to unfold.

One ongoing concern about the use of P4P programs is that they could widen resource gaps among provider organizations and thus exacerbate disparities in care.5 POs (medical groups and independent practice associations [IPAs]) located in lower socioeconomic status (SES) areas may be less able to obtain bonuses than those in higher SES areas for at least three reasons. First, POs in lower SES areas may treat patients who are less educated or less wealthy, and therefore less able to seek needed health information or follow recommended care. For example, it may be more difficult to obtain high rates of screening for diabetic retinopathy or cervical cancer for these patients.6,7 Second, lower SES areas are likely to have fewer resources than more affluent ones, including fewer specialist physicians, pharmacies, laboratories, imaging facilities and transportation options.8–13 Third, POs in lower SES areas may have fewer resources (financial and human) because they have a worse “payer mix” (i.e., a higher proportion of Medicaid and uninsured patients), receive lower payment rates from health plans, and/or have difficulty recruiting highly qualified physicians and staff.6,7,14–17

Recent research has demonstrated that hospitals and health plans located in less affluent areas score lower on the types of measures used in pay-for-performance programs,6,9,15,18 and that patients are less likely to receive care recommended by guidelines (e.g., screening mammograms) when they are cared for by individual physicians who care for higher percentages of patients of low SES.6,15,18 However, it is not known whether POs located in lower SES areas perform worse in large scale P4P programs.

We address this question using data from the California Integrated Healthcare Association (IHA), the largest non-governmental, multi-payer P4P program in the U.S.14–16 The IHA program represented a prevailing approach to P4P program design at the time, and tying bonuses to “all-or-nothing” achievement (i.e., payment only if a pre-determined quality threshold is crossed) is still the approach used by Medicare and most commercial health plans, even as some are beginning to combine P4P with global budgets.5,19,20 Since 2003, this program (which includes the seven largest health plans in California) has distributed between $38 million and $65 million in P4P bonuses annually to approximately 200 POs across the state.21

We used readily available SES information from the United States Census and geocoding techniques to characterize the SES of a PO, and to quantify the relationship between area-based SES and P4P performance. We hypothesized that POs with more primary care physicians in practice sites located within lower SES areas are less likely to score in the top tiers of performance within IHA’s P4P program than those located in higher SES areas.

METHODS

Setting and Participants

Our study included 160 of the 219 POs that participated in IHA’s P4P program in 2008 and 2009.21 IHA provided the name and address for the central administrative offices for 219 POs. We called POs directly and searched their websites to gather the additional information on the 11,718 practice sites we needed to calculate the area-based PO SES measure. We excluded 43 POs for which we were unable to identify practice sites or the number of primary care physicians at the sites, seven for which IHA did not receive sufficient information for calculating P4P scores, and two that did not provide their percent annual revenues from Medicaid. We also excluded five academic medical centers because they have access to funding not generated by clinical activities (e.g., research, graduate medical education) and therefore may be less affected when some of their practice sites are in low SES areas.22,23 Finally, we excluded two that underwent major organizational changes between 2008 and 2009 (e.g., went out of business).

The unit of analysis was the PO because that was the level at which P4P performance was assessed and bonus payments were made.

Measures

Area-based PO SES Measure

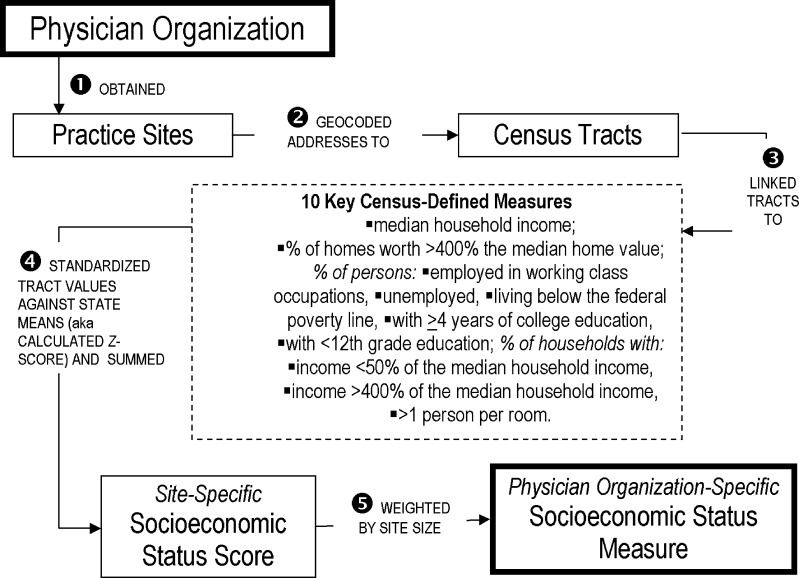

Our main predictor was an area-based PO SES measure based on Krieger’s area-based socioeconomic measure, a well-established method for characterizing the SES of a census tract.24–28 The area-based PO SES measure serves as a proxy for the characteristics of individual patients likely to be seen by a PO located in an area and also for the resources within that area (e.g., number of imaging facilities). To determine PO SES (Fig. 1), we first identified all 11,718 practice sites within the 160 POs, and then identified the number of PCPs at each site. Second, we geo-coded practice sites to corresponding census tracts29 and linked tracts to Krieger’s 10 census-defined variables. Third, we calculated a z-score for each of these 10 variables, standardizing values against those for California as a whole (i.e., if a census tract’s value for a variable was equivalent to the state’s average, then the census tract’s z-score for that variable was 0). We then added each tract’s z-scores together to generate a site-specific summary SES score; we weighted each census variable equally per previously described methodologies.24–28 We weighted the site-specific SES scores according to the proportion of the POs’ total number of primary care physicians at each site because practice sites varied in terms of size and because the P4P program is focused on primary care performance targets. We considered physicians to be primary care physicians if they practiced in any of the following specialties: general internal medicine, geriatrics, general practice, family practice or general pediatrics. These weighted site-specific scores were then summed to arrive at the area-based PO SES measure, oriented such that larger values corresponded to higher SES.

Figure 1.

Method for determining the area-based physician organization socioeconomic status (PO SES) measure.
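The weighting steps described above can be illustrated with a minimal Python sketch. It is not the authors' code: the two census variables, their state means and standard deviations, and the sign convention are hypothetical stand-ins for Krieger's 10 variables. They are chosen only to show how equally weighted z-scores are summed per tract and then combined across sites in proportion to each site's share of the PO's primary care physicians.

```python
# Hypothetical statewide reference values (NOT from the paper).
STATE_MEAN = {"pct_below_poverty": 14.0, "median_household_income": 60000.0}
STATE_SD = {"pct_below_poverty": 10.0, "median_household_income": 25000.0}
# Assumed orientation: +1 if a higher value means higher SES, -1 otherwise,
# so that larger summary scores correspond to higher SES.
SIGN = {"pct_below_poverty": -1, "median_household_income": 1}


def tract_ses_score(tract):
    """Sum equally weighted z-scores, standardized against the state values."""
    total = 0.0
    for var, value in tract.items():
        z = (value - STATE_MEAN[var]) / STATE_SD[var]
        total += SIGN[var] * z
    return total


def po_ses(sites):
    """Weight each site's tract score by its share of the PO's PCPs and sum.

    `sites` is a list of (number_of_pcps, tract_variables) pairs.
    """
    total_pcps = sum(n for n, _ in sites)
    return sum((n / total_pcps) * tract_ses_score(t) for n, t in sites)


# Two hypothetical practice sites belonging to one PO.
low_ses_tract = {"pct_below_poverty": 24.0, "median_household_income": 35000.0}
high_ses_tract = {"pct_below_poverty": 4.0, "median_household_income": 85000.0}
example_po = [(3, low_ses_tract), (1, high_ses_tract)]

print(po_ses(example_po))
```

In this toy example three of the PO's four PCPs practice in the lower SES tract, so the PO-level score is pulled toward that tract's negative value.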

IHA P4P Performance Score

Our main outcome variable was the composite performance score used in IHA’s P4P Program; this is the score used by health plans to calculate the P4P bonus payments they make to POs. The IHA P4P composite performance score comprised measures related to clinical quality (e.g., percent of eligible patients who were screened for breast, cervical and colorectal cancer), patient experience (e.g., percent of patients who indicated that their providers communicated well with patients), and use of information technology and registries (e.g., percentage of physicians using clinical decision support, whether registries were being used to coordinate care for patients with diabetes). IHA’s methodology for calculating the composite performance score is available on the IHA website, as is the total amount of bonuses paid by health plans annually.21,30

Covariates

We included three covariates: PO size (i.e., total number of PCPs, expressed in log base 10), because larger organizations may have more resources or may be better able to take advantage of economies of scale (e.g., install IT, support nurse care managers); PO type (i.e., medical group versus IPA), since there are some data to suggest that larger medical groups on average perform better than IPAs31; and percentage of annual revenues from Medicaid. We included the Medicaid variable in our analyses because POs in both high and low SES areas vary greatly in their willingness to accept Medicaid patients (hence the low correlation between our Medicaid and SES variables). The Medicaid variable may thus provide some additional information, beyond that contained in our SES measure, about the characteristics of some of the patients seen by the PO and about the resources available to it (because Medicaid pays physicians at very low rates).32

Statistical Analysis

We used a non-parametric trend test to initially explore the bivariate relationship between the area-based PO SES measure and IHA’s P4P performance score.33 To mimic the fact that bonus payments were triggered by a certain threshold of performance, we examined the likelihood of POs scoring in the top two quintiles of performance using bivariate and multivariate regression with modeling to estimate relative risks (RR) as described by Zou.34 Due to a highly skewed distribution, the dichotomous outcome for level of Diabetes Registry Use (range 0–5) was constructed as a score of 5 versus less than 5.
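For readers unfamiliar with the approach cited from Zou, the regression can be written out as below. This is a sketch under assumptions: the covariate coding mirrors the Covariates section, and the symbols are introduced here for illustration and do not appear in the original analysis.

```latex
\log \Pr(Y_i = 1 \mid \mathbf{x}_i)
  = \beta_0
  + \beta_1\,\mathrm{SES}_i
  + \beta_2\,\log_{10}(\mathrm{PCP}_i)
  + \beta_3\,\mathrm{MG}_i
  + \beta_4\,\mathrm{Medicaid}_i,
\qquad
\mathrm{RR}_j = \exp(\beta_j)
```

Here $Y_i = 1$ if PO $i$ ranks in the top two quintiles of performance, $\mathrm{MG}_i$ indicates a medical group (versus an IPA), and each $\mathrm{RR}_j$ is the relative risk associated with a one-unit increase in the corresponding covariate. In the modified Poisson approach, the coefficients are estimated with a Poisson regression applied to the binary outcome, and confidence intervals are based on a robust (sandwich) variance estimator.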

We conducted three sensitivity analyses. First, we examined the likely impact of our inclusion criteria by comparing the characteristics of the 160 POs included to those of the excluded POs using Wilcoxon rank-sum tests.35 Second, we examined the impact of area by varying the size of the area upon which the PO SES measure was based: practice site’s census tract only, tract plus all tracts within 1.5 to 10 miles, or tract plus all immediately contiguous tracts (generally broader than the 1.5- to 10-mile radius).36–39 These radii were based on prior research that shows that most patients seek primary care close to their homes, within a 1.5-mile radius in urban and suburban areas or a 10-mile radius in rural areas.36,37 Third, we conducted sensitivity analyses around levels that are typically used to determine whether a bonus payment would be triggered (i.e., top 25, 33, or 50% in addition to top 40%).
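The third sensitivity analysis amounts to re-deriving the dichotomous outcome at several cutoffs. The Python sketch below shows one way this could be done; the scores are hypothetical values within the observed 23 to 86 range, and the tie-handling rule (every PO at or above the cutoff score is flagged) is an assumption, not a documented IHA rule.

```python
import math


def top_fraction_flags(scores, fraction):
    """Flag scores that fall in the top `fraction` of the distribution.

    The cutoff is the k-th highest score, where k = floor(n * fraction);
    ties at the cutoff are all flagged (an assumption for this sketch).
    """
    n = len(scores)
    k = max(1, math.floor(n * fraction))
    cutoff = sorted(scores, reverse=True)[k - 1]
    return [s >= cutoff for s in scores]


# Hypothetical composite performance scores for ten POs.
scores = [23, 45, 52, 60, 66, 69, 73, 78, 82, 86]

for fraction in (0.25, 0.33, 0.40, 0.50):
    flags = top_fraction_flags(scores, fraction)
    print(f"top {fraction:.0%}: {sum(flags)} of {len(scores)} POs flagged")
```

Each set of flags would then serve as the outcome in a separate regression, which makes it possible to check whether the association with PO SES depends on where the bonus threshold is drawn.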

We used Stata version 11 (StataCorp, College Station, TX) for all analyses. The Institutional Review Boards of The University of Chicago, Weill Cornell Medical College, and Children’s Hospital of Boston approved this study.

Role of the Funding Source

This project was supported by a grant from the Robert Wood Johnson Foundation. The funding source had no role in the design, data collection, analysis, interpretation, decision to submit manuscript for publication, or manuscript preparation. Dr. Chien is supported by a Career Development Award (K08HS017146) from the Agency for Healthcare Research and Quality.

RESULTS

The 160 POs included 11,718 practice sites with 21,831 PCPs (Table 1). Within these POs, the total number of PCPs ranged from 6 to 1,248 (mean 136, SD 156) and the total number of practice sites ranged from 1 to 727 (mean 73, SD 104). Fifty-seven percent of the POs were IPAs; 43% were medical groups. Half of the POs received no revenue from Medicaid; among the POs that did, the mean reported percentage of total revenue from Medicaid-insured patients was 10% (SD 16%, range 0.2% to 80%). The area-based PO SES measure ranged from −11 to +11 (mean 0, SD 5; range for all census tracts within California was −24 to +26). POs’ composite performance scores ranged from 23 to 86 (mean 69, SD 15; possible range 0–100).

Table 1.

Physician Organization Characteristics, Socioeconomic Status, and Composite Pay-for-Performance (P4P) Performance Score

| Characteristic | POs, N (%) | Number of Primary Care Physicians per PO, Mean (SD, Range) | Area-Based PO SES Measure, Mean (SD, Range) | IHA’s Composite P4P Performance Score, Mean (SD, Range) |
|---|---|---|---|---|
| Overall | 160 (100%) | 136 (156, 6 to 1,248) | 0 (5, −11 to +11) | 69 (15, 23 to 86) |
| By Size (Number of Primary Care Physicians) | | | | |
| 1st Quartile | 41 (26) | 28 (13) | −1 (5) | 60 (18) |
| 2nd Quartile | 39 (24) | 69 (13) | 1 (5) | 70 (15) |
| 3rd Quartile | 40 (25) | 120 (18) | −1 (4) | 73 (13) |
| 4th Quartile | 40 (25) | 330 (207) | 1 (4) | 74 (11) |
| By Type | | | | |
| Independent Practice Association | 91 (57) | 168 (185) | 0 (5) | 66 (15) |
| Medical Group | 69 (43) | 94 (90) | 0 (5) | 74 (15) |
| By Percent of Annual Revenue from Medicaid | | | | |
| 0% | 79 (49) | 125 (126) | 0 (4) | 68 (14) |
| >0-1% | 28 (18) | 147 (226) | 2 (4) | 78 (9) |
| >1-6% | 27 (17) | 141 (147) | −1 (5) | 74 (16) |
| >6% | 26 (16) | 154 (163) | −2 (4) | 59 (18) |
| By Area-Based PO SES Measure | | | | |
| 1st Quintile - LOW | 32 (20) | 121 (147) | −7 (2) | 62 (18) |
| 2nd Quintile | 32 (20) | 167 (221) | −3 (1) | 65 (17) |
| 3rd Quintile | 32 (20) | 130 (155) | 0 (1) | 73 (11) |
| 4th Quintile | 32 (20) | 127 (133) | 2 (1) | 71 (16) |
| 5th Quintile - HIGH | 32 (20) | 138 (106) | 6 (2) | 76 (11) |

P4P = Pay-for-Performance; PO = Physician Organization; SES = Socioeconomic Status; IHA = Integrated Healthcare Association

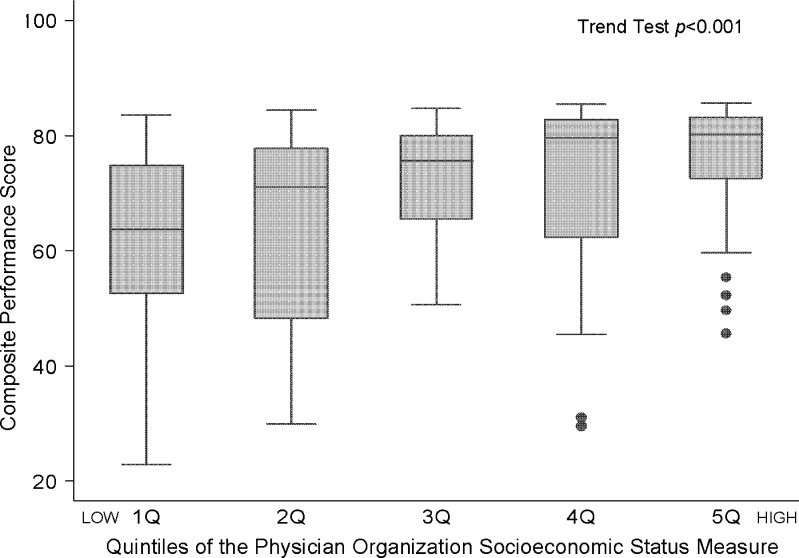

The bivariate relationship between the area-based PO SES measure and the composite performance score was significant (trend test p < 0.001); POs in higher SES areas had better performance scores (Fig. 2). POs located in the highest SES quintile had a median performance score nearly 20 points higher than those located in the lowest quintile. However, four POs within the lowest SES quintile scored in the highest quintile of P4P performance, and four POs within the highest SES quintile scored quite low. In multivariate analysis, the PO SES measure continued to be significantly associated with the IHA P4P performance score (Table 2). A single standard deviation increase in the area-based PO SES measure (about 5 points) was associated with a 44% greater likelihood (RR 1.44, 95% CI 1.22-1.71, p < 0.001) of the PO being ranked in the top 40% of performance. As expected, the likelihood of a PO ranking in the top 40% was also higher for larger POs than for smaller (RR 2.55 for a PO with ten times more physicians; 95% CI 1.67-3.90, p < 0.001) and for medical groups compared to IPAs (RR 2.93, 95% CI 2.00-4.28, p < 0.001). POs with higher percentages of revenue from Medicaid were less likely to rank in the top two performance quintiles (RR 0.68, 95% CI 0.50-0.93, p = 0.017).

Figure 2.

Bivariate relationship between the physician organization socioeconomic status measure and the Integrated Healthcare Association’s composite P4P performance score.

Table 2.

Bivariate and Multivariate Relationships between Physician Organization Socioeconomic Status and Performance in Integrated Healthcare Association’s Pay-for-Performance Program

| Physician Organization Characteristic | Unadjusted (bivariate) likelihood of ranking in the Top 40%, RR (95% CI) | Adjusted (multivariate) likelihood of ranking in the Top 40%, RR (95% CI) |
|---|---|---|
| Area-Based Physician Organization Socioeconomic Status Measure (PO SES) per 1 SD increase | 1.63 (1.36, 1.94)*** | 1.44 (1.22, 1.71)*** |
| Size - Log Base 10 of the Number of Primary Care Physicians | | 2.55 (1.67, 3.90)*** |
| Type - Medical Group (referent is Independent Practice Association) | | 2.93 (2.00, 4.28)*** |
| Percent of Annual Revenues from Medicaid per 10% increase | | 0.68 (0.50, 0.93)* |

N = 160; RR = Relative Risk; CI = Confidence Interval; SD = Standard Deviation; *p < 0.05, **p < 0.01, ***p < 0.001
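Because the relative risks in Table 2 come from a log-linear model, a per-unit RR can be rescaled to other increments by exponentiation. The short Python sketch below works through two such conversions. The rescaled values are illustrative arithmetic only, they assume the log-linear relationship holds across the range considered, and they are not results reported by the study.

```python
import math


def rescale_rr(rr_per_unit, units):
    """Relative risk for a change of `units`, assuming a log-linear model."""
    return rr_per_unit ** units


# Adjusted RR per 1 SD of PO SES is 1.44, so a 2 SD difference implies 1.44 ** 2.
rr_ses_two_sd = rescale_rr(1.44, 2)

# Adjusted RR per tenfold increase in PCPs is 2.55 (size is in log base 10),
# so doubling the number of PCPs corresponds to log10(2) units.
rr_size_doubling = rescale_rr(2.55, math.log10(2))

print(round(rr_ses_two_sd, 2), round(rr_size_doubling, 2))
```

Read this way, the size coefficient describes a tenfold difference in PCPs, so a merely doubled PO would be expected to show a much smaller relative advantage.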

We obtained similar results when we conducted multivariate analyses using the scores for individual domains of the composite performance score as the outcome variables. (Table 2.)

In sensitivity analyses, we found these associations to be robust whether we based the area-based PO SES measure on the practice sites’ census tract, the practice’s census tract plus all tracts within a 1.5- or 10-mile radius, or the site’s census tract plus immediately contiguous census tracts (data not shown). The associations were also robust to varying the cutoff threshold for the IHA P4P performance score (top 25, 33, or 50%). In the comparison of included and excluded POs, excluded POs reported a significantly higher percentage of their revenues from Medicaid (median was 43% for excluded POs versus 0.3% for the included POs; p < 0.001) and had lower composite performance scores (mean was 49 for excluded POs versus 69 for included POs; p < 0.001).

DISCUSSION

We found a significant association between the SES of the census tracts in which POs are located and their performance in IHA’s P4P program. This association was robust across three different methods for defining the areas in which a PO is located, and at a variety of cut-points for awarding bonuses in P4P programs.

To our knowledge this is the first study to show that an area-based SES measure is associated with P4P performance for large medical groups and independent practice associations; its impact on hospital P4P has been demonstrated recently.9

This finding may be of particular interest to rank-and-file physicians because they will be increasingly subject to performance incentives as a part of how they are reimbursed for their work. Tying rewards or sanctions to healthcare quality through pay-for-performance or public reporting programs remains a core strategy in healthcare reform efforts; these incentives are featured prominently in the Patient Protection and Affordable Care Act (ACA) and will be relied upon to counterbalance incentives aimed primarily at controlling costs.1 While early experience suggests that performance incentives may improve care quality, there are concerns that these programs may also inadvertently penalize providers serving more disadvantaged populations and thus potentially widen disparities in the quality of care.5,10,40–45

This finding is also of interest because it suggests that the healthcare “neighborhood” may matter even for the large organizations included in the IHA P4P program—large medical groups and IPAs thought to constitute an important foundation upon which the accountable care organizations featured in health care reform can be built.46–48

We found a significant association between the SES of the areas in which a PO is located and the PO’s performance on quality metrics in both bivariate and multivariate analyses. From an immediate policy point of view, the bivariate result, which shows a somewhat larger “effect” of SES, is most relevant because this is the manner in which payments are disbursed. If policymakers are concerned that their P4P program may be increasing resource gaps between POs in higher and lower SES areas, they first need to know whether POs in lower SES areas have lower performance scores, regardless of their other characteristics. The bivariate analysis provides this information. The multivariate analysis illustrates the strength of this relationship after accounting for other important explanatory factors.

Our study has six main limitations. First, the POs in the IHA program are quite large; it is possible that the association between performance and area-based SES is different for small physician practices. Second, IHA’s P4P program operates only in California, which differs from most of the U.S. in that it has a large number of large medical groups and IPAs, more capitation and more delegation of utilization management from health plans to POs; findings in this setting may or may not be generalizable to other parts of the country. Third, our analysis does not include detailed information about individual patients or about specific resources in the areas being served by POs. A multi-level analysis (one that includes patient-level, PO-level, and area-level variables) would be needed in order to better determine the relative contribution of each factor. Fourth, we had to exclude 59 of the 219 eligible POs. Excluded POs served more Medicaid patients and performed less well in the P4P program. If we had been able to include these POs, it is likely that the finding of an association between the area-based PO SES measure and performance would have been even stronger. Fifth, although IHA’s program represents a prevailing approach to P4P program design, alternative tactics (e.g., those attempting to reward incremental improvements rather than all-or-nothing achievement) may yield different results.49,50 Sixth, P4P may have a different effect if achievement levels are set lower versus higher, although in our study results did not change at a variety of achievement levels.

Our data do not address the mechanisms by which POs in lower SES areas score lower on IHA’s performance measure. It is possible that these POs deliver poorer quality care, and/or that it is more difficult for POs in low SES areas to score well, even when they deliver high quality care, for the reasons discussed in the introduction to this article. Regardless of the mechanism, P4P programs that fail to account for the SES of the areas in which providers are located risk increasing resource gaps between providers in high versus low SES areas, and thus increasing disparities in health care delivery.

Public and private payers may want to consider alternative designs for P4P programs to make them less likely to increase disparities, and medical groups and IPAs may want to encourage these alternative designs. One approach would be to adjust for the SES of the areas a PO serves through risk adjustment or by placing POs into strata based on their SES score and paying P4P bonuses based on comparisons within the same stratum.5 Further work is needed to better understand how alternative incentive strategies may affect providers or which structural features are important for POs aiming to improve quality while being located in lower SES settings.17,50
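The stratified design mentioned above can be sketched in a few lines of Python. This is a hypothetical illustration, not a description of any existing program: the number of strata, the 40% bonus fraction, and the PO names and scores are all assumptions made for the example.

```python
def within_stratum_winners(pos, n_strata=5, fraction=0.4):
    """Return the names of POs in the top `fraction` of their own SES stratum.

    `pos` is a list of (name, ses_score, performance_score) tuples. POs are
    sorted by SES and split into `n_strata` groups of near-equal size.
    """
    ordered = sorted(pos, key=lambda p: p[1])
    n = len(ordered)
    winners = set()
    for s in range(n_strata):
        stratum = ordered[s * n // n_strata:(s + 1) * n // n_strata]
        if not stratum:
            continue
        k = max(1, int(len(stratum) * fraction))
        best = sorted(stratum, key=lambda p: p[2], reverse=True)[:k]
        winners.update(name for name, _, _ in best)
    return winners


# Hypothetical POs: five in lower SES areas (L1-L5), five in higher (H1-H5).
example_pos = [
    ("L1", -7, 50), ("L2", -6, 55), ("L3", -5, 60), ("L4", -4, 62), ("L5", -3, 65),
    ("H1", 3, 70), ("H2", 4, 72), ("H3", 5, 75), ("H4", 6, 80), ("H5", 7, 85),
]

pooled = within_stratum_winners(example_pos, n_strata=1)
stratified = within_stratum_winners(example_pos, n_strata=2)
print(sorted(pooled), sorted(stratified))
```

In this toy data set a pooled comparison rewards only POs in higher SES areas, whereas comparing within strata rewards the best performers in each group. Whether that trade-off is desirable is a policy question that the sketch does not settle.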

Another option would be to design P4P programs to de-emphasize absolute thresholds (i.e., only paying providers for reaching a certain level of performance) and tournaments (where providers earn rewards for scoring higher than their competitors), and emphasize rates of relative improvement.49,51,52 In fact, in 2009, IHA suggested a methodology that gives performance attainment and relative improvement more equal weighting; since then, three of the seven participating health plans have adopted this recommendation. This strategy would not eliminate the possibility that P4P programs would increase disparities, but could mitigate it.

In summary, this paper supports the hypothesis that the SES of the locations in which a medical group or IPA’s physicians care for patients is likely to be associated with how well the PO performs in P4P programs. The strengths of this paper include the diversity of POs with respect to their area-based SES, and the use of well-established methodologies for defining area SES and for measuring P4P performance. As policymakers and researchers devote increasing attention to the reasons why POs in lower SES areas have lower scores on P4P quality measures, and to ways of redesigning P4P programs, it may be possible to reduce, or at least not increase, disparities in health care delivery between richer and poorer areas. Physicians will want to learn how some POs located in low SES areas are nevertheless able to provide high quality care, as four POs were able to do in our study. Future studies should evaluate the relative contributions of patient-, practice-, and area-level factors when assessing healthcare performance.

Acknowledgements

Special thanks to Talia Walker and Caitlin Rideout for their assistance.

Funding

This project was supported by a grant from the Robert Wood Johnson Foundation.

Prior presentations

The National Pay for Performance Summit, March 24, 2011; AcademyHealth Annual Research Meeting, June 12, 2011

Conflicts of Interest

Dr. Chien does not have any conflicts of interest.

Ms. Wroblewski does not have any conflicts of interest.

Dr. Damberg does not have any conflicts of interest.

Dr. Williams does not have any conflicts of interest. His employer, Integrated Healthcare Association, a non-profit, has the following conflicts of interest: Consultancies: Keenan Advisory Board; Grants received: Sanofi-Aventis, 11/2008.

Ms. Yanagihara does not have any conflicts of interest. Her employer, Integrated Healthcare Association, a non-profit, has the following conflicts of interest: Consultancies: Keenan Advisory Board; Grants received: Sanofi-Aventis, 11/2008.

Ms. Yakunina does not have any conflicts of interest. Her former employer, Integrated Healthcare Association, a non-profit, has the following conflicts of interest: Consultancies: Keenan Advisory Board; Grants received: Sanofi-Aventis, 11/2008.

Dr. Casalino does not have any conflicts of interest.

REFERENCES

- 1.H.R. 3590 --111th Congress. Patient Protection and Affordable Care Act. United States of America; 2009.

- 2.Shortell SM, Casalino LP. Health care reform requires accountable care systems. JAMA. 2008;300(1):95–97. doi: 10.1001/jama.300.1.95. [DOI] [PubMed] [Google Scholar]

- 3.Rosenthal MB, Landon BE, Normand SL, Frank RG, Epstein AM. Pay for performance in commercial HMOs. N Engl J Med. 2006;355(18):1895–1902. doi: 10.1056/NEJMsa063682. [DOI] [PubMed] [Google Scholar]

- 4.Chernew ME, Mechanic RE, Landon BE, Safran DG. Private-payer innovation in Massachusetts: the ‘Alternative Quality Contract’. Health Aff (Millwood) 2011;30(1):51–61. doi: 10.1377/hlthaff.2010.0980. [DOI] [PubMed] [Google Scholar]

- 5.Chien AT, Chin MH, Davis AM, Casalino LP. Pay for performance, public reporting, and racial disparities in health care: how are programs being designed? Med Care Res Rev. 2007;64(5 Suppl):283S–304S. doi: 10.1177/1077558707305426. [DOI] [PubMed] [Google Scholar]

- 6.Franks P, Fiscella K. Effect of patient socioeconomic status on physician profiles for prevention, disease management, and diagnostic testing costs. Med Care. 2002;40(8):717–724. doi: 10.1097/00005650-200208000-00011. [DOI] [PubMed] [Google Scholar]

- 7.Reschovsky JD, O’Malley AS. Do primary care physicians treating minority patients report problems delivering high-quality care? Health Aff (Millwood) 2008;27(3):w222–w231. doi: 10.1377/hlthaff.27.3.w222. [DOI] [PubMed] [Google Scholar]

- 8.Subramanian SV, Chen JT, Rehkopf DH, Waterman PD, Krieger N. Comparing individual- and area-based socioeconomic measures for the surveillance of health disparities: A multilevel analysis of Massachusetts births, 1989–1991. Am J Epidemiol. 2006;164(9):823–834. doi: 10.1093/aje/kwj313. [DOI] [PubMed] [Google Scholar]

- 9.Blustein J, Borden WB, Valentine M. Hospital performance, the local economy, and the local workforce: findings from a US National Longitudinal Study. PLoS Med. 2010;7(6):e1000297. PMCID: PMC2893955. [DOI] [PMC free article] [PubMed]

- 10.Bach PB, Pham HH, Schrag D, Tate RC, Hargraves JL. Primary care physicians who treat blacks and whites. N Engl J Med. 2004;351(6):575–584. doi: 10.1056/NEJMsa040609. [DOI] [PubMed] [Google Scholar]

- 11.Rosenblatt RA, Andrilla CHA, Curtin T, Hart LG. Shortages of medical personnel at community health centers: implications for planned expansion. JAMA. 2006;295(9):1042–1049. doi: 10.1001/jama.295.9.1042. [DOI] [PubMed] [Google Scholar]

- 12.National Healthcare Disparities Report, 2010. Rockville, MD: U.S. Department of Health and Human Services; 2011. [Google Scholar]

- 13.National Healthcare Disparities Report, 2003. Rockville, MD: U.S. Department of Health and Human Services; 2003. [Google Scholar]

- 14.Hong CS, Atlas SJ, Chang Y, et al. Relationship between patient panel characteristics and primary care physician clinical performance rankings. JAMA. 2010;304(10):1107–1113. doi: 10.1001/jama.2010.1287. [DOI] [PubMed] [Google Scholar]

- 15.Franks P, Fiscella K, Beckett L, Zwanziger J, Mooney C, Gorthy S. Effects of patient and physician practice socioeconomic status on the health care of privately insured managed care patients. Med Care. 2003;41(7):842–852. doi: 10.1097/00005650-200307000-00008. [DOI] [PubMed] [Google Scholar]

- 16.Mehta RH, Liang L, Karve AM, et al. Association of patient case-mix adjustment, hospital process performance rankings, and eligibility for financial incentives. JAMA. 2008;300(16):1897–1903. doi: 10.1001/jama.300.16.1897. [DOI] [PubMed] [Google Scholar]

- 17.Young G, Meterko M, White B, et al. Pay-for-performance in safety net settings: issues, opportunities, and challenges for the future. J Healthc Manag. 2010;55(2):132–141. [PubMed] [Google Scholar]

- 18.Pham HH, Schrag D, Hargraves JL, Bach PB. Delivery of preventive services to older adults by primary care physicians. JAMA. 2005;294(4):473–481. doi: 10.1001/jama.294.4.473. [DOI] [PubMed] [Google Scholar]

- 19.The Leapfrog Group. The Leapfrog Group fact sheet. http://www.leapfroggroup.org/leapfrog-factsheet. Accessed November 16, 2011.

- 20.Center for Health Care Strategies, Centers for Medicare and Medicaid Services. Descriptions of selected performance incentives programs. http://www.chcs.org/usr_doc/State_Performance_Incentive_Chart_0206.pdf. Accessed November 16, 2011.

- 21.Integrated Healthcare Association. The California Pay for Performance Program. http://www.iha.org/p4p_california.html. Accessed November 16, 2011.

- 22.Andreae MC, Blad K, Cabana MD. Physician compensation programs in academic medical centers. Health Care Manage Rev. 2006;31(3):251–258. doi: 10.1097/00004010-200607000-00011. [DOI] [PubMed] [Google Scholar]

- 23.Ayanian JZ, Weissman JS. Teaching hospitals and quality of care: a review of the literature. Milbank Q. 2002;80(3):569–593. doi: 10.1111/1468-0009.00023. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Krieger N, Chen JT, Waterman PD, Rehkopf DH, Subramanian SV. Painting a truer picture of US socioeconomic and racial/ethnic health inequalities: the Public Health Disparities Geocoding Project. Am J Public Health. 2005;95(2):312–323. doi: 10.2105/AJPH.2003.032482. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Krieger N, Chen JT, Waterman PD, Rehkopf DH, Subramanian SV. Race/ethnicity, gender, and monitoring socioeconomic gradients in health: a comparison of area-based socioeconomic measures--the Public Health Disparities Geocoding Project. Am J Public Health. 2003;93(10):1655–1671. doi: 10.2105/AJPH.93.10.1655. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Erickson SE, Iribarren C, Tolstykh IV, Blanc PD, Eisner MD. Effect of race on asthma management and outcomes in a large, integrated managed care organization. Arch Intern Med. 2007;167(17):1846–1852. doi: 10.1001/archinte.167.17.1846.

- 27.Coberley CR, Puckrein GA, Dobbs AC, McGinnis MA, Coberley SS, Shurney DW. Effectiveness of disease management programs on improving diabetes care for individuals in health-disparate areas. Dis Manag. 2007;10(3):147–155. doi: 10.1089/dis.2007.641.

- 28.Pollack LA, Gotway CA, Bates JH, et al. Use of the spatial scan statistic to identify geographic variations in late stage colorectal cancer in California (United States). Cancer Causes Control. 2006;17(4):449–457. doi: 10.1007/s10552-005-0505-1.

- 29.Information Technology Laboratory, National Institute of Standards and Technology. Federal Information Processing Standards publications. http://www.itl.nist.gov/fipspubs/. Accessed November 16, 2011.

- 30.Integrated Healthcare Association. The California Pay for Performance Program financial transparency. http://www.iha.org/financial_transparency.html. Accessed November 16, 2011.

- 31.Mehrotra A, Epstein AM, Rosenthal MB. Do integrated medical groups provide higher-quality medical care than individual practice associations? Ann Intern Med. 2006;145(11):826–833. doi: 10.7326/0003-4819-145-11-200612050-00007.

- 32.Cunningham PJ, Hadley J. Effects of changes in incomes and practice circumstances on physicians’ decisions to treat charity and Medicaid patients. Milbank Q. 2008;86(1):91–123. doi: 10.1111/j.1468-0009.2007.00514.x.

- 33.Cuzick J. A Wilcoxon-type test for trend. Stat Med. 1985;4(1):87–90. doi: 10.1002/sim.4780040112.

- 34.Zou G. A modified Poisson regression approach to prospective studies with binary data. Am J Epidemiol. 2004;159(7):702–706. doi: 10.1093/aje/kwh090.

- 35.Wilcoxon F. Individual comparisons by ranking methods. Biometrics. 1945;1:80–83. doi: 10.2307/3001968.

- 36.Openshaw S, Taylor PJ. The modifiable areal unit problem. In: Wrigley N, Bennett RJ, editors. Quantitative geography: a British view. London: Routledge and Kegan Paul; 1981.

- 37.Flowerdew R, Manley DJ, Sabel CE. Neighbourhood effects on health: does it matter where you draw the boundaries? Soc Sci Med. 2008;66(6):1241–1255. doi: 10.1016/j.socscimed.2007.11.042.

- 38.Billi JE, Pai CW, Spahlinger DA. The effect of distance to primary care physician on health care utilization and disease burden. Health Care Manage Rev. 2007;32(1):22–29. doi: 10.1097/00004010-200701000-00004.

- 39.Brooks CH. Associations among distance, patient satisfaction, and utilization of two types of inner-city clinics. Med Care. 1973;11(5):373–383. doi: 10.1097/00005650-197309000-00002.

- 40.Dudley RA, Frolich A, Robinowitz DL, et al. Strategies to support quality-based purchasing: a review of the evidence. Rockville, MD: Agency for Healthcare Research and Quality; 2004. AHRQ Publication No. 04-0057.

- 41.Friedberg MW, Safran DG, Coltin K, Dresser M, Schneider EC. Paying for performance in primary care: potential impact on practices and disparities. Health Aff (Millwood). 2010;29(5):926–932. doi: 10.1377/hlthaff.2009.0985.

- 42.Karve AM, Ou FS, Lytle BL, Peterson ED. Potential unintended financial consequences of pay-for-performance on the quality of care for minority patients. Am Heart J. 2008;155(3):571–576. doi: 10.1016/j.ahj.2007.10.043.

- 43.Petersen LA, Woodard LD, Urech T, Daw C, Sookanan S. Does pay-for-performance improve the quality of health care? Ann Intern Med. 2006;145(4):265–272. doi: 10.7326/0003-4819-145-4-200608150-00006.

- 44.Rosenthal MB, Frank RG, Li Z, Epstein AM. Early experience with pay-for-performance: from concept to practice. JAMA. 2005;294(14):1788–1793. doi: 10.1001/jama.294.14.1788.

- 45.Werner RM, Goldman LE, Dudley RA. Comparison of change in quality of care between safety-net and non-safety-net hospitals. JAMA. 2008;299(18):2180–2187. doi: 10.1001/jama.299.18.2180.

- 46.Casalino LP, Devers KJ, Lake TK, Reed M, Stoddard JJ. Benefits of and barriers to large medical group practice in the United States. Arch Intern Med. 2003;163(16):1958–1964. doi: 10.1001/archinte.163.16.1958.

- 47.Shortell SM, Casalino LP. Implementing qualifications criteria and technical assistance for accountable care organizations. JAMA. 2010;303(17):1747–1748. doi: 10.1001/jama.2010.575.

- 48.Hing E, Burt CW. Office-based medical practices: methods and estimates from the National Ambulatory Medical Care Survey. Adv Data. 2007;383:1–16.

- 49.Chien AT, Li Z, Rosenthal MB. Improving timely childhood immunizations through pay for performance in Medicaid-managed care. Health Serv Res. 2010;45(6 Pt 2):1934–1947. doi: 10.1111/j.1475-6773.2010.01168.x.

- 50.Chien AT, Eastman D, Li Z, Rosenthal MB. Impact of a pay for performance program to improve diabetes care in the safety net. Preventive Medicine, forthcoming.

- 51.Mehrotra A, Sorbero ME, Damberg CL. Using the lessons of behavioral economics to design more effective pay-for-performance programs. Am J Manag Care. 2010;16(7):497–503.

- 52.Hayward RA. All-or-nothing treatment targets make bad performance measures. Am J Manag Care. 2007;13(3):126–128.