Abstract

To investigate how hippocampal neurons encode sound stimuli, and the conjunction of sound stimuli with the animal's position in space, we recorded from neurons in the CA1 region of hippocampus in rats while they performed a sound discrimination task. Four different sounds were used, two associated with water reward on the right side of the animal and the other two with water reward on the left side. This allowed us to separate neuronal activity related to sound identity from activity related to response direction. To test the effect of spatial context on sound coding, we trained rats to carry out the task on two identical testing platforms at different locations in the same room. Twenty-one percent of the recorded neurons exhibited sensitivity to sound identity, as quantified by the difference in firing rate for the two sounds associated with the same response direction. Sensitivity to sound identity was often observed on only one of the two testing platforms, indicating an effect of spatial context on sensory responses. Forty-three percent of the neurons were sensitive to response direction, and the probability that any one neuron was sensitive to response direction was statistically independent from its sensitivity to sound identity. There was no significant coding for sound identity when the rats heard the same sounds outside the behavioral task. These results suggest that CA1 neurons encode sound stimuli, but only when those sounds are associated with actions.

Keywords: episodic memory, systems neuroscience, interneurons, auditory objects, pyramidal cells, electrophysiology, mutual information, sensory representation

Hippocampal neurons represent the animal's location in the environment (Leutgeb et al. 2004, 2005a, 2005b; O'Keefe 1976; O'Keefe and Burgess 1996; O'Keefe and Nadel 1978; Wills et al. 2005; Wilson and McNaughton 1993). A growing body of work documents that hippocampal neurons also represent individual sensory stimuli and other nonspatial events (Christian and Deadwyler 1986; Eichenbaum et al. 1987; Hampson et al. 1999, 2004; Ho et al. 2011; Hok et al. 2007; Itskov et al. 2011; Komorowski et al. 2009; Lenck-Santini et al. 2008; Manns and Eichenbaum 2009; Moita et al. 2003; Wiener et al. 1989; Wood et al. 1999). Since hippocampus contains a prominent representation of location in space, one can specify and quantify a stimulus representation only after teasing it apart from head and body position-dependent responses associated with the stimulus-guided behavior. Despite the body of work demonstrating the representation of nonspatial, sensory stimuli in the hippocampus, few studies have focused on the distinction between spatial representation and stimulus representation.

We recently demonstrated that hippocampus contains a robust representation of tactile stimuli: many neurons fired at different rates when the rat encountered surfaces of different roughness even when the action associated with those stimuli was identical (Itskov et al. 2011). Still, in separate locations within the same experimental room, independent populations of neurons responded to the same tactile stimuli, indicating that responses to the tactile stimuli were modulated by the location of the animal. We also found that the explicit behavior of the animal (the direction of turn associated with reward) and tactile stimuli were represented by independent populations of neurons. The goal of the current work is to confirm and extend the previous results from tactile stimuli to sound stimuli and to further elaborate on principles underlying the neuronal representation of sounds in the hippocampus.

We therefore trained animals to associate four different sounds with two possible response directions. Because the behavioral responses to the two different stimuli associated with the same response direction were identical, any difference in the neuronal activity evoked by these two stimuli must reflect the coding of stimulus identity, rather than some aspect of behavior. Our experimental design allowed us to “isolate” stimulus-specific auditory responses and to examine how such sensory responses depend on spatial and behavioral context.

MATERIALS AND METHODS

Ethics statement.

All experiments were conducted in accordance with National Institutes of Health, international, and institutional standards for the care and use of animals in research and were approved by the Bioethics Committee of the International School for Advanced Studies (permit n.5575-III/14) and supervised by a consulting veterinarian.

Subjects.

Three male Wistar rats weighing about 350 g were housed together and maintained on a 14:10-h light-dark cycle. To ensure that the animals did not suffer dehydration as a consequence of water restriction, they were allowed to continue the behavioral testing to satiation (250–350 trials per session) and were given access ad libitum to drinking water for 1 h after the end of each training session. The animals' body weight and general state of health were monitored throughout.

Stimuli.

Artificial vowels were chosen as a more “naturalistic” class of stimuli than pure tones. Artificial vowels are a simplified version of vocalization sounds used by many species of mammals and have been used in studies of the ascending auditory pathway from the auditory nerve (Cariani and Delgutte 1996; Holmberg and Hemmert 2004) to the auditory cortex (Bizley et al. 2009, 2010). The spectra of natural vowels are characterized by “formant” peaks that result from resonances in the vocal tract of the vocalizing animal (Schnupp et al. 2011). Formant peaks therefore carry information about both the size and the configuration of the vocal tract, and human listeners readily categorize vowels according to vowel type (e.g., /a/ vs. /o/) (Peterson and Barney 1952), as well as according to speaker type (e.g., male vs. female voice) or speaker identity (Gelfer and Mikos 2005). Many species of animals, including rats (Eriksson and Villa 2006), chinchillas (Burdick and Miller 1975), cats (Dewson 1964), monkeys (Kuhl 1991), and many bird species (Dooling and Brown 1990; Kluender et al. 1987) readily learn to discriminate synthetic vowels.

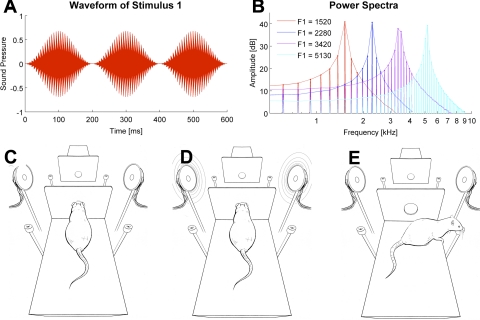

In the present study we used single formant artificial vowels, which were generated using binary click trains at the fundamental frequency of 152 Hz and 200-ms duration and were bandpass filtered with a bandwidth equal to 1/50th of the formant frequency using Malcolm Slaney's Auditory Toolbox (http://cobweb.ecn.purdue.edu/malcolm/interval/1998-010/). Formant center frequencies were 1,520, 2,280, 3,420, and 5,130 Hz for stimuli 1–4, respectively. The spectra of all four stimuli are shown in Fig. 1B. The formant frequencies chosen here lie below the point of maximum sensitivity in the rat's audiogram (Heffner et al. 1994), but previous work (Eriksson and Villa 2006) has shown that rats can easily learn to categorize vowels that are distinguished by formant peaks entirely below 4 kHz. The stimuli were ramped on and off using a Hanning window and played three times in succession (see Fig. 1A; sound stimuli are in Supplemental Files S1–S4) such that the stimuli presented to the animals were 600 ms long. (Supplemental data for this article is available online at the Journal of Neurophysiology website.) The stimuli were presented at a sound level of 70 dB SPL simultaneously from two speakers located symmetrically on both sides of the platforms, so the sound level at the position of the animal's ears was equal when the animal was in the “nose poke” position at the start of the trial (see Fig. 1D).
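The synthesis steps described above (click train, band-pass filtering, on/off ramps, three repetitions) can be illustrated with a minimal Python sketch. It is not the authors' Matlab code: a two-pole resonator stands in for the Auditory Toolbox filter, and the sampling rate (`fs`), the 10-ms ramp duration (`ramp_s`), and the function names are assumptions made only for illustration.

```python
import math

def artificial_vowel(formant_hz, f0_hz=152.0, dur_s=0.2, fs=44100, ramp_s=0.01):
    """One 200-ms single-formant vowel (fs and ramp_s are assumed values)."""
    n = int(round(dur_s * fs))
    # Binary click train: one unit click per fundamental period.
    period = fs / f0_hz
    clicks = [0.0] * n
    k = 0
    while int(round(k * period)) < n:
        clicks[int(round(k * period))] = 1.0
        k += 1
    # Two-pole resonator centred on the formant, bandwidth = formant / 50.
    # (Stand-in for the toolbox band-pass filter, not the original filter.)
    bandwidth_hz = formant_hz / 50.0
    r = math.exp(-math.pi * bandwidth_hz / fs)
    a1 = 2.0 * r * math.cos(2.0 * math.pi * formant_hz / fs)
    a2 = -r * r
    y = [0.0] * n
    for i in range(n):
        y1 = y[i - 1] if i >= 1 else 0.0
        y2 = y[i - 2] if i >= 2 else 0.0
        y[i] = clicks[i] + a1 * y1 + a2 * y2
    # Raised-cosine (half-Hanning) onset and offset ramps.
    n_ramp = int(round(ramp_s * fs))
    for i in range(n_ramp):
        w = 0.5 * (1.0 - math.cos(math.pi * i / n_ramp))
        y[i] *= w
        y[n - 1 - i] *= w
    peak = max(abs(v) for v in y)
    return [v / peak for v in y]

def vowel_triplet(formant_hz, fs=44100):
    """Three vowels in succession, giving a 600-ms stimulus."""
    return artificial_vowel(formant_hz, fs=fs) * 3

single = artificial_vowel(1520)
triplet = vowel_triplet(1520)
```

Calibrating the output to 70 dB SPL depends on the playback hardware and is outside the scope of this sketch.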

Fig. 1.

Sound stimuli and behavioral apparatus. A: waveform envelope of the artificial vowel stimulus 1. B: power spectra of all 4 stimuli. Stimuli 1 and 3 (formants at 1,520 and 3,420 Hz) were associated with turns to the right and stimuli 2 and 4 (2,280 and 5,130 Hz) with turns to the left. C: to initiate a trial, the rat had to enter the nose poke. D: immediately after its entry in the nose poke, 1 of the 4 sounds was played through both speakers. E: the rat had to recall whether the left or right water spout was associated with the vowel sound to obtain a water reward. The opposite platform, where the identical behavior was performed, is depicted at the top of C–E.

For the training and for analysis of neuronal responses, it was crucial for the sounds to be easily distinguishable. For this reason, we ensured that the distance between formant peaks (0.585 octave) was well above the frequency difference at which rats reach plateau performance in tone discrimination (∼0.07 octave; Sloan et al. 2009). Sounds were presented through Visaton FRS 8 speakers, which have a flat frequency response (less than ± 2 dB) between 200 Hz and 10 kHz. The speakers were driven through a standard personal computer sound card controlled by LabView (National Instruments, Austin TX).
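As a quick check of the spacing quoted above, the octave distances follow directly from the formant frequencies. The short sketch below (variable names are illustrative) computes the distance between adjacent formants and between the two formants that share a response direction.

```python
import math

formants_hz = [1520, 2280, 3420, 5130]  # stimuli 1-4

# Distance between neighbouring formants, in octaves.
adjacent_octaves = [math.log2(hi / lo)
                    for lo, hi in zip(formants_hz, formants_hz[1:])]

# Distance between the two sounds that share a response direction
# (stimuli 1 vs. 3 for right turns, stimuli 2 vs. 4 for left turns).
same_direction_octaves = [math.log2(3420 / 1520), math.log2(5130 / 2280)]
```

Each adjacent pair is separated by log2(1.5), about 0.585 octave, and the two sounds sharing a response direction are separated by twice that, about 1.17 octaves.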

Apparatus.

The arena consisted of two platforms, each with a layout as illustrated in Fig. 1C. The platforms were elevated 40 cm to encourage the animals to remain on the platform during the experiment. A small wall with a nose-poke hole was positioned at one end of each platform. Reward water spouts were located on either side of the nose poke, 3.5 cm from the lateral platform edge on the level of the platform. The two platforms faced each other with their nose-poke walls at a distance of 25 cm. The two platforms differed only by the texture of the floor and their spatial position and orientation. The whole setup was in a Faraday room and was illuminated with infrared light (880 nm) during testing sessions.

Auditory categorization task.

The day before behavioral testing, animals were placed on a water-restricted dry food diet. They were trained to initiate each trial with a nose poke. This triggered the presentation of one of the four stimuli. Different sounds were presented in random order. The animals did not have to wait for the end of a stimulus presentation but were free to respond at any time after stimulus onset. Right turns after presentation of the 1,520- or 3,420-Hz formants were considered correct responses, as were left turns after presentation of the 2,280- or 5,130-Hz formants. Correct responses triggered delivery of a small water reward (≈50 μl) from the spout on the corresponding side. Incorrect responses triggered no water delivery but caused a 3-s timeout during which no new trial could be initiated. Each session lasted until the rat stopped doing the task.

The reaction times (from sound onset to the approach to the reward site) were measured. Since our setup required the animal to move just 13 cm from nose poke to reward spout, reaction times are a better measure of behavior than video tracking, where errors can exceed ± 2 cm (Jensen and Lisman 2000). One key aim of this study was to dissociate neuronal coding of stimulus identity from any aspect of explicit behavior (either ongoing or planned), for example, when the animals' response causes them to move into or out of hippocampal place fields. We therefore considered a neuron to be sensitive to the acoustic stimuli only if its response patterns differentiated cases where the stimuli differed even though the animal's behavioral responses were the same, i.e., it turned in the same direction, and did so with comparable speed, for both stimuli. By disregarding all the sessions in which the reaction times for the two stimuli associated with either response direction on either platform differed significantly (P < 0.05, Wilcoxon rank sum test, 14% of cases), we excluded the possibility that any systematic variability in the animals' motor response, which might be differentially encoded by hippocampal place cells, could be misinterpreted as stimulus-related neuronal activity. Before and after the training session, the room was illuminated by visible light, so the rats had the opportunity to use visual cues to orient themselves relative to the layout of the experimental chamber and apparatus.
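The session-exclusion rule described above can be sketched as follows. This is an illustration only: it uses a normal approximation to the Wilcoxon rank sum test with a tie correction and no continuity correction, which may differ slightly from the implementation the authors used, and the function names and data layout are hypothetical.

```python
import math
from statistics import NormalDist

def rank_sum_p(a, b):
    """Two-sided rank sum P value (normal approximation, tie-corrected)."""
    pooled = sorted((v, g) for g, xs in enumerate((a, b)) for v in xs)
    n1, n2 = len(a), len(b)
    n = n1 + n2
    ranks = [0.0] * n
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        while j + 1 < n and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        mid_rank = (i + j) / 2.0 + 1.0  # average rank of a tied group
        for k in range(i, j + 1):
            ranks[k] = mid_rank
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1
    w = sum(r for r, (_, g) in zip(ranks, pooled) if g == 0)
    mu = n1 * (n + 1) / 2.0
    var = n1 * n2 / 12.0 * ((n + 1) - tie_term / (n * (n - 1)))
    if var == 0:
        return 1.0
    z = (w - mu) / math.sqrt(var)
    return 2.0 * (1.0 - NormalDist().cdf(abs(z)))

def keep_session(reaction_time_pairs, alpha=0.05):
    """Keep a session only if no same-direction sound pair differs in reaction time.

    reaction_time_pairs: one (rt_sound_a, rt_sound_b) tuple per combination
    of response direction and platform (four combinations in this task).
    """
    return all(rank_sum_p(a, b) >= alpha for a, b in reaction_time_pairs)
```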

Surgery and electrophysiological recordings.

Once the rats reached ≥70% correct performance on both platforms, they were implanted with chronic recording electrodes. To do so, they were anesthetized with a mixture of Zoletil (30 mg/kg) and xylazine (5 mg/kg) delivered intraperitoneally. A craniotomy was made above left dorsal hippocampus, centered 3.0 mm posterior to bregma and 2.5 mm lateral to the midline. A microdrive loaded with 12 tetrodes (25-μm-diameter Pt/Ir wire; California Fine Wire) was mounted over the craniotomy. Tetrodes were positioned perpendicular to the brain surface and individually advanced in small steps of 40–80 μm per day until they reached the CA1 area, indicated by the amplitude and the shape of the sharp wave/ripples. All recordings were performed at a depth of 2,000–2,500 μm below the brain surface. After surgery, animals were given antibiotic (Baytril; 5 mg/kg delivered through the water bottle) and the analgesic carprofen (Rimadyl; 2.5 mg/kg by subcutaneous injection) for postoperative analgesia and prophylaxis against infection, and they were allowed to recover for 1 wk to 10 days after surgery before testing resumed.

After recovery from electrode implantation, the animals were retrained for one to two sessions to get them accustomed to performing the task with a microdrive and cable attached. They then performed regular behavioral tests with the categorization paradigm described above, during which neuronal responses were recorded from the tetrode array using Neuralynx data acquisition equipment (analog-wired Cheetah acquisition system). Tetrodes had impedances between 100 and 500 kΩ. We usually recorded between one and six single units per tetrode. Spikes were presorted automatically using KlustaKwik, with the use of waveform energy on each of the four channels of the tetrode as coordinates in a four-dimensional feature space. The result of the automatic clustering was inspected visually after the data were imported into MClust. Only well-isolated single units that exhibited a clear refractory period were included in further analysis. In addition, we used two measures of spike separation, the L-ratio and the isolation index, to define the separation between the clusters in the multidimensional space (Schmitzer-Torbert et al. 2005). In the vast majority of cases, each single unit was recorded only in one session. In rare cases, the same unit could be distinguished on 2 consecutive days (judged by the spike waveform, distribution of the interspike intervals, and the response pattern), in which case only the single session with better isolation quality was considered in the analysis.

Statistical procedures.

Firing rates usually varied over time, and the latency of the response varied across individual cells. Therefore, to quantify the effect of sound identity (or response direction) on neuronal activity, we used a method that would 1) take into account multiple time points, 2) provide a single measure of statistical significance of the effect, and 3) quantify the strength of the effect in each case. From among the many statistical methods that could be applied (ANOVA, classification techniques such as linear discriminant analysis, etc.), we opted to use information theory measures because they provide a uniform scale that can be used to compare results from different experiments, their properties and biases have been extensively studied analytically, and robust bias correction procedures have been described and successfully implemented (Panzeri and Treves 1996; Panzeri et al. 2007; Pola et al. 2003).

Shannon mutual information (Shannon 1948) was used to quantify the statistical interdependence between trial parameter X (either stimulus identity or response direction) and neuronal spike count in a given temporal window. The information measures were computed in Matlab (MathWorks) using the information breakdown toolbox (Magri et al. 2009). Mutual information is given by the following equation:

$$I(X;Y) = \sum_{x,y} P(x,y)\,\log_2 \frac{P(x,y)}{P(x)\,P(y)} \tag{1}$$

This quantifies the reduction of uncertainty about the trial parameter (X) gained by a single-trial observation of the spike count (Y). The probabilities in the above formulas are not known a priori and must be estimated empirically from the observed frequencies in a limited number of trials N. Inevitable inaccuracies in the resulting estimates of response probabilities can lead to an upward bias in the estimate of mutual information (Panzeri and Treves 1996; Panzeri et al. 2007; Pola et al. 2003). An approximate expression for the bias has been formulated (Panzeri and Treves 1996) and can be subtracted from the information estimates calculated with Eq. 1 above, provided that N is at least two to four times greater than the number of different stimuli or behavioral responses (Pola et al. 2003). In our data, N depended on the number of trials performed by the animals in a particular session but was never less than 12 in any condition, whereas the number of classes was always 2 (corresponding to either the 2 response directions or the 2 different sound stimuli associated with 1 response direction). This allowed us to use the above-mentioned bias correction procedure (Panzeri and Treves 1996). The information carried by spike counts was calculated in a 200-ms-wide window sliding in steps of 25 ms along the whole duration of the trial, from the sound onset to 1 s after.
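The estimation procedure described above can be sketched in Python as follows. This is not the information breakdown toolbox: the bias term uses the commonly quoted first-order form, $[\sum_x (R_x - 1) - (R - 1)] / (2N \ln 2)$, with $R$ and $R_x$ taken naively as the number of distinct spike counts observed overall and for each class, and the windows are assumed to be centered on 0, 25, ..., 1,000 ms after sound onset. Function names are illustrative.

```python
import math
from collections import Counter

def plug_in_information(labels, counts):
    """Uncorrected estimate of I(X;Y) in bits from observed frequencies."""
    n = len(labels)
    joint = Counter(zip(labels, counts))
    n_x = Counter(labels)
    n_y = Counter(counts)
    # P(x,y) / (P(x) P(y)) simplifies to c * n / (n_x * n_y).
    return sum((c / n) * math.log2(c * n / (n_x[x] * n_y[y]))
               for (x, y), c in joint.items())

def first_order_bias(labels, counts):
    """Approximate upward bias in bits (naive response-bin counts assumed)."""
    n = len(labels)
    seen = {}
    for x, y in zip(labels, counts):
        seen.setdefault(x, set()).add(y)
    r_total = len(set(counts))
    excess = sum(len(s) - 1 for s in seen.values()) - (r_total - 1)
    return excess / (2.0 * n * math.log(2))

def sliding_information(labels, spike_trains_ms,
                        win_ms=200, step_ms=25, end_ms=1000):
    """Bias-corrected information per window; spike times in ms from onset."""
    values = []
    for center in range(0, end_ms + 1, step_ms):
        lo, hi = center - win_ms / 2, center + win_ms / 2
        counts = [sum(lo <= t < hi for t in train)
                  for train in spike_trains_ms]
        values.append(plug_in_information(labels, counts)
                      - first_order_bias(labels, counts))
    return values
```

With these settings the function returns 41 values per neuron and condition, one per window position.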

Since for each neuron we took into account many time points, multiple comparisons had to be accounted for. This was done using a permutation test, which tested the null hypothesis that the mean value of information obtained across all the time points could be expected by chance (see below).

Statistical significance of sound-related information.

Sound-related information quantified how reliably a neuron's firing rate could distinguish between the two stimuli associated with the same response direction. First, the information about sound identity was measured independently at every time point from the sound onset until 400 ms after the sound offset. Each integration window was 200 ms long; the step size was 25 ms, yielding 41 sequential information values.

Next, to determine whether the mutual information carried by the neuron's firing rate was statistically significant, we used a permutation test that compared the observed mean information value (averaged across the 1-s analysis window) to a distribution of simulated values obtained after sound labels had been randomly reassigned to neuronal responses and the mean mutual information for these shuffled data had been computed. This resampling, repeated 200 times, provided an estimate of the distribution of average information values expected by chance if sound stimuli had no systematic influence on neuronal firing. P values for the significance of sound-related information were then calculated by comparing the information value for the original data to a Gaussian curve fitted to the distribution of 200 information values obtained by resampling. Neurons that were found to carry significant information about stimulus identity for at least one of the possible reward locations are referred to as “sound-sensitive neurons.”
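A minimal sketch of this permutation test is given below. The `statistic` argument is any function of the trial labels and responses; in the analysis described above it would be the time-averaged mutual information, whereas here a toy statistic (absolute difference in mean spike count) stands in so that the example is self-contained. The function names and the fixed random seed are assumptions for illustration.

```python
import random
from statistics import NormalDist, mean, stdev

def permutation_p_value(labels, responses, statistic, n_perm=200, seed=0):
    """P value of the observed statistic against a Gaussian fit to shuffles."""
    rng = random.Random(seed)
    observed = statistic(labels, responses)
    shuffled = list(labels)
    null = []
    for _ in range(n_perm):
        rng.shuffle(shuffled)  # reassign sound labels to responses at random
        null.append(statistic(shuffled, responses))
    mu, sigma = mean(null), stdev(null)
    if sigma == 0:
        return 1.0 if observed <= mu else 0.0
    # Upper-tail probability under the fitted Gaussian.
    return 1.0 - NormalDist(mu, sigma).cdf(observed)

def abs_mean_difference(labels, responses):
    """Toy stand-in statistic for two classes labeled 0 and 1."""
    a = [r for lab, r in zip(labels, responses) if lab == 0]
    b = [r for lab, r in zip(labels, responses) if lab == 1]
    return abs(mean(a) - mean(b))

labels = [0] * 15 + [1] * 15
responses = [2, 3] * 7 + [2] + [8, 9] * 7 + [8]
p_value = permutation_p_value(labels, responses, abs_mean_difference)
```

Fitting a Gaussian to the shuffled values allows P values smaller than 1/200 to be reported, at the cost of assuming that the null distribution is approximately normal.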

Meta-test for the significance of sound-related information in the neuronal population as a whole.

Although the permutation test just described yielded small (highly significant) P values for many neurons in our data set, it was important to ask whether the number of significant outcomes observed across the population exceeded that expected from possible “false positives” that occur within any large sample. To ascertain this, we compared the distribution of sound information P values obtained across the entire data set (all 261 neurons) to a distribution of P values obtained from simulated neuronal responses created by 200 shuffles of the stimulus labels. We found that the observed P values were significantly smaller (Wilcoxon rank sum test, P = 0.0013) than those expected by chance. This means that the neuronal population carried a stronger sound identity signal than could be expected from firing rate fluctuations unrelated to the stimuli.

Statistical significance of response direction-related information.

Response direction is the side to which the rat turned to obtain water. When the “turn right” and the “turn left” trials are compared, any difference in firing rate could be due to the place field of the cell, head direction, or predictive coding of the direction of the turn. The statistical significance of such differences was measured in the same way as was done for sound identity-related differences in activity: at each time point, the firing rate in the trials with correct right turn was compared with the firing rate at the same time point in the trials with correct left turn. The information across all time points was averaged and compared with the distribution of average information values obtained by random shuffling of the trial labels. Neurons carrying a significant quantity of information about the animal's response direction on at least one of the two test platforms are referred to as “response direction-sensitive neurons.”

In theory, the difference between right-turn and left-turn trials could also be due to sound-dependent responses: for instance, if a neuron strongly responds to sound 1 (right) but does not respond to sounds 2, 4 (left), or 3 (right), it could result in spurious significant response direction information. To exclude cases in which sound-related responses might cause an apparent response direction response, a neuron was classified as sensitive to response direction only if it showed a significant left vs. right firing difference for all four left vs. right stimulus pairings: sound 1 vs. sound 2, sound 1 vs. sound 4, sound 3 vs. sound 2, and sound 3 vs. sound 4.

Context dependence of sound sensitivity.

The design of the experiment allowed us to test for unambiguous neuronal sensitivity to differences in sounds in four different conditions: discrimination of sounds 1 and 3, both of which directed the rat to the right water reward spout, and discrimination of sounds 2 and 4, both of which directed the rat to the left spout, with each discrimination made on both platform 1 and platform 2. Consequently, one recorded neuron could exhibit sensitivity to sound identity (that is, carry a significant quantity of information about the sound stimulus) under a total of 0, 1, 2, 3, or 4 conditions. We compared the observed instances of sound sensitivity of each neuron to the number expected by chance if the sound sensitivity under each condition were independent.

The distribution expected by chance was calculated as follows. Under the assumption of independence, the number of neurons that are sound sensitive at all four reward locations is expected to equal $Np^4$, where $N$ is the number of neurons in the sample and $p$ is the probability that a neuron will carry a significant quantity of information about sound at one of the four possible reward locations. Similarly, the number of neurons expected not to be sensitive at any of the four reward locations equals $N(1 - p)^4$. There are four different ways in which a neuron can be sensitive to sound at only one reward location (i.e., sensitive to the sound associated with the 1st, 2nd, 3rd, or 4th spout), and each of these possible outcomes has a probability of $p(1 - p)^3$, yielding an expected number of neurons sensitive at just one spout equal to $4Np(1 - p)^3$. Similarly, the expected number of neurons sensitive at three spouts equals $4Np^3(1 - p)$. Finally, there are six different ways in which a neuron can be sensitive to sound at two spouts ([1 1 0 0], [1 0 1 0], [1 0 0 1], [0 1 0 1], [0 0 1 1], and [0 1 1 0]), each with a probability of $p^2(1 - p)^2$, yielding an expected number of neurons sensitive at two spouts equal to $6Np^2(1 - p)^2$. Once the expected values were calculated in this manner, their confidence intervals were obtained from the critical values of the inverse binomial distribution (Matlab function “binoinv”).
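The expected counts and their intervals can be reproduced with a short Python sketch. The helper `binom_inv` mirrors the behavior of an inverse binomial cumulative distribution function (smallest count whose cumulative probability reaches the requested level). The 95% level and the value of `p` used in the example are placeholders, not values taken from the study.

```python
import math

def expected_counts(n_neurons, p, n_conditions=4):
    """Expected number of neurons sensitive in exactly k of the conditions."""
    return [n_neurons * math.comb(n_conditions, k)
            * p ** k * (1 - p) ** (n_conditions - k)
            for k in range(n_conditions + 1)]

def binom_inv(q, n, prob):
    """Smallest k such that P(X <= k) >= q for X ~ Binomial(n, prob)."""
    cdf = 0.0
    for k in range(n + 1):
        cdf += math.comb(n, k) * prob ** k * (1 - prob) ** (n - k)
        if cdf >= q:
            return k
    return n

def count_interval(n_neurons, prob, level=0.95):
    """Central interval for a count with per-neuron probability prob."""
    tail = (1 - level) / 2
    return binom_inv(tail, n_neurons, prob), binom_inv(1 - tail, n_neurons, prob)

# Hypothetical example: 261 neurons, p = 0.06 per condition (placeholder).
p = 0.06
counts = expected_counts(261, p)
prob_two = math.comb(4, 2) * p ** 2 * (1 - p) ** 2
interval_two = count_interval(261, prob_two)
```

The five expected counts sum to the number of neurons, which is a convenient sanity check on the combinatorial coefficients (1, 4, 6, 4, 1).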

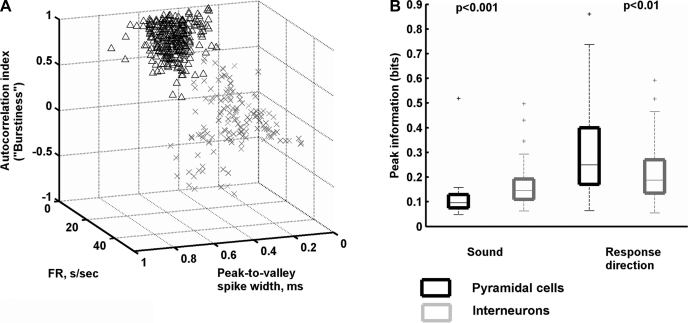

Separation and comparison of putative interneurons and pyramidal cells.

In keeping with established methods, three physiological criteria were used to distinguish neuronal cell types: mean firing rate (Fox and Ranck 1981; Ranck 1973), spike duration (Skaggs et al. 1996), and the autocorrelation function (Csicsvari et al. 1999). Firing rate was measured over the whole session. Spike duration was measured at 25% of maximum spike amplitude (Csicsvari et al. 1999). The autocorrelation-derived index is the difference between the numbers of spikes that occurred in a 3- to 5-ms postspike window and a 20- to 80-ms postspike window, divided by the sum of the two. Spike duration and the autocorrelation-derived index yielded bimodal distributions. On the basis of firing rate, spike duration, and the autocorrelation-derived index, the complete set of neurons was clustered into two classes using a K-means algorithm. In keeping with the conventions established by others (Csicsvari et al. 1999; Fox and Ranck 1981; Wiebe and Staubli 2001), we refer to the units with the broader action potentials and lower firing rates as putative pyramidal cells and to those with the narrower spikes and higher firing rates as putative interneurons. We stress that a definitive classification of cell types requires information beyond that which can be obtained with extracellular recordings alone. We found that narrow spike-firing, putative interneurons exhibited a broader distribution of spike widths and firing rates than putative pyramidal cells. This may be attributable to the greater diversity of the interneurons compared with pyramidal cells (Csicsvari et al. 1999; Klausberger and Somogyi 2008).
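The autocorrelation-derived index can be computed directly from a sorted list of spike times, as in the sketch below. Function names are illustrative, window edges are treated as inclusive (an assumption), and the K-means clustering step is not shown.

```python
from bisect import bisect_left, bisect_right

def count_lagged_spikes(spikes_ms, lo_ms, hi_ms):
    """Count spike pairs whose lag lies in [lo_ms, hi_ms]; input must be sorted."""
    return sum(bisect_right(spikes_ms, t + hi_ms)
               - bisect_left(spikes_ms, t + lo_ms)
               for t in spikes_ms)

def autocorrelation_index(spikes_ms, short=(3.0, 5.0), long=(20.0, 80.0)):
    """(a - b) / (a + b), with a and b the counts in the short and long windows."""
    a = count_lagged_spikes(spikes_ms, *short)
    b = count_lagged_spikes(spikes_ms, *long)
    return (a - b) / (a + b) if a + b else 0.0

bursty = [0.0, 4.0, 1000.0, 1004.0]   # pairs of spikes 4 ms apart
regular = [0.0, 50.0, 100.0, 150.0]   # steady firing every 50 ms
```

A train dominated by short-interval bursts gives an index near +1, whereas steady firing at longer intervals gives an index near −1.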

For the two physiologically distinguished cell types, the statistical significance of the information about sound stimulus identity or response direction was determined using the permutation test described above. To quantify the amount of information carried by each neuron, we took the peak value of the information across time among those neurons that passed the significance test. These values were used to compare sound and response direction coding differences between the putative pyramidal cells and interneurons.

Histology.

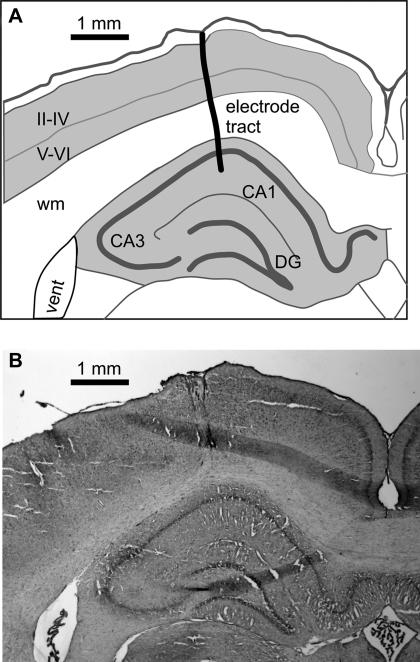

After conclusion of the recording experiments, the animals were overdosed with intraperitoneal injection of the anesthetic urethane and transcardially perfused with 10% formalin. Brains were sectioned in the coronal plane and stained with cresyl violet. Electrode tracks were localized on the serial sections (Fig. 2).

Fig. 2.

Example reconstruction of the electrode track. A: outline drawing depicting the relationship of the electrode track to the brain structures. CA1 and CA3, hippocampal regions; DG, dentate gyrus; II-IV and V-VI, cortical layers; wm, white matter. B: corresponding serial section (−3.36 mm from bregma).

RESULTS

Neuronal representation of sound identity.

In this study we set out to test how neurons in rat hippocampus represent auditory stimuli in the context of a behavioral task. Rats triggered a sound stimulus (Fig. 1, A and B) by extending their head through an infrared light barrier placed at the front of the platform (the “nose-poke” position, see Fig. 1C). When the light barrier was crossed, one of the four artificial vowel triplets (Fig. 1D) was played bilaterally. Depending on stimulus identity, animals had to turn either right or left to receive a water reward (Fig. 1E). Two sounds were associated with the reward on the right, and two other sounds with the reward on the left. Since the animals performed the same action for both sounds associated with the same reward spout, this design allowed us to look for stimulus-specific neuronal responses. The rats were initially trained on one of the two nearly identical training platforms until they performed at ≥70% correct for all four sounds. This took between 1.5 and 2 mo. Next, they were trained in the same task on the second platform with the same stimulus-response associations (in rat-centered coordinates). Although the behavioral task and sound stimuli were identical, the transfer of the task to the second platform was not immediate. About 3–5 days (∼400 trials) of additional training were required for the animals to become competent, and for the remaining sessions, they persistently achieved lower performance on the second platform (median performance across all recording sessions: 80.2% on first platform and 70.6% on second platform; Wilcoxon signed-rank test, $P < 10^{-5}$). The lack of immediate transfer of reward contingencies suggests the animals may have formed two distinct representations of the task, one for each of the two training platforms. Alternatively, it may stem from the conflict between a room-centered representation of the arena and a self-centered representation.

To quantify the representation of sound identity, we measured the information carried by neuronal firing rate on correct trials (see materials and methods for details). To ensure that any observed differences in firing for the two different sounds associated with each response direction reflected differences in sound, and not differences in behavior (see materials and methods for details), we excluded recording sessions in which reaction times differed between the two sounds tested at any of the four response directions (left or right on either platform 1 or 2). Accordingly, 261 of the total of 429 recorded neurons were included in further analysis.

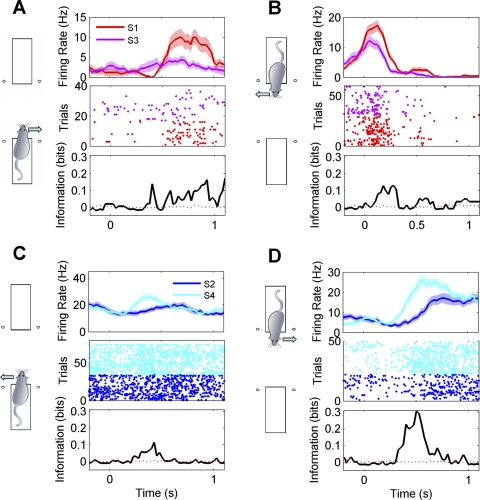

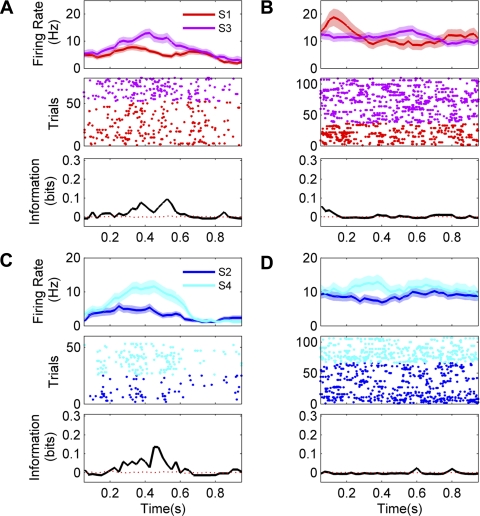

Examples of responses from sound-sensitive neurons are shown in Fig. 3. A total of 55 of 261 tested neurons (21%) exhibited significant sensitivity to sound identity. The median quantity of information about sound identity carried by neurons with sound sensitivity was 0.12 bits (interquartile range 0.08–0.16 bits). The upper limit, in a discrimination between two sounds, would be 1.0 bit. A meta-test (see materials and methods for details) confirmed that the observed sensitivity across the population was statistically significant (Wilcoxon rank sum test, P = 0.0013).

Fig. 3.

Examples of sound-sensitive responses recorded from 4 neurons (A–D). Diagrams at left indicate on which platform and with which reward direction the activity was recorded. Top plots show mean firing rates (±SE) after stimulus onset. Responses to the 2 different sound stimuli (S1 or S3; S2 or S4) tested for each response direction are color coded as indicated. Middle plots show the responses as dot rasters (each dot corresponds to the time of a spike; sequential trials of 1 stimulus are plotted on separate rows). Bottom plots quantify the stimulus dependence of firing rate over time as bits of mutual information in 200-ms sliding time windows. Solid black lines indicate actual information quantity; red dashed lines represent average information across 200 permutations. Bin size = 200 ms, step = 25 ms.

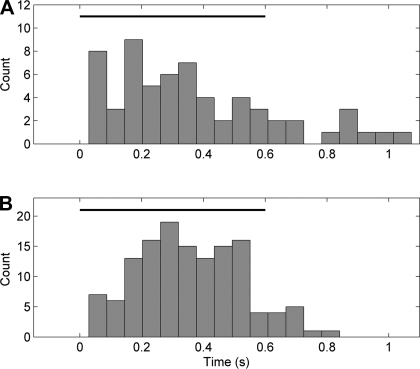

To evaluate the latencies of sound-specific neuronal responses, we analyzed the distribution of the first bin after stimulus onset carrying a significant quantity of information about sound identity (P < 0.05). Neurons started to carry sound information at a median latency of 310 ms after the sound onset (Fig. 4A). There were insufficient numbers of error trials to permit an analysis of whether the representation of specific sounds was equivalent on correct and incorrect trials.

Fig. 4.

Distribution of information latencies for sound (A) and response direction (B). Horizontal black bar depicts the duration of the sound stimuli.

Passive sound exposure.

The use of sounds as stimuli allowed us to ask whether their representation in CA1 was related to the stimuli as mere physical signals or as meaningful events dependent on behavioral context. To date, it has not been possible to show unambiguously whether rat hippocampal neurons truly encode nonspatial sensory stimuli or whether they fire to some particular aspect of the behavior associated with the discrimination. To answer this question, we recorded neuronal activity just after the animals had completed the test session and were resting on the second platform. These data were obtained for 282 of the total of 429 cells in our data set. The animals were exposed to the same sounds as during the immediately preceding behavioral task. The sounds were triggered with no relation to the animal's posture or movement, in a random sequence lasting ∼15 min with a random interstimulus interval ranging from 0.8 to 1.5 s. The rats did not respond to these sounds with head turns toward the water spouts or any other overt behavior. We analyzed the neuronal discharges in relation to sounds by the same methods as when the sounds were presented during the discrimination behavior. Seventeen of 282 neurons (6%) recorded during the passive conditions were classified as sound sensitive at a P < 0.05 significance level, a number close to the expected “false alarm” rate of 5%. We compared the frequency of such responses across the population using the stimulus label shuffling meta-test described in materials and methods and found no evidence for statistically significant sound coding across the population as a whole (P = 0.39). Moreover, among the neurons that did exceed the criterion, the median quantity of sound information was significantly smaller than the quantity found during the active task (0.05 bits in the passive condition vs. 0.12 bits for the active task; Wilcoxon rank sum test, $P < 10^{-7}$).
By visual inspection, none of the 282 neurons recorded in the passive condition showed convincing, reproducible sound selectivity. Two examples of neurons that distinguished between two sounds within the behavioral task but not in the passive condition are shown in Fig. 5. Together, these results indicate that under our experimental conditions, neurons in the CA1 region of hippocampus were not sensitive to differences in acoustic stimuli presented in isolation, even if the neurons showed clear discrimination between the same acoustic stimuli in the context of sound-guided behavior.
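The comparison with the 5% false alarm rate can be illustrated with a simple binomial calculation. The following is only a minimal sketch and is not the stimulus label shuffling meta-test used in the study: it assumes that each of the 282 neurons is an independent test with a 5% chance of a false positive, and asks how often 17 or more neurons would cross the criterion by chance alone. The function name `binomial_tail` is introduced here for illustration.

```python
from math import comb


def binomial_tail(k, n, p):
    """Return P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


# Counts reported in the text for the passive condition.
n_cells = 282        # neurons recorded during passive listening
n_significant = 17   # neurons classified as sound sensitive at P < 0.05
alpha = 0.05         # per-neuron significance criterion

# Assumption: neurons are treated as independent tests, each with a
# false-positive probability equal to alpha.
expected_false_alarms = n_cells * alpha
p_tail = binomial_tail(n_significant, n_cells, alpha)

print(f"expected false alarms: {expected_false_alarms:.1f}")
print(f"P(X >= {n_significant}) under chance alone: {p_tail:.3f}")
```

Under these simplifying assumptions, observing 17 sound-sensitive neurons is well within the range expected from false positives alone, in line with the conclusion drawn from the meta-test.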

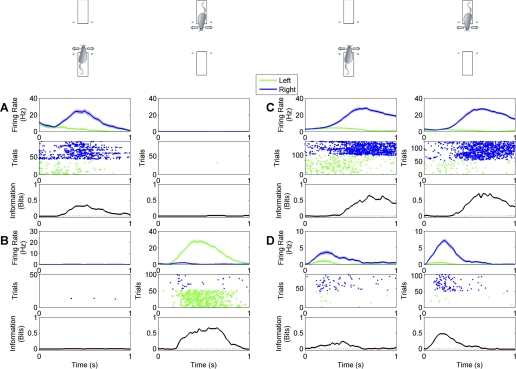

Fig. 5.

Examples of responses for 2 neurons sensitive to sound identity during the behavioral task but not during passive listening. Top plots show mean firing rates (±SE) after stimulus onset. Middle plots show the responses as dot rasters. Bottom plots quantify the stimulus dependence of the firing rate over time as bits of mutual information in 200-ms sliding time windows. Black lines indicate actual information quantity; red dashed lines represent average information across 200 permutations. Bin size = 200 ms, step = 25 ms. The first neuron fired more vigorously to S3 than to S1 during the discrimination task (A) but did not show significant sound coding when the same stimuli were presented passively (B). The second neuron was excited by the onsets of each of the sounds during the discrimination task (the triplets of bursts in C are aligned to the triplets of sounds) but showed no stimulus-related modulation during passive listening (D).

Neuronal representation of response direction.

A wealth of literature documents that many neurons in rat hippocampus are highly sensitive to the animals' location in space, and one might therefore expect a number of neurons in our sample to be sensitive to whether the animal turned left or right to collect water reward. We tested for such sensitivity by applying the same mutual information measures used to test for stimulus coding (see materials and methods for details). Examples of neurons that showed response direction-dependent firing on one of the two platforms only are shown in Fig. 6, A and B, whereas Fig. 6, C and D, shows examples of neurons that fired more during turns toward the reward spout on the left regardless of platform.
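The sliding-window information measure referred to here and in the figure captions (200-ms windows, 25-ms steps, 200 label permutations) can be sketched as follows. This is an illustrative plug-in estimator without bias correction, not the toolbox-based procedure described in materials and methods. The function names and the toy spike trains are hypothetical and exist only to make the example runnable.

```python
import math
import random
from collections import Counter


def mutual_information(labels, counts):
    """Plug-in estimate (bits) of I(label; spike count); no bias correction."""
    n = len(labels)
    joint = Counter(zip(labels, counts))
    n_label = Counter(labels)
    n_count = Counter(counts)
    mi = 0.0
    for (lab, cnt), n_joint in joint.items():
        # p(x,y) * log2( p(x,y) / (p(x) p(y)) ), written in terms of counts
        mi += (n_joint / n) * math.log2(n_joint * n / (n_label[lab] * n_count[cnt]))
    return mi


def spikes_in_window(spike_times, start, width):
    """Count spikes with start <= t < start + width (times in seconds)."""
    return sum(1 for t in spike_times if start <= t < start + width)


def sliding_information(trials, labels, t_max, width=0.2, step=0.025,
                        n_perm=200, seed=0):
    """Return (window start, observed MI, mean MI over shuffled labels)."""
    rng = random.Random(seed)
    n_windows = int(round((t_max - width) / step)) + 1
    results = []
    for w in range(n_windows):
        start = w * step
        counts = [spikes_in_window(tr, start, width) for tr in trials]
        observed = mutual_information(labels, counts)
        shuffled_sum = 0.0
        for _ in range(n_perm):
            shuffled = labels[:]
            rng.shuffle(shuffled)  # break the label/response pairing
            shuffled_sum += mutual_information(shuffled, counts)
        results.append((start, observed, shuffled_sum / n_perm))
    return results


# Hypothetical toy data: 20 trials, spike times in seconds after sound onset.
labels = ["S1", "S3"] * 10
trials = [[0.31, 0.35, 0.42] if lab == "S3" else [0.80] for lab in labels]

results = sliding_information(trials, labels, t_max=1.0)
peak = max(results, key=lambda r: r[1])
print(f"peak information {peak[1]:.2f} bits in window starting at {peak[0]:.3f} s")
```

The mean over shuffled labels plays the role of the dashed permutation baseline in the figures: information is taken seriously only where the observed value clearly exceeds it.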

Fig. 6.

Examples of responses from 4 neurons (A–D) sensitive to response direction. Responses recorded during right turn trials are shown in blue, and responses recorded during left turn trials are shown in green. Top plots show mean firing rates (±SE) after stimulus onset. Middle plots show the responses as dot rasters. Bottom plots quantify the stimulus dependence of the firing rate over time as bits of mutual information in 200-ms sliding time windows. Black lines indicate actual information quantity; red dashed lines represent average information across 200 permutations. Bin size = 200 ms, step = 25 ms. For each neuron, responses recorded on both platforms are shown, as indicated by the diagrams above the plots. A and B show examples of neurons that responded when the rat was on only 1 of the 2 platforms. C and D show examples of neurons that responded to left or right turns in similar ways regardless of whether the rat was on platform 1 or platform 2.

A total of 113 of 261 tested neurons (43%) exhibited significant sensitivity to response direction. Neurons with response direction sensitivity carried a median value of 0.22 bits of information about response direction (interquartile range 0.15–0.38 bits). Neurons that encoded response direction on one platform only (67 putative pyramidal cells and 24 putative interneurons) were more common than those that encoded response direction on both platforms (12 putative pyramidal cells and 10 putative interneurons). Neurons started to carry response direction information at a median latency of 350 ms after the sound onset (Fig. 4B).

Whenever there was a sufficient number of incorrect trials to characterize neuronal firing on those trials, the firing of the response direction-sensitive neurons was found to be associated with the animals' actions on those trials. The simplest interpretation is that these neurons discriminated the actual direction of the movement rather than the stimulus category.

Relationship of sensitivity across different platforms.

The fact that animals were tested on two nearly identical but differently located platforms enabled us to investigate whether sound-related information present in the neuronal discharges depended on the animal's spatial location, and how coding for sound identity and place interacted. Our data indicate that in the large majority of the cases, sound-related information was observed only when the rat performed the task on one of the two platforms, suggesting that sound coding depended on spatial context.

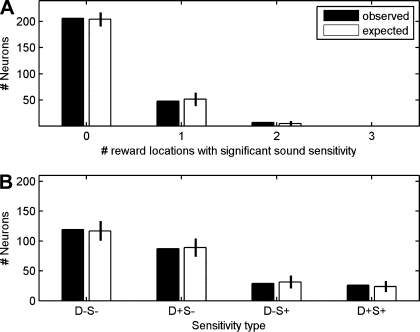

The relationship between sound coding and spatial location was assessed as follows. When tested on two platforms, each with two response spouts, a neuron could in principle show sound sensitivity in its firing associated with each of the four possible response directions (i.e., during both left and right turns on each of the 2 platforms), or it might be sensitive to sound in association with only a subset of the four response directions or with none at all. We found that sensitivity to sound was linked to the position of the animal within the room, i.e., the neuronal responses to sounds on one platform could not be predicted based on the sensitivity of the same neuron on the other platform. Of the 261 neurons in our sample, 48 gave significant sound identity information for only one of the four response directions, 7 gave significant information for two response directions, and none gave significant information for more than two response directions. The filled bars in Fig. 7A summarize these results. The open bars show the distribution that is predicted if one assumes that the presence in a given neuron of a sound identity code for any one of the four tested reward spout locations does not predict whether the same neuron will discriminate between sounds for other response directions. The observed distribution matches the distribution predicted by the model of independence.
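The logic of the independence model can be illustrated with a short calculation. The sketch below is a simplification and not necessarily the procedure described in materials and methods: it assumes that every neuron has the same probability of being sound sensitive at each of the four response directions, estimates that probability from the pooled counts reported above, and derives the expected number of neurons sensitive at 0 to 4 directions from a binomial distribution.

```python
from math import comb

n_neurons = 261
n_directions = 4  # left and right turns on each of the 2 platforms

# Observed counts from the text: neurons sound sensitive at k response directions.
observed = {0: n_neurons - 48 - 7, 1: 48, 2: 7, 3: 0, 4: 0}

# Assumption: one shared probability of sensitivity per response direction,
# estimated from the total number of significant neuron/direction pairs.
n_hits = sum(k * count for k, count in observed.items())
p_hit = n_hits / (n_neurons * n_directions)

# Expected counts if sensitivity at each direction is independent of the others.
expected = {
    k: n_neurons * comb(n_directions, k) * p_hit**k * (1 - p_hit) ** (n_directions - k)
    for k in range(n_directions + 1)
}

for k in range(n_directions + 1):
    print(f"{k} directions: observed {observed[k]:3d}, expected {expected[k]:6.1f}")
```

Under this simplified model the expected counts fall off steeply with the number of directions, as the observed counts do: most sound-sensitive neurons are expected to be sensitive at a single response direction, and almost none at three or four.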

Fig. 7.

A: filled bars indicate numbers of neurons observed to be sensitive to the identity of the sound stimuli associated with 0, 1, 2, 3, or 4 reward locations (response directions), respectively. Open bars represent the distribution expected if the probability of a neuron being sensitive to sound for each reward location were independent of the probability of it being sensitive to sound for all other reward locations. B: filled bars indicate numbers of neurons sensitive to neither response direction nor sound identity (D−S−), sensitive to response direction but not sound identity (D+S−), not sensitive to response direction but sensitive to sound identity (D−S+), or sensitive to both response direction and sound identity (D+S+). Open bars represent distribution of sensitivity to response direction and sound identity expected under the assumption of independent coding of response direction and sound identity. Error bars show 95% confidence intervals for the expected distributions.

Relationship of sensitivity to sound and response direction.

In principle, a neuron could be sensitive to only response direction (left/right), only sound identity, or both. The sensitivity of the neuron to one of these factors may depend on the other factor, or, alternatively, sound sensitivity and response direction sensitivity could be independent properties. We derived a distribution of cell counts expected to occur in a population, given the assumption of this independence (see materials and methods).

In the experiment represented in Fig. 7B, we investigated the relationship between sensitivity to sound stimulus identity and sensitivity to response direction across the sample of 261 neurons. A neuron was considered sound sensitive (S+) if in at least one of the four conditions (platform 1: sound 1 vs. sound 3; platform 1: sound 2 vs. sound 4; platform 2: sound 1 vs. sound 3; platform 2: sound 2 vs. sound 4) it carried a significant quantity of information about sound identity. Otherwise, it was considered sound insensitive (S−). Likewise, a neuron was considered sensitive to response direction (D+) if it distinguished left and right response direction on either platform. Otherwise, it was considered to be insensitive to response direction (D−). One hundred nineteen neurons were sensitive to neither response direction nor sound identity (D−S−), 87 were sensitive to response direction but not to sound identity (D+S−), 29 were sensitive to sound identity but not to response direction (D−S+), and 26 were sensitive to both response direction and sound identity (D+S+). The filled bars in Fig. 7B show these observed neuron counts, whereas the open bars show the counts of neurons that would be expected if we assume that response direction sensitivity and sound identity sensitivity are statistically independent of each other. Again, there is a close correspondence between the observed distribution and that expected by independence. The results indicate that whether a particular neuron was recruited for the representation of auditory stimuli did not predict whether it would also be recruited for the representation of response direction. Responses for a neuron characterized as D−S+ are illustrated in Fig. 8, A–C, whereas responses for a neuron characterized as D+S+ are illustrated in Fig. 8, D–F.
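The expected counts under independence follow directly from the marginal totals. The sketch below computes them from the four observed counts and, purely as an illustration, adds a Pearson chi-square statistic for the 2 × 2 table. The chi-square test is substituted here for convenience and is not the confidence-interval comparison shown in Fig. 7B.

```python
import math

# Observed counts from the text (D = response direction, S = sound identity).
observed = {
    ("D-", "S-"): 119,
    ("D+", "S-"): 87,
    ("D-", "S+"): 29,
    ("D+", "S+"): 26,
}
total = sum(observed.values())

# Marginal probabilities of each direction class and each sound class.
p_d = {d: sum(n for (dd, _), n in observed.items() if dd == d) / total
       for d in ("D-", "D+")}
p_s = {s: sum(n for (_, ss), n in observed.items() if ss == s) / total
       for s in ("S-", "S+")}

# Under independence, P(D, S) = P(D) * P(S).
expected = {(d, s): total * p_d[d] * p_s[s] for d in p_d for s in p_s}

# Pearson chi-square with 1 degree of freedom; the tail probability of a
# chi-square variable with 1 df equals erfc(sqrt(x / 2)).
chi2 = sum((observed[k] - expected[k]) ** 2 / expected[k] for k in observed)
p_value = math.erfc(math.sqrt(chi2 / 2))

for key in observed:
    print(f"{key[0]}{key[1]}: observed {observed[key]:3d}, expected {expected[key]:6.1f}")
print(f"chi-square = {chi2:.2f}, P = {p_value:.2f}")
```

The expected D+S+ count is simply 113 × 55 / 261, the product of the two marginal totals divided by the sample size, which lies close to the 26 neurons observed.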

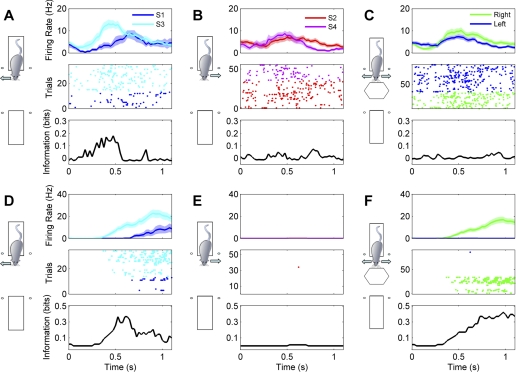

Fig. 8.

A–C: responses of 1 neuron that was sensitive to sound identity but not response direction. Top plots show mean firing rates (±SE) after stimulus onset. Middle plots show the responses as dot rasters. Bottom plots quantify the stimulus dependence of the firing rate over time as bits of mutual information in 200-ms sliding time windows. Black lines indicate actual information quantity; red dashed lines represent average information across 200 permutations. Bin size = 200 ms, step = 25 ms. This neuron fired more vigorously to S3 than to S1 during right turn trials (A), but firing in response to left or right turns (combining the responses to different sounds; C) did not differ significantly. D–F: responses of a neuron sensitive to both sound identity and response direction. Plots are as described in A–C. This neuron fired only in trials where the animal turned to the right, and fired substantially more spikes in response to S3 than to S1.

Firing properties of putative interneurons and pyramidal cells.

Using the physiological criteria described in materials and methods and illustrated in Fig. 9A, we classified 179 neurons as putative pyramidal cells and 82 as putative interneurons (Csicsvari et al. 1999; Fox and Ranck 1981; Itskov et al. 2011; Ranck 1973). We then asked whether the putative cell type was related to functional properties of neurons in the behavioral task.

Fig. 9.

Functional properties of putative interneurons and pyramidal cells. A: neurons were classified as putative pyramidal neurons or interneurons according to physiological criteria including spike width, mean firing rate, and tendency to fire in bursts. Burst firing was quantified from each neuron's autocorrelation. FR, firing rate (s/sec, spikes per second). B: putative pyramidal cells and interneurons exhibited systematic differences in the amounts of stimulus identity or response direction information. For each box, the central mark is the median, the edges of the box are the interquartile range, the whiskers extend to the most extreme data points not considered outliers, and outliers are plotted individually.

Putative interneurons were more likely to be sensitive to stimulus identity, and carried more sound-related information, than putative pyramidal cells. Among putative interneurons, 33% were sound sensitive, whereas only 16% of putative pyramidal cells were sound sensitive (27 of the 82 putative interneurons and 28 of the 179 putative pyramidal cells). To quantify information in these cells, we took a peak value of sound identity information. The median value of the peak among all sound-sensitive putative interneurons was 0.15 bits (interquartile range 0.11–0.19 bits), significantly higher than the value of 0.10 bits (interquartile range 0.07–0.13 bits) in putative pyramidal cells (Wilcoxon rank sum test, P = 0.0008).

Seventy-nine of 179 (44%) putative pyramidal cells and 34 of 82 (41%) putative interneurons carried significant amounts of information about response direction. The median of the peak response direction information for putative pyramidal neurons (0.35 bits, interquartile range 0.17–0.40 bits) was significantly higher than that of putative interneurons (0.19 bits, interquartile range 0.13–0.23 bits; Wilcoxon rank sum test, P = 0.009). Figure 9B summarizes values of information about stimulus identity and response direction for putative pyramidal cells and putative interneurons.

DISCUSSION

This work characterizes the encoding of sound stimuli in rat hippocampus. Anatomic studies indicate that the hippocampus receives auditory input only very indirectly from perirhinal cortex via lateral entorhinal cortex (Burwell et al. 1995; Burwell and Amaral 1998) and medial prefrontal areas via the nucleus reuniens of the thalamus (Vertes 2006). The auditory information arriving in the hippocampus, like that of other modalities, is therefore highly processed, and the hippocampus is not a sensory structure as such. Nevertheless, a number of previous studies have demonstrated stimulus-related firing in hippocampus for olfactory (Komorowski et al. 2009; Wiener et al. 1989; Wood et al. 1999), visual (Anderson and Jeffery 2003; Hampson et al. 2004; Leutgeb et al. 2005a; Lever et al. 2002; Quiroga et al. 2005), tactile (Itskov et al. 2011), auditory (Berger et al. 1976, 1983; Christian and Deadwyler 1986; Moita et al. 2003; Quiroga et al. 2009; Sakurai 1994, 1996, 2002; Segal et al. 1972; Takahashi and Sakurai 2009), and gustatory stimuli (Ho et al. 2011). Several groups have used operant conditioning to sound stimuli to study changes in hippocampal activity during learning (Berger et al. 1976; Christian and Deadwyler 1986; Moita et al. 2003; Segal et al. 1972), and it appears from these studies that hippocampal neurons acquire responsiveness to the sound stimuli used for conditioning as the animal learns the conditioned behavioral response. After the acquisition of the conditioned response, hippocampal neurons started to fire to the sound onset, with a latency of around 80 ms, and unrewarded sounds caused smaller responses (Christian and Deadwyler 1986; Moita et al. 2003). In our experiment, the onset of sound-specific firing can be seen in a wide distribution of latency values ranging from about 0.05 to 1.1 s (median value, 310 ms).

One potential difficulty in interpreting previous work is that when stimuli and conditioned responses are coupled, it is difficult to know whether neuronal activity recorded during an operant conditioning task encodes the stimulus or the animal's resultant behavior. This can be particularly problematic if the response requires the animal to change its location or orientation in space, given that many neurons in rat hippocampus are well known to exhibit place-related firing (O'Keefe 1976; O'Keefe and Nadel 1978; Wills et al. 2005). In the present study, we designed the experiment in a manner that can dissociate stimulus-related firing from firing related to behavioral responses or spatial location, by training animals to associate more than one sound stimulus with each behavioral response. Moreover, we required rats to carry out identical stimulus-reward pairing experiments at different locations in the room. We found that some CA1 neurons clearly distinguished between different acoustic stimuli, and because the actions associated with the paired sounds were identical, the sound sensitivity cannot be easily explained by any explicit behavioral correlate. Acoustic sensitivity was in most cases contingent on behavioral context and the animal's location in space. Neurons that discriminated between a sound pair when the animal was on one platform frequently failed to discriminate between the same sound pair when the animal was on the other platform. As a further demonstration of the role of context, we found that during passive listening, neurons did not discriminate between sounds. The modulation of neural responses to the presented sounds that is commonly found in hippocampus thus appears to be much greater in extent and qualitatively different from that normally seen either in auditory cortex as a function of changes in behavioral context (Fritz et al. 2007) or in the midbrain as a function of spatial variables (Campbell et al. 2006).

A degree of dependence on spatial context of sensory responses in hippocampus has been observed previously. For example, studies by Wood et al. (1999) and Komorowski et al. (2009) reported that hippocampal responses to odorant stimuli often differed at different locations, although some neurons appeared to “prefer” particular odors regardless of location. Our experiment was not designed to map sound preferences across space, but the results nevertheless confirm and extend the previous findings that coding for sensory stimuli and coding for space appear to interact in hippocampus. In particular, a neuron's tendency to differentiate two sound stimuli at one location in space did not predict whether the neuron would differentiate the same sounds at a different location.

Interactions between coding for sensory stimuli and coding for spatial location do not of course preclude the possibility that spatial or sensory coding may be achieved by somewhat separate, specialized subpopulations of hippocampal neurons. A number of studies by Sakurai (1994, 1996, 2002) have explored hippocampal population responses during various sound-related tasks. Sakurai's experimental and analytical approach differs substantially from ours, but our work nevertheless confirms and extends several of Sakurai's findings. In one study (Sakurai 1996), animals were trained in three different tasks: auditory discrimination, visual discrimination, and a configural auditory plus visual discrimination. The populations of neurons that were engaged in each of these tasks were found to be partially overlapping. The author put forward the idea that these overlapping assemblies arose from independent coding. Independence here is to be understood in the statistical sense, meaning that the probability that any one neuron carries significant amounts of visual information is not influenced by whether or not the same neuron encodes auditory or spatial information. However, Sakurai did not demonstrate such statistical independence quantitatively. In comparison, our current experiment, as well as a previous experiment using tactile stimuli (Itskov et al. 2011), revealed distinct and statistically independent representations of sounds associated with different locations in the spatial arena (Fig. 7A) and also showed that neuronal populations involved in coding different aspects of the task, sound identity and the action associated with it, overlap in proportions consistent with statistical independence (Fig. 7B).

However, we observed some statistical dependence of coding for space or sound on cell type. At present, there is no consensus in the literature on the relative contributions made by putative hippocampal interneurons and putative pyramidal cells to the representation of auditory stimuli or the animal's location in space. Whereas one study showed a clear functional dissociation between the two neuronal cell types (Christian and Deadwyler 1986), another observed no clear differences in sound-related firing (Moita et al. 2003). In our experiment, both types of neurons participated in the representation of sounds and places, but putative interneurons carried more stimulus-related information, whereas putative pyramidal cells carried more response direction-related information. These differences were statistically highly significant, but their neuroanatomical and functional significance remains difficult to evaluate at present, for two reasons. First, distinguishing anatomically defined neural populations on the basis of physiological criteria such as spike width and firing rate is not without difficulty (Vigneswaran et al. 2011). Second, the observed differences between the two physiologically distinguished cell types were not very large.

Although our results therefore agree with previous studies of rodent hippocampus, parallels with work on human hippocampus are harder to draw. Neurons in human hippocampus fire according to the stimulus category (Quiroga et al. 2005, 2009), which suggests a highly abstracted representation of the semantics of stimuli (Gelbard-Sagiv et al. 2008) that is largely independent of context. This contrasts sharply with the strong spatial and behavioral context dependence of sensory coding that we observed in our experiments. The sounds in our experiments were identical on both platforms and in the active and passive conditions, so if the neurons had formed abstract and invariant representations of the sounds per se, they should have exhibited similar responses on both platforms and in the passive as well as active condition. An important difference in experimental design is that in the human studies so far, the subjects were always tested in just a single location. Future studies in humans may reveal whether this categorical representation is also influenced by the subject's position and behavioral context.

The spatial context dependence of sensory responses that we observed is certainly compatible with the hypothesis that hippocampus may create “object in place” representations by linking salient events to the spatial context in which they occur (Cohen and Eichenbaum 1993; Eacott and Norman 2004; Eichenbaum et al. 1999; Wood et al. 1999). This may also relate to the phenomenon referred to as “remapping” (Anderson and Jeffery 2003; Bostock et al. 1991; Colgin et al. 2008; Muller and Kubie 1987), whereby place fields of hippocampal neurons may change radically if the context in which an animal finds itself is altered or when the behavioral context is altered (Kennedy and Shapiro 2009; Moita et al. 2004). The fact that different sensory events appear to be encoded by partially overlapping, statistically independent populations of neurons agrees with the predictions of theoreticians such as Marr (1971), who considered this a desirable property in a memory system designed to collect “snapshots” of experience.

GRANTS

This work was supported by the Human Frontier Science Program (contract RG0041/2009-C), the European Union (contract BIOTACT-21590 and CORONET), the Compagnia San Paolo, a Royal Society international joint project grant, a Wellcome Trust Studentship to C. Honey, the Italian Institute of Technology through the Brain Machine Interface Project, and the Ministry of Economic Development.

DISCLOSURES

No conflicts of interest, financial or otherwise, are declared by the author(s).

AUTHOR CONTRIBUTIONS

Author contributions: P.M.I., E.V., C.H., J.W.H.S., and M.E.D. conception and design of research; P.M.I. and E.V. performed experiments; P.M.I., E.V., J.W.H.S., and M.E.D. analyzed data; P.M.I., E.V., C.H., J.W.H.S., and M.E.D. interpreted results of experiments; P.M.I., E.V., C.H., and J.W.H.S. prepared figures; P.M.I. and E.V. drafted manuscript; P.M.I., E.V., C.H., J.W.H.S., and M.E.D. edited and revised manuscript; P.M.I., E.V., C.H., J.W.H.S., and M.E.D. approved final version of manuscript.

ACKNOWLEDGMENTS

We are grateful to Dr. Stefano Panzeri for help with the information analysis, as well as to members of the laboratory and various outside collaborators for valuable discussions. Fabrizio Manzino and Marco Gigante provided outstanding technical support. Marco Gigante illustrated the behavioral task for Fig. 1.

REFERENCES

- Anderson MI, Jeffery KJ. Heterogeneous modulation of place cell firing by changes in context. J Neurosci 23: 8827–8835, 2003 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Berger TW, Alger B, Thompson RF. Neuronal substrate of classical conditioning in the hippocampus. Science 192: 483–485, 1976 [DOI] [PubMed] [Google Scholar]

- Berger TW, Rinaldi PC, Weisz DJ, Thompson RF. Single-unit analysis of different hippocampal cell types during classical conditioning of rabbit nictitating membrane response. J Neurophysiol 50: 1197–1219, 1983 [DOI] [PubMed] [Google Scholar]

- Bizley JK, Walker KMM, King AJ, Schnupp JW. Neural ensemble codes for stimulus periodicity in auditory cortex. J Neurosci 30: 5078–5091, 2010 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bizley JK, Walker KM, Silverman BW, King AJ, Schnupp JW. Interdependent encoding of pitch, timbre, and spatial location in auditory cortex. J Neurosci 29: 2064–2075, 2009 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bostock E, Muller RU, Kubie JL. Experience-dependent modifications of hippocampal place cell firing. Hippocampus 1: 193–205, 1991 [DOI] [PubMed] [Google Scholar]

- Burdick CK, Miller JD. Speech perception by the chinchilla: discrimination of sustained /a/ and /i/. J Acoust Soc Am 58: 415–427, 1975 [DOI] [PubMed] [Google Scholar]

- Burwell RD, Amaral DG. Cortical afferents of the perirhinal, postrhinal, and entorhinal cortices of the rat. J Comp Neurol 398: 179–205, 1998 [DOI] [PubMed] [Google Scholar]

- Burwell RD, Witter MP, Amaral DG. Perirhinal and postrhinal cortices of the rat: a review of the neuroanatomical literature and comparison with findings from the monkey brain. Hippocampus 5: 390–408, 1995 [DOI] [PubMed] [Google Scholar]

- Campbell RA, Doubell TP, Nodal FR, Schnupp JW, King AJ. Interaural timing cues do not contribute to the map of space in the ferret superior colliculus: a virtual acoustic space study. J Neurophysiol 95: 242–254, 2006 [DOI] [PubMed] [Google Scholar]

- Cariani PA, Delgutte B. Neural correlates of the pitch of complex tones. I. Pitch and pitch salience. J Neurophysiol 76: 1698–1716, 1996 [DOI] [PubMed] [Google Scholar]

- Christian EP, Deadwyler SA. Behavioral functions and hippocampal cell types: evidence for two nonoverlapping populations in the rat. J Neurophysiol 55: 331–348, 1986 [DOI] [PubMed] [Google Scholar]

- Cohen NJ, Eichenbaum H. Memory, Amnesia, and the Hippocampal System. Cambridge, MA: MIT Press (Bradford Book), 1993 [Google Scholar]

- Colgin LL, Moser EI, Moser MB. Understanding memory through hippocampal remapping. Trends Neurosci 31: 469–477, 2008 [DOI] [PubMed] [Google Scholar]

- Csicsvari J, Hirase H, Czurko A, Mamiya A, Buzsaki G. Oscillatory coupling of hippocampal pyramidal cells and interneurons in the behaving rat. J Neurosci 19: 274–287, 1999 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dewson JH. Speech sound discrimination by cats. Science 144: 555–556, 1964 [DOI] [PubMed] [Google Scholar]

- Dooling RJ, Brown SD. Speech perception by budgerigars (Melopsittacus undulatus): spoken vowels. Percept Psychophys 47: 568–574, 1990 [DOI] [PubMed] [Google Scholar]

- Eacott MJ, Norman G. Integrated memory for object, place, and context in rats: a possible model of episodic-like memory? J Neurosci 24: 1948–1953, 2004 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Eichenbaum H, Dudchenko P, Wood E, Shapiro M, Tanila H. The hippocampus, memory, and place cells: is it spatial memory or a memory space? Neuron 23: 209–226, 1999 [DOI] [PubMed] [Google Scholar]

- Eichenbaum H, Kuperstein M, Fagan A, Nagode J. Cue-sampling and goal-approach correlates of hippocampal unit activity in rats performing an odor-discrimination task. J Neurosci 7: 716–732, 1987 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Eriksson JL, Villa AE. Learning of auditory equivalence classes for vowels by rats. Behav Processes 73: 348–359, 2006 [DOI] [PubMed] [Google Scholar]

- Fox SE, Ranck JB. Electrophysiological characteristics of hippocampal complex-spike cells and theta cells. Exp Brain Res 41: 399–410, 1981 [DOI] [PubMed] [Google Scholar]

- Fritz JB, Elhilali M, Shamma SA. Adaptive changes in cortical receptive fields induced by attention to complex sounds. J Neurophysiol 98: 2337–2346, 2007 [DOI] [PubMed] [Google Scholar]

- Gelbard-Sagiv H, Mukamel R, Harel M, Malach R, Fried I. Internally generated reactivation of single neurons in human hippocampus during free recall. Science 322: 96–101, 2008 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gelfer MP, Mikos VA. The relative contributions of speaking fundamental frequency and formant frequencies to gender identification based on isolated vowels. J Voice 19: 544–554, 2005 [DOI] [PubMed] [Google Scholar]

- Hampson RE, Pons TP, Stanford TR, Deadwyler SA. Categorization in the monkey hippocampus: a possible mechanism for encoding information into memory. Proc Natl Acad Sci USA 101: 3184–3189, 2004 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hampson RE, Simeral JD, Deadwyler SA. Distribution of spatial and nonspatial information in dorsal hippocampus. Nature 402: 610–614, 1999 [DOI] [PubMed] [Google Scholar]

- Heffner HE, Heffner RS, Contos C, Ott T. Audiogram of the hooded Norway rat. Hear Res 73: 244–247, 1994 [DOI] [PubMed] [Google Scholar]

- Ho AS, Hori E, Thi Nguyen PH, Urakawa S, Kondoh T, Torii K, Ono T, Nishijo H. Hippocampal neuronal responses during signaled licking of gustatory stimuli in different contexts. Hippocampus 21: 502–519, 2011 [DOI] [PubMed] [Google Scholar]

- Hok V, Lenck-Santini PP, Roux S, Save E, Muller RU, Poucet B. Goal-related activity in hippocampal place cells. J Neurosci 27: 472–482, 2007 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Holmberg M, Hemmert W. Auditory information processing with nerve-action potentials. In: 2004 IEEE International Conference on Acoustics, Speech, and Signal Processing, 2004, p. iv-193–iv-196 [Google Scholar]

- Itskov PM, Vinnik E, Diamond ME. Hippocampal representation of touch-guided behavior in rats: persistent and independent traces of stimulus and reward location. PLoS One 6: e16462, 2011 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Jensen O, Lisman JE. Position reconstruction from an ensemble of hippocampal place cells: contribution of theta phase coding. J Neurophysiol 83: 2602–2609, 2000 [DOI] [PubMed] [Google Scholar]

- Kennedy PJ, Shapiro ML. Motivational states activate distinct hippocampal representations to guide goal-directed behaviors. Proc Natl Acad Sci USA 106: 10805–10810, 2009 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Klausberger T, Somogyi P. Neuronal diversity and temporal dynamics: the unity of hippocampal circuit operations. Science 321: 53–57, 2008 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kluender KR, Diehl RL, Killeen PR. Japanese quail can learn phonetic categories. Science 237: 1195–1197, 1987 [DOI] [PubMed] [Google Scholar]

- Komorowski RW, Manns JR, Eichenbaum H. Robust conjunctive item-place coding by hippocampal neurons parallels learning what happens where. J Neurosci 29: 9918–9929, 2009 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kuhl PK. Human adults and human infants show a “perceptual magnet effect” for the prototypes of speech categories, monkeys do not. Percept Psychophys 50: 93–107, 1991 [DOI] [PubMed] [Google Scholar]

- Lenck-Santini PP, Fenton AA, Muller RU. Discharge properties of hippocampal neurons during performance of a jump avoidance task. J Neurosci 28: 6773–6786, 2008 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Leutgeb JK, Leutgeb S, Treves A, Meyer R, Barnes CA, McNaughton BL, Moser MB, Moser EI. Progressive transformation of hippocampal neuronal representations in “morphed” environments. Neuron 48: 345–358, 2005a [DOI] [PubMed] [Google Scholar]

- Leutgeb S, Leutgeb JK, Barnes CA, Moser EI, McNaughton BL, Moser MB. Independent codes for spatial and episodic memory in hippocampal neuronal ensembles. Science 309: 619–623, 2005b

- Leutgeb S, Leutgeb JK, Treves A, Moser MB, Moser EI. Distinct ensemble codes in hippocampal areas CA3 and CA1. Science 305: 1295–1298, 2004

- Lever C, Wills T, Cacucci F, Burgess N, O'Keefe J. Long-term plasticity in hippocampal place-cell representation of environmental geometry. Nature 416: 90–94, 2002

- Magri C, Whittingstall K, Singh V, Logothetis NK, Panzeri S. A toolbox for the fast information analysis of multiple-site LFP, EEG and spike train recordings. BMC Neurosci 10: 81, 2009

- Manns JR, Eichenbaum H. A cognitive map for object memory in the hippocampus. Learn Mem 16: 616–624, 2009

- Marr D. Simple memory: a theory for archicortex. Philos Trans R Soc Lond B Biol Sci 262: 23–81, 1971

- Moita MA, Rosis S, Zhou Y, LeDoux JE, Blair HT. Hippocampal place cells acquire location-specific responses to the conditioned stimulus during auditory fear conditioning. Neuron 37: 485–497, 2003

- Moita MA, Rosis S, Zhou Y, LeDoux JE, Blair HT. Putting fear in its place: remapping of hippocampal place cells during fear conditioning. J Neurosci 24: 7015–7023, 2004

- Muller RU, Kubie JL. The effects of changes in the environment on the spatial firing of hippocampal complex-spike cells. J Neurosci 7: 1951–1968, 1987

- O'Keefe J. Place units in the hippocampus of the freely moving rat. Exp Neurol 51: 78–109, 1976

- O'Keefe J, Burgess N. Geometric determinants of the place fields of hippocampal neurons. Nature 381: 425–428, 1996

- O'Keefe J, Nadel L. The Hippocampus as a Cognitive Map. Oxford: Oxford University Press, 1978

- Panzeri S, Senatore R, Montemurro MA, Petersen RS. Correcting for the sampling bias problem in spike train information measures. J Neurophysiol 98: 1064–1072, 2007

- Panzeri S, Treves A. Analytical estimates of limited sampling biases in different information measures. Network 7: 87–107, 1996

- Peterson GE, Barney HL. Control methods used in a study of the vowels. J Acoust Soc Am 24: 175, 1952

- Pola G, Thiele A, Hoffmann KP, Panzeri S. An exact method to quantify the information transmitted by different mechanisms of correlational coding. Network 14: 35–60, 2003

- Quiroga RQ, Kraskov A, Koch C, Fried I. Explicit encoding of multimodal percepts by single neurons in the human brain. Curr Biol 19: 1308–1313, 2009

- Quiroga RQ, Reddy L, Kreiman G, Koch C, Fried I. Invariant visual representation by single neurons in the human brain. Nature 435: 1102–1107, 2005

- Ranck JB. Studies on single neurons in dorsal hippocampal formation and septum in unrestrained rats. I. Behavioral correlates and firing repertoires. Exp Neurol 41: 461–531, 1973

- Sakurai Y. Involvement of auditory cortical and hippocampal neurons in auditory working memory and reference memory in the rat. J Neurosci 14: 2606–2623, 1994

- Sakurai Y. Hippocampal and neocortical cell assemblies encode memory processes for different types of stimuli in the rat. J Neurosci 16: 2809–2819, 1996

- Sakurai Y. Coding of auditory temporal and pitch information by hippocampal individual cells and cell assemblies in the rat. Neuroscience 115: 1153–1163, 2002

- Schmitzer-Torbert N, Jackson J, Henze D, Harris K, Redish AD. Quantitative measures of cluster quality for use in extracellular recordings. Neuroscience 131: 1–11, 2005

- Schnupp J, Nelken I, King AJ. Auditory Neuroscience: Making Sense of Sound. Cambridge, MA: MIT Press, 2011

- Segal M, Disterhoft JF, Olds J. Hippocampal unit activity during classical aversive and appetitive conditioning. Science 175: 792–794, 1972

- Shannon CE. A mathematical theory of communication. Bell Syst Tech J 27: 379–423 and 623–656, 1948

- Skaggs WE, McNaughton BL, Wilson MA, Barnes CA. Theta phase precession in hippocampal neuronal populations and the compression of temporal sequences. Hippocampus 6: 149–172, 1996

- Sloan AM, Dodd OT, Rennaker RL 2nd. Frequency discrimination in rats measured with tone-step stimuli and discrete pure tones. Hear Res 251: 60–69, 2009

- Takahashi S, Sakurai Y. Sub-millisecond firing synchrony of closely neighboring pyramidal neurons in hippocampal CA1 of rats during delayed non-matching to sample task. Front Neural Circuits 3: 9, 2009

- Vertes RP. Interactions among the medial prefrontal cortex, hippocampus and midline thalamus in emotional and cognitive processing in the rat. Neuroscience 142: 1–20, 2006

- Vigneswaran G, Kraskov A, Lemon RN. Large identified pyramidal cells in macaque motor and premotor cortex exhibit “thin spikes”: implications for cell type classification. J Neurosci 31: 14235–14242, 2011

- Wiebe SP, Staubli UV. Recognition memory correlates of hippocampal theta cells. J Neurosci 21: 3955–3967, 2001

- Wiener SI, Paul CA, Eichenbaum H. Spatial and behavioral correlates of hippocampal neuronal activity. J Neurosci 9: 2737–2763, 1989

- Wills TJ, Lever C, Cacucci F, Burgess N, O'Keefe J. Attractor dynamics in the hippocampal representation of the local environment. Science 308: 873–876, 2005

- Wilson MA, McNaughton BL. Dynamics of the hippocampal ensemble code for space. Science 261: 1055–1058, 1993

- Wood ER, Dudchenko PA, Eichenbaum H. The global record of memory in hippocampal neuronal activity. Nature 397: 613–616, 1999
