Abstract

Most moving objects in the world are non-rigid, changing shape as they move. To disentangle shape changes from movements, computational models either fit shapes to combinations of basis shapes or motion trajectories to combinations of oscillations but are biologically unfeasible in their input requirements. Recent neural models parse shapes into stored examples, which are unlikely to exist for general shapes. We propose that extracting shape attributes, e.g., symmetry, facilitates veridical perception of non-rigid motion. In a new method, identical dots were moved in and out along invisible spokes, to simulate the rotation of dynamically and randomly distorting shapes. Discrimination of rotation direction measured as a function of non-rigidity was 90% as efficient as the optimal Bayesian rotation decoder and ruled out models based on combining the strongest local motions. Remarkably, for non-rigid symmetric shapes, observers outperformed the Bayesian model when perceived rotation could correspond only to rotation of global symmetry, i.e., when tracking of shape contours or local features was uninformative. That extracted symmetry can drive perceived motion suggests that shape attributes may provide links across the dorsal–ventral separation between motion and shape processing. Consequently, the perception of non-rigid object motion could be based on representations that highlight global shape attributes.

Keywords: motion from shape, non-rigid shapes, shape representation, non-rigid motion

Introduction

Tumbling, rolling, swaying, stretching, leaping, spinning, flapping, dancing, kicking, bucking, jerking, sliding, gliding, tripping, shaking, wobbling, and twirling are just some of the many motions that human observers perceive and classify effortlessly, while maintaining object identity despite shape changes. All visual motions are first parsed in the striate cortex by direction-selective cells that signal local translations (Hubel & Wiesel, 1968), making it a challenge to discover how the brain disentangles different classes of complex motion from shape deformations. However, since the motion of any complex non-rigid object consists of shape transformations in systematic rather than arbitrary sequences, several authors (e.g., Jenkins & Mataric, 2004; Troje, 2002; Yacoob & Black, 1999) have shown that an object’s shapes can be encoded in a low-dimensional space. Exploiting low-dimensional representations, computer vision models have extended Tomasi and Kanade’s (1992) seminal factorization solution for 3-D shape from motion to the extraction of non-rigid shapes, by using either sets of basis shapes (Torresani, Hertzmann, & Bregler, 2008) or sets of basis trajectories (Akhter, Sheikh, Khan, & Kanade, 2008). The factorization algorithms, however, require the locations of each point in each image as their input, a sequence of operations that is biologically implausible. Neural models for the perception of non-rigidly articulated human motion (Giese & Poggio, 2003) suggest that view-tuned neurons in the ventral stream provide snapshots for shapes, dorsal stream neurons match patterns for trajectories, and later motion-pattern neurons combine the two streams. Indeed, some evidence suggests that neurons in the temporal cortex encode articulated humanoid actions (Singer & Sheinberg, 2010; Vangeneugden, Pollick, & Vogels, 2009). It is, however, unlikely that the brain has stored snapshots for most deforming objects. 
As an alternative model, we exploit the fact that objects generally have invariant global properties, such as symmetry, and propose that abstracted shape properties can provide the information needed to separate shape deformations from global motions. We base this proposal on the results of a new experimental method designed to study shape-motion separations for arbitrarily deforming objects undergoing rotations.

We used this method to tackle three fundamental issues in object motion perception. First, we examined how disparate local motions are combined into a coherent global percept. Since motion-sensing cells in striate cortex are generally cosine-tuned (Hawken, Parker, & Lund, 1988), the motion of each local segment of an object activates neurons with preferred directions ranging over 180°, which leads to variations in local population responses across an object’s boundary. In some cases such as translating plaids and the barber-pole illusion, where global motion is perceived as a single vector, combination rules such as intersection of constraints (Movshon, Adelson, Gizzi, & Newsome, 1985) or slowest/shortest motion (Weiss, Simoncelli, & Adelson, 2002) can explain the percepts. Rigid rotations require combination rules that are not as simple as for translations (Caplovitz & Tse, 2007a; Morrone, Burr, & Vaina, 1995; Weiss & Adelson, 2000) but may conform to regularization principles, such as minimal mapping (Hildreth, 1984; Ullman, 1979), smoothest motion (Hildreth, 1984), or motion coherence (Yuille & Grzywacz, 1988). By adding different forms of dynamic shape distortions to rotation, we were able to tease apart the role that global representations play in combining local motion estimates into unitary percepts.

Second, we verified that shape representations can drive veridical motion perception without directional clues, thus going beyond the divergence of motion computation and shape analysis into dorsal and ventral neural streams, respectively (Ungerleider & Mishkin, 1982). While shape-driven motion has been demonstrated in the absence of motion-energy signals for faces rotated from one side to another (Ramachandran, Armel, Foster, & Stoddard, 1998) and changes in geometrical shapes, e.g., from squares to rectangles (Tse & Logothetis, 2002), in both of these cases, the direction of motion could be inferred from the end shapes. We used randomly generated dotted shapes with indeterminate orientations to prevent such influences on rotation perception. We also quantified human efficiency for shape-driven motion perception by comparing accuracy to the simulated performance of an optimal shape-based Bayesian decoder.

Third, we tested whether properties abstracted from complex shapes can determine perceived motion. Past work has shown that shape features, such as contour completion, relatability, convexity, and closure, can determine motion grouping through surface segmentation (McDermott & Adelson, 2004; McDermott, Weiss, & Adelson, 2001) or binding (Lorenceau & Alais, 2001), and neuroimaging has suggested that dorsal area V3A may extract features that are tracked in motion perception (Caplovitz & Tse, 2007b). However, Fang, Kersten, and Murray (2008) and Murray, Kersten, Olshausen, Schrater, and Woods (2002) presented evidence that activation of the Lateral Occipital Complex (LOC) reduced activity in striate cortex during the percept of a grouped moving stimulus but not during a non-rigid percept of the same stimulus as independently moving features. This raises the possibility that feature extraction and global shape representations may play quite different roles in object motion perception. To isolate the role of shape representations, we tested cases where tracking of shape contours or local features was uninformative, and perceived rotation could correspond only to rotation of a global axis of symmetry.

Disentangling motion direction from shape deformations

Experiment 1: Rotation detection of non-rigid shapes

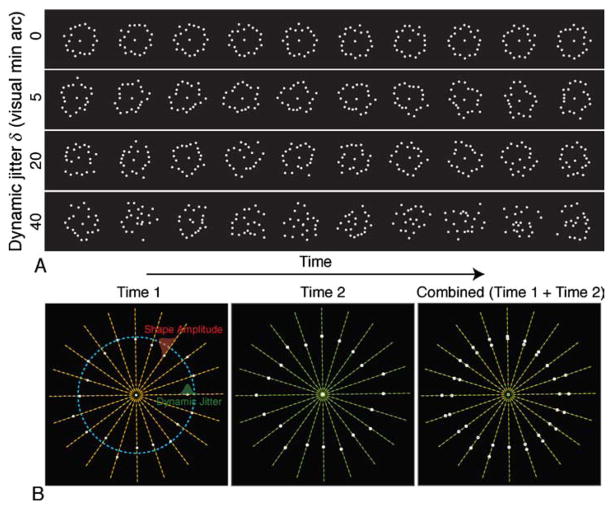

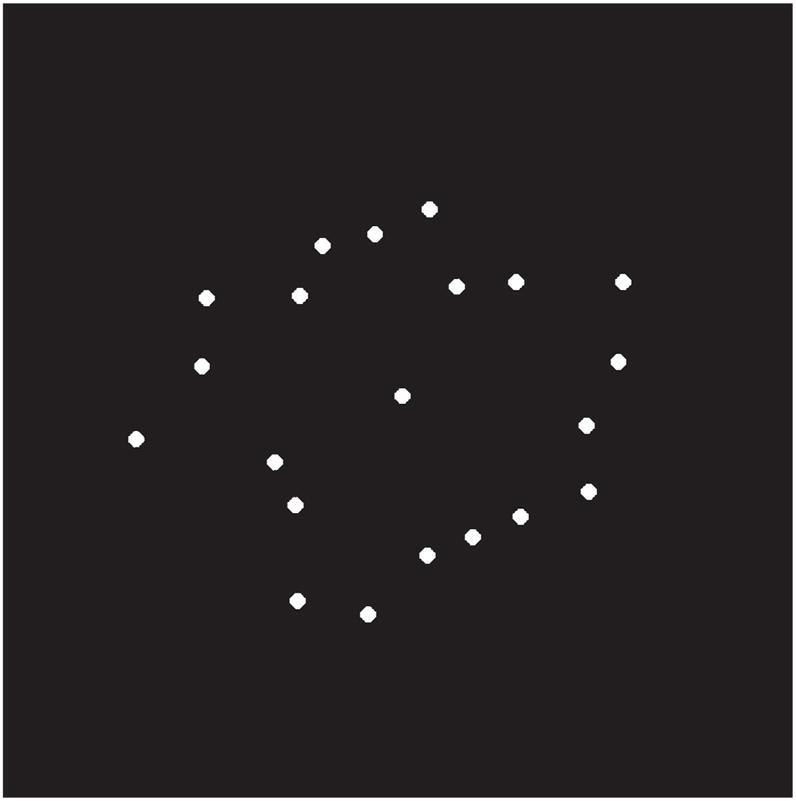

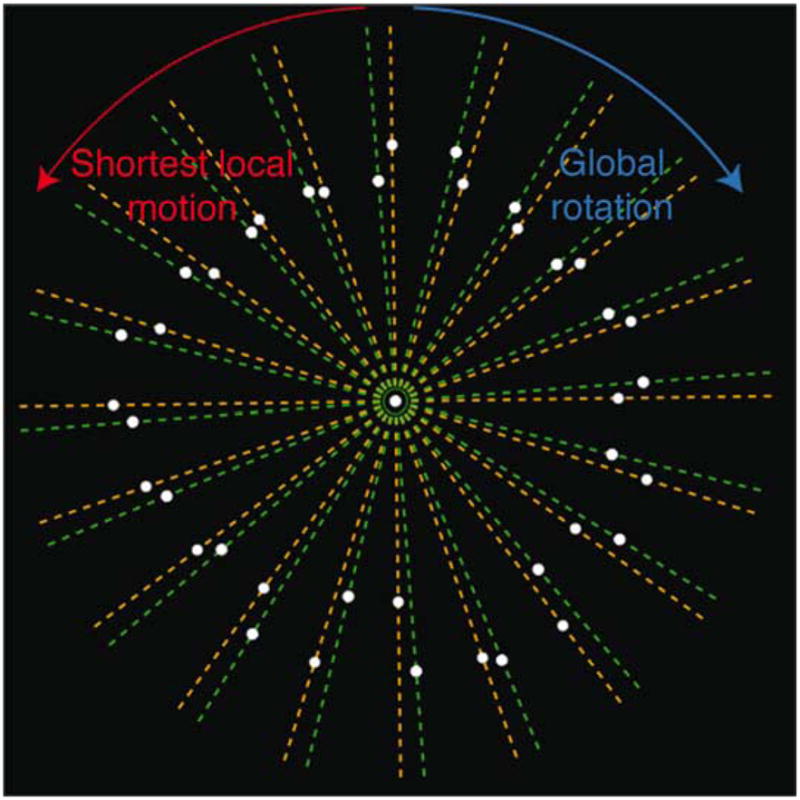

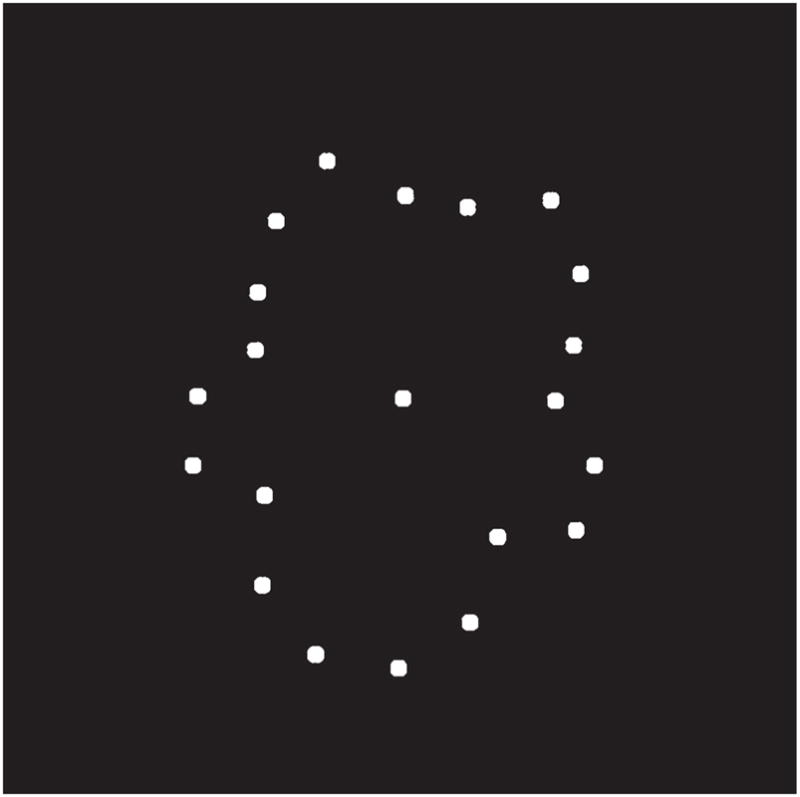

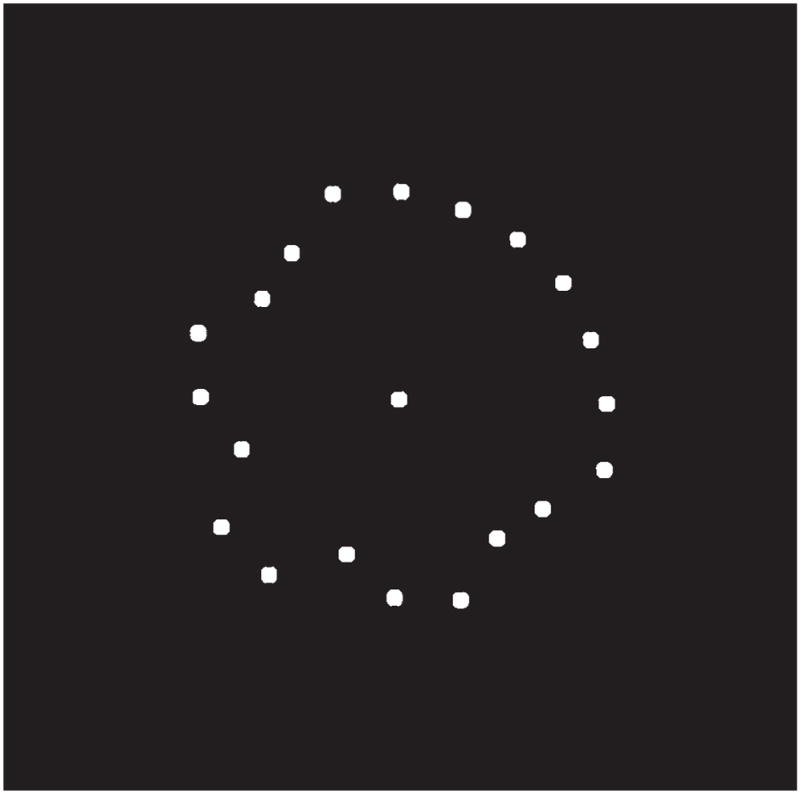

We sought to determine how specific local motions are chosen from the set of possible correspondences and integrated into global percepts. In the new method, identical dots were synchronously moved in or out along invisible spokes radiating from a center, in a manner consistent with the rotation of a single stimulus (Figure 1A, Movies 1–4). The stimuli consisted of jagged shapes formed by taking 20 identical dots evenly spaced around a circle (angular gap θ = 18°) and independently varying each dot’s radial distance from the central fixation along its invisible spoke (Figure 1B). For each trial, the variations were drawn from a Gaussian random distribution with zero mean and standard deviation α, the shape amplitude. Further, on each frame in the trial, positional noise was applied to each dot’s radial component, independently sampled from a random Gaussian distribution with zero mean and a pre-set standard deviation δ, the dynamic jitter magnitude for that trial (Figure 1B).

Figure 1.

(A) Four representative trials from Experiment 1 at different dynamic jitter values. (B) The generation technique for Experiment 1 stimuli. Dot positions were initially set by the intersection of a circle and evenly spaced spokes. Dot variation from the circle was determined by the shape amplitude, α, for the trial and the dynamic jitter, δ, added to each frame. The first two panels depict sequential frames of the trial. The third panel superimposes the two frames to demonstrate that “nearest neighbor” motions fall along the generative spokes rather than along the veridical rotation. Dashed lines illustrate generative technique and were not visible during the experiment. Individual dots appear proportionately larger than the experimental stimuli.

Movie 1.

Representative trial from Experiment 1 at 3.5 Hz, α = 10, and dynamic jitter value (δ) = 0. Recommended viewing distance 1 m.

Movie 4.

Representative trial from Experiment 1 at 3.5 Hz, α = 10, and dynamic jitter value (δ) = 40. Recommended viewing distance 1 m.

In Experiment 1, shape rotation between successive frames was set equal to the angle θ, so the rotation of a circle centered at fixation would be invisible in this experiment. Given the ambiguous nature of the rotation, a dot belonging to a random shape on frame i could have been perceived as moving to either of the adjacent spokes on frame i + 1 or along the same spoke. As illustrated in Figure 1B, a dot on frame i was generally closer to the dot on the same spoke at frame i + 1 than to the dots on adjacent spokes. A “nearest neighbor” rule is generally accepted as dominating the perceived path of apparent motion in cases where multiple locations compete for motion correspondence (e.g., Ullman, 1979). In addition, the shortest spatial excursion between two frames is also the slowest motion, which has been suggested as a governing principle in motion perception (Weiss et al., 2002). To test whether local correspondence or coherent global rotation dominates motion perception in different configurations, we measured observers’ accuracy in determining the direction of rotation as a function of the standard deviation δ of dynamic jitter. Since a moving form is sampled through apertures, this paradigm may seem similar to multi-slit viewing (Anstis, 2005; Kandil & Lappe, 2007; Nishida, 2004), but it differs in both intent and design. Instead of using familiar shapes to study form recognition, we used unfamiliar shapes that deform during rotation to create competition between different rules of motion combination.

Methods

There were 10 sequential frames in each trial. The 20 white dots subtended 6.6 min arc each, varied around a circular radius of 132 min arc, and were presented against a black background. Shape amplitudes of α = 4 or 13 min arc were used to assign each dot a fixed radius for the entire trial. Dynamic jitter was calculated independently for each dot per frame with δ set at 0, 2.5, 5, 10, 20, or 40 min arc for the trial. To vary the difficulty of the task, we used presentation rates of 3.5, 5.5, or 12.5 frames per second. Rotation speed was proportional to presentation rate, since all trials consisted of the same number of frames.
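The stimulus construction described above can be sketched in a few lines. This is a minimal illustration, not the authors' stimulus code: the function names `make_trial` and `to_xy` are hypothetical, units are min arc, and the sign convention for rotation direction is an assumption (which sign appears clockwise depends on the display's axis orientation).

```python
import numpy as np

N_DOTS = 20          # one dot per spoke, spokes 18 deg apart
BASE_RADIUS = 132.0  # radius of the generating circle, in min arc
N_FRAMES = 10        # frames per trial


def make_trial(alpha, delta, direction=1, rng=None):
    """Return an (N_FRAMES, N_DOTS) array of dot radii for one trial.

    alpha: SD of the fixed per-trial shape offsets (shape amplitude).
    delta: SD of the per-frame radial noise (dynamic jitter).
    direction: +1 or -1, the spoke-index direction in which the shape turns.
    """
    rng = np.random.default_rng() if rng is None else rng
    shape = rng.normal(0.0, alpha, N_DOTS)  # drawn once per trial
    frames = np.empty((N_FRAMES, N_DOTS))
    for i in range(N_FRAMES):
        # Rotating by exactly one spoke per frame keeps every dot on a spoke.
        rotated = np.roll(shape, direction * i)
        jitter = rng.normal(0.0, delta, N_DOTS)  # fresh on every frame
        frames[i] = BASE_RADIUS + rotated + jitter
    return frames


def to_xy(radii):
    """Convert one frame of radii to (x, y) dot positions on the spokes."""
    angles = np.arange(radii.size) * (2 * np.pi / radii.size)
    return np.column_stack((radii * np.cos(angles), radii * np.sin(angles)))
```

For example, `make_trial(alpha=13.0, delta=10.0)` gives one trial in the larger shape-amplitude condition at an intermediate jitter level. With `delta=0.0` each frame is simply the previous frame shifted by one spoke.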

Stimuli were generated with a Cambridge Research Systems ViSaGe controlled by a Dell GX620 and displayed on a Sony CRT monitor with 1024 × 768 pixels at 120-Hz refresh rate. The observer viewed the monitor at a distance of 100 cm in a dark room with head positioned on a chin rest. For each trial, the observer used a key press to choose between clockwise and counterclockwise global rotations. No feedback was provided. Trials were presented in blocks of 36, containing each of the 36 conditions in random order. Observers viewed 20 blocks spread out over 2 days, allowing for 20 repetitions of each condition.

Data were collected from six observers, including author EC and 5 naive observers who were paid for their participation. All had normal or corrected-to-normal vision and were given prior training on the experimental task. The experiments were conducted in compliance with the standards set by the IRB at SUNY College of Optometry and observers gave their informed consent prior to participating in the experiments.

Results and modeling

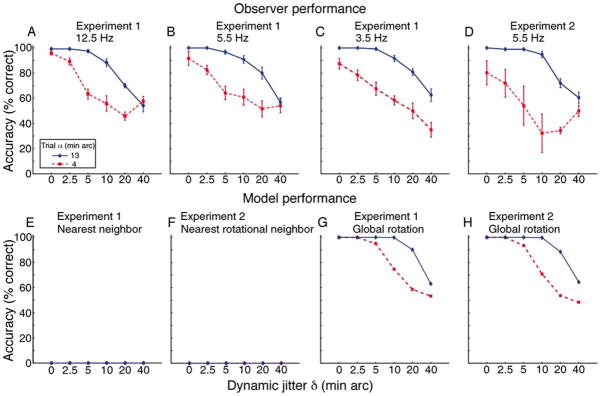

Figures 2A–2C display accuracy, averaged over 6 observers, plotted against δ of the dynamic jitter, for the three presentation rates, respectively. Accuracy decreased monotonically with increasing jitter. High accuracy at low jitter shows that global rotation was detected easily, despite competing with shorter inward and outward local motions. This shows that the global percept is not formed by combining the most salient local motions. Shapes with larger shape amplitudes (α) were significantly more resistant to dynamic jitter (F(1, 5) = 465.3, p < 0.0001) but not by a constant factor, as reflected in the significant interaction between shape amplitude and jitter (F(5, 5) = 9.9, p < 0.001). An accuracy of 75% can be used as the estimated threshold for radial jitter for each shape amplitude. Thresholds generally corresponded to values of dynamic jitter slightly greater than the shape amplitude, i.e., when the deformation of the trial shape from frame to frame was of the same order as the variations that distinguish the trial shape from a circle. This suggests that until the global rotation becomes incoherent, its percept dominates the shorter/slower local motions that indicate local expansions or contractions but do not form a coherent percept. The differences among the three presentation rates were not significant, but a small improvement for less jagged shapes at faster presentation rates led to a significant interaction between presentation rate and shape amplitude (F(2, 5) = 4.7, p < 0.05). Johansson (1975) wrote, “The eye tends to assume spatial invariance, or invariance of form, in conjunction with motion rather than variance of form without motion.” The results of this experiment provide limits to Johansson’s principle.

Figure 2.

(A–C) Rotation discrimination in Experiment 1 averaged over 6 observers, plotted against δ of the dynamic jitter, for the three presentation rates. (D) Results from Experiment 2. (E) Predictions from Nearest Neighbor Model for Experiment 1. (F) Predictions from Nearest Rotational Neighbor Model for Experiment 2. (G) Predictions from Global Rotation Model for Experiment 1. (H) Predictions from Global Rotation Model for Experiment 2.

To show quantitatively that observers’ rotation perception could not be explained by combining the shortest/slowest local motions, we implemented a Nearest Neighbor Model based on closest spatiotemporal correspondence. Each dot on frame i was matched to the nearest dot on frame i + 1. On each trial, a tally was kept of the number of clockwise, counterclockwise, and same spoke matches. The trial was classified as clockwise or counterclockwise if the majority of matches were in that direction. For the same stimuli as used in Experiment 1, the predicted percent of correct classifications is plotted against dynamic jitter in Figure 2E, showing that this model could not detect the correct direction of rotation because its input was dominated by same spoke motions.
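The matching-and-tally procedure of the Nearest Neighbor Model can be sketched as follows. This is an illustrative reading of the description above, not the authors' implementation. The helper names are hypothetical, and treating a same-spoke majority as "no rotation signal" (return value 0) is an assumption about how the tally was resolved.

```python
import numpy as np


def to_xy(radii):
    """(x, y) positions of dots placed on evenly spaced spokes."""
    angles = np.arange(radii.size) * (2 * np.pi / radii.size)
    return np.column_stack((radii * np.cos(angles), radii * np.sin(angles)))


def nearest_neighbor_tally(radii_a, radii_b):
    """Match each dot on frame a to its nearest dot on frame b.

    Returns counts of same-spoke matches and of matches displaced in the
    positive or negative spoke-index direction.
    """
    a, b = to_xy(radii_a), to_xy(radii_b)
    dist = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=2)
    match = dist.argmin(axis=1)
    n = radii_a.size
    # Signed spoke offset of each match, wrapped to the range [-n/2, n/2).
    shift = (match - np.arange(n) + n // 2) % n - n // 2
    return {
        "same": int((shift == 0).sum()),
        "plus": int((shift > 0).sum()),
        "minus": int((shift < 0).sum()),
    }


def classify_trial(frames):
    """Return +1 or -1 for the winning direction, 0 if same-spoke wins."""
    counts = {"same": 0, "plus": 0, "minus": 0}
    for frame_a, frame_b in zip(frames[:-1], frames[1:]):
        for key, value in nearest_neighbor_tally(frame_a, frame_b).items():
            counts[key] += value
    winner = max(counts, key=counts.get)
    return {"same": 0, "plus": 1, "minus": -1}[winner]
```

For a rigid shape with small radial offsets, every dot's nearest neighbor on the next frame lies on its own spoke, so the tally contains no directional matches. This is the failure described above.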

There remain three plausible explanations for the experimental results. First, since observers were instructed to report only the direction of rotation, it is possible that they were able to ignore the radial excursions by attending only to motions from one spoke to another, so that the global percept was created from the shorter/slower of the local rotary motions. Second, activation of a neural template for rotary motion, e.g., MST neurons selective for direction of rotation (Duffy & Wurtz, 1991), may supersede other motion percepts. Third, the observer may match shapes across consecutive frames and infer rotation direction from the best match. We test these possibilities in subsequent experiments and models.

Experiment 2: Strongest local motions vs. global rotation

The attention-based explanation would be consistent with the finding by Chen et al. (2008) that task-dependent spatial attention modulates neuronal firing rate in striate cortex and that response enhancement and suppression are mediated by distinct populations of neurons that differ in direction selectivity. We tested this explanation by using stimuli missing the same-spoke excursions but where the shortest/slowest local motions were in the opposite direction from the shape’s rotation. In Experiment 2, each rotation was equal to 80% of the angle θ between dots (Figure 3). The shortest/slowest local motions were all individually consistent with rotation but in the direction opposite to the globally consistent rotation.

Figure 3.

The correspondence conflict between successive stimulus frames in Experiment 2. Orange lines signify the shape on frame i. Green lines signify the shape on frame i + 1. For most dots, the shortest local path is in the direction opposite to the global rotation.

Experimental methods were identical to Experiment 1, except that the magnitude of each rotation was 80% of the distance between spokes, i.e., 14.4°. Only one presentation speed (5.5 Hz) was used. Data were collected for author EC and 3 experienced observers.
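The angular arithmetic behind this conflict is short enough to check directly. The sketch below considers angular gaps only and ignores the radial offsets of individual dots, which is why the figure caption says the conflict holds for most dots rather than all. The variable names are illustrative.

```python
THETA_DEG = 18.0         # angular gap between neighboring dots
ROTATION_FRACTION = 0.8  # Experiment 2: each step is 80% of the gap

rotation = ROTATION_FRACTION * THETA_DEG

# A dot's true successor has moved `rotation` degrees in the rotation direction.
with_rotation = rotation

# The dot that started one gap behind has also advanced by `rotation`, so it
# now sits just behind the original position, on the opposite side.
against_rotation = THETA_DEG - rotation

print(f"veridical match: {with_rotation:.1f} deg with the rotation")
print(f"competing match: {against_rotation:.1f} deg against the rotation")
```

The competing match is four times closer in angle than the veridical one, so any rule based on the shortest rotary displacement signals the wrong direction.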

The average results are plotted in Figure 2D to allow comparison with the 5.5-Hz results from Experiment 1. The manipulation of rotation percentage made little difference to observers’ accuracy in reporting rotation direction. Informal reports from observers revealed they were generally unaware of the shorter local correspondence created by partial rotation. These results argue against a combination of shortest/slowest local motions as a basis for rotation perception. If attention is involved, it may be captured by the dots moving coherently in one direction (Driver & Baylis, 1989).

To provide quantitative support for this assertion, we implemented a Nearest Rotational Neighbor Model, which was identical to the first model, except that radial (same spoke) motions were ignored and dots were matched according to the shortest/slowest rotary motions. As would be expected, this model did better for the large amplitude shapes in Experiment 1. However, it did not predict observers’ accuracy for the low amplitude shapes and failed completely on the critical test provided by Experiment 2 (Figure 2F).

Optimal global rotation model for shape-driven rotation

Two classes of neural processes could register global rotation: processes that differentiate between forms of movement, and processes that differentiate between movements of forms. The first class could be MST-like rotation templates that can signal the correct direction even if the center of rotation does not coincide with the center of the receptive field (Zhang, Sereno, & Sereno, 1993). The second class could consist of Procrustes-like processes that match shapes by discounting rotation, translation, and scaling (Mardia & Dryden, 1989). In both cases, the modeling issues are similar: how the error is estimated across each pair of frames, what function of this computed error is used to decide the direction, how errors are accumulated across frames in a trial, and how the direction decision is made for each trial. Probability theory (Jaynes, 2003) provides optimal rules for all of these issues. In fact, at the computational level, these rules allow us to design the same optimal Global Rotation Model for the two distinct neural processes.

A rotating rigid shape provides a perfect fit to a rotation template and also a perfect shape match after rotation. Any error in the shape match due to dynamic jitter will be proportional to the motion deviation from a perfect rotation template; hence, we can use the same error metric for both processes. A number of error metrics have been devised for shape mismatches, but for the stimuli used in this study, a sufficient metric is to sum the squared distances between corresponding dots, after allowing for rotation, translation, and scaling. For each transition Gi from frame i to frame i + 1, two such errors are computed: the sum of squared errors for Gi after accounting for a clockwise rotation, and the sum of squared errors for Gi after accounting for a counterclockwise rotation.

The optimal method to evaluate the plausibility ratio of the two alternative rotation directions given a particular transition is by using Bayes’ theorem to relate the probability of the direction given the transition, to the likelihood that the transition occurred as a result of some rotation angle in that direction, and the prior probability of that direction (MacKay, 2003):

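$$
\frac{P(cw \mid G_i)}{P(cc \mid G_i)} = \frac{\sum_{\theta_{cw}} P(G_i \mid \theta_{cw})\, P_i(cw)}{\sum_{\theta_{cc}} P(G_i \mid \theta_{cc})\, P_i(cc)} \tag{1}
$$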

where Pi(cw) and Pi(cc) are the prior probabilities for clockwise and counterclockwise rotations (based on the experimental design, priors were set equal to 0.5). The likelihoods for stimulus transition Gi, P(Gi |θcw) and P(Gi |θcc), were calculated for each rotation angle independently using

| (2) |

where the error term is the sum of squared errors for transition Gi after accounting for a rotation by an angle θk.

The rotation was considered clockwise for 0 < θk < π and counterclockwise for −π < θk < 0. Assuming that judgments on each transition were independent of other transitions, the plausibility ratio for each trial was taken as the product of the ratios calculated for all transitions in that trial. The outcome of the trial was taken as clockwise if the trial ratio was larger than 1.0 and as counterclockwise otherwise.
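The decision rule described above can be sketched as follows, under several simplifying assumptions that are not taken from the source. Candidate rotations are restricted to whole-spoke shifts. The shape error uses radial offsets only, without the translation and scaling steps. The likelihood is taken to be Gaussian in the squared error, proportional to exp(−SSE / 2σ²), with σ a free noise parameter. The function names are hypothetical, and +1 stands for the positive spoke-index direction, which the text's convention (0 < θk < π) labels clockwise.

```python
import numpy as np


def sse_for_shift(r_a, r_b, k):
    """Squared error between frame b and frame a rotated by k spokes."""
    return float(np.sum((np.roll(r_a, k) - r_b) ** 2))


def _logsumexp(x):
    """Numerically stable log of a sum of exponentials."""
    m = x.max()
    return m + np.log(np.exp(x - m).sum())


def log_direction_ratio(r_a, r_b, sigma, prior_pos=0.5):
    """Log posterior ratio (positive vs. negative direction) for one transition."""
    n = r_a.size
    shifts = np.arange(1, n // 2)  # rotations strictly between 0 and pi
    ll_pos = np.array([-sse_for_shift(r_a, r_b, k) for k in shifts])
    ll_neg = np.array([-sse_for_shift(r_a, r_b, -k) for k in shifts])
    ll_pos = ll_pos / (2.0 * sigma ** 2)  # assumed Gaussian log-likelihoods
    ll_neg = ll_neg / (2.0 * sigma ** 2)
    log_prior = np.log(prior_pos) - np.log(1.0 - prior_pos)
    return _logsumexp(ll_pos) - _logsumexp(ll_neg) + log_prior


def decode_trial(frames, sigma):
    """Sum log ratios over transitions (product of ratios); +1 if ratio > 1."""
    total = sum(
        log_direction_ratio(a, b, sigma)
        for a, b in zip(frames[:-1], frames[1:])
    )
    return 1 if total > 0 else -1
```

Working in log space turns the product of per-transition ratios into a sum, and a trial ratio larger than 1.0 becomes a summed log ratio larger than 0. With equal priors the prior term vanishes, so the decision depends only on the likelihoods.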

Finally, the total numbers of correct rotation decisions were tallied to get an accuracy proportion over all trials belonging to each condition. These estimates are plotted for the stimuli of Experiments 1 and 2 in Figures 2G and 2H, respectively. The Global Rotation Model does as well as the human observers in both experiments, suggesting that the visual system could either use a rotation template or match shapes across rotations to accomplish the task. Note that the optimal model also performs with greater accuracy for the larger shape amplitude, reflecting the easier distinctions between cc and cw shape matches as shapes depart more from the generating circle.

Human vs. model efficiency

Psychophysical results can sometimes be explained quantitatively in terms of neural properties (e.g., Cohen & Zaidi, 2007a). However, there is little information about neurons that code complex motions (Duffy & Wurtz, 1991, 1995; Oram & Perrett, 1996) or complex forms (Gallant, Braun, & Van Essen, 1993; Pasupathy & Connor, 1999; Tanaka, 1996; Tanaka, Saito, Fukada, & Moriya, 1991). Therefore, instead of a neural model that predicts rotation direction, the Global Rotation Model provides optimal decoding of rotations at a computational level (Marr, 1982).

To confront the model with the same problems as the human observers, calculating the shape error required the model to estimate the center and angle of rotation from the frame data. In particular, the centroid of each frame was taken as the center of rotation, creating some variability with respect to the true center. We examined whether performance would improve if we provided the shape-matching algorithm with the true center of rotation or if the center of rotation was computed as a running average of centroids for all the frames. The improvement in both cases was barely discernible. Similarly, using exact errors, analytically computed from the stimulus generation routine, to calculate likelihoods, led to only a slight improvement in performance. This was probably because the shape-matching routine is quite accurate, as reflected by the fact that the distribution of errors computed by the model was very similar to the distribution of errors computed from the combinations of Gaussian noise distributions in the shape generation routine. It is worth noting that the model’s performance degraded if instead of the priors for clockwise and counterclockwise rotations being set at 0.5, the prior for each transition in the simulation was updated based on the outcome of the preceding transition.

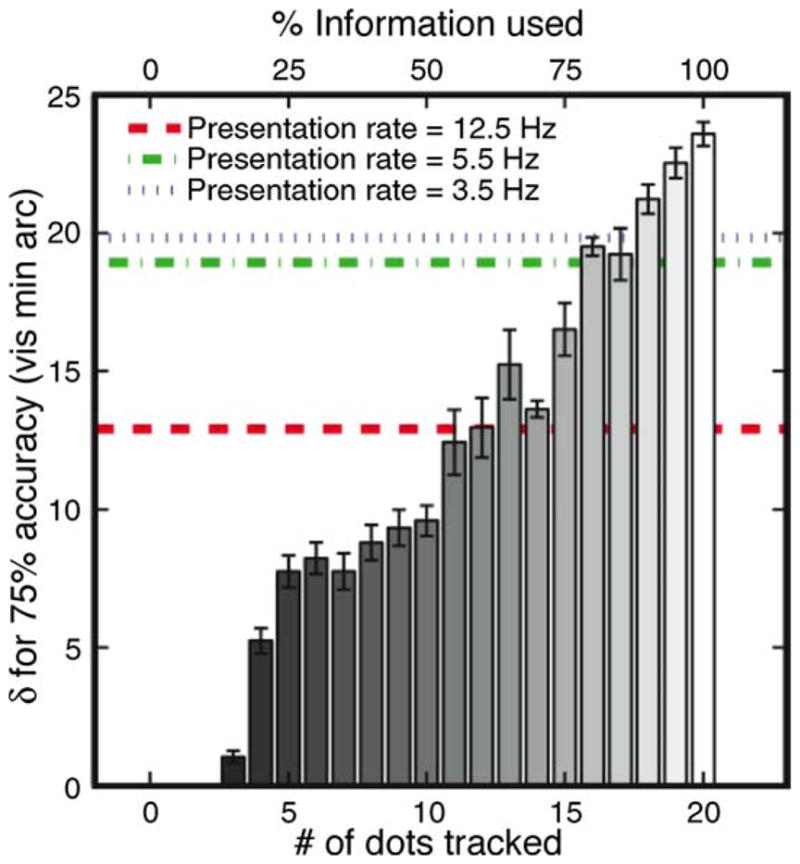

Since human and model accuracies decrease monotonically with dynamic jitter (Figure 2) and the task used a two-alternative forced choice, we summarized the performance curves by an accuracy threshold equal to the amount of noise at the 75% accuracy level. Unlike usual thresholds, in this case a higher accuracy threshold implies that the observer can tolerate more noise and hence performs better. The horizontal lines in Figure 4 show accuracy thresholds for human performance at three speeds for the higher shape amplitude in Experiment 1, where the shapes were more distinct. We simulated the thresholds of the optimal Global Rotation Model for the stimuli of Experiment 1, but instead of considering the whole shape, we considered only 1, 2, … or 20 consecutive dots, chosen randomly for each frame transition. The labels on the top of Figure 4 convert number of dots considered to percentage of available information used, which we will use as a measure of equivalent efficiency for human observers. At the slowest speed we tested, human observers performed almost as well as the model that used 18 of the 20 points, i.e., at 90% of the efficiency of the optimal decoder. This implies that the human visual system includes near-optimal processes for matching deforming shapes and/or for detecting rotation in the presence of strong distracting motions. The equivalent efficiency of human observers declined at the faster presentation rates. The equivalent linear speeds at these presentation rates were 3.8 and 8.6 dva/s. Since motion energy is extracted well at these speeds (Lu & Sperling, 1995; Zaidi & DeBonet, 2000), observer limitations at higher speeds may reflect the number of dots that can be used in shape or motion computations at the shorter stimulus durations.
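The quoted linear speeds and the 90% figure follow from the stimulus geometry given in the Methods. The sketch below assumes that linear speed means the arc length swept per second by a dot at the mean radius. The variable names are illustrative.

```python
import math

RADIUS_DVA = 132.0 / 60.0    # mean radius: 132 min arc in degrees of visual angle
STEP_RAD = math.radians(18)  # rotation per frame in Experiment 1

arc_per_frame = RADIUS_DVA * STEP_RAD  # arc length travelled per frame, in dva

# Linear speed at each presentation rate (frames per second).
speeds = {rate: arc_per_frame * rate for rate in (3.5, 5.5, 12.5)}

# Equivalent efficiency: dots used by the matched model over dots available.
efficiency = 18 / 20

for rate, speed in speeds.items():
    print(f"{rate:4.1f} Hz -> {speed:.2f} dva/s")
print(f"equivalent efficiency: {efficiency:.0%}")
```

Rounded to one decimal place, the two faster rates give the 3.8 and 8.6 dva/s quoted in the text.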

Figure 4.

Seventy-five percent accuracy thresholds for human performance at three presentation rates in Experiment 1 compared with the performance of the optimal Global Rotation Model using subsets of dots, or equivalently percent of available information. Error bars for the model simulations are standard errors calculated from 6 independent sets of stimuli, each containing the same number of trials as the psychophysical experiments.

Shape-driven motion

Experiment 3: Shape axis motion

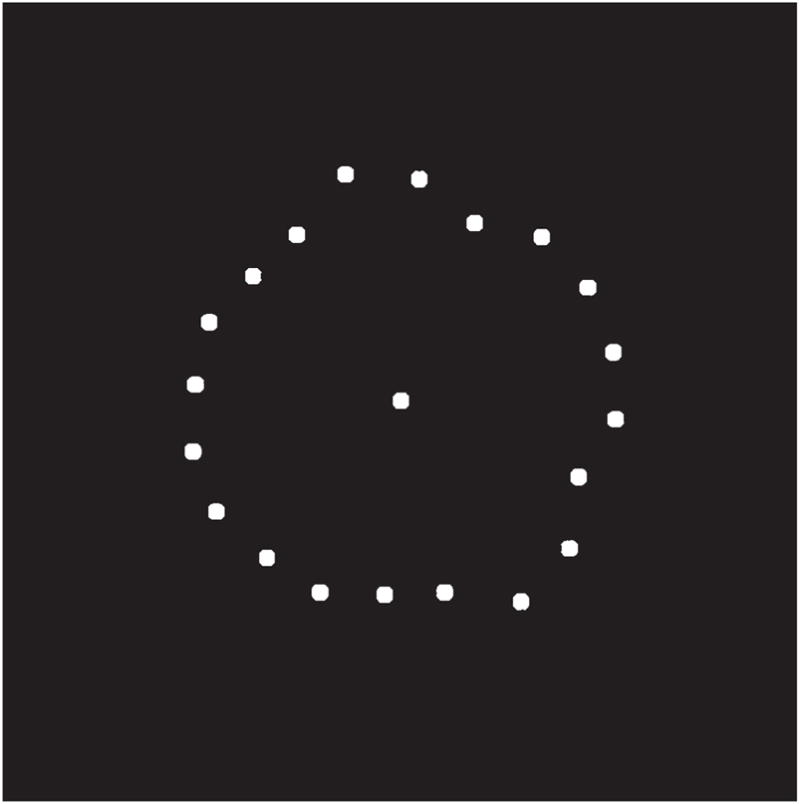

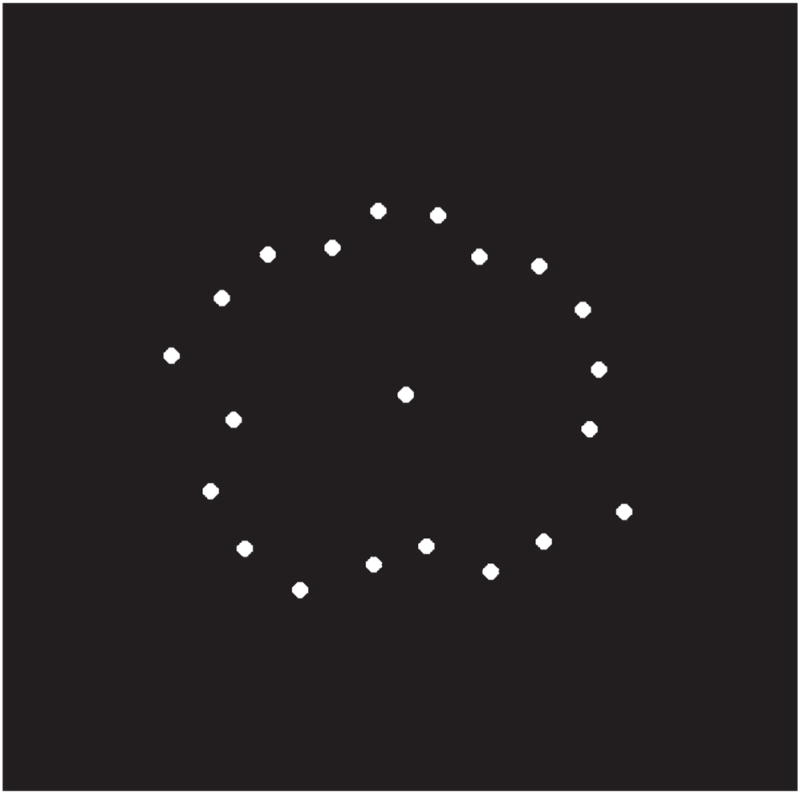

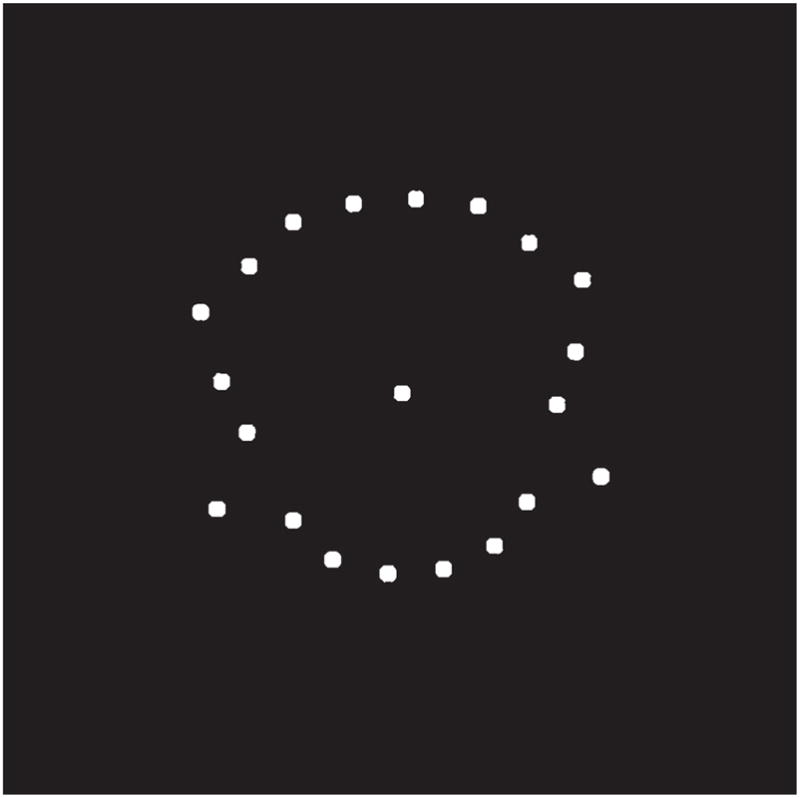

The next challenge was to devise an experiment in which rotation direction could be determined from an abstracted shape property in conditions where shape matches and rotation templates are insufficient. We sought a salient shape attribute that could retain spatiotemporal continuity without having features that are correlated across frames. Shape axes have been considered central defining features of shape representation (Marr & Nishihara, 1978), and this may be particularly true for axes of bilateral symmetry (Wilson & Wilkinson, 2002). In the extreme case, we presented essentially unique symmetric shapes on each frame, so that dot-based correlations were absent between successive frames, but the orientations of the symmetry axes rotated consistently in one direction. Thus, only an extracted symmetry axis could provide a cue to rotation direction.

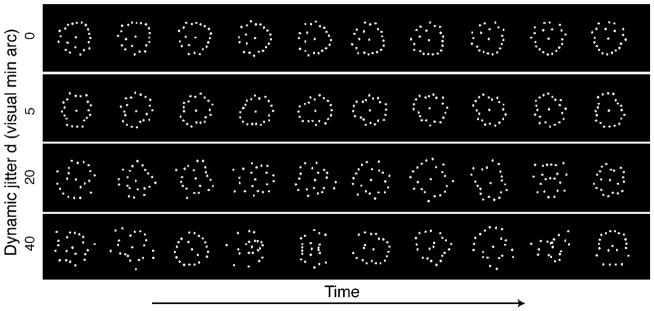

Stimuli were varied for symmetry in two ways. Base shapes were generated from circles to be either symmetric or asymmetric, and dynamic jitter was also either symmetric or asymmetric. Trials with symmetric base shapes and high levels of symmetric dynamic jitter consisted of a series of distinct symmetric shapes with a continuously turning axis (Figure 5, Movies 5–8).
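The text does not specify how the symmetric base shapes or the symmetric jitter were generated, so the following is only one plausible construction, offered as an assumption: radial offsets are mirrored about an axis passing through a spoke, and the axis advances by one spoke per frame. The function names and the choice to place the axis on a spoke are hypothetical.

```python
import numpy as np


def symmetric_offsets(n_dots, axis_spoke, sd, rng):
    """Radial offsets that are mirror-symmetric about the given spoke."""
    half = n_dots // 2
    free = rng.normal(0.0, sd, half + 1)  # one value per step from the axis
    offsets = np.empty(n_dots)
    for j in range(half + 1):
        offsets[(axis_spoke + j) % n_dots] = free[j]
        offsets[(axis_spoke - j) % n_dots] = free[j]
    return offsets


def symmetric_trial(alpha, delta, n_frames=10, n_dots=20, direction=1,
                    base_radius=132.0, rng=None):
    """Symmetric base shape plus symmetric jitter, axis turning every frame."""
    rng = np.random.default_rng() if rng is None else rng
    base = symmetric_offsets(n_dots, 0, alpha, rng)  # fixed for the trial
    frames = np.empty((n_frames, n_dots))
    for i in range(n_frames):
        axis = (direction * i) % n_dots
        # Fresh jitter on each frame, mirrored about the current axis.
        jitter = symmetric_offsets(n_dots, axis, delta, rng)
        frames[i] = base_radius + np.roll(base, direction * i) + jitter
    return frames
```

With `delta` much larger than `alpha`, successive frames share almost no dot-level structure, yet every frame remains symmetric about an axis that advances one spoke at a time. This corresponds to the condition in which only the extracted axis carries the direction signal.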

Figure 5.

Four representative trials in the symmetric shape/symmetric jitter condition from Experiment 3 at four different dynamic jitter levels. Trials with high dynamic jitter appear to display a unique shape on each frame while presenting a unidirectional rotation.

Movie 5.

Representative trial from Experiment 3 at 2.5 Hz and symmetric dynamic jitter value (δ) = 0. Recommended viewing distance 1 m.

Movie 8.

Representative trial from Experiment 3 at 2.5 Hz and symmetric dynamic jitter value (δ) = 40. Recommended viewing distance 1 m.

In Experiment 3, all stimulus parameters from Experiment 1 were repeated for all combinations of base shape and dynamic jitter. Therefore, there were four times as many experimental conditions (2 types of base shape × 2 types of dynamic jitter). This produced a total of 144 conditions (36 × 4). Each experimental block contained all conditions in random order. Twenty blocks were run for each subject. All six participants from Experiment 1 participated in Experiment 3.

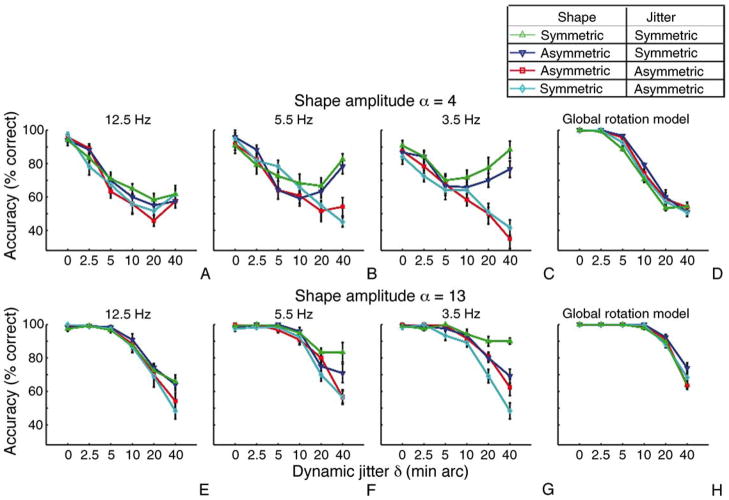

Performance accuracy in Experiment 3 as a function of dynamic jitter is plotted in Figures 6A–6C and 6E–6G for two shape amplitudes and three presentation rates. With low levels of dynamic jitter, rotations of symmetric and asymmetric shapes were detected equally well. With increasing dynamic jitter, the symmetric dynamic jitter results form U-shaped functions. As dynamic jitter magnitude increased, making each sequential shape more distinct from its temporal neighbors, symmetric motions remained detectable for the two slower presentation rates. Observers perceived the stimulus as rotating, despite lack of correspondence between local elements, at magnitudes of dynamic jitter that made it impossible for observers to detect asymmetric global rotation at any presentation rate. There was a significant main effect for symmetric versus asymmetric jitter (F(1, 5) = 130.5, p < 0.001) and an interaction between jitter symmetry and jitter amplitude (F(5, 5) = 33.6, p < 0.001). As shown in Figures 6D and 6H, the Global Rotation Model demonstrated no significant difference between symmetric and asymmetric stimuli from Experiment 3. This demonstrates that observers’ performance on symmetric shapes was not due to dot-based shape or motion correlations. Comparison of human and model performances shows that observers were capable of outdoing the optimal global model by extracting relevant shape attributes that are invisible to shape matching and rotation templates.

Figure 6.

(A–C and E–G) Results from Experiment 3 for symmetric and asymmetric random shapes. (D, H) Accuracy estimates from the Global Rotation Model for the two shape amplitudes.

The question arises whether discerned movement of symmetric shapes is based on perceived motion or on perceived changes of orientation, a variant of the dichotomy between motion energy and feature tracking (Lu & Sperling, 1995; Zaidi & DeBonet, 2000). Phenomenologically, in these trials, unless the shapes were perceived as symmetric, the dots were seen in random motion. When symmetry was perceived, the impression was that of a shape changing during rotation but not always smoothly. Direction discernment thus required following the rotation actively, consistent with feature tracking or “conceptual motion” (Blaser & Sperling, 2008; Seiffert & Cavanagh, 1998). However, symmetry-based motion exhibits at least one signature of “perceptual motion”, i.e., motion aftereffects. To test for motion aftereffects, we adapted for 30-s epochs to shapes from the Symmetric-shape + Symmetric-jitter conditions with α = 4 and δ = 40 rotated at 3.5 Hz in Experiment 3. As shown in Figure 6C, the greatest performance advantage for symmetry occurs in this condition. Two authors (AJ and QZ) reported the direction of the adapting stimulus and then judged the direction of rotation of dots forming a circle of the same average size as the adapting stimulus. The dots of the circle were rotated half the inter-spoke angle at 3.5 Hz, hence were equally likely to be seen to move in clockwise and counterclockwise directions, unless biased by motion adaptation. For the Symmetric shapes, AJ judged 30/30 of the adapting directions correctly while QZ judged 26/30 correctly. More to the point, AJ judged the motion aftereffects to be in the direction opposite to the simulated motion 26/30 times and QZ judged 22/30 times (chance performance can be rejected for both observers at p < 0.01). Both observers noted that unlike judging the direction of the jittered symmetric shapes, judging the direction of the aftereffects seemed effortless. 
These aftereffects were not an artifact: adaptation to Asymmetric-shape + Asymmetric-jitter stimuli of the same size and speed did not produce reliable aftereffects. Direction judgments for the Asymmetric adapting shapes were essentially at chance (13/30 and 15/30 correct), as were aftereffects reported in the direction opposite to the simulated motion (13/30 and 16/30).
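
The comparison with chance can be checked with an exact binomial test. The sketch below assumes a one-sided test against a guessing rate of 0.5, with each of the 30 reports treated as independent; the text does not state which test was used, and the function name `binomial_tail` is illustrative.

```python
from math import comb

def binomial_tail(successes: int, trials: int, p_chance: float = 0.5) -> float:
    """One-sided exact binomial p-value: P(X >= successes) under chance."""
    return sum(
        comb(trials, k) * p_chance**k * (1 - p_chance) ** (trials - k)
        for k in range(successes, trials + 1)
    )

# Aftereffect reports opposite to the simulated rotation (counts from the text).
reports = {
    "AJ, symmetric": 26,
    "QZ, symmetric": 22,
    "asymmetric, 13/30": 13,
    "asymmetric, 16/30": 16,
}
for label, count in reports.items():
    print(f"{label}: {count}/30, one-sided p = {binomial_tail(count, 30):.4f}")
```

Under these assumptions both symmetric-shape counts fall below p = 0.01 (22/30 gives p ≈ 0.008), whereas the asymmetric counts are far from significance. A two-sided test would roughly double these values.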

Experiment 4: Symmetry extraction durations

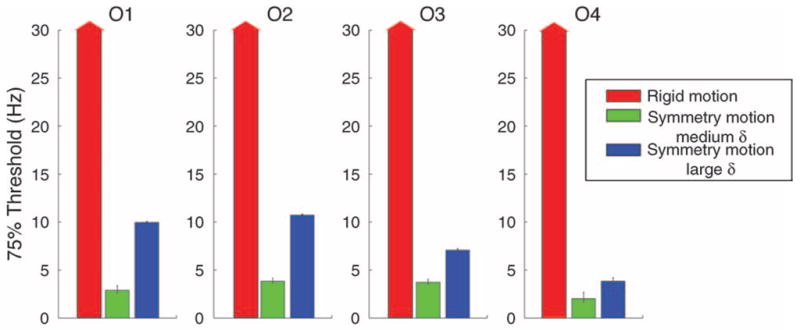

The results of Experiment 3 showed a decrease in accuracy for symmetric stimuli at higher presentation rates. To test whether this effect is due to the time demands of symmetry detection, Experiment 4 was designed to measure duration thresholds for perceiving the rotation of symmetric shapes. Four of the six observers (author EC and three others) were presented with rotations of either rigid asymmetric shapes or symmetric shapes with large magnitudes of symmetric dynamic jitter, at nine presentation rates ranging from 1.5 to 60.0 Hz.

Figure 7 shows presentation rate thresholds at 75% accuracy, estimated from psychometric functions fitted to accuracy (Wichmann & Hill, 2001). Observer performance for rigid motion trials was above threshold at all presentation rates. The average threshold for detecting symmetry axis rotation was 7.9 Hz (SE = 1.6) for jitter amplitude δ = 40 and 3.1 Hz (SE = 0.41) for δ = 20. Symmetry perception is possible in intervals as short as 50 ms under ideal conditions (Julesz, 1971) but can take considerably longer for complex stimuli (Cohen & Zaidi, 2007b) and non-vertical orientations (Barlow & Reeves, 1979). The presentation rate threshold for the larger δ translates into a frame duration threshold of roughly 125 ms (1/7.9 Hz ≈ 127 ms). This result suggests that observers need presentation durations compatible with symmetry extraction to detect rotation of the symmetry axis.
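
To make the threshold estimate concrete, the sketch below fits a two-parameter psychometric function to accuracy as a function of presentation rate and reads off the 75% point. It is a simplified stand-in for the Wichmann and Hill (2001) procedure: the logistic form on log rate, the absence of a lapse parameter, the grid-search fit, and all data values are assumptions made for illustration, not the authors' analysis.

```python
import math

def p_correct(rate_hz, threshold_hz, slope):
    """Assumed psychometric function: accuracy falls from 1.0 to 0.5 as rate rises.

    By construction, accuracy is exactly 0.75 when rate_hz == threshold_hz.
    """
    z = slope * (math.log(rate_hz) - math.log(threshold_hz))
    return 0.5 + 0.5 / (1.0 + math.exp(z))

def log_likelihood(rates, n_correct, n_trials, threshold_hz, slope):
    """Binomial log-likelihood of the observed counts (constant terms dropped)."""
    total = 0.0
    for rate, k, n in zip(rates, n_correct, n_trials):
        # Clamp to avoid log(0) at extreme parameter values.
        p = min(max(p_correct(rate, threshold_hz, slope), 1e-9), 1 - 1e-9)
        total += k * math.log(p) + (n - k) * math.log(1 - p)
    return total

def fit_threshold(rates, n_correct, n_trials):
    """Grid-search maximum-likelihood fit; returns (threshold_hz, slope)."""
    thresholds = [0.1 * i for i in range(10, 601)]   # 1.0 to 60.0 Hz
    slopes = [0.25 * j for j in range(1, 41)]        # 0.25 to 10.0
    return max(
        ((t, s) for t in thresholds for s in slopes),
        key=lambda ts: log_likelihood(rates, n_correct, n_trials, *ts),
    )

# Hypothetical counts for one observer at nine rates (not data from the study).
rates = [1.5, 2.5, 3.5, 5.0, 7.5, 10.0, 15.0, 30.0, 60.0]
n_trials = [40] * len(rates)
n_correct = [40, 39, 38, 36, 31, 27, 23, 21, 20]

threshold_hz, slope = fit_threshold(rates, n_correct, n_trials)
frame_ms = 1000.0 / threshold_hz   # one frame lasts 1/rate seconds
print(f"75% threshold: {threshold_hz:.1f} Hz, frame duration: {frame_ms:.0f} ms")
```

The last step is the same conversion used in the text: a rate threshold in Hz corresponds to a frame duration of 1000 divided by that rate, in milliseconds.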

Figure 7.

Results from Experiment 4 for each of four observers (O1–O4). Red arrows for rigid motion stimuli indicate that performance was above threshold at all presented rates. Green bars show results for jitter amplitude δ = 20 and blue bars for δ = 40.

Discussion

The results of this study have a number of implications for understanding object motion perception. The results of Experiments 1 and 2 show that human observers are almost as efficient at detecting the rotation of arbitrarily deforming objects as the optimal statistical decoder. Despite competition from stronger local motion signals, global rotation is discriminated easily and accurately in the presence of even high levels of positional noise. The random jitter in this method may be seen as reflecting a worst-case scenario for motion of deforming objects. In real-world deformations, local motions will be more systematic and there is likely to be a higher shape correlation across successive frames. Human observers may thus be expected to do better than a shape-matching model for most natural deformations, especially those that present extra information like elongations.

The results of Experiments 3 and 4 show that observers can use symmetry axes to infer motion direction, even when form or motion information from the contour is uninformative. Stable appearance descriptors can be a tremendous aid in the critical task of object recognition. For example, shape is the geometric descriptor that is invariant to translation, rotation, and scaling (Kendall, Barden, Carne, & Le, 1999). It has been suggested that by detecting and encoding an object’s shape structure, the visual system may form a robust object representation that is stable across changes in viewing conditions and efficiently characterizes spatially distributed information (Biederman, 1987; Hoffman & Richards, 1984; Marr & Nishihara, 1978). Some retinal projections of 3-D objects, however, introduce shape distortions that the visual system is unable to discount (Griffiths & Zaidi, 1998, 2000). In addition, many objects are articulated, plastic, or elastic, so a rigid shape description is insufficient. In such cases, invariant shape properties like symmetry may aid in recreation and recognition of volumetric shapes from images (Pizlo, 2008). In fact, bilateral symmetry is widespread in natural and man-made objects (Tyler, 1996), and axes based on local symmetry have been found to be useful in representing shapes (Blum, 1973; Feldman & Singh, 2006; Leyton, 1992; Marr & Nishihara, 1978). Our results show that in identifying the path of a moving object, where disparate and spatially distributed local motion signals need to be combined (Hildreth, 1984; Ullman, 1979; Yuille & Grzywacz, 1988), the visual system may benefit from representation of object structures such as axes of symmetry. Symmetry was chosen as the property examined in these studies due to its separability from local contour properties, but it is possible that other abstract properties related to the contour may also benefit motion processing.

The motivation for this study was to identify the influence of form processing on the perception of object motion. The results identify several such influences. First, the selection of local motion information for combination into a global percept depends not on the relative strengths of local directional signals but on the most plausible or coherent global motion (note that the dot motions are compatible with rotation in either direction if it is coupled with sufficient shape distortions). The global percept may be a result of the activation of a rotation template overriding an incoherent percept formed by the strongest local motion signals, or it may result from a process that infers the direction of motion as that which gives the strongest shape correlation across frames. The latter generalizes the basic idea behind Reichardt’s (1961) detector to object motions more complex than translation (Lu & Sperling, 1995). Second, the shapes we used in the first two experiments had no inherent orientation, so the inference of rotation from one frame to the next could not have been based on any intrinsic quality of the shapes but solely on the post-rotation point-wise correlation or the most coherent global motion. Third, for these arbitrary shapes, the high efficiency of human motion perception could be due to motion information alone, shape information alone, or both. The above-threshold accuracy for motion detection of the dynamically varying symmetric shapes, however, cannot be based on any rotation-sensitive neuron fed by motion-energy signals, or on correlating contours of the shape, so it must be based on shape representations that explicitly label the extracted symmetry axes. It is possible that shape representations that include abstracted attributes may provide solutions to a broad range of problems in conditions where contours or features create ambiguous percepts.
A general model for the perception of object motion should thus include representation processes that highlight shape attributes.
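
The shape-correlation account of direction inference discussed above can be illustrated with a minimal sketch. It assumes that each frame is summarized by the radial positions of the dots on evenly spaced spokes and that the shape rotates by exactly one inter-spoke step per frame. The function names and example radii are hypothetical, and the code is not the rotation decoder used in the study.

```python
import random

def pearson(xs, ys):
    """Pearson correlation between two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5

def rotate(radii, steps):
    """Rotate a shape by whole spokes: the dot on spoke i moves to spoke i + steps."""
    n = len(radii)
    return [radii[(i - steps) % n] for i in range(n)]

def infer_direction(frame_a, frame_b):
    """Return +1 or -1, whichever one-spoke rotation of frame_a best matches frame_b."""
    r_plus = pearson(rotate(frame_a, +1), frame_b)
    r_minus = pearson(rotate(frame_a, -1), frame_b)
    return +1 if r_plus >= r_minus else -1

def jittered(radii, delta, rng):
    """Add independent uniform jitter in [-delta, delta] to every dot."""
    return [r + rng.uniform(-delta, delta) for r in radii]

# Hypothetical shape on 8 spokes, rotated one step with small positional jitter.
rng = random.Random(0)
shape = [10.0, 50.0, 20.0, 80.0, 30.0, 90.0, 40.0, 70.0]
next_frame = jittered(rotate(shape, +1), delta=5.0, rng=rng)
print("inferred direction:", infer_direction(shape, next_frame))
```

As the jitter grows relative to the shape's own radial variation, the two correlations converge, which is one way to see why large random jitter is a worst case for a decoder of this kind.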

Movie 2.

Representative trial from Experiment 1 at 3.5 Hz, α = 10, and dynamic jitter value (δ) = 5. Recommended viewing distance 1 m.

Movie 3.

Representative trial from Experiment 1 at 3.5 Hz, α = 10, and dynamic jitter value (δ) = 20. Recommended viewing distance 1 m.

Movie 6.

Representative trial from Experiment 3 at 2.5 Hz and symmetric dynamic jitter value (δ) = 5. Recommended viewing distance 1 m.

Movie 7.

Representative trial from Experiment 3 at 2.5 Hz and symmetric dynamic jitter value (δ) = 20. Recommended viewing distance 1 m.

Acknowledgments

This work was supported by NEI Grants EY07556 and EY13312 to Q.Z.

Footnotes

Commercial relationships: none.

Contributor Information

Elias H. Cohen, Graduate Center for Vision Research, State University of New York, College of Optometry, New York, NY, USA, & Psychology Department, Vanderbilt University, Nashville, TN, USA.

Anshul Jain, Graduate Center for Vision Research, State University of New York, College of Optometry, New York, NY, USA.

Qasim Zaidi, Graduate Center for Vision Research, State University of New York, College of Optometry, New York, NY, USA.

References

- Akhter I, Sheikh YA, Khan S, Kanade T. Nonrigid structure from motion in trajectory space. Paper presented at Neural Information Processing Systems. 2008.

- Anstis S. Local and global segmentation of rotating shapes viewed through multiple slits. Journal of Vision. 2005;5(3):4, 194–201. doi: 10.1167/5.3.4. http://www.journalofvision.org/content/5/3/4.

- Barlow HB, Reeves BC. The versatility and absolute efficiency of detecting mirror symmetry in random dot displays. Vision Research. 1979;19:783–793. doi: 10.1016/0042-6989(79)90154-8.

- Biederman I. Recognition-by-components: A theory of human image understanding. Psychological Review. 1987;94:115–147. doi: 10.1037/0033-295X.94.2.115.

- Blaser E, Sperling G. When is motion “motion”? Perception. 2008;37:624–627. doi: 10.1068/p5812.

- Blum H. Biological shape and visual science. I. Journal of Theoretical Biology. 1973;38:205–287. doi: 10.1016/0022-5193(73)90175-6.

- Caplovitz GP, Tse PU. Rotating dotted ellipses: Motion perception driven by grouped figural rather than local dot motion signals. Vision Research. 2007a;47:1979–1991. doi: 10.1016/j.visres.2006.12.022.

- Caplovitz GP, Tse PU. V3A processes contour curvature as a trackable feature for the perception of rotational motion. Cerebral Cortex. 2007b;17:1179–1189. doi: 10.1093/cercor/bhl029.

- Chen Y, Martinez-Conde S, Macknik SL, Bereshpolova Y, Swadlow HA, Alonso JM. Task difficulty modulates the activity of specific neuronal populations in primary visual cortex. Nature Neuroscience. 2008;11:974–982. doi: 10.1038/nn.2147.

- Cohen EH, Zaidi Q. Fundamental failures of shape constancy resulting from cortical anisotropy. Journal of Neuroscience. 2007a;27:12540–12545. doi: 10.1523/JNEUROSCI.4496-07.2007.

- Cohen EH, Zaidi Q. Salience of mirror symmetry in natural patterns [Abstract]. Journal of Vision. 2007b;7(9):970, 970. doi: 10.1167/7.9.970. http://www.journalofvision.org/content/7/9/970.

- Driver J, Baylis GC. Movement and visual attention: The spotlight metaphor breaks down. Journal of Experimental Psychology: Human Perception and Performance. 1989;15:448–456. doi: 10.1037//0096-1523.15.3.448.

- Duffy CJ, Wurtz RH. Sensitivity of MST neurons to optic flow stimuli. I. A continuum of response selectivity to large-field stimuli. Journal of Neurophysiology. 1991;65:1329–1345. doi: 10.1152/jn.1991.65.6.1329.

- Duffy CJ, Wurtz RH. Response of monkey MST neurons to optic flow stimuli with shifted centers of motion. Journal of Neuroscience. 1995;15:5192–5208. doi: 10.1523/JNEUROSCI.15-07-05192.1995.

- Fang F, Kersten D, Murray SO. Perceptual grouping and inverse fMRI activity patterns in human visual cortex. Journal of Vision. 2008;8(7):2, 1–9. doi: 10.1167/8.7.2. http://www.journalofvision.org/content/8/7/2.

- Feldman J, Singh M. Bayesian estimation of the shape skeleton. Proceedings of the National Academy of Sciences of the United States of America. 2006;103:18014–18019. doi: 10.1073/pnas.0608811103.

- Gallant JL, Braun J, Van Essen DC. Selectivity for polar, hyperbolic, and Cartesian gratings in macaque visual cortex. Science. 1993;259:100–103. doi: 10.1126/science.8418487.

- Giese MA, Poggio T. Neural mechanisms for the recognition of biological movements. Nature Reviews Neuroscience. 2003;4:179–192. doi: 10.1038/nrn1057.

- Griffiths AF, Zaidi Q. Rigid objects that appear to bend. Perception. 1998;27:799–802. doi: 10.1068/p270799.

- Griffiths AF, Zaidi Q. Perceptual assumptions and projective distortions in a three-dimensional shape illusion. Perception. 2000;29:171–200. doi: 10.1068/p3013.

- Hawken MJ, Parker AJ, Lund JS. Laminar organization and contrast sensitivity of direction-selective cells in the striate cortex of the Old World monkey. Journal of Neuroscience. 1988;8:3541–3548. doi: 10.1523/JNEUROSCI.08-10-03541.1988.

- Hildreth EC. Measurement of visual motion. Cambridge, MA: MIT Press; 1984.

- Hoffman DD, Richards WA. Parts of recognition. Cognition. 1984;18:65–96. doi: 10.1016/0010-0277(84)90022-2.

- Hubel DH, Wiesel TN. Receptive fields and functional architecture of monkey striate cortex. The Journal of Physiology. 1968;195:215–243. doi: 10.1113/jphysiol.1968.sp008455.

- Jaynes ET. Probability theory: The logic of science. Cambridge, UK: Cambridge University Press; 2003.

- Jenkins OC, Mataric MJ. A spatio-temporal extension to isomap nonlinear dimension reduction. Paper presented at the 21st International Conference on Machine Learning; Banff, Canada. 2004.

- Johansson G. Visual motion perception. Scientific American. 1975;232:76–88. doi: 10.1038/scientificamerican0675-76.

- Julesz B. Foundations of cyclopean perception. Chicago: The University of Chicago Press; 1971.

- Kandil FI, Lappe M. Spatio-temporal interpolation is accomplished by binocular form and motion mechanisms. PLoS One. 2007;2:e264. doi: 10.1371/journal.pone.0000264.

- Kendall DG, Barden D, Carne TK, Le H. Shape and shape theory. Hoboken, NJ: John Wiley & Sons; 1999.

- Leyton M. Symmetry, causality, mind. Cambridge, MA: MIT Press; 1992.

- Lorenceau J, Alais D. Form constraints in motion binding. Nature Neuroscience. 2001;4:745–751. doi: 10.1038/89543.

- Lu ZL, Sperling G. The functional architecture of human visual motion perception. Vision Research. 1995;35:2697–2722. doi: 10.1016/0042-6989(95)00025-u.

- MacKay DJC. Information theory, inference, and learning algorithms. Cambridge, UK: Cambridge University Press; 2003.

- Mardia KV, Dryden IL. The statistical analysis of shape data. Biometrika. 1989;76:271–281.

- Marr D. Vision: A computational investigation into the human representation and processing of visual information. San Francisco: W. H. Freeman; 1982.

- Marr D, Nishihara HK. Representation and recognition of the spatial organization of three-dimensional shapes. Proceedings of the Royal Society of London B: Biological Sciences. 1978;200:269–294. doi: 10.1098/rspb.1978.0020.

- McDermott J, Adelson EH. The geometry of the occluding contour and its effect on motion interpretation. Journal of Vision. 2004;4(10):9, 944–954. doi: 10.1167/4.10.9. http://www.journalofvision.org/content/4/10/9.

- McDermott J, Weiss Y, Adelson EH. Beyond junctions: Nonlocal form constraints on motion interpretation. Perception. 2001;30:905–923. doi: 10.1068/p3219.

- Morrone MC, Burr DC, Vaina LM. Two stages of visual processing for radial and circular motion. Nature. 1995;376:507–509. doi: 10.1038/376507a0.

- Movshon JA, Adelson EH, Gizzi MS, Newsome WT. The analysis of moving visual patterns. In: Chagas C, Gattas R, Gross CG, editors. Pattern recognition mechanisms. Rome, Italy: Vatican Press; 1985. pp. 117–151.

- Murray SO, Kersten D, Olshausen BA, Schrater P, Woods DL. Shape perception reduces activity in human primary visual cortex. Proceedings of the National Academy of Sciences of the United States of America. 2002;99:15164–15169. doi: 10.1073/pnas.192579399.

- Nishida S. Motion-based analysis of spatial patterns by the human visual system. Current Biology. 2004;14:830–839. doi: 10.1016/j.cub.2004.04.044.

- Oram MW, Perrett DI. Integration of form and motion in the anterior superior temporal polysensory area (STPa) of the macaque monkey. Journal of Neurophysiology. 1996;76:109–129. doi: 10.1152/jn.1996.76.1.109.

- Pasupathy A, Connor CE. Responses to contour features in macaque area V4. Journal of Neurophysiology. 1999;82:2490–2502. doi: 10.1152/jn.1999.82.5.2490.

- Pizlo Z. 3D shape: Its unique place in visual perception. Cambridge, MA: MIT Press; 2008.

- Ramachandran VS, Armel C, Foster C, Stoddard R. Object recognition can drive motion perception. Nature. 1998;395:852–853. doi: 10.1038/27573.

- Reichardt W. Autocorrelation, a principle for evaluation of sensory information by the central nervous system. In: Rosenblith WA, editor. Principles of sensory communications. Hoboken, NJ: John Wiley & Sons; 1961. pp. 303–317.

- Seiffert AE, Cavanagh P. Position displacement, not velocity, is the cue to motion detection of second-order stimuli. Vision Research. 1998;38:3569–3582. doi: 10.1016/s0042-6989(98)00035-2.

- Singer JM, Sheinberg DL. Temporal cortex neurons encode articulated actions as slow sequences of integrated poses. Journal of Neuroscience. 2010;30:3133–3145. doi: 10.1523/JNEUROSCI.3211-09.2010.

- Tanaka K. Inferotemporal cortex and object vision. Annual Review of Neuroscience. 1996;19:109–139. doi: 10.1146/annurev.ne.19.030196.000545.

- Tanaka K, Saito H, Fukada Y, Moriya M. Coding visual images of objects in the inferotemporal cortex of the macaque monkey. Journal of Neurophysiology. 1991;66:170–189. doi: 10.1152/jn.1991.66.1.170.

- Tomasi C, Kanade T. Shape and motion from image streams under orthography: A factorization method. International Journal of Computer Vision. 1992;9:18.

- Torresani L, Hertzmann A, Bregler C. Nonrigid structure-from-motion: Estimating shape and motion with hierarchical priors. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2008;30:15. doi: 10.1109/TPAMI.2007.70752.

- Troje NF. Decomposing biological motion: A framework for analysis and synthesis of human gait patterns. Journal of Vision. 2002;2(5):2, 371–387. doi: 10.1167/2.5.2. http://www.journalofvision.org/content/2/5/2.

- Tse PU, Logothetis NK. The duration of 3-D form analysis in transformational apparent motion. Perception & Psychophysics. 2002;64:244–265. doi: 10.3758/bf03195790.

- Tyler CW. Human symmetry perception and its computational analysis. Utrecht, The Netherlands: VSP Press; 1996.

- Ullman S. The interpretation of visual motion. Cambridge, MA: MIT Press; 1979.

- Ungerleider LG, Mishkin M. Two cortical visual systems. In: Ingle DJ, Goodale MA, Mansfield RJW, editors. Analysis of visual behavior. Cambridge, MA: MIT Press; 1982. pp. 549–586.

- Vangeneugden J, Pollick F, Vogels R. Functional differentiation of macaque visual temporal cortical neurons using a parametric action space. Cerebral Cortex. 2009;19:593–611. doi: 10.1093/cercor/bhn109.

- Weiss Y, Adelson EH. Adventures with gelatinous ellipses: Constraints on models of human motion analysis. Perception. 2000;29:543–566. doi: 10.1068/p3032.

- Weiss Y, Simoncelli EP, Adelson EH. Motion illusions as optimal percepts. Nature Neuroscience. 2002;5:598–604. doi: 10.1038/nn0602-858.

- Wichmann FA, Hill NJ. The psychometric function: I. Fitting, sampling, and goodness of fit. Perception & Psychophysics. 2001;63:1293–1313. doi: 10.3758/bf03194544.

- Wilson HR, Wilkinson F. Symmetry perception: A novel approach for biological shapes. Vision Research. 2002;42:589–597. doi: 10.1016/s0042-6989(01)00299-1.

- Yacoob Y, Black MJ. Parametrized modeling and recognition of activities. Computer Vision and Image Understanding. 1999;73:232–247.

- Yuille AL, Grzywacz NM. A computational theory for the perception of coherent visual motion. Nature. 1988;333:71–74. doi: 10.1038/333071a0.

- Zaidi Q, DeBonet JS. Motion energy versus position tracking: Spatial, temporal, and chromatic parameters. Vision Research. 2000;40:3613–3635. doi: 10.1016/s0042-6989(00)00201-7.

- Zhang K, Sereno MI, Sereno ME. Emergence of position-independent detectors of sense of rotation and dilation with Hebbian learning: An analysis. Neural Computation. 1993;5:597–612.