Abstract

Structured illumination (SI) has long been regarded as a nonquantitative technique for obtaining sectioned microscopic images. Its lack of quantitative results has restricted the use of SI sectioning to qualitative imaging experiments, and has also limited researchers’ ability to compare SI against competing sectioning methods such as confocal microscopy. We show how to modify the standard SI sectioning algorithm to make the technique quantitative, and provide formulas for calculating the noise in the sectioned images. The results indicate that, for an illumination source providing the same spatially-integrated photon flux at the object plane, and for the same effective slice thicknesses, SI sectioning can provide higher SNR images than confocal microscopy for an equivalent setup when the modulation contrast exceeds about 0.09.

OCIS codes: (180.0180) Microscopy, (110.4280) Noise in imaging systems, (100.3010) Image reconstruction techniques, (180.6900) Three-dimensional microscopy, (100.6640) Superresolution

1. Introduction

Structured illumination (SI) is an optical sectioning technique compatible with widefield imaging microscopy, and has been shown to provide a depth resolution comparable to confocal microscopy [1]. Since its invention [2], SI microscopy has been widely used as a sectioning tool in bioimaging research, both at the cellular level (such as in 3D imaging of the cellular nuclear periphery [3], cellular fenestrations [4], and tubulin and kinesin dynamics [5]) and at the tissue level (such as in imaging of autofluorescence aggregation in the human eye [6], zebrafish development [7], and rat colonic mucosa [8]). SI has also been used as a super-resolution technique to break the diffraction limit [5, 9–11]. SI thus has the ability to maintain the high light collection capability of widefield imaging [12,13] while also removing out-of-plane light. It has, however, been criticized as being a non-quantitative technique, and for producing noisy data in comparison to the sectioned images derived from confocal microscopes. The analysis below shows that SI can easily be made quantitative by properly scaling the standard sectioning algorithm, and we also provide analytical expressions for the resulting noise in SI-sectioned images. Although Somekh et al. [14, 15] provide numerical simulations of the noise properties of SI-sectioned images, they use a non-quantitative algorithm and do not include the effects of out-of-focus light on the noise.

Quantitative sectioned images allow one to perform photon counting as if the regions above and below the sectioned layer were not present. While the resulting photon number estimate will be noisier than would be the case for imaging the slice without out-of-focus layers present, the mean value of the correctly scaled algorithm will equal the mean photon count one would obtain with a standard widefield microscope. This permits researchers to use standard methods [16] of correcting for the objective lens’ numerical aperture, optical transmission, and detector quantum efficiency to determine photon counts at the sectioned plane relative to the number of photoelectrons detected at the sensor plane. Allowing quantitative data to be obtained with SI sectioning thus gives researchers the ability to perform measurements of radiance, absolute reflectance, fluorophore quantum yield, and absolute fluorophore concentration within volumetric media [17, 18].

Finally, the analytical formulas for noise also enable us to roughly define an operational range for the modulation contrast at the section plane, such that for any contrast above this value one can expect SI to provide higher SNR data than confocal microscopy. For any contrast below this value, confocal imaging will out-perform SI.

2. Sectioning algorithm

The general approach in SI is to illuminate the object with a sinusoidal illumination pattern of the form [2]

$$s_i(x,y) = \frac{1}{2}\left[1 + m\cos(2\pi\nu x + \phi_i)\right] \tag{1}$$

at each of three spatial phases ϕ1 = 0, ϕ2 = 2π/3, and ϕ3 = 4π/3. Although other structured forms are possible [19], this one is particularly easy to implement. The quantity m is the modulation contrast (a number varying from 0 to 1), and ν is the modulation spatial frequency. The factor of 1/2 placed in front, not present in previous work, is used here in order to represent the fact that half of the illumination light is absorbed or reflected by the grid placed in the illumination path. If we take the limit m → 0, we obtain standard widefield illumination of half the intensity that one would obtain without the grid in place. Note that s represents a normalized illumination amplitude, ranging from 0 to 1.

Ignoring the effects of optical blurring, the resulting modulated images gi(x, y) are given by

$$g_i(x,y) = \frac{1}{2}\,d(x,y) + s_i(x,y)\,f(x,y) \tag{2}$$

for a planar object distribution f(x,y) and out-of-focus light d(x,y), both scaled to what one would obtain with standard widefield imaging. For fluorescence imaging, the absolute brightness f of the object contains the illumination irradiance I, the fluorophore quantum yield q, and a factor Ω resulting from integrating the angular distribution of fluorescence emission over the numerical aperture of the imaging optics: ffluor = IqΩ. For brightfield imaging, f is simply the illumination I multiplied by the object reflectance R: fbright = IR. Thus, the expression for gi is valid for the cases of both fluorescence imaging and brightfield imaging, but with a subtle difference in what f means for each case.

In order to obtain an optically sectioned image i(x,y) at the focal plane, the most common algorithm used is [1, 2, 8, 20–31]

$$i(x,y) = \sqrt{(g_1-g_2)^2 + (g_1-g_3)^2 + (g_2-g_3)^2} \tag{3}$$

based on square law detection. Alternative illumination patterns allow for different processing approaches [19]. SI for superresolution, for example, relies on the moiré effect to detect light emitted outside the conventional bandwidth limit.

Since the algorithm (3) operates on each pixel independently, we have dropped the spatial arguments (x,y) as unnecessary. (These can be added back into each equation at any point.) Inserting Eq. (1) into Eq. (2), substituting the result into Eq. (3), and applying trigonometric identities, we obtain the result

$$i = \frac{3m}{2\sqrt{2}}\,f \tag{4}$$

Thus, the sectioned image is a copy of the object distribution, as we expect, but scaled by the factor 3m/(2√2). The quantitatively-scaled algorithm is obtained by multiplying Eq. (3) by the inverse of this factor:

$$i = \frac{2\sqrt{2}}{3m}\sqrt{(g_1-g_2)^2 + (g_1-g_3)^2 + (g_2-g_3)^2} \tag{5}$$

Note that the algorithm assumes that the out-of-focus light d(x,y) does not change with a shift in the illumination pattern. A consequence of this result is that in order to obtain quantitative results for the sectioned image, one must estimate the modulation contrast m. A further assumption required is that of linearity, which in fluorescence imaging is limited to weakly fluorescent structures [1].
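The scaling argument above can be checked numerically on noise-free data. The following is a minimal sketch, not the authors' implementation. It assumes the illumination has the form s_i = ½[1 + m cos(2πνx + ϕ_i)], that each modulated image is g_i = d/2 + s_i f, and that the quantitative algorithm scales the root of the summed squared differences by 2√2/(3m). The test object `f`, the background `d`, and all function names are hypothetical.

```python
import numpy as np

PHASES = (0.0, 2.0 * np.pi / 3.0, 4.0 * np.pi / 3.0)

def illumination(x, m, nu, phase):
    """Normalized sinusoidal illumination (assumed form, includes the 1/2 factor)."""
    return 0.5 * (1.0 + m * np.cos(2.0 * np.pi * nu * x + phase))

def modulated_images(f, d, x, m, nu):
    """Three noise-free modulated images; d is assumed not to follow the pattern."""
    return [0.5 * d + illumination(x, m, nu, p) * f for p in PHASES]

def sectioned_image(g1, g2, g3, m):
    """Square-law sectioning, scaled by 2*sqrt(2)/(3m) to make it quantitative."""
    root = np.sqrt((g1 - g2) ** 2 + (g1 - g3) ** 2 + (g2 - g3) ** 2)
    return 2.0 * np.sqrt(2.0) / (3.0 * m) * root

def widefield_image(g1, g2, g3):
    """Widefield estimate from the same three images (scale factor 2/3)."""
    return 2.0 / 3.0 * (g1 + g2 + g3)

# Hypothetical 1D test data: an in-focus object on a strong uniform background.
x = np.linspace(0.0, 1.0, 256)
f = 100.0 + 50.0 * np.sin(2.0 * np.pi * 3.0 * x) ** 2
d = np.full_like(x, 400.0)
m, nu = 0.49, 20.0

g1, g2, g3 = modulated_images(f, d, x, m, nu)
i_sec = sectioned_image(g1, g2, g3, m)   # should reproduce f
i_wf = widefield_image(g1, g2, g3)       # should reproduce d + f
```

In this idealized setting `i_sec` returns `f` regardless of the background level, but only because the same value of `m` is used for generating and for scaling the data. Using m = 1 in `sectioned_image` for data generated with m = 0.49 would underestimate `f` by roughly a factor of two.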

The scale factor in front of the square root differs from that given in previous studies. The factor of 2 in the numerator appears as a result of the 1/2 scaling introduced into our definition of si(x, y) and thus is new. All previous authors have also assumed ideal modulation (m = 1), an assumption which introduces a large error into the quantitative result. Moreover, since the modulation m(x,y) is in general spatially varying, the error introduced is generally not a simple scalar factor for the whole image. As a whole, the literature shows wide disagreement over the appropriate scale factor to place in front of the square root. Refs [20,22,26,28,32,33] use √2/3, which is appropriate when m = 1 and the factor of 1/2 in s(x,y) is not used. Refs [2, 23–25,27,29] use a scale factor of 1, which is the most appropriate choice for a non-quantitative approach, while other authors [1, 8, 21, 31] use alternative factors without explanation.

In practice, one finds that even for ideal samples m cannot achieve the maximum value of 1. The modulation contrast, however, remains excellent (m > 0.5) in thin samples in which the sectioned plane is taken near the surface, but poor (m < 0.1) in dense tissue samples (in which multiple scattering is present) and in deeper layers of thinly scattering media.

3. Widefield image algorithm

In addition to the sectioned image algorithm (5), it is well known that one can form a widefield image representation iw(x, y) from the modulated images by

$$i_w(x,y) = \frac{2}{3}\left(g_1 + g_2 + g_3\right) \tag{6}$$

(Our scale factor differs from that of previous authors due to the factor of 1/2 used in the definition of s(x,y).) Once again inserting the formulas for the illumination and modulated images, Eqs. (1) and (2), we obtain

$$i_w = d + f \tag{7}$$

The widefield image is a simple sum of the sectioned plane and the out-of-focus contribution.

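For quantitative work, we also want to estimate the variance of the widefield image, for which we insert Eqs. (1), (2), and (6) into the standard variance formula var(iw) = 〈iw²〉 − 〈iw〉² and solve. Here the angle brackets 〈·〉 represent an expectation value. The first term in the variance formula is

$$\langle i_w^2\rangle = \frac{4}{9}\left\langle (g_1+g_2+g_3)^2\right\rangle = \frac{4}{9}\sum_{i=1}^{3}\sum_{j=1}^{3}\left[\tfrac{1}{4}\langle d^2\rangle + \tfrac{1}{2}s_i\langle d\rangle\langle f\rangle + \tfrac{1}{2}s_j\langle d\rangle\langle f\rangle + s_i s_j\langle f^2\rangle\right] \tag{8}$$

Here we have assumed that d and f are independent of one another, so that terms such as 〈d + f〉 = 〈d〉 + 〈f〉 and 〈df〉 = 〈d〉〈f〉. Because the si represent a normalized illumination distribution, the stochastic properties of the system are present only within the out-of-focus light d and the slice’s light distribution f, and not in the illumination s.

The second moment thus separates into four terms, each of which can be considered separately. Using trigonometric identities, in particular that the three phase-shifted cosines sum to zero, we obtain for the modulation factor in each term

$$\sum_{i=1}^{3}\sum_{j=1}^{3}\tfrac{1}{4} = \tfrac{9}{4} \tag{9}$$

$$\sum_{i=1}^{3}\sum_{j=1}^{3}\tfrac{1}{2}s_i = \tfrac{3}{2}\sum_{i=1}^{3}s_i = \tfrac{9}{4} \tag{10}$$

$$\sum_{i=1}^{3}\sum_{j=1}^{3}s_i s_j = \Big(\sum_{i=1}^{3}s_i\Big)^2 = \tfrac{9}{4} \tag{11}$$

giving the result

$$\langle i_w^2\rangle = \langle d^2\rangle + 2\langle d\rangle\langle f\rangle + \langle f^2\rangle = \langle d\rangle^2 + \mathrm{var}(d) + 2\langle d\rangle\langle f\rangle + \langle f\rangle^2 + \mathrm{var}(f)$$

where we have used 〈d²〉 = 〈d〉² + var(d), and likewise for f.

The second term in the variance formula is easily obtained from Eq. (7) as

$$\langle i_w\rangle^2 = \left(\langle d\rangle + \langle f\rangle\right)^2 = \langle d\rangle^2 + 2\langle d\rangle\langle f\rangle + \langle f\rangle^2$$

Putting the two results together produces

$$\mathrm{var}(i_w) = \mathrm{var}(d) + \mathrm{var}(f) \tag{12}$$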

Just as the mean value of the widefield image is a simple sum of the planar slice and the out-of-focus light, 〈iw〉 = 〈d〉 + 〈f〉, the variance of the widefield image is also a simple sum of the component variances.

4. Variance and SNR of sectioned images

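Next we can try to follow the same procedure for the sectioning algorithm (5) to obtain the variance of the sectioned image, var(i) = 〈i²〉 − 〈i〉². The second term in the variance formula is easily obtained from Eqs. (4) and (5) as 〈i〉² = 〈f〉². The first term can be obtained by inserting Eq. (5) to give

$$\langle i^2\rangle = \frac{8}{9m^2}\left\langle (g_1-g_2)^2 + (g_1-g_3)^2 + (g_2-g_3)^2\right\rangle \tag{13}$$

The next step is to substitute Eqs. (1) and (2) into this formula, but here one must be careful. Each modulated image gi provides different samples di and fi of the stochastic out-of-focus and slice light distributions, such that terms like 〈(d1 − d2)²〉 will be nonzero. That is, since d1 is independent of d2, we can write 〈d1d2〉 = 〈d1〉〈d2〉, whereas 〈d1²〉 = 〈d1〉² + var(d1). Once all cross-terms are eliminated from inside an expectation value, one can then take 〈di〉 → 〈d〉 and var(di) = var(d), and likewise for the fi as well. Thus, the first quadratic term inside the expectation value of Eq. (13) is

$$\langle (g_1-g_2)^2\rangle = \tfrac{1}{4}\left[\langle d_1^2\rangle + \langle d_2^2\rangle - 2\langle d_1\rangle\langle d_2\rangle\right] + \left(\langle d_1\rangle - \langle d_2\rangle\right)\left(s_1\langle f_1\rangle - s_2\langle f_2\rangle\right) + s_1^2\langle f_1^2\rangle + s_2^2\langle f_2^2\rangle - 2 s_1 s_2\langle f_1\rangle\langle f_2\rangle$$

Letting all 〈di〉 → 〈d〉 and 〈fi〉 → 〈f〉, we have

$$\langle (g_1-g_2)^2\rangle = \tfrac{1}{2}\mathrm{var}(d) + \left(s_1^2 + s_2^2\right)\mathrm{var}(f) + (s_1 - s_2)^2\langle f\rangle^2$$

Doing this also for the second and third squared terms in Eq. (13) and combining gives

$$\langle i^2\rangle = \frac{8}{9m^2}\left[\tfrac{3}{2}\mathrm{var}(d) + 2\Big(\sum_i s_i^2\Big)\mathrm{var}(f) + \Big(\sum_{i<j}(s_i-s_j)^2\Big)\langle f\rangle^2\right] = \frac{4}{3m^2}\mathrm{var}(d) + \frac{4+2m^2}{3m^2}\mathrm{var}(f) + \langle f\rangle^2$$

where we have also used the results of Eqs. (10) and (11), together with Σi si² = 3/4 + 3m²/8, so that Σi<j (si − sj)² = 3 Σi si² − (Σi si)² = 9m²/8. Incorporating this result into the variance formula gives

$$\mathrm{var}(i) = \frac{4}{3m^2}\mathrm{var}(d) + \frac{4+2m^2}{3m^2}\mathrm{var}(f) \tag{14}$$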

The variance of the sectioned image is thus dependent on the out-of-focus contribution in addition to the variance of f. (Recall that f represents the light obtained from a single standard widefield image of just the planar slice itself.) Both terms contain a dependence on the modulation contrast, so that as the modulation approaches zero (m → 0), the variance in the sectioned image increases without bound, as we should expect.
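To make the dependence on m concrete, the sketch below evaluates the sectioned-image variance for a few cases. It is illustrative only and rests on a stated assumption: that the variance takes the form var(i) = [4 var(d) + (4 + 2m²) var(f)] / (3m²), which is the form obtained by treating each modulated image as g_i = d_i/2 + s_i f_i with independent samples. This form reproduces the theoretical noise ratio of 2.49 quoted in Sec. 6.1 for m̂ = 0.49. The function names are hypothetical.

```python
import math

def sectioned_variance(m, var_d, var_f):
    """Variance of the quantitatively scaled sectioned image (assumed form)."""
    if not 0.0 < m <= 1.0:
        raise ValueError("modulation contrast m must lie in (0, 1]")
    return (4.0 * var_d + (4.0 + 2.0 * m ** 2) * var_f) / (3.0 * m ** 2)

def noise_ratio_poisson(m, mean_d, mean_f):
    """Ratio of sectioned-image noise to the shot noise of f alone.

    Assumes Poisson statistics, so that var(d) = <d> and var(f) = <f>.
    """
    return math.sqrt(sectioned_variance(m, mean_d, mean_f) / mean_f)

# Ideal modulation, no out-of-focus light: the variance of f is doubled.
ideal = sectioned_variance(1.0, 0.0, 1.0)

# Thin sample with m = 0.49 and no out-of-focus light.
r_thin = noise_ratio_poisson(0.49, 0.0, 4.33e4)

# The same slice with twice as much out-of-focus light as in-focus light.
r_thick = noise_ratio_poisson(0.49, 2 * 4.33e4, 4.33e4)
```

Because both terms scale as 1/m², halving the modulation contrast roughly doubles the noise standard deviation when m is small.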

The theoretical expression for the sectioned image variance Eq. (14) indicates that when the illumination produces ideal modulation contrast at the slice plane (m = 1), the sectioning algorithm amplifies noise in the slice image by a factor of √2 (in standard deviation) relative to the stochastic noise in f. For most cases, a large out-of-focus contribution is present, and this not only reduces the modulation contrast but adds to the noise as well. Then m becomes small, and in this regime the variance approximates to

$$\mathrm{var}(i) \approx \frac{4}{3m^2}\left[\mathrm{var}(d) + \mathrm{var}(f)\right] = \frac{4}{3m^2}\,\mathrm{var}(i_w)$$

so that the signal-to-noise ratio of the sectioned image in the weak modulation regime is reduced by a factor √3 m/2 relative to that of a standard widefield image,

$$\frac{\langle i\rangle}{\sqrt{\mathrm{var}(i)}} \approx \frac{\sqrt{3}\,m}{2}\,\frac{\langle f\rangle}{\sqrt{\mathrm{var}(i_w)}}$$

5. Estimating the modulation contrast

The quantitative sectioning algorithm (5) requires knowing the modulation contrast m in order to properly scale the result. This value is generally not known a priori and so must be estimated, but one can use the modulated images themselves to provide the estimated value m̂. For each of the three modulated images, we obtain a “modulation map” by normalizing the modulated images using the widefield algorithm as

Since μi can also be written as , subtracting 1/2 from μi produces a result proportional to m, so that

and an estimate of m is thus given by

| (15) |

This suggests that, for high SNR data, one can calculate the sectioned image pixel-by-pixel by combining Eqs. (5) and (15) to give

| (16) |

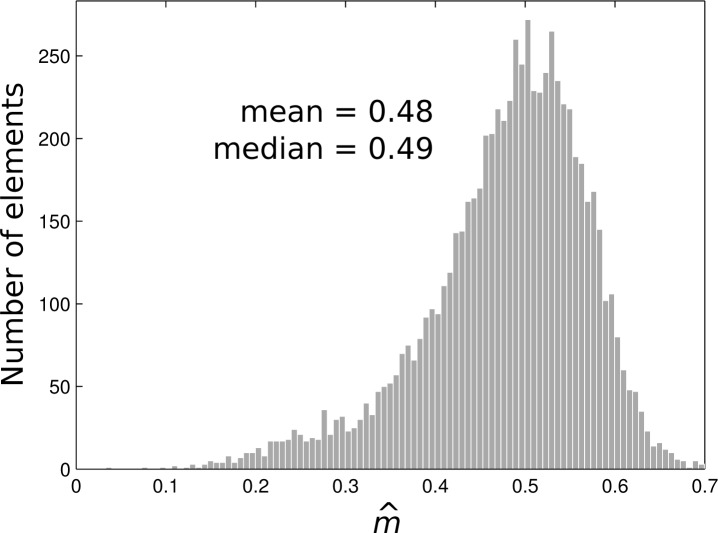

As an example, we measured the three modulated images g1, g2, and g3 on a fluorescent bead sample and used the algorithm in Eq. (15) to obtain the estimated modulation m̂ (x,y) at every pixel in the image. Our experimental setup (see Sec. 6.1) achieves approximately uniform modulation across the image, and so if we first threshold the image iw to prevent noisy pixels from skewing the estimate, we obtain a histogram of m̂ across the image, as shown in Fig. 1. The histogram suggests that the pixel-by-pixel estimate of m will be quite noisy, so that a much more accurate estimate can be achieved by averaging m̂ across the image. Or, if there is a significant contribution of outliers, one can use the histogram median as a more robust estimate. For Fig. 1, the mean and median values are m̂ = 0.48 and 0.49 respectively. We note, however, that taking the mean or median is only valid for homogeneous samples.

Fig. 1.

A histogram of the estimated modulation contrast, m̂, obtained from Eq. (15).

From a theoretical standpoint, the roughly Gaussian shape to the histogram is expected, but the long tail at the lower values of m̂ is not, and may be the result of some beads aggregating together to create a thicker layer at some locations within the image. If the thickness exceeds the sectioning depth, then the modulation contrast will drop.

While Fig. 1 shows that the median modulation contrast can be accurately estimated from a single image, the use of Eq. (15) to estimate m(x,y) requires exceedingly high signal-to-noise ratio images in order to achieve a reasonable accuracy for every pixel in the image. Its practical use, therefore, requires collecting a large number of photons (perhaps by summing a sequence of static images) or some kind of spatial processing in order to reduce the effects of noise.

6. Experimental results

In order to test our theoretical results, we conducted several experiments on a Zeiss Axio Imager Z1 microscope equipped with an Apotome module, a Zeiss AxioCam MRm monochromatic camera (1388 × 1040 pixels), and an HB-100 mercury lamp illumination source. The objective lens used for all of the experiments is a Zeiss Plan-Apochromatic 20× objective (NA = 0.8). In order to compare measurements against theory, we use the ratio r of measured noise to the estimated shot noise. Whereas the measured noise is obtained by taking the standard deviation of a sequence of 1000 measurements, the photon shot noise standard deviation σp is estimated by taking the square root of the mean number of photons collected. For a standard widefield measurement, this ratio should be close to 1, but for SI-sectioned images the noise is larger than one would expect from the shot noise alone, so that the theory predicts r > 1.

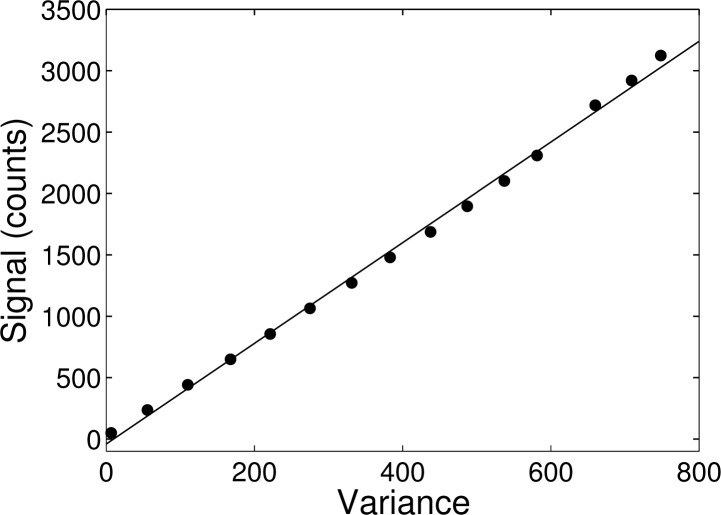

The first step of the experiment involves measuring the camera gain in order to scale digital counts to detected photoelectrons. This involves imaging a uniformly illuminated field (created by Köhler illumination) at the microscope sample stage with different illumination intensities. To remove the effects of pixel response nonuniformity, we implemented the following procedure [34]:

1. At each illumination intensity, two widefield images I1 and I2 are acquired.

2. The standard deviation σc is calculated for a 200 × 200 pixel area in the difference image I1 − I2.

3. The signal variance is calculated by σ² = σc²/2. The scaling factor of 2 accounts for the increased noise due to the image subtraction operation in Step 2.

The quantities fc and σc here indicate the measured intensity and standard deviation in units of counts and not photons. The resulting measured signal vs. variance at different illumination intensities is shown in Fig. 2, and gives an estimated gain g = 4.1 photons/count.

Fig. 2.

Signal vs. variance measurement, showing experimental data (dots) and the fitted line. The line slope of 4.1 gives an estimate for the gain of g = 4.1 photons/count.
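The three-step calibration procedure can be sketched as follows. This is a simulation under stated assumptions, not the acquisition code used for Fig. 2: the frames are synthetic Poisson data divided by a known gain, pixel response nonuniformity and read noise are not modeled, and the function names are hypothetical. The gain is taken as the slope of a straight-line fit of signal against variance, as in Fig. 2.

```python
import numpy as np

def signal_and_variance(img1, img2, roi=200):
    """Mean signal and signal variance (both in counts) from an image pair."""
    a = np.asarray(img1, dtype=float)[:roi, :roi]
    b = np.asarray(img2, dtype=float)[:roi, :roi]
    signal = 0.5 * (a.mean() + b.mean())
    sigma_c = np.std(a - b)            # std of the difference image (Step 2)
    return signal, sigma_c ** 2 / 2.0  # divide by 2 for the subtraction (Step 3)

def estimate_gain(pairs):
    """Slope of signal vs. variance, fitted over all illumination levels."""
    points = np.array([signal_and_variance(a, b) for a, b in pairs])
    slope, _intercept = np.polyfit(points[:, 1], points[:, 0], 1)
    return slope

# Synthetic shot-noise-limited data with a known gain.
rng = np.random.default_rng(0)
true_gain = 4.1
pairs = []
for mean_photons in np.linspace(2e3, 4e4, 8):
    frames = rng.poisson(mean_photons, size=(2, 200, 200)) / true_gain
    pairs.append((frames[0], frames[1]))

gain = estimate_gain(pairs)
```

For Poisson-distributed detected photons N and counts c = N/g, the mean is 〈c〉 = 〈N〉/g and the variance is var(c) = 〈N〉/g², so the signal equals g times the variance and the fitted slope recovers the gain.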

In order to provide a baseline reference for later SI noise measurements, we imaged a microscope slide containing a sparse layer of fluorescent beads (Molecular Probes Fluosphere F8853, peak emission at 515 nm, 2 μm diameter) in widefield mode (i.e. without the structured grid placed in the illumination path). To prepare a uniformly distributed sample, the fluorescent beads were suspended by vortex mixing and sonicated. The suspension was then dropped onto a microscope slide and sealed with a cover slip. A total of 1000 images of the sample were acquired in a time sequenced experiment. The measured mean fluorescent intensity 〈f〉 of the fluorescent beads is 4.33 × 10⁴ counts (obtained by summing all pixels at the bead location), and the standard deviation σn of fluorescent intensity is 112 counts. Using the gain g to convert counts to photons, the ratio of measured noise to the photon shot noise is:

$$r = \frac{g\,\sigma_n}{\sqrt{g\,\langle f\rangle}} = \frac{4.1 \times 112}{\sqrt{4.1 \times 4.33\times 10^{4}}} \approx 1.09$$

Since r is very close to 1, we can say that the imaging system is shot-noise limited.
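The baseline ratio can be reproduced from the numbers quoted above. The snippet is a small sketch that assumes the gain g = 4.1 photons/count converts both the measured standard deviation and the mean signal from counts to photons before they are compared. The function name is hypothetical.

```python
import math

def noise_ratio(sigma_counts, mean_counts, gain):
    """Measured noise divided by the estimated photon shot noise."""
    sigma_photons = gain * sigma_counts          # measured noise in photons
    shot_noise = math.sqrt(gain * mean_counts)   # sqrt of mean photon number
    return sigma_photons / shot_noise

# Widefield baseline: <f> = 4.33e4 counts, sigma_n = 112 counts, g = 4.1.
r_widefield = noise_ratio(112.0, 4.33e4, 4.1)
print(f"r = {r_widefield:.2f}")
```

The same function applies to the sectioned-image measurements, where values of r well above 1 indicate the noise amplification introduced by the sectioning algorithm.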

6.1. Sectioned imaging of 2 μm fluorescent beads without out-of-focus light

We first measured the axial PSF of the SI-sectioned measurements by imaging sub-resolution green fluorescent nanoparticles (175 nm diameter spheres, from Invitrogen), using the software-recommended VL grid (17.5 lines/mm) on the Apotome. The FWHM of the resulting measured axial PSF is 3.6 μm, indicating that our fluorescent bead sample (peak emission at 515 nm, 2 μm diameter) is sufficiently thin that no out-of-focus light will be present. For the quantitative algorithm, we estimate the modulation contrast for this setup using the data shown in Fig. 1, giving m̂ = 0.49 from the median of the distribution. A total of 1000 sectioned images were acquired in a time sequenced experiment. The mean intensity 〈f〉 and its standard deviation σm are calculated for 5 different beads, with results shown in Table 1. The ratio of measured noise to the photon noise is calculated as:

$$r = \frac{\sigma_m}{\sigma_p} = \frac{g\,\sigma_m}{\sqrt{g\,\langle f\rangle}} \tag{17}$$

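On the other hand, the theoretical value of the ratio r, assuming Poisson noise, is obtained from Eq. (14) with var(d) = 0 and var(f) = 〈f〉 as

$$r = \sqrt{\frac{\mathrm{var}(i)}{\langle f\rangle}} = \sqrt{\frac{4 + 2\hat{m}^2}{3\hat{m}^2}} = 2.49$$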

The theoretical value of 2.49 thus closely corresponds to the measured mean value of 2.41 given in Table 1. That is, use of the sectioning algorithm with the three modulated images on the (planar) sample has reduced the SNR by a factor of 2.41 from that of a single (unmodulated) widefield image.

Table 1.

Measurements on a sample of five different fluorescent beads without out-of-focus light. These are the measured mean sectioned intensity 〈f〉 and its standard deviation σm(f), the calculated widefield intensity 〈iw〉 and its standard deviation σm(iw), and the corresponding ratios r obtained with Eq. (17). The modulation contrast m used to calculate 〈f〉 is estimated using Eq. (15).

| Bead | Sectioned image: 〈f〉 (cts) | Sectioned image: σm(f) (cts) | Sectioned image: r | Calculated widefield image: 〈iw〉 (cts) | Calculated widefield image: σm(iw) (cts) | Calculated widefield image: r |
|---|---|---|---|---|---|---|
| 1 | 5.03 × 10⁴ | 279 | 2.53 | 4.99 × 10⁴ | 114 | 1.03 |
| 2 | 6.29 × 10⁴ | 292 | 2.35 | 5.51 × 10⁴ | 122 | 1.06 |
| 3 | 4.61 × 10⁴ | 259 | 2.44 | 4.59 × 10⁴ | 115 | 1.08 |
| 4 | 5.04 × 10⁴ | 277 | 2.50 | 4.93 × 10⁴ | 110 | 1.00 |
| 5 | 6.17 × 10⁴ | 276 | 2.24 | 5.60 × 10⁴ | 119 | 1.02 |
| mean | | | 2.41 | | | 1.04 |

The calculated widefield image iw of the same sample is obtained by algorithm (6). The mean intensity 〈iw〉, standard deviation σm and ratio r are calculated for the same five beads, with results shown in Table 1. The measured value of the noise ratio r = 1.04 closely corresponds to the theoretical result of r = 1 obtained from Eq. (12).

6.2. Sectioned imaging of 6 μm fluorescent beads containing out-of-focus light

In order to measure noise amplification in SI in the case when out-of-focus light is present, we imaged a sample of 6 μm diameter green fluorescent beads. Since the sectioning thickness of the Apotome in this setup is 3.6 μm, there will be some out-of-focus light present. A total of 1000 sectioned images were acquired in a time sequenced experiment. The mean intensity 〈f〉 and its standard deviation σm are calculated for five different beads, with results shown in Table 2. The theoretical value of the ratio r, which we write as r̂, is given by

If we use the relation 〈iw〉 = 〈d〉 + 〈f〉, let m̂ = 0.49, and substitute the mean 〈iw〉 with its measured value iw, we have

The resulting theoretical r̂ values for the five beads are shown in Table 2, and indicate a close correspondence with the experimentally measured value for r. Note that the variation in r̂ among the five beads selected may be due to a variation in the relative amount of fluorescence emitted by the bead from within the sectioned plane to that emitted from outside the sectioned plane.

Table 2.

The mean intensity of wide-field images, 〈iw〉, and standard deviation σm of five different fluorescent beads, obtained from experiment and theory.

| Bead | Experiment: 〈f〉 (cts) | Experiment: σm (cts) | Experiment: r | Theory: iw (cts) | Theory: r̂ |
|---|---|---|---|---|---|
| 1 | 1.70 × 10⁵ | 836 | 4.09 | 5.01 × 10⁵ | 4.11 |
| 2 | 1.20 × 10⁵ | 669 | 3.90 | 4.13 × 10⁵ | 4.38 |
| 3 | 10.29 × 10⁴ | 511 | 3.24 | 2.32 × 10⁵ | 3.56 |
| 4 | 1.89 × 10⁵ | 750 | 3.50 | 5.04 × 10⁵ | 3.85 |
| 5 | 9.05 × 10⁴ | 526 | 3.54 | 2.80 × 10⁵ | 4.15 |
| mean | | | 3.66 | | 4.01 |

7. Conclusion

Although it has often been argued that structured illumination sectioning microscopy is a non-quantitative technique, we have shown that a quantitative version of the algorithm can be obtained by adding a proper scaling factor. Quantitative scaling does require that one estimate the modulation contrast m, and this adds an extra step of complexity, but Eq. (15) provides a simple means of obtaining such an estimate. A consequence of ignoring this scaling factor, as the sectioning algorithms have up to this point, is that in z-stack volumetric images (x,y,z) the deeper layers will appear artificially darkened. A result of the SI sectioning approach, however, is that since it removes out-of-focus light from the sectioned image after detection, it suffers from the shot noise of both the section image and all out-of-focus planes. While this has long been known, little has been known about the quantitative correlation between the noise amplification in SI microscopy and the out-of-focus light or other imaging parameters.

The theoretical analysis given above has made no assumptions about the properties of the noise other than to assume that the variance is the primary quantity of interest, and thus the results remain valid across all noise regimes (read noise limited, shot noise limited, etc.).

The noise amplification indicated by the variance result may be taken as an argument that SI-sectioning is a poor substitute for confocal sectioning due to the loss in SNR. But this is not the whole story. The compatibility of SI with widefield imaging also allows orders of magnitude greater light throughput than that achievable by confocal microscopy, such that one can use lower-intensity light sources and still obtain 100–200× increases in photon collection above that of scanning laser illumination [12,13]. In this case, taking 150 as a representative value for increased light collection, SI sectioning can provide better SNR than confocal sectioning when

$$\frac{\sqrt{3}\,m}{2}\sqrt{150} > 1, \quad\text{i.e.}\quad m > \frac{2}{\sqrt{3\times 150}} \approx 0.09$$

An additional advantage SI microscopy has is the ability to reject any residual DC light, such as that generated by stray light or reflections within the optical system, though this comes at an SNR penalty. Whether SI-sectioning or confocal sectioning produces better SNR images is dependent on the microscope setup and the object under analysis, but our theoretical results provide support for the common empirical observation that SI-sectioning gives lower quality results when imaging deep within tissue.
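The contrast threshold quoted in the abstract can be explored for other values of the light-collection advantage. The sketch below is a rough estimate under two assumptions that go beyond what is stated explicitly here: that shot-noise-limited SNR grows as the square root of the number of collected photons, and that in the weak-modulation regime sectioning multiplies the SNR by √3 m/2. The function name and the chosen gain values are hypothetical.

```python
import math

def min_contrast(light_gain):
    """Smallest modulation contrast at which SI matches confocal SNR.

    light_gain is the ratio of photons collected by SI to those collected
    by the confocal instrument for the same slice (assumed > 0).
    """
    if light_gain <= 0:
        raise ValueError("light_gain must be positive")
    # Solve (sqrt(3) * m / 2) * sqrt(light_gain) = 1 for m.
    return 2.0 / math.sqrt(3.0 * light_gain)

for gain_factor in (100.0, 150.0, 200.0):
    print(f"{gain_factor:5.0f}x light: m > {min_contrast(gain_factor):.3f}")

m_min_150 = min_contrast(150.0)
```

For a 150× advantage this gives a threshold near 0.09, consistent with the abstract. Across the quoted 100–200× range the threshold moves only between roughly 0.08 and 0.12, so the conclusion is not very sensitive to the exact light-collection factor.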

Acknowledgments

This work was supported in part by the National Institutes of Health under grants R01-CA124319 and R21-EB009186.

References and links

- 1. Karadaglić D., Wilson T., “Image formation in structured illumination wide-field fluorescence microscopy,” Micron 39, 808–818 (2008). doi:10.1016/j.micron.2008.01.017

- 2. Neil M. A. A., Juškaitis R., Wilson T., “Method of obtaining optical sectioning by using structured light in a conventional microscope,” Opt. Lett. 22, 1905–1907 (1997). doi:10.1364/OL.22.001905

- 3. Schermelleh L., Carlton P. M., Haase S., Shao L., Winoto L., Kner P., Burke B., Cardoso M. C., Agard D. A., Gustafsson M. G. L., Leonhardt H., Sedat J. W., “Subdiffraction multicolor imaging of the nuclear periphery with 3D structured illumination microscopy,” Science 320, 1332–1336 (2008). doi:10.1126/science.1156947

- 4. Cogger V. C., McNerney G. P., Nyunt T., DeLeve L. D., McCourt P., Smedsrød B., Couteur D. G. L., Huser T. R., “Three-dimensional structured illumination microscopy of liver sinusoidal endothelial cell fenestrations,” J. Struct. Biol. 171, 382–388 (2010). doi:10.1016/j.jsb.2010.06.001

- 5. Kner P., Chhun B. B., Griffis E. R., Winoto L., Gustafsson M. G. L., “Super-resolution video microscopy of live cells by structured illumination,” Nat. Methods 6, 339–344 (2009). doi:10.1038/nmeth.1324

- 6. Best G., Amberger R., Baddeley D., Ach T., Dithmar S., Heintzmann R., Cremer C., “Structured illumination microscopy of autofluorescent aggregations in human tissue,” Micron 42, 330–335 (2011). doi:10.1016/j.micron.2010.06.016

- 7. Keller P. J., Schmidt A. D., Santella A., Khairy K., Bao Z., Wittbrodt J., Stelzer E. H. K., “Fast, high-contrast imaging of animal development with scanned light sheet-based structured-illumination microscopy,” Nat. Methods 7, 637–645 (2010). doi:10.1038/nmeth.1476

- 8. Bozinovic N., Ventalon C., Ford T., Mertz J., “Fluorescence endomicroscopy with structured illumination,” Opt. Express 16, 8016–8026 (2008). doi:10.1364/OE.16.008016

- 9. Heintzmann R., Cremer C., “Laterally modulated excitation microscopy: improvement of resolution by using a diffraction grating,” in EUROPTO Conference on Optical Microscopy, Proc. SPIE 3568, 185–196 (1999). doi:10.1117/12.336833

- 10. Gustafsson M. G. L., “Surpassing the lateral resolution limit by a factor of two using structured illumination microscopy,” J. Microsc. 198, 82–87 (2000). doi:10.1046/j.1365-2818.2000.00710.x

- 11. Hirvonen L. M., Wicker K., Mandula O., Heintzmann R., “Structured illumination microscopy of a living cell,” Eur. Biophys. J. 38, 807–813 (2009). doi:10.1007/s00249-009-0501-6

- 12. Murray J. M., Appleton P. L., Swedlow J. R., Waters J. C., “Evaluating performance in three-dimensional fluorescence microscopy,” J. Microsc. 228, 390–405 (2007). doi:10.1111/j.1365-2818.2007.01861.x

- 13. Gao L., Bedard N., Hagen N., Kester R. T., Tkaczyk T. S., “Depth-resolved image mapping spectrometer (IMS) with structured illumination,” Opt. Express 19, 17439–17452 (2011). doi:10.1364/OE.19.017439

- 14. Somekh M. G., Hsu K., Pitter M. C., “Resolution in structured illumination microscopy: a probabilistic approach,” J. Opt. Soc. Am. A 25, 1319–1329 (2008). doi:10.1364/JOSAA.25.001319

- 15. Somekh M. G., Hsu K., Pitter M. C., “Stochastic transfer function for structured illumination microscopy,” J. Opt. Soc. Am. A 26, 1630–1637 (2009). doi:10.1364/JOSAA.26.001630

- 16. Kedziora K. M., Prehn J. H. M., Dobruck J., Bernas T., “Method of calibration of a fluorescence microscope for quantitative studies,” J. Microsc. 244, 101–111 (2011). doi:10.1111/j.1365-2818.2011.03514.x

- 17. Waters J. C., “Accuracy and precision in quantitative fluorescence microscopy,” J. Cell Biol. 185, 1135–1148 (2009). doi:10.1083/jcb.200903097

- 18. Esposito A., Schlachter S., Schierle G. S., Elder A. D., Diaspro A., Wouters F. S., Kaminski C. F., Iliev A. I., “Quantitative fluorescence microscopy techniques,” Methods Mol. Biol. 586, 117–142 (2009). doi:10.1007/978-1-60761-376-3_6

- 19. Heintzmann R., Benedetti P. A., “High-resolution image reconstruction in fluorescence microscopy with patterned excitation,” Appl. Opt. 45, 5037–5045 (2006). doi:10.1364/AO.45.005037

- 20. Neil M. A. A., Wilson T., Juškaitis R., “A light efficient optical sectioning microscope,” J. Microsc. 189, 114–117 (1998). doi:10.1046/j.1365-2818.1998.00317.x

- 21. Cole M. J., Siegel J., Webb S. E. D., Jones R., Dowling K., Dayel M. J., Parsons-Karavassis D., French P. M. W., Lever M. J., Sucharov L. O. D., Neil M. A. A., Juškaitis R., Wilson T., “Time-domain whole-field fluorescence lifetime imaging with optical sectioning,” J. Microsc. 203, 246–257 (2001). doi:10.1046/j.1365-2818.2001.00894.x

- 22. Schaefer L. H., Schuster D., Schaffer J., “Structured illumination microscopy: artefact analysis and reduction utilizing a parameter optimization approach,” J. Microsc. 216, 165–174 (2004). doi:10.1111/j.0022-2720.2004.01411.x

- 23. Krzewina L. G., Kim M. K., “Single-exposure optical sectioning by color structured illumination microscopy,” Opt. Lett. 31, 477–479 (2006). doi:10.1364/OL.31.000477

- 24. Barlow A. L., Guerin C. J., “Quantization of widefield fluorescence images using structured illumination and image analysis software,” Microsc. Res. Tech. 70, 76–84 (2007). doi:10.1002/jemt.20389

- 25. Chasles F., Dubertret B., Boccara A. C., “Optimization and characterization of a structured illumination microscope,” Opt. Express 15, 16130–16141 (2007). doi:10.1364/OE.15.016130

- 26. Konecky S. D., Mazhar A., Cuccia D., Durkin A. J., Schotland J. C., Tromberg B. J., “Quantitative optical tomography of sub-surface heterogeneities using spatially modulated structured light,” Opt. Express 17, 14780–14790 (2009). doi:10.1364/OE.17.014780

- 27. Langhorst M. F., Schaffer J., Goetze B., “Structure brings clarity: structured illumination microscopy in cell biology,” Biotechnol. J. 4, 858–865 (2009). doi:10.1002/biot.200900025

- 28. Erickson T. A., Mazhar A., Cuccia D., Durkin A. J., Tunnell J. W., “Lookup-table method for imaging optical properties with structured illumination beyond the diffusion theory regime,” J. Biomed. Opt. 15, 036013 (2010). doi:10.1117/1.3431728

- 29. Wicker K., Heintzmann R., “Single-shot optical sectioning using polarization-coded structured illumination,” J. Opt. 12, 084010 (2010). doi:10.1088/2040-8978/12/8/084010

- 30. Wilson T., “Optical sectioning in fluorescence microscopy,” J. Microsc. 242, 111–116 (2010). doi:10.1111/j.1365-2818.2010.03457.x

- 31. Gruppetta S., Chetty S., “Theoretical study of multispectral structured illumination for depth resolved imaging of non-stationary objects: focus on retinal imaging,” Biomed. Opt. Express 2, 255–263 (2011). doi:10.1364/BOE.2.000255

- 32. Neil M. A. A., Juškaitis R., Wilson T., “Real time 3D fluorescence microscopy by two beam interference illumination,” Opt. Commun. 153, 1–4 (1998). doi:10.1016/S0030-4018(98)00210-7

- 33. Poher V., Zhang H. X., Kennedy G. T., Griffin C., Oddos S., Gu E., Elson D. S., Girkin M., French P. M. W., Dawson M. D., Neil M. A. A., “Optical sectioning microscopes with no moving parts using a micro-stripe array light emitting diode,” Opt. Express 15 (2007). doi:10.1364/OE.15.011196

- 34. Mortara L., Fowler A., “Evaluations of charge-coupled device (CCD) performance for astronomical use,” in Solid state imagers for astronomy, Proc. SPIE 290, 28–33 (1981).