Abstract

Theories of rational choice often make the structural consistency assumption that every decision maker’s binary strict preference among choice alternatives forms a strict weak order. Likewise, the very concept of a utility function over lotteries in normative, prescriptive, and descriptive theory is mathematically equivalent to strict weak order preferences over those lotteries, while intransitive heuristic models violate such weak orders. Using new quantitative interdisciplinary methodologies we dissociate variability of choices from structural inconsistency of preferences. We show that laboratory choice behavior among stimuli of a classical “intransitivity” paradigm is, in fact, consistent with variable strict weak order preferences. We find that decision makers act in accordance with a restrictive mathematical model that, for the behavioral sciences, is extraordinarily parsimonious. Our findings suggest that the best place to invest future behavioral decision research is not in the development of new intransitive decision models, but rather in the specification of parsimonious models consistent with strict weak order(s), as well as heuristics and other process models that explain why preferences appear to be weakly ordered.

Keywords: Rationality, Strict Weak Order, Utility of Uncertain Prospects, Random Utility

Bob and Joe meet for three meals. Restaurants A, B, C are compatible with Joe’s diet. On Monday, C is closed, and Bob prefers A over B. On Tuesday, A is closed, and Bob would rather eat at B than C. On Friday, Bob is indifferent between A and C, but he asks not to go to B. Joe expected Bob to like A the best and C the least. Is Bob inconsistent?

On Monday, Bob craves a sandwich, where A outshines B. On Tuesday, he wants a soup, with B an easy choice. On Friday, Bob craves a salad, which B does not offer, while A and C have salad bars. Even though Bob first chooses A over B, then B over C, then is indifferent between A and C and prefers both to B, this cannot be interpreted to mean that Bob makes incoherent decisions. Rather, Bob compares the restaurants by quality of his desired entree, and the resulting rankings (with possible ties) of the restaurants vary as he seeks out different entrees. We need to dissociate behavioral variability from structural inconsistency.

The literature on individual decision making is internally conflicted over whether or not preferences can be modeled with unidimensional numerical utility representations and, in particular, whether or not variable choice behavior can be reconciled with transitive preferences. Even a leading thinker in the field, Amos Tversky, was the author of both the most influential intransitivity study (Tversky, 1969) and, later, of the most influential (transitive) numerical representation, (cumulative) prospect theory (Kahneman and Tversky, 1979; Tversky and Kahneman, 1992). Regenwetter, Dana and Davis-Stober (2011) questioned the literature on intransitivity of preferences and reported that the variable binary choice data they considered were generally consistent with variable (transitive) linear order preferences. In this paper, we develop a much more direct, general, and yet also powerful, test of unidimensional utility representations and associated (transitive) “weak order” preferences. Even though our experimental stimuli have a historical track record of allegedly revealing inconsistent preferences, our findings support the highly restrictive class of numerical utility theories.

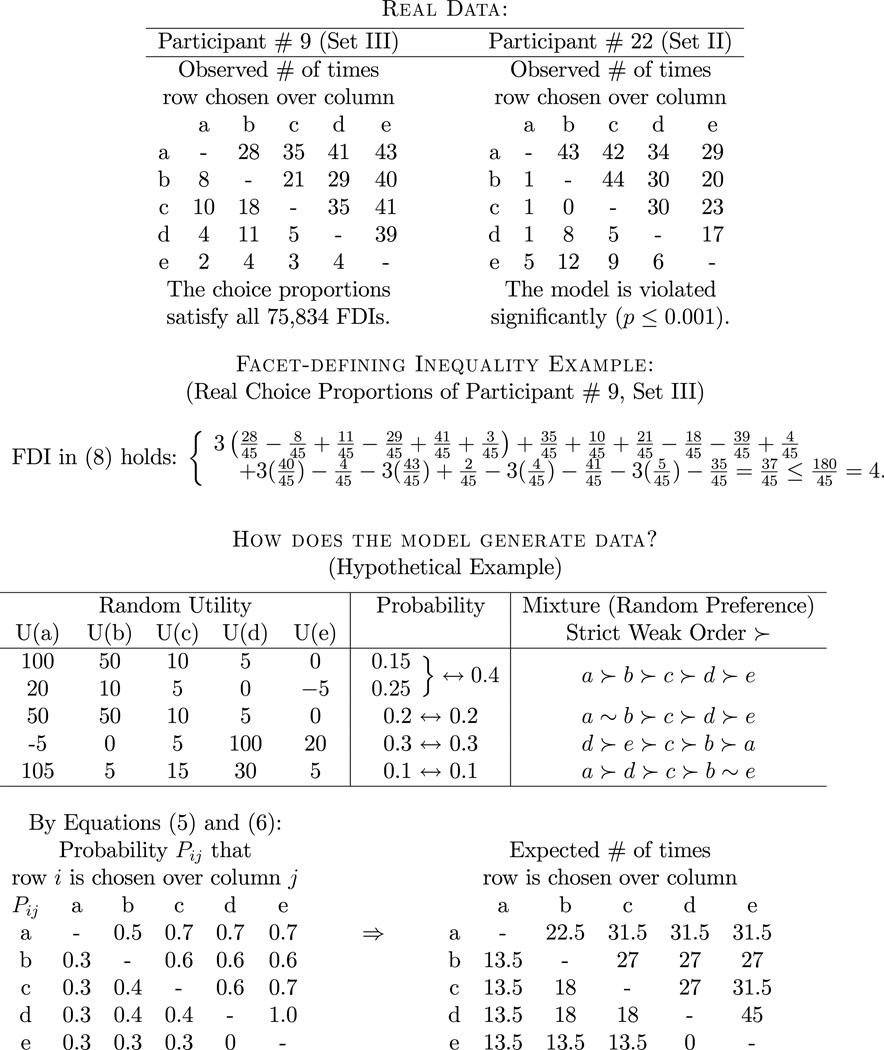

Consider the two data sets at the top of Figure 1. These show the number of times, out of 45 repetitions, that decision makers chose a ‘row’ gamble over a ‘column’ gamble. For instance, Participant # 9 (Gamble Set III of our experiment) chose gamble a over gamble b on 28 trials, chose b over a on 8 trials, and expressed indifference among a and b on 9 trials. The primary goal of this paper is to disentangle such variable overt choices from inconsistent latent preferences. We show that a restrictive quantitative model of variable, but structurally consistent, decision making explains a large set of empirical data. According to this model, individual preferences form strict weak orders, i.e., asymmetric and negatively transitive binary relations, as defined in Formulae (2) and (3) below. We consider the class of all possible decision theories that share the structural property that decision makers, knowingly or unknowingly, rank order choice options, allowing the possibility that they are indifferent between some options. Should this structural property be violated over those lotteries, then all theories of (unidimensional) utility would be violated, including expected utility theory and its many offspring, such as (cumulative) prospect theory. Should preferences be strict weak orders, however, then an even larger class of binary relations would be rejected, including all theories with intransitive preferences, such as intransitive heuristics and regret theory (Loomes and Sugden, 1982). Our approach is unique in that we found a way to test the pivotal concept of weak order preferences directly, hence to test the very notion of a utility function directly without limiting ourselves to particular functional forms (e.g., “expected utility” with “power” utility for money) or even to cash stimuli. 
Returning to Figure 1, we later show that Participant # 9 (Set III) is not just approximately, but perfectly consistent with variable weak order preferences, whereas Participant # 22 (Set II), whose data look superficially similar, in fact, violates the model. Throughout the paper, we use the term “preference” to refer to a hypothetical construct that might not be directly observable, and “choice” to refer to the observed behavior of participants when asked to select an option.

Figure 1.

Illustration with real and hypothetical ternary paired comparison data. The top gives two real ternary paired comparison data sets. The model fits one set perfectly and is violated significantly by the other. We illustrate one facet-defining inequality (FDI) for Participant # 9 (Gamble Set III). The center panel illustrates a hypothetical random utility representation and the corresponding mixture. The joint distribution of the random utilities is on the left, the weak order probabilities are on the right. Below are the implied binary choice probabilities and the corresponding expected frequencies for ternary choice (45 repetitions).

1. Motivation and Background

Why is the concept of rank ordering choice alternatives such a central and pivotal construct in psychological and economic theories of decision making?

Many laboratory and real life decisions share a common structure: They typically involve various outcomes that are contingent upon uncertain states of the world. Decision making is often complicated by intricate trade-offs among different attributes, outcomes, and uncertainty or risk. Psychological experiments in the laboratory often use cash lotteries as choice alternatives. In addition to such cash lotteries, we offered our participants noncash options similar to the following prospects A and C.

A major class of decision theories hypothesizes a numerical value U(i) associated with each prospect i. These numerical theories state that a decision maker prefers prospect i to prospect j if s/he values i more highly than j, that is, if U(i) > U(j). Writing ≻ to denote strict pairwise preference, such theories predict, for example,

$$A \succ C \iff U(A) > U(C). \tag{1}$$

There is a substantial literature in the decision sciences, primarily for monetary gambles, that discusses the functional form of the numerical function U(·). Famous examples of such theories are expected utility theory (Savage, 1954; von Neumann and Morgenstern, 1947) or (cumulative) prospect theory (Kahneman and Tversky, 1979; Tversky and Kahneman, 1992). All of these theories share one, very restrictive, property: They model decision behavior through the hypothetical numerical construct of a utility value associated with each uncertain prospect. These theories assume that decision makers act in accordance with (1). Even though the utilities in (1) are numerical, the prospects themselves need not be. For example, Prospects A and C are nonmonetary and, in our experiment, their probabilities are displayed geometrically using pie charts depicting wheels of chance. Any theory, in which pairwise preferences are derived from hypothetical numerical values, as illustrated in Formula (1), implies that binary preferences ≻ form “strict weak orders.” It has been shown that, likewise, strict weak order preferences can always be represented numerically, whether the decision makers mentally compute numerical utilities or not (see, e.g. Roberts, 1979).

This answers our question: All theories that rely on the hypothetical construct of a utility function as in (1) imply that preference is a strict weak order, and all theories that model preference using a strict weak order can be recast mathematically as (1) via a function U(·).

Hence, by considering the class of ‘all’ theories in which preferences form strict weak orders, we study the pivotal property that distinguishes preferences that are structurally consistent with utility functions from those that are inconsistent with utility. To study the structural property of strict weak orders, we must disentangle varying choice (as in Figure 1) from structurally inconsistent preference. We start by considering basic concepts.

We are interested in the structural constraint that binary strict preference must form a strict weak order. This means that 1) strict preference ≻ is an asymmetric binary relation: if a person strictly prefers i to j then s/he does not strictly prefer j to i:

$$i \succ j \;\Rightarrow\; j \not\succ i. \tag{2}$$

2) strict preference ≻ is negatively transitive: if a person does not strictly prefer i to j, and does not strictly prefer j to k, then s/he does not strictly prefer i to k:

$$\left[\, i \not\succ j \ \text{ and } \ j \not\succ k \,\right] \;\Rightarrow\; i \not\succ k. \tag{3}$$

As a consequence of these two axioms, strict weak orders also satisfy transitivity:1 if a participant strictly prefers i to j, and strictly prefers j to k, then s/he strictly prefers i to k:

$$\left[\, i \succ j \ \text{ and } \ j \succ k \,\right] \;\Rightarrow\; i \succ k. \tag{4}$$

Transitivity is often viewed as a rationality axiom. It is satisfied by the vast majority of normative, prescriptive, and descriptive theories of decision making in the literature.

Asymmetry strikes us as a property that we may safely assume to hold inherently for strict preference. Hence, we do not test it empirically. We consider stimulus sets of five choice alternatives in our experiment. For five alternatives, there are $3^{10} = 59{,}049$ different possible asymmetric binary relations, because each of the 10 pairs of alternatives is in one of three states: i ≻ j, j ≻ i, or neither. Of these, only 541, that is, fewer than one percent, are strict weak orders (Fiorini, 2001). Hence, fewer than 1% of all conceivable strict preference relations on five choice options can be represented with a numerical function U(·). The remaining 99% feature violations of negative transitivity and/or violations of transitivity.
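The counts in this paragraph can be checked by brute force. The following is a minimal sketch, not the procedure used in the cited work: it enumerates every asymmetric relation on n labeled options and counts those that are negatively transitive as in (3). The function name `count_relations` and the integer labels for the options are illustrative assumptions.

```python
from itertools import combinations, permutations, product

def count_relations(n):
    """Return (#asymmetric relations, #strict weak orders) on n labeled options."""
    pairs = list(combinations(range(n), 2))
    triples = list(permutations(range(n), 3))
    n_asymmetric = 0
    n_weak_orders = 0
    # Each unordered pair {i, j} is in one of three states:
    # 0: neither option is strictly preferred, 1: i > j, 2: j > i.
    # Enumerating all state combinations yields exactly the asymmetric relations.
    for states in product((0, 1, 2), repeat=len(pairs)):
        prefers = set()
        for (i, j), state in zip(pairs, states):
            if state == 1:
                prefers.add((i, j))
            elif state == 2:
                prefers.add((j, i))
        n_asymmetric += 1
        # Negative transitivity (3) in contrapositive form:
        # if i > k, then i > j or j > k for every third option j.
        if all((i, k) not in prefers or (i, j) in prefers or (j, k) in prefers
               for i, j, k in triples):
            n_weak_orders += 1
    return n_asymmetric, n_weak_orders
```

Calling `count_relations(5)` takes a few seconds in pure Python, since it visits all 59,049 asymmetric relations; the ratio of the two returned counts is the "fewer than one percent" figure quoted above.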

It is already clear, even from a purely algebraic point of view, that strict weak orders form quite a restrictive class of preference relations. We will later see, once we move to our probabilistic specification of strict weak orders, that our quantitative model is characterized by more than 75,000 nonredundant simultaneous mathematical constraints on choice probabilities. Our model also allows us to separate the wheat from the chaff by distinguishing variability in observable choices from inconsistency of underlying preferences.

2. The Mixture Model of Strict Weak Order Preferences

We propose a strict weak order model for ternary paired comparisons. A key distinguishing feature of our empirical paradigm is that decision makers compare pairs of objects (e.g., gambles) and either choose one of the objects as their “preferred” option, or they indicate that they are indifferent among the offered pair of objects. Each decision maker repeats each such ternary paired comparison multiple times over the course of an experiment. All repetitions are carefully separated by decoys in order to avoid memory effects and to reduce statistical interdependencies among repeated observations for each binary choice. For five choice alternatives, this paradigm generates 20 degrees of freedom in the data: each pair i, j ∈ 𝒞 yields some number of i choices, some number of j choices, and some number of “indifference” statements, hence yielding two degrees of freedom for each of the 10 gamble pairs. In comparison, a two-alternative forced choice paradigm for the same stimuli only has 10 degrees of freedom in the data because it does not permit “indifference” responses, hence each of the 10 gamble pairs only has one degree of freedom associated with it. In other words, even though we only marginally increase the amount of information required from the respondent on each trial (Miller, 1956), we double the degrees of freedom and thereby dramatically increase the total amount of information extracted from the respondent over the entire experiment as we combine multiple stimuli.2

We are interested in the structural property of strict weak order preference. We also need to separate variable overt choice behavior from structurally inconsistent latent preferences. We achieve these goals by evaluating the goodness-of-fit of a probabilistic model. According to our model, whenever facing a pair of choice alternatives, a decision maker evaluates these choice alternatives in a fashion that induces a strict weak order on the set of choice alternatives. Over repeated ternary paired comparisons, the decision maker may fluctuate in the strict weak order that s/he uses in the various decisions. This is either because s/he varies in his/her preferences over time, or because s/he experiences uncertainty about his/her own preference, and, when asked to decide, ends up fluctuating in those forced choices. We introduce a probability space where every sample point must be a strict weak order. We deliberately avoid peripheral mathematical assumptions, because all we do is take the concept of weak order preferences and place it into a probability space that has no additional structure except for requiring every sample point to be a strict weak order.

Equivalently, we are interested in the structural property of preferences that are consistent with numerical utilities. In the face of variable choice behavior, we need to distinguish whether variable choices are consistent with variable numerical utilities or not. According to our model, when considering a pair of choice alternatives, a decision maker chooses in accordance with a numerical function U(·) as specified in Formula (1). Over repeated ternary paired comparisons, the value of U(c) of a choice option c may vary. Accordingly, we replace the function U(·) by a family of random variables, (Uc)c∈𝒞, whose joint distribution is not restricted in any way. We allow decision makers to waver in the numerical utility values they associate with the choice options. Again, we deliberately avoid peripheral mathematical assumptions, because all we do is take the concept of a numerical function U(·) and turn the numerical value U(c) of each prospect c into a random variable that has no additional constraints, because we allow the random variables to have any distribution whatsoever.

At first blush, our weak order model appears extremely flexible: For five choice options, it permits 541 different preference states, i.e., it has 540 free parameters. Its formulation in terms of arbitrary random variables, (Uc)c∈𝒞, has no limit on free parameters, since we explicitly forego constraints on the joint distribution. The empirical data only have 20 degrees of freedom. Do we engage in over-fitting? Appearances are misleading. First, we have already seen that strict weak orders are rather restrictive compared to asymmetric relations. Even more important is the statistical perspective. Using Monte Carlo methods, we have computed the approximate relative volume of the parameter space within the outcome space (both are of the same dimension). It turns out that the model is, in fact, highly restrictive: For five choice alternatives, the permissible ternary paired comparison probabilities of the strict weak order model occupy only a two-thousandth of the empirical outcome space. It is crucial to distinguish statistical identifiability from statistical testability. While the 540 free parameters are not uniquely identifiable when there are only 20 degrees of freedom in the data, the model is testable because it makes extraordinarily restrictive predictions. The strict weak order model, stated formally in Equation 5, can test the structural property of strict weak order preferences in the presence of variable choice behavior.
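The idea of a relative volume can be illustrated on a smaller case. The sketch below is not the computation reported above: it uses three options rather than five, and it assumes that membership in the model can be checked with the constraint P_ik ≤ P_ij + P_jk for all distinct i, j, k, which is what negative transitivity (3) implies for any mixture of strict weak orders. All function names are hypothetical, and the resulting estimate says nothing about the five-option figure.

```python
import random
from itertools import permutations

OPTIONS = "abc"

def sample_trinomial_pair(rng):
    """Uniform draw of (P_ij, P_ji) with P_ij, P_ji >= 0 and P_ij + P_ji <= 1."""
    u, v = rng.random(), rng.random()
    if u + v > 1.0:
        # Reflect the upper half of the unit square into the triangle.
        u, v = 1.0 - u, 1.0 - v
    return u, v

def satisfies_triangle_constraints(P):
    """Assumed membership test for three options: P_ik <= P_ij + P_jk."""
    return all(P[i, k] <= P[i, j] + P[j, k]
               for i, j, k in permutations(OPTIONS, 3))

def estimate_relative_volume(n_samples=20000, seed=0):
    """Share of uniformly sampled outcome points that satisfy the constraints."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_samples):
        P = {}
        for i, j in (("a", "b"), ("a", "c"), ("b", "c")):
            P[i, j], P[j, i] = sample_trinomial_pair(rng)
        hits += satisfies_triangle_constraints(P)
    return hits / n_samples
```

For five options the same logic applies, but the membership test would have to use the full set of constraints or a linear-programming feasibility check over the 541 weak orders.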

Denote by 𝒲𝒪𝒸 the collection of all strict weak orders on a given finite set 𝒞 of choice alternatives. A collection $(P_{ij})_{i,j \in \mathcal{C},\, i \neq j}$ is called a system of ternary paired comparison probabilities if, ∀i, j ∈ 𝒞 with i ≠ j, we have 0 ≤ Pij ≤ 1 and Pij + Pji ≤ 1. This system satisfies the strict weak order model if there exists a probability distribution on 𝒲𝒪𝒸 that assigns probability P≻ to any strict weak order ≻, such that ∀i, j ∈ 𝒞, i ≠ j,

$$P_{ij} = \sum_{\substack{\succ \,\in\, \mathcal{WO}_{\mathcal{C}} \\ i \,\succ\, j}} P_{\succ}. \tag{5}$$

In words: The probability that the respondent chooses i, when offered i versus j in a ternary paired comparison trial, is the (marginal) total probability of all those strict weak orders ≻ in which i is strictly preferred to j.

In the terminology of Loomes and Sugden (1995), Equation 5 is a random preference model whose core theory is the strict weak order. It can be rewritten as a “distribution-free random utility model” where each sample point is a strict weak order (Heyer and Niederée, 1992; Regenwetter, 1996; Regenwetter and Marley, 2001; Niederée and Heyer, 1997).

A system of ternary paired comparison probabilities satisfies a distribution-free random utility model if there exists a probability measure P and there exist real-valued jointly distributed random variables (Uc)c∈𝒞 such that ∀i, j ∈ 𝒞, i ≠ j,

$$P_{ij} = P(U_i > U_j). \tag{6}$$

In words: The probability that the respondent chooses i, when offered i versus j in a ternary paired comparison trial, is the (marginal) total probability that the random utility of i exceeds the random utility of j.3

To see how all of these ideas come together and how the model generates predicted ternary paired comparison data, consider Figure 1. The box entitled “Random Utility” shows the values of five hypothetical utility functions as well as the probability of each function. For example, the utility function in the first row has probability 0.15 and assigns utilities 100, 50, … 0 to a, b, … e. Each utility function induces a strict weak ordering of the alternatives. For example, the first two functions induce the weak order a ≻ b ≻ c ≻ d ≻ e, hence that strict weak order has probability 0.15 + 0.25 = 0.40. Let i ~ j denote that both i ⊁ j and j ⊁ i. To illustrate the mixture model in (5), consider the weak orders in which a ≻ b, namely a ≻ b ≻ c ≻ d ≻ e and a ≻ d ≻ c ≻ b ≻ e, whose probabilities sum to 0.5, hence the entry Pab = 0.5 in the bottom left matrix. Notice that Pab + Pba = 0.5 + 0.3 = 0.8 ≠ 1 because the weak order a ~ b ≻ c ≻ d ≻ e has positive probability 0.2.

To illustrate the random utility model in (6) notice that Pab is also given by summing the probabilities 0.15 + 0.25 + 0.1 of the utility functions with U(a) > U(b). We can now establish that the models in (5) and (6) are mathematically equivalent, and hence we can later focus on the formulation in (5). The following theorem (proven in the Online Supplement) follows directly from related prior work (Heyer and Niederée, 1992; Regenwetter, 1996; Regenwetter and Marley, 2001; Niederée and Heyer, 1997). This theorem is extremely important: It shows that any algebraic theory that uses a numerical function U(·), say cumulative prospect theory, can be extended quite naturally into a probabilistic model that induces a probability distribution over strict weak orders.

Theorem. A system of ternary paired comparison probabilities Pij satisfies the weak order model in (5) if and only if it satisfies the distribution-free random utility model in (6).
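The equivalence can be illustrated numerically. The sketch below computes the choice probabilities once from a distribution over utility functions, as in (6), and once from the induced distribution over strict weak orders, as in (5). The five state probabilities and the orders a ≻ b ≻ c ≻ d ≻ e, a ≻ d ≻ c ≻ b ≻ e and a ~ b ≻ c ≻ d ≻ e follow the text; the individual utility values and the order attached to probability 0.3 are hypothetical, chosen only to be consistent with Pba = 0.3, and are not the entries of Figure 1.

```python
from collections import defaultdict
from itertools import permutations

OPTIONS = "abcde"

# (probability, utilities). Utility values are hypothetical illustrations.
UTILITY_STATES = [
    (0.15, {"a": 100, "b": 50, "c": 30, "d": 10, "e": 0}),  # a > b > c > d > e
    (0.25, {"a": 90, "b": 70, "c": 40, "d": 20, "e": 5}),   # same order, other values
    (0.10, {"a": 80, "b": 20, "c": 40, "d": 60, "e": 0}),   # a > d > c > b > e
    (0.30, {"a": 50, "b": 90, "c": 30, "d": 10, "e": 0}),   # b > a > ... (assumed order)
    (0.20, {"a": 60, "b": 60, "c": 30, "d": 10, "e": 0}),   # a ~ b > c > d > e
]

def induced_weak_order(u):
    """Strict weak order induced by utilities: all pairs (i, j) with U(i) > U(j)."""
    return frozenset((i, j) for i, j in permutations(OPTIONS, 2) if u[i] > u[j])

def random_utility_probs(states):
    """Model (6): P_ij is the total probability of states with U(i) > U(j)."""
    return {(i, j): sum(p for p, u in states if u[i] > u[j])
            for i, j in permutations(OPTIONS, 2)}

def weak_order_mixture_probs(states):
    """Model (5): pool probability by weak order, then sum over orders with i > j."""
    order_probs = defaultdict(float)
    for p, u in states:
        order_probs[induced_weak_order(u)] += p
    probs = {(i, j): sum(p for order, p in order_probs.items() if (i, j) in order)
             for i, j in permutations(OPTIONS, 2)}
    return probs, dict(order_probs)
```

With these inputs both functions return Pab = 0.5 and Pba = 0.3 up to floating-point rounding, and the four distinct weak orders carry probabilities 0.40, 0.10, 0.30 and 0.20. Multiplying a probability by the 45 repetitions gives the corresponding expected choice frequency.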

Characterizing models like (5) or (6) is a nontrivial task that has received much attention, e.g., in mathematics, operations research and mathematical psychology. The most promising approach tackles the problem by characterizing the weak order model stated in Equation 5 using tools from convex geometry. In that terminology, the collection of all ternary paired comparison probabilities Pij consistent with the model in (5) forms a geometric object called a “convex polytope” in a vector space whose coordinates are the probabilities Pij. A convex polytope is a high-dimensional generalization of (two-dimensional) polygons and of (three-dimensional) polyhedra.4 We can test the weak order model if we understand its polytope.

A triangular area in two-dimensional space (in a plane) can be described fully by the area between the three points that form its ‘corners’ (so-called “vertices”) or it can be described equivalently as the area between its three ‘sides’ (so-called “facets”). This logic extends to high dimensional spaces: A convex polytope can likewise be described in two equivalent ways: One can either describe it as the “convex hull of a set of vertices,” or as the solution to a system of “affine inequalities” (Ziegler, 1995) that define its “facets” (for examples, see WO1–WO3 below). The model formulation in Equation 5 directly yields the vertex representation as the convex hull of a collection of vertices: For each strict weak order ≻ in 𝒲𝒪𝒸 create a vertex with the following 0/1-coordinates

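$$P_{ij} = \begin{cases} 1 & \text{if } i \succ j, \\ 0 & \text{otherwise,} \end{cases} \qquad \forall i, j \in \mathcal{C},\ i \neq j. \tag{7}$$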

Each vertex of the polytope represents one strict weak order. Intuitively, if a person has deterministic preferences in the form of one single weak order, then this person’s choice probabilities are given by the vertex coordinates of that weak order: If the person always prefers i over j, then, according to the model (5), she chooses i over j with probability 1. If a person never prefers i to j, then, according to the model (5), she chooses i over j with probability zero. While each vertex can be thought of as a degenerate distribution that places all probability mass on a single strict weak order, the entire polytope forms a geometric representation of all possible probability distributions over strict weak orders. That is precisely the model stated in Equation 5. The Online Supplement illustrates these geometric concepts with a simple 2-dimensional example and three illustrative figures.

To test the model, we wish to determine whether a set of data was generated by a vector of ternary paired comparison probabilities that belongs to the polytope. To achieve this goal, it is useful to find the alternative representation of the polytope, i.e., to find a system of inequalities whose solution set is the polytope. We can then test whether the ternary paired comparison probabilities satisfy or violate those “facet-defining” inequalities (FDIs).

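When |𝒞| = 3, say, 𝒞 = {a, b, c}, the polytope is known to have 13 vertices, representing the 13 different strict weak orders over three objects. These vertices ‘live’ in a 6-dimensional vector space spanned by the probabilities Pab, Pac, Pba, Pbc, Pca, Pcb. The following three families of inequalities completely characterize the strict weak order polytope when |𝒞| = 3:

$$\begin{aligned}
\text{WO1:}\quad & P_{ij} \ge 0, && \forall i, j \in \mathcal{C},\ i \neq j, \\
\text{WO2:}\quad & P_{ij} + P_{ji} \le 1, && \forall i, j \in \mathcal{C},\ i \neq j, \\
\text{WO3:}\quad & P_{ij} + P_{jk} - P_{ik} \ge 0, && \forall \text{ distinct } i, j, k \in \mathcal{C}.
\end{aligned}$$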

WO1 states that probabilities cannot be negative. WO2 reflects the empirical paradigm: The probabilities of choosing either i or j may possibly sum to less than one, because the decision maker may use the indifference option with positive probability. Constraints WO1 and WO2 automatically hold in a ternary paired comparison paradigm and are thus not subject to testing, whereas WO3 is testable. Convex geometry allows one to prove that WO1 through WO3 are nonredundant and that they fully characterize the family of all conceivable ternary paired comparison probabilities for |𝒞| = 3 that can be represented using (5). This means that the constraints WO1 through WO3 form a minimal description, i.e., the shortest possible list of nonredundant constraints that fully describe the strict weak order model for |𝒞| = 3 (Fiorini and Fishburn, 2004). Such constraints are called facet-defining inequalities. Each of these inequalities defines a facet of the polytope, that is, a face of maximal dimension.5
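The two representations of the three-option polytope can be cross-checked directly. The sketch below builds the 0/1 vertices of (7) for 𝒞 = {a, b, c} and verifies that each satisfies the three constraint families. It assumes that WO3 is the inequality P_ik ≤ P_ij + P_jk for distinct i, j, k, the form implied by negative transitivity (3); the function names and the rank-based enumeration are illustrative choices.

```python
from itertools import permutations, product

OPTIONS = "abc"
PAIRS = list(permutations(OPTIONS, 2))  # the six coordinates P_ab, P_ac, ...

def weak_order_vertices():
    """0/1 vertices as in (7), one per strict weak order on three options."""
    vertices = set()
    # Every strict weak order is induced by some assignment of ranks 0..2,
    # so enumerating rank vectors and removing duplicates yields all of them.
    for ranks in product(range(3), repeat=3):
        rank = dict(zip(OPTIONS, ranks))
        vertices.add(tuple(int(rank[i] > rank[j]) for i, j in PAIRS))
    return sorted(vertices)

def satisfies_wo(P, tol=1e-12):
    """Check WO1-WO3 (WO3 in its assumed form) for a dict P[(i, j)]."""
    wo1 = all(P[i, j] >= -tol for i, j in PAIRS)
    wo2 = all(P[i, j] + P[j, i] <= 1.0 + tol for i, j in PAIRS)
    wo3 = all(P[i, k] <= P[i, j] + P[j, k] + tol
              for i, j, k in permutations(OPTIONS, 3))
    return wo1 and wo2 and wo3

def mixture(weights, vertices):
    """Convex combination of vertices, returned as a dict keyed by pair."""
    coords = [sum(w * v[d] for w, v in zip(weights, vertices))
              for d in range(len(PAIRS))]
    return dict(zip(PAIRS, coords))
```

A point such as Pac = 1 with all other coordinates equal to 0 passes WO1 and WO2 but fails the assumed WO3: it describes a person who always prefers a to c yet never strictly prefers a to b or b to c, which no mixture of strict weak orders can produce.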

Obtaining a minimal description of convex polytopes can be computationally prohibitive. Our experiment used three stimulus sets of five alternatives each. The minimal description of the polytope in terms of facet-defining inequalities for the case of interest to us, where |𝒞| = 5, was previously unknown. We determined this description using public domain software, PORTA, the POlyhedron Representation Transformation Algorithm, of T. Christof & A. Löbel, 1997.6 That strict weak order polytope has 541 vertices in a vector space of dimension 20. This polytope occupies only about one two-thousandth of the space of trinomial probabilities. It is characterized by 75,834 facet-defining inequalities (see Online Supplement).7 The following is one of these 75,834 constraints. It interrelates all 20 choice probabilities for 𝒞 = {a, b, c, d, e}.

| (8) |

How can one test thousands of joint constraints like (8)? First, it is possible that the data fall inside the tiny polytope, namely if the observed choice proportions themselves satisfy all 75,000+ constraints (see Figure 1). When they do, the observed choice frequencies are precisely within the range of predicted values and we do not need to compute statistical goodness-of-fit.8 When the observed choice proportions fall outside the polytope, then, writing Nij for the observed frequency with which a person chose object i when offered the ternary paired comparison among i and j altogether K times, and assuming iid sampling, the likelihood function is given by the product of trinomials

$$Lik = \prod_{\substack{\{i, j\} \subseteq \mathcal{C} \\ i \neq j}} \frac{K!}{N_{ij}!\, N_{ji}!\, (K - N_{ij} - N_{ji})!}\; P_{ij}^{N_{ij}}\, P_{ji}^{N_{ji}}\, (1 - P_{ij} - P_{ji})^{K - N_{ij} - N_{ji}}.$$

It can be shown that the log-likelihood ratio test statistic, G², need not have an asymptotic chi-square distribution, because the model in Equation 5 imposes inequality constraints on the probabilities Pij, Pji. The asymptotic distribution of G² is a mixture of chi-square distributions, with the mixture weights depending on the structure of the polytope in the neighborhood around the maximum likelihood point estimate (Davis-Stober, 2009; Myung et al., 2005; Silvapulle and Sen, 2005). Depending on the location of the point estimate on the weak order polytope, we need to employ some of the 75,000+ facet-defining inequalities to derive a suitable mixture of chi-square distributions. Next, we use Davis-Stober’s (2009) methods, implemented in a MATLAB© program, to test the model on new data.
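To make the ingredients of G² concrete, the sketch below evaluates the trinomial log-likelihood of a single gamble pair and the resulting likelihood ratio statistic against a fixed candidate point. It is not Davis-Stober's (2009) procedure: it neither searches the polytope for the constrained maximum likelihood estimate nor derives the mixture weights of the reference distribution. The counts are those quoted for Participant # 9 (Gamble Set III, pair a, b); the candidate probabilities and the function names are hypothetical.

```python
from math import lgamma, log

def trinomial_loglik(counts, probs):
    """Log-likelihood of one gamble pair.

    counts and probs are ordered (chose i, chose j, indifferent)."""
    total = sum(counts)
    # Log of the multinomial coefficient K! / (N_ij! N_ji! N_indiff!).
    loglik = lgamma(total + 1) - sum(lgamma(n + 1) for n in counts)
    for n, p in zip(counts, probs):
        if n > 0:
            if p <= 0.0:
                return float("-inf")  # an observed outcome has probability zero
            loglik += n * log(p)
    return loglik

def g_squared(counts, probs):
    """G^2 = 2 * (log-lik at observed proportions - log-lik at candidate probs)."""
    total = sum(counts)
    proportions = tuple(n / total for n in counts)
    return 2.0 * (trinomial_loglik(counts, proportions)
                  - trinomial_loglik(counts, probs))

# Participant # 9, Set III, pair (a, b): 28 choices of a, 8 of b, 9 indifferent.
counts_ab = (28, 8, 9)
# Hypothetical candidate probabilities (P_ab, P_ba, indifference).
candidate = (0.5, 0.3, 0.2)
g2_ab = g_squared(counts_ab, candidate)
```

Under the iid assumption, the statistic for a full data set is the sum of such terms over all 10 gamble pairs, evaluated at the best-fitting point of the polytope rather than at an arbitrary candidate.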

3. Experiment and Data Analysis

Our experimental study built on Tversky (1969) and used stimuli similar to those of Regenwetter et al. (2011). The main change was that decisions were ternary paired comparisons. We investigated the behavior of 30 participants and we secured high statistical power by collecting 45 trials per gamble pair and per respondent. The gambles were organized into four sets: Gamble Set I, Gamble Set II, Gamble Set III, and Distractors. We provide details of the experiment, including recruitment, stimuli and our data, in the Online Supplement.

Table 1 provides our results for all 30 participants and the three gamble sets. When the ternary paired-comparison proportions are in the interior of the weak order polytope, we indicate this with a checkmark (✓). Data outside the polytope have positive G² values, with large p-values indicating statistically nonsignificant violations. Each G² needs to be evaluated against a specific “Chi-bar-squared” distribution; thus, individual G² values cannot be compared across results. Significant violations are marked in boldfaced font.

Table 1.

Goodness-of-fit for 30 individual respondents (Id 1–30) and three gamble sets (Set I, Set II, Set III). A checkmark (✓) indicates a perfect fit; positive G² values are accompanied by p-values in parentheses. Significant p-values (< .05), i.e., significant violations of the weak order model, are marked in bold.

| Id | Set I: G² (p-val.) | Set II: G² (p-val.) | Set III: G² (p-val.) |
|---|---|---|---|
| 1 | ✓ | ✓ | .23 (.91) |
| 2 | ✓ | ✓ | 2.23 (.55) |
| 3 | ✓ | 1.11 (.77) | 2.82 (.32) |
| 4 | ✓ | ✓ | **10.78 (.01)** |
| 5 | ✓ | .17 (.89) | ✓ |
| 6 | ✓ | ✓ | ✓ |
| 7 | ✓ | ✓ | ✓ |
| 8 | ✓ | ✓ | ✓ |
| 9 | .52 (.82) | 5.95 (.18) | ✓ |
| 10 | 2.82 (.47) | ✓ | ✓ |
| 11 | ✓ | ✓ | ✓ |
| 12 | ✓ | ✓ | ✓ |
| 13 | ✓ | ✓ | ✓ |
| 14 | **8.01 (.02)** | 1.22 (.38) | 12.09 (.05) |
| 15 | ✓ | .80 (.40) | .40 (.84) |
| 16 | ✓ | ✓ | ✓ |
| 17 | ✓ | ✓ | ✓ |
| 18 | ✓ | ✓ | 4.77 (.27) |
| 19 | ✓ | ✓ | ✓ |
| 20 | ✓ | ✓ | ✓ |
| 21 | ✓ | ✓ | 1.87 (.46) |
| 22 | 5.01 (.10) | **14.90 (< .01)** | 3.63 (.42) |
| 23 | .16 (.95) | .04 (.89) | .54 (.62) |
| 24 | ✓ | ✓ | ✓ |
| 25 | ✓ | ✓ | 6.42 (.08) |
| 26 | 1.93 (.48) | 1.30 (.75) | .06 (.92) |
| 27 | ✓ | ✓ | ✓ |
| 28 | ✓ | ✓ | ✓ |
| 29 | 6.04 (.26) | **14.81 (.01)** | ✓ |
| 30 | 1.03 (.54) | .04 (.88) | ✓ |

In Gamble Set I, 22 respondents generated choice proportions that satisfied all 75,000+ (nonredundant) inequality constraints that the model in Equation 5 imposes on the choice probabilities. In other words, 22 of 30 respondents generated a “perfect fit.” Given that the parameter space of the model occupies only a tiny fraction of the outcome space, this is a remarkable discovery. One out of 30 participants violated the model at a significance level of 5%. This is well within Type-I error range. In Gamble Set II, which created more difficult trade-offs between probability of winning and outcome, 20 of 30 respondents yielded a perfect fit. This is, again, an extraordinary finding. Among the remaining 10 respondents, two significantly violated the constraints of the model in Equation 5 at a significance level of 5%. Again, two significant violations are within a reasonable Type-I error range. For Gamble Set III, 18 of 30 participants generated choice proportions that satisfied all 75,000+ inequalities. The remaining 12 participants included one significant violation at the 5% level. This is yet again within Type-I error range.
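A rough way to gauge the "Type-I error range" is a back-of-the-envelope binomial calculation. The sketch below assumes, purely for illustration, that the 30 tests within a gamble set are independent and that each rejects a true model with probability exactly .05. Neither assumption is stated in the analysis above, and data inside the polytope can never produce a rejection, so the figures are only a loose benchmark. The function name is hypothetical.

```python
from math import comb

def prob_at_least(k, n=30, alpha=0.05):
    """P(X >= k) for X ~ Binomial(n, alpha)."""
    return sum(comb(n, x) * alpha**x * (1 - alpha)**(n - x)
               for x in range(k, n + 1))

# Expected number of false rejections among 30 tests at the 5% level.
expected_rejections = 30 * 0.05

# Chance of seeing at least one, or at least two, rejections by chance alone.
p_one_or_more = prob_at_least(1)
p_two_or_more = prob_at_least(2)
```

Under these assumptions about 1.5 rejections per gamble set are expected by chance, and two or more rejections occur with probability of roughly 0.45, so one or two significant violations per set are unremarkable.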

4. Discussions and Conclusions

A number of descriptive, prescriptive, and normative theories of decision making share the structural assumption that individual preferences are strict weak orders. These include the high-profile class of theories of decision making that rely on numerical representations of preferences, such as (subjective) expected utility and (cumulative) prospect theory, among many others. Noncompensatory models, such as lexicographic semiorders (Tversky, 1969) or the priority heuristic (Brandstätter et al., 2006) violate such structural properties.

To consider the future direction of decision research, we propose to carry out a thorough “triage” at a general level. If preferences are consistent with strict weak orders, then this has implications for the type of process models that can work, and it eliminates the large class of existing or potential theories that violate weak orders, e.g., because they predict intransitive preferences. On the other hand, if preferences are not consistent with strict weak orders, then we may have to give up modeling choice through numerical representations. This would have far-reaching consequences, e.g., in modeling economic behavior.

We believe that much confusion can be eliminated if we find successful means to distinguish variability of overt choice from structural inconsistency of hypothetical, latent preference. We have shown how to formulate a probabilistic model of weak order preference and, equivalently, a probabilistic model of numerical utility. While the basic concepts underlying these mathematical models, by themselves, are not new, the models have never been fully characterized nor have they ever been tested empirically in their full generality using quantitative methods. We leverage new mathematical and statistical results to offer the first direct, parsimonious and quantitative test of weak order preferences and numerical utility representations to date.

Our approach is furthermore guided by three key criteria for the rigorous development and testing of psychological theories (Roberts and Pashler, 2000; Rodgers and Rowe, 2002). 1) Researchers should understand the full scope of a theory’s predictions and focus on models that make highly restrictive predictions. 2) Researchers should understand and control or limit the sampling variability in their data. 3) Researchers should more often ask themselves: “what would disprove my theory?” (Roberts and Pashler, 2000, p. 366). The a priori expectation should be that the theory will be rejected, hence a good fit should be a surprise.

1) Scope and Parsimony

The weak order model makes predictions on stimuli like our noncash prospects A and C for which other theories, such as the priority heuristic and parametric versions of cumulative prospect theory, are mute. We have fully characterized the model for five stimuli. It forms a convex polytope in a 20-dimensional space, characterized by precisely 75,834 nonredundant mathematical constraints that confine it to a tiny fraction of the space of all possible ternary paired comparison probabilities. We derive these restrictive predictions using a minimum of mathematical assumptions, i.e., we expressly avoid common auxiliary assumptions that are, at best, idealizations of a more complex reality.
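The geometry described here can be illustrated with a short sketch, offered as an illustration under stated assumptions rather than as the authors' computation. It assumes that each strict weak order on five stimuli is encoded as a 0/1 vector over the 20 ordered pairs (i, j), with a 1 where i is strictly preferred to j, and that the model's choice probabilities are probability mixtures of these vectors. The names `vertex` and `mixture` are hypothetical. The vertices are generated from utility assignments, which reflects the equivalence between weak orders and numerical utilities.

```python
from itertools import product

N = 5  # number of stimuli
# The 20 ordered pairs (i, j), i != j: one coordinate per ternary paired comparison probability P_ij.
PAIRS = [(i, j) for i in range(N) for j in range(N) if i != j]

def vertex(utilities):
    """0/1 vector whose (i, j) coordinate is 1 iff i has strictly higher utility than j."""
    return tuple(1 if utilities[i] > utilities[j] else 0 for (i, j) in PAIRS)

# Every strict weak order on N items is induced by some utility assignment
# with values in 0..N-1, so collecting distinct vectors yields all vertices.
VERTICES = sorted({vertex(u) for u in product(range(N), repeat=N)})

def mixture(weights):
    """Choice probabilities induced by a probability distribution over the vertices."""
    return [sum(w * v[k] for w, v in zip(weights, VERTICES)) for k in range(len(PAIRS))]

print(len(PAIRS), len(VERTICES))

# Example: a uniform distribution over all weak orders.
uniform = [1 / len(VERTICES)] * len(VERTICES)
p = dict(zip(PAIRS, mixture(uniform)))
# Because ties have positive probability, P_ij + P_ji stays below 1.
print(round(p[(0, 1)] + p[(1, 0)], 3))
```

The sketch produces only the vertex description. Converting it into the minimal list of facet-defining inequalities is the computationally hard step and is not attempted here.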

2) Data Variability

The choice frequencies differ dramatically between respondents. We did not aggregate across participants, because such aggregated data need not be representative of even a single person. Even within person we went beyond the standard approach that often merely considers modal choices. We provided and tested highly specific quantitative predictions about the choice proportions for each individual. By analyzing individual participants separately we controlled for inter-individual differences. By collecting 45 observations per trinomial for each person, we reduced sampling variability substantially, especially compared to studies with one observation per person per gamble pair.
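As a rough illustration of the gain from repeated observations, the sketch below computes the standard error of a single observed choice proportion under iid sampling, the same sampling assumption the data analysis makes. The function name `standard_error` and the example probability of 0.5 are illustrative assumptions, not values from the study.

```python
from math import sqrt

def standard_error(p: float, n: int) -> float:
    """Standard error of an observed proportion with true probability p and n iid trials."""
    return sqrt(p * (1 - p) / n)

P_EXAMPLE = 0.5  # hypothetical choice probability, chosen because it maximizes the variance

for n in (1, 45):
    print(n, round(standard_error(P_EXAMPLE, n), 3))

# The standard error shrinks with the square root of the number of observations.
reduction_factor = standard_error(P_EXAMPLE, 1) / standard_error(P_EXAMPLE, 45)
print(round(reduction_factor, 2))
```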

3) How Surprising is the Model Fit?

By including stimuli with a historic reputation for allegedly yielding intransitive preferences, we biased the experimental paradigm against the weak order model. Even disregarding the nature of the stimuli themselves, for 5 choice alternatives, 99% of asymmetric binary preference relations violate weak orders and only a tiny fraction of all conceivable ternary paired comparison probabilities lie inside the polytope. Using simulation methods, we estimated the average power of our test to be about .96.
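The 99% figure can be checked by brute force. The sketch below is an illustration, not the authors' code. It assumes that “asymmetric binary preference relations” means all relations in which each of the 10 unordered pairs of alternatives is in one of three states (i over j, j over i, or neither), and it uses the standard characterization of a strict weak order as an asymmetric, negatively transitive relation. The function names are hypothetical.

```python
from itertools import permutations, product

N = 5  # number of choice alternatives
UNORDERED = [(i, j) for i in range(N) for j in range(i + 1, N)]  # 10 unordered pairs
TRIPLES = list(permutations(range(N), 3))  # ordered triples of distinct alternatives

def is_negatively_transitive(rel):
    """rel is a set of pairs (a, b) meaning 'a is strictly preferred to b'.

    Negative transitivity: if a is preferred to c, then for every b,
    a is preferred to b or b is preferred to c.
    """
    return all((a, c) not in rel or (a, b) in rel or (b, c) in rel
               for a, b, c in TRIPLES)

def count_relations():
    """Count all asymmetric relations on N items and those that are strict weak orders."""
    total = weak = 0
    # State 0: neither direction; 1: i over j; 2: j over i. Asymmetry holds by construction.
    for states in product((0, 1, 2), repeat=len(UNORDERED)):
        rel = set()
        for (i, j), s in zip(UNORDERED, states):
            if s == 1:
                rel.add((i, j))
            elif s == 2:
                rel.add((j, i))
        total += 1
        weak += is_negatively_transitive(rel)
    return total, weak

total, weak = count_relations()
print(total, weak, round(1 - weak / total, 4))
```

The count of strict weak orders obtained this way agrees with the 541 reported for five alternatives, so fewer than 1% of the asymmetric relations are weak orders.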

Yet, our data analysis revealed a remarkable picture: Over all three experimental conditions combined, 60 out of 90 data sets generated choice proportions that lie inside of the tiny space allowed by the model. Hence, two thirds of our data sets yielded a perfect fit. A perfect fit means that the model generated predictions that are so accurate that, no matter how small the significance level α, we cannot reject the model. Combining all three conditions, only 5 out of 90 data sets violated the strict weak order model at a 5% significance level. This is just about at the usual Type-I error level. We also cross-replicated the excellent model performance within individuals across three stimulus sets, including noncash gambles: Of 30 individuals, 13 consistently fit perfectly across all three gamble sets, and none generated a significant violation for more than a single gamble set.

We plan to complement this work with a Bayesian analysis along the lines sketched by Myung et al. (2005). By the likelihood principle and thanks to our large sample size, we expect the Bayesian viewpoint to reinforce our substantive conclusions, unless one employs prior distributional assumptions that give little weight to the empirical data. Since a Bayesian approach does not allow for a perfect fit, we predict that our perfect fit results will translate into very high Bayes factors in favor of the weak order model over an unconstrained model (that permits all ternary choice probabilities).

Variable choice behavior is often cited as a reason to reject structural properties of algebraic theories, such as, here, weak orders and numerical utility representations. We show that variability of preference (or uncertainty about preference) can be separated from violations of structural properties, even without requiring an error theory. In our model, all strict weak orders are allowable preference states, i.e., we allow for maximal variability in latent preference and overt choice. But we also require perfect adherence to the structural properties of strict weak orders in latent preference. Adding a random error component to the model would relax our constraints by inflating the weak order polytope, and would automatically further improve the already excellent fit.

Common sense tells us that variability of, say, restaurant choice, should not be mistaken for incoherence in, say, food preference. Probabilistic models like the weak order model allow us to tease apart variability of choice from inconsistency of preference. Future work should replicate our findings in other labs and expand the analysis to other stimulus sets.

Acknowledgements

We thank J. Dana for providing the computer program for the experiment, and A. Kim, W. Messner, and A. Popova, e.g., for data collection. We thank the referees and M. Birnbaum, M. Fifić, Y. Guo, R. D. Luce, A. A. J. Marley, A. Popova, and C. Zwilling for comments on earlier drafts. This work was supported by the Air Force Office of Scientific Research (FA9550-05-1-0356, Regenwetter, PI), the National Institute of Mental Health (T32 MH014257-30, Regenwetter, PI), and the National Science Foundation (SES 08-20009, Regenwetter, PI). An early draft of this article was written while the first author was a guest scientist of the “ABC group” at the Max Planck Institute for Human Development in Berlin, and while the second author was supported by a Dissertation Completion Fellowship of the University of Illinois. Any opinions, findings, and conclusions or recommendations expressed in this publication are those of the authors and need not reflect the views of colleagues, funding agencies, the Max Planck Society, the University of Illinois, or the University of Missouri.

Footnotes

Note, however, that the converse does not hold. For example, for five choice alternatives, there are 541 strict weak orders (Fiorini, 2001), whereas there are 154,303 different transitive relations (Pfeiffer, 2004).

Similar ideas have been used in signal detection theory with multiple response categories (Macmillan and Creelman, 2005).

Notice that we do not require noncoincidence, i.e., we do not require P (Ui = Uj) = 0 for (i ≠ j). Random utility models in econometrics often satisfy noncoincidence because they rely on, say, multivariate normal or extreme value distributions. Such distributional assumptions are known idealizations that serve the purpose of making modeling and statistical estimation tractable. One can show that noncoincidence effectively forces “completeness” of binary preferences, because indifference statements have probability zero, according to noncoincidence, hence incomplete relations ≻ have zero probability in (5). This is precisely the assumption that we do not want to make when testing weak order preferences and/or testing numerical utility. The most interesting applications of these models are when P (Ui = Uj) > 0, and thus Pij + Pji < 1.

We also do not assume that the random variables U are mutually independent, as do some Thurstonian models in psychology. Because we strive to avoid technical convenience assumptions, especially distributional assumptions that would imply noncoincidence or independence assumptions in (6), our approach differs from standard off-the-shelf measurement models as well as signal-detection models that relate subjective percepts to an objective ground truth (Macmillan and Creelman, 2005). In our data analysis, we do require that the data result from an independent and identically distributed (iid) random sample, due to a lack of statistical tools for non-iid samples at this time. However, the iid sampling assumption neither assumes nor implies independence among the random variables (Uc)c∈𝒞 in the model (6).

Triangles, parallelograms, etc. are examples of convex polygons in two-space; cubes, pyramids, etc. are examples of convex polyhedra in three-space.

For example, a three-dimensional cube has 8 square-shaped facets and a tetrahedron has four triangular facets. The edges of these polyhedra are one-dimensional faces, and hence not facets.

http://www.iwr.uni-heidelberg.de/groups/comopt/software/PORTA/. Translating the vertex representation into a minimal description took three weeks of continuous computation on a powerful PC.

In particular, it can be shown that Equation 5 again implies the family of so-called “triangle inequalities,” labeled WO3 above. WO3 has previously surfaced for two-alternative forced choice because it is directly related to transitivity (Block and Marschak, 1960). Here, WO3 is only one of thousands of testable constraints that characterize the model. Unlike WO1–WO3, these constraints are not so straightforward to interpret.

Here, we take a frequentist view. We comment on Bayesian approaches in the Conclusion.

References

- Block HD, Marschak J. Random orderings and stochastic theories of responses. In: Olkin I, Ghurye S, Hoeffding H, Madow W, Mann H, editors. Contributions to Probability and Statistics. Stanford: Stanford University Press; 1960. pp. 97–132.

- Brandstätter E, Gigerenzer G, Hertwig R. The priority heuristic: Making choices without trade-offs. Psychological Review. 2006;113:409–432. doi: 10.1037/0033-295X.113.2.409.

- Davis-Stober CP. Analysis of multinomial models under inequality constraints: Applications to measurement theory. Journal of Mathematical Psychology. 2009;53:1–13.

- Fiorini S. PhD thesis. Université Libre de Bruxelles; 2001. Polyhedral Combinatorics of Order Polytopes.

- Fiorini S, Fishburn PC. Weak order polytopes. Discrete Mathematics. 2004;275:111–127.

- Heyer D, Niederée R. Generalizing the concept of binary choice systems induced by rankings: One way of probabilizing deterministic measurement structures. Mathematical Social Sciences. 1992;23:31–44.

- Kahneman D, Tversky A. Prospect theory: An analysis of decision under risk. Econometrica. 1979;47:263–291.

- Loomes G, Sugden R. Regret theory - an alternative theory of rational choice under uncertainty. Economic Journal. 1982;92:805–824.

- Loomes G, Sugden R. Incorporating a stochastic element into decision theories. European Economic Review. 1995;39:641–648.

- Macmillan N, Creelman C. Detection Theory: A User’s Guide. Mahwah, NJ: Lawrence Erlbaum; 2005.

- Miller G. The magical number seven, plus or minus two: Some limits on our capacity for processing information. Psychological Review. 1956;63:81–97.

- Myung J, Karabatsos G, Iverson G. A Bayesian approach to testing decision making axioms. Journal of Mathematical Psychology. 2005;49:205–225.

- Niederée R, Heyer D. Generalized random utility models and the representational theory of measurement: a conceptual link. In: Marley AAJ, editor. Choice, Decision and Measurement: Essays in Honor of R. Duncan Luce. Mahwah, NJ: Lawrence Erlbaum; 1997. pp. 155–189.

- Pfeiffer G. Counting transitive relations. Journal of Integer Sequences. 2004.

- Regenwetter M. Random utility representations of finite m-ary relations. Journal of Mathematical Psychology. 1996;40:219–234. doi: 10.1006/jmps.1996.0022.

- Regenwetter M, Dana J, Davis-Stober CP. Transitivity of preferences. Psychological Review. 2011;118:42–56. doi: 10.1037/a0021150.

- Regenwetter M, Marley AAJ. Random relations, random utilities, and random functions. Journal of Mathematical Psychology. 2001;45:864–912.

- Roberts FS. Measurement Theory. London: Addison-Wesley; 1979.

- Roberts S, Pashler H. How persuasive is a good fit? A comment on theory testing. Psychological Review. 2000;107:358–367. doi: 10.1037/0033-295x.107.2.358.

- Rodgers JL, Rowe DC. Theory development should begin (but not end) with good empirical fits: A comment on Roberts and Pashler (2000). Psychological Review. 2002;109:599–604. doi: 10.1037/0033-295x.109.3.599.

- Savage LJ. The Foundations of Statistics. New York: John Wiley & Sons; 1954.

- Silvapulle M, Sen P. Constrained Statistical Inference: Inequality, Order, and Shape Restrictions. New York: John Wiley & Sons; 2005.

- Tversky A. Intransitivity of preferences. Psychological Review. 1969;76:31–48.

- Tversky A, Kahneman D. Advances in prospect theory: Cumulative representation of uncertainty. Journal of Risk and Uncertainty. 1992;5:297–323.

- von Neumann J, Morgenstern O. Theory of Games and Economic Behavior. Princeton: Princeton University Press; 1947.

- Ziegler GM. Lectures on Polytopes. Berlin: Springer-Verlag; 1995.
