Abstract

We investigated how age of faces and emotion expressed in faces affect young (n = 30) and older (n = 20) adults’ visual inspection while viewing faces and judging their expressions. Overall, expression identification was better for young than older faces, suggesting that interpreting expressions in young faces is easier than in older faces, even for older participants. Moreover, there were age-group differences in misattributions of expressions, in that young participants were more likely to label disgusted faces as angry, whereas older adults were more likely to label angry faces as disgusted. In addition to effects of emotion expressed in faces, age of faces affected visual inspection of faces: Both young and older participants spent more time looking at own-age than other-age faces, with longer looking at own-age faces predicting better own-age expression identification. Thus, cues used in expression identification may shift as a function of emotion and age of faces, in interaction with age of participants.

Keywords: eye tracking, own-age effect, emotion, faces, age differences

Human faces represent a well-learned category of objects and they have great physical, social, and emotional relevance. Two of the most salient features of faces are age and emotion expressed. These features are extracted rapidly and affect how faces are processed by young and older adults. For example, there is evidence that very shortly after presentation of a face our cognitive system is sensitive to differences between young and older faces (Ebner, He, Fichtenholtz, McCarthy, & Johnson, 2010). Moreover, both young and older adults are more distracted by task-irrelevant own-age than other-age faces (Ebner & Johnson, in press) and are better at remembering own-age than other-age faces (Bäckman, 1991; see Harrison & Hole, 2009, for a review).

In addition, there are effects of the emotion expressed in faces. For example, older, but not young, adults show an attention preference and/or better memory for positive than negative faces (Ebner & Johnson, 2009; Isaacowitz, Wadlinger, Goren, & Wilson, 2006; see Mather & Carstensen, 2005, for a review; but see D’Argembeau & Van der Linden, 2004), and older relative to young adults show deficits in facial expression identification, particularly for negative expressions such as anger or sadness (see Ruffman, Henry, Livingstone, & Phillips, 2008, for a meta-analysis). Furthermore, both age groups are more accurate in identifying certain expressions (e.g., happiness) than others (e.g., anger; Ebner & Johnson, 2009; Murphy & Isaacowitz, in press; Ruffman et al., 2008); this latter finding, however, may largely reflect the fact that most studies present only one category of positive expressions along with more than one category of negative expressions.

To date, little is known about how age and emotion of faces may or may not interact in affecting young and older adults’ visual inspection of faces. The present study examined potential differences in visual attention based on the age and the expression of faces. There are several reasons why one might expect differences in young and older adults’ visual inspection of faces as a function of the age of the faces in addition to the emotion expressed. For example, age-related changes in facial features such as shape or surface texture and coloration of skin (Burt & Perrett, 1995) may influence how faces are inspected, and, possibly, affect how easy it is, and/or what cues are used, to identify different emotions. Also, age-related changes in motivational orientation may affect visual scan patterns, in that certain emotions (i.e., positive as opposed to negative) may become more relevant than others for older than young adults, as suggested by age differences in emotion regulation strategies (Carstensen, Isaacowitz, & Charles, 1999). At the same time, differences in young and older adults’ daily routines and interests may render own-age individuals the more likely social interaction partners (Ebner & Johnson, 2009; He, Ebner, & Johnson, in press). Or own-age faces may be more affectively laden and/or more self-relevant, as suggested by greater activation of ventromedial prefrontal cortex (a region associated with self-referential processing) for own-age than other-age faces (Ebner, Gluth, et al., in press). Thus, identification of expressions in own-age versus other-age faces may not only be more practiced but also more crucial for successful social interactions, particularly for some expressions, and paying greater attention to own-age than other-age faces or to some types of expressions may represent a cognitive adaptation to social/motivational factors.

Visual Inspection of Young and Older Faces

To our knowledge, only two studies so far have examined how young and older adults differ in their visual examination of young and older faces. These two studies vary somewhat in the procedures used and the conclusions drawn. Firestone, Turk-Browne, and Ryan (2007) recorded eye movements while participants rated the quality of pictures of young and older neutral faces and judged the age of the faces. There was no indication of an own-age effect in visual inspection of faces. For both age groups, overall looking time was greater for young than older faces (with older faces better remembered later), and participants looked longer at eyes of older than young faces, and longer at mouths of young than older faces. In addition, young participants looked longer at eyes than older participants, whereas older participants looked longer at the mouth than young participants. Somewhat surprisingly, only longer time viewing the nose was correlated with better old/new face recognition memory (and only in young but not older adults).

In contrast, using passive free viewing and a shorter presentation time, He et al. (in press) found that young and older adults looked longer at own-age than other-age faces, and this own-age effect in inspection time predicted the own-age effect in old/new face recognition memory. It is possible that asking participants to judge the age of faces (Firestone et al., 2007) focused participants on age-related features, thus reducing differences in patterns of visual inspection spontaneously associated with young and older faces in passive viewing as in He et al. (in press). Importantly, neither of these studies systematically varied the emotion expressed in the faces.

Visual Inspection of Faces with Different Expressions

Only a few studies have examined how different expressions affect visual examination of faces in young and older adults (Murphy & Isaacowitz, in press; Sullivan, Ruffman, & Hutton, 2007; Wong, Cronin-Golomb, & Neargarder, 2005). These studies were mostly directed at exploring age differences in visual scan patterns that might underlie age-related declines in expression identification. They were based on suggestions that viewing the lower half of faces facilitates identification of happiness and disgust, whereas viewing the upper half of faces facilitates identification of anger, fear, and sadness (Calder, Young, Keane, & Dean, 2000).

In Wong et al. (2005) young and older participants identified expressions and, in a separate passive viewing task, eye movements were recorded. Older compared to young participants were worse at identifying angry, fearful, and sad faces, but were better at identifying disgust; there were no age-group differences in identification of happiness and surprise. Across participant age groups, the longer participants fixated on angry, fearful, and sad faces, the worse was their expression identification. However, the pattern of visual inspection when people are explicitly trying to identify expressions may be different than the pattern during passive viewing of faces; thus conclusions from the Wong et al. study about the relation between inspection pattern and accuracy of expression identification (where inspection pattern and expression identification were obtained under different instructional sets) are tentative.

Recording eye movements while participants identified expressions, Murphy and Isaacowitz (in press) found that happy faces were more likely to be correctly identified than angry, fearful and sad faces, and that older compared to young participants were worse at identifying anger, fear, and sadness. Across expressions, young participants looked more at the upper half of faces than older participants. For angry faces, longer time looking at the lower half of faces was positively correlated with expression identification.

Sullivan et al. (2007; Experiment 2) also recorded eye movements during expression identification. Older compared to young participants were worse at identifying anger, but did not differ in any of the other expressions. Similar to Murphy and Isaacowitz (in press), young participants looked longer at the upper, whereas older participants looked longer at the lower, half of faces. Older participants looked longer at sad than happy faces, and at happy than angry faces. For anger, fear and sadness, young participants’ looking at the upper half of faces was associated with better, and lower half looking with worse, expression identification (this latter effect also held for older participants).

In sum, despite some inconsistencies in the findings across these studies, which may be due to methodological differences in the orienting tasks used and/or the eye-tracking variables extracted, they largely agree that there are differences in how young and older adults visually scan faces and that emotion expressed affects visual inspection. Also, they suggest that accuracy of identification of at least some expressions is related to visual scan patterns. Note, however, that all these studies investigating effects of emotion expressed used only young adults’ faces; the age of the presented faces was not varied.

The Present Study

We examined several questions derived from the previous literature: (1) Expression Identification: Based on earlier research, we expected better expression identification in young than older participants (Ebner & Johnson, 2009; Isaacowitz et al., 2007; Ruffman et al., 2008), and better identification of (at least negative) expressions for young than older faces (Ebner & Johnson, 2009). This latter outcome would extend Ebner and Johnson’s findings regarding differences in expression identification of young and older faces from neutral and angry to sad, fearful, and disgusted faces.

(2) Overall Gaze Time at Faces and Expression Identification: Given theoretical considerations that own-age faces represent personally and socially relevant types of faces that individuals would be especially motivated to accurately interpret with respect to emotions, we predicted that both young and older participants would look longer at own-age than other-age faces. This extends the study by He et al. (in press), which used neutral faces in a passive viewing paradigm, in that we used emotional, in addition to neutral, faces in the context of an expression identification task. Considering individuals’ greater interest in, and expertise with, own-age than other-age faces (Ebner & Johnson, 2009; Harrison & Hole, 2009; He et al., in press), we were furthermore interested in exploring whether longer looking at own-age faces in particular was associated with better expression identification of own-age faces.

(3) Gaze Time at Upper versus Lower Half of Faces and Expression Identification: Based on evidence that young adults look longer at the upper half whereas older adults look longer at the lower half of young emotional (Murphy & Isaacowitz, in press; Sullivan et al., 2007; Wong et al., 2005) and young and older neutral (Firestone et al., 2007) faces, we were interested in exploring how the age of faces may affect looking patterns at upper and lower half of emotional as well as neutral faces, and whether these gaze patterns were associated with expression identification.

In addition, based on theoretical suggestions (Calder et al., 2000) and empirical findings (Sullivan et al., 2007; Wong et al., 2005), we predicted longer looking at the upper half of angry, fearful, and sad, but longer looking at the lower half of happy and disgusted, faces. With respect to this latter prediction, we did not have specific expectations regarding differences between young and older faces, or about how differences would interact with participants’ age. However, if looking patterns at upper and lower half of faces for different expressions were independent of the age of faces and participants, this would suggest that the cues used in expression identification were similar for young and older faces and young and older participants.

Methods

Participants

Forty-six young adults (age range 18–30 years, M = 22.3, SD = 2.9, 59% women) were recruited through flyers on campus, and 33 older adults (age range 63–92 years, M = 74.9, SD = 7.8, 70% women) through flyers in community or senior citizen centers. Only participants who had more than 67% trials with valid gazing information (defined as gazes focused within 1° of visual angle for at least 0.1 seconds) were included in the analyses. This resulted in a final sample of 30 young (age range: 18–30 years, M = 22.62, SD = 3.36, 57% women) and 20 older (age range 63–92 years, M = 73.52, SD = 8.39, 70% women) participants.
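The inclusion rule can be sketched in a few lines. This is a minimal illustration, not the authors' processing pipeline: the function name `include_participant`, the per-trial boolean input format, and the example counts are assumptions; only the 67% threshold is taken from the text.

```python
from typing import Sequence

VALID_TRIAL_THRESHOLD = 0.67  # from the text: more than 67% of trials must be valid


def include_participant(trial_valid: Sequence[bool],
                        threshold: float = VALID_TRIAL_THRESHOLD) -> bool:
    """Return True if the share of trials with valid gaze data exceeds the threshold.

    `trial_valid` holds one flag per trial (hypothetical input format).
    """
    if not trial_valid:
        return False
    share_valid = sum(trial_valid) / len(trial_valid)
    return share_valid > threshold


# Hypothetical participant with 70 valid trials out of 96 (about 73%).
example_flags = [True] * 70 + [False] * 26
print(include_participant(example_flags))
```

How a single trial is classified as valid (gaze within 1° of visual angle for at least 0.1 seconds) is left outside this sketch.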

All participants were compensated for participation. Most young participants were Yale University undergraduates (varying majors). Older participants reported a mean of 16.9 years of education (SD = 1.6). Young and older participants did not differ in self-reported health, near vision, or negative affect, but older compared to young participants reported more positive affect (Young participants: M = 2.91, SD = 0.70; Older participants: M = 4.14, SD = 1.60), F (1, 49) = 13.77, p = .001, ηp2 = .22, and showed worse contrast sensitivity (Young participants: M = 1.68, SD = 0.15; Older participants: M = 1.56, SD = 0.14), F (1, 49) = 7.50, p = .010, ηp2 = .14, and visual-motor processing speed (Young participants: M = 65.03, SD = 10.08; Older participants: M = 47.64, SD = 7.37), F (1, 49) = 43.76, p < .001, ηp2 = .48. Health, cognition, vision, and affective measures were not significantly correlated with the gaze time measures.
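As a worked check, a two-group F ratio and partial eta squared can be approximated from the reported group sizes, means, and standard deviations. The sketch below assumes a standard one-way ANOVA on the final sample (30 young, 20 older); the function name `f_from_summary` is illustrative, and because the inputs are rounded, the results only approximate the reported statistics.

```python
def f_from_summary(n1, m1, sd1, n2, m2, sd2):
    """Approximate the one-way ANOVA F and partial eta squared for two groups
    from summary statistics (group size, mean, standard deviation)."""
    grand_mean = (n1 * m1 + n2 * m2) / (n1 + n2)
    ss_between = n1 * (m1 - grand_mean) ** 2 + n2 * (m2 - grand_mean) ** 2
    ss_within = (n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2
    ms_within = ss_within / (n1 + n2 - 2)
    f_value = ss_between / ms_within  # one numerator degree of freedom
    eta_p2 = ss_between / (ss_between + ss_within)
    return f_value, eta_p2


# Positive affect: young (n = 30) versus older (n = 20) participants.
f_affect, eta_affect = f_from_summary(30, 2.91, 0.70, 20, 4.14, 1.60)
# Visual-motor processing speed.
f_speed, eta_speed = f_from_summary(30, 65.03, 10.08, 20, 47.64, 7.37)
print(round(f_affect, 2), round(eta_affect, 2))
print(round(f_speed, 2), round(eta_speed, 2))
```

Both results land close to the reported F values of 13.77 and 43.76 and match the reported effect sizes of .22 and .48 after rounding.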

Stimuli and Equipment

Stimuli were taken from the FACES database (Ebner, Riediger, & Lindenberger, 2010). This database comprises digital high-quality color, front-view photographs of Caucasian faces of three different age groups, each displaying each of six expressions. All faces are standardized in terms of their production and general selection procedure and with respect to brightness, background color, and visible clothes, and show no eye-catching items such as beards or glasses (see Ebner et al., 2010 and Riediger, Voelkle, Ebner, & Lindenberger, 2011, this issue, for more detail). Equal numbers of faces of young (18–31 years) and older (69–80 years) individuals, half male and half female, were presented on a 17-inch display (1024 x 768 pixels) at a distance of 24 inches (face stimuli: 623 x 768 pixels; on grey background). Stimulus presentation was controlled using Gaze Tracker (Eye Response Technologies, Inc., Charlottesville, VA). An Applied Science Laboratories (Bedford, MA) Model 504 Eye Tracker recorded eye movements at a rate of 60 Hz.

Procedure and Measures

After informed consent, participants filled in a demographic and physical health questionnaire, and worked on the Digit-Symbol-Substitution Test (visual-motor processing speed; Wechsler, 1981) followed by the Rosenbaum Pocket Vision Screener (near vision; Rosenbaum, 1984), the MARS Letter Contrast Sensitivity Test (Arditi, 2005), and the Positive and Negative Affect Schedule (Watson, Clark, & Tellegen, 1988). Next, participants rested their head on a chinrest and the eye tracking camera was adjusted to locate the corneal reflection and pupil of participants’ left eye, followed by an individual 9-point calibration covering the area of stimulus presentation. Participants then worked on the Expression Identification Task for about 20 minutes, during which their eye movements were recorded. Before the task, the experimenter gave verbal instructions and a computer program provided written instructions and practice runs.

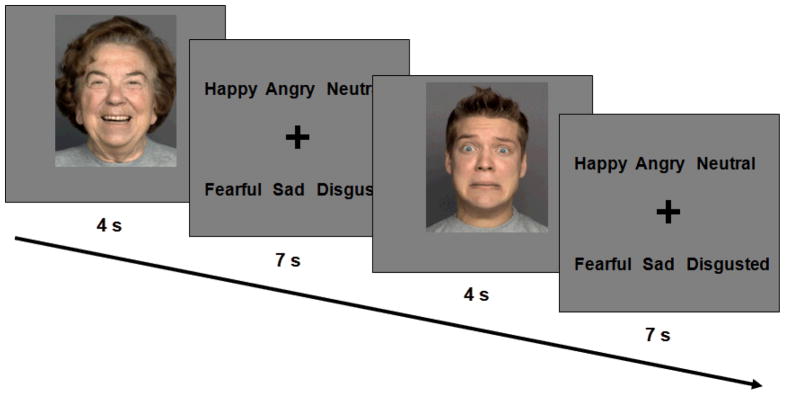

As shown in Figure 1, during this task participants saw pictures, one at a time, of 48 young and 48 older faces. Each face displayed either a happy, neutral, angry, fearful, sad, or disgusted expression. The presentation of a face identity with an expression was counterbalanced across participants (each participant only saw each face with one expression). Each of the resulting 6 presentation orders was pseudo-randomized with the constraint that no more than two faces of the same category (age, gender, expression) appeared in a row. Each face was presented for 4 seconds. Participants were instructed to “Look naturally at whatever is interesting to you in the images as if you were at home watching TV”, while blinking naturally. After a face disappeared, the response options (happy, angry, neutral, fearful, sad, disgusted; always presented in this order) appeared on the screen with a fixation cross for 7 seconds, and participants said aloud what expression was shown. To reduce head movement, participants gave their verbal response only when the response options appeared, and they were instructed to realign their gaze to the screen center once they had given their response. The experimenter recorded the responses.
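One way to build such a constrained order is a randomized greedy procedure with restarts, sketched below. This is not the authors' procedure, which the text does not describe. The sketch assumes that the constraint applies to each attribute separately (age, gender, expression) and that the 96 faces are evenly spread over the 24 age x gender x expression cells; the function names are hypothetical.

```python
import random
from itertools import product

AGES = ("young", "older")
GENDERS = ("female", "male")
EXPRESSIONS = ("happy", "neutral", "angry", "fearful", "sad", "disgusted")
MAX_RUN = 2  # from the text: no more than two same-category faces in a row


def creates_long_run(order, candidate, max_run=MAX_RUN):
    """True if appending `candidate` would extend a run beyond `max_run`
    on any single attribute (age, gender, or expression)."""
    if len(order) < max_run:
        return False
    recent = order[-max_run:]
    return any(
        all(trial[i] == candidate[i] for trial in recent)
        for i in range(len(candidate))
    )


def pseudo_randomize(trials, rng, max_attempts=1000):
    """Pick trials at random among those that keep all runs short;
    start over whenever no remaining trial fits."""
    for _ in range(max_attempts):
        remaining = list(trials)
        order = []
        while remaining:
            allowed = [t for t in remaining if not creates_long_run(order, t)]
            if not allowed:
                break  # dead end, restart with a fresh attempt
            choice = rng.choice(allowed)
            remaining.remove(choice)
            order.append(choice)
        if not remaining:
            return order
    raise RuntimeError("no valid order found; increase max_attempts")


# Assumed design: 4 faces in each of the 24 cells, 96 trials in total.
trials = [cell for cell in product(AGES, GENDERS, EXPRESSIONS) for _ in range(4)]
order = pseudo_randomize(trials, random.Random(2011))
print(order[:5])
```

Plain shuffling with rejection would almost never satisfy the run limit for the two-level attributes over 96 trials, which is why the sketch builds the order step by step.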

Figure 1.

Timing and sample faces used in Expression Identification Task.

As in He et al. (in press; see also Firestone et al., 2007), we used gaze time (amount of time pupil and corneal reflection were recorded on any point on the face, regardless of length) as the main outcome variable. We chose this variable instead of looking time based on temporal criteria (e.g., fixations; Murphy & Isaacowitz, in press; Sullivan et al., 2007; Wong et al., 2005) to account for evidence that some facial features (e.g., race) seem to be processed already around 100 ms after face onset (He, Johnson, Dovidio, & McCarthy, 2009; Liu, Harris, & Kanwisher, 2002), and even when exposed to a face for as short as 30 ms (Cunningham et al., 2004). In addition, each face was approximately evenly divided into an upper half (covering the area around the eyes) and a lower half (covering the area around the mouth), without overlap or gap, and gaze time to these two areas of interest was extracted. At the end of the session, participants were debriefed.
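The gaze time measure can be illustrated with a short sketch that counts 60 Hz samples falling on the face and on its upper and lower halves. The sample format, the rectangular face region, the exact midline split, and the function name `gaze_times` are assumptions for illustration; the text only specifies the sampling rate and an approximately even split without overlap or gap.

```python
from typing import Iterable, Optional, Tuple

SAMPLE_RATE_HZ = 60  # eye tracker sampling rate reported in the text

# One gaze sample: (x, y) in screen pixels, or None when no gaze was recorded.
Sample = Optional[Tuple[float, float]]


def gaze_times(samples: Iterable[Sample], face_left: float, face_top: float,
               face_width: float, face_height: float) -> Tuple[float, float, float]:
    """Return (overall, upper-half, lower-half) gaze time in seconds.

    Assumes y increases downward and that the two areas of interest meet at
    the vertical midpoint of the face rectangle.
    """
    midline = face_top + face_height / 2.0
    upper = lower = 0
    for sample in samples:
        if sample is None:
            continue
        x, y = sample
        on_face = (face_left <= x < face_left + face_width
                   and face_top <= y < face_top + face_height)
        if not on_face:
            continue
        if y < midline:
            upper += 1
        else:
            lower += 1
    return ((upper + lower) / SAMPLE_RATE_HZ,
            upper / SAMPLE_RATE_HZ,
            lower / SAMPLE_RATE_HZ)


# Hypothetical trial: 60 samples near the eyes, 30 near the mouth,
# 30 lost samples, and 10 samples off the face.
demo = [(500.0, 100.0)] * 60 + [(500.0, 600.0)] * 30 + [None] * 30 + [(5.0, 5.0)] * 10
print(gaze_times(demo, face_left=200, face_top=0, face_width=623, face_height=768))
```

Every on-face sample counts toward gaze time regardless of how long the gaze stays in place, which mirrors the choice not to impose a fixation duration criterion.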

Results

Alpha was set at .050 for all statistical tests.

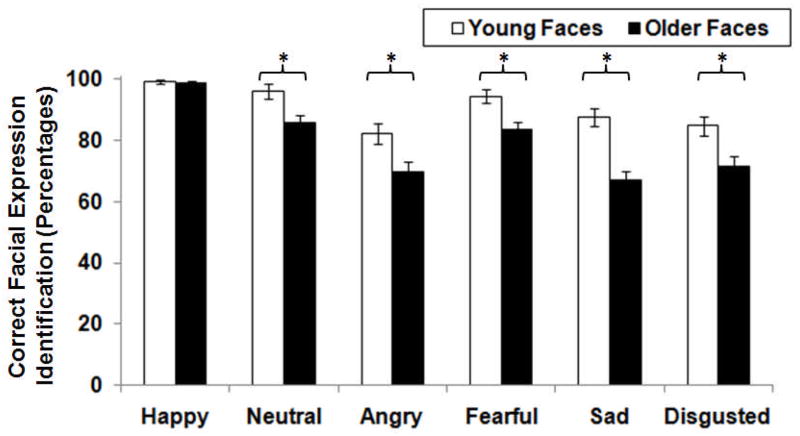

Expression Identification

We conducted a 2 (Age of Participant: Young, Older) x 2 (Age of Face: Young, Older) x 6 (Facial Expression: Happy, Neutral, Angry, Fearful, Sad, Disgusted) mixed-model analysis of variance (ANOVA) on percentage of correct expression identification with Age of Participant as a between-subjects factor and Age of Face and Facial Expression as within-subject factors (see Figure 2). There were main effects for Age of Face, Wilks’ λ = .28, F (1, 48) = 122.25, p < .001, ηp2 = .72, and Facial Expression, Wilks’ λ = .15, F (5, 44) = 49.69, p < .001, ηp2 = .85, and an Age of Face x Facial Expression interaction, Wilks’ λ = .30, F (5, 44) = 20.63, p < .001, ηp2 = .70 (see Figure 2). No other effect was significant. Both young and older participants were better at identifying expressions in young (M = 91%, SD = 6.6%) than older (M = 80%, SD = 12.7%) faces (i.e., there was no indication of an own-age effect in expression identification). As shown in Figure 2, better expression identification for young faces held true for angry, Wilks’ λ = .80, F (1, 49) = 11.88, p = .001, ηp2 = .20, neutral, Wilks’ λ = .69, F (1, 49) = 21.33, p < .001, ηp2 = .31, fearful, Wilks’ λ = .63, F (1, 49) = 27.99, p < .001, ηp2 = .37, sad, Wilks’ λ = .49, F (1, 49) = 49.51, p < .001, ηp2 = .51, and disgusted, Wilks’ λ = .75, F (1, 49) = 15.75, p < .001, ηp2 = .25, faces. The only exception was happiness, for which expression identification was close to ceiling for both young (M = 99 %, SD = 3.0 %) and older (M = 99 %, SD = 3.4 %) faces.

Figure 2.

Percentage of correct expression identification separately for young and older faces and for each facial expression. Error bars represent the standard errors of the condition mean differences; * p < .05.

Happy faces (M = 99 %, SD = 2.5 %) were more likely to be correctly identified than neutral (M = 91%, SD = 13.4 %), F (1, 49) = 18.95, p < .001, ηp2 = .28, angry (M = 76%, SD = 16.5 %), F (1, 49) = 93.56, p < .001, ηp2 = .66, fearful (M = 89 %, SD = 12.0 %), F (1, 49) = 34.46, p < .001, ηp2 = .41, sad (M = 77 %, SD = 13.9 %), F (1, 49) = 113.04, p < .001, ηp2 = .70, or disgusted (M = 78%, SD = 18.5 %), F (1, 49) = 65.20, p < .001, ηp2 = .57, faces. Fearful faces were more likely to be correctly identified than disgusted, F (1, 49) = 16.80, p < .001, ηp2 = .26, sad, F (1, 49) = 23.97, p < .001, ηp2 = .33, and angry, F (1, 49) = 33.30, p < .001, ηp2 = .41, faces. Neutral faces were more likely to be correctly identified than angry, F (1, 49) = 27.67, p < .001, ηp2 = .36, sad, F (1, 49) = 25.91, p < .001, ηp2 = .35, and disgusted, F (1, 49) = 15.13, p < .001, ηp2 = .24, faces. There were no differences between neutral and fearful faces, or among disgusted, sad, and angry faces.
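The effect sizes reported alongside the F tests can be checked against the standard relation between F, its degrees of freedom, and partial eta squared. The sketch below applies that textbook formula to a few of the reported tests; the function name is illustrative, and the check is offered as a reader aid rather than a description of the authors' software output.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared recovered from an F ratio and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Reported tests: (label, F, df_effect, df_error)
reported = [
    ("Age of Face main effect", 122.25, 1, 48),
    ("Facial Expression main effect", 49.69, 5, 44),
    ("Age of Face x Facial Expression", 20.63, 5, 44),
    ("Happy versus angry faces", 93.56, 1, 49),
]
for label, f_value, df_effect, df_error in reported:
    print(label, round(partial_eta_squared(f_value, df_effect, df_error), 2))
```

The rounded results agree with the reported values of .72, .85, .70, and .66. The Wilks' λ values reported for the omnibus effects are in turn consistent with 1 minus partial eta squared (for example, .28 and .72).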

Unexpectedly, young and older participants (with valid gaze information) did not differ in their overall ability to identify expressions. However, when we included those participants with behavioral data but who did not meet our criteria for analyzing gaze information, young participants (M = 86%, SD = 6.7%) showed better expression identification than older participants (M = 80%, SD = 10.6%), F (1, 77) = 9.88, p = .002, ηp2 = .11. Post-hoc direct comparisons between participants with valid and invalid gaze information, separately for young and older participants, on chronological age, health, cognition, vision, and socio-affective measures showed no differences for young participants but better self-reported general health for older participants with valid (M = 4.35, SD = 0.67) than invalid (M = 3.70, SD = 0.82), F (1, 28) = 5.38, p = .028, ηp2 = .16, gaze information, and better visual-motor processing speed for older participants with valid (M = 47.65, SD = 7.37) than invalid (M = 38.00, SD = 6.55), F (1, 28) = 12.25, p = .002, ηp2 = .30, gaze information.

Table 1 shows correct and erroneous categorizations of faces separately for young and older participants, young and older faces, and each expression. As can be concluded from the pattern of results depicted in Table 1, both young and older participants were unlikely to confuse positive with other expressions: On average, across young and older participants and young and older faces, only 0.6% happy faces were erroneously identified as either neutral, angry, fearful, sad, or disgusted. Likewise, only 2.1% neutral, angry, fearful, sad, or disgusted faces were erroneously identified as happy. Interestingly, young and older participants differed in their misattributions of expressions: The most typical error made by young participants was to interpret disgusted faces as angry (25.0%); older participants were less likely to make this error (11.2%), F (1, 48) = 5.13, p = .028, ηp2 = .10. In contrast, the most typical error made by older participants involved interpreting angry faces as showing disgust (30.6%); young participants were less likely to make this error (14.6%), F (1, 48) = 6.20, p = .016, ηp2 = .11. In addition, for both young and older participants, misattribution of disgusted faces as angry was more likely for older (26.0%) than young (10.2%) faces, Wilks’ λ = .83, F (1, 48) = 9.80, p = .003, ηp2 = .17.

Table 1.

Percentages of correct and erroneous responses in expression identification

| Categorized as: | Happy | Neutral | Angry | Fearful | Sad | Disgusted |
|---|---|---|---|---|---|---|
| Young Participants | | | | | | |
| Young Faces | | | | | | |
| Happy | **100.0** | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| Neutral | 0.0 | **98.3** | 0.8 | 0.4 | 0.4 | 0.0 |
| Angry | 0.0 | 1.7 | **85.4** | 0.0 | 7.1 | 5.8 |
| Fearful | 0.0 | 0.8 | 2.1 | **93.3** | 0.4 | 3.3 |
| Sad | 0.0 | 1.7 | 0.4 | 5.0 | **87.5** | 5.4 |
| Disgusted | 0.0 | 0.0 | 7.1 | 2.9 | 6.2 | **83.3** |
| Older Faces | | | | | | |
| Happy | **99.2** | 0.4 | 0.0 | 0.0 | 0.0 | 0.4 |
| Neutral | 1.3 | **91.2** | 2.5 | 0.8 | 3.4 | 0.8 |
| Angry | 0.0 | 6.7 | **70.4** | 1.7 | 12.5 | 8.8 |
| Fearful | 2.9 | 0.4 | 4.2 | **87.1** | 1.7 | 3.3 |
| Sad | 0.0 | 5.8 | 5.4 | 10.4 | **68.8** | 8.8 |
| Disgusted | 0.0 | 0.0 | 17.9 | 1.2 | 12.1 | **68.8** |
| Older Participants | | | | | | |
| Young Faces | | | | | | |
| Happy | **98.1** | 1.2 | 0.0 | 0.0 | 0.0 | 0.0 |
| Neutral | 0.0 | **93.1** | 2.5 | 1.9 | 2.5 | 0.0 |
| Angry | 0.0 | 0.6 | **77.5** | 3.1 | 1.9 | 15.0 |
| Fearful | 0.6 | 0.6 | 0.6 | **96.2** | 0.0 | 1.2 |
| Sad | 0.0 | 0.0 | 2.5 | 1.9 | **88.1** | 0.0 |
| Disgusted | 0.0 | 0.6 | 3.1 | 2.5 | 6.9 | **86.9** |
| Older Faces | | | | | | |
| Happy | **98.8** | 0.0 | 0.0 | 0.0 | 0.6 | 0.0 |
| Neutral | 1.2 | **77.5** | 3.1 | 0.6 | 12.5 | 5.0 |
| Angry | 0.0 | 3.1 | **68.8** | 6.9 | 5.0 | 15.6 |
| Fearful | 1.9 | 3.8 | 8.1 | **78.8** | 1.9 | 3.1 |
| Sad | 0.6 | 7.5 | 6.9 | 11.9 | **65.0** | 7.5 |
| Disgusted | 0.0 | 0.6 | 8.1 | 5.0 | 9.4 | **75.6** |

Note. Rows do not add up to 100% because participants failed to respond within the seven-second interval on 0.4% of the trials. Bolded numbers are correct expression identifications; non-bolded numbers are erroneous expression identifications.
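The misattribution percentages cited in the text can be recovered from Table 1 by adding the relevant cells. The sketch below assumes that the text values are sums across the two face ages (for participant-group comparisons) or across the two participant groups (for face-age comparisons); this reading is inferred from the numbers rather than stated in the text, and the dictionary layout and function names are illustrative.

```python
# Cell values copied from Table 1, keyed by (participant group, face age).
DISGUSTED_AS_ANGRY = {
    ("young", "young"): 7.1, ("young", "older"): 17.9,
    ("older", "young"): 3.1, ("older", "older"): 8.1,
}
ANGRY_AS_DISGUSTED = {
    ("young", "young"): 5.8, ("young", "older"): 8.8,
    ("older", "young"): 15.0, ("older", "older"): 15.6,
}


def total_for_participants(cells, group):
    """Sum an error type across young and older faces for one participant group."""
    return round(sum(v for (p, _), v in cells.items() if p == group), 1)


def total_for_faces(cells, face_age):
    """Sum an error type across both participant groups for one face age."""
    return round(sum(v for (_, f), v in cells.items() if f == face_age), 1)


print(total_for_participants(DISGUSTED_AS_ANGRY, "young"))  # 25.0
print(total_for_participants(DISGUSTED_AS_ANGRY, "older"))  # 11.2
print(total_for_participants(ANGRY_AS_DISGUSTED, "older"))  # 30.6
print(total_for_participants(ANGRY_AS_DISGUSTED, "young"))  # 14.6
print(total_for_faces(DISGUSTED_AS_ANGRY, "older"))         # 26.0
print(total_for_faces(DISGUSTED_AS_ANGRY, "young"))         # 10.2
```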

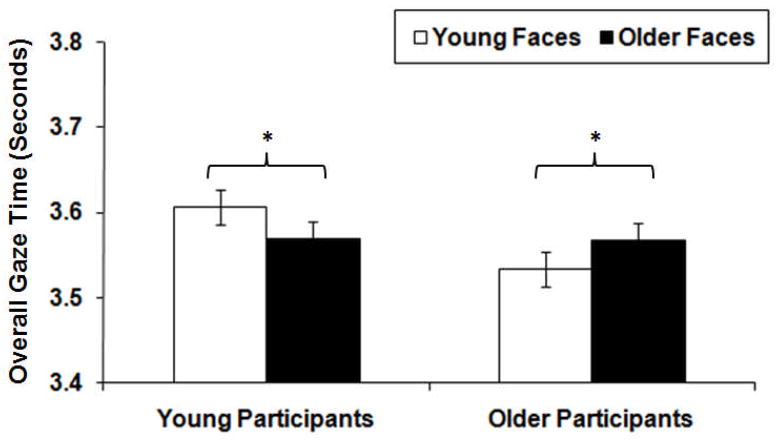

Overall Gaze Time at Faces and Expression Identification

We conducted a 2 (Age of Participant: Young, Older) x 2 (Age of Face: Young, Older) x 6 (Facial Expression: Happy, Neutral, Angry, Fearful, Sad, Disgusted) mixed-model ANOVA on overall gaze time (in seconds) with Age of Participant as a between-subjects factor and Age of Face and Facial Expression as within-subject factors. The only significant effect was an Age of Participant x Age of Face interaction, Wilks’ λ = .85, F (1, 48) = 8.69, p = .005, ηp2 = .15 (Figure 3): Across expressions, both age groups looked longer at own-age than other-age faces.

Figure 3.

Overall gaze time (in seconds) separately for young and older faces in young, t(29) = 2.29, p = .029, d = 0.49, and older, t(19) = −2.06, p = .049, d = −0.57, participants. Error bars represent the standard errors of the within-group condition mean differences; * p < .05.

To test whether gaze time at own-age (as opposed to other-age) faces predicted better expression identification of own-age faces, we conducted two separate linear regression analyses on percentage of correct expression identification of own-age and other-age faces, respectively, with overall gaze time at own-age and other-age faces as the respective model predictors. As shown in Table 2 (A, in bold), the longer both young and older participants looked at own-age faces the better they were able to identify their expressions; there was no such effect for other-age faces (B, in bold).
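A regression of this kind, with one predictor and one outcome, can be sketched with ordinary least squares in closed form. The data below are made-up toy values used only to show the computation, not study data, and the function name `simple_regression` is an assumption; the original analysis software is not named in the text.

```python
def simple_regression(x, y):
    """Ordinary least squares with a single predictor.

    Returns (intercept, slope, r_squared, f_value), where f_value tests the
    slope with 1 and n - 2 degrees of freedom.
    """
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    ss_total = sum((yi - mean_y) ** 2 for yi in y)
    ss_error = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    r_squared = 1 - ss_error / ss_total
    f_value = (r_squared / (1 - r_squared)) * (n - 2)
    return intercept, slope, r_squared, f_value


# Toy example (hypothetical values): gaze time in seconds, proportion correct.
gaze_seconds = [2.0, 2.5, 3.0, 3.5, 4.0]
proportion_correct = [0.70, 0.80, 0.78, 0.90, 0.92]
print(simple_regression(gaze_seconds, proportion_correct))
```

A positive slope in such a model corresponds to the pattern described for own-age faces: longer gaze time going along with better expression identification.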

Table 2.

Gaze Time (Overall/Upper and Lower Half of Faces) Predicting Expression Identification (N = 50)

| Expression Identification | | | | | |
|---|---|---|---|---|---|
| (A) Overall Own-Age Faces | b | p | (B) Overall Other-Age Faces | b | p |
| Constant | 0.29 | .181 | Constant | 0.63 | .000 |
| Gaze Time | 0.16 | .010 | Gaze Time | 0.06 | .200 |
| F | 7.24 | .010 | F | 1.69 | .200 |
| R2 | 0.13 | | R2 | 0.03 | |
| (C) Upper Half Young Faces | b | p | (D) Lower Half Young Faces | b | p |
| Constant | 0.91 | .000 | Constant | 0.91 | .000 |
| Age of Participant | −0.02 | .457 | Age of Participant | −0.02 | .385 |
| Gaze Time | 0.04 | .018 | Gaze Time | −0.04 | .129 |
| Age of Participant*Gaze Time | −0.07 | .021 | Age of Participant*Gaze Time | 0.08 | .018 |
| F | 2.63 | .062 | F | 2.25 | .095 |
| R2 | 0.09 | | R2 | 0.07 | |
| (E) Upper Half Older Faces | b | p | (F) Lower Half Older Faces | b | p |
| Constant | 0.81 | .000 | Constant | 0.81 | .000 |
| Age of Participant | −0.03 | .205 | Age of Participant | −0.04 | .162 |
| Gaze Time | 0.03 | .263 | Gaze Time | −0.02 | .599 |
| Age of Participant*Gaze Time | −0.05 | .250 | Age of Participant*Gaze Time | 0.06 | .222 |
| F | 1.10 | .358 | F | 1.27 | .296 |
| R2 | 0.01 | | R2 | 0.02 | |

Note. Age of Participant was dummy coded with young participants coded as 0 and older participants coded as 1. Bolded effects are discussed in the text.

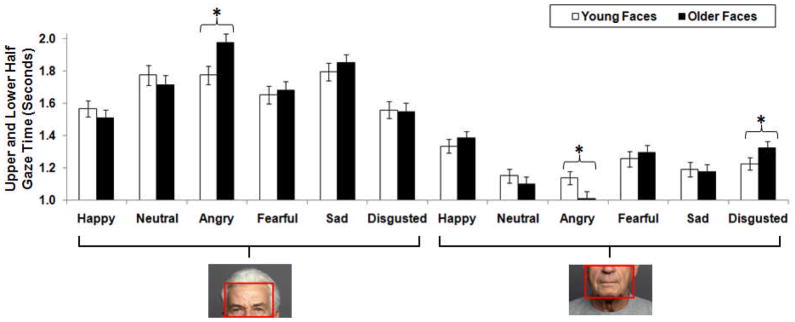

Gaze Time at Upper versus Lower Half of Faces and Expression Identification

We next examined differences in looking at the upper and the lower half of faces for young and older participants and for each of the expressions, and explored how differences in looking patterns may interact with the age of faces. We conducted a 2 (Age of Participant: Young, Older) x 2 (Age of Face: Young, Older) x 6 (Facial Expression: Happy, Neutral, Angry, Fearful, Sad, Disgusted) x 2 (Face Half: Upper, Lower) mixed-model ANOVA on gaze time (in seconds) with Age of Participant as a between-subjects factor and Age of Face, Facial Expression, and Face Half as within-subject factors. There were no main effects or interactions involving Age of Participant, suggesting no significant differences between young and older participants, and no indication of an own-age effect, in looking at upper and lower half of faces. Thus, the data presented in Figure 4 are collapsed across young and older participants. There were main effects for Face Half, Wilks’ λ = .85, F (1, 48) = 8.37, p = .006, ηp2 = .15, and Facial Expression, Wilks’ λ = .49, F (5, 44) = 9.01, p < .001, ηp2 = .51, and interactions of Age of Face x Facial Expression, Wilks’ λ = .78, F (5, 44) = 2.55, p = .041, ηp2 = .23, Face Half x Facial Expression, Wilks’ λ = .38, F (5, 44) = 14.64, p < .001, ηp2 = .63, and Age of Face x Face Half x Facial Expression, Wilks’ λ = .65, F (5, 44) = 4.73, p = .002, ηp2 = .35. Overall, both young and older participants looked longer at the upper (M = 1.72, SD = 0.65) than the lower (M = 1.20, SD = 0.58) half of faces.

Figure 4.

Upper and lower half gaze time (in seconds) separately for young and older faces and for each facial expression. Error bars represent the standard errors of the condition mean differences; * p < .05

Following up on the three-way interaction, we examined upper and lower half gaze time separately in 2 (Age of Face) x 6 (Facial Expression) repeated-measures ANOVAs. The main effects for Facial Expression (Upper half, Wilks’ λ = .31, F (5, 45) = 19.89, p < .001, ηp2 = .69; Lower half, Wilks’ λ = .52, F (5, 45) = 8.49, p < .001, ηp2 = .49) and Age of Face x Facial Expression interactions (Upper half, Wilks’ λ = .67, F (5, 45) = 4.48, p = .002, ηp2 = .33; Lower half, Wilks’ λ = .67, F (5, 45) = 4.36, p = .003, ηp2 = .33) were significant. As can be seen in Figure 4, upper half gaze time was longer for angry (M = 1.89, SD = 0.63) than happy (M = 1.55, SD = 0.64), F (1, 49) = 43.03, p < .001, ηp2 = .47, neutral (M = 1.77, SD = 0.72), F (1, 49) = 5.29, p = .026, ηp2 = .10, fearful (M = 1.69, SD = 0.69), F (1, 49) = 13.33, p = .001, ηp2 = .21, and disgusted (M = 1.58, SD = 0.66), F (1, 49) = 62.24, p < .001, ηp2 = .56, faces. Upper half gaze time was also longer for sad (M = 1.84, SD = 0.72) than happy, F (1, 49) = 59.61, p < .001, ηp2 = .55, neutral, F (1, 49) = 3.68, p = .049, ηp2 = .07, fearful, F (1, 49) = 18.78, p < .001, ηp2 = .28, and disgusted, F (1, 49) = 47.41, p < .001, ηp2 = .49, faces. Upper half gaze time was also longer for neutral than happy, F (1, 49) = 22.34, p < .001, ηp2 = .31, and disgusted, F (1, 49) = 22.29, p < .001, ηp2 = .31, faces, and it was longer for fearful than happy, F (1, 49) = 11.33, p = .001, ηp2 = .19, and disgusted, F (1, 49) = 8.48, p = .005, ηp2 = .15, faces. There was no difference between angry and sad, neutral and fearful, or disgusted and happy faces. Comparing young and older faces for each expression separately, gaze time in upper half of angry faces was longer for older than young faces, Wilks’ λ = .79, F (1, 48) = 12.79, p = .001, ηp2 = .21 (see Figure 4). No other comparison was significant.

Lower half gaze time was longer for happy (M = 1.35, SD = 0.58) than neutral (M = 1.09, SD = 0.65), F (1, 49) = 26.58, p < .001, ηp2 = .35, angry (M = 1.06, SD = 0.52), F (1, 49) = 33.72, p < .001, ηp2 = .41, fearful (M = 1.26, SD = 0.66), F (1, 49) = 5.52, p = .023, ηp2 = .10, sad (M = 1.16, SD = 0.63), F (1, 49) = 21.96, p < .001, ηp2 = .31, and disgusted (M = 1.26, SD = 0.60), F (1, 49) = 5.44, p = .024, ηp2 = .10, faces. Lower half gaze time was longer for disgusted than angry, F (1, 49) = 25.89, p < .001, ηp2 = .35, neutral, F (1, 49) = 14.67, p < .001, ηp2 = .23, and sad, F (1, 49) = 7.02, p = .011, ηp2 = .13, faces, and it was longer for fearful than angry, F (1, 49) = 17.04, p < .001, ηp2 = .26, neutral, F (1, 49) = 14.52, p < .001, ηp2 = .23, and sad, F (1, 49) = 9.49, p = .003, ηp2 = .16, faces, as well as for sad than angry, F (1, 49) = 5.60, p = .022, ηp2 = .10, and neutral, F (1, 49) = 4.13, p = .048, ηp2 = .08, faces. There was no difference between disgusted and fearful or neutral and angry faces. Comparing young and older faces for each expression separately, gaze time at the lower half of angry faces was longer for young than older faces, Wilks’ λ = .85, F (1, 48) = 8.64, p = .005, ηp2 = .15, but gaze time at the lower half of disgusted faces was longer for older than young faces, Wilks’ λ = .89, F (1, 48) = 5.84, p = .020, ηp2 = .11 (see Figure 4). No other comparison was significant.
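
The effect sizes reported for the upper and lower half analyses can be cross-checked against the test statistics themselves. The following is a minimal sketch and not the analysis code used in this study. It assumes the standard identity ηp2 = (F × df_effect) / (F × df_effect + df_error) and, for the multivariate tests, that each effect has a single root, in which case ηp2 = 1 − Wilks’ λ. The function names are hypothetical.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


def partial_eta_squared_from_wilks(wilks_lambda):
    """Partial eta squared for a multivariate effect with a single root (assumption)."""
    return 1.0 - wilks_lambda


# Main effect of Facial Expression, upper half: F(5, 45) = 19.89, Wilks' lambda = .31
print(round(partial_eta_squared(19.89, 5, 45), 2))
print(round(partial_eta_squared_from_wilks(0.31), 2))

# Angry versus happy faces, upper half: F(1, 49) = 43.03
print(round(partial_eta_squared(43.03, 1, 49), 2))
```

Small discrepancies between the two routes, such as 1 − .52 = .48 against the reported ηp2 of .49 for the lower half main effect, are to be expected because λ is itself rounded to two decimals.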

Even though we had not observed significant differences in young and older participants’ upper and lower looking at young and older faces, we were interested in exploring any relations between gaze time at upper and lower half of young and older faces and expression identification in young and older participants. For this purpose, we conducted four separate linear regression analyses on percentage of correct expression identification of young and older faces, respectively, with age of participant, gaze time at upper or lower half of young or older faces, and the interaction of these two factors as the respective model predictors. Interestingly, as shown in Table 2 (C, in bold), for young participants longer looking at the upper half of young faces was related to better expression identification of young faces. The reverse pattern was true for older participants. In contrast, the longer older participants looked at the lower half of young faces, the better they were able to identify expressions in young faces, with this effect reversed in young participants (D, in bold). Older faces showed a similar but not significant pattern (E and F, in bold).
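
The structure of these regression models can be illustrated with a short sketch. It is not the authors' analysis code: it assumes that age of participant is coded 0 (young) and 1 (older), the function names are hypothetical, and the numbers in the example are invented solely to show how a reversed slope in the two age groups appears as an interaction coefficient. With a 0/1 group code, the model with both predictors and their product is equivalent to one simple regression per age group, which keeps the sketch free of matrix algebra.

```python
def simple_regression(x, y):
    """Least-squares intercept and slope for a single predictor."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def moderated_regression(age_group, gaze_time, accuracy):
    """Coefficients of accuracy ~ age_group + gaze_time + age_group x gaze_time.

    age_group is assumed to be coded 0 (young) or 1 (older).
    """
    rows = list(zip(age_group, gaze_time, accuracy))
    young = [(g, a) for k, g, a in rows if k == 0]
    older = [(g, a) for k, g, a in rows if k == 1]
    b0_young, b1_young = simple_regression(*zip(*young))
    b0_older, b1_older = simple_regression(*zip(*older))
    return {
        "intercept": b0_young,                # predicted accuracy for young at zero gaze time
        "age_group": b0_older - b0_young,     # group difference at zero gaze time
        "gaze_time": b1_young,                # slope of gaze time in young participants
        "interaction": b1_older - b1_young,   # change in that slope for older participants
    }


# Invented illustration values (not data from this study)
age_group = [0, 0, 0, 0, 1, 1, 1, 1]
gaze_time = [1.0, 1.5, 2.0, 2.5, 1.0, 1.5, 2.0, 2.5]          # seconds
accuracy = [78.0, 82.0, 86.0, 90.0, 89.0, 86.0, 83.0, 80.0]   # percent correct

coefs = moderated_regression(age_group, gaze_time, accuracy)
slope_older = coefs["gaze_time"] + coefs["interaction"]
print(coefs, slope_older)
```

In this invented example the slope for gaze time is positive in the young group and negative in the older group, so the interaction coefficient is negative; a pattern of this kind is what a significant interaction term with opposite simple slopes would indicate.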

Discussion

The present study reports several novel findings concerning how the age and the expression of faces affect young and older adults’ visual inspection of faces, and how looking patterns are related to expression identification. We discuss next the contributions of these findings to our understanding of emotion-cognition interactions in young and older adults.

Expression Identification

As expected, with the exception of happy faces, both young and older participants were better at identifying expressions in young than older emotional faces. This supports and extends previous findings for neutral and angry expressions (Ebner & Johnson, 2009) to disgust, sadness, and fear. The greater difficulty of identifying expressions in older than young faces may be due to age-related changes in physical features (e.g., wrinkles) that make it harder to read emotions in older faces. Another interesting possibility is that prototypes of facial expressions are more likely to be young faces. For example, emotion schemas may be developed in childhood from the relatively young faces of parents, and from TV and movie depictions of facial expressions (where older individuals are underrepresented; Signorielli, 2004). Additionally, perhaps due to age-related changes in flexibility and controllability of muscle tissue, intentional display of facial emotions may become less successful, and displays of unintended blended emotions may become more likely. Accurately identifying expressions is an important component of processing emotion and is crucial for social interactions and environmental adaptation in everyday life (Carstensen, Gross, & Fung, 1998). The fact that expressions in older faces may be more likely to be misinterpreted than expressions in young faces, by both young and older adults, has potentially important implications for many life situations, such as discussions with doctors or lawyers and social interactions in general.

In line with other studies (Ebner & Johnson, 2009; Murphy & Isaacowitz, in press), both young and older participants were better at identifying happiness than any other expression, with performance for happy faces near ceiling. Because happy faces were the only representative of positive expressions, while there were four different negative expressions, it is not surprising that they were easy to identify. To address this limitation, future research asking participants to differentiate between categories of positive expressions such as love, positive surprise, or enthusiasm, and/or between levels of genuineness of positive expressions (see Murphy, Lehrfeld, & Isaacowitz, 2010), is needed to explore age differences in the effects of the age of faces and positive emotion expressed in faces.

Both age groups were less likely to correctly identify disgusted, sad, or angry than fearful or neutral faces. This is in line with evidence that disgust is among the most difficult expressions to identify, especially by young participants, and anger and sadness are among the most difficult expressions to identify, especially by older participants (Ruffman et al., 2008). In contrast to our findings, however, in some previous studies identification of fear was particularly difficult for both young and older adults (Isaacowitz et al., 2007; Murphy & Isaacowitz, in press; Ruffman et al., 2008). This difference between studies may be related to the specific picture set used in the present study. The selection procedure applied in the FACES database ensured surprise-free displays of facial fear (see Ebner et al., 2010), which may have made identification of the present study’s fearful faces easier compared to fearful faces used in other studies. Future studies will have to determine the degree of generalizability of results across sets of face stimuli, including the impact of blended emotions on expression identification in young and older faces.

Particularly interesting is that young participants mostly misinterpreted disgusted faces as showing anger, especially for older faces, whereas older participants mostly misinterpreted angry faces as showing disgust. This pattern of asymmetric misattributions is interesting given findings that for young adults it is relatively difficult to identify facial displays of disgust, whereas for older adults it is relatively difficult to identify facial anger (Ruffman et al., 2008; see also Isaacowitz et al., 2007). Young and older adults may have a tendency to look for different expressions in faces, with young adults looking for anger cues and older adults for disgust cues. Also, a bias to “see” an emotion could inflate apparent accuracy for that emotion (see also Riediger et al., 2011, this issue). We can only speculate about whether, for example, age-related differences in motivational orientation, in personal and social relevance of certain emotions, or in brain activation associated with processing of certain emotions may play a role here. Importantly, such asymmetric misattributions by young and older adults (especially if more likely for other-age faces) could contribute to misunderstandings and conflict (especially across age groups), and would be interesting to follow up in future research.

Somewhat inconsistent with the literature on age-related decline in expression identification (Ebner & Johnson, 2009; Murphy & Isaacowitz, in press; Sullivan et al., 2007; Wong et al., 2005; see also Ruffman et al., 2008), young and older participants with valid gaze information did not differ significantly in their overall ability to identify expressions. However, when participants with behavioral data but invalid gaze information were included, young participants significantly outperformed older participants. Interestingly, older (but not young) participants with valid as compared to invalid gaze information reported better general health and showed better visual-motor processing speed, suggesting that these factors may affect older adults’ ability to correctly identify expressions. The difference between older participants with valid as compared to invalid gaze information suggests that previously reported age-related deficits in expression identification are characteristic of more representative samples of older adults, including participants who are less generally healthy and slower in their visual-motor processing than the older adults in our main analyses.

Age and Emotion Affect How We Look at a Face

As expected, overall looking time was longer for own-age than other-age faces in young and older participants, extending the own-age effect in gaze time for neutral faces observed by He et al. (in press) to emotional faces. In addition, longer looking time was associated with better expression identification of own-age but not other-age faces. Firestone et al. (2007) did not find such an own-age effect in looking time. Note, however, that they used an orienting task that focused participants on the age of the faces (in this case, both age groups looked longer at young faces), and attention may be directed differently during different task agendas. More systematic manipulation of the purpose of looking at faces within the same study will be needed to resolve differences in findings across studies.

We believe that our observed pattern of an own-age effect in visual inspection and its association with expression identification makes age-related changes in compositional (e.g., nose–mouth distance) or low-level perceptual (e.g., spatial frequency) features an unlikely explanation for differences in visual examination of young and older faces. If visual inspection were determined only by such factors, young and older participants should have been influenced similarly (i.e., should have shown very similar visual inspection patterns for young and older faces). Rather, our finding of an own-age effect in visual inspection may reflect greater interest in and social relevance of own-age individuals (Harrison & Hole, 2009; He et al., in press). Also, consistent with Allport’s (1954) ‘contact hypothesis’ and Sporer’s (2001) ‘expertise hypothesis’, more frequent contact, and thus better expertise, with own-age than other-age persons in daily life (Ebner & Johnson, 2009) likely influences the processing of those faces, even at very early processing stages (Ebner, He, et al., 2010). It also seems reasonable that, as a consequence of more frequent encounters with persons of one’s own age, individuals develop and/or maintain better schemas that influence their visual scan patterns, with effects for expression identification.

In line with theoretical and empirical suggestions (Calder et al., 2000; Murphy & Isaacowitz, in press; Sullivan et al., 2007; Wong et al., 2005), upper half looking was longest for angry and sad, followed by neutral and fearful, and shortest for happy and disgusted faces. Correspondingly, lower half looking was longest for happy faces, and it was also longer for disgusted than for sad, neutral, and angry faces. This pattern of findings was the same for young and older participants. In fact, upper and lower half gaze time was independent of participants’ age (but see Murphy & Isaacowitz, in press; Sullivan et al., 2007; Wong et al., 2005). This suggests that the age-group differences in misattributions of anger and disgust reported above do not simply reflect differences in young and older participants’ visual inspection of the upper and lower half of angry and disgusted faces. Upper and lower half looking was furthermore largely independent of the age of faces, with the only differences between young and older faces emerging for anger (longer looking at the lower half of young than older faces and longer looking at the upper half of older than young faces) and disgust (longer looking at the lower half of older than young faces).

Thus, different from previous studies (Firestone et al., 2007; Murphy & Isaacowitz, in press; Sullivan et al., 2007; Wong et al., 2005), our young participants did not show longer looking at the upper, and our older participants did not show longer looking at the lower, half of faces. These differences across studies are perhaps due to methodological differences such as in task agendas, duration of face presentation, eye-tracking variables, participant selection criteria, or defining criteria for areas of interest. These inconsistencies between the present study and previous studies (which also differ among themselves) need to be further explored in future studies and require caution when interpreting and attempting to generalize the results across studies.

When we explored the relation between looking at upper and lower half of faces and expression identification for young and older faces in young and older participants, an interesting pattern emerged: Whereas young participants’ expression identification of young faces was better the longer they looked at the upper half of young faces, older adults’ expression identification of young faces was better the longer they looked at the lower half of young faces. At this point we can only speculate about why young and older adults may benefit differentially from focusing on different facial regions of young (but not older) faces in the context of emotion identification. Age-differential facilitation of emotion identification when looking at different parts of faces may reflect age-related differences in the tendency to look for different expressions (e.g., young adults for anger and older adults for disgust). Another possibility is that there are cohort differences in rules of conduct in terms of direct versus indirect eye gazing during social interactions, and those may even vary for young as opposed to older social interaction partners. Alternatively, age-related hearing impairment may make it necessary for older adults to focus more on the lower half in the attempt to extract and integrate verbal information when determining a person’s emotional state. To follow up on some of these possibilities, and to overcome the present study’s limitation of only using still photographs of discrete facial expressions, it would be interesting to present real-time, continuously developing and changing facial expressions, perhaps supplemented by bodily postures and verbal expressions, while recording eye movements. Such studies would determine whether the patterns reported here for static pictures also generalize to visual inspection and its relation to expression identification in more natural situations.

Conclusions

The present study is the first to use faces of different ages and expressions to examine young and older adults’ visual scan patterns in the context of an expression identification task. Our results extend and, in some cases, challenge or qualify previous research. Supporting and extending prior work (Ebner & Johnson, 2009; He et al., in press), we found that not only neutral and angry but also fearful, sad, and disgusted young as compared to older faces were more likely to be correctly identified, by both young and older adults. We also found intriguing asymmetries in the misattributions of angry and disgusted faces: Young adults were more likely to label disgusted faces as angry, whereas older adults were more likely to label angry faces as disgusted. Consistent with previous research, across age of participants and faces, looking at upper half was longest for angry and sad faces, whereas looking at lower half was longest for happy faces. Importantly, however, in addition to effects of the emotion expressed in faces we provide novel evidence suggesting that the age of faces affects young and older adults’ visual inspection: Looking time at own-age faces was longer than looking time at other-age faces for both age groups, and was associated with better expression identification. Thus, taken together our results suggest that the cues used in expression identification may shift not only as a function of the emotion expressed but also the age of the faces, in interaction with the age of the participant.

Acknowledgments

This research was conducted at Yale University and supported by National Institute on Aging grant R37AG009253 awarded to MKJ and German Research Foundation Research Grant DFG EB 436/1-1 to NCE. The authors wish to thank John Bargh for providing the eye-tracking equipment, the Memory and Cognition Lab and Derek Isaacowitz for discussions of the study reported in this paper, William Hwang and Sebastian Gluth for assistance in data collection, and Carol L. Raye for comments on an earlier version of this manuscript.

References

- Allport GW. The nature of prejudice. Cambridge, MA: Addison-Wesley; 1954.

- Arditi A. Improving the design of the letter contrast sensitivity test. Investigative Ophthalmology and Visual Science. 2005;46:2225–2229. doi: 10.1167/iovs.04-1198.

- Bäckman L. Recognition memory across the adult life span: The role of prior knowledge. Memory & Cognition. 1991;19:63–71. doi: 10.3758/bf03198496.

- Burt DM, Perrett DI. Perception of age in adult Caucasian male faces: Computer graphic manipulation of shape and colour information. Proceedings of the Royal Society of London, B. 1995;259:137–143. doi: 10.1098/rspb.1995.0021.

- Calder AJ, Young AW, Keane J, Dean M. Configural information in facial expression perception. Journal of Experimental Psychology: Human Perception and Performance. 2000;26:527–551. doi: 10.1037/0096-1523.26.2.527.

- Carstensen LL, Gross JJ, Fung H. The social context of emotional experience. In: Schaie KW, Lawton MP, editors. Annual Review of Gerontology and Geriatrics. Vol. 17. New York: Springer; 1998. pp. 325–352.

- Carstensen LL, Isaacowitz DM, Charles ST. Taking time seriously: A theory of socioemotional selectivity. American Psychologist. 1999;54:165–181. doi: 10.1037/0003-066X.54.3.165.

- Cunningham WA, Johnson MK, Raye CL, Gatenby JC, Gore JC, Banaji MR. Separable neural components in the processing of Black and White faces. Psychological Science. 2004;15:806–813. doi: 10.1111/j.0956-7976.2004.00760.x.

- D’Argembeau A, Van der Linden M. Identity but not expression memory for unfamiliar faces is affected by ageing. Memory. 2004;12:644–54. doi: 10.1080/09658210344000198.

- Ebner NC, Gluth S, Johnson MR, Raye CL, Mitchell KM, Johnson MK. Medial prefrontal cortex activity when thinking about others depends on their age. Neurocase. doi: 10.1080/13554794.2010.536953. (in press)

- Ebner NC, He Y, Fichtenholtz HM, McCarthy G, Johnson MK. Electrophysiological correlates of processing faces of younger and older individuals. Social Cognitive and Affective Neuroscience. 2010. doi: 10.1093/scan/nsq074. Advance online publication.

- Ebner NC, Johnson MK. Age-group differences in interference from young and older emotional faces. Cognition & Emotion. doi: 10.1080/02699930903128395. (in press)

- Ebner NC, Johnson MK. Young and older emotional faces: Are there age-group differences in expression identification and memory? Emotion. 2009;9:329–39. doi: 10.1037/a0015179.

- Ebner NC, Riediger M, Lindenberger U. FACES—A database of facial expressions in young, middle-aged, and older women and men: Development and validation. Behavior Research Methods. 2010;42:351–362. doi: 10.3758/BRM.42.1.351.

- Firestone A, Turk-Browne N, Ryan J. Age-related deficits in face recognition are related to underlying changes in scanning behavior. Aging, Neuropsychology, and Cognition. 2007;14:594–607. doi: 10.1080/13825580600899717.

- Harrison V, Hole GJ. Evidence for a contact-based explanation of the own-age bias in face recognition. Psychonomic Bulletin & Review. 2009;16:264–269. doi: 10.3758/PBR.16.2.264.

- He Y, Ebner NC, Johnson MK. What predicts the own-age bias in face recognition memory? Social Cognition. doi: 10.1521/soco.2011.29.1.97. (in press)

- He Y, Johnson MK, Dovidio JF, McCarthy G. The relation between race-related implicit associations and scalp-recorded neural activity evoked by faces from different races. Social Neuroscience. 2009;26:1–17. doi: 10.1080/17470910902949184.

- Isaacowitz DM, Loeckenhoff C, Wright R, Sechrest L, Riedel R, Lane RA, Costa PT. Age differences in recognition of emotion in lexical stimuli and facial expressions. Psychology & Aging. 2007;22:147–159. doi: 10.1037/0882-7974.22.1.147.

- Isaacowitz DM, Wadlinger HA, Goren D, Wilson HR. Selective preference in visual fixation away from negative images in old age? An eye-tracking study. Psychology and Aging. 2006;21:40–48. doi: 10.1037/0882-7974.21.1.40.

- Liu J, Harris A, Kanwisher N. Stages of processing in face perception: An MEG study. Nature Neuroscience. 2002;5:910–916. doi: 10.1038/nn909.

- Mather M, Carstensen LL. Aging and motivated cognition: The positivity effect in attention and memory. Trends in Cognitive Sciences. 2005;9:496–502. doi: 10.1016/j.tics.2005.08.005.

- Murphy NA, Isaacowitz DM. Age effects and gaze patterns in recognizing emotional expressions: An in-depth look at gaze measures and covariates. Cognition & Emotion. doi: 10.1080/02699930802664623. (in press)

- Murphy NA, Lehrfeld J, Isaacowitz DM. Recognition of posed and spontaneous dynamic smiles in young and older adults. Psychology & Aging. 2010. doi: 10.1037/a0019888.

- Riediger M, Voelkle MC, Ebner NC, Lindenberger U. Beyond “happy, angry, or sad?” Age-of-poser and age-of-rater effects on multi-dimensional emotion perception. Cognition & Emotion. 2011. doi: 10.1080/02699931.2010.540812. this issue.

- Rosenbaum JG. The biggest reward for my invention isn’t money. Medical Economics. 1984;61:152–163.

- Ruffman T, Henry JD, Livingstone V, Phillips LH. A meta-analytic review of emotion recognition and aging: Implications for neuropsychological models of aging. Neuroscience and Biobehavioral Reviews. 2008;32:863–881. doi: 10.1016/j.neubiorev.2008.01.001.

- Sporer SL. Recognizing faces of other ethnic groups: An integration of theories. Psychology, Public Policy, and Law. 2001;7:36–97.

- Signorielli N. Aging on television: Messages relating to gender, race, and occupation in prime time. Journal of Broadcasting & Electronic Media. 2004;48:279–30. doi: 10.1207/s15506878jobem4802_7.

- Sullivan S, Ruffman T, Hutton S. Age differences in emotion recognition skills and the visual scanning of emotion faces. Journal of Gerontology: Psychological Sciences. 2007;62B:53–60. doi: 10.1093/geronb/62.1.p53.

- Watson D, Clark LA, Tellegen A. Development and validation of brief measures of positive and negative affect: The PANAS scales. Journal of Personality and Social Psychology. 1988;54:1063–1070. doi: 10.1037//0022-3514.54.6.1063.

- Wechsler D. Manual for the Wechsler Adult Intelligence Scale-Revised (WAIS-R). New York: Psychological Corporation; 1981.

- Wong B, Cronin-Golomb A, Neargarder SA. Patterns of visual scanning as predictors of emotion identification in normal aging. Neuropsychology. 2005;19:739–749. doi: 10.1037/0894-4105.19.6.739.