Abstract

Our ability to recognize other people’s faces and bodies is crucial for our social interactions. Previous neuroimaging studies have repeatedly demonstrated the existence of brain areas that selectively respond to visually presented faces and bodies. In daily life, however, we see “whole” people and not just isolated faces and bodies, and the question remains of how information from these two categories of stimuli is integrated at a neural level. Are faces and bodies merely processed independently, or are there neural populations that actually code for whole individuals? In the current study we addressed this question using a functional magnetic resonance imaging adaptation paradigm involving the sequential presentation of visual stimuli depicting whole individuals. It is known that adaptation effects for a component of a stimulus only occur in neural populations that are sensitive to that particular component. The design of our experiment allowed us to measure adaptation effects occurring when either just the face, just the body, or both the face and the body of an individual were repeated. Crucially, we found novel evidence for the existence of neural populations in fusiform as well as extrastriate regions that showed selective adaptation for whole individuals, which could not be merely explained by the sum of adaptation for face and body respectively. The functional specificity of these neural populations is likely to support fast and accurate recognition and integration of information conveyed by both faces and bodies. Hence, they can be assumed to play an important role for identity as well as emotion recognition in everyday life.

Keywords: face perception, body perception, fMRI, fusiform gyrus, extrastriate cortex

Introduction

We are inherently social beings, and our ability to efficiently determine the identity, emotions, and intentions of others is crucial for our social interactions. For this purpose we heavily rely on visual information conveyed by both faces and bodies (Van den Stock et al., 2007), and the human (Kanwisher et al., 1997; Peelen and Downing, 2007) as well as primate (Perrett et al., 1982; Wachsmuth et al., 1994) brain contains areas that are specialized in processing these two categories of visual stimuli. In humans, face as well as body selective areas can be found in the fusiform gyrus, namely the fusiform face area (FFA; Kanwisher et al., 1997), and the fusiform body area (FBA; Peelen and Downing, 2007). In addition, posterior parts of the lateral occipitotemporal cortex also contain face and body selective areas, namely the occipital face area (OFA; Gauthier et al., 2000), and the extrastriate body area (EBA; Downing et al., 2001). While these face and body selective brain areas can be anatomically distinguished to a large extent, there is also evidence for varying degrees of overlap between them, which is typically more pronounced in fusiform regions (Schwarzlose et al., 2005; Peelen and Downing, 2007; Downing and Peelen, 2011).

The existence of anatomically distinguishable portions of face and body selective areas indicates that, at least to some degree, these two categories of stimuli are processed independently. Most of the past research on face and body processing has in fact emphasized this anatomical and functional specialization. For example, high-resolution functional magnetic resonance imaging (fMRI) has been used to clearly delineate, and distinguish between, face and body selective patches in the fusiform gyrus (Schwarzlose et al., 2005). Other fMRI studies have focused on characterizing the response selectivity of extrastriate areas by examining and disentangling brain responses to body stimuli shown with or without a face (Morris et al., 2006). Lastly, taking the notion of anatomical and functional specialization even further, recent neuroimaging work has identified distinct foci within body selective areas that maximally respond to specific individual body-parts (Chan et al., 2010; Orlov et al., 2010).

The fact that there is evidence for varying degrees of overlap between face and body selective areas however, also points to the fact that these areas might share some common processing mechanisms and characteristics (Minnebusch and Daum, 2009). For example, it is known that face as well as body perception rely on both part-based and configural processing (Maurer et al., 2002; Reed et al., 2003). Interestingly, converging evidence from independent neuroimaging studies on face and body perception suggests that for both categories of stimuli part-based processing seems to be predominantly supported by extrastriate areas, whereas configural processing seems to be predominantly supported by fusiform areas (Taylor et al., 2007; Liu et al., 2010; Pitcher et al., 2011). Behavioral studies have also provided evidence for the functional interaction between face and body perception, showing how the processing of body stimuli can influence the processing of face stimuli and vice versa. For instance, Ghuman et al. (2010) have demonstrated a cross category body-to-face adaptation aftereffect. In a nutshell, the adaptation aftereffect refers to the fact that prolonged exposure to a stimulus (e.g., the face of individual A) biases the subsequent perception of that stimulus (i.e., following the exposure a morphed face containing 50% of individual A and 50% individual B is more likely to be classified as individual B). Intriguingly, the authors found that this biased face classification did not only occur after prolonged exposure to individual A’s face, but also to individual A’s body, demonstrating that the perception of a body alone can alter the tuning properties of face processing mechanisms. An influence in the opposite direction has been shown by a study of Yovel et al. (2010), in the context of the so-called body inversion effect. 
This inversion effect, originally demonstrated with faces, refers to less accurate processing of inverted compared to upright stimuli, and has been interpreted as evidence for configural processing mechanisms. In short, the authors found that the body inversion effect was clearly influenced by the presence or absence of a face, with the omission of the head from the body stimuli markedly reducing its magnitude. Lastly, it has been shown that the recognition of the emotional expression conveyed by a face is significantly influenced by the concomitant expression conveyed by the body (Aviezer et al., 2008), further suggesting a close interaction between face and body processing.

In a way, these demonstrations of close coupling of face and body processing are not surprising, given that in everyday life we predominantly see “whole” people, and not just isolated faces and bodies. What remains unclear, however, are the neural mechanisms underlying these effects. Are they merely a result of the interaction between face and body selective areas, or are there in fact neural populations that code for whole individuals? In the current study we addressed this question using an fMRI adaptation (fMRI-A) paradigm. fMRI-A involves the sequential presentation of individual stimuli, and the adaptation effect is an attenuation of the blood oxygenation level-dependent (BOLD) signal resulting from the repetition of a specific component of a stimulus. Importantly, this attenuation occurs only in neural populations that are sensitive to that particular component (Grill-Spector and Malach, 2001). So far fMRI-A has been used to characterize the representations underlying neural responses to a number of visual stimulus classes including faces (Williams et al., 2007), headless bodies (Myers and Sowden, 2008), objects (Vuilleumier et al., 2002), scenes (Epstein et al., 2005), as well as more generally to the binding of objects and background scenes (Goh et al., 2004) and to the coding of objects presented in peripersonal space (Brozzoli et al., 2011).

In the current study we used fMRI-A to investigate whether there are neural populations that show selective adaptation to the visual presentation of whole individuals, as opposed to just isolated faces or bodies. In order to do this, we first performed an independent localizer to determine regions of interest (ROIs) encompassing both face and body selective brain regions for each participant. Within the ROIs we then examined adaptation effects associated with the sequential presentation of visual stimuli depicting whole individuals. Importantly, we measured adaptation effects occurring when either just the face, just the body, or both the face and the body of an individual were repeated. This allowed us to examine the differential adaptation effects to these stimulus categories within the same experiment. We hypothesized that if “whole individual selective” neural populations exist, this should be reflected by selective adaptation for whole individuals, which cannot merely be explained by the sum of adaptation that would occur to their faces and bodies respectively.

Materials and Methods

Participants

Ten healthy volunteers participated in the fMRI experiment (Age: 22–35, Mean = 28.8; Sex: four male, six female). None of the participants had a history of head injury or any other neurological condition, and all had normal vision. The study was approved by the Ethics Committees of Macquarie University and St Vincent’s Hospital (Sydney, Australia), and informed written consent was obtained from all participants.

fMRI data acquisition

Functional magnetic resonance imaging scanning was performed with a 3-T Philips Scanner at St Vincent’s Hospital, Sydney, Australia. At the beginning of the experimental session a high-resolution anatomical scan was acquired for each participant using a 3D-MPRAGE (magnetization prepared rapid gradient-echo) sequence. Subsequently, high-resolution functional scans were obtained using an eight-channel head coil and a gradient-echo echo-planar (EPI) sequence (Slices: 15; Resolution: 1.4 mm × 1.4 mm × 2 mm; Inter-slice gap: 20%; Inter-scan interval: 2 s; Echo time: 32 ms). The 15 oblique axial slices were aligned approximately parallel to the anterior–posterior commissure line.

The presentation of stimuli during the fMRI acquisition was programmed with Presentation software (Neurobehavioral Systems, Albany, CA, USA) and run on a 15′′ Macintosh Power Book with screen resolution set to 1280 × 854. Stimuli were back-projected via a projector onto a screen positioned 1.5 m behind the fMRI scanner, and participants viewed the screen through a mirror mounted on the head coil and positioned at 10 cm distance from their head. An optic fiber button box was used to record the participants’ responses.

Data analysis

Both anatomical and functional scans were converted with MRIcro. Processing and data analysis were performed with SPM8 (Wellcome Department of Imaging Neuroscience). ROIs were defined with WFU PickAtlas. Before statistical analyses, images were realigned (motion corrected) and smoothed with a 4-mm Gaussian filter.

Experimental tasks

Localizer

We first performed an independent localizer with a block design to define ROIs.

Stimuli

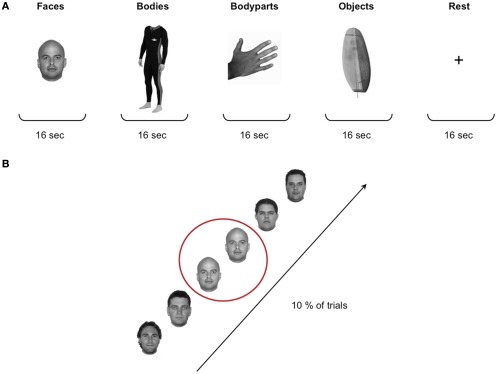

Stimuli for the localizer task were grayscale photographs of faces, headless bodies, individual body-parts (hands and feet), and objects (Figure 1). The set of stimuli included a total of 480 images, 120 for each of the four stimulus categories. Stimuli were edited with Adobe Photoshop editing software and matched for brightness and contrast. Stimuli subtended a visual angle of approximately 15°.
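As a rough illustration of what a visual angle of approximately 15° implies for the projected image size, the standard relation size = 2 · d · tan(θ/2) can be sketched as follows. The viewing distance used here is purely hypothetical, since the effective eye-to-screen path length is not reported; only the 15° value comes from the text.

```python
import math


def stimulus_size_m(visual_angle_deg: float, viewing_distance_m: float) -> float:
    """Physical extent of a stimulus that subtends the given visual angle."""
    half_angle_rad = math.radians(visual_angle_deg) / 2.0
    return 2.0 * viewing_distance_m * math.tan(half_angle_rad)


# Hypothetical viewing distance (assumption, not a reported value).
ASSUMED_DISTANCE_M = 1.0

size_m = stimulus_size_m(15.0, ASSUMED_DISTANCE_M)
print(f"Stimulus extent at {ASSUMED_DISTANCE_M} m: {size_m:.3f} m")
```

The stimulus extent scales linearly with the assumed distance, so any other effective viewing distance can be substituted directly.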

Figure 1.

Localizer stimuli and design. (A) Stimuli for the localizer task were grayscale photographs of faces, headless bodies, individual body-parts (hands and feet), and objects. The four stimulus categories were presented in a blocked design, with a total of 32 blocks of 16 s each. In between each stimulus block participants were presented with a 16-s rest block during which a fixation cross was shown in the middle of the screen. (B) Participants performed a standard “one-back” task to ensure maintenance of attention to the stimuli, that is, they were instructed to press a button whenever a particular image was repeated consecutively, which occurred on 10% of the trials.

Design

Each participant completed two runs of the localizer task. The duration of the runs was 342 s, and during each run 171 functional scans were acquired. The four stimulus categories were presented in a blocked design, with a total of 32 blocks of 16 s each. Each block contained 16 stimuli presented in the center of the screen for 500 ms with a 500-ms inter-stimulus interval (ISI). The order of the blocks was counterbalanced across subjects. In between each stimulus block participants were presented with a 16-s rest block during which a fixation cross was presented in the middle of the screen. Participants performed a standard “one-back” task to ensure maintenance of attention to the stimuli (i.e., they were instructed to press a button whenever a particular image was repeated consecutively, which occurred once in every block of 16 stimuli; Figure 1).

Analysis

Firstly, for each participant two face selective and two body selective regions were localized in each hemisphere: the FFA, the OFA, the FBA, and the EBA. Face selective regions were defined by contrasting the BOLD fMRI signal associated with the presentation of faces compared to objects (Faces > Objects). Similarly, body selective regions were defined by contrasting the BOLD fMRI signal associated with the presentation of headless bodies compared to objects (Bodies > Objects). Contrasts were calculated using t-tests and adopting family-wise error (FWE) corrections for multiple comparisons (p < 0.05). The peak voxels of the face and body selective clusters of each participant were then used to define ROIs for the fMRI-A experiment (see Results for more details).

fMRI-A experiment

Following the localizer task, participants performed an event-related adaptation (fMRI-A) experiment to characterize the activation in response to repeated presentations of faces, bodies, as well as whole individuals, within the defined ROIs.

Stimuli

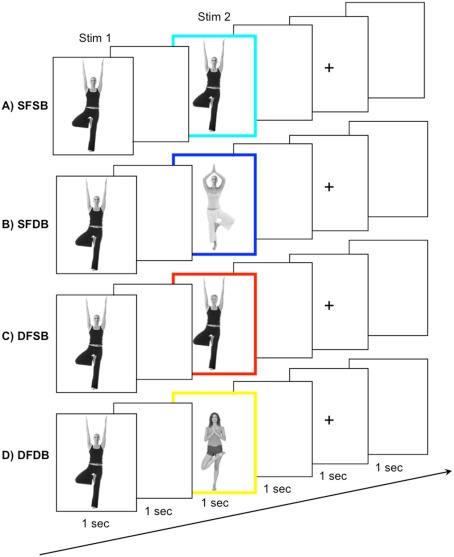

Stimuli for the adaptation experiment were grayscale photographs of whole individuals (i.e., all photographs contained both a face and a body). Photographs were edited with Adobe Photoshop software in order to create stimuli for the four experimental conditions (see below) by replacing either the face or the body of the original individual with that of another person. A total of 320 pairs of stimuli, consisting of Stim 1 and Stim 2, were created for the adaptation trials. Stim 1 was always an original grayscale image of a whole individual. Stim 2 could be either: (a) The exact same individual as Stim 1 (“same face–same body” – SFSB condition); (b) The face of Stim 1 with a different body (“same face–different body” – SFDB condition); (c) The body of Stim 1 with a different face (“different face–same body” – DFSB condition); (d) A completely different individual (“different face–different body” – DFDB condition; Figure 2). In addition, one further individual was chosen as a “target individual,” and by replacing either the target’s face or the target’s body an additional two target stimuli were created.

Figure 2.

fMRI-A experiment conditions and trial structure. Participants were shown pairs of sequentially presented stimuli. Stimulus 1, a whole individual, was followed by Stimulus 2, belonging to one out of four conditions: (A) A repeat of the same face and the same body (SFSB); (B) A repeat of the same face but a different body (SFDB); (C) A different face but a repeat of the same body (DFSB); and (D) Both a different face and a different body (DFDB). To ensure maintenance of attention to both the face and the body of the experimental stimuli, participants were instructed to press a button whenever they detected a specific target face or body, which occurred on 10% of the trials.

Design

Each participant completed eight runs of the adaptation experiment. The duration of each run was 262 s, and during each run 131 functional scans were acquired. Participants were presented with a total of 320 pairs of stimuli, 80 for each experimental condition, as well as 32 target stimuli. The order of the experimental conditions, as well as the presentation of the target stimuli, was randomized within each run. In addition, each face/body combination was shown equally often during the experiment to avoid the possibility of across-trial adaptation effects. Each trial began with the presentation of Stim 1 for 1 s, followed by an ISI of 1 s, and the presentation of Stim 2 for 1 s. The intertrial interval consisted of a 1-s blank screen, followed by a 1-s fixation cross, and another 1-s blank screen (Figure 2). Participants were instructed to view the stimuli and press a button whenever they detected one of the three target stimuli (i.e., a stimulus containing either the face or the body of the target individual), which occurred on 10% of the trials. This served to ensure maintenance of attention to both the face and the body of the experimental stimuli. As for the localizer, stimuli subtended a visual angle of approximately 15°.
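The trial structure described above can be laid out as a simple timeline. The sketch below uses the reported durations (1 s per stimulus, 1-s ISI, 3-s intertrial interval, 131 scans at a 2-s inter-scan interval); the assumption that trials run back to back from a configurable first onset is ours and is not stated in the text.

```python
TR_S = 2.0            # inter-scan interval reported for the functional scans
SCANS_PER_RUN = 131   # scans acquired per adaptation run

STIM1_S, ISI_S, STIM2_S = 1.0, 1.0, 1.0
ITI_S = 1.0 + 1.0 + 1.0   # blank screen, fixation cross, blank screen


def trial_duration_s() -> float:
    """Duration of one complete trial including the intertrial interval."""
    return STIM1_S + ISI_S + STIM2_S + ITI_S


def event_onsets(trial_index: int, first_onset_s: float = 0.0) -> dict:
    """Onsets of Stim 1 and Stim 2, assuming back-to-back trials (assumption)."""
    start = first_onset_s + trial_index * trial_duration_s()
    return {"stim1": start, "stim2": start + STIM1_S + ISI_S}


run_duration_s = SCANS_PER_RUN * TR_S

print(trial_duration_s())   # seconds per trial
print(run_duration_s)       # seconds per run
print(event_onsets(2))      # onsets for the third trial
```

With these values each trial spans three scans, and the run duration computed from the scan count matches the reported 262 s.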

Analysis

We performed two main analyses. Firstly, we performed an analysis to explore condition specific adaptation effects. In order to do this, within each of the ROIs we characterized the activation in response to the repeated presentation of same faces, bodies, as well as whole individuals. That is, for each of the four experimental conditions (SFSB, SFDB, DFSB, and DFDB), we determined clusters showing significant adaptation effects (i.e., a significant decrease in BOLD activation for Stim 2 compared to Stim 1). Contrasts were defined as (Stim 1 > Stim 2) and calculated using t-tests with FWE corrections for multiple comparisons (p < 0.05). Secondly, we performed an analysis to explore superadditive adaptation effects for the SFSB condition. In order to do this, we performed a new independent contrast within each ROI to determine whether part of the SFSB adapting voxels would actually show significantly larger adaptation to the SFSB condition than to the sum of all the other conditions. The contrast was defined as (SFSB adaptation) > (SFDB adaptation + DFSB adaptation + DFDB adaptation), and the activation was displayed using the SFSB simple adaptation contrast (SFSB Stim1 > SFSB Stim 2) as an inclusive mask in order to highlight voxels showing both SFSB adaptation and superadditivity. As for all other analyses, contrasts were calculated using t-tests with FWE corrections for multiple comparisons (p < 0.05).
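To make the two contrasts concrete, they can be written as weight vectors over one Stim 1 and one Stim 2 regressor per condition. This is only an illustrative sketch: the regressor names and ordering are hypothetical, the beta values are made-up toy numbers, and the code is not the SPM specification used in the study.

```python
CONDITIONS = ["SFSB", "SFDB", "DFSB", "DFDB"]

# Hypothetical regressor layout: Stim 1 then Stim 2 for each condition.
REGRESSORS = [f"{cond}_stim{i}" for cond in CONDITIONS for i in (1, 2)]


def adaptation_contrast(condition: str) -> list:
    """Weights for the simple adaptation contrast (Stim 1 > Stim 2)."""
    weights = [0] * len(REGRESSORS)
    weights[REGRESSORS.index(f"{condition}_stim1")] = 1
    weights[REGRESSORS.index(f"{condition}_stim2")] = -1
    return weights


def superadditive_contrast() -> list:
    """SFSB adaptation minus the summed adaptation of the other conditions."""
    total = adaptation_contrast("SFSB")
    for other in ("SFDB", "DFSB", "DFDB"):
        total = [t - w for t, w in zip(total, adaptation_contrast(other))]
    return total


def contrast_value(weights: list, betas: list) -> float:
    """Weighted sum of regressor estimates for one voxel."""
    return sum(w * b for w, b in zip(weights, betas))


# Toy betas for a single voxel (invented for illustration only).
toy_betas = [10, 4, 8, 7, 8, 7, 8, 7]
print(superadditive_contrast())
print(contrast_value(superadditive_contrast(), toy_betas))
```

In the toy voxel, SFSB adaptation is 6 while the other three conditions each show an adaptation of 1, so the superadditive contrast is positive. Restricting the display to voxels that also pass the SFSB simple adaptation contrast corresponds to the inclusive masking step described above.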

Results

Localizer and definition of ROIs

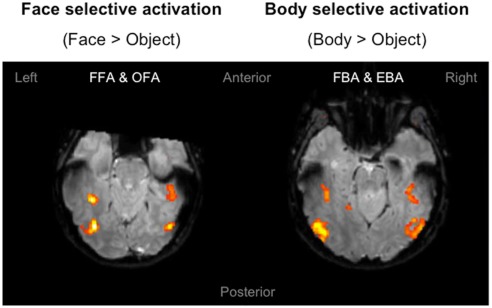

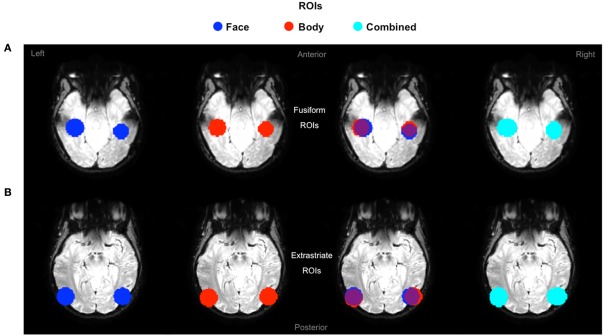

The purpose of the independent localizer was to determine face and body selective regions for each participant, and in turn to use them for the definition of ROIs for the fMRI-A experiment. Hence, for each participant we initially localized the FFA, OFA, FBA, and EBA. Consistent with previous studies (Downing et al., 2001; Orlov et al., 2010), body selective clusters were larger in the extrastriate cortex compared to the fusiform gyrus (Figure 3). For face selective responses results were mixed, with the average cluster size being larger in the left extrastriate cortex but the right fusiform gyrus (details of individual cluster sizes for all participants are provided in the Appendix). Subsequently, we defined four large ROIs (two in each hemisphere) comprising both face and body selective areas. Fusiform ROIs were defined by: (a) Extracting the peak voxel of the left and right FFA and FBA respectively; (b) Defining a sphere of 15 mm diameter around each of the peak voxels; (c) Combining the FFA and FBA centered spheres in order to create “combined” ROIs comprising both face and body selective regions. Extrastriate ROIs were defined with the exact same procedure around the peak voxels of the left and right OFA and EBA (Figure 4). This method of defining ROIs was deliberately over-inclusive, so that our analyses were not restricted to the “face-only” and “body-only” clusters defined with the independent localizer, but also included overlapping and adjacent voxels. Since our main aim was to investigate whether there are neural populations that selectively code for the visual perception of whole individuals, we hypothesized that these latter areas might be of particular relevance.

Figure 3.

Localizer activation maps of face and body selective clusters. Examples of activation maps of face and body selective clusters as defined by the independent localizer, displayed on axial functional image slices. The clusters were defined by contrasting the BOLD signal associated with the presentation of faces and bodies compared to objects. Contrasts were calculated using t-tests with family-wise error (FWE) corrections for multiple comparisons (p < 0.05).

Figure 4.

Definition of ROIs. Extrastriate and fusiform ROIs displayed on axial functional image slices. (A) Fusiform ROIs were defined by selecting, and then combining, spheres of 15 mm diameter around the peak voxel of the FFA and FBA. (B) Extrastriate ROIs were defined with the exact same procedure around the peak voxels of the OFA and EBA.
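The ROI construction (a 15 mm diameter sphere around each peak voxel, then the union of the face and body centered spheres) can be sketched on a voxel grid as follows. The peak coordinates are hypothetical, and the 2.4 mm through-plane spacing assumes that the 20% inter-slice gap refers to 20% of the 2 mm slice thickness. The study itself defined ROIs with WFU PickAtlas, so this is an illustration of the geometry rather than the actual procedure.

```python
import math
from itertools import product


def sphere_voxels(peak, diameter_mm, voxel_size_mm):
    """Voxel indices whose centres lie within a sphere around the peak voxel."""
    radius = diameter_mm / 2.0
    # Number of voxels to search in each direction along every axis.
    reach = [int(math.ceil(radius / size)) for size in voxel_size_mm]
    voxels = set()
    for offset in product(*(range(-r, r + 1) for r in reach)):
        dist = math.sqrt(sum((o * s) ** 2 for o, s in zip(offset, voxel_size_mm)))
        if dist <= radius:
            voxels.add(tuple(p + o for p, o in zip(peak, offset)))
    return voxels


# Assumed grid spacing in mm (in-plane 1.4, through-plane 2.0 plus assumed 0.4 gap).
VOXEL_SIZE_MM = (1.4, 1.4, 2.4)

# Hypothetical peak voxel indices for one hemisphere.
ffa_peak = (60, 40, 7)
fba_peak = (63, 42, 7)

ffa_sphere = sphere_voxels(ffa_peak, 15.0, VOXEL_SIZE_MM)
fba_sphere = sphere_voxels(fba_peak, 15.0, VOXEL_SIZE_MM)
combined_roi = ffa_sphere | fba_sphere   # union of the two spheres

print(len(ffa_sphere), len(fba_sphere), len(combined_roi))
```

The sketch does not clip the spheres to the image bounds or to a brain mask, which a real pipeline would need to do.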

fMRI-A experiment

Simple adaptation effects

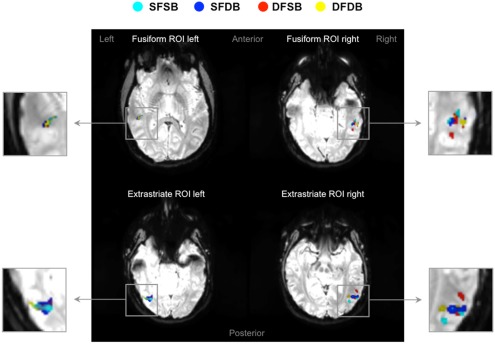

In the first instance, we performed an analysis to determine clusters of voxels showing condition specific adaptation effects for each of the experimental conditions. Detailed results of this first analysis are displayed in Figures 5 and 6, as well as Table 1. As can be seen in Figure 5, in line with previous neuroimaging studies we found voxels with condition specific adaptation effects for faces (Williams et al., 2007) and bodies (Myers and Sowden, 2008), as well as voxels that adapted to both (overlapping displays; Schwarzlose et al., 2005). In addition, however, we found clusters of voxels that showed significant adaptation only if both the face and the body were repeated (SFSB condition), indicating preferential coding for whole individuals. Lastly, we also found clusters of voxels showing significant adaptation effects in the DFDB condition, which are suggestive of category specific rather than identity specific coding. As shown in Figure 6 and Table 1, overall the adaptation effects were more consistent in the extrastriate compared to the fusiform ROIs.

Figure 5.

fMRI adaptation effects in fusiform and extrastriate ROIs. Four examples of activation maps, one for each ROI, showing condition specific adaptation effects displayed on functional image slices. The overlays distinguish the four conditions: Same Face – Same Body (SFSB); Same Face – Different Body (SFDB); Different Face – Same Body (DFSB); Different Face – Different Body (DFDB). Contrasts were calculated using t-tests with family-wise error (FWE) corrections for multiple comparisons (p < 0.05).

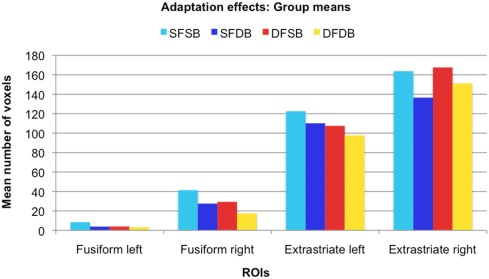

Figure 6.

Adaptation effects: Group means. For each ROI, the graph displays the mean number of voxels that showed significant adaptation effects for each condition.

Table 1.

Adaptation effects: Individual data.

| Subjects | SFSB voxels | Peak p* | Peak t | SFDB voxels | Peak p* | Peak t | DFSB voxels | Peak p* | Peak t | DFDB voxels | Peak p* | Peak t |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| FUSIFORM LEFT | | | | | | | | | | | | |
| S1 | 0 | / | / | 0 | / | / | 0 | / | / | 0 | / | / |
| S2 | 0 | / | / | 0 | / | / | 0 | / | / | 0 | / | / |
| S3 | 29 | <0.001 | 7.470 | 7 | 0.001 | 5.820 | 6 | 0.002 | 5.770 | 12 | <0.001 | 5.510 |
| S4 | 0 | / | / | 0 | / | / | 3 | 0.017 | 4.450 | 0 | / | / |
| S5 | 0 | / | / | 0 | / | / | 1 | 0.018 | 4.110 | 1 | 0.018 | 4.420 |
| S6 | 0 | / | / | 0 | / | / | 7 | 0.003 | 5.610 | 0 | / | / |
| S7 | 46 | <0.001 | 9.570 | 25 | <0.001 | 9.560 | 23 | <0.001 | 9.720 | 17 | <0.001 | 8.390 |
| S8 | 0 | / | / | 0 | / | / | 0 | / | / | 0 | / | / |
| S9 | 0 | / | / | 0 | / | / | 0 | / | / | 0 | / | / |
| S10 | 10 | 0.001 | 4.620 | 7 | 0.004 | 4.600 | 0 | / | / | 2 | 0.011 | 4.670 |
| Mean (N = 10) | 9 | | | 4 | | | 4 | | | 3 | | |
| FUSIFORM RIGHT | | | | | | | | | | | | |
| S1 | 140 | <0.001 | 12.570 | 103 | <0.001 | 13.840 | 84 | <0.001 | 12.020 | 59 | <0.001 | 8.760 |
| S2 | 0 | / | / | 0 | / | / | 0 | / | / | 0 | / | / |
| S3 | 27 | <0.001 | 6.150 | 2 | 0.011 | 4.600 | 2 | 0.011 | 4.400 | 0 | / | / |
| S4 | 33 | 0.001 | 8.520 | 32 | 0.001 | 10.420 | 65 | 0.001 | 10.150 | 21 | 0.001 | 9.540 |
| S5 | 0 | / | / | 2 | 0.010 | 5.060 | 0 | / | / | 2 | 0.019 | 4.510 |
| S6 | 0 | / | / | 0 | / | / | 3 | 0.006 | 4.750 | 0 | / | / |
| S7 | 30 | 0.004 | 5.030 | 2 | 0.012 | 4.740 | 2 | 0.012 | 4.590 | 0 | / | / |
| S8 | 0 | / | / | 13 | 0.001 | 4.720 | 10 | 0.001 | 5.170 | 0 | / | / |
| S9 | 177 | <0.001 | 6.500 | 96 | 0.001 | 5.530 | 127 | <0.001 | 5.930 | 86 | <0.001 | 5.940 |
| S10 | 7 | 0.001 | 5.660 | 26 | <0.001 | 5.570 | 1 | 0.019 | 4.410 | 7 | 0.001 | 5.660 |
| Mean (N = 10) | 41 | | | 28 | | | 29 | | | 18 | | |
| EXTRASTRIATE LEFT | | | | | | | | | | | | |
| S1 | 437 | <0.001 | 21.110 | 388 | <0.001 | 20.820 | 377 | <0.001 | 19.580 | 358 | <0.001 | 18.350 |
| S2 | 21 | 0.002 | 5.100 | 21 | <0.001 | 6.270 | 7 | 0.004 | 4.510 | 3 | 0.014 | 4.410 |
| S3 | 83 | <0.001 | 7.170 | 62 | <0.001 | 7.470 | 54 | <0.001 | 6.340 | 34 | <0.001 | 5.480 |
| S4 | 200 | <0.001 | 11.100 | 122 | <0.001 | 10.310 | 139 | <0.001 | 10.650 | 115 | <0.001 | 9.030 |
| S5 | 139 | <0.001 | 7.560 | 75 | <0.001 | 6.470 | 133 | <0.001 | 6.480 | 174 | <0.001 | 8.190 |
| S6 | 28 | <0.001 | 6.330 | 34 | <0.001 | 6.160 | 13 | 0.001 | 5.460 | 32 | <0.001 | 5.990 |
| S7 | 202 | <0.001 | 7.730 | 219 | <0.001 | 8.900 | 226 | <0.001 | 8.610 | 144 | <0.001 | 8.230 |
| S8 | 9 | 0.008 | 4.770 | 20 | 0.008 | 4.670 | 4 | 0.013 | 4.390 | 16 | 0.002 | 4.850 |
| S9 | 99 | <0.001 | 5.530 | 157 | <0.001 | 6.350 | 117 | <0.001 | 6.520 | 102 | <0.001 | 5.560 |
| S10 | 7 | 0.012 | 4.450 | 4 | 0.005 | 4.690 | 5 | 0.012 | 4.940 | 0 | / | / |
| Mean (N = 10) | 123 | | | 110 | | | 108 | | | 98 | | |
| EXTRASTRIATE RIGHT | | | | | | | | | | | | |
| S1 | 549 | <0.001 | 20.360 | 519 | <0.001 | 19.520 | 519 | <0.001 | 18.420 | 496 | <0.001 | 17.660 |
| S2 | 5 | 0.006 | 4.330 | 6 | 0.003 | 5.220 | 0 | / | / | 0 | / | / |
| S3 | 326 | <0.001 | 7.810 | 143 | <0.001 | 7.560 | 168 | <0.001 | 7.530 | 105 | <0.001 | 6.710 |
| S4 | 264 | <0.001 | 9.260 | 227 | <0.001 | 10.380 | 317 | <0.001 | 10.830 | 169 | <0.001 | 9.270 |
| S5 | 157 | <0.001 | 6.780 | 74 | <0.001 | 6.220 | 175 | <0.001 | 6.890 | 229 | <0.001 | 8.280 |
| S6 | 74 | <0.001 | 6.300 | 36 | <0.001 | 5.950 | 74 | <0.001 | 7.020 | 67 | <0.001 | 6.800 |
| S7 | 29 | <0.001 | 6.160 | 37 | <0.001 | 7.060 | 37 | <0.001 | 7.580 | 34 | <0.001 | 7.220 |
| S8 | 0 | / | / | 4 | 0.005 | 4.430 | 0 | / | / | 0 | / | / |
| S9 | 228 | <0.001 | 6.000 | 309 | <0.001 | 6.770 | 383 | <0.001 | 6.870 | 394 | <0.001 | 6.870 |
| S10 | 6 | 0.002 | 4.620 | 11 | 0.002 | 5.050 | 3 | 0.007 | 4.760 | 17 | <0.001 | 5.660 |
| Mean (N = 10) | 164 | | | 137 | | | 168 | | | 151 | | |

For each ROI, the table displays the individual adaptation effects. For each experimental condition (SFSB, SFDB, DFSB, and DFDB), it displays the number of voxels showing significant adaptation as well as the peak p and t values of the adaptation clusters. *All p values are family-wise error (FWE) corrected (p < 0.05). / = missing value due to absence of adaptation effect.

Superadditive adaptation effects

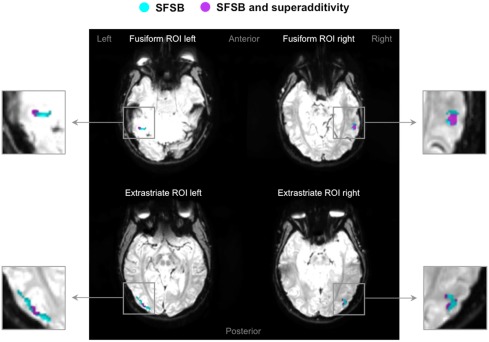

As mentioned earlier, our main question of interest was to investigate whether there are neural populations that exclusively code for whole individuals. A supportive indication of this fact was the finding that some voxels significantly adapted only to the repetition of the same whole individual, and not to the repetition of the same face or same body alone. However, these apparently selective responses to the SFSB condition could also be due to additivity. That is, on the basis of our first analyses we cannot exclude that SFSB adapting voxels merely reflect the coupled response of face and body selective neural populations. In order to get around this issue, we performed an additional analysis by defining a superadditive contrast for the SFSB condition, and investigated whether part of the SFSB adapting voxels would actually show significantly larger adaptation to the SFSB condition than to the sum of all the other conditions. The results of this second analysis are displayed in Figures 7 and 8, as well as Table 2. In each of the ROIs, we found a small percentage of voxels that showed both SFSB adaptation and superadditivity, and hence response selectivity to whole individuals which cannot be explained by the sum of face and body selective responses.

Figure 7.

Superadditivity effects in fusiform and extrastriate ROIs. Four examples of activation maps, one for each ROI, showing SFSB condition specific adaptation effects as well as SFSB superadditivity adaptation effects displayed on functional image slices. The overlays distinguish voxels showing SFSB adaptation from SFSB voxels also showing superadditivity, defined as (SFSB adaptation) > (SFDB adaptation + DFSB adaptation + DFDB adaptation). Contrasts were calculated using t-tests with family-wise error (FWE) corrections for multiple comparisons (p < 0.05).

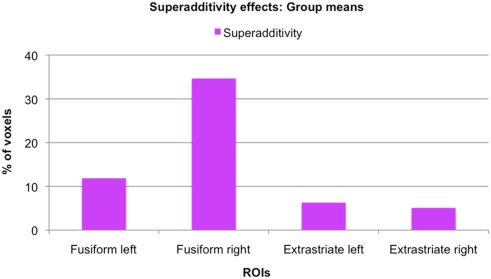

Figure 8.

Size of superadditivity effects: Group means. For each ROI, the graph displays the mean percentage of voxels of the original SFSB condition specific adaptation effects that also showed superadditivity, that is where the SFSB adaptation effect was bigger than the sum of the adaptation effects of all other conditions.

Table 2.

Superadditivity effects: Individual data.

| Subjects | SFSB voxels | Superadditivity voxels | Peak p* | Peak t | Superadditivity % |
|---|---|---|---|---|---|
| FUSIFORM LEFT | | | | | |
| S1 | / | / | / | / | / |
| S2 | / | / | / | / | / |
| S3 | 29 | 4 | 0.009 | 3.380 | 14 |
| S4 | / | / | / | / | / |
| S5 | / | / | / | / | / |
| S6 | / | / | / | / | / |
| S7 | 46 | 10 | <0.001 | 4.970 | 22 |
| S8 | / | / | / | / | / |
| S9 | / | / | / | / | / |
| S10 | 10 | 0 | / | / | 0 |
| Mean (N = 3) | 28 | 5 | | | 12 |
| FUSIFORM RIGHT | | | | | |
| S1 | 140 | 21 | <0.001 | 5.030 | 15 |
| S2 | / | / | / | / | / |
| S3 | 27 | 18 | <0.001 | 6.390 | 67 |
| S4 | 33 | 16 | 0.002 | 3.940 | 48 |
| S5 | / | / | / | / | / |
| S6 | / | / | / | / | / |
| S7 | 30 | 22 | 0.001 | 3.990 | 73 |
| S8 | / | / | / | / | / |
| S9 | 177 | 8 | 0.006 | 3.540 | 5 |
| S10 | 7 | 0 | / | / | 0 |
| Mean (N = 6) | 69 | 14 | | | 35 |
| EXTRASTRIATE LEFT | | | | | |
| S1 | 437 | 18 | <0.001 | 4.580 | 4 |
| S2 | 21 | 4 | 0.021 | 3.040 | 19 |
| S3 | 83 | 6 | 0.007 | 3.460 | 7 |
| S4 | 200 | 9 | 0.009 | 3.350 | 5 |
| S5 | 139 | 5 | 0.027 | 2.920 | 4 |
| S6 | 28 | 0 | / | / | 0 |
| S7 | 202 | 27 | 0.006 | 3.540 | 13 |
| S8 | 9 | 1 | 0.019 | 3.080 | 11 |
| S9 | 99 | 0 | / | / | 0 |
| S10 | 7 | 0 | / | / | 0 |
| Mean (N = 10) | 123 | 7 | | | 6 |
| EXTRASTRIATE RIGHT | | | | | |
| S1 | 549 | 21 | 0.005 | 3.580 | 4 |
| S2 | 5 | 0 | / | / | 0 |
| S3 | 326 | 50 | <0.001 | 6.390 | 15 |
| S4 | 264 | 18 | <0.001 | 4.560 | 7 |
| S5 | 157 | 4 | 0.005 | 3.590 | 3 |
| S6 | 74 | 5 | 0.038 | 2.770 | 7 |
| S7 | 29 | 2 | 0.001 | 4.280 | 7 |
| S8 | / | / | / | / | / |
| S9 | 228 | 8 | 0.020 | 3.040 | 4 |
| S10 | 6 | 0 | / | / | 0 |
| Mean (N = 9) | 182 | 12 | | | 5 |

For each ROI, the table displays the individual superadditivity effects. For each subject, it displays the number of voxels showing SFSB adaptation, the number and percentage of those voxels that also showed superadditivity, and the peak p and t values of the superadditivity clusters. *All p values are family-wise error (FWE) corrected (p < 0.05). / = missing value due to absence of adaptation effect.
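As a worked illustration of how the percentage column relates to the voxel counts, the following minimal Python sketch recomputes the left fusiform values from Table 2. The function and variable names are hypothetical, and averaging the per-subject percentages (rather than dividing the mean counts) is an assumption that appears consistent with the tabulated group means.

```python
# Voxel counts for the left fusiform ROI, copied from Table 2.
# Subjects without an SFSB adaptation effect ("/" in the table) are omitted.
left_fusiform = {
    "S3": (29, 4),    # (SFSB voxels, superadditivity voxels)
    "S7": (46, 10),
    "S10": (10, 0),
}


def superadditivity_percent(sfsb_voxels: int, superadditive_voxels: int) -> float:
    """Share of SFSB-adapting voxels that also show superadditivity, in percent."""
    if sfsb_voxels <= 0:
        raise ValueError("percentage is undefined without SFSB voxels")
    return 100.0 * superadditive_voxels / sfsb_voxels


# Per-subject percentages, then the group mean of those percentages
# (assumption: the table's mean row averages the individual percentages).
per_subject = {
    subject: superadditivity_percent(*counts)
    for subject, counts in left_fusiform.items()
}
group_mean = sum(per_subject.values()) / len(per_subject)

for subject, pct in per_subject.items():
    print(f"{subject}: {pct:.0f}%")
print(f"Mean (N = {len(per_subject)}): {group_mean:.0f}%")
```

Under this assumption, subjects with SFSB adaptation but no superadditive voxels (such as S10) contribute 0% to the group mean, whereas subjects without any SFSB adaptation are excluded from N.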

Discussion

Several neuroimaging studies have shown that the perception of isolated faces as well as headless bodies evokes selective neural activity in specialized brain regions of the fusiform gyrus and occipitotemporal cortex (Kanwisher et al., 1997; Peelen and Downing, 2007). The aim of the current study was to investigate to what extent the perception of faces and bodies is integrated at a neural level, giving rise to our ability to simultaneously extract information conveyed by the face and the body of other individuals (Van den Stock et al., 2007). That is, we aimed to investigate whether, in addition to brain areas responding selectively to isolated faces and headless bodies, there are brain areas that selectively respond to whole individuals.

We addressed this question using an fMRI-A paradigm involving the sequential presentation of visual stimuli depicting whole individuals. The design of our experiment allowed us to measure adaptation effects occurring when both the face and the body (SFSB condition), just the face (SFDB condition), just the body (DFSB condition), or neither the face nor the body (DFDB condition) of an individual were repeated. It is known that adaptation effects for a component of a stimulus (e.g., the face or the body) only occur in neural populations that are sensitive to that particular component (Grill-Spector and Malach, 2001). Hence, evidence for the existence of neural populations that selectively code for whole individuals would be clusters of voxels that show more adaptation to whole individuals than could be explained merely by the sum of the adaptation occurring to the face and to the body.

After defining ROIs comprising both face and body selective areas in fusiform and extrastriate regions, we initially performed simple adaptation contrasts and determined the individual adaptation effects for all experimental conditions (SFSB, SFDB, DFSB, and DFDB). In line with previous neuroimaging studies we found voxels with condition specific adaptation effects for faces (Williams et al., 2007) and bodies (Myers and Sowden, 2008). Other voxels, in contrast, adapted in both the same face (SFDB) and the same body (DFSB) conditions, reflecting the previously shown overlap between face and body selective processing areas. In addition to face and body selective adaptation effects, we also found clusters of voxels that only adapted when both the face and the body were repeated (SFSB condition), suggesting identity specific adaptation to whole individuals. Lastly, we found clusters of voxels that significantly adapted even if both the face and the body of Stim 2 were different from those of Stim 1 (DFDB condition). This is likely to reflect a category specific rather than identity specific adaptation effect, namely the fact that both stimuli are classified as whole individuals, rather than Stim 1 and Stim 2 being recognized as the same individual. While simple adaptation effects were present in all ROIs, cluster sizes were larger and more consistent across conditions in the extrastriate ROIs for all experimental conditions (Figure 6; Table 1). This might be explained by the fact that, with the exception of the right FFA, and in line with previous studies (Downing et al., 2001; Orlov et al., 2010), the activation clusters detected with the independent localizer were larger in the extrastriate compared to the fusiform regions (Figure 3; Appendix). Even though we did not use these actual clusters for our fMRI-A experiment, but instead defined homogeneous ROIs around their peak voxels for all participants, it could still be the case that there was a larger concentration of face as well as body responsive neurons in the extrastriate compared to the fusiform ROIs.

While the existence of voxels that show significant adaptation only when both the same face and the same body are presented points toward response selectivity for whole individuals, it cannot be taken as evidence for it. The adaptation effect for whole individuals could be merely additive, namely a sum of face and body specific responses. That is, it could simply reflect the fact that some voxels contain a mixture of face and body selective neurons whose individual category specific response is not strong enough to yield significant adaptation, but whose combined response is. We therefore took our analysis one step further and defined a superadditive contrast for the SFSB condition. Specifically, we defined a new independent contrast that allowed us to investigate whether, for some voxels showing significant SFSB adaptation, this adaptation was actually larger than the sum of the adaptation shown for all other conditions. As described in the Section “Materials and Methods,” the contrast was defined as (SFSB adaptation) > (SFDB adaptation + DFSB adaptation + DFDB adaptation), and the activation was displayed using the SFSB simple adaptation contrast (SFSB Stim 1 > SFSB Stim 2) as an inclusive mask in order to highlight voxels showing both SFSB adaptation and superadditivity. Importantly, we would like to underline that, in order to avoid circularity, both contrasts (the SFSB condition specific adaptation effect and the SFSB superadditive effect) were defined independently, and for both contrasts significance thresholds were adjusted for multiple comparisons using all voxels of the original ROIs. Hence, taken together the two analyses constitute a form of conjunction analysis that enables the identification of voxels with significant effects in two independent contrasts of interest (Nichols et al., 2005). 
Our analysis revealed a small percentage of superadditive SFSB voxels in all ROIs, hence demonstrating at least some degree of response selectivity for whole individuals in both fusiform and extrastriate regions (Figure 8; Table 2). Interestingly, while the simple SFSB adaptation effects were much less consistent in the fusiform ROIs, the actual percentage of SFSB voxels that also showed superadditivity was larger in the fusiform compared to the extrastriate regions. Overall, this trend is in line with the proposed idea that fusiform regions are more specialized in holistic or configural processing, whereas extrastriate regions are more specialized in featural or part-based processing (Taylor et al., 2007; Liu et al., 2010; Pitcher et al., 2011). However, given our relatively small sample size and the even smaller number of SFSB adaptation effects in the fusiform ROIs, we cannot draw any strong conclusions on the basis of this specific pattern observed in our data.
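The logic of the superadditive contrast and its inclusive mask can be sketched in a few lines of Python. This is an illustration only, not the analysis pipeline used in the study: the regressor order, the names, and the toy response estimates are all hypothetical, and the sketch compares raw effect sizes against zero, whereas the reported analysis used t-tests with FWE correction over all voxels of each ROI.

```python
import numpy as np

# Hypothetical regressor order: Stim 1 and Stim 2 for each of the four conditions.
REGRESSORS = [
    "SFSB_stim1", "SFSB_stim2",
    "SFDB_stim1", "SFDB_stim2",
    "DFSB_stim1", "DFSB_stim2",
    "DFDB_stim1", "DFDB_stim2",
]

# Simple adaptation contrast: SFSB Stim 1 > SFSB Stim 2.
simple_sfsb = np.array([1, -1, 0, 0, 0, 0, 0, 0], dtype=float)

# Superadditive contrast:
# (SFSB adaptation) - (SFDB adaptation + DFSB adaptation + DFDB adaptation),
# where each adaptation term is (Stim 1 - Stim 2) for that condition.
superadditive = np.array([1, -1, -1, 1, -1, 1, -1, 1], dtype=float)

# Toy response estimates (rows: voxels, columns: regressors); values are made up.
betas = np.array([
    [2.0, 0.5, 1.0, 0.75, 1.0, 0.75, 1.0, 1.0],  # SFSB adaptation exceeds the sum
    [2.0, 1.0, 1.5, 1.0, 1.5, 1.0, 1.0, 1.0],    # SFSB adaptation equals the sum
    [1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0],    # no adaptation in any condition
])

simple_effect = betas @ simple_sfsb
super_effect = betas @ superadditive

# Inclusive masking: keep voxels that show both SFSB adaptation and superadditivity.
# (Assumption: a positive effect stands in for a significant, corrected t-test.)
conjunction = (simple_effect > 0) & (super_effect > 0)

print("SFSB adaptation:      ", simple_effect)
print("Superadditive effect: ", super_effect)
print("Conjunction mask:     ", conjunction)
```

In this toy example only the first voxel survives the conjunction: the second voxel adapts to repeated whole individuals, but no more than the summed adaptation in the other conditions would predict, which is the purely additive case that the superadditive contrast is designed to exclude.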

In sum, using fMRI-A we found evidence for selective brain activation associated with the visual perception of whole individuals. Albeit only shown by a relatively small number of voxels, these selective brain responses were present in both fusiform and extrastriate regions, suggesting that both play a role in the integration of face and body processing. Hence, our results suggest that at a neural level face and body perception are more closely integrated than previously assumed. The functional specificity of neural populations showing response selectivity for whole individuals is likely to support fast and accurate recognition and integration of information conveyed by both faces and bodies. In turn, these populations can be assumed to play an important role in identity as well as emotion recognition in everyday life. They may also represent the neural underpinnings of some of the behavioral experimental effects demonstrating functional interaction of face and body processing, such as the cross category body-to-face adaptation aftereffect. Lastly, our findings are of interest for cognitive models of face and body perception that outline the sequential steps proposed to be involved in the processing of either of these categories of stimuli, and call for a clearer specification of the level at which such integration might occur.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We would like to thank the Reviewers for their feedback. Many thanks also to Chris Baker, Anina Rich, and Rebecca Schwarzlose for their comments on an earlier version of this manuscript, to Claudio Brozzoli for invaluable discussions during the revision process, and to Giovanni Gentile for technical advice on the analyses. Laura Schmalzl is supported by the European Research Council, Regine Zopf is supported by a Macquarie University Research Excellence Scholarship (MQRES), and Mark A. Williams is supported by the Australian Research Council (DP0984919).

Appendix

Table A1.

Face and body selective activations shown with the independent localizer: Individual data.

| Subjects | FFA left | FFA right | FBA left | FBA right |
|---|---|---|---|---|
| FUSIFORM FACE AND BODY SELECTIVE ACTIVATIONS | | | | |
| S1 | 57 | 284 | 259 | 229 |
| S2 | 29 | 24 | 4 | 33 |
| S3 | 213 | 454 | 122 | 268 |
| S4 | 11 | 18 | 4 | 4 |
| S5 | 107 | 210 | 7 | 125 |
| S6 | 264 | 239 | 87 | 96 |
| S7 | 13 | 95 | 22 | 101 |
| S8 | 318 | 2745 | 34 | 97 |
| S9 | 0 | 59 | 0 | 0 |
| S10 | 128 | 313 | 19 | 47 |
| Mean N = 10 | 114 | 444 | 56 | 100 |

| Subjects | OFA left | OFA right | EBA left | EBA right |
|---|---|---|---|---|
| EXTRASTRIATE FACE AND BODY SELECTIVE ACTIVATIONS | | | | |
| S1 | 44 | 80 | 1408 | 1229 |
| S2 | 160 | 389 | 500 | 775 |
| S3 | 386 | 888 | 346 | 978 |
| S4 | 10 | 44 | 21 | 27 |
| S5 | 405 | 227 | 377 | 236 |
| S6 | 80 | 172 | 422 | 682 |
| S7 | 69 | 145 | 261 | 542 |
| S8 | 2117 | 4 | 1254 | 1325 |
| S9 | 9 | 24 | 79 | 121 |
| S10 | 16 | 91 | 313 | 932 |
| Mean N = 10 | 330 | 206 | 498 | 685 |

The table displays the number of voxels that showed face selective (Face > Object) and body selective (Body > Object) activations in both the fusiform region (FFA and FBA) and extrastriate region (OFA and EBA). All contrasts were calculated using t-tests and adopting family-wise error (FWE) corrections for multiple comparisons (p < 0.05).

Footnotes

1. Functional magnetic resonance imaging adaptation is not to be confused with the behavioral adaptation aftereffect paradigm described above.

References

- Aviezer H., Hassin R. R., Ryan J., Grady C., Susskind J., Anderson A., Moscovitch M., Bentin S. (2008). Angry, disgusted, or afraid? Studies on the malleability of emotion perception. Psychol. Sci. 19, 724–732. doi: 10.1111/j.1467-9280.2008.02148.x

- Brozzoli C., Gentile G., Petkova V. I., Ehrsson H. H. (2011). fMRI-adaptation reveals a cortical mechanism for the coding of space near the hand. J. Neurosci. 31, 9023–9031. doi: 10.1523/JNEUROSCI.1172-11.2011

- Chan A. W., Kravitz D. J., Truong S., Arizpe J., Baker C. I. (2010). Cortical representations of bodies and faces are strongest in commonly experienced configurations. Nat. Neurosci. 13, 417–418. doi: 10.1038/nn.2502

- Downing P. E., Jiang Y., Shuman M., Kanwisher N. (2001). A cortical area selective for visual processing of the human body. Science 293, 2470–2473. doi: 10.1126/science.1063414

- Downing P. E., Peelen M. V. (2011). The role of occipitotemporal body-selective regions in person perception. Cogn. Neurosci. 2, 186–203. doi: 10.1080/17588928.2011.582945

- Epstein R. A., Higgins J. S., Thompson-Schill S. L. (2005). Learning places from views: variation in scene processing as a function of experience and navigational ability. J. Cogn. Neurosci. 17, 73–83. doi: 10.1162/0898929052879987

- Gauthier I., Tarr M. J., Moylan J., Skudlarski P., Gore J. C., Anderson W. A. (2000). The fusiform “face area” is part of a network that processes faces at the individual level. J. Cogn. Neurosci. 12, 495–504. doi: 10.1162/089892900562165

- Ghuman A. S., McDaniel J. R., Martin A. (2010). Face adaptation without a face. Curr. Biol. 20, 32–36. doi: 10.1016/j.cub.2009.10.077

- Goh J. O. S., Siong S. C., Park D., Gutchess A., Hebrank A., Chee M. W. L. (2004). Cortical areas involved in object, background, and object–background processing revealed with functional magnetic resonance adaptation. J. Neurosci. 24, 10223–10228. doi: 10.1523/JNEUROSCI.3373-04.2004

- Grill-Spector K., Malach R. (2001). fMR-adaptation: a tool for studying the functional properties of human cortical neurons. Acta Psychol. (Amst.) 107, 293–321. doi: 10.1016/S0001-6918(01)00019-1

- Kanwisher N., McDermott J., Chun M. M. (1997). The fusiform face area: a module in human extrastriate cortex specialized for face perception. J. Neurosci. 17, 4302–4311.

- Liu J., Harris A., Kanwisher N. (2010). Perception of face parts and face configurations: an fMRI study. J. Cogn. Neurosci. 22, 203–211. doi: 10.1162/jocn.2009.21203

- Maurer D., Le Grand R., Mondloch C. J. (2002). The many faces of configural processing. Trends Cogn. Sci. (Regul. Ed.) 6, 255–260. doi: 10.1016/S1364-6613(02)01903-4

- Minnebusch D. A., Daum I. (2009). Neuropsychological mechanisms of visual face and body perception. Neurosci. Biobehav. Rev. 33, 1133–1144. doi: 10.1016/j.neubiorev.2009.05.008

- Morris J. P., Pelphrey K. A., McCarthy G. (2006). Occipitotemporal activation evoked by the perception of human bodies is modulated by the presence or absence of the face. Neuropsychologia 44, 1919–1927. doi: 10.1016/j.neuropsychologia.2006.01.035

- Myers A., Sowden P. T. (2008). Your hand or mine? The extrastriate body area. Neuroimage 42, 1669–1677. doi: 10.1016/j.neuroimage.2008.05.045

- Nichols T., Brett M., Andersson J., Wager T., Poline J. (2005). Valid conjunction inference with the minimum statistic. Neuroimage 25, 653–660. doi: 10.1016/j.neuroimage.2004.12.005

- Orlov T., Makin T. R., Zohary E. (2010). Topographic representation of the human body in the occipitotemporal cortex. Neuron 68, 586–600. doi: 10.1016/j.neuron.2010.09.032

- Peelen M. V., Downing P. E. (2007). The neural basis of visual body perception. Nat. Rev. Neurosci. 8, 636–648. doi: 10.1038/nrn2195

- Perrett D. I., Rolls E. T., Caan W. (1982). Visual neurons responsive to faces in the monkey temporal cortex. Exp. Brain Res. 47, 329–342.

- Pitcher D., Duchaine B., Walsh V., Yovel G., Kanwisher N. (2011). The role of lateral occipital face and object areas in the face inversion effect. Neuropsychologia 49, 3448–3453. doi: 10.1016/j.neuropsychologia.2011.08.020

- Reed C. L., Stone V. E., Bozova S., Tanaka J. (2003). The body-inversion effect. Psychol. Sci. 14, 302–308. doi: 10.1111/1467-9280.14431

- Schwarzlose R. F., Baker C. I., Kanwisher N. (2005). Separate face and body selectivity on the fusiform gyrus. J. Neurosci. 25, 11055–11059. doi: 10.1523/JNEUROSCI.2621-05.2005

- Taylor J. C., Wiggett A. J., Downing P. E. (2007). Functional MRI analysis of body and body part representations in the extrastriate and fusiform body areas. J. Neurophysiol. 98, 1626–1633. doi: 10.1152/jn.00012.2007

- Van den Stock J., Righart R., de Gelder B. (2007). Body expressions influence recognition of emotions in the face and voice. Emotion 7, 487–494. doi: 10.1037/1528-3542.7.3.487

- Vuilleumier P., Henson R. N., Driver J., Dolan R. J. (2002). Multiple levels of visual object constancy revealed by event-related fMRI of repetition priming. Nat. Neurosci. 5, 491–499. doi: 10.1038/nn839

- Wachsmuth E., Oram M. W., Perrett D. I. (1994). Recognition of objects and their component parts: responses of single units in the temporal cortex of the macaque. Cereb. Cortex 4, 509–522. doi: 10.1093/cercor/4.5.509

- Williams M. A., Berberovic N., Mattingley J. B. (2007). Abnormal fMRI adaptation to unfamiliar faces in a case of developmental prosopamnesia. Curr. Biol. 17, 1259–1264. doi: 10.1016/j.cub.2007.03.027

- Yovel G., Pelc T., Lubezky I. (2010). It’s all in your head: why is the body inversion effect abolished for headless bodies? J. Exp. Psychol. Hum. Percept. Perform. 36, 759–767. doi: 10.1037/a0017451