Abstract

Oscillatory alpha-band activity (8–15 Hz) over parieto-occipital cortex in humans plays an important role in suppression of processing for inputs at to-be-ignored regions of space, with increased alpha-band power observed over cortex contralateral to locations expected to contain distractors. It is unclear whether similar processes operate during deployment of spatial attention in other sensory modalities. Evidence from lesion patients suggests that parietal regions house supramodal representations of space. The parietal lobes are prominent generators of alpha oscillations, raising the possibility that alpha is a neural signature of supramodal spatial attention. Furthermore, when spatial attention is deployed within vision, processing of task-irrelevant auditory inputs at attended locations is also enhanced, pointing to automatic links between spatial deployments across senses. Here, we asked whether lateralized alpha-band activity is also evident in a purely auditory spatial-cueing task and whether it had the same underlying generator configuration as in a purely visuospatial task. If common to both sensory systems, this would provide strong support for “supramodal” attention theory. Alternately, alpha-band differences between auditory and visual tasks would support a sensory-specific account. Lateralized shifts in alpha-band activity were indeed observed during a purely auditory spatial task. Crucially, there were clear differences in scalp topographies of this alpha activity depending on the sensory system within which spatial attention was deployed. Findings suggest that parietally generated alpha-band mechanisms are central to attentional deployments across modalities but that they are invoked in a sensory-specific manner. The data support an “interactivity account,” whereby a supramodal system interacts with sensory-specific control systems during deployment of spatial attention.

Introduction

One influential theory of attention proposes that a single control system allocates attention to locations in space regardless of the sensory modality to be attended [a “supramodal” spatial attention system (Farah et al., 1989)]. An alternative account is that spatial attention could be allocated independently by several sensory-specific control systems. Studies of patients with parietal lesions have provided strong support for the former account, with further supporting evidence for a supramodal system coming from electroencephalography (EEG) (Eimer et al., 2003; Störmer et al., 2009; Kerlin et al., 2010) and functional magnetic resonance imaging (fMRI) studies (Macaluso et al., 2003; Shomstein and Yantis, 2006; Smith et al., 2010). However, other findings point to a third possibility, whereby a supramodal system interacts with sensory-specific control systems during the deployment of spatial attention, what we will term “the interactivity thesis” (Spence and Driver, 1996; Eimer et al., 2002; Santangelo et al., 2010). The present study was designed to adjudicate between these competing accounts. Here, we assess the role of alpha-band (8–15 Hz) oscillatory mechanisms in the anticipatory deployment of both auditory and visual spatial attention. We chose to interrogate alpha-band activity, specifically because it is mainly generated by those structures in the right parietal lobe implicated in the supramodal theory and also because it has been firmly established as a mechanism by which attention is deployed within vision (Foxe et al., 1998; Worden et al., 2000; Dockree et al., 2007; Rihs et al., 2007). Conversely, it is not yet clear whether this oscillatory mechanism is also invoked during the deployment of attention in a purely auditory spatial task. If this mechanism were found to be common to both sensory systems, this would provide compelling support for the supramodal account. 
Alternatively, a sensory-specific account would be supported if the spatially specific alpha-band activity seen during visuospatial deployments was absent during auditory deployments. The third possibility is that alpha-band activity is invoked during both auditory and visual deployments but that there are systematic differences in terms of the cortical regions generating these effects. Under this last scenario, alpha-band activity would likely represent a mechanism that has both supramodal and sensory-specific generators, providing support for the interactivity thesis.

If a supramodal system does indeed control deployment of spatial attention, neural mechanisms for the suppression of irrelevant/distracting information found during visuospatial attention tasks might be expected to be similarly deployed during spatially selective attention to stimulation in other sensory modalities. Retinotopically specific increases of power in the 8–15 Hz (“alpha”) frequency band of the EEG have been repeatedly implicated in the anticipatory deployment of visuospatial attention (Worden et al., 2000; Kelly et al., 2006; Thut et al., 2006; Gomez-Ramirez et al., 2009). These alpha increases have parieto-occipital scalp distributions indicative of neural generators in visual cortex with receptive fields corresponding to the spatial location of the distractors, strongly suggesting that alpha-band increases reflect an active attentional suppression mechanism (Foxe et al., 1998; Gomez-Ramirez et al., 2007; Snyder and Foxe, 2010). In support of this interpretation, a transcranial magnetic stimulation (TMS) study found that disruption of frontal or parietal regions of the attentional control system disrupted modulation of alpha-band activity over primary visual areas in a visual spatial attention task and that the degree of disruption of alpha modulation was related to impaired behavioral performance (Capotosto et al., 2009). The functional role of this process is bolstered by a number of additional studies that have shown a relationship between the magnitude of anticipatory alpha-band activity and subsequent performance (Thut et al., 2006; Kelly et al., 2009; O'Connell et al., 2009). In a particularly compelling demonstration, Romei et al. (2010) used short-train TMS of visual cortex to show that alpha-band stimulation (10 Hz) selectively disrupted target detection in the visual field contralateral to stimulation, an effect that was not observed using other stimulation frequencies (theta, 5 Hz; beta, 20 Hz).

Here we assessed whether these alpha-band mechanisms would also be evoked in a purely audiospatial task. The experimental setup was carefully titrated such that there were no visual cues whatsoever as to the location of the auditory imperative stimuli. We then compared alpha-band activity during spatial deployments in this pure audiospatial task with those recorded during the equivalent visuospatial task, asking whether lateralized alpha effects would be evident under both settings and, if so, whether these oscillatory mechanisms were generated by the same cortical regions or showed sensory-specific generator patterns.

Materials and Methods

Participants

Twenty neurologically typical adults (12 female; mean ± SD, 25.31 ± 4.59 years) served in the study. All participants were recruited from the psychology department at the City College of New York. Data from 2 of the 20 participants were excluded as a result of excessive eye blinks that resulted in an insufficient number of trials after artifact rejection. Thus, 18 participants (11 female; mean ± SD, 23.15 ± 4.16 years) remained in the sample. Two participants were left-handed by self-report, and all had normal or corrected-to-normal vision and normal hearing. All subjects provided written informed consent and received a modest fee for participation ($12/h). All materials and procedures were approved by the institutional review board of the City College of the City University of New York, and ethical guidelines were in accordance with the tenets of the Declaration of Helsinki.

There were two phases to the experiment: (1) a purely auditory spatial task (Fig. 1A), (2) followed by a visuospatial task (Fig. 1B). This task order was a crucial design feature. Because the primary question here concerned whether putatively visual oscillatory mechanisms would also be observed during a purely auditory spatial task, we felt it essential to conduct the auditory task first without presenting any visuospatial cues to the participants. We reasoned that, if the visual task were administered first, participants might develop a task strategy for processing stimuli in visuospatial coordinates, and this strategy could then carry over to the auditory task if it were administered afterward. There is much precedent for the continued activation of circuits responsible for a formerly relevant task, even if participants are fully aware that the task will not be relevant again (Wylie et al., 2004). Thus, all participants completed the auditory task before the visual task. Additionally, because the occurrence of lateralized alpha-band effects during visuospatial tasks is not in dispute, having been replicated many times by many investigators (Kelly et al., 2005, 2009; Rihs et al., 2009), this secondary visuospatial task was conducted here to ensure that the effect was again replicated in this specific cohort and so that topographies of putative alpha effects could be compared across sensory modalities.

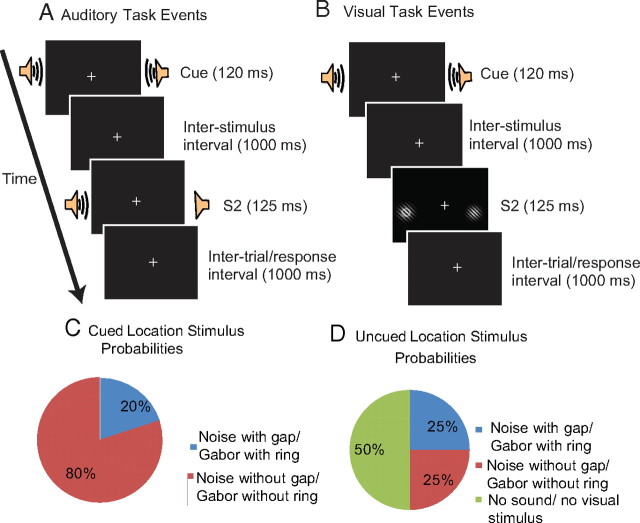

Figure 1.

A schematic of the experimental paradigm illustrates the sequence of events and their timing within a trial, for each of the auditory (A) and visual (B) tasks. The spoken phoneme (/ba/ or /da/) served to cue participants to attend right or to attend left for the occurrence of a possible target. These auditory cues were followed 1000 ms later by the imperative stimulus (S2: Gabors in the visual task and a noise burst in the auditory task). In the depiction of the visual task (B), a target ring is present in the left Gabor. C and D delineate the stimulus probabilities at cued and uncued locations for auditory and visual tasks.

Data from the first two participants from the visual task were not included in the grand average because of equipment failures during delivery of the stimuli. Thus, 18 participants were included in the final group for the auditory task, and 16 participants were included in the final group for the visual task.

Stimuli, apparatus, and procedure

Participants sat in a darkened, electrically shielded, sound-attenuated booth and fixated on a cross displayed continuously on the center of a cathode ray tube computer monitor (NEC FE2111SB, 40 × 30 cm), placed 100 cm in front of them. Stimuli were delivered using Presentation software (Neurobehavioral Systems). Speakers were placed to the left and right of the computer monitor (centered ∼14° from midline) and were completely hidden from view behind a permanent opaque black curtain to prevent their explicit visuospatial mapping. Participants were never able to see the speakers. Gaze direction was monitored using an Eyelink 1000 eye-tracking camera (SR Research Ltd.) to ensure that participants maintained central fixation. Horizontal electro-oculogram data were also recorded and analyzed to confirm that there were no effects of systematic eye movements.

We used a classic S1–S2 cued attention task, in which each trial consisted of a cue (S1), an intervening blank preparatory period, followed immediately by a task-relevant second stimulus (S2) (Fig. 1). Tasks of this type often use probabilistic cues, in which participants are told to respond to all targets, even at the uncued location (Posner et al., 1980). Here, instructional cues were used (Worden et al., 2000) such that participants were directed only to respond to targets at the cued location and suppress/ignore stimuli at the uncued location. Thus, all stimuli at uncued locations served as distractors, suppression of which would be expected to benefit task performance. On each trial, a symbolic auditory cue (the spoken phoneme /ba/ or /da/) was presented for 120 ms at 60 dB SPL from both speakers simultaneously (Fig. 1A). Assignment of the cues /ba/ or /da/ to signify attend-right or attend-left trials was counterbalanced across participants. Auditory cues were produced using “Open Mary Text-to-Speech System” (Schröder and Trouvain, 2003). The use of auditory cues is a crucial design feature, because typical visual arrow cues may introduce confounds. Arrow cues have been demonstrated to induce exogenous visuospatial attention effects attributable to the over-learned association between an arrow and a spatial location, even when the task is entirely nonspatial (Ivanoff and Saoud, 2009). Additionally, auditory cues have been successfully used previously to induce alpha attention effects (Fu et al., 2001).

After the S1–S2 (cue–target) interval of 1000 ms, auditory S2s were delivered in the form of band-delimited noise bursts of three frequency ranges (300–1400, 600–1800, and 900–2700 Hz) for 125 ms at 60 dB SPL (Fig. 1A). Auditory S2s occurred from the left, right, or both speakers with equal probability (33.3%). For bilateral auditory S2s, noise bursts within two different frequency ranges were presented to each side to facilitate spatial segregation of the two sounds. Participants responded by depressing the left button of a computer mouse with their right index finger on detection of an S2 containing a gap at the cued location (target) and withheld responses otherwise. In the auditory task, the probabilities for stimuli at the cued location were as follows: noise with a gap, 0.20; noise without a gap, 0.80. At the uncued location in the auditory task, the probabilities were as follows: noise with a gap, 0.25; noise without a gap, 0.25; no auditory stimulus, 0.50 (Fig. 1C,D). It is important to note that a noise with a gap at the uncued location did not constitute a target and did not call for a response. A stimulus with a gap could be presented on both sides, but participants were instructed to only attend to the cued location. Bilateral trials were included to examine suppression of stimuli at the uncued location. Participants were instructed to use the cue information and maintain central fixation.

The duration of the gap in the auditory noise-burst S2s was determined psychophysically on an individual subject basis before beginning the experiment. There were 50 possible gap durations ranging from 1 to 50 ms. A beginning level of 79.4% target detection rate was titrated for each subject using the up–down transform response method (UDTR) (Wetherill and Levitt, 1965). Because of an inability to meet the target performance during the UDTR procedure, one participant was placed at the highest gap-detection level (50 ms) for the auditory experimental blocks. Participants performed the auditory task UDTR, followed by the auditory task, a break, and then the visual UDTR and task. Participants were given a cumulative account of their performance at regular intervals throughout the procedure. Approximately 600 trials per task (auditory and visual) were collected for each participant during the EEG portion of the experiment.
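The 79.4% target follows from the logic of transformed up-down staircases: if the level is made harder only after n consecutive detections, the track settles where p^n = 0.5, and n = 3 gives p ≈ 0.794. The sketch below illustrates this under assumptions that the text does not state: a three-down/one-up rule, a step of one gap level, and a hypothetical observer function `p_detect`. It is an illustration of the general method, not the procedure actually run in the experiment.

```python
import random


def convergence_point(n_down: int) -> float:
    """Detection probability at which an n-down/1-up staircase stabilizes.

    The level gets harder after n consecutive detections (probability p**n),
    so the track settles where p**n == 0.5.
    """
    return 0.5 ** (1.0 / n_down)


def run_staircase(p_detect, start_level, n_trials, n_down=3,
                  min_level=1, max_level=50, seed=0):
    """Simulate an n-down/1-up track over gap levels (here 1 to 50 ms).

    p_detect is a hypothetical observer: it maps a gap level to the
    probability of detecting the gap. Returns the level used on each trial.
    """
    rng = random.Random(seed)
    level, streak, history = start_level, 0, []
    for _ in range(n_trials):
        history.append(level)
        if rng.random() < p_detect(level):
            streak += 1
            if streak == n_down:               # n detections in a row: harder
                level = max(min_level, level - 1)
                streak = 0
        else:                                  # any miss: easier
            level = min(max_level, level + 1)
            streak = 0
    return history


print(round(convergence_point(3) * 100, 1))    # percent detection tracked by 3-down/1-up
```

A participant who cannot reach the target rate even at the longest gap would sit at the upper bound of such a track, which matches the handling of the one participant placed at 50 ms.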

The visual task was designed to be as similar to the auditory task as possible, except that the S2s were visual (the cues remained auditory). The S2s were gray-and-white Gabor patches on a black background that appeared at the farthest left and/or right peripheral extent of the monitor (centered at 9.15° from midline) to maintain as comparable a location to the auditory S2s as was feasible (Fig. 1). These stimuli were constructed using a 12.9 cycles/° sinusoidal wave with values ranging from black [monitor red–green–blue (RGB) = 0,0,0] to 98.82% white (RGB = 252,252,252) enveloped with a 2-D Gaussian with a width at half-maximum of 1.19° visual angle. The sinusoid was oriented at 45° relative to horizontal. The target was a ring ranging in brightness from 1.27% gray (RGB = 3,3,3) to 100% white (RGB = 255,255,255) by 80 discrete levels. The ring had an inside diameter of 0.45° visual angle and an outside diameter of 0.67° visual angle. The target ring and Gabor mask were combined using a screen overlay.

For the visual task, participants were instructed to press the left mouse button when they detected a bright ring in the center of the Gabor at the cued location and to withhold responses otherwise. Participants also performed a UDTR session before the visual condition to determine the target ring brightness level for a 79.4% detection rate. The UDTR method was used to bring participants to equivalent levels of stimulus detection before the main experimental sessions began. Thereafter, we did not adjust the stimulus levels throughout the experiment because the intention was to compare electrophysiological responses with stimuli containing the same properties. Four participants were unable to attain the predetermined target performance level during the UDTR procedure. These participants were therefore placed at the maximal target contrast level (i.e., white against black) during the experimental blocks. All participants were provided feedback on their cumulative signal detection for this task in the same manner as in the auditory task. The stimulus probabilities for cued and uncued locations were exactly the same in the visual task as in the auditory task (Fig. 1C,D).

EEG recordings and analysis

Continuous EEG was acquired through the BioSemi ActiveTwo electrode system from 168 Ag-Cl electrodes, digitized at 512 Hz. With the BioSemi system, every electrode or combination of electrodes can be assigned as a reference, which is done purely in software after acquisition. BioSemi replaces the ground electrodes that are used in conventional systems with two separate electrodes: common mode sense and driven right leg passive electrode. These two electrodes create a feedback loop, thus rendering them as references. EEG data were processed using the FieldTrip toolbox (Donders Institute for Brain, Cognition, and Behavior, Radboud University Nijmegen, Nijmegen, The Netherlands; http://www.ru.nl/neuroimaging/fieldtrip) for MATLAB (MathWorks).

Raw data were re-referenced to the nasion for analysis after acquisition. Data were epoched (200 ms before S1 onset to 1000 ms after S1 onset) and then averaged offline. Three hundred trials for each cue direction in each sensory modality were collected for each subject. We defined baseline as the mean voltage over 200 to 50 ms preceding the onset of the stimulus. Trials with eye blinks and trials in which the angle was greater than 3° from central fixation were rejected on the basis of eye-tracking data. Trials for which four or more electrodes had voltage values spanning a range of >120 μV were rejected, to exclude periods of high muscle activity and other noise transients.
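The amplitude-based rejection rule above (discard a trial when four or more electrodes span more than 120 μV) can be sketched as follows. The array layout and the function name `trial_keep_mask` are assumptions made for illustration; the original analysis was run in FieldTrip, and this is not its code.

```python
import numpy as np


def trial_keep_mask(epochs_uv, range_uv=120.0, min_bad_electrodes=4):
    """Return a boolean mask of trials to keep.

    epochs_uv: array of shape (n_trials, n_electrodes, n_samples) in microvolts
    (layout assumed for this sketch). A trial is rejected when at least
    `min_bad_electrodes` electrodes have a max-minus-min range above `range_uv`.
    """
    ranges = epochs_uv.max(axis=-1) - epochs_uv.min(axis=-1)  # per trial, per electrode
    n_bad = (ranges > range_uv).sum(axis=-1)                  # noisy electrodes per trial
    return n_bad < min_bad_electrodes
```

Eye-tracking-based rejection (blinks, or gaze more than 3° from fixation) would be applied as a separate mask and combined with this one.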

Analysis strategy

Behavioral data

A repeated-measures ANOVA was used to examine performance (correct positive responses divided by total targets presented) for visual and auditory tasks (modality × cue direction). A repeated-measures ANOVA was also performed on reaction time data for visual and auditory tasks (modality × cue direction). The two participants whose behavioral data for the visual task were corrupted were not included in these repeated-measures ANOVA analyses.

Electrophysiological data

The temporal spectral evolution (TSE) method was used to focus analysis on alpha-band activity. To derive TSE waveforms, the data were first bandpass filtered between 8 and 15 Hz (fourth-order digital Butterworth, zero-phase). Then, the instantaneous amplitude of the complex-valued analytic signal was derived by the Hilbert transform (Le Van Quyen et al., 2001). Frequency and temporal resolution are determined wholly by the filtering step and are not altered by the Hilbert transformation. This procedure results in all positive-valued data. Because we were interested in the average change of alpha-band power after the presentation of the cue information, we re-baselined the data before averaging across trials. Thus, any change from zero—the baseline—would indicate a change in alpha power. Use of a narrow bandpass filter introduces an artifact near the edge of the epoch, so our baseline window for this procedure was reset to −100 to 0 ms to avoid including the edge artifact in the baseline calculation. Thus, voltage values in the epoch represent absolute change in alpha-band power from baseline levels. Data were ultimately grand-averaged across subjects for purposes of illustration. Our analyses then proceeded in two stages, assessing two separable aspects of the alpha-band response. (1) The aim in the first stage was to assess our primary hypothesis as to whether previously characterized, spatially specific, alpha effects would be common to both audiospatial and visuospatial conditions. (2) The second stage aimed to assess whether general attentional deployment processes in the alpha band (i.e., when left vs right space was not considered as a factor) would be common or separable between sensory modalities. An alpha criterion of p < 0.05 was used.
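The TSE derivation described above (bandpass filter, Hilbert envelope, re-baselining, then averaging across trials) can be sketched in Python with SciPy. This is a minimal illustration, not the FieldTrip pipeline used in the study. Assumptions: single-electrode epochs stored as a trials × samples array, and `order=4` passed as the Butterworth design order (the text does not say whether "fourth-order" refers to the design order or to the effective order after zero-phase filtering).

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt, hilbert

FS = 512.0  # sampling rate in Hz, as given for the recording


def tse(epochs, times, band=(8.0, 15.0), baseline=(-0.1, 0.0), order=4):
    """Temporal spectral evolution for one electrode.

    epochs: (n_trials, n_samples) voltages; times: (n_samples,) seconds re S1.
    Returns the trial-averaged, baseline-corrected alpha envelope.
    """
    # Zero-phase Butterworth bandpass (forward-backward filtering).
    sos = butter(order, band, btype="bandpass", fs=FS, output="sos")
    filtered = sosfiltfilt(sos, epochs, axis=-1)
    # Instantaneous amplitude of the analytic signal (always non-negative).
    amplitude = np.abs(hilbert(filtered, axis=-1))
    # Re-baseline each trial to the -100 to 0 ms window before averaging.
    mask = (times >= baseline[0]) & (times < baseline[1])
    amplitude -= amplitude[:, mask].mean(axis=-1, keepdims=True)
    return amplitude.mean(axis=0)
```

Because the envelope is rectified before averaging, activity that is not phase-locked to the cue survives the trial average, which is the reason for preferring this approach over averaging the raw voltages.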

Stage 1 analysis: assessing spatially specific alpha suppression effects across modalities.

Repeated-measures ANOVAs were used to analyze main effects and interactions of cue direction (left or right) and region of interest (ROI) (left or right hemiscalp) on alpha-power amplitudes. These ANOVAs were performed for each task (auditory and visual) and for each of six time windows, for a total of 12 statistical tests. The regions of interest chosen for testing were based on scalp topographies in existing studies (Worden et al., 2000; Kelly et al., 2008) with electrode clusters over left and right parieto-occipital scalp. These locations are outlined in Figure 4. The time windows of 400–500, 500–600, 600–700, 700–800, 800–900, and 900–940 ms were selected for analysis based on the observed time courses of differential anticipatory alpha-band shifts in previous studies (Worden et al., 2000; Fu et al., 2001). The last 60 ms of the anticipatory period before the S2 were excluded from these analyses to preclude inclusion of oscillatory effects caused by the onset of S2 stimulus processing, because it was anticipatory effects that were of primary interest. An alpha criterion of 0.05 was used and corrected for multiple comparisons when appropriate.
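For a 2 × 2 within-subject design such as cue direction × hemisphere, the interaction F statistic on (1, n − 1) degrees of freedom equals the squared one-sample t statistic of each participant's difference of differences. The sketch below uses that identity to show what the interaction term tests. The function name and argument layout are illustrative assumptions; the inputs are per-participant mean alpha values for one time window, and this is not the statistics software used in the study.

```python
from math import sqrt
from statistics import mean, stdev


def cue_by_hemisphere_interaction(left_roi_cue_left, left_roi_cue_right,
                                  right_roi_cue_left, right_roi_cue_right):
    """Interaction F for a 2x2 repeated-measures design.

    Each argument is a list with one windowed mean per participant.
    Returns (F, (df1, df2)).
    """
    # Cue effect in the left ROI minus cue effect in the right ROI.
    contrast = [(ll - lr) - (rl - rr)
                for ll, lr, rl, rr in zip(left_roi_cue_left, left_roi_cue_right,
                                          right_roi_cue_left, right_roi_cue_right)]
    n = len(contrast)
    t = mean(contrast) / (stdev(contrast) / sqrt(n))
    return t * t, (1, n - 1)
```

With 18 participants this yields F(1,17) and with 16 participants F(1,15), matching the degrees of freedom reported for the auditory and visual tasks.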

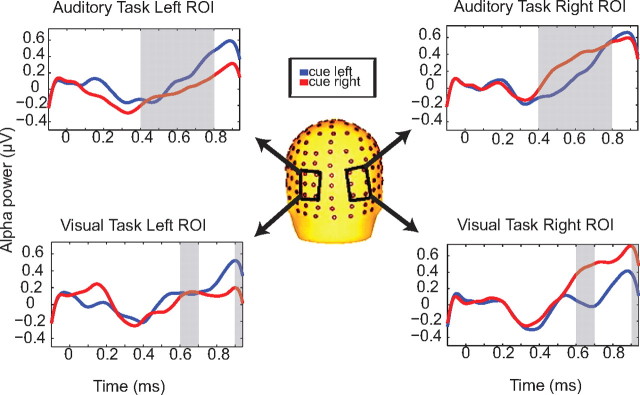

Figure 4.

Alpha power in each ROI dependent on cue direction. Comparing left and right ROIs for each of the auditory and visual tasks, alpha power is greater over the parieto-occipital cortex ipsilateral to the direction of attention for several post-S1 time windows, for both visual and auditory tasks.

Stage 2 analysis: assessing right parieto-occipital alpha-band activity between modalities.

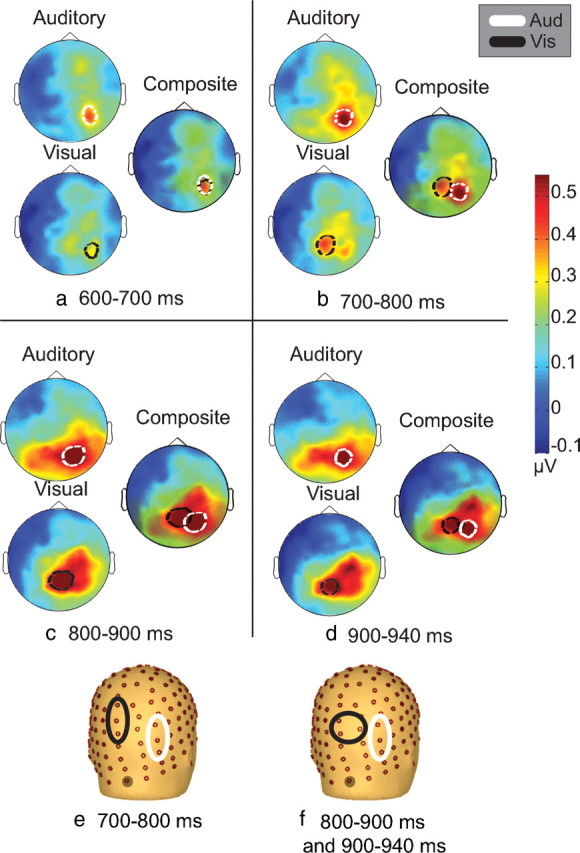

A second stage of analysis aimed to characterize potential topographic differences in terms of general spatial attention deployments as a function of sensory modality (i.e., regardless of which side of space was to be specifically attended). This was done by averaging across cue-right and cue-left conditions within modality, allowing for examination of sensory-specific alpha-band attentional processes that are common across leftward and rightward directed attention for each sensory condition (auditory and visual). The first stage of analysis was “blind” to these common processes because they are subtracted out in the left-versus-right comparison. For this analysis, only the 16 participants who completed both auditory and visual tasks were included. Scalp topographies and latency of alpha power increases between the auditory and visual tasks were statistically assessed using repeated-measures ANOVA with the factors of modality (auditory or visual) and ROI. We again considered the time period preceding the arrival of the anticipated imperative S2 (700–940 ms after S1). In this second stage of analysis, we did not have previous literature to rely on in terms of defining specific scalp regions a priori to select for statistical comparisons, but we had solid reason to expect a strong right parieto-occipital focus for alpha-band activity during the anticipatory period from previous intersensory work (Foxe et al., 1998; Fu et al., 2001) and assumed that this would be evident for both modalities in the current datasets. The main question was whether this right parieto-occipital alpha focus would be identical or different across modalities. We approached this issue by quantitatively assessing the regions containing peak alpha power in each time window (700–800, 800–900, 900–940 ms) within a given sensory modality, picking the three adjacent scalp sites that showed maximal alpha power.
The scalp sites chosen through this method for each task modality are illustrated in Figure 5. When these regions differed between tasks, we then submitted them to the statistical analyses described above. An alpha criterion of 0.05 was used and corrected for multiple comparisons when appropriate. We did not test the 600–700 ms time window because the electrodes at which peak alpha power was observed were completely overlapping for the auditory and visual tasks during this period. Confirmatory analyses were also performed whereby the TSE signals were global field power (GFP) normalized using the freely available software Cartool (Brunet et al., 2011). Repeated-measures ANOVAs were then conducted on the normalized data using the factors of condition and electrode for three time bins (700–800, 800–900, 900–940 ms after S1). Additionally, paired-samples t tests were performed for these normalized TSE data between the auditory and visual conditions using all electrodes and all time points, corrected for multiple comparisons.
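The purpose of GFP normalization is to remove overall response strength so that any remaining difference between conditions reflects the shape of the scalp distribution. A minimal sketch follows, using the common definition of GFP as the spatial standard deviation of the average-referenced map at each time point. The array layout and function names are assumptions, and the exact computation performed inside Cartool may differ.

```python
import numpy as np


def gfp(data):
    """Spatial standard deviation across electrodes at each time point.

    data: (n_electrodes, n_times). Returns an array of shape (n_times,).
    """
    centered = data - data.mean(axis=0, keepdims=True)  # average reference
    return np.sqrt((centered ** 2).mean(axis=0))


def gfp_normalize(data):
    """Scale each time point's average-referenced map to unit GFP."""
    centered = data - data.mean(axis=0, keepdims=True)
    g = gfp(data)
    return centered / np.where(g == 0, 1.0, g)          # avoid division by zero
```

After normalization, two maps with the same shape but different amplitudes become identical, so a modality × electrode interaction on the normalized data cannot be explained by one task simply producing more alpha than the other.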

Figure 5.

Scalp topographies of alpha power averaged over cue condition for the auditory and visual tasks. For each time interval from 600 to 940 ms (a–d), topographies are displayed separately for auditory and visual tasks. To illustrate differences in the scalp distribution of alpha power between the sensory conditions, these are accompanied by a composite display of the same auditory and visual scalp topographies and their centers of maximal activity. The bottom row (e, f) displays the electrodes used for the stage 2 analysis. The electrode shaded with a gray circle marks the midline occipital site as a bearing, because the scalp has been rotated to depict mainly right hemisphere electrodes.

Results

Behavioral data

For the auditory task, the mean ± SD gap level (n = 18) determined by UDTR was 28.28 ± 10.60 ms. Mean ± SD performance for the auditory task was 78.68 ± 13.57% for cue-left and 72.14 ± 17.55% for cue-right conditions (Fig. 2a). For the visual task, the mean ± SD target ring brightness level (n = 16) determined by UDTR was 58.83 ± 22.90%. Mean ± SD performance for the visual task was 60.08 ± 23.32% for cue-left and 62.13 ± 26.31% for cue-right conditions (Fig. 2a).

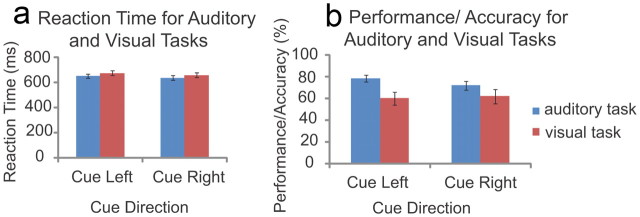

Figure 2.

Performance on the auditory and visual tasks. Accuracy as a function of task and cue direction is illustrated in a, and reaction time as a function of task and cue direction is illustrated in b.

A plot of the horizontal electro-oculogram traces in the cue-left and cue-right conditions time locked to the S1 for auditory and visual tasks is included (supplemental Fig. S-1, available at www.jneurosci.org as supplemental material). Paired-samples t tests of each trace were performed across several conditions. For the auditory task, the conditions included left horizontal electrode (cue left vs cue right) and right horizontal electrode (cue left vs cue right). The visual task analysis included these same pairs. This analysis revealed no significant differences between any of these pairs. Eye tracking was used to ensure appropriate fixation and mean ± SD gaze deviation from fixation across participants in the period between S1 and S2 was 0.5233 ± 0.2446° on the horizontal axis. A plot of the mean gaze deviation from fixation for each participant in auditory and visual tasks between the S1 and S2 was included (supplemental Fig. S-2, available at www.jneurosci.org as supplemental material). A paired-samples t test between auditory and visual tasks revealed no significant difference in gaze deviation between the tasks (t(15) = −0.368, p = 0.718).

The lower performance of some participants for the visual task may be attributed to fatigue in the latter half of the testing session. We opted to retain participants that did not maintain 79.4% performance after behavioral titration with the UDTR procedure because, although these individuals showed performance declines relative to beginning levels, they were nonetheless able to effectively deploy attention as instructed and to discriminate targets. During examination of the d′ (discriminability index) values for each participant, it was clear that all participants performed above chance (d′ > 0), except one participant, who performed below chance only in one hemifield. For the auditory task, the mean ± SD discriminability index was d′ = 1.70 ± 0.73 for cue left and d′ = 1.45 ± 0.58 for cue right. For the visual task, the mean ± SD discriminability index was d′ = 0.87 ± 0.56 for cue left and d′ = 0.87 ± 0.72 for cue right. Individual performance levels for both tasks and each hemifield are tabulated in supplemental Table S-1 (available at www.jneurosci.org as supplemental material). In response to a reviewer's comment, topographies were compared for participants who were at similar levels of performance for auditory and visual tasks to ensure that the pattern of effects remained unchanged. Participants with the lowest quartile of performance in the visual task were removed, and grand averages were produced for these auditory and visual task data (supplemental Fig. S-4, available at www.jneurosci.org as supplemental material). These topographies were very similar to those in the original analysis throughout the periods of interest.
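The discriminability index reported above is conventionally computed as the difference between the z-transformed hit rate and false-alarm rate. The sketch below shows that standard formula using only the Python standard library. How false alarms were tallied in this study and whether any correction was applied to rates of exactly 0 or 1 are not stated in the text, so the function is a generic illustration with a hypothetical name.

```python
from statistics import NormalDist


def d_prime(hit_rate: float, false_alarm_rate: float) -> float:
    """Signal detection sensitivity: d' = z(hit rate) - z(false-alarm rate).

    Both rates must lie strictly between 0 and 1; the inverse normal CDF is
    undefined at the endpoints.
    """
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    return z(hit_rate) - z(false_alarm_rate)
```

A value of d′ = 0 corresponds to equal hit and false-alarm rates, that is, chance discrimination, which is the criterion used above to judge whether participants could discriminate targets.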

Repeated-measures ANOVAs on performance with factors of cue direction (attend right or left) and modality (auditory or visual task) revealed a main effect of modality (F(1,15) = 12.088, p = 0.003). This main effect was driven by better performance in the auditory task (mean ± SD, 75.41 ± 15.81%) than the visual task (mean ± SD, 61.09 ± 24.48%). When the four participants who did not meet target performance for the visual task and the one participant who did not meet target performance for the auditory task were removed, a paired-samples t test showed that auditory task performance (mean, 80.27%) remained significantly better than visual task performance (mean, 64.95%) (t(23) = 3.266, p = 0.003). Analysis of the reaction time data revealed no significant differences between auditory and visual tasks (Fig. 2b).

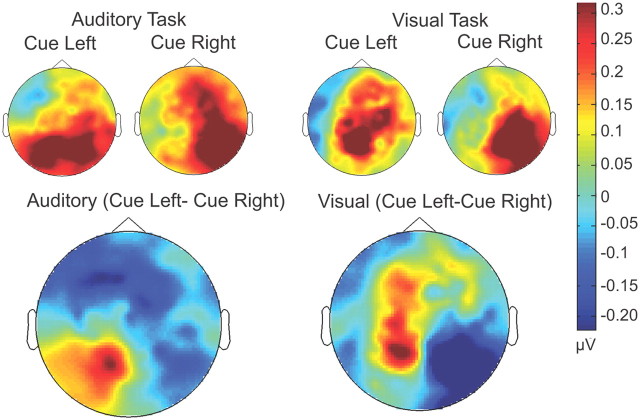

Electrophysiological data

Stage 1: assessing spatially specific alpha suppression effects

The top panel of Figure 3 displays alpha-band TSE topographies as a function of cuing condition (left or right) for auditory and visual tasks (600–940 ms after S1). The bottom panel of Figure 3 shows the maps of the difference between this pair of conditions (i.e., cue-left minus cue-right). Bilateral parieto-occipital alpha foci are evident for both modalities (note that the red and blue coloring in the difference maps is simply a function of the direction of the subtraction). Both alpha foci, in fact, represent increased alpha power relative to baseline. It can be readily appreciated from these difference maps that the topographies of these alpha-lateralization effects are highly similar between modalities.

Figure 3.

Scalp topographic maps of alpha power (from 600 to 940 ms) for cue-left and cue-right conditions, for each of the auditory and visual tasks. In the bottom row, cue right is subtracted from cue left to illustrate the parietal distribution of the cue-specific alpha-power topographies that is seen in both the auditory and visual tasks.

Figure 4 displays TSE waveforms from parieto-occipital scalp sites showing the shifts in alpha power dependent on cue direction that were observed for each of the auditory and visual tasks. For each task, we performed separate repeated-measures ANOVAs comparing left-hemisphere and right-hemisphere regions of interest across the two cue conditions. For the auditory task, we found main effects of hemisphere and cue × hemisphere interactions in every time window from 400 to 800 ms after S1. For the visual task, we found significant cue × hemisphere interactions from 600–700 and 900–940 ms after S1; in the intervening windows (700–900 ms), there were no significant main effects, and the cue × hemisphere interaction only approached significance. Table 1 summarizes these results.

Table 1.

Summary of main effects and interactions for alpha power by cue (left vs right) and hemisphere (left or right parieto-occipital regions of interest) in each time window

| Task | 400–500 ms | 500–600 ms | 600–700 ms | 700–800 ms | 800–900 ms | 900–940 ms |
|---|---|---|---|---|---|---|
| Auditory | Main effect of hemisphere (F(1,17) = 6.957, p = 0.017) | Main effect of hemisphere (F(1,17) = 8.790, p = 0.009) | Main effect of hemisphere (F(1,17) = 12.038, p = 0.003) | Main effect of hemisphere (F(1,17) = 13.550, p = 0.002) | NS | NS |
| | Cue × hemisphere interaction (F(1,17) = 6.747, p = 0.019) | Cue × hemisphere interaction (F(1,17) = 8.964, p = 0.008) | Cue × hemisphere interaction (F(1,17) = 5.812, p = 0.028) | Cue × hemisphere interaction (F(1,17) = 5.443, p = 0.032) | NS | NS |
| Visual | NS | NS | Cue × hemisphere interaction (F(1,15) = 4.892, p = 0.043) | Marginal cue × hemisphere interaction (F(1,15) = 4.197, p = 0.058) | Marginal cue × hemisphere interaction (F(1,15) = 4.165, p = 0.059) | Cue × hemisphere interaction (F(1,15) = 4.848, p = 0.044) |
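To make the logic of the cue × hemisphere interaction concrete, the following minimal sketch shows how such a term can be computed in a 2 × 2 within-subject design. It relies on the standard identity that, with two levels per factor, the interaction F(1, n − 1) equals the squared one-sample t statistic of each participant's double difference. The function name `interaction_f` and all alpha-power values are hypothetical and purely illustrative; this is not the analysis code used in the study, and the numbers are not study data.

```python
import math
import statistics


def interaction_f(cue_left_lh, cue_left_rh, cue_right_lh, cue_right_rh):
    """Cue x hemisphere interaction for a 2 x 2 within-subject design.

    Each argument holds one alpha-power value per participant.
    Returns the F statistic and its degrees of freedom (1, n - 1).
    """
    n = len(cue_left_lh)
    # Per-participant double difference:
    # (LH - RH when cued left) minus (LH - RH when cued right).
    double_diff = [
        (ll - lr) - (rl - rr)
        for ll, lr, rl, rr in zip(cue_left_lh, cue_left_rh, cue_right_lh, cue_right_rh)
    ]
    mean_dd = statistics.mean(double_diff)
    # Standard error of the mean, using the sample standard deviation.
    sem_dd = statistics.stdev(double_diff) / math.sqrt(n)
    t_value = mean_dd / sem_dd
    return t_value ** 2, (1, n - 1)


# Made-up values for three participants (arbitrary units, NOT study data):
# alpha power is higher over the hemisphere ipsilateral to the cued side.
f_value, dof = interaction_f(
    cue_left_lh=[5.0, 6.0, 7.0],
    cue_left_rh=[4.0, 4.5, 5.0],
    cue_right_lh=[4.0, 4.0, 4.5],
    cue_right_rh=[4.0, 4.5, 5.5],
)
print(f"F{dof} = {f_value:.3f}")
```

A large F here indicates that the hemispheric asymmetry in alpha power reverses or changes with cue direction, which is the pattern reported in Table 1.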

Stage 2: assessing right parieto-occipital alpha-band activity across modalities

In the second phase of analysis, potential alpha-band topographical differences between the auditory and visual tasks were explored. Here, the data from left and right conditions were collapsed within modality to analyze differences in deployment of attention for each sensory modality regardless of the specific spatial location to be attended. Figure 5, e and f, shows the regions of interest that were used in this analysis (their selection is described in Materials and Methods). For 700–800 ms after S1, a significant modality × region of interest interaction was observed (F(1,15) = 4.612, p = 0.048), and this interaction was also evident for the 800–900 ms period (F(1,15) = 5.070, p = 0.040). For these two time windows spanning the 700–900 ms post-S1 period, alpha power was greatest over the right parietal cortex for both auditory and visual tasks as predicted, with the focus during the auditory task showing a distinctly more lateral distribution than that seen during visuospatial deployments. For the 900–940 ms post-S1 period, the foci of peak activation for auditory and visual tasks remained distinct, and there was a significant modality × region of interest interaction (F(1,15) = 6.166, p = 0.025). Figure 5 illustrates the differences in topography between the two tasks during these three time windows.

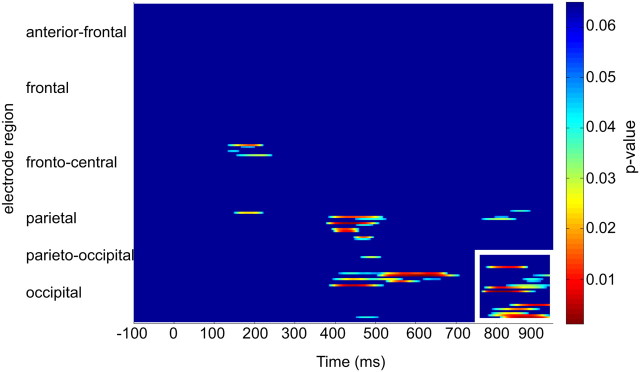

These data were GFP normalized, and repeated-measures ANOVAs were conducted using the factors of condition and electrode for three time bins (700–800, 800–900, 900–940 ms after S1). A significant condition × region interaction was observed for 800–900 ms (F(1,15) = 6.4, p = 0.023) and also for 900–940 ms (F(1,15) = 7.464, p = 0.015). However, no condition × region interaction was observed for the time bin of 700–800 ms after S1. Figure 6 displays the results from the paired t tests across all electrodes and all time points for these data. Significant differences (p < 0.05) were observed over parieto-occipital electrodes between the conditions from 780–940 ms after S1, including the electrodes used in the original analysis (Fig. 5e,f).

Figure 6.

Paired t tests of all electrodes and all time points across auditory and visual conditions (corrected for multiple comparisons). Parieto-occipital electrodes outlined in white show significant (p < 0.05) difference across the two conditions from 780 to 940 ms after S1. Significant differences were also observed for a few parietal electrodes from 400 to 700 ms, but distinct topographical shifts were not observed for this time period.
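The GFP normalization step mentioned above can be illustrated with a short sketch. It assumes one common definition of global field power (GFP), the standard deviation of the map values across electrodes, and scales each map by its own GFP so that differences in overall strength are removed and only differences in topographic shape remain. The function names `gfp` and `gfp_normalize` and the four-electrode maps are hypothetical; the exact normalization procedure used in the study may differ.

```python
import math


def gfp(topography):
    """Global field power: spatial standard deviation across electrodes."""
    n = len(topography)
    mean = sum(topography) / n
    return math.sqrt(sum((v - mean) ** 2 for v in topography) / n)


def gfp_normalize(topography):
    """Divide a map by its GFP so that the result has a GFP of 1."""
    g = gfp(topography)
    if g == 0:
        raise ValueError("flat map: GFP is zero, cannot normalize")
    return [v / g for v in topography]


# Two made-up maps (NOT study data) with the same shape but different strength.
weak_map = [1.0, 2.0, 3.0, 4.0]
strong_map = [2.0, 4.0, 6.0, 8.0]

weak_norm = gfp_normalize(weak_map)
strong_norm = gfp_normalize(strong_map)

# After normalization the two maps coincide, so any remaining
# condition x electrode interaction must reflect a change in topography.
same_shape = all(math.isclose(a, b) for a, b in zip(weak_norm, strong_norm))
print(same_shape)
```

Under this assumption, a condition × region interaction that survives normalization cannot be explained by one task simply producing stronger alpha power than the other.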

Discussion

We set out to assess the contribution of shared versus sensory-specific mechanisms in the deployment of spatial attention, by comparing alpha-band oscillatory activity under conditions of audiospatial and visuospatial attention. This measure of preparatory spatial attention has been very well characterized in the visual domain and provides a reliable measure of top-down control of spatial attention processes. Auditory directional cues were explicitly used in both cases to avoid the previously mentioned confounds around visually presented arrows. Using tightly matched stimulus setups across sensory modalities, the current data clearly demonstrate a central role for parieto-occipital alpha-band oscillatory mechanisms in the directing of both audiospatial and visuospatial attention. In this regard, these results point to a supramodal role for alpha-band oscillatory mechanisms. Specifically, the deployment of spatial attention toward anticipated auditory or visual events resulted in lateralized increases in alpha power over parieto-occipital cortex contralateral to unattended space (ipsilateral to the cued location). Topographic mapping of these lateralized alpha effects (i.e., maps of the cue-left minus cue-right difference) across the late pre-S2 anticipatory period revealed very similar distributions for the attend-auditory and attend-visual conditions (Fig. 3). It is clear from these data that alpha-band oscillations play a key role in the deployment of spatial attention within both the auditory and visual systems. It is also clear that structures within the right parietal lobe produce the majority of this phasic alpha-band activity during both sensory tasks, consistent with lesion data showing cross-sensory attentional deficits when these same structures are compromised (Brozzoli et al., 2006).

In a second stage of analysis, the data were collapsed across spatial-cueing conditions to examine potential between-modality differences, independent of spatial considerations. Using this approach, additional support for a supramodal spatial attention system was seen in the 600–700 ms post-S1 time window. A highly focused right parieto-occipital alpha distribution was observed, and this map was extremely similar between modalities, suggesting common processes for spatial attention during this epoch. Thereafter, however, clear topographical differences in alpha power emerged between the auditory and visual tasks, pointing to additional sensory-specific spatial mechanisms. In this late anticipatory period before onset of the imperative S2 (800–940 ms), alpha-power increases were evident over quite distinct regions of right parietal cortex depending on the sensory modality of the task, suggesting contributions from sensory-specific mechanisms. Despite these clear dissociations of topography during the 800–940 ms post-S1 period, it was also notable that the auditory and visual alpha foci were nested in very close adjacency to each other. This topographic proximity suggests that there may be sensory-selective subfields in tightly neighboring regions, perhaps within a complex of closely related parietal regions.

Parietal role in spatial-selective attention and supramodal deployment mechanisms

Structures within the right parietal lobe are heavily implicated in the deployment of visuospatial attention, with damage to these structures often resulting in severe deficits in the ability to deploy spatial attention (Heilman and Van Den Abell, 1980; Vallar and Perani, 1987; Farah et al., 1989; Foxe et al., 2003). One paradigmatic finding in parietal lesion patients is impairment in the ability to disengage attention from an ipsilesionally presented visual stimulus (i.e., a right hemifield input) to attend to a subsequently presented contralesional stimulus. In a seminal study, Farah et al. (1989), rather than using typical visual–visual stimulus pairings, investigated the effect of cross-sensory pairings on the attentional disengagement abilities of parietal lesion patients. Specifically, they tested whether an ipsilesional auditory stimulus would capture and hold spatial attention, thereby interfering with subsequent processing of a contralesional visual target. They reasoned that, if auditory cues impacted the subsequent deployment of spatial attention to a visual stimulus, this would provide support for a supramodal role for right parietal areas in controlling spatial attention. Indeed, this is precisely what was found, with patients showing impairment in their ability to disengage attention regardless of the sensory modality of the initial ipsilesional cue. Consistent with Farah's supramodal model, visuospatial neglect resulting from parietal lesions often extends across sensory modalities (Vallar, 1997; Brozzoli et al., 2006). Additional evidence for a supramodal system derives from consistent findings that directing attention to a location within a given sensory modality results in enhanced processing, not only for stimuli presented in the attended sensory modality but also for stimuli presented at that location in task-irrelevant sensory modalities (Teder-Sälejärvi et al., 1999; McDonald et al., 2000; Eimer and Driver, 2001). 
The thesis is further bolstered by imaging studies showing similar frontoparietal attentional networks engaged whether attention is directed within visual, auditory, or tactile space (Macaluso et al., 2003; Shomstein and Yantis, 2006; Krumbholz et al., 2009; Smith et al., 2010).

TMS and electrophysiology studies also provide some support for supramodal representations in the parietal lobe. For example, Chambers et al. (2007) used TMS over parietal cortex during somatosensory spatial and visuospatial tasks. Spatially aligned visual or somatosensory cues directed participants to attend to visual or somatosensory targets in the left or right hemifield. By delivering single-pulse TMS over the right angular and supramarginal gyri of the inferior parietal lobe, they interfered with reflexive attentional shifts to both somatosensory and visual stimuli, when somatosensory cues were used (Chambers et al., 2007). Complicating a straightforward supramodal interpretation, however, similar interference was not seen for visual cues, possibly indicating dominance of visual over other types of information in this node of the spatial orienting system. Of particular relevance to the current work, Kerlin et al. (2010) examined alpha-band activity in a cued auditory spatial task. Arrows instructed participants to attend to spoken sentences on one side while ignoring sentences presented on the other side. Examining alpha power during the S2, they found lateralized effects over parieto-occipital cortex that reflected the hemifield of auditory-selective attention. The important point here is that the topographies of these spatially specific alpha effects appeared highly similar to those reported previously for visuospatial attention tasks (Worden et al., 2000; Kelly et al., 2006, 2010). It needs to be pointed out, however, that only auditory attention was examined and so no direct comparisons between modalities were possible.

Sensory-specific deployment mechanisms

Despite substantial evidence supporting a supramodal account, there is an increasing accumulation of data suggesting a more complex story. For one, in the aforementioned studies in which spatial attention was seen to spread from the attended modality to colocated task-irrelevant stimuli in another modality, the enhancement seen for the task-irrelevant stimuli was typically smaller than if those stimuli had in fact been primarily attended (Eimer et al., 2002). This implies some top-down differentiation of the relevant sensory modality. Also consistent with additional sensory-specific mechanisms, imaging studies have not always demonstrated complete overlap of the frontoparietal control network (Krumbholz et al., 2009). For example, Wu et al. (2007) found that, although generally similar frontoparietal regions were activated during orientation of both visual and auditory attention, there were also sensory-specific activation differences in a subset of parietal and frontal regions. It is also noteworthy that some, although not all, of the imaging studies that have found substantially overlapped networks have used visually presented arrows to cue location (Smith et al., 2010), which is a limitation as mentioned previously.

Findings from the current study suggest that supramodal spatial attention processes are engaged during 600–940 ms after S1, as evidenced by similar lateralized shifts in alpha power over parietal electrodes in this time window (Fig. 3). However, in stage 2 of the present analysis, collapsing across spatial cues revealed sensory-specific increases in alpha power over topographically distinct regions directly preceding the S2 (Fig. 5c,d). This is consistent with the known functional anatomy of attentional control regions within the intraparietal sulcus (IPS) of nonhuman primates (Grefkes and Fink, 2005). Also, although compromise of spatial attention is often found across sensory modalities in neglect patients, it is also the case that more careful psychophysical testing has shown clear distinctions in the extent of involvement across modalities (Sinnett et al., 2007). It seems reasonable to propose that the relatively gross lesions that are common in neglect patients would likely involve a large tract of parietal cortex that might compromise a number of sensory-selective regions but that this would not always be the case when lesions are more punctate. In this latter case, dissociations between sensory systems would be more likely, and this is what has been seen. It is also intriguing that the alpha focus we observed over parietal cortex during auditory deployments in the current study was more lateral than the visual focus, suggesting perhaps that parietal regions closer to the temporal lobe control attention for functions in that lobe. In support, anatomic tracer studies in macaques have shown greater connectivity between ventral intraparietal cortex and auditory cortical regions than was seen for more dorsal IPS regions (Lewis and Van Essen, 2000). Additionally, PET and fMRI studies on sound localization have shown activation in right parietal cortex regions, including the right inferior parietal lobule (Zatorre et al., 2002; Brunetti et al., 2008).

Study limitations and considerations

An important consideration in attention cueing studies such as this concerns potential effects of the sensory modality within which the instructional cue is delivered (Foxe and Simpson, 2005). Here, we used exclusively auditory symbolic cues, explicitly because of concerns about the use of visual arrows. However, this raises the possibility that using auditory cues to predict upcoming visual imperative S2s may not be exactly equated with the use of the same auditory cues to predict an upcoming auditory target. In the former scenario, in addition to deploying spatial attention, the participant must also switch sensory modality between the cue and target. In the latter case, no such cross-modal switch is necessary. Studies by Harvey (1980) and Turatto et al. (2002, 2004) have convincingly shown that this issue of cross-modal switching has real impact on performance in terms of reaction times. For example, Turatto et al. (2004) presented within-modality and across-modality stimulus pairs, using variable interstimulus intervals (ISIs) between both stimuli. The first stimulus of the pair was always irrelevant to the task, whereas subjects were required to make a speeded discrimination response to the occurrence of the second stimulus. Although the first stimulus of the pair was completely irrelevant, reaction times to the second stimulus were significantly slower when the sensory modality switched relative to instances in which the S1 and S2 were of the same modality. The results make it clear that some attentional resources must have been automatically captured by the modality of the S1. In a second experiment, the modality of the S2 never switched such that participants were in no doubt as to which modality they should respond to. Even so, the totally irrelevant S1 resulted in relatively slowed reaction times to the cross-modal S2. 
These results have obvious implications for the present study in which the auditory spatial task required no cross-modal switch, but the visuospatial task did. However, we believe that this is not a significant issue here because, in all of these studies, the automatic sensory capture effect was found to have a relatively short-lived epoch. Although these cross-modal effects were found to be robust at ISIs of 150 ms, they were highly attenuated for 600 ms ISIs and nonexistent for 1 s delays (Turatto et al., 2004). In the current study, the cue-target interval used was fully 1 s, and so it is highly unlikely that cross-modal switch effects can have impacted the alpha processes of interest here, which were all observed during the late delay-period.

One inherent compromise of the current design pertains to the sequencing of the auditory and visual tasks. That is, to avoid providing any visuospatial cues before assessing “pure” auditory spatial attention, the auditory task was always run before the visual task. It is likely that it was this sequencing that led to the observed waning of performance of the visual task relative to the auditory task, despite the fact that both tasks were initially titrated to have equivalent performance levels at the individual subject level. Unlike the auditory task, performance of the visual task declined after initial titration, and it seems likely that this occurred because the visual task was always conducted in the second half of the recording session as subjects began to fatigue. It is important, however, to note that, although this fatigue effect was a necessary consequence of design considerations, we believe that it is highly unlikely that these modest performance differences could have impacted the frank shifts in the neural circuits that were observed here between tasks.

Another potential issue with the current study pertains to the slight spatial offset of the auditory S2 stimuli during the auditory task (14°) relative to the S2 stimuli in the follow-up visual task (9.2°), which was necessitated because the speakers flanked the screen. It could be argued that topographical differences uncovered in this study might in part reflect this spatial disparity. We consider this extremely unlikely for a number of reasons. First, auditory spatial localization abilities for the brief broadband noise bursts used here are relatively crude, and the SD of absolute sound localization also grows with increasing eccentricity (Recanzone et al., 1998). In a careful study in macaques, localization thresholds for noise bursts ranged from 3.2 to 4.1° (Recanzone et al., 2000). The disparity here was 4.85°, which falls very close to this threshold. Even had participants been presented with the visual and auditory targets during the same task, we strongly suspect that it would have been very difficult for them to resolve this small spatial difference. The second key point is that, when we compared the alpha topographies for cue-left versus cue-right conditions between modalities (the spatially specific aspect of the data), no differences were observed, although this is the aspect of the data that would be expected to modulate as a function of spatial differences.

Alternatively, even if we take the position that participants could indeed precisely resolve these spatial differences between modalities and across tasks, the effects still do not accord with a spatial disparity account. That is, topographical mapping of visual cortex in primates has shown that there is dramatically decreased cortical representation in early retinotopic cortices as the eccentricity of stimulation increases (Tootell et al., 1988), the so-called cortical magnification factor. For eccentricities between 10 and 14°, our difference of 4.85° would correspond to an ∼5–6 mm shift along retinotopic cortex (Virsu and Rovamo, 1979), a shift that is not consistent with the frank changes in topography over unilateral parietal scalp that we observe here. Finally, in the current study, distracter space was very broadly defined, because the left half of space was merely pitted against the right. That is, participants would not have to respond to stimuli occurring anywhere near the uncued hemispace, so fine-resolution representations of distracter space were unnecessary. Therefore, it is more likely that participants used a coarse representation of the to-be-ignored space (i.e., “left” or “right”).

Conclusion

These results show that auditory spatial attention recruits similar alpha-band oscillatory mechanisms to visuospatial attention, as evidenced by lateralized shifts in alpha activity over parieto-occipital cortex contralateral to unattended space. However, clear topographic differences between auditory and visual tasks also suggest that sensory-specific mechanisms are recruited, providing support for the interactivity thesis. These results are in accord with EEG and fMRI studies (Eimer et al., 2002; Santangelo et al., 2010) that suggest a hybrid role for the parietal cortex, in which supramodal spatial attention mechanisms act in concert with sensory-specific processes.

Footnotes

This work was primarily supported by National Science Foundation Grant BCS0642584 (J.J.F.). A.C.S. is supported by a Ruth L. Kirschstein National Research Service Award predoctoral fellowship from National Institute of Mental Health Grant MH087077. S.B. is supported by a City University of New York Enhanced Chancellor's Fellowship, through the Program in Cognitive Neuroscience at City College. Additional support for the work of J.J.F. and S.M. came from National Institute of Mental Health Grant MH085322. We thank Dr. Hans-Peter Frey for technical assistance with eye tracking.

References

- Brozzoli C, Demattè ML, Pavani F, Frassinetti F, Farnè A. Neglect and extinction: within and between sensory modalities. Restor Neurol Neurosci. 2006;24:217–232. [PubMed] [Google Scholar]

- Brunet D, Murray MM, Michel CM. Spatiotemporal analysis of multichannel EEG: Cartool. Comput Intell Neurosci. 2011;2011:813870. doi: 10.1155/2011/813870. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Brunetti M, Della Penna S, Ferretti A, Del Gratta C, Cianflone F, Belardinelli P, Caulo M, Pizzella V, Olivetti Belardinelli M, Romani GL. A frontoparietal network for spatial attention reorienting in the auditory domain: a human fMRI/MEG study of functional and temporal dynamics. Cereb Cortex. 2008;18:1139–1147. doi: 10.1093/cercor/bhm145. [DOI] [PubMed] [Google Scholar]

- Capotosto P, Babiloni C, Romani GL, Corbetta M. Frontoparietal cortex controls spatial attention through modulation of anticipatory alpha rhythms. J Neurosci. 2009;29:5863–5872. doi: 10.1523/JNEUROSCI.0539-09.2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chambers CD, Payne JM, Mattingley JB. Parietal disruption impairs reflexive spatial attention within and between sensory modalities. Neuropsychologia. 2007;45:1715–1724. doi: 10.1016/j.neuropsychologia.2007.01.001. [DOI] [PubMed] [Google Scholar]

- Dockree PM, Kelly SP, Foxe JJ, Reilly RB, Robertson IH. Optimal sustained attention is linked to the spectral content of background EEG activity: greater ongoing tonic alpha (approximately 10 Hz) power supports successful phasic goal activation. Eur J Neurosci. 2007;25:900–907. doi: 10.1111/j.1460-9568.2007.05324.x. [DOI] [PubMed] [Google Scholar]

- Eimer M, Driver J. Cross-modal links in endogenous and exogenous spatial attention: evidence from event-related brain potential studies. Neurosci Biobehav Rev. 2001;25:497–511. doi: 10.1016/s0149-7634(01)00029-x. [DOI] [PubMed] [Google Scholar]

- Eimer M, van Velzen J, Driver J. Cross-modal interactions between audition, touch, and vision in endogenous spatial attention: ERP evidence on preparatory states and sensory modulations. J Cogn Neurosci. 2002;14:254–271. doi: 10.1162/089892902317236885. [DOI] [PubMed] [Google Scholar]

- Eimer M, van Velzen J, Forster B, Driver J. Shifts of attention in light and in darkness: an ERP study of supramodal attentional control and crossmodal links in spatial attention. Cogn Brain Res. 2003;15:308–323. doi: 10.1016/s0926-6410(02)00203-3. [DOI] [PubMed] [Google Scholar]

- Farah MJ, Wong AB, Monheit MA, Morrow LA. Parietal lobe mechanisms of spatial attention: modality specific or supramodal? Neuropsychologia. 1989;27:461–470. doi: 10.1016/0028-3932(89)90051-1. [DOI] [PubMed] [Google Scholar]

- Foxe JJ, Simpson GV. Biasing the brain's attentional set. II. Effects of selective intersensory attentional deployments on subsequent sensory processing. Exp Brain Res. 2005;166:393–401. doi: 10.1007/s00221-005-2379-6. [DOI] [PubMed] [Google Scholar]

- Foxe JJ, Simpson GV, Ahlfors SP. Parieto-occipital ∼10 Hz activity reflects anticipatory state of visual attention mechanisms. NeuroReport. 1998;9:3929–3933. doi: 10.1097/00001756-199812010-00030. [DOI] [PubMed] [Google Scholar]

- Foxe JJ, McCourt ME, Javitt DC. Right hemisphere control of visuospatial attention: line-bisection judgments evaluated with high-density electrical mapping and source analysis. Neuroimage. 2003;19:710–726. doi: 10.1016/s1053-8119(03)00057-0. [DOI] [PubMed] [Google Scholar]

- Fu KM, Foxe JJ, Murray MM, Higgins BA, Javitt DC, Schroeder CE. Attention-dependent supression of distractor visual input can be cross-modally cued as indexed by anticipatory parieto-occipital alpha-band oscillations. Cogn Brain Res. 2001;12:145–152. doi: 10.1016/s0926-6410(01)00034-9. [DOI] [PubMed] [Google Scholar]

- Grefkes C, Fink GR. The functional organization of the intraparietal sulcus in humans and monkeys. J Anat. 2005;207:3–17. doi: 10.1111/j.1469-7580.2005.00426.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gomez-Ramirez M, Higgins BA, Rycroft JA, Owen GN, Mahoney J, Shpaner M, Foxe JJ. The deployment of intersensory selective attention: a high-density electrical mapping study of the effects of theanine. Clin Neuropharmacol. 2007;30:25–38. doi: 10.1097/01.WNF.0000240940.13876.17. [DOI] [PubMed] [Google Scholar]

- Gomez-Ramirez M, Kelly SP, Montesi JL, Foxe JJ. The effects of l-theanine on alpha-band oscillatory brain activity during a visuo-spatial attention task. Brain Topogr. 2009;22:44–51. doi: 10.1007/s10548-008-0068-z. [DOI] [PubMed] [Google Scholar]

- Harvey N. Non-informative effects of stimuli functioning as cues. Q J Exp Psychol A. 1980;32:413–425. doi: 10.1080/14640748008401835. [DOI] [PubMed] [Google Scholar]

- Heilman KM, Van Den Abell T. Right hemisphere dominance for attention: the mechanism underlying hemispheric asymmetries of inattention (neglect) Neurology. 1980;30:327–330. doi: 10.1212/wnl.30.3.327. [DOI] [PubMed] [Google Scholar]

- Ivanoff J, Saoud W. Nonattentional effects of nonpredictive central cues. Atten Percept Psychophys. 2009;71:872–880. doi: 10.3758/APP.71.4.872. [DOI] [PubMed] [Google Scholar]

- Kelly SP, Lalor EC, Finucane C, McDarby G, Reilly RB. Visual spatial attention control in an independent brain-computer interface. IEEE Trans Biomed Eng. 2005;52:1588–1596. doi: 10.1109/TBME.2005.851510. [DOI] [PubMed] [Google Scholar]

- Kelly SP, Lalor EC, Reilly RB, Foxe JJ. Increases in alpha oscillatory power reflect an active retinotopic mechanism for distractor supression during sustained visuospatial attention. J Neurophysiol. 2006;95:3844–3851. doi: 10.1152/jn.01234.2005. [DOI] [PubMed] [Google Scholar]

- Kelly SP, Gomez-Ramirez M, Montesi JL, Foxe JJ. l-Theanine and caffeine in combination affect human cognition as evidenced by oscillatory alpha-band activity and attention task performance. J Nutr. 2008;138:1572S–1577S. doi: 10.1093/jn/138.8.1572S. [DOI] [PubMed] [Google Scholar]

- Kelly SP, Gomez-Ramirez M, Foxe JJ. The strength of anticipatory spatial biasing predicts target discrimination at attended locations: a high-density EEG study. Eur J Neurosci. 2009;30:2224–2234. doi: 10.1111/j.1460-9568.2009.06980.x. [DOI] [PubMed] [Google Scholar]

- Kelly SP, Foxe JJ, Newman G, Edelman JA. Prepare for conflict: EEG correlates of the anticipation of target competition during overt and covert shifts of visual attention. Eur J Neurosci. 2010;31:1690–1700. doi: 10.1111/j.1460-9568.2010.07219.x. [DOI] [PubMed] [Google Scholar]

- Kerlin JR, Shahin AJ, Miller LM. Attentional gain control of ongoing cortical speech representations in a “cocktail party.”. J Neurosci. 2010;30:620–628. doi: 10.1523/JNEUROSCI.3631-09.2010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Krumbholz K, Nobis EA, Weatheritt RJ, Fink GR. Executive control of spatial attention shifts in the auditory compared to the visual modality. Hum Brain Mapp. 2009;30:1457–1469. doi: 10.1002/hbm.20615. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Le Van Quyen M, Foucher J, Lachaux J, Rodriguez E, Lutz A, Martinerie J, Varela FJ. Comparison of Hilbert transform and wavelet methods for the analysis of neuronal synchrony. J Neurosci Methods. 2001;111:83–98. doi: 10.1016/s0165-0270(01)00372-7. [DOI] [PubMed] [Google Scholar]

- Lewis JW, Van Essen DC. Corticocortical connections of visual, sensorimotor, and multimodal processing areas in the parietal lobe of the macaque monkey. J Comp Neurol. 2000;428:112–137. doi: 10.1002/1096-9861(20001204)428:1<112::aid-cne8>3.0.co;2-9. [DOI] [PubMed] [Google Scholar]

- Macaluso E, Eimer M, Frith CD, Driver J. Preparatory states in crossmodal spatial attention: spatial specificity and possible control mechanisms. Exp Brain Res. 2003;149:62–74. doi: 10.1007/s00221-002-1335-y. [DOI] [PubMed] [Google Scholar]

- McDonald JJ, Teder-Sälejärvi WA, Hillyard SA. Involuntary orienting to sound improves visual perception. Nature. 2000;407:906–908. doi: 10.1038/35038085. [DOI] [PubMed] [Google Scholar]

- O'Connell RG, Dockree PM, Robertson IH, Bellgrove MA, Foxe JJ, Kelly SP. Uncovering the neural signature of lapsing attention: electrophysiological signals predict errors up to 20 s before they occur. J Neurosci. 2009;29:8604–8611. doi: 10.1523/JNEUROSCI.5967-08.2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Posner MI, Snyder CR, Davidson BJ. Attention and detection of signals. J Exp Psychol. 1980;109:160–174. [PubMed] [Google Scholar]

- Recanzone GH, Makhamra SD, Guard DC. Comparison of relative and absolute sound localization ability in humans. J Acoust Soc Am. 1998;103:1085–1097. doi: 10.1121/1.421222. [DOI] [PubMed] [Google Scholar]

- Recanzone GH, Guard DC, Phan ML, Su TK. Correlation between activity of single auditory cortical neurons and sound localization behavior in the macaque monkey. J Neurophysiol. 2000;83:2723–2739. doi: 10.1152/jn.2000.83.5.2723. [DOI] [PubMed] [Google Scholar]

- Rihs TA, Michel CM, Thut G. Mechanisms of selective inhibition in visual spatial attention are indexed by alpha-band EEG synchronization. Eur J Neurosci. 2007;25:603–610. doi: 10.1111/j.1460-9568.2007.05278.x. [DOI] [PubMed] [Google Scholar]

- Rihs TA, Michel CM, Thut G. A bias for posterior alpha-band power suppression versus enhancement during shifting versus maintenance of spatial attention. Neuroimage. 2009;44:190–199. doi: 10.1016/j.neuroimage.2008.08.022. [DOI] [PubMed] [Google Scholar]

- Romei V, Gross J, Thut G. On the role of prestimulus alpha rhythms over occipito-parietal areas in visual input regulation: correlation or causation? J Neurosci. 2010;30:8692–8697. doi: 10.1523/JNEUROSCI.0160-10.2010.

- Santangelo V, Fagioli S, Macaluso E. The costs of monitoring simultaneously two sensory modalities decrease when dividing attention in space. Neuroimage. 2010;49:2717–2727. doi: 10.1016/j.neuroimage.2009.10.061.

- Schröder M, Trouvain J. The German text-to-speech synthesis system MARY: a tool for research, development, and teaching. Int J Speech Technol. 2003;6:365–377.

- Shomstein S, Yantis S. Parietal cortex mediates voluntary control of spatial and nonspatial auditory attention. J Neurosci. 2006;26:435–439. doi: 10.1523/JNEUROSCI.4408-05.2006.

- Sinnett S, Juncadella M, Rafal R, Azañón E, Soto-Faraco S. A dissociation between visual and auditory hemi-inattention: evidence from temporal order judgements. Neuropsychologia. 2007;45:552–560. doi: 10.1016/j.neuropsychologia.2006.03.006.

- Smith DV, Davis B, Niu K, Healy EW, Bonilha L, Fridriksson J, Morgan PS, Rorden C. Spatial attention evokes similar activation patterns for visual and auditory stimuli. J Cogn Neurosci. 2010;22:347–361. doi: 10.1162/jocn.2009.21241.

- Snyder AC, Foxe JJ. Anticipatory attentional suppression of visual features indexed by oscillatory alpha-band power increases: a high density electrical mapping study. J Neurosci. 2010;30:4024–4032. doi: 10.1523/JNEUROSCI.5684-09.2010.

- Spence C, Driver J. Audiovisual links in endogenous covert spatial attention. J Exp Psychol Hum Percept Perform. 1996;22:1005–1030. doi: 10.1037//0096-1523.22.4.1005.

- Störmer VS, Green JJ, McDonald JJ. Tracking the voluntary control of auditory spatial attention with event-related brain potentials. Psychophysiology. 2009;46:357–366. doi: 10.1111/j.1469-8986.2008.00778.x.

- Teder-Sälejärvi WA, Münte TF, Sperlich F, Hillyard SA. Intra-modal and crossmodal spatial attention to auditory and visual stimuli. An event-related brain potential study. Cogn Brain Res. 1999;8:327–343. doi: 10.1016/s0926-6410(99)00037-3.

- Thut G, Nietzel A, Brandt SA, Pascual-Leone A. Alpha-band electroencephalographic activity over occipital cortex indexes visuospatial attention bias and predicts visual target detection. J Neurosci. 2006;26:9494–9502. doi: 10.1523/JNEUROSCI.0875-06.2006.

- Tootell RB, Switkes E, Silverman MS, Hamilton SL. Functional anatomy of macaque striate cortex. II. Retinotopic organization. J Neurosci. 1988;8:1531–1568. doi: 10.1523/JNEUROSCI.08-05-01531.1988.

- Turatto M, Benso F, Galfano G, Umiltà C. Nonspatial attentional shifts between audition and vision. J Exp Psychol Hum Percept Perform. 2002;28:628–639. doi: 10.1037//0096-1523.28.3.628.

- Turatto M, Galfano G, Bridgeman B, Umiltà C. Space-independent modality-driven attentional capture in auditory, tactile and visual systems. Exp Brain Res. 2004;155:301–310. doi: 10.1007/s00221-003-1724-x.

- Vallar G. Spatial frames of reference and somatosensory processing: a neuropsychological perspective. Philos Trans R Soc Lond B Biol Sci. 1997;352:1401–1409. doi: 10.1098/rstb.1997.0126.

- Vallar G, Perani D. The anatomy of spatial neglect in humans. In: Jeannerod M, editor. Advances in psychology. New York: Elsevier; 1987. pp. 235–258.

- Virsu V, Rovamo J. Visual resolution, contrast sensitivity, and the cortical magnification factor. Exp Brain Res. 1979;37:475–494. doi: 10.1007/BF00236818.

- Wetherill GB, Levitt H. Sequential estimation of points on a psychometric function. Br J Math Stat Psychol. 1965;18:1–10. doi: 10.1111/j.2044-8317.1965.tb00689.x.

- Worden MS, Foxe JJ, Wang N, Simpson GV. Anticipatory biasing of visuospatial attention indexed by retinotopically specific alpha-band electroencephalography increases over occipital cortex. J Neurosci. 2000;20(RC63):1–6. doi: 10.1523/JNEUROSCI.20-06-j0002.2000.

- Wu CT, Weissman DH, Roberts KC, Woldorff MG. The neural circuitry underlying the executive control of auditory spatial attention. Brain Res. 2007;1134:187–198. doi: 10.1016/j.brainres.2006.11.088.

- Wylie GR, Javitt DC, Foxe JJ. Don't think of a white bear: an fMRI investigation of the effects of sequential instructional sets on cortical activity in a task-switching paradigm. Hum Brain Mapp. 2004;21:279–297. doi: 10.1002/hbm.20003.

- Zatorre RJ, Bouffard M, Ahad P, Belin P. What is “where” in the human auditory cortex? Nat Neurosci. 2002;5:905–909. doi: 10.1038/nn904.