Abstract

The segregation between cortical pathways for the identification and localization of objects is thought of as a general organizational principle in the brain. Yet, little is known about the unimodal versus multimodal nature of these processing streams. The main purpose of the present study was to test whether the auditory and tactile dual pathways converged into specialized multisensory brain areas. We used functional magnetic resonance imaging (fMRI) to compare directly in the same subjects the brain activation related to localization and identification of comparable auditory and vibrotactile stimuli. Results indicate that the right inferior frontal gyrus (IFG) and both left and right insula were more activated during identification conditions than during localization in both touch and audition. The reverse dissociation was found for the left and right inferior parietal lobules (IPL), the left superior parietal lobule (SPL) and the right precuneus-SPL, which were all more activated during localization conditions in the two modalities. We propose that specialized areas in the right IFG and the left and right insula are multisensory operators for the processing of stimulus identity whereas parts of the left and right IPL and SPL are specialized for the processing of spatial attributes independently of sensory modality.

Introduction

The traditional sensory processing schemes postulate the presence of sharply defined, modality-specific brain areas, but the modality-exclusivity of sensory brain regions has been challenged over the last decade (Pascual-Leone and Hamilton, 2001; Wallace et al., 2004; Schroeder and Foxe, 2005). Numerous studies have shown that several regions in the visual cortex can be activated by additional modalities, such as touch (Hyvärinen et al., 1981; Zangaladze et al., 1999; Merabet et al., 2004; Pietrini et al., 2004; Prather et al., 2004; Zhang et al., 2004, 2005; Burton et al., 2006; Peltier et al., 2007) and hearing (Zimmer et al., 2004; Renier et al., 2005). Similarly, different auditory areas have been shown to respond to somatosensory (Schroeder et al., 2001; Foxe et al., 2002; Golaszewski et al., 2002; Fu et al., 2003; Hackett et al., 2007; Smiley et al., 2007) and visual stimulation (Calvert, 2001; Wright et al., 2003; Beauchamp et al., 2004a,b; van Atteveldt et al., 2004, 2007; Kayser et al., 2007, 2008; Meyer et al., 2007; Noesselt et al., 2007). Several parietal and frontal areas also support multisensory integration that binds information from different modalities into unified multisensory representations (Hyvärinen and Poranen, 1974; Downar et al., 2000; Bremmer et al., 2001).

In addition to this sensory organization scheme, a principle of “division of labor,” i.e., a functional specialization for localization versus identification, has been demonstrated in various visual cortical areas (Zeki, 1978; Ungerleider and Mishkin, 1982; Haxby et al., 1991; Goodale and Milner, 1992). Studies in nonhuman animals and humans have also indicated two distinct pathways within the cerebral cortex for hearing (Rauschecker, 1997, 1998a,b; Rauschecker et al., 1997; Deibert et al., 1999; Alain et al., 2001; Maeder et al., 2001; Anurova et al., 2003; Poremba et al., 2003) and, to a lesser extent, for touch (Stoesz et al., 2003; Harada et al., 2004; Van Boven et al., 2005; Kitada et al., 2006). Beyond the primary sensory cortices, the “what” and “where” pathways involve brain areas within the parietal, frontal, and temporal cortices that show multimodal properties (Romanski and Goldman-Rakic, 2002), although even in these regions sensory processing is initially still unimodal (Bushara et al., 1999). This raises the question of at which point along the “what” and “where” streams brain areas turn from unimodal into multimodal.

Different stimulus attributes, such as location, shape, or frequency, can be accessed by different modalities and are stored and processed in specialized multimodal brain regions. Object shape, for instance, can be “apprehended” both by touch and by vision (Sathian, 2005) and is ultimately processed by a multimodal (tactile-visual) region, the lateral occipital complex (Amedi et al., 2001, 2002). In the present study, we used functional magnetic resonance imaging (fMRI) to compare directly, within the same subjects, the brain activation elicited by the processing of spatial and nonspatial attributes of auditory and vibrotactile stimuli. We hypothesized that sounds and comparable vibrotactile stimuli would activate common multimodal brain areas according to the nature of the task, i.e., within the frontal cortex during “what” processing and within the parietal cortex during “where” processing.

Materials and Methods

Subjects

Seventeen healthy adult subjects participated in this study (5 males and 12 females; 15 right-handed and 2 left-handed; mean age = 39 years; SD = 11.4; range = 25–58). Subjects were recruited on a voluntary basis via flyers posted on the Georgetown University campus and hospital. The seventeen subjects were then randomly selected from among the volunteers who were eligible for an fMRI study (i.e., healthy adults <60 years of age without a history of neurological, psychiatric, or sensory impairment). The protocol was approved by Georgetown University's Institutional Review Board, and written informed consent was obtained from all participants before the experiment.

MRI acquisition

All fMRI data were acquired at Georgetown University's Center for Functional and Molecular Imaging on a 3-Tesla Siemens Tim Trio scanner with a 12-channel head coil, using an echoplanar imaging sequence (flip angle = 90°, repetition time = 3 s, echo time = 60 ms, 64 × 64 matrix). For each run, 184 volumes of 50 slices (slice thickness, 2.8 mm; gap, 0.196 mm) were acquired with continuous sampling. At the end of the session, a three-dimensional T1-weighted MPRAGE (magnetization-prepared rapid-acquisition gradient echo) image (resolution 1 × 1 × 1 mm³) was acquired for each subject.
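As a quick consistency check on these acquisition parameters, the arithmetic below derives the duration of one run and the approximate extent of the imaged slab. It uses only the values reported above; the slab estimate counts one gap between each pair of adjacent slices.

```python
# Timing and coverage implied by the reported EPI parameters.
TR_S = 3.0                # repetition time (s)
N_VOLUMES = 184           # volumes per run
N_SLICES = 50
SLICE_THICKNESS_MM = 2.8
GAP_MM = 0.196

run_duration_s = N_VOLUMES * TR_S
# N slices contribute N thicknesses and N - 1 inter-slice gaps.
slab_extent_mm = N_SLICES * SLICE_THICKNESS_MM + (N_SLICES - 1) * GAP_MM

print(f"run duration: {run_duration_s:.0f} s = {run_duration_s / 60:.1f} min")
print(f"slab extent: ~{slab_extent_mm:.1f} mm")
```

A run of 184 volumes at a 3 s TR lasts 552 s (9.2 min), in line with the ∼9 min runs given in the paradigm description, and the slab covers roughly 150 mm.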

Stimuli and experimental paradigms

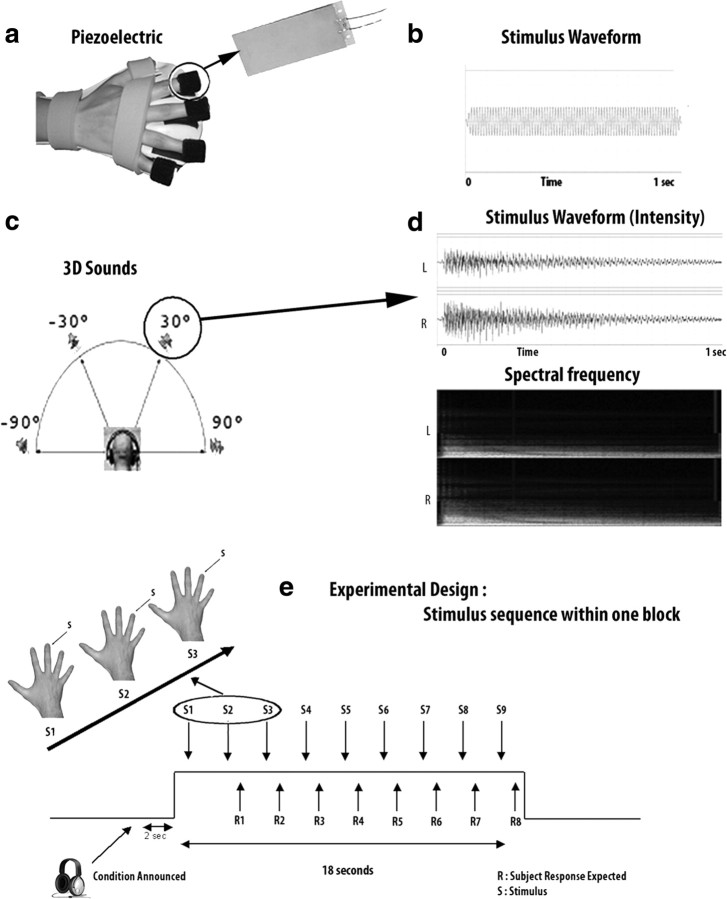

We used a block design paradigm in which six different conditions, i.e., three tasks (identification, localization, and detection) × two sensory modalities (auditory and tactile stimulation), alternated with “off” (rest) conditions. Each individual session consisted of six runs of ∼9 min each. Before scanning, subjects underwent a brief familiarization session with the stimuli and tasks while lying in the scanner. During familiarization, the intensity of auditory and tactile stimulation was adapted to each subject's sensitivity and adjusted so that the stimulation was noticeable while remaining comfortable. Special attention was paid to equalizing the subjective intensity of stimulation between the two modalities. A predetermined pseudo-random order of conditions and runs was counterbalanced across subjects. During the localization and identification conditions, we used a one-back comparison task in which subjects compared each stimulus with the previous one and determined whether it was the same or different with respect to either its identity (i.e., frequency) or its location. This task involved both perceptual and working memory components. Subjects responded by pressing one of two buttons on a response pad held in the left hand (the right hand was fitted with the tactile stimulator) (Fig. 1a). During the detection conditions, subjects pressed the left button of the response pad at each stimulus presentation. The detection conditions served as controls, allowing basic sensory activation to be subtracted from the activation related to auditory or tactile identity and spatial processing. The same stimuli were used in the three conditions within a modality; only the instructions and the task varied. Stimulus duration was identical in the two modalities (1 s). Subjects were blindfolded throughout the experiment. Each condition was announced through headphones at the end of the preceding rest period (Fig. 1e). Stimulus presentation and response recording were controlled using Superlab software 4.0 (Cedrus) running on a personal computer.
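The one-back logic can be made concrete with a short scoring sketch. Each stimulus is coded by two hypothetical integer indices, one for identity (frequency or chord) and one for location (finger or azimuth). The expected answer for every stimulus after the first is “same” or “different” on the attended attribute only, which is why a block of nine stimuli yields eight responses. The names `Stimulus`, `one_back_answers`, and `percent_correct`, and the example block, are illustrative assumptions and not part of the original Superlab script.

```python
from typing import List, NamedTuple


class Stimulus(NamedTuple):
    identity: int  # hypothetical index of the frequency (tactile) or chord (auditory)
    location: int  # hypothetical index of the finger (tactile) or azimuth (auditory)


def one_back_answers(block: List[Stimulus], attribute: str) -> List[str]:
    """Expected answer for each stimulus after the first, comparing only `attribute`."""
    return [
        "same" if getattr(cur, attribute) == getattr(prev, attribute) else "different"
        for prev, cur in zip(block, block[1:])
    ]


def percent_correct(expected: List[str], given: List[str]) -> float:
    """Percentage of responses that match the expected one-back answers."""
    hits = sum(e == g for e, g in zip(expected, given))
    return 100.0 * hits / len(expected)


# Hypothetical block of nine stimuli (identity index, location index).
block = [Stimulus(0, 1), Stimulus(0, 2), Stimulus(1, 2), Stimulus(1, 2), Stimulus(3, 0),
         Stimulus(2, 0), Stimulus(2, 3), Stimulus(0, 3), Stimulus(0, 1)]

identification_key = one_back_answers(block, "identity")
localization_key = one_back_answers(block, "location")
print(len(identification_key))  # 8
```

Because the same block is scored against a different attribute in the identification and localization conditions, the stimuli can stay identical across tasks while the correct answers differ, which matches the design described above.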

Figure 1.

Experimental setup. a, The experimental setup used to deliver vibrotactile stimulation. Vibrotactile stimuli were produced by nonmagnetic, ceramic piezoelectric bending elements placed directly under the fingers of the hand. The benders were driven by tones presented at a high amplitude and produced a vibration at the driving frequency. Each of the four piezoelectric elements was attached to one of the four fingers with fasteners (Velcro), which were glued to the underside of the piezoelectric element. In addition, the right hand was securely attached to prevent any hand movements during the experiment. b, Stimuli consisted of four pure tones of 40, 80, 160, and 320 Hz (duration = 1000 ms, with a rise/fall time of ∼10 ms, and an interstimulus interval of 1000 ms) applied to one of the four fingers of the right hand. The waveform of the 80 Hz stimulus is shown here for illustration. c, For the auditory modality, four piano chords varying in pitch originated from four different sources around the subject: −90°, −30°, 30°, and 90°. d, One of the auditory stimuli, originating from a source located at 30°. The signal varied in both intensity and frequency according to the head-related transfer function calculated from a mean head size. e, Experimental design. The stimulus sequence within one block for the tactile modality is schematized here. During one block (18 s), a sequence of nine stimuli of 1 s duration (separated by an interstimulus interval of 1 s) was delivered to the subject's fingers. The subjects performed a one-back comparison task, i.e., compared each stimulus with the previous one and determined whether it was the same or different with respect to either its frequency or its location. Eight responses were expected per block of nine stimuli. An identical paradigm was used in the auditory modality.

Auditory stimulation.

We used a set of 16 stimuli, obtained by presenting each of four piano chords varying in pitch from each of four different locations in virtual auditory space in front of the listener. The stimuli were presented via electrostatic MRI-compatible headphones (STAX, frequency transfer function 20 Hz–20 kHz). The sounds were created using Fleximusic Composer and combined four different notes. All sounds were further processed using Amphiotik Synthesis from Holistiks to generate an authentic virtual auditory environment based on head-related transfer functions (HRTFs) measured from a mean head size. The four sources were located on a half-circle in front of the listener (Fig. 1). The first source was located at the extreme left of the subject, corresponding to −90° azimuth; the other sources were placed at −30°, +30°, and +90°, progressing toward the right of the listener. The stimulus duration was 1000 ms, including rise/fall times of 10 ms; the interstimulus interval was 1000 ms. Pretests performed on a separate group of 10 subjects showed that the sounds could be localized accurately in a forced-choice paradigm, with a mean accuracy >95% in all subjects. A similar technique for generating a virtual three-dimensional (3-D) sound environment has been used successfully in previous studies (Bushara et al., 1999; Weeks et al., 1999, 2000).
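The auditory stimulus set is a full 4 × 4 crossing of chords and azimuths. To give a feel for one binaural cue that separates these azimuths, the sketch below also computes an approximate interaural time difference (ITD) with Woodworth's spherical-head formula, ITD ≈ (a/c)(θ + sin θ). This is a swapped-in textbook approximation for illustration only, not the HRTF-based rendering performed with Amphiotik Synthesis. The head radius a = 8.75 cm and speed of sound c = 343 m/s are assumptions, and the chord labels are hypothetical because the notes are not listed.

```python
import itertools
import math

AZIMUTHS_DEG = (-90, -30, 30, 90)  # source positions reported in the text
CHORDS = ("chord_1", "chord_2", "chord_3", "chord_4")  # hypothetical labels; notes not listed

# Assumptions for the illustrative spherical-head model (not the HRTFs used in the study).
HEAD_RADIUS_M = 0.0875
SPEED_OF_SOUND_M_S = 343.0


def woodworth_itd_us(azimuth_deg: float) -> float:
    """Approximate interaural time difference in microseconds (Woodworth spherical head).

    Valid for |azimuth| <= 90 deg; positive values mean the sound reaches the right ear first.
    """
    theta = math.radians(azimuth_deg)
    return 1e6 * HEAD_RADIUS_M / SPEED_OF_SOUND_M_S * (theta + math.sin(theta))


# Full factorial set: 4 chords x 4 locations = 16 stimuli.
stimuli = list(itertools.product(CHORDS, AZIMUTHS_DEG))

for az in AZIMUTHS_DEG:
    print(f"{az:+4d} deg: ITD ~ {woodworth_itd_us(az):+.0f} us")
```

Under these assumptions the lateral sources (±90°) differ by roughly 650 µs in ITD and the inner sources (±30°) by roughly 260 µs; real HRTFs add spectral and level cues that this model ignores.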

Tactile stimulation.

We used equipment similar to that used by Weaver and Stevens (2007) to generate vibrotactile stimulation on the subjects' fingers. Tactile stimuli were made as comparable as possible to the auditory ones: stimulus duration was identical, and subjective stimulus intensity was equated as well. Stimuli consisted of four pure tones of 40, 80, 160, and 320 Hz (duration = 1000 ms, with a rise/fall time of 10 ms, and an interstimulus interval of 1000 ms) applied to one of the four fingers of the right hand (i.e., index, middle, ring, and little finger) (Fig. 1a). Frequencies were selected for their salience and from within the range allowed by the equipment (the piezoelectric elements in particular). Stimuli were created using Adobe Audition software and generated by an Echo AudioFire8 sound card (AudioAmigo). Vibrotactile stimuli were then produced by nonmagnetic, ceramic piezoelectric bending elements (benders Q220-A4-303YB; Piezo Systems, Quick Mount Bender) placed directly under the fingers. The benders were driven by the above tones presented at a high amplitude and produced a vibration at the driving frequency. The signal was amplified using Alesis RA150 amplifiers. To present vibrotactile stimulation accurately and consistently across individuals, each of the four piezoelectric elements was attached to one of the four fingers with hook-and-loop fasteners (Velcro), which were glued to the underside of the piezoelectric elements and wrapped around the finger. In addition, the right hand was securely fastened into an anti-spasticity ball splint (Sammons Preston) to prevent any hand movements during the experiment. This splint encased the distal third of the arm and the whole hand, securing the position of the arm relative to the hand, and separated the digits to prevent changes in finger position. Foam pads with Velcro adjustments were wrapped around the arm while it rested in the splint.
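The drive signal described above (a 1 s pure tone with 10 ms rise and fall) can be sketched as follows. The sample rate and the linear shape of the ramps are assumptions, since neither is reported; the peak amplitude is normalized to 1 here, whereas in the experiment the output level was set per subject through the amplifiers.

```python
import numpy as np

FS_HZ = 44_100  # assumed sample rate; the text does not report it
FREQUENCIES_HZ = (40, 80, 160, 320)  # drive frequencies reported in the text


def vibrotactile_tone(freq_hz: float, fs: int = FS_HZ,
                      duration_s: float = 1.0, ramp_s: float = 0.010) -> np.ndarray:
    """1 s sine at `freq_hz` with linear 10 ms onset/offset ramps (ramp shape assumed)."""
    n = int(round(duration_s * fs))
    t = np.arange(n) / fs
    tone = np.sin(2 * np.pi * freq_hz * t)
    n_ramp = int(round(ramp_s * fs))
    ramp = np.linspace(0.0, 1.0, n_ramp)
    envelope = np.ones(n)
    envelope[:n_ramp] = ramp          # rise
    envelope[-n_ramp:] = ramp[::-1]   # fall
    return tone * envelope            # peak amplitude 1; scaled per subject downstream


tones = {f: vibrotactile_tone(f) for f in FREQUENCIES_HZ}
```

The ramps avoid abrupt onsets and offsets, which would otherwise add broadband transients to the vibration.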

Data analysis

The fMRI signal in the different conditions was compared with a regression analysis in BrainVoyager QX (Version 1.10; Brain Innovation). Preprocessing consisted of slice timing correction, temporal high-pass filtering (removing frequencies lower than 3 cycles/run), and correction for small interscan head movements (Friston et al., 1995). Data were then transformed into Talairach space (Talairach and Tournoux, 1988). For anatomical reference, the computed statistical maps were overlaid on the 3-D T1-weighted scans. The predictor time courses of the regression model were computed on the basis of a linear model of the relation between neural activity and hemodynamic response, i.e., by convolving the time course of the sensory stimulation phases with a hemodynamic response function (HRF) (Boynton et al., 1996). A group random-effects (RFX) analysis was then performed at the whole-brain level with a threshold of q < 0.05, corrected for false discovery rate (FDR), combined with a cluster size threshold of p < 0.05. For stricter contrasts, a threshold of p < 0.005 (uncorrected for multiple comparisons) was used instead. The resulting maps were projected onto the inflated cortical surface of a representative subject for display purposes. Brain areas were identified using Talairach Client 2.4 (Lancaster et al., 2000). All the contrasts used for the comparisons (main effects and interactions) are presented in a “table of predefined contrasts” (see supplemental Table 1S, available at www.jneurosci.org as supplemental material). Behavioral analyses were performed using Statistica 6.0 (StatSoft Inc.).
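A minimal sketch of how such a predictor can be built: a boxcar marking the stimulation blocks is convolved with a delayed gamma-function HRF of the form used by Boynton et al. (1996) and then sampled once per volume (TR = 3 s). The HRF parameters (n = 3, τ = 1.25 s, δ = 2.5 s), the 0.1 s convolution grid, and the block onsets are assumptions for illustration; BrainVoyager's own HRF implementation and the actual block timing may differ.

```python
import math
import numpy as np

TR_S = 3.0   # repetition time reported above
DT_S = 0.1   # fine time grid used for the convolution (assumption)


def gamma_hrf(t: np.ndarray, n: int = 3, tau: float = 1.25, delta: float = 2.5) -> np.ndarray:
    """Delayed gamma HRF in the form of Boynton et al. (1996); parameter values are assumptions."""
    s = np.clip(t - delta, 0.0, None)  # zero before the delay
    return (s / tau) ** (n - 1) * np.exp(-s / tau) / (tau * math.factorial(n - 1))


def block_predictor(onsets_s, block_len_s: float, n_volumes: int,
                    tr_s: float = TR_S, dt: float = DT_S) -> np.ndarray:
    """Boxcar of stimulation blocks convolved with the HRF, sampled once per volume."""
    step = int(round(tr_s / dt))
    t = np.arange(n_volumes * step) * dt
    boxcar = np.zeros_like(t)
    for onset in onsets_s:
        boxcar[(t >= onset) & (t < onset + block_len_s)] = 1.0
    kernel = gamma_hrf(np.arange(0.0, 30.0, dt))
    response = np.convolve(boxcar, kernel)[: t.size] * dt  # linear (LTI) model
    return response[::step]


# Hypothetical run: 18 s blocks starting at 18 s and 54 s (rest durations are not given here).
predictor = block_predictor([18.0, 54.0], block_len_s=18.0, n_volumes=184)
```

Because the gamma kernel integrates to 1, the predictor rises to a plateau near 1 during each 18 s block and decays back toward 0 during rest; one such predictor per condition forms the design matrix of the regression.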

Results

Behavioral results

Behavioral performance during MRI scanning was satisfactory overall for all conditions in both modalities, although performance was better in the auditory than in the tactile modality (Table 1, Audio, Tact). A 2 (modality) × 3 (task) ANOVA confirmed significant effects of task [F(2,32) = 11.796, p < 0.001] and modality [F(1,16) = 19.234, p < 0.001] and a significant interaction between these two factors [F(2,32) = 111.630, p < 0.001]. Newman–Keuls post hoc comparisons showed no significant difference between audio identification (AID) and audio localization (AL) (p = 0.77) but a significant difference between tactile identification (TID) and tactile localization (TL) (p < 0.001). Performance also differed between AID and TID, between AL and TL, and between audio detection (AD) and tactile detection (TD) (all p values < 0.001). To assess the effect of age on subjects' performance, a correlation analysis was performed; no significant correlation was observed between age and performance (all r values < 0.035; all p values > 0.19).

Table 1.

Behavioral performance (percentage of correct responses) as a function of the task and the modality

| Modality | Identification | Localization | Detection |
|---|---|---|---|
| Audio | 91.48 (SD = 7.1) | 91.97 (SD = 6.7) | 98.45 (SD = 2.8) |
| Tact | 70.35 (SD = 8.6) | 79.17 (SD = 9.3) | 93.42 (SD = 5.5) |
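The degrees of freedom reported for the ANOVA follow from the design if both factors are treated as within-subject (each of the 17 subjects performed all six conditions). The sketch below derives them under that assumption and computes the marginal means from the group means in Table 1. It cannot reproduce the F values, which require subject-level data.

```python
N_SUBJECTS = 17
MODALITIES = ("Audio", "Tact")
TASKS = ("Identification", "Localization", "Detection")

# Group means (% correct) from Table 1.
TABLE1 = {
    "Audio": {"Identification": 91.48, "Localization": 91.97, "Detection": 98.45},
    "Tact": {"Identification": 70.35, "Localization": 79.17, "Detection": 93.42},
}


def rm_anova_df(levels, n_subjects):
    """(effect df, error df) for a within-subject factor or interaction of factors."""
    df_effect = 1
    for k in levels:
        df_effect *= k - 1
    return df_effect, df_effect * (n_subjects - 1)


df = {
    "task": rm_anova_df([len(TASKS)], N_SUBJECTS),
    "modality": rm_anova_df([len(MODALITIES)], N_SUBJECTS),
    "interaction": rm_anova_df([len(MODALITIES), len(TASKS)], N_SUBJECTS),
}

modality_means = {m: sum(TABLE1[m].values()) / len(TASKS) for m in MODALITIES}
task_means = {t: sum(TABLE1[m][t] for m in MODALITIES) / len(MODALITIES) for t in TASKS}

print(df)
print(modality_means, task_means)
```

The derived values, (2, 32) for task and for the interaction and (1, 16) for modality, match the reported F statistics, and the marginal means (about 94.0% for audio versus 81.0% for touch) reflect the modality effect described above.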

Functional MRI results

Main effects related to sensory modality

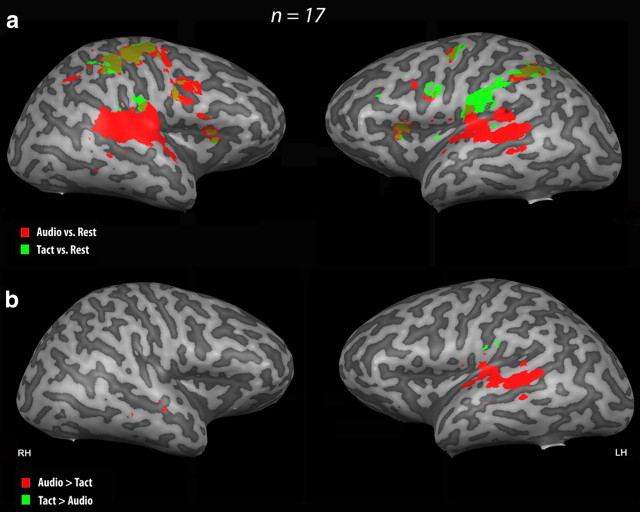

RFX group analysis was performed at the whole-brain level, using a threshold of q < 0.05 [corrected for multiple comparisons using false discovery rate (FDR)] in combination with a cluster size threshold of p < 0.05. The contrast {Audio vs rest} showed the expected bilateral activation in primary and secondary auditory cortices [i.e., in Brodmann area (BA) 41, BA 42, and BA 22 in the superior temporal cortex] during auditory conditions, whereas the contrast {Tact vs rest} revealed bilateral activation (more pronounced in the left hemisphere) in primary and secondary somatosensory cortices (i.e., in the postcentral gyrus) in response to vibrotactile stimulation (BA 1, 2, 3, 40, 43) (Fig. 2a, Tables 2, 3). Overlap between the activation foci was observed in several regions including the right precentral and postcentral gyri and the posterior parietal cortex (BA 4 and BA 40), the left insula (BA 13), and the left and right medial frontal gyrus (BA 6) (Tables 2, 3).
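The FDR criterion can be illustrated with the standard Benjamini–Hochberg step-up procedure: sort the m voxelwise p values, find the largest rank k with p(k) ≤ (k/m)·q, and declare all tests up to that rank significant. We assume BrainVoyager applies a procedure of this kind; the text does not state the exact variant, and the additional cluster-size threshold is not modeled here. The p values in the usage example are hypothetical.

```python
import numpy as np


def bh_threshold(p_values, q=0.05):
    """Largest p value surviving Benjamini-Hochberg FDR control at level q (None if none)."""
    p = np.sort(np.asarray(p_values, dtype=float))
    m = p.size
    ranks = np.arange(1, m + 1)
    below = p <= ranks / m * q          # step-up criterion p(k) <= k * q / m
    if not below.any():
        return None
    return p[np.nonzero(below)[0].max()]


# Hypothetical voxelwise p values.
p_vals = [0.001, 0.008, 0.039, 0.041, 0.042, 0.06, 0.074, 0.205, 0.212, 0.216]
cutoff = bh_threshold(p_vals, q=0.05)
```

Here only the two smallest p values survive: the third (0.039) exceeds its rank-specific bound of 0.015. The adaptive cut-off is more lenient than a Bonferroni correction (0.005 for ten tests) but still controls the expected proportion of false positives among surviving voxels.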

Figure 2.

Brain activation related to the sensory modality. Auditory (in red) and tactile (in green) functional MRI activation in 17 sighted subjects is projected onto a 3-D representation of the right and left hemispheres (RH and LH) of a representative brain of one subject. Only positive differences are shown in the related contrasts. a, Activation maps resulting from the contrasts {Auditory conditions vs Rest}, i.e., {(AID + AL + AD)} and {Tact vs Rest}, i.e., {(TID + TL + TD)}. The activation maps were obtained using RFX with a corrected threshold for multiple comparisons using FDR of q < 0.05 in combination with a cluster size threshold correction of p < 0.05 and superimposed on the individual brain. The auditory conditions activated bilaterally the primary and secondary auditory cortex (BA41, BA42, BA22) (see Tables 2 and 3 for an exhaustive list of the activation foci). The tactile conditions activated bilaterally the primary and secondary somatosensory cortex (BA1, 2, 3, 43) (see Tables 2 and 3 for an exhaustive list of activation foci), although the activation was more extended in the left hemisphere. Overlapping activation is shown in yellow. b, Activation maps resulting from the contrasts {Auditory Conditions − Tactile Conditions}, i.e., {[(AID + AL + AD) > (TID + TL + TD)] ∩ (AID + AL + AD)} and {Tactile Conditions − Auditory Conditions}, i.e., {[(TID + TL + TD) > (AID + AL + AD)] ∩ (TID + TL + TD)}. The activation maps were obtained using RFX analyses with an uncorrected threshold of p < 0.005 in combination with a cluster size threshold correction of p < 0.05 and superimposed on the individual brain. Left and right primary and secondary auditory cortices (BA41, BA42, BA22) were specifically activated during the auditory conditions (Tables 2, 3). The left postcentral gyrus (BA43, contralateral to the stimulated hand) was specifically activated during the tactile conditions (Tables 2, 3).

Table 2.

List of activation foci related to auditory and tactile stimulation: main effect

Left: {Audio versus rest}, q < 0.05 FDR corrected. Right: {Tact versus rest}, q < 0.05 FDR corrected. Coordinates (x, y, z) are in Talairach space.

| Brain region (Audio) | Brodmann area | Cluster size | t value | x | y | z | Brain region (Tact) | Brodmann area | Cluster size | t value | x | y | z |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| R TTG & STG | BA 41, 42, 22 | 13120 | 11.85 | 54 | −19 | 10 | R PoG | BA 43 | 400 | 5.50 | 51 | −19 | 19 |
| R precuneus + PoG | BA 4, 3, 2, 40, 9 | 17319 | 8.41 | 39 | −25 | 61 | R PrecG | BA 6 | 910 | 5.75 | 42 | −1 | 34 |
| R MFG | BA 9 | 485 | 5.79 | 39 | 38 | 37 | R PrecG-IPL + PoG | BA 4, 40, 3, 2 | 10636 | 9.55 | 39 | −25 | 61 |
| R thalamus |  | 6435 | 9.85 | 9 | −16 | 7 | R insula | BA 13 | 939 | 7.65 | 30 | 17 | 16 |
| R caudate nucleus |  | 704 | 6.09 | 18 | −4 | 16 | R putamen |  | 316 | 5.18 | 21 | −4 | 13 |
| R medial globus pallidus |  | 128 | 4.75 | 15 | −7 | −2 | R thalamus |  | 619 | 6.44 | 15 | −16 | 10 |
| R & L MFG | BA 6 | 4720 | 9.53 | −3 | −4 | 52 | R & L MFG | BA 6 | 3799 | 1.06 | −3 | −10 | 55 |
| L LingG | BA 18 | 427 | 4.75 | 0 | −85 | −5 | R midbrain |  | 148 | 4.64 | 6 | −19 | −8 |
| L precuneus | BA 7 | 137 | 4.06 | −12 | −73 | 49 | L LingG | BA 18 | 954 | 6.22 | 0 | −76 | −5 |
| L putamen |  | 828 | 5.65 | −21 | −7 | 13 | L precuneus | BA 7 | 372 | 4.64 | −21 | −67 | 40 |
| L insula | BA 13 | 1576 | 7.11 | −27 | 20 | 13 | L putamen |  | 549 | 5.26 | −18 | 5 | 10 |
| L PrecG | BA 6 | 941 | 5.77 | −39 | −16 | 61 | L insula | BA 13 | 1178 | 8.75 | −30 | 17 | 13 |
| L SMG | BA 40 | 3526 | 6.30 | −42 | −43 | 34 | L PoG-IPL | BA 40, 2 | 7971 | 8.87 | −51 | −22 | 19 |
| L STG + TTG | BA 22, 42, 41 | 11209 | 1.01 | −63 | −34 | 7 | L PrecG | BA 4 | 1393 | 7.29 | −45 | −13 | 49 |
| L IFG | BA 9 | 161 | 4.86 | −33 | 8 | 31 | L SPL | BA 7 | 355 | 4.61 | −39 | −55 | 52 |
| L insula | BA 13 | 390 | 4.87 | −36 | −4 | 25 | L PrecG | BA 6 | 883 | 5.85 | −42 | −1 | 28 |

TTG, Transverse temporal gyrus; PoG, postcentral gyrus; PrecG, precentral gyrus; LingG, lingual gyrus; SMG, supramarginal gyrus.

Table 3.

List of activation foci specifically related to auditory and tactile stimulation

Left: {Audio > Tact}, p < 0.005 uncorrected. Right: {Tact > Audio}, p < 0.005 uncorrected. Coordinates (x, y, z) are in Talairach space.

| Brain region (Audio > Tact) | Brodmann area | Cluster size | t value | x | y | z | Brain region (Tact > Audio) | Brodmann area | Cluster size | t value | x | y | z |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| R TTG | BA 41 | 11204 | 9.70 | 54 | −19 | 10 | L PoG | BA 43 | 145 | 4.57 | −54 | −19 | 19 |
| R MFG | BA 8 | 151 | 5.27 | 39 | 38 | 40 | L IPL | BA 40 | 126 | 4.38 | −54 | −25 | 25 |
| R thalamus |  | 156 | 5.21 | 12 | −16 | 7 |  |  |  |  |  |  |  |
| L STG | BA 13 | 8435 | 9.33 | −45 | −22 | 7 |  |  |  |  |  |  |  |

TTG, Transverse temporal gyrus; PoG, postcentral gyrus.

In addition, extended bilateral deactivation of the auditory cortex was observed during the tactile conditions, including in the superior temporal gyrus (STG) bilaterally, although it was more pronounced in the left hemisphere (see Table 2S, available at www.jneurosci.org as supplemental material). Equivalent deactivation of the somatosensory cortex during the auditory conditions was not observed. However, both modalities deactivated several regions of the visual cortex, including the right cuneus (BA 18) and right fusiform gyrus (BA 37) during tactile stimulation, and the right fusiform gyrus (BA 37), the left cuneus (BA 17), and the left middle occipital gyrus (MOG) (BA 18) during auditory stimulation.

To further identify the brain activation specific to each modality, we contrasted the auditory conditions with the tactile ones and vice versa: {[(AID + AL + AD) > (TID + TL + TD)] ∩ (AID + AL + AD)} and {[(TID + TL + TD) > (AID + AL + AD)] ∩ (TID + TL + TD)}. An RFX group analysis was performed at the whole-brain level with an uncorrected threshold of p < 0.005 combined with a cluster size threshold correction of p < 0.05. The conjunction (“∩”) in these contrasts acts as a mask that restricts the results to areas activated by the conditions of interest; it aims to eliminate false positives that would otherwise arise from deactivation in the subtracted modality. The contrast {[auditory conditions] − [tactile conditions]} in conjunction with [auditory conditions] confirmed the specific recruitment of primary and secondary auditory cortices bilaterally, more pronounced in the left hemisphere (Fig. 2b). The contrast {[tactile conditions] − [auditory conditions]} in conjunction with [tactile conditions] revealed activation foci in the left posterior postcentral gyrus (contralateral to the vibrotactile stimulation).
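The masking role of the conjunction can be shown with three hypothetical voxels. A voxel passes only if both the difference contrast and the main-effect contrast exceed the threshold, which is equivalent to requiring the smaller of the two t values to exceed it. The t values and the cut-off of 3.0 are placeholders; the actual cut-off follows from p < 0.005 and the group degrees of freedom.

```python
import numpy as np


def masked_contrast(t_difference: np.ndarray, t_mask: np.ndarray, t_crit: float) -> np.ndarray:
    """Boolean map of voxels where both the difference and the mask contrast exceed t_crit."""
    return (t_difference > t_crit) & (t_mask > t_crit)


# Hypothetical t values for three voxels.
t_audio_minus_tact = np.array([5.0, 4.0, 1.0])  # {(AID + AL + AD) > (TID + TL + TD)}
t_audio_vs_rest = np.array([6.0, 0.5, 8.0])     # (AID + AL + AD) vs rest
T_CRIT = 3.0  # placeholder threshold

surviving = masked_contrast(t_audio_minus_tact, t_audio_vs_rest, T_CRIT)
print(surviving.tolist())  # [True, False, False]
```

The second voxel shows a large audio-minus-tactile difference only because it is deactivated during touch; it is not activated by sound, so the mask removes it, which is exactly the type of false positive the conjunction is meant to exclude.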

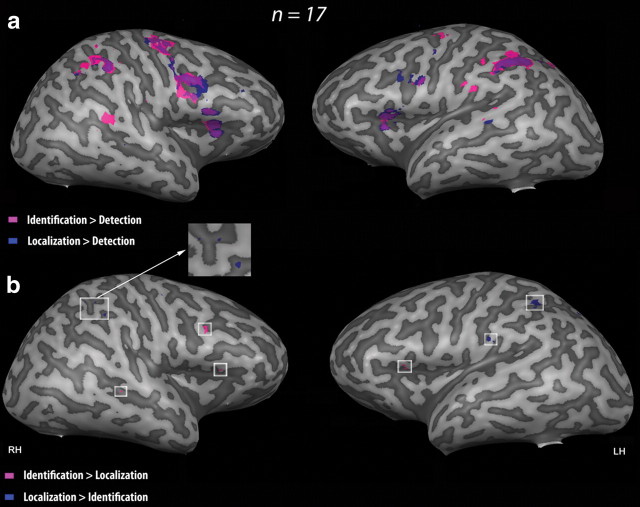

Main effects related to the task

RFX group analysis was performed at the whole-brain level with a corrected threshold for FDR of q < 0.05 in combination with a cluster size threshold correction of p < 0.05. The activation maps resulting from (1) {[(AID + TID) > (AD + TD)] ∩ (AID + TID)} and (2) {[(AL + TL) > (AD + TD)] ∩ (AL + TL)} revealed partially overlapping activation foci in parietal, frontal, and insular areas including the left and right inferior parietal lobule (IPL; BA 40), the left and right precentral gyrus (BA 6), the right middle frontal gyrus (MFG) (BA 8-BA 9), and the insula bilaterally (Fig. 3a, Tables 4, 5). However, the frontal activation foci were more extended for the identification conditions whereas the parietal activation foci were more extended for the localization conditions. In addition to these common activation foci, the identification conditions specifically activated several regions in the frontal cortex including parts of the right medial frontal gyrus (BA 6), the left precentral gyrus (BA 4), and the left MFG (BA 9). The localization conditions specifically activated several regions in the temporal, frontal, and parietal areas including the right STG (BA 22), parts of the right MFG (BA 6), and the left and right precuneus (BA 7).
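The task contrasts above can be written as weight vectors over one predictor per condition. The layout below (six predictors, with rest as the implicit baseline) is an assumption about the design matrix, consistent with the way the contrasts are written in the text; the beta values are hypothetical.

```python
import numpy as np

# One predictor per condition (assumed design-matrix layout); rest is the implicit baseline.
PREDICTORS = ("AID", "AL", "AD", "TID", "TL", "TD")


def contrast(plus, minus=()):
    """Contrast vector: +1 for each predictor in `plus`, -1 for each in `minus`."""
    w = np.zeros(len(PREDICTORS))
    for name in plus:
        w[PREDICTORS.index(name)] += 1.0
    for name in minus:
        w[PREDICTORS.index(name)] -= 1.0
    return w


id_vs_detection = contrast(["AID", "TID"], ["AD", "TD"])   # first term of contrast (1)
id_mask = contrast(["AID", "TID"])                         # (AID + TID) vs rest
loc_vs_detection = contrast(["AL", "TL"], ["AD", "TD"])    # first term of contrast (2)
loc_mask = contrast(["AL", "TL"])                          # (AL + TL) vs rest

# For a voxel with estimated betas, the contrast estimate is a dot product.
beta = np.array([1.2, 0.9, 0.4, 1.0, 1.1, 0.3])  # hypothetical values
estimate = float(id_vs_detection @ beta)
```

The difference contrasts have weights summing to zero, so they test a comparison between conditions, whereas the mask contrasts test activation relative to rest; each full contrast in the text pairs one of each through the conjunction.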

Figure 3.

Brain activation related to the task. Identification (in pink) and localization (in blue) functional MRI activation in 17 sighted subjects is projected onto a 3-D representation of the right and left hemispheres (RH and LH) of a representative brain of one subject. Note that only activations that reach the surface of the cortex are visible on the mesh. a, Activation maps resulting from the contrasts {identification task − control task (detection)}, i.e., {[(AID + TID) > (AD + TD)] ∩ (AID + TID)} and {localization task − control task (detection)}, i.e., {[(AL + TL) > (AD + TD)] ∩ (AL + TL)}. The activation maps were obtained using RFX analyses with a corrected threshold for FDR of q < 0.05 in combination with a cluster size threshold correction of p < 0.05 and superimposed on the individual brain. The identification conditions bilaterally activated the frontal and parietal cortices, including the middle and inferior frontal gyri and the IPL (see Tables 4 and 5 for an exhaustive list of activation foci). The localization conditions activated the left SPL and IPL, the right IPL, and the right precuneus, as well as the middle and inferior frontal gyri (BA7, BA40, and BA6, BA9) (see Tables 4 and 5 for an exhaustive list of activation foci). b, Activation maps resulting from the contrasts {Identification − Localization}, i.e., {[(AID + TID) > (AL + TL)] ∩ (AID + TID)} and {Localization − Identification}, i.e., {[(AL + TL) > (AID + TID)] ∩ (AL + TL)}. The activation maps were obtained using RFX analyses with an uncorrected threshold of p < 0.005 in combination with a cluster size threshold correction of p < 0.05 and superimposed on the individual brain. The right inferior frontal gyrus (BA9) and the insula bilaterally (not visible in this figure) were specifically activated during the identification conditions (Tables 4, 5).
The right IPL (BA40), the right precuneus (BA7), the left SPL (BA7), and the left IPL (BA40) were specifically activated during the localization conditions (Tables 4, 5).

Table 4.

List of activation foci related to stimulus identification and localization: main effect

Left: {Identification > Detection}, q < 0.05 FDR corrected. Right: {Localization > Detection}, q < 0.05 FDR corrected. Coordinates (x, y, z) are in Talairach space.

| Brain region (ID > Detection) | Brodmann area | Cluster size | t value | x | y | z | Brain region (Localization > Detection) | Brodmann area | Cluster size | t value | x | y | z |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| R insula + PrecG | BA 13, 4, 6 | 8606 | 7.66 | 30 | 20 | 10 | R STG | BA 22 | 581 | 5.30 | 57 | −43 | 13 |
| R PoG-IPL | BA 5, 40 | 2334 | 5.56 | 36 | −46 | 58 | R insula + IFG | BA 13, 45, 40 | 4818 | 6.35 | 30 | 17 | 13 |
| R MFG | BA 8 | 368 | 5.14 | 39 | 35 | 40 | R IPL | BA 40 | 2975 | 6.96 | 33 | −43 | 34 |
| R caudate nucleus |  | 532 | 4.89 | 15 | 5 | 10 | R PrecG | BA 6 | 3107 | 6.37 | 39 | −10 | 52 |
| R thalamus |  | 421 | 6.74 | 12 | −13 | 13 | R MFG | BA 9 | 208 | 5.09 | 39 | 38 | 37 |
| R & L MFG | BA 6 | 3459 | 7.43 | −3 | 2 | 49 | R lentiform nucleus (putamen) |  | 165 | 4.43 | 18 | 8 | 10 |
| R midbrain |  | 806 | 6.42 | 9 | −19 | −11 | R midbrain-thalamus |  | 3015 | 7.12 | 6 | −22 | −8 |
| L midbrain |  | 572 | 5.83 | −9 | −16 | −8 | R precuneus | BA 7 | 212 | 4.32 | 15 | −61 | 34 |
| L thalamus |  | 1043 | 9.28 | −12 | −13 | 10 | R & L MFG | BA 6 | 2637 | 6.93 | 0 | 2 | 49 |
| L putamen |  | 806 | 5.63 | −18 | 5 | 10 | L thalamus |  | 1905 | 6.17 | −12 | −13 | 10 |
| L insula | BA 13 | 1866 | 7.87 | −30 | 20 | 10 | L precuneus | BA 7 | 988 | 5.90 | −21 | −67 | 40 |
| L IPL | BA 40 | 3804 | 6.59 | −48 | −43 | 43 | L PrecG | BA 6 | 489 | 4.72 | −27 | −13 | 55 |
| L PrecG | BA 6 | 222 | 4.58 | −33 | −13 | 64 | L IPL | BA 40 | 5375 | 6.64 | −36 | −49 | 37 |
| L PrecG | BA 6 | 720 | 5.63 | −36 | 5 | 31 | L insula | BA 13 | 1307 | 6.20 | −30 | 20 | 10 |
| L MFG | BA 9 | 129 | 4.62 | −45 | 17 | 37 | L PrecG | BA 6 | 263 | 4.84 | −36 | −19 | 64 |
| L PrecG | BA 4 | 232 | 5.20 | −48 | −10 | 49 | L IFG | BA 9 | 108 | 4.77 | −36 | 5 | 31 |
| L STG | BA 22 | 18 | 3.46 | 50 | −52 | 1 |  |  |  |  |  |  |  |
| L PrecG | BA 6 | 278 | 4.50 | −51 | 2 | 31 |  |  |  |  |  |  |  |

PoG, Postcentral gyrus; PrecG, precentral gyrus.

Table 5.

List of activation foci specifically related to stimulus identification and localization

Left: {ID > Localization}, p < 0.005 uncorrected. Right: {Localization > ID}, p < 0.005 uncorrected. Coordinates (x, y, z) are in Talairach space.

| Brain region (ID > Localization) | Brodmann area | Cluster size | t value | x | y | z | Brain region (Localization > ID) | Brodmann area | Cluster size | t value | x | y | z |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| R IFG | BA 9 | 120 | 4.22 | 45 | 8 | 31 | R IPL | BA 40 | 159 | 4.54 | 42 | −37 | 40 |
| R insula | BA 13 | 170 | 5.65 | 33 | 20 | 13 | R precuneus | BA 7 | 261 | 4.16 | 15 | −67 | 37 |
| L insula | BA 13 | 93 | 4.73 | −33 | 20 | 10 | L SPL | BA 7 | 444 | 4.18 | −21 | −67 | 43 |
|  |  |  |  |  |  |  | L IPL | BA 40 | 430 | 5.31 | −39 | −43 | 43 |

To better identify the brain areas involved more specifically in “what” and “where” processing regardless of sensory modality, we also contrasted identification with localization conditions and vice versa (Fig. 3b, Tables 4, 5). RFX group analysis was performed at the whole brain level with an uncorrected threshold of p < 0.005 in combination with a cluster size threshold correction of p < 0.05. The contrast {[(AID + TID) > (AL + TL)] ∩ (AID + TID)} confirmed stronger involvement of the insula (BA 13) bilaterally and the right inferior frontal gyrus (IFG) (BA 9) in stimulus identification. The activation foci resulting from the contrast {[(AL + TL) > (AID + TID)] ∩ (AL + TL)} confirmed stronger involvement of several regions in the parietal cortex, including the left and right IPL (BA 40), the right precuneus (BA 7), and the left superior parietal lobule (SPL) (BA 7), during localization.

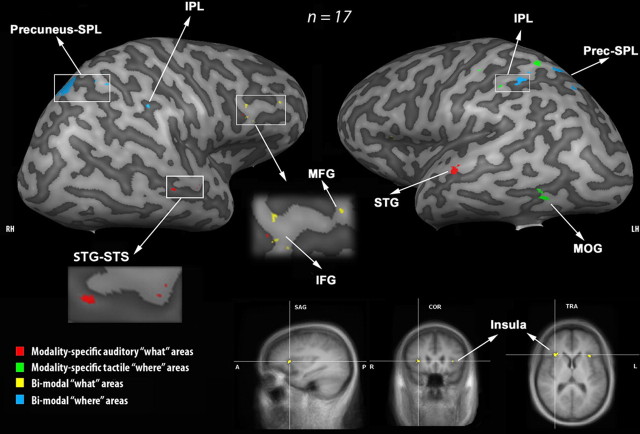

Unimodal versus bimodal brain areas

To identify modality-specific (auditory and tactile) brain areas within the “what” and “where” processing streams, we used the following four contrasts: (1) {(AID > TID) ∩ (AID > AL)}, (2) {(TID > AID) ∩ (TID > TL)}, (3) {(AL > TL) ∩ (AL > AID)}, and (4) {(TL > AL) ∩ (TL > TID)}. These contrasts were very strict and aimed at isolating brain areas that were exclusively task- and modality-specific. Using a threshold of p < 0.005 (uncorrected for multiple comparisons), only contrasts 1 and 4 yielded significant activation foci (Fig. 4, Tables 6, 7). Contrast 1 showed that parts of the right anterior STG-superior temporal sulcus (STS) (BA 21/22), the right IFG (BA 9), and the left anterior STG (BA 22) were specifically involved in the auditory “what” processing stream. Contrast 4 revealed that parts of the left precuneus (BA 7), the left SPL (BA 7), the left IPL (BA 40), and the MOG (BA 37) were specifically recruited in the tactile “where” processing stream. A closer look at the blood oxygenation level-dependent (BOLD) response in the MOG during the auditory and tactile conditions revealed deactivation in all conditions, although this deactivation was less pronounced during the TL condition.
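Why the MOG can pass contrast (4) while being deactivated in every condition is easiest to see with numbers. Contrast (4) compares conditions with one another and, unlike the modality contrasts, is not masked by activation relative to rest, so a condition that is merely less deactivated than the others yields positive differences. The betas below are hypothetical values chosen only to mirror the pattern described in the text.

```python
# Hypothetical betas (signal change relative to rest) for an MOG voxel; illustrative only.
beta = {"AID": -0.50, "AL": -0.60, "AD": -0.40, "TID": -0.45, "TL": -0.15, "TD": -0.35}

tl_gt_al = beta["TL"] - beta["AL"]      # first term of contrast (4)
tl_gt_tid = beta["TL"] - beta["TID"]    # second term of contrast (4)
all_deactivated = all(v < 0 for v in beta.values())

# Both differences are positive, so the voxel passes contrast (4) ...
print(tl_gt_al > 0 and tl_gt_tid > 0, all_deactivated)  # True True
# ... although TL itself lies below the rest baseline.
print(beta["TL"] > 0)  # False
```

Adding a mask such as (TL > rest) to the conjunction would exclude this pattern, at the cost of also excluding regions whose task-related signal is expressed only as reduced deactivation.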

Figure 4.

Brain activation related to auditory and tactile “what” and “where” processing. The modality-specific auditory “what” (in red), modality-specific tactile “where” (in green), bimodal “what” (in yellow), and bimodal “where” (in blue) functional MRI activation in 17 subjects is projected onto a 3-D representation of the right and left hemispheres (RH and LH) of a representative brain of one subject. The activation maps were obtained from the following contrasts:

- {(AID > TID) ∩ (AID > AL)} for modality-specific auditory “what” areas.
- {(TL > AL) ∩ (TL > TID)} for modality-specific tactile “where” areas.
- {[(AID + TID) > (AL + TL)] ∩ [(AID > AD) ∩ (TID > TD)]} for bimodal “what” areas.
- {[(AL + TL) > (AID + TID)] ∩ [(AL > AD) ∩ (TL > TD)]} for bimodal “where” areas.

All maps were computed using RFX with a threshold of p < 0.005 (uncorrected for multiple comparisons) and superimposed on the individual brain. Bimodal “what” brain areas were found mainly in the inferior frontal lobe and insula, whereas bimodal “where” brain areas were found mainly in the parietal lobe. Modality-specific auditory “what” brain areas were located in the anterior STG-STS, whereas modality-specific tactile “where” brain areas were distributed across the parietal cortex. The tactile “where” contrast also revealed activation in the MOG, even though this region was deactivated, to differing extents, during all auditory and tactile conditions. No significant modality-specific auditory “where” or tactile “what” activations were found. The lower part of the figure shows the bilateral activation of the insula related to bimodal “what” processing in the sagittal (SAG), coronal (COR), and transverse (TRA) planes.

Table 6.

List of modality-specific activation foci related to spatial and nonspatial processing

| Processing areas | Brain region | Brodmann area | Cluster size (voxels) | t value | x | y | z |
|---|---|---|---|---|---|---|---|
| Modality-specific auditory “what” | R STG | BA 21 | 29 | 4.04 | 54 | −16 | −2 |
| Modality-specific auditory “what” | R STG-STS | BA 22 | 259 | 4.17 | 42 | −34 | 1 |
| Modality-specific auditory “what” | R IFG | BA 9 | 30 | 4.50 | 45 | 11 | 25 |
| Modality-specific auditory “what” | L STG | BA 22 | 242 | 5.67 | −60 | −19 | 4 |
| Modality-specific tactile “where” | L precuneus-SPL | BA 7 | 44 | 3.92 | −15 | −70 | 52 |
| Modality-specific tactile “where” | L SPL | BA 7 | 265 | 5.63 | −30 | −52 | 58 |
| Modality-specific tactile “where” | L IPL | BA 40 | 455 | 4.99 | −42 | −37 | 55 |
| Modality-specific tactile “where” | L MOG | BA 37 | 192 | 5.06 | −48 | −61 | −8 |

p < 0.005 uncorrected.

Table 7.

List of bimodal activation foci related to spatial and nonspatial processing

| Processing areas | Brain region | Brodmann area | Cluster size (voxels) | t value | x | y | z |
|---|---|---|---|---|---|---|---|
| Bimodal “what” | R IFG | BA 9 | 43 | 3.99 | 48 | 11 | 25 |
| Bimodal “what” | R IFG | BA 9 | 25 | 4.26 | 45 | 8 | 31 |
| Bimodal “what” | R MFG | BA 9 | 151 | 4.64 | 42 | 32 | 28 |
| Bimodal “what” | R insula | BA 13 | 14 | 3.87 | 39 | 14 | 7 |
| Bimodal “what” | R insula-IFG | BA 13-45 | 150 | 4.47 | 36 | 23 | 7 |
| Bimodal “what” | L insula | BA 13 | 88 | 4.40 | −30 | 17 | 16 |
| Bimodal “where” | R IPL | BA 40 | 151 | 4.54 | 42 | −37 | 40 |
| Bimodal “where” | R precuneus-SPL | BA 7 | 1020 | 4.74 | 15 | −67 | 46 |
| Bimodal “where” | L SPL | BA 7 | 600 | 4.18 | −21 | −67 | 43 |
| Bimodal “where” | L IPL | BA 40 | 666 | 5.31 | −39 | −43 | 43 |


To identify multimodal brain activation within the “what” and “where” processing streams, we used the following two contrasts: (1) {[(AID + TID) > (AL + TL)] ∩ [(AID > AD) ∩ (TID > TD)]} and (2) {[(AL + TL) > (AID + TID)] ∩ [(AL > AD) ∩ (TL > TD)]}. These contrasts were chosen for four reasons:

1. Brain areas more involved in the “what” (or “where”) processing stream could also be involved, albeit to a lesser extent, in the other stream (Fig. 3). We therefore contrasted the identification conditions with the localization conditions (and vice versa).
2. Grouping the identification conditions together (or the localization conditions together) to identify the “what” (or “where”) stream was intended to increase statistical power and optimize the corresponding contrast.
3. The subsequent conjunctions with the identification (or localization) conditions in each modality were intended to ensure that the identified brain areas were actually activated by both modalities.
4. The aim was to identify brain areas specialized in “what” (or “where”) processing in both modalities, even if the extent of their recruitment differed slightly. Indeed, multimodal brain areas activated by both modalities were not necessarily recruited to the same extent (Fig. 2).

For this reason, the detection conditions were subtracted from the identification (or localization) conditions within each modality. This subtraction was also meant to eliminate uncontrolled basic sensory processes as well as unspecific attentional mechanisms unrelated to the task.

Using a threshold of p < 0.005 (uncorrected for multiple comparisons), contrast 1 showed that parts of the right IFG and MFG (BA 9) and the insula bilaterally (BA 13) were more activated by both modalities during the identification conditions (Fig. 4, Tables 6, 7). Contrast 2 showed that parts of the right and left IPL (BA 40), the right precuneus-SPL (BA 7), the left SPL (BA 7), and the caudal belt/parabelt were more activated by both modalities during the localization conditions.
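Contrast 1 can be written as three weight vectors over the six conditions, combined by a conjunction. The sketch below makes several illustrative assumptions. Conditions are ordered [AID, AL, AD, TID, TL, TD], with one beta estimate per condition, subject, and voxel (toy values here). The random-effects step is a one-sample t test across subjects, and the critical t value is hypothetical. Only the weight vectors follow directly from the contrast notation; the rest is illustrative and is not the authors' implementation.

```python
import math

CONDITIONS = ["AID", "AL", "AD", "TID", "TL", "TD"]

# Weight vectors implied by the notation of contrast 1 (order as CONDITIONS).
WHAT_VS_WHERE = [1, -1, 0, 1, -1, 0]   # (AID + TID) > (AL + TL)
AID_VS_AD = [1, 0, -1, 0, 0, 0]        # AID > AD
TID_VS_TD = [0, 0, 0, 1, 0, -1]        # TID > TD


def contrast_value(betas, weights):
    """Weighted sum of one subject's six condition betas at one voxel."""
    return sum(b * w for b, w in zip(betas, weights))


def one_sample_t(values):
    """One-sample t statistic against zero (random-effects step)."""
    n = len(values)
    mean = sum(values) / n
    var = sum((v - mean) ** 2 for v in values) / (n - 1)
    if var == 0:
        # Degenerate case: no between-subject variability.
        return 0.0 if mean == 0 else math.copysign(math.inf, mean)
    return mean / math.sqrt(var / n)


def bimodal_what_voxels(betas, t_crit):
    """Voxels passing all three components of contrast 1.

    betas[s][v] is the list of six condition betas for subject s, voxel v.
    """
    surviving = []
    for v in range(len(betas[0])):
        t_values = [
            one_sample_t([contrast_value(subject[v], w) for subject in betas])
            for w in (WHAT_VS_WHERE, AID_VS_AD, TID_VS_TD)
        ]
        if min(t_values) > t_crit:  # conjunction: every component significant
            surviving.append(v)
    return surviving


# Toy data, 3 subjects x 2 voxels: voxel 0 responds to identification in both
# modalities; voxel 1 does so only in audition (TID equals TD).
def toy_subject(f):
    return [[2 * f, f, 0.5 * f, 2 * f, f, 0.5 * f],
            [2 * f, f, 0.5 * f, 0.5 * f, f, 0.5 * f]]


betas = [toy_subject(f) for f in (1.0, 1.1, 1.2)]
print(bimodal_what_voxels(betas, t_crit=2.92))  # prints [0]
```

Voxel 1 passes the grouped “what” > “where” component but fails TID > TD, so the conjunction rejects it as not bimodal. Under the same assumptions, contrast 2 would use the negated first vector together with [0, 1, −1, 0, 0, 0] (AL > AD) and [0, 0, 0, 0, 1, −1] (TL > TD).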

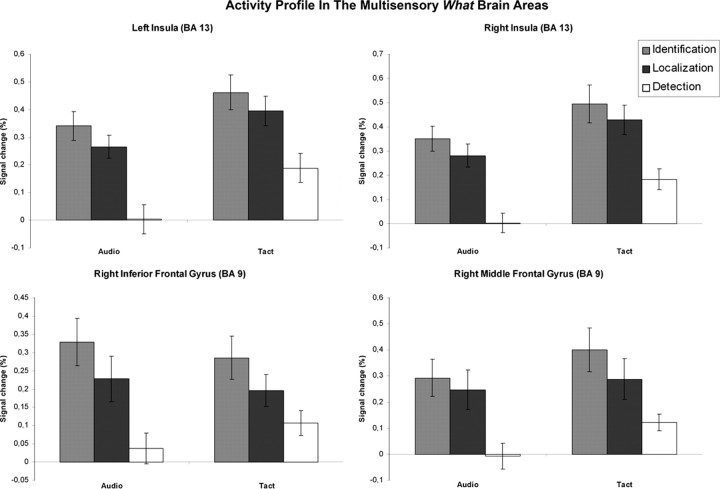

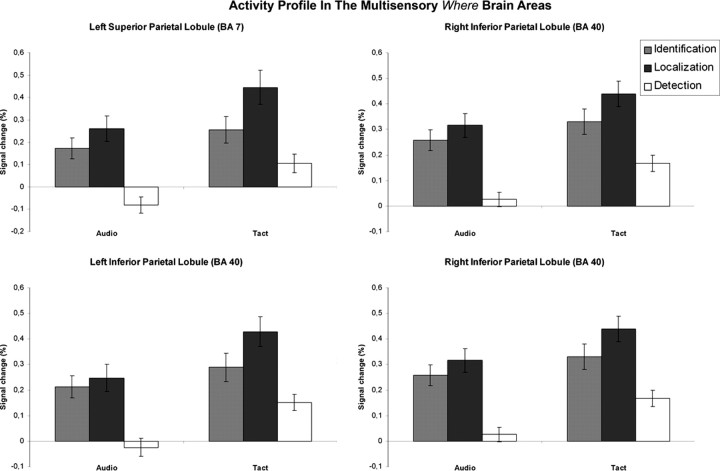

A graphic analysis of brain activation in the eight multimodal brain areas identified by the above procedure (Tables 6, 7) is also provided in Figures 5 and 6: the right IFG, the right MFG, the right insula, the left insula, the right and left IPL, the right precuneus-SPL, and the left SPL. It should be noted that any major difference between “what” and “where” processing, when observed in only one of the two modalities, would lead to a significant activation of the same area in the two contrasts described above. This could lead one to think that such a brain area is a domain-specific multimodal area, although it would be domain-specific for only one modality. For this reason, a subsequent detailed examination of the BOLD signal in the brain areas identified from these two contrasts was also performed. Figures 5 and 6 show the percentage of signal change (BOLD signal) for the six conditions (i.e., AID, AL, AD, TID, TL, TD) within eight regions that were identified as constituting the multimodal “what” and “where” streams. The two activation foci in the right IFG had a similar topography and were grouped by averaging their BOLD signal. The same averaging procedure was applied to the two neighboring activation foci identified as part of the right insula.
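Percentage of signal change is conventionally computed relative to a baseline. The sketch below assumes that definition, 100 × (condition − baseline) / baseline, applied to the mean signal of a region. It then shows two plausible ways of grouping two neighbouring foci. The first is a simple average. The second is an average weighted by cluster size, for example 43 and 25 voxels for the two right IFG foci in Table 7. This section does not state which grouping was used, so both options and the toy signal values are assumptions.

```python
def percent_signal_change(condition_mean, baseline_mean):
    """BOLD change in percent relative to baseline (assumed definition)."""
    if baseline_mean == 0:
        raise ValueError("baseline signal must be non-zero")
    return 100.0 * (condition_mean - baseline_mean) / baseline_mean


def pool_foci(psc_values, voxel_counts=None):
    """Average percent signal change across foci.

    Unweighted by default; if voxel_counts is given, each focus is weighted
    by its cluster size, which equals averaging over the pooled voxels.
    """
    if voxel_counts is None:
        return sum(psc_values) / len(psc_values)
    total = sum(voxel_counts)
    return sum(p * n for p, n in zip(psc_values, voxel_counts)) / total


# Hypothetical mean signals for the two right IFG foci in one condition.
psc_a = percent_signal_change(1010.0, 1000.0)
psc_b = percent_signal_change(1005.0, 1000.0)
print(pool_foci([psc_a, psc_b]), pool_foci([psc_a, psc_b], [43, 25]))
```

The two options diverge only when the foci differ in both response amplitude and size. In that case the larger focus dominates the weighted average.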

Figure 5.

Activity profile in the brain areas identified as multisensory “what” brain areas. The BOLD signal is plotted as a function of the task and the modality in the left (x = −30; y = 17; z = 16; cluster size = 88 voxels) and right (x = 36; y = 23; z = 7; cluster size = 164 voxels) insula, the right IFG (x = 48; y = 11; z = 25; cluster size = 68 voxels), and the right MFG (x = 42; y = 32; z = 28; cluster size = 151 voxels). The BOLD response is expressed as a percentage of signal change. The error bars represent SE. Identification conditions activated the inferior frontal, middle frontal, and insular regions more than localization conditions did in both modalities.

Figure 6.

Activity profile in the brain areas identified as multisensory “where” brain areas. The BOLD signal is plotted as a function of the task and the modality in the left SPL (x = −21; y = −67; z = 43; cluster size = 600 voxels), the left IPL (x = −39; y = −43; z = 43; cluster size = 666 voxels), the right precuneus (x = 15; y = −67; z = 46; cluster size = 1020 voxels), and the right IPL (x = 42; y = −37; z = 40; cluster size = 151 voxels). The BOLD response is expressed as a percentage of signal change. The error bars represent SE. Localization conditions activated parietal regions more than identification conditions did in both modalities.

A close look at the BOLD signal within the right IFG and bilateral insula confirmed that they were activated during both the identification and localization conditions, but that the identification conditions activated these regions more strongly than the localization conditions in both modalities. The reverse profile was observed in the parietal regions that were more strongly activated during the localization conditions than during the identification conditions in both modalities, consistent with the activation maps derived from our two contrasts. It should be noted, however, that only the right IFG, the right precuneus, and the right IPL showed a significant effect of the task in both modalities when tested separately (p < 0.05, post hoc comparisons of selected means) (see Table 3S, available at www.jneurosci.org as supplemental material). In most of the other brain areas, the effect was significant in the tactile modality only. A significant effect of sensory modality was observed in all eight brain regions except in the right IFG and the right precuneus-SPL, with the tactile stimulation generating a higher BOLD signal than the auditory one.

Covariance and correlation analysis

To test whether the effects observed in the eight identified regions depended more on task difficulty than on the nature of the processing itself, covariance analyses were performed at the whole-brain level, using behavioral performance as a covariate (regressor of interest). These analyses did not yield any significant result, even at a very lenient statistical threshold of p < 0.05 (uncorrected). In addition, a correlation analysis tested the relationship between behavioral performance and the corresponding BOLD signal in the eight multimodal brain regions, using the mean activity in each region. For the multimodal “what” brain areas, a weak but significant negative correlation between performance and activation level was observed at the group level in the left insula only: r = −0.4823, p = 0.05 and r = −0.5526, p = 0.021 for the AID and TID conditions, respectively. For the multimodal “where” brain areas, a significant negative correlation between performance and activation level was observed in the left IPL only: r = −0.5569, p = 0.02 and r = −0.4931, p = 0.044 for the AL and TL conditions, respectively. Additional covariance analyses were performed in the eight regions of interest to test for a potential effect of the subjects' age, using age as a covariate (regressor of interest). These analyses did not yield any significant result, even at a very lenient statistical threshold of p < 0.05 (uncorrected).
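The reported p values can be related to the correlation coefficients by converting r to a t statistic with n − 2 degrees of freedom, t = r√(n − 2)/√(1 − r²), and taking the two-tailed tail probability of Student's t distribution. The sketch below assumes n = 17, the number of subjects given in Figure 4, and a two-tailed test. It integrates the t density numerically with Simpson's rule so that only the standard library is needed. Under these assumptions it returns values close to those reported (about 0.021 for r = −0.5526). This is a consistency check, not a statement of how the authors computed their statistics.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equally long sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def t_density(t, df):
    """Probability density of Student's t distribution with df degrees of freedom."""
    log_c = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
             - 0.5 * math.log(df * math.pi))
    return math.exp(log_c) * (1 + t * t / df) ** (-(df + 1) / 2)


def two_tailed_p(r, n, steps=2000):
    """Two-tailed p value for H0: rho = 0, via t = r*sqrt(n-2)/sqrt(1-r^2)."""
    if abs(r) >= 1:
        return 0.0
    df = n - 2
    t = abs(r) * math.sqrt(df) / math.sqrt(1 - r * r)
    # Simpson's rule for the density mass on [0, t]; steps must be even.
    h = t / steps
    area = t_density(0.0, df) + t_density(t, df)
    for i in range(1, steps):
        area += (4 if i % 2 else 2) * t_density(i * h, df)
    area *= h / 3
    return 1 - 2 * area  # probability mass outside [-t, t]


# Reported correlations (insula: AID, TID; IPL: AL, TL), assuming n = 17.
for r in (-0.4823, -0.5526, -0.5569, -0.4931):
    print(r, round(two_tailed_p(r, 17), 3))
```

With 15 degrees of freedom, |r| must exceed roughly 0.48 to reach p < 0.05. This explains why the left insula correlation for AID (r = −0.4823) sits right at the significance boundary.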

Discussion

To our knowledge, this study is the first to compare directly in the same subjects the brain areas for “what” and “where” processing in two different sensory modalities. Using fMRI, we monitored the BOLD signal during identification and localization of comparable auditory and vibrotactile stimuli. We observed that areas in the right inferior frontal gyrus (IFG) and bilateral insula were more activated during the processing of stimulus identity in both audition and touch, whereas parts of the left and right inferior and superior parietal lobules (IPL and SPL) were more recruited during the processing of spatial attributes in both modalities.

Brain activation by auditory versus tactile perception

The activation maps related to the main effect of sensory modality showed the expected modality-specific activation within auditory and somatosensory cortices. The overlapping activation observed in temporal, frontal, and parietal areas depended partly on somatosensory and motor aspects of the subject's response (pressing the response button). It also depended on working memory components induced by the one-back comparison task (Arnott et al., 2005; Lehnert and Zimmer, 2008a,b). In accordance with our results, the medial frontal gyrus and the insular cortex have been considered multisensory integration centers (Senkowski et al., 2007; Rodgers et al., 2008). Deactivation of auditory cortex during tactile conditions, and of occipito-temporal cortex during auditory and tactile conditions, may reflect cross-modal inhibition of sensory inputs that suppresses potential interference, as shown in previous studies (Drzezga et al., 2005; Laurienti et al., 2002). Conversely, excitatory convergence of somatosensory and auditory inputs, as found here in subregions of auditory cortex, provides evidence for multisensory integration (Schroeder et al., 2001; Kayser et al., 2005; Caetano and Jousmäki, 2006; Hackett et al., 2007).

Brain activation by identification versus localization

We observed the expected segregation of “what” and “where” processing streams in inferior-frontal and parietal regions, respectively, when comparing identification and localization conditions. Parts of frontal cortex were also recruited by the localization tasks, and parts of parietal cortex were activated during the identification conditions, but to a much lesser extent than during identification and localization, respectively. Previous studies have demonstrated that dorsal areas of frontal cortex are involved in visual and auditory localization (Bushara et al., 1999; Martinkauppi et al., 2000), whereas activation in other regions is higher during identification tasks (Rämä, 2008). Furthermore, in the present study, part of the overlapping activation in the parietal and frontal areas may reflect general working memory components under both identification and localization conditions (Belger et al., 1998; Ricciardi et al., 2006).

“What” versus “where” modality-specific brain areas

Four distinct activation foci were identified as modality-specific during auditory “what” processing. Three of them were located in auditory areas: BA22 in the right anterior STG-STS, BA21 in the right anterior STG, and BA22 in the left anterior STG. The fourth activation focus was located in BA9, in the right IFG. In monkeys, the secondary auditory belt cortex appears to be functionally organized such that rostral areas are more specialized in sound identification, whereas caudal areas are involved in sound localization (Rauschecker and Tian, 2000; Tian et al., 2001). Some fMRI data in humans indicate a similar functional dissociation (Maeder et al., 2001; Warren and Griffiths, 2003; Arnott et al., 2004). Our results accord with these previous observations: only anterior temporal areas were specifically recruited during the auditory identification task. The functional contribution of the IFG (or ventrolateral prefrontal cortex) specifically during the nonspatial auditory task is also consistent with animal and human studies (Romanski et al., 1999; Romanski and Goldman-Rakic, 2002; Arnott et al., 2004).

Three distinct parietal activation foci were identified as modality-specific during tactile “where” processing: BA 7 in the precuneus-SPL, BA 7 in the SPL, and BA 40 in the IPL. It is worth noting that all foci were located in the left hemisphere, contralateral to the stimulated hand. In the few imaging studies that have investigated “what” and “where” segregation in the tactile domain, different activation patterns were described (Forster and Eimer, 2004; Van Boven et al., 2005), probably due to differences in experimental tasks, procedures, and stimuli (De Santis et al., 2007). As in most previous fMRI studies (Reed et al., 2005; Van Boven et al., 2005), tactile “where” processing activated mainly parietal areas.

In the present study, no tactile-specific activation specialized in “what” processing was found. In particular, we did not observe domain-specific activation in postcentral gyrus. Since stimulus attributes strongly influence identification processes, these results may apply specifically to identification of vibrotactile stimuli not involving shape processing. Using real objects or 2D shapes might have activated specific areas involved in shape processing, such as the lateral occipital complex (Amedi et al., 2001).

Although parts of the left STG corresponding to caudal belt/parabelt were activated during auditory localization (Fig. 3), none of these activations reached a level of significance in the modality-specific contrasts; most areas involved in sound localization were also activated by touch (see below).

“What” versus “where” multimodal brain areas

Four distinct regions were more involved in the processing of nonspatial than spatial attributes in both modalities: BA9 in the right IFG, BA9 in the right MFG, BA13/45 in the right insula-IFG, and BA13 in the insula bilaterally. Recruitment of the right IFG has been previously observed during identification processes in touch and in audition (Alain et al., 2001; Reed et al., 2005; Van Boven et al., 2005). The right IFG was the frontal area that showed the strongest effect of the task in both modalities in our study. The specific involvement of the insula has also been reported in some studies of auditory and/or somatosensory processing of stimulus identity (Prather et al., 2004; Zimmer et al., 2006). The insula is known as a multisensory region in which perceptual information from different senses converges (Calvert, 2001), but whose precise function is still unclear (Meyer et al., 2007). Recruitment of the insula has been reported in a variety of sensory and cognitive tasks (Augustine, 1996; Ackermann and Riecker, 2004), including audio-visual integration in communication sound processing (Remedios et al., 2009), and auditory-visual matching (Hadjikhani and Roland, 1998; Bamiou et al., 2003, 2006; Banati et al., 2000). Interestingly, a fronto-parietal network has been identified in the left hemisphere during auditory and visual nonspatial working memory (Tanabe et al., 2005).

Four distinct parietal foci were more activated during “where” than “what” processing of auditory and tactile stimuli: BA40 in the right and left IPL, BA7 in the right precuneus-SPL, and BA7 in the left SPL. In addition, a restricted zone of caudal belt/parabelt was also activated. Recruitment of SPL and precuneus has been reported in auditory and tactile studies involving spatial processing (Bushara et al., 1999; Alain et al., 2001; Reed et al., 2005). In accordance with results from hemispheric specialization and spatial working memory (D'Esposito et al., 1998; Roth and Hellige, 1998; Smith and Jonides, 1998; Jager and Postma, 2003; Wager and Smith, 2003), the present study shows that right-hemisphere regions (e.g., precuneus and IPL) display the strongest effect of localization conditions in both modalities. The IPL sustains a wide range of functions related to attention, motion processing, and spatial working memory (Stricanne et al., 1996; D'Esposito et al., 1998; Culham and Kanwisher, 2001; Ricciardi et al., 2006; Alain et al., 2008; Zimmer, 2008). Its involvement in auditory and tactile localization has been reported frequently (Weeks et al., 1999; Maeder et al., 2001; Clarke et al., 2002; Zatorre et al., 2002; Arnott et al., 2004; Reed et al., 2005; Altmann et al., 2007, 2008; Lewald et al., 2008). In our study, all areas involved in sound localization were also activated by touch, i.e., appeared to be multisensory. This confirms recent reports on the multisensory nature of the caudal belt region in rhesus monkeys (Smiley et al., 2007; Hackett et al., 2007) and underscores the importance of the postero-dorsal stream more generally in sensorimotor integration (Rauschecker and Scott, 2009).

Methodological concerns

Despite our efforts to equalize difficulty across conditions by individually adjusting the subjective intensity of the stimuli, tactile conditions appeared to be somewhat more difficult for our subjects than auditory ones. However, the covariance and correlation analyses showed no significant effect of performance on brain activity in any region except the left insula and left IPL. Activity in these regions was weakly and negatively correlated with performance in identification and localization, respectively, in both modalities. This correlation is unlikely to be attributable to task difficulty alone and is rather consistent with a specific role of the insula in stimulus identification and of the IPL in localization.

Conclusions

In addition to the confirmation of the multisensory nature of some areas, such as the insula, and the general segregation between an inferior-frontal (ventral) and a parietal (dorsal) pathway, we demonstrate here for the first time that multisensory “what” and “where” pathways exist in the brain. The convergence of the same functional attributes into one centralized representation constitutes an optimal form of brain organization for the rapid and efficient processing and binding of perceptual information from different sensory modalities.

Footnotes

This study was supported by grants from the National Institutes of Health (R01 NS052494), the Cognitive Neuroscience Initiative of the National Science Foundation (NSF) (BCS-0519127), the NSF Partnerships for International Research and Education (PIRE) program (OISE-0730255), and the Tinnitus Research Initiative to J.P.R., and grants from the Academy of Finland to S.C. (a National Center of Excellence grant and Neuro-Program) and to I.A. (Grant 123044). A.G.D.V. is a senior research associate and L.A.R. is a postdoctoral researcher at the National Fund for Scientific Research (Belgium). L.A.R. was also supported by the Belgian American Educational Foundation and the Special Fund for Research from the Université Catholique de Louvain (Belgium). We thank Mark A. Chevillet, Pawel Kusmierek, Valérie Wuyts, and Pascal Couti for their help in the creation of the experimental equipment, Amber Leaver for her advice with the statistical analyses, and Jennifer Sasaki for her help in the subject recruitment.

References

- Ackermann H, Riecker A. The contribution of the insula to motor aspects of speech production: a review and a hypothesis. Brain Lang. 2004;89:320–328. doi: 10.1016/S0093-934X(03)00347-X.

- Alain C, Arnott SR, Hevenor S, Graham S, Grady CL. “What” and “where” in the human auditory system. Proc Natl Acad Sci U S A. 2001;98:12301–12306. doi: 10.1073/pnas.211209098.

- Alain C, He Y, Grady C. The contribution of the inferior parietal lobe to auditory spatial working memory. J Cogn Neurosci. 2008;20:285–295. doi: 10.1162/jocn.2008.20014.

- Altmann CF, Bledowski C, Wibral M, Kaiser J. Processing of location and pattern changes of natural sounds in the human auditory cortex. Neuroimage. 2007;35:1192–1200. doi: 10.1016/j.neuroimage.2007.01.007.

- Altmann CF, Henning M, Döring MK, Kaiser J. Effects of feature-selective attention on auditory pattern and location processing. Neuroimage. 2008;41:69–79. doi: 10.1016/j.neuroimage.2008.02.013.

- Amedi A, Malach R, Hendler T, Peled S, Zohary E. Visuo-haptic object-related activation in the ventral visual pathway. Nat Neurosci. 2001;4:324–330. doi: 10.1038/85201.

- Amedi A, Jacobson G, Hendler T, Malach R, Zohary E. Convergence of visual and tactile shape processing in the human lateral occipital complex. Cereb Cortex. 2002;12:1202–1212. doi: 10.1093/cercor/12.11.1202.

- Anurova I, Artchakov D, Korvenoja A, Ilmoniemi RJ, Aronen HJ, Carlson S. Differences between auditory evoked responses recorded during spatial and nonspatial working memory tasks. Neuroimage. 2003;20:1181–1192. doi: 10.1016/S1053-8119(03)00353-7.

- Arnott SR, Binns MA, Grady CL, Alain C. Assessing the auditory dual-pathway model in humans. Neuroimage. 2004;22:401–408. doi: 10.1016/j.neuroimage.2004.01.014.

- Arnott SR, Grady CL, Hevenor SJ, Graham S, Alain C. The functional organization of auditory working memory as revealed by fMRI. J Cogn Neurosci. 2005;17:819–831. doi: 10.1162/0898929053747612.

- Augustine JR. Circuitry and functional aspects of the insular lobe in primates including humans. Brain Res Brain Res Rev. 1996;22:229–244. doi: 10.1016/s0165-0173(96)00011-2.

- Bamiou DE, Musiek FE, Luxon LM. The insula (Island of Reil) and its role in auditory processing. Literature review. Brain Res Brain Res Rev. 2003;42:143–154. doi: 10.1016/s0165-0173(03)00172-3.

- Bamiou DE, Musiek FE, Stow I, Stevens J, Cipolotti L, Brown MM, Luxon LM. Auditory temporal processing deficits in patients with insular stroke. Neurology. 2006;67:614–619. doi: 10.1212/01.wnl.0000230197.40410.db.

- Banati RB, Goerres GW, Tjoa C, Aggleton JP, Grasby P. The functional anatomy of visual-tactile integration in man: a study using positron emission tomography. Neuropsychologia. 2000;38:115–124. doi: 10.1016/s0028-3932(99)00074-3.

- Beauchamp MS, Lee KE, Argall BD, Martin A. Integration of auditory and visual information about objects in superior temporal sulcus. Neuron. 2004a;41:809–823. doi: 10.1016/s0896-6273(04)00070-4.

- Beauchamp MS, Argall BD, Bodurka J, Duyn JH, Martin A. Unraveling multisensory integration: patchy organization within human STS multisensory cortex. Nat Neurosci. 2004b;7:1190–1192. doi: 10.1038/nn1333.

- Belger A, Puce A, Krystal JH, Gore JC, Goldman-Rakic P, McCarthy G. Dissociation of mnemonic and perceptual processes during spatial and nonspatial working memory using fMRI. Hum Brain Mapp. 1998;6:14–32. doi: 10.1002/(SICI)1097-0193(1998)6:1<14::AID-HBM2>3.0.CO;2-O.

- Boynton GM, Engel SA, Glover GH, Heeger DJ. Linear systems analysis of functional magnetic resonance imaging in human V1. J Neurosci. 1996;16:4207–4221. doi: 10.1523/JNEUROSCI.16-13-04207.1996.

- Bremmer F, Schlack A, Shah NJ, Zafiris O, Kubischik M, Hoffmann K, Zilles K, Fink GR. Polymodal motion processing in posterior parietal and premotor cortex: a human fMRI study strongly implies equivalencies between humans and monkeys. Neuron. 2001;29:287–296. doi: 10.1016/s0896-6273(01)00198-2.

- Burton H, McLaren DG, Sinclair RJ. Reading embossed capital letters: an fMRI study in blind and sighted individuals. Hum Brain Mapp. 2006;27:325–339. doi: 10.1002/hbm.20188.

- Bushara KO, Weeks RA, Ishii K, Catalan MJ, Tian B, Rauschecker JP, Hallett M. Modality-specific frontal and parietal areas for auditory and visual spatial localization in humans. Nat Neurosci. 1999;2:759–766. doi: 10.1038/11239.

- Caetano G, Jousmäki V. Evidence of vibrotactile input to human auditory cortex. Neuroimage. 2006;29:15–28. doi: 10.1016/j.neuroimage.2005.07.023. [DOI] [PubMed] [Google Scholar]

- Calvert GA. Crossmodal processing in the human brain: insights from functional neuroimaging studies. Cereb Cortex. 2001;11:1110–1123. doi: 10.1093/cercor/11.12.1110. [DOI] [PubMed] [Google Scholar]

- Clarke S, Bellmann Thiran A, Maeder P, Adriani M, Vernet O, Regli L, Cuisenaire O, Thiran JP. What and where in human audition: selective deficits following focal hemispheric lesions. Exp Brain Res. 2002;147:8–15. doi: 10.1007/s00221-002-1203-9. [DOI] [PubMed] [Google Scholar]

- Culham JC, Kanwisher NG. Neuroimaging of cognitive functions in human parietal cortex. Curr Opin Neurobiol. 2001;11:157–163. doi: 10.1016/s0959-4388(00)00191-4. [DOI] [PubMed] [Google Scholar]

- Deibert E, Kraut M, Kremen S, Hart J., Jr Neural pathways in tactile object recognition. Neurology. 1999;52:1413–1417. doi: 10.1212/wnl.52.7.1413. [DOI] [PubMed] [Google Scholar]

- De Santis L, Spierer L, Clarke S, Murray MM. Getting in touch: segregated somatosensory what and where pathways in humans revealed by electrical neuroimaging. Neuroimage. 2007;37:890–903. doi: 10.1016/j.neuroimage.2007.05.052. [DOI] [PubMed] [Google Scholar]

- D'Esposito M, Aguirre GK, Zarahn E, Ballard D, Shin RK, Lease J. Functional MRI studies of spatial and nonspatial working memory. Brain Res Cogn Brain Res. 1998;7:1–13. doi: 10.1016/s0926-6410(98)00004-4. [DOI] [PubMed] [Google Scholar]

- Downar J, Crawley AP, Mikulis DJ, Davis KD. A multimodal cortical network for the detection of changes in the sensory environment. Nat Neurosci. 2000;3:277–283. doi: 10.1038/72991. [DOI] [PubMed] [Google Scholar]

- Drzezga A, Grimmer T, Peller M, Wermke M, Siebner H, Rauschecker JP, Schwaiger M, Kurz A. Impaired cross-modal inhibition in Alzheimer disease. PLoS Med. 2005;2:e288. doi: 10.1371/journal.pmed.0020288. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Forster B, Eimer M. The attentional selection of spatial and non-spatial attributes in touch: ERP evidence for parallel and independent processes. Biol Psychol. 2004;66:1–20. doi: 10.1016/j.biopsycho.2003.08.001. [DOI] [PubMed] [Google Scholar]

- Foxe JJ, Wylie GR, Martinez A, Schroeder CE, Javitt DC, Guilfoyle D, Ritter W, Murray MM. Auditory-somatosensory multisensory processing in auditory association cortex: an fMRI study. J Neurophysiol. 2002;88:540–543. doi: 10.1152/jn.2002.88.1.540. [DOI] [PubMed] [Google Scholar]

- Friston KJ, Frith CD, Turner R, Frackowiak RS. Characterizing evoked hemodynamics with fMRI. Neuroimage. 1995;2:157–165. doi: 10.1006/nimg.1995.1018.

- Fu KM, Johnston TA, Shah AS, Arnold L, Smiley J, Hackett TA, Garraghty PE, Schroeder CE. Auditory cortical neurons respond to somatosensory stimulation. J Neurosci. 2003;23:7510–7515. doi: 10.1523/JNEUROSCI.23-20-07510.2003.

- Golaszewski SM, Siedentopf CM, Baldauf E, Koppelstaetter F, Eisner W, Unterrainer J, Guendisch GM, Mottaghy FM, Felber SR. Functional magnetic resonance imaging of the human sensorimotor cortex using a novel vibrotactile stimulator. Neuroimage. 2002;17:421–430. doi: 10.1006/nimg.2002.1195.

- Goodale MA, Milner AD. Separate visual pathways for perception and action. Trends Neurosci. 1992;15:20–25. doi: 10.1016/0166-2236(92)90344-8.

- Hackett TA, De La Mothe LA, Ulbert I, Karmos G, Smiley J, Schroeder CE. Multisensory convergence in auditory cortex, II. Thalamocortical connections of the caudal superior temporal plane. J Comp Neurol. 2007;502:924–952. doi: 10.1002/cne.21326.

- Hadjikhani N, Roland PE. Cross-modal transfer of information between the tactile and the visual representations in the human brain: a positron emission tomographic study. J Neurosci. 1998;18:1072–1084. doi: 10.1523/JNEUROSCI.18-03-01072.1998.

- Harada T, Saito DN, Kashikura K, Sato T, Yonekura Y, Honda M, Sadato N. Asymmetrical neural substrates of tactile discrimination in humans: a functional magnetic resonance imaging study. J Neurosci. 2004;24:7524–7530. doi: 10.1523/JNEUROSCI.1395-04.2004.

- Haxby JV, Grady CL, Horwitz B, Ungerleider LG, Mishkin M, Carson RE, Herscovitch P, Schapiro MB, Rapoport SI. Dissociation of object and spatial visual processing pathways in human extrastriate cortex. Proc Natl Acad Sci U S A. 1991;88:1621–1625. doi: 10.1073/pnas.88.5.1621.

- Hyvärinen J, Poranen A. Function of parietal associative area 7 as revealed from cellular discharges in alert monkeys. Brain. 1974;97:673–692. doi: 10.1093/brain/97.1.673.

- Hyvärinen J, Carlson S, Hyvärinen L. Early visual deprivation alters modality of neuronal responses in area 19 of monkey cortex. Neurosci Lett. 1981;26:239–243. doi: 10.1016/0304-3940(81)90139-7.

- Jager G, Postma A. On the hemispheric specialization for categorical and coordinate spatial relations: a review of the current evidence. Neuropsychologia. 2003;41:504–515. doi: 10.1016/s0028-3932(02)00086-6.

- Kayser C, Petkov CI, Augath M, Logothetis NK. Integration of touch and sound in auditory cortex. Neuron. 2005;48:373–384. doi: 10.1016/j.neuron.2005.09.018.

- Kayser C, Petkov CI, Augath M, Logothetis NK. Functional imaging reveals visual modulation of specific fields in auditory cortex. J Neurosci. 2007;27:1824–1835. doi: 10.1523/JNEUROSCI.4737-06.2007.

- Kayser C, Petkov CI, Logothetis NK. Visual modulation of neurons in auditory cortex. Cereb Cortex. 2008;18:1560–1574. doi: 10.1093/cercor/bhm187.

- Kitada R, Kito T, Saito DN, Kochiyama T, Matsumura M, Sadato N, Lederman SJ. Multisensory activation of the intraparietal area when classifying grating orientation: a functional magnetic resonance imaging study. J Neurosci. 2006;26:7491–7501. doi: 10.1523/JNEUROSCI.0822-06.2006.

- Lancaster JL, Woldorff MG, Parsons LM, Liotti M, Freitas CS, Rainey L, Kochunov PV, Nickerson D, Mikiten SA, Fox PT. Automated Talairach Atlas labels for functional brain mapping. Hum Brain Mapp. 2000;10:120–131. doi: 10.1002/1097-0193(200007)10:3<120::AID-HBM30>3.0.CO;2-8.

- Laurienti PJ, Burdette JH, Wallace MT, Yen YF, Field AS, Stein BE. Deactivation of sensory-specific cortex by cross-modal stimuli. J Cogn Neurosci. 2002;14:420–429. doi: 10.1162/089892902317361930.

- Lehnert G, Zimmer HD. Common coding of auditory and visual spatial information in working memory. Brain Res. 2008a;1230:158–167. doi: 10.1016/j.brainres.2008.07.005.

- Lehnert G, Zimmer HD. Modality and domain specific components in auditory and visual working memory tasks. Cogn Process. 2008b;9:53–61. doi: 10.1007/s10339-007-0187-6.

- Lewald J, Riederer KA, Lentz T, Meister IG. Processing of sound location in human cortex. Eur J Neurosci. 2008;27:1261–1270. doi: 10.1111/j.1460-9568.2008.06094.x.

- Maeder PP, Meuli RA, Adriani M, Bellmann A, Fornari E, Thiran JP, Pittet A, Clarke S. Distinct pathways involved in sound recognition and localization: a human fMRI study. Neuroimage. 2001;14:802–816. doi: 10.1006/nimg.2001.0888.

- Martinkauppi S, Rämä P, Aronen HJ, Korvenoja A, Carlson S. Working memory of auditory localization. Cereb Cortex. 2000;10:889–898. doi: 10.1093/cercor/10.9.889.

- Merabet L, Thut G, Murray B, Andrews J, Hsiao S, Pascual-Leone A. Feeling by sight or seeing by touch? Neuron. 2004;42:173–179. doi: 10.1016/s0896-6273(04)00147-3.

- Meyer M, Baumann S, Marchina S, Jancke L. Hemodynamic responses in human multisensory and auditory association cortex to purely visual stimulation. BMC Neurosci. 2007;8:14. doi: 10.1186/1471-2202-8-14.

- Noesselt T, Rieger JW, Schoenfeld MA, Kanowski M, Hinrichs H, Heinze HJ, Driver J. Audiovisual temporal correspondence modulates human multisensory superior temporal sulcus plus primary sensory cortices. J Neurosci. 2007;27:11431–11441. doi: 10.1523/JNEUROSCI.2252-07.2007.

- Pascual-Leone A, Hamilton R. The metamodal organization of the brain. Prog Brain Res. 2001;134:427–445. doi: 10.1016/s0079-6123(01)34028-1.

- Peltier S, Stilla R, Mariola E, LaConte S, Hu X, Sathian K. Activity and effective connectivity of parietal and occipital cortical regions during haptic shape perception. Neuropsychologia. 2007;45:476–483. doi: 10.1016/j.neuropsychologia.2006.03.003.

- Pietrini P, Furey ML, Ricciardi E, Gobbini MI, Wu WH, Cohen L, Guazzelli M, Haxby JV. Beyond sensory images: object-based representation in the human ventral pathway. Proc Natl Acad Sci U S A. 2004;101:5658–5663. doi: 10.1073/pnas.0400707101.

- Poremba A, Saunders RC, Crane AM, Cook M, Sokoloff L, Mishkin M. Functional mapping of the primate auditory system. Science. 2003;299:568–572. doi: 10.1126/science.1078900.

- Prather SC, Votaw JR, Sathian K. Task-specific recruitment of dorsal and ventral visual areas during tactile perception. Neuropsychologia. 2004;42:1079–1087. doi: 10.1016/j.neuropsychologia.2003.12.013.

- Rämä P. Domain-dependent activation during spatial and nonspatial auditory working memory. Cogn Process. 2008;9:29–34. doi: 10.1007/s10339-007-0182-y.

- Rauschecker JP. Processing of complex sounds in the auditory cortex of cat, monkey, and man. Acta Otolaryngol Suppl. 1997;532:34–38. doi: 10.3109/00016489709126142.

- Rauschecker JP. Parallel processing in the auditory cortex of primates. Audiol Neurootol. 1998a;3:86–103. doi: 10.1159/000013784.

- Rauschecker JP. Cortical processing of complex sounds. Curr Opin Neurobiol. 1998b;8:516–521. doi: 10.1016/s0959-4388(98)80040-8.

- Rauschecker JP, Scott SK. Maps and streams in the auditory cortex: nonhuman primates illuminate human speech processing. Nat Neurosci. 2009;12:718–724. doi: 10.1038/nn.2331.

- Rauschecker JP, Tian B. Mechanisms and streams for processing of “what” and “where” in auditory cortex. Proc Natl Acad Sci U S A. 2000;97:11800–11806. doi: 10.1073/pnas.97.22.11800.

- Rauschecker JP, Tian B, Pons T, Mishkin M. Serial and parallel processing in rhesus monkey auditory cortex. J Comp Neurol. 1997;382:89–103.

- Reed CL, Klatzky RL, Halgren E. What vs where in touch: an fMRI study. Neuroimage. 2005;25:718–726. doi: 10.1016/j.neuroimage.2004.11.044.

- Remedios R, Logothetis NK, Kayser C. An auditory region in the primate insular cortex responding preferentially to vocal communication sounds. J Neurosci. 2009;29:1034–1045. doi: 10.1523/JNEUROSCI.4089-08.2009.

- Renier L, Collignon O, Poirier C, Tranduy D, Vanlierde A, Bol A, Veraart C, De Volder AG. Cross-modal activation of visual cortex during depth perception using auditory substitution of vision. Neuroimage. 2005;26:573–580. doi: 10.1016/j.neuroimage.2005.01.047.

- Ricciardi E, Bonino D, Gentili C, Sani L, Pietrini P, Vecchi T. Neural correlates of spatial working memory in humans: a functional magnetic resonance imaging study comparing visual and tactile processes. Neuroscience. 2006;139:339–349. doi: 10.1016/j.neuroscience.2005.08.045.

- Rodgers KM, Benison AM, Klein A, Barth DS. Auditory, somatosensory, and multisensory insular cortex in the rat. Cereb Cortex. 2008;18:2941–2951. doi: 10.1093/cercor/bhn054.

- Romanski LM, Goldman-Rakic PS. An auditory domain in primate prefrontal cortex. Nat Neurosci. 2002;5:15–16. doi: 10.1038/nn781.

- Romanski LM, Tian B, Fritz J, Mishkin M, Goldman-Rakic PS, Rauschecker JP. Dual streams of auditory afferents target multiple domains in the primate prefrontal cortex. Nat Neurosci. 1999;2:1131–1136. doi: 10.1038/16056.

- Roth EC, Hellige JB. Spatial processing and hemispheric asymmetry. Contributions of the transient/magnocellular visual system. J Cogn Neurosci. 1998;10:472–484. doi: 10.1162/089892998562889.

- Sathian K. Visual cortical activity during tactile perception in the sighted and the visually deprived. Dev Psychobiol. 2005;46:279–286. doi: 10.1002/dev.20056.

- Schroeder CE, Foxe J. Multisensory contributions to low-level, ‘unisensory’ processing. Curr Opin Neurobiol. 2005;15:454–458. doi: 10.1016/j.conb.2005.06.008.

- Schroeder CE, Lindsley RW, Specht C, Marcovici A, Smiley JF, Javitt DC. Somatosensory input to auditory association cortex in the macaque monkey. J Neurophysiol. 2001;85:1322–1327. doi: 10.1152/jn.2001.85.3.1322.

- Senkowski D, Saint-Amour D, Kelly SP, Foxe JJ. Multisensory processing of naturalistic objects in motion: a high-density electrical mapping and source estimation study. Neuroimage. 2007;36:877–888. doi: 10.1016/j.neuroimage.2007.01.053.

- Smiley JF, Hackett TA, Ulbert I, Karmas G, Lakatos P, Javitt DC, Schroeder CE. Multisensory convergence in auditory cortex. I. Cortical connections of the caudal superior temporal plane in macaque monkeys. J Comp Neurol. 2007;502:894–923. doi: 10.1002/cne.21325.

- Smith EE, Jonides J. Neuroimaging analyses of human working memory. Proc Natl Acad Sci U S A. 1998;95:12061–12068. doi: 10.1073/pnas.95.20.12061.

- Stoesz MR, Zhang M, Weisser VD, Prather SC, Mao H, Sathian K. Neural networks active during tactile form perception: common and differential activity during macrospatial and microspatial tasks. Int J Psychophysiol. 2003;50:41–49. doi: 10.1016/s0167-8760(03)00123-5.

- Stricanne B, Andersen RA, Mazzoni P. Eye-centered, head-centered, and intermediate coding of remembered sound locations in area LIP. J Neurophysiol. 1996;76:2071–2076. doi: 10.1152/jn.1996.76.3.2071.

- Talairach J, Tournoux P. Co-planar stereotaxic atlas of the human brain. New York: Thieme; 1988.

- Tanabe HC, Honda M, Sadato N. Functionally segregated neural substrates for arbitrary audiovisual paired-association learning. J Neurosci. 2005;25:6409–6418. doi: 10.1523/JNEUROSCI.0636-05.2005.

- Tian B, Reser D, Durham A, Kustov A, Rauschecker JP. Functional specialization in rhesus monkey auditory cortex. Science. 2001;292:290–293. doi: 10.1126/science.1058911.

- Ungerleider LG, Mishkin M. Two cortical visual systems. In: Ingle DJ, Goodale MA, Mansfield RJW, editors. Analysis of visual behavior. Cambridge, MA: MIT Press; 1982. pp. 549–586.

- van Atteveldt N, Formisano E, Goebel R, Blomert L. Integration of letters and speech sounds in the human brain. Neuron. 2004;43:271–282. doi: 10.1016/j.neuron.2004.06.025.

- van Atteveldt NM, Formisano E, Goebel R, Blomert L. Top-down task effects overrule automatic multisensory responses to letter-sound pairs in auditory association cortex. Neuroimage. 2007;36:1345–1360. doi: 10.1016/j.neuroimage.2007.03.065.

- Van Boven RW, Ingeholm JE, Beauchamp MS, Bikle PC, Ungerleider LG. Tactile form and location processing in the human brain. Proc Natl Acad Sci U S A. 2005;102:12601–12605. doi: 10.1073/pnas.0505907102.

- Wager TD, Smith EE. Neuroimaging studies of working memory: a meta-analysis. Cogn Affect Behav Neurosci. 2003;3:255–274. doi: 10.3758/cabn.3.4.255.

- Wallace MT, Ramachandran R, Stein BE. A revised view of sensory cortical parcellation. Proc Natl Acad Sci U S A. 2004;101:2167–2172. doi: 10.1073/pnas.0305697101.

- Warren JD, Griffiths TD. Distinct mechanisms for processing spatial sequences and pitch sequences in the human auditory brain. J Neurosci. 2003;23:5799–5804. doi: 10.1523/JNEUROSCI.23-13-05799.2003.

- Weaver KE, Stevens AA. Attention and sensory interactions within the occipital cortex in the early blind: an fMRI study. J Cogn Neurosci. 2007;19:315–330. doi: 10.1162/jocn.2007.19.2.315.

- Weeks R, Horwitz B, Aziz-Sultan A, Tian B, Wessinger CM, Cohen LG, Hallett M, Rauschecker JP. A positron emission tomographic study of auditory localization in the congenitally blind. J Neurosci. 2000;20:2664–2672. doi: 10.1523/JNEUROSCI.20-07-02664.2000.

- Weeks RA, Aziz-Sultan A, Bushara KO, Tian B, Wessinger CM, Dang N, Rauschecker JP, Hallett M. A PET study of human auditory spatial processing. Neurosci Lett. 1999;262:155–158. doi: 10.1016/s0304-3940(99)00062-2.

- Wright TM, Pelphrey KA, Allison T, McKeown MJ, McCarthy G. Polysensory interactions along lateral temporal regions evoked by audiovisual speech. Cereb Cortex. 2003;13:1034–1043. doi: 10.1093/cercor/13.10.1034.

- Zangaladze A, Epstein CM, Grafton ST, Sathian K. Involvement of visual cortex in tactile discrimination of orientation. Nature. 1999;401:587–590. doi: 10.1038/44139.