Abstract

Do the target-distractor and distractor-distractor similarity relationships known to exist for simple stimuli extend to real-world objects, and are these effects expressed in search guidance or target verification? Parts of photorealistic distractors were replaced with target parts to create four levels of target-distractor similarity under heterogenous and homogenous conditions. We found that increasing target-distractor similarity and decreasing distractor-distractor similarity impaired search guidance and target verification, but that target-distractor similarity and heterogeneity/homogeneity interacted only in measures of guidance; distractor homogeneity lessens effects of target-distractor similarity by causing gaze to fixate the target sooner, not by speeding target detection following its fixation.

Keywords: Visual search, eye movements, visual similarity, object parts, target-distractor similarity, distractor homogeneity

1. Introduction

Similarity is a core construct in human perception and cognition. The similarity relationships between objects have been shown to affect behavior in many tasks: change detection (e.g., Zelinsky, 2003), recognition (e.g., Ashby & Perrin, 1988; Edelman, 1998), categorization (e.g., Medin, Goldstone & Gentner, 1993; Oliva & Torralba, 2001), analogical reasoning (e.g., Blanchette & Dunbar, 2000), and many others (Medin et al., 1993; Tversky, 1977). Although similarity does not impact each of these tasks in exactly the same way or to the same degree, it is possible to characterize its influence in terms of a general rule of thumb: as the similarity between task-relevant and task-irrelevant objects increases, people are more likely to confuse the two.

Similarity relationships also profoundly affect performance in visual search tasks. Manual search times in tasks with target-similar distractors are longer than for tasks with target-dissimilar distractors (e.g., Duncan & Humphreys, 1989), regardless of whether the task is a feature or conjunction search (Phillips, Takeda, & Kumada, 2006). Search performance is also affected by how similar distractors are to other distractors (Duncan & Humphreys, 1989), how similar targets are to a search background (Neider & Zelinsky, 2006a), and even how similar targets are to the targets used on different trials (Smith, Redford, Gent & Washburn, 2005).

Visual similarity relationships also serve as the foundation of many search theories. Feature Integration Theory (FIT; Treisman & Gelade, 1980) predicts the preattentive detection of targets when they are maximally dissimilar from distractors on at least one feature dimension. Duncan and Humphreys’ (1989) theory suggests a more nuanced effect of similarity, one that is expressed along a continuum (see Treisman, 1991, and Duncan & Humphreys, 1992, for further debate on this point). Guided Search Theory (Wolfe, 1994; Wolfe, Cave, & Franzel, 1989) distinguishes between bottom-up and top-down effects of similarity; bottom-up guidance depends on the degree of feature similarity between an object and its neighbors in a search display, and the efficiency of top-down guidance depends on the similarity between the search items and the target. Signal Detection Theory (Green & Swets, 1966) and Ideal Observer Theory (e.g., Geisler, 1989; Najemnik & Geisler, 2005) have also been applied to search, and similarity again plays a central role in these accounts; as targets and distractors become more similar, the likelihood of confusing one for the other increases (e.g., Palmer, Verghese, & Pavel, 2000; see Verghese, 2001, for a review).

All of the above-described work quantified effects of similarity on search using relatively simple stimuli, but the recent trend has been to extend characterizations of search to more naturalistic contexts, usually arrays of common objects (e.g., Castelhano, Pollatsek, and Cave, 2008; Schmidt & Zelinsky, 2009; Yang, Chen, & Zelinsky, 2009) and computer-generated or fully realistic scenes (e.g., Brockmole & Henderson, 2006; Bravo & Farid, 2009; Eckstein, Drescher, & Shimozaki, 2006; Foulsham & Underwood, 2011; Neider & Zelinsky, 2008; Oliva, Wolfe, & Arsenio, 2004). This movement towards more naturalistic search contexts has also fueled the development of image-based search theories, capable of representing search patterns of arbitrary visual complexity (e.g., Kanan, Tong, Zhang, & Cottrell, 2009; Torralba, Oliva, Castelhano, & Henderson, 2006; Pomplun, Reingold, & Shen, 2003; Rao, Zelinsky, Hayhoe, & Ballard, 2002; Zelinsky, 2008). These theories, although different from their non-image-based counterparts (e.g., Wolfe, 1994) in numerous respects, are nevertheless similar in that they too are driven largely by similarity relationships. For example, the Target Acquisition Model (TAM; Zelinsky, 2008) attributed variability in the number of eye movements needed to acquire a search target to differences in similarity between that target and the other objects in a scene (Experiment 1) or the distractors in a search array (Experiment 2). The problem lies, however, in that these similarity assumptions were never confirmed through independent behavioral testing. It may be the case that variability in search behavior indeed is due to differences in target-distractor similarity as suggested by TAM, but until such relationships are actually verified such conclusions must remain speculative.

As the visual search literature completes its movement from simple to more complex contexts, it is important to demonstrate that the key relationships upon which the search literature was built actually generalize to realistic objects and scenes. Similarity relationships are a perfect case in point. At least two factors prevent one from assuming that the similarity relationships established between simple patterns will also hold true for more realistic objects. First, realistic objects and scenes are semantically rich, and this semantic structure profoundly affects search (Henderson, Weeks & Hollingworth, 1999). Moores, Laiti and Chelazzi (2003) found that initial saccades are more likely to land on items with semantic associations than on control items (see also Eckstein et al., 2006). Neider and Zelinsky (2006b) found that observers searching a desert scene for a car tend to look initially down to the ground, while observers searching the same scene for a blimp tend to look initially up to the sky. Complex objects cast a web of associations that simply do not exist for simple objects, and these associations qualitatively change the similarity relationships between a target and the elements of a scene, as well as between the scene elements themselves (see Zelinsky & Schmidt, 2009, for additional discussion of this topic). Second, the similarity relationships established for complex objects and simple objects may differ simply because these objects reside in different feature spaces. Complex objects likely exist in very high-dimensional feature spaces; simple objects, by definition, do not. These differing degrees of feature complexity may impact the similarity relationships between objects. If two complex objects, each composed of 1000 features, differ on only one feature dimension, one might rightly consider these objects to be quite similar. 
However, if two green bars have very different orientations, one might rate these bars as quite dissimilar even though they also differ by only a single feature. Relatedly, the number of ways that a dog can be similar, and dissimilar, to a duck is large and probably innumerable; the number of ways that a green bar can be similar to a red bar can probably be counted on the fingers of one hand. These differing opportunities for the expression of similarity mean that the relationships established between simple objects cannot be simply assumed to exist for complex objects; these relationships have to be demonstrated anew (Alexander & Zelinsky, 2011).

The methods used to manipulate similarity between simple patterns also cannot be ported in a straightforward way to realistic objects. Manipulations have traditionally varied the similarity distance between two patterns in terms of only one feature dimension (e.g., hue, as in D’Zmura, 1991), holding constant all of the others. Extending this experimental logic to complex objects, however, may not be appropriate (but see Ling, Pietta, & Hulbert, 2009). Even in the context of simple patterns, evidence exists that feature conjunctions can influence similarity relationships independently of their constituent features (Takeda, Phillips & Kumada, 2007). This influence is probably even more pronounced for complex objects, where changing one feature (e.g., shape) might affect dozens more (texture, size, shading, brightness, etc.), resulting in unpredictable changes to the similarity relationships. Independent manipulations of a feature might also change the representation of a complex object in unintended ways, perhaps distorting it from a canonical form or even changing its semantic meaning. If a banana becomes too square, it may stop being a banana. Given that features of complex objects may combine and interact in ways that we can scarcely fathom, the manipulation of individual features is probably ill-advised.

If one does not wish to tinker with the features of complex objects, how else might similarity relationships be manipulated? We propose transplanting target features to distractors on a part-by-part and pixel-by-pixel basis. We believe that a part-based similarity manipulation is preferable to a feature-based similarity manipulation for several reasons. By transplanting the parts of one object to another, we also allow some (but not all) of the higher-order combinations of target features to be duplicated in the distractors. Given that the similarity relationships mediated by higher-order features might differ from those mediated by basic features, even a partial transference of feature combinations is desirable. So long as targets and distractors are from the same semantic category, and the transplanted parts replace conceptually equivalent parts, a part-based similarity manipulation should also avoid the creation of conceptual oddities. For example, a lamp might be fitted with any number of lampshades without altering the object’s basic category. In the present study we introduce a part-transplantation method using teddy bear images as targets and distractors. Teddy bears have clear part boundaries (head, arms, legs, and torso), making these objects well-suited to our method; the similarity between a target and distractor can be manipulated in terms of the number of target parts moved to the distractor objects. As the number of parts shared by a target and distractor increases, so does our expectation of similarity between these objects. This method therefore enables a meaningful manipulation of similarity between multi-dimensional and featurally complex objects, while avoiding the problems associated with manipulating, identifying, enumerating, or otherwise specifying the actual features or feature combinations involved in the similarity manipulation.

A related part-based similarity manipulation was used by Wolfe and Bennett (1996) in the context of a search task, albeit to address a fundamentally different question. Their goal was to determine whether subjects could search efficiently for a target having a part that was not present on any of the distractors. They found that preattentive search was not possible based on a unique target part, suggesting that parts are integrated into the holistic representation of an object for the purpose of search. Despite the use of a similar part manipulation paradigm, the present study differs from the Wolfe and Bennett study in several key respects. We used photorealistic objects as stimuli, not line drawings. Our similarity manipulation allowed the target and distractors to share the same semantic category; in the Wolfe and Bennett study the target was a “chicken” and the distractors were “not chickens”. And lastly, the goal of the Wolfe and Bennett study was to determine whether the shape of a part can be used as a preattentive feature; our goals are: to determine whether the relationships between similarity and search reported using simple stimuli extend to realistic objects, to assess whether a part-based similarity manipulation is effective in revealing these relationships, and to quantify the effects of these relationships on search guidance to a target and on target verification.

Given our focus on search guidance in this study, we will rely on eye movement measures rather than manual reaction time (RT) measures of search efficiency. Manual RT measures are useful in indicating the overall difficulty of a search task, but they lack the resolution needed to tease apart key components of the search process. For example, it is ambiguous from an 800 ms RT whether the search process required two 400 ms fixations or four 200 ms fixations (Zelinsky & Sheinberg, 1997). The former case indicates a search process that is strongly guided to the target but requires a fairly long time to verify the object following each eye movement; the latter case indicates a comparatively poor search guidance process that requires only half the time to recognize an object as a target or distractor. This distinction is particularly important in the current context, as similarity effects might be expressed as changes in search guidance, object verification time, or both. Previous investigations that have used eye movements to study similarity effects on search have not addressed this question. Ludwig and Gilchrist (2002, 2003) demonstrated that observers were more likely to fixate a target-similar irrelevant distractor than a target-dissimilar one, but their paradigm had distractors abruptly onsetting. Using a more traditional search task, Becker, Ansorge, and Horstmann (2009) found that target-similar distractors were fixated more frequently and for longer durations, but their stimuli were simple patterns that either shared the target’s color or did not. To our knowledge, ours is the first study to ask how part-based visual similarity relationships between realistic objects affect the guidance of gaze to search targets.

2. Experiment 1

If the similarity between realistic objects affects search in the same way as the similarity between simple patterns, then previous work suggests that increasing target-distractor (T-D) similarity should result in longer RTs. The cause of this predicted increase in RT depends on whether T-D similarity affects search guidance or verification. If the similarity between targets and distractors affects guidance, increasing similarity should result in fewer trials in which the target is the first object fixated and longer times to the first fixation on the target. However, if T-D similarity effects are expressed primarily in verification times, we would expect small and unreliable differences in these guidance measures, but significantly longer times between the first fixation on the target and the manual response. Moreover, we expect that our part-based similarity manipulation will be able to reveal the limits of any relationship between T-D similarity and search performance; as the number of parts shared by the targets and distractors increases, observers may become unable to discriminate between the two types of objects, resulting in an increase in false positive errors. The potential therefore exists to better understand how T-D similarity affects search, in addition to demonstrating that similarity effects extend to realistic objects and that our part-based manipulation is useful in revealing these similarity effects.

2.1. Methods

2.1.1. Participants

Sixteen observers (mean age of 20.5 years) from Stony Brook University participated in exchange for course credit. All had normal or corrected-to-normal vision and normal color vision, by self-report.

2.1.2. Stimuli and apparatus

Images of teddy bear objects were obtained from Cockrill (2001; as described in Yang & Zelinsky, 2009), the Vermont Teddy Bear website (http://shop.vermontteddybear.com, downloaded 2/19/08), and the Hemera® Photo-objects collection, and all were resized to subtend a visual angle of approximately 2.8°. For each image the head, torso, arms, and legs of the teddy bear were segmented manually at part boundaries using Adobe Photoshop CS3. Pairs of arms and legs were treated as one “part” in order to maintain object symmetry across the part manipulations. All manipulations were substitutions between corresponding parts; one or more parts of the target bear replaced the corresponding parts on a distractor bear. For example, to create a head substitution, the head of a teddy bear distractor would be replaced by the head from the target teddy bear. No distractor or target appeared more than once, so as to avoid any repetitions that might influence search behavior.

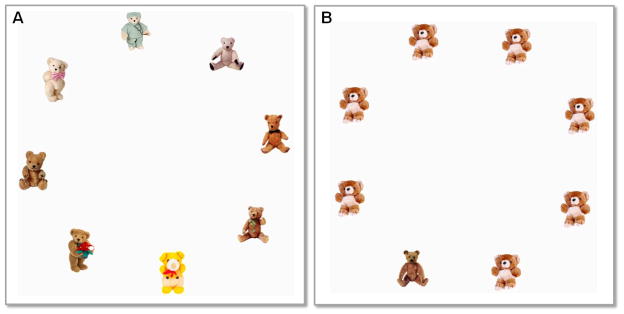

Four levels of T-D similarity were used, each describing the number of target parts that were transplanted to a distractor (Figure 1). In the 0-part condition, the distractor bears had no parts matched to the target. This condition served as a baseline with respect to our part-based similarity manipulation; the properties shared by the target and distractors were those that naturally exist between images of teddy bears. In the 1-part condition, one part of the target bear -- either the head, torso, arms (as a pair), or legs (as a pair) -- was transplanted to each distractor. In the 2-part condition, two target parts were transplanted to each distractor. These parts were chosen from six allowable combinations of parts: head and torso, head and arms, head and legs, torso and arms, torso and legs, and arms and legs. In the 3-part condition, three parts from the target were transplanted onto the distractors, leaving distractors with only one part unmatched to the target. On a given trial, each distractor was drawn from the same T-D similarity condition, and each individual target part (e.g., the target’s head) appeared equally often (as much as possible). For example, a 1-part target-absent trial would have two distractors with the same head as the target, two with the same arms as the target, two with the same legs as the target, and two with the same torso as the target. The target parts transplanted onto the distractors were counterbalanced across trials for each T-D similarity condition, and each observer participated in 30 trials at each of the four levels of the T-D similarity manipulation.
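The part combinations available at each similarity level can be enumerated directly. The following is a minimal sketch, not the authors' stimulus-generation code; the names `PARTS`, `transplant_options`, and `options_by_level` are hypothetical and introduced only for illustration.

```python
from itertools import combinations

# The four segmented parts; each pair of arms or legs counts as one part.
PARTS = ("head", "torso", "arms", "legs")

def transplant_options(n_parts):
    """Return every set of n_parts target parts that could be moved to a distractor."""
    return list(combinations(PARTS, n_parts))

# Similarity levels 0 through 3 (number of target parts on each distractor).
options_by_level = {n: transplant_options(n) for n in range(4)}

for level, options in options_by_level.items():
    print(f"{level}-part condition: {len(options)} possible part set(s)")
    for option in options:
        print("   ", " and ".join(option) if option else "(no parts transplanted)")
```

The 2-part level yields the six pairings listed in the text, and the 1-part and 3-part levels each yield four options, which is what makes it possible to counterbalance the transplanted parts across trials.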

Figure 1.

Examples of target and distractor teddy bears from each T-D similarity condition. 0-part, 1-part, 2-part, and 3-part refer to the number of parts from a distractor that were matched to the target.

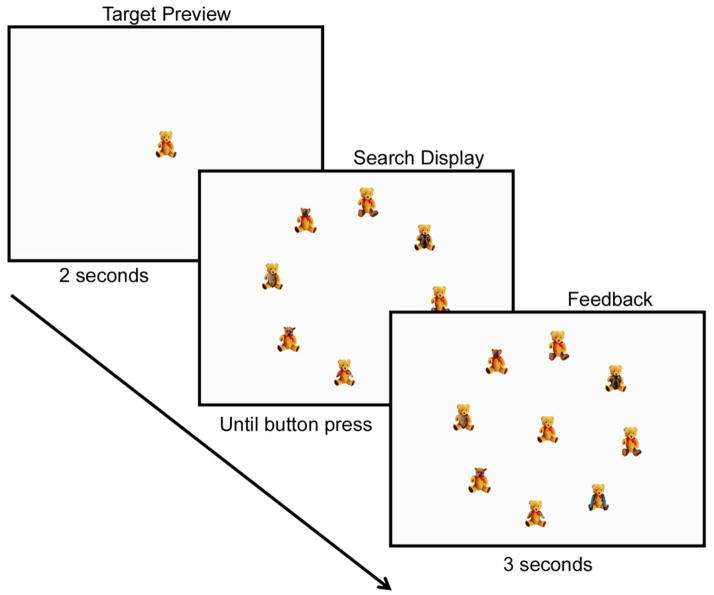

Figure 2A shows a representative search display. Each display consisted of either eight distractors (target absent) or seven distractors and a target (target present). Objects in the search display were positioned on an imaginary circle having a radius of 6.7° relative to central fixation, and neighboring objects were separated by approximately 5.4° (center-to-center distance). The positions of objects in each display were counterbalanced across observers so that any observed differences in guidance between the T-D similarity conditions could not be due to differences in object locations. Eye position was sampled at 500 Hz using an SR Research EyeLink® II eye tracking system. SR Research Experiment Builder (version 1.5.201) was used to control the experiment. Observers indicated their target present and absent judgments by pressing either the left or right index finger triggers, respectively, of a GamePad controller. Stimuli were displayed on a flat-screen ViewSonic P95f+ monitor using a Radeon X600 series video card at a resolution of 1024 × 768 pixels and a refresh rate of 85 Hz. A chin rest was used to fix head position and viewing distance at 72 cm from the screen, resulting in the display subtending 28° × 21°.
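For readers who wish to reconstruct a comparable display, the reported geometry can be converted to pixel coordinates. This is only a sketch under stated assumptions: it assumes the eight objects are evenly spaced around the circle, that pixels are square, and that the first object sits at an arbitrary starting angle, none of which the text specifies. The function names `deg_to_px` and `object_positions` are hypothetical.

```python
import math

# Values reported in the text.
RADIUS_DEG = 6.7          # eccentricity of each object from central fixation
N_OBJECTS = 8             # objects per search display
VIEW_DIST_CM = 72.0       # viewing distance
SCREEN_PX = (1024, 768)   # display resolution
SCREEN_W_DEG = 28.0       # horizontal extent of the display

def deg_to_px(ecc_deg):
    """Convert an eccentricity from screen center (degrees) to pixels."""
    # Physical screen width implied by the viewing distance and visual angle.
    screen_w_cm = 2 * VIEW_DIST_CM * math.tan(math.radians(SCREEN_W_DEG / 2))
    px_per_cm = SCREEN_PX[0] / screen_w_cm   # assumes square pixels
    return VIEW_DIST_CM * math.tan(math.radians(ecc_deg)) * px_per_cm

def object_positions(start_angle_deg=0.0):
    """Return (x, y) pixel centers for objects on the imaginary circle.

    Even angular spacing and the starting angle are assumptions.
    """
    cx, cy = SCREEN_PX[0] / 2, SCREEN_PX[1] / 2
    r_px = deg_to_px(RADIUS_DEG)
    step = 360.0 / N_OBJECTS
    positions = []
    for i in range(N_OBJECTS):
        theta = math.radians(start_angle_deg + i * step)
        # Screen y grows downward, so subtract the sine term.
        positions.append((cx + r_px * math.cos(theta), cy - r_px * math.sin(theta)))
    return positions

positions = object_positions()
for x, y in positions:
    print(f"({x:.0f}, {y:.0f})")
```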

Figure 2.

Representative examples of 0-part similarity search displays in (A) Experiment 1 (heterogenous distractors) and (B) Experiment 2 (homogenous distractors).

2.1.3. Design and procedure

The experimental design included four levels of T-D similarity (0-part, 1-part, 2-part, or 3-part), and present and absent search trials. T-D similarity (and of course target presence) was a within-subjects variable and was randomly interleaved so as to discourage observers from adopting different search strategies depending on the specific level of similarity.

Figure 3 illustrates the general procedure. The experiment began with a 9-point calibration routine used to map eye position to screen coordinates. Calibrations were not accepted until the average error was less than .4° and the maximum error was less than .9°. There were eight practice trials, each illustrating a different within-subjects condition, followed by 120 experimental trials. Each trial began with a 2-second pictorial preview of the target bear. The preview was centrally located and subtended approximately 2.8°, the same size as objects in the search arrays. The target preview was followed immediately by a search array. The search array remained visible until the manual button press judgment. Observers were instructed to search for the designated teddy bear target among the other images of teddy bears, and to make their target present or absent response as quickly and as accurately as possible. The instructions did not mention the similarity manipulation between the target and distractor bears, or that the parts of the bears might be relevant to the search judgment. Accuracy feedback was provided on each trial. For correct decisions, the word “correct” was displayed immediately upon offset of the search array. For incorrect decisions on target-present trials, the search array would remain visible for 3 seconds with a circle drawn around the target. For incorrect decisions on target-absent trials, the array of distractor bears would again remain for 3 seconds, and the target bear would be displayed at the center of the display (Figure 3). These measures were adopted so that observers could better compare the target to the distractors and examine the differences between them.

Figure 3.

The procedure for a representative 3-part target absent trial in Experiment 1.

2.1.4. Similarity Rating Task

Given that the targets and distractors in the search task were all teddy bears, and teddy bears as a class of objects tend to be self-similar, it is unclear whether the part-transplantation method would create distractors that are perceptually more similar to the target than the unaltered distractors. It is also possible that the act of transplanting a part to a distractor may make that object holistically less similar to the target than another bear distractor, despite the transplanted part belonging to the target. To directly assess the perceptibility of our similarity manipulation we asked seven observers, none of whom participated in the search experiment, to explicitly rate the visual similarity between each search target and the distractors that accompanied that target in the search task. We did this using the actual displays from the search experiment, only with the target appearing at the display’s center (as in the accuracy feedback displays from the target-absent search condition; see Figure 3). Similarity ratings were based on a 1–9 scale, with 1 indicating a teddy bear that looked nothing like the target and 9 indicating perfect target similarity (i.e., the actual target bear). Observers provided eight visual similarity ratings per trial, one for each of the eight objects. The rating task was self-paced, with the entire task requiring approximately one hour.

2.2. Results

2.2.1. Visual Similarity Ratings

Visual similarity estimates from the rating task averaged 2.22 (SE = .44) in the 0-parts matched condition, 4.14 (SE = .41) in the 1-part matched condition, 5.14 (SE = .30) in the 2-parts matched condition, and 6.20 (SE = .26) in the 3-parts matched condition, F(3,18) = 64.31, p < .001. This confirms that our part-based similarity manipulation was effective in producing the desired changes in perceptual similarity between targets and distractors. Moreover, these estimates were highly linear over the range of our manipulation, with linearity accounting for 96% of the variability in the estimates. This suggests that our part transplantation manipulation was parametric, with the addition of each transplanted part creating a relatively constant increase in perceived mean similarity. All results to follow will pertain only to the search task.
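The degree of linearity in these ratings can be illustrated with a least-squares fit to the four condition means reported above. This is a sketch only: the text does not describe how the 96% figure was computed, so a fit to the means (rather than, say, a trend analysis on per-observer data) is an assumption and need not reproduce that value exactly. The function name `linear_fit` is hypothetical.

```python
def linear_fit(xs, ys):
    """Return (slope, intercept, r_squared) for a simple least-squares line."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    r_squared = sxy ** 2 / (sxx * syy)   # proportion of variance explained
    return slope, intercept, r_squared

parts_matched = [0, 1, 2, 3]
mean_rating = [2.22, 4.14, 5.14, 6.20]   # condition means from the rating task

slope, intercept, r_squared = linear_fit(parts_matched, mean_rating)
print(f"rating change per transplanted part: {slope:.2f}")
print(f"variance explained by a linear trend: {r_squared:.2f}")
```

The slope gives the average increase in rated similarity per transplanted part, which is the sense in which the manipulation can be described as parametric.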

2.2.2. Search Accuracy

Increasing the number of parts matched to the target should increase the percentage of false positive errors, as observers would be more likely to confuse a target-similar distractor with the actual target on target absent trials. As shown in Table 1, this prediction was confirmed. Observers made more false positives than misses, F(1,15) = 18.58, p < .01, errors increased with T-D similarity, F(3,45) = 55.00, p < .001, and T-D similarity had more of an effect on target-absent trials than on target-present trials, F(3,45) = 38.21, p < .001. This pattern of results suggests that our part-based manipulation was effective in manipulating similarity in the context of realistic objects. At the lowest level of T-D similarity, when the distractors were not matched to the target, error rates were generally low, and misses tended to be more common than false positives. This is typical for visual search tasks. However, false positive rates increased with even one distractor part matching the target, and with two or three matching parts these errors increased to 20–46%, levels indicating that observers frequently could not discriminate distractors from targets.

Table 1.

Error rates, mean button press reaction times, mean number of distractors fixated, and mean dwell time on distractors in Experiment 1 (heterogenous distractors) as a function of target-distractor similarity and target presence.

| | Present | | | | Absent | | | |
|---|---|---|---|---|---|---|---|---|
| Level of target-distractor similarity (number of parts matched to target) | 0 | 1 | 2 | 3 | 0 | 1 | 2 | 3 |
| % Errors | 6.7 | 11.7 | 13.3 | 10.0 | 1.7 | 5.8 | 20.8 | 46.3 |
| SEM | 1.5 | 2.4 | 2.4 | 2.4 | 0.7 | 1.5 | 2.3 | 4.7 |
| RT (ms) | 1220 | 1600 | 2016 | 2108 | 1471 | 2163 | 2613 | 3126 |
| SEM | 48 | 59 | 104 | 105 | 61 | 107 | 145 | 222 |
| Objects fixated | 1.7 (1.5) | 2.4 (2.2) | 2.9 (2.6) | 2.7 (2.4) | 3.4 | 4.8 | 5.2 | 5.7 |
| SEM | 0.1 (0.1) | 0.1 (0.1) | 0.2 (0.2) | 0.1 (0.1) | 0.3 | 0.3 | 0.3 | 0.3 |
| Dwell time (ms) | 167 | 219 | 289 | 409 | 192 | 254 | 302 | 355 |
| SEM | 4.7 | 9.3 | 10.5 | 18.1 | 4.8 | 10.4 | 10.3 | 17.1 |

Notes. All data are from correct trials, except for error rates. Values in parentheses are the mean number of distractors fixated before the target, a measure of search guidance.

2.2.3. Manual Search Times

These high false positive rates, while clearly demonstrating the effectiveness of our similarity manipulation, also create a potential problem for interpreting the manual RT data. If observers could not do the task, as was often the case in the 3-part similarity condition, then their judgments might be faster due to a tradeoff between speed and accuracy. This proved not to be the case (Table 1). RTs increased with T-D similarity in both the target-present, F(3,45) = 54.21, p < .001, and target-absent, F(3,45) = 64.48, p < .001, data, with a more pronounced increase in the target-absent data compared to the target-present data, F(3,45) = 13.22, p < .001. Manual RTs therefore dovetail perfectly with the accuracy data in suggesting that search performance declines as target-matched parts are added to the distractors in a search display.

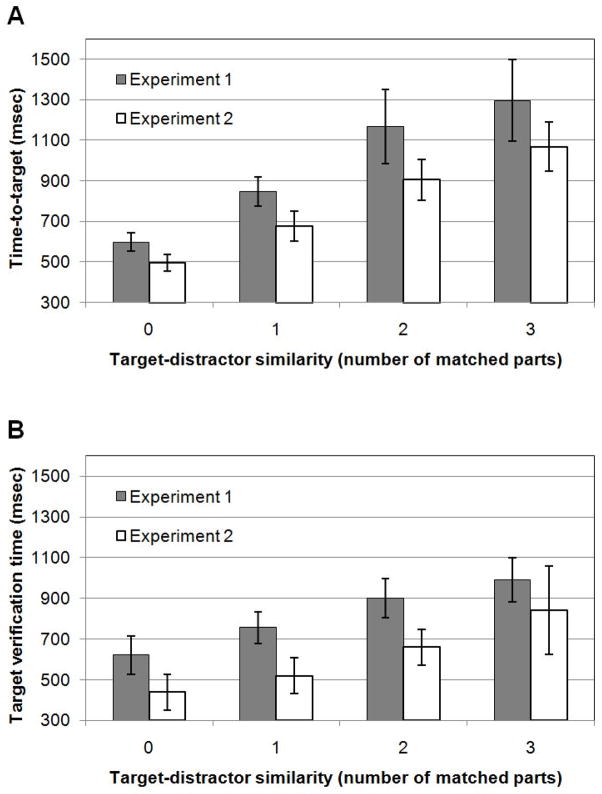

2.2.4. Time-to-Target and Target Verification Times

To determine whether T-D similarity affected guidance to the target and/or the time needed to recognize the target once it was fixated, we decomposed the overall target present RT data into the time that it took observers to first fixate the target (time-to-target) and the time that it took observers to correctly indicate the presence of the target following its fixation (target verification time). If the effect of T-D similarity is confined to search guidance, we would expect the time-to-target measure to increase with the number of matched parts, but verification times not to differ between the similarity conditions. If the effect of T-D similarity is confined to the target decision process, we would expect verification times to increase with the number of matched parts, but no differences in time-to-target. Of course, if T-D similarity affects both guidance and target decision processes, both time-to-target and target verification time should increase as more target parts are transplanted onto the distractors.
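The decomposition described above can be sketched as follows. This is an illustrative example rather than the analysis code used in the study; the `Fixation` record, the `decompose_rt` function, the object labels, and the example trial values are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

@dataclass
class Fixation:
    onset_ms: float   # fixation onset, measured from search display onset
    obj: str          # label of the fixated object, e.g. "target" or "d3"

def decompose_rt(fixations: List[Fixation], rt_ms: float) -> Optional[Tuple[float, float, int]]:
    """Split a target-present RT into guidance and verification components.

    Returns (time_to_target, verification_time, distractors_before_target),
    or None if the target was never fixated on this trial.
    """
    distractors_seen = []
    for fix in fixations:
        if fix.obj == "target":
            time_to_target = fix.onset_ms            # display onset to first target fixation
            verification_time = rt_ms - fix.onset_ms  # first target fixation to button press
            return time_to_target, verification_time, len(set(distractors_seen))
        distractors_seen.append(fix.obj)
    return None

# Hypothetical trial: two distractors are fixated before the target.
trial = [Fixation(180, "d2"), Fixation(420, "d5"), Fixation(650, "target")]
result = decompose_rt(trial, rt_ms=1220)
print(result)
```

By construction the two components sum to the overall RT, so an increase in RT with T-D similarity can be attributed to guidance, verification, or both.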

Figure 4A shows that search guidance was affected by T-D similarity. T-D similarity affected the time from search display onset until the observer’s first fixation on the target, F(3,45) = 35.18, p < .001, with time-to-target increasing with the number of target-matched parts. The number of distractors fixated during the same time period also increased with T-D similarity (Table 1), F(3,45) = 20.02, p < .001, confirming that T-D similarity affected guidance during search. Figure 4B shows that increasing T-D similarity also resulted in longer verification times after the target was fixated, F(3,45) = 22.65, p < .001 (the effect remained after excluding cases in which observers shifted gaze to another object after fixating the target, F(3,45) = 15.56, p < .001). This effect of similarity on search decisions also extended to the time taken to reject distractors (Table 1), F(3,45) = 116.54, p < .001. Together, these patterns suggest that increasing T-D similarity negatively impacts search very broadly, affecting search guidance to the target as well as the time needed to make a target decision. In fact, adding even a single target-matched part to distractors resulted in significant decrements for both search measures (both p < .001), providing further evidence for the effectiveness of our part-based similarity manipulation.

Figure 4.

Time-to-target (A) and target verification time (B) for correct target present trials in Experiments 1 and 2. Error bars show 95% confidence intervals.

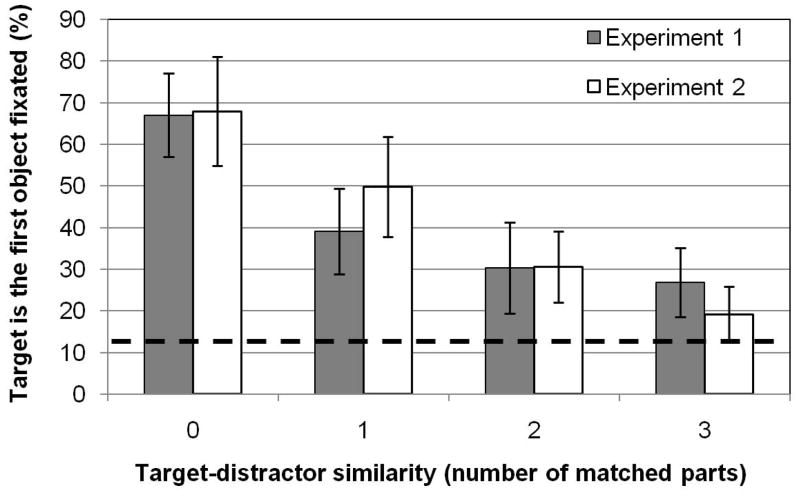

2.2.5. First Object Fixated

There is good reason to believe that the T-D similarity effect reported in the time-to-target measure reflects actual search guidance, but it is still possible that the longer distractor rejection times leading up to the initial target fixation might have masqueraded as a guidance effect. It is also unclear from a time-to-target measure how soon a guidance effect might be expressed after search display onset. To address both of these concerns we determined the percentage of target present trials on which the target was the first object fixated. If observers first fixate the target more frequently than what would be expected by chance, this would be evidence for an early guidance of search to the target. This measure is also free of contaminants introduced by variable-duration fixations on distractors, and its short latency makes it relatively free of biases introduced by semantic analyses of the search objects (Yang et al., 2009).

Figure 5 shows the percentages of target-present trials in which the target was the first object fixated as a function of T-D similarity. Note that on 12.5% of the trials the target should be immediately fixated by chance alone, given eight objects in the display. Consistent with the time-to-target measure, the percentage of first object fixations on targets decreased with increasing T-D similarity, F(3,45) = 22.57, p < .001.¹ Guidance was most pronounced when distractors were not matched to the target, but increasing T-D similarity by matching even one distractor part to the target resulted in a significant reduction in immediate target fixations (p < .001). Note that search guidance continued to decrease when distractors shared two or three parts with the target, although guidance was still above chance even at these high levels of T-D similarity, t(15) = 3.48, p < .01 and t(15) = 3.69, p < .01, respectively.
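The chance level and the comparison against it can be illustrated with a short sketch. The t(15) values reported above are presumably one-sample tests of per-observer percentages against chance, which is an assumption here. The per-observer values below are invented purely for illustration and are not data from the study; `chance_first_fixation` and `one_sample_t` are hypothetical helper names.

```python
import math
from statistics import mean, stdev

def chance_first_fixation(n_objects):
    """Percentage of trials on which the target is fixated first by chance."""
    return 100.0 / n_objects

def one_sample_t(values, mu):
    """Return (t, df) for a one-sample t-test of the mean of values against mu."""
    n = len(values)
    standard_error = stdev(values) / math.sqrt(n)
    return (mean(values) - mu) / standard_error, n - 1

chance = chance_first_fixation(8)   # eight objects per display

# Hypothetical per-observer percentages of first-object target fixations
# (16 observers); NOT data from the experiment.
observer_pct = [15.0, 22.0, 18.0, 12.0, 25.0, 20.0, 16.0, 19.0,
                14.0, 23.0, 17.0, 21.0, 13.0, 24.0, 18.0, 20.0]

t_value, df = one_sample_t(observer_pct, chance)
print(f"chance = {chance:.1f}%, t({df}) = {t_value:.2f}")
```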

Figure 5.

Percentage of trials in which the target was the first object fixated in Experiments 1 and 2. Error bars show 95% confidence intervals. The dashed line indicates chance.

2.3. Discussion

We conducted Experiment 1 to address three main goals: (1) to determine whether the effects of T-D similarity on search described for simple stimuli generalize to visually complex realistic objects, (2) to evaluate this relationship in terms of a part-based similarity manipulation, and (3) to quantify these T-D similarity effects in terms of search guidance and target verification processes, as assessed by explicit eye movement measures.

The data are clear in suggesting that a part-based manipulation is an effective method of manipulating T-D similarity between realistic objects. Increasing the number of parts shared by targets and distractors resulted in longer manual RTs and higher false positive error rates, patterns that are consistent with previous reports using simpler search stimuli. T-D similarity effects on search are therefore not confined to relationships between clearly identifiable features residing in a low-dimensional feature space; even when the similarity relationships are more complex and arguably quite subtle, search performance was found to worsen with increasing T-D similarity. It is also worth noting that as T-D similarity increased in our task, so too did distractor-distractor similarity; as more target parts were transplanted onto distractors, the distractors necessarily became more similar to each other. This dependency would be expected to work against finding an effect of T-D similarity; the search benefit from increased distractor-distractor similarity (Duncan & Humphreys, 1989, 1992) should mitigate the search cost resulting from increased T-D similarity. Given this, the actual effect of T-D similarity on search might therefore be even stronger than the already large effect that we report here.

We also found that this effect of T-D similarity on search efficiency is a result of both longer search decisions and an actual change in search guidance. We demonstrated that increasing T-D similarity resulted in longer times to fixate targets, more distractors fixated before the target, fewer immediate target fixations, longer target verification times, longer dwell times on distractors, and lower accuracy. Finally, we were able to determine the number of shared parts that were necessary to produce these effects of T-D similarity. In this regard, our part-swapping manipulation was even more effective than we had anticipated. Not only did the transplantation of even one target part onto the distractors result in a significant T-D similarity effect, but transplanting three parts lowered search guidance to nearly chance. This finding is important, as it suggests a rather low upper limit on how similar a realistic target can be to distractors in order for it to generate a usable signal for guidance and recognition. Even though a quarter of the target’s parts were not shared by the distractors, this level of uniqueness was largely inadequate for guiding search to the target in this task (see also Wolfe and Bennett, 1996).

3. Experiment 2

The similarity between targets and distractors is not the only visual similarity relationship known to affect search; search is also affected by how similar distractors are to each other. Using a letter search task, Duncan and Humphreys (1989, 1992) found that search efficiency decreased, not only with increasing T-D similarity, but also with decreasing distractor-distractor (D-D) similarity. Moreover, these two forms of visual similarity interacted. T-D similarity degraded search more when D-D similarity was low (e.g. heterogenous displays, where each distractor is different) than when D-D similarity was high (e.g. homogenous displays, where every distractor is identical). Duncan and Humphreys theorized that higher T-D similarity makes selecting the target more difficult, whereas higher D-D similarity enables even target similar distractors to be rejected as a group through a process that they referred to as “spreading suppression”. FIT (Treisman & Gelade, 1980) and Guided Search (Wolfe, 1994, 2007) also predict an interaction between T-D and D-D similarity, but through different processes. Under FIT, when distractors are not target-similar their corresponding feature maps can be inhibited preattentively. However, when the distractors are target-similar, each relevant feature map must be selected to determine the target’s location, slowing search. T-D similarity would affect search less in homogenous displays because, while the relevant feature map would need to be considered, distractors could be rejected as a group (Treisman, 1988; Treisman & Sato, 1990). Similarly, Guided Search theory (Wolfe et al., 1989) predicts a greater preferential fixation of target-similar distractors when these distractors have a high degree of feature heterogeneity. 
When the distractors are all self-similar (homogenous), the feature channel differing from the target can be more easily isolated, allowing all of the distractors to be de-weighted in the computation of the activation map used to guide search.

The above described theories hold that T-D and D-D similarity relationships exert their influence very early in search, during the process of target selection. However, from Experiment 1 we know that higher-level decision processes also affect search, raising the possibility that this process, and not better target selection and guidance, is responsible for the reports of improved search efficiency with homogenous arrays of distractors. The plausibility of this suggestion is captured best by signal detection characterizations of search (e.g., Palmer et al., 2000; Verghese, 2001). As distractor homogeneity increases and the distribution of distractor-related activity narrows, these objects become easier to discriminate from targets. This heightened discriminability might result in improved guidance to the target, but also shorter verification/decision times once the target is located. Differences in verification and distractor rejection times could also underlie interactions between T-D and D-D similarity. Although target-similar distractors might generally take longer to reject due to the need to accumulate additional discriminating information, this effect of T-D similarity might be mitigated by D-D similarity; when all of the distractors are the same a single decision rule can be applied to each rejection. Effects of D-D similarity on search can therefore not simply be assumed to occur at the level of search guidance; this relationship to guidance must be actually demonstrated so as to rule out potential contributions from higher-level decision processes.
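The signal detection argument can be made concrete with a small simulation. This is a sketch under stated assumptions, not the authors' analysis: each object is assumed to produce a normally distributed activation, the first fixation is assumed to go to the object with the highest activation (a max rule), and distractor homogeneity is modeled only as a narrower distractor distribution. All parameter values are hypothetical.

```python
import random

def p_target_is_max(mu_target, mu_distractor, sd_target, sd_distractor,
                    n_distractors=7, n_trials=20000, seed=0):
    """Estimate the probability that the target activation exceeds the
    activation of every distractor, by Monte Carlo simulation."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(n_trials):
        target = rng.gauss(mu_target, sd_target)
        strongest_distractor = max(
            rng.gauss(mu_distractor, sd_distractor)
            for _ in range(n_distractors)
        )
        if target > strongest_distractor:
            wins += 1
    return wins / n_trials

# Hypothetical parameters: same mean separation, different distractor spread.
narrow = p_target_is_max(1.0, 0.0, 1.0, 0.5)  # homogeneous-like distractors
wide = p_target_is_max(1.0, 0.0, 1.0, 2.0)    # heterogeneous-like distractors
print(f"narrow distractor distribution: {narrow:.2f}")
print(f"wide distractor distribution:   {wide:.2f}")
```

With the mean separation held constant, narrowing the distractor distribution raises the probability that the target is the strongest item. The simulation cannot, however, say whether that advantage appears in guidance or in verification, which is the empirical question addressed here.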

The goals of this experiment were identical to those described for Experiment 1, only now tailored to reveal effects of D-D similarity on search. The levels of D-D similarity in Experiment 1 were consistently low; all of the distractors were different teddy bears with different parts matched to the target. In contrast, distractors in Experiment 2 were identical teddy bears with the same part(s) matched to the target. By comparing search under these homogenous distractor conditions to the heterogeneous conditions from Experiment 1, we will be able to determine whether the D-D similarity effects reported using simple stimuli generalize to more complex objects. Moreover, by manipulating the number of target parts transplanted onto the distractors we will be able to observe how D-D similarity interacts with T-D similarity. If the relationship described by Duncan and Humphreys (1989) holds true for a part-based similarity manipulation between realistic objects, we would expect search to be most efficient when the distractors are homogenous and have no parts matching the target. We would also expect search efficiency to again decrease with increasing T-D similarity, although not as much as in Experiment 1. As in Experiment 1, we will use eye movement analyses to specify whether similarity-based changes in manual search efficiency are due to guidance processes, target verification processes, or both. If D-D similarity effects are mediated by spreading suppression between distractors (Duncan & Humphreys, 1989) or by the preattentive inhibition of distractor features (Treisman, 1988; Wolfe et al., 1989), we should find a larger percentage of first object fixations on targets and shorter time-to-target fixation latencies (both indications of improved guidance) with homogenous distractor displays compared to the heterogeneous displays used in Experiment 1. 
However, if D-D similarity effects are due to differential demands on target verification, then manipulating distractor homogeneity/heterogeneity should affect the latency between target fixation and response but should not affect measures of search guidance. Finally, we will seek to determine whether the interaction between T-D and D-D similarity results from the differential recruitment of these verification and guidance processes.

3.1. Methods

3.1.1. Participants

Sixteen observers (mean age of 20.8 years) from Stony Brook University participated in exchange for course credit. All reported normal or corrected-to-normal visual acuity and color vision, and none had participated in Experiment 1.²

3.1.2. Design and procedure

The methods used in Experiment 2 were identical to those described for Experiment 1 except in one key respect. In Experiment 1 each distractor was a different randomly selected teddy bear with different parts matched to the target, which in the current context we will now refer to as the heterogeneous distractor condition. In Experiment 2 each distractor bear in a search display was the same randomly selected teddy bear with the same parts matched to the target. We will refer to this as the homogenous distractor condition (see Figure 2 for examples of heterogeneous and homogenous search displays).

To create a homogenous search display, we randomly selected a non-target bear and used it for each of the distractors. There were again 0-, 1-, 2-, and 3-part matched conditions describing the number of target parts transplanted onto the distractor bear. Each observer again participated in 30 trials at each of these four levels of T-D similarity. All of the distractor bears on a given trial shared the same target part(s), meaning that there might be a trial depicting eight instances of the distractor bear each having the target’s head and legs. Transplanted target part(s) were counterbalanced across trials. Thus, the number of trials in which the distractors had the same head as the target and the number of trials in which the distractors had the same legs as the target were equal. Importantly, all of the distractors on any given trial were exactly the same: the same distractor bear with the same parts matched to the target.

3.2. Results

3.2.1. Accuracy

Table 2 shows that observers again made more errors as more parts were matched to the target, F(3,45) = 32.47, p < .001, and this effect was greater in absent trials than present trials, F(3,45) = 6.37, p < .01. This interaction is reflected in the sharp increase in false positive errors with increasing T-D similarity. To compare data patterns between Experiments 1 and 2, we performed a 2×4×2 mixed ANOVA, with target presence/absence and T-D similarity being within-subjects variables and D-D similarity being a between-subjects variable. This revealed a significant three-way interaction, F(3,90) = 16.08, p < .001; false positive errors increased with T-D similarity more so than misses, with this trend being less pronounced in Experiment 2 compared to Experiment 1. Overall accuracy was also significantly higher in Experiment 2, F(1,30) = 5.43, p < .05. These patterns are consistent with observers making partial use of an “odd one out” search strategy. Observers could correctly respond “absent” in Experiment 2 after determining that all of the distractors were identical, even if they could not be distinguished from the target. As in Experiment 1, error trials were excluded from all subsequent analyses.

Table 2.

Error rates, mean button press reaction times, mean number of distractors fixated, and mean dwell times on distractors in Experiment 2 (homogeneous distractors) as a function of target-distractor similarity and target presence.

Columns give target presence (Present or Absent) and the level of target-distractor similarity (number of parts matched to target).

| Measure | Present, 0 | Present, 1 | Present, 2 | Present, 3 | Absent, 0 | Absent, 1 | Absent, 2 | Absent, 3 |
|---|---|---|---|---|---|---|---|---|
| % Errors | 5.4 | 3.3 | 11.3 | 16.3 | 1.3 | 2.9 | 22.5 | 22.5 |
| SEM | 1.4 | 0.8 | 2.3 | 2.7 | 0.7 | 1.0 | 2.6 | 3.7 |
| RT (ms) | 935 | 1215 | 1573 | 1832 | 955 | 1561 | 1912 | 1932 |
| SEM | 58 | 72 | 80 | 120 | 57 | 91 | 108 | 103 |
| Objects fixated | 1.5 (1.4) | 1.9 (1.8) | 2.8 (2.1) | 2.9 (2.0) | 1.9 | 3.8 | 4.3 | 4.4 |
| SEM | 0.1 (0.1) | 0.1 (0.1) | 0.1 (0.1) | 0.2 (0.1) | 0.1 | 0.2 | 0.2 | 0.2 |
| Dwell time (ms) | 136 | 199 | 213 | 299 | 205 | 202 | 228 | 226 |
| SEM | 6.7 | 12.2 | 10.8 | 15.7 | 9.3 | 10.5 | 11.4 | 11.2 |

Notes. All data are from correct trials, except for error rates. Values in parentheses are the mean number of distractors fixated before the target, a measure of search guidance.

3.2.2. Manual Reaction Times

RTs again increased with T-D similarity in both the target-present, F(3,45) = 61.28, p < .001, and target-absent data, F(3,45) = 101.97, p < .001, with T-D similarity and target presence interacting, F(3,45) = 7.86, p < .01. More critical to this experiment are comparisons to the heterogenous conditions from Experiment 1. If similarity relationships between part-matched, realistic objects behave in the same way as those reported using simple stimuli, we would expect that increasing T-D similarity (more parts matched to the target) and decreasing D-D similarity (heterogeneous rather than homogenous conditions) should result in longer RTs, and that this increase should be most pronounced on target-absent heterogeneous trials at the highest level of T-D similarity. This data pattern was obtained (Table 2); T-D similarity and D-D similarity interacted in absent trials, F(3,90) = 9.50, p < .01, but not in present trials, F(3,90) = 1.16, p = .32. These analyses largely replicate, and extend to complex objects, the data patterns reported originally by Duncan and Humphreys (1989); RTs increased with T-D similarity, decreased with D-D similarity, and interacted such that the presence of homogenous distractors mitigated the effect of T-D similarity.

3.2.3. Time-to-target and Target Verification Times

As in the case of the heterogenous displays from Experiment 1, significant T-D similarity effects were found using homogenous displays in both time-to-target, F(3,45) = 60.61, p < .001, and target verification times, F(3,45) = 17.01, p < .001. This latter effect was obtained even when cases of gaze shifting to another object after target fixation were removed, F(3,45) = 10.84, p < .001. When distractor parts were matched to the target, observers took longer to guide their eye movements to the target (Figure 4A) and took longer to make their search decision once the target was fixated (Figure 4B; see also Table 2 for number of distractors fixated and mean distractor dwell times).
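The two eye movement measures reported here can be derived from a fixation record as in the following sketch. It assumes that time-to-target is the onset of the first fixation on the target relative to search display onset, that verification time is the remainder of the manual RT, and that distractors fixated before the target are counted as distinct objects. The `Fixation` type, the object labels, and the example trial are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

@dataclass
class Fixation:
    onset_ms: float  # time from search display onset
    object_id: str   # label of the fixated object

def decompose_trial(fixations: List[Fixation], rt_ms: float,
                    target_id: str = "target"
                    ) -> Optional[Tuple[float, float, int]]:
    """Split a manual RT into guidance and verification components.

    Returns (time_to_target, verification_time, distractors_before_target),
    or None if the target was never fixated on this trial.
    """
    for index, fixation in enumerate(fixations):
        if fixation.object_id == target_id:
            time_to_target = fixation.onset_ms
            verification_time = rt_ms - time_to_target
            # Count distinct distractors visited before the target.
            distractors_before = len({f.object_id for f in fixations[:index]})
            return time_to_target, verification_time, distractors_before
    return None

# Hypothetical target-present trial (illustrative values only).
trial = [Fixation(210, "d3"), Fixation(480, "d5"), Fixation(730, "target")]
print(decompose_trial(trial, rt_ms=1215))
```

A trial on which the target is the first object fixated yields a count of zero distractors before the target, which corresponds to the immediate target fixations analyzed in the first-object-fixated measure.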

To determine if the interaction between T-D and D-D similarity is expressed in search guidance or target verification, we again compared the findings from Experiments 1 and 2. If this interaction is due to improved search guidance, as hypothesized by Duncan and Humphreys (1989), we would expect to find a lesser effect of increasing T-D similarity in Experiment 2 on time-to-target latencies. However, if the relationship between T-D and D-D similarity affects decision processes, then this interaction should be expressed in target verification times. Although main effects of T-D and D-D similarity were found for time-to-target and for target verification times (p < .01 for all), no significant interactions were found between T-D and D-D similarity (p = .28 for time-to-target; p = .26 for verification time). However, because accuracy and guidance were so poor in the 2-part and 3-part conditions, this may simply be due to T-D similarity being too high. Given that observers were often unable to discriminate between targets and distractors in the 2-part and 3-part matching conditions, distractor grouping and rejection would not be possible and an interaction between T-D and D-D similarity would not be expected. We therefore performed additional 2×2 ANOVAs using only the 0-part and 1-part T-D similarity conditions, both of which had high accuracy and guidance levels that were well above chance. These analyses suggested clear support for an interaction between T-D and D-D similarity in search guidance, but not target verification. For time-to-target, we found main effects of T-D similarity, F(1,30) = 187.20, p < .001, and D-D similarity, F(1,30) = 13.78, p < .01, as well as a significant interaction, F(1,30) = 5.10, p < .05. Main effects were also found in target verification times (both p < .01), but critically T-D and D-D similarity again failed to interact, F(1,30) = .49, p = .49. 
These analyses support the conclusion originally suggested by Duncan and Humphreys (1989); the mitigating effects of increasing D-D similarity result from improved search guidance. Distractor homogeneity shortens the time it takes observers to guide their eyes to the target, but it does not lessen the effects of high T-D similarity on the time needed to accumulate information for the present/absent search decision after the target has been fixated.³

3.2.4. First Object Fixated

Figure 5 shows the percentage of target-present trials in which the target was the first object fixated. As in Experiment 1, increasing the number of target-matching distractor parts resulted in fewer immediate fixations on the target, F(3,45) = 23.92, p < .001, confirming that T-D similarity did affect search guidance in Experiment 2. Also as in Experiment 1, this level of early search guidance disappeared almost entirely under conditions of high T-D similarity; when three distractor parts matched the target guidance was only marginally better than chance, t(15) = 2.10, p = .05. We again interpret this as evidence for an inverse relationship between T-D similarity and the strength of a guidance signal; high levels of T-D similarity result in low levels of immediate search guidance to the target even when the distractors are perceptually identical.

To test whether D-D similarity affects early search guidance we again compared immediate target fixation rates in Experiments 1 and 2. If one or more distractors must be fixated in order for these objects to become grouped as a homogenous set, we would not expect to find evidence for preferential target fixation in early saccades. However, if D-D similarity relationships can be established based on peripheral visual analysis and acted upon very rapidly, these relationships might result in an increased rate of immediate target object fixations. No main effect of D-D similarity on immediate target fixations was found, F(1,30) = .05, p = .82, nor did T-D and D-D similarity interact, F(3,90) = 1.65, p = .19. Even when 2-part and 3-part conditions were excluded from the ANOVA, analyses revealed neither a main effect of D-D similarity, F(1,30) = .73, p = .40, nor an interaction between D-D similarity and T-D similarity, F(1,30) = 2.13, p = .16. As is clear from Figure 5, observers guided their early eye movements to the target under homogenous conditions about as well as they did under heterogenous conditions. This suggests that, while distractor homogeneity can improve search guidance (as evidenced by our time-to-target measure), this improvement does not take effect until observers have fixated at least one distractor object. This is consistent with the idea that distractors were being rejected as a group in the homogenous condition; we speculate that observers may need to fixate and categorize one distractor from the group in order to confirm that it is not the target before rejecting the group as a whole (see also Logan, 2002).

3.3. Discussion

Experiment 1 determined that T-D similarity affects search in the context of real-world objects; from Experiment 2 we learned that the same is true for D-D similarity effects. Specifically, we found that T-D and D-D similarity interact; the effect of T-D similarity is lessened when D-D similarity is high. This finding extends previous demonstrations of this interaction in several key respects. First, finding evidence for D-D similarity effects in our task suggests that the grouping process hypothesized to mediate such effects is not limited to simple patterns consisting of only one or two basic features; this grouping process operates also on visually complex objects having a diverse range of features. Second, previous reports of D-D similarity effects, and their interaction with T-D similarity, were confined to manual RT measures, leaving open the possibility that these effects were acting on verification processes relating to the present/absent search decision and not on the actual selection of search targets. We clarified the source of D-D similarity effects by using eye movement analyses to decompose the standard manual RT measure into separate guidance and verification components. Doing this, we found evidence for an interaction only in the time taken by observers to first fixate the target. We can therefore conclude that increasing D-D similarity mitigates the effects of T-D similarity by improving search guidance, and not by facilitating the search decision following fixation of the target. Third, this effect of D-D similarity on search does not happen immediately, as it did not increase the frequency of first fixations on the target. Although this is not strong evidence against the preattentive grouping of these objects, it does suggest that this grouping did not occur quickly enough to affect the first fixated objects in this study.

4. General Discussion

In this paper we asked how the visual similarity relationships between realistic objects affect the guidance of gaze to search targets. This effort produced several new findings. Some of these findings confirmed and extended results reported previously in the search literature; other observations were novel empirical and theoretical contributions. Consistent with previous work we found that the T-D and D-D similarity relationships established for simple search stimuli also hold for more visually complex real-world objects. Increasing T-D similarity or decreasing D-D similarity resulted in longer RTs and more errors. Also consistent with previous work we found that these similarity manipulations interacted; T-D similarity had more of an effect on distractor heterogeneous trials than on distractor homogenous trials. The effects of visual similarity relationships on search therefore appear to be robust, giving one reason to be cautiously optimistic about their generalization to even more realistic contexts (see also Alexander & Zelinsky, 2011).

A more novel contribution of our work is the demonstration of these similarity relationships using eye movement dependent measures. This demonstration is important, for two reasons. First, the higher temporal resolution of this measure enabled us to decompose the manual button press latencies into separate guidance (the time taken to first fixate the target) and target verification (the time taken to respond following target fixation) processes. Because previous work relied exclusively on manual responses, it was unclear how each of these processes contributed to search similarity effects. We found that, whereas both T-D and D-D similarity affect search during both stages, the two similarity effects interacted only during the early stage of selecting the search target for fixation. Second, eye movement measures can indicate how soon visual similarity relationships are expressed during search—do these effects show up immediately, or only after the fixation of one or more distractors? We found effects of T-D similarity on search guidance in the very first object fixated following search display onset, suggesting the preattentive comparison of a target template to peripherally viewed objects. However, the effect of D-D similarity on search guidance may not be preattentive, as D-D similarity did not affect the frequency of immediate target fixations. Some small amount of time is needed before these D-D similarity relationships can be used to guide search, at least for the visually complex objects used in this study.

With the introduction of our part-transplantation procedure, our work also makes a significant methodological contribution to the quantification of visual similarity relationships in realistic contexts. It is not at all clear how best to manipulate the visual similarity between complex real-world objects, as doing so requires a bit of a balancing act. On the one hand it is desirable to include a wide range of features in this manipulation so as to fully capture the visual similarity relationship between the objects, but on the other hand if too many features are manipulated, or if one feature is manipulated too drastically, one runs the risk of changing the semantic relationship between the objects and undermining the visual similarity estimate. Our solution to these opposing constraints was to adopt a part-based similarity manipulation. Because object parts are themselves featurally complex, our method enables the transplantation of multiple target features to distractors, thereby creating a more compelling similarity manipulation compared to what might result from the manipulation of only a single feature. Moreover, because substantial variability can be tolerated across part boundaries, a part manipulation enables the introduction of relatively dramatic visual changes (thereby allowing a fuller exploration of the similarity space) without altering the semantic characterization of the object (all of our part-matched distractors were still, unquestionably, teddy bears). Our manipulation also has the added virtues of being highly intuitive, extremely effective (with effects appearing in both T-D and D-D similarity), and easily quantifiable—visual similarity is characterized in terms of discrete parts. However, our method also has limitations. Although it was shown to work well for objects from the same category (teddy bears), it would likely create oddities if parts were transferred between different categories of objects (e.g., teddy bears and fish). 
Similarly, although our technique is agnostic to the features that are transplanted and therefore frees researchers to study similarity relationships without isolating specific features, this also limits the technique’s usefulness in identifying the features most important for search guidance.

A theoretically important finding arising from our part-based similarity manipulation is that very few parts had to be transplanted from target to distractor in order for the two objects to be perceived as highly similar. Significant T-D similarity effects were found after transplanting only one target part, and when three distractor parts were matched to the target, response accuracy and search guidance both dropped to near chance. The interpretation of this result depends on one’s perspective. Given that our objects had only four distinct parts (as defined in this study), the fact that search was profoundly affected when distractors shared three-quarters of their parts with the target is in one sense unsurprising. However, these objects still differed from the target in one-quarter of its parts, raising the question of why search was guided so inefficiently to the target despite the features of this unmatched part being relatively unique.

The search literature suggests at least two possible explanations for this highly compromised level of target guidance. One explanation is grounded in signal detection theory (e.g., Palmer et al., 2000; Verghese, 2001). As the number of transplanted target parts increases, so too does the probability of confusing a distractor with the target. In the case of three matched parts, the signal generated by the target’s unique part might simply become lost in the noise generated by the parts shared with the distractors. Note, however, that this account would have to assume considerable noise in the feature-matching process, so much so that a distractor having only a 75% match to the target would be as likely as the actual target (which has a 100% match) to be selected first for fixation. An alternative possibility assumes that the representation of the search target is part-based, and that there is a limit on how extensively a target’s parts are represented. Using an extreme example, if observers were constrained by visual working memory limitations to represent only one part of the target bear, then guidance should be compromised when this critical part is transplanted onto a distractor. Moreover, guidance should decrease as more target parts are transplanted onto distractors (i.e., higher T-D similarity), as this would tend to increase the probability that the critical target part(s) is among those that are transplanted. This critical part explanation is also consistent with the lower accuracy found in the high T-D similarity conditions, indicating a process affecting object recognition as well as search.

Such a part-based explanation is consistent with the Target Acquisition Model (TAM; Zelinsky, 2008). Although not strictly part-based, TAM represents a target by extracting visual features from a local region surrounding a specific point in the target image. If this point was placed on the target bear’s head, the features of the head would be disproportionately weighted in the target representation, more so than features from more distant target parts (e.g., the legs). In this example TAM would predict weak target guidance to the extent that distractors share the target’s head, and comparatively strong guidance when non-head parts are transplanted onto distractors. This also means that guidance should weaken with increasing T-D similarity, as this manipulation would tend to increase the number of distractors with the more heavily weighted target part, and that guidance should be stronger in the target-matched Experiment 2 homogenous trials (compared to the Experiment 1 heterogenous trials), as fewer homogenous trials would have distractors with the critical target part. These are the precise patterns that we found in our data.
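The critical-part argument lends itself to a short worked calculation. The sketch below is illustrative only and rests on assumptions not tested in this study: the target representation is dominated by a single critical part, that part is equally likely to be any of the four parts, and in heterogeneous displays the transplanted parts are chosen independently for each of seven distractors. The function names are hypothetical.

```python
def p_distractor_has_critical_part(k_matched: int, n_parts: int = 4) -> float:
    """Probability that one distractor with k_matched transplanted parts
    carries the single critical target part (uniform over n_parts)."""
    return k_matched / n_parts

def p_display_has_critical_part(k_matched: int, n_distractors: int = 7,
                                homogeneous: bool = False,
                                n_parts: int = 4) -> float:
    """Probability that at least one distractor in the display carries
    the critical target part."""
    p_single = p_distractor_has_critical_part(k_matched, n_parts)
    if homogeneous:
        # All distractors are identical, so the display behaves like one draw.
        return p_single
    # Heterogeneous: independent draws per distractor (an assumption).
    return 1.0 - (1.0 - p_single) ** n_distractors

for k in range(4):
    homogeneous_p = p_display_has_critical_part(k, homogeneous=True)
    heterogeneous_p = p_display_has_critical_part(k, homogeneous=False)
    print(f"{k} parts matched: homogeneous {homogeneous_p:.2f}, "
          f"heterogeneous {heterogeneous_p:.2f}")
```

Under these assumptions the probability rises with the number of matched parts in both display types, and for any nonzero number of matched parts it is lower in homogeneous than in heterogeneous displays, which is the ordering described above.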

Future work will continue to explore the role of object parts in search guidance, focusing first on finding evidence for part preferences in the target representation used to guide search.⁴ Most search theories make no predictions as to why some target parts should be more important than others, nor do they place a priori limits on the number of parts that can be included in the target representation. Finding evidence for either form of part-specific guidance, particularly if the critical target part was selected idiosyncratically (e.g., some observers using the target’s head to guide their search, others using the torso), would call attention to the need for search theories to consider a target’s part structure in the generation of a guidance signal.

Highlights.

Search is very difficult when distractors share even one part with the target

Target-distractor similarity affects the first object fixated during search

Distractor-distractor similarity does not

Target-distractor and distractor-distractor similarity interact to guide search

Target verification times showed no such interaction

Acknowledgments

We thank Nancy Franklin, Christian Luhmann, and Marc Pomplun for helpful comments and feedback on previous versions of this manuscript. We also thank Andrew Ferreira, Afrin Howlader, Zainab Karimjee, Ryan Moore, Roxanna Nahvi, Sarah Osborn, and Jonathan Ryan for their help with data collection, and all the members of the Eye Cog lab for many invaluable discussions. This work was supported by NIH grant R01-MH063748 to G.J.Z.

Footnotes

1. Because the part transplantation method introduced digitally manipulated objects to the display, it is possible that observers were attracted to these visual oddities (Becker, Pashler, & Lubin, 2007) and that this masqueraded as an effect of T-D similarity in our study. However, in a control experiment we found that our digitally manipulated bears were not more likely to be fixated initially than non-manipulated bears, thereby ruling out this alternative explanation.

2. It was not possible to test the same participants in Experiments 1 and 2, as this would have required finding a prohibitive number of teddy bear images given our decision not to repeat stimuli.

3. To test whether the non-significant interaction between T-D similarity and D-D similarity in target verification times was due to low power, we included data from 24 additional observers, half of whom participated in the T-D similarity condition (Experiment 1) and the other half in the D-D similarity condition (Experiment 2). These observers were from a data set collected prior to the data from the 32 observers reported in this paper. The task and stimuli used for these two groups of observers were nearly identical (same methodology, design, and search objects), with the only difference being that the locations of the objects in the search displays were not properly counterbalanced over conditions and observers. However, while this confound could potentially affect guidance measures if observers were biased to look initially to a particular display location, it would not be expected to affect target verification. Including these 24 observers in the ANOVA (bringing the total to 56) resulted in the interaction becoming less significant, not more (F(3,159) = .482, p = .63). In light of this analysis, the non-significant interaction is likely real and informative; increasing D-D similarity lessened the T-D similarity effect by improving guidance, not by speeding target verification.

4. While the experiments in this study were not designed to test for part preferences, we analyzed our existing data for evidence that observers were preferentially using one target part to guide their search. Specifically, for the Experiment 1 data we calculated the percentages of 1-part matched trials (target absent condition only) in which the first fixated distractor had the target’s head, arms, legs, or torso. This analysis revealed no evidence for a part preference, F(3,45) = .41, p = .67. We also determined for Experiment 2 the percentage of first fixations on the target for trials in which the distractors had the target’s head, arms, legs, or torso. This analysis also revealed no part preference (p = .57). We conducted similar analyses for individual observers and found the same results, suggesting that our null results were not due to averaging over observers who had different part preferences.
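As a rough illustration of the percentage measure described in this footnote, the sketch below tallies, for one observer, how often the first fixated distractor carried each target part. The function name, the data layout, and the example trial labels are hypothetical and made up for illustration; they are not the study's analysis code or data, and the ANOVA over observers is not reproduced here.

```python
from collections import Counter

PARTS = ("head", "arms", "legs", "torso")


def first_fixation_percentages(first_fixated_parts):
    """Percentage of trials on which the first fixated distractor had each part.

    `first_fixated_parts` holds one part label per 1-part matched trial
    (a hypothetical data layout assumed for this sketch).
    """
    counts = Counter(first_fixated_parts)
    n_trials = sum(counts[part] for part in PARTS)
    if n_trials == 0:
        raise ValueError("no trials with a recognized part label")
    return {part: 100.0 * counts[part] / n_trials for part in PARTS}


# Made-up labels for one observer; a flat profile would indicate no preference.
example_trials = ["head", "arms", "legs", "torso",
                  "head", "legs", "arms", "torso"]
percentages = first_fixation_percentages(example_trials)
```

Computing one such profile per observer would give the per-part percentages that a repeated-measures test of part preference could then compare.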


References

- Alexander RG, Zelinsky GJ. Visual Similarity Effects in Categorical Search. Journal of Vision. 2011;11(8):9, 1–15. doi: 10.1167/11.8.9. http://www.journalofvision.org/content/11/8/9.

- Ashby FG, Perrin NA. Toward a unified theory of similarity and recognition. Psychological Review. 1988;95:124–150.

- Becker MW, Pashler H, Lubin J. Object-Intrinsic oddities draw early saccades. Journal of Experimental Psychology: Human Perception and Performance. 2007;33:20–30. doi: 10.1037/0096-1523.33.1.20.

- Becker SI, Ansorge U, Horstmann G. Can intertrial priming account for the similarity effect in visual search? Vision Research. 2009;49(14):1738–1756. doi: 10.1016/j.visres.2009.04.001.

- Blanchette I, Dunbar K. How analogies are generated: The roles of structural and superficial similarity. Memory & Cognition. 2000;28(1):108–124. doi: 10.3758/bf03211580.

- Bravo MJ, Farid H. The specificity of the search template. Journal of Vision. 2009;9(1):34, 1–9. doi: 10.1167/9.1.34. http://journalofvision.org/9/1/34/

- Brockmole JR, Henderson JM. Using real-world scenes as contextual cues for search. Visual Cognition. 2006;13(1):99–108.

- Castelhano MS, Pollatsek A, Cave KR. Typicality aids search for an unspecified target, but only in identification, and not in attentional guidance. Psychonomic Bulletin & Review. 2008;15:795–801. doi: 10.3758/pbr.15.4.795.

- Cockrill P. The Teddy Bear Encyclopedia. New York: DK Publishing Inc; 2001.

- Duncan J, Humphreys GW. Visual search and stimulus similarity. Psychological Review. 1989;96:433–458. doi: 10.1037/0033-295x.96.3.433.

- Duncan J, Humphreys GW. Beyond the search surface: Visual search and attentional engagement. Journal of Experimental Psychology: Human Perception and Performance. 1992;18:578–588. doi: 10.1037//0096-1523.18.2.578.

- D’Zmura M. Color in visual search. Vision Research. 1991;31(6):951–966. doi: 10.1016/0042-6989(91)90203-h.

- Eckstein MP, Drescher B, Shimozaki SS. Attentional cues in real scenes, saccadic targeting and Bayesian priors. Psychological Science. 2006;17:973–980. doi: 10.1111/j.1467-9280.2006.01815.x.

- Edelman S. Representation is representation of similarities. Behavioral and Brain Sciences. 1998;21:449–498. doi: 10.1017/s0140525x98001253.

- Foulsham T, Underwood G. If visual saliency predicts search, then why? Evidence from normal and gaze-contingent search tasks in natural scenes. Cognitive Computation. 2011;3(1):48–63.

- Geisler WS. Sequential ideal-observer analysis of visual discriminations. Psychological Review. 1989;96(2):267–314. doi: 10.1037/0033-295x.96.2.267.

- Green DM, Swets JA. Signal detection theory and psychophysics. Oxford, England: John Wiley; 1966.

- Henderson JM, Weeks P, Hollingworth A. The effects of semantic consistency on eye movements during scene viewing. Journal of Experimental Psychology: Human Perception and Performance. 1999;25:210–228.

- Kanan C, Tong MH, Zhang L, Cottrell GW. SUN: Top-down saliency using natural statistics. Visual Cognition. 2009;17(6):979–1003. doi: 10.1080/13506280902771138.

- Ling Y, Pietta I, Hurlbert A. The interaction of colour and texture in an object classification task. Journal of Vision. 2009;9(8) doi: 10.1167/9.8.788.

- Logan GD. An instance theory of attention and memory. Psychological Review. 2002;109:376–400. doi: 10.1037/0033-295X.109.2.376.

- Ludwig CJH, Gilchrist ID. Stimulus-driven and goal-driven control over visual selection. Journal of Experimental Psychology: Human Perception and Performance. 2002;28:902–912.

- Ludwig CJH, Gilchrist ID. Goal-driven modulation of oculomotor capture. Perception & Psychophysics. 2003;65:1243–1251. doi: 10.3758/bf03194849.

- Medin DL, Goldstone RL, Gentner D. Respects for Similarity. Psychological Review. 1993;100(2):254–278.

- Moores E, Laiti L, Chelazzi L. Associative knowledge controls deployment of visual selective attention. Nature Neuroscience. 2003;6:182–189. doi: 10.1038/nn996.

- Najemnik J, Geisler WS. Optimal eye movement strategies in visual search. Nature. 2005;434:387–391. doi: 10.1038/nature03390.

- Neider MB, Zelinsky GJ. Searching for camouflaged targets: Effects of target-background similarity on visual search. Vision Research. 2006a;46:2217–2235. doi: 10.1016/j.visres.2006.01.006.

- Neider MB, Zelinsky GJ. Scene context guides eye movements during visual search. Vision Research. 2006b;46(5):614–621. doi: 10.1016/j.visres.2005.08.025.

- Neider MB, Zelinsky GJ. Exploring set size effects in scenes: Identifying the objects of search. Visual Cognition. 2008;16(1):1–10.

- Oliva A, Torralba A. Modeling the shape of the scene: a holistic representation of the spatial envelope. International Journal of Computer Vision. 2001;42:145–175.

- Oliva A, Wolfe JM, Arsenio HC. Panoramic search: The interaction of memory and vision in search through a familiar scene. Journal of Experimental Psychology: Human Perception and Performance. 2004;30:1132–1146. doi: 10.1037/0096-1523.30.6.1132.

- Palmer J, Verghese P, Pavel M. The psychophysics of visual search. Vision Research. 2000;40:1227–1268. doi: 10.1016/s0042-6989(99)00244-8.

- Phillips S, Takeda Y, Kumada T. An inter-item similarity model unifying feature and conjunction search. Vision Research. 2006;46:3867–3880. doi: 10.1016/j.visres.2006.06.016.

- Pomplun M, Reingold EM, Shen J. Area activation: A computational model of saccadic selectivity in visual search. Cognitive Science. 2003;27(2):299–312.

- Rao RPN, Zelinsky GJ, Hayhoe MM, Ballard DH. Eye movements in iconic visual search. Vision Research. 2002;42:1447–1463. doi: 10.1016/s0042-6989(02)00040-8.

- Schmidt J, Zelinsky GJ. Search guidance is proportional to the categorical specificity of a target cue. Quarterly Journal of Experimental Psychology. 2009;62(10):1904–1914. doi: 10.1080/17470210902853530.

- Smith JD, Redford JS, Gent LC, Washburn DA. Visual search and the collapse of categorization. Journal of Experimental Psychology: General. 2005;134:443–460. doi: 10.1037/0096-3445.134.4.443.

- Takeda Y, Phillips S, Kumada T. A conjunctive feature similarity effect for visual search. The Quarterly Journal of Experimental Psychology. 2007;60:186–190. doi: 10.1080/17470210601063142.

- Torralba A, Oliva A, Castelhano M, Henderson JM. Contextual guidance of attention in natural scenes: The role of global features on object search. Psychological Review. 2006;113:766–786. doi: 10.1037/0033-295X.113.4.766.

- Treisman AM. Features and objects: The Fourteenth Bartlett Memorial Lecture. The Quarterly Journal of Experimental Psychology. 1988;40A:201–237. doi: 10.1080/02724988843000104.

- Treisman AM. Search, similarity, and integration of features between and within dimensions. Journal of Experimental Psychology: Human Perception and Performance. 1991;17(3):652–676. doi: 10.1037//0096-1523.17.3.652.

- Treisman AM, Gelade G. A feature integration theory of attention. Cognitive Psychology. 1980;12:97–136. doi: 10.1016/0010-0285(80)90005-5.

- Treisman AM, Sato S. Conjunction search revisited. Journal of Experimental Psychology: Human Perception and Performance. 1990;16:459–478. doi: 10.1037//0096-1523.16.3.459.

- Tversky A. Features of similarity. Psychological Review. 1977;84:327–352.

- Verghese P. Visual search and attention: A signal detection theory approach. Neuron. 2001;31:523–35. doi: 10.1016/s0896-6273(01)00392-0.

- Wolfe JM. Guided Search 2.0: A revised model of visual search. Psychonomic Bulletin & Review. 1994;1:202–238. doi: 10.3758/BF03200774.

- Wolfe JM. Guided Search 4.0: Current progress with a model of visual search. In: Gray WD, editor. Integrated models of cognitive systems. New York: Oxford; 2007. pp. 99–119.

- Wolfe JM, Bennett SC. Preattentive object files: Shapeless bundles of basic features. Vision Research. 1996;37:25–43. doi: 10.1016/s0042-6989(96)00111-3.

- Wolfe JM, Cave KR, Franzel SL. Guided Search: An alternative to the feature integration model for visual search. Journal of Experimental Psychology: Human Perception and Performance. 1989;15(3):419–433. doi: 10.1037//0096-1523.15.3.419.

- Yang H, Chen X, Zelinsky GJ. A new look at novelty effects: Guiding search away from old distractors. Attention, Perception, & Psychophysics. 2009;71(3):554–564. doi: 10.3758/APP.71.3.554.

- Yang H, Zelinsky GJ. Visual search is guided to categorically-defined targets. Vision Research. 2009;49:2095–2103. doi: 10.1016/j.visres.2009.05.017.

- Zelinsky GJ. Detecting changes between real-world objects using spatiochromatic filters. Psychonomic Bulletin & Review. 2003;10(3):533–555. doi: 10.3758/bf03196516.

- Zelinsky GJ. A theory of eye movements during target acquisition. Psychological Review. 2008;115:787–835. doi: 10.1037/a0013118.

- Zelinsky GJ, Schmidt J. An effect of referential scene constraint on search implies scene segmentation. Visual Cognition. 2009;17(6):1004–1028.

- Zelinsky GJ, Sheinberg DL. Eye movements during parallel-serial visual search. Journal of Experimental Psychology: Human Perception and Performance. 1997;23(1):244–262. doi: 10.1037//0096-1523.23.1.244.