Abstract

Current accounts of spoken language assume the existence of a lexicon where wordforms are stored and interact during spoken language perception, understanding and production. Despite the theoretical importance of the wordform lexicon, the exact localization and function of the lexicon in the broader context of language use is not well understood. This review draws on evidence from aphasia, functional imaging, neuroanatomy, laboratory phonology and behavioral results to argue for the existence of parallel lexica that facilitate different processes in the dorsal and ventral speech pathways. The dorsal lexicon, localized in the inferior parietal region including the supramarginal gyrus, serves as an interface between phonetic and articulatory representations. The ventral lexicon, localized in the posterior superior temporal sulcus and middle temporal gyrus, serves as an interface between phonetic and semantic representations. In addition to their interface roles, the two lexica contribute to the robustness of speech processing.

Keywords: lexicon, language, spoken word recognition, lexical access, speech perception, speech production, neuroimaging, aphasia, dual stream model, localization

1. Introduction

This paper presents a new model of how lexical knowledge is represented and utilized and where it is stored in the human brain. Building on the dual pathway model of speech processing proposed by Hickok and Poeppel (2000; 2004; 2007), its central claim is that representations of the forms of spoken words are stored in two parallel lexica. One lexicon, localized in the posterior temporal lobe and forming part of the ventral speech stream, mediates the mapping from sound to meaning. A second lexicon, localized in the inferior parietal lobe and forming part of the dorsal speech stream, mediates the mapping between sound and articulation.

Lexical knowledge is an essential component of virtually every aspect of language processing. Language learners leverage the words they know to infer the meanings of new words based on the assumption of mutual exclusivity (Merriman & Bowman, 1989). Listeners use stored lexical knowledge to inform phonetic categorization (Ganong, 1980) and to guide processes including lexical segmentation (Gow & Gordon, 1995), perceptual learning (Norris et al., 2003) and the acquisition of novel wordforms (Gaskell & Dumay, 2003). Lexically indexed syntactic information also guides the assembly and parsing of syntactic structures (Bresnan, 2001; Lewis et al., 2006). By some estimates, a typical literate adult English speaker may command a vocabulary of 50,000 to 100,000 words (Miller, 1991) in order to achieve these goals. Given this background, it is important to understand where and how words are represented in the brain.

Studies of this question date to the first scientific papers on the neural basis of language. In 1874 Carl Wernicke described a link between damage to the left posterior superior temporal gyrus (pSTG) and impaired auditory speech comprehension. He hypothesized that the root of the impairment was damage to a putative permanent store of word knowledge that he termed the Wortschatz or “treasury of words”. In his model, this treasury consisted of sensory representations of words that interfaced with both a frontal articulatory center and a widely distributed set of conceptual representations in motor, association and sensory cortices. Wernicke was careful to distinguish between permanent “memory images” of the sounds of words, and the effects of “sensory stimulation”, a notion akin to activation associated with sensory processing or short-term buffers (Wernicke, 1874/1969). The broad dual pathway organization of Wernicke’s model has been supported by modern research (Hickok & Poeppel, 2000; 2004; 2007; Scott & Wise, 2004; Scott, 2005), but his interpretation of the left STG as the location of a permanent store of auditory representations of words is open to debate.

The strongest support for the classical interpretation of the pSTG as a permanent store of lexical representations comes from BOLD imaging studies that show that activation of the left pSTG and adjacent superior temporal sulcus (STS) is sensitive to lexical properties including word frequency and neighborhood size (Okada & Hickok, 2006; Graves et al., 2007). Neighborhood size is a measure of the number of words that closely resemble the phonological form of a given word. This result is balanced in part by evidence that a number of regions outside of the pSTG/STS are also sensitive to these factors (c.f. Prabhakaran et al., 2006; Goldrick & Rapp, 2006; Graves et al., 2007) and directly modulate pSTG/STS activation during speech perception (Gow et al., 2008; Gow & Segawa, 2009). This raises the possibility that sensitivity to lexical properties is referred from other areas, and that the STG/STS acts as a sensory buffer where multiple information types converge to refine and perhaps normalize transient representations of wordform.

This view of the STG/STS is consistent with both neuropsychological and neuroimaging evidence. In the 1970s and 1980s aphasiologists noted that damage to the left STG does not lead to impaired word comprehension (Basso et al., 1977; Blumstein et al., 1977a; 1977b; Miceli et al., 1980; Damasio & Damasio, 1980). A review of BOLD imaging studies by Hickok and Poeppel (2007) showed consistent bilateral activity in the STG, mostly posterior, in speech-resting state contrasts, and in adjacent STS when participants listened to speech as compared to listening to tones or less speech-like complex auditory stimuli. They interpreted this pattern as evidence that the bilateral superior temporal cortex is involved in high-level spectrotemporal auditory analyses, including the acoustic-phonetic processing of speech. This spectrotemporal analysis could in turn be informed by top-down influences on the STG from permanent wordform representations stored in other parts of the brain, producing evolving transient representations of phonological form that are consistent with higher level linguistic constraints and representations. This hypothesis is discussed in section 7.

At the same time that aphasiologists and neurolinguists were recharacterizing the function of the STG, psycholinguists were developing a more nuanced understanding of lexical processing. A distinction emerged between spoken word recognition, the mapping of sound onto stored phonological representations of words, and lexical access, the activation of representations of word meaning and syntactic properties. This distinction was reinforced by studies of patients who showed a double dissociation between the ability to recognize words and the ability to understand them. Some patients had preserved lexical decision but impaired word comprehension (Franklin et al., 1994; 1996; Hall & Riddoch, 1997), while others showed relatively preserved word comprehension with deficient lexical decision or phonological processing (Blumstein et al., 1977b; Caplan & Utman, 1994). At a higher level, some patients showed more circumscribed deficits in word comprehension coupled with specific deficits in the naming of items in certain categories including colors and body parts (Damasio, McKee & Damasio, 1979; Dennis, 1976). This fractionation of lexical knowledge was accompanied by a widening list of brain structures associated with lexical processing. Disturbances in various aspects of spoken word recognition, comprehension and production were associated with damage to regions in the temporal, parietal and frontal lobes (c.f. Damasio & Damasio, 1980; Gainotti et al., 1986; Coltheart, 2004; Patterson et al., 2007).

The advent of functional neuroimaging techniques introduced invaluable new data that underscore the conceptual challenges of localizing wordform representations. Three types of studies have dominated this work: (1) word-pseudoword contrasts, (2) repetition suppression/enhancement designs, and (3) designs employing parametric manipulation of lexical properties. Many studies have contrasted activation associated with listening to words versus pseudowords (Binder et al., 2000; Newman & Twieg, 2001; Kotz et al., 2002; Majerus et al., 2002; 2005; Bellgowan et al., 2003; Rissman et al., 2003; Vigneau et al., 2005; Xiao et al., 2005; Prabhakaran et al., 2006; Orfanidou et al., 2006; Valdois et al., 2006; Raettig & Kotz, 2008; Sabri et al., 2008; Gagnepain et al., 2008; Davis et al., 2009). These studies differ by task and in the specific wordform properties of both word and pseudoword stimuli. Nevertheless, several reviews and meta-analyses have found systematic trends in these data (Raettig & Kotz, 2008; Davis & Gaskell, 2009). A meta-analysis of 11 studies by Davis and Gaskell (2009) found 68 peak voxels that show more activation for words than pseudowords at a corrected level of significance. These included left hemisphere voxels in the anterior and posterior middle and superior temporal gyri, the inferior temporal and fusiform gyri, the inferior and superior parietal lobules, supramarginal gyrus, and the inferior and middle frontal gyri. In the right hemisphere, words produced more activation than nonwords in the middle and superior temporal gyri, supramarginal gyrus, and precentral gyrus. The same study also showed significantly more activation by pseudowords than words in 29 regions including voxels in left mid-posterior and mid-anterior superior temporal gyrus, left posterior middle temporal gyrus, portions of the left inferior frontal gyrus, and the right superior and middle temporal gyri.

While these studies would appear to bear on the localization of the lexicon, it is important to note that the lexicon is rarely invoked in this work. This subtraction is generally associated with the broader identification of brain regions supporting “lexico-semantic processing” (c.f. Raettig & Kotz, 2008) or “word recognition” (c.f. Davis & Gaskell, 2009). There are several reasons to suspect that a narrower reading of these subtractions that directly and uniquely ties them to wordform localization is unviable. Recognizable words trigger a cascade of representations and processes related to their semantic and syntactic properties that pseudowords either do not trigger, or trigger to a different extent.¹ As a result, many of the regions that are activated in word-pseudoword subtractions may be associated with the representation of information that is associated with wordforms, and not just wordforms themselves.

Behavioral and neuroimaging results provide converging evidence that suggests another limitation of the word-pseudoword subtraction as a tool for localizing wordform representations. One can imagine a system in which words activated stored representations of form, but nonwords did not. Given such a system, a word-pseudoword subtraction could be used to localize the lexicon. However, evidence from behavioral and neuroimaging studies suggests that pseudowords are represented using the same resources that are used to represent words. A number of behavioral results in tasks including lexical decision, naming, and repetition show that the processing of nonwords is influenced by the degree to which they resemble real words (c.f. Gathercole et al., 1991; Gathercole & Martin, 1996; Vitevitch & Luce, 1998; 1999; Frisch et al., 2000; Luce & Large, 2001; Saito et al., 2003). The overlap in operations is masked by word-pseudoword subtractions, but is apparent in BOLD results that employ resting state subtractions. Binder et al. (2000) and Xiao et al. (2005) showed almost identical patterns of activation in word-resting state and pseudoword-resting state subtractions. The only differences they reported were a tendency for more bilateral activation for words in the ventral precentral sulcus and pars opercularis in the Binder et al. study and less activation in the parahippocampal region in the Xiao et al. study. Moreover, several studies have shown that pseudoword BOLD activation is influenced by the degree to which pseudowords resemble known words, with word-like pseudowords producing activation patterns that were more similar to those produced by familiar words than those produced by less-wordlike tokens (Majerus et al., 2005; Raettig & Kotz, 2008). Evidence for a shared neural substrate for the representation of words and pseudowords has implications for the nature of wordform representations (discussed in section 2). Moreover, it suggests that differential activation produced by listening to words and pseudowords relates to form properties of pseudowords that are not generally controlled for in this research.

Repetition suppression and enhancement designs offer a more targeted tool for localizing wordform representations. In word recognition tasks, repeated presentation of the same items leads to a reduction in response latency and increase in accuracy. This type of repetition priming is mirrored at a physiological level by repetition suppression and enhancement, in which repetition of a stimulus leads to changes in localized BOLD responses (see review by Henson, 2003). Several studies using passive listening to meaningful words have demonstrated repetition suppression effects in left mid-anterior STS (Cohen et al., 2004; Dehaene-Lambertz et al., 2006). This finding was replicated by Buchsbaum and D’Esposito (2009) who used an explicit “new/old” recognition judgment. They also found repetition enhancement or reactivation at the boundary of bilateral pSTG, anterior insula and inferior parietal cortex including the SMG.

The fact that words were used in these studies does not necessarily indicate that repetition effects reflect lexical activation. Activation changes could reflect representation or processing at any level (e.g. auditory, acoustic-phonetic, phonemic, lexical). In order to directly tie these effects to lexical representation it is necessary to control for the contribution of non-lexical repetition. Orfanidou et al. (2006) addressed this issue by using different speakers for first and second presentations of words to minimize the influence of auditory representation, and by contrasting repetition effects associated with phonotactically matched word and pseudoword stimuli to target specifically lexical properties. They found no evidence of interaction between lexicality and repetition in any voxel in whole brain comparisons. This result is again consistent with the notion that word and pseudoword representation share a common neural substrate. Analyses collapsing across lexicality showed significant repetition suppression in the supplementary motor area (SMA) and bilateral inferior frontal and posterior inferior temporal regions, as well as repetition enhancement in bilateral parietal, orbitofrontal and dorsal frontal regions, the right posterior inferior temporal gyrus, and a region including the right precuneus and adjacent parietal lobe. The lack of anterior STS suppression in these results may reflect the diminished role of auditory effects due to the speaker manipulation. However, the lack of orthogonal manipulation of phoneme, syllable or diphone repetition makes it unclear whether these effects are directly attributable to lexical representation.

The other primary BOLD imaging strategy for localizing lexical representation involves contrasts that rely on parametric manipulation of specifically lexical properties including word frequency, phonological neighborhood size and lexical competitor environment. This strategy (which is discussed again in section 3) is less widely used than word-pseudoword contrasts or repetition suppression/enhancement techniques, but has been explored by several groups. In an auditory lexical decision task, Prabhakaran et al. (2006) found differential activation based on word frequency in left pMTG extending into STG and left aMTG. In contrast, Graves et al. (2007) found frequency sensitivity in left hemisphere SMG, pSTG, and posterior occipitotemporal cortex and bilateral inferior frontal gyrus in a picture naming task. These results differ, but do show some overlapping STG activation and adjacent activations in the left posterior temporal lobe associated with word frequency. Differences in frequency sensitivity in the two studies in other areas may be related to differences in the task demands imposed by lexical decision versus overt naming.

Manipulations of neighborhood size have also produced different patterns of activation in different studies. Okada and Hickok (2006) found sensitivity to neighborhood size limited to bilateral pSTS in a passive listening task, while Prabhakaran et al. (2006) found neighborhood effects in the left SMG, caudate and parahippocampal region in their auditory lexical decision task. In this case, the differences may be related to the differing attentional demands of passive listening versus lexical decision. In a study employing a selective attention manipulation during bimodal language processing, Sabri et al. (2008) found that while superior temporal regions were activated in all speech conditions, differential activation associated with lexical manipulations (word-pseudoword subtraction) was only found when subjects attended to speech. This suggests that tasks such as passive listening that require only shallow processing may fail to produce robust activation outside of superior temporal cortex.

To summarize, the complex and often contradictory results seen in the BOLD imaging literature do not provide a simple resolution to the localization problem, but they do delineate a number of issues that any satisfying resolution must address. Claims about the localization of the lexicon must be framed in relation to a general understanding of the nature of lexical representation that specifically addresses the relationship between the representation of words, pseudowords and sublexical representations, and the causes of task effects.

Recent behavioral results and advances in the characterization of neural processing streams associated with spoken language processing suggest that some task effects may be attributable to a fundamental distinction between semantic and articulatory phonological processes. In one line of experimentation, researchers have found that listeners show different patterns of behavioral effects when presented with the same set of spoken word stimuli in similar tasks that tap phonological versus semantic aspects of word knowledge. Gaskell and Marslen-Wilson (2002) showed that gated primes (e.g. captain presented as /kæpt/ or /kæptI/) produce significant phonological priming for complete words (CAPTAIN), but no priming and no effect of degree of overlap for strong semantic associates (e.g. COMMANDER). Norris et al. (2006) found several similar differences between phonological and semantic cross-modal priming. They found both associative (date – TIME) and identity (date – DATE) priming when spoken primes were presented in isolation, but only identity priming when they were presented in sentences. In instances in which a short wordform is embedded in a longer wordform (e.g. date in sedate) no associative priming was found for embedded words (sedate – TIME), but negative form priming (sedate – DATE) was found in sentential contexts. Together, these results demonstrate the dissociability of semantic and phonological modes of lexical processing in the perception of spoken words.

Gaskell and Marslen-Wilson (1997) explored the idea that semantic and phonological aspects of spoken word processing may be independent of each other in their distributed cohort model. Unlike earlier models (c.f. McClelland & Elman, 1986) that assumed that lexical access is the result of an ordered mapping from acoustic-phonetic representation to phonological and then semantic representation, their model employed direct simultaneous parallel mapping processes between low-level sensory representations and distributed semantic and phonological representations.² In their work, the decision to represent lexical semantics and phonology as separate outputs was motivated in part by computational considerations. Parallel architecture offers potentially faster access to semantic representations. This general organization also allows for the development of intermediate representations that are optimally suited for the mapping between a common input representation and different output representations.

The parallel mapping between low-level phonetic representations of speech and semantic versus phonological representation proposed by Gaskell and Marslen-Wilson is similar to the form of modern dual-pathway models of spoken language processing that draw on the pathology, functional imaging and psychological literatures and postulate separate routes from auditory processing to semantics and speech production (Hickok & Poeppel, 2000; 2004; 2007; Wise, 2003; Scott & Wise, 2004; Scott, 2005; Warren et al., 2005; Rauschecker & Scott, 2009). In these models auditory input representations are initially processed in primary auditory cortex, with higher-level auditory and acoustic-phonetic processing taking place in adjacent superior temporal structures. As in Gaskell and Marslen-Wilson’s model, subsequent mappings are carried out in simultaneous parallel processing streams. In the neural models these include a dorsal pathway that provides a mapping between sound and articulation, and a ventral pathway that maps from sound to meaning.

In the model developed by Scott and colleagues (Scott & Wise, 2004; Scott, 2005; Rauschecker & Scott, 2009), the left ventral pathway links primary auditory cortex to the lateral STG and then the anterior STS (aSTS). No ventral lexicon is proposed in these models. In the Hickok and Poeppel model (2000; 2004; 2007), the mapping between sound and meaning is mediated by a lexical interface located in the posterior middle temporal gyrus (pMTG) and adjacent cortices. This interface is the most explicit description of a lexicon in any of the dual stream models.

Parallels between the distributed model’s phonological output and the articulatory dorsal processing stream in dual stream models are less clear. One critical question is whether articulatory and phonological representations are the same thing. While phonological representation is historically rooted in articulatory description (Chomsky & Halle, 1968), current theories of featural representation include both explicitly articulatory (c.f. Browman & Goldstein, 1992) and purely abstract systems (c.f. Hale & Reiss, 2008). The lexical representations used in Gaskell and Marslen-Wilson’s model do not make a clear commitment to articulatory or non-articulatory representation.

In summary, despite widespread evidence that words play a central role in language processing, over a century of research has produced no clear consensus on where or how words are represented in the brain. This may be attributed to a number of factors including the methodological challenges inherent in discriminating between lexical activation, processes that follow on lexical activation, and the application of lexical processes to pseudoword stimuli. During the same period, evidence from dissociations in unimpaired and aphasic behavioral processing measures has pointed towards a potential dissociation between semantic and phonological or articulatory aspects of lexical processing that roughly parallels distinctions made in recent dual stream models of spoken language processing in the human brain. In the sections that follow I will develop a framework for understanding the organization and function of lexical representations and review evidence from a variety of disciplines that suggests the existence of parallel lexica in the ventral and dorsal language processing streams.

2. The Computational Significance of Words

The lexicon has been hard to localize in part because of a lack of agreement about its function. Researchers have adopted the term “lexicon” to describe the specific role that lexical knowledge plays in a variety of aspects of processing. As a result, the term has different meanings to different research communities. Syntacticians describe it as a store of grammatical knowledge (Bresnan, 2001; Jackendoff, 2002), morphologists see it as an interface between sound and meaning (Ullman et al., 2005), and computational linguists see it as a kind of database where representations of a string corresponding to a word are linked to a list of properties including the word’s meaning, spelling, pronunciation, and grammatical function (Pustejovsky, 1996). These approaches seem to be at odds with each other, but they share one essential property. All of them begin with the idea that the word is a kind of interface that links representations of word form or sound with other types of knowledge. This view is reflected in Hickok and Poeppel (2007), who refer to a “lexical interface” rather than using the term “lexicon”.

The notion of a lexical interface draws attention to several essential computational properties of the lexicon. The most important is that words are a means of accessing different types of knowledge, and should not be viewed as ends in themselves. We activate entries in the lexicon only as a way to access or process specific types of information that we may need to complete a given task: for example, parsing a sentence, assembling the motor commands to pronounce a word, or enlisting top-down information to interpret a perceptually ambiguous word.

To the extent that computational efficiency defines representation, the specific form of any interface representation should be constrained by the input-output mappings it mediates. In the model proposed here, lexical representations play a computational role similar to that of hidden nodes in a connectionist model. Hidden nodes are sensitive to the features of the input representation that are most relevant to its mapping onto the output representation. Computational efficiency may require different sets of features for different mappings. Consider the mappings involved in understanding versus recognizing and accurately pronouncing the word ran. The words ran and run both relate to the same general idea of moving quickly by using your legs, and so in the context of mapping onto meaning might be considered different variants of the same word. In contrast, the sound-to-articulation mapping that mediates the accurate pronunciation or recognition of run as opposed to ran needs to mark the distinction between the words. This distinction, which linguists term the lexeme/lemma distinction, suggests that different tasks may require different representations of wordforms.
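The ran/run contrast can be made concrete with a minimal sketch. Everything in it is a hypothetical illustration rather than part of the model: the dictionary names, the transcriptions, the concept labels and the use of phoneme tuples as stand-ins for articulatory targets are all assumptions, and collapsing ran and run onto one concept deliberately ignores tense.

```python
# Hypothetical toy entries; transcriptions, concept labels and "plans" are illustrative only.

# Sound-to-meaning interface: variants of one word may share a single target.
VENTRAL_LEXICON = {
    "ræn": "RUN",
    "rʌn": "RUN",
    "kæt": "CAT",
}

# Sound-to-articulation interface: every wordform needs its own target sequence.
DORSAL_LEXICON = {
    "ræn": ("r", "æ", "n"),
    "rʌn": ("r", "ʌ", "n"),
    "kæt": ("k", "æ", "t"),
}


def same_for_meaning(form_a, form_b):
    """True if the two wordforms map onto the same concept label."""
    return VENTRAL_LEXICON[form_a] == VENTRAL_LEXICON[form_b]


def same_for_articulation(form_a, form_b):
    """True if the two wordforms map onto the same articulatory target sequence."""
    return DORSAL_LEXICON[form_a] == DORSAL_LEXICON[form_b]


print(same_for_meaning("ræn", "rʌn"))       # True: one entry suffices for meaning
print(same_for_articulation("ræn", "rʌn"))  # False: the forms must stay distinct
```

The point of the sketch is only that the same pair of inputs can be equivalent under one mapping and distinct under another, which is why a single format of wordform representation may not serve both mappings equally well.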

In addition to their role as interfaces between sound and higher-level representation, words also appear to play a role in refining lower level acoustic-phonetic representation and processing. Lexical influences on speech perception have been widely demonstrated in a variety of behavioral phenomena including the phoneme restoration effect (Warren, 1970), the Ganong effect (Ganong, 1980), and low-level phoneme context effects produced by “restored” phonemes (Elman & McClelland, 1988). An effective connectivity analysis by Gow et al. (2008) found that the Ganong effect is the result of a feedback dynamic between the pSTG and SMG in a phoneme categorization task. In a similar study, Gow and Segawa (2009) demonstrated that a lexical bias in the way that listeners compensate for lawful phonological variation is mediated by feedback between the pMTG and pSTG in a phrase-picture matching task. These processing dynamics address the inherent variability of the speech signal by reconciling acoustic-phonetic input representations with abstract canonical representations of word form. In addition to playing a role in speech perception, this dynamic may also play a role in offline perceptual learning, where lexical constraints have been shown to influence adaptation to anomalous pronunciation of speech sounds (Norris et al., 2003).

In summary, lexical representations serve two primary functions. The first is as an interface between low-level representations of sound and higher-level representations of different aspects of linguistic or world knowledge. The second is as a mechanism for normalizing acoustically variable input representations to resolve phonetic ambiguity in both online and offline processing.

3. Distributed Versus Local Representation

This section will examine the question of how lexical representation might be instantiated and identified in behavioral or neural data. In many models of lexical access words are assumed to have local representation (c.f. Morton, 1969; McClelland & Elman, 1986; Marslen-Wilson, 1987; Norris, 1994) in which each word is represented by a single discrete node or entry. This type of representation is transparently and unequivocally lexical. In contrast, many connectionist models of spoken and visual word recognition (c.f. Gaskell & Marslen-Wilson, 1997; Seidenberg & McClelland, 1996; Plaut et al., 1996) rely on distributed representations in which a single word may be represented by a pattern of activation over many nodes. Both computational and biological evidence supports the general claim that distributed representation is a fundamental property of cortical processing for all but the most primitive perceptual or cognitive categories (Hinton et al., 1986; Plaut & McClelland, 2010). In their connectionist model of reading, Seidenberg and McClelland (1996) explicitly argued that their distributed representations of words were not lexical in that representational units did not map directly onto words, and the representations that they did use could be used to represent non-words. Distributed representation attributes wordlikeness effects in nonword processing (c.f. Gathercole et al., 1991; Gathercole & Martin, 1996; Vitevitch & Luce, 1998; 1999; Frisch et al., 2000; Luce & Large, 2001; Saito et al., 2003) to overlapping activation dynamics that follow on the partial activation of distributed lexical representations that resemble nonword probes. Coltheart (2004) responded to these claims by arguing that without lexical representation there is no basis for the ability to perform lexical decisions. One might argue that people perform lexical decisions by determining whether or not a word or nonword representation maps onto a semantic representation. However, Coltheart provided evidence that some patients (though not all, see Patterson et al., 2007) show the preserved ability to perform lexical decisions despite significant deficits in semantic knowledge.

At first pass, the crux of this debate appears to be that distributed models such as Seidenberg and McClelland’s (1996) provide a clear account of nonword processing effects, but a less clear account of our ability to recognize words, while localist models such as that proposed by Coltheart (2004) have the opposite problem – they explain lexical decision well, but offer no clear explanation of wordlikeness effects. Physiological plausibility and computational efficiency considerations favor distributed lexical representation, but both positions may be otherwise salvageable. Localist representations may account for nonword wordlikeness effects if they rely on continuous activation rather than all-or-nothing activation. Thus, in a continuous activation model such as TRACE (McClelland & Elman, 1986), nonword inputs such as /blæg/ may produce partial activation of lexical nodes representing similar words (e.g. black, bag, blog, plaid), which will compete for activation and influence the timecourse of processing. At the same time, distributed representations can be described as being lexical if the activation of the representation corresponding to an entire word has properties above and beyond those of its constituents. In connectionist models that learn, such as Gaskell and Marslen-Wilson’s (1997) distributed model of spoken language processing, lexical properties follow from the target output representations used in training. Distributed representations of words have specifically lexical properties because they are trained as words. Plaut et al. (1996) provide a formal analysis of how properties of the global mapping between input and output representations over training produce sensitivity to global properties of these representations including word frequency.
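The claim that localist nodes with graded activation can respond to a nonword can be illustrated with a minimal sketch. It is not an implementation of TRACE: the similarity measure (the sequence-matching ratio from Python's standard difflib module), the normalization step standing in for competition, the toy lexicon and its transcriptions are all assumptions made for illustration.

```python
from difflib import SequenceMatcher

# Toy localist lexicon: one node per word, each with a phoneme sequence.
# Transcriptions are illustrative assumptions, not taken from the source.
TOY_LEXICON = {
    "black": ("b", "l", "æ", "k"),
    "bag": ("b", "æ", "g"),
    "blog": ("b", "l", "ɑ", "g"),
    "plaid": ("p", "l", "æ", "d"),
    "dog": ("d", "ɔ", "g"),
}


def bottom_up_activation(input_phones, lexicon):
    """Graded (not all-or-nothing) activation: similarity of the input to each node."""
    return {
        word: SequenceMatcher(None, input_phones, phones).ratio()
        for word, phones in lexicon.items()
    }


def compete(activations):
    """Crude stand-in for competition: each node's share of total activation."""
    total = sum(activations.values())
    if total == 0:
        return dict(activations)
    return {word: value / total for word, value in activations.items()}


nonword = ("b", "l", "æ", "g")  # /blæg/ has no node of its own
activation = bottom_up_activation(nonword, TOY_LEXICON)
share = compete(activation)

for word in sorted(share, key=share.get, reverse=True):
    print(f"{word:6s} activation={activation[word]:.2f} share={share[word]:.2f}")
```

No node is fully activated because the input matches no stored word exactly, yet similar words receive more activation than dissimilar ones. A pattern of this kind is what would let a localist model show wordlikeness effects for nonwords.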

The idea that distributed representations of words have properties that are not reducible to the properties of patterns of sublexical representation is important because it makes it possible to distinguish lexically mediated mappings from mappings based on segmental or syllabic representation (cf. Hickok & Poeppel, 2004; 2007; Hickok et al., 2011). In the remainder of this section I will discuss several properties of words that make it possible to distinguish between lexical and sublexical representation based on behavioral and neural data, and then present evidence that purely segmental or syllabic representations cannot account for the human ability to recognize or produce spoken words.

For a representation to be meaningfully lexical, it must have properties that are not completely reducible or attributable to simple patterns of sublexical representation. Several properties satisfy this requirement. One is word frequency. Higher frequency words show a number of processing advantages over their lower frequency counterparts across experimental tasks. Frequent words produce faster lexical decision, shorter naming latencies, earlier N400 responses, and more accurate recognition under adverse listening conditions (cf. Schilling et al., 1998; Pisoni, 1996; Rugg, 1990). In contrast, words that are composed of high frequency phoneme sequences show processing disadvantages on several of the same tasks. In a series of studies, Vitevitch and Luce (1998; 1999; 2005) controlled for word frequency and found that words composed of high phonotactic probability segmental sequences produce longer naming latencies and poorer lexical discrimination than words composed of lower frequency combinations. This suggests that lexical frequency effects cannot be attributed to sublexical factors.

Another uniquely lexical property is phonological neighborhood density. A word’s phonological neighborhood consists of the set of words that can be formed by changing a single phoneme. For example, cad is a neighbor of cat. This measure is lexical in that it is defined in reference to an entire wordform and not simply to its parts. Neighborhood size is correlated with phonotactic frequency (a segmental measure), but word lists can be constructed in which neighborhood density and phonotactic frequency vary orthogonally. Luce and Large (2001) took advantage of this dissociation to show independent effects of phonotactic frequency and neighborhood density in a speeded same-different judgment task.
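The neighborhood definition above can be sketched in a few lines of Python. This is a minimal illustration rather than a standard tool: it follows the single-substitution definition given in the text (it does not count neighbors formed by adding or deleting a phoneme), and the ASCII phoneme codes, the toy lexicon and the function names are all hypothetical.

```python
from typing import Dict, List, Tuple

Phonemes = Tuple[str, ...]

# Hypothetical toy lexicon with rough, ASCII-coded phoneme transcriptions.
TOY_LEXICON: Dict[str, Phonemes] = {
    "cat": ("k", "ae", "t"),
    "cad": ("k", "ae", "d"),
    "bat": ("b", "ae", "t"),
    "cot": ("k", "aa", "t"),
    "cast": ("k", "ae", "s", "t"),
    "dog": ("d", "ao", "g"),
}


def differs_by_one_substitution(a: Phonemes, b: Phonemes) -> bool:
    """True if the forms have equal length and differ in exactly one phoneme."""
    if len(a) != len(b):
        return False
    return sum(x != y for x, y in zip(a, b)) == 1


def neighbors(word: str, lexicon: Dict[str, Phonemes]) -> List[str]:
    """All other words in the lexicon that are one phoneme substitution away."""
    target = lexicon[word]
    return sorted(
        other
        for other, form in lexicon.items()
        if other != word and differs_by_one_substitution(target, form)
    )


def neighborhood_density(word: str, lexicon: Dict[str, Phonemes]) -> int:
    """Neighborhood density is the size of the neighbor set."""
    return len(neighbors(word, lexicon))


if __name__ == "__main__":
    print(neighbors("cat", TOY_LEXICON))
    print(neighborhood_density("dog", TOY_LEXICON))
```

Note that the computation needs the whole lexicon as input: density cannot be read off the phonemes of cat alone, which is the sense in which the measure is lexical rather than segmental.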

The claim that lexical representation is distributed but discriminable from segmental representation may seem counterintuitive, but the two properties are in fact compatible. Segmental representation is widely assumed in models of spoken word recognition despite a large experimental literature that has produced decidedly mixed evidence in favor of the primacy of segmental or syllabic representation. In their review of this literature, Goldinger and Azuma (2003) note that factors including stimulus properties, task demands and even social influences may bias listeners towards attending to different units of representation. Obligatory prelexical segmental representation may be generally assumed, but it is not well supported by the experimental literature.

Several independent lines of evidence suggest that sublexical units such as the segment or syllable are more appropriately viewed as post-lexical units that are only identified by non-obligatory segmentation of words that have been recognized as wholes. Human listeners can comprehend meaningful “compressed” speech at rates of over 400 words per minute (Foulke & Sticht, 1969). This corresponds to an average rate of 30 msec per phoneme. However, when listeners are asked to identify the order of phonemes in recycling sequences consisting of either four vowels or four CV syllables, they are unable to report the order of tokens when phonemes are less than 100 msec in duration (Cullinan et al., 1977). When vowel duration is between 30 and 100 msec in such sequences, listeners show the ability to discriminate between pairs, but not the ability to identify the order of segments (Warren et al., 1990). Warren (1992) notes that if words were perceived as sequences of segments, the ability to identify segmental order would be a prerequisite for word recognition. He interprets the gulf between the temporal limits of word recognition and vowel or syllable recognition as evidence for holistic representation of wordforms and argues that the identity of phonemes is inferred after word recognition rather than being directly perceived. This hypothesis is strengthened by evidence that illiterate subjects and monolingual speakers of languages such as Chinese that employ non-alphabetic orthographies are unable to perform word games that require either adding or deleting individual segments when repeating spoken words (Morais et al., 1979; Read et al., 1986). It is also consistent with Foss and Swinney’s (1973) finding that monitoring latencies for two-syllable words are shorter than those found when subjects monitor for initial segments in the same stimulus materials. A review of functional imaging results in phonological processing studies by Burton et al. (2000) supports this claim.
They found that tasks that require explicit segmentation produce increased activation in the LIFG that is not found in similar tasks that do not require segmentation.
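The 30 msec per phoneme figure cited for compressed speech follows from simple arithmetic once an average word length is assumed. The sketch below makes that step explicit. The value of five phonemes per word is an assumption chosen because it reproduces the cited figure; it is not a value reported in the studies discussed here, and the function and constant names are hypothetical.

```python
def ms_per_word(words_per_minute: float) -> float:
    """Average time available per word, in milliseconds."""
    return 60_000.0 / words_per_minute


def ms_per_phoneme(words_per_minute: float, phonemes_per_word: float) -> float:
    """Average time available per phoneme, in milliseconds."""
    return ms_per_word(words_per_minute) / phonemes_per_word


# Assumption for illustration only: an average of five phonemes per word.
ASSUMED_PHONEMES_PER_WORD = 5.0

# From the text: order identification fails for items shorter than about 100 msec.
ORDER_THRESHOLD_MS = 100.0

if __name__ == "__main__":
    rate = ms_per_phoneme(400, ASSUMED_PHONEMES_PER_WORD)
    print(f"{rate:.0f} msec per phoneme at 400 words per minute")
    print("below order-identification threshold:", rate < ORDER_THRESHOLD_MS)
```

On these assumptions, each word occupies about 150 msec and each phoneme about 30 msec, well under the roughly 100 msec that listeners need to report the order of items in recycled sequences.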

The holistic versus segmental representation question can also be viewed through the lens of dependencies between non-adjacent units in speech production or perception. If representation were at an entirely segmental or syllabic level, one would not expect to find long-range dependencies. However, production studies (see review by Grosvald, 2010) have found evidence of anticipatory coarticulation between segments within a single word separated by as many as six segments. Perception studies similarly show that listeners are sensitive to this coarticulation, and may use it to facilitate the perception of subsequent speech sounds (Martin & Bunnell, 1982; Beddor et al., 2002). Similar long-range dependencies can be found in phonological constraints on word formation. For example, in vowel harmony, the features of one vowel influence features of a nonadjacent vowel within the same word (see review by Clements, 1980). Similarly, in some languages constraints on the patterning of moraic tone contours are defined across syllables within a word (cf. Cheng & Kisseberth, 1979). These phenomena both support the hypothesis that the word is a meaningful phonological unit, and point to the inadequacy of linear, purely segmental or syllabic phonological representation. As a result, phonologists have developed several classes of phonological theory including tiered phonology and autosegmental theory that allow for nonlinear mappings between phonological features and higher level phonological units within the word (cf. Clements & Keyser, 1981; Goldsmith, 1990). These theories provide potential support for the claim that phonological representation may address segmental or syllabic properties while representing words at a holistic, distinctly lexical level of representation.
Segmental or syllabic information may (or may not) be represented by some aspects of a distributed lexical representation, but representations used to access semantic knowledge or mediate the relationship between articulation and sound clearly must encode global wordform properties to account for word frequency, neighborhood density, and coarticulatory effects.

4. Overview of the Dual Lexicon Model

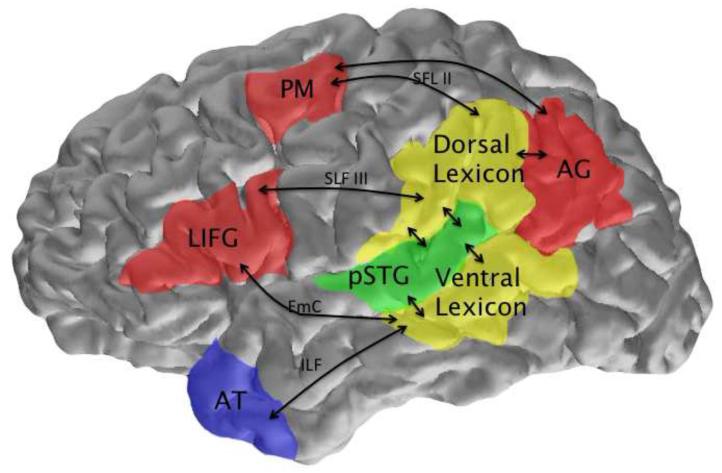

The dual lexicon model works within the broader context of dual pathway models of spoken language processing. The anatomical organization of left hemisphere components of this bilateral model is shown in Figure 1. In the ventral pathway, a lexicon located in pMTG and adjacent pITS mediates the mapping between words and meaning. This area is not a store of semantic knowledge, but instead houses morphologically organized representations of word forms. These representations link the acoustic phonetic representations localized in bilateral pSTG to representations of semantic content in a broad, bilateral distributed network and to syntactic processes in a similarly broad, distributed bilateral network (see contrasting reviews by Caplan, 2007; Grodzinsky & Friederici, 2006; Hickok & Rogalsky, in press). The ventral lexicon therefore plays a role in the semantic interpretation of spoken words, sentence processing, and the production of spoken words to communicate meaning (as in a picture naming task). This characterization of the ventral lexicon is consistent with claims made about the role of pITS and pMTG in current dual pathway models of spoken language processing (Hickok & Poeppel, 2000; 2004; 2007).

Figure 1. Dorsal and ventral lexicons shown within the context of left hemisphere components of a dual stream processing model.

Dorsal stream components are shown in red, and ventral components are shown in blue in this two lexicon model. The bilateral posterior superior temporal gyrus (pSTG), shown in green, is the primary site of acoustic-phonetic analyses of unmodified natural speech. More anterior portions of STG may be associated with the mnestic grouping processes. The dorsal lexicon, found in the supramarginal gyrus and parietal operculum (yellow), mediates the mapping between acoustic phonetic structure and the left dominant articulatory network including premotor cortex, posterior IFG and anterior insula described by Hickok and Poeppel (2001; 2004; 2007), with connectivity supplied by divisions II and III of the superior longitudinal fasciculus (SLF II and SLF III). The angular gyrus is hypothesized to play a role in the identification of sublexical units. The ventral lexicon (yellow), localized in the posterior middle temporal gyrus (pMTG) and adjacent tissue, mediates the mapping between acoustic-phonetic representations in pSTG and conceptual representations associated with a semantic hub in the temporal pole that integrates aspects of semantic representation associated with a widely distributed conceptual network. The pMTG and temporal poles are connected by the inferior longitudinal fasciculus (ILF). Direct connections between pMTG and the IFG are supplied by the extreme capsule (EmC).

A parallel lexicon exists in the dorsal speech pathway, localized to the left SMG (the inferior portion of Brodmann’s area 40, delineated by the intraparietal sulcus, the Jensen or primary intermediate sulcus, the postcentral sulcus and the sylvian fissure) and adjacent parietal operculum. Consistent with the dorsal pathway’s role in the mapping between sound and articulation, the dorsal lexicon houses articulatorily organized word form representations. Where previous accounts imply that dorsal processing operates at a sublexical level, such as the phoneme or syllable, I will argue that the SMG representation is explicitly lexical.

Representations in the dorsal and ventral pathways play a number of complementary roles in processing. Both facilitate acoustic-phonetic conversion in auditory speech perception by providing task-specific top-down influences on pSTG activation (Gow et al., 2008; Gow & Segawa, 2009). These influences depend on the task that the listener is performing, but they also converge, allowing both semantic and phonological context to affect perception. They thus contribute both to the robustness of spoken language interpretation following brain insult and to listeners’ ability to interpret ambiguous or degraded speech sounds.

5. The Ventral Lexicon

Hickok and Poeppel (2004; 2007) identify a region comprising pMTG and adjacent pITS that projects directly to a widely distributed semantic network and acts as a lexical interface between sound and meaning in the ventral pathway. This is clearly a lexicon within the current framework. In contrast, Scott and Wise’s dual stream model (2004) focuses on prelexical processes, and does not identify a comparable structure. In their model, the “what” pathway links low level auditory processing in bilateral primary auditory cortex to higher level auditory processing in lateral anterior and posterior bilateral STG and then anterior STS which is sensitive to speech intelligibility in the left and dynamic pitch variation in the right. They suggest that this collective stream plays a role in the sound to meaning mapping, but stop short of describing any component as playing the role of a lexicon.

Converging evidence from a number of lines of research suggests that the pMTG and adjacent tissue in the pSTS function as a lexicon in the ventral speech stream. Damage to this region is associated with transcortical sensory aphasia (TSA), a condition marked by semantic paraphasias (e.g. calling a table a chair) and impaired auditory word comprehension coupled with preserved spoken word repetition and fluent speech (Wernicke, 1874; Goldstein, 1948; Coslett et al., 1987) (see Table 1 for an overview of neuropsychological syndromes discussed in this review). Boatman et al. (2000) demonstrated that these symptoms could also be transiently induced and narrowly localized across subjects who otherwise showed no symptoms of TSA using direct cortical stimulation by electrode pairs along the middle to posterior left MTG. These deficits do not appear to be caused by the breakdown of perceptual processing. Damage to the pMTG is not associated with impaired acoustic-phonetic discrimination or identification. This observation is supported by work showing that cortical stimulation of this region does not influence performance on these tasks (Boatman et al., 2000), and clinical findings demonstrating that impairment in these tasks is reliably associated with damage to the left frontal and inferior parietal lobes (Blumstein et al., 1977a; Miceli et al., 1980; Caplan et al., 1995).

Table 1.

A summary of language pathologies discussed in this paper.

| | Semantic Dementia | Transcortical Sensory Aphasia | Reproduction Conduction Aphasia | Repetition Conduction Aphasia |
|---|---|---|---|---|
| Primary Lesion Localization | temporal pole | posterior MTG | left SMG and parietal operculum | superior temporal |
| Defining Symptom | loss of semantic knowledge | semantic paraphasia (TABLE → chair) | phonological paraphasia (PEN → pan) | impaired word recall and recognition |
| Auditory Word Comprehension | impaired | impaired | preserved | preserved |
| Nonverbal Comprehension | impaired | preserved | preserved | preserved |
| Speech Production | preserved | preserved | impaired | preserved |
| Phoneme Discrimination | preserved | preserved | impaired | preserved |
| Spoken Word Repetition | preserved | preserved | impaired | impaired |
| Hypothesized Affected Function | conceptual hub | ventral lexicon | dorsal lexicon | phonological short-term store |
| Alternate Term | primary progressive aphasia | word meaning deafness | word form deafness | associative aphasia |

The existence of semantic paraphasias in TSA implicates a breakdown in lexico-semantic processing. This breakdown might be interpreted as either a loss of conceptual knowledge, or a loss of lexical mechanisms for accessing such knowledge. The difference between these two potential deficits is illuminated by the contrast between transcortical sensory aphasia and semantic dementia (SD). Semantic dementia is a neurodegenerative condition characterized by the bilateral degeneration of the anterior temporal lobes and the loss of both verbal and nonverbal semantic knowledge with preserved episodic knowledge (Warrington, 1975; Snowden et al., 1989; Mummery et al., 2000). Several dissociations suggest that the primary deficit is related to word retrieval in TSA, and to semantic representation in SD. Jefferies and Lambon Ralph (2006) found that SD patients showed better word-picture verification performance for more general terms (e.g. “animal”) than for more specific ones (e.g. “Labrador”). TSA patients showed no such semantic specificity effect in tests using the same materials. The same study found that the two patient groups made different types of errors in picture naming. SD patients were more likely to apply a superordinate label to a picture (e.g. kangaroo named as “animal”), while TSA patients were more likely to make an associative error (e.g. squirrel named as “nuts”). The use of more general terms or broader categories is consistent with access to a reduced semantic representation. In contrast, associative naming errors and semantic paraphasias are consistent with preserved semantic representation, but a deficit in the mapping between words and meaning.
This distinction is further supported by evidence that patients with TSA benefit significantly from first-phoneme cueing that supports word retrieval in picture naming tasks, while SD patients show relatively little benefit from cueing (Graham et al., 1995; Patterson et al., 2004; Jefferies & Lambon Ralph, 2006).

This dissociation between anterior temporal and pMTG function suggests the mapping between words and meaning occurs in two distinct steps. In their review of SD and related BOLD imaging work Patterson et al. (2007) argue that the anterior temporal lobe acts as a “semantic hub” linking nodes in a widely distributed neural network that represents disparate aspects of semantic knowledge related to sensorimotor or perceptual representation. Many of the features that define the meaning of a word are assumed to be sensory (e.g. the color or taste of an apple) or motoric (e.g. motoric representations of the acts of picking, cutting or eating an apple). Evidence from pathology and neuroimaging suggests that these features have distributed representation that maps onto the regions of the brain that are involved in the processing of these sensory features or motor acts (see review by Martin, 2007). Patterson and colleagues base their semantic hub argument on the observation that despite the distributed nature of this network, focal damage to the anterior temporal lobe such as that seen in SD leads to a loss of conceptual knowledge, and on BOLD studies showing that the same region is activated in tasks including category judgment (cf. Bright et al., 2004; Rogers et al., 2006). Dissociations between semantic knowledge and the lexically-mediated mapping between sound and meaning support a two-step process in which lexical representations in the pMTG mediate the mapping between acoustic-phonetic representation in the pSTG and amodal conceptual centers in the anterior temporal lobe that project to a distributed network of localized modality specific semantic features.
This organization appears to be supported by a network of white matter connections that include connectivity between the pSTG and pMTG provided by local connections and possibly the posterior segment of the arcuate fasciculus (Catani et al., 2005), and between the left pMTG and the anterior temporal pole provided by the inferior longitudinal fasciculus or ILF (Mandonnet et al., 2007).

Evidence from BOLD imaging extends the case for MTG’s role in lexical processing by demonstrating that the region is sensitive to stimulus properties that correlate uniquely with a lexical level of representation. In auditory lexical decision tasks, the MTG is one of several areas in which BOLD activation is modulated by lexicality (Prabhakaran et al., 2006). High frequency words produce stronger BOLD activation than low frequency words in both anterior and posterior portions of the left MTG (Prabhakaran et al., 2006). These results support the notion that the MTG plays a role in lexical representation.

The more specific claim that the MTG is involved in semantic aspects of lexical processing is supported by a series of results showing differential BOLD activation of the MTG in semantic priming and semantic interference paradigms, in which the semantic relationships between serially presented items influence lexical decision or naming (Rissman, Eliassen & Blumstein, 2003; Sass et al., 2009; de Zubicaray et al., 2001). In general, targets following semantically related primes produce less MTG activation than unrelated targets do in tasks that emphasize automatic processing. This is often attributed to the fact that related words activate overlapping sets of semantic features that in turn prime semantically related lexical representations.

5.1 The Ventral Lexicon’s Role in Unification

Language processing draws on a large, distributed perisylvian network. To fully understand the function of the ventral lexicon it is important to consider how it interacts with other parts of the network during processing. The description offered so far primarily captures the bidirectional mapping between acoustic phonetic representations localized in bilateral pSTG, lemma representations in the posterior temporal lobe, and representations of semantic features that appear to be localized over a very broadly distributed network (Damasio & Damasio, 1994), with an important amodal convergence of information in anterior inferior temporal lobe (Tyler et al., 2004; Visser et al., 2009). This description captures the essential network that is involved in accessing the meaning of individual words heard without context. However, words are primarily experienced in sentences or discourses in which their semantic and syntactic significance is understood in reference to their context. Thus the word pitcher is interpreted differently in the sentences The pitcher sized up the batter and The pitcher is full of ice water, and the word run has different meanings and grammatical functions in the sentences Her first runs were fast and She runs before work.

Recent neuroimaging work suggests that unification, the process of relating individual words to a broader syntactic and semantic context, is achieved by a network that includes the left posterior middle temporal gyrus, the inferior frontal gyri, and subcortical and medial structures including the striatum and anterior cingulate. These structures show differential activation by single-word versus meaningful multi-word stimuli (Snijders et al., 2009), and demonstrate a strong pattern of functional interaction in psychophysiological interaction analyses of BOLD imaging data (Snijders et al., 2010). Within the framework of this network, the posterior middle temporal lobe participates in unification in three identifiable ways.

The first is in its role in the morphological system. Morphemes, which include words, word stems and affixes, are phonologically defined units of meaning. For example, smiled consists of two morphemes: the stem smile and the inflectional suffix –ed that indicates the past tense and in this case makes it clear that smile is a verb rather than a noun in this usage. Morphology is a context sensitive process. For example, in English, verb inflections mark number, so one would say He runs, but they run. An inflection, like tense, may be marked through a regular process (the addition of the suffix –ed in smiled), or through an irregular process (alternate forms such as went for go, ran for run).

There is some argument over whether regular forms and irregular forms are processed by the same or different mechanisms (Pinker, 1999; Ullman et al., 2005; Joanisse & Seidenberg, 2005; McClelland & Rumelhart, 1986; McClelland & Patterson, 2002). Regardless of how this debate is resolved, MTG appears to be activated by both regular and irregular morphological processes. In past tense generation and comprehension tasks both regular and irregular forms have been shown to produce a BOLD response in bilateral pMTG (Joanisse & Seidenberg, 2005), with most studies showing greater activation in this region by irregular forms than by regular ones (Tyler et al., 2005; Yokoyama et al., 2006). In related work, the processing of both spoken and regular morphological markers for gender in Italian (Miceli et al., 2002) and the German plural (Beretta et al., 2003) has also been shown to produce increased pMTG activation. Despite evidence that bilateral pMTG is involved in both forms of morphology, numerous studies have shown a dissociation between impairments in regular and irregular morphology in aphasia (Marslen-Wilson & Tyler, 1997; Tyler et al., 2002; Tyler et al., 2005; Ullman et al., 2005). This work tends to show that frontal lobe damage, particularly damage to the LIFG, is associated with deficits in the processing of regular morphology with preserved processing of irregular forms. Patients with posterior damage, primarily including the left posterior temporal lobe, may show the opposite pattern, providing a double dissociation (Ullman et al., 2005). For regular inflection, processing seems to require interactions between the pMTG and LIFG. These areas appear to be connected by the arcuate fasciculus (Petrides & Pandya, 1988; Catani et al., 2005), and/or the extreme capsule (Makris & Pandya, 2008), and show functional connectivity in morphological processing (Stamatakis et al., 2005).

The same frontotemporal network is also involved in at least two forms of context-dependent interpretation of ambiguous words. In pioneering work in the late 1970s, psycholinguists discovered that listeners who hear ambiguous words such as bug may momentarily access multiple interpretations (e.g. insect, electronic surveillance device), and then use context to select the relevant interpretation (Swinney, 1979). Systematic studies of dictionary meanings show that at least 80% of common words have more than one meaning, and some words may have more than 40 (Parks et al., 1998). Recent BOLD imaging studies have found increased activation in the left pMTG and adjacent pITG and left dominant bilateral IFG associated with polysemous words heard in clearly disambiguating contexts (Rodd et al., 2005; Davis et al., 2007; Zempleni et al., 2007). The IFG activation is consistent with results of related semantic selection studies that reliably show increased left prefrontal activation associated with increased selection demands (Thompson-Schill et al., 1997; Wagner et al., 2001); the ITG and MTG activation in studies of ambiguity is consistent with activation of more lexical items in that area. Similar activation patterns are found in tasks in which sentential context constrains the interpretation of words with ambiguous syntactic category. For example, the word bowl is clearly a noun in the sentence He might drop the bowl, but clearly a verb in the sentence He might bowl a perfect game. Several studies have found that activation of the LIFG and pMTG is modulated by this type of ambiguity (Gennari et al., 2007; Snijders et al., 2009). A recent study using psychophysiological interactions to examine patterns of effective connectivity showed increased coupling between the LIFG and the superior aspect of the left pMTG for ambiguous versus unambiguous sentences of this type (Snijders et al., 2010).
This pattern was primarily left lateralized, but extended to RIFG and right pMTG as well as the striatum. As above, the MTG activation in studies of ambiguity is consistent with activation of more lexical items in that area.

6. The Dorsal Lexicon

A broad convergence of evidence suggests that the supramarginal gyrus (SMG) serves as a dorsal stream lexicon, playing a role in speech production and perception as well as articulatory working memory rehearsal. The notion that speech production and perception share a common lexicon is a matter of some debate, with prominent psycholinguistic models arguing for separate input and output lexica (Dell et al., 1997; Levelt et al., 1999), and models motivated by neuropsychological and functional imaging evidence tending to favor a common resource (Allport, 1984; MacKay, 1987; Coleman, 1998; Buchsbaum et al., 2001; 2003; Hickok & Poeppel, 2000; 2004; 2007). Jacquemot et al. (2007) found a dissociation between production and perceptual performance in a patient with conduction aphasia, and argued that their results favored the existence of interacting phonological codes associated with perception and production with dissociable feedforward and feedback connections. This idea is developed more fully by Hickok et al. (2011), who review evidence from behavioral studies of normal and impaired listeners and neuroimaging results that demonstrate a modulatory role of production resources on speech perception, and a predictive role of perceptual modeling on the control of speech production modeled within a state feedback control framework.

None of the current dual stream models of spoken word recognition recognizes a dorsal stream lexicon. Hickok and Poeppel (2000; 2007) identify left lateralized Spt, a region inside the sylvian fossa that involves the planum temporale and parietal operculum, as an interface between sensory and motor representations in a manner that parallels the role that they ascribe to the MTG as an interface between sensory and semantic representations. Hickok and Poeppel (2000) argue that this region does not function as a phonological store or lexicon. In Hickok et al.’s (2011) state feedback control model of speech production, the Spt acts as a sensorimotor conversion system that acts at the level of segment-sized feature bundles and coarser syllabic units. Syllabic coding could of course be applied to entire monosyllabic words, but the conversion unit in this model is not specifically or intrinsically lexical. It is worth noting that the two studies that most directly support the claim that the Spt is the locus of acoustic-phonetic conversion both showed concurrent activation in the inferior parietal lobe. Buchsbaum et al. (2001) found activation in the parietal operculum associated with the perception and production of nonsense words and Hickok et al. (2003) found activation in a region that appears to include portions of the SMG associated with the rehearsal and production of nonsense sentences and musical sequences. The region that was most strongly activated by linguistic stimuli was a subset of the larger region activated by musical stimuli. The mapping of syllable-sized units from sound to articulation could have several potential roles in speech production. Articulatory coding of syllables could provide a direct non-lexical route to production, which would be useful for word learning. It could also inform lexical representation in the SMG.

While syllabic encoding may facilitate speech production, recent evidence showing an influence of lexical properties on speech production suggests that lexical representations typically mediate the mapping between sound and articulation. A series of studies has demonstrated that word pronunciation is influenced by phonological neighborhood properties (Scarborough, 2003; 2004; Wright, 2004; Munson & Solomon, 2004; Munson, 2007). Unfortunately, phonotactic probability, a sublexical distributional property, may be confounded with phonological neighborhood size in these studies, making it unclear whether these results reflect lexical or sublexical effects. More compelling evidence comes from studies showing that pronunciation is influenced by word frequency (Pluymaekers et al., 2005) and lexical predictability (Bell et al., 2003). Baese-Berk and Goldrick (2009) recently demonstrated that voice onset time (VOT), a correlate of the [voicing] feature, is typically longer in a self-paced single word reading task for word-initial voiceless stop consonants in words that have a voiced minimal-pair competitor than it is for words that do not. Thus, VOT is longer for the /k/ in “coat” (which has the competitor “goat”) than it is for the /k/ in “cope” (which has no such competitor, since “gope” is not a word). These interactions occur in words with the same onset biphones, suggesting that the mapping from sound to articulation is mediated by some form of lexical representation, not sublexical factors. BOLD imaging results by Peramunage et al. (2011) exploring this interaction (described in section 6.2) suggest that the locus of this effect is in left SMG. These results do not preclude the existence of a nonlexical or syllabic pathway from acoustic-phonetic representation to articulation involving Spt rather than SMG, but they do support the claim that there is a lexically-mediated articulatory dorsal pathway involving the SMG.

6.1 Evidence from Disruption of Lexical Processes

Damage to the left supramarginal gyrus and adjacent parietal operculum is associated with several language processing deficits that appear to reflect impaired abstract phonological representation or processing. These include deficits in phoneme discrimination and categorization (Caplan et al., 1995; Gow & Caplan, 1996; Blumstein et al., 1994) and impaired phonological working memory (Paulesu et al., 1993). The critical question is whether these impairments reflect damage to a hypothesized dorsal lexicon.

Several lines of evidence suggest that aphasics with damage to the inferior parietal lobe including SMG show processing deficits that are correlated with lexical frequency. Goldrick and Rapp (2007) describe the patient CSS, who suffered a left parietal infarct. In naming tasks CSS made more errors for low frequency words than for higher frequency words.

Damage to the SMG may also produce a form of conduction aphasia that is characterized in part by sensitivity to lexical frequency in word repetition (see Table 1). Based on a review of previously reported cases of conduction aphasia, Shallice and Warrington (1977) proposed a distinction between repetition and reproduction aphasia. In the former variant, patients tend to show unimpaired spontaneous speech and preserved single word production, but show marked deficits in word recall and recognition (Shallice & Warrington, 1970; Vallar & Baddeley, 1984). In contrast, reproduction conduction aphasia is characterized by frequent phonological paraphasias in spontaneous speech, and impaired single word production in oral reading and picture naming with preserved recognition and recall (Yamadori & Ikumura, 1975). This two-way distinction is confirmed by a study by Axer et al. (2001) that also found an anatomical dissociation between the variants. In a quantitative lesion overlap analysis of CT lesion data from 15 conduction aphasics, they found evidence that the repetition form is associated with superior temporal damage (also see Buchsbaum et al., in press), and that the reproduction form is associated with damage to the left supramarginal gyrus and adjacent parietal operculum.

I will argue that the deficit in some reproduction conduction aphasics is lexical, and provides a double dissociation with the loss of lexical representations in the ventral lexicon associated with transcortical sensory aphasia. The primary features of this dissociation are summarized in Table 1. Two properties of reproduction conduction aphasia suggest that it reflects damage to the hypothesized dorsal lexicon. The first is sensitivity to the lexical properties of stimulus items in repetition tasks, including lexical frequency and word length (Shallice et al., 2000; Knobel et al., 2008; Romani et al., 2011). However, these features could result from damage to sublexical phonological representations in an output “buffer” that plans articulation. The sublexical account might predict symmetrical deficits in word and pronounceable nonword repetition, since both are composed of the same sublexical constituents, but that is not necessarily the only prediction. The existence of patients with reproduction conduction aphasia who show significantly greater deficits in nonword repetition (Caplan et al., 1986; Caramazza et al., 1986; Dubois et al., 1964/1973; Shallice et al., 2000; Strub & Gardner, 1974; Notoya et al., 1982) or better repetition of nonwords that were judged to be highly wordlike than of nonwords judged to be less wordlike (although still phonotactically legal; Saito et al., 2003) is consistent with the hypothesis that nonword repetition draws on the same resources as word repetition, but in a less efficient manner that engages a broader network of overlapping lexical representations (Kohn & Smith, 1994; Shallice et al., 2000).

A number of models of speech production (cf. Fromkin, 1973; Shattuck-Hufnagel, 1979; Dell et al., 1997) posit the existence of a phonological output buffer that serves as an interface between phonological and articulatory representations during syllabification and speech planning. Consistent with these models, several prominent explanations of reproduction conduction aphasia describe the functional deficit as an impairment of this output buffer (Caramazza et al., 1986; Shallice et al., 2000; Saito et al., 2003). Caramazza et al. (1986) described one reproduction patient who showed strong length and serial position effects in error patterns for repetition and spelling of nonwords, which are consistent with buffer-constrained performance. Shallice et al. (2000) describe a subject with a lesion that included both supra- and infra-sylvian cortices who showed similar evidence of an impairment in repetition of both nonwords and words, and suggested that the increased vulnerability of nonwords in these tasks is attributable to increased demands on a common resource.

However, other patients’ performances are not easily attributed to a disruption in the phonological output buffer, subject to support from the lexicon. For example, Romani et al. (2011) examined a set of six conduction aphasics who showed severe deficits in word repetition. In their data, there was neither a consistent or robust pattern of increased errors for longer words that would follow from reduced buffer capacity, nor evidence of the type of serial position effect (by phoneme) that is the hallmark of an output buffer deficit. These data suggest that reproduction conduction aphasia is not the result of damage to a buffer in these cases. Goldrick and Rapp’s (2007) finding of sensitivity to phonological neighborhood density and the sensitivity to lexical frequency seen in some reproduction conduction aphasics suggest that damage to the inferior parietal lobe can lead to a deficit in the long-term representation of lexical wordforms used in planning speech production.

6.2 Functional Imaging Evidence

Evidence from BOLD imaging studies is consistent with evidence from aphasia (cf. Caplan et al., 1996) that implicates the SMG in phoneme discrimination and identification. Several studies have shown an association between SMG activation and dishabituation effects when subjects listen to a series of synthetic syllables and then hear a syllable that begins with a different consonant (Dehaene-Lambertz et al., 2005; Zevin & McCandliss, 2005). Desai et al. (2008) found a correlation between SMG BOLD activation and the strength of categorical perception in a phoneme discrimination task using synthetic speech continua. Similarly, Raizada and Poldrack (2007) found a correlation between neural amplification in the SMG and discrimination scores in a monitoring task involving synthetic nonsense syllable pairs. A meta-analysis by Turkeltaub and Coslett (2010) of eight studies including 123 subjects that used fMRI to study phoneme categorization found significant clusters of activation associated with categorization in the SMG and angular gyrus (AG). These results clearly show that the SMG plays a role in phonological processing. As discussed above, though non-lexical mechanisms are commonly assumed to underlie these effects, it may be that partial matches between nonsense syllables and stored abstract phonological representations of words (e.g. ba and balloon) are the source of this effect. It is also possible that SMG activation is the result of mappings between speech input and phonological representations of both words and sublexical units such as segments.

Lexical representation in SMG is more directly indicated by studies that examine the effects of wordform similarity between words on BOLD activation. Recent functional imaging studies provide converging evidence that SMG activation is modulated by the presence or absence of words that resemble a spoken word. In one study, subjects performed a lexical decision task using spoken words from dense versus sparse lexical neighborhoods (Prabhakaran et al., 2006). Prabhakaran et al. found that words from dense neighborhoods produce increased left SMG activation. In a related study, members of the same group (Righi et al., 2010) combined fMRI with eyetracking techniques to examine the influence of the presence or absence of a phonological competitor in a search task. Subjects heard a word such as “beaker” and were asked to select a picture that matched that word from an array of images. In some trials, one of the visual distracters was an image of a word that shared an onset with the target (e.g. a beetle). When these items were present in the visual array they produced phonological competition, as evidenced by slower overall responses and increased looks to the competitor early in the trial. BOLD imaging revealed that these competition effects were accompanied by increased activation in the bilateral SMG as well as portions of LIFG, left cingulate and left insula.
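
The notion of a dense versus sparse lexical neighborhood can be made concrete with a short sketch. The Python code below is an illustration only, not the procedure used in any of the studies cited above. It assumes the common one-phoneme definition of a neighbor (a word that differs from the target by a single substitution, deletion, or addition), and the toy lexicon, its informal transcriptions, and the function names are all hypothetical.

```python
# Toy lexicon: orthographic label -> tuple of phoneme symbols.
# The entries and transcriptions are illustrative assumptions, not real stimuli.
LEXICON = {
    "cat": ("k", "ae", "t"),
    "bat": ("b", "ae", "t"),
    "cap": ("k", "ae", "p"),
    "cut": ("k", "ah", "t"),
    "at": ("ae", "t"),
    "scat": ("s", "k", "ae", "t"),
    "beaker": ("b", "iy", "k", "er"),
    "beetle": ("b", "iy", "t", "ah", "l"),
}


def is_neighbor(a, b):
    """Return True if a and b differ by exactly one phoneme
    substitution, deletion, or addition."""
    if a == b or abs(len(a) - len(b)) > 1:
        return False
    if len(a) == len(b):
        # Same length: exactly one position may differ (substitution).
        return sum(x != y for x, y in zip(a, b)) == 1
    # Lengths differ by one: removing one phoneme from the longer
    # form must yield the shorter form (deletion or addition).
    shorter, longer = (a, b) if len(a) < len(b) else (b, a)
    return any(longer[:i] + longer[i + 1:] == shorter
               for i in range(len(longer)))


def neighborhood_density(word, lexicon):
    """Count the entries in the lexicon that are neighbors of `word`."""
    target = lexicon[word]
    return sum(is_neighbor(target, form)
               for other, form in lexicon.items() if other != word)


dense = neighborhood_density("cat", LEXICON)      # bat, cap, cut, at, scat
sparse = neighborhood_density("beaker", LEXICON)  # no one-phoneme neighbors
```

In this toy lexicon "cat" sits in a relatively dense neighborhood and "beaker" in a sparse one. Note that "beetle" shares an onset with "beaker" without being a one-phoneme neighbor, so onset competition of the kind described above is a related but distinct measure of wordform similarity.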

Similar competition effects are found in speech production when subjects are asked to read words aloud that either do or do not have a close competitor in the lexicon that differs only in the voicing of the initial segment. For example, cape has the neighbor gape, but cake does not have a comparable neighbor (gake). When a competitor is present in the lexicon (although not present on screen) subjects once again show increased BOLD activation in left SMG, as well as the LIFG and left precentral gyrus (Peramunage et al., 2011).

In our work (Gow et al., 2008), we have used Granger causation analysis to examine the influence of lexical representation on speech categorization. A large body of behavioral work (cf. Ganong, 1980; Pitt & Samuel, 1993) has shown that the perception of lexically ambiguous speech sounds is influenced by their lexical context. For example, a phoneme that is ambiguous between /s/ and /ʃ/ is more likely to be interpreted as /s/ in *andal, and as /ʃ/ in *ampoo. We found that this bias corresponds to a pattern of SMG influence on pSTG activation (associated with acoustic phonetic processing) that begins at the time period where lexical effects are first seen in electrophysiological data.
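
The logic of a Granger analysis can be sketched in a few lines. One signal is said to Granger-cause another if its past improves prediction of the other signal beyond what that signal's own past provides. The Python code below is a minimal illustration using ordinary least-squares autoregression on simulated data. It is not the analysis pipeline of Gow et al. (2008), and the function names, the model order, and the simulated "SMG-like" and "pSTG-like" series are assumptions made for the example.

```python
import numpy as np


def lagged_design(series_list, order, n):
    """Build a design matrix with an intercept and `order` lags of each series."""
    cols = [np.ones(n - order)]
    for s in series_list:
        for lag in range(1, order + 1):
            # Value of s at time t - lag, for t = order .. n - 1.
            cols.append(s[order - lag:n - lag])
    return np.column_stack(cols)


def granger_index(source, target, order=2):
    """Return log(var_restricted / var_full) for predicting `target`.

    The restricted model uses only the target's own past; the full model
    adds the source's past. Larger values mean the source's past helps more.
    """
    source = np.asarray(source, dtype=float)
    target = np.asarray(target, dtype=float)
    n = len(target)
    y = target[order:]
    x_restricted = lagged_design([target], order, n)
    x_full = lagged_design([target, source], order, n)
    res_r = y - x_restricted @ np.linalg.lstsq(x_restricted, y, rcond=None)[0]
    res_f = y - x_full @ np.linalg.lstsq(x_full, y, rcond=None)[0]
    return float(np.log(np.var(res_r) / np.var(res_f)))


# Simulated signals (purely synthetic): the "pSTG-like" series is driven
# by the previous sample of the "SMG-like" series plus a little noise.
rng = np.random.default_rng(0)
n_samples = 500
smg_like = rng.standard_normal(n_samples)
pstg_like = np.zeros(n_samples)
pstg_like[1:] = 0.8 * smg_like[:-1] + 0.1 * rng.standard_normal(n_samples - 1)

forward = granger_index(smg_like, pstg_like)   # source's past is informative
backward = granger_index(pstg_like, smg_like)  # expected to be near zero
```

Because the simulated influence runs in one direction only, the forward index is large and the backward index is close to zero. Analyses of real neural time series face additional problems, such as nonstationarity, common input from other regions, and model order selection, that this sketch does not address.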

As noted, the fact that competition and top-down effects are formally defined by stimulus properties derived from words strongly suggests, but does not conclusively demonstrate, that differences in BOLD activation reflect lexical activation. The existence of phonotactic constraints on how sublexical units (typically phonemes) can be combined to form words leads to a strong correlation between measures of lexical similarity and sublexical constituent frequency. For example, neighborhood density measures, which are generally thought to reflect lexical properties, are highly correlated with sublexical diphone frequency measures (Luce & Pisoni, 1998; Prabhakaran et al., 2006). Moreover, increases in BOLD response observed when a stimulus item has a lexical competitor might reflect increased phonetic analyses needed to discriminate between perceptually similar wordforms. Conversely, behavioral and simulation results suggest that putatively sublexical effects – such as the influence of phonotactic constraints on the perception of nonwords – may be explained by top-down gang effects driven by partially-activated lexical items (McClelland & Elman, 1986). To untangle these factors, the most useful data come from studies that show different activation patterns for tasks that focus on lexical versus segmental processing. Several studies have found that tasks that require explicit segmental categorization do not modulate the SMG BOLD response, but do modulate the activation of other regions including the middle temporal gyrus (MTG), LIFG and AG (Burton et al., 2000; Blumstein et al., 2005; Gow et al., 2008). Blumstein et al. (2005) propose that in these tasks the AG and LIFG are involved in non-obligatory segmentation processes. Seghier et al. (2010) note that the AG is reliably activated in tasks that involve semantic processing and suggest that activation of the dorsal AG in non-semantic perceptual tasks reflects failed attempts to find semantic content in the processing of inherently non-semantic materials. These proposals may not be mutually exclusive. One possibility is that the AG plays a role in inhibiting lexical processing of items that do not lead to semantic processing, and perhaps facilitating sublexical processing involving Spt. In the case of tasks that require sublexical or segmental processing, the same AG mechanism could be invoked strategically by a network involving LIFG and MTG.

6.3 Word Learning Effects

Studies of changes in brain anatomy and activation related to word learning provide converging evidence that the SMG plays a role in lexical representation in the dorsal speech pathway. In one of the first studies in this area, researchers taught subjects a set of unfamiliar archaic words (Cornelissen et al., 2004). MEG was used to examine brain responses before and after learning these words. The study found that 60% of the subjects showed increased activation associated with an equivalent dipole modeled in the inferior parietal lobe during a time period associated with lexical processing (400 msec after word onset). In a similar study using fMRI, another group found increased BOLD response in the same area after subjects learned a set of pseudowords that were used to name novel objects. A closely related line of work has examined correlations between vocabulary size and gray matter density. The earliest work in this area found an association between gray matter density in the left pSMG and bilingualism (Mechelli et al., 2004; Green et al., 2007). More recent work showed an association between gray matter density in the left pSMG and vocabulary size in monolinguals (Lee et al., 2007; Richardson et al., 2010). Taken together, these data suggest that this link is a function of increases in lexicon size associated with knowing more words – either through being multi-lingual or through having a more extensive vocabulary.

7. The function of the STG

The dual lexicon model is an attempt to consolidate new data with our evolving understanding of the role of lexical representation in language processing. This section briefly discusses the role of the posterior superior temporal cortex, the original Wortschatz, in the context of the dual lexicon framework.