Abstract

Attending radiologists routinely edit preliminary reports dictated by radiology trainees as part of standard workflow models. Time constraints, high volume, and spatial separation do not always allow these changes to be discussed clearly with trainees. However, these edits can represent significant teaching moments that are lost if they are not communicated back to trainees. We created an electronic method for retrieving and displaying the changes attending radiologists make to resident-written preliminary reports during report finalization. The Radiology Information System (RIS) is queried at regular intervals, and preliminary and final radiology reports, as well as report metadata, are extracted and stored in a database indexed by accession number and trainee/radiologist identity. A web application presents each trainee with his or her 100 most recent preliminary and final report pairs, both side by side and in a “track changes” mode. Web utilization audits showed regular use by trainees. Surveyed residents stated that they compared reports for educational value, to improve future reports, and to improve patient care. Residents stated that they compared reports more frequently after deployment of this software and that regular assessment of their work using the Report Comparator helped them routinely improve the quality of future reports and improved their radiological understanding. In an era of increasing workload demands, trainee work hour restrictions, and decentralization of department resources (e.g., faculty, PACS), this solution helps retain an important part of the educational experience that would otherwise risk being lost, and it delivers that experience to trainees in an efficient and highly consumable manner.

Keywords: Communication, Computers in medicine, Continuing medical education, Databases, Medical education, Efficiency, Electronic medical record, Electronic teaching file, Internship and residency, Internet, Interpretation errors, Medical records systems, PACS support, Radiology reporting

Background

Reporting Workflow in Academic Radiology Departments

The process of educating residents in the proper techniques of radiology report generation is a time-honored and essential tradition in radiology training programs. Although the technology used for generating and consuming radiology reports and the requirements for effective communication have changed drastically in the past decade, the process for mentoring trainees in this essential skill set has not.

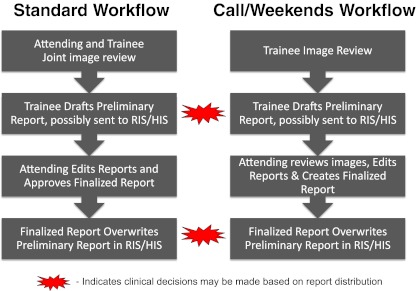

There are two traditional radiology trainee/attending workflow models that lead to the creation of two types of trainee reports (Fig. 1). In the first model, an attending physician reviews imaging studies with a trainee and discusses the relevant findings. Afterwards, the trainee drafts a preliminary report. Some institutions export these preliminary reports to the Radiology Information System (RIS). Depending on the institution, these preliminary reports may also be sent to the Hospital Information System (HIS) for clinicians to view and use in their clinical decision making. In the second model, radiology trainees compose preliminary reports on their own, without a joint trainee–attending review of the study. These reports can also be passed to the RIS and/or HIS; this workflow is likely most common for overnight/on-call cases.

Fig. 1.

Two traditional academic radiology department reporting workflow models are presented: standard and on-call workflows. Clinical decisions may be routinely made based on preliminary or finalized radiology reports, depending on whether information is made available to the HIS

In our institution, radiology resident preliminary reports are passed to the RIS and the HIS labeled “Preliminary Report.” In both workflow models, preliminary reports are eventually revised by attending radiologists during report finalization. Once the attending finalizes the report, the preliminary version in the HIS and RIS is overwritten.

Workflow Challenges Can Impact Educational Experience

Traditionally, it was commonplace for a trainee to review a series of studies and/or reports with an attending as part of face-to-face mentoring during training. Today, multiple barriers inhibit the ability to maintain a regular and consistent attending–trainee dialog about imaging findings and report construction. This is particularly problematic in the overnight or emergency department (ED) call workflow, where the attending physician who reviews and finalizes the report may never have any direct contact with the resident who created the preliminary report. Moreover, the report may be finalized outside of regular work hours. Given the widespread availability of Picture Archiving and Communication Systems (PACS), attending radiologists may even make their edits in a different location in the hospital or at home. Because attending physicians often work with several trainees in a given day, communicating changes is further complicated by the need to track edits across multiple individuals. In addition, the increasing clinical demand for rapid report turnaround presumably limits the time available to review reports with trainees. Finally, the recent institution of resident work hour restrictions may require trainees to leave the hospital, making them less available to receive report feedback.

Although radiology training programs use digital dictation systems, these systems have no inherent features that make it easy for a trainee to compare versions of a report. Typically, a motivated trainee must maintain a log of the cases he or she has dictated and find time to look up each final report, without access to the original preliminary text. While this approach generally allows major discrepancies to be recalled, trainees cannot reasonably be expected to remember or identify minor changes made to their now-overwritten preliminary reports. Furthermore, looking up many imaging studies individually is time-consuming, and some studies inevitably get missed because of errors in creating the log or in searching. The increased time demands on radiology trainees in recent years, together with the lack of a reliable system for self-study of report corrections, mean that skills in report creation are potentially being compromised in training programs.

Electronic Solution for Retrieving Latent Learning Opportunities

Without an effective and efficient way to retrieve these changes and present them to residents, important radiological and reporting teaching points become lost opportunities for resident education. Residents simply must be aware of edits to their reports in order to improve their radiological acumen and reporting skills.

For this reason, we created a simple, semi-automated solution to facilitate identifying changes between preliminary and final reports. After the application was deployed to trainees, we monitored the frequency of logins and the number of unique report views. We then surveyed residents to assess their perceptions both of comparing preliminary and finalized reports in general and of using our semi-automated Report Comparator (RC) application.

Methods

Creation of Report Comparator Software

A server-side script (Active Server Pages, ASP) was created that queries the RIS (General Electric Centricity 10.4) at 15-min intervals, using SQL over an ActiveX Data Objects (ADO) connection, and extracts preliminary and finalized report pairs and their authors. The extracted metadata include accession number, study location, exam modality, body part, technologist identifiers, modifier codes, trainee name, attending name, and examination times (order time, examination start time, exam completion time, dictation start time, and dictation completion time). Every 15 min, any preliminary reports created during the preceding interval are captured and stored, along with their metadata, in a MySQL database table. Any newly finalized reports are likewise captured and matched to their preliminary reports.
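The capture-and-match step above can be sketched as follows. This is a minimal Python illustration, not the authors' ASP script: it assumes an in-memory SQLite table as a stand-in for the MySQL table, a list of dicts as a stand-in for the rows returned by the RIS query, and hypothetical field and table names (`report_pairs`, `accession`, `status`, `author`, `text`, `time`). Because the RIS overwrites the preliminary text at finalization, the preliminary must be stored before that happens, which is presumably why the polling interval is short.

```python
import sqlite3
from datetime import datetime, timedelta

# Hypothetical table layout: one row per accession number holding both report versions.
SCHEMA = """
CREATE TABLE IF NOT EXISTS report_pairs (
    accession   TEXT PRIMARY KEY,
    trainee     TEXT NOT NULL,
    attending   TEXT,
    modality    TEXT,
    prelim_text TEXT NOT NULL,
    prelim_time TEXT NOT NULL,
    final_text  TEXT,
    final_time  TEXT
)
"""


def poll_ris(conn, ris_reports, since):
    """Capture reports the (simulated) RIS produced after `since`.

    `ris_reports` stands in for the rows a real SQL query against the RIS
    would return; the dict keys used here are hypothetical.
    """
    new = [r for r in ris_reports if r["time"] > since]
    # Store preliminaries first so a report finalized within the same window still matches.
    for r in new:
        if r["status"] == "preliminary":
            # INSERT OR IGNORE keeps the first captured text if a report is seen twice.
            conn.execute(
                "INSERT OR IGNORE INTO report_pairs "
                "(accession, trainee, modality, prelim_text, prelim_time) "
                "VALUES (?, ?, ?, ?, ?)",
                (r["accession"], r["author"], r["modality"], r["text"], r["time"].isoformat()),
            )
    for r in new:
        if r["status"] == "final":
            # Match the finalized report to its stored preliminary by accession number.
            conn.execute(
                "UPDATE report_pairs SET final_text = ?, final_time = ?, attending = ? "
                "WHERE accession = ? AND final_text IS NULL",
                (r["text"], r["time"].isoformat(), r["author"], r["accession"]),
            )
    conn.commit()


conn = sqlite3.connect(":memory:")
conn.execute(SCHEMA)
t0 = datetime(2020, 1, 1, 8, 0)
poll_ris(conn, [{"accession": "A1", "status": "preliminary", "author": "resident1",
                 "modality": "CT", "text": "No acute findings.",
                 "time": t0 + timedelta(minutes=5)}], since=t0)
poll_ris(conn, [{"accession": "A1", "status": "final", "author": "attending1",
                 "modality": "CT", "text": "No acute intracranial findings.",
                 "time": t0 + timedelta(minutes=20)}], since=t0 + timedelta(minutes=15))
row = conn.execute(
    "SELECT prelim_text, final_text, attending FROM report_pairs WHERE accession = 'A1'"
).fetchone()
```

A final report whose preliminary was never captured simply matches no row and is ignored here; a production version would also need to persist the last polling time between runs rather than passing `since` in by hand.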

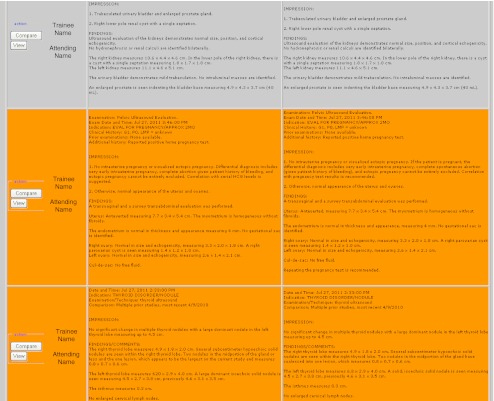

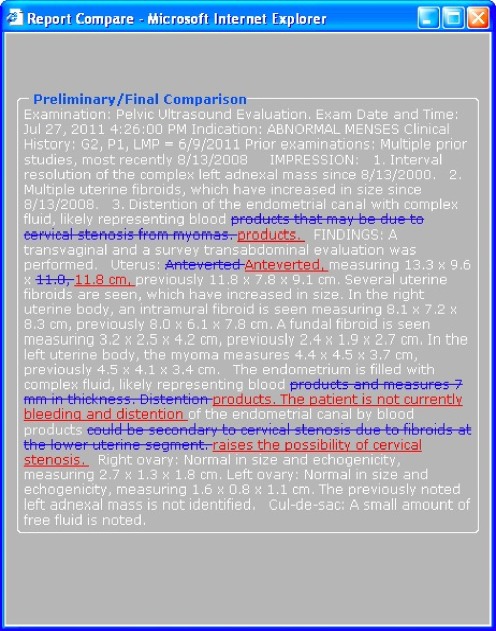

A second web application, the “Report Comparator,” was created to display trainee preliminary and attending finalized report pairs, as well as a track changes analysis of each pair. To view report pairs, a trainee authenticates to the RC website with his or her RIS credentials. Once the trainee is authenticated, the script queries the report database table and returns the 100 most recent reports generated by that trainee in reverse chronological order (Fig. 2). Character and word counts are calculated and stored for each preliminary/final report pair. On demand, the trainee can invoke a text comparison function (JavaScript) that opens a pop-up window showing the report with color-coded insertions (underlined red text) and deletions (strikethrough blue text), making the extent of the changes easier to see (Fig. 3). In addition, the background of each report's row in the table is color-coded according to the percent difference in report length between the preliminary and finalized versions. A checkbox labeled “view only changed reports” at the top of the report list allows residents to hide all reports that were unchanged during finalization. Another button launches the examination in a PACS web applet.
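The track changes display described above can be approximated with a word-level diff. The sketch below uses Python's standard `difflib.SequenceMatcher` rather than the RC's JavaScript comparator, so it illustrates the technique and is not the authors' code. It assumes whitespace-delimited words as the unit of comparison and emits HTML spans styled to match the RC's convention (insertions underlined in red, deletions struck through in blue); the function and template names are hypothetical.

```python
import difflib
import html

# Styles mirror the RC convention: insertions underlined red, deletions struck-through blue.
INS = '<span style="color:red;text-decoration:underline">{}</span>'
DEL = '<span style="color:blue;text-decoration:line-through">{}</span>'


def track_changes(prelim: str, final: str) -> str:
    """Return HTML marking word-level edits that turn `prelim` into `final`."""
    a, b = prelim.split(), final.split()
    matcher = difflib.SequenceMatcher(None, a, b, autojunk=False)
    out = []
    for op, i1, i2, j1, j2 in matcher.get_opcodes():
        if op == "equal":
            out.append(html.escape(" ".join(a[i1:i2])))
        if op in ("delete", "replace"):
            # Words present only in the preliminary report.
            out.append(DEL.format(html.escape(" ".join(a[i1:i2]))))
        if op in ("insert", "replace"):
            # Words added by the attending during finalization.
            out.append(INS.format(html.escape(" ".join(b[j1:j2]))))
    return " ".join(out)


marked = track_changes("No acute findings.", "No acute intracranial findings.")
```

Splitting on whitespace discards line breaks and section layout, which real reports rely on; a fuller version would diff line by line first and then by word within changed lines.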

Fig. 2.

The Report Comparator User Interface displays resident name, attending name, preliminary report, and finalized report. The left column “compare” option launches a “track changes” display (see Fig. 3) and the “view” option launches a PACS browser with study of interest loaded

Fig. 3.

When trainees click “Compare” in the RC User Interface, a “Track Changes” pop-up window demonstrates revisions made during the attending finalization process
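The row color coding and the “view only changed reports” filter described in the Methods could be sketched as below. The source does not state how the percent difference is normalized or where the color thresholds lie, so this sketch assumes the difference is taken relative to the preliminary report's character count and uses hypothetical color bins; `ReportPair`, `row_color`, and `visible_rows` are illustrative names, not part of the RC.

```python
from dataclasses import dataclass


@dataclass
class ReportPair:
    accession: str
    prelim: str
    final: str


def percent_length_difference(pair: ReportPair) -> float:
    """Absolute change in character count, as a percentage of the preliminary length (assumed)."""
    p = len(pair.prelim)
    if p == 0:
        return 100.0 if pair.final else 0.0
    return abs(len(pair.final) - p) / p * 100.0


def row_color(pct: float) -> str:
    # Hypothetical bins; the thresholds used by the RC are not reported.
    if pct == 0:
        return "white"
    if pct < 10:
        return "lightyellow"
    if pct < 25:
        return "orange"
    return "salmon"


def visible_rows(pairs, only_changed=False, limit=100):
    """`pairs` is assumed to be sorted newest first, as in the RC report list."""
    rows = []
    for pair in pairs[:limit]:
        # Compare text, not length: an edit such as "left" -> "none" keeps the length unchanged.
        if only_changed and pair.prelim == pair.final:
            continue
        pct = percent_length_difference(pair)
        rows.append((pair.accession, round(pct, 1), row_color(pct)))
    return rows


pairs = [
    ReportPair("A1", "No acute findings.", "No acute intracranial findings."),
    ReportPair("A2", "Normal chest.", "Normal chest."),
]
```

The filter tests text equality rather than a zero length difference, because length alone cannot tell an unchanged report from one whose edits happen to preserve its length.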

Survey of Resident Sentiment Regarding Comparing Reports and Using the Report Comparator

IRB approval was obtained from our institution before beginning any research involving human subjects or clinical information. Approximately 6 months after the release of the RC software, all residents in our training program were emailed a link to a web survey (www.surveymonkey.com). Respondents’ perceptions about comparing preliminary and finalized reports in general, and then about the impact of the RC software on this process, were assessed using 5-point Likert scales ranging from “Strongly Agree” to “Strongly Disagree,” coded from +2 to −2, respectively. Average agreement and 95% confidence intervals were then calculated.
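The coding and averaging step can be made concrete with a short sketch. The source names only the endpoint labels and does not say how the 95% confidence intervals were computed, so this example assumes the intermediate labels “Agree,” “Neutral,” and “Disagree” and uses a normal-approximation interval (mean ± 1.96 × standard error). A t-based interval (multiplier about 2.06 for 26 respondents) would be slightly wider.

```python
import math
import statistics

# Endpoints (+2 / -2) follow the survey description; intermediate labels are assumed.
LIKERT = {
    "Strongly Agree": 2,
    "Agree": 1,
    "Neutral": 0,
    "Disagree": -1,
    "Strongly Disagree": -2,
}


def average_agreement(responses, z=1.96):
    """Return (AA, (lower, upper)) using a normal-approximation confidence interval."""
    scores = [LIKERT[r] for r in responses]
    mean = statistics.mean(scores)
    # Standard error of the mean from the sample standard deviation.
    sem = statistics.stdev(scores) / math.sqrt(len(scores))
    return mean, (mean - z * sem, mean + z * sem)


aa, (lo, hi) = average_agreement(["Strongly Agree", "Strongly Agree", "Agree", "Agree"])
```

Here the four hypothetical responses code to [2, 2, 1, 1], giving AA = 1.5; any AA above 0 reads as general agreement under the scheme described in the Results.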

In the survey, residents were asked to rate their agreement with the statement that they were interested in knowing how resident-dictated preliminary reports differed from attending-finalized reports. They were then asked about their motivations for comparing reports: whether they did so for educational value, to improve patient care, to improve report quality, or because “doing so is required of me.”

Trainees were asked for their overall agreement with the following statements regarding the RC: “I like using the RC,” “The RC helps significantly improve the quality of my radiology reports,” and “The RC significantly improves my understanding of radiological principles and/or disease processes.”

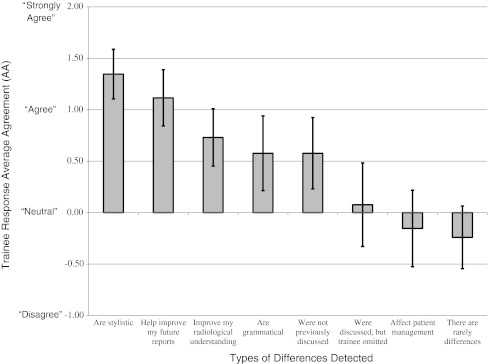

Next, they were asked to rate their agreement with statements about the types of differences they detected with the RC: whether the differences affected patient management, helped improve their future reports, improved their radiological understanding, were grammatical or stylistic, had been previously discussed with the attending radiologist (but were inadvertently omitted), or had not been previously discussed, or whether “there are rarely differences.”

Trainees were also asked their year of training, how often they compared reports before and after the launch of the RC, and what they felt was the most effective way to compare reports.

Results

Report Comparator Usage

Over an 8-month period, there were 993 distinct RC logins by 65 distinct trainees. Each trainee logged in an average of 16 times (95% CI = 11.8–20.2) during the investigated 8-month interval, and each login displayed his or her 100 most recent preliminary/final report pairs. Trainees opened the “track changes” mode for 4,408 distinct reports to view a detailed analysis of the insertions and deletions made within them.

Note that more trainees logged on to the RC during the study period (65) than were surveyed (36). The 65 RC users included 8 residents who graduated from the program during the study interval, after having logged into the RC but before the survey was administered, as well as 19 fellows who logged into the RC but were not surveyed (the survey was sent only to residents). One resident became a fellow at approximately the time the survey was distributed, completed the survey, and was labeled as a fellow in the survey arm of this study.

Survey Results

Survey responses were received from 26 of 36 (72.2%) residents. Respondents’ levels of training were: first-year radiology residents (9), second-year radiology residents (7), third-year radiology residents (6), fourth-year radiology residents (3), and fellow (1).

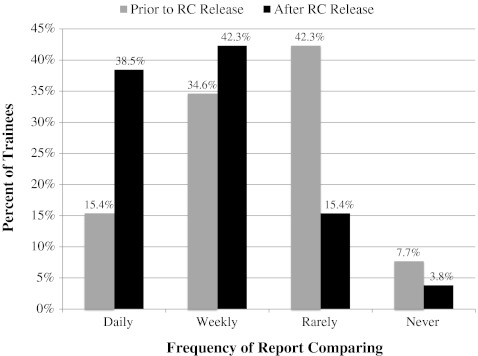

Before the release of the RC, responding trainees reported that they manually reviewed finalized attending reports to discern differences daily to weekly (12, 46.2%) or rarely to never (14, 53.8%). After the RC launch, report comparison increased: daily to weekly (21, 80.8%) and rarely to never (5, 19.2%; Fig. 4). Nine of 26 (34.6%) residents increased their report checking from rarely or never to daily or weekly.

Fig. 4.

Frequency of trainee report comparing behavior is presented prior to and after the Report Comparator release. Trainees compared reports more frequently after Report Comparator software release

Survey responses are reported as average agreement (AA), calculated on a 5-point Likert scale from “Strongly Agree” (+2) to “Strongly Disagree” (−2). AA > 0 corresponds to general agreement and AA < 0 to general disagreement; the magnitude of AA indicates the strength of agreement.

Radiology trainees indicated that they compared preliminary reports to finalized reports, in order of decreasing AA: for educational value, AA = +1.62, 95% CI (1.35–1.88); to improve the quality of future reports, +1.46 (1.15–1.77); to improve patient care, +1.15 (0.77–1.54); and because they perceive it to be a requirement, −0.15 (−0.56 to +0.25).

Specifically regarding the RC, trainees stated that they routinely detected differences that, in order of decreasing AA, are stylistic, +1.35 (1.10–1.59); help improve future reports, +1.12 (0.84–1.39); improve radiological understanding, +0.73 (0.45–1.01); are grammatical, +0.58 (0.21–0.94); and affect patient management, −0.15 (−0.53 to +0.22) (Fig. 5).

Fig. 5.

Resident survey responses regarding report differences residents detected while using the RC. Data are presented as Average Agreement (AA). AA > 0 corresponds to general agreement and AA < 0 corresponds to general disagreement

Regarding use of the RC, trainees stated, in order of decreasing AA, that they like using the RC program, +1.62 (1.40–1.83); that the RC helps significantly improve the quality of their radiology reports, +1.04 (0.74–1.34); and that the RC helps improve their understanding of radiological principles and/or disease processes, +0.46 (0.10–0.83).

Residents stated that the most useful way to compare radiology reports was the RC “track changes” mode (12, 44%), followed by the RC side-by-side report pair comparison (11, 44%) and manual comparison using the HIS/RIS (1, 4%).

Discussion

Effective feedback is an essential component of education and can provide trainees with a stimulus for self-improvement. Authors have suggested that, to be effective, feedback should be comprehensive, balanced, timely, and specific, and should address behaviors within the control and ability of the learner [1, 2]. However, feedback in medical education is commonly perceived as either absent or inadequate [2–5]. Electronic systems are one mechanism for providing effective feedback and have been shown to offer useful and effective educational adjuncts [6].

Efforts to change resident behavior are most effective when feedback is provided as close as possible to the point of care. Although a motivated resident could maintain a manual log of dictated cases for subsequent review, subtle changes are difficult to identify and characterize without ready access to both versions of the report. This process is not only time-intensive and laborious but also inefficient, since residents would likely be unable to identify subtle changes made by attending radiologists across a large number of reports.

While not a substitute for attending–trainee interaction, the RC offers a technical solution that provides comprehensive, balanced, timely, and specific feedback to trainees on their reporting skills. As increased clinical volume and demands on trainee and attending time limit the time available to teach reporting skills, some of these educational pearls can be recaptured through self-study with this system. Because trainees rarely have the opportunity to review report changes and corrections with their attendings, the RC can effectively bridge that educational divide.

Radiology residents recognize the educational value of the edits attending radiologists make to resident dictations. Residents feel that awareness of these changes improves their radiological understanding as well as the quality of their future reports. Residents agreed that there are often differences between preliminary and finalized reports and that many of these differences are stylistic or grammatical.

Residents preferred identifying report edits with the RC over manual comparison in the HIS or RIS. Implementation of the RC software increased the number of residents who made comparing reports part of their daily or weekly routine from 12 to 21, nearly doubling it. It is important to note that residents were never required to access the Report Comparator. These results suggest that when feedback of this nature is made readily available, trainees will voluntarily use it more frequently.

One principal limitation of this solution is that it remains incumbent upon the trainee to judge whether the changes made to a report are stylistic or clinically meaningful. The application performs no direct analysis of the content of the changes. A natural language processor might be useful for flagging changes that could alter clinical meaning, such as the use of negation or alterations in location (e.g., right versus left).
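As a rough illustration of the kind of screening suggested above, the sketch below flags edits that add or remove negation or laterality words. It is a simple keyword heuristic built on Python's `difflib`, not a natural language processor and not part of the RC; the word lists are small, hypothetical examples, and a real system would need far richer vocabularies and a dedicated negation detector that understands scope.

```python
import difflib
import re

# Hypothetical, deliberately small word lists for illustration only.
NEGATION = {"no", "not", "without", "negative", "absent", "resolved"}
LATERALITY = {"left", "right", "bilateral"}


def tokens(text):
    """Lowercase alphabetic tokens; punctuation and numbers are ignored."""
    return re.findall(r"[a-z]+", text.lower())


def flag_sensitive_edits(prelim, final):
    """Return category labels for edits that touch negation or laterality words."""
    a, b = tokens(prelim), tokens(final)
    changed = set()
    matcher = difflib.SequenceMatcher(None, a, b, autojunk=False)
    for op, i1, i2, j1, j2 in matcher.get_opcodes():
        if op != "equal":
            # Collect words removed from the preliminary and words added in the final.
            changed.update(a[i1:i2])
            changed.update(b[j1:j2])
    flags = []
    if changed & NEGATION:
        flags.append("negation")
    if changed & LATERALITY:
        flags.append("laterality")
    return flags


flags = flag_sensitive_edits("Fracture of the left radius.", "Fracture of the right radius.")
```

A purely stylistic rewording such as “Heart size normal.” to “The heart size is normal.” raises no flag, while “No pneumothorax.” to “Small pneumothorax.” is flagged for negation; such flags would only prioritize pairs for the trainee's review, not classify their clinical significance.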

Residents do not perceive that the report differences they generally encounter often affect patient management. Further research is needed to validate these sentiments through a review of actual differences across a large number of reports. There will likely be general, but not absolute, agreement among the consumers of these reports about whether report changes might affect patient management. Additional investigation is recommended to identify the types of differences observed between preliminary and finalized reports, as well as the agreement among radiology trainees, attending radiologists, and referring clinicians regarding the categorization and significance of such differences.

If validated, a quantitative metric applied to all report pairs could potentially be used to monitor resident educational progress. Combined with qualitative analysis of the nature of the changes, such a metric could show whether residents improve their dictation over time and, if they do, whether these improvements reflect genuine growth and better prose or whether trainees are simply learning the nuances of each individual attending and catering to them. Additional analyses could potentially use trends across report pairs, for example combining them with attending RVU values to evaluate attending productivity.
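One candidate for such a quantitative metric is sketched below: the fraction of each report that the attending changed, defined here as 1 minus `difflib.SequenceMatcher.ratio()` on word lists, averaged per calendar month for one trainee. This metric is an assumption for illustration; the source proposes the idea of a metric without defining one, and any real metric would need validation before being used to track progress.

```python
import difflib
from collections import defaultdict
from datetime import date
from statistics import mean


def edit_fraction(prelim, final):
    """1 - word-level similarity: 0.0 means unchanged, values near 1.0 mean mostly rewritten."""
    matcher = difflib.SequenceMatcher(None, prelim.split(), final.split(), autojunk=False)
    return 1.0 - matcher.ratio()


def monthly_edit_trend(pairs):
    """`pairs`: iterable of (finalized_date, prelim, final) for one trainee.

    Returns {(year, month): mean edit fraction}, ordered by month.
    """
    buckets = defaultdict(list)
    for finalized, prelim, final in pairs:
        buckets[(finalized.year, finalized.month)].append(edit_fraction(prelim, final))
    return {month: mean(values) for month, values in sorted(buckets.items())}


trend = monthly_edit_trend([
    (date(2020, 1, 10), "Normal chest.", "Normal chest."),
    (date(2020, 1, 20), "No acute findings.", "No acute intracranial findings."),
    (date(2020, 2, 3), "left", "right"),
])
```

A falling monthly value would be consistent with improving reports, but, as the paragraph above cautions, it could equally reflect a trainee adapting to particular attendings, so the number would need to be read alongside the qualitative analysis.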

Mining existing data into an easy-to-understand report comparison interface has provided a novel and useful tool that enhances resident education using data and resources that are already available. Other training programs that choose to implement this inexpensive solution can likely provide similar educational opportunities to their trainees. RIS and speech recognition vendors could readily offer a similar solution as part of their product lines.

Conclusion

Radiology trainees prefer to learn from attending radiologist edits made to preliminary radiology reports using RC software over manual reconciliation. Implementation of this software motivates them to compare reports more frequently and is perceived to be an efficient way to improve both radiological understanding and reporting skills.

References

1. Wood B. Feedback: a key feature of medical training. Radiology. 2000;215:17–19. doi: 10.1148/radiology.215.1.r00ap5917.

2. Sachdeva AK. Use of effective feedback to facilitate adult learning. J Cancer Educ. 1996;11:106–118. doi: 10.1080/08858199609528405.

3. Ende J. Feedback in clinical medical education. JAMA. 1983;250:777–781. doi: 10.1001/jama.1983.03340060055026.

4. Nadler DA. Feedback and organization development: using databased methods. Reading: Addison-Wesley; 1977.

5. De SK, Henke PK, Ailawadi G, Dimick JB, Colletti LM. Attending, house officer, and medical student perceptions about teaching in the third-year medical school general surgery clerkship. J Am Coll Surg. 2004;199(6):932–942. doi: 10.1016/j.jamcollsurg.2004.08.025.

6. Jaffe CC, Lynch PJ. Computer aided instruction in radiology: opportunities for more effective learning. AJR. 1995;164:463–467. doi: 10.2214/ajr.164.2.7839990.