Abstract

Semantic memory includes all acquired knowledge about the world and is the basis for nearly all human activity, yet its neurobiological foundation is only now becoming clear. Recent neuroimaging studies demonstrate two striking results: the participation of modality-specific sensory, motor, and emotion systems in language comprehension, and the existence of large brain regions that participate in comprehension tasks but are not modality-specific. These latter regions, which include the inferior parietal lobe and much of the temporal lobe, lie at convergences of multiple perceptual processing streams. These convergences enable increasingly abstract, supramodal representations of perceptual experience that support a variety of conceptual functions including object recognition, social cognition, language, and the remarkable human capacity to remember the past and imagine the future.

The centrality of semantic memory in human behavior

Human brains acquire and use concepts with such apparent ease that the neurobiology of this complex process seems almost to have been taken for granted. Although philosophers have puzzled for centuries over the nature of concepts [1], semantic memory (see Glossary) became a topic of formal study in cognitive science only relatively recently [2]. This history is remarkable, given that semantic memory is one of our most defining human traits, encompassing all the declarative knowledge we acquire about the world. A short list of examples includes the names and physical attributes of all objects, the origin and history of objects, the names and attributes of actions, all abstract concepts and their names, knowledge of how people behave and why, opinions and beliefs, knowledge of historical events, knowledge of causes and effects, associations between concepts, categories and their bases, and on and on.

Also remarkable is the variety of everyday cognitive activities that depend on this extensive store of knowledge. A common example is the recognition and use of objects, which has been the focus of much theoretical and empirical work on semantic memory [3–7]. Recognition and use of objects, however, is a capacity shared by many non-human animals that interact with food sources, build simple structures, or use simple tools. More distinctively human is the ability to represent concepts in the form of language, which not only allows conceptual knowledge to spread in an abstract symbolic form but also provides a cognitive mechanism for the fluid and flexible manipulation, association, and combination of concepts [8, 9]. Thus humans use conceptual knowledge for much more than merely interacting with objects. All of human culture, including science, literature, social institutions, religion, and art, is constructed from conceptual knowledge. We do not reason, plan the future, or remember the past without conceptual content: all of these activities depend on activation of concepts stored in semantic memory.

Scientific study of human semantic memory processes has been limited in the past both by a relatively restricted focus on object knowledge and by an experimental tradition emphasizing stimulus-driven brain activity. Human brains are occupied much of the day with reasoning, planning, and remembering. This highly conceptual activity need not be triggered by stimuli in the immediate environment – all of it can be done, and usually is, in the privacy of one's own mind. Together with recent insights gained from studies of patients with semantic memory loss, functional imaging data are rapidly converging on a new anatomical model of the brain systems involved in these processes. Given the centrality of semantic memory to human behavior and human culture, the significance of these discoveries can hardly be overstated.

In this article we propose a large-scale neural model of semantic processing that synthesizes multiple lines of empirical and theoretical work. Our core argument is that semantic memory consists of both modality-specific and supramodal representations, the latter supported by the gradual convergence of information throughout large regions of temporal and inferior parietal association cortex. These supramodal convergences support a variety of conceptual functions including object recognition, social cognition, language and the uniquely human capacity to construct mental simulations of the past and future.

Central issues in semantic processing

A major issue in the study of semantic memory concerns the nature of concept representations. Efforts in the last century to develop artificial intelligence focused on knowledge representation in the form of abstract symbols [10]. This approach led to powerful new techniques for information representation and manipulation (e.g., semantic nets, feature lists, ontologies, schemata). Recent advances in this area used machine learning techniques together with massive verbal inputs to create a highly flexible, probabilistic symbolic network that can respond to general questions in a natural language format [11]. Scientists interested in human brains, on the other hand, have long assumed that the brain represents concepts at least partly in the form of sensory and motor experiences. Nineteenth-century neurologists, for example, pictured a widely distributed `concept field' in the brain where visual, auditory, tactile, and motor `images' associated with a concept were activated in the process of word comprehension [12, 13]. A major advantage of such a theory over a purely symbolic representation is that it provides a parsimonious and biologically plausible mechanism for conceptual learning. Over the course of many similar experiences with entities from the same category, an idealized sensory or motor representation of the entity develops by generalization across unique exemplars, and reactivation or `simulation' of these modality-specific representations forms the basis of concept retrieval [14].
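
The generalization step just described can be illustrated with a toy prototype model. This is a minimal sketch under stated assumptions, not the authors' model: the feature dimensions, their values, and the rule of simply averaging exemplars are all hypothetical choices made for illustration.

```python
from statistics import fmean

# Hypothetical feature dimensions (not taken from the article):
#   [roundness, size, sweetness, graspability], each scaled 0..1


def prototype(exemplars):
    """Average exemplar vectors dimension by dimension.

    Idiosyncratic details of single encounters tend to cancel out, leaving an
    idealized summary of the category.
    """
    return [fmean(values) for values in zip(*exemplars)]


def distance(a, b):
    """Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5


# Each inner list is one (invented) encounter with a category member.
APPLES = [[0.90, 0.30, 0.70, 0.90], [0.80, 0.35, 0.60, 0.95], [0.95, 0.25, 0.80, 0.90]]
MUGS = [[0.50, 0.30, 0.00, 0.80], [0.60, 0.40, 0.00, 0.90], [0.55, 0.35, 0.00, 0.85]]

PROTOTYPES = {"apple": prototype(APPLES), "mug": prototype(MUGS)}


def categorize(item):
    """Assign a new item to the category whose stored prototype is nearest."""
    return min(PROTOTYPES, key=lambda name: distance(item, PROTOTYPES[name]))


print(categorize([0.85, 0.30, 0.65, 0.90]))  # a never-seen, apple-like object; prints: apple
```

Averaging is only one simple way to generalize across exemplars; exemplar-based or neural-network learners would serve the same illustrative purpose.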

In addition to these issues concerning representation of information, questions arise about the mechanisms that control semantic information retrieval. Clearly not all knowledge associated with a concept is relevant in all contexts; thus mechanisms are needed for selecting or attending to task-relevant information [15, 16]. Some conceptual tasks also place strong demands on creativity, a term we use here to refer to flexible problem solving in the absence of strong constraining cues. Creative invention through technological innovation, art, and `brainstorming' are uniquely human endeavors that require fluent conceptual retrieval and flexible association of ideas. Even everyday conversation requires a logical but relatively unconstrained flow of ideas, in which one topic leads to another through a series of associated concepts. This type of flexible association and combination of concepts, though ubiquitous in everyday life, has largely been overlooked in functional imaging studies, which tend to focus on highly constrained retrieval tasks involving recognition of words and objects.

Evidence for modality-specific simulation in comprehension

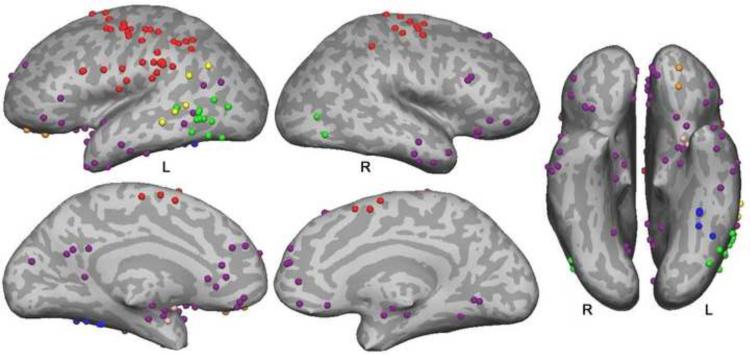

The idea that sensory and motor experiences form the basis of conceptual knowledge has a long history in philosophy, psychology, and neuroscience [1, 3, 12, 13]. In recent years, this proposal has gained new steam under the rubric of `embodied' or `situated' cognition, supported by numerous neuroimaging and behavioral studies. Some of the imaging studies showing modality-specific activations during language processing are summarized in Figure 1. A number of these address action concepts and show that processing action-related language activates brain regions involved in executing and planning actions. Studies of motion, sound, color, olfactory, and gustatory concepts likewise tend to show activation in or near regions that process these perceptual modalities (see legend, Figure 1).

Figure 1. Modality-specific activation peaks during language comprehension.

This figure displays sites of peak activation from 38 imaging studies that examined modality-specific knowledge processing during language comprehension tasks. Peaks were mapped to a common spatial coordinate system and then to a representative brain surface. Action knowledge peaks (red) cluster in primary and secondary sensorimotor regions in the posterior frontal and anterior parietal lobes. Motion peaks (green) cluster in posterior inferolateral temporal regions near the visual motion processing pathway. Note that motion concepts, especially when elicited by action verbs, are difficult to distinguish from action concepts. Peaks near motion processing area MT/MST in four of the studies of action language are interpreted here as reflecting motion knowledge. Auditory peaks (yellow) occur in superior temporal and temporoparietal regions adjacent to auditory association cortex. Color peaks (blue) cluster in the fusiform gyrus just anterior to color-selective regions of extrastriate visual cortex. Olfactory peaks (pink) observed in one study were in olfactory areas (prepiriform cortex and amygdala). Gustatory peaks (orange) were observed in one study in the anterior orbital frontal cortex. Emotion peaks (purple) involve primarily anterior temporal, medial and orbital prefrontal, and posterior cingulate regions. Details regarding study selection and a list of the included studies are provided in supplementary material online.

Challenges to the embodiment view have also arisen. One objection is that activations observed in imaging experiments could be epiphenomenal and not causally related to comprehension [17]. This hypothesis has been tested in patients with various forms of motor system damage. Initial results indicate a selective impairment in comprehending action verbs in patients with Parkinson's disease [18], progressive supranuclear palsy [19], apraxia [20], and motor neuron disease [21, 22]. Several studies employing transcranial magnetic stimulation to induce transient lesions in the primary motor cortex or inferior parietal lobe provide converging results [23–28]. Thus, involvement of the motor system during action word processing contributes to comprehension and is not a mere by-product. A related argument is that the activations represent post-comprehension imagery. In studies using imaging methods with high temporal resolution, however, the activation of motor regions during action word processing appears to be rapid, approximately 150–200 ms from word onset [29–32], suggesting that it is part of early semantic access rather than a result of post-comprehension processes. These converging results provide compelling evidence that sensory-motor cortices play an essential role in conceptual representation.

Although it is often overlooked in reviews of embodied cognition, emotion is as much a modality of experience as sensory and motor processing [33]. Words and concepts vary in the magnitude and specific type of emotional response they evoke, and these emotional responses are a large part of the meaning of many concepts. Purple dots in Figure 1 represent activation peaks from 14 imaging studies that examined activation as a function of the emotional content of words or phrases. There is a clear preponderance of activations in the temporal pole (13 studies) and ventromedial prefrontal cortex (10 studies), both of which play a central role in emotion [34, 35]. Involvement of the temporal pole in high-level representation of emotion may also explain activation in this region associated with social concepts [36, 37], which tend to have strong emotional valence.

Evidence for high-level convergence zones

In addition to modality-specific simulations, we propose that the brain uses abstract, supramodal representations during conceptual tasks. One compelling argument for this view is that the human brain possesses large areas of cortex that are situated between modal sensory-motor systems and thus appear to function as information `convergence zones' [14]. These heteromodal areas include the inferior parietal cortex (angular and supramarginal gyri), large parts of the middle and inferior temporal gyri, and anterior portions of the fusiform gyrus [38]. These areas have expanded disproportionately in the human brain relative to the monkey brain, `taking over' much of the temporal lobe from the visual system [39]. Advocates of a strictly embodied theory of conceptual processing have largely ignored these brain regions, yet they occupy a substantial proportion of the posterior cortex in humans.

A second body of evidence comes from patients with damage in the inferior and lateral temporal lobe, particularly patients with semantic dementia, a syndrome characterized by progressive temporal lobe atrophy and multimodal loss of semantic memory [40, 41]. These patients are unable to retrieve names of objects, categorize objects or judge their relative similarity, identify the correct color or sound of objects, or retrieve knowledge about actions associated with objects [42–45]. Critically, the deficits do not appear to be category-specific [46] – further evidence that the semantic impairment does not involve strongly modal representations.

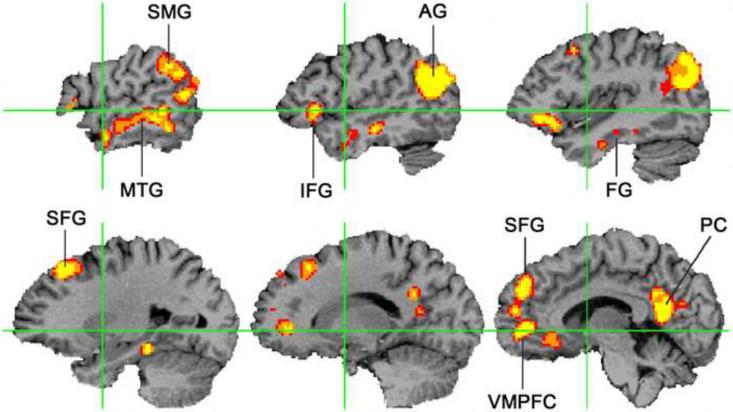

A third large body of evidence comes from functional imaging studies that target general semantic rather than modality-specific semantic processes. For example, many imaging experiments have contrasted words against pseudowords, related against unrelated word pairs, meaningful against nonsensical sentences, and sentences against random word strings. In another type of general semantic contrast, a semantic task (e.g., a semantic decision) is contrasted with a phonological control task (e.g., rhyme decision). What is important to understand about all of these `general' contrasts is that although they elicit differences in the degree of access to semantic information, they include no manipulation of modality-specific information. In the absence of systematic biases affecting stimulus selection, the activations resulting from these contrasts are unlikely to reflect modality-specific representations.

A quantitative meta-analysis of 120 of these studies was recently performed [47]. Studies were included only if they satisfied strict criteria for a semantic contrast. Studies were excluded if the stimuli in the contrasting conditions were not matched on orthographic or phonological properties, or if the activations could be explained by differences in attention or working memory demands. Reliability of the activation sites reported in the studies was analyzed using a volume-based technique called activation likelihood estimation [48].
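
The core computation behind activation likelihood estimation can be sketched as follows, assuming the commonly described formulation: each reported peak is modeled as a 3-D Gaussian probability distribution, each experiment contributes a modeled activation (MA) map, and the ALE value at a voxel is the probability that at least one experiment activated it, ALE = 1 - prod_i(1 - MA_i). The grid size, Gaussian width, example foci, and the simple max-statistic permutation used for family-wise error control are hypothetical simplifications; established implementations such as GingerALE work in a standard brain space with a gray-matter mask and sample-size-dependent kernels.

```python
import math
import random

# Hypothetical toy settings: a small cube of voxels and a fixed Gaussian width.
# Real ALE analyses use a standard brain space, a gray-matter mask, and
# kernel widths that depend on each experiment's sample size.
GRID = 8      # voxels per side
SIGMA = 1.5   # spatial uncertainty of a reported peak, in voxels
VOXELS = [(x, y, z) for x in range(GRID) for y in range(GRID) for z in range(GRID)]


def focus_probabilities(focus):
    """Model one reported peak as a 3-D Gaussian, normalized to sum to 1 over the grid."""
    weights = [
        math.exp(-sum((a - b) ** 2 for a, b in zip(voxel, focus)) / (2 * SIGMA ** 2))
        for voxel in VOXELS
    ]
    total = sum(weights)
    return [w / total for w in weights]


def modeled_activation(foci):
    """One experiment's MA map: per voxel, the largest probability from any of its foci."""
    maps = [focus_probabilities(focus) for focus in foci]
    return [max(values) for values in zip(*maps)]


def ale_map(experiments):
    """ALE = 1 - prod_i(1 - MA_i): chance that at least one experiment activates each voxel."""
    not_active = [1.0] * len(VOXELS)
    for foci in experiments:
        for i, p in enumerate(modeled_activation(foci)):
            not_active[i] *= 1.0 - p
    return [1.0 - q for q in not_active]


def fwe_threshold(experiments, n_perm=100, alpha=0.05, seed=0):
    """Voxel-level FWE threshold from a null distribution of maximum ALE values.

    Each permutation scatters every experiment's foci at random voxels,
    keeping the number of foci per experiment fixed.
    """
    rng = random.Random(seed)
    max_values = []
    for _ in range(n_perm):
        scattered = [[rng.choice(VOXELS) for _ in foci] for foci in experiments]
        max_values.append(max(ale_map(scattered)))
    max_values.sort()
    return max_values[min(n_perm - 1, int((1 - alpha) * n_perm))]


# Hypothetical foci from three experiments; three of them lie close together.
EXPERIMENTS = [
    [(4, 4, 4), (1, 1, 6)],
    [(4, 5, 4)],
    [(5, 4, 4), (6, 1, 1)],
]

ale = ale_map(EXPERIMENTS)
peak_index = max(range(len(VOXELS)), key=ale.__getitem__)
threshold = fwe_threshold(EXPERIMENTS)
print("peak voxel:", VOXELS[peak_index], "ALE = %.4f" % ale[peak_index])
print("FWE threshold (alpha = 0.05): %.4f" % threshold)
print("survives threshold:", ale[peak_index] > threshold)
```

With these inputs the ALE peak falls at the voxel where the three hypothetical experiments overlap most closely; whether it survives the threshold depends on the random seed and on the number of permutations, which is kept deliberately small here.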

The results showed remarkable consistency across studies, with reliable activation throughout the left temporal and parietal heteromodal cortex (Figure 2). These locations are consistent with the location of pathological changes in semantic dementia, as well as with temporal and parietal vascular lesions causing semantic impairments [49–53]. Other consistent sites of activation included the dorsomedial prefrontal cortex (superior frontal gyrus), ventromedial prefrontal cortex, inferior frontal gyrus, and the posterior cingulate gyrus and precuneus. The results offer compelling evidence for high-level convergence zones in the inferior parietal, lateral temporal, and ventral temporal cortex. These regions are far from primary sensory and motor cortices and appear to be involved in processing general rather than modality-specific semantic information.

Figure 2. Meta-analysis of functional imaging studies of semantic processing.

This figure displays brain regions reliably activated by general semantic processes, based on reported activation peaks from 120 independent functional imaging studies (p < .05 corrected for family-wise error). The analysis method assigns a significance value to the degree of spatial overlap between the reported activation coordinates in a standard volume space. The figure shows selected sagittal sections in the left hemisphere; right hemisphere activations occurred in similar locations but were less extensive. AG = angular gyrus, FG = fusiform gyrus, IFG = inferior frontal gyrus, MTG = middle temporal gyrus, PC = posterior cingulate gyrus, SFG = superior frontal gyrus, SMG = supramarginal gyrus, VMPFC = ventromedial prefrontal cortex. Green lines indicate the Y and Z axes in standard space. Adapted from [47].

Embodied abstraction in conceptual representation

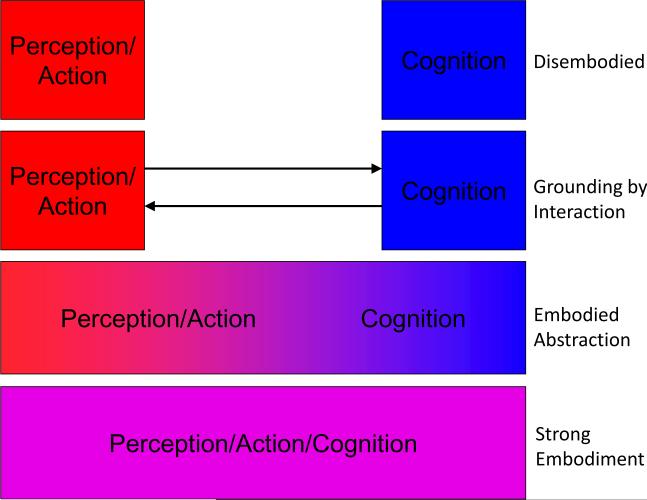

Figure 3 illustrates several prominent theories that differ in the proposed level of separation between conceptual and perceptual representations. Models based on disembodied, symbolic conceptual representations [9, 10] are often criticized on the grounds that such symbols are ultimately devoid of content [54]. From an empirical standpoint, the extensive evidence for involvement of modality-specific sensory, action, and emotion systems during language comprehension is also inconsistent with such a model.

Figure 3. Possible relationships between perceptual and conceptual representation.

Theories of perception and cognition vary in terms of the degree of separation between these processes. Disembodied models propose a complete separation, in which conceptual processing is based entirely on amodal, symbolic representations [9, 10, 17]. Other theories propose that conceptual and perceptual representations are distinct and separate but interact closely so that amodal symbols can derive content from perceptual knowledge [7, 14]. In contrast to both of these theories, strong embodiment models posit that perceptual and conceptual processes are carried out by a single system [55, 56]. The neuroanatomical evidence for multiple modality-specific systems gradually converging on a common semantic network, however, suggests a fourth alternative, `embodied abstraction,' in which conceptual representation is embodied in multiple levels of abstraction from sensory, motor and affective input. The extent to which modality-specific perceptual representations are activated during semantic tasks varies with concept familiarity, demand for perceptual information and degree of contextual support (see Box 1).

At the other end of the spectrum are `strong embodiment' models in which perceptual and conceptual processes are carried out by the same (perceptual) system [55, 56]. These models are inconsistent with the evidence for modality-independent semantic networks reviewed above. Furthermore, conceptual deficits in patients with sensory-motor impairments, when present, tend to be subtle rather than catastrophic. In a recent study of aphasic patients [57], lesions in both sensory-motor and temporal regions were correlated with impairment in a picture-word matching task involving action words. Because damage outside sensory-motor cortex also impaired action-word comprehension, this evidence is incompatible with a strong version of the embodiment account, in which sensory-motor regions are necessary and sufficient for conceptual representation.

Other theories propose that amodal representations derive their content from close interactions with modal perceptual systems [7, 14]. The purpose of amodal representations in these latter models is to bind and efficiently access information across modalities rather than to represent the information itself [58]. The need for distinct amodal representations in such a model has been sharply questioned, however, as multimodal perceptual representations could fulfill the same role [55, 56].

We suggest that the current evidence is most compatible with a view we term `embodied abstraction,' briefly sketched here (see [59, 60] for similar proposals). In this view, conceptual representation consists of multiple levels of abstraction from sensory, motor, and affective input. Not all levels are automatically accessed or activated under all conditions. Rather, this access is subject to factors such as context, frequency, familiarity, and task demands. The top level contains schematic representations that are highly abstracted from detailed representations in the primary perceptual-motor systems. These representations are `fleshed out' to varying degrees by sensory-motor-affective contributions in accordance with task demands. In highly familiar contexts, the schematic representations are sufficient for adequate and rapid processing. In novel contexts or when the task requires deeper processing, sensory-motor-affective systems make a greater contribution in fleshing out the representations (Box 1).

A neuroanatomical model of semantic processing

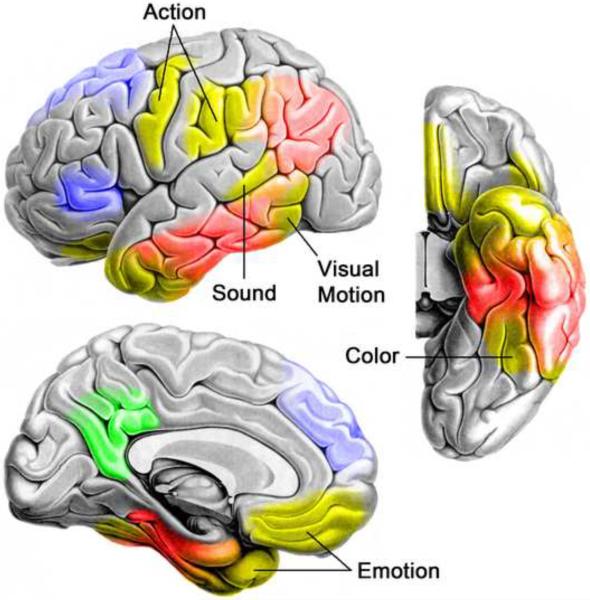

Figure 4 outlines a neuroanatomical model of semantic memory consistent with a broad range of available data. Modality-specific representations (yellow areas in Figure 4), located near corresponding sensory, motor, and emotion networks, develop as a result of experience with entities and events in the external and internal environment. These representations code recurring spatial and temporal configurations of lower-level modal representations. Although depicted as somewhat modular, we view these systems as an interactive continuum of hierarchically ordered neural ensembles, supporting progressively more combinatorial and idealized representations. These systems correspond to Damasio's local convergence zones [14] and to Barsalou's unimodal perceptual symbol systems [55]. In addition to bottom-up input in their associated modality, they receive a range of top-down input from other modal systems and from attention. They are modal in the sense that the information they represent is an analog of (i.e., isomorphic with) their bottom-up input [55].

Figure 4. A neuroanatomical model of semantic processing.

A model of semantic processing in the human brain is shown, based on a broad range of pathological and functional neuroimaging data. Modality-specific sensory, action, and emotion systems (yellow regions) provide experiential input to high-level temporal and inferior parietal convergence zones (red regions) that store increasingly abstract representations of entity and event knowledge. Dorsomedial and inferior prefrontal cortices (blue regions) control the goal-directed activation and selection of the information stored in temporoparietal cortices. The posterior cingulate gyrus and adjacent precuneus (green region) may function as an interface between the semantic network and the hippocampal memory system, helping to encode meaningful events into episodic memory. A similar, somewhat less extensive semantic network exists in the right hemisphere, although the functional and anatomical differences between left and right brain semantic systems are still unclear.

These modal convergence zones in turn converge on higher-level convergence zones located in the inferior parietal lobe and much of the ventral and lateral temporal lobe (red areas in Figure 4). One function of these high-level convergences is to bind representations from two or more modalities, such as the sound and visual appearance of an animal, or the visual representation and action knowledge associated with a hand tool [7, 12, 14, 55]. Such supramodal representations capture similarity structures that define categories, such as the collection of attributes that place `pear' and `light bulb' in different categories despite a superficial similarity of appearance, and `pear' and `pineapple' in the same category despite very different appearances [58]. More generally, supramodal representations allow the efficient manipulation of abstract, schematic conceptual knowledge that characterizes natural language, social cognition, and other forms of highly creative thinking [59, 60].
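
The pear example can be made concrete with invented feature values. In this toy sketch, the features and their values are hypothetical, and simple concatenation of modality-specific vectors is used as a crude stand-in for a supramodal convergence zone (models such as the hub-and-spoke simulations learn the combination rather than fixing it in advance). Visual features alone make `pear' most similar to `light bulb', whereas the combined representation makes it most similar to `pineapple'.

```python
import math

# Hypothetical toy features (invented for illustration, not data from the article),
# grouped by modality:
#   visual: [teardrop outline, smooth surface, spiky crown]
#   taste:  [sweet, edible]
#   action: [eaten, screwed into a socket]
OBJECTS = {
    "pear":       {"visual": [0.9, 0.9, 0.0], "taste": [0.8, 1.0], "action": [1.0, 0.0]},
    "light bulb": {"visual": [0.8, 1.0, 0.0], "taste": [0.0, 0.0], "action": [0.0, 1.0]},
    "pineapple":  {"visual": [0.1, 0.0, 1.0], "taste": [0.9, 1.0], "action": [1.0, 0.0]},
}
MODALITIES = ("visual", "taste", "action")


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def supramodal(name):
    """Crude stand-in for a convergence zone: concatenate all modality-specific vectors."""
    return [value for modality in MODALITIES for value in OBJECTS[name][modality]]


for other in ("light bulb", "pineapple"):
    visual_sim = cosine(OBJECTS["pear"]["visual"], OBJECTS[other]["visual"])
    combined_sim = cosine(supramodal("pear"), supramodal(other))
    print(f"pear vs {other}: visual={visual_sim:.2f} supramodal={combined_sim:.2f}")
```

Run as written, it reports visual similarities of 0.99 (light bulb) versus 0.07 (pineapple), and supramodal similarities of 0.48 versus 0.70.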

These modal and supramodal convergence zones store the actual content of semantic knowledge, whereas the prefrontal regions colored blue in Figure 4 control top-down activation and selection of the content in posterior stores (Box 2). The posterior cingulate gyrus and adjacent precuneus (green area in Figure 4) consistently show semantic effects in imaging experiments and have also been implicated in a wide variety of other processes, as discussed below. Given the strong reciprocal connections this region has with the hippocampal formation, it likely plays a role in encoding semantically and emotionally meaningful events in episodic memory [61], though its precise function remains a topic for future research.

Our view of semantic processing in posterior cortical regions is similar to the `hub and spoke' model of Patterson, Rogers, Lambon Ralph, and colleagues [7, 46, 58] and to the convergence zone model of Damasio [14], but differs in two important respects. First, we do not believe the data support a central role for the temporal pole as the highest level in the convergence zone hierarchy (Box 3). As shown in Figures 2 and 4, multimodal convergence of information processing streams occurs throughout much of the lateral and ventral temporal cortex, as well as in the inferior parietal lobe, whereas the temporal pole receives strong affective input from the ventral frontal lobe and amygdala and is better characterized as a modal region for processing emotion and social concepts [34, 36, 37]. Second, proponents of the hub and spoke model explicitly deny a role for the inferior parietal lobe in representation of semantic information [62]. We believe that the anatomical and functional imaging evidence for semantic memory storage in the inferior parietal lobe is difficult to dismiss, even though the nature of the information represented in this region is still unclear (Box 4).

Social cognition, declarative memory retrieval, prospection, and the default mode

The network of brain regions we associate here with semantic processing has also been linked with more specific functions. Nearly all parts of the network have been implicated in aspects of social cognition, including theory-of-mind (processing of knowledge pertaining to mental states of other people), emotion processing, and knowledge of social concepts [36, 37, 63–67]. Much of the network has been implicated in retrieval of episodic and particularly autobiographical memories [68, 69], leading to the hypothesis that these regions function to retrieve event memories through a process of `scene construction' [70]. The same scene construction processes have been proposed as the basis for `prospection,' i.e., imagining future scenarios for the purpose of planning and goal attainment [71, 72]. Finally, the association of these regions with autobiographical, `self-projection,' and self-referential processes has led to suggestions that they are specifically involved in processing self knowledge [73, 74]. Several recent reviews and meta-analyses attest to the high degree of neuroanatomical overlap between the networks supporting these purportedly distinct processes [67, 75–77].

Given this overlap, it is logical to ask whether there is a process common to all of these cognitive functions. A model based on self-referential processing cannot easily explain activation of the same regions by theory-of-mind tasks, which by definition emphasize knowledge pertaining to others. The general process of mental scene construction is common to episodic memory retrieval, prospection, and many theory-of-mind tasks, but this model cannot explain the consistent activation of these regions by single word comprehension tasks, as shown above in Figure 2. Indeed, the contrasts analyzed in Figure 2 focused on general semantic knowledge (especially knowledge about object concepts) and did not emphasize episodic, autobiographical, social, emotional, self, or any other specific knowledge domain.

One process shared across semantic, social cognition, episodic memory, scene construction, and self-knowledge tasks is the retrieval of conceptual knowledge. The scene construction posited to underlie episodic memory retrieval and prospection refers to a partial, internal simulation of prior experience. But the construction of a scene requires content. The content of such a simulation is conceptual knowledge about particular entities, events, and relationships. The variety of this content is impressive, encompassing object, action, social, self, spatial, and other domains, yet these types of content all share a common basis in sensory-motor experience, learning through generalization across individual exemplars, and progressive abstraction from perceptual detail. We propose that the essential function of the high-level convergence zone network is to store and retrieve this conceptual content, which is employed across a variety of domain-specific tasks.

This network of high-level convergence zones also overlaps extensively with the `default mode network' of regions that show higher levels of activity during passive and `resting' states than during attentional tasks [47, 74–76, 78]. The similarity between all of these networks lends strong support to proposals that `resting' is a cognitively complex condition characterized by episodic and autobiographical memory retrieval, manipulation of semantic and social knowledge, creativity, problem solving, prospection, and planning [75, 78–81]. Several authors have emphasized the profound adaptive value of these processes, which not only enable the attainment of personal goals but are also responsible for all of human cultural and technological development [78, 80, 81].

Concluding remarks

This review proposes a large-scale model of semantic memory organization in the human brain, based on a synthesis of a large body of empirical imaging data with a modified embodiment theory of knowledge representation. In contrast to strong versions of embodiment theory, the data show that large areas of heteromodal cortex participate in semantic memory processes. The multimodal convergence of information toward these brain areas enables progressive abstraction of conceptual knowledge from perceptual experience, which in turn supports rapid and flexible manipulation of this knowledge for language and other highly creative tasks. In contrast to models that identify the temporal pole as the principal site of this information convergence, the evidence suggests involvement of heteromodal regions throughout the temporal and the inferior parietal lobes. We hope this anatomical-functional model provides a useful framework for several future lines of research (Box 5).

Supplementary Material

Box 1. Variability in sensory-motor embodiment.

Modality-specific simulation provides a plausible mechanism for retrieval of concrete object concepts, but difficulties arise in considering abstract concepts. What sensory, motor, or emotional experience is reactivated in comprehending words such as `abstract', `concept', `modality', and `theory'? Another potential difficulty arises from the speed of spoken language, which is easily understood at rates of 3–4 words per second [82], leaving roughly 250–330 ms per word. It is far from clear that an extended sensory, motor, or emotional simulation of each word is possible at such speeds, or even necessary. Thus there is a strong rationale for considering theories that allow both sensory-motor-emotional simulation and manipulation of more abstract representations as a basis for conceptual processing, depending on the exigencies of a given task [59, 60]. Tasks can be placed along a continuum. At one end are tasks that encourage simulation by explicitly requiring mental imagery of an object or event. At the other end are tasks requiring comprehension of rapidly presented, abstract verbal materials that evoke little or no mental simulation. Imagine, for example, that you are deciding which of two cars to buy. This task is likely to engage extended mental simulation of the sensory and motor experiences of examining and test-driving each car. In contrast, imagine hearing someone say, “I don't really have any need or money for a car right now, so it's low on my priority list”. This statement is perfectly understandable and full of meaning, but how extensively must the sensory attributes of `car' be simulated for full comprehension to occur, and to what extent must words such as `need', `now', `low', and `priority' be simulated at all? Another factor that likely modulates the `depth' of simulation during language comprehension is the familiarity of an expression. Imagine that instead of the statement about a car, you hear, “I don't really have any need or money for a llama right now, so it's low on my priority list”.
Comprehending the word `llama' is likely to require an extended visual simulation and the unfamiliarity of the statement itself is likely to elicit a range of simulations involving possible uses for a llama. In general, the involvement of sensory-motor systems in language comprehension seems to change through a gradual abstraction process whereby relatively detailed simulations are needed for unfamiliar or infrequent concepts and these simulations become less detailed as familiarity and contextual support increases [83].
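As a rough illustration of the timing constraint raised in this box, the arithmetic below converts the cited speaking rate of 3–4 words per second into a per-word time budget. It assumes, for simplicity only, a constant rate with no pauses; the symbols r (speaking rate) and t (time available per word) are introduced here for illustration and do not come from the cited work.

```latex
t = \frac{1}{r}, \qquad 3 \le r \le 4\ \text{words/s}
\quad\Longrightarrow\quad
\frac{1}{4}\,\text{s} \le t \le \frac{1}{3}\,\text{s},
\ \text{i.e., roughly } 250\text{--}333\ \text{ms per word}
```

On this simplified estimate, whatever simulation accompanies a given word would have only about a quarter to a third of a second before the next word arrives, although in practice word durations vary and the processing of successive words can overlap.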

Box 2. The role of the temporal poles.

Studies of patients with semantic dementia have drawn attention to the temporal pole (TP) and the proposal that it functions as a central ‘hub’ housing amodal semantic representations [7]. Emphasis on the TP is also consistent with a longstanding view of this region as the zenith of a caudal-to-rostral convergence of information in the temporal lobe [14]. There are, however, several reasons to question claims that the TP is the sole or principal focus of high-level information convergence. The concept of the TP as an anatomical convergence zone is based mainly on two sources of information: the caudal-rostral progression of information processing in the primate ventral visual system [84] and the convergence of massive multimodal inputs on anterior medial temporal regions, particularly perirhinal cortex [85, 86]. Although a caudal-rostral hierarchy of information complexity in the primate visual system is undeniable, the proportion of the temporal lobe devoted to unimodal visual processing is considerably smaller in the human than in the monkey brain [39]. In contrast to the monkey visual system, which occupies ventral and lateral temporal cortex all the way to the temporal pole, the human visual system is largely confined to occipital cortex and posterior ventral temporal lobe. Apart from modal auditory cortex in the superior temporal gyrus, the remaining regions of the human temporal lobe are not clearly modality-specific; therefore, multimodal convergences are likely to occur along the entire length of the temporal lobe. The convergence of inputs on the anterior medial temporal lobe, though sometimes construed as serving a conceptual function [14], is more likely to represent input to the hippocampal system for the purpose of episodic memory encoding [87].

Pathological evidence regarding the TP is also somewhat ambiguous. Although atrophy in semantic dementia is typically most severe in the TP, the total area involved is usually much larger, including most of the ventral temporal cortex [88–90]. Regions showing the strongest correlation between atrophy and semantic deficits actually lie closer to the middle of the temporal lobe than to the TP [90, 91]. Finally, the TP, ventromedial prefrontal cortex and lateral orbitofrontal cortex constitute a tightly interconnected network [34, 92] implicated in processing modality-specific emotional aspects of word meaning (see Figure 1). Considered together, these data suggest that the most anterior parts of the temporal lobe, including the TP and anteromedial temporal regions, are unlikely to be a critical hub for retrieval of multimodal semantic knowledge.

Box 3. The role of the prefrontal cortex.

Imaging studies identify reliable semantic processing effects in the left inferior frontal gyrus (IFG) and in a larger dorsomedial prefrontal region extending from the posterior middle frontal gyrus laterally to the superior frontal gyrus (SFG) medially (see blue areas in Figure 4). Numerous experiments indicate that the IFG is engaged when tasks require effortful selection of semantic information, as when many alternative responses are possible or lexical ambiguity gives rise to competing semantic representations [15, 16, 93, 94]. Consistent with prior reviews [95, 96], the meta-analytical data presented here show more reliable activation of anterior and ventral aspects of the IFG (pars orbitalis and triangularis) than of posterior IFG in semantic studies.

The role of dorsomedial prefrontal cortex in semantic processing has been much less studied, although this region has been a focus of attention in research on emotion processes, social cognition, self-referential processing and the default mode [67, 73, 74, 97–99]. Ischemic lesions to the left SFG cause transcortical motor aphasia, a syndrome characterized by sparse speech output [100, 101]. There is typically a striking disparity between cued and uncued speech production: patients can repeat words and name objects relatively normally but are unable to generate lists of words within a category or to produce non-formulaic responses in conversation. That is, patients perform well when a simple response is fully specified (a word to be repeated or an object to be named) but poorly when they must create a plan for generating a response [102]. This pattern suggests a specific deficit in self-initiated, self-guided retrieval of semantic information. The SFG lies between ventromedial prefrontal areas (rostral cingulate gyrus and medial orbitofrontal cortex), which are involved in emotion and reward, and lateral prefrontal networks involved in cognitive control, and it may act as an intermediary link between these processing systems. We propose that a key role of this region in semantic processing is to translate affective drive states into a coordinated plan for knowledge retrieval, that is, a plan for top-down activation of semantic fields relevant to the problem at hand. On this account, damage to this region causes no loss of stored knowledge per se but impairs the ability to access this knowledge for creative problem solving. As noted earlier, generating creative solutions in open-ended situations (including interpersonal conflicts, mechanical problems, future plans and even trivial conversational exchanges) is a relatively common task in daily life and also appears to be a large component of the conscious ‘resting’ state.

Box 4. The role of the inferior parietal cortex.

The inferior parietal cortex lies at a confluence of visual, spatial, somatosensory and auditory processing streams. Human functional imaging studies implicate this region specifically in representational aspects of semantic memory. For example, the angular gyrus (AG) responds more strongly to words than to matched pseudowords [47], more to high-frequency than to low-frequency words [103], more to concrete than to abstract words [104] and more to meaningful than to meaningless sentences [105]. Thus the level of AG activation seems to reflect the amount of semantic information that can be successfully retrieved from a given input.

Whether the parietal and temporal convergence zones play distinct roles in representing meaning remains a topic for future research, although available evidence offers some intriguing clues. One clue comes from differences in the location and anatomical connectivity of these regions, which to some degree parallel well-established differences between the ventral and dorsal visual networks. The temporal lobe convergence zone receives heavy input from the ventral visual object identification network and from the auditory ‘what’ pathway [106], suggesting that its main role in semantic memory concerns conceptual representation of concrete objects. In contrast, the AG is bounded by dorsal attention networks that play a central role in spatial cognition, by anterior parietal regions concerned with representation of action and by posterior temporal regions supporting movement perception [107]. This anatomical context suggests that the AG may play a unique role in representation of event concepts. Semantic memory research has focused overwhelmingly on knowledge about static concrete entities (i.e., objects, object features, categories), yet much of human knowledge concerns events in which entities interact in space and time. For example, the concept ‘birthday party’ does not refer to a static entity but is instead defined by a configuration of entities (people, cake, candles, presents) and a series of events unfolding in time and space (lighting candles, singing, eating, opening presents). These spatial and temporal configurations define ‘birthday party’ and distinguish it from similar concepts like ‘picnic’ or ‘office party’, in the same way that sets of sensory and motor features distinguish one object from another.

This hypothesis is consistent with recent evidence showing involvement of the AG in retrieval of episodic memories and in understanding theory-of-mind stories. The content of both episodic memory and socially complex stories consists largely of events. Several other studies show specific involvement of the AG in processing temporal and spatial information in stories [108, 109].

Box 5. Questions for future research.

More data are needed to clarify the location of modality-specific conceptual networks. As shown in Figure 1, most of the work to date has focused on knowledge related to action, visual motion and emotion, with very little data on auditory, olfactory and gustatory concepts. Within the visual domain, more work is needed on the representation of specific types of information such as color, visual form, size and spatial knowledge.

We propose here that the degree of activation in modality-specific perceptual systems during conceptual tasks varies with context. This more nuanced version of embodiment theory should be tested in future studies by controlled manipulation of variables such as stimulus familiarity, speed demands and required depth of processing.

The necessity of modality-specific systems for conceptual processing is a critical open issue. Answering this question definitively will require studies of patient groups with different types and degrees of modality-specific impairment, combined with TMS studies that target primary and secondary sensory-motor cortices under varying task demands.

Entities and events constitute two ontologically distinct categories of knowledge with distinct types of attributes, yet there has been little research to date exploring the neural correlates of this distinction. Our hypothesis that the temporal lobe is involved mainly in representation of object knowledge and the inferior parietal lobe in representation of event knowledge is both defensible and testable.

The role of posterior medial cortex (posterior cingulate gyrus and precuneus) in semantic processing remains unclear. Unraveling this mystery will likely require a combination of functional imaging, focal brain lesion, and nonhuman primate studies.

The semantic memory network supports a variety of knowledge domains, including knowledge of self, theory of mind, social concepts, episodic and autobiographical memories, and knowledge of future hypothetical scenarios. Work to date suggests a large degree of overlap in the neural systems supporting these categories of knowledge; thus, a major question for future research is whether these types of knowledge retrieval tasks uniquely or preferentially engage distinct components of the semantic network.

Acknowledgements

Supported by NIH grants R01 NS33576 and R01 DC010783. Thanks to Lisa Conant, Will Graves, Colin Humphries, Tim Rogers, and Mark Seidenberg for helpful discussions.

Glossary

- Embodied cognition

in cognitive neuroscience, the general theory that perceptual and motor systems support conceptual knowledge, that is, that understanding or retrieving a concept involves some degree of sensory or motor simulation of the concept. A related term, situated cognition, refers to a more general perspective that emphasizes a central role of perception and action in cognition, rather than memory and memory retrieval.

- Heteromodal cortex

cortex that receives highly processed, multimodal input not dominated by any single modality; also called supramodal, multimodal, or polymodal.

- Modality-specific representations

information pertaining to a specific modality of experience and processed within the corresponding sensory, motor, or affective system. Modality-specific representations can include primary perceptual or motor information, as well as more complex or abstract representations that are nonetheless modal (e.g., those in extrastriate visual cortex or parabelt auditory cortex). Modal specificity refers to the representational format of the information. For example, knowledge about the sound a piano makes is modally auditory, whereas knowledge about the appearance of a piano is modally visual, and knowledge of the feeling of playing a piano is modally kinesthetic. Modal representations reflect relevant perceptual dimensions of the input, that is, they are analogs of the input. An auditory representation, for example, captures the spectrotemporal form and loudness of an input, whereas a visual representation codes visual dimensions such as visual form, size and color.

- Semantic memory

an individual's store of knowledge about the world. The content of semantic memory is abstracted from actual experience and is therefore said to be conceptual, that is, generalized and without reference to any specific experience. Memory for specific experiences is called episodic memory, although the content of episodic memory depends heavily on retrieval of conceptual knowledge. Remembering, for example, that one had coffee and eggs for breakfast requires retrieval of the concepts of coffee, eggs and breakfast. Episodic memory might be more properly seen as a particular kind of knowledge manipulation that creates spatial-temporal configurations of object and event concepts.

- Simulation

in cognitive neuroscience, the partial re-creation of a perceptual/motor/affective experience or concept through partial reactivation of the neural ensembles originally activated by the experience or concept. Explicit mental imagery may require relatively detailed simulation of a particular experience, whereas tasks such as word comprehension may require only schematic simulations.

- Supramodal representations

information that does not pertain to a single modality of experience. Supramodal representations store information about cross-modal conjunctions, such as a particular combination of auditory and visual object attributes. Their existence is sometimes disputed, yet they provide a simple mechanism for a wide range of inferential capacities, such as knowing the visual appearance of a piano given only its sound and knowing about the conceptual similarity structures that define categories. Supramodal representations may also enable the rapid, schematic retrieval of semantic knowledge that characterizes natural language.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

Appendix A. Supplementary data

Supplementary data associated with this article can be found, in the online version, at

References

- 1. Locke J. An essay concerning human understanding. Dover; 1690/1959.

- 2. Tulving E. Episodic and semantic memory. In: Tulving E, Donaldson W, editors. Organization of Memory. Academic Press; 1972. pp. 381–403.

- 3. Allport DA, Funnell E. Components of the mental lexicon. Philos. Trans. R. Soc. Lond. B. 1981;295:379–410.

- 4. Barsalou LW. Situated simulation in the human conceptual system. Lang. Cogn. Processes. 2003;18:513–562.

- 5. Martin A, Caramazza A. Neuropsychological and neuroimaging perspectives on conceptual knowledge: an introduction. Cogn. Neuropsychol. 2003;20:195–212. doi: 10.1080/02643290342000050.

- 6. Damasio H, et al. Neural systems behind word and concept retrieval. Cognition. 2004;92:179–229. doi: 10.1016/j.cognition.2002.07.001.

- 7. Patterson K, et al. Where do you know what you know? The representation of semantic knowledge in the human brain. Nat. Rev. Neurosci. 2007;8:976–987. doi: 10.1038/nrn2277.

- 8. Vygotsky LS. Thought and language. Wiley; 1962.

- 9. Fodor J. The language of thought. Harvard University Press; 1975.

- 10. Pylyshyn ZW. Computation and cognition: toward a foundation for cognitive science. MIT Press; 1984.

- 11. Ferrucci D, et al. Building Watson: an overview of the DeepQA project. AI Magazine. 2010;31:59–79.

- 12. Wernicke C. Der aphasische Symptomenkomplex. Cohn & Weigert; 1874.

- 13. Freud S. On aphasia: a critical study. International Universities Press; 1891/1953.

- 14. Damasio AR. Time-locked multiregional retroactivation: a systems-level proposal for the neural substrates of recall and recognition. Cognition. 1989;33:25–62. doi: 10.1016/0010-0277(89)90005-x.

- 15. Thompson-Schill SL, et al. Role of left inferior prefrontal cortex in retrieval of semantic knowledge: a reevaluation. Proc. Natl. Acad. Sci. U.S.A. 1997;94:14792–14797. doi: 10.1073/pnas.94.26.14792.

- 16. Wagner AD, et al. Recovering meaning: left prefrontal cortex guides semantic retrieval. Neuron. 2001;31:329–338. doi: 10.1016/s0896-6273(01)00359-2.

- 17. Mahon BZ, Caramazza A. A critical look at the embodied cognition hypothesis and a new proposal for grounding conceptual content. J. Physiol. (Paris) 2008;102:59–70. doi: 10.1016/j.jphysparis.2008.03.004.

- 18. Boulenger V, et al. Word processing in Parkinson's disease is impaired for action verbs but not for concrete nouns. Neuropsychologia. 2008;46:743–756. doi: 10.1016/j.neuropsychologia.2007.10.007.

- 19. Bak TH, et al. Clinical, imaging and pathological correlates of a hereditary deficit in verb and action processing. Brain. 2006;129:321–332. doi: 10.1093/brain/awh701.

- 20. Buxbaum LJ, Saffran EM. Knowledge of object manipulation and object function: dissociations in apraxic and nonapraxic subjects. Brain Lang. 2002;82:179–199. doi: 10.1016/s0093-934x(02)00014-7.

- 21. Bak TH, Hodges JR. The effects of motor neurone disease on language: further evidence. Brain Lang. 2004;89:354–361. doi: 10.1016/S0093-934X(03)00357-2.

- 22. Grossman M, et al. Impaired action knowledge in amyotrophic lateral sclerosis. Neurology. 2008;71:1396–1401. doi: 10.1212/01.wnl.0000319701.50168.8c.

- 23. Oliveri M, et al. All talk and no action: a transcranial magnetic stimulation study of motor cortex activation during action word production. J. Cogn. Neurosci. 2004;16:374–381. doi: 10.1162/089892904322926719.

- 24. Buccino G, et al. Listening to action-related sentences modulates the activity of the motor system: a combined TMS and behavioral study. Brain Res. Cogn. Brain Res. 2005;24:355–363. doi: 10.1016/j.cogbrainres.2005.02.020.

- 25. Pulvermuller F, et al. Functional links between motor and language systems. Eur. J. Neurosci. 2005;21:793–797. doi: 10.1111/j.1460-9568.2005.03900.x.

- 26. Glenberg AM, et al. Processing abstract language modulates motor system activity. Q. J. Exp. Psychol. 2008;61:905–919. doi: 10.1080/17470210701625550.

- 27. Pobric G, et al. Category-specific versus category-general semantic impairment induced by transcranial magnetic stimulation. Curr. Biol. 2010;20:964–968. doi: 10.1016/j.cub.2010.03.070.

- 28. Ishibashi R, et al. Different roles of lateral anterior temporal lobe and inferior parietal lobule in coding function and manipulation tool knowledge: evidence from an rTMS study. Neuropsychologia. 2011;49:1128–1135. doi: 10.1016/j.neuropsychologia.2011.01.004.

- 29. Pulvermuller F, et al. Brain signatures of meaning access in action word recognition. J. Cogn. Neurosci. 2005;17:884–892. doi: 10.1162/0898929054021111.

- 30. Boulenger V, et al. Cross-talk between language processes and overt motor behavior in the first 200 msec of processing. J. Cogn. Neurosci. 2006;18:1607–1615. doi: 10.1162/jocn.2006.18.10.1607.

- 31. Revill KP, et al. Neural correlates of partial lexical activation. Proc. Natl. Acad. Sci. U.S.A. 2008;105:13111–13115. doi: 10.1073/pnas.0807054105.

- 32. Hoenig K, et al. Conceptual flexibility in the human brain: dynamic recruitment of semantic maps from visual, motor, and motion-related areas. J. Cogn. Neurosci. 2008;20:1799–1814. doi: 10.1162/jocn.2008.20123.

- 33. Vigliocco G, et al. Toward a theory of semantic representation. Lang. Cogn. 2009;1:219–248.

- 34. Olson IR, et al. The enigmatic temporal pole: a review of findings on social and emotional processing. Brain. 2007;130:1718–1731. doi: 10.1093/brain/awm052.

- 35. Etkin A, et al. Emotional processing in anterior cingulate and medial prefrontal cortex. Trends Cogn. Sci. 2011;15:85–93. doi: 10.1016/j.tics.2010.11.004.

- 36. Ross LA, Olson IR. Social cognition and the anterior temporal lobes. Neuroimage. 2010;49:3452–3462. doi: 10.1016/j.neuroimage.2009.11.012.

- 37. Zahn R, et al. Social concepts are represented in the superior anterior temporal cortex. Proc. Natl. Acad. Sci. U.S.A. 2007;104:6430–6435. doi: 10.1073/pnas.0607061104.

- 38. Mesulam M. Patterns in behavioral neuroanatomy: association areas, the limbic system, and hemispheric specialization. In: Mesulam M, editor. Principles of Behavioral Neurology. F.A. Davis; 1985. pp. 1–70.

- 39. Orban GA, et al. Comparative mapping of higher visual areas in monkeys and humans. Trends Cogn. Sci. 2004;8:315–324. doi: 10.1016/j.tics.2004.05.009.

- 40. Hodges JR, et al. Semantic dementia: progressive fluent aphasia with temporal lobe atrophy. Brain. 1992;115:1783–1806. doi: 10.1093/brain/115.6.1783.

- 41. Mummery CJ, et al. A voxel-based morphometry study of semantic dementia: Relationship between temporal lobe atrophy and semantic memory. Ann. Neurol. 2000;47:36–45.

- 42. Bozeat S, et al. Nonverbal semantic impairment in semantic dementia. Neuropsychologia. 2000;38:1207–1215. doi: 10.1016/s0028-3932(00)00034-8.

- 43. Hodges JR, et al. The role of conceptual knowledge in object use: evidence from semantic dementia. Brain. 2000;123:1913–1925. doi: 10.1093/brain/123.9.1913.

- 44. Bozeat S, et al. When objects lose their meaning: what happens to their use? Cogn. Affect. Behav. Neurosci. 2002;2:236–251. doi: 10.3758/cabn.2.3.236.

- 45. Rogers TT, et al. Colour knowledge in semantic dementia: it is not all black and white. Neuropsychologia. 2007;45:3285–3298. doi: 10.1016/j.neuropsychologia.2007.06.020.

- 46. Lambon Ralph MA, et al. Neural basis of category-specific semantic deficits for living things: evidence from semantic dementia, HSVE and a neural network model. Brain. 2007;130:1127–1137. doi: 10.1093/brain/awm025.

- 47. Binder JR, et al. Where is the semantic system? A critical review and meta-analysis of 120 functional neuroimaging studies. Cereb. Cortex. 2009;19:2767–2796. doi: 10.1093/cercor/bhp055.

- 48. Turkeltaub PE, et al. Meta-analysis of the functional neuroanatomy of single-word reading: method and validation. Neuroimage. 2002;16:765–780. doi: 10.1006/nimg.2002.1131.

- 49. Alexander MP, et al. Distributed anatomy of transcortical sensory aphasia. Arch. Neurol. 1989;46:885–892. doi: 10.1001/archneur.1989.00520440075023.

- 50. Damasio H. Neuroimaging contributions to the understanding of aphasia. In: Boller F, Grafman J, editors. Handbook of neuropsychology. Elsevier; 1989. pp. 3–46.

- 51. Hart J, Gordon B. Delineation of single-word semantic comprehension deficits in aphasia, with anatomic correlation. Ann. Neurol. 1990;27:226–231. doi: 10.1002/ana.410270303.

- 52. Chertkow H, et al. On the status of object concepts in aphasia. Brain Lang. 1997;58:203–232. doi: 10.1006/brln.1997.1771.

- 53. Dronkers NF, et al. Lesion analysis of the brain areas involved in language comprehension. Cognition. 2004;92:145–177. doi: 10.1016/j.cognition.2003.11.002.

- 54. Harnad S. The symbol grounding problem. Physica D. 1990;42:335–346.

- 55. Barsalou LW. Perceptual symbol systems. Behav. Brain Sci. 1999;22:577–660. doi: 10.1017/s0140525x99002149.

- 56. Gallese V, Lakoff G. The brain's concepts: the role of the sensory-motor system in conceptual knowledge. Cogn. Neuropsychol. 2005;22:455–479. doi: 10.1080/02643290442000310.

- 57. Arévalo AL, et al. What do brain lesions tell us about theories of embodied semantics and the human mirror neuron system? Cortex. in press. doi: 10.1016/j.cortex.2010.06.001.

- 58. Rogers TT, McClelland JL. Semantic cognition: a parallel distributed processing approach. MIT Press; 2004.

- 59. Dove G. On the need for embodied and dis-embodied cognition. Frontiers Psychol. 2011;1:242. doi: 10.3389/fpsyg.2010.00242.

- 60. Taylor LJ, Zwaan RA. Action in cognition: the case for language. Lang. Cogn. 2009;1:45–58.

- 61. Valenstein E, et al. Retrosplenial amnesia. Brain. 1987;110:1631–1646. doi: 10.1093/brain/110.6.1631.

- 62. Jefferies E, Lambon Ralph MA. Semantic impairment in stroke aphasia versus semantic dementia: a case-series comparison. Brain. 2006;129:2132–2147. doi: 10.1093/brain/awl153.

- 63. Fletcher PC, et al. Other minds in the brain: a functional imaging study of ‘theory of mind’ in story comprehension. Cognition. 1995;57:109–128. doi: 10.1016/0010-0277(95)00692-r.

- 64. Saxe R, Kanwisher N. People thinking about thinking people: the role of the temporo-parietal junction in ‘theory of mind’. Neuroimage. 2003;19:1835–1842. doi: 10.1016/s1053-8119(03)00230-1.

- 65. Amodio DM, Frith CD. Meeting of minds: the medial frontal cortex and social cognition. Nat. Rev. Neurosci. 2006;7:268–277. doi: 10.1038/nrn1884.

- 66. Aichhorn M, et al. Temporo-parietal junction activity in Theory-of-Mind tasks: falseness, beliefs, or attention. J. Cogn. Neurosci. 2009;21:1179–1192. doi: 10.1162/jocn.2009.21082.

- 67. Van Overwalle F. Social cognition and the brain: a meta-analysis. Hum. Brain Mapp. 2009;30:829–858. doi: 10.1002/hbm.20547.

- 68. Svoboda E, et al. The functional neuroanatomy of autobiographical memory: A meta-analysis. Neuropsychologia. 2006;44:2189–2208. doi: 10.1016/j.neuropsychologia.2006.05.023.

- 69. Vilberg KL, Rugg MD. Memory retrieval and the parietal cortex: a review of evidence from a dual-process perspective. Neuropsychologia. 2008;46:1787–1799. doi: 10.1016/j.neuropsychologia.2008.01.004.

- 70. Hassabis D, Maguire EA. Deconstructing episodic memory with construction. Trends Cogn. Sci. 2007;11:299–306. doi: 10.1016/j.tics.2007.05.001.

- 71. Addis DR, et al. Remembering the past and imagining the future: common and distinct neural substrates during event construction and elaboration. Neuropsychologia. 2007;45:1363–1377. doi: 10.1016/j.neuropsychologia.2006.10.016.

- 72. Gerlach KD, et al. Solving future problems: default network and executive activity associated with goal-directed mental simulations. Neuroimage. 2011;55:1816–1824. doi: 10.1016/j.neuroimage.2011.01.030.

- 73. Gusnard DA, et al. Medial prefrontal cortex and self-referential mental activity: relation to a default mode of brain function. Proc. Natl. Acad. Sci. U.S.A. 2001;98:4259–4264. doi: 10.1073/pnas.071043098.

- 74. Whitfield-Gabrieli S, et al. Associations and dissociations between default and self-reference networks in the human brain. Neuroimage. 2011;55:225–232. doi: 10.1016/j.neuroimage.2010.11.048.

- 75. Buckner RL, et al. The brain's default network: anatomy, function, and relevance to disease. Ann. N. Y. Acad. Sci. 2008;1124:1–38. doi: 10.1196/annals.1440.011.

- 76. Spreng RN, et al. The common neural basis of autobiographical memory, prospection, navigation, theory of mind, and the default mode: a quantitative meta-analysis. J. Cogn. Neurosci. 2009;21:489–510. doi: 10.1162/jocn.2008.21029.

- 77.Spreng RN, Grady CL. Patterns of brain activity supporting autobiographical memory, prospection, and theory of mind, and their relationship to the default mode network. J. Cogn. Neurosci. 2009;22:1112–1123. doi: 10.1162/jocn.2009.21282. [DOI] [PubMed] [Google Scholar]

- 78.Binder JR, et al. Conceptual processing during the conscious resting state: a functional MRI study. J. Cogn. Neurosci. 1999;11:80–93. doi: 10.1162/089892999563265. [DOI] [PubMed] [Google Scholar]

- 79.Ingvar DH. Memory of the future: an essay on the temporal organization of conscious awareness. Hum. Neurobiol. 1985;4:127–136. [PubMed] [Google Scholar]

- 80.Andreasen NC, et al. Remembering the past: two facets of episodic memory explored with positron emission tomography. Am. J. Psychiatry. 1995;152:1576–1585. doi: 10.1176/ajp.152.11.1576. [DOI] [PubMed] [Google Scholar]

- 81.Andrews-Hanna JR. The brain's default network and its adaptive role in internal mentation. Neuroscientist. 2011 doi: 10.1177/1073858411403316. doi: 10.1177/1073858411403316. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 82.Fairbanks G. Experimental phonetics: selected articles. University of Illinois Press; 1966. [Google Scholar]

- 83.Desai RH, et al. The neural career of sensorimotor metaphors. J. Cogn. Neurosci. 2011;23:2376–2386. doi: 10.1162/jocn.2010.21596. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 84.Felleman DJ, Van Essen DC. Distributed hierarchical processing in the primate cerebral cortex. Cereb. Cortex. 1991;1:1–47. doi: 10.1093/cercor/1.1.1-a. [DOI] [PubMed] [Google Scholar]

- 85.Jones EG, Powell TSP. An anatomical study of converging sensory pathways within the cerebral cortex of the monkey. Brain. 1970;93:793–820. doi: 10.1093/brain/93.4.793. [DOI] [PubMed] [Google Scholar]

- 86.Van Hoesen GW. The parahippocampal gyrus: new observations regarding its cortical connections in the monkey. Trends Neurosci. 1982;5:345–350. [Google Scholar]

- 87.Squire LR. Memory and the hippocampus: a synthesis from findings with rats, monkeys, and humans. Psychol. Rev. 1992;99:195–231. doi: 10.1037/0033-295x.99.2.195.

- 88.Gorno-Tempini ML, et al. Cognition and anatomy in three variants of primary progressive aphasia. Ann. Neurol. 2004;55:335–346. doi: 10.1002/ana.10825.

- 89.Rohrer JD, et al. Patterns of cortical thinning in the language variants of frontotemporal lobar degeneration. Neurology. 2009;72:1562–1569. doi: 10.1212/WNL.0b013e3181a4124e.

- 90.Mion M, et al. What the left and right anterior fusiform gyri tell us about semantic memory. Brain. 2010;133:3256–3268. doi: 10.1093/brain/awq272.

- 91.Binney RJ, et al. The ventral and inferolateral aspects of the anterior temporal lobe are crucial in semantic memory: evidence from a novel direct comparison of distortion-corrected fMRI, rTMS, and semantic dementia. Cereb. Cortex. 2010;20:2728–2738. doi: 10.1093/cercor/bhq019.

- 92.Kondo H, et al. Differential connections of the temporal pole with the orbital and medial prefrontal networks in macaque monkeys. J. Comp. Neurol. 2003;465:499–523. doi: 10.1002/cne.10842.

- 93.Badre D, et al. Dissociable controlled retrieval and generalized selection mechanisms in ventrolateral prefrontal cortex. Neuron. 2005;47:907–918. doi: 10.1016/j.neuron.2005.07.023.

- 94.Rodd JM, et al. The neural mechanisms of speech comprehension: fMRI studies of semantic ambiguity. Cereb. Cortex. 2005;15:1261–1269. doi: 10.1093/cercor/bhi009.

- 95.Fiez JA. Phonology, semantics and the role of the left inferior prefrontal cortex. Hum. Brain Mapp. 1997;5:79–83.

- 96.Bookheimer SY. Functional MRI of language: new approaches to understanding the cortical organization of semantic processing. Annu. Rev. Neurosci. 2002;25:151–188. doi: 10.1146/annurev.neuro.25.112701.142946.

- 97.Zysset S, et al. Functional specialization within the anterior medial prefrontal cortex: a functional magnetic resonance imaging study with human subjects. Neurosci. Lett. 2003;335:183–186. doi: 10.1016/s0304-3940(02)01196-5.

- 98.Heatherton TF, et al. Medial prefrontal activity differentiates self from close others. Soc. Cogn. Affect. Neurosci. 2006;1:18–25. doi: 10.1093/scan/nsl001.

- 99.Mitchell JP, et al. Dissociable medial prefrontal contributions to judgments of similar and dissimilar others. Neuron. 2006;50:655–663. doi: 10.1016/j.neuron.2006.03.040.

- 100.Luria AR, Tsvetkova LS. The mechanism of 'dynamic aphasia'. Found. Lang. 1968;4:296–307.

- 101.Alexander MP, et al. Frontal lobes and language. Brain Lang. 1989;37:656–691. doi: 10.1016/0093-934x(89)90118-1.

- 102.Robinson G, et al. Dynamic aphasia: an inability to select between competing verbal responses? Brain. 1998;121:77–89. doi: 10.1093/brain/121.1.77.

- 103.Graves WW, et al. Neural systems for reading aloud: a multiparametric approach. Cereb. Cortex. 2010;20:1799–1815. doi: 10.1093/cercor/bhp245.

- 104.Binder JR, et al. Distinct brain systems for processing concrete and abstract concepts. J. Cogn. Neurosci. 2005;17:905–917. doi: 10.1162/0898929054021102.

- 105.Humphries C, et al. Time course of semantic processes during sentence comprehension: an fMRI study. Neuroimage. 2007;36:924–932. doi: 10.1016/j.neuroimage.2007.03.059.

- 106.Rauschecker JP, Tian B. Mechanisms and streams for processing of 'what' and 'where' in auditory cortex. Proc. Natl. Acad. Sci. U.S.A. 2000;97:11800–11806. doi: 10.1073/pnas.97.22.11800.

- 107.Kravitz DJ, et al. A new neural framework for visuospatial processing. Nat. Rev. Neurosci. 2011;12:217–230. doi: 10.1038/nrn3008.

- 108.Ferstl EC, et al. Emotional and temporal aspects of situation model processing during text comprehension: an event-related fMRI study. J. Cogn. Neurosci. 2005;17:724–739. doi: 10.1162/0898929053747658.

- 109.Ferstl EC, von Cramon DY. Time, space and emotion: fMRI reveals content-specific activation during text comprehension. Neurosci. Lett. 2007;427:159–164. doi: 10.1016/j.neulet.2007.09.046.
