Abstract

Objectives

Ability to understand spoken health information is an important facet of health literacy, but to date, no instrument has been available to quantify patients’ ability in this area. We sought to fill this gap by developing a test to assess comprehension of spoken health messages related to cancer prevention and screening, along with a complementary test to assess comprehension of written health messages.

Methods

We used the Sentence Verification Technique to write items based on realistic health messages about cancer prevention and screening, including media messages, clinical encounters and clinical print materials. Items were reviewed, revised, and pre-tested. Adults aged 40 to 70 participated in a pilot administration in Georgia, Hawaii, and Massachusetts.

Results

The Cancer Message Literacy Test-Listening is self-administered via touchscreen laptop computer. No reading is required. It takes approximately 1 hour. The Cancer Message Literacy Test-Reading is self-administered on paper. It takes approximately 10 minutes.

Conclusions

These two new tests will allow researchers to assess comprehension of spoken health messages, to examine the relationship between listening and reading literacy, and to explore the impact of each form of literacy on health-related outcomes.

Practice Implications

Researchers and clinicians now have a means of measuring comprehension of spoken health information.

Keywords: Health literacy, Cancer prevention, Instrument development

1. Introduction

Health literacy, “the capacity to obtain, process and understand basic health information” [emphasis added][1], is a major problem that has been identified as a national priority[2, 3, 4]. A growing body of research suggests that low health literacy is associated with several negative health-related outcomes[1, 5, 6, 7, 8]. Patients with lower health literacy tend to have less understanding of their illness[9, 10], higher psychosocial distress[11], poorer self-reported health[12, 13], worse health outcomes[14], higher rates of hospitalization[13, 15, 16], higher healthcare costs[17, 18] and higher risk of death[19]. Health literacy may be an important explanatory factor in health disparities[20, 21, 22]. Inadequate health literacy also predicts negative outcomes specific to cancer control, including poor understanding of cancer risk[23] and the need for screening[24], as well as lower rates of participation in cancer prevention efforts[25, 26, 27, 28]. Cancer patients with poor health literacy tend to be diagnosed at a later stage[29] and to have difficulty providing informed consent for treatment[30, 31].

These findings are concerning. However, past research has only begun to determine the extent of the negative effects of low health literacy, and the mechanisms of these effects. One reason is that past efforts to measure health literacy have focused on ascertaining people’s recognition of written medical terminology[32], or their reading comprehension and numeracy[33]. With few exceptions[34, 35, 36, 37, 38, 39, 40], health literacy research has largely ignored the fact that health literacy is a multidimensional phenomenon that involves the comprehension of oral as well as written information. This is an important omission because oral communication is essential to most physician-patient encounters, as well as to media channels such as television news. Both of these are key sources of health information for many Americans[41]. The current absence of instruments to assess health literacy with respect to spoken health messages is a significant limitation that prevents researchers from fully and accurately describing the relationship between health literacy and health[42].

The primary goal of this study was to develop an instrument to assess comprehension of spoken health messages related to cancer prevention and screening. A secondary goal was to develop a test to assess comprehension of written health messages related to cancer prevention and screening. By developing measures in tandem, using similar processes and selecting content related to cancer prevention and screening for both tests, we sought to create a pair of tests that would facilitate investigation of the relative importance of reading and listening in comprehension of health messages, and the interrelationship between the two. Our ultimate goal, to be addressed in a future study, is to examine the extent to which these critical components of health literacy—the ability to comprehend both spoken and print messages related to cancer prevention and screening—are in turn related to cancer prevention and screening behaviors.

We chose to focus on cancer prevention and screening for several reasons. Cancer is a complex and prevalent disease, and primary and secondary prevention are recognized as major factors in controlling cancer morbidity and mortality[43, 44]. Understanding of cancer prevention and screening messages is essential if such messages are to help individuals make decisions about health behaviors. These messages have the potential to influence behaviors, which in turn may directly affect health, and message comprehension likely mediates this influence on behavior. Moreover, some cancer prevention practices, such as avoiding tobacco, eating a healthy diet, and regularly seeing a healthcare provider, also reduce risk for other chronic diseases.

This paper describes the process used to create a pair of tests to measure comprehension of spoken and written messages about cancer prevention and screening, and describes the resulting instruments.

2. Methods

This study was conducted within the Cancer Research Network (CRN), a consortium of research organizations affiliated with 14 community-based non-profit integrated healthcare delivery systems and the National Cancer Institute. Three sites recruited participants: Kaiser Permanente Georgia, Kaiser Permanente Hawaii, and Fallon Community Health Plan; investigators from two additional sites participated, but did not recruit members for this study. The study was reviewed and approved by the Institutional Review Boards at each of the sites.

The test development team included investigators from diverse backgrounds including psychometrics, psychology, anthropology, epidemiology, public health, internal medicine, and oncology. Additional advice was provided by experts in cognition and aging, cognitive psychology, item response theory and psychometrics.

2.1 Overview

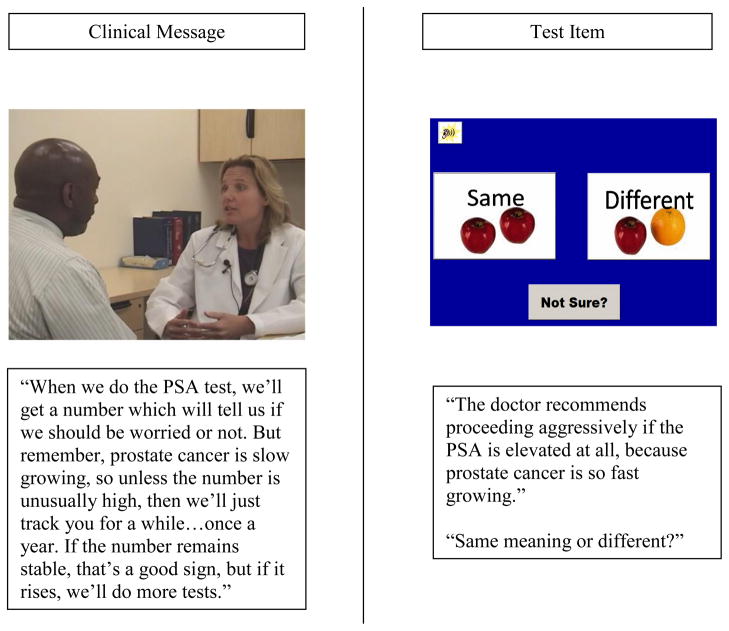

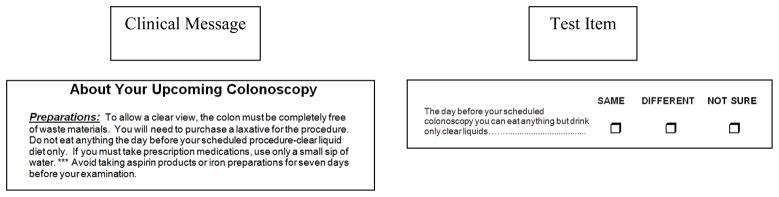

The instruments are titled the Cancer Message Literacy Test-Listening (CMLT-Listening) and the Cancer Message Literacy Test-Reading (CMLT-Reading). The purpose of the CMLT-Listening is to assess comprehension of spoken information on cancer prevention and screening. The test consists of a series of brief video clips (messages). Each video clip is followed by a series of spoken statements (items). For each item, the participant must indicate whether the meaning of the item is the same as the meaning of the video (Figure 1). The purpose of the CMLT-Reading is to assess comprehension of written information on cancer prevention and screening. The CMLT-Reading consists of a series of brief text excerpts (messages), each followed by a series of written statements (items); for each item, the participant must indicate whether its meaning is the same as the meaning of the text excerpt (Figure 2).

Figure 1. CMLT-Listening Sample

Figure 2. CMLT-Reading Sample

2.2 Principles

Test content

The most important criterion was that test messages be realistic and typical of the cancer prevention and screening messages that an adult might encounter in day-to-day life. Test content was limited to cancer prevention or screening, with a focus on common cancers for which screening is available or for which risk is influenced by behavior, including breast, colorectal, lung, cervical and prostate cancers. We sought to include messages that varied in purpose (i.e., whether the message recommended action or simply provided information), audience (public or clinical), and type (whether the message included a personal narrative or story, provided statistical information, or provided factual information without statistics). The specific content of the health messages included prevalence, incidence, specific risk factors (both modifiable and non-modifiable), screening (recommendations for screening, what the test entails, risks, and benefits), and prevention (e.g., the HPV vaccine). To achieve the dual goals of including messages that varied across these dimensions and messages that were realistic, we limited message length, using extracts of approximately 1 to 2 minutes for the CMLT-Listening and about 100 to 400 words for the CMLT-Reading. These lengths were expected to be short enough to allow inclusion of a variety of messages and items, but long enough to be realistic. Although both tests included content related to cancer prevention and screening, each contained distinctly different messages, sampled from different sources.

2.3 Processes

2.3.1 Identification of Candidate Health Messages

Publicly available messages

For the CMLT-Listening, publicly available messages were identified through internet searches, including searches for television and radio stories and messages, as well as of health websites such as WebMD and the National Cancer Institute. We also examined commercially available patient education materials and scripts from cancer information telephone lines. For the CMLT-Reading, publicly available written health messages were identified via the internet and through review of magazines and newspapers. All publicly available messages included in the final versions of the tests were accessed between June 2007 and January 2009.

Clinical messages

To create realistic clinical messages for the CMLT-Listening, we recruited three primary care providers to role-play discussions about cancer prevention and screening. A professional writer with experience in medical and script writing created scripts, which the team reviewed and revised for clinical content and realism. Videotapes of simulated physician-patient and nurse-patient encounters were created using these scripts. To identify clinical messages for the CMLT-Reading, written materials, such as patient education leaflets and instruction sheets, were identified via colleagues and collaborators practicing in clinical settings.

2.3.2 Message Selection

Message selection decisions were made using an iterative process. All messages were reviewed by the entire team, including the two physicians. Messages were excluded if the content was obviously inaccurate or misleading, or if legal permission to use the message was not forthcoming. Where multiple candidate messages were identified, the team chose the messages that together provided the best mix and desired variety.

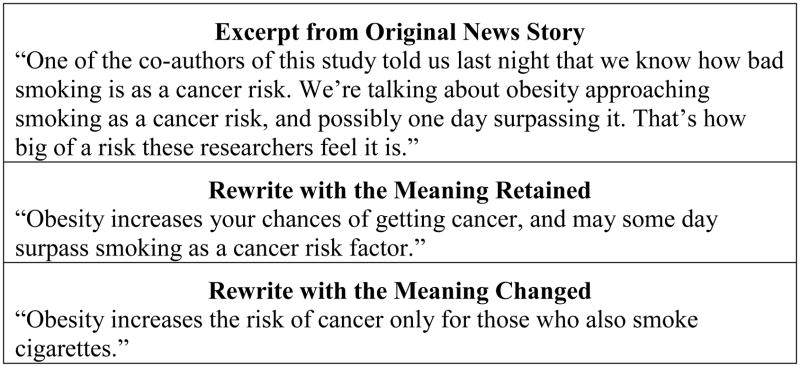

2.3.3 Item Writing and Editing

Items were written using a modified Sentence Verification Technique (SVT), an innovative but well-established approach to measuring text and listening comprehension[45]. First, the original message was transcribed verbatim. Next, professional item writers rewrote portions of the message to either retain or change its meaning. An example of a message excerpt and two possible rewrites is presented in Figure 3. The participant’s task was to indicate whether the meaning of the rewritten portion was the same as that of the original. Several items were written for each candidate message so that three to four items per message would be available for the final version. The research team reviewed all items and revised them as appropriate. Results of a prior study[36] identified potentially difficult or confusing concepts, such as risk and being at risk. Participants in that study also revealed confusion or mistaken beliefs about the relationship between risk and causality, the distinction between screening and treatment, the protective value of screening and other health behaviors, and the prevalence of common cancers. The team sought to include items that referred to these concepts while achieving balance and variety in item content.

Figure 3. Example of Using the SVT to Create Items

2.3.4 Pre-testing the CMLT-Listening

Seven adults were recruited at the Massachusetts site to participate in pre-testing the CMLT-Listening; this sample size was determined by the team’s iterative review of pre-test results. Potential participants were identified from administrative health system records by randomly sampling members aged 40 to 70 who had been enrolled with the health plan for at least five years. To optimize sampling across educational levels, sampling was stratified by a US Census-based estimate of educational level: the percentage of residents with a high school education or less in the census tract in which the member lived. Selected members were sent a letter of invitation with a telephone number to call to express interest. Interested participants were screened to confirm fluency in spoken English, adequate corrected hearing and vision, and the absence of other physical or psychological limitations that would preclude participation in a study session. No health criteria were used. All participants provided written informed consent. Study sessions lasted approximately one and a half hours; participants received $35 cash for their participation. A research assistant (RA) observed each participant while he or she completed the test. After the test, the RA asked the participant about ease of use, comprehensibility of the instructions and the task, and reactions. The RA noted any difficulties observed or expressed by the participant, as well as any glitches in the computer program. Overall, participants were positive about the test and understood the task quickly with minimal instruction from the RA.
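The eligibility filter and education-stratified random sampling described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the study's procedure: the record fields (`age`, `years_enrolled`, `tract`), the tract-level lookup of the percentage of residents with a high school education or less, the stratum cut points, and the number sampled per stratum are all hypothetical, since the paper does not report how strata were bounded or sized. Post-invitation screening (spoken English fluency, hearing, vision) is not modeled.

```python
import random
from bisect import bisect_right
from collections import defaultdict


def education_stratum(pct_hs_or_less, cutoffs):
    """Map a tract's percentage of residents with a high school education or
    less to a stratum index. `cutoffs` are hypothetical ascending boundaries:
    [25, 50] yields stratum 0 (<25), 1 (25 to <50), and 2 (>=50)."""
    return bisect_right(cutoffs, pct_hs_or_less)


def stratified_sample(members, tract_pct, cutoffs, n_per_stratum, seed=None):
    """Randomly select up to n_per_stratum eligible members from each stratum.

    members: dicts with hypothetical keys "age", "years_enrolled", "tract".
    tract_pct: hypothetical lookup from census tract to percent HS or less.
    """
    rng = random.Random(seed)
    strata = defaultdict(list)
    for m in members:
        # Eligibility from the text: aged 40 to 70, enrolled at least five years.
        if 40 <= m["age"] <= 70 and m["years_enrolled"] >= 5:
            strata[education_stratum(tract_pct[m["tract"]], cutoffs)].append(m)
    # Simple random sampling without replacement within each stratum.
    return {
        s: rng.sample(pool, min(n_per_stratum, len(pool)))
        for s, pool in sorted(strata.items())
    }
```

Sampling a fixed number per stratum, rather than in proportion to stratum size, is one way to keep lower-education tracts from being underrepresented among invitees; the same function could be applied within race-by-education cells to mimic the additional stratification later used at the Georgia site.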

The investigative team reviewed all notes and comments. After seven sessions were completed, the team concurred that sufficient information had been gathered to make significant modifications, and to prepare for pilot testing. Identified glitches in the test program were remedied, and test instructions were revised slightly. Messages and items were replaced after team discussion.

2.3.5 Pilot-Testing the CMLT-Listening and CMLT-Reading

A total of 79 adults aged 40 to 70 were recruited from the three study sites for pilot testing. The sample size was determined by practical constraints; it was considered sufficient because pilot testing was a developmental step rather than a final validation sample, and administration to a sample of 1,000 adults was planned for a later date. Recruitment and eligibility criteria were as described for pre-testing, with additional stratification at one site. At the Georgia site, census tracts were further stratified according to the percentage of African-American residents to ensure that African-American and white members were invited in equal numbers within each educational stratum. Prior experience at the Georgia site led to concerns that not doing so would result in large differences in educational achievement between African-American and white members; prior experience at the other two sites did not suggest a need for or value of additional stratification there. All participants provided written informed consent.

During pilot sessions, participants answered background questions and completed other items and measures (e.g., the Subjective Numeracy Scale[46] and newly created subjective print literacy items). Next, the CMLT-Listening was administered via touchscreen laptop computer; a computer mouse was also available. Headphones were offered but not required. Separate speakers were also available so that message volume could be adjusted more easily to suit participants. The CMLT-Reading was administered after the CMLT-Listening. Pilot sessions lasted approximately 2 hours; participants were encouraged to take breaks at three points. Participants received $50 cash for their participation.

2.3.6 Item Selection

Item selection was an iterative process. Once pilot data were available, the team reviewed initial results (i.e., corrected item-total correlations, coefficient alphas, and differences in the proportion of correct responses across quartiles). Items with the lowest item-total correlations, the least contribution to coefficient alpha, and the smallest difference in the proportion of correct responses across quartiles were candidates for dropping or modification. This information was considered together with item content to guide decisions about item selection and revision. For example, if an item had a poor item-total correlation but its content was judged to be important, the item was modified when no alternative item was available, and replaced when an alternative item covering similar content was available. Decisions about individual items were also influenced by decisions about messages, because items were embedded in the messages.

3. Results

3.1. Pilot Test Results

A total of 79 adults aged 40 to 70 participated in pilot sessions; the mean age was 57. Approximately 50 percent of the participants self-identified as white (N=40); 20 percent self-identified as African American (N=16); 16 percent self-identified as Asian, Pacific Islander, or Native Hawaiian (N=13); the remaining participants (N=10) identified with multiple racial or ethnic groups or with some other racial or ethnic group, or did not report their race or ethnicity. Eighty-two percent of participants (N=64) reported at least some college. Ten percent (N=8) reported that English was not their first language. Twelve percent (N=9) reported using a computer once a month or less.

The reliability analysis of the 66-item pilot version of the CMLT-Listening yielded a coefficient alpha of .82. Item means (proportion correct) ranged from .38 to .99; the mean total percent correct score was 84.2, with a standard deviation of 9.3. Coefficient alpha for the 21-item pilot version of the CMLT-Reading was .55. Item means ranged from .50 to .95; the mean total percent correct score was 82.2, with a standard deviation of 11.4. The correlation between the two scores in this sample was .51. Comparison of mean scores by educational level revealed a statistically significant difference between participants with a four-year college degree and those without on the CMLT-Reading (87 vs. 78, p<.001), but not on the CMLT-Listening (86 vs. 84, p=.30).

3.2 Participant Response

Spontaneous comments from pilot participants indicate that they found the test sessions interesting. While a few participants initially expressed trepidation about using the computer to complete the test, RAs administering the tests reported that none of the participants appeared uncomfortable completing the computer-based test independently. Several participants commented that they had learned something new from participating.

3.3 Modifications Based on Pilot Results

The team reviewed the results from the pilot data analyses in conjunction with the items and messages, and made additional decisions about deletions, additions, and edits to both the CMLT-Listening and the CMLT-Reading. Two substantive changes were made, primarily in an effort to increase the difficulty of the tests. First, the team added one new video-clip (and a set of items based on that message) to the CMLT-Listening. The video focused on esophageal cancer, which the team anticipated would be less familiar than the cancers included to that point. Second, after discussion with consulting psychometricians, both the CMLT-Listening and the CMLT-Reading were modified to allow a “Not Sure” response option, but to also require a “Best Guess” response to the original question of “Same Meaning or Different” if “Not Sure” was selected. Future analyses will investigate whether including information on the use of the “Not Sure” option in scoring provides useful information about participant performance and increases score reliability overall.

3.4 Description of the Final Tests

The CMLT-Listening is programmed in Microsoft Access and self-administered via a touchscreen laptop computer. Response requirements are minimal to reduce the potential for computer familiarity to provide an advantage. No reading is required. The program begins with a short, computer-narrated introduction that explains what the test entails and provides instructions on how to proceed through the test. One practice item is required; additional practice items are optional. Feedback is provided for the practice items, but not for the actual test items. The CMLT-Listening contains a total of 15 messages; each message has 2 to 4 associated items, for a total of 48 test items. Screenshots from one of the CMLT-Listening clinical messages and a transcript of the associated narration and item are shown in Figure 1. Participants may not replay a message, but they can replay the statement made by the narrator (i.e., the paraphrased message content that the participant must evaluate). Participants respond by selecting the “Same” or the “Different” button. Participants may select the “?” (Not Sure) button, but must then also select either “Same” or “Different” before continuing to the next item. The test takes approximately 60 minutes to complete. Message characteristics are shown in Table 1.

Table 1. Test Characteristics

| Characteristic | CMLT-Listening | CMLT-Reading |
|---|---|---|
| Time to Administer | ~60 min | ~10 min |
| Total Number of Messages | 15 | 6 |
| Message Characteristics | | |
| Message Audience | | |
| General Public | 10 | 3 |
| Clinical Setting | 5 | 3 |
| Message Type | | |
| Narrative/Personal Story | 3 | 0 |
| Factual Report With Numeric or Statistical Data | 8 | 5 |
| Factual Report Without Numeric or Statistical Data | 7 | 1 |
| Type of Cancer | | |
| Breast | 4 | 1 |
| Colorectal | 3 | 1 |
| Prostate | 2 | 1 |
| Lung | 3 | 1 |
| Cervical | 2 | 1 |
| Skin | 2 | 0 |
| Esophageal | 1 | 0 |
| General | 2 | 2 |
| Message Focus | | |
| Screening (e.g., importance, procedures) | 8 | 2 |
| Modifiable Risk Factors (e.g., smoking) | 7 | 2 |
| Non-modifiable Risk Factors (e.g., family history, genetics) | 6 | 2 |

Table note: Categories of characteristics are not mutually exclusive, e.g., some video clips refer to two or more cancers.

The CMLT-Reading is printed in 14- and 16-point font on standard letter-size paper (8½ by 11 inches) and assembled into a booklet with instructions on the cover page. It contains 6 messages, each with 3 or 4 associated items, for a total of 23 items. Like the CMLT-Listening, the CMLT-Reading provides three response options: “Same,” “Different,” and “Not Sure.” Participants are asked to select either “Same” or “Different” even if they initially select “Not Sure.” The test takes approximately 10 minutes to complete. Message characteristics are shown in Table 1.
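The response format shared by both tests, a required “Same”/“Different” answer that may be preceded by “Not Sure,” can be represented as a simple record. This is a hypothetical sketch: the paper does not specify how “Not Sure” will enter scoring, so here the best-guess answer alone determines percent correct (the score reported for the pilot), and “Not Sure” selections are tallied separately for later analysis. The `Response` class, its field names, and the answer key are illustrative assumptions.

```python
from dataclasses import dataclass

VALID = {"Same", "Different"}


@dataclass
class Response:
    """One hypothetical item response record."""
    item_id: str
    key: str          # correct answer for the item: "Same" or "Different"
    best_guess: str   # required final answer: "Same" or "Different"
    not_sure: bool    # True if the participant first chose "Not Sure" ("?")


def score(responses):
    """Percent correct from best-guess answers, with "Not Sure" use tallied separately."""
    if not responses:
        raise ValueError("no responses to score")
    for r in responses:
        # Mirrors the rule that a "Same" or "Different" answer is always required.
        if r.key not in VALID or r.best_guess not in VALID:
            raise ValueError(f"item {r.item_id}: answers must be 'Same' or 'Different'")
    n_correct = sum(r.best_guess == r.key for r in responses)
    unsure = [r for r in responses if r.not_sure]
    return {
        "percent_correct": 100 * n_correct / len(responses),
        "n_not_sure": len(unsure),
        # How often a best guess made after "Not Sure" was right; one possible
        # input for the future analyses of the "Not Sure" option.
        "correct_among_not_sure": sum(r.best_guess == r.key for r in unsure),
    }
```

Keeping the “Not Sure” flag separate from the scored answer means the same records support both the conventional percent-correct score and any alternative scoring rule evaluated later.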

4. Discussion and Conclusion

4.1 Discussion

How adults understand and react to spoken health information is a critical aspect of health literacy[42, 47]. Research in this area has already revealed how clinical and media messages have the potential to confuse patients and consumers[36, 40, 48]. The CMLT-Listening makes a unique contribution to this line of research, as it will enable researchers and clinicians to assess patients’ comprehension of spoken health information. We hope that this instrument will facilitate research in this area, much as the availability of print tests such as the REALM[32] and the TOFHLA[33] allowed researchers to study the relationship between scores on these measures and a variety of health-related processes and outcomes. We anticipate that the new CMLT-Listening will allow researchers to conduct comparable studies examining the relationship between comprehension of spoken health messages and similar health-related outcomes. We also hope that it will facilitate the development of training and interventions that will help clinicians and producers of media to communicate more clearly. Our team is currently collecting clinical data on cancer screening utilization as a first step in examining the relationship between scores on these two new instruments and actual screening behaviors.

The new CMLT-Reading is also an important contribution to the field of health literacy. Although instruments to assess health literacy with respect to print materials already exist, these tests have limitations. For instance, the REALM is a word recognition test, and provides no information on whether a respondent understands the words he or she is able to pronounce. While the S-TOFHLA assesses comprehension, it focuses on the medical setting, omitting other types of printed health materials which adults are likely to encounter in their day-to-day lives. The CMLT-Reading assesses comprehension, and includes a variety of message types. Most importantly, as half of this pair of complementary tests, the CMLT-Reading enables investigators to explore the relationship between comprehension of spoken materials and written materials. Our group is currently conducting analyses that we anticipate will help to clarify this relationship.

Establishing the validity of test scores is a process[49]. This paper reports on the careful test development process we used, an important first step in that process. The fact that both tests incorporate realistic health messages that adults may encounter in their day-to-day lives further helps to establish content validity, as does the fact that test content includes prevalent cancers (breast, colorectal, lung, prostate and cervical), common screening methods, and key health concepts that are important across these and other major health conditions. The item writing technique we used was specifically designed to create items that assess comprehension. Each of these components contributes to the content validity of the CMLT-Listening and CMLT-Reading test scores, but additional investigations are needed. A limitation is that the pilot study included only 79 participants and was not designed to provide strong validity evidence. We did estimate reliability coefficients (.82 and .55 for the CMLT-Listening and CMLT-Reading, respectively). The lower coefficient for the CMLT-Reading is expected given its smaller number of items (21 vs. 66 in the pilot versions). We anticipate that the post-pilot item selection decisions and edits will improve reliability in the final versions of the tests.
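As a rough check on the explanation that test length drives the lower CMLT-Reading coefficient, the Spearman-Brown prophecy formula predicts the reliability $\rho^{*}$ of a test lengthened or shortened by a factor $k$ from its current reliability $\rho$. The calculation below is illustrative only: it assumes the added or removed items are parallel to the existing ones, an assumption real items rarely meet exactly, and the projected values are not results reported by the study.

$$
\rho^{*} = \frac{k\,\rho}{1 + (k-1)\,\rho}
$$

$$
\text{CMLT-Reading, 21} \rightarrow \text{66 items: } k = \tfrac{66}{21} \approx 3.14,\qquad \rho^{*} \approx \frac{3.14 \times 0.55}{1 + 2.14 \times 0.55} \approx 0.79
$$

$$
\text{CMLT-Listening, 66} \rightarrow \text{21 items: } k = \tfrac{21}{66} \approx 0.32,\qquad \rho^{*} \approx \frac{0.32 \times 0.82}{1 - 0.68 \times 0.82} \approx 0.59
$$

Under these assumptions the two pilot coefficients would be of similar magnitude at equal length, which is consistent with, though not proof of, attributing the gap to the number of items.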

The messages and tests appear to be engaging and interesting for participants, possibly due in part to the realism of the test materials. The fact that we did not systematically query participants about their experience with the test is a limitation, but those administering the study sessions reported that even older participants and those who use computers once a month or less were able to complete the CMLT-Listening independently on the touchscreen laptop computer.

Cancer prevention and screening is a topic relevant to almost all adults. This, together with the fact that many of the concepts covered (e.g., risk) are directly relevant to other health conditions and decisions, extends the utility of these tests beyond the realm of cancer.

An important limitation of the CMLT-Listening is that it takes approximately 60 minutes to administer. To reduce respondent burden and improve the measure’s feasibility, we plan to develop a short form, a computerized adaptive version, or both. Some of this length is inherent to the task, however: presenting information orally takes longer than presenting it in print, and the average video length was just over 1.5 minutes. Encouragingly, pilot participants did not appear fatigued. Another limitation of our new measures is that test content may become outdated, given the evolving evidence for many cancer prevention and screening interventions; changes in public awareness and background knowledge may also affect item difficulty.

It should be stressed that the tests described here are intended to assess comprehension – they are not intended to assess the many factors that might cause difficulty in comprehension. Thus, a low score on either measure might be attributable to English language difficulties, cognitive difficulties, neurological deficits, inattention, lack of background knowledge, or other factors.

Establishing the validity of any measure is most usefully thought of as the process of building an argument[50, 51]. A convincing validity argument consists of a series of premises, each of which contributes to the overall argument. Further validation of our measures will depend on further research to establish their power to predict health outcomes. Since completing this test development process, we have administered both tests to more than 1,000 adults at four sites around the country. Analyses from this large-scale administration are underway.

4.2 Conclusion

In conclusion, we have developed instruments to assess comprehension of spoken and written health messages, focused on cancer prevention and screening. Because comprehension is an essential component of health literacy, we hope that the availability of these instruments will expand our understanding of health literacy, and the complex interrelationships between different facets of health literacy and cancer screening. Ultimately, we hope that these instruments will help improve both spoken and written communication about cancer prevention and screening.

Acknowledgments

Funding: The study reported here was funded by a grant from the National Cancer Institute (U19 CA079689). It was conducted within the context of a core project of the HMORN Cancer Research Network, Health Literacy and Cancer Prevention: Do People Understand What They Hear?

Funding/Support and Role of Sponsor: The funding agency did not contribute to the study design; data collection, analysis, or interpretation; or the decision to submit the manuscript for publication.

The research team would like to extend a sincere thank you to Melissa Finucane, PhD, for all of her efforts at the start of the project. The authors would also like to acknowledge JAMA® for the generous contribution of video content related to HPV infection for the CMLT-Listening.

Footnotes

Data Access and Responsibility: Dr. Mazor had full access to all the data in the study and takes responsibility for the integrity of the data and the accuracy of the analysis.

Previous Presentation of Data: Portions of this study have been reported at the following meetings: HMORN Annual Meeting, Minneapolis MN, 2008; Institute of Medicine of the National Academies, Washington DC, 2009; Boston University Medical Campus’ Health Literacy Annual Research Conference, Washington DC, 2009; HMORN Annual Meeting, Danville PA, 2009; HMORN Annual Meeting, Austin TX, 2010; HMORN Annual Meeting, Boston MA, 2011.

Conflicts of Interest: Sarah Greene has co-developed free, public domain trainings and tools in the area of health literacy. These tools are freely available online at http://prism.grouphealthresearch.org. Her time for developing these tools was funded by a grant from the National Institutes of Health. No additional conflicts of interest were reported by any of the authors.

Author Contributions:

Study concept and design: Mazor, Roblin, Williams, Greene, Field, Costanza, Saccoccio, Calvi, Cove, Cowan.

Acquisition of data: Mazor, Williams, Gaglio, Field, Saccoccio, Calvi, Cove, Cowan.

Analysis and interpretation of data: Mazor, Williams, Costanza, Han, Calvi.

Drafting of the manuscript: Mazor.

Critical revision of the manuscript for important intellectual content: Roblin, Williams, Greene, Gaglio, Field, Costanza, Han, Saccoccio, Calvi, Cove, Cowan.

Statistical analysis: Mazor.

Administrative, technical, or material support: Mazor, Greene, Gaglio, Field, Costanza, Han, Saccoccio, Calvi, Cove, Cowan.

Study supervision: Mazor, Roblin, Williams, Calvi.

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- 1. Nielsen-Bohlman L, Panzer AM, Kindig DA, editors. Health literacy: a prescription to end confusion. Institute of Medicine of the National Academies. Washington, DC: The National Academies Press; 2004.

- 2. Carmona RH. Health literacy: a national priority. J Gen Intern Med. 2006;21:803.

- 3. Benjamin R. Health literacy improvement as a national priority. J Health Commun. 2010;15:1–3. doi: 10.1080/10810730.2010.499992.

- 4. U.S. Department of Health and Human Services, Office of Disease Prevention and Health Promotion. National action plan to improve health literacy. Washington, DC: Department of Health and Human Services; 2010.

- 5. Rudd RE, Moeykens BA, Colton TC. Health and literacy: a review of medical and public health literature. In: Comings J, Garner B, Smith C, editors. Annual review of adult learning and literacy. New York: Jossey-Bass; 2000.

- 6. Rudd RE, Colton T, Schacht R. An overview of medical and public health literature addressing literacy issues: an annotated bibliography. Cambridge, MA: The National Center for the Study of Adult Literacy, Harvard Graduate School of Education; 2000.

- 7. Berkman ND, Dewalt DA, Pignone MP, Sheridan SL, Lohr KN, Lux L, Sutton SF, Swinson T, Bonito AJ. Literacy and health outcomes. Evid Rep Technol Assess (Summ). 2004;87:1–8.

- 8. Ad Hoc Committee on Health Literacy for the Council on Scientific Affairs, American Medical Association. Health literacy: report of the Council on Scientific Affairs. JAMA. 1999;281:552–7.

- 9. Kalichman SC, Benotsch E, Suarez T, Catz S, Miller J, Rompa D. Health literacy and health-related knowledge among persons living with HIV/AIDS. Am J Prev Med. 2000;18:325–31. doi: 10.1016/s0749-3797(00)00121-5.

- 10. Williams MV, Baker DW, Parker RM, Nurss JR. Relationship of functional health literacy to patients’ knowledge of their chronic disease: a study of patients with hypertension and diabetes. Arch Intern Med. 1998;158:166–72. doi: 10.1001/archinte.158.2.166.

- 11. Sharp LK, Zurawski JM, Roland PY, O’Toole C, Hines J. Health literacy, cervical cancer risk factors, and distress in low-income African-American women seeking colposcopy. Ethn Dis. 2002;12:541–6.

- 12. Lindau ST, Tomori C, McCarville MA, Bennett CL. Improving rates of cervical cancer screening and Pap smear follow-up for low-income women with limited health literacy. Cancer Invest. 2001;19:316–23. doi: 10.1081/cnv-100102558.

- 13. Baker DW, Parker RM, Williams MV, Clark WS, Nurss J. The relationship of patient reading ability to self-reported health and use of health services. Am J Public Health. 1997;87:1027–30. doi: 10.2105/ajph.87.6.1027.

- 14. Schillinger D, Grumbach K, Piette J, Wang F, Osmond D, Daher C, Palacios J, Sullivan GD, Bindman AB. Association of health literacy with diabetes outcomes. JAMA. 2002;288:475–82. doi: 10.1001/jama.288.4.475.

- 15. Baker DW, Gazmararian JA, Williams MV, Scott T, Parker RM, Green D, Ren J, Peel J. Functional health literacy and the risk of hospital admission among Medicare managed care enrollees. Am J Public Health. 2002;92:1278–83. doi: 10.2105/ajph.92.8.1278.

- 16. Baker DW, Parker RM, Williams MV, Clark WS. Health literacy and the risk of hospital admission. J Gen Intern Med. 1998;13:791–8. doi: 10.1046/j.1525-1497.1998.00242.x.

- 17. Weiss BD, Palmer R. Relationship between health care costs and very low literacy skills in a medically needy and indigent Medicaid population. J Am Board Fam Pract. 2004;17:44–7. doi: 10.3122/jabfm.17.1.44.

- 18. Eichler K, Wieser S, Brugger U. The costs of limited health literacy: a systematic review. Int J Public Health. 2009;54:313–24. doi: 10.1007/s00038-009-0058-2.

- 19. Sudore RL, Yaffe K, Satterfield S, Harris TB, Mehta KM, Simonsick EM, Newman AB, Rosano C, Rooks R, Rubin SM, Ayonayon HN, Schillinger D. Limited literacy and mortality in the elderly: the health, aging, and body composition study. J Gen Intern Med. 2006;21:806–12. doi: 10.1111/j.1525-1497.2006.00539.x.

- 20. Sentell TL, Halpin HA. Importance of adult literacy in understanding health disparities. J Gen Intern Med. 2006;21:862–6. doi: 10.1111/j.1525-1497.2006.00538.x.

- 21. Bennett IM, Chen J, Soroui JS, White S. The contribution of health literacy to disparities in self-rated health status and preventive health behaviors in older adults. Ann Fam Med. 2009;7:204–11. doi: 10.1370/afm.940.

- 22. Polacek GN, Ramos MC, Ferrer RL. Breast cancer disparities and decision-making among U.S. women. Patient Educ Couns. 2007;65:158–65. doi: 10.1016/j.pec.2006.06.003.

- 23. Brewer NT, Tzeng JP, Lillie SE, Edwards AS, Peppercorn JM, Rimer BK. Health literacy and cancer risk perception: implications for genomic risk communication. Med Decis Making. 2009;29:157–66. doi: 10.1177/0272989X08327111.

- 24. Dolan NC, Ferreira MR, Davis TC, Fitzgibbon ML, Rademaker A, Liu D, Schmitt BP, Gorby N, Wolf M, Bennett CL. Colorectal cancer screening knowledge, attitudes, and beliefs among veterans: does literacy make a difference? J Clin Oncol. 2004;22:2617–22. doi: 10.1200/JCO.2004.10.149.

- 25. Lindau ST, Tomori C, Lyons T, Langseth L, Bennett CL, Garcia P. The association of health literacy with cervical cancer prevention knowledge and health behaviors in a multiethnic cohort of women. Am J Obstet Gynecol. 2002;186:938–43. doi: 10.1067/mob.2002.122091.

- 26. Scott TL, Gazmararian JA, Williams MV, Baker DW. Health literacy and preventive health care use among Medicare enrollees in a managed care organization. Med Care. 2002;40:395–404. doi: 10.1097/00005650-200205000-00005.

- 27. Davis TC, Dolan NC, Ferreira MR, Tomori C, Green KW, Sipler AM, Bennett CL. The role of inadequate health literacy skills in colorectal cancer screening. Cancer Invest. 2001;19:193–200. doi: 10.1081/cnv-100000154.

- 28. Lindau ST, Basu A, Leitsch SA. Health literacy as a predictor of follow-up after an abnormal Pap smear: a prospective study. J Gen Intern Med. 2006;21:829–34. doi: 10.1111/j.1525-1497.2006.00534.x.

- 29. Bennett CL, Ferreira MR, Davis TC, Kaplan J, Weinberger M, Kuzel T, Seday MA, Sartor O. Relation between literacy, race, and stage of presentation among low-income patients with prostate cancer. J Clin Oncol. 1998;16:3101–4. doi: 10.1200/JCO.1998.16.9.3101.

- 30. Cox K. Informed consent and decision-making: patients’ experiences of the process of recruitment to phases I and II anti-cancer drug trials. Patient Educ Couns. 2002;46:31–8. doi: 10.1016/s0738-3991(01)00147-1.

- 31. Davis TC, Williams MV, Marin E, Parker RM, Glass J. Health literacy and cancer communication. CA Cancer J Clin. 2002;52:134–49. doi: 10.3322/canjclin.52.3.134.

- 32. Davis TC, Long SW, Jackson RH, Mayeaux EJ, George RB, Murphy PW, Crouch MA. Rapid estimate of adult literacy in medicine: a shortened screening instrument. Fam Med. 1993;25:391–5.

- 33. Parker RM, Baker DW, Williams MV, Nurss JR. The test of functional health literacy in adults: a new instrument for measuring patients’ literacy skills. J Gen Intern Med. 1995;10:537–41. doi: 10.1007/BF02640361.

- 34. Roter DL, Erby L, Larson S, Ellington L. Oral literacy demand of prenatal genetic counseling dialogue: predictors of learning. Patient Educ Couns. 2009;75:392–7. doi: 10.1016/j.pec.2009.01.005.

- 35. Rosenfeld L, Rudd R, Emmons KM, Acevedo-Garcia D, Martin L, Buka S. Beyond reading alone: the relationship between aural literacy and asthma management. Patient Educ Couns. 2011;82:110–6. doi: 10.1016/j.pec.2010.02.023.

- 36. Mazor KM, Calvi J, Cowan R, Costanza ME, Han PKJ, Greene SM, Saccoccio L, Cove E, Roblin D, Williams A. Media messages about cancer: what do people understand? J Health Commun. 2010;15:126–45. doi: 10.1080/10810730.2010.499983.

- 37. Koch-Weser S, Rudd RE, Dejong W. Quantifying word use to study health literacy in doctor-patient communication. J Health Commun. 2010;15:590–602. doi: 10.1080/10810730.2010.499592.

- 38. Portnoy DB, Roter D, Erby LH. The role of numeracy on client knowledge in BRCA genetic counseling. Patient Educ Couns. 2010;81:131–6. doi: 10.1016/j.pec.2009.09.036.

- 39. McCormack L, Bann C, Squiers L, Berkman ND, Squire C, Schillinger D, Ohene-Frempong J, Hibbard J. Measuring health literacy: a pilot study of a new skills-based instrument. J Health Commun. 2010;15:51–71. doi: 10.1080/10810730.2010.499987.

- 40. Roter DL, Erby LH, Larson S, Ellington L. Assessing oral literacy demand in genetic counseling dialogue: preliminary test of a conceptual framework. Soc Sci Med. 2007;65:1442–57. doi: 10.1016/j.socscimed.2007.05.033.

- 41. Gallup Organization. Gallup poll. Storrs, CT: Roper Center for Public Opinion Research; 2002.

- 42. Berkman ND, Sheridan SL, Donahue KE, Halpern DJ, Viera A, Crotty K, Holland A, Brasure M, Lohr KN, Harden E, Tant E, Wallace I, Viswanathan M. Health literacy interventions and outcomes: an updated systematic review. Rockville, MD: Agency for Healthcare Research and Quality; 2011.

- 43. Edwards BK, Ward E, Kohler BA, Eheman C, Zauber AG, Anderson RN, Jemal A, Schymura MJ, Lansdorp-Vogelaar I, Seeff LC, van Ballegooijen M, Goede SL, Ries LA. Annual report to the nation on the status of cancer, 1975–2006, featuring colorectal cancer trends and impact of interventions (risk factors, screening, and treatment) to reduce future rates. Cancer. 2010;116:544–73. doi: 10.1002/cncr.24760.

- 44. American Cancer Society. Cancer facts & figures 2010. Atlanta: American Cancer Society; 2010.

- 45. Royer J. Developing reading and listening comprehension tests based on the Sentence Verification Technique. J Adol Adult Literacy. 2001;45:30–41.

- 46. Fagerlin A, Zikmund-Fisher BJ, Ubel PA, Jankovic A, Derry HA, Smith DM. Measuring numeracy without a math test: development of the Subjective Numeracy Scale. Med Decis Making. 2007;27:672–80. doi: 10.1177/0272989X07304449.

- 47. Rudd RE. Improving Americans’ health literacy. N Engl J Med. 2010;363:2283–5. doi: 10.1056/NEJMp1008755.

- 48. Koch-Weser S, Dejong W, Rudd RE. Medical word use in clinical encounters. Health Expect. 2009;12:371–82. doi: 10.1111/j.1369-7625.2009.00555.x.

- 49. American Educational Research Association, American Psychological Association, National Council on Measurement in Education. Standards for educational and psychological testing. Washington, DC: American Educational Research Association; 1999.

- 50. Clauser BE, Margolis MJ, Holtman MC, Katsufrakis PJ, Hawkins RE. Validity considerations in the assessment of professionalism. Adv Health Sci Educ Theory Pract. 2010 Jan. doi: 10.1007/s10459-010-9219-6. [Epub ahead of print]

- 51. Downing SM, Haladyna TM, editors. Handbook of test development. Mahwah, NJ: Lawrence Erlbaum Associates; 2006.