Abstract

Given the complexity of health care and the ‘people’ nature of healthcare work and delivery, STSA (Sociotechnical Systems Analysis) research is needed to address the numerous quality of care problems observed across the world. This paper describes open STSA research areas, including workload management, physical, cognitive and macroergonomic issues of medical devices and health information technologies, STSA in transitions of care, STSA of patient-centered care, risk management and patient safety management, resilience, and feedback loops between event detection, reporting and analysis and system redesign.

Keywords: socio-technical systems, human factors and ergonomics, transitions of care, workload, patient safety, medical devices, health information technology, risk management, patient-centered care

1. Introduction

The healthcare industry is very different from other industries because of the intensity of the personal interactions. Health care is all about people: patients and their families and friends, and the various healthcare professionals and workers. In an editorial published in the International Journal of Medical Informatics, Brennan and Safran (2004) talked about “Patient safety being about the patients after all”. Therefore, when analyzing, designing, implementing and improving healthcare systems, the people dimension should be at the forefront. This clearly underlines the need for sociotechnical systems analysis (STSA) and the consideration of human factors and organizational issues related to healthcare quality and patient safety.

The report by the US Institute of Medicine on Crossing the Quality Chasm (2001) outlines six dimensions of quality: (1) safety, (2) effectiveness, (3) patient-centered care, (4) timeliness, (5) efficiency, and (6) equity. In a safe healthcare system, injuries to patients are avoided, i.e. prevented or mitigated. An effective healthcare system provides “services based on scientific knowledge to all who could benefit, and refraining from providing services to those not likely to benefit” (Institute of Medicine Committee on Quality of Health Care in America, 2001). Patient-centered care is “care that is respectful of and responsive to individual patient preferences, needs, and values, and ensuring that patient values guide all clinical decisions” (Institute of Medicine Committee on Quality of Health Care in America, 2001). Timeliness of care is specifically concerned with reduction in care delays and patient waits, and, more broadly, efficiency of care addresses issues of waste, including waste of equipment, supplies, ideas and energy. Equitable care is care that does not vary according to personal patient characteristics, such as gender and ethnic background (see, for example, the discussion on health disparities (Smedley, Stith, & Nelson, 2003)). Three of the six quality aims described by the Institute of Medicine, i.e. patient safety, efficiency of care and patient-centered care, are particularly relevant for the sociotechnical systems approach to health care. For instance, patient safety has benefited greatly from conceptual and methodological contributions from the discipline of human factors and ergonomics (HFE) (Bogner, 1994; Cook, Woods, & Miller, 1998; Vincent, Taylor-Adams, & Stanhope, 1998).
The physical design of healthcare organizations can create inefficiencies and increase the time and energy that nurses and other healthcare workers have to spend; these inefficiencies can be addressed by conducting a systematic sociotechnical systems analysis and applying HFE principles. Patient-centered care requires a deep understanding of the ‘work’ of both patients and their healthcare providers, and their interactions; this can benefit from STSA models and methods.

In this paper, we address the current state of the research literature relevant to STSA in health care. The STSA department editorial team encourages researchers to address these important issues.

2. Complex healthcare systems and STSA

2.1 Complexity of healthcare systems

Healthcare delivery has dramatically changed in the past 20 years. The delivery of health care relies on a variety of people and stakeholders that interact with each other as well as with a variety of technologies and devices. Health care occurs in a variety of physical and organizational settings (e.g., hospital, primary care physician) and environments (e.g., home care) that are often loosely connected (e.g., patient discharge and follow-up visit to primary care physician) and sometimes tightly connected (e.g., error in medication administration and potential harm to patient). The increasing complexity of healthcare systems plays a significant role in the multiple vulnerabilities, failures and errors that have been reported (Kohn, Corrigan, & Donaldson, 1999). Often the objectives of the healthcare sub-systems or various participants in healthcare delivery conflict with each other; this misalignment of objectives can produce inefficiencies, hazards and other quality of care problems. The case study of a medical device manufacturer by Vicente (2003) shows how misalignment of system goals led to the design, production and distribution of an unsafe patient-controlled analgesia device.

Different views about complexity in health care have been proposed. Plsek and Greenhalgh (2001) describe health care as a complex adaptive system that has the following characteristics:

System boundaries are fuzzy and ill-defined: individuals that are members of the system (or sub-system) change and may belong to multiple systems.

Individuals in the healthcare system (e.g., physicians, nurses, patients) use rules and mental models that are internalized, and may not be shared with or understood by others. In addition, these rules and mental models change over time.

People and system(s) adapt to local contingencies.

Systems are embedded within other systems and co-evolve and interact over time. Those interactions between multiple systems may produce tension and conflict that do not necessarily need to be resolved and that cannot always be resolved. In addition, the system interactions continually produce new behaviors and new approaches to problem solving.

The system interactions are non-linear and often unpredictable. This lack of predictability is however accompanied by general patterns of behavior.

Self-organization is inherent through simple locally applied rules.

Effken (2002) describes health care as a complex dynamic sociotechnical system in which groups of people cooperate for patient care and are faced with numerous contingencies that cannot be fully anticipated; people involved in health care have different perspectives. In addition, healthcare organizations may have conflicting values and objectives (e.g., financial objectives and humanitarian objectives) and are increasingly diverse and subject to considerable pressures and changes. According to Effken (2002), a key characteristic of health care is its dynamic nature, i.e. a system exposed to continual change that can have important consequences for other system elements. Finally, Effken (2002) describes the highly technical nature of healthcare systems. Carayon (2006) described 11 dimensions of complexity in health care; many of them are similar to those described by Plsek and Greenhalgh (2001) and Effken (2002). Additional complexity dimensions include the large problem space in health care (e.g., large number of illnesses), and the hazardous nature of many healthcare procedures and processes (Carayon, 2006). STSA research should take into account the complexity of healthcare systems.

Because of the great complexity inherent in health care, it is important to conduct STSA research that adopts a systems approach aimed at identifying the multiple system elements, their interactions and their impact on quality of care, as well as understanding the key adaptive role of people in the system. The SEIPS (Systems Engineering Initiative for Patient Safety) model of work system and patient safety (Carayon et al., 2006) is one example of this STSA approach aimed at understanding the complex system interactions that can produce hazards and patient safety risks. According to the SEIPS model (Carayon et al., 2006), healthcare systems can be conceptualized as work systems in which people perform multiple tasks using various tools and technologies in a physical environment and under specific organizational conditions; those system interactions influence care processes and patient outcomes. Because of its focus on the human and organizational aspects of system design, STSA research addresses not only patient outcomes but people outcomes in general including family and care provider outcomes, as well as organizational outcomes (Carayon et al., 2006). STSA research may, for instance, examine the circumstances under which teamwork enhances communication and information flow and reduces errors as well as improves staff well-being and job satisfaction. In addition, STSA research should recognize the ‘active’ role of individuals in healthcare systems: individuals are affected or influenced by the way systems are designed, but also influence outcomes through their interactions with the system.

In addition to ‘local’ work systems in healthcare organizations (Carayon et al., 2006), STSA research should also consider the larger system when developing new methods and design guidelines. For instance, the reimbursement system may create constraints on system design. Cultural characteristics and values may affect team interaction or the use of medical devices or information technology (Pennathur et al., Submitted). Values regarding participation in medical decision making are important to consider when examining STSA issues related to patient-centered care. Organizational models of hospitals as well as primary care providers may provide unique contextual factors that need to be integrated when designing physical space or health information technology applications. The STSA approach to the design and management of clinical pathways should consider the different levels and settings of care (Dy & Gurses, 2010). These are examples of how the larger system may influence STSA research and practice. The impact of regulatory oversight is mixed and would benefit from STSA input. For example, regulatory requirements for the healthcare industry have not been evaluated universally with respect to the most basic measures such as patient mortality (see, for example, Howard et al. (2004)). Medical device oversight for complex medical devices has yet to determine whether increasing device complexity is associated with decreased reliability (see, for example, Maisel et al. (2006)).

2.2 Healthcare system design, implementation and use

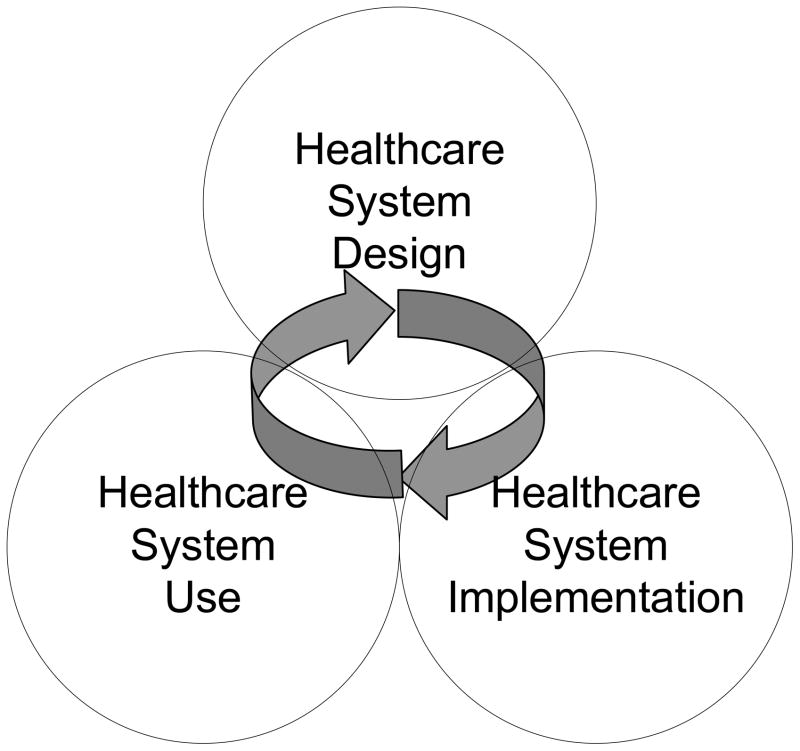

Anchored in healthcare systems engineering, STSA research contributes knowledge, theories and methods to improving healthcare system design, implementation and use. For instance, in addition to understanding the mechanisms of the impact of teamwork on patient safety, STSA research will produce guidelines and methods for implementing teamwork in a healthcare setting. Figure 1 displays the activities of system design, implementation and use as interrelated activities that interact with each other and feed upon each other. The linear sequential approach to system design, implementation and use has been challenged; it is now recognized that design, implementation and use activities overlap and interact with one another. In the context of organizational change, Weick and Quinn (1999) contrast episodic change with continuous change: an episodic change needs to be planned for and managed; a continuous change requires sense-making processes and an organizational culture open to learning. The continuous change model shows that the actual phase of system use may feed back into the system design phase and consequently the system implementation phase (Carayon, 2006). Therefore, the phases of system design, implementation and use are inter-related and iterative (see Figure 1).

Figure 1. The STSA Cycle of Healthcare System Design, Implementation and Use

To develop requirements for new devices and decision support tools, engineers use multifaceted approaches including focus groups, interviews, task analyses, formal analysis and other forms of computational modeling, iterative prototyping, testing, on the job observations and end user feedback (Gurses & Xiao, 2006; Gurses, Xiao, & Hu, 2009). New requirements are incrementally implemented in computational and then physical working prototypes, with which end users can interact and provide feedback about added functions that are iteratively incorporated into the prototype’s design. While collaborating can be difficult when working with end users who are busy and have irregular schedules, “on the fly” feedback functions can be designed into tools to facilitate timely input. This process was used by DeVoge et al. (2009) to redesign a sign-out support tool for physicians. As a complement to engaging end users after a physical prototype is completed, computational methods such as statistical modeling approaches (see, for example, Baumgart et al. (2010)) and formal methods (such as Bolton & Bass, 2010; Bolton, Siminiceanu, & Bass, 2011) incorporating models of end user task behavior, medical devices, decision support tools, and/or the operational environment can inform requirements refinement. Such methods can allow modeling the impact of normative as well as potential erroneous human behavior (Bolton & Bass, in press; Bolton, Bass, & Siminiceanu, submitted). STSA research should clearly identify its contributions to the phases of system design, implementation and use, and the interactions between these phases. Safety and efficiency can be aided by standards, which are an initial stop in the journey to better technology design. Many of these standards work well to specify technology characteristics. These standards include:

IEC (2004). 60601-1-6: Medical electrical equipment – Part 1–6: General requirements for safety – Collateral standard: Usability

IEC (2000). EN 894-3: Safety of Machinery – Ergonomics Requirements for the Design of Display and Control Actuators Part 3: Control Actuators.

IEC (2002). IEC 60073: Basic and Safety Principles for Man-Machine Interface, Marking and Identification.

ISO (2006). 9241-110: Ergonomics of human-system interaction

ISO/IEC 25062 (2006). Software engineering — Software product Quality Requirements and Evaluation (SQuaRE) — Common Industry Format (CIF) for usability test reports.

However, simply following the rules and the standards is insufficient to ensure a usable, intuitive system design that creates effective, efficient, safe, patient-centered care, while simultaneously protecting the medical staff from harm. Other standards and guidelines from the Association for the Advancement of Medical Instrumentation (AAMI), the Agency for Healthcare Research and Quality (AHRQ) and the Food and Drug Administration (FDA) help the designer to achieve the IOM quality aims:

AAMI HE 48 (1993): Human factors engineering guidelines and preferred practices for the design of medical devices

AAMI HE 75 (2009). Human factors engineering - Design of medical devices

ANSI/AAMI HE 74 (2001/R2009): Human factors design process for medical devices

FDA (1996) Good Manufacturing Practices/Quality Systems Regulations

FDA (2000). Medical Device Use-Safety: Incorporating Human Factors Engineering into Risk Management.

FDA (1997). Do It by Design: An Introduction to Human Factors in Medical Devices.

FDA (2011). Guidance document on “Applying Human Factors and Usability Engineering to Medical Device Design” (http://www.fda.gov/MedicalDevices/DeviceRegulationandGuidance/GuidanceDocuments/ucm259748.htm).

AHRQ Quality Improvement and Patient Safety guidelines (AHRQ.gov)

Patient safety and delivery of efficient and effective care with a patient-centered approach cannot simply follow a checklist of requirements (Haynes et al., 2009), but these standards and guidelines can help to integrate a systems approach where the focus is on user-centered design for the whole system and its diverse groups of users. For instance, research has shown that surgical suite system errors arise from two main factors: 1) inadequate training regarding device handling and use, including poorly organized routines, and 2) poorly designed user interfaces that induce errors or do not facilitate recovery from error during operations (Hallbeck, 2010; Hallbeck et al., 2008; Lowe, 2006). More importantly, these errors can lead to morbidity, mortality and other safety concerns through lack of effective care provided for the patient or the healthcare provider. System errors are also associated with inefficient or wasteful care. These errors have traditionally been attributed to the user as part of the blame and shame culture in medicine, a culture that inhibits research in the area.

Healthcare system design, such as in the OR, needs further study and redesign to create good working environments, practical strategies and systems guidelines. We encourage STSA researchers to evaluate and design healthcare systems and tools in conjunction with healthcare providers so that they can be utilized with efficiency, effectiveness and safety. We further encourage researchers to look for the root causes of and system contributors to error, and to use user-centered approaches rather than allowing the blame to fall on the user or healthcare provider.

3. Open STSA research challenges

A range of STSA research challenges are still open and need to be addressed. In this paper, we focus on the following challenging open STSA research topics: workload management, physical design of medical devices and healthcare tools, cognitive design of health information technology, macroergonomics of healthcare tools and technologies, transitions of care, STSA of patient-centered care, and STSA in patient safety management.

3.1 Workload management

High care provider workload is a major problem in health care. Studies in the last ten years have shown that high workload can have detrimental effects on safety of care. For example, there is substantial evidence for the deterioration in safety of care provided in ICUs with high nursing workload (Carayon & Gurses, 2005; Trinkoff et al., 2010; Trinkoff et al., 2011). An ICU nurse/patient ratio greater than 1:2 is associated with higher infection rates, increased risk for respiratory failure and reintubation, and higher mortality rates (Penoyer, 2010). Similar findings have been reported for physician workload. For example, first year residents made significantly more serious medical errors when they worked on a traditional schedule (with extended work shifts of 24 hours or more every other shift) compared to when they worked on a modified schedule (with no extended work shifts and a reduced number of hours per week) (Landrigan et al., 2004). A retrospective study showed that an intensivist to ICU bed ratio of 1/15 or less was associated with prolonged ICU length of stay as compared to higher staffing ratios (Dara & Afessa, 2005). High workload can also have a negative effect on patient-centered care. For example, high workload was associated with nurses providing less “individualized” patient care, i.e. care that takes into account patients’ and families’ needs, choices and preferences (Gurses, Carayon, & Wall, 2009; Waters & Easton, 1999). In addition to negative consequences for patient care, high workload can negatively affect care providers’ quality of working life (e.g., stress, fatigue, job dissatisfaction) (Linzer et al., 2002; McVicar, 2003). For example, research shows that high workload negatively affects nursing job satisfaction and, as a result, contributes to high turnover and the nursing shortage (Aiken et al., 2002; Duffield & O’Brien-Pallas, 2003).
High workload is one of the most important job stressors among nurses and can lead to distress and burnout (Crickmore, 1987; Oates & Oates, 1996).

New policies and mandates have been developed and implemented to manage the high workload in health care settings and reduce its negative consequences. For example, fifteen US states and the District of Columbia have enacted legislation and/or adopted regulations to address nurse staffing (http://www.nursingworld.org/mainmenucategories/ANAPoliticalPower/State/StateLegislativeAgenda/StaffingPlansandRatios_1.aspx). The long working hours of medical residents, partially related to the need for continuity of care, have recently been addressed by regulatory bodies. To reduce the negative impact of heavy workload on residents’ quality of working life and on the quality and safety of the care they provide, the Accreditation Council for Graduate Medical Education (ACGME) limited work hours for US medical residents to 80 hours per week (http://www.acgme.org/DutyHours/dhSummary.pdf). Although these interventions are necessary to improve workload management, they are not sufficient to solve the ‘heavy workload’ problem in health care. This is especially true given the constraints present in health care, including a shortage of nursing staff and physicians and high costs of care. We need to develop effective, sustainable and feasible solutions for managing workload that consider current and future constraints of health care.

Workload is a complex and multidimensional concept; therefore, solutions to manage workload need to consider the different levels, types and elements of workload (Carayon & Gurses, 2005). For instance, human factors research indicates that excessively high levels of workload can lead to errors and system failure, whereas underload can lead to complacency and eventual errors (Braby, Harris, & Muir, 1993). To manage workload in health care more effectively, first we need to conceptualize and measure workload adequately. Although measures such as nurse/patient ratio or number of work hours are practical and useful, they do not represent the complexity of workload and are insufficient to study and manage workload adequately: the multidimensional, multifaceted concept of workload cannot be captured by single, simplistic measures. For example, four different categories of measures for ICU nursing workload have been described (Carayon & Gurses, 2005):

ICU-level measures: measures of workload at a macro-level such as the nurse/patient ratio.

Job-level measures: measures that characterize workload as a stable characteristic of the job.

Patient-level measures: measures that estimate workload based on the condition of the patient.

Situation-level measures: measures of how the design characteristics of an ICU work system affect demands on individual care providers.

Each measure has strengths and weaknesses and can be appropriate to use in certain situations. Nurse/patient ratio, for instance, is a measure of the overall nursing workload in an ICU at a macro-level; this is a useful measure to compare ICUs and clinical outcomes. On the other hand, this measure is not sensitive to changes in the work system such as redesign of the physical environment. The impact of the ICU work system redesign efforts on workload can be best evaluated using situation-level measures.
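The contrast between an ICU-level measure and a situation-level measure can be made concrete with a minimal sketch. The data structure, the field names and the numbers below are illustrative assumptions rather than measures or data from the studies cited; in particular, the time lost to performance obstacles is used here only as a stand-in for a situation-level indicator.

```python
from dataclasses import dataclass


@dataclass
class ShiftSnapshot:
    """One ICU shift; every field is a hypothetical, illustrative quantity."""
    nurses: int
    patients: int
    # Assumed situation-level indicator: minutes per nurse per shift lost to
    # performance obstacles (e.g., searching for supplies).
    obstacle_minutes_per_nurse: float


def patients_per_nurse(shift: ShiftSnapshot) -> float:
    """ICU-level measure: number of patients per nurse on the unit."""
    return shift.patients / shift.nurses


def obstacle_time_share(shift: ShiftSnapshot, shift_minutes: float = 720.0) -> float:
    """Assumed situation-level measure: share of a shift lost to obstacles."""
    return shift.obstacle_minutes_per_nurse / shift_minutes


# Same staffing before and after a hypothetical work system redesign.
before = ShiftSnapshot(nurses=6, patients=12, obstacle_minutes_per_nurse=90.0)
after = ShiftSnapshot(nurses=6, patients=12, obstacle_minutes_per_nurse=45.0)

for label, shift in (("before redesign", before), ("after redesign", after)):
    print(label, patients_per_nurse(shift), round(obstacle_time_share(shift), 3))
```

In this toy example the ICU-level measure is identical before and after the redesign, whereas the assumed situation-level indicator changes, which is the sense in which the nurse/patient ratio is insensitive to work system changes.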

Redesigning work systems may lead to better management of workload through improving efficiencies. Inefficiencies in a work system can be considered as performance obstacles. Performance obstacles are factors that hinder care providers from doing their job and can be related to tasks (e.g., ambiguous job/role definitions), tools/technology (e.g., poor usability of CPOE), organization (e.g., inadequate teamwork), and environment (e.g., poor layout and workspace design) (Carayon et al., 2005; Gurses & Carayon, 2009). Another approach to workload management may be through removing or mitigating the effects of performance obstacles. However, there is limited research on how to effectively manage workload through work system redesign. STSA researchers should collaborate with clinicians to further understand workload in various healthcare settings, and develop, implement and evaluate solutions for workload management. We encourage STSA researchers to conduct studies that evaluate the impact of work system redesign efforts on care providers’ workload and to develop practical strategies and guidelines to more effectively manage workload.

3.2 STSA in the physical design of medical devices and healthcare tools

The hardware and software design of medical devices and healthcare tools needs to rely on usability studies that take into account all aspects of human factors and ergonomics, i.e. physical ergonomics, cognitive ergonomics and macroergonomics. There are regulatory efforts to improve the usability and safety of medical devices. For instance, the new EU directive 27/2007 on medical devices specifically refers to “the need to consider ergonomic design in the essential requirements” of medical devices. The Directive also requires the assessment of dynamic safety provided by the interaction between workers and the devices in the context of use in order to “reduce, as far as possible, the risk of use error due to the ergonomic features of the device and the environment in which the device is intended to be used (design for patient safety)”. The FDA has recently released a guidance document on the application of human factors to the design of medical devices. The need to incorporate various human factors issues is most obvious for devices used in the operating room (Pennathur et al., Submitted), especially for laparoscopic or minimally invasive surgeries.

Minimally invasive surgery (MIS) is intended to reduce trauma associated with traditional techniques by eliminating the large incisions necessary to access the operative field (Crombie & Graves, 1996). The benefits to the patient are numerous: minimal cosmetic disfigurement; fewer post-operative complications and pain; and a more rapid recovery than open surgery (Cuschieri, 1995). However, with every benefit there is usually a cost and in this case the cost comes at the expense of the surgeon. Evidence of this comes from the title of a recent study “The operating room as a hostile environment for surgeons” (Sari et al., 2010).

During open surgery, surgeons lean forward toward or across the surgical field to directly see in 3-D and manipulate the organs (Albayrak, 2008); this provides greater mobility inside the operating field in comparison to laparoscopic or minimally invasive surgery (Szeto et al., 2009). In laparoscopic surgery, by contrast, the organ view is 2-D and indirect on a monitor (Van Det et al., 2009), and manipulation is at the end of a shaft with no haptic feedback. Without the ability to directly touch or see the target inside the body, laparoscopic surgeons are limited by their instruments. Unfortunately, current laparoscopic surgery tools are poorly designed from an ergonomic perspective (Albayrak, 2008; Berguer, 1998; Berguer, Forkey, & Smith, 1999; Matern & Waller, 1999; Trejo et al., 2006). In addition, the tools and the ports lead to constrained postures with arm abduction and awkward wrist and hand postures, often with high hand forces exerted, while the placement of the 2-D monitor often causes eye, head and neck strain.

Many studies have addressed the design issues related to current laparoscopic tools, particularly the physical discomfort during and after the use of laparoscopic instruments (Berguer, 1997, 1998, 1999; Berguer et al., 1999; Matern & Waller, 1999; Trejo et al., 2006; Van Veelen & Meijer, 1999). These studies have shown the ramifications of current instrument use, with complaints ranging from pain or numbness in the neck/upper extremities to nerve lesions and paresthesias in the hand/finger (Kano et al., 1995; Majeed et al., 1993; Park et al., 2010). Application of ergonomic principles in the design of surgical instruments would help to increase patient safety by reducing surgeons’ fatigue and decreasing the need for complex cognitive planning tasks with excessive, awkward arm motions (Van Veelen et al., 2001).

Current laparoscopic instruments have been found to be poorly designed from an ergonomic perspective and it is likely that ergonomic issues were not considered at all in the design process. Laparoscopic surgeons perform various tasks during surgery such as grasping, dissecting, cauterizing and suturing of the organs with various long-shafted hand tools. Most studies have focused on aiming (Trejo et al., 2006), grasping (Berguer, 1998; Berguer et al., 1999; Matern et al., 2002) and suturing (Emam et al., 2001) tasks.

Overall, physical ergonomic issues have been identified in the operating room environment: the operating room table height, width and adjustability (Albayrak et al., 2004; Kranenburg & Gossot, 2004; Matern & Koneczny, 2007; Van Veelen, Jakimowicz, & Kazemier, 2004), OR lights and lighting (Kaya et al., 2008; Matern & Koneczny, 2007), floor space and layout of ORs (Albayrak et al., 2004; Decker & Bauer, 2003; Kaya et al., 2008; Koneczny, 2009; Kranenburg & Gossot, 2004), trip hazard from cables (Koneczny, 2009; Matern & Koneczny, 2007), and monitor placement (Albayrak et al., 2004; Berguer, 1999; Decker & Bauer, 2003; Kaya et al., 2008; Lin et al., 2007; Matern & Koneczny, 2007; Van Veelen et al., 2001). There has also been work examining the tools provided in the OR, especially those for minimally invasive or laparoscopic surgery: instruments (Kaya et al., 2008; Matern, 2001; Nguyen et al., 2001; Sheikhzadeh et al., 2009; Trejo et al., 2006; Trejo et al., 2007; Van Veelen et al., 2001), instrument carts (Albayrak et al., 2004; Sheikhzadeh et al., 2009), and foot pedals (Kranenburg & Gossot, 2004; Van Veelen et al., 2001). In addition, work has continued on identifying and quantifying the negative effects experienced by surgical team members as a result of the postures adopted during procedures (Albayrak et al., 2007; Kranenburg & Gossot, 2004; Matern & Koneczny, 2007), which can result in pain or work related musculoskeletal disorders (Forst, Friedman, & Shapiro, 2006; Gerbrands, Albayrak, & Kazemier, 2004; Kaya et al., 2008; Koneczny, 2009; Nguyen et al., 2001; Park et al., 2010; Sheikhzadeh et al., 2009). The percentage of surgeons reporting musculoskeletal discomfort or physical symptoms such as frequent hand and wrist pain ranges from 55% (Trejo et al.) to 59% (Berguer, 1998) to 61% (Szeto et al., 2009). One such condition has become so prevalent that it is called laparoscopist’s thumb (Kano et al., 1995; Majeed et al., 1993; Wauben et al., 2006).
The study by Berguer (1998) done over a decade with the involvement of the Society of American Gastrointestinal and Endoscopic Surgeons (SAGES) reports that an astonishing 87% of SAGES members reported physical symptoms of discomfort during or after laparoscopic surgery; this is a much higher percentage than the 20–30% of laparoscopic surgeons with occupational injuries currently (Park et al., 2010). Clearly there is much ergonomic work to be done in the OR, especially for laparoscopic surgery: the intervening decade did little to reduce the physical load on the surgeon. Recently, laparoendoscopic single-site surgery (LESS) or single incision laparoscopic surgery (SILS) has been introduced. This new technique increases the awkward and constrained body postures and tool collisions as well as the surgical time required (Brown-Clerk et al., 2010).

Studies of the OR as a system, with particular emphasis on the new technologies being added (e.g., MIS, LESS and image-guided surgeries), need to be continued and extended. A systems approach to the study and extension of knowledge for standard healthcare delivery is also needed. In particular, older OR configurations and environments were not designed for these technologies, tools and techniques, which creates new burdens for workers. The outcomes of this research agenda also need to be standardized so that the results can be incorporated quickly into guidelines for healthcare providers. Especially in the OR, there is a culture to be overcome: currently many surgeons feel that “conquering” bad equipment and environments is something to brag about rather than something to be fixed or designed out, and errors are met with a blame and shame response. Therefore, future research should focus not only on the physical and cognitive issues in healthcare, but on the sociotechnical aspects as well.

3.3 STSA in the cognitive design of health information technology

Improved automation such as health information technology or health IT has the potential to improve patient safety and healthcare quality as it can support the processes of information acquisition, information analysis, decision and action selection, and action implementation (Parasuraman, Sheridan, & Wickens, 2000). However, the introduction of health IT impacts health care providers’ physical and cognitive workflows by changing the way activities are carried out and therefore can create new classes of problems related to the coupling and dynamic interleaving of humans and automated systems. What information is presented and the manner in which it is represented (Bass & Pritchett, 2008; Norman, 1990), the types of recommended decisions and actions (Smith, McCoy, & Layton, 1997), and the way in which automated actions are carried out (Sarter & Woods, 1995) can lead to situations not anticipated by the system designer and difficult for the human operator (Holden, 2010; Israelski & Muto, 2004; Patterson, Cook, & Render, 2002). In addition, human operators must be able to complete their work in a dynamic environment, where goals change, interruptions occur and objectives change in priority (Ebright et al., 2003; Gurses et al., 2009). Current technology does not effectively support the exchange of information between care providers and there is a strong link between poor communication, errors, and adverse events: communication problems are the most common cause of preventable in-hospital disability or death (Wilson et al., 1995) and account for over 60% of root causes of sentinel events reported to the Joint Commission (2010). Health IT does not seem designed to effectively support task management and communication.

Cognitive systems engineering focuses on understanding human behavior and applying this knowledge to the design of human–automation interaction: making systems easier to use while reducing errors and/or allowing recovery from them (Stanton, 2005; Wickens et al., 2004). For cognitive systems engineering analyses of human–automation interaction, one must consider the goals and procedures of the human operator; the automated system and its human-device interface; and the constraints imposed by the operational environment, including dynamic changes that impact the current goals. Cognitive work analysis is concerned with identifying constraints in the operational environment that shape the mission goals of the human operator (Vicente, 1999); cognitive task analysis is concerned with describing how human operators normatively and descriptively perform goal-oriented tasks when interacting with an automated system (Kirwan & Ainsworth, 1992; Schraagen, Chipman, & Shalin, 2000); and modeling frameworks such as that of Bolton & Bass (2010, in press) seek to find discrepancies between human mental models, potential erroneous human behavior, human-device interfaces (HDIs), and device automation while considering the human operator’s goals, the operational environment, and relevant interrelationships.

Regulatory requirements for the evaluation of health IT are slowly emerging (see footnote 4), and few methods exist to formally and comprehensively test human interaction with even the simplest devices (Bolton & Bass, 2010). For system design as well as evaluation, automation should be designed using an explicit, unified, accurate, and comprehensive model of the work domain, which provides a clear understanding of the care problem that is independent of how systems are implemented. Interfaces for users should then be designed with comprehensive yet easy-to-navigate controls and displays that are not based on legacy hardware and software features. The interfaces should support task performance and communication rather than being built around billing and legal requirements, as today’s systems tend to be (Brown, Borowitz, & Novicoff, 2004; Gurses & Xiao, 2006). Cognitive systems engineering methods for supporting the design of automation for single users cannot address the combinatorial explosion resulting from system complexity where sets of care providers with different goals collaborate. Future research should extend the research methods to address the analysis of concepts of operation with the range of roles and responsibilities potentially assignable to the human and automated agents. Such analyses must consider the conditions that could impact system safety including the environment, human behavior and operational procedures, methods of collaboration and organization structures, policies and regulations.

3.4 STSA in the macroergonomic aspects of healthcare tools and technologies

Various types of tools and technologies, such as medical devices and health IT, are continuously introduced into healthcare organizations. These tools and technologies are often introduced in order to improve care quality. For instance, smart infusion pump technology and bar coding medication administration technology have been introduced to prevent medication administration errors (Bates & Gawande, 2003; Poon et al., 2010; Rothschild et al., 2005). The evidence for the safety benefits of various healthcare tools and technologies is limited. For instance, a recent study demonstrated the positive impact of bar coding medication technology on medication safety, including non-timing medication errors and potential adverse drug events (Poon et al., 2010). However, STSA research has provided evidence for many work-arounds that occur with bar coding medication technology and that could defeat the safety benefits of the technology (Carayon et al., 2007; Koppel et al., 2008). Evidence for the safety benefits of health IT such as computerized provider order entry (CPOE) and electronic health record (EHR) is also limited (Chaudhry et al., 2006). Several researchers have identified numerous sociotechnical problems in the design and implementation of these health information technologies (Ash et al., 2009; Koppel et al., 2005).

In order for the tools and technologies to produce safety benefits, we need to better understand the various physical, cognitive and psychosocial interactions between the users and the technologies. The previous two sections (Sections 3.2 and 3.3) addressed issues related to the physical and cognitive aspects of technology; this section focuses on the macroergonomic aspects of technology. End user involvement in the implementation of technology has been clearly identified as contributing to improved acceptance and use of the technology inside (Carayon et al., 2010; Karsh, 2004) and outside of health care (Korunka, Weiss, & Karetta, 1993). Various participatory ergonomics methods have been developed to foster end user involvement in technology implementation; however, the application of participatory ergonomics methods in health care has encountered numerous challenges. For instance, participation may be difficult to achieve if healthcare professionals are busy with patient care and are overloaded (Bohr, Evanoff, & Wolf, 1997). Therefore, STSA research should develop, implement and evaluate participatory methods for designing and implementing technology that consider the constraints and reality of health care.

Another important STSA research area concerns the integration of human factors and ergonomics in healthcare organizations, in particular in the context of purchasing and implementation of healthcare tools and technologies. This is an area where the six IOM quality aims can be operationalized in order to help purchasers in healthcare organizations select and compare technologies. In fact, while influencing the design of a new tool can be a very hard and long-lasting challenge, a better assessment of the users’ needs and of the overall characteristics of the technologies on the market can result in a fast and effective solution to improve system performance. STSA research should contribute to the development of approaches and methods for efficiently integrating human factors and ergonomics in the design and purchasing of technologies (Pronovost et al., 2009).

3.5 STSA in transitions of care

Increasing specialization in medicine, coupled with limited resources, has led to an increase in the number of care transitions (also known as handoffs or handovers) and resulted in highly fragmented care. Unfortunately, care transitions can pose significant risks to patient safety and result in poor clinical and financial outcomes if not managed effectively. Most of the existing research on care transitions has been on inter-shift handoffs between clinicians (Arora et al., 2005; Borowitz et al., 2008; Helms et al., under review; Horwitz et al., 2008). For example, a study of malpractice claims closed between 1984 and 2004 found that poor transitions of care between residents and between residents and attending physicians were one of the most common types of teamwork-related problems that led to patient harm (Singh et al., 2007). A qualitative study of novice nurses’ near misses and adverse events found that in seven of the eight cases, poor handoffs (due to inadequate information transfer and/or ambiguity in responsibility transfer) were a contributing factor (Ebright et al., 2004).

Care transitions between healthcare settings are critical for patient safety. For example, studies have found that 46% to 56% of all medication errors in hospitals occur when patients move from one unit to another (Barnsteiner, 2005; Bates et al., 1997; Pippins et al., 2008; Rozich & Roger, 2001). One out of five patients was estimated to experience an adverse event (62% preventable) in transitions from hospital to home (Forster et al., 2003). A recent study found that over 50% of the Medicare patients who were re-hospitalized had not gone to a follow-up outpatient appointment after discharge (Jencks, Williams, & Coleman, 2009). Another study of adverse events conducted in two large hospitals in the United Kingdom using retrospective records revealed that 11% of hospitalized patients had an adverse event, 18% of which were attributable to the discharge process (Neale, Woloshynowych, & Vincent, 2001).

Despite its importance, research aimed at improving transitions of care is limited. Previous literature identified sociotechnical factors that may negatively affect transitions of care and, as a result, patient safety. For example, a qualitative study revealed that floor nurses experience several problems when receiving patients from the ICU, including ineffective and unnecessarily detailed handoff reports, not getting information about equipment required for the particular patient prior to the transfer, untidy paper-based patient notes, and lack of adequate resources (e.g., right skill-mix, well-designed information tools) to support a safe patient transfer (Whittaker & Ball, 2000). In a cross-sectional survey study, ED housestaff, ED physician assistants, internal medicine housestaff and hospitalists at an urban academic medical center revealed information-related problems in transitions of care from ED to inpatient care, including failure to communicate critical information, particularly the most recent set of vital signs, no feedback mechanism from receiving internal medicine housestaff or hospitalists to the ED physicians, handoffs not being conducted in an interactive manner, and limited intra-group communication (e.g., physician-nurse) (Horwitz et al., 2009). A qualitative study on the process of patient handover between ED and ICU nurses showed that the information transferred during the handover was inconsistent and incomplete (McFetridge et al., 2007).

Standardized handoff communication techniques such as SBAR have been adopted by many healthcare organizations; however, the impact of this approach on improving patient safety has not been adequately demonstrated. Several other types of interventions aimed at improving transitions of care have been reported in the literature. For example, research showed that effective and practical interventions such as simplifying and standardizing a partially automated resident handoff form improved the accuracy, completeness, and clarity of the transfer of care report between residents (Wayne et al., 2008). Changes in the shift schedule to eliminate short “cross cover” shifts could reduce the opportunity for communication-related problems (Helms et al., under review). Another research study indicated that using a paper-based discharge survey for medication reconciliation almost eliminated medication errors in ICU discharge orders (Pronovost et al., 2003). Another study found the use of a discharge information brochure to be effective and helpful for families as it provided answers to some key questions related to the transfer of their child from the PICU to the general floor (Linton, Grant, & Pellegrini, 2008).

Although various interventions have been designed and implemented, transitions of care continue to be a particularly vulnerable process for patient safety. This is mainly due to the complexity of transitions of care that involve multi-dimensional problems. Most of the existing research and quality improvement efforts focus on improving transitions of care through standardizing or structuring the handoff communication (Pillow, 2007). Although using a standardized communication mechanism during the handoff report may be important, it will not be possible to improve the safety of transitions of care just by focusing on this type of intervention. Many factors in the work system may affect the safety of care transitions including culture in the organization, physical work environment, job design, and technology design and implementation. Hence, there is an urgent need for STSA research on transitions of care; this research should consider all the work system components and their interactions to identify hazards in transitions of care and develop, implement and evaluate effective and practical solutions.

3.6 STSA of patient-centered care

Care is a service situation (Falzon & Mollo, 2007); therefore, human factors models of service situations that have been applied in other work domains can be applied to healthcare. These models are centered on a participatory view of service situations, in which service production is defined as a cooperative activity between non-professionals and professionals interacting for a specific purpose.

Research on patient-centered care has significantly grown in the past two decades. In particular, the roles attributed to healthcare professionals and to patients have been debated extensively in the medical literature. Four main models can be distinguished: paternalistic decision-making, informed decision-making, physician-as-agent for patient decision-making and shared decision-making. They represent the historical evolution of the role of the patient from the status of object of analysis to the status of actor involved in a cooperative activity (see Charles, Gafni, & Whelan (1999) for a review of the literature). These models fail to recognize the challenges of implementing shared decision making in complex healthcare systems. On the contrary, from an STSA viewpoint, the question is less about adopting a unique “optimal” model than about analyzing work activity in specific sociotechnical systems; this analysis will describe conditions that favor or hinder patient-centered care. In other words, the question is less “How to develop patient-centered care?” than “Does patient-centered care actually constitute a way of improving patient satisfaction or quality and safety of care?” and “Under which technical and organizational conditions is patient-centered care effective?”

Considering patients as the center of the care process implies that patients are given more power to act, i.e. to strengthen their capacity to act on the determining factors of their health. Thus, patient-centered care requires the development of patient empowerment, which aims at “building up the capacity of patients to help them to become active partners in their own care, to enable them to share in clinical decision making and to contribute to a wider perspective in the health care system” (Lau, 2002). From an STSA viewpoint, patient participation cannot be something that the institution requires from the patients: it must be seen as a possibility that provides more degrees of freedom to patients. STSA research needs to be conducted to define ways of empowering patients, both at the clinical level (for example from the analysis of healthcare professionals’ style and patient-provider interactions) and at the organizational level (for example in analyzing initiatives that encourage patient involvement in clinical units or at the level of healthcare systems). STSA research should also address the linkage between patient empowerment and healthcare professional empowerment; it is possible that healthcare professionals’ working conditions may influence how they cooperate with patients. For instance, busy healthcare professionals may be less likely to encourage patient participation.

An underdeveloped area of STSA research concerns the roles that patients can play in the management and improvement of their own safety (Mollo et al., 2011; Pernet & Mollo, 2011; Vincent & Coulter, 2002). This line of research links two issues of importance for health policy around the world, i.e. patient safety and patient/citizen’s participation in health decisions. Patient participation in patient safety can be defined as the actions that patients take to reduce the likelihood of medical errors and/or the actions that patients take to mitigate the effects of medical errors (Davis, 2007). Theoretical evidence legitimates patient empowerment in healthcare safety:

- Patients are at the center of the healthcare process: they are not only the recipient and beneficiary of the care, but can also observe the whole care process (Koutantji et al., 2005). This allows patients to detect and identify possible errors, and thus to contribute to safety improvement.

- In a way similar to healthcare professionals, patients can also make errors (Buetow & Elwyn, 2007). Therefore, providing patients an opportunity to have an active role in safety management may be a means to enhance awareness of patient safety among patients and health professionals, and more generally citizens. Active patient participation in safety management may also reduce the probability of error occurrence by creating a process of cooperative error management.

- Medical information has become more easily accessible through various media and the Internet. Patients are thus more informed about health care; this can influence the relationship between healthcare professionals and patients. Patients may be more demanding concerning their care, and the exchange between care providers and care receivers may be less expert-based. In spite of the need for controlling the quality of information accessible on the Internet, the growing level of patient knowledge will probably lead to greater patient involvement and active participation in safety management. Because patients are more and better informed about care processes, they may be in a better position to detect adverse events.

Active patient involvement offers a promising way for developing a safety culture based on the cooperation between patients and healthcare providers, for improving citizens/users’ satisfaction, for reducing the costs associated with adverse events and for achieving a more participatory approach to healthcare system design. STSA research needs to assess and identify the technical and organizational conditions that encourage patient participation but avoid harmful consequences for patients or for healthcare professionals.

3.7 STSA in patient safety management

Patient safety has received significant attention in the past 10 years; various national and international organizations have promoted a range of patient safety activities and programs; unfortunately, many of these activities have been conducted without a clear management plan to define strategies as well as measurable objectives. Once the extent and severity of adverse events became clear worldwide, policy-makers and hospital managers started to look for methods and tools to reduce adverse events; researchers were challenged to create solutions and demonstrate the benefits of those solutions. Risk management methods and tools that existed in other industries were transferred to healthcare, but often without taking into account the specificities of health care (Bagnara, Parlangeli, & Tartaglia, 2010). This limited the use of these risk management methods and tools (Leape & Berwick, 2005); sometimes these methods and tools were used but with the wrong expectations (UK Department of Health, 2006). One example is the use of incident reporting systems that were thought to be “the one best way” to measure risk. It is now clear that incident reporting systems are only one of many tools that can be used to identify risks in healthcare systems and processes (Sari et al., 2006; Olsen et al., 2005). In addition, STSA research has shown that incident reporting systems are effective when the organization supports feedback loops targeted at both individual and collective learning (Albolino et al., 2010; Hundt, 2007; World Health Organization, 2005).

There has been little research on how to design and implement systems for patient safety management; therefore, many recommendations (e.g., the series of reports by the Institute of Medicine) and in some cases regulations (e.g., the Danish Patient Safety Act) of international and national bodies are not built on strong research evidence. In addition, many of the promising concepts and approaches to patient safety management are still under development. For instance, the concept of resilience has received much attention; it may help healthcare organizations to implement some of the features of High Reliability Organizing (HRO). However, the resilience concept has to be operationalized and translated into research hypotheses and practice in the healthcare sector (Amalberti et al., 2005). There are few examples of the application of resilience engineering in healthcare (see www.resilience-engineering.org).

In complex high-risk industries, safety management and risk management have merged and led to improved methods for monitoring operations and recognizing the boundaries of unacceptable performance (Amalberti & Hourlier, 2007; Reason, 2009). Systems for safety and risk management usually include risk measurement, risk assessment and risk control; see, for example, the recent ISO document on Risk Management (ISO 31000:2009 Risk management – Principles and guidelines). Yet in health care, national strategies and local policies still refer separately to the three components of risk management. The consequence is that after an incident has been identified, it may not be systematically investigated and may not produce an adequate improvement plan. In this perspective, we can interpret the valuable campaigns promoted by the WHO or the Institute for Healthcare Improvement as a way to compensate for the inabilities of local actors to systematically address patient safety risks. STSA research needs to develop, implement and evaluate risk management strategies that can help clinicians at the front line to identify, analyze and anticipate risks, while allowing healthcare managers to measure, assess and control risks. We also need STSA research on the integration of risk management with quality improvement activities and programs as the data coming from robust information systems on actual incidents and process breakdowns provide the local evidence needed to set priorities for ongoing system redesign.

STSA, together with its sister discipline of human factors and ergonomics, should foster prospective research that builds evidence on the who, where, what, why and how of patient safety management, so that patient safety efforts can be effective and sustainable in a manner similar to what has been achieved in other industries (Reason, 1997).

4. What kind of STSA research?

Table 1 summarizes topics for the STSA research agenda. In addition to the specific topics of STSA research, we propose recommendations for the conduct of STSA research. In order to have impact and relevance, STSA research should involve partnership with healthcare professionals and identify the specific care process and/or patient outcome of interest. These partnerships facilitate the integration of STSA methods and processes into medicine and facilitate buy-in from the medical profession. The outcomes of these partnerships can address many dimensions of quality (Institute of Medicine Committee on Quality of Health Care in America, 2001), including safety, effectiveness, patient-centered care, timeliness and efficiency. The proposed STSA research topics listed in Table 1 are those identified at this time, but as systems including personnel, equipment, devices, technologies, facilities, organizations and processes change, these topics will develop and change as well.

Table 1.

STSA Research Agenda

| Areas of STSA Research | Research Topics |
|---|---|
| Workload management | |
| Physical design of medical devices | |
| Cognitive design of health information technology | |
| Macroergonomics of healthcare technology | |
| STSA in transitions of care | |
| STSA of patient-centered care | |
| Risk management and patient safety management | |
| Resilience | |
| Feedback loop between identification, analysis of error/patient safety events and system redesign | |

STSA research can contribute to the design of healthcare systems of the future by contributing to major redesign projects aimed at improving all six dimensions of quality, as well as to small projects with the capacity to transfer and implement the new knowledge and methods in different settings so that they can have an impact on a significant population. The success of the surgical safety checklist is an example of a “glocal” tool: it is intended just for the specific context of the operating room, but it has a global validity and effectiveness (Haynes et al., 2009). Analysis frameworks that utilize concurrent models of healthcare provider task behavior, human mission (the goals the providers wish to achieve), device automation, and the operational environment that are composed together to form a larger system model should be developed (Bolton & Bass, 2010).

We as STSA researchers should focus our efforts on demonstrating how designing or redesigning care systems and processes can have an impact on patient and provider outcomes. It is important to integrate one or several of the IOM quality aims in STSA research. Only then can the value of using STSA in health care be adequately appreciated.

5. Conclusion

The ambitious STSA research agenda laid out in this paper can only be accomplished through significant, long-lasting partnerships between STSA researchers and healthcare partners. Those healthcare partners include healthcare organizations (e.g., hospital, family medicine clinic, skilled nursing facility), healthcare professionals (e.g., physicians, nurses, pharmacists, health IT professionals, patient safety professionals), designers, manufacturers and vendors of healthcare technologies, and healthcare policy makers and decision makers. These partnerships are necessary and require bridging ‘cultural gaps’ between the healthcare culture and the STSA culture (Carayon & Xie, 2011). When the two cultures meet and try to work together, there may be potential conflicts that need to be anticipated and resolved through STSA training and education of healthcare professionals and researchers, and through increased dissemination and application of STSA concepts and methods in health care.

STSA research is one of several domains covered by IIE Transactions on Healthcare Systems Engineering. We need to strongly encourage collaborative research across the various domains. For instance, the problem of workload management can benefit from STSA research as well as quantitative methods and models developed by operations management researchers.

Acknowledgments

Dr. Carayon’s contribution to this publication was partially supported by grant 1UL1RR025011 from the Clinical & Translational Science Award (CTSA) program of the National Center for Research Resources, National Institutes of Health [PI: M. Drezner]. Dr. Gurses was supported in part by an Agency for Healthcare Research and Quality K01 grant (HS018762) for her work on this paper.

Footnotes

1. References to the IOM quality aims are underlined throughout the paper.

2. Directive 2007/47/EC of the European Parliament and of the Council of 5 September 2007 amending Council Directive 90/385/EEC on the approximation of the laws of the Member States relating to active implantable medical devices, Council Directive 93/42/EEC concerning medical devices and Directive 98/8/EC concerning the placing of biocidal products on the market: http://eur-lex.europa.eu/LexUriServ/LexUriServ.do?uri=OJ:L:2007:247:0021:0055:en:PDF

3. FDA’s recent guidance document on “Applying Human Factors and Usability Engineering to Medical Device Design”, available online at: http://www.fda.gov/MedicalDevices/DeviceRegulationandGuidance/GuidanceDocuments/ucm259748.htm

4. See, for example, the EU directive referenced above. The EU directive covers biomedical devices and includes software applications that directly co-determine the interaction with the patient. For example, a Laboratory Information System is not included in this definition, but the application governing an infusion pump is.

Contributor Information

Dr Pascale Carayon, Email: carayon@engr.wisc.edu, University of Wisconsin-Madison, Dept of Industrial & Systems Engr - CQPI, 1550 Engineering Drive, 3126 Engineering Centers Building, Madison, 53705 United States.

Dr Ellen Bass, Email: ejb4n@virginia.edu, University of Virginia, Systems and Information Engineering, 151 Engineer’s Way, P.O. Box 400747, Charlottesville, 22904 United States.

Tommaso Bellandi, Email: bellandit@aou-careggi.toscana.it, Centro Gestione Rischio Clinico e Sicurezza dei Pazienti, Patient Safety Research Lab, Palazzina 67a, Azienda Ospedaliera Universitaria Careggi, Largo Brambilla 3, Firenze, 50134 Italy.

Dr Ayse Gurses, Email: agurses1@jhmi.edu, Johns Hopkins University, Anesthesiology and Critical Care, Health Policy and Management, 1909 Thames Street, 2nd floor, Baltimore, 21231 United States.

Dr Susan Hallbeck, Email: hallbeck@unl.edu, University of Nebraska.

Dr Vanina Mollo, Email: vanina.mollo@cnam.fr, CNAM, Toulouse, France.

References

- Aiken LH, Clarke SP, Sloane DM, Sochalski JA, Silber JH. Hospital nurse staffing and patient mortality, nurse burnout, and job dissatisfaction. Journal of the American Medical Association. 2002;288(16):1987–1993. doi: 10.1001/jama.288.16.1987.

- Albayrak A, Kazemier G, Meijer DW, Bonjer HJ. Current state of ergonomics of operating rooms of Dutch hospitals in the endoscopic era. Minimally Invasive Therapy & Allied Technologies. 2004;13(3):156–160. doi: 10.1080/13645700410034093. [DOI] [PubMed] [Google Scholar]

- Albayrak A. Ergonomics in the operating room: Transition from open to image-based surgery. Delft University of Technology; 2008. [Google Scholar]

- Albayrak A, van Veelen MA, Prins JF, Snijders CJ, de Ridder H, Kazemier G. A newly designed ergonomic body support for surgeons. Surgical Endoscopy. 2007;21(10):1835–1840. doi: 10.1007/s00464-007-9249-1. [DOI] [PubMed] [Google Scholar]

- Albolino S, Tartaglia R, Bellandi T, Amicosante E, Bianchini E, Biggeri A. Patient safety and incident reporting: Survey of Italian healthcare workers. Quality and Safety in Health Care. 2010;19:i8–i12. doi: 10.1136/qshc.2009.036376. [DOI] [PubMed] [Google Scholar]

- Amalberti R, Auroy Y, Berwick D, Barach P. Improving patient care: Five system barriers to achieving ultrasafe health care. Annals of Internal Medicine. 2005;142:756–764. doi: 10.7326/0003-4819-142-9-200505030-00012.

- Amalberti R, Hourlier S. Human error reduction strategies in healthcare. In: Carayon P, editor. Handbook of Human Factors and Ergonomics in Health Care and Patient Safety. Mahwah, N.J.: Lawrence Erlbaum Associates; 2007. pp. 525–560.

- Arora V, Johnson JJ, Lovinger D, Humphrey HJ, Meltzer DO. Communication failures in patient sign-out and suggestions for improvement: A critical incident analysis. Quality & Safety in Health Care. 2005;14:401–407. doi: 10.1136/qshc.2005.015107.

- Ash JS, Sittig DF, Dykstra R, Campbell E, Guappone K. The unintended consequences of computerized provider order entry: Findings from a mixed methods exploration. International Journal of Medical Informatics. 2009;78(Supplement 1):S69–S76. doi: 10.1016/j.ijmedinf.2008.07.015.

- Bagnara S, Parlangeli O, Tartaglia R. Are hospitals becoming high reliability organizations? Applied Ergonomics. 2010;41(5):713–718. doi: 10.1016/j.apergo.2009.12.009.

- Barnsteiner JH. Medication reconciliation: Transfer of medication information across settings - keeping it free from error. Journal of Infusion Nursing. 2005;28(supplement 2):31–36. doi: 10.1097/00129804-200503001-00007.

- Bass EJ, Pritchett AR. Human-Automated Judge Learning: A methodology for examining human interaction with information analysis automation. IEEE Transactions on Systems, Man, and Cybernetics, Part A: Systems and Humans. 2008;38(4):759–776.

- Bates DW, Gawande AA. Improving safety with information technology. The New England Journal of Medicine. 2003;348(25):2526–2534. doi: 10.1056/NEJMsa020847.

- Bates DW, Spell N, Cullen DJ, Burdick E, Laird N, Petersen LA, et al. The cost of adverse drug events in hospitalized patients. Journal of the American Medical Association. 1997;277(4):307–311.

- Baumgart LA, Gerling GJ, Bass EJ. Characterizing the range of simulated prostate abnormalities palpable by digital rectal examination. Cancer Epidemiology. 2010;34:79–84. doi: 10.1016/j.canep.2009.12.002.

- Berguer R. The application of ergonomics in the work environment of general surgeons. Reviews on Environmental Health. 1997;12(2):99–106. doi: 10.1515/reveh.1997.12.2.99.

- Berguer R. Surgical technology and the ergonomics of laparoscopic instruments. Surgical Endoscopy. 1998;12(5):458–462. doi: 10.1007/s004649900705.

- Berguer R. Surgery and ergonomics. Archives of Surgery. 1999;134(9):1011–1016. doi: 10.1001/archsurg.134.9.1011.

- Berguer R, Forkey DL, Smith WD. Ergonomic problems associated with laparoscopic surgery. Surgical Endoscopy. 1999;13(5):466–468. doi: 10.1007/pl00009635.

- Bogner MS, editor. Human Error in Medicine. Hillsdale, NJ: Lawrence Erlbaum Associates; 1994.

- Bohr PC, Evanoff BA, Wolf L. Implementing participatory ergonomics teams among health care workers. American Journal of Industrial Medicine. 1997;32(3):190–196. doi: 10.1002/(sici)1097-0274(199709)32:3<190::aid-ajim2>3.0.co;2-1.

- Bolton ML, Bass EJ. Formally verifying human-automation interaction as part of a system model: Limitations and tradeoffs. Innovations in Systems and Software Engineering. 2010;6(3):219–231. doi: 10.1007/s11334-010-0129-9.

- Bolton ML, Bass EJ. Using task analytic behavior models, strategic knowledge-based erroneous human behavior generation, and model checking to evaluate human-automation interaction. Paper presented at the 2011 IEEE International Conference on Systems, Man, and Cybernetics. (in press)

- Bolton ML, Bass EJ, Siminiceanu RI. Generating phenotypical erroneous human behavior to evaluate human-automation interaction using model checking. Formal Aspects of Computing. doi: 10.1016/j.ijhcs.2012.05.010. (submitted)

- Bolton ML, Siminiceanu RI, Bass EJ. A systematic approach to model checking human-automation interaction using task-analytic models. IEEE Transactions on Systems, Man, and Cybernetics, Part A: Systems and Humans. 2011.

- Borowitz SM, Waggoner-Fountain LA, Bass EJ, Sledd RM. Adequacy of information transferred at resident sign-out (inhospital handover of care): A prospective survey. Quality and Safety in Health Care. 2008;17(6):6–10. doi: 10.1136/qshc.2006.019273.

- Braby CD, Harris D, Muir HC. A psychophysiological approach to the assessment of work underload. Ergonomics. 1993;36(9):1035–1042. doi: 10.1080/00140139308967975.

- Brennan PF, Safran C. Patient safety. Remember who it’s really for. International Journal of Medical Informatics. 2004;73(7–8):547–550. doi: 10.1016/j.ijmedinf.2004.05.005.

- Brown-Clerk BJ, De Laveaga AE, LaGrange CA, Lowndes BR, Wirth LM, Hallbeck MS. Evaluation of laparoendoscopic single-site surgery (LESS) ports in a validated inanimate laparoscopic training model. Journal of Endourology. 2010;24(Supplement 1):A283.

- Brown PJ, Borowitz SM, Novicoff W. Information exchange in the NICU: what sources of patient data do physicians prefer to use? International Journal of Medical Informatics. 2004;73(4):349–355. doi: 10.1016/j.ijmedinf.2004.03.001.

- Buetow S, Elwyn G. Patient safety and patient error. Lancet. 2007;369(9556):158–161. doi: 10.1016/S0140-6736(07)60077-4.

- Carayon P. Human factors of complex sociotechnical systems. Applied Ergonomics. 2006;37:525–535. doi: 10.1016/j.apergo.2006.04.011.

- Carayon P, Gurses A. Nursing workload and patient safety in intensive care units: A human factors engineering evaluation of the literature. Intensive and Critical Care Nursing. 2005;21:284–301. doi: 10.1016/j.iccn.2004.12.003.

- Carayon P, Gurses AP, Hundt AS, Ayoub P, Alvarado CJ. Performance obstacles and facilitators of healthcare providers. In: Korunka C, Hoffmann P, editors. Change and Quality in Human Service Work. Vol. 4. Munchen, Germany: Hampp Publishers; 2005. pp. 257–276.

- Carayon P, Hundt AS, Karsh BT, Gurses AP, Alvarado CJ, Smith M, Brennan P. Work system design for patient safety: The SEIPS model. Quality & Safety in Health Care. 2006;15(Supplement I):i50–i58. doi: 10.1136/qshc.2005.015842.

- Carayon P, Hundt AS, Wetterneck TB. Nurses’ acceptance of Smart IV pump technology. International Journal of Medical Informatics. 2010;79:401–411. doi: 10.1016/j.ijmedinf.2010.02.001.

- Carayon P, Wetterneck TB, Hundt AS, Ozkaynak M, DeSilvey J, Ludwig B, Ram P, Rough SS. Evaluation of nurse interaction with bar code medication administration technology in the work environment. Journal of Patient Safety. 2007;3(1):34–42.

- Carayon P, Xie A. Decision making in healthcare system design: When human factors engineering meets health care. In: Proctor RW, Nof SY, Yih Y, editors. Cultural Factors in Decision Making and Action. Taylor & Francis; 2011.

- Charles C, Gafni A, Whelan T. Decision-making in the physician-patient encounter: Revisiting the shared treatment decision-making model. Social Science and Medicine. 1999;49(5):651–661. doi: 10.1016/s0277-9536(99)00145-8.

- Chaudhry B, Wang J, Wu S, Maglione M, Mojica W, Roth E, et al. Systematic review: Impact of health information technology on quality, efficiency, and costs of medical care. Annals of Internal Medicine. 2006;144:E12–E22. doi: 10.7326/0003-4819-144-10-200605160-00125.

- Cook RI, Woods DD, Miller C. A Tale of Two Stories: Contrasting Views of Patient Safety. Chicago, IL: National Patient Safety Foundation; 1998.

- Crickmore R. A review of stress in the intensive care unit. Intensive Care Nursing. 1987;3:19–27. doi: 10.1016/0266-612x(87)90006-x.

- Crombie NAM, Graves RJ. Ergonomics of keyhole surgical instruments - patient friendly, but surgeon unfriendly. In: Robertson S, editor. Contemporary Ergonomics. London: Taylor & Francis; 1996. pp. 385–390.

- Cuschieri A. Whither minimal access surgery: tribulations and expectations. American Journal of Surgery. 1995;169(1):9–19. doi: 10.1016/s0002-9610(99)80104-4.

- Dara SI, Afessa B. Intensivist-to-bed ratio: Association with outcomes in the medical ICU. Chest. 2005;128:567–572. doi: 10.1378/chest.128.2.567.

- Davis RE. Patient involvement in patient safety. National Knowledge Week; 2007 Nov 26–30.

- Decker K, Bauer M. Ergonomics in the operating room - from the anesthesiologist’s point of view. Minimally Invasive Therapy & Allied Technologies. 2003;12(6):268–277. doi: 10.1080/13645700310018795.

- DeVoge JM, Bass EJ, Sledd R, Borowitz SM, Waggoner-Fountain L. Collaborating with physicians to redesign a sign-out tool. Ergonomics in Design. 2009;17:20–28. doi: 10.1518/106480409X415170.

- Duffield C, O’Brien-Pallas L. The causes and consequences of nursing shortages: A helicopter view of the research. Australian Health Review. 2003;26(1). doi: 10.1071/ah030186.

- Dy S, Gurses AP. Care pathways and patient safety: Key concepts, patient outcomes, related interventions. International Journal of Care Pathways. 2010;14(3):124–128.

- Ebright PR, Patterson ES, Chalko BA, Render ML. Understanding the complexity of registered nurse work in acute care settings. Journal of Nursing Administration. 2003;33(12):630–638. doi: 10.1097/00005110-200312000-00004.

- Ebright PR, Urden L, Patterson E, Chalko B. Themes surrounding novice nurse near-miss and adverse-event situations. Journal of Nursing Administration. 2004;34(11):531–538. doi: 10.1097/00005110-200411000-00010.

- Effken JA. Different lenses, improved outcomes: A new approach to the analysis and design of healthcare information systems. International Journal of Medical Informatics. 2002;65(1):59–74. doi: 10.1016/s1386-5056(02)00003-5.

- Emam TA, Frank TG, Hanna GB, Cuschieri A. Influence of handle design on the surgeon’s upper limb movements, muscle recruitment, and fatigue during endoscopic suturing. Surgical Endoscopy. 2001;15(7):667–672. doi: 10.1007/s004640080141.

- Falzon P, Mollo V. Managing patients’ demands: The practitioners’ point of view. Theoretical Issues in Ergonomics Science. 2007;8(5):445–468.

- Forst L, Friedman L, Shapiro D. Carpal tunnel syndrome in spine surgeons: A pilot study. Archives of Environmental & Occupational Health. 2006;61(6):259–262. doi: 10.3200/AEOH.61.6.259-262.

- Forster AJ, Murff HJ, Peterson JF, Gandhi TK, Bates DW. The incidence and severity of adverse events affecting patients after discharge from the hospital. Annals of Internal Medicine. 2003;138(3):161–174. doi: 10.7326/0003-4819-138-3-200302040-00007.

- Gerbrands A, Albayrak A, Kazemier G. Ergonomic evaluation of the work area of the scrub nurse. Minimally Invasive Therapy & Allied Technologies. 2004;13(3):142–146. doi: 10.1080/13645700410033184.

- Gurses A, Carayon P. A qualitative study of performance obstacles and facilitators among ICU nurses. Applied Ergonomics. 2009;40(3):509–518. doi: 10.1016/j.apergo.2008.09.003.

- Gurses A, Carayon P, Wall M. Impact of performance obstacles on intensive care nurses workload, perceived quality and safety of care, and quality of working life. Health Services Research. 2009:422–443. doi: 10.1111/j.1475-6773.2008.00934.x.

- Gurses AP, Xiao Y. A Systematic Review of the Literature on Multidisciplinary Rounds to Design Information Technology. Journal of the American Medical Informatics Association. 2006;13(3):267–276. doi: 10.1197/jamia.M1992.

- Gurses AP, Xiao Y, Hu P. User-designed information tools to support communication and care coordination in a trauma hospital. Journal of Biomedical Informatics. 2009;42(4):667–677. doi: 10.1016/j.jbi.2009.03.007.

- Hallbeck MS. How to develop usable surgical devices - The view from a U.S. research university. In: Duffy VG, editor. Advances in Occupational, Social, and Organizational Ergonomics. CRC Press; 2010. pp. 286–295.

- Hallbeck MS, Koneczny S, Buchel D, Matern U. Ergonomic usability testing of operating room devices. Studies in Health Technology and Informatics. 2008;132:147–152.

- Haynes AB, Weiser TG, Berry WR, Lipsitz SR, Breizat AH, Dellinger EP, et al. A surgical safety checklist to reduce morbidity and mortality in a global population. New England Journal of Medicine. 2009;360(5):491–499. doi: 10.1056/NEJMsa0810119.

- Helms AS, Perez TE, Baltz J, Donowitz G, Hoke G, Bass EJ, et al. Internal medicine resident sign-out: Analysis of hand-off of care, the impact of night float, and an appreciative-inquiry approach to improvement. Journal of General Internal Medicine. doi: 10.1007/s11606-011-1885-4. (under review)