Abstract

Handheld computing has had many applications in medicine, but relatively few in pathology. Most reported uses of handhelds in pathology have been limited to experimental endeavors in telemedicine or education. With recent advances in handheld hardware and software, along with concurrent advances in whole-slide imaging (WSI), new opportunities and challenges have presented themselves. This review addresses the current state of handheld hardware and software, provides a history of handheld devices in medicine focusing on pathology, and presents future use cases for such handhelds in pathology.

Keywords: Education, handhelds, smartphones, tablets, WSI

INTRODUCTION

Handheld computing – the use of small computers that fit in the palm of the hand and whose user interfaces are geared for touch input – has a two-decade-long history in medicine, with applications ranging from the simple, such as a list of eponyms,[1] to the more complex, such as automated systems that remind patients of upcoming medical appointments,[2] and beyond. From the early 1990s to the present, handheld computing has sustained remarkable growth in user interface, available computational resources, applications, and popularity. It is therefore no surprise that a search of the medical literature reveals thousands of peer-reviewed articles on the use of handheld computing for some aspect of medicine. However, very few of these relate directly to the specialty of pathology. This poses a conundrum: if handheld computing has become truly ubiquitous in medicine, why has pathology lagged behind? In this review, we attempt to answer this question by (a) providing a short history of handheld computing, (b) detailing the current capabilities of handheld devices (handhelds), (c) comprehensively reviewing the medical literature on handheld devices, (d) focusing on the subset of literature that deals with handhelds in pathology, (e) addressing security issues, and (f) extrapolating future trends.

HISTORICAL PERSPECTIVE: HANDHELD COMPUTING

The history of handheld computing began in 1984 with the Psion Organiser, which was followed in 1986 by the Organiser II. In 1992, Go Corporation shipped PenPoint, a handwritten gesture user interface technology that became the basis of the first handheld handwriting-driven computer platforms, which encouraged Microsoft to develop its own handwriting recognition technologies. Although the user interface of the Psion Series 3 (released in 1993) was that of a miniature laptop with no touchscreen or handwritten input, it is widely considered to be the first true personal digital assistant (PDA) – a device that incorporates contact management, time management, and other secretarial functions. Its contemporary competitor, the Apple Newton, integrated sophisticated handwriting recognition capabilities, and was the first handheld computer to use an Advanced RISC Machine (ARM) central processing unit (CPU). In 1996, Palm released its iconic Pilot series of PDAs, incorporating a gesture area (an area where touch input is accepted, but not part of the screen) and a highly simplified and effective handwriting notation known as Graffiti. Between 1996 and 2002, Palm and its PalmOS were the de facto PDA standard, but the release of Windows CE (later to become Windows Mobile)-based devices in the early 2000s would challenge this.

Meanwhile, also in 1996, the Nokia 9000 Communicator was released; it operated much like the Psion Series 3, but had an integrated telephone, cellular modem, and text web browser. It is now widely considered to be the first true smartphone (a phone that provides functionality and a user experience comparable to traditional desktop computing). In 2001, both Palm and Microsoft made their first forays into the smartphone market, but were largely unsuccessful outside the United States. In 2002, the first BlackBerry appeared; refined and upgraded versions of these devices are still available today. The year 2002 also marked the release of the Handspring (later acquired by Palm) Treo, the first smartphone with both a touchscreen (a screen that is also a touch input area) and a full keyboard; it was also the first handheld device to integrate all of the features that we recognize in a smartphone today (except for multitouch technology and a modern standards-compliant web browser).

Between 2002 and 2007, Nokia attained dominance in the smartphone market, and nonsmartphone PDAs became obsolete. In 2003 Microsoft brought Tablet personal computers (PCs) – pen-based, fully functional PCs with handwriting support – to market, but they never gained popularity and are now extremely rare. The year 2007 marked a revolution in the handheld computing industry, with the release of the iPhone (which runs an operating system [OS] known as iOS). The iPhone was the first handheld computer to master the modern multitouch user interface paradigm and to feature a truly modern web browser. In 2008, Android OS was released by Google, with features and a user interface similar to the iPhone; by 2010 it had become the dominant smartphone operating system on the market. In 2009, Palm released the Pre; this phone's operating system, known as WebOS, had an advanced multitasking user interface that has been widely copied by other smartphone user interfaces.[3,4] In 2010, Microsoft released Windows Phone 7, which had a radically new multitouch-based user interface that brought Windows-based smartphones into the modern age. This user interface, rather than Microsoft's older Tablet PC interface, is now being integrated into the next desktop version of Windows.[5]

The iOS-based tablet known as the iPad was released by Apple in 2010, followed by the release of Android-based and WebOS-based tablets (handheld computers with 7- to 10-inch screens, no keyboards, and often no cellular modems) in 2011. WebOS (and associated WebOS-based hardware) was abruptly discontinued in August 2011, leaving iOS and Android as the two standard smartphone operating systems. Finally, in October 2011 Apple launched its iPhone 4S, which integrated a sophisticated voice recognition-based user interface known as Siri. We have now arrived at an era where handheld devices feature multitouch user interfaces, increasingly fast central processing units, large amounts of RAM, powerful integrated graphics processors, and fast mobile broadband to deliver a mobile computing experience that many people find more than “good enough” for their daily computing needs.[6]

CURRENT HANDHELDS: HARDWARE

Modern handhelds have several hardware components in common, including the following:

A battery

A 1GHz+ ARM CPU with integrated graphics processor

256MB-1GB RAM

1-16GB flash memory

Global positioning system (GPS)

3G and/or 4G network connectivity

Wi-Fi (802.11n) network connectivity

Capacitive touchscreen with multitouch technology

Accelerometer

Gyroscope

MicroUSB connectivity

Bluetooth connectivity

Integrated camera

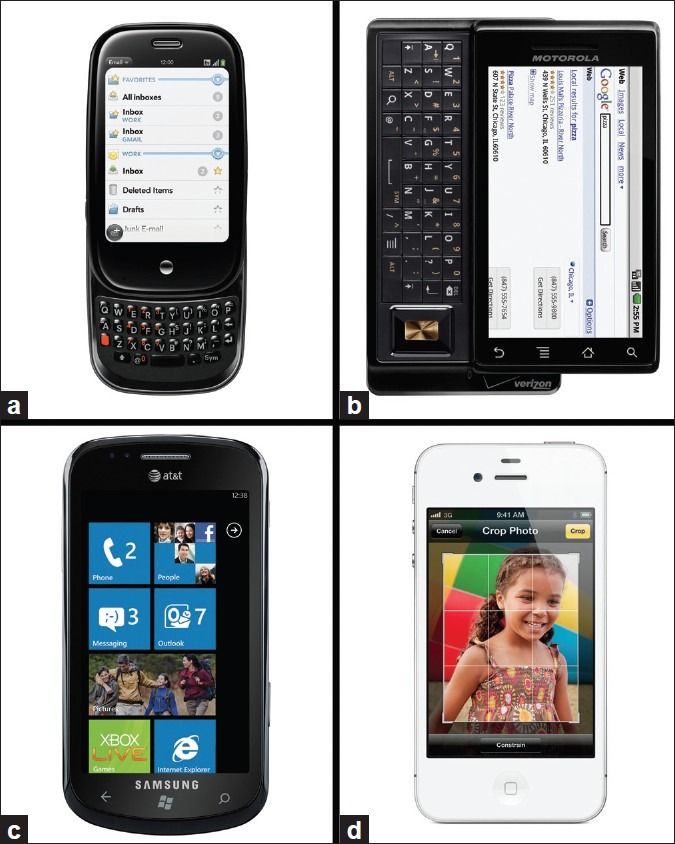

Some handheld devices have physical keyboards, and others have connectivity for video output [Figure 1]. ARM CPUs are not directly comparable with the x86 CPUs in desktop and laptop computers; they are tailored for low power consumption at the expense of processing speed. Benchmarks of modern ARM CPUs have shown them to be anywhere between 1.5 and 4 times slower than modern low-end laptop CPUs, but with over 10 times the power efficiency.[7] In addition, modern ARM CPUs integrate hardware decode engines for popular video formats (resulting in seamless playback of 1080p HD video on even the most modest smartphone) and powerful 3D graphics processing units that approach the power of the current generation of video gaming consoles.[8] As a result, current smartphones and tablets have proven capable of applications that were once the bailiwick of high-end computing, including rendering of interactive 3D environments[9] and manipulation of extremely large image data sets.[10]

Figure 1.

Comparison of modern smartphones (images courtesy Palm, Motorola, Apple, and Samsung). (a) Palm Pre, a vertical slider phone running HP webOS; (b) Motorola Droid, a horizontal slider phone running Google Android; (c) Samsung Focus, a slate phone running Microsoft Windows Phone 7; (d) Apple iPhone 4S, a slate phone running Apple iOS

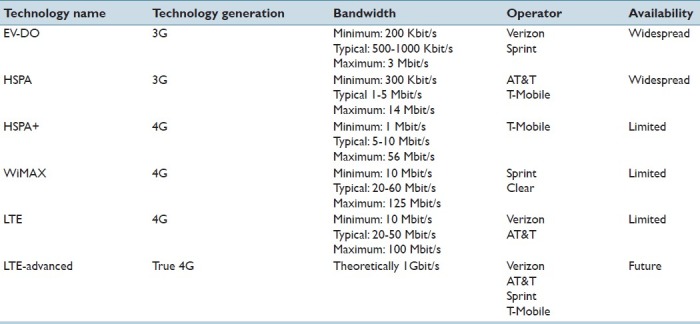

Both 3G and 4G wireless network connectivity are considered “broadband,” as opposed to the earlier 1G (analog voice) and 2G (digital voice) network standards. 3G cellular connectivity is currently dominant; it supports minimum speeds of 200 Kbit/s, typical speeds of around 500-1,000 Kbit/s, and maximum speeds in the low Mbit/s range.[11] 4G connectivity is still under development and currently boasts peak speeds of 56-128 Mbit/s, depending on the technology used [Table 1]. Note that the connectivity currently marketed as 4G does not actually meet the International Telecommunication Union (ITU) criteria for the technical 4G standard, which mandates peak speeds of 1 Gbit/s.[12] In practice, current 3G and 4G networks, even when not operating at peak speeds, have proven capable of sending and receiving, in real time, large sets of tiled image data very similar to those used in whole-slide imaging (WSI).[13]
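To make these bandwidth figures concrete, the arithmetic below estimates how long one screenful of WSI tiles would take to download at different link speeds. The tile size (about 12 KB for a 256 × 256 JPEG tile) and the 12 tiles needed to fill a 1024 × 768 screen are assumptions chosen for illustration, not values reported in the cited studies.

```python
# Back-of-the-envelope estimate of how long WSI tiles take to arrive over a
# cellular link. Tile size and tile count are illustrative assumptions.

def transfer_seconds(payload_bytes: int, link_kbit_per_s: float) -> float:
    """Time to move payload_bytes over a link of link_kbit_per_s (1 kbit = 1000 bits)."""
    bits = payload_bytes * 8
    return bits / (link_kbit_per_s * 1000)

# Assumed: a 256 x 256 JPEG tile of roughly 12 KB.
TILE_BYTES = 12_000
# Assumed: a 1024 x 768 screen is filled by 4 x 3 = 12 such tiles.
TILES_PER_SCREEN = 12

for label, speed in [("3G minimum", 200), ("3G typical", 750), ("4G peak (low end)", 56_000)]:
    per_screen = transfer_seconds(TILE_BYTES * TILES_PER_SCREEN, speed)
    print(f"{label:>18}: {per_screen:.2f} s per screenful")
```

Under these assumptions a screenful arrives in roughly 1.5 s at typical 3G speeds and in about two hundredths of a second at low-end 4G peak rates; because tiled viewers draw each tile as soon as it arrives, the perceived delay is shorter still.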

Table 1.

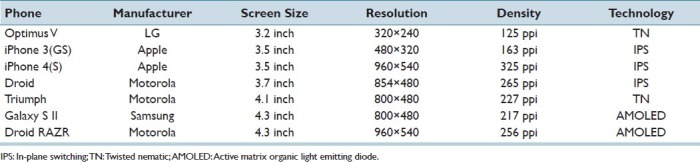

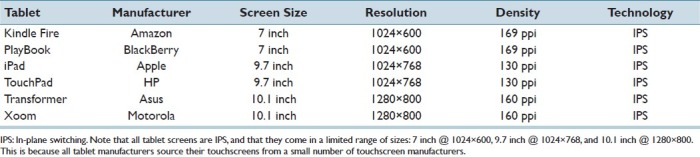

Screens range in size from 3.2 to 4.3 inches diagonal for smartphones, and from 7 to 10 inches diagonal for tablets. Resolutions range from 320×240 to 960×540 for smartphones, and from 1024×768 to 1280×800 for tablets. Pixel density ranges from 120 to 325 pixels per inch, depending on device and form factor. Two liquid crystal display (LCD) technologies, twisted nematic (TN) and in-plane switching (IPS), predominate, along with active-matrix organic light-emitting diode (AMOLED) displays, which are not LCDs. TN screens are cheap and power efficient but have mediocre color reproduction and poor viewing angles; they are also more sensitive to frequent pressure from users. IPS screens have the best color reproduction and viewing angles of all display types, but are expensive and consume a large amount of power. AMOLED screens offer color reproduction and viewing angles comparable to IPS with power efficiency comparable to TN, but are expensive, have a relatively short operational life, and are made by only one supplier. It therefore comes as no surprise that TN screens are found in low-end to midrange handhelds, and that IPS and AMOLED screens are found in higher-end models [Tables 2 and 3].[14]
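Pixel density follows directly from resolution and screen size: the number of pixels along the diagonal divided by the diagonal length in inches. The sketch below applies this to resolutions quoted above; the specific diagonals (9.7, 10.1, and 3.2 inches) are assumed examples, and manufacturers' published figures can differ slightly because nominal diagonals are rounded.

```python
import math

def pixels_per_inch(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixel density = pixels along the diagonal / diagonal length in inches."""
    return math.hypot(width_px, height_px) / diagonal_in

# Resolutions from the ranges above; the diagonals are assumed examples.
print(round(pixels_per_inch(1024, 768, 9.7)))   # 132 (9.7-inch tablet)
print(round(pixels_per_inch(1280, 800, 10.1)))  # 149 (10.1-inch tablet)
print(round(pixels_per_inch(320, 240, 3.2)))    # 125 (3.2-inch phone)
```

All three values fall within the 120-325 ppi range cited above; a higher-density phone screen packs a similar pixel count into a much shorter diagonal.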

Table 2.

Comparison of some modern handheld (phone) screens

Table 3.

Comparison of some modern handheld (tablet) screens

Many cell phones incorporate a compact digital camera (the so-called camera phone). These cameras range from 3 to 12 megapixels in resolution; most high-end models also have built-in LED flash units. These cameras often have fixed-focus lenses and small sensors that limit their performance in poor lighting, and they may lack a physical shutter. Their performance tends to lag far behind that of low-end stand-alone digital cameras, though there are notable exceptions (e.g., the Nokia N8).[15,16] That said, multiple studies across several medical specialties have shown that these cameras can be useful in telemedicine and even in limited forms of static telepathology. Current camera phones are capable of taking excellent-quality microscopic images [Figure 2].

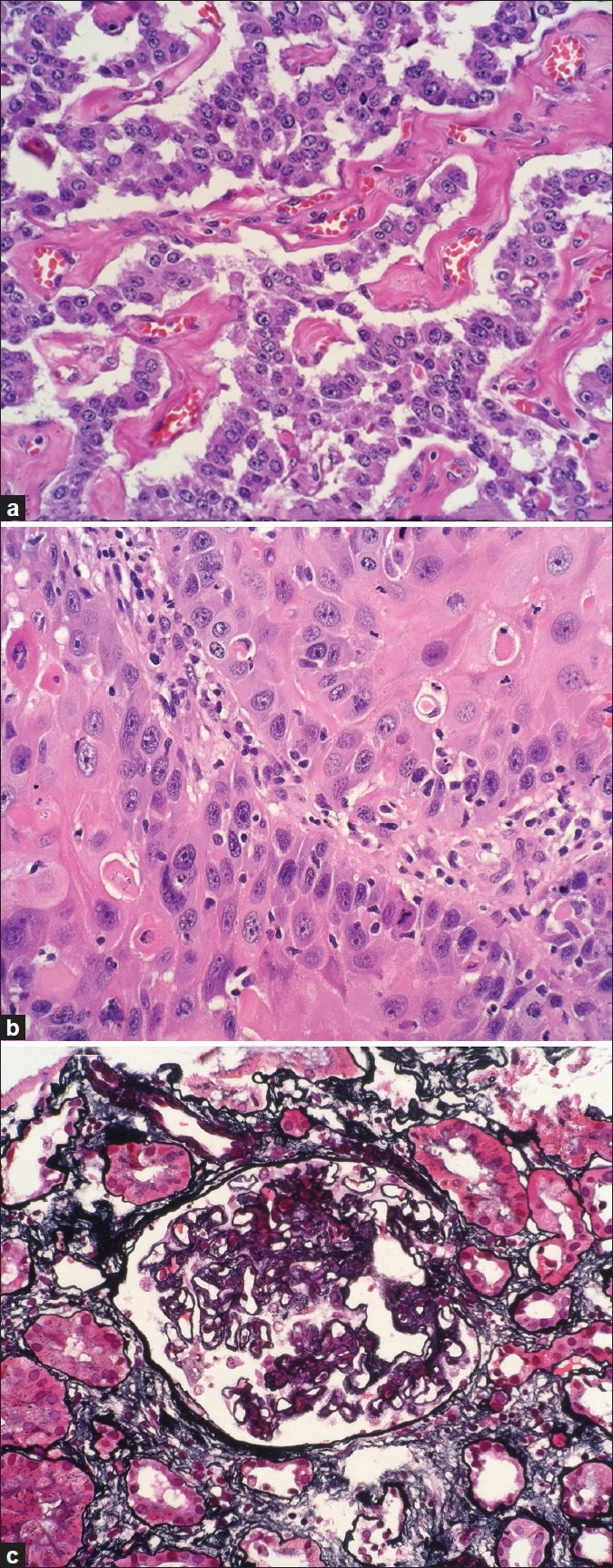

Figure 2.

Microscopic images taken through a microscope eyepiece using an Apple iPhone 4 (image courtesy of Dr. Milon Amin, UPMC). (a) pancreatic neuroendocrine tumor (H and E); (b) squamous cell carcinoma (H and E); (c) normal glomerulus (Jones silver stain)

CURRENT HANDHELDS: SOFTWARE

Just as in the world of general computing, the operating system largely defines what a handheld computer can and cannot do. The dominant operating systems of the modern era are Google's Android and Apple's iOS, with Microsoft's Windows Phone 7 and Research in Motion's BlackBerry OS as other options. While Android's market share is higher than that of iOS, iOS enjoys far greater third-party support, with over 230,000 installable applications as opposed to Android's 70,000.[17] iOS's software ecosystem takes a so-called “walled garden” approach, making it impossible for a user to install any third-party software that has not been approved by Apple. In contrast, Google's software ecosystem is open in the manner of Microsoft's desktop operating system (Windows), allowing the user to install any application from any source. Ironically, Microsoft's handheld operating system takes the walled garden approach.[18]

With the exception of BlackBerry OS, all of these operating systems are fundamentally rooted in what is known as the multitouch user interface paradigm, in which the primary point of human-computer interaction is a capacitive touchscreen that utilizes finger-driven, as opposed to stylus- or pen-driven, input. When this technology was first introduced, it constituted a radical break from the familiar monitor-keyboard-mouse user interface paradigm that has dominated computing since the 1980s, and necessitated the translation of familiar tasks into gestures that could be performed by the fingers of a human hand. Gestures universal to iOS, Android, and Windows Phone 7 include the following:[19]

Flicking upward or downward with one finger to scroll through text

Spreading two fingers apart to zoom in, and pinching them together to zoom out

Pressing and holding in order to drag items from one position to another, and releasing when done

Holding a button down to access advanced options.
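The two-finger zoom gesture listed above reduces to simple geometry: the zoom factor is the current distance between the fingers divided by their distance when the gesture began. The sketch below illustrates that idea in plain Python; it is not the iOS, Android, or Windows Phone gesture API, and the `min_zoom`/`max_zoom` limits are hypothetical values a slide viewer might impose.

```python
import math
from typing import Tuple

Point = Tuple[float, float]

def pinch_scale(start: Tuple[Point, Point], current: Tuple[Point, Point]) -> float:
    """Zoom factor implied by a two-finger gesture: current spacing / initial spacing.

    A result > 1 means the fingers moved apart (zoom in);
    < 1 means they were pinched together (zoom out).
    """
    d0 = math.dist(*start)
    d1 = math.dist(*current)
    if d0 == 0:
        raise ValueError("fingers must start at distinct points")
    return d1 / d0

def apply_zoom(zoom: float, scale: float, min_zoom: float = 0.25, max_zoom: float = 40.0) -> float:
    """Multiply the current magnification by the gesture scale, clamped to hypothetical limits."""
    return max(min_zoom, min(max_zoom, zoom * scale))

# Fingers start 100 px apart and end 200 px apart: a 2x zoom-in.
s = pinch_scale(((0, 0), (100, 0)), ((0, 0), (200, 0)))
print(s, apply_zoom(10.0, s))
```

Clamping matters for WSI-style viewers, where the useful magnification range is bounded by the lowest and highest levels of the image pyramid.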

While the multitouch user interface paradigm is known to be superb for the user who is merely consuming content, it is not as well suited for the user who is creating content – unless said user happens to be finger painting. The touchscreen as the major point of human-computer interaction becomes a liability when attempting to input any amount of text, for instance, because there is little to no tactile feedback for key presses and the visual feedback is easily obscured by the fingers that are attempting to input the text. Several methods of tackling this problem have arisen:[20]

- The integration of a physical keyboard
  - Examples: Palm Pre (WebOS), LG Optimus Slider (Android), BlackBerry Curve (BlackBerry OS)
- On-screen keyboards with built-in pattern recognition and word autocorrection/autocompletion algorithms
  - Examples: iOS Keyboard (iOS), SwiftKey X (Android)
- On-screen input methods that exploit multitouch capabilities to deliver innovative ways of entering text
  - Example: Swype (Android)
- Voice recognition
  - Example: Siri (iOS; specific to iPhone 4S)

Each of these methods has advantages and pitfalls. Physical keyboards are the most accurate option, but add extra weight and expense to the device; they are therefore becoming increasingly rare. On-screen keyboards are the standard input method of the modern era, yet their error rate is high and their input speed is the lowest of these methods. Multitouch input methods mitigate both the high error rate and the low input speed characteristic of on-screen keyboards, but have a steep learning curve.[20] Voice recognition is still in its infancy, and is currently difficult to use if the user does not speak English, has a speech impediment, or speaks English with a heavy accent.[21]

With the exception of BlackBerry OS, which runs Opera Mini, and Windows Phone 7, which runs Internet Explorer, the web browsers found on current handheld devices share the same heritage. They are all based on WebKit, a rendering engine that forms the basis of Apple's Safari and Google's Chrome.[22] As such, they are modern web browsers that have (a) support for advanced web standards like HTML5, CSS3, and XML, (b) fast JavaScript engines that greatly accelerate Web 2.0 applications, and (c) hardware support for high-definition web video playback. They are no less capable than modern desktop web browsers, meaning that Web 2.0 applications targeted at desktop PCs will function as intended on most current handhelds.[23]

“Voice over IP” (VoIP) applications (e.g., Skype) have emerged as viable options on handhelds, with some smartphones and tablets integrating front-facing cameras in order to enable two-way videoconferencing.[24] Standardized programming interfaces that support videoconferencing are available on both iOS and Android, reducing the programming burden for this class of applications. Methods for grabbing still images from, or placing annotations atop, live video streams also exist.[25]

Finally, all modern handheld operating systems integrate a relational database that can be manipulated by way of its database management system (DBMS). SQLite is the de facto standard found in iOS, Android, BlackBerry OS, and WebOS.[26] It is high-performance and fully ACID (atomicity, consistency, isolation, durability) compliant, meaning that it is no less reliable than the commercial DBMSs found at the heart of modern electronic medical records (EMR) or laboratory information systems (LIS). Perhaps most important to the informaticist is the fact that this DBMS is equipped with a standard set of SQL commands, meaning that database access and manipulation are largely identical to those of desktop and enterprise relational databases.[27]
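Python's standard `sqlite3` module wraps the same SQLite engine, so it can illustrate both points made above: ordinary SQL works unchanged, and a transaction either commits completely or not at all (the "A" in ACID). The table layout and values below are hypothetical, and an in-memory database stands in for the file an app would keep on the device.

```python
import sqlite3

# In-memory database standing in for an on-device SQLite file.
# The lab_result table and its columns are hypothetical.
conn = sqlite3.connect(":memory:")
conn.execute(
    """CREATE TABLE lab_result (
           id INTEGER PRIMARY KEY,
           mrn TEXT NOT NULL,
           test_code TEXT NOT NULL,
           value REAL,
           units TEXT
       )"""
)

rows = [
    ("000123", "NA", 140.0, "mmol/L"),
    ("000123", "K", 4.1, "mmol/L"),
    ("000456", "NA", 128.0, "mmol/L"),
]

# "with conn" commits the block as one transaction, or rolls it back on error.
with conn:
    conn.executemany(
        "INSERT INTO lab_result (mrn, test_code, value, units) VALUES (?, ?, ?, ?)", rows
    )

# Atomicity: the second insert violates NOT NULL, so the first is rolled back too.
try:
    with conn:
        conn.execute("INSERT INTO lab_result (mrn, test_code, value) VALUES ('000789', 'CL', 101.0)")
        conn.execute("INSERT INTO lab_result (mrn, test_code, value) VALUES (NULL, 'CL', 99.0)")
except sqlite3.IntegrityError:
    pass

# Standard SQL, written exactly as it would be for a desktop or enterprise RDBMS.
low_sodium = conn.execute(
    "SELECT mrn, value FROM lab_result WHERE test_code = 'NA' AND value < 135"
).fetchall()
count = conn.execute("SELECT COUNT(*) FROM lab_result").fetchone()[0]
print(low_sodium, count)
```

Only the three rows from the successful transaction remain; the partially executed second transaction leaves no trace.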

THE EVOLUTION OF HANDHELDS IN MEDICINE

While there are reports in the literature as early as 1983 of rudimentary clinical calculations performed on programmable calculators,[28,29] we choose to begin with the Apple Newton in 1993, as this was the first general-purpose handheld computer to be used in medicine. Unfortunately, it was large and had short battery life, and its handwriting recognition, though state-of-the-art for its time, was still too poor for use in a production environment.[30] Early applications included note taking, use of the device as a calculator or quick medical reference, use of the device's PCMCIA (PC card) slot for interfacing with medical sensors, and at least one abortive effort to create a distributed e-prescribing system.[31] While the Newton was an open platform, all programs had to be written in NewtonScript, a lightweight but advanced programming language that integrated concepts that were well ahead of their time and that would later be found in languages like the now-ubiquitous JavaScript.[32] Furthermore, at the time Newtons could only be used with Apple Macintosh computers, severely limiting their utility in the Microsoft Windows-dominated medical world. It is no surprise, therefore, that after an initial wave of interest, the Newton was quickly ruled out as a viable medical handheld platform.

The Palm Pilot and Windows CE-based devices, though they came with their own set of restrictions, largely solved the problems that made the Newton unsuitable for frontline medical use, with a resultant explosion in the use of handhelds in medicine.[33] However, several limitations remained. First, the processing power of these devices was extremely limited. Though color screens became a standard feature of PDAs in the early to mid-2000s, they were both low resolution and low quality, usually capable of displaying at most 8-bit color. The networking capabilities of these PDAs were largely limited to docking and syncing with a host PC, and unsynced data could be irretrievably lost if the PDA ran out of battery power at any point. As a result, applications during this era of handhelds in medicine were almost completely text based. Nevertheless, this era saw the development of the following applications:

- Quick access to medical references and pharmacopoeias
- Sophisticated clinical calculators[36]
  - Cockcroft-Gault creatinine clearance for GFR estimation
  - Anion gap
- Access to medical literature[37]
  - AvantGo
  - HealthProLink
  - OVID@Hand
- Patient tracking, electronic medical record, and clinical decision support[37,38]
  - Patient Tracker
  - WardWatch
  - Medical PocketChart
- Medical education tools[39,40]
  - Procedure logging
  - Evaluation of courses
  - Communication between faculty and students
- Data acquisition for research[41]
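The clinical calculators of this era were small, text-only programs, which is why they suited PDAs so well. The sketch below implements the two examples listed above with their standard published formulas; the input units (years, kg, mg/dL, mmol/L) are assumptions of this sketch, and it is not a reconstruction of any specific PDA application.

```python
def cockcroft_gault_crcl(age_years: float, weight_kg: float,
                         serum_cr_mg_dl: float, female: bool) -> float:
    """Estimated creatinine clearance (mL/min), Cockcroft-Gault:
    ((140 - age) * weight) / (72 * serum creatinine), times 0.85 for women.
    """
    if serum_cr_mg_dl <= 0:
        raise ValueError("serum creatinine must be positive")
    crcl = ((140 - age_years) * weight_kg) / (72 * serum_cr_mg_dl)
    return crcl * 0.85 if female else crcl

def anion_gap(na: float, cl: float, hco3: float) -> float:
    """Serum anion gap (mmol/L) = Na - (Cl + HCO3), omitting potassium."""
    return na - (cl + hco3)

print(cockcroft_gault_crcl(60, 72, 1.0, female=False))  # 80.0
print(cockcroft_gault_crcl(60, 72, 1.0, female=True))   # 68.0
print(anion_gap(140, 104, 24))                          # 12
```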

The rise of the smartphone between 2002 and 2007 ushered in the integration of network connectivity, nonvolatile flash memory, and cameras. Processor power and memory capacity also grew exponentially during this period. Mobile operating systems became more capable and enabled the creation of increasingly sophisticated mobile applications. The availability and popularity of these devices in the general population also rose rapidly, meaning that more patients could be expected to own one. Unfortunately, there was still little to no integration or support of these devices in the information technology (IT) environments of health care systems, with the exception of BlackBerry phones for staff e-mail and paging. Though color screens were standard by this point, they were still relatively low resolution and could generally display at most 16-bit color. Devices in this era suffered from severe “platform fragmentation”; that is, programs written for one phone model were specific to that model and could not easily be ported to other systems. As a result, advances in the medical use of handheld computing during this period tended to focus on the integrated camera, the universal text messaging protocol known as Short Message Service (SMS), or a combination of the two. Examples of such applications include the following:

- Telemedicine
- Patient care

The last four years constitute the modern age of handheld computing. This is an era dominated by Apple iOS-based and Google Android-based devices, with multitouch user interfaces, high-resolution screens that can display 24-bit color, true mobile broadband network connections, and processing capabilities approaching those of low-end laptops. Platform fragmentation is rapidly becoming a thing of the past, as the industry has essentially standardized on iOS and Android. Both platforms offer consistent programming environments and rich software ecosystems. Between 2009 and 2011, smartphone market share in the United States nearly tripled, from 10% to 28%, with some estimates predicting that by 2014 the majority of phones sold will be smartphones.[53] Accordingly, the vast majority of activity regarding handheld computing in medicine has shifted to smartphones and their unique capabilities. These include the following:

- Expansion of previous telemedicine efforts
- Portable electronic medical records
- Medical education
  - Video access on smartphones and tablets[63]
- Patient care
  - Increasing use of SMS and MMS technology
  - Patient access to personal health information
  - Direct annotation of radiologic images[64]

In this era, security is a persistent issue. When utilizing 3G or 4G telecommunications, all data pass through servers of a network provider, which would be considered a third party. Interception of sensitive personal health information is also a possibility. Some network providers – such as Verizon – are currently taking steps to assist with these thorny issues in health information exchange.[65] Also, a smartphone could easily be stolen or misplaced, leading to potentially catastrophic disclosure of sensitive medical data. There are likewise risks for disclosure via eavesdropping, as a user with a smartphone is likely to access sensitive medical data in a public space.

HANDHELDS IN THE PATHOLOGY LITERATURE

An exhaustive search of the medical literature in PubMed reveals 6716 articles on the topic of handheld computing, ranging in publication date from 1983 to the present. Of these, 3663 (55%) deal with the use of handhelds in medicine, rather than the health risks of handheld devices (e.g., risk of cancer from radiation, risk of automobile accidents, electromagnetic interference in hospital settings). The vast majority of these articles were published in the last decade; for instance, two articles were published in 1983, compared to 61 in October 2011 alone. However, there are only nine articles in the entire medical literature that deal directly with handheld computing in pathology, ranging in publication date from 2004 to the present.

In 2004, Ng and Yeo published a paper on using Internet search engines to find high-quality reference material for oral and maxillofacial pathology, and then caching this material on a PDA.[66] In 2006, Sharma and Kamal reported on the preliminary use of mobile phone cameras as a method of remote teaching in undergraduate pathology education.[67] In 2007, Skeate et al. reported on the use of knowledge bases (e.g., AJCC tumor staging guidelines) on PDAs, and their role in enhancing both resident learning and pathology report completion.[68] In 2008, Rutty et al. used a fingerprint scanner attached to a PDA to obtain biometric information from autopsy specimens.[69] In 2009, Bellina et al. and McLean et al. both reported on the use of mobile phone cameras to take static digital microscopy images through the microscope eyepiece, and the subsequent use of those images in telepathology.[70,71] Concurrently, Massone et al. reported on the use of mobile phones to take static dermatoscopic images and send them for teledermatopathologic consultation.[72] Finally, in 2011 Saw et al. published their experience with using SMS to report critical lab values,[73] Fontanelo et al. reported on the use of iPads for the online distribution of whole-slide image (WSI) teaching sets in low-resource countries,[74] and Collins reported on the use of iPads for the online distribution of digital textbooks for cytopathology.[75]

Based on these publications, a few trends are notable. Almost half (4/9) of these articles deal with static telepathology using mobile phone cameras. Only one paper deals with WSI. Only two papers (2/9) deal with clinical pathology subspecialties, and one of these is a pure morphology application in hematopathology. Two papers deal with medical education. One paper deals with forensic pathology. Handhelds in the pathology literature have a seven-year history, in which 2009 and 2011 were the most prolific years, with three articles each. Finally, compared to other specialties in medicine, the interest level in handheld computing in pathology has been extremely low.

There are multiple possible reasons for this. Pathology, though not unique among the medical specialties in its need to manipulate data and/or images, is unique in the sheer scale of data it must handle. It is believed that over 70% of the data in a typical electronic medical record are generated by clinical pathology laboratories.[76] In anatomic pathology, a WSI can easily be gigabytes in size, even with heavy compression; in radiology, by comparison, it is rare for a digital image to exceed a few hundred megabytes. Moreover, while radiologists work primarily with grayscale (8-bit) images with resolutions in the thousands of pixels, pathologists work with color (24- to 32-bit) images with resolutions in the tens to hundreds of thousands of pixels. This necessitates substantial computational power, high-quality, high-resolution screens with large color gamuts, and fast broadband network access, none of which were available in handheld (or even desktop) computing devices until very recently.[77]
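The scale difference described above follows from multiplying pixel count by bit depth. The dimensions below are assumed examples (a 2048 × 2048 image at 8 bits for radiology, a 100,000 × 80,000 pixel RGB slide at 24 bits for WSI), as is the 20:1 compression ratio; they are not measurements from a particular scanner or modality.

```python
def uncompressed_bytes(width_px: int, height_px: int, bits_per_pixel: int) -> int:
    """Raw size of a single image plane, in bytes."""
    return width_px * height_px * bits_per_pixel // 8

MB, GB = 10**6, 10**9

# Assumed example dimensions (see lead-in).
radiograph = uncompressed_bytes(2048, 2048, 8)    # 8-bit grayscale
wsi = uncompressed_bytes(100_000, 80_000, 24)     # 24-bit color

print(f"radiograph: {radiograph / MB:.1f} MB")
print(f"WSI:        {wsi / GB:.1f} GB")
print(f"ratio:      {wsi / radiograph:.0f}x")
# Even at an assumed 20:1 compression, the slide remains over a gigabyte.
print(f"WSI at 20:1 compression: {wsi / 20 / GB:.1f} GB")
```

Under these assumptions the slide is about 24 GB raw versus roughly 4 MB for the radiograph, and still about 1.2 GB after compression, which is consistent with the gigabyte-scale figures cited above.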

While not reported in the medical literature, there have been other innovative uses of handhelds in both anatomic and clinical pathology. Several reference laboratories offer handheld applications from which clients can order tests and see the results of previous tests.[78] There have been many initiatives to use built-in phone cameras, ranging in scope from simply taking photographs through the eyepiece to full-featured attachments that interface a phone directly with a microscope.[79] As handheld computers are increasingly used as readers of published material, textbooks and other educational material are increasingly being placed on handhelds.[80] There are various efforts to create pathology-centric image viewers for handhelds, although these tend to be vendor specific.[81]

FUTURE DIRECTIONS FOR HANDHELDS IN PATHOLOGY

We have reached a point where the hardware and software of handheld computing platforms are powerful and mature enough to be leveraged in pathology, including for WSI-based applications. It is therefore reasonable to expect an increasing number of articles on pathology and handhelds. Since almost half of the existing literature already deals with telepathology applications, we can anticipate more research in this area of digital imaging. Several areas still require further improvement, including display resolution, pixel density, and technology for displaying WSI, as well as greater attention to patient data security and to regulations governing mobile computing in health care.

Map/navigation applications on handhelds (e.g., Google Maps, Microsoft Bing Maps) behave in a manner extremely similar to WSI viewers: massive pyramidal images are broken up into tiles that are served to the viewer in real time. There have been efforts to use the publicly available map application programming interfaces (APIs) from Google and Microsoft to create WSI viewers for educational use,[82] and it is only a matter of time before this work is replicated on handhelds. The multitouch and voice recognition user interface paradigms likewise merit further exploration, especially on larger form factors like tablets.
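The pyramid shared by map viewers and WSI viewers can be described with a few lines of arithmetic. The sketch below follows the Deep Zoom convention (level 0 is a 1 × 1 pixel image, and each higher level doubles the resolution up to full size) and ignores tile overlap; the 256-pixel tile size and the 100,000 × 80,000 pixel slide are assumptions for illustration.

```python
import math

def pyramid_levels(width: int, height: int, tile: int = 256):
    """Return (level, width, height, tiles_x, tiles_y) for a power-of-two image pyramid.

    Level 0 is 1 x 1 pixel; the last level is the full-resolution image.
    Tile overlap, used by real Deep Zoom tiles, is ignored here.
    """
    max_level = math.ceil(math.log2(max(width, height)))
    levels = []
    for level in range(max_level + 1):
        scale = 2 ** (max_level - level)       # downsampling factor at this level
        w = math.ceil(width / scale)
        h = math.ceil(height / scale)
        levels.append((level, w, h, math.ceil(w / tile), math.ceil(h / tile)))
    return levels

levels = pyramid_levels(100_000, 80_000)
for level, w, h, tx, ty in levels[-3:]:
    print(f"level {level}: {w} x {h} px, {tx * ty} tiles")
```

The full-resolution level of this hypothetical slide alone holds over 120,000 tiles, but a viewer only ever requests the handful that intersect the current viewport at the current level, which is what makes map-style browsing feasible over a mobile connection.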

At least two major problems exist when considering the use of WSI on a smartphone. The first is that smartphone screens are, by design, small. Small physical size is intrinsic to the role of a smartphone and is therefore not a limitation that will disappear as technology evolves. A large screen, however, is valuable for viewing and interpreting a whole-slide image. One plausible solution would be to create smartphones whose screens can be expanded when needed and then shrunk back for normal use. Work on flexible display screens has been in progress at many institutions over the past decade. A good example of the current state of the art is the prototype announced by Sony in 2010,[83] a screen thin and flexible enough to be rolled around a pencil. If smartphones with such expandable screens were to become widely available, they would solve the problem of small screen size for WSI.

An alternative solution would be for a smartphone user to temporarily borrow a nearby large screen. Large LCD screens are a consumer item today, available in large volumes at relatively low prices. The challenge is to develop a workflow and associated software that allow nearby displays to be used from smartphones in a manner that respects HIPAA-compliant data privacy and at the same time offers a viable business model. Initial experimental steps toward such a capability were described by Wolbach et al. in 2008.[84] Here is a motivating scenario, verbatim from that paper:

Dr. Jones is at a restaurant with his family. He is contacted during dinner by his senior resident, who is having difficulty interpreting a pathology slide. Although Dr. Jones could download and view a low-resolution version of the pathology slide on his smart phone, it would be a fruitless exercise because of the tiny screen. Fortunately, the restaurant has a large display with an Internet-connected computer near the entrance. It is sometimes used by customers who are waiting for tables; at other times it displays advertising. Using Kimberley, Dr. Jones is able to temporarily install a whole-slide image viewer, download the 100 MB pathology slide from a secure web site, and view the slide at full resolution on the large display. He chooses to view privacy-sensitive information about the patient on his smart phone rather than the large display. He quickly sees the source of the resident's difficulty, helps him resolve the issue over the phone, and then returns to dinner with his family.

A second problem that impedes universal viewing of WSI from smartphones is the vendor specificity of data formats. Each scanner manufacturer typically uses a different, proprietary format for its whole-slide images, and software that can interpret these formats is a lucrative line of business for the manufacturer. Unfortunately, the marketplace fragmentation induced by proprietary formats complicates efforts to create universal software for remote viewing of whole-slide images from smartphones. As a step toward solving this problem, a vendor-neutral open-source software library for WSI called OpenSlide has been created.[85] Reading whole-slide images with standard tools or libraries is difficult because these are typically designed for images that fit into memory when uncompressed, whereas whole-slide images routinely exceed available memory, often occupying tens of gigabytes when uncompressed. OpenSlide's design resembles the device driver model found in operating systems: application-facing code is linked to vendor-specific code by way of internal constructors and function pointers. Recently, OpenSlide has been extended to support remote viewing over the Internet. The implementation uses Python bindings for OpenSlide together with the OpenSeadragon viewer.[86] OpenSeadragon, a framework-agnostic, AJAX-based Deep Zoom viewer, is derived from the code that Microsoft released as open source as part of its ASP.NET AJAX Control Toolkit in September 2009.[87] Example whole-slide images at the OpenSlide demo site[88] can be viewed today over a 3G network in a smartphone browser.
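To illustrate why tiled, multi-resolution viewing makes remote WSI practical on a smartphone, the sketch below computes the uncompressed size of a slide and the tile grid of a Deep Zoom-style image pyramid, then counts the tiles a small screen actually needs. The slide dimensions (100,000 × 80,000 pixels), 3 bytes per pixel, and 254-pixel tiles are illustrative assumptions, tile overlap is ignored, and none of this is OpenSlide's or OpenSeadragon's actual code; it is only a minimal sketch of the arithmetic.

```python
def ceil_div(a, b):
    """Integer ceiling division without floating-point rounding."""
    return -(-a // b)


def uncompressed_bytes(width, height, bytes_per_pixel=3):
    """Bytes needed to hold the full-resolution image as uncompressed 8-bit RGB."""
    return width * height * bytes_per_pixel


def deep_zoom_levels(width, height, tile_size=254):
    """Describe a Deep Zoom-style pyramid as (level, width, height, tiles_x, tiles_y).

    Level 0 is 1 x 1 pixel; each higher level doubles the resolution until the
    top level matches the full image. Tile overlap is ignored in this sketch.
    """
    max_level = (max(width, height) - 1).bit_length()  # equals ceil(log2(longest side))
    levels = []
    for level in range(max_level + 1):
        scale = 2 ** (max_level - level)
        w = ceil_div(width, scale)
        h = ceil_div(height, scale)
        levels.append((level, w, h, ceil_div(w, tile_size), ceil_div(h, tile_size)))
    return levels


def visible_tiles(level_width, level_height, x, y, view_width, view_height, tile_size=254):
    """(column, row) indices of the tiles that a viewport needs at one pyramid level.

    (x, y) is the viewport's top-left corner in that level's pixel coordinates.
    """
    first_col = max(0, x // tile_size)
    first_row = max(0, y // tile_size)
    last_col = min(ceil_div(level_width, tile_size) - 1, (x + view_width - 1) // tile_size)
    last_row = min(ceil_div(level_height, tile_size) - 1, (y + view_height - 1) // tile_size)
    return [(col, row)
            for row in range(first_row, last_row + 1)
            for col in range(first_col, last_col + 1)]


if __name__ == "__main__":
    slide_w, slide_h = 100_000, 80_000  # hypothetical slide dimensions
    levels = deep_zoom_levels(slide_w, slide_h)
    _, _, _, tiles_x, tiles_y = levels[-1]
    print(f"{uncompressed_bytes(slide_w, slide_h) / 1e9:.0f} GB uncompressed")
    print(f"{len(levels)} levels; full-resolution level has {tiles_x * tiles_y} tiles")
    print(f"{len(visible_tiles(slide_w, slide_h, 0, 0, 960, 640))} tiles fill a 960 x 640 screen")
```

For this hypothetical slide, the image would occupy about 24 GB uncompressed, and the full-resolution level alone holds 394 × 315 = 124,110 tiles, yet a 960 × 640 phone viewport needs only 12 of them. A viewer that fetches just the visible tiles at the appropriate level therefore transfers a tiny fraction of the slide at any moment, which is what makes browsing over a 3G connection feasible.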

Because handheld web browsers now use essentially the same rendering engines as desktop browsers, any advance in the use of Web 2.0 technologies in pathology will apply directly to handhelds. Furthermore, smartphones and tablets now integrate both Wi-Fi connectivity and relational database management systems (DBMSs), raising the possibility of their use as both servers and clients for full-fledged LIS and EMRs, especially in developing countries and during times of catastrophe. Tablets have a form factor and size similar to those of printed books, which makes them particularly well suited to pathology education. In developing countries, for instance, a tablet with preloaded educational content could function as a web server, streaming that content to a learning community that would otherwise lack those resources.
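As a rough illustration of the tablet-as-server idea, the sketch below stores teaching modules in an embedded SQLite database and serves them as JSON over HTTP to other devices on the same Wi-Fi network, using only Python's standard library. It assumes the tablet can run a Python interpreter; the `modules` table, the `/modules` URL scheme, the sample content, and port 8000 are all hypothetical choices made for this example, and a real deployment would also need authentication and image delivery.

```python
import json
import sqlite3
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical preloaded teaching content; a real tablet would ship a prebuilt database file.
SAMPLE_MODULES = [
    ("Introduction to H&E staining", "Overview of hematoxylin and eosin appearance."),
    ("Inflammation basics", "Acute versus chronic inflammatory infiltrates."),
]


def open_catalog(path=":memory:"):
    """Open (or create) the embedded SQLite catalog and seed it if it is empty."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS modules ("
        "id INTEGER PRIMARY KEY, title TEXT NOT NULL, body TEXT NOT NULL)"
    )
    if conn.execute("SELECT COUNT(*) FROM modules").fetchone()[0] == 0:
        conn.executemany("INSERT INTO modules (title, body) VALUES (?, ?)", SAMPLE_MODULES)
        conn.commit()
    return conn


def route(conn, path):
    """Map a request path to (HTTP status, JSON-serializable payload)."""
    parts = [p for p in path.split("?")[0].split("/") if p]
    if parts == ["modules"]:
        rows = conn.execute("SELECT id, title FROM modules ORDER BY id").fetchall()
        return 200, [{"id": i, "title": t} for i, t in rows]
    if len(parts) == 2 and parts[0] == "modules" and parts[1].isdigit():
        row = conn.execute(
            "SELECT id, title, body FROM modules WHERE id = ?", (int(parts[1]),)
        ).fetchone()
        if row is not None:
            return 200, {"id": row[0], "title": row[1], "body": row[2]}
    return 404, {"error": "not found"}


def make_handler(conn):
    """Build a request handler class bound to one SQLite connection."""
    class ModuleHandler(BaseHTTPRequestHandler):
        def do_GET(self):
            status, payload = route(conn, self.path)
            body = json.dumps(payload).encode("utf-8")
            self.send_response(status)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)
    return ModuleHandler


def serve(port=8000, db_path=":memory:"):
    """Run a single-threaded server on all interfaces; blocks until interrupted."""
    with HTTPServer(("0.0.0.0", port), make_handler(open_catalog(db_path))) as httpd:
        httpd.serve_forever()
```

Calling `serve()` on the tablet would let any browser on the same network request `/modules` for the list and `/modules/1` for a single module. The single-threaded `HTTPServer` is a deliberate simplification: Python's `sqlite3` connections are, by default, usable only from the thread that created them, so a threaded server would need one connection per thread.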

CONCLUSION

While work on handheld computing in pathology has been scarce to date, there is a great deal of potential. Handheld hardware has become increasingly fast, reliable, and ubiquitous, and software support comparable to that of desktop computing now exists. However, like WSI, handheld technology creates both opportunities and challenges. While there are clear niches for handhelds and several experimental successes with them, especially in education, telepathology, and delivery of care in developing countries, more study is required, and standards of practice and validation guidelines must be developed. Despite the current efforts of network vendors, questions related to security remain. Even so, handheld computing is likely to play a large role in pathology in the digital decade to come.

Footnotes

Available FREE in open access from: http://www.jpathinformatics.org/text.asp?2012/3/1/15/95127

REFERENCES

- 1. Yee A, Tschopp M. Medical eponyms for the PalmOS. [Last accessed on 2011 Nov 1]. Available from: http://eponyms.net/eponyms.htm.

- 2. Koshy E, Car J, Majeed A. Effectiveness of mobile-phone short message service (SMS) reminders for ophthalmology outpatient appointments: observational study. BMC Ophthalmol. 2008;8:9. doi: 10.1186/1471-2415-8-9.

- 3. Buchanan M. BlackBerry PlayBook Review. Gizmodo. 2011. Apr 13, [Last accessed on 2011 Dec 7]. Available from: http://gizmodo.com/5791814/blackberry-playbook-review-surprise .

- 4. Pegoraro R. Google's next Android serving: Ice Cream Sandwich. Discovery News. 2011. Oct 27, [Last accessed on 2011 Dec 7]. Available from: http://news.discovery.com/tech/android-ice-cream-sandwich-111027.html .

- 5. Polsson K. Chronology of handheld computers. Handheldtimeline.info. 2001–2011. [Last accessed on 2011 Nov 1]. Available from: http://handheldtimeline.info/

- 6. Reed B. A brief history of smartphones. NetworkWorld. 2010. Jun, [Last accessed on 2011 Nov 1]. Available from: http://www.pcworld.com/article/199243/a_brief_history_of_smartphones.html .

- 7. Smith B. ARM and Intel battle over the mobile chip's future. Computer. 2008;41:15–8.

- 8. Gowri V. Nvidia Tegra 2 – graphics performance update. Anandtech. 2010. Dec 20, [Last accessed on 2011 Nov 3]. Available from: http://www.anandtech.com/show/4067/nvidia-tegra-2-graphics-performance-update .

- 9. Gaudiosi J. How far can Infinity Blade II push the iOS platforms? GamePro. 2011. Nov 1, [Last accessed on 2011 Nov 3]. Available from: http://www.gamepro.com/article/previews/224504/how-far-can-infinity-blade-ii-push-the-ios-platforms/

- 10. Shih G, Lakhani P, Nagy P. Is Android or iPhone the platform for innovation in imaging informatics? J Digit Imaging. 2010;23:2–7. doi: 10.1007/s10278-009-9242-4.

- 11. Melero J. GSM, GPRS, and EDGE Performance: Evolution towards 3G/UMTS. West Sussex, New Jersey: Hoboken, John Wiley and Sons; 2003.

- 12. Lu WW, Walke BH, Xuemin S. 4G mobile communications: Toward open wireless architecture. Wirel Commun. 2004;11:4–6.

- 13. Setlur V, Kuo C, Mikelsons P. Towards designing better map interfaces for the mobile: experience by example. Proceedings of the 1st International Conference and Exhibition on Computing for Geospatial Research and Application. 2010 Jun 21-23.

- 14. Korzeniowski P. When it comes to smartphone screens, size matters? Brighthand.com. 2010. Aug 29, [Last accessed on 2011 Nov 3]. Available from: http://www.brighthand.com/default.asp?newsID=16973andnews=Google+Android+OS+Apple+iPhone .

- 15. Fisher J. Smartphone camera shoot-out: iPhone 4S vs. Droid Bionic vs. Galaxy S II vs. HTC Amaze. PCMag.com. 2011. Oct 28, [Last accessed on 2011 Nov 2]. Available from: http://www.pcmag.com/article2/0,2817,2395393,00.asp#fbid=Ifeb2ENd7rW .

- 16. Honig Z. Camera showdown: iPhone 4S vs. iPhone 4, Galaxy S II, Nokia N8 and Amaze 4G. Engadget. 2011. Oct 17, [Last accessed on 2011 Nov 2]. Available from: http://www.engadget.com/2011/10/17/camera-showdown-iphone-4s-vs-iphone-4-galaxy-s-iinokia-n8-a/

- 17. Rothman W. App showdown: Android vs. iPhone. msnbc.com. 2011. Nov 2, [Last accessed on 2011 Nov 2]. Available from: http://www.msnbc.msn.com/id/38382217/ns/technology_and_science-wireless/t/app-showdown-android-vs-iphone/

- 18. West J, Mace M. Browsing as the killer app: explaining the rapid success of Apple's iPhone. Telecomm Policy. 2010;34:270–86.

- 19. Wigdor D, Fletcher J, Morrison G. Designing user interfaces for multi-touch and gesture devices. Proceedings of the 27th International Conference on Human Factors in Computing Systems. 2009 Apr 4-8.

- 20. Castellucci SJ, MacKenzie IS. Gathering text entry metrics on Android devices. Proceedings of the 2011 Annual Conference on Human Factors in Computing Systems. 2011 May 7-12.

- 21. O’Brien L. Siri coming to Ireland but not configured to native speakers. Silicon Republic. 2011. Oct 24, [Last accessed on 2011 Nov 1]. Available from: http://www.siliconrepublic.com/digital-life/item/24180-siri-coming-to-ireland-but/

- 22. Grigsby J. WebKit: the dominant smartphone platform. Cloud Four Blog. 2009. Aug 24, [Last accessed on 2011 Nov 1]. Available from: http://www.cloudfour.com/webkit-the-dominantsmartphone-platform/

- 23. Abelson F. Android and iPhone browser wars, part 1: WebKit to the rescue. IBM DeveloperWorks. 2009. Dec 8, [Last accessed on 2011 Nov 3]. Available from: http://www.ibm.com/developerworks/opensource/library/os-androidiphone1/

- 24. Gibbs C, Huang W. Video chat on your Android phone. Google Mobile Blog. 2011. Apr 28, [Last accessed on 2011 Nov 3]. Available from: http://googlemobile.blogspot.com/2011/04/video-chat-on-your-android-phone.html .

- 25. Google. Session initiation protocol. Android Developer Guide. [Last accessed on 2011 Nov 3]. Available from: http://developer.android.com/guide/topics/network/sip.html .

- 26. SQLite Development Team. Well-known users of SQLite. sqlite.org. [Last accessed on 2011 Nov 2]. Available from: http://sqlite.org/famous.html .

- 27. SQLite Development Team. Features of SQLite. sqlite.org. [Last accessed on 2011 Nov 2]. Available from: http://sqlite.org/features.html .

- 28. DiDonato LJ. Use of the handheld programmable calculator in the preparation of TPN solutions. Hosp Pharm. 1983;18:526–8, 531–3.

- 29. Messori A, Donati-Cori G, Tendi E. Iterative least-squares fitting programs in pharmacokinetics for a programmable handheld calculator. Am J Hosp Pharm. 1983;40:1673–84.

- 30. Embi PJ. Information at hand: using handheld computers in medicine. Cleve Clin J Med. 2001;68:840–2, 845–6, 848–9 passim. doi: 10.3949/ccjm.68.10.840.

- 31. Roller JI, Zimnik PR, Goeringer F, Flax RA, Whitecotton B. Application of personal digital assistant technology in clinical obstetrics and gynecology: a serial appraisal. 33rd Annual Meeting of the Armed Forces District of the American College of Obstetricians and Gynecologists. 1994. Nov 14-18, [Last accessed on 2011 Nov 1]. Available from: http://myapplenewton.blogspot.com/2010/06/militaryhospitals-trial-apple-newton.html .

- 32. Yacub A, Chambers C, Bey C. The NewtonScript Programming Language. Cupertino: Apple Computer; 1996. [Last accessed on 2011 Nov 1]. Available from: http://www.newted.org/download/manuals/NewtonScriptProgramLanguage.pdf .

- 33. Fischer S, Stewart TE, Mehta S, Wax R, Lapinsky SE. Handheld computing in medicine. J Am Med Inform Assoc. 2003;10:139–49. doi: 10.1197/jamia.M1180.

- 34. Rothschild JM, Lee TH, Bae T, Bates DW. Clinician use of a palmtop drug reference guide. J Am Med Inform Assoc. 2002;9:223–9. doi: 10.1197/jamia.M1001.

- 35. Fox GN, Moawad N. UpToDate: a comprehensive clinical database. J Fam Pract. 2003;52:706–10.

- 36. Barret JR, Strayer SM, Schubart JR. Information needs of residents during inpatient and outpatient rotations: identifying effective personal digital assistant applications. AMIA Annu Symp Proc. 2003:784.

- 37. Bergeron BP. Pen-based computing: applications in clinical medicine. J Med Pract Manage. 2000;16:148–50.

- 38. Ebell MH, Gaspar DL, Kurana S. Family physicians’ preferences for computerized decision-support hardware and software. J Fam Pract. 1997;45:136–41.

- 39. Fischer S, Lapinsky SE, Weshler J, Howard F, Rotstein LE, Cohen Z, et al. Surgical procedure logging with use of a hand-held computer. Can J Surg. 2002;45:345–50.

- 40. Rao G. Introduction of handheld computing to a family practice residency program. J Am Board Fam Pract. 2002;15:118–22.

- 41. Gupta PC. Survey of sociodemographic characteristics of tobacco use among 99,598 individuals in Bombay, India using handheld computers. Tob Control. 1996;5:114–20. doi: 10.1136/tc.5.2.114.

- 42. Andrade R, Wangenheim A, Bortoluzzi MK. Wireless and PDA: a novel strategy to access DICOM-compliant medical data on mobile devices. Int J Med Inform. 2003;71:157–63. doi: 10.1016/s1386-5056(03)00093-5.

- 43. DeLeo G, Krishna S, Balas EA, Maglaveras N, Boren SA, Beltrame F, et al. WEB-WAP based telecare. Proc AMIA Symp. 2002:200–4.

- 44. Wallace D, Durrant C. Mobile messaging: emergency image transfer. Ann R Coll Surg Engl. 2005;87:72–3. doi: 10.1308/1478708051270.

- 45. Kondo Y. Medical image transfer in emergency care utilizing Internet and mobile phone. Nihon Hoshasen Gijutsu Gakkai Zasshi. 2002;58:1393–401. doi: 10.6009/jjrt.kj00003111390.

- 46. Carroll AE, Saluja S, Tarczy-Hornoch P. Development of a Personal Digital Assistant based client-server NICU patient data and charting system. Proc AMIA Symp. 2001:100–4.

- 47. Downer SR, Meara JG, Da Costa AC, Seturaman K. SMS text messaging improves outpatient attendance. Aust Health Rev. 2006;30:389–96. doi: 10.1071/ah060389.

- 48. Obermayer JL, Riley WT, Asif O, Jean-Mary J. College smoking cessation using cell phone text messaging. J Am Coll Health. 2004;53:71–8. doi: 10.3200/JACH.53.2.71-78.

- 49. Rodgers A, Corbett T, Bramley D, Riddell T, Wills M, Lin RB, et al. Do u smoke after txt? Results of a randomized trial of smoking cessation using mobile phone text messaging. Tob Control. 2005;14:255–61. doi: 10.1136/tc.2005.011577.

- 50. Ferrer-Roca O, Cardenas A, Diaz-Cardama A, Pulido P. Mobile phone text messaging in the management of diabetes. J Telemed Telecare. 2004;10:282–5. doi: 10.1258/1357633042026341.

- 51. van Baar JD, Joosten H, Car J, Freeman GK, Partridge MR, van Weel C, et al. Understanding reasons for asthma outpatient (non)-attendance and exploring the role of telephone and e-consulting in facilitating access to care: exploratory qualitative study. Qual Saf Health Care. 2006;15:191–5. doi: 10.1136/qshc.2004.013342.

- 52. Vilella A, Bayas JM, Diaz MT, Guinovart C, Diez C, Simo D, et al. The role of mobile phones in improving vaccination rates in travelers. Prev Med. 2004;38:503–9. doi: 10.1016/j.ypmed.2003.12.005.

- 53. comScore. comScore reports May 2011 U.S. mobile subscriber market share. comScore.com. 2011. Jul 5, [Last accessed on 2011 Nov 3]. Available from: http://www.comscore.com/Press_Events/Press_Releases/2011/7/comScore_Reports_May_2011_U.S._Mobile_Subscriber_Market_Share .

- 54. Armstrong DG, Giovinco N, Mills JL, Rogers LC. FaceTime for Physicians: Using Real Time Mobile Phone-Based Videoconferencing to Augment Diagnosis and Care in Telemedicine. Eplasty. 2011;11:e23.

- 55. Trankler U, Hagen O, Horsch A. Video quality of 3G videophones for telephone cardiopulmonary resuscitation. J Telemed Telecare. 2008;14:396–400. doi: 10.1258/jtt.2008.007017.

- 56. Hauck M, Bauer A, Voss F, Weretka S, Katus HA, Becker R. “Home monitoring” for early detection of implantable cardioverter-defibrillator failure: A single-center prospective observational study. Clin Res Cardiol. 2009;98:19–24. doi: 10.1007/s00392-008-0712-3.

- 57. Farber N, Haik J, Liran A, Weissman O, Winkler E. Third generation cellular multimedia teleconsultations in plastic surgery. J Telemed Telecare. 2011;17:199–202. doi: 10.1258/jtt.2010.100604.

- 58. Kroemer S, Fruhauf J, Campbell TM, Massone C, Schwantzer G, Soyer HP, et al. Mobile teledermatology for skin tumour screening: diagnostic accuracy of clinical and dermoscopic image tele-evaluation using cellular phones. Br J Dermatol. 2011;164:973–9. doi: 10.1111/j.1365-2133.2011.10208.x.

- 59. Geneczko JT Jr. The cell phone camera enters the gastroenterologist's office. Am J Gastroenterol. 2008;103:1840–1. doi: 10.1111/j.1572-0241.2008.01959_9.x.

- 60. Siegmund CJ, Niamat J, Avery CM. Photographic documentation with a mobile phone camera. Br J Oral Maxillofac Surg. 2008;46:109. doi: 10.1016/j.bjoms.2007.08.026.

- 61. Filip M, Linzer P, Samal F, Tesar J, Herzig R, Skoloudik D. Medical consultations and the sharing of medical images involving spinal injury over mobile phones. Am J Emerg Med. 2011. doi: 10.1016/j.ajem.2011.05.007. [In Press]

- 62. Doukas C, Pliakas T, Maglogiannis I. Mobile healthcare information management utilizing Cloud Computing and Android OS. Conf Proc IEEE Eng Med Biol Soc. 2010;2010:1037–40. doi: 10.1109/IEMBS.2010.5628061.

- 63. Schreiber BE, Fukuta J, Gordon F. Live lecture versus video podcast in undergraduate medical education: A randomised controlled trial. BMC Med Educ. 2010;10:68. doi: 10.1186/1472-6920-10-68.

- 64. Rubin DL, Rodriguez C, Shah P, Beaulieu C. iPad: Semantic annotation and markup of radiology images. AMIA Annu Symp Proc. 2008:626–30.

- 65. Verizon. Leveraging the power of a Health Information Exchange. verizon.com. [Last accessed on 2011 Nov 3]. Available from: http://www.verizonbusiness.com/solutions/healthcare/info/hie.xml .

- 66. Ng CH, Yeo JF. The role of Internet and personal digital assistant in oral and maxillofacial pathology. Ann Acad Med Singapore. 2004;33(4 Suppl):50–2.

- 67. Sharma P, Kamal V. A novel application of mobile phone cameras in undergraduate pathology education. Med Teach. 2006;28:745–6.

- 68. Skeate RC, Wahi MM, Jessurun J, Connelly DP. Personal digital assistant-enabled report content knowledgebase results in more complete pathology reports and enhances resident learning. Hum Pathol. 2007;38:1727–35. doi: 10.1016/j.humpath.2007.05.019.

- 69. Rutty GN, Stringer K, Turk EE. Electronic fingerprinting of the dead. Int J Legal Med. 2008;122:77–80. doi: 10.1007/s00414-007-0158-6.

- 70. Bellina L, Missoni E. Mobile cell-phones (M-phones) in telemicroscopy: increasing connectivity of isolated laboratories. Diagn Pathol. 2009;4:19. doi: 10.1186/1746-1596-4-19.

- 71. McLean R, Jury C, Bazeos A, Lewis SM. Application of camera phones in telehaematology. J Telemed Telecare. 2009;15:339–43. doi: 10.1258/jtt.2009.090114.

- 72. Massone C, Brunasso AM, Campbell TM, Soyer HP. Mobile teledermoscopy--melanoma diagnosis by one click? Semin Cutan Med Surg. 2009;28:203–5. doi: 10.1016/j.sder.2009.06.002.

- 73. Saw S, Loh TP, Ang SB, Yip JW, Sethi SK. Meeting regulatory requirements by the use of cell phone text message notification with autoescalation and loop closure for reporting of critical laboratory results. Am J Clin Pathol. 2011;136:30–4. doi: 10.1309/AJCPUZ53XZWQFYIS.

- 74. Fontelo P, Faustorilla J, Gavino A, Marcelo A. Digital pathology – implementation challenges in low resource countries. Anal Cell Pathol (Amst). 2011. doi: 10.3233/ACP-2011-0024. [In Press]

- 75. Collins B. iPad and cytopathology: content creation and delivery. CytoJournal. 2011;8:16.

- 76. Pantanowitz L, Henricks WH, Beckwith BA. Medical laboratory informatics. Clin Lab Med. 2007;27:823–43. doi: 10.1016/j.cll.2007.07.011.

- 77. Pantanowitz L, Valenstein PN, Evans AJ, Kaplan KJ, Pfeifer JD, Wilbur DC, et al. Review of the current state of whole slide imaging in pathology. J Pathol Inform. 2011;2:36. doi: 10.4103/2153-3539.83746.

- 78. ARUP. Arup Consult on the Go. ARUPConsult.com. [Last accessed on 2011 Dec 7]. Available from: http://www.arupconsult.com/GeneralContent/MobileWebsite.html .

- 79. Breslauer DN, Maamari RN, Switz NA, Lam WA, Fletcher DA. Mobile phone based clinical microscopy for global health applications. PLoS ONE. 2009;4:e6320. doi: 10.1371/journal.pone.0006320.

- 80. Elsevier. Robbins Pathology Flashcards. [Last accessed on 2011 Dec 7]. Available from: http://itunes.apple.com/us/app/robbins-pathology-flash-cards/id367031323?mt=8 .

- 81. Leica Microsystems. Leica Microsystems launches free digital pathology app for iPad and iPhone. leica-microsystems.com. 2011. Jun 28, [Last accessed on 2011 Dec 7]. Available from: http://www.leica-microsystems.com/news-media/press-releases/pressreleases-details/article/leica-microsystems-launches-free-digital-pathologyapp-for-ipad-and-iphone/

- 82. Triola MM, Holloway WJ. Enhanced virtual microscopy for collaborative education. BMC Med Educ. 2011;11:4. doi: 10.1186/1472-6920-11-4.

- 83. Sony. Sony develops a “rollable” OTFT-driven OLED display that can wrap around a pencil. sony.net. 2010. May 26, [Last accessed on 2012 Jan 12]. Available from: http://www.sony.net/SonyInfo/News/Press/201005/10-070E/

- 84. Wolbach A, Harkes J, Chellappa S, Satyanarayanan M. Transient customization of mobile computing infrastructure. Breckenridge, CO: Proceedings of MobiVirt 2008: the Workshop on Virtualization in Mobile Computing; 2008.

- 85. Goode A, Satyanarayanan M. A vendor-neutral library and viewer for whole-slide images. Tech Rep CMU-CS-08-136. Pittsburgh, PA: Computer Science Department, Carnegie Mellon University; 2008.

- 86. OpenSlide. OpenSlide homepage. openslide.org. 2011. Dec 12, [Last accessed on 2012 Jan 12]. Available from: http://www.openslide.org/

- 87. Microsoft. Seadragon AJAX sample. asp.net. 2009. Sep, [Last accessed on 2012 Jan 12]. Available from: http://www.asp.net/ajaxLibrary/AjaxControlToolkitSampleSite/Seadragon/Seadragon.aspx .

- 88. OpenSlide. OpenSlide demo. openslide.org. 2011. Dec 12, [Last accessed on 2012 Jan 12]. Available from: http://www.openslide.org/demo .