Abstract

Making accurate predictions about what may happen in the environment requires analogies between perceptual input and associations in memory. These elements of predictions are based on cortical representations, but little is known about how these processes can be enhanced by experience and training. On the other hand, studies on perceptual expertise have revealed that the acquisition of expertise leads to strengthened associative processing among features or objects, suggesting that predictions and expertise may be tightly connected. Here we review the behavioral and neural findings regarding the mechanisms involving prediction and expert processing, and highlight important possible overlaps between them. Future investigation should examine the relations among perception, memory and prediction skills as a function of expertise. The knowledge gained by this line of research will have implications for visual cognition research, and will advance our understanding of how the human brain can improve its ability to predict by learning from experience.

Keywords: Expectation, Anticipation, Learning, Perception, Memory, Top–down processing

1. Introduction

When walking on the street, we are not surprised to see cars, parking meters, or traffic lights. If we catch a glimpse of something that appears on the sidewalk and quickly disappears into the bushes, we may think that it could be a bird, a squirrel, or a cat, depending on its size and shape. However, we would be baffled if we instead saw something unpredicted, such as a goat or an anchor, on the street, because it would be completely out of context. While external information from the world is continuously extracted and processed by various sensory modalities, the human brain readily generates top–down predictions¹ based on associations in memory formed from previous experience to make sense of and interact with the environment (Bar, 2007). Various predictions may be formed continuously. For instance, when we see a parking meter, we predict that a car is likely to be next to it. Or we predict that a blurry impression is a harmless squirrel. Recent work on visual prediction has suggested that predictions are formed rapidly and draw on associative connections stored in long-term memory (e.g., Bar, 2004, 2009; Gilbert and Wilson, 2007; Schacter et al., 2007, 2008).

Strong associative activations and fast processing speed are also characteristics of expert processing (e.g., Chase and Ericsson, 1981; Freyhof et al., 1992; Richler et al., 2009). For instance, while most people may recognize a fast-approaching car merely as a ‘silver car’, a car expert may recognize it instantaneously as the newest model of Jaguar XF, know what engine it may have, and distinguish it from other comparable models. In this review, we highlight the possible relations between the processes responsible for prediction and the processes involved in expert processing. We focus our discussion on recent behavioral and imaging findings on visual prediction and on visual expertise, as theories in these two areas have been elaborated and studied especially in the last decade (e.g., Bar, 2003, 2004; Gauthier et al., 2000a; Wong et al., 2009a). Merging the findings from these two literatures offers new insights on the role of associative processing in a variety of cognitive processes that are central to our mental lives, such as recognition, learning, memory and prediction.

2. Generating visual predictions based on analogies and associations

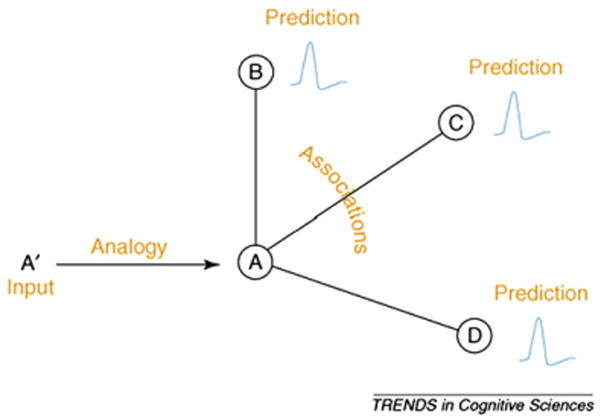

Making rapid and accurate predictions is beneficial in many situations and can facilitate perception and action. To do so, one needs to acquire knowledge about various attributes and relations of objects, people, and events in the world. Such knowledge, stored in memory, constitutes the basis of recognition and prediction for both familiar and unfamiliar instances (e.g., recognizing your own cat vs. a stray cat). While interacting with the environment, the human brain not only makes use of incoming perceptual information, but also compares this input with representations in memory, and generates specific and testable predictions (e.g., ‘is this my phone?’). To understand how the brain generates proactive predictions to guide cognition and behavior, Bar (2007, 2009) proposed a unified theory that links the study of analogies, associations and predictions, which have previously been studied independently (e.g., Bar, 2003, 2004; Gentner, 1983; Holyoak and Thagard, 1997; Minsky, 1975; Schank, 1975). The general idea of the proactive brain framework is that predictive processes involve finding an analogy between an input (e.g., a sofa) and a similar representation in memory (e.g., a general representation of similar sofas you saw before), which activates associated representations (e.g., a coffee table, pillows) related to the particular analogy. The co-activation of associated representations provides specific, on-line predictions on what other instances may be of high relevance in the particular context (see Fig. 1).

Fig. 1.

A schematic depiction of prediction generation via analogies and associations, as proposed in Bar (2007). An input (A′) activates an analogous representation in memory (A), which leads to the co-activation of associated representations (B, C, D) to generate predictions. The input may either be an external, sensory input, or an internally generated thought. Moreover, the input can be of different degrees of complexity, which may result in predictions that are of various levels of elaboration, encompassing the range from perceptual to executive predictions.

Copyright © by Elsevier Ltd. Reproduced with permission.
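The analogy-then-association scheme in Fig. 1 can be made concrete with a small toy sketch. This is only an illustration under strong simplifying assumptions, not an implementation of the proactive brain framework: the `MEMORY` table, the coarse feature labels, and the use of Jaccard overlap as the similarity measure are all hypothetical choices made for this example.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical toy memory (an assumption for illustration only): each stored
# representation A has a set of coarse features and a list of associated
# representations (B, C, D), standing in for a context frame.
MEMORY: Dict[str, Tuple[Set[str], List[str]]] = {
    "sofa": ({"wide", "low", "soft", "indoor"}, ["coffee table", "pillows", "TV set"]),
    "parking meter": ({"tall", "thin", "metal", "outdoor"}, ["car", "sidewalk"]),
    "oven": ({"boxy", "metal", "indoor"}, ["microwave", "stove"]),
}


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Overlap between two feature sets (assumed similarity measure)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def predict(input_features: Set[str]) -> Tuple[str, List[str]]:
    """Find the stored representation most analogous to the input (A' -> A),
    then return it together with its associated representations."""
    best = max(MEMORY, key=lambda name: jaccard(input_features, MEMORY[name][0]))
    return best, MEMORY[best][1]


if __name__ == "__main__":
    # A coarse, partial input that resembles a sofa.
    analogy, predicted = predict({"wide", "low", "soft"})
    print(analogy, predicted)
```

In this sketch a partial input is enough to select an analogous representation, and the predictions are simply whatever that representation is linked to in memory; richer or stronger links would yield more elaborate predictions.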

Since objects in the world rarely appear in isolation but rather appear in typical configurations with other objects that share the same context, knowledge about associations among objects becomes highly useful to understand what to expect in various situations. Objects can be related to each other or to the environment in numerous ways: for instance, a microwave and an oven are kitchen appliances; a cell phone or a laptop may be carried by a businessperson in an office, a train station, or a coffee shop. It has been proposed that stored memory representations of objects are clustered and linked depending on the relatedness of the objects. These clusters of related representations can be referred to as ‘context frames’ (e.g., Bar, 2004; Bar and Ullman, 1996; Barsalou, 1992; Friedman, 1979; Mandler and Johnson, 1976; Palmer, 1975; Schank, 1975). In such representations of context, certain elements are generally expected to appear (e.g., a sofa and a TV set in a living room, or a ball and a hoop in a basketball game). Context frames can be formed from real-world experience via implicit observations or explicit learning. For instance, implicit learning can occur for covariance between shapes, syllables, tones, or even more abstract, conceptual categories that appear in a predictable arrangement (Behrmann et al., 2005; Brady and Oliva, 2008; Chun and Jiang, 1998; Fiser and Aslin, 2001; Saffran et al., 1996; Saffran et al., 1999; Turk-Browne et al., 2005). In addition, meaningful relations about objects, people, or events can also be learned explicitly (e.g., a bottle is for holding water; Superman and Clark Kent are the same person).

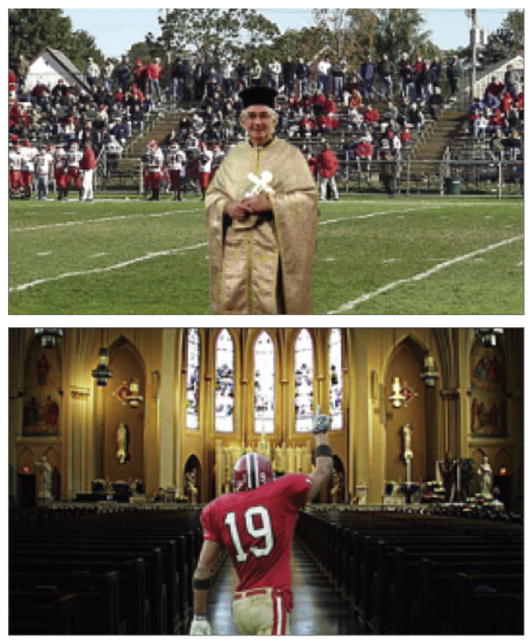

Associative processing is quickly triggered merely by looking at an everyday object (e.g., a chair; Aminoff et al., 2007; Bar and Aminoff, 2003; Bar et al., 2007a), and such associative processing is critical for visual recognition and prediction. The efficiency of predicting the occurrence of an item depends on the consistency between bottom–up sensory input and stored associative representations in memory. When seeing a salient item in a picture (e.g., a football player), associative processing may lead an observer to expect a particular context (e.g., a football field) and other objects in the scene (e.g., banners, cheering fans), as all these are predictable within the same context frame. But if an unexpected item occurs in a given context instead (e.g., a clergyman in a football field, see Fig. 2), recognition of either the item or context becomes hindered, presumably because the incongruent associations do not match our predictions or expectations (Davenport, 2007; Davenport and Potter, 2004; Joubert et al., 2007; Mack and Palmeri, 2010; Palmer, 1975; see also Biederman et al., 1982). These findings suggest that context frames are activated to generate predictions by seeing either a familiar object or context (Bar and Ullman, 1996), and that observers are unable to selectively attend to an item while ignoring the context (Davenport and Potter, 2004; Joubert et al., 2007; see also Mack and Palmeri, 2010). Notably, the ultra-rapid detection of inconsistency between objects and scenes suggests that associative predictions may be generated instinctively (e.g., with presentation times as brief as 26 ms; Joubert et al., 2007; Mack and Palmeri, 2010).

Fig. 2.

Examples of inconsistent pairing of a salient item and a scene, used in Davenport and Potter (2004). Top: A clergyman in a football field. Bottom: A football player in a church.

Copyright © by American Psychological Society. Reproduced with permission.

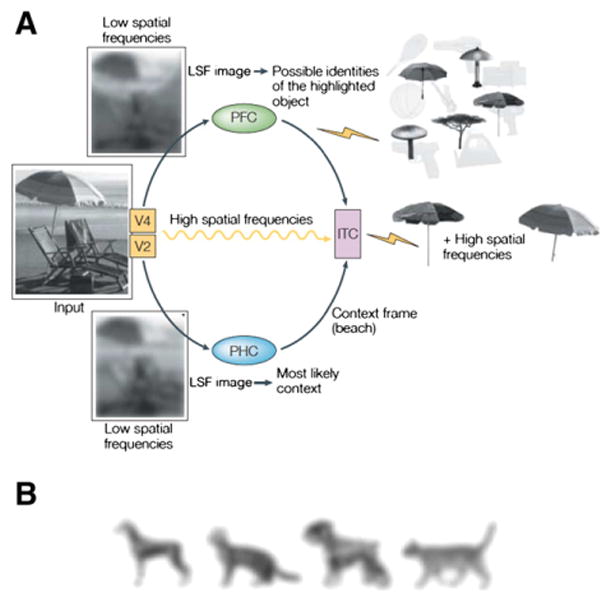

Predictions propagate from top–down mechanisms to influence bottom–up processes. The information extracted from bottom–up inputs to support the top–down processes may be minimal, such that the information can be analyzed and interpreted promptly. Bar (2003, 2004) proposed that only limited sensory information is necessary to trigger predictive processes (see Fig. 3A). Namely, partial information about objects in a visual context conveyed by low spatial frequencies (LSF) is extracted and processed rapidly relative to fine details carried by high spatial frequencies (HSF). The LSF information is then matched to representations in memory that may be ‘averaged’ from similar instances previously encountered. The general representations of objects or scenes, or ‘gist’ (Oliva and Torralba, 2007; Torralba and Oliva, 2003), reduce the number of possible identities for attended objects (e.g., umbrellas, lamps), and are often adequate for matching objects at the basic level for everyday recognition (Rosch et al., 1976), since visual features of objects in the same basic-level category (e.g., dogs) are often similar to each other (e.g., compared to cats, Fig. 3B). In sum, by linking the general impression of a new input with the most similar representation in memory, top–down mechanisms can quickly generate predictions that facilitate bottom–up processes in object and context recognition (Aminoff et al., 2008; Bar et al., 2006a; Kveraga et al., 2007).

Fig. 3.

A. The model for the top–down contextual facilitation of object recognition proposed in Bar (2004). According to this model, low spatial frequencies (LSF) from an input are extracted rapidly to activate an association set with possible interpretations of a target object (e.g., the target object may be an umbrella or a lamp, but it is certainly not a lighthouse or a dog). The later arrival of high spatial frequencies determines the exact representation of the specific exemplar (e.g., the target object is indeed a beach umbrella). For simplicity, only the relevant cortical connections and flow directions of the proposed mechanisms are illustrated here. ITC, inferior temporal cortex; LSF, low spatial frequencies; PFC, prefrontal cortex; PHC, parahippocampal cortex; V2 and V4, early visual areas. ‘Lightning strike’ symbols represent activation of representations. B. Individual members of basic-level categories tend to look similar to each other and look different from members in other categories (e.g., dog vs. cat). LSF representations are often sufficient for distinguishing basic-level object categories.

Copyrights © Nature Publishing Group and MIT Press. Adapted with permission.

3. Associative processes in perceptual expertise

Observers appear to be able to generate associations and predictions reliably and possibly automatically, and this ability is likely acquired through extensive experience while interacting with the world. Just how much our ability to produce helpful predictions is enhanced by experience and further training is an important open question. Every person possesses some level of expertise in many domains, but enthusiasts of various domains (e.g., birdwatchers, chess players, musicians, stamp collectors) possess much broader and deeper visual and non-visual knowledge that is associated with items in their domains of expertise, compared with average people or novices (e.g., Tanaka and Taylor, 1991). Here we synthesize evidence from the existing literature that indicates that experts show stronger associative processing due to enhanced visual and non-visual knowledge and are faster and more accurate in making use of analogies and generating predictions about their specific domains of expertise. Note that associative or predictive processes can be observed in most people. For instance, color information (e.g., yellow) may be strongly associated with certain everyday objects (e.g., banana). Such color–shape associations can influence perception of the actual color of the objects (Hansen et al., 2006; Witzel et al., 2011). Nonetheless, a key question is whether such processes can be enhanced through training. Our focus here is on expertise in visual perception, although the influence of enhanced associative knowledge on predictions is likely general to various areas of non-visual expertise (e.g., athletes; see Ericsson and Lehmann, 1996; Ericsson and Smith, 1991).

Not surprisingly, perceptual expertise² leads to improvements over novices in many visual perception or memory tasks. At a glance, advanced birdwatchers or car experts excel at identifying individual objects in their respective areas of expertise, regardless of whether the task involves classification at the subordinate level (e.g., ‘black-winged snowfinch’ or ‘Honda Civic 2004’) or the basic level (e.g., ‘bird’ or ‘car’) (Mack et al., 2009; Tanaka, 2001; Tanaka and Taylor, 1991; Wong et al., 2009a); grandmasters of chess have larger visual and memory capacity for and greater search efficiency with meaningful chess configurations compared with amateur or novice players (e.g., Brockmole et al., 2008; Chase and Simon, 1973). In recent years, the majority of studies on perceptual expertise have focused on the role of visual or shape properties in expert recognition and memory (e.g., Gauthier and Tarr, 1997; Gauthier et al., 2000a,b, 2003; Grill-Spector et al., 2004; Harel et al., 2010; Herzmann and Curran, 2011; Op de Beeck et al., 2006, 2008; Rhodes et al., 2004; Rossion et al., 2004; Rossion et al., 2007; Scott et al., 2006, 2008; Wong et al., 2009a,b). We suggest that both visual and non-visual knowledge can play an important role in associative processing. Here we will first describe the studies that have revealed strong and rigid perceptual associations for objects that are developed during the acquisition of perceptual expertise. These findings indicate that some aspects of perceptual expertise effects may resemble the object–context associative effect in studies on prediction discussed above.

Rigid associative relations among different features and items can be learned and expected as a result of expertise training. Since associations among features or items are particularly strongly established in experts and are retrieved automatically (cf. Schneider and Shiffrin, 1977; Shiffrin and Schneider, 1977), experts may find it impossible to ignore associated visual information, even when such information is task-irrelevant. For instance, expert readers appear to automatically extract contextual information (e.g., font, size) when reading text, such that a sequence of letters presented in the same font is recognized faster than one presented in different fonts (e.g., ‘prediction’ set in a single font vs. the same word set in mixed fonts; Gauthier et al., 2006; Mayall et al., 1997; Sanocki, 1987, 1988). Such contextual information may be completely irrelevant to the task at hand (i.e., to identify letters and words rather than the fonts). Although readers who are fluent in a language may take such contextual regularity for granted, novice readers (e.g., non-Chinese readers viewing Chinese characters) are not affected in the same manner (Gauthier et al., 2006). Likewise, impoverished or incomplete visual context may also be sufficient for experts to activate relevant associations to facilitate recognition. For instance, a briefly presented (50 ms) prime word, which consists of letters and digits (e.g., M4T3R14L) or letters and symbols (e.g., MΔT€R1ΔL) that are similar to an actual word (e.g., MATERIAL), facilitates recognition of a target word almost as much as when the prime and target are identical words (Perea et al., 2008), indicating powerful efficiency and expectancy in expert visual word recognition and prediction.

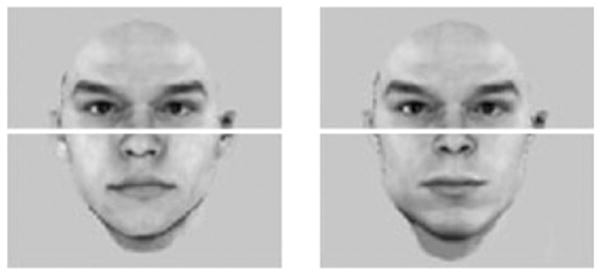

Another example of rigid visual associative processing can be found in face perception. Most adults are experts in face recognition. Although all faces are homogeneous in their configuration and features (i.e., all faces have two eyes, a nose and a mouth), we are able to discriminate and identify faces at the subordinate level (e.g., Brad Pitt) as quickly as at the basic level (e.g., male), indicating perceptual expertise (Tanaka, 2001). A characteristic of face perception is holistic processing, in which all features in a face are processed as a whole (e.g., Farah et al., 1998; Tanaka and Farah, 1993; Young et al., 1987). Since facial features always co-occur and co-vary in a meaningful way (e.g., Brad Pitt’s eyes always appear with his nose and mouth), it appears natural that strong associations among facial features are developed and strengthened for face recognition expertise. For instance, seeing a big smile on someone’s face leads to the prediction or expectation of seeing dimples or scrunched eyes on the same face. In other words, it is almost impossible to selectively process one feature of a face without taking in other associated facial information. This failure of selective attention has been shown in the composite paradigm (see Fig. 4; Cheung et al., 2008; Farah et al., 1998; Hole, 1994; Richler et al., 2011; Young et al., 1987), where the top half of a face (e.g., Brad Pitt) is combined with the bottom half of another face (e.g., Matt Damon) to form a composite. Observers have great difficulty in identifying the target half of the composite (e.g., top) while ignoring the task-irrelevant half (e.g., bottom), because the representations of the facial features ‘fuse’ together within a face context.

Fig. 4.

Sample composite faces, made from combining different bottom halves with the same top half, appear to be two completely different faces. It is difficult to selectively attend to the top halves only and recognize that the top halves of the two composite faces are identical, presumably because the strong associative nature of face processing leads to ‘fusion’ of facial features within a face context, and to the expectation that these faces are of different people (as from our extensive experience, different features can only be found on different faces). The face halves were taken from the Max Planck Institute face database.

This holistic effect arises from rigid associative processing of features and happens at a glance (≤ 50 ms presentation time, Richler et al., 2009). Intriguingly, the holistic effect strikingly resembles the object–scene consistency effect (e.g., Davenport and Potter, 2004, cf. Figs. 2 and 4), indicating failures of selective attention to a subset of information in a context. Note that the holistic effect appears to be experience-based and is associated with specific computations (e.g., subordinate-level recognition) with objects in a homogeneous category (e.g., faces, cars, birds), as the holistic effect is reduced for objects with which we have less experience (e.g., faces from an unfamiliar race; Michel et al., 2006; Tanaka et al., 2004). Furthermore, holistic processing is not unique to faces, and has also been observed in experts of non-face categories such as cars (Bukach et al., 2010; Gauthier and Tarr, 2002), novel objects (Gauthier et al., 2003; Wong et al., 2009a), English words (Wong et al., 2011), musical notations (Wong and Gauthier, 2010a), fingerprints (Busey and Vanderkolk, 2005) and chess game boards (Boggan et al., in press). This raises the possibility that the object–context association effect (e.g., Davenport and Potter, 2004) can also be strengthened by additional yet specific practice.

4. Neural mechanisms for visual prediction and perceptual expertise

Although associative processing appears critical in both visual prediction and perceptual expertise, past studies from these two lines of research have asked distinct sets of questions. For instance, most studies in visual prediction are concerned with recognition of everyday objects and scenes, while most perceptual expertise research has emphasized rapid subordinate-level processing of objects in only one or a few categories. Investigation of the underlying neural mechanisms for these processes has also revealed different emphases: associative prediction research has focused on a large-scale brain network that coordinates top–down processes, whereas perceptual expertise studies have mainly concentrated on local regions in the ventral visual stream. Here we describe the main findings on the neural correlates of predictive and expert processing separately, and highlight the possible overlaps between them that likely merit further attention from both fields.

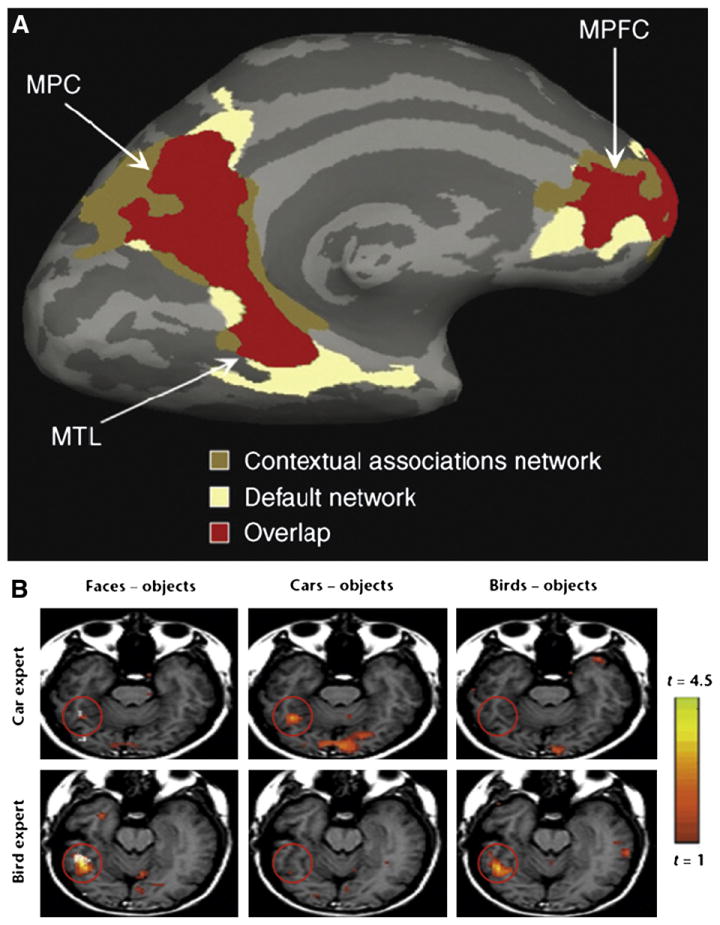

Recent studies using human functional neuroimaging have revealed a cortical network that mediates context-based associative predictions, which includes structures in three main regions: the medial temporal lobe (MTL), the medial parietal cortex (MPC) and the medial prefrontal cortex (MPFC). Note that this network largely overlaps with the ‘default mode’ network, which is active when observers are not engaged in goal-directed behavior (Raichle et al., 2001; Buckner et al., 2008). This overlap implies that associative processing comprises a large part of the functions mediated by the brain’s default mode (Bar et al., 2007b; see Fig. 5). The associative prediction network has been defined by contrasting everyday objects that are strongly associated with a specific context (e.g., a beach ball) with objects that are weakly tied to any specific context (e.g., a camera) (Aminoff et al., 2007, 2008; Bar and Aminoff, 2003; Bar, 2004). Robust context association effects are observed in the parahippocampal cortex (PHC) in the MTL and the retrosplenial complex (RSC) in the MPC. The PHC and RSC have previously been thought to be engaged in place processing (e.g., Aguirre et al., 1996; Epstein and Kanwisher, 1998) and in episodic and autobiographical memory (e.g., Ranganath et al., 2004; Svoboda et al., 2006; Wagner et al., 1998), which may be reasonable due to the associative nature of these processes (e.g., Bar and Aminoff, 2003; Bar et al., 2008). While the MTL, MPC, and MPFC may be involved in different kinds of associations, additional research is required to distinguish these relations. It is possible that the MTL is responsible for simple or unique associations (e.g., Schacter, 1987; Eichenbaum, 2000; Ranganath et al., 2004). Specifically, the PHC appears to represent stimulus-specific context and associations, which are sensitive to specific appearance (e.g., my office, Aminoff et al., 2008).
In contrast, the RSC in the MPC represents prototypical, generic information about associative context frames (e.g., an office, Aminoff et al., 2008). The representations and processes from PHC and RSC presumably interact with and provide the basis for the predictive processes in the MPFC, while the MPFC may be involved in the processing of deliberative or conditional associations (Bar et al., 2007b). In particular, the orbitofrontal cortex (OFC), which is a multimodal association region in the MPFC (Barbas, 2000; Kringelbach and Rolls, 2004) that receives fast projection of visual input via the magnocellular pathway (Kveraga et al., 2007), may become increasingly important in generating top–down influences to predict possible object identities when the visual input is relatively coarse (Bar et al., 2006a). These different kinds of top–down processes then facilitate modality-specific cortex such as the fusiform gyrus for object recognition (Bar et al., 2006a; Kveraga et al., 2011).

Fig. 5.

Different emphases in the investigation of neural mechanisms for associative prediction vs. perceptual expertise. A: Medial view of the typical contextual association network (e.g., Bar and Aminoff, 2003) and its overlap with the default network (e.g., Raichle et al., 2001). The context network activations are obtained from the contrast between strongly contextually associative objects (e.g., a tennis racket) and weakly contextually associative objects (e.g., a jacket). The default network regions are those that are more active during fixation rest than during task performance. MPC, medial parietal cortex; MTL, medial temporal lobe; MPFC, medial prefrontal cortex. B: An axial oblique slice through the right ‘fusiform face area’ (FFA, marked with a red circle), one of the local regions emphasized in several perceptual expertise studies (for subordinate-level expertise, e.g., Gauthier et al., 1999, 2000a,b; Wong et al., 2009b), for a car expert and a bird expert. The FFA was first defined by more robust activations for faces than objects. In Gauthier et al. (2000a), this area was found to be more activated for car experts when viewing cars compared to other objects, and for bird experts when viewing birds compared to other objects.

Copyrights © by Elsevier Ltd. and Nature America Inc. respectively. Adapted with permission.

Rather than examining large-scale cortical networks, many neuroimaging studies on perceptual expertise have focused on local regions in the ventral visual pathway, such as the face-, word- or letter-selective regions (e.g., face-selective: Kanwisher et al., 1997; McCarthy et al., 1997; word-selective: Baker et al., 2007; Cohen et al., 2000; letter-selective: Gauthier et al., 2000; James et al., 2005). Various types of perceptual expertise (e.g., with faces, words, letters, or non-face objects) differentially alter neural representations in these perceptual regions (e.g., Gauthier et al., 1999; Gauthier et al., 2000a; James et al., 2005; Xu, 2005; Wong et al., 2009b). For instance, the word- and letter-selective areas are found to be selective to expert processing of printed words or letters in various languages (e.g., Roman letters, Chinese characters, Hebrew words; Baker et al., 2007; James et al., 2005; Wong et al., 2009c). In spite of the drastically different linguistic and visual properties in these writing systems, comparable expert training in reading appears to be critical in recruiting these areas. Likewise, the ‘fusiform face area’ (FFA) was originally proposed to be selective for faces (Kanwisher et al., 1997), which are processed holistically (i.e., facial features are associated in a face context; Farah et al., 1998; Tanaka and Farah, 1993; Young et al., 1987). As mentioned above, experts of non-face objects (e.g., cars, chess game boards) also exhibit enhanced holistic processing (e.g., Bukach et al., 2010; Boggan et al., in press). The increase in holistic processing is found to be correlated with higher activity in the FFA, suggesting the possibility that the FFA can be modulated by perceptual expertise with cars, birds (Gauthier et al., 2000a), chess game boards (Bilalić et al., 2011; see also Krawczyk et al., 2011), or novel 3D objects (Gauthier and Tarr, 2002; Wong et al., 2009b).

Since experts also possess superior non-visual associative knowledge about objects of expertise (e.g., the habitat of a warbler or that of a belted kingfisher, and what sounds they make), it is conceivable that enhanced activity should also be observed in the context associative regions. Interestingly, several studies indeed reported enhanced neural activity for objects of expertise in the lateral PHC for expert birdwatchers (Gauthier et al., 2000a), car experts (Gauthier et al., 2000a; Harel et al., 2010) and advanced chess players (Campitelli et al., 2007; see also Amidzic et al., 2001). Although it is possible that the enhanced PHC activity in chess experts is related to the spatial processing of the position of chess pieces on a chessboard, it is unlikely that the similar effect in bird and car experts can be explained by spatial processing, supporting the notion that the best characterization of the role of the PHC is related to associations in general rather than space in particular (Bar et al., 2008). Instead, the enhanced PHC activity may be a neural indicator of associative processing in experts across domains.

5. Proposed associative prediction framework in experts

In the current framework of associative prediction (e.g., Bar, 2003, 2004), the PHC, RSC and OFC influence processing in the perceptual system to guide object recognition (Bar et al., 2006a; Kveraga et al., 2011). Additionally, perceptual and semantic associations are strengthened with expertise (Gauthier et al., 2003; Tanaka and Taylor, 1991; Herzmann and Curran, 2011). Critically, how does the associative prediction network interact with the perceptual system in experts? Let’s take face expertise as an example to demonstrate the potential interactions between perceptual and predictive processes that are generated by perception of an object of expertise. When seeing a famous face (e.g., Barack Obama), all features of the face are processed holistically and the visual representation may instantly activate associated visual or non-visual details about the person (e.g., he lives in the White House; he was a Senator for Illinois). These associations can lead to predictions about what items or people may be around him (e.g., Michelle Obama or Joe Biden). Indeed, famous faces not only activate the FFA, a perceptual locus for faces (Grill-Spector et al., 2004; Kanwisher et al., 1997; Ishai et al., 1999), but also the PHC, a key area of the associative network (Bar et al., 2007a; Leveroni et al., 2000; Pourtois et al., 2005; Sergent et al., 1992; Trautner et al., 2004).³ Conversely, when meeting a new friend, all visual features of the face are also likely processed holistically.
While this representation may not be linked to specific visual or non-visual details associated with that person, you likely generate associations related to the face immediately (e.g., he looks like a friend from high school) and make predictions about different attributes of the person (e.g., whether he is friendly or aggressive; what kind of job he may have) based on similarity of the person to ‘prototypes’ or general representations of many other people you already know (Ambady et al., 2000; Bar et al., 2006b; Bar et al., 2007a; Willis and Todorov, 2006). We suggest that these processes are supported by the interactions between the context associative network and the perceptual system, and may be triggered by various kinds of perceptual cues (e.g., biological motion, Kramer et al., 2010). It is likely that these interactive processes are not only found with face or person perception but with perceptual expertise for other object categories. However, further empirical work is necessary to understand how exactly these interactions operate.

The result and purpose of associative predictions may be to activate other brain areas so that they are readily engaged in anticipation of what is coming. To reiterate, experts’ superior visual and non-visual knowledge would be beneficial in generating such predictions. For instance, with a glance at a single, visually presented musical notation, a multimodal brain network (including the motor and auditory cortices) is activated in proficient music readers, indicating the rich representations related to music reading and performance (Wong and Gauthier, 2010b). More importantly, experts not only have more resources for generating predictions and planning appropriate actions, but are also likely to make more accurate and elaborate predictions in a given context. For example, chess grandmasters make better moves than amateur chess players because they can ‘think ahead’ further (Charness, 1981; Holding, 1992). Specifically, strong players are more accurate in predicting the endpoint positions of the pieces than weaker players (Holding, 1989). Therefore, strong associations in long-term memory that are formed during the acquisition of expertise in a domain may lead to increased strength, depth and specificity of predictions.

5.1. Future directions

Apart from the potential links between visual prediction and perceptual expertise discussed above, many questions remain to be further explored. For instance, what are the critical elements of efficient training for different object categories that promote top–down associative processing? As mentioned earlier, it is important to distinguish the nature of the visual training tasks, as not all training requirements would lead to top–down effects that are generalizable across tasks and across exemplars within an object category. Moreover, since various object categories (e.g., faces and letters) place different computational demands on the visual system, do the different categories recruit identical top–down associative mechanisms, or are there different sub-systems supporting associative processing?

Moreover, is there a qualitative or quantitative difference in the ability to predict between experts and novices? Important questions to address include what the differences are in the time course and mechanisms for accessing visual and non-visual associations between experts and novices, and whether there are any differences in the neural representations that support associations, depending on the degree of expertise. Currently, it remains unknown whether the visual and semantic associations are primarily stored in the context associative network for experts, or whether the associations may also be represented in the visual system as perceptual expertise strengthens visual performance.

It is also interesting to ask whether experts are more adaptive or more inflexible when facing inconsistent or unpredictable situations. Some theoretical accounts of the flexibility of expert skills (e.g., Ericsson and Lehmann, 1996) have suggested that experts automatically retrieve reasoning or associations linked to particular tasks or stimuli and thus cannot ignore such rigid associations even when the associations are not optimal for the task at hand. In contrast, experts might also have access to more probable associations and analogies that may contribute to more flexible and creative processing.

To answer these questions, future investigation of expertise should broaden the window of examination to include the behavioral and neural mechanisms of associative prediction in experts and novices in various domains. In sum, while most people are proficient in the skill of everyday recognition and prediction, little work in cognitive neuroscience has been done to understand the acquisition and development of this skill and how it may interact with expertise training in the perceptual system. We suggest that studying visual prediction jointly with perceptual expertise will provide a more complete picture of visual cognition.

Acknowledgments

This work was supported by NEI-NIH grant 1R01EY019477-01, NSF grant BCS-0842947, and DARPA grant N10AP20036. We thank Daryl Fougnie, Eiran Vadim Harel and Tomer Livne for helpful comments on the manuscript.

Footnotes

1 The terms ‘prediction’ and ‘expectation’ have been used interchangeably in the literature to describe top–down processes that are involved in visual recognition. Here, we primarily use ‘prediction’ to describe top–down visual facilitation that is based on activations of appropriate visual or non-visual associations and analogies.

2 Note that not all types of visual training would enhance top–down processing due to associations. For instance, perceptual learning studies often found task-specific improvement on visual discrimination for trained visual features or patterns (e.g., Zhang et al., 2010; for reviews, see Gilbert and Sigman, 2007; Sagi, 2011; but see Wong et al., 2011); contextual cueing studies showed implicit or explicit learning of specific contextual associations (e.g., Chun and Jiang, 1998; Brockmole and Henderson, 2006). In contrast, the training effects for perceptual expertise can be generalized across unfamiliar exemplars and tasks (e.g., identity or location tasks; Gauthier et al., 2000a, b; Wong et al., 2009a). More importantly, experts often acquire both visual and non-visual object knowledge (e.g., Tanaka and Taylor, 1991), which we propose plays a critical role in the activation and progression of associations to facilitate object processing.

3 Moreover, attractive faces preferentially activate the OFC, which may be related to associative processing and reward or esthetic assessment (Aharon et al., 2001; Ishai, 2007; O’Doherty et al., 2003).

References

- Aguirre GK, Detre JA, Alsop DC, D’Esposito M. The parahippocampus subserves topographical learning in man. Cereb Cortex. 1996;6 (6):823–829. doi: 10.1093/cercor/6.6.823.

- Aharon I, Etcoff N, Ariely D, Chabris CF, O’Connor E, Breiter HC. Beautiful faces have variable reward value: fMRI and behavioral evidence. Neuron. 2001;32 (3):537–551. doi: 10.1016/s0896-6273(01)00491-3.

- Ambady N, Bernieri FJ, Richeson JA. Toward a histology of social behavior: judgmental accuracy from thin slices of the behavioral stream. In: Zanna MP, editor. Advances in Experimental Social Psychology. Vol. 32. Academic Press; San Diego, CA: 2000. pp. 201–272.

- Amidzic O, Riehle HJ, Fehr T, Wienbruch C, Elbert T. Pattern of focal gamma-bursts in chess players. Nature. 2001;412 (6847):603. doi: 10.1038/35088119.

- Aminoff E, Gronau N, Bar M. The parahippocampal cortex mediates spatial and nonspatial associations. Cereb Cortex. 2007;17 (7):1493–1503. doi: 10.1093/cercor/bhl078.

- Aminoff E, Schacter DL, Bar M. The cortical underpinnings of context-based memory distortion. J Cognitive Neurosci. 2008;20 (12):2226–2237. doi: 10.1162/jocn.2008.20156.

- Baker CI, Liu J, Wald LL, Kwong KK, Benner T, Kanwisher N. Visual word processing and experiential origins of functional selectivity in human extrastriate cortex. P Natl Acad Sci USA. 2007;104 (21):9087–9092. doi: 10.1073/pnas.0703300104.

- Bar M. A cortical mechanism for triggering top–down facilitation in visual object recognition. J Cognitive Neurosci. 2003;15 (4):600–609. doi: 10.1162/089892903321662976.

- Bar M. Visual objects in context. Nat Rev Neurosci. 2004;5 (8):617–629. doi: 10.1038/nrn1476.

- Bar M. The proactive brain: using analogies and associations to generate predictions. Trends Cogn Sci. 2007;11 (7):280–289. doi: 10.1016/j.tics.2007.05.005.

- Bar M. The proactive brain: memory for predictions. Philos T R Soc B. 2009;364 (1521):1235–1243. doi: 10.1098/rstb.2008.0310.

- Bar M, Aminoff E. Cortical analysis of visual context. Neuron. 2003;38 (2):347–358. doi: 10.1016/s0896-6273(03)00167-3.

- Bar M, Aminoff E, Ishai A. Famous faces activate contextual associations in the parahippocampal cortex. Cereb Cortex. 2007a;18 (6):1233–1238. doi: 10.1093/cercor/bhm170.

- Bar M, Aminoff E, Mason M, Fenske M. The units of thought. Hippocampus. 2007b;17 (6):420–428. doi: 10.1002/hipo.20287.

- Bar M, Aminoff E, Schacter DL. Scenes unseen: the parahippocampal cortex intrinsically subserves contextual associations, not scenes or places per se. J Neurosci. 2008;28 (34):8539–8544. doi: 10.1523/JNEUROSCI.0987-08.2008.

- Bar M, Kassam KS, Ghuman AS, Boshyan J, Schmid AM, Dale AM, Hamalainen MS, Marinkovic K, Schacter DL, Rosen BR, Halgren E. Top–down facilitation of visual recognition. P Natl Acad Sci USA. 2006a;103 (2):449–454. doi: 10.1073/pnas.0507062103.

- Bar M, Neta M, Linz H. Very first impressions. Emotion. 2006b;6 (2):269–278. doi: 10.1037/1528-3542.6.2.269.

- Bar M, Ullman S. Spatial context in recognition. Perception. 1996;25 (3):343–352. doi: 10.1068/p250343.

- Barbas H. Connections underlying the synthesis of cognition, memory, and emotion in primate prefrontal cortices. Brain Res Bull. 2000;52 (5):319–330. doi: 10.1016/s0361-9230(99)00245-2.

- Barsalou LW. Frames, concepts, and conceptual fields. In: Lehrer A, Kittay EF, editors. Frames, Fields, and Contrasts: New Essays in Semantic and Lexical Organization. Lawrence Erlbaum Associates; Hillsdale, NJ: 1992. pp. 21–74.

- Behrmann M, Geng JJ, Baker CI. Acquisition of long-term visual representations: psychological and neural mechanisms. In: Uttl B, Ohta N, MacLeod C, editors. Dynamic Cognitive Processes: The Fifth Tsukuba International Conference. Springer Verlag; Tokyo: 2005. pp. 1–26.

- Biederman I, Mezzanotte RJ, Rabinowitz JC. Scene perception: detecting and judging objects undergoing relational violations. Cognitive Psychol. 1982;14 (2):143–177. doi: 10.1016/0010-0285(82)90007-x.

- Bilalić M, Langner R, Ulrich R, Grodd W. Many faces of expertise: fusiform face area in chess experts and novices. J Neurosci. 2011;31 (28):10206–10214. doi: 10.1523/JNEUROSCI.5727-10.2011.

- Boggan AL, Bartlett JC, Krawczyk DC. Chess masters show a hallmark of face processing with chess. J Exp Psychol Gen. doi: 10.1037/a0024236. (in press)

- Brady TF, Oliva A. Statistical learning using real-world scenes: extracting categorical regularities without conscious intent. Psychol Sci. 2008;19 (7):678–685. doi: 10.1111/j.1467-9280.2008.02142.x.

- Brockmole JR, Hambrick DZ, Windisch DJ, Henderson JM. The role of meaning in contextual cueing: evidence from chess expertise. Q J Exp Psychol. 2008;61 (12):1886–1896. doi: 10.1080/17470210701781155.

- Brockmole JR, Henderson JM. Using real-world scenes as contextual cues for search. Vis Cogn. 2006;13 (1):99–108.

- Buckner RL, Andrews-Hanna JR, Schacter DL. The brain’s default network: anatomy, function, and relevance to disease. Ann NY Acad Sci. 2008;1124:1–38. doi: 10.1196/annals.1440.011.

- Bukach CM, Phillips WS, Gauthier I. Limits of generalization between categories and implications for theories of category specificity. Atten Percept Psycho. 2010;72 (7):1865–1874. doi: 10.3758/APP.72.7.1865.

- Busey TA, Vanderkolk JR. Behavioral and electrophysiological evidence for configural processing in fingerprint experts. Vision Res. 2005;45 (4):431–448. doi: 10.1016/j.visres.2004.08.021.

- Campitelli G, Gobet F, Head K, Buckley M, Parker A. Brain localization of memory chunks in chessplayers. Int J Neurosci. 2007;117 (12):1641–1659. doi: 10.1080/00207450601041955.

- Charness N. Aging and skilled problem solving. J Exp Psychol Gen. 1981;110 (1):21–38. doi: 10.1037//0096-3445.110.1.21.

- Chase WG, Simon H. Perception in chess. Cognitive Psychol. 1973;4 (1):55–81.

- Chase WG, Ericsson KA. Skill memory. In: Anderson JR, editor. Cognitive Skills and Their Acquisition. Lawrence Erlbaum Associates; Hillsdale, NJ: 1981. pp. 141–189.

- Cheung OS, Richler JJ, Palmeri TJ, Gauthier I. Revisiting the role of spatial frequencies in the holistic processing of faces. J Exp Psychol Human. 2008;34 (6):1327–1336. doi: 10.1037/a0011752.

- Chun MM, Jiang Y. Contextual cueing: implicit learning and memory of visual context guides spatial attention. Cognitive Psychol. 1998;36 (1):28–71. doi: 10.1006/cogp.1998.0681.

- Cohen L, Dehaene S, Naccache L, Lehéricy S, Dehaene-Lambertz G, Hénaff M, Michel F. The visual word form area: spatial and temporal characterization of an initial stage of reading in normal subjects and posterior split-brain patients. Brain. 2000;123:291–307. doi: 10.1093/brain/123.2.291.

- Davenport JL. Consistency effects between objects in scenes. Mem Cognition. 2007;35 (3):393–401. doi: 10.3758/bf03193280.

- Davenport JL, Potter MC. Scene consistency in object and background perception. Psychol Sci. 2004;15 (8):559–564. doi: 10.1111/j.0956-7976.2004.00719.x.

- Eichenbaum H. A cortical–hippocampal system for declarative memory. Nat Rev Neurosci. 2000;1:41–50. doi: 10.1038/35036213.

- Epstein RA, Kanwisher N. A cortical representation of the local visual environment. Nature. 1998;392 (6676):598–601. doi: 10.1038/33402.

- Ericsson KA, Lehmann AC. Expert and exceptional performance: evidence of maximal adaptation to task constraints. Annu Rev Psychol. 1996;47:273–305. doi: 10.1146/annurev.psych.47.1.273.

- Ericsson KA, Smith J, editors. Toward a General Theory of Expertise: Prospects and Limits. Cambridge University Press; New York, NY: 1991.

- Farah MJ, Wilson KD, Drain M, Tanaka JN. What is “special” about face perception? Psychol Rev. 1998;105 (3):482–498. doi: 10.1037/0033-295x.105.3.482.

- Fiser J, Aslin RN. Unsupervised statistical learning of higher-order spatial structures from visual scenes. Psychol Sci. 2001;12 (6):499–504. doi: 10.1111/1467-9280.00392.

- Freyhof H, Gruber H, Ziegler A. Expertise and hierarchical knowledge representation in chess. Psychol Res. 1992;54 (1):32–37.

- Friedman A. Framing pictures: the role of knowledge in automatized encoding and memory for gist. J Exp Psychol Gen. 1979;108 (3):316–355. doi: 10.1037//0096-3445.108.3.316.

- Gauthier I, Curran T, Curby KM, Collins D. Perceptual interference supports a non-modular account of face processing. Nat Neurosci. 2003;6 (4):428–432. doi: 10.1038/nn1029.

- Gauthier I, Skudlarski P, Gore JC, Anderson AW. Expertise for cars and birds recruits brain areas involved in face recognition. Nat Neurosci. 2000a;3 (2):191–197. doi: 10.1038/72140.

- Gauthier I, Tarr MJ, Moylan J, Skudlarski P, Gore JC, Anderson AW. The fusiform “face area” is part of a network that processes faces at the individual level. J Cognitive Neurosci. 2000b;12 (3):495–504. doi: 10.1162/089892900562165.

- Gauthier I, Tarr MJ. Becoming a “Greeble” expert: exploring mechanisms for face recognition. Vision Res. 1997;37 (12):1673–1682. doi: 10.1016/s0042-6989(96)00286-6.

- Gauthier I, Tarr MJ. Unraveling mechanisms for expert object recognition: bridging brain activity and behavior. J Exp Psychol Human. 2002;28 (2):431–446. doi: 10.1037//0096-1523.28.2.431.

- Gauthier I, Tarr MJ, Anderson AW, Skudlarski P, Gore JC. Activation of the middle fusiform ‘face area’ increases with expertise in recognizing novel objects. Nat Neurosci. 1999;2 (6):568–573. doi: 10.1038/9224.

- Gauthier I, Wong ACN, Hayward WG, Cheung OS. Font tuning associated with expertise in letter perception. Perception. 2006;35 (4):541–559. doi: 10.1068/p5313.

- Gentner D. Structure-mapping: A theoretical framework for analogy. Cognitive Sci. 1983;7:155–170.

- Gilbert CD, Sigman M. Brain states: top–down influences in sensory processing. Neuron. 2007;54 (5):677–696. doi: 10.1016/j.neuron.2007.05.019.

- Gilbert DT, Wilson TD. Prospection: experiencing the future. Science. 2007;317 (5843):1351–1354. doi: 10.1126/science.1144161.

- Grill-Spector K, Knouf N, Kanwisher N. The fusiform face area subserves face perception, not generic within-category identification. Nat Neurosci. 2004;7 (5):555–562. doi: 10.1038/nn1224.

- Harel A, Gilaie-Dotan S, Malach R, Bentin S. Top–down engagement modulates the neural expressions of visual expertise. Cereb Cortex. 2010;20 (10):2304–2318. doi: 10.1093/cercor/bhp316.

- Hansen T, Olkkonen M, Walter S, Gegenfurtner KR. Memory modulates color appearance. Nat Neurosci. 2006;9 (11):1367–1368. doi: 10.1038/nn1794.

- Herzmann G, Curran T. Experts’ memory: an ERP study of perceptual expertise effects on encoding and recognition. Mem Cognition. 2011;39 (3):412–432. doi: 10.3758/s13421-010-0036-1.

- Holding DH. Evaluation factors in human tree search. Am J Psychol. 1989;102 (1):103–108.

- Holding DH. Theories of chess skill. Psychol Res. 1992;54 (1):10–16.

- Hole GJ. Configurational factors in the perception of unfamiliar faces. Perception. 1994;23 (1):65–74. doi: 10.1068/p230065.

- Holyoak KJ, Thagard P. The analogical mind. Am Psychol. 1997;52 (1):35–44.

- Ishai A. Sex, beauty and the orbitofrontal cortex. Int J Psychophysiol. 2007;63 (2):181–185. doi: 10.1016/j.ijpsycho.2006.03.010.

- Ishai A, Ungerleider L, Martin A, Schouten J, Haxby J. Distributed representation of objects in the human ventral visual pathway. P Natl Acad Sci USA. 1999;96 (16):9379–9384. doi: 10.1073/pnas.96.16.9379.

- James KH, James TW, Jobard G, Wong ACN, Gauthier I. Letter processing in the visual system: different activation patterns for single letters and strings. Cogn Affect Behav Ne. 2005;5 (4):452–466. doi: 10.3758/cabn.5.4.452.

- Joubert O, Rousselet G, Fize D, Fabre-Thorpe M. Processing scene context: fast categorization and object interference. Vision Res. 2007;47 (26):3286–3297. doi: 10.1016/j.visres.2007.09.013.

- Kanwisher N, McDermott J, Chun MM. The fusiform face area: a module in human extrastriate cortex specialized for face perception. J Neurosci. 1997;17 (11):4302–4311. doi: 10.1523/JNEUROSCI.17-11-04302.1997.

- Kringelbach ML, Rolls ET. The functional neuroanatomy of the human orbitofrontal cortex: evidence from neuroimaging and neuropsychology. Prog Neurobiol. 2004;72 (5):341–372. doi: 10.1016/j.pneurobio.2004.03.006.

- Kramer RSS, Arend I, Ward R. Perceived health from biological motion predicts voting behavior. Q J Exp Psychol. 2010;63 (4):625–632. doi: 10.1080/17470210903490977.

- Krawczyk DC, Boggan AM, McClelland MM, Bartlett JC. The neural organization of perception in chess experts. Neurosci Lett. 2011;499 (2):64–69. doi: 10.1016/j.neulet.2011.05.033.

- Kveraga K, Boshyan J, Bar M. Magnocellular projections as the trigger of top–down facilitation in recognition. J Neurosci. 2007;27 (48):13232–13240. doi: 10.1523/JNEUROSCI.3481-07.2007.

- Kveraga K, Ghuman AS, Kassam KS, Aminoff E, Hämäläinen MS, Chaumon M, et al. Early onset of neural synchronization in the contextual associations network. P Natl Acad Sci USA. 2011;108 (8):3389–3394. doi: 10.1073/pnas.1013760108.

- Leveroni CL, Seidenberg MS, Mayer AR, Mead LA, Binder JR, Rao SM. Neural systems underlying the recognition of familiar and newly learned faces. J Neurosci. 2000;20 (2):878–886. doi: 10.1523/JNEUROSCI.20-02-00878.2000.

- Mack ML, Palmeri TJ. Modeling categorization of scenes containing consistent versus inconsistent objects. J Vision. 2010;10 (3):1–11. doi: 10.1167/10.3.11.

- Mack ML, Wong ACN, Gauthier I, Tanaka JW, Palmeri TJ. Time course of visual object categorization: fastest does not necessarily mean first. Vision Res. 2009;49 (15):1961–1968. doi: 10.1016/j.visres.2009.05.005.

- Mandler JM, Johnson NS. Some of the thousand words a picture is worth. J Exp Psychol-Hum L. 1976;2 (5):529–540.

- Mayall K, Humphreys GW, Olson A. Disruption to word or letter processing? The origins of case-mixing effects. J Exp Psychol-Hum L. 1997;23 (5):1275–1286. doi: 10.1037//0278-7393.23.5.1275.

- McCarthy G, Puce A, Gore JC, Allison T. Face-specific processing in the human fusiform gyrus. J Cognitive Neurosci. 1997;9 (5):605–610. doi: 10.1162/jocn.1997.9.5.605.

- Michel C, Rossion B, Han J, Chung CS, Caldara R. Holistic processing is finely tuned for faces of one’s own race. Psychol Sci. 2006;17 (7):608–615. doi: 10.1111/j.1467-9280.2006.01752.x.

- Minsky M. A framework for representing knowledge. In: Winston PH, editor. The Psychology of Computer Vision. McGraw-Hill; New York, NY: 1975. pp. 211–277.

- O’Doherty J, Winston J, Critchley H, Perrett D, Burt DM, Dolan RJ. Beauty in a smile: the role of medial orbitofrontal cortex in facial attractiveness. Neuropsychologia. 2003;41 (2):147–155. doi: 10.1016/s0028-3932(02)00145-8.

- Oliva A, Torralba A. The role of context in object recognition. Trends Cogn Sci. 2007;11 (12):520–527. doi: 10.1016/j.tics.2007.09.009.

- Op de Beeck HP, Baker CI, DiCarlo JJ, Kanwisher N. Discrimination training alters object representations in human extrastriate cortex. J Neurosci. 2006;26 (50):13025–13036. doi: 10.1523/JNEUROSCI.2481-06.2006.

- Op de Beeck HP, Torfs K, Wagemans J. Perceived shape similarity among unfamiliar objects and the organization of the human object vision pathway. J Neurosci. 2008;28 (40):10111–10123. doi: 10.1523/JNEUROSCI.2511-08.2008.

- Palmer SE. The effects of contextual scenes on the identification of objects. Mem Cognition. 1975;3 (5):519–526. doi: 10.3758/BF03197524.

- Perea M, Duñabeitia J, Carreiras M. R34D1NG W0RD5 W1TH NUMB3R5. J Exp Psychol Human. 2008;34 (1):237–241. doi: 10.1037/0096-1523.34.1.237.

- Pourtois G, Schwartz S, Seghier ML, Lazeyras F, Vuilleumier P. View-independent coding of face identity in frontal and temporal cortices is modulated by familiarity: an event-related fMRI study. Neuroimage. 2005;24 (4):1214–1224. doi: 10.1016/j.neuroimage.2004.10.038.

- Raichle ME, MacLeod AM, Snyder AZ, Powers WJ, Gusnard DA, Shulman GL. A default mode of brain function. P Natl Acad Sci USA. 2001;98 (2):676–682. doi: 10.1073/pnas.98.2.676.

- Ranganath C, Yonelinas AP, Cohen MX, Dy CJ, Tom SM, D’Esposito M. Dissociable correlates of recollection and familiarity within the medial temporal lobes. Neuropsychologia. 2004;42 (1):2–13. doi: 10.1016/j.neuropsychologia.2003.07.006.

- Rhodes G, Byatt G, Michie PT, Puce A. Is the fusiform face area specialized for faces, individuation, or expert individuation? J Cognitive Neurosci. 2004;16 (2):189–203. doi: 10.1162/089892904322984508.

- Richler JJ, Cheung OS, Gauthier I. Holistic processing predicts face recognition. Psychol Sci. 2011;22 (4):464–471. doi: 10.1177/0956797611401753.

- Richler JJ, Mack ML, Gauthier I, Palmeri TJ. Holistic processing of faces happens at a glance. Vision Res. 2009;49 (23):2856–2861. doi: 10.1016/j.visres.2009.08.025.

- Rosch E, Mervis CB, Gray WD, Johnson DM, Boyes-Braem P. Basic objects in natural categories. Cognitive Psychol. 1976;8:382–439.

- Rossion B, Collins D, Goffaux V, Curran T. Long-term expertise with artificial objects increases visual competition with early face categorization processes. J Cognitive Neurosci. 2007;19 (3):543–555. doi: 10.1162/jocn.2007.19.3.543.

- Rossion B, Kung CC, Tarr MJ. Visual expertise with nonface objects leads to competition with the early perceptual processing of faces in the human occipitotemporal cortex. P Natl Acad Sci USA. 2004;101 (40):14521–14526. doi: 10.1073/pnas.0405613101.

- Saffran JR, Aslin RN, Newport EL. Statistical learning by 8-month-old infants. Science. 1996;274 (5294):1926–1928. doi: 10.1126/science.274.5294.1926.

- Saffran JR, Johnson EK, Aslin RN, Newport EL. Statistical learning of tone sequences by human infants and adults. Cognition. 1999;70 (1):27–52. doi: 10.1016/s0010-0277(98)00075-4.

- Sagi D. Perceptual learning in Vision Research. Vision Res. 2011;51:1552–1566. doi: 10.1016/j.visres.2010.10.019.

- Sanocki T. Visual knowledge underlying letter perception: font-specific, schematic tuning. J Exp Psychol Human. 1987;13 (2):267–278. doi: 10.1037//0096-1523.13.2.267.

- Sanocki T. Font regularity constraints on the process of letter recognition. J Exp Psychol Human. 1988;14 (3):472–480. doi: 10.1037//0096-1523.14.3.472.

- Schacter DL. Implicit expressions of memory in organic amnesia: learning of new facts and associations. Hum Neurobiol. 1987;6:107–118.

- Schacter DL, Addis DR, Buckner RL. Remembering the past to imagine the future: the prospective brain. Nat Rev Neurosci. 2007;8 (9):657–661. doi: 10.1038/nrn2213.

- Schacter DL, Addis DR, Buckner RL. Episodic simulation of future events: concepts, data, and applications. Ann NY Acad Sci. 2008;1124:39–60. doi: 10.1196/annals.1440.001.

- Schank RC. Using knowledge to understand. TINLAP Proceedings of the 1975 Workshop on Theoretical Issues in Natural Language Processing; Tinlap, Arlington, Virginia. 1975. pp. 117–121.

- Schneider W, Shiffrin RM. Controlled and automatic human information processing: I. Detection, search, and attention. Psychol Rev. 1977;84 (1):1–66.

- Scott L, Tanaka J, Sheinberg DL, Curran T. A reevaluation of the electrophysiological correlates of expert object processing. J Cognitive Neurosci. 2006;18 (9):1453–1465. doi: 10.1162/jocn.2006.18.9.1453.

- Scott L, Tanaka JW, Sheinberg D, Curran T. The role of category learning in the acquisition and retention of perceptual expertise: a behavioral and neurophysiological study. Brain Res. 2008;1210:204–215. doi: 10.1016/j.brainres.2008.02.054.

- Sergent J, Ohta S, MacDonald B. Functional neuroanatomy of face and object processing: a positron emission tomography study. Brain. 1992;115:15–36. doi: 10.1093/brain/115.1.15.

- Shiffrin RM, Schneider W. Controlled and automatic human information processing: II. Perceptual learning, automatic attending, and a general theory. Psychol Rev. 1977;84 (2):127–190.

- Svoboda E, McKinnon MC, Levine B. The functional neuroanatomy of autobiographical memory: a meta-analysis. Neuropsychologia. 2006;44 (12):2189–2208. doi: 10.1016/j.neuropsychologia.2006.05.023.

- Tanaka JW. The entry point of face recognition: evidence for face expertise. J Exp Psychol Gen. 2001;130 (3):534–543. doi: 10.1037//0096-3445.130.3.534.

- Tanaka JW, Farah MJ. Parts and wholes in face recognition. Q J Exp Psychol A. 1993;46 (2):225–245. doi: 10.1080/14640749308401045.

- Tanaka JW, Kiefer M, Bukach CM. A holistic account of the own-race effect in face recognition: evidence from a cross-cultural study. Cognition. 2004;93 (1):B1–B9. doi: 10.1016/j.cognition.2003.09.011.

- Tanaka JW, Taylor M. Object categories and expertise: is the basic level in the eye of the beholder? Cognitive Psychol. 1991;23:457–482.

- Torralba A, Oliva A. Statistics of natural image categories. Network. 2003;14 (3):391–412.

- Trautner P, Dietl T, Staedtgen M, Mecklinger A, Grunwald T, Elger C, et al. Recognition of famous faces in the medial temporal lobe: an invasive ERP study. Neurology. 2004;63 (7):1203–1208. doi: 10.1212/01.wnl.0000140487.55973.d7.

- Turk-Browne NB, Jungé J, Scholl BJ. The automaticity of visual statistical learning. J Exp Psychol Gen. 2005;134 (4):552–564. doi: 10.1037/0096-3445.134.4.552.

- Wagner AD, Schacter DL, Rotte M, Koutstaal W, Maril A, Dale AM, et al. Building memories: remembering and forgetting of verbal experiences as predicted by brain activity. Science. 1998;281 (5380):1188–1191. doi: 10.1126/science.281.5380.1188.

- Willis J, Todorov A. First impressions: making up your mind after a 100-ms exposure to a face. Psychol Sci. 2006;17 (7):592–598. doi: 10.1111/j.1467-9280.2006.01750.x.

- Witzel C, Valkova H, Hansen T, Gegenfurtner K. Object knowledge modulates colour appearance. i-Perception. 2011;2:13–49. doi: 10.1068/i0396.

- Wong ACN, Jobard G, James KH, James TW, Gauthier I. Expertise with characters in alphabetic and nonalphabetic writing systems engage overlapping occipito-temporal areas. Cogn Neuropsychol. 2009a;26 (1):111–127. doi: 10.1080/02643290802340972.

- Wong ACN, Palmeri TJ, Gauthier I. Conditions for facelike expertise with objects: becoming a Ziggerin expert—but which type? Psychol Sci. 2009b;20 (9):1108–1117. doi: 10.1111/j.1467-9280.2009.02430.x.

- Wong ACN, Palmeri TJ, Rogers B, Gore JC, Gauthier I. Beyond shape: how you learn about objects affects how they are represented in visual cortex. PLoS One. 2009c;4 (12):e8405. doi: 10.1371/journal.pone.0008405.

- Wong AC-N, Bukach CM, Yuen C, Yang L, Leung S, Greenspon E. Holistic processing of words modulated by reading experience. PLoS One. 2011;6 (6):e20753. doi: 10.1371/journal.pone.0020753.

- Wong YK, Folstein JR, Gauthier I. Task-irrelevant perceptual expertise. J Vision. 2011;11 (14):1–15. doi: 10.1167/11.14.3.

- Wong YK, Gauthier I. Holistic processing of musical notation: dissociating failures of selective attention in experts and novices. Cogn Affect Behav Ne. 2010a;10 (4):541–551. doi: 10.3758/CABN.10.4.541.

- Wong YK, Gauthier I. A multimodal neural network recruited by expertise with musical notation. J Cognitive Neurosci. 2010b;22 (4):695–713. doi: 10.1162/jocn.2009.21229.

- Xu Y. Revisiting the role of the fusiform face area in visual expertise. Cereb Cortex. 2005;15 (8):1234–1242. doi: 10.1093/cercor/bhi006.

- Young AW, Hellawell D, Hay DC. Configurational information in face perception. Perception. 1987;16 (6):747–759. doi: 10.1068/p160747.

- Zhang J, Meeson A, Welchman AE, Kourtzi Z. Learning alters the tuning of functional magnetic resonance imaging patterns for visual forms. J Neurosci. 2010;30 (42):14127–14133. doi: 10.1523/JNEUROSCI.2204-10.2010.