Abstract

BACKGROUND

Previous studies have identified hospitals with poor performance on cardiac process measures. How these hospitals fare in other domains, such as patient satisfaction, remains unknown.

METHODS

We used Hospital Compare data to identify hospitals that reported acute myocardial infarction (AMI) and heart failure (HF) process measures for 2006–2008 and calculated respective composite performance scores. Using these scores, we classified hospitals as low-performing (bottom decile for all three years), top-performing (top decile for all three years), and intermediate (all others). We used Hospital Consumer Assessment of Healthcare Providers and Systems 2008 data to compare overall satisfaction between low, intermediate, and top-performing hospitals.

RESULTS

Low-performing hospitals had fewer beds and fewer nurses per patient, and were more likely to be rural, safety-net hospitals located in the South, compared with intermediate and top-performing hospitals (P<0.01 for all). After adjustment for hospital characteristics, patients were less likely to recommend low-performing hospitals to family or friends than intermediate and top-performing hospitals (AMI: 58.8% vs. 63.9% vs. 68.8%; HF: 61.3% vs. 64.0% vs. 66.8%; P<0.001 for all) or to give them an overall rating of ≥9 out of 10 (AMI: 56.7% vs. 60.7% vs. 64.9%; HF: 57.8% vs. 61.1% vs. 63.6%; P<0.01 for all). However, we also noted discordance between a hospital’s performance on process measures and patient satisfaction (kappa statistic <0.20).

CONCLUSION

Hospitals with consistently poor performance on cardiac process measures also have lower patient satisfaction on average, suggesting that these hospitals have poor overall quality of care. However, the two measures are discordant in profiling hospital quality.

INTRODUCTION

Improving the quality of healthcare in the United States is a national priority.1, 2 As part of these efforts, hospital performance measures are increasingly being used to benchmark quality.3 Current payment reforms from the Centers for Medicare & Medicaid Services (CMS) include a 2% financial penalty for acute care hospitals that do not report quality data (pay for reporting).4 Beginning in 2012, under the Patient Protection and Affordable Care Act (P.L. 111–148), CMS will seek to reimburse hospitals according to their actual performance on several key quality measures (pay for performance).4, 5

Prior research has identified a group of hospitals with consistently poor performance for cardiac care based on low adherence to important processes of care.6 These low-performing hospitals tend to be rural, safety-net facilities that serve populations with lower socio-economic status. Under the proposed payment structure, these hospitals stand to face significant financial penalties if their performance does not improve. However, critics have argued that hospital classification based on process measures alone may be problematic due to imprecision arising from lower case volume at low-performing hospitals and the poor reliability of hospitals’ self-reported data.7

In recent years, patient satisfaction has been recognized as an important quality metric.8 As opposed to process measures that may be subject to “gaming” or outcome measures that may be limited by incomplete risk-adjustment, patient satisfaction data are reported directly by patients and may provide a valuable instrument to determine a hospital’s quality. The development of the Hospital Consumer Assessment of Healthcare Providers and Systems (HCAHPS) survey has allowed patient satisfaction measures to be formally incorporated into hospital evaluation and reimbursement.

However, it remains unknown how hospitals with consistently poor performance on process measures fare on patient satisfaction ratings. Evaluating this relationship is important because, if hospitals with low process measure performance also perform poorly on patient satisfaction ratings, this could be construed as additional evidence of poor quality of care at these facilities, which may need focused attention.

To address this gap in knowledge, we examined patient satisfaction at hospitals that have consistently poor performance on process measures for 2 cardiac diseases – acute myocardial infarction (AMI) and heart failure (HF), and compared it to patient satisfaction at hospitals with intermediate and high performance.

METHODS

Data Sources

We relied on three primary data sources: 1) the CMS Hospital Compare database, 2006–2008; 2) the American Hospital Association (AHA) annual survey, 2006; and 3) the United States Census, 2000.

The Hospital Compare database provides information on processes and outcomes of care for select conditions, and patient satisfaction with care (HCAHPS).9 Given that there are financial penalties for hospitals that do not report these data to CMS,10 participation in Hospital Compare has become nearly universal. We downloaded hospital-level process measures and HCAHPS patient satisfaction measures from the Hospital Compare website.

Process Measures

We were primarily interested in hospital performance for two cardiac diseases, AMI and HF, because these conditions are highly prevalent and have a rich evidence base supporting the development of process measures. We excluded critical access hospitals (n=831), which are not required to report process measure data; hospitals with fewer than 25 eligible patients for recommended therapies (n=919 for AMI; n=271 for HF);3, 6 and hospitals located outside the 50 U.S. states and the District of Columbia (n=54 hospitals in U.S. territories).

For the remaining hospitals, we obtained data on 7 AMI measures and 4 HF measures reported during 2006–2008. The AMI measures assessed the percentage of eligible patients in each year who received 1) aspirin on arrival; 2) aspirin at discharge; 3) beta blockers at discharge; 4) angiotensin-converting enzyme inhibitors (ACE-I) or angiotensin receptor blockers (ARB) for left ventricular (LV) systolic dysfunction; 5) advice on smoking cessation; 6) fibrinolytic medication within 30 minutes of arrival; and 7) primary percutaneous coronary intervention (PCI) within 90 minutes of arrival. The HF measures assessed the percentage of eligible patients who received 1) discharge instructions; 2) evaluation of LV systolic function; 3) ACE-I or ARB for LV systolic dysfunction; and 4) advice on smoking cessation. Although an additional AMI measure (beta blocker on arrival) was reported previously, it was dropped in 2008 based on evidence of harm with this therapy during the first 24 hours;11 we therefore excluded this measure from our analysis for all 3 years.

Identification of low-, intermediate and top-performing hospitals

For each hospital, we calculated separate AMI and HF composite performance scores for each year using the opportunities scoring method.12 For each condition, we summed the number of times each recommended therapy was administered across all measures (numerator), divided this by the total number of opportunities to administer those therapies (denominator), and multiplied by 100. Next, we stratified hospitals into deciles based on their composite performance scores for each year. We defined low-performing hospitals as those in the bottom decile of performance in each of the three years, top-performing hospitals as those in the top decile of performance in each of the three years, and intermediate hospitals as all others.
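The scoring and classification steps above can be sketched as follows. This is a minimal illustration, not the authors' SAS code: the `MeasureCounts` container and the function names are hypothetical, and the decile cut points are assumed to come from Python's `statistics.quantiles` with the inclusive method, with ties at a cut point counted inside the extreme group. The paper does not state how percentiles or ties were handled.

```python
import statistics
from dataclasses import dataclass


@dataclass
class MeasureCounts:
    """Hypothetical container for one process measure at one hospital in one year."""
    numerator: int    # eligible patients who received the therapy
    denominator: int  # eligible patients (opportunities to deliver it)


def opportunity_score(measures):
    """Opportunity-based composite: therapies delivered / opportunities x 100."""
    delivered = sum(m.numerator for m in measures)
    opportunities = sum(m.denominator for m in measures)
    if opportunities == 0:
        raise ValueError("hospital has no eligible opportunities")
    return 100.0 * delivered / opportunities


def classify_hospitals(scores_by_year, n_groups=10):
    """Label hospitals 'low', 'top' or 'intermediate' from yearly composite scores.

    scores_by_year maps year -> {hospital_id: composite score}.
    n_groups=10 uses deciles; 5 (quintiles) or 4 (quartiles) give the
    wider cut-offs used in the sensitivity analyses.
    """
    n_years = len(scores_by_year)
    in_bottom, in_top = {}, {}
    for scores in scores_by_year.values():
        # Assumption: inclusive-method cut points; ties at a cut count as extreme.
        cuts = statistics.quantiles(list(scores.values()), n=n_groups, method="inclusive")
        low_cut, high_cut = cuts[0], cuts[-1]
        for hospital, score in scores.items():
            in_bottom.setdefault(hospital, []).append(score <= low_cut)
            in_top.setdefault(hospital, []).append(score >= high_cut)

    labels = {}
    for hospital in in_bottom:
        # A hospital must fall in the same extreme group in every year.
        if len(in_bottom[hospital]) == n_years and all(in_bottom[hospital]):
            labels[hospital] = "low"
        elif len(in_top[hospital]) == n_years and all(in_top[hospital]):
            labels[hospital] = "top"
        else:
            labels[hospital] = "intermediate"
    return labels
```

For example, a hospital that met 45 of 50, 9 of 10, and 36 of 40 opportunities has a composite score of 100 × 90/100 = 90. Pooling opportunities weights each measure by its number of eligible patients rather than averaging per-measure rates. Passing `n_groups=5` or `n_groups=4` reproduces the structure of the 20% and 25% sensitivity thresholds.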

Patient Satisfaction

We used HCAHPS data on hospital-level satisfaction for the period April 2008 to March 2009. Details of survey development, psychometric testing, and factor analyses have been reported previously.13–15 Briefly, patients aged 18 years or older with a non-psychiatric discharge diagnosis who were alive at discharge were eligible to receive the survey. Data on the number of patients completing the survey (<100, 100–299, or ≥300) and the survey response rate (the number of completed surveys divided by the total number of patients surveyed, expressed as a percentage) were also available.

In addition to providing information on the quality of interpersonal exchange between patients and staff and on amenities of care (reported under 8 domains), the HCAHPS survey includes two measures of overall satisfaction (eTable 1).8 These are 1) whether the patient would recommend the hospital to family and friends, with responses grouped into definitely yes, probably (yes or no), and definitely no; and 2) a global rating of the hospital on a scale of 0 to 10, with 0 being the worst and 10 the best hospital possible, with ratings grouped into three categories (0–6, 7–8, 9–10). For each hospital, HCAHPS reports the percentage of survey responses within each category. Because overall satisfaction ratings are highly correlated with individual items in the HCAHPS survey,8 we focused only on the two overall ratings.

To ensure that publicly reported HCAHPS scores allow a fair and accurate comparison between hospitals, all survey responses are adjusted for differences in survey mode of administration (mail only, telephone only, mail and telephone, and active interactive voice response) and patient-mix (age, education, self-reported health status, service line [medical, surgical, or maternity], admission through the emergency room, non-English primary language, and the relative lag between hospital discharge and survey completion) prior to public reporting. The adjustment coefficients are derived from a large-scale validation experiment conducted by CMS prior to the national implementation of HCAHPS.13 That study determined that, after adjustment for survey mode and patient-mix, no additional adjustment for non-response was necessary.

Hospital characteristics

The Hospital Compare database provides information on key hospital characteristics: ownership status (for-profit or not-for-profit), hospital state, and ZIP code. We categorized each hospital’s geographic location by U.S. census region, and as rural or urban using ZIP code-level commuting area codes derived from U.S. Census 2000 data.16 We obtained additional hospital-level data by linking the Hospital Compare data to the 2006 AHA survey using each hospital’s unique identification number. Variables that we used included annual admission volume, number of beds, nurse staffing levels, teaching status (membership in the Council of Teaching Hospitals), and percentage of patients receiving Medicaid.17 We expressed nurse staffing as the number of nurse full-time equivalents (FTEs) on staff per 1000 patient days. Finally, we categorized hospitals as safety-net hospitals if the hospital’s Medicaid caseload for 2006 exceeded the mean for all hospitals in its state by more than 1 standard deviation.6 Nineteen AMI hospitals (1 low-performing and 18 intermediate-performing) and 30 HF hospitals (2 low-performing, 26 intermediate-performing, and 2 top-performing) could not be linked to the AHA dataset and were excluded.
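The two derived hospital variables can be sketched as below. This is an illustrative reading of the definitions above, not the authors' code: the function names and dictionary inputs are hypothetical. The paper does not say whether a sample or population standard deviation was used, so the sketch assumes the sample SD. It also assumes that a state with only one hospital flags nobody, because the SD is undefined there.

```python
import statistics


def nurse_fte_per_1000_patient_days(nurse_fte, patient_days):
    """Nurse full-time equivalents per 1000 patient days."""
    return nurse_fte / (patient_days / 1000.0)


def safety_net_flags(medicaid_pct, state_of):
    """Flag hospitals whose Medicaid caseload exceeds their state mean by > 1 SD.

    medicaid_pct: {hospital_id: % of patients receiving Medicaid}  (hypothetical input)
    state_of:     {hospital_id: state code}
    """
    by_state = {}
    for hospital, pct in medicaid_pct.items():
        by_state.setdefault(state_of[hospital], []).append(pct)

    thresholds = {}
    for state, values in by_state.items():
        if len(values) < 2:
            # Assumption: SD undefined for a single hospital, so flag nobody.
            thresholds[state] = float("inf")
        else:
            # Assumption: sample SD (n - 1 denominator).
            thresholds[state] = statistics.mean(values) + statistics.stdev(values)

    return {h: pct > thresholds[state_of[h]] for h, pct in medicaid_pct.items()}
```

Because the threshold is computed within each state, a hospital with a modest Medicaid share can still qualify as safety-net in a state where most hospitals serve few Medicaid patients.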

Statistical Analyses

We compared characteristics of low, intermediate, and top-performing hospitals using chi-square tests and the Mantel-Haenszel test of trend for categorical variables and linear regression for continuous variables. We also compared the number of patients completing the survey and the survey response rate across hospital groups using similar tests. Next, we compared hospital-level patient satisfaction on the two overall satisfaction measures among low-performing, intermediate, and top-performing hospitals using multivariable linear regression, adjusting for hospital characteristics (annual admission volume [per 1000], number of beds [<100, 100–400, >400], nurse full-time equivalents (FTE) per 1000 patient days, teaching status, ownership status [for-profit, non-profit], location [urban, rural], safety-net status, and census region [Midwest, Northeast, South, and West]). We also explored the degree of discordance between hospital categorization based on process measure performance and overall patient satisfaction ratings by examining the proportion of low, intermediate, and top-performing hospitals within each disease category (AMI and HF) that were in the top quartile, top half, and bottom quartile of satisfaction ratings. Finally, we separately categorized hospitals into quartiles of 2008 composite score and of overall patient satisfaction rating, and assessed agreement between the two classifications using weighted kappa statistics.
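The quartile-agreement analysis can be illustrated with a small weighted kappa computation. This is a sketch under stated assumptions: the paper does not specify the weighting scheme, so linear disagreement weights are assumed, with quadratic weights as an option. Quartiles are assigned by rank with ties broken by input order. The names `to_quartiles` and `weighted_kappa` are hypothetical.

```python
def to_quartiles(values):
    """Rank-based quartile index (0 = bottom, 3 = top); ties broken by input order."""
    n = len(values)
    order = sorted(range(n), key=lambda i: values[i])
    quartile = [0] * n
    for rank, idx in enumerate(order):
        quartile[idx] = rank * 4 // n
    return quartile


def weighted_kappa(cat_a, cat_b, k=4, weighting="linear"):
    """Cohen's weighted kappa for two ordinal classifications coded 0..k-1."""
    n = len(cat_a)
    observed = [[0] * k for _ in range(k)]
    for a, b in zip(cat_a, cat_b):
        observed[a][b] += 1
    row_totals = [sum(observed[i]) for i in range(k)]
    col_totals = [sum(observed[i][j] for i in range(k)) for j in range(k)]

    obs_disagreement = 0.0
    exp_disagreement = 0.0
    for i in range(k):
        for j in range(k):
            d = abs(i - j) / (k - 1)
            w = d if weighting == "linear" else d * d  # assumption: linear by default
            obs_disagreement += w * observed[i][j]
            # Expected count under independence of the two classifications.
            exp_disagreement += w * row_totals[i] * col_totals[j] / n
    return 1.0 - obs_disagreement / exp_disagreement
```

A call such as `weighted_kappa(to_quartiles(process_2008), to_quartiles(satisfaction_2008))`, with hypothetical per-hospital score lists, returns 1 for perfect agreement and 0 for agreement no better than chance. Weighting penalizes a top-versus-bottom quartile mismatch more than an adjacent-quartile mismatch.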

In our multivariable models, we used a combination of statistical and graphical methods to examine the model assumptions of normality and homogeneity of variance of the error term. Because the assumption of homogeneity of variance was not satisfied, we allowed the error variance to differ across hospital groups (low-performing, intermediate-performing, top-performing). This was done using PROC MIXED in SAS with the REPEATED statement and the GROUP= option. This model directly estimated a separate variance of the dependent variable for each hospital group, and these group-specific variances were used to perform hypothesis tests.
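The effect of allowing group-specific variances can be seen in the simplest case, a comparison of two group means with no covariates. This is a deliberately simplified analogue, not the authors' adjusted PROC MIXED model. In this no-covariate case, each group's variance estimate is its own sample variance, so the standard error of the mean difference takes the separate-variance form shown. The pooled form assumes homogeneity of variance. The function name is hypothetical.

```python
import math
import statistics


def mean_difference_se(group_a, group_b, separate_variances=True):
    """Difference in group means (b - a) and its standard error.

    separate_variances=True estimates one variance per group (a no-covariate
    analogue of REPEATED / GROUP=); False pools the two variances, which
    assumes homogeneity of variance.
    """
    mean_a, mean_b = statistics.mean(group_a), statistics.mean(group_b)
    var_a, var_b = statistics.variance(group_a), statistics.variance(group_b)
    n_a, n_b = len(group_a), len(group_b)
    if separate_variances:
        se = math.sqrt(var_a / n_a + var_b / n_b)
    else:
        pooled = ((n_a - 1) * var_a + (n_b - 1) * var_b) / (n_a + n_b - 2)
        se = math.sqrt(pooled * (1 / n_a + 1 / n_b))
    return mean_b - mean_a, se
```

The distinction matters here because the group sizes are very unequal (for AMI, 88 vs. 2330 vs. 49 hospitals). When group sizes and variances both differ, a pooled variance can misstate the uncertainty of comparisons involving the small groups.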

To determine whether our results were sensitive to our categorization of hospital performance, we repeated these analyses using alternative thresholds, defining low-performing and top-performing hospitals as those in the bottom and top 20%, or the bottom and top 25%, of all hospitals based on their composite performance scores.

All analyses were performed using SAS version 9.2 (SAS Institute Inc., Cary, NC). All p values are 2-sided. The study was approved by the Institutional Review Board at University of Iowa.

RESULTS

Among all hospitals that reported data on performance measures during 2006–2008, 2467 hospitals for AMI (72% of all hospitals) and 3115 hospitals for HF (91% of all hospitals) met the eligibility criteria for inclusion in the study (Table 1). Of these, 88 AMI hospitals and 147 HF hospitals were consistently low-performing, while 49 AMI hospitals and 105 HF hospitals were consistently top-performing. Only 19 hospitals were low-performing for both AMI and HF, and 18 hospitals were top-performing for both diseases. No hospital that was top-performing for one condition was low-performing for the other.

Table 1.

Characteristics of Study Hospitals

| CHARACTERISTIC | AMI Hospital N=2467 | HF Hospital N=3115 |
|---|---|---|
| Composite Performance Score | 94.1 (4.9) | 84.9 (11.8) |
| Low-Performing Hospital | 78.3 (7.0) | 48.1 (14.1) |
| Intermediate-Performing Hospital | 94.6 (3.7) | 86.3 (7.8) |
| Top-Performing Hospital | 99.6 (0.3) | 98.7 (0.8) |
| Annual Admission Volume | 12,504 (9907) | 10,463 (9729) |
| Number of Beds† | 260.3 (199.2) | 223.2 (193.7) |
| Nurse FTE / 1000 patient days* | 6.4 (4.0) | 6.4 (3.8) |
| Teaching Hospital† | 268 (10.9) | 270 (8.7) |
| For-Profit Hospital | 420 (17.0) | 544 (17.5) |
| Rural Location | 575 (23.3) | 1057 (33.9) |
| Safety-Net Hospital† | 207 (8.4) | 304 (9.8) |
| Census Region | | |
| Midwest | 564 (22.9) | 711 (22.8) |
| Northeast | 474 (19.2) | 510 (16.4) |
| South | 954 (38.7) | 1317 (42.3) |
| West | 475 (19.2) | 577 (18.5) |

Abbreviations: AMI: Acute Myocardial Infarction; HF: Heart Failure; FTE: full-time equivalent. Values in tables represent mean (standard deviation) for continuous variables and n (%) for categorical variables.

* Nurse staffing data were missing for 19 AMI hospitals (1 low-performing and 18 intermediate-performing hospitals) and 30 HF hospitals (2 low-performing, 26 intermediate-performing, and 2 top-performing hospitals).

† Number of beds, teaching status, and safety-net data were missing for 4 AMI hospitals (all intermediate-performing hospitals) and 12 HF hospitals (1 low-performing, 10 intermediate-performing, and 1 top-performing hospital).

For AMI, mean composite performance score ranged from 78% at low-performing hospitals to over 99% at top-performing hospitals (eTable 2). For HF, mean score ranged from 48% at low-performing hospitals to 99% at top-performing hospitals (eTable 3). Importantly, for both AMI and HF, low-performing hospitals had lower annual admission volume, fewer beds, lower nurse FTE per 1000 patient days, and were more likely to be rural, safety-net hospitals. More than half of the low-performing AMI and HF hospitals were located in the South census region (eTable 2 & eTable 3).

Five AMI hospitals (all intermediate-performing) and 12 HF hospitals (1 low-performing, 10 intermediate-performing, and 1 top-performing) did not report HCAHPS data to CMS during the study period. Among reporting hospitals, the survey response rate was lowest at low-performing hospitals for both AMI and HF; the trend across performance groups was significant for AMI (P<0.001) and of borderline significance for HF (P=0.052) (Table 2). Nearly all hospitals had at least 100 respondents, although low-performing AMI and HF hospitals were more likely than intermediate and top-performing hospitals to have fewer than 300 respondents.

Table 2.

HCAHPS Survey Response Rate for Low, Intermediate and Top-Performing Hospitals 2008*

| AMI Hospitals | Low-Performing N=88 | Intermediate-Performing N=2330 | Top-Performing N=49 | P value for trend† |
|---|---|---|---|---|
| HCAHPS Survey Responses | | | | |
| Response Rate, % | 26.9 (9.8) | 32.2 (8.2) | 37.3 (8.1) | <0.001 |
| No. of completed surveys | | | | <0.001 |
| < 100 | 1 (1.1) | 0 | 0 | |
| 100 – 299 | 21 (23.9) | 78 (3.4) | 3 (6.1) | |
| ≥ 300 | 66 (75.0) | 2247 (96.6) | 46 (93.9) | |
| **HF Hospitals** | **Low-Performing N=147** | **Intermediate-Performing N=2863** | **Top-Performing N=105** | **P value for trend†** |
| HCAHPS Survey Responses | | | | |
| Response Rate, % | 30.0 (11.3) | 32.1 (8.5) | 33.0 (8.6) | 0.052 |
| No. of completed surveys | | | | <0.001 |
| < 100 | 9 (6.3) | 13 (0.5) | 0 | |
| 100 – 299 | 69 (47.9) | 252 (8.8) | 7 (6.7) | |
| ≥ 300 | 66 (45.8) | 2587 (90.7) | 98 (93.3) | |

Abbreviations: AMI: Acute Myocardial Infarction; HF: Heart Failure

Values in tables represent mean (standard deviation) for continuous variables and n (%) for categorical variables

* HCAHPS data were not reported by 5 AMI (all intermediate-performing) and 12 HF (1 low-performing, 10 intermediate-performing, and 1 top-performing) hospitals.

† P value for trend was derived from the Mantel-Haenszel test of trend for categorical variables and linear regression for continuous variables.

Table 3.

Unadjusted and Adjusted Ratings of Patient Satisfaction According to Hospital Performance on Process Measures

| AMI Hospital | Low-Performing (1) N=88 | Intermediate-Performing (2) N=2330 | Top-Performing (3) N=49 | P value (1 vs. 2) | P value (1 vs. 3) |
|---|---|---|---|---|---|
| Definitely Recommend | | | | | |
| Unadjusted | 59.7 | 66.5 | 72.6 | <0.001 | <0.001 |
| Adjusted† | 58.8 | 63.9 | 68.8 | <0.001 | <0.001 |
| Rating of 9 or 10 | | | | | |
| Unadjusted | 58.0 | 62.2 | 67.4 | <0.001 | <0.001 |
| Adjusted† | 56.7 | 60.7 | 64.9 | <0.001 | <0.001 |
| **HF Hospital** | **Low-Performing (1) N=147** | **Intermediate-Performing (2) N=2863** | **Top-Performing (3) N=105** | **P value (1 vs. 2)** | **P value (1 vs. 3)** |
| Definitely Recommend | | | | | |
| Unadjusted | 62.7 | 66.5 | 69.6 | <0.001 | <0.001 |
| Adjusted† | 61.3 | 64.0 | 66.8 | <0.001 | <0.001 |
| Rating of 9 or 10 | | | | | |
| Unadjusted | 60.1 | 62.6 | 65.4 | 0.008 | <0.001 |
| Adjusted† | 57.8 | 61.1 | 63.6 | <0.001 | <0.001 |

Abbreviations: AMI: Acute Myocardial Infarction; HF: Heart Failure

† Patient satisfaction ratings are adjusted for annual admission volume (per 1000), number of beds (<100, 100–400, >400), nurse full-time equivalents per 1000 patient days, teaching status, ownership status (for-profit, non-profit), location (urban, rural), safety-net status, and census region (Midwest, Northeast, South, and West) (see eTable 4 for full model results).

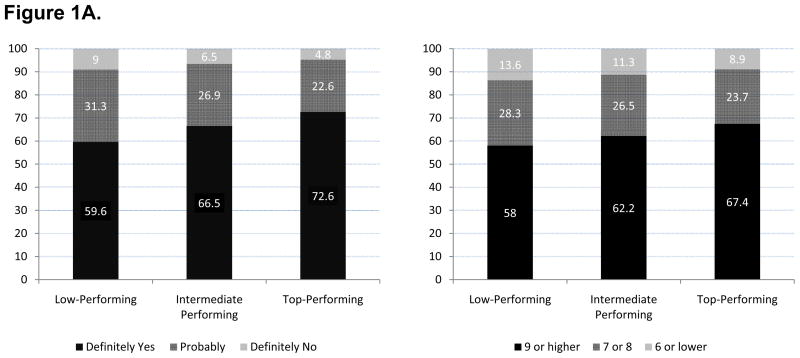

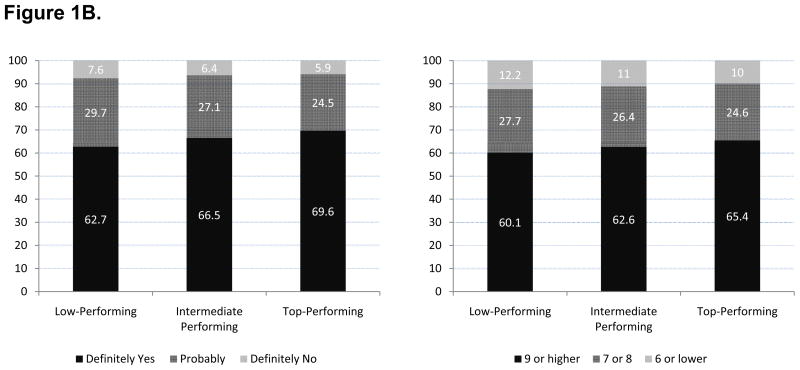

Overall, 66.4% of respondents at AMI hospitals reported that they would definitely recommend the hospital in which they received their care to family and friends, and 62.2% rated the care they received as high quality (9 or 10). On average, low-performing AMI and HF hospitals scored significantly lower on both of these measures of patient satisfaction than intermediate and top-performing hospitals (Figure 1A and 1B, and Table 3; P<0.01 for all comparisons). These differences remained significant and largely unchanged after adjustment for several hospital characteristics, with adjusted differences in satisfaction ratings as large as 10 percentage points (Table 3). Importantly, fewer nurse FTEs per 1000 patient days, larger bed size, and for-profit ownership were independently associated with lower patient satisfaction (eTable 4; P<0.001 for each comparison). The relationship between patient satisfaction ratings and hospital performance persisted in sensitivity analyses that used alternative definitions of low performance (bottom quintile or bottom quartile of performance for 3 consecutive years; eTable 5).

Figure 1.

Figure 1A Unadjusted Ratings of Overall Patient Satisfaction at Low-Performing, Intermediate and Top-Performing Hospitals on Composite AMI Process Measures during 2006–2008

Figure 1B Unadjusted Ratings of Overall Patient Satisfaction at Low-Performing, Intermediate and Top-Performing Hospitals on Composite HF Process Measures during 2006–2008

Table 4 shows the degree of agreement between performance on AMI and HF composite measures and overall patient satisfaction ratings. Among low-performing AMI hospitals, 51.4% were in the bottom quartile and 79.5% were in the bottom half of patient satisfaction, whereas among top-performing AMI hospitals, 51.0% were in the top quartile and 69.4% were in the top half of patient satisfaction (whether a patient would recommend the hospital to family or friends). Formal analyses of discordance using only 2008 process measure and patient satisfaction data revealed weak agreement between these two measures of hospital quality (weighted kappa statistic 0.19, Table 4). The discordance was much more pronounced for hospitals profiled on HF performance measures: 39.6% of low-performing HF hospitals were in the top half of patient satisfaction ratings, whereas 40% of top-performing HF hospitals were in the bottom half (using the same rating). Agreement between patient satisfaction ratings and HF performance measures was even weaker (weighted kappa statistic 0.07, Table 4). These findings were similar when we repeated the analyses with the global rating of the hospital on a scale of 0 to 10 (Table 4).

Table 4.

Agreement Between Hospitals’ Performance on Composite Process Measures & Patient Satisfaction

| AMI Hospitals | Low-Performing N=88 | Intermediate-Performing N=2330 | Top-Performing N=49 | Weighted Kappa† (95% CI) |
|---|---|---|---|---|
| Definitely Recommend Hospital | | | | 0.19 (0.16–0.21) |
| Top 25% | 9 (10.2) | 555 (23.9) | 25 (51.0) | |
| Top 50% | 18 (20.5) | 1130 (48.6) | 34 (69.4) | |
| Bottom 25% | 45 (51.4) | 568 (24.4) | 9 (18.4) | |
| Rating of 9 or 10 | | | | 0.13 (0.10–0.16) |
| Top 25% | 15 (17.0) | 621 (26.7) | 24 (48.9) | |
| Top 50% | 33 (37.5) | 1205 (51.7) | 36 (73.5) | |
| Bottom 25% | 38 (43.2) | 600 (25.8) | 9 (18.4) | |
| **HF Hospitals** | **Low-Performing N=147** | **Intermediate-Performing N=2863** | **Top-Performing N=105** | |
| Definitely Recommend Hospital | | | | 0.07 (0.04–0.09) |
| Top 25% | 29 (20.1) | 690 (24.2) | 34 (32.4) | |
| Top 50% | 57 (39.6) | 1377 (48.3) | 63 (60.0) | |
| Bottom 25% | 60 (41.7) | 713 (25.0) | 17 (16.2) | |
| Rating of 9 or 10 | | | | 0.06 (0.03–0.08) |
| Top 25% | 31 (21.5) | 687 (24.1) | 33 (31.4) | |
| Top 50% | 63 (43.8) | 1370 (48.0) | 63 (60.0) | |
| Bottom 25% | 49 (34.0) | 707 (24.8) | 18 (17.1) | |

Abbreviations: AMI: Acute Myocardial Infarction; HF: Heart Failure; 95% CI: 95 percent confidence interval

† The kappa statistic is based on the agreement between hospital satisfaction ratings for 2008 (categorized into quartiles) and hospital process performance measures for 2008 (categorized into quartiles).

DISCUSSION

We found that hospitals that consistently perform poorly on cardiac process measures also perform poorly on patient satisfaction, suggesting that patients perceive poor-quality clinical care. The difference in overall satisfaction between hospital categories remained significant even after adjustment for hospital characteristics previously known to influence patient satisfaction. Although patient satisfaction ratings were lower on average at low-performing hospitals than at better-performing hospitals, there was evidence of discordance between performance on process measures and patient satisfaction ratings, especially for HF. Several of our findings are important and merit further discussion.

While several studies have reported on the association between clinical process measures and patient satisfaction,8, 18–20 our study focused specifically on hospitals with consistently poor performance in cardiac care. Our study reiterates the findings of our previous work showing that consistently low-performing cardiac hospitals differ from better-performing hospitals with regard to hospital structure and organization: these hospitals are smaller, rural facilities that are predominantly concentrated in the South, and they have higher risk-adjusted mortality.6 The current analyses add to these findings by demonstrating that poor process measure adherence is also associated with lower patient satisfaction among surviving patients at these hospitals. Together, the two studies suggest that there is a discrete group of hospitals with consistently poor process measure adherence, consistently high risk-adjusted mortality, and consistently poor patient satisfaction, and they further strengthen the case for quality improvement at these hospitals.

Based on these results, one might argue that quality improvement initiatives focused on this discrete group of hospitals could theoretically magnify improvements in healthcare and positively affect care for vulnerable patients. However, pay for performance programs as currently envisioned in the Patient Protection and Affordable Care Act4 may fall short of this objective. Low-performing hospitals may be disadvantaged if they lack the resources necessary to engage in quality improvement efforts. By rewarding top performance or net improvement and penalizing low-performing hospitals, pay for performance could worsen disparities and adversely affect care for the poor, underserved, and minority patients who seek care at these hospitals.21 While a recent study using data from the Premier initiative has challenged these concerns,22 hospitals that participated in Premier were financially secure, with greater ability and commitment toward quality improvement than the average hospital.23 Thus, policy makers would need to go beyond current pay for performance incentives to spur improvement in quality at these low-performing hospitals. To accomplish that, a firm understanding of the factors associated with poor performance at these hospitals (e.g., organizational values, leadership, and communication),24 of their community benefit, and of the alternative choices available to patients who seek care at these hospitals is necessary to better inform policy.

Under the proposed value-based purchasing plan, patient satisfaction is likely to be an integral part of hospital reimbursement. While patient satisfaction is an important quality metric from a patient’s perspective, its inclusion as a performance measure may pose some problems. First, unlike process measures (e.g., prescribing aspirin to patients with AMI), patient satisfaction is not a discrete intervention; it is a complex multidimensional construct whose correlates are not fully understood.25 Including satisfaction measures in incentive payments presupposes that hospitals with lower satisfaction scores “know” how to improve satisfaction at their hospitals. Although, based on this study, it is tempting to think that greater adherence to process measures might improve patient satisfaction with care, unmeasured patient, hospital, or physician characteristics could certainly explain the association we observed. Additionally, many hospital characteristics associated with patient satisfaction are not easily modifiable (urban location, non-profit status, number of beds), and scant data exist to show whether hospital investment in modifiable factors (e.g., greater nurse staffing) will result in improved quality of care. Thus, without a careful understanding of the determinants of patient satisfaction, such a proposal might result in misguided investments by hospitals in programs that may not be effective at improving patient satisfaction or the overall quality of care.

The relatively robust association that we observed between process measures and satisfaction is not consistent across all studies.8, 18–20 Some of these differences are likely due to differences in study design. Because we used three consecutive years of process measure data instead of a single year, the low-performing hospitals in our study are, by definition, an extreme group of low-quality hospitals. Therefore, it is not surprising that the differences in patient satisfaction scores observed in our study are larger than those previously reported.

Although we found that patient satisfaction ratings were lower on average at low-performing hospitals, satisfaction ratings varied within hospital groups, indicating that some low-performing hospitals had high patient satisfaction ratings and vice versa. This was especially true for HF: nearly 40% of low-performing HF hospitals had better than average patient satisfaction ratings, and 40% of top-performing HF hospitals had below average patient satisfaction ratings. The observed association between hospital performance and patient satisfaction notwithstanding, these findings illustrate that process measures and satisfaction ratings measure relatively distinct facets of hospital quality and support the notion that evaluation of hospital quality should be based on multiple measures. Future studies aimed at a better understanding of the factors that might explain the variability in patient satisfaction ratings at low-performing hospitals are warranted.

Our study should be interpreted in light of the following limitations. First, our analyses were based on data that are self-reported by hospitals and may be subject to “gaming”; CMS plans to expand its current auditing practices to improve reliability. Second, our choice of classifying hospitals into low, intermediate, and top-performing groups is somewhat arbitrary. To ensure the robustness of our results, we conducted sensitivity analyses using alternate cut points, which yielded similar results. Third, we had access only to aggregate hospital-level HCAHPS survey data; patient-level data were not available, which prevented us from restricting the assessment of satisfaction to patients with cardiovascular disease. Despite that, we found lower hospital-wide patient satisfaction at low-performing cardiac hospitals, suggesting broader issues in the organization and delivery of care at these facilities. Fourth, our findings do not establish a causal relationship between poor hospital performance on process measures and lower satisfaction ratings at these hospitals. Finally, overall survey response rates were low, and they were lower still at low-performing hospitals. HCAHPS scores are adjusted for survey mode and patient-mix prior to public reporting, which the CMS validation study found sufficient to account for non-response. Any residual bias would only strengthen our findings, since non-response is negatively associated with satisfaction.13

In conclusion, our study found that hospitals with consistently low performance on cardiac process measures have, on average, lower satisfaction ratings than better-performing hospitals, suggesting a dire need to improve quality of care at this easily identifiable group of hospitals. However, the two measures are discordant in profiling hospital quality.

Supplementary Material

WHAT IS KNOWN

Hospitals that are consistently poor performers on process-of-care measures for cardiac diseases are structurally distinct from better-performing hospitals (they are smaller, have fewer nurses per patient, and are more likely to be rural, safety-net hospitals)

Risk-adjusted mortality at low-performing cardiac hospitals is significantly higher than at better-performing hospitals

Little is known about the association between hospital performance and patient satisfaction ratings

WHAT THIS STUDY ADDS

Average patient satisfaction ratings were lower at low-performing hospitals than at intermediate and top-performing hospitals, after adjusting for hospital characteristics

Despite this, patient satisfaction ratings vary within hospital performance groups: some low-performing hospitals have better-than-average satisfaction ratings, and some top-performing hospitals have below-average satisfaction ratings

Acknowledgments

SOURCES OF FUNDING: Dr. Saket Girotra is a Fellow in the Division of Cardiovascular Diseases, Department of Medicine, at the University of Iowa. Dr. Peter Cram is supported by a K24 award from the National Institute of Arthritis and Musculoskeletal and Skin Diseases (AR062133) and by the Department of Veterans Affairs. Dr. Ioana Popescu is supported by a K08 award from the National Heart, Lung, and Blood Institute (NHLBI, HL095930-01). This work is also funded in part by R01 HL085347 from the NHLBI and R01 AG033035 from the National Institute on Aging at the National Institutes of Health (Dr. Peter Cram).

Footnotes

DISCLOSURES: None

References

1. Crossing the quality chasm: a new health system for the 21st century. The Institute of Medicine. National Academy Press; 2001.

2. McGlynn EA, Asch SM, Adams J, Keesey J, Hicks J, DeCristofaro A, Kerr EA. The quality of health care delivered to adults in the United States. N Engl J Med. 2003;348(26):2635–45. doi: 10.1056/NEJMsa022615.

3. Jha AK, Li Z, Orav EJ, Epstein AM. Care in U.S. hospitals--the Hospital Quality Alliance program. N Engl J Med. 2005;353(3):265–74. doi: 10.1056/NEJMsa051249.

4. Patient Protection and Affordable Care Act, P.L. 111–148. http://frwebgate.access.gpo.gov/cgi-bin/getdoc.cgi?dbname=111_cong_bills&docid=f:h3590enr.txt.pdf. Accessed December 4, 2011.

5. Lindenauer PK, Remus D, Roman S, Rothberg MB, Benjamin EM, Ma A, Bratzler DW. Public reporting and pay for performance in hospital quality improvement. N Engl J Med. 2007;356(5):486–496. doi: 10.1056/NEJMsa064964.

6. Popescu I, Werner RM, Vaughan-Sarrazin MS, Cram P. Characteristics and outcomes of America’s lowest-performing hospitals: an analysis of acute myocardial infarction hospital care in the United States. Circ Cardiovasc Qual Outcomes. 2009;2(3):221–227. doi: 10.1161/CIRCOUTCOMES.108.813790.

7. Davidson G, Moscovice I, Remus D. Hospital size, uncertainty, and pay-for-performance. Health Care Financ Rev. 2007;29(1):45–57.

8. Jha AK, Orav EJ, Zheng J, Epstein AM. Patients’ perception of hospital care in the United States. N Engl J Med. 2008;359(18):1921–1931. doi: 10.1056/NEJMsa0804116.

9. Hospital Compare Database. http://www.hospitalcompare.hhs.gov. Accessed December 4, 2011.

10. Reporting Hospital Quality Data for Annual Payment Update (RHQDAPU). http://www.qualitynet.org/dcs/ContentServer?cid=1138115987129&pagename=QnetPublic%2FPage%2FQnetTier2&c=Page. Accessed December 4, 2011.

11. Early intravenous then oral metoprolol in 45 852 patients with acute myocardial infarction: randomised placebo-controlled trial. Lancet. 2005;366(9497):1622–1632. doi: 10.1016/S0140-6736(05)67661-1.

12. Peterson ED, DeLong ER, Masoudi FA, O’Brien SM, Peterson PN, Rumsfeld JS, Shahian DM, Shaw RE. ACCF/AHA 2010 Position Statement on Composite Measures for Healthcare Performance Assessment. Circulation. 2010;121(15):1780–1791. doi: 10.1161/CIR.0b013e3181d2ab98.

13. Elliott MN, Edwards C, Angeles J, Hambarsoomians K, Hays RD. Patterns of unit and item nonresponse in the CAHPS Hospital Survey. Health Serv Res. 2005;40:2096–2119. doi: 10.1111/j.1475-6773.2005.00476.x.

14. Goldstein E, Farquhar M, Crofton C, Darby C, Garfinkel S. Measuring hospital care from the patients’ perspective: an overview of the CAHPS Hospital Survey development process. Health Serv Res. 2005;40:1977–1995. doi: 10.1111/j.1475-6773.2005.00477.x.

15. O’Malley AJ, Zaslavsky AM, Elliott MN, Zaborski L, Cleary PD. Case-mix adjustment of the CAHPS Hospital Survey. Health Serv Res. 2005;40:2162–2181. doi: 10.1111/j.1475-6773.2005.00470.x.

16. Rural Urban Commuting Area Codes. http://www.ers.usda.gov/Data/RuralUrbanCommutingAreaCodes/. Accessed December 4, 2011.

17. American Hospital Association. Annual Survey Database. http://www.ahadata.com/ahadata/html/AHASurvey.html. Accessed December 4, 2011.

18. Chang JT, Hays RD, Shekelle PG, MacLean CH, Solomon DH, Reuben DB, Roth CP, Kamberg CJ, Adams J, Young RT, Wenger NS. Patients’ global ratings of their health care are not associated with the technical quality of their care. Ann Intern Med. 2006;144(9):665–672. doi: 10.7326/0003-4819-144-9-200605020-00010.

19. Glickman SW, Boulding W, Manary M, Staelin R, Roe MT, Wolosin RJ, Ohman EM, Peterson ED, Schulman KA. Patient satisfaction and its relationship with clinical quality and inpatient mortality in acute myocardial infarction. Circ Cardiovasc Qual Outcomes. 2010;3(2):188–195. doi: 10.1161/CIRCOUTCOMES.109.900597.

20. Lee DS, Tu JV, Chong A, Alter DA. Patient satisfaction and its relationship with quality and outcomes of care after acute myocardial infarction. Circulation. 2008;118(19):1938–1945. doi: 10.1161/CIRCULATIONAHA.108.792713.

21. Werner RM, Goldman LE, Dudley RA. Comparison of change in quality of care between safety-net and non-safety-net hospitals. JAMA. 2008;299(18):2180–2187. doi: 10.1001/jama.299.18.2180.

22. Jha AK, Orav EJ, Epstein AM. The effect of financial incentives on hospitals that serve poor patients. Ann Intern Med. 2010;153(5):299–306. doi: 10.7326/0003-4819-153-5-201009070-00004.

23. Werner RM. Does pay-for-performance steal from the poor and give to the rich? Ann Intern Med. 2010;153(5):340–341. doi: 10.7326/0003-4819-153-5-201009070-00010.

24. Curry LA, Spatz E, Cherlin E, Thompson JW, Berg D, Ting HH, Decker C, Krumholz HM, Bradley EH. What distinguishes top-performing hospitals in acute myocardial infarction mortality rates? A qualitative study. Ann Intern Med. 2011;154(6):384–390. doi: 10.7326/0003-4819-154-6-201103150-00003.

25. Plomondon ME, Magid DJ, Masoudi FA, Jones PG, Barry LC, Havranek E, Peterson ED, Krumholz HM, Spertus JA, Rumsfeld JS. Association between angina and treatment satisfaction after myocardial infarction. J Gen Intern Med. 2008;23(1):1–6. doi: 10.1007/s11606-007-0430-y.
