Abstract

The distractibility that older adults experience when listening to speech in challenging conditions has been attributed in part to reduced inhibition of irrelevant information within and across sensory systems. Whereas neuroimaging studies have shown that younger adults readily suppress visual cortex activation when listening to auditory stimuli, the extent to which declining inhibition in older adults results in reduced suppression or compensatory engagement of other sensory cortices is unclear. The current functional magnetic resonance imaging study examined the effects of age and stimulus intelligibility in a word listening task. Across all participants, auditory cortex was engaged when listening to words. However, increasing age and declining word intelligibility had independent and spatially similar effects: both were associated with increasing engagement of visual cortex. Visual cortex activation was not explained by age-related differences in vascular reactivity; rather, auditory and visual cortices were functionally connected across word listening conditions. The nature of this correlation changed with age: younger adults deactivated visual cortex when activating auditory cortex, middle-aged adults showed no relation, and older adults synchronously activated both cortices. These results suggest that age and stimulus integrity are additive modulators of crossmodal suppression and activation.

Keywords: aging, crossmodal, fMRI, speech perception, vascular reactivity

Introduction

Older adults have difficulty recognizing speech, particularly in challenging listening conditions (Gordon-Salant 1986; Dubno et al. 2002, 2003). This difficulty appears to stem from declines in the peripheral auditory system (Humes 1996) as well as the central auditory system (Zekveld et al. 2006; Eckert, Walczak, et al. 2008). Speech recognition declines may also result from changes in neural systems that direct attention. Indeed, aging is associated with increased distractibility (Connelly et al. 1991; Carlson et al. 1995; May 1999) which, according to the Inhibitory Deficit Hypothesis (Hasher and Zacks 1988; Gazzaley and D'Esposito 2007), reflects reduced inhibitory control within (Gazzaley et al. 2005) and across sensory systems (Laurienti et al. 2006; Peiffer et al. 2007). Thus, age-related declines in speech recognition may be due in part to older adults' diminished ability to inhibit both irrelevant auditory and non-auditory information.

Younger adults have been shown to readily suppress visual cortex activity when attending to auditory stimuli such as tones (Hairston et al. 2008), words (Yoncheva et al. 2010), and short melodies (Johnson and Zatorre 2005, 2006). This suppression follows from work by Haxby et al. (1994) showing that selective attention to a particular sensory modality is associated with decreased activity in regions that are responsive to other sensory modalities. For example, Kawashima et al. (1995) found that young adults suppressed visual cortex activation during a tactile task both when their eyes were kept open and closed. Likewise, visual suppression during an auditory task has been shown to occur whether or not visual stimuli are presented (Johnson and Zatorre 2005), suggesting that younger adults are adept at selectively attending and therefore inhibiting task-irrelevant crossmodal information whether externally or internally represented. Thus, suppression of task-irrelevant visual cortices may prevent increases in effort or declines in performance that are associated with divided attention or may limit distractibility, similar to wanting to close one's eyes while listening to difficult speech. The current study aimed to investigate to what extent task-irrelevant visual cortex suppression is dependent upon age and task difficulty when listening to speech.

Age-related changes in the control of visual cortex activation have been reported in previous research. Atypical activation has been observed when older adults view pictures of faces while ignoring scenes or vice versa (Gazzaley et al. 2005; Rissman et al. 2009) and when listening to tones (Peiffer et al. 2009). However, it is not known if older adults exhibit a crossmodal pattern of suppression during speech recognition like younger adults or if they exhibit atypical visual cortex activation. It is possible that changes in perceptual function (Murphy et al. 1999) and/or changes in task demands modulate these effects.

Increasing task difficulty may require additional attentional resources, thereby limiting the availability of neural resources that typically provide inhibitory control and thus resulting in distractibility to disinhibited irrelevant stimuli. Older adults tend to exhibit more difficulty performing tasks than younger adults, especially with speeded responding (Salthouse and Somberg 1982). Younger adults exhibit neurobiological effects similar to those of older adults when difficulty is equated between age groups. For example, in a unimodal visual task in which participants attend to images of faces and ignore scenes or vice versa, younger adults under increased task difficulty exhibited reduced suppression of task-irrelevant cortical regions similar to older adults performing under easier task conditions (i.e., increased engagement of the parahippocampal place area when attending to faces or the fusiform face area when attending to scenes; Rissman et al. 2009).

Such age-related changes in visual cortex activation have even been found in a unimodal aural word recognition task, pointing to an interaction between deficits in aging and task difficulty on the degree of crossmodal engagement. For example, Eckert, Walczak, et al. (2008) observed increased frontal activity in older adults when they correctly repeated low-pass filtered words in relatively easy listening conditions. In contrast, younger adults were more likely to engage these regions when they incorrectly repeated these words in more challenging listening conditions. While it was not the focus of the study, a visual cortex region also exhibited this pattern of results. This finding suggests that visual cortex activation is dependent on both age and word intelligibility. The current experiment tests this hypothesis using a word listening task and examines to what extent changes in visual cortex activation are tied to changes in auditory processing.

The aim of this functional magnetic resonance imaging (fMRI) study was to investigate the extent to which listening difficulty and age modulate visual cortex (de)activation. Adults 19–79 years old listened to words that were band-pass filtered to vary intelligibility. We predicted that 1) younger adults would deactivate visual cortex during word listening compared with baseline, and, following from the Inhibitory Deficit Hypothesis, 2) increasing age would be associated with reduced suppression and therefore increasing visual cortex activation. Moreover, we predicted 3) similar effects of word intelligibility such that participants across the age range would demonstrate reduced visual cortex suppression with decreasing intelligibility (increased task difficulty). Because we have not observed differences in the parametric slope of auditory cortex activity with age in previous studies (e.g., Eckert, Walczak, et al. 2008; Harris et al. 2009), we did not predict interaction effects between word intelligibility and age in auditory cortex.

To rule out an alternative explanation that apparent failures of suppression may be explained by age-related changes in vascular reactivity (D'Esposito et al. 2003), participants also performed a respiration task in which their breathing was physiologically monitored as they were scanned (Thomason and Glover 2008). This is crucial in the current study because visual cortical regions are especially susceptible to age differences in vascular reactivity (Ross et al. 1997; Buckner et al. 2000). In addition, the hemodynamic response function (HRF) of older adults can be temporally delayed compared with younger adults (Taoka et al. 1998). A delayed HRF could manifest as an apparent suppression effect given that different points of the HRF curve could be sampled in a sparse sampling design. Thus, to control for physiological age differences in the interpretation of results, a respiration task was used to estimate potential individual variations in HRF amplitude and lag effects on crossmodal function.

Finally, we predicted that 4) activation in auditory and visual areas would be functionally correlated, thereby reflecting the engagement of a crossmodal system that has been observed in a resting state task with young adults (Eckert, Kamdar, et al. 2008). In the current study, we examined to what extent areas associated with auditory and visual processing are synchronously (de)activated and how this relationship changes with age. For younger adults who demonstrate suppression of visual cortex during auditory tasks, we predicted a negative relation between the engagement of auditory and visual cortices. If aging is associated with decreasing visual cortex suppression, this negative connectivity may weaken, degrade entirely, or manifest as a positive functional relation in older adults.

Materials and Methods

Participants

Thirty-six adults aged 19–79 years (M = 50.5, standard deviation [SD] = 21.0; 13 females) participated in this study. All were monolingual native speakers of American English. Their average years of education was 18.2 years (SD = 2.9), average socioeconomic status was 52.4 (SD = 10.0; Hollingshead 1975), and degree of handedness on the Edinburgh handedness questionnaire (Oldfield 1971) was 91.4 (SD = 9.6, where 100 is maximally right handed and −100 is maximally left handed). Age was not significantly correlated with any of these measures (all P > 0.05). Three age groups were constructed for a subset of analyses to clarify the pattern of results we find with our continuous age predictor: younger (<40 years old, n = 15), older (>61 years old, n = 15), and middle aged (n = 6).

Each participant completed the Mini Mental State Examination (Folstein et al. 1983) and had 3 or fewer errors, indicating little or no cognitive impairment (Tombaugh and McIntyre 1992). Participants provided written informed consent before participating in this Medical University of South Carolina Institutional Review Board approved study.

Audiometry

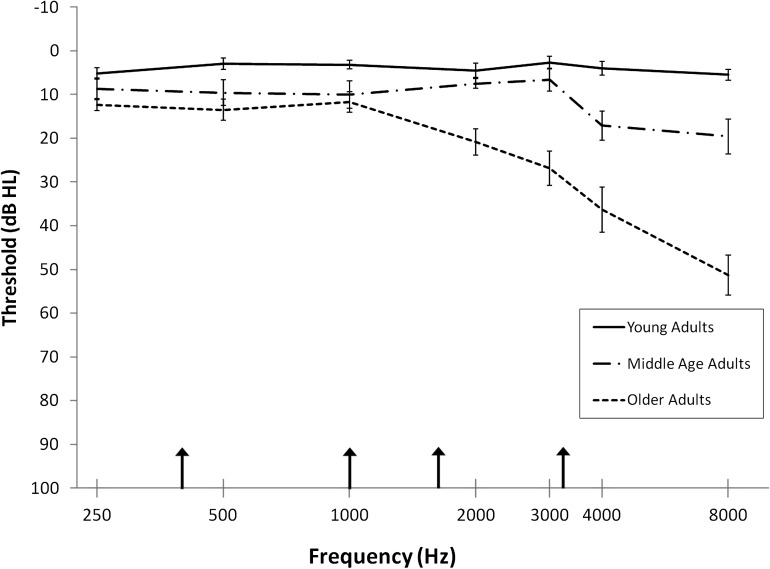

Pure-tone thresholds at standard intervals between 250 and 8000 Hz were measured with a Madsen OB922 clinical audiometer calibrated to appropriate ANSI standards (American National Standards Institute 2004) using TDH-39 headphones. Participants age 61 and younger had normal hearing (defined as thresholds ≤ 25 dB HL at 250–8000 Hz). Older participants' pure-tone thresholds varied from normal hearing to moderate high-frequency hearing loss. Mean pure-tone thresholds for 3 groups of adults (young, middle-age, and older) are depicted in Figure 1. As described later, a masking noise and band-pass filtering of speech reduced individual differences in word audibility.

Figure 1.

Average pure-tone thresholds (dB HL) for young (n = 15), middle age (n = 6), and older (n = 15) adults are presented for the average of left and right ears. Error bars represent the standard error of the mean. Arrows along the abscissa indicate the upper cutoff frequency of the band-pass filter applied to the speech (400, 1000, 1600 and 3150 Hz).

Materials

The stimuli for this study included 120 English words selected from a database of consonant-vowel-consonant words, which were spoken by a male native English speaker (Dirks et al. 2001; see Supplementary Table 1). Previous studies have shown that the properties of words and phonemes can alter the ease with which words may be recognized (e.g., Luce and Pisoni 1998). Because these properties have been shown to differentially impact various age groups (Sommers 1996), we controlled for several lexical and phonemic properties of our stimuli. Specifically, the words presented in each of the 4 filter conditions did not differ significantly (by a one-way analysis of variance [ANOVA] and pairwise t-tests) on any of the following average measures: word familiarity, word frequency (Spoken Word Frequency, Kucera–Francis, and SUBTLEXUS), phonological neighborhood density, mean frequency of phonological neighbors, frequency of biphonemic units averaged across the word (weighted and unweighted by word frequency), articulation duration of each stimulus, mean count of voiced/voiceless phonemes per word, and mean count of stop/continuant phonemes per word. All measures were obtained from 3 databases (except for the last 3 measures, which were calculated by the first author): 1) a database of 400 words used in a prior study with older adults of the neighborhood activation model (Dirks et al. 2001), 2) the Irvine Phonotactic Online Dictionary (IPhOD) database (Vaden et al. 2009), and 3) a Spoken Word Frequency Database for American English (Pastizzo and Carbone 2007), all of which pool word and phoneme metrics from various established sources (e.g., Kucera and Francis 1967; Simpson et al. 2002; Brysbaert and New 2009). For a complete listing of means, SDs, and statistical tests, see Supplementary Table 2.

To manipulate word intelligibility, each word was presented in only 1 of 4 band-pass filter conditions in which the upper cutoff frequencies were 400 (most speech information removed), 1000, 1600, and 3150 Hz (least speech information removed). Articulation Index calculations (Studebaker et al. 1993) and previous behavioral testing (Eckert, Walczak, et al. 2008) predicted that these cutoff frequencies would be approximately linearly related to word recognition when the upper cutoff frequency is plotted on a log scale. The lower cutoff frequency was fixed at 200 Hz (Eckert, Walczak, et al. 2008; Harris et al. 2009), which was greater than the average pitch (F0) across words (M = 122 Hz, SD = 27).

Procedures

Word Recognition Task

The word listening experiment used an event-related design in which each word was presented in 1 of the 4 filter conditions. The order of the conditions was pseudorandom within the presentation list such that no filter condition appeared twice in a row. Each participant received the same presentation list. A condition in which only background noise was presented (described below) was also included and pseudorandomly interspersed with the word trials. Eprime software (Psychology Software Tools, Pittsburgh, PA) and an IFIS-SA control system (Invivo, Orlando, FL) were used to control the presentation and timing of each trial in the scanner.

Each 8-s repetition time (TR) began with an image acquisition. During each word-presentation trial, a white crosshair was presented in the center of a projection screen display. Two seconds into the trial, a filtered word was presented (in 1 of the 4 filter conditions) at 75 dB SPL. One second later, the crosshair turned red and the participant responded by pressing the thumb button of an ergonomically designed button response keypad (MRI Devices Corp., Waukesha, WI) if they recognized the word and the index finger button if they did not. Participants were told that some words were more difficult to recognize than others but that they were encouraged to do their best to identify the word. Ten trials in which no word was presented were randomly interspersed throughout the experiment to serve as part of an implicit baseline and reduce the predictability of word onsets in this sparse sampling design. Thus, there was a total of 130 TRs (30 words in each of the 4 filter conditions and 10 no-word trials).

This task reduces participant focus on articulatory planning associated with overt production tasks or on semantic or sublexical processing associated with other types of semantic judgment and lexical decision tasks. Unlike with passive listening, however, this word recognition study ensured that participants were actively listening to stimuli while minimizing the potential for age-related differences in the ability to follow more complicated instructions throughout the experiment. Similar recognition judgment tasks have been successfully used in other studies of speech intelligibility (e.g., Davis and Johnsrude 2003; Obleser et al. 2007).

As described, pure-tone thresholds of older participants ranged from normal to moderately elevated, especially in the higher frequencies (Fig. 1). To reduce confounding effects of differences in word audibility across participants, words were presented in background noise that was spectrally shaped to elevate thresholds of all participants, similar to Dubno et al. (2006). One-third octave band levels of a broadband noise were set to produce estimated elevated thresholds (“masked thresholds”) of 20–25 dB HL from 250 to 2000 Hz and 30 dB HL at 3000 Hz (Hawkins and Stevens 1950). Measured thresholds in quiet for some older participants were higher than the estimated masked thresholds produced by the background noise. This occurred for 2 participants for thresholds between 250 and 1000 Hz, 5 participants for thresholds at 2000 Hz, and 6 participants for thresholds at 3000 Hz. For these participants, speech audibility in the unfiltered region may have been lower than for the other participants. Of course, this would not be a concern when band-pass filtering removed higher frequency speech information for all participants. A second computer was used to present the background noise continuously at 62.5 dB SPL, resulting in a signal-to-noise ratio of +12.5 dB. The words from the first computer were mixed with the broadband noise from the second computer at 2 s into each 8-s trial using an audio mixer and were delivered to 2 MR-compatible headphones (Sensimetrics, Malden, MA), diotically, so that the mixed speech and noise signal was presented to both ears. Signal levels were calibrated using a precision sound level meter (Larson Davis 800B, Provo, UT).

Respiration Task

A respiration experiment occurred subsequent to the word recognition task. Following Thomason and Glover (2008), participants viewed a screen that changed color from green to yellow to red. When the screen was green (6 TRs; 12 s), participants were instructed to breathe normally. When the screen turned yellow (1 TR; 2 s), participants prepared to hold their breath and when the screen turned red (6 TRs; 12 s), they held their breath. This sequence cycled 10 times. If participants were unable to hold their breath for this time, they were asked to hold their breath as long as was comfortable and then breathe normally. For this reason, as we discuss in the analysis section, we chose to determine onsets and durations of inhalations based on actual respiration measurements rather than assuming compliance with these specific instructions. Respiration was measured by placing an air cushion on the participant's abdomen and securing it with a Velcro belt. As the participant's abdomen rose with an inhalation, increased pressure was placed on the cushion registering as a positive deflection from baseline. The pressure placed on the cushion (signaling changes in respiration) was sampled at 100 Hz.

Image Acquisition

A sparse sampling design was used in which an entire volume was acquired once for each TR. This design provided a means to present each word when the scanner noise was turned off (Eckert, Walczak, et al. 2008; Harris et al. 2009). T2*-weighted functional images were acquired using an 8-channel SENSE head coil on a Philips 3-T scanner. A single shot echo-planar imaging (EPI) sequence covered the entire brain (40 slices with a 64 × 64 matrix, TR = 8 s, echo time [TE] = 30 ms, slice thickness = 3.25 mm, and acquisition time [TA] = 1647 ms).

The respiration experiment utilized a continuous acquisition paradigm. T2*-weighted functional images were acquired once every 2 s. A single shot EPI sequence covered the entire brain (36 slices with a 64 × 64 matrix, TR = 2 s, TE = 30 ms, slice thickness = 3.25 mm, and a TA = 1944 ms).

T1-weighted structural images were also collected to normalize the functional data using higher resolution anatomical information (160 slices with a 256 × 256 matrix, TR = 8.13 ms, TE = 3.7 ms, flip angle = 8°, slice thickness = 1 mm, and no slice gap).

Image Preprocessing

Study-Specific Template

A study-specific structural template was created to ensure that images for all participants were coregistered to a common coordinate space. The participants' structural images were used to normalize the functional data into a common coordinate space, which was derived from diffeomorphic transformation of the structural images. Unified segmentation and diffeomorphic image registration (DARTEL) were performed in SPM5 (Ashburner and Friston 2005; Ashburner 2007). The DARTEL procedure warps each participant's native space gray matter image to a common coordinate space, providing closely aligned coregistration across participants (Eckert et al. 2010). The realigned functional data were coregistered to each participant's T1-weighted image using a mutual information algorithm. The DARTEL normalization parameters for each T1-weighted image were then applied to the anatomically aligned functional data. The normalized data were smoothed with an 8 mm Gaussian kernel to ensure that they were normally distributed for parametric testing.

Word Listening

Functional images were preprocessed using SPM5 (http://www.fil.ion.ucl.ac.uk/spm). The Linear Model of the Global Signal method (Macey et al. 2004) was used to detrend the global mean signal fluctuations from the preprocessed images. Image volumes with significantly deviant signal (more than 2.5 SDs) relative to the global mean and with a significantly deviant number of voxels from their voxelwise mean across the run were identified and modeled as 2 nuisance regressors in the first-level analysis (Vaden et al. 2010). Using this method, 4.65% of the images were identified as significantly deviant. Two motion nuisance regressors, representing 3D translational and rotational motion, were calculated from the 6 realignment parameters generated in SPM via the Pythagorean Theorem.
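The reduction of the 6 realignment parameters to 2 motion regressors can be illustrated with a minimal Python sketch. It assumes that each regressor is the Euclidean norm (the Pythagorean Theorem in 3 dimensions) of the 3 translation or 3 rotation parameters at each volume, and that the input columns follow the order of an SPM realignment parameter file (translations first, then rotations). The function name and the example values are hypothetical, and whether displacement was referenced to the first volume or computed in some other way is not specified here.

```python
import numpy as np

def motion_summary_regressors(realign_params):
    """Collapse 6 realignment parameters into 2 summary regressors.

    realign_params: array of shape (n_volumes, 6). Columns 0-2 are assumed
    to be x/y/z translations and columns 3-5 the three rotations.
    Returns an (n_volumes, 2) array: [translation norm, rotation norm].
    """
    params = np.asarray(realign_params, dtype=float)
    if params.ndim != 2 or params.shape[1] != 6:
        raise ValueError("expected an (n_volumes, 6) array")
    # Pythagorean Theorem in 3D: sqrt(x^2 + y^2 + z^2) per volume
    translation = np.sqrt(np.sum(params[:, :3] ** 2, axis=1))
    rotation = np.sqrt(np.sum(params[:, 3:] ** 2, axis=1))
    return np.column_stack([translation, rotation])

# Hypothetical parameters for 3 volumes (not data from this study)
example = np.array([[0.0, 0.0, 0.0, 0.00, 0.00, 0.00],
                    [3.0, 4.0, 0.0, 0.00, 0.00, 0.00],
                    [1.0, 2.0, 2.0, 0.01, 0.02, 0.02]])
regressors = motion_summary_regressors(example)
```

Collapsing the parameters in this way trades directional information for fewer nuisance regressors, which preserves degrees of freedom in a sparse design with relatively few volumes.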

A first-level fixed-effects analysis was performed for each individual's images to estimate differences in activity across the 4 filter conditions. The model contained 4 parameters (1 for each filter condition), 4 regressors (2 motion regressors and 2 signal intensity regressors), and a constant vector. The model was convolved with the SPM5 canonical HRF and high-pass filtered at 128 s. Contrasts were derived in the first-level analysis to examine the relative activation of listening to words versus an implicit baseline, which included the silent trials. Additional contrasts examined both linearly increasing and decreasing effects of word intelligibility.

Second-level random-effects analyses were performed to examine the consistency of the effects across participants. An age covariate was included to model the linear effect of increasing age on cortical activation during word listening and to control for age differences in intelligibility analyses. The peak voxel threshold and cluster extent thresholds were each set at P < 0.01 uncorrected (Poline et al. 1997; Harris et al. 2009) for all reported analyses unless otherwise noted.

Based on the second-level aging results, a functional connectivity analysis was conducted. A functionally defined region of interest (ROI) was created from the intersection of the resulting visual cluster and a 16 mm diameter sphere, centered around a peak voxel within that cluster, to ensure that the sphere did not extend beyond the visual cortex result or the edges of the brain. Using MarsBar (Brett et al. 2002), the time series from this seed region was entered as a regressor into first-level analyses. Two additional nuisance regressors consisted of time series extracted from 20% probability gray and white matter masks to control for whole-brain correlations. The results depicted the areas across the entire brain in which activation was correlated with the seed region time series in a second-level group analysis containing age as a covariate.
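The construction of the seed region can be sketched in Python as the intersection of a cluster mask with a sphere around a peak voxel. This is only an illustration of the masking logic, not the MarsBar procedure itself. The function name, the array dimensions, the 2 mm voxel size, and the slab-shaped cluster are all hypothetical.

```python
import numpy as np

def sphere_cluster_roi(cluster_mask, peak_voxel, diameter_mm, voxel_size_mm):
    """Boolean ROI: voxels inside the cluster AND within a sphere at the peak."""
    cluster_mask = np.asarray(cluster_mask, dtype=bool)
    # Voxel index grids along each axis, shape (3, X, Y, Z)
    grid = np.indices(cluster_mask.shape).astype(float)
    peak = np.asarray(peak_voxel, dtype=float).reshape(3, 1, 1, 1)
    size = np.asarray(voxel_size_mm, dtype=float).reshape(3, 1, 1, 1)
    # Distance of every voxel from the peak, in millimeters
    dist_mm = np.sqrt(np.sum(((grid - peak) * size) ** 2, axis=0))
    sphere = dist_mm <= diameter_mm / 2.0
    # The intersection keeps the ROI inside the cluster (and thus the brain)
    return sphere & cluster_mask

# Hypothetical cluster: a slab 4 voxels thick in a 20 x 20 x 20 volume
cluster = np.zeros((20, 20, 20), dtype=bool)
cluster[8:12, :, :] = True
roi = sphere_cluster_roi(cluster, peak_voxel=(10, 10, 10),
                         diameter_mm=16.0, voxel_size_mm=(2.0, 2.0, 2.0))
```

Because the result is an intersection, every ROI voxel belongs to the original cluster even when the sphere is larger than the cluster along some axis.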

Vascular Reactivity

Six of the 36 participants were not included in this analysis because they did not complete the respiration task (n = 1; age 52), because physiological data were not recorded (n = 3; ages 19, 25, and 61), or because physiological data were too noisy to identify periods of breathing and holding (n = 2; ages 27 and 57). The functional data (preprocessed as described above) were analyzed by examining the blood oxygen level–dependent (BOLD) signal changes that were time locked to behaviorally defined periods of inhalation. Activation was time locked to respiratory events instead of stimulus events (screen color change) to provide greater sensitivity to increases in oxygenation, to mitigate effects in visual cortex that were solely linked to changes in the visual display, and to reduce the impact of age-related processing slowing that could differentially delay the behavior of older adults relative to the stimulus onsets. Because older adults are likely to have a delayed HRF (Taoka et al. 1998) and because of concerns with variability of the speed of responding to task demands, it was important to model each physiological event rather than contrasting average blocks of purported inhalations with average blocks of purported breath holding.

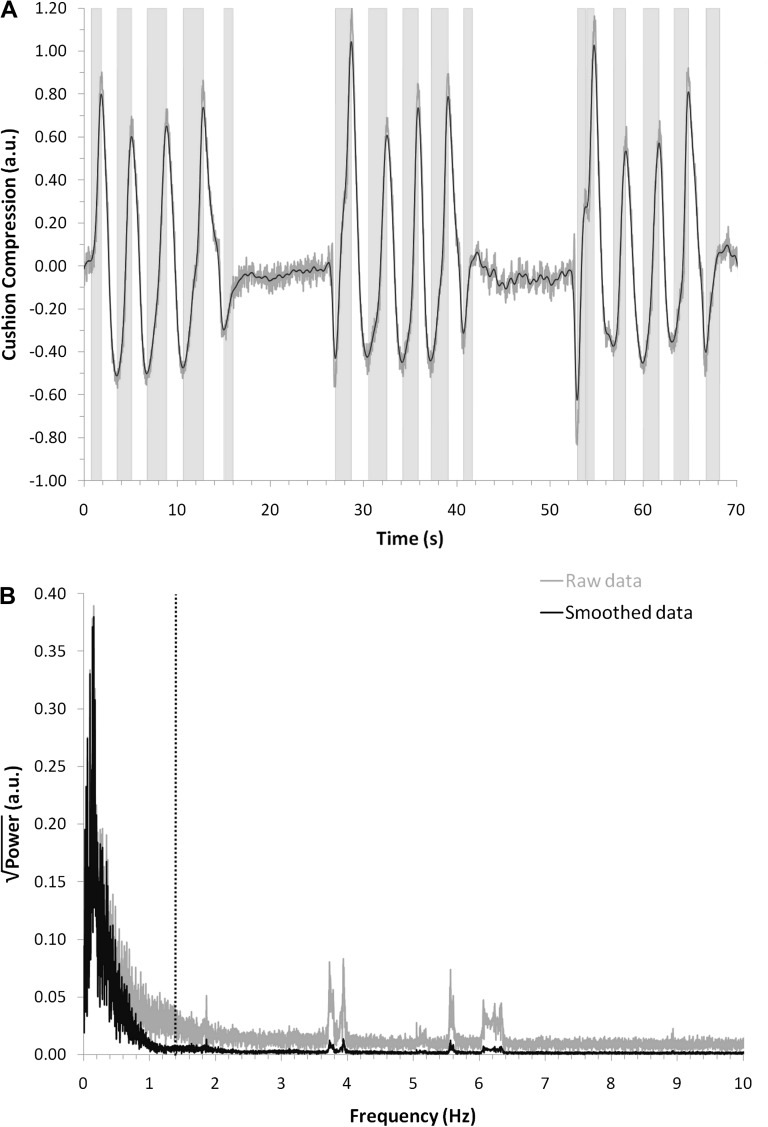

A custom MATLAB (Mathworks, Natick, MA) script was used to smooth the respiratory data (weighted average across an 800 ms window) and identify inhalation onsets and durations to be entered as parameters into the first-level model for each individual. An inhalation was defined as an increase in pressure on the cushion that persisted for more than 700 ms. On average, each participant had 48 (SD = 18) inhalations lasting 1.35 s (SD = 0.27). Figure 2A depicts the raw (gray line) and smoothed (black line) data from a representative participant (age 27), showing how the chosen smoothing and duration criteria classified periods as inhalations (light gray bands). Figure 2B presents a fast-Fourier transform of these data, which permits examination of how the smoothing (black line) and duration criteria (dotted vertical line) ignore information at frequencies that may have resulted from other physiological events and noise. These figures depict that, even if changes were made to the specific cutoff criteria, the data comprise high-powered low-frequency cycles (respiration) rather than high-frequency noise.
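The classification criteria described above can be illustrated with a minimal Python sketch. This is not the custom MATLAB script used in the study. It assumes triangular weights for the 800 ms weighted average (the actual weighting is not specified), treats any run of rising smoothed pressure longer than 700 ms as an inhalation, and uses a synthetic signal in place of real cushion data. The function name and all example values are hypothetical.

```python
import numpy as np

def detect_inhalations(pressure, fs=100.0, window_s=0.8, min_duration_s=0.7):
    """Return (onsets_s, durations_s) of rising-pressure runs > min_duration_s."""
    pressure = np.asarray(pressure, dtype=float)
    n = max(int(round(window_s * fs)), 1)
    if pressure.size <= n:
        raise ValueError("signal must be longer than the smoothing window")
    # Triangular weights (an assumption); drop the zero-valued endpoints
    weights = np.bartlett(n + 2)[1:-1]
    # Dividing by the smoothed vector of ones removes edge bias
    smoothed = (np.convolve(pressure, weights, mode="same")
                / np.convolve(np.ones_like(pressure), weights, mode="same"))
    rising = np.diff(smoothed) > 0
    # Pad with False so that run starts (+1) and ends (-1) can be located
    padded = np.concatenate(([False], rising, [False]))
    edges = np.diff(padded.astype(int))
    starts = np.flatnonzero(edges == 1)
    ends = np.flatnonzero(edges == -1)
    durations = (ends - starts) / fs
    keep = durations > min_duration_s
    return starts[keep] / fs, durations[keep]

# Synthetic 18 s trace sampled at 100 Hz: slow breathing plus 10 Hz jitter
t = np.arange(1800) / 100.0
trace = np.cos(2 * np.pi * 0.25 * t) + 0.05 * np.sin(2 * np.pi * 10.0 * t)
onsets, durations = detect_inhalations(trace)
```

Without the smoothing step, the 10 Hz jitter in this synthetic trace would break each inhalation into many short rising runs that fail the duration criterion, which is the reason for applying the two criteria together.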

Figure 2.

The respiration task results are presented from one representative participant (age 27). Gray lines represent the raw data and black lines represent the data after smoothing. (A) The first 3 breathing epochs of the respiration task are displayed with the degree of inhalation (as measured by cushion compression) plotted against time. Vertical gray bands represent the onset and duration of inhalations as determined by the classification algorithm. (B) A fast-Fourier transform (FFT) of the data in A. This FFT permits the selection of robust smoothing and duration criteria which remove variability due to noise or other physiological events. The area to the left of the dotted black line represents the included data based on the minimum inhalation duration (700 ms ≈ 1.4 Hz).

This inhalation parameter provided a means to assess differences in HRF amplitude across participants. The temporal derivative of the HRF was also specified as a parametric modulator. In this way, deviations in lag from the expected HRF could be compared for each participant. Two motion and 2 signal intensity nuisance regressors were also included in the model according to the procedure previously described.

A second-level analysis examined the extent to which differences in HRF amplitude and lag were related to age. To rule out the possibility that age-related differences in word listening were due to differences in vascular reactivity, the ROIs created from the word listening data were used to extract parameter estimates from the respiration data. These values were then entered as a separate regressor in the second-level word-listening analysis.

Results

Word Intelligibility Analyses

Effects of Word Intelligibility on Word Recognition

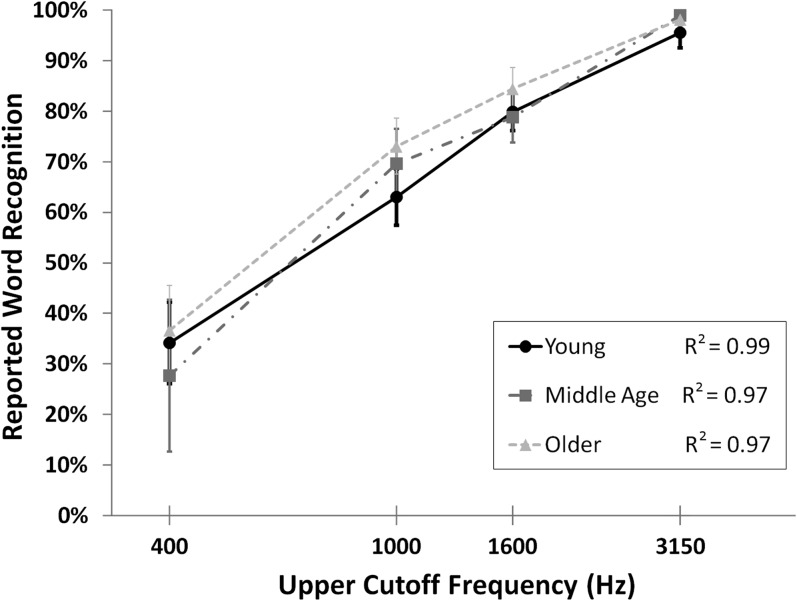

On average, participants indicated via button press that they had recognized (vs. not recognized) a word for 71.1% (SD = 17.1%) of responses. Figure 3 depicts reported word recognition for each of the 4 filter conditions, showing increased reported recognition with increasing word intelligibility (as determined by the 4 upper cutoff frequencies) across all ages. A repeated measures ANOVA confirmed that intelligibility had a significant influence on reported word recognition, F(3, 105) = 124.30, P < 0.001, with all pairwise comparisons P < 0.001. There were no significant correlations between word recognition and age across or within each condition, all |r| < 0.19, P > 0.29.

Figure 3.

The mean percent of words reported as recognized is plotted as a function of the upper cutoff frequency of the band-pass filter for each age group: young (n = 15), middle age (n = 6), and older adults (n = 15). The R² value across participants is 0.99. Error bars represent the standard error of the mean.

Because participants occasionally button pressed during no-word trials (M = 3.81 false alarms, SD = 3.34), we were able to calculate d-prime to estimate their rate of button pressing when a word had been presented (hits) after subtracting their bias to respond when a word had not been presented (false alarms during no-word trials). Age was not significantly correlated with this unbiased word detection measure across (r = −0.21) and within each filter condition (r = −0.16, −0.23, −0.11, −0.05 for 400, 1000, 1600, and 3150, respectively), all P > 0.05. The normalized false alarm rate component of the d-prime measure (bias to respond during no-word trials) was also not correlated with age, r = −0.06, P > 0.05.
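The d-prime measure can be illustrated with a short Python sketch that computes the difference between the normalized (z-transformed) hit rate and the normalized false alarm rate. The function name is hypothetical, and the log-linear correction (adding 0.5 to each count and 1 to each trial total) is an assumption made so that rates of exactly 0 or 1 remain finite. The correction used in the study, if any, is not stated.

```python
from statistics import NormalDist

def d_prime(hits, n_word_trials, false_alarms, n_noword_trials):
    """d' = z(hit rate) - z(false alarm rate), with a log-linear correction."""
    # Assumed correction: keeps both rates strictly between 0 and 1
    hit_rate = (hits + 0.5) / (n_word_trials + 1)
    fa_rate = (false_alarms + 0.5) / (n_noword_trials + 1)
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    return z(hit_rate) - z(fa_rate)

# Hypothetical counts: 120 word trials and 10 no-word trials per participant
chance_level = d_prime(60, 120, 5, 10)    # equal corrected rates
more_sensitive = d_prime(100, 120, 2, 10)
```

When the corrected hit and false alarm rates are equal, as in the first call, d-prime is zero. Values above zero indicate that button presses were more likely when a word had been presented than when it had not.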

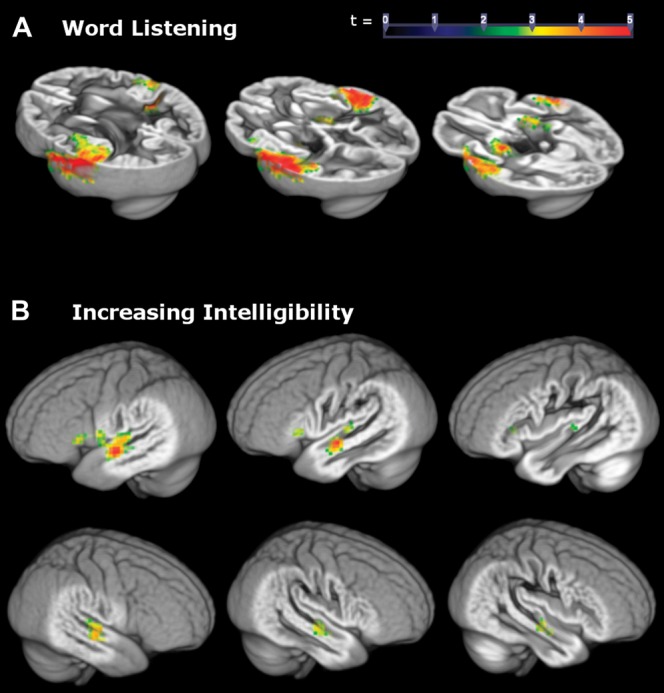

Effects of Word Intelligibility on BOLD Signal

Examination of the functional images during the task revealed that listening to words resulted in significantly increased activation throughout the temporal lobe, particularly in bilateral superior temporal sulcus (STS) and gyrus (STG) and primary auditory cortex (Heschl’s gyrus, HG). Activation was also observed in the inferior frontal gyrus (Fig. 4A). With parametrically increasing intelligibility (from the 400 to 3150 Hz filter condition), these regions exhibited increasing activation (Fig. 4B). Left and right regions of the anterolateral STG and STS were particularly responsive to word intelligibility.

Figure 4.

Clusters represent the magnitude of the relation between increased BOLD signal in temporal areas and (A) listening to words across all conditions and (B) increasing word intelligibility from the 400 to 3150 Hz condition. Peak and cluster extent thresholds were both set to P < 0.01.

Aging and Task Difficulty Analyses

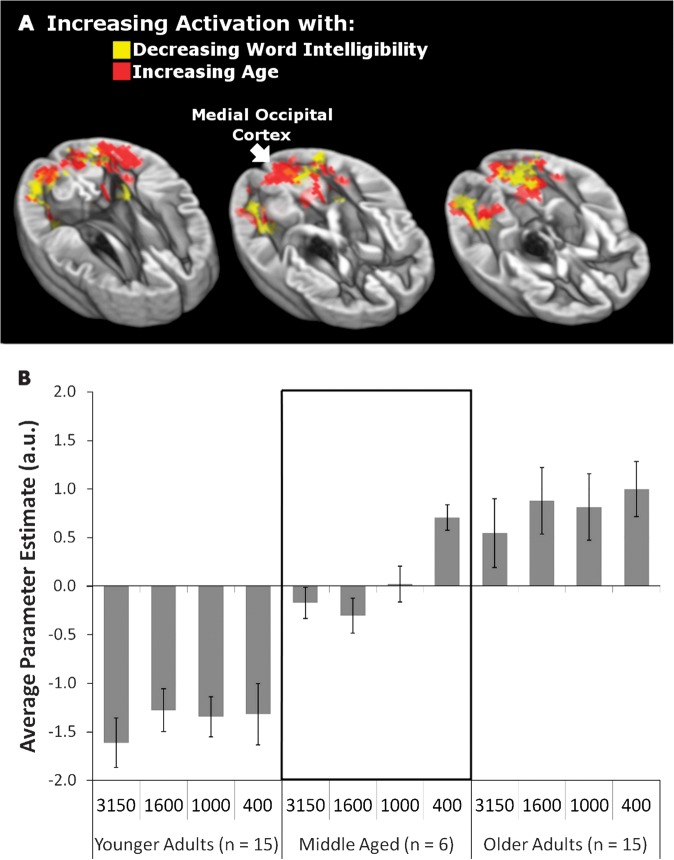

Based on evidence that effects similar to those observed in older adults can be observed in young adults by manipulating task demands (Rissman et al. 2009), we predicted that aging and decreasing intelligibility should have a similar impact on the engagement of visual cortex. To test this hypothesis, we examined the effects of parametrically increasing age and decreasing word intelligibility (while controlling for the other variable) on BOLD signal during word listening. In both cases, there was an increase in the activation of medial occipital cortex, bilateral inferior angular gyrus (iAG), and bilateral collateral sulci (Fig. 5A), and thus, increasing age and listening difficulty were related to increasing visual cortex activation.

Figure 5.

(A) Parametric increases in activation with decreasing intelligibility (red) overlap with increases in activation with increasing age (yellow). Peak and cluster extent thresholds were both set at P < 0.01. (B) Parameter estimates represent activation in the medial occipital cortex region (identified with a white arrow in A). The mean parameter estimates for activation in this region are presented separately for each filter condition within younger (n = 15), middle-aged (n = 6), and older groups (n = 15). Positive values indicate activation of medial occipital cortex and negative values indicate reduced activation relative to an implicit baseline mean. Error bars represent standard error of the mean.

When the parameter estimates from this analysis were extracted from an ROI defined at the medial occipital peak within the visual cortex cluster, younger adults appeared to deactivate this region below an implicit baseline, whereas older adults activated it during word listening. The transition between patterns of deactivation and activation began to emerge in middle age, with a change from visual cortex deactivation to activation occurring within this group for the most difficult listening condition (400 Hz). Figure 5B presents these values across filter conditions and 3 age groups: younger (<40 years old, n = 15), older (>61 years old, n = 15), and middle-aged (n = 6). Similar patterns were found for ROIs based on the entire visual cluster or other subpeaks within the visual cluster as well as for varying ROI sizes (8, 12, and 16 mm diameter spheres). Figure 5B also shows that the pattern of results in panel A (increasing listening difficulty with increasing visual cortex activation) holds within age groups. Thus, there appear to be effects of both age and word intelligibility on visual cortex activity.
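The ROI check described above (spheres of 8, 12, and 16 mm diameter centered on a peak) can be illustrated with a minimal Python sketch. It is not the analysis code used in this study: the function names `sphere_mask` and `roi_mean`, the 2 mm isotropic voxel size, the grid dimensions, the peak index, and the simulated volume are all assumptions introduced for illustration.

```python
import numpy as np

def sphere_mask(shape, center_vox, diameter_mm, voxel_size_mm):
    """Boolean mask of voxels whose centers lie within a sphere around center_vox."""
    radius_mm = diameter_mm / 2.0
    # Open index grids along each axis; they broadcast to the full volume shape.
    grids = np.ogrid[tuple(slice(0, n) for n in shape)]
    # Squared distance (in mm) from every voxel center to the sphere center.
    dist2 = sum(((g - c) * s) ** 2
                for g, c, s in zip(grids, center_vox, voxel_size_mm))
    return dist2 <= radius_mm ** 2

def roi_mean(volume, mask):
    """Mean value of the volume within the ROI mask."""
    return float(volume[mask].mean())

# Hypothetical example: a simulated parameter-estimate volume on a 2 mm grid.
rng = np.random.default_rng(0)
volume = rng.normal(size=(40, 48, 40))
peak = (20, 10, 18)  # hypothetical voxel index of the peak, not a study coordinate
for diameter in (8, 12, 16):
    mask = sphere_mask(volume.shape, peak, diameter, (2.0, 2.0, 2.0))
    print(diameter, int(mask.sum()), roi_mean(volume, mask))
```

Comparing the ROI mean across diameters is one simple way to check that a pattern does not depend on the exact ROI size.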

As expected, age was highly correlated with average pure-tone threshold from 250 to 8000 Hz (r = 0.79, P < 0.001). Nevertheless, no significant relationship between average pure-tone threshold and visual cortex activation was observed when controlling for the contribution of age across all conditions (partial r = 0.11, P = 0.54) or within any of the intelligibility conditions (all r < 0.18, P > 0.31). In contrast, the relationship between age and visual cortex activation persisted even when controlling for the contribution of average pure-tone threshold (partial r = 0.42, P = 0.01), indicating that visual cortex activation was more strongly predicted by age than by hearing loss. This pattern held within each of the 3 most difficult listening conditions (partial r = 0.49, P < 0.005 for 400 Hz; partial r = 0.48, P < 0.005 for 1000 Hz; partial r = 0.34, P < 0.05 for 1600 Hz); however, the relation between age and visual cortex activation was no longer significant when controlling for pure-tone thresholds in the easiest condition (partial r = 0.27, P = 0.12 for 3150 Hz). This suggested that hearing loss may have contributed to visual cortex activation for the condition where the range of participants' thresholds was largest and older participants had more hearing loss (3150 Hz in Fig. 1).
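The partial correlations reported above can be understood as ordinary correlations between residuals: the controlled variable is regressed out of both measures, and what remains is correlated. The following Python sketch illustrates that logic under stated assumptions. The function names `residualize` and `partial_corr` and all simulated values are hypothetical; they are not the study data or the software used for the reported statistics.

```python
import numpy as np

def residualize(y, covariate):
    """Residuals of y after least-squares regression on the covariate plus an intercept."""
    X = np.column_stack([np.ones_like(covariate, dtype=float), covariate])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return y - X @ beta

def partial_corr(x, y, covariate):
    """Correlation between x and y after removing the linear effect of the covariate."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    covariate = np.asarray(covariate, dtype=float)
    rx = residualize(x, covariate)
    ry = residualize(y, covariate)
    return float(np.corrcoef(rx, ry)[0, 1])

# Simulated (hypothetical) data in which activation depends on age only,
# while pure-tone threshold merely tracks age.
rng = np.random.default_rng(1)
age = rng.uniform(20, 80, size=36)
threshold = 0.5 * age + rng.normal(scale=8.0, size=36)
activation = 0.03 * age + rng.normal(scale=0.8, size=36)

print("age, controlling for threshold:", partial_corr(age, activation, threshold))
print("threshold, controlling for age:", partial_corr(threshold, activation, age))
```

Because age and threshold are strongly correlated, comparing the two partial correlations is what separates their contributions; a simple correlation with either variable alone could not.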

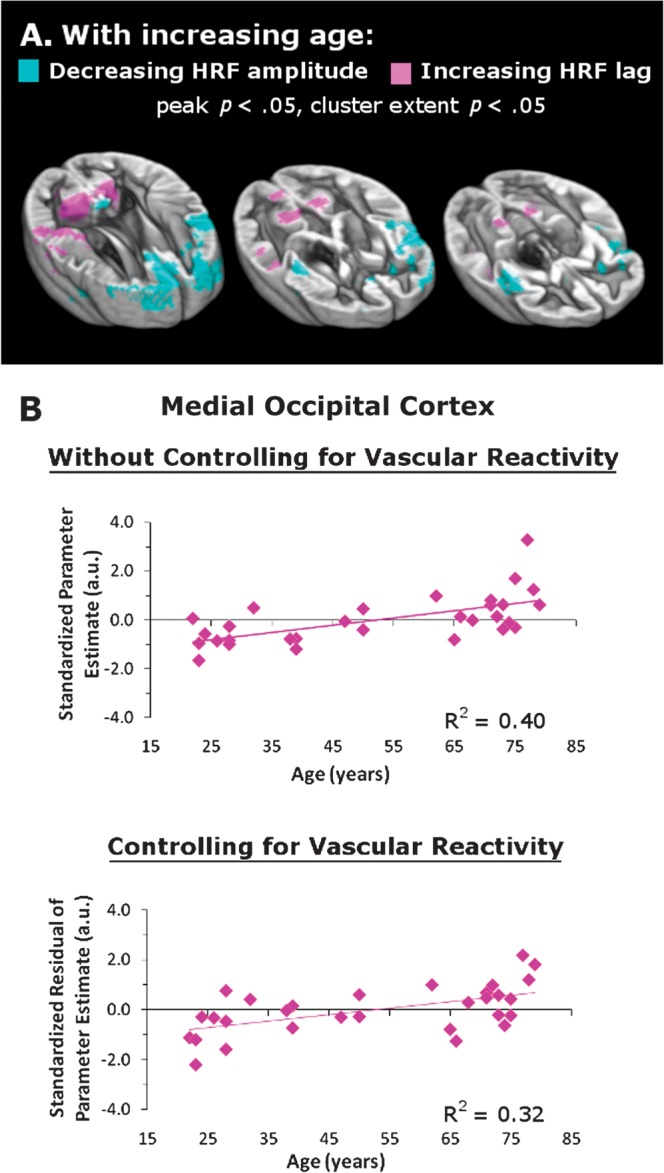

Vascular Reactivity Analyses

The respiration data were analyzed to determine the extent to which the age-related visual cortex findings could be attributed to non-neuronal sources of variance in the BOLD signal, such as the amplitude and delay of the vascular response. A group-level analysis demonstrated age differences within visual cortex only for the HRF lag, not amplitude (Fig. 6A). Moreover, HRF differences in visual cortex were only observed with less conservative peak and cluster extent thresholds, each set to P < 0.05.

Figure 6.

Vascular reactivity does not account for the effect of aging on visual cortex activation (in Fig. 5A). The data include only the 30 participants whose word listening and respiration data could be analyzed. (A) With increasing age, frontal and insular areas exhibit decreasing HRF amplitude while occipital and parietal areas exhibit increasing HRF lag. Peak and cluster extent thresholds are both set at P < 0.05. (B) The top graph depicts a significant effect of age on the activation of medial occipital cortex when listening to words even with a reduced sample of 30. The lower graph depicts that the age effect was still significant after controlling for individual differences in the HRF lag (both P < 0.01).

These weak age-related differences in the vascular reactivity of visual cortex did not account for the relationship between visual cortex activation and age depicted in Figure 5. The upper graphs of Figure 6B show the standardized parameter estimates extracted from medial occipital cortex and the collateral sulcus for the 30 participants whose word listening and respiration data could be analyzed. These results strengthen the findings depicted in Figure 5A by showing that even with 6 fewer participants, age is significantly correlated with visual cortex activation in medial occipital cortex (r = 0.63, P < 0.01). The same was true for a peak in the left collateral sulcus (r = 0.58, P < 0.01). As shown in Figure 6B, this relation persisted when the degree of individual HRF lag was entered as a predictor in a linear regression model (medial occipital cortex r = 0.57, P < 0.01; left collateral sulcus r = 0.55, P < 0.01). These results demonstrate that vascular reactivity did not drive the age-related changes in visual cortex during the word listening task. A separate analysis confirmed that the significant positive relations of increasing age and decreasing word intelligibility with visual cortex activation, as depicted in Figure 5A, persisted in this subsample (n = 30).

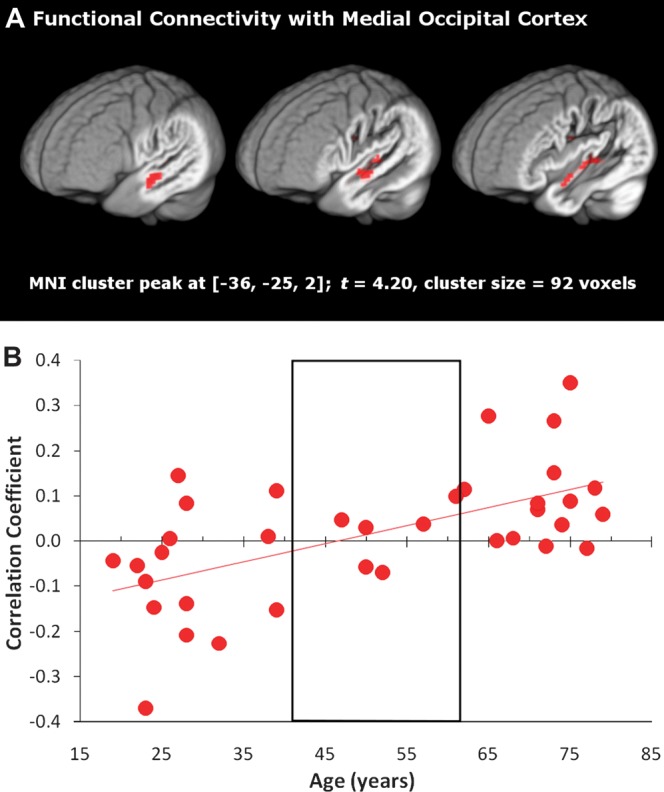

Crossmodal Connectivity Analysis

We predicted that auditory and visual cortical regions would be functionally correlated during the task if visual cortex activation is not spurious but rather driven by listening to words. Additionally, we tested the prediction that the nature of this correlation may be age dependent. Using the medial occipital cortex region as a seed in a functional connectivity analysis, we examined age-related changes in the synchrony of activation in medial occipital cortex with the entire brain. With increasing age, functional connectivity between medial occipital cortex and STS, STG, and HG increased (Fig. 7A). Highlighting that these areas are sensitive to word recognition within the current study, we found that 30.6% of the volume of this region overlapped with the volume of the age-independent region in left auditory cortex that responded to increasing intelligibility (Fig. 4). Figure 7B further clarifies the significant connectivity effect by showing how the sign of the correlation varied with age. While older adults generally demonstrated a positive correlation between occipital and temporal regions, younger adults were more likely to demonstrate inversely correlated activity between these regions, with middle-aged adults demonstrating no association.
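The logic of the seed-based analysis, in which each participant's seed-to-target correlation is computed and then related to age, can be sketched in Python as follows. This is a simplified illustration rather than the pipeline used in the study: it correlates two regional time series instead of running a whole-brain analysis, the Fisher z-transform is an assumed (though common) normalization step, and the function names `seed_connectivity` and `age_slope` and all simulated time series are hypothetical.

```python
import numpy as np

def seed_connectivity(seed_ts, target_ts):
    """Pearson correlation between two regional time series, Fisher z-transformed."""
    r = np.corrcoef(seed_ts, target_ts)[0, 1]
    return float(np.arctanh(r))

def age_slope(ages, z_values):
    """Slope and intercept of connectivity (z) regressed linearly on age."""
    slope, intercept = np.polyfit(ages, z_values, 1)
    return float(slope), float(intercept)

# Simulated (hypothetical) sample in which coupling shifts from negative in the
# youngest participants to positive in the oldest.
rng = np.random.default_rng(2)
ages = np.linspace(20, 80, 12)
couplings = np.linspace(-0.6, 0.6, 12)
z_values = []
for c in couplings:
    seed = rng.normal(size=200)    # stand-in for the medial occipital seed
    noise = rng.normal(size=200)
    target = c * seed + np.sqrt(1 - c ** 2) * noise  # stand-in for auditory cortex
    z_values.append(seed_connectivity(seed, target))

slope, intercept = age_slope(ages, z_values)
print("change in connectivity (z) per year of age:", slope)
```

A positive slope alone does not reveal whether connectivity is negative in younger adults or merely weaker, which is why plotting the individual coefficients against age (as in Fig. 7B) is informative.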

Figure 7.

(A) Auditory cortex exhibited an age-dependent increase in correlated activity with the medial occipital region (defined in Fig. 5). Peak and cluster extent thresholds were both set to P < 0.01. (B) To illustrate how the sign of the correlation varies with age, the average correlation coefficient from the left auditory cluster is plotted for each individual, with the middle-aged group outlined. The linear trend line corresponds to the significant age effect in A.

Discussion

The results of this study are consistent with evidence that aging is associated with a decreasing ability to inhibit crossmodal processing in a unimodal task. In addition, we observed that declining stimulus integrity had an effect that was independent of, and spatially similar to, that of aging. Together these results demonstrate additive effects of age and word intelligibility on the degree of visual cortex engagement in an auditory word listening task. The relation between temporal and occipital cortex was not driven by age-related differences in vascular reactivity; rather, these regions were functionally correlated across word intelligibility conditions. The nature of this correlation appeared to change with age: younger adults deactivated visual cortex when activating auditory cortex, while older adults synchronously activated both cortices.

In this study, participants listened to words at 4 different levels of intelligibility and reported whether they recognized each word. The results showed the typical pattern of bilateral temporal and left inferior frontal activation when listening to speech and increasing activation in bilateral STS, STG, and HG with increasing intelligibility (Binder et al. 2000; Fridriksson et al. 2006; Scott et al. 2006; Sharp et al. 2006; Obleser et al. 2007; Eckert, Walczak, et al. 2008; Harris et al. 2009). We did not observe age effects in the responsiveness of auditory cortex to degraded stimuli. In the context of the Inhibitory Deficit Hypothesis, this result is consistent with evidence that the difference between younger and older adults lies not in the ability to activate task-specific information (bilateral auditory cortical regions) but rather in the ability to suppress extra-task information (visual cortical regions; Gazzaley et al. 2005).

Indeed, we found that with increasing age, visual cortex activation increased, primarily in regions surrounding the calcarine sulcus, collateral sulcus, and inferior angular gyrus. Younger adults tended to suppress visual cortex activation below the implicit baseline when listening to words, supporting previous results (Johnson and Zatorre 2005, 2006; Hairston et al. 2008; Yoncheva et al. 2010). Consistent with the Inhibitory Deficit Hypothesis (Hasher and Zacks 1988; Gazzaley and D'Esposito 2007), older adults activated this region above baseline. The shift from visual cortex suppression to activation occurred in middle age, notably in the most difficult listening condition. These results suggest an interaction between degradations in auditory signal and aging on the extent to which crossmodal systems are engaged.

Mechanisms for this age-related change could involve declines in top-down inhibitory control (Gazzaley and D'Esposito 2007; Eckert, Walczak, et al. 2008) and slowed processing speed (Eckert et al. 2010) that occur with declining integrity of prefrontal cortex (Eckert 2011), potentially producing a shift in baseline levels of attention (Greicius and Menon 2004). White matter pathology is consistently observed in people with slowed processing speed, particularly within frontal tracts projecting to and from the dorsolateral prefrontal cortex, which contributes to top-down control. Eckert et al. (2010) observed that periventricular white matter hyperintensities were most frequent in older adults with reduced dorsolateral prefrontal cortex gray matter and slow processing speed. Because changes in processing speed are due at least in part to increased distractibility (Lustig et al. 2006), we predict that declines in dorsolateral prefrontal tracts, due to cerebral small vessel disease, for example, limit the top-down control of frontal cortex on posterior sensory cortex. The consequence, based on the results of this study, appears to be failed suppression of visual cortex during a speech recognition task. Indeed, this could be one explanation for why older adults often experience distraction when trying to follow conversation (e.g., Tun et al. 2002).

The association between decreasing word intelligibility and increasing visual cortex activation suggests that degraded auditory representation may exacerbate this distractibility. Specifically, more frontal resources may be required to attend to difficult auditory stimuli and, as a result, inhibitory visual control decreases. Thus, older adults who are least able to suppress crossmodal information due to declining frontal control and degraded auditory representations should be most distracted by irrelevant visual stimuli when trying to understand speech in naturalistic conditions. While the visual information being suppressed may be internally represented (e.g., Johnson and Zatorre 2005), it is also possible that older adults' increased distractibility results in differential attention to the crosshair stimulus that appeared on every word listening trial (Townsend et al. 2006). We believe that this is a testable prediction suggested by our results, which may be best examined by having older and younger adults participate in auditory fMRI tasks while their locus of attention to potentially distracting visual stimuli is monitored via eye tracking.

As an alternative to the interpretation that older adults' distractibility leads to an inhibitory failure of suppression, visual cortex may be activated in difficult listening conditions as a compensatory strategy to sustain optimal performance relative to younger adults. Indeed, we did not observe age differences for reported word recognition. Such a strategy could be unrelated to reported age-related differences in the speed-accuracy trade-off (Salthouse 1979), as response time was also not correlated with age (within and across all conditions, r < 0.21, P > 0.23). While it is possible that the task and the restricted range of reaction times resulted in insensitivity to performance differences in the sample, the fact that reported recognition tracked with the degree of word filtering suggests that participants were responsive to task demands. Also supporting this compensatory explanation, adults with hearing loss are, in fact, more likely to rely on visual cues than normal-hearing adults, regardless of age. For example, Pelson and Prather (1974) found that not only were older adults with hearing loss more successful speechreaders compared with age-matched normal-hearing controls, but they were also most greatly aided by the presentation of a semantically related scene cue during speechreading compared with controls. Older adults with hearing loss are often taught to use visual cues, such as those from articulatory gestures in speechreading and visual speech, to support word recognition (Erber 1975, 1996). In particular, a speech perception training protocol developed by Humes et al. (2009) trains older adults with hearing loss to link the orthographic forms of words to their degraded auditory form to improve word recognition. Work currently underway in our laboratory examines whether training to engage visual cortical areas supports improvements in understanding of speech.

The nature of the visual representation that younger adults suppress and older adults engage is not discernible in the current study, which employed a unimodal auditory word recognition task. The BrainMap application Sleuth (http://www.brainmap.org/sleuth/index.html; Laird et al. 2005) was used to identify studies that reported peak coordinates within a 10 mm³ cube surrounding the visual cortex peaks observed in the current study. Peak coordinates surrounding the medial occipital sulcus or left collateral sulcus peaks reported in Table 1 were observed in studies that involved the visual presentation of shapes and objects (e.g., Kohler et al. 2000; Rowe et al. 2000; Lepage et al. 2001; Gitelman et al. 2002) and orthography (e.g., Petersen et al. 1989; Price et al. 1994; Buckner et al. 1998; Kuo et al. 2001). Studies in which peak activation was observed surrounding the left iAG peak involved the presentation of myriad sensory stimuli, including motion (Decety et al. 1994), tactile (Ricciardi et al. 2006), spatial (Bonda et al. 1995), auditory (Sharp et al. 2010), and visual (shapes, Beason-Held et al. 1998; word forms, Buckner et al. 1996). This diversity points to left iAG's involvement in multisensory integration. Thus, the representations associated with the visual cortex activation observed in this study may be related to imagery of objects or scenes related to the words' semantic content or imagery of the words' orthographic forms. Determining the exact nature of this representation is beyond the scope of the current paper but could be investigated in future studies that manipulate the nature of audiovisual material that is presented to older and younger adults.

Table 1.

Age and word intelligibility effects on cortical activity (Fig. 5A)

| Region | MNI x | MNI y | MNI z | T-score | Cluster size (voxels) |
| --- | --- | --- | --- | --- | --- |
| Areas correlated with decreasing intelligibility | | | | | |
| Right iAG | 26 | −83 | 23 | 4.55 | 181 |
| Left collateral sulcus | −28 | −81 | −15 | 4.31 | 363 |
| Right SG | 35 | −49 | 44 | 4.10 | 102 |
| Right collateral sulcus | 23 | −70 | −23 | 3.68 | 142 |
| Areas correlated with increasing age | | | | | |
| *Left iAG* | −41 | −79 | 21 | 5.13 | 913 |
| *Left collateral sulcus* | −29 | −63 | −3 | 4.32 | |
| *Medial occipital cortex* | −11 | −94 | 0 | 4.14 | |
| Right collateral sulcus | 31 | −59 | −9 | 3.84 | 119 |
| Left superior sensory cortex | −24 | −38 | 60 | 3.67 | 182 |
| Right iAG | 44 | −75 | 21 | 3.38 | 90 |

Note: Reported regions have peak voxels exceeding P < 0.01 and cluster extent thresholds P < 0.01 (65 voxels). Italicized entries represent a single visual cluster with 3 significant subpeaks. SG, supramarginal gyrus.

To further demonstrate that the suppression effect in visual cortex stemmed from engaging in the auditory task, thereby extending the results described by Eckert, Walczak, et al. (2008), we presented evidence for a functional link between the activation in left auditory cortex and visual cortex. The correlation between these areas became increasingly positive with age, with younger adults tending to show a negative relation, middle-aged adults showing almost no relation, and older adults showing a positive relation. The negative correlation found for younger adults again supports the interpretation that suppression of visual cortex is tied to listening to words. The positive correlation for older adults may be indicative of the engagement of a crossmodal system as a compensatory mechanism to support failing speech recognition in older adults. Behavioral work by Laurienti et al. (2006) suggested that older adults exhibit increased crossmodal integration compared with younger adults, engaging auditory and visual processing in synchrony (Peiffer et al. 2007).

Other researchers have found that while older and younger adults' speech recognition performance improves equivalently from the presentation of audiovisual compared with unimodal stimuli, older adults show overall reduced performance on audiovisual tasks compared with younger adults (Sommers et al. 2005; Tye-Murray et al. 2008). This suggests that the synchronous visual and auditory cortical activation we observed may not be compensatory. Rather, age-related failures of crossmodal suppression may result in increases in processing information that does not help or even hinders speech recognition performance. In fact, the degree to which increased crossmodal integration may be harmful (i.e., processing extratask information is distracting), helpful (i.e., processing extratask information may provide task-relevant cues), or irrelevant has been shown to depend on the nature of the task and stimuli (May 1999). While we do not distinguish among these alternatives in the current study, it will be important to determine the conditions under which visual cortex upregulation supports or diminishes speech recognition performance.

Hearing loss, which degrades the incoming speech signal, may also contribute to visual cortex activation. It was especially important to examine the impact of audibility on our results given research showing that differences in hearing loss may account for apparent differences in inhibition (Murphy et al. 1999). While controlling for individual differences in average pure-tone thresholds did not eliminate the correlation between age and activation in visual cortex, hearing loss independent of age was most related to visual cortex activation in the easiest listening condition. In this condition, audibility varied the most across participants (Fig. 1). Thus, there may be independent contributions of word intelligibility and age on crossmodal activation due to declines in both inhibitory control and hearing loss.

The visual cortex results, however, could not be attributed to declines in vascular reactivity. Because vascular reactivity has been shown to decrease with age, especially in visual cortex (Ross et al. 1997; Buckner et al. 2000), we utilized the results of a respiration experiment (Thomason and Glover 2008) to ensure that the age effect was not driven by physiological changes. We found a relatively weak increase in the time-to-peak (lag) of the HRF with increasing age in visual cortex, primarily surrounding the fusiform gyrus. These small effects did not account for the age effects on the activation of visual cortex associated with listening to speech.

The current study supports the premise that age and task difficulty are additive modulators of crossmodal suppression and activation. Unlike younger adults, older adults did not inhibit visual cortex activation during word listening. Extending previous work (Rissman et al. 2009), we found that degraded word intelligibility had an effect comparable to aging, suggesting that both factors modulate the level of activation of extratask cortical regions. Additionally, functional connectivity analyses provided a means to show that this visual cortex responsiveness is linked to auditory processing. This relationship appears malleable across the lifespan, highlighting that important changes occur in the understudied middle-aged population when the effects of cerebral small vessel disease and other age-related events begin to occur (Eckert 2011).

Supplementary Material

Supplementary material can be found at: http://www.cercor.oxfordjournals.org/.

Funding

This work was supported by the National Institute on Deafness and Other Communication Disorders (P50 DC00422) and the MUSC Center for Advanced Imaging Research. This investigation was conducted in a facility constructed with support from Research Facilities Improvement Program (C06 RR14516) from the National Center for Research Resources (NCRR), National Institutes of Health (NIH). This project was supported by the South Carolina Clinical & Translational Research (SCTR) Institute, with an academic home at the Medical University of South Carolina, NIH/NCRR (UL1 RR029882).

Acknowledgments

We thank the study participants. Conflict of Interest: None declared.

References

- American National Standards Institute. American National Standard specifications for audiometers. New York: American National Standards Institute; 2004. [Google Scholar]

- Ashburner J. A fast diffeomorphic image registration algorithm. Neuroimage. 2007;38:95–113. doi: 10.1016/j.neuroimage.2007.07.007. [DOI] [PubMed] [Google Scholar]

- Ashburner J, Friston KJ. Unified segmentation. Neuroimage. 2005;26:839–851. doi: 10.1016/j.neuroimage.2005.02.018. [DOI] [PubMed] [Google Scholar]

- Beason-Held LL, Purpura KP, Van Meter JW, Azari NP, Mangot DJ, Optican LM, Mentis MJ, Alexander GE, Grady CL, Horwitz B, et al. PET reveals occipitotemporal pathway activation during elementary form perception in humans. Vis Neurosci. 1998;15:503–510. doi: 10.1017/s0952523898153117. [DOI] [PubMed] [Google Scholar]

- Binder JR, Frost JA, Hammeke TA, Bellgowan PS, Springer JA, Kaufman JN, Possing ET. Human temporal lobe activation by speech and nonspeech sounds. Cereb Cortex. 2000;10:512–528. doi: 10.1093/cercor/10.5.512. [DOI] [PubMed] [Google Scholar]

- Bonda E, Petrides M, Frey S, Evans A. Neural correlates of mental transformations of the body-in-space. Proc Natl Acad Sci U S A. 1995;92:11180–11184. doi: 10.1073/pnas.92.24.11180. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Brett M, Johnsrude IS, Owen AM. The problem of functional localization in the human brain. Nat Rev Neurosci. 2002;3:243–249. doi: 10.1038/nrn756. [DOI] [PubMed] [Google Scholar]

- Brysbaert M, New B. Moving beyond Kucera and Francis: a critical evaluation of current word frequency norms and the introduction of a new and improved word frequency measure for American English. Behav Res Methods. 2009;41:977–990. doi: 10.3758/BRM.41.4.977. [DOI] [PubMed] [Google Scholar]

- Buckner RL, Koutstaal W, Schacter DL, Wagner AD, Rosen BR. Functional-anatomic study of episodic retrieval using fMRI, study of episodic retrieval using fMRI. I. Retrieval effort versus retrieval success. Neuroimage. 1998;7:151–162. doi: 10.1006/nimg.1998.0327. [DOI] [PubMed] [Google Scholar]

- Buckner RL, Raichle ME, Miezin FM, Petersen SE. Functional anatomic studies of memory retrieval for auditory words and visual pictures. J Neurosci. 1996;16:6219–6235. doi: 10.1523/JNEUROSCI.16-19-06219.1996. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Buckner RL, Snyder AZ, Sanders AL, Raichle ME, Morris JC. Functional brain imaging of young, nondemented, and demented older adults. J Cogn Neurosci. 2000;12(Suppl 2):24–34. doi: 10.1162/089892900564046. [DOI] [PubMed] [Google Scholar]

- Carlson MC, Hasher L, Zacks RT, Connelly SL. Aging, distraction, and the benefits of predictable location. Psychol Aging. 1995;10:427–436. doi: 10.1037//0882-7974.10.3.427. [DOI] [PubMed] [Google Scholar]

- Connelly SL, Hasher L, Zacks RT. Age and reading: the impact of distraction. Psychol Aging. 1991;6:533–541. doi: 10.1037//0882-7974.6.4.533. [DOI] [PubMed] [Google Scholar]

- Davis MH, Johnsrude IS. Hierarchical processing in spoken language comprehension. J Neurosci. 2003;23:3423–3431. doi: 10.1523/JNEUROSCI.23-08-03423.2003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Decety J, Perani D, Jeannerod M, Bettinardi V, Tadary B, Woods R, Mazziotta JC, Fazio F. Mapping motor representations with positron emission tomography. Nature. 1994;371:600–602. doi: 10.1038/371600a0. [DOI] [PubMed] [Google Scholar]

- D'Esposito M, Deouell LY, Gazzaley A. Alterations in the BOLD fMRI signal with ageing and disease: a challenge for neuroimaging. Nat Rev Neurosci. 2003;4:863–872. doi: 10.1038/nrn1246. [DOI] [PubMed] [Google Scholar]

- Dirks DD, Takayana S, Moshfegh A, Noffsinger PD, Fausti SA. Examination of the neighborhood activation theory in normal and hearing-impaired listeners. Ear Hear. 2001;22:1–13. doi: 10.1097/00003446-200102000-00001. [DOI] [PubMed] [Google Scholar]

- Dubno JR, Horwitz AR, Ahlstrom JB. Benefit of modulated maskers for speech recognition by younger and older adults with normal hearing. J Acoust Soc Am. 2002;111:2897–2907. doi: 10.1121/1.1480421. [DOI] [PubMed] [Google Scholar]

- Dubno JR, Horwitz AR, Ahlstrom JB. Recovery from prior stimulation: masking of speech by interrupted noise for younger and older adults with normal hearing. J Acoust Soc Am. 2003;113:2084–2094. doi: 10.1121/1.1555611. [DOI] [PubMed] [Google Scholar]

- Dubno JR, Horwitz AR, Ahlstrom JB. Spectral and threshold effects on recognition of speech at higher-than-normal levels. J Acoust Soc Am. 2006;120:310–320. doi: 10.1121/1.2206508. [DOI] [PubMed] [Google Scholar]

- Eckert MA. Slowing down: age-related neurobiological predictors of processing speed. Front Neurosci. 2011;5:25. doi: 10.3389/fnins.2011.00025. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Eckert MA, Kamdar NV, Chang CE, Beckmann CF, Greicius MD, Menon V. A cross-modal system linking primary auditory and visual cortices: evidence from intrinsic fMRI connectivity analysis. Hum Brain Mapp. 2008;29:848–857. doi: 10.1002/hbm.20560. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Eckert MA, Keren NI, Roberts DR, Calhoun VD, Harris KC. Age-related changes in processing speed: unique contributions of cerebellar and prefrontal cortex. Front Hum Neurosci. 2010;4:10. doi: 10.3389/neuro.09.010.2010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Eckert MA, Walczak A, Ahlstrom J, Denslow S, Horwitz A, Dubno JR. Age-related effects on word recognition: reliance on cognitive control systems with structural declines in speech-responsive cortex. J Assoc Res Otolaryngol. 2008;9:252–259. doi: 10.1007/s10162-008-0113-3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Erber NP. Auditory-visual perception of speech. J Speech Hear Disord. 1975;40:481–492. doi: 10.1044/jshd.4004.481. [DOI] [PubMed] [Google Scholar]

- Erber NP. Communication therapy for adults with sensory loss. Clifton Hill (Australia): Clavis Publishing; 1996. [Google Scholar]

- Folstein MF, Robins LN, Helzer JE. The mini-mental state examination. Arch Gen Psychiatry. 1983;40:812. doi: 10.1001/archpsyc.1983.01790060110016. [DOI] [PubMed] [Google Scholar]

- Fridriksson J, Morrow KL, Moser D, Baylis GC. Age-related variability in cortical activity during language processing. J Speech Lang Hear Res. 2006;49:690–697. doi: 10.1044/1092-4388(2006/050). [DOI] [PubMed] [Google Scholar]

- Gazzaley A, Cooney JW, Rissman J, D'Esposito M. Top-down suppression deficit underlies working memory impairment in normal aging. Nat Neurosci. 2005;8:1298–1300. doi: 10.1038/nn1543. [DOI] [PubMed] [Google Scholar]

- Gazzaley A, D'Esposito M. Top-down modulation and normal aging. Ann N Y Acad Sci. 2007;1097:67–83. doi: 10.1196/annals.1379.010. [DOI] [PubMed] [Google Scholar]

- Gitelman DR, Parrish TB, Friston KJ, Mesulam MM. Functional anatomy of visual search: regional segregations within the frontal eye fields and effective connectivity of the superior colliculus. Neuroimage. 2002;15:970–982. doi: 10.1006/nimg.2001.1006. [DOI] [PubMed] [Google Scholar]

- Gordon-Salant S. Effects of aging on response criteria in speech-recognition tasks. J Speech Hear Res. 1986;29:155–162. doi: 10.1044/jshr.2902.155. [DOI] [PubMed] [Google Scholar]

- Greicius MD, Menon V. Default-mode activity during a passive sensory task: uncoupled from deactivation but impacting activation. J Cogn Neurosci. 2004;16:1484–1492. doi: 10.1162/0898929042568532. [DOI] [PubMed] [Google Scholar]

- Hairston WD, Hodges DA, Casanova R, Hayasaka S, Kraft R, Maldjian JA, Burdette JH. Closing the mind's eye: deactivation of visual cortex related to auditory task difficulty. Neuroreport. 2008;19:151–154. doi: 10.1097/WNR.0b013e3282f42509. [DOI] [PubMed] [Google Scholar]

- Harris KC, Dubno JR, Keren NI, Ahlstrom JB, Eckert MA. Speech recognition in younger and older adults: a dependency on low-level auditory cortex. J Neurosci. 2009;29:6078–6087. doi: 10.1523/JNEUROSCI.0412-09.2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hasher L, Zacks RT. Working memory, comprehension, and aging: a review and a new view. In: Bower GH, editor. The psychology of learning and motivation. New York: Academic Press; 1988. pp. 193–225. [Google Scholar]

- Hawkins JE, Jr., Stevens SS. The masking of pure tones and speech by white noise. J Acoust Soc Am. 1950;22:6–13. [Google Scholar]

- Haxby JV, Horwitz B, Ungerleider LG, Maisog JM, Pietrini P, Grady CL. The functional organization of human extrastriate cortex: a PET-rCBF study of selective attention to faces and locations. J Neurosci. 1994;14:6336–6353. doi: 10.1523/JNEUROSCI.14-11-06336.1994. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hollingshead AB. Four factor index of social status. New Haven (CT): Yale University; 1975. [Google Scholar]

- Humes LE. Speech understanding in the elderly. J Am Acad Audiol. 1996;7:161–167. [PubMed] [Google Scholar]

- Humes LE, Burk MH, Strauser LE, Kinney DL. Development and efficacy of a frequent-word auditory training protocol for older adults with impaired hearing. Ear Hear. 2009;30:613–627. doi: 10.1097/AUD.0b013e3181b00d90. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Johnson JA, Zatorre RJ. Attention to simultaneous unrelated auditory and visual events: behavioral and neural correlates. Cereb Cortex. 2005;15:1609–1620. doi: 10.1093/cercor/bhi039. [DOI] [PubMed] [Google Scholar]

- Johnson JA, Zatorre RJ. Neural substrates for dividing and focusing attention between simultaneous auditory and visual events. Neuroimage. 2006;31:1673–1681. doi: 10.1016/j.neuroimage.2006.02.026. [DOI] [PubMed] [Google Scholar]

- Kawashima R, O'Sullivan BT, Roland PE. Positron-emission tomography studies of cross-modality inhibition in selective attentional tasks: closing the “mind's eye”. Proc Natl Acad Sci U S A. 1995;92:5969–5972. doi: 10.1073/pnas.92.13.5969. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kohler S, Moscovitch M, Winocur G, McIntosh AR. Episodic encoding and recognition of pictures and words: role of the human medial temporal lobes. Acta Psychol (Amst) 2000;105:159–179. doi: 10.1016/s0001-6918(00)00059-7. [DOI] [PubMed] [Google Scholar]

- Kucera H, Francis WN. Computational analysis of present-day American English. Providence (RI): Brown University Press; 1967.

- Kuo WJ, Yeh TC, Duann JR, Wu YT, Ho LT, Hung D, Tzeng OJ, Hsieh JC. A left-lateralized network for reading Chinese words: a 3 T fMRI study. Neuroreport. 2001;12:3997–4001. doi: 10.1097/00001756-200112210-00029.

- Laird AR, Lancaster JL, Fox PT. BrainMap: the social evolution of a human brain mapping database. Neuroinformatics. 2005;3:65–78. doi: 10.1385/ni:3:1:065.

- Laurienti PJ, Burdette JH, Maldjian JA, Wallace MT. Enhanced multisensory integration in older adults. Neurobiol Aging. 2006;27:1155–1163. doi: 10.1016/j.neurobiolaging.2005.05.024.

- Lepage M, McIntosh AR, Tulving E. Transperceptual encoding and retrieval processes in memory: a PET study of visual and haptic objects. Neuroimage. 2001;14:572–584. doi: 10.1006/nimg.2001.0866.

- Luce PA, Pisoni DB. Recognizing spoken words: the neighborhood activation model. Ear Hear. 1998;19:1–36. doi: 10.1097/00003446-199802000-00001.

- Lustig C, Hasher L, Tonev ST. Distraction as a determinant of processing speed. Psychon Bull Rev. 2006;13:619–625. doi: 10.3758/bf03193972.

- Macey PM, Macey KE, Kumar R, Harper RM. A method for removal of global effects from fMRI time series. Neuroimage. 2004;22:360–366. doi: 10.1016/j.neuroimage.2003.12.042.

- May CP. Synchrony effects in cognition: the costs and a benefit. Psychon Bull Rev. 1999;6:142–147. doi: 10.3758/bf03210822.

- Murphy DR, McDowd JM, Wilcox KA. Inhibition and aging: similarities between younger and older adults as revealed by the processing of unattended auditory information. Psychol Aging. 1999;14:44–59. doi: 10.1037//0882-7974.14.1.44.

- Obleser J, Wise RJ, Dresner MA, Scott SK. Functional integration across brain regions improves speech perception under adverse listening conditions. J Neurosci. 2007;27:2283–2289. doi: 10.1523/JNEUROSCI.4663-06.2007.

- Oldfield RC. The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia. 1971;9:97–113. doi: 10.1016/0028-3932(71)90067-4.

- Pastizzo MJ, Carbone RF Jr. Spoken word frequency counts based on 1.6 million words in American English. Behav Res Methods. 2007;39:1025–1028. doi: 10.3758/bf03193000.

- Peiffer AM, Hugenschmidt CE, Maldjian JA, Casanova R, Srikanth R, Hayasaka S, Burdette JH, Kraft RA, Laurienti PJ. Aging and the interaction of sensory cortical function and structure. Hum Brain Mapp. 2009;30:228–240. doi: 10.1002/hbm.20497.

- Peiffer AM, Mozolic JL, Hugenschmidt CE, Laurienti PJ. Age-related multisensory enhancement in a simple audiovisual detection task. Neuroreport. 2007;18:1077–1081. doi: 10.1097/WNR.0b013e3281e72ae7.

- Pelson RO, Prather WF. Effects of visual message-related cues, age, and hearing impairment on speechreading performance. J Speech Hear Res. 1974;17:518–525. doi: 10.1044/jshr.1703.518.

- Petersen SE, Fox PT, Posner MI, Mintun MA, Raichle ME. Positron emission tomographic studies of the processing of single words. J Cogn Neurosci. 1989;1:153–170. doi: 10.1162/jocn.1989.1.2.153.

- Poline JB, Worsley KJ, Evans AC, Friston KJ. Combining spatial extent and peak intensity to test for activations in functional imaging. Neuroimage. 1997;5:83–96. doi: 10.1006/nimg.1996.0248.

- Price CJ, Wise RJ, Watson JD, Patterson K, Howard D, Frackowiak RS. Brain activity during reading. The effects of exposure duration and task. Brain. 1994;117(Pt 6):1255–1269. doi: 10.1093/brain/117.6.1255.

- Ricciardi E, Bonino D, Gentili C, Sani L, Pietrini P, Vecchi T. Neural correlates of spatial working memory in humans: a functional magnetic resonance imaging study comparing visual and tactile processes. Neuroscience. 2006;139:339–349. doi: 10.1016/j.neuroscience.2005.08.045.

- Rissman J, Gazzaley A, D'Esposito M. The effect of non-visual working memory load on top-down modulation of visual processing. Neuropsychologia. 2009;47:1637–1646. doi: 10.1016/j.neuropsychologia.2009.01.036.

- Ross MH, Yurgelun-Todd DA, Renshaw PF, Maas LC, Mendelson JH, Mello NK, Cohen BM, Levin JM. Age-related reduction in functional MRI response to photic stimulation. Neurology. 1997;48:173–176. doi: 10.1212/wnl.48.1.173.

- Rowe JB, Toni I, Josephs O, Frackowiak RS, Passingham RE. The prefrontal cortex: response selection or maintenance within working memory? Science. 2000;288:1656–1660. doi: 10.1126/science.288.5471.1656.

- Salthouse TA. Adult age and the speed-accuracy trade-off. Ergonomics. 1979;22:811–821. doi: 10.1080/00140137908924659.

- Salthouse TA, Somberg BL. Isolating the age deficit in speeded performance. J Gerontol. 1982;37:59–63. doi: 10.1093/geronj/37.1.59.

- Scott SK, Rosen S, Lang H, Wise RJ. Neural correlates of intelligibility in speech investigated with noise vocoded speech—a positron emission tomography study. J Acoust Soc Am. 2006;120:1075–1083. doi: 10.1121/1.2216725.

- Sharp DJ, Awad M, Warren JE, Wise RJ, Vigliocco G, Scott SK. The neural response to changing semantic and perceptual complexity during language processing. Hum Brain Mapp. 2010;31:365–377. doi: 10.1002/hbm.20871.

- Sharp DJ, Scott SK, Mehta MA, Wise RJ. The neural correlates of declining performance with age: evidence for age-related changes in cognitive control. Cereb Cortex. 2006;16:1739–1749. doi: 10.1093/cercor/bhj109.

- Simpson RC, Briggs SL, Ovens J, Swales JM. The Michigan corpus of American spoken English. Ann Arbor (MI): The Regents of the University of Michigan; 2002.

- Sommers MS. The structural organization of the mental lexicon and its contribution to age-related declines in spoken-word recognition. Psychol Aging. 1996;11:333–341. doi: 10.1037//0882-7974.11.2.333.

- Sommers MS, Tye-Murray N, Spehar B. Auditory-visual speech perception and auditory-visual enhancement in normal-hearing younger and older adults. Ear Hear. 2005;26:263–275. doi: 10.1097/00003446-200506000-00003.

- Studebaker GA, Sherbecoe RL, Gilmore C. Frequency-importance and transfer functions for the Auditec of St. Louis recordings of the NU-6 word test. J Speech Hear Res. 1993;36:799–807. doi: 10.1044/jshr.3604.799.

- Taoka T, Iwasaki S, Uchida H, Fukusumi A, Nakagawa H, Kichikawa K, Takayama K, Yoshioka T, Takewa M, Ohishi H. Age correlation of the time lag in signal change on EPI-fMRI. J Comput Assist Tomogr. 1998;22:514–517. doi: 10.1097/00004728-199807000-00002.

- Thomason ME, Glover GH. Controlled inspiration depth reduces variance in breath-holding-induced BOLD signal. Neuroimage. 2008;39:206–214. doi: 10.1016/j.neuroimage.2007.08.014.

- Tombaugh TN, McIntyre NJ. The mini-mental state examination: a comprehensive review. J Am Geriatr Soc. 1992;40:922–935. doi: 10.1111/j.1532-5415.1992.tb01992.x.

- Townsend J, Adamo M, Haist F. Changing channels: an fMRI study of aging and cross-modal attention shifts. Neuroimage. 2006;31:1682–1692. doi: 10.1016/j.neuroimage.2006.01.045.

- Tun PA, O'Kane G, Wingfield A. Distraction by competing speech in young and older adult listeners. Psychol Aging. 2002;17:453–467. doi: 10.1037//0882-7974.17.3.453.

- Tye-Murray N, Sommers M, Spehar B, Myerson J, Hale S, Rose NS. Auditory-visual discourse comprehension by older and young adults in favorable and unfavorable conditions. Int J Audiol. 2008;47(Suppl 2):S31–S37. doi: 10.1080/14992020802301662.

- Vaden KI Jr, Halpin HR, Hickok GS. Irvine Phonotactic Online Dictionary, version 2.0 [data file]. 2009. Available from: http://www.iphod.com.

- Vaden KI Jr, Muftuler LT, Hickok G. Phonological repetition-suppression in bilateral superior temporal sulci. Neuroimage. 2010;49:1018–1023. doi: 10.1016/j.neuroimage.2009.07.063.

- Yoncheva YN, Zevin JD, Maurer U, McCandliss BD. Auditory selective attention to speech modulates activity in the visual word form area. Cereb Cortex. 2010;20:622–632. doi: 10.1093/cercor/bhp129.

- Zekveld AA, Heslenfeld DJ, Festen JM, Schoonhoven R. Top-down and bottom-up processes in speech comprehension. Neuroimage. 2006;32:1826–1836. doi: 10.1016/j.neuroimage.2006.04.199.