ABSTRACT

BACKGROUND

Doctor rating websites are a burgeoning trend, yet little is known about their content.

OBJECTIVE

To explore the content of Internet reviews about primary care physicians.

DESIGN

Qualitative content analysis of 712 online reviews from two rating websites. We purposively sampled reviews of 445 primary care doctors (internists and family practitioners) from four geographically dispersed U.S. urban locations. We report the major themes, and because this is a large sample, the frequencies of domains within our coding scheme.

RESULTS

Most reviews (63%) were positive, recommending the physician. We found a major distinction between global reviews (“Dr. B is a great doctor.”) and specific descriptions, which addressed interpersonal manner (“She always listens to what I have to say and answers all my questions.”), technical competence (“No matter who she has recommended re: MD specialists, this MD has done everything right.”), and/or systems issues such as appointment and telephone access. Among specific reviews, comments on interpersonal manner (“Dr. A is so compassionate.”) and technical competence (“He is knowledgeable, will research your case before giving you advice.”) tended to be more positive (69% and 80%, respectively), whereas systems-issues comments (“Staff is so-so, less professional than should be…”) were more mixed (60% positive, 40% negative).

CONCLUSIONS

The majority of Internet reviews of primary care physicians are positive in nature. Our findings reaffirm that the care encounter extends beyond the patient–physician dyad; staff, access, and convenience all affect patients’ reviews of physicians. In addition, negative interpersonal reviews underscore the importance of well-perceived bedside manner for a successful patient–physician interaction.

KEY WORDS: patient satisfaction, primary care, family medicine, patient–physician relationship

BACKGROUND

The Internet’s role in health care is growing rapidly. Increasingly, patients use the Internet to find medical facilities or doctors, to research specific medical conditions,1–3 and to form support networks specific to health.4,5 The Pew Internet & American Life Project’s 2008 Tracking Survey found an increase in the proportion of Americans who look online for health information, from 25% in 2000 to 61% in 2008.6

Among those who use the Internet for health care information, the majority (60%) access “user-generated” information, including reading others’ health experiences, and consulting rankings or reviews of health care facilities or health care providers.6 To date, Internet ratings of physicians have received much interest from media and doctors,7–13 but little academic scrutiny. The one prior academic study of physician ratings, restricted to selected physicians in a small geographic area, revealed that Internet data on physicians is highly variable, with an absence of user-generated information on most.14

Because Internet reviews of physicians represent public perspectives from patients, they may provide valuable insights about patient perceptions of medical care. Therefore, we examined a wide range of publicly-available Internet-based reviews of primary care physicians, across different Internet sites and geographic areas, to better understand their content.

METHODS

Design

We conducted a qualitative content analysis of patient reviews about primary care physicians practicing in urban America. We chose urban areas because of the high density of doctors and the extensive availability of physician ratings from the Internet. We chose internal medicine and family medicine care because the majority of research on patient–physician interactions is focused on these primary care areas. We expect patient relationships with other primary care practitioners, such as obstetrician/gynecologists and pediatricians, to differ substantively. Similarly, relationships with subspecialty physicians would be likely to differ from those with primary care physicians.

Sampling

We employed a purposive sampling strategy in order to obtain a range of reviews of primary care physicians for adults, numerically balanced across four U.S. cities. We included reviews from Atlanta, Chicago, New York, and San Francisco, four cities in different geographic regions. We included internal medicine and family medicine physicians, excluding sub-specialists.

Data Sources

Our search strategy was meant to mimic two popular ways of searching for ratings using the Internet: 1) using a search engine and 2) using a well-known general ratings site. First, to mimic a patient’s approach, we utilized the popular Google search engine. When we entered the phrase “rate doctor” into Google.com, the first result was for the website ratemds.com. As its name suggests, ratemds.com exclusively rates physicians. Previous research has shown that there are at least 33 publicly available websites that allow patients to rate their doctors,14 and there may be more today. Second, because we surmised that patients might search for physician ratings on a website they use for other types of consumer ratings, we selected the website Yelp.com. We chose this general consumer and service rating site because of its ubiquity and large number of available ratings.15 Our aim was to have a balanced number of reviews from ratemds.com and Yelp.com.

Search Strategy

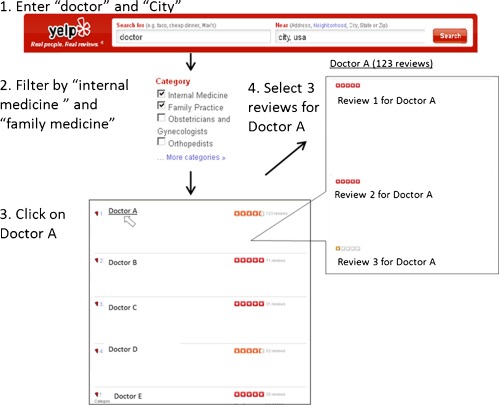

We obtained reviews from two websites: ratemds.com and Yelp.com. To obtain reviews from Yelp.com, one investigator (A.L.) entered “doctor” and the name of the city (e.g., “San Francisco”) into the search fields of the Yelp.com homepage. The website then generated search results in the form of a list of doctors’ names (between 200 and 690 names, depending on location). Each doctor had a varying number of reviews. Because we were only interested in general internists and family medicine physicians, we filtered our search to show only those doctors in the categories of “internal medicine” and “family medicine.” To search ratemds.com, the same investigator (A.L.) entered a ZIP code and then, using the website’s drop-down menu, selected “internal medicine” and then “family medicine” (Fig. 1). We used ZIP codes for ratemds.com because, at the time of the search, the website did not allow searches by city name. Importantly, all ZIP codes for a given city returned identical results.

Figure 1.

Method for selecting and including physician reviews.

Our sampling strategy had two distinct levels, because each physician could have multiple reviews. Each website first generates a list of physicians. Because the order in which doctors were listed on the website is non-random, we pre-specified our sampling of physicians as follows: We selected 30 reviews of doctors appearing at the beginning of the search results list, 40 reviews of doctors appearing in the middle of the search results list, and 30 reviews of doctors appearing at the end of the search results list. Next, we purposefully sampled the first three available reviews for each individual physician. We determined a priori that physicians with multiple ratings are likely to differ systematically from physicians with a single rating. Therefore, we included only up to three reviews for any single physician, to avoid bias introduced by selecting a large number of reviews from any particular physician. This allowed us to consider multiple reviews of the same physician independently of one another, with the assumption that each review represented a unique patient. No specific demographic information was available for reviewers on these websites.
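The two-level sampling described above can be sketched in code. This is a minimal illustration, not the procedure the investigators actually ran: the text does not define where the "beginning," "middle," and "end" of a results list start and stop, so the sketch assumes a split into thirds, and the function names and data layout are hypothetical.

```python
from typing import List, Tuple

# Cap stated in the text: at most three reviews per physician.
MAX_REVIEWS_PER_PHYSICIAN = 3

Physician = Tuple[str, List[str]]  # (physician name, reviews in listed order)


def sample_segment(physicians: List[Physician], quota: int) -> List[Tuple[str, str]]:
    """Walk physicians in listed order, taking each one's first three
    available reviews until the review quota for the segment is met."""
    sampled: List[Tuple[str, str]] = []
    for name, reviews in physicians:
        for review in reviews[:MAX_REVIEWS_PER_PHYSICIAN]:
            if len(sampled) == quota:
                return sampled
            sampled.append((name, review))
    return sampled


def sample_search_results(
    results: List[Physician], quotas: Tuple[int, int, int] = (30, 40, 30)
) -> List[Tuple[str, str]]:
    """Sample reviews from the beginning, middle, and end of a results list.

    ASSUMPTION: the three segments are equal thirds of the list; the paper
    does not say how the segments were delimited.
    """
    n = len(results)
    third = n // 3
    segments = [results[:third], results[third:n - third], results[n - third:]]
    sampled: List[Tuple[str, str]] = []
    for segment, quota in zip(segments, quotas):
        sampled.extend(sample_segment(segment, quota))
    return sampled


if __name__ == "__main__":
    # Hypothetical search results: 9 physicians with 5 reviews each.
    demo = [(f"Dr. {i}", [f"review {i}-{j}" for j in range(5)]) for i in range(9)]
    for name, review in sample_search_results(demo, quotas=(2, 4, 3)):
        print(name, "|", review)
```

The default quotas of 30, 40, and 30 reviews come from the text; everything else, including the segment boundaries, is an illustrative assumption.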

Qualitative Analysis

We analyzed the data using content analysis.16,17 Three investigators developed a coding template. In developing our coding template, we wanted to mimic the patients’ experience. Therefore, we conceptualized the medical appointment process as three sequential stages: 1) prior to physician contact (calling to make the appointment, waiting for an appointment, interactions with staff, office environment, visit wait time); 2) face-to-face interaction with the physician (patients’ perception of their doctor’s competence, communication skills, clinical skills); and 3) follow-up to the encounter (physician’s follow-up, referrals, refills, patients’ overall satisfaction). Additionally, we searched the literature for factors that have been associated with patient health outcomes (doctor’s friendliness, empathy) and added those to our list as well. Two investigators (A.L. and A.D.) added to and refined the coding template by independently coding 50 different reviews. When patients mentioned a factor outside of our original coding scheme, we added it to the scheme. For example, we did not expect location of practice to become a theme in patient ratings, but we added it to our scheme because it emerged from the content analysis. Two investigators independently coded 328 (46%) reviews. We achieved high inter-rater reliability (kappa range 0.8–1.0 across codes); thus, the remaining reviews were coded by the lead investigator.18 We employed qualitative content analysis, utilizing both deductive and inductive reasoning.18 At the start of data collection we relied on concepts derived from our own medical experiences as well as patient satisfaction concepts found in the literature. As analysis of the data progressed, new concepts and variables of interest emerged from the reviews. Our final coding scheme incorporated concepts and variables from all three sources. We considered saturation to be reached when codes became redundant and no new codes were observed.19

The investigators reviewed and combined codes into larger themes by consensus. Thematic saturation was reached after approximately 100 reviews. All analyses were done using Atlas.ti software (Berlin, 1997). The investigators selected by consensus the most common themes for presentation in this paper.
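The inter-rater reliability reported above is a kappa statistic computed per code. The text does not say which kappa variant was used or how it was calculated; the sketch below assumes Cohen's kappa for two raters, with each review marked 1 if a given code was applied and 0 otherwise. The ratings shown are invented for illustration.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Cohen's kappa for two raters labelling the same items.

    kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement
    and p_e is the agreement expected by chance from each rater's
    marginal label frequencies.
    """
    if len(rater_a) != len(rater_b) or len(rater_a) == 0:
        raise ValueError("Both raters must label the same non-empty set of items.")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        # Both raters used a single identical label throughout.
        return 1.0
    return (observed - expected) / (1.0 - expected)


if __name__ == "__main__":
    # Hypothetical data: whether each of 10 reviews received one code.
    coder_1 = [1, 1, 0, 0, 1, 0, 1, 1, 0, 0]
    coder_2 = [1, 1, 0, 0, 1, 0, 1, 0, 0, 0]
    # Observed agreement is 9/10 and chance agreement is 0.5,
    # so kappa = (0.9 - 0.5) / (1 - 0.5).
    print(round(cohens_kappa(coder_1, coder_2), 2))
```

Running the function once per code over the double-coded reviews would give a range of kappa values like the one reported, under the stated assumptions.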

RESULTS

Our search strategy resulted in 712 reviews (representing 445 doctors); 397 (56%) from ratemds.com and 315 (44%) from Yelp.com. By design, reviews were largely evenly distributed by city: Chicago, 28%; San Francisco, 27%; New York, 26%; and Atlanta, 19%. Upon use of the websites we found that most doctors appearing at the beginning of our search had a higher number of reviews and a higher overall rating score, while doctors appearing at the end of our search had fewer reviews and/or a lower overall rating score.

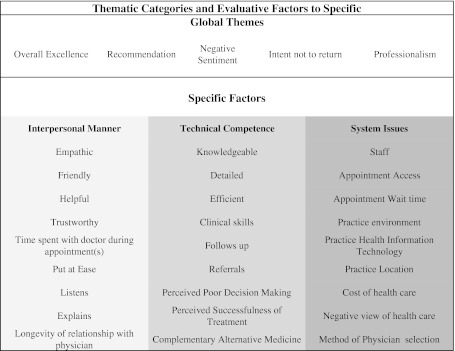

Reviews were first categorized as positive or negative. Next, in our analysis we made a clear distinction between global remarks and specific descriptions. Reviews categorized as global remarks consisted of general comments regarding the medical encounter or the doctor. In contrast, reviews that contained a more detailed account were categorized as specific descriptions and gave insight into the medical encounter or the doctor. Global statements were identified when a review lacked a specific description or action. For example, a global review might be “My doctor is wonderful, kind, and caring,” whereas a descriptive review might be “My doctor is wonderful, kind, and caring. He always listens to me and calls me to make sure I’m okay,” or “My doctor is wonderful because I never have to wait long to see him and he never makes me feel rushed.” If investigators could answer the question “Why does the patient perceive this doctor positively or negatively?” then the review was specific. If, however, the investigators could not identify why the patient perceived their doctor positively or negatively, then the review was global. Through sequential thematic analysis, three over-arching domains emerged within the reviews: interpersonal manner, technical competence, and system issues (Fig. 2). The interpersonal manner domain included items regarding the doctor’s characteristics, attentiveness, and communication skills. The technical competence domain included issues relating to the doctor’s perceived aptitude, clinical skills, and follow-up or thoroughness. The system-issues domain included healthcare components outside the patient–physician interaction, including office staff, access to the doctor, and office environment.

Figure 2.

Predominant Factors in Themes Emerging from a Qualitative Content Analysis of Online Evaluations of Internal and Family Medicine Physicians for Four U.S. Cities in 2009.

The following tables provide examples of online reviewer comments by theme.
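The Count and % Positive columns in these tables are simple tallies over coded mentions. The paper does not describe how they were computed, so the sketch below is only one plausible reading: it assumes each coded mention carries a positive or negative valence, and the function name and sample data are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def tally_codes(coded_mentions: Iterable[Tuple[str, bool]]) -> Dict[str, Tuple[int, int]]:
    """Return {code: (count, percent_positive)} from (code, is_positive) pairs.

    ASSUMPTION: every mention of a code was labelled positive or negative.
    """
    totals = defaultdict(lambda: [0, 0])  # code -> [all mentions, positive mentions]
    for code, is_positive in coded_mentions:
        totals[code][0] += 1
        if is_positive:
            totals[code][1] += 1
    return {
        code: (count, round(100 * positive / count))
        for code, (count, positive) in totals.items()
    }


if __name__ == "__main__":
    # Invented mentions for illustration only; not the study data.
    mentions = (
        [("Staff", True)] * 3
        + [("Staff", False)] * 2
        + [("Appointment wait time", False)]
    )
    for code, (count, pct_positive) in tally_codes(mentions).items():
        print(f"{code}: count={count}, % positive={pct_positive}")
```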

Global Remarks

Global reviews consisted of general comments regarding the medical encounter or the doctor (Table 1). They included comments about overall excellence, a recommendation of the doctor to others, expression of a negative sentiment, and, in a few cases, an expressed intent not to return. Representative quotes include “My doctor is wonderful and the staff is nice,” and “He is wonderful.”

Table 1.

Global Remarks

| Code | Representative Quotes | Count |
|---|---|---|
| Overall Excellence | Dr. B is a great doctor. | 171 |
| Recommendation | I love him and have recommended him to all my friends and family. | 108 |
| Negative Sentiment | I wouldn’t take my dog to him. | 53 |
| Intent not to return | I don’t have time to waste I will not go back. | 27 |

Specific Descriptions

In contrast, reviews with specific descriptions were more detailed, for example, “She always listens to what I have to say and answers all my questions,” and “Dr. X listened to all my symptoms and eliminated all the unnecessary testing, I no longer feel like my entire life revolves around pain.” Three dominant themes emerged from specific reviews: interpersonal manner, technical competence, and system issues.

Interpersonal Manner

“Dr. X is friendly and caring.” Empathy was a central code mentioned under the interpersonal manner theme, both as a positive (69%) and negative (lacking in empathy, 31%). Negative reviews included, “Worst Dr. I’ve ever seen no concern for patients + arrogance off the charts…he really don’t give a !@#%.” Positive interpersonal reviews described doctors as empathetic, friendly, helpful, and trustworthy. Moreover, satisfaction with time spent with the doctor and feeling unhurried came up often in the interpersonal theme. Communication skills including listening and explaining also came up often in this theme with the majority of reviews expressing satisfaction. Reviewers also frequently mentioned how long they have been a patient of the doctor (Table 2).

Table 2.

Interpersonal Manner

| Code | Representative Quotes (positive/negative) | Count | % Positive |
|---|---|---|---|
| Empathetic | Dr. A is so compassionate. / Not a people person. | 258 | 69 |
| Time spent with doctor during appointment(s) | Finally a Dr. who spends time with you and doesn’t watch the clock. / Towards the end of my physical I asked about a problem, and she said that would have to be discussed at another appointment. | 161 | 75 |
| Physician Listens | She listens to every issue I bring up. / This guy does not listen to what you have to say and hears what he wants to. | 111 | 88 |
| Longevity of relationship with physician | I have been with Dr. F for several years now. | 106 | -- |
| Friendly | Dr. C is friendly. | 102 | -- |
| Physician Explains | Takes the time to explain options for treatment. / Does not answer my questions | 49 | 94 |
| Nonjudgmental/Condescending | She makes embarrassing things not embarrassing. / Can have a snotty and demeaning attitude. | 44 | 50 |
| Helpful | Generally, helpful. | 34 | -- |
| Trustworthy | I feel confident in his abilities and I have never seen a doctor who seemed so invested and devoted to his patients! | 22 | -- |
| Puts patient at ease | She made me feel comfortable during the visit. | 17 | -- |
| Competent | He seemed competent. | 15 | -- |

Technical Competence

Codes most frequently mentioned in the technical competence theme included a description of the doctor as knowledgeable, detailed and efficient. Examples include “Not only did he go the extra mile in time and stitches to ensure minimal scarring, he also put medical "glue" to further ensure a good closure,” and “She is very detail oriented and takes her time to write everything down.” Reviews also mentioned satisfaction with physicians’ follow up regarding diagnosis and test results as well as satisfaction with the physicians’ referrals to other providers. Negative technical reviews were more focused on perceived doctor error including perceived poor decision making. Representative quotes include “She mis-prescribed a medicine I have been taking for years. I have no intention of wasting my time with her again,” and “Didn’t take me seriously when I knew something was wrong with me. Turns out I had acute leukemia and she didn’t recognize my symptoms or refer me to a specialist.” (Table 3)

Table 3.

Technical Competence

| Code | Representative Quotes (positive/negative) | Count | % Positive |
|---|---|---|---|
| Knowledgeable | He is always very knowledgeable. | 114 | -- |
| Detailed | Very thorough. | 94 | -- |
| Follows up | She was always on the ball with all her inquiries and test and test…and yes! She really does call with results!!!! / Never returns calls. | 86 | 80 |
| Clinical Skills | She asked me what I wanted to do what specialist I wanted to see as if I should know what to do with my health problems. / You can expect his course of treatment to be right on the dime. | 60 | 52 |
| Referrals | No matter who she recommends everything is right. / Sent me to a horrible GI. | 55 | 78 |
| Perceived Poor Decision Making | She mis-prescribed a medicine I’ve been taking for years. | 40 | -- |
| Perceived Successfulness of Treatment | He listened, explained what I had and gave me a pill. Problem fixed. / He prescribed the wrong medication and permanently disfigured my (body part). | 35 | 63 |
| Efficient | Addressed my medical concerns in an efficient manner. | 16 | -- |
| Complementary Alternative Medicine | He takes a holistic approach to health care and talks with me about alternative treatments. | 14 | -- |

System-Issues

The most common codes included staff, appointment access, appointment wait time, and practice characteristics including environment, health information technology, and location. For instance, “The office staff is a whole different can of worms. They are extremely hard to get a hold of in the first place (even during normal business hours) and seem like they are annoyed when you finally do get them on the phone.” Further, reviewers more commonly mentioned dissatisfaction with appointment wait time, “In addition, I usually spent between 30 and 60 minutes in his waiting room before I actually got to see him,” while they seemed more satisfied with their ability to make an appointment “I can’t say it’s easy to get in to see him all the time, but making an appointment is painless.” (Table 4)

Table 4.

System-Issues

| Code | Representative Quotes (positive/negative) | Count | % Positive |
|---|---|---|---|
| Staff | The office staff remembers your name. / Has a receptionist/nurse from hell. | 175 | 60 |
| Appointment wait time | His practice is busy, but I never have to wait for my appointment. / I waited more than 45 minutes for each of the 2 appointments I had with her. | 105 | 39 |
| Appointment access | Appointments same day if warranted. / Forget seeing him when you are sick too, very inflexible that way. Will offer an appointment 2 weeks out. | 69 | 57 |
| Practice Office environment | Such a pleasant environment for my care. / I also find the clutter, dusty fake flowers, and especially her dog (!!!) to be inappropriate for a doctor’s office. | 50 | 56 |
| Method of MD selection | My mom recommended her. | 45 | -- |
| Cost of Care | This doctor took blood test that came to over 3000 dollars in cost. | 35 | -- |
| Negative view of health care | I hate doctors. | 21 | -- |
| Practice Health Information Technology | He came in with his apple book and took notes while I talked. | 20 | -- |
| Practice Location | His practice is just down the block. / I drive an hour to see her, but it is worth it. | 14 | -- |

DISCUSSION

Patient review sites provide anyone the opportunity to review a doctor in an anonymous, self-driven, and unstructured format. The sites are free of cost, and provide site users an opportunity to post their unrestricted comments. Thus, they differ significantly from the traditional patient satisfaction surveys with which health systems and researchers typically assess patient satisfaction. First, the anonymity of Internet reviews confers both drawbacks and benefits. Patients are able to evaluate their doctors without worrying that criticism might change the quality of care they receive; conversely, anonymity means that reviewers are not accountable to the physicians they review. Second, traditional satisfaction assessments are distributed to patients by health systems or researchers, and assessments are limited to a single encounter or time frame. In contrast, online reviews allow patients to take the initiative when providing feedback, rather than being asked to complete a patient satisfaction form at a particular time. Internet review sites are independent of health systems, and patients may perceive them differently than a patient satisfaction questionnaire coming from a physician’s office. Third, results from traditional patient satisfaction questionnaires are pooled, while a single individual Internet review can have more influence than an individual patient’s survey responses. Fourth, online reviews are unstructured, whereas traditional patient satisfaction surveys often consist of closed-ended questions. Thus, issues not addressed in closed-ended patient satisfaction instruments may come to light in Internet reviews. Although Internet ratings of physicians remain incomplete, with a recent study finding that the majority of local physicians did not have reviews online,20 they are rapidly expanding and becoming increasingly important.

Because Internet ratings are unfiltered, public, and consist of individual rather than aggregated ratings, many physicians perceive a danger to their professional reputations.8,9,11 Our data suggest that many, if not most, Internet reviews espouse positive sentiments about primary care physicians.

Although global reviews did not provide information on the drivers of satisfaction and dissatisfaction, specific reviews could provide fresh insights. From these, we note patterns similar to those in prior patient satisfaction research,21 as follows. We found that trust and confidence in the doctor,22 ease of appointment making,23 and a good interpersonal relationship24 lead to patient satisfaction among Internet reviewers as well as in other settings.

We know that currently used patient satisfaction measures do not capture all patient perceptions; some patients are much more likely than others to complete a satisfaction survey, or to complain.25 Further, patient satisfaction is multidimensional, and therefore results must be interpreted with care.26 A review of 195 studies using patient satisfaction instruments found little evidence of reliability or validity.27 Although the evidence is mixed, previous studies of traditional satisfaction assessments reveal respondents to be older and less educated than non-respondents.25,28 Because patients completing Internet reviews are younger and more affluent, we suspect that Internet reviews may better capture the subset of patients less likely to respond to traditional patient satisfaction assessments, and thus may inform our understanding of the drivers of patient satisfaction for younger and more educated patients. It is also possible that widespread public availability of patient opinions of physicians may affect the way patients and physicians interact. Moreover, Internet reviews do reflect certain aspects of the patient–physician relationship that are known to relate to health behaviors. For example, perception of the doctor as empathetic and friendly, which was a common theme in Internet reviews, has been associated with adherence.29 In addition, satisfaction with the doctor’s communication skills, also found in these reviews, has been shown to improve patient satisfaction30 and adherence.31 Interestingly, patients did not tend to list specific qualifications, such as the prestige of their medical school or post-graduate training, in recommending their physicians.

Patient dissatisfaction appears from prior work to be more than the absence of satisfaction and has been associated with factors including a nonsocial doctor21 as well as difficulty making appointments21 and longer wait time.32 Similarly, we found that the themes of antagonistic physician, dissatisfaction with wait time, and difficulty making appointments were frequent in negative Internet reviews.

The subset of specific, negative reviews may be particularly useful for providers and health systems. First, negative interpersonal reviews underscore the importance of well-perceived bedside manner for a successful patient–physician interaction. In addition, our findings reaffirm that the care encounter extends beyond the patient–physician dyad; staff, access, and convenience all affect patients’ reviews of physicians. Such reviews could prompt efforts to make the office environment more patient-centered.

Nevertheless, our study has several limitations. First, demographic information is very limited. Based on the information available, it appears that persons who write Internet reviews of physicians are likely to differ from health care consumers in general. In particular, they are likely to be younger, healthier, and more affluent than health care users overall. Yelp.com maintains some demographic information on those who visit and subscribe to its site. Among its users, young adults aged 18 to 34 (46%) and college graduates (47%) are the largest groups, and 35% make more than $100,000 a year.33 Comparable information was not available from RateMDs.com, because it does not collect demographic information about its online reviewers. This complements the oft-cited response bias of traditional patient satisfaction surveys, in which more frequent health care users are more likely to respond.34,35 Second, we could not determine the exact algorithm for the order in which search results appear. We attempted to account for this potential bias by pre-specifying a purposive sampling strategy across the range of search results (to include physicians appearing at the top, middle, and bottom of search results). We also included only up to three reviews per physician. This cap limits our ability to draw conclusions about any individual physician, because we may not accurately capture the breadth of opinions about that individual. However, we judged it more important to avoid over-weighting our results towards characteristics of frequently-reviewed physicians, because we expect these to differ substantively from seldom-rated physicians. Our finding that positive results were more likely to appear at the beginning of the search supports this sampling approach. Third, we restricted our results to urban locations in order to have sufficient density of physician reviews; rural areas are likely to differ systematically. Fourth, we limited our study to internal medicine and family medicine. Patient relationships with obstetrician/gynecologists may be confined to a short duration (such as pregnancy) and may not be a primary care relationship.36,37 For pediatricians, the relationship is between the parent(s), patient, and provider,38 and this differs substantively from the one-on-one relationship between adults and their internal or family medicine providers. Thus we are hesitant to generalize our results to other primary care disciplines or to medical specialties.

Internet reviews of primary care physicians provide unfiltered patient perspectives on health care; as such, they provide useful insights into improving the patient–doctor relationship. It is not clear how medical practice will change with the advent of widespread, publicly-available physician reviews. The possibility of receiving a public review may alter communication between doctors and patients, and thus change the doctor–patient relationship, because online reviews shift the balance of authority in that relationship. Patients are now able to evaluate their doctor in a public forum with complete anonymity. Often, physicians cannot respond to concerns raised in Internet reviews without breaching patient confidentiality standards. These public, unedited, potentially inaccurate reviews can later be accessed by potential new patients and future employers, affecting a physician’s career and reputation. Additionally, to protect themselves, doctors may have patients sign mutual privacy agreements or other similar agreements in an effort to mitigate potential criticism.39–41 Although we cannot predict the exact effect, we do expect these reviews to influence trust and communication in the patient–physician relationship. Future studies should explore physician reactions to Internet reviews and observe their effect on medical practice and communication.

Acknowledgments

The authors would like to acknowledge Dr. Anna M. Nápoles and Dr. Dean Schillinger for their early advice on this project. Dr. Sarkar is supported by Agency for Healthcare Research and Quality K08 HS017594 and National Center for Research Resources KL2RR024130.

None of the funders had any role in design and conduct of the study; collection, management, analysis, or interpretation of the data; or preparation, review, or approval of the manuscript.

Conflict of Interest

None disclosed.

References

- 1.Shinchuk LM, Chiou P, Czarnowski V, Meleger AL. Demographics and attitudes of chronic-pain patients who seek online pain-related medical information: implications for healthcare providers. Am J Phys Med Rehabil;89(2):141-6. [DOI] [PubMed]

- 2.Hay MC, Strathmann C, Lieber E, Wick K, Giesser B. Why patients go online: multiple sclerosis, the Internet, and physician-patient communication. Neurologist. 2008;14(6):374–81. doi: 10.1097/NRL.0b013e31817709bb. [DOI] [PubMed] [Google Scholar]

- 3.Hay MC, Cadigan RJ, Khanna D, Strathmann C, Lieber E, Altman R, et al. Prepared patients: Internet information seeking by new rheumatology patients. Arthritis Rheum. 2008;59(4):575–82. doi: 10.1002/art.23533. [DOI] [PubMed] [Google Scholar]

- 4.Rochman B. Health group therapy. Why so many patients are sharing their medical data online. Time;175(5):47-8. [PubMed]

- 5.Frost J, Massagli M. PatientsLikeMe the case for a data-centered patient community and how ALS patients use the community to inform treatment decisions and manage pulmonary health. Chron Respir Dis. 2009;6(4):225–9. doi: 10.1177/1479972309348655. [DOI] [PubMed] [Google Scholar]

- 6.Fox S, Jones S. The Social Life of Health Information Pew Internet & American Life Project; 2009.

- 7.Feldman R. He may be friendly, but is your doctor competent? Indystar.com; 2010.

- 8.Pasternak A, Scherger JE. Online reviews of physicians: what are your patients posting about you? Fam Pract Manag. 2009;16(3):9–11. [PubMed] [Google Scholar]

- 9.Tuffs A. German doctors fear that performance rating websites may be libellous. (1468-5833 (Electronic)). [DOI] [PMC free article] [PubMed]

- 10.Aungst H. Patients say the darnedest things. You can’t stop online ratings, but you can stop fretting about them. Med Econ. 2008;85(23):27–9. [PubMed] [Google Scholar]

- 11.Beacon N, Margaret McCartney. Will doctor rating sites improve standard of care? BMJ. 2009;338:688–9. doi: 10.1136/bmj.b688. [DOI] [PubMed] [Google Scholar]

- 12.Hodgkin PK. Doctor rating sites. Web based patient feedback. BMJ. 2009;338:b1377. doi: 10.1136/bmj.b1377. [DOI] [PubMed] [Google Scholar]

- 13. Freudenheim M. Noted Rater of Restaurants Brings Its Touch to Medicine. New York Times; 2009.

- 14. Lagu T, Hannon NS, Rothberg MB, Lindenauer PK. Patients’ evaluations of health care providers in the era of social networking: an analysis of physician-rating websites. J Gen Intern Med;25(9):942-6.

- 15. Fost D. The Coffee Was Lousy. The Wait Was Long. New York: New York Times; 2008.

- 16. Borzekowski DL, Schenk S, Wilson JL, Peebles R. e-Ana and e-Mia: A content analysis of pro-eating disorder web sites. Am J Public Health;100(8):1526-34.

- 17. Jenssen BP, Klein JD, Salazar LF, Daluga NA, DiClemente RJ. Exposure to tobacco on the Internet: content analysis of adolescents’ Internet use. Pediatrics. 2009;124(2):e180–6. doi: 10.1542/peds.2008-3838.

- 18. Trochim W. The Research Methods Knowledge Base. 2nd ed. Cincinnati, OH: Atomic Dog Publishing; 2000.

- 19. Strauss A, Corbin J. Basics of qualitative research: Grounded theory procedures and techniques. Newbury Park, CA: Sage Publications, Inc.; 1990.

- 20. Lagu T, Hannon NS, Rothberg MB, Lindenauer PK. Patients’ Evaluations of Health Care Providers in the Era of Social Networking: An Analysis of Physician-Rating Websites. J Gen Intern Med.

- 21. Beck RS, Daughtridge R, Sloane PD. Physician-patient communication in the primary care office: a systematic review. J Am Board Fam Pract. 2002;15(1):25–38.

- 22. Robertson R, Dixon A, Grand J. Patient choice in general practice: the implications of patient satisfaction surveys. J Health Serv Res Policy. 2008;13(2):67–72. doi: 10.1258/jhsrp.2007.007055.

- 23. Gerard K, Salisbury C, Street D, Pope C, Baxter H. Is fast access to general practice all that should matter? A discrete choice experiment of patients’ preferences. J Health Serv Res Policy. 2008;13(Suppl 2):3–10. doi: 10.1258/jhsrp.2007.007087.

- 24. Platonova EA, Kennedy KN, Shewchuk RM. Understanding patient satisfaction, trust, and loyalty to primary care physicians. Med Care Res Rev. 2008;65(6):696–712. doi: 10.1177/1077558708322863.

- 25. Sitzia J, Wood N. Response rate in patient satisfaction research: an analysis of 210 published studies. Int J Qual Health Care. 1998;10(4):311–7. doi: 10.1093/intqhc/10.4.311.

- 26. Sitzia J, Wood N. Patient satisfaction: a review of issues and concepts. Soc Sci Med. 1997;45(12):1829–43. doi: 10.1016/S0277-9536(97)00128-7.

- 27. Sitzia J. How valid and reliable are patient satisfaction data? An analysis of 195 studies. Int J Qual Health Care. 1999;11(4):319–28. doi: 10.1093/intqhc/11.4.319.

- 28. Lasek RJ, Barkley W, Harper DL, Rosenthal GE. An evaluation of the impact of nonresponse bias on patient satisfaction surveys. Med Care. 1997;35(6):646–52. doi: 10.1097/00005650-199706000-00009.

- 29. Davis MS. Variations in patients’ compliance with doctors’ advice: an empirical analysis of patterns of communication. Am J Public Health Nations Health. 1968;58(2):274–88. doi: 10.2105/AJPH.58.2.274.

- 30. Comstock LM, Hooper EM, Goodwin JM, Goodwin JS. Physician behaviors that correlate with patient satisfaction. J Med Educ. 1982;57(2):105–12. doi: 10.1097/00001888-198202000-00005.

- 31. Orth JE, Stiles WB, Scherwitz L, Hennrikus D, Vallbona C. Patient exposition and provider explanation in routine interviews and hypertensive patients’ blood pressure control. Health Psychol. 1987;6(1):29–42. doi: 10.1037/0278-6133.6.1.29.

- 32. Anderson RT, Camacho FT, Balkrishnan R. Willing to wait?: the influence of patient wait time on satisfaction with primary care. BMC Health Serv Res. 2007;7:31. doi: 10.1186/1472-6963-7-31.

- 33. YELP. An introduction to Yelp. 2010.

- 34. Mazor KM, Clauser BE, Field T, Yood RA, Gurwitz JH. A demonstration of the impact of response bias on the results of patient satisfaction surveys. Health Serv Res. 2002;37(5):1403–17. doi: 10.1111/1475-6773.11194.

- 35. Barkley WM, Furse DH. Changing priorities for improvement: the impact of low response rates in patient satisfaction. Jt Comm J Qual Improv. 1996;22(6):427–33. doi: 10.1016/s1070-3241(16)30245-0.

- 36. Leader S, Perales PJ. Provision of primary-preventive health care services by obstetrician-gynecologists. Obstet Gynecol. 1995;85(3):391–5. doi: 10.1016/0029-7844(94)00411-6.

- 37. Shear CL, Gipe BT, Mattheis JK, Levy MR. Provider continuity and quality of medical care. A retrospective analysis of prenatal and perinatal outcome. Med Care. 1983;21(12):1204–10. doi: 10.1097/00005650-198312000-00007.

- 38. Tates K, Meeuwesen L. Doctor-parent-child communication. A (re)view of the literature. Soc Sci Med. 2001;52(6):839–51. doi: 10.1016/S0277-9536(00)00193-3.

- 39. Tanner L. Doctors seek gag orders to stop patients’ online reviews. USA Today: The Associated Press; 2009.

- 40. Lee TB. Doctors and dentists tell patients, "all your review are belong to us". 2011.

- 41. Church C. Mutual privacy agreements: a tool for medical practice protection. Ridge Business Journal. May 4, 2009.