Abstract

One of the challenges with functional data is incorporating geometric structure, or local correlation, into the analysis. This structure is inherent in the output from an increasing number of biomedical technologies, and a functional linear model is often used to estimate the relationship between the predictor functions and scalar responses. Common approaches to the problem of estimating a coefficient function typically involve two stages: regularization and estimation. Regularization is usually done via dimension reduction, projecting onto a predefined span of basis functions or a reduced set of eigenvectors (principal components). In contrast, we present a unified approach that directly incorporates geometric structure into the estimation process by exploiting the joint eigenproperties of the predictors and a linear penalty operator. In this sense, the components in the regression are ‘partially empirical’ and the framework is provided by the generalized singular value decomposition (GSVD). The form of the penalized estimation is not new, but the GSVD clarifies the process and informs the choice of penalty by making explicit the joint influence of the penalty and predictors on the bias, variance and performance of the estimated coefficient function. Laboratory spectroscopy data and simulations are used to illustrate the concepts.

Keywords and phrases: Penalized regression, generalized singular value decomposition, regularization, functional data

1. Introduction

The coefficient function, β, in a functional linear model (fLM) represents the linear relationship between responses, y, and predictors, x, either of which may appear as a function. We consider the special case of scalar-on-function regression, formally written as y = ∫_I x(t)β(t) dt + ε, where x is a random function, square integrable on a closed interval I ⊂ ℝ, and ε is a vector of random i.i.d. mean-zero errors. In many instances, one has an approximate idea about the informative structure of the predictors, such as the extent to which they are smooth, oscillatory, peaked, etc. Here we focus on an analytical framework for incorporating such information into the estimation of β.

The analysis of data in this context involves a set of n responses $\{y_i\}_{i=1}^n$ corresponding to a set of predictor curves $\{x_i\}_{i=1}^n$, each arising as a discretized sampling of an idealized function; i.e., xi ≡ (xi(t1), …, xi(tp)), for some sampling points t1 < · · · < tp in I. In particular, the concept of geometric or spatial structure implies an order relation among the index parameter values. We assume the predictor functions have been sampled equally and densely enough to capture geometric structure of the type typically attributed to functions in (subspaces of) L2(I). For this, it will be assumed that p > n, although this condition is not necessary for our discussion.

Several methods for estimating β are based on the eigenfunctions associated with the auto-covariance operator defined by the predictors [16, 32]. These eigenfunctions provide an empirical basis for representing the estimate and are the basis for the usual ordinary least-squares and principal-component estimates in multivariate analysis. The book by Ramsay and Silverman [38] summarizes a variety of estimation methods that involve some combination of the empirical eigenfunctions and smoothing, using B-splines or other techniques, but none of these methods provides an analytically tractable way to incorporate presumed structure directly into the estimation process. The approach presented here achieves this by way of a penalty operator, ℒ, defined on the space of predictor functions.

The joint influence of the penalty and predictors on the estimated coefficient function is made explicit by way of the generalized singular value decomposition (GSVD) for a matrix pair. Just as the ordinary SVD provides the ingredients for an ordinary least squares estimate (in terms of the empirical basis), the GSVD provides a natural way to express a penalized least-squares estimate in terms of a basis derived from both the penalty and the predictors. We describe this in terms of the n × p matrix of sampled predictors, X, and an m × p discretized penalty operator, L. The general formulation is familiar as we consider estimates of β that arise from a squared-error loss with quadratic penalty:

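$$\tilde\beta_{\alpha,L} = \arg\min_{\beta}\left\{ \|y - X\beta\|^2 + \alpha\,\|L\beta\|^2 \right\}. \tag{1}$$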

What distinguishes our presentation from others using this formulation is an emphasis on the joint spectral properties of the pair (X, L), as arise from the GSVD. We investigate the analytical role played by L in imposing structure on the estimate and focus on how the structure of L’s least-dominant singular vectors should be commensurate with the informative structure of β.

In a Bayesian view, one may think of L as implementing a prior that favors a coefficient function lying near a particular subspace; this subspace is determined jointly by X and L. We note, however, that informative priors must come from somewhere and while they may come from expectations regarding smoothness, other information often exists—including pilot data, scientific knowledge or laboratory and instrumental properties. Our presentation aims to elucidate the role of L in providing a flexible means of implementing informative priors, regardless of their origin.

The general concept of incorporating “structural information” into regularized estimation for functional and image data is well established [2, 12, 36]. Methods for penalized regression have adopted this by constraining high-dimensional problems in various “structured” ways (sometimes with use of an L1 norm): locally-constant structure [49, 46], spatial smoothness [20], correlation-based constraints [52], and network-dependence structure described via a graph [26]. These general penalties have been motivated by a variety of heuristics: Huang et al. [24] refer to the second-difference penalty as an “intuitive choice”; Hastie et al. [20] refer to a “structured penalty matrix [which] imposes smoothness with regard to an underlying space, time or frequency domain”; Tibshirani and Taylor [50] note that the rows of L should “reflect some believed structure or geometry in the signal”; and the penalties of Slawski et al. [46] aim to capture “a priori association structure of the features in more generality than the fused lasso.”

The most common penalty is a (discretized) derivative operator, motivated by the heuristic of penalizing roughness (see [21, 38]). Our perspective on this is more analytical: since the eigenfunctions of the second-derivative operator ℒ = 𝒟² (with zero boundary conditions on [0, 1]) are of the form ϕ(t) = sin(kπt), with eigenvalues of magnitude k²π² (k = 1, 2, …), ℒ = 𝒟² implements the assumption that the coefficient function is well represented by low-frequency trigonometric functions. This is in contrast to ridge regression (L = I), which imposes no geometric structure. Although not typically viewed this way, the choice of ℒ = 𝒟², or any differential operator, implies a favored basis for expansion of the estimate.
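The eigenstructure claim can be checked numerically. The following is a minimal sketch, assuming a uniform grid of interior points on [0, 1] and the standard three-point second-difference matrix with zero boundary values; the grid size `p` and frequency index `k` are illustrative choices, not values from the text.

```python
import numpy as np

p = 50                                   # number of interior grid points (illustrative)
h = 1.0 / (p + 1)                        # grid spacing on [0, 1]
t = np.arange(1, p + 1) * h              # interior grid points t_1, ..., t_p

# Three-point second-difference matrix with zero (Dirichlet) boundary values.
D2 = (np.diag(-2.0 * np.ones(p))
      + np.diag(np.ones(p - 1), 1)
      + np.diag(np.ones(p - 1), -1)) / h**2

k = 3                                    # frequency index (illustrative)
phi = np.sin(k * np.pi * t)              # sampled eigenfunction sin(k*pi*t)

# Exact eigenvalue of the discrete operator for this sampled sine.
lam = -(4.0 / h**2) * np.sin(k * np.pi * h / 2.0) ** 2

# D2 @ phi should equal lam * phi up to rounding error.
residual = np.max(np.abs(D2 @ phi - lam * phi))
```

On this grid the discrete eigenvalue `lam` is close to −k²π², so penalizing ||Lβ|| with this L shrinks high-frequency sine components of β far more strongly than low-frequency ones.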

A purely empirical basis composed of a few dominant right singular vectors of X is a common and theoretically tractable choice. This is the essence of principal component regression (PCR), and these vectors also form the basis for a ridge estimate. Although this empirical basis does not technically impose local spatial structure (no order relation among the index parameter values is used), it may be justified by arguing that a few principal component vectors capture the “greatest part” of a set of predictors [17]. Properties of this approach for signal regression are the focus of [7] and [16]. The functional data analysis framework of Ramsay and Silverman [38] provides two formulations of PCR: one in which the predictor curves are themselves smoothed prior to construction of principal components (chap. 8), and another that incorporates a roughness penalty into the construction of principal components (chap. 9), as originally proposed in [45]. In a related presentation on signal regression, Marx and Eilers [30] proposed a penalized B-spline approach in which predictors are transformed using a basis external to the problem (B-splines) and the estimated coefficient function is derived using the transform coefficients. Combining ideas from [30] and [21], the “smooth principal components” method of [8] projects predictors onto the dominant eigenfunctions to obtain an estimate, then uses B-splines in a procedure that smooths the estimate. Reiss and Ogden [40] provide a thorough study of several of these methods and propose modifications that include two versions of PCR using B-splines and second-derivative penalties: FPCR_C applies the penalty to the construction of the principal components (cf. [45]), while FPCR_R incorporates the penalty into the regression (cf. [38]).

In the context of nonparametric regression (X = I) the formulation (1) plays a dominant role for smoothing [54]. Related to this, Heckman and Ramsay [22] proposed a differential equations model-based estimate of a function μ whose properties are determined by a linear differential operator chosen from a parameterized family of differential equations, Lμ = 0. In this context, however, the GSVD is irrelevant since X does not appear and the role of L is relatively transparent.

Algebraic details on the GSVD as it relates to penalized least-squares are given in section 3, and analytic expressions for various properties of the estimation process are described in section 3.2. Intuitively, smaller bias is obtained by an informed choice of L (the goal being small Lβ). The effect of such a choice on the variance is described analytically. Section 4 describes several classes of structured penalties including two previously-proposed special cases that were justified by numerical simulations. The targeted penalties of subsection 4.2 are studied in more detail in section 5 including an analysis of the mean squared error for a family of penalized estimates which encompasses the ridge, principal-component and James-Stein estimates.

The assumptions on L here are increasingly restrictive to the point where the estimates are only minor extensions of these well-studied estimates. The goal, however, is to analytically describe the substantial gains achievable by even mild extensions of these established methods.

In applications the selection of the tuning parameter, α in (1), is important and so Section 6 describes our application of REML-based estimation for this. Numerical illustrations are provided in section 7: the simulation in subsection 7.1 is motivated by Reiss and Ogden’s study of fLMs [40]; 7.2 presents a simulation using experimentally-derived Raman spectroscopy curves in which the “true” β has naturally-occurring (laboratory) structure; and section 7.3 presents an application based on experimentally collected spectroscopy curves representing varied biochemical (nanoparticle) concentrations. An appendix looks at the simulation studied by Hall and Horowitz [16]. We begin in section 2 with a brief setup for notation and an introductory example. Note that for any L ≠ I, the estimated β is not given in terms of the ordinary empirical singular vectors (of X), but rather in terms of a “partially empirical” basis arising from a simultaneous diagonalization of X′X and L′L via the GSVD. Hence, for brevity, we refer to β̃α,L as a PEER (partially empirical eigenvector for regression) estimate whenever L ≠ I.

2. Background and simple example

Let β represent a linear functional on L2(I) defining a linear relationship y = ∫_I x(t)β(t) dt + ε (observed with error, ε) between a response, y, and random predictor function, x ∈ L2(I). We assume a set of n scalar responses $\{y_i\}_{i=1}^n$ corresponding to the set of n predictors, $\{x_i\}_{i=1}^n$, each discretely sampled at common locations in I. Denote by X the n × p matrix whose ith row is a p-dimensional vector, xi, of discretely sampled function values, and whose columns are centered to have mean 0. The notation 〈·, ·〉 will be used to denote the inner product on either L2(I) or $\mathbb{R}^p$, depending on the context.

The empirical covariance operator is K = (1/n)X′X, but for functional predictors, typically p > n or else K is ill-conditioned or rank deficient. In this case, there are either infinitely many least-squares solutions, β̂ ≡ arg minβ ||y − Xβ||², or else any such solution is highly unstable and of little use. The least-squares solution having minimum norm is unique, however, and it can be obtained directly by the singular value decomposition (SVD): X = UDV′ where the left and right singular vectors, uk and vk, are the columns of U and V, respectively, and D = [D1 0], where D1 = diag{σk}, typically ordered as σ1 ≥ σ2 ≥ · · · ≥ σr > 0 (r = rank(X)). In terms of the SVD of X, the minimum-norm solution is $\hat\beta^{+} = X^{\dagger}y = \sum_{\sigma_k \neq 0} (1/\sigma_k)(u_k'y)\,v_k$, where X† denotes the Moore-Penrose inverse of X: X† = VD†U′, where D† = diag{1/σk if σk ≠ 0; 0 if σk = 0}. The orthogonal vectors that form the columns of V are the eigenvectors of X′X and are sometimes referred to as a Karhunen-Loève (K-L) basis for the row space of X.

The solution β̂+ is Marquardt’s generalized inverse estimator whose properties are discussed in [29]. For functional data, β̂+ is an unstable, meaningless solution. One obvious fix is to truncate the sum to d < r terms so that the retained singular values σ1, …, σd are bounded away from zero. This leads to the truncated singular value or principal component regression (PCR) estimate: $\hat\beta_{PCR}^{\,d} = \sum_{k=1}^{d} (1/\sigma_k)(u_k'y)\,v_k = V_d\,\mathrm{diag}\{1/\sigma_k\}_{k=1}^{d}\,U_d'y$, where here, and subsequently, we use the notation Ad ≡ col[a1, …, ad] to denote the first d columns of a matrix A.
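The minimum-norm and truncated (PCR) estimates can be written directly from the SVD. The sketch below uses synthetic Gaussian data; the sizes `n`, `p`, the truncation level `d`, and the rank tolerance are assumptions made only for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
n, p, d = 20, 60, 5                      # illustrative sizes; d is the PCR truncation level
X = rng.standard_normal((n, p))
X -= X.mean(axis=0)                      # center columns, as assumed for X in the text
y = rng.standard_normal(n)

# Thin SVD: X = U diag(s) Vt, singular values in decreasing order.
U, s, Vt = np.linalg.svd(X, full_matrices=False)
r = int(np.sum(s > 1e-10 * s[0]))        # numerical rank (tolerance is an assumption)

# Minimum-norm least-squares solution: sum over the r nonzero singular values.
beta_plus = Vt[:r].T @ ((U[:, :r].T @ y) / s[:r])

# PCR estimate: keep only the d dominant terms of the same sum.
beta_pcr = Vt[:d].T @ ((U[:, :d].T @ y) / s[:d])
```

Because the terms of the sum are mutually orthogonal, truncation can only shrink the norm of the estimate; the terms that are dropped are exactly those divided by the smallest singular values.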

When L = I, the minimizer in (1) is the ridge estimate due to A. E. Hoerl [23],

$$\tilde\beta_{\alpha,I} = (X'X + \alpha I)^{-1}X'y = \sum_{k=1}^{r} \frac{\sigma_k^2}{\sigma_k^2+\alpha}\,\frac{1}{\sigma_k}\,(u_k'y)\,v_k, \tag{2}$$

or, β̃α,I = V Fα D†U′y, where Fα = diag{σk²/(σk² + α)}. The factor Fα acts to counterweight, rather than truncate, the terms 1/σk as they get large. This is one of many possible filter factors which address problems of ill-determined rank (for more, see [12, 19, 33]). Weighted (or generalized) ridge regression replaces L = I with a diagonal matrix whose entries downweight those terms corresponding to the most variation [23]. Other “generalized ridge” estimates replace L = I by a discretized second-derivative operator, L = 𝒟². Indeed, the Tikhonov-Phillips form of regularization (1) has a long history in the context of differential equations [51, 36] and image analysis [15, 33] with emphasis on numerical stability; see also [28]. In a linear model context, the smoothing imposed by this penalty was mentioned by Hastie and Mallows [21], discussed in Ramsay and Silverman [38], and used (on the space of spline-transform coefficients) by Marx and Eilers [31], among others. The following simple example illustrates basic behavior for some of these penalties alongside an idealized PEER penalty.
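The filter-factor view of the ridge estimate can be illustrated as follows. This is a sketch on synthetic data (sizes and `alpha` are arbitrary assumptions): it forms the factors σk²/(σk² + α) from the SVD and compares the resulting series with the closed-form ridge solution.

```python
import numpy as np

rng = np.random.default_rng(1)
n, p, alpha = 20, 60, 2.0                # illustrative sizes and tuning parameter
X = rng.standard_normal((n, p))
y = rng.standard_normal(n)

U, s, Vt = np.linalg.svd(X, full_matrices=False)

# Filter factors: near 1 for large singular values, near 0 for small ones.
f = s**2 / (s**2 + alpha)

# Ridge estimate as a filtered SVD series.
beta_ridge_svd = Vt.T @ (f * (U.T @ y) / s)

# Ridge estimate from the closed form (X'X + alpha I)^{-1} X'y.
beta_ridge = np.linalg.solve(X.T @ X + alpha * np.eye(p), X.T @ y)
```

Replacing `f` with an indicator of the first d terms gives the PCR estimate, which is the sense in which ridge counterweights rather than truncates.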

2.1. A simple example

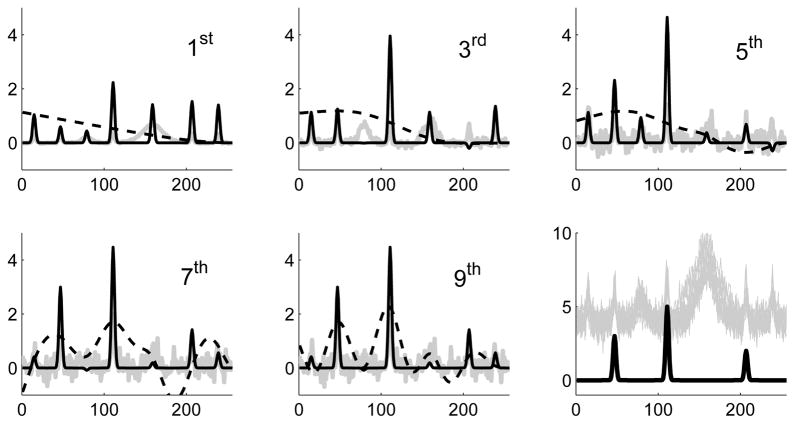

We consider a set of n = 50 bumpy predictor curves {xi} discretely sampled at p = 250 locations, as displayed in gray in the last panel of Figure 1. The true coefficient function, β, is displayed in black in this same panel. The responses are defined as yi = 〈xi, β〉 + εi (εi normal, uncorrelated mean-zero errors), and hence depend on the amplitudes of β’s three bumps centered at locations t = 45, 110, 210.

Fig 1. Partial sums of penalized estimates.

The first five odd-numbered partial sums from (7) for three penalties: 2nd-derivative (dashed), ridge (gray), targeted PEER (black; see text in sections 2.1 and 7.1). The last panel displays β (black) and 15 predictors, xi (gray), from the simulation.

A detailed simulation with complete results is provided in section 7.1. Here we simply illustrate the estimation process for L = I, as in (2), in comparison with L = 𝒟² and an idealized PEER penalty. The latter is constructed using a visual inspection of the predictors and lightly penalizes the subspace spanned by such structure, specifically, bumps centered at all visible locations (approximately t = 15, 45, 80, 110, 160, 210, 240).

The first five panels serve to emphasize the role played by the structure of basis vectors that comprise the series expansion in (2) (in terms of ordinary singular vectors) versus the analogous expansion (see (7)) in terms of generalized singular vectors. In particular, Figure 1 shows several partial sums of (7) for these three penalties. The ridge process (gray) is, naturally, dominated by the right singular vectors of X which become increasingly noisy in successive partial sums. The second-derivative penalized estimate (dashed) is dominated by low-frequency structure, while the targeted PEER estimate converges quickly to the informative features.

In this toy example, visual structure (spatial location) is used to define a regularization process that easily outperforms uninformed methods of penalization. Less visual examples, where the penalty is defined by a set of laboratory-derived structures (in Raman spectroscopy curves), are given in sections 7.2 and 7.3; see Figure 2. In that setting, and in general, the role played by L is appropriately viewed in terms of a preferred subspace in $\mathbb{R}^p$ determined by its singular vectors. Algebraic details about how structure in the estimation process is determined jointly by X and L ≠ I are described next.

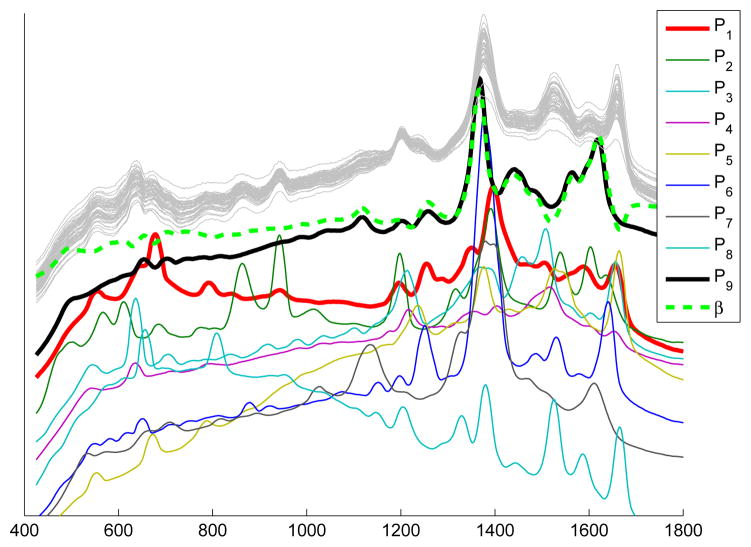

Fig 2.

Nine pure COIN spectra, P1, …, P9, and a coefficient function, β (each shifted for display). β arises as a solution to the fLM in which y denotes concentrations of P9 in an in silico mixture of 50 COIN spectra, xi (light gray). This β is used in the simulation study of Section 7.2.

3. Penalized least squares and the GSVD

Of the many methods for estimating a coefficient function discussed in the Introduction, nearly all are aimed at imposing geometric or “functional” structure on the process via the use of basis functions in some manner. An alternative to choosing a basis outright is to exploit the structure imposed by an informed choice of penalty operator. The basis, determined by a pair (X, L), can be tailored toward structure of interest by the choice of L. When this is carried out in the least-squares setting of (1), the algebraic properties of the GSVD explicitly reveal how the structure of the estimate is inherited from the spectral properties of (X, L).

3.1. The GSVD

For a given linear penalty L and parameter α > 0, the estimate in (1) takes the form

$$\tilde\beta_{\alpha,L} = (X'X + \alpha L'L)^{-1}X'y. \tag{3}$$

This cannot be expressed using the singular vectors of X alone, but the generalized singular value decomposition of the pair (X, L) provides a tractable and interpretable series expansion. The GSVD appears in the literature in a variety of forms and notational conventions. Here we provide the necessary notation and properties of the GSVD for our purposes (see, e.g., [19]) but refer to [4, 13, 35] for a complete discussion and details about its computation. See also the comments of Bingham and Larntz [3].

Assume X is an n × p matrix (n ≤ p) of rank n, and L is an m × p matrix (m ≤ p) of rank m. We also assume that n ≤ m ≤ p ≤ m + n, and that the rank of the (n + m) × p matrix Z := [X′ L′]′ is p. A unique solution is guaranteed if the null spaces of X and L intersect trivially: Null(L) ∩ Null(X) = {0}. This is not necessary for implementation, but it is natural in our applications and simplifies the notation. In addition, the condition p > n is not required, but rather than develop notation for multiple cases, this will be assumed.

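Given X and L, the following matrices exist and form the decomposition below: an n × n matrix U and an m × m matrix V, each with orthonormal columns, U′U = I, V′V = I; diagonal matrices S (n × n) and M (m × m); and a nonsingular p × p matrix W such that

$$X = U\,[\,0 \;\; S\,]\,W^{-1}, \qquad L = V\,[\,M \;\; 0\,]\,W^{-1}. \tag{4}$$

Here the zero blocks are n × (p − n) and m × (p − m), and S and M are of the form $S = \begin{bmatrix} S_1 & 0 \\ 0 & I_{p-m} \end{bmatrix}$ and $M = \begin{bmatrix} I_{p-n} & 0 \\ 0 & M_1 \end{bmatrix}$, whose submatrices S1 and M1 have l := n + m − p diagonal entries ordered as

$$0 \le \sigma_1 \le \cdots \le \sigma_l \le 1, \qquad 1 \ge \mu_1 \ge \cdots \ge \mu_l \ge 0, \qquad \sigma_k^2 + \mu_k^2 = 1. \tag{5}$$

Writing S and M from here on for the zero-padded n × p and m × p factors in (4), these matrices satisfy

$$W'X'XW = S'S, \qquad W'L'LW = M'M, \tag{6}$$

with S′S + M′M = I.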

Denote the columns of U, V and W by uk, vk and wk, respectively. When L = I, the generalized singular vectors uk and vk are those in the ordinary SVD of X (as denoted in Section 2) but their ordering is reversed. In this case, the corresponding ordinary singular values are equal to γk := σk/μk for μk > 0. When L ≠ I, however, the GSVD vectors and values of the pair (X, L) differ from those in the SVD of X. By the convention for ordering the GS values and vectors, the last few columns of W span the subspace Null(L) (or, if Null(L) is trivial, they correspond to the smallest GS values, μk). We set d = dim(Null(L)) and note that μk = 0 for k > n − d. In the special case that m = p and L is a p × p nonsingular matrix, we have L = VMW⁻¹ and XL⁻¹ = U(SM†)V′, which connects the SVD of XL⁻¹ to the GSVD of (X, L). In general, we define the n × m matrix Γ := SM†, whose l × l diagonal block has entries γk = σk/μk for μk > 0, and γk = 0 for μk = 0.

Now, equation (6) and some algebra gives (X′X + αL′L)−1 = W(S′S + αM′M)−1W′, and so β̃α,L = W (S′S + αM′M)−1S′U′y. A consequence of the ordering adopted for the GS values and vectors is that the first p−n columns of W don’t enter into the expression for β̃α,L; see equation (4). Therefore, we will re-index the columns of W to reflect this and also so that the indexing coincides with that established for the GS values and vectors in (5). That is, denote the columns of W as follows: W:= col[w(1), …, w(p−n) | w1, …, wn−d | wn−d+1, …, wn]. Therefore, the L-penalized estimate can be expressed as a series in terms of the GSVD as

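$$\tilde\beta_{\alpha,L} = \sum_{k=1}^{n-d} \frac{\sigma_k^2}{\sigma_k^2+\alpha\mu_k^2}\,\frac{1}{\sigma_k}\,(u_k'y)\,w_k \;+\; \sum_{k=n-d+1}^{n} (u_k'y)\,w_k. \tag{7}$$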

This GSV expansion corresponds to a new basis for the estimation process: the estimate is expressed in terms of GS vectors {wk} determined jointly by X and L; cf. the ridge estimate in (2).
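The simultaneous diagonalization behind this expansion can be sketched numerically without a dedicated GSVD routine. The code below is not a LAPACK-style GSVD: as a stand-in, it builds a matrix W with W′(X′X + L′L)W = I and W′X′XW diagonal by a Cholesky factorization followed by a symmetric eigendecomposition, which yields σk² and μk² = 1 − σk² directly. The data, the first-difference penalty, and all variable names are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(2)
n, p, alpha = 15, 40, 0.5                # illustrative sizes and tuning parameter
X = rng.standard_normal((n, p))
y = rng.standard_normal(n)

# First-difference penalty, (p-1) x p; its null space is the constant vector.
L = np.diff(np.eye(p), axis=0)

A = X.T @ X
B = A + L.T @ L                          # positive definite when Null(X) and Null(L) meet trivially
C = np.linalg.cholesky(B)                # B = C C'
Cinv = np.linalg.inv(C)

# Symmetric eigenproblem for C^{-1} A C^{-T}; eigenvalues are sigma_k^2 in [0, 1].
lam, Qm = np.linalg.eigh(Cinv @ A @ Cinv.T)
W = Cinv.T @ Qm                          # W' B W = I and W' A W = diag(lam)

sigma2 = np.clip(lam, 0.0, 1.0)          # sigma_k^2 (clipped against rounding)
mu2 = 1.0 - sigma2                       # mu_k^2, since S'S + M'M = I

# Penalized estimate in the jointly diagonalizing basis W.
beta_gsvd = W @ ((W.T @ (X.T @ y)) / (sigma2 + alpha * mu2))

# Same estimate from the closed form (X'X + alpha L'L)^{-1} X'y.
beta_direct = np.linalg.solve(A + alpha * (L.T @ L), X.T @ y)
```

In this sketch p − n of the values σk² are numerically zero (directions X does not see) and one equals 1 (the constant vector spanning Null(L)), mirroring the block structure described for S and M.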

For brevity, set o:= n − d and recall that Ao denotes the first n − d columns of a matrix A. Now denote by Aφ the last d columns of A. In particular, the range of Wφ is Null(L). Using this notation,

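$$U = [\,U_o \mid U_\varphi\,], \qquad W = [\,w_{(1)}, \ldots, w_{(p-n)} \mid W_o \mid W_\varphi\,], \tag{8}$$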

and equation (7) may be written concisely as

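$$\tilde\beta_{\alpha,L} = W_o F_o S_o^{-1} U_o'\,y + W_\varphi U_\varphi'\,y, \tag{9}$$

where $F_o := \mathrm{diag}\{\sigma_k^2/(\sigma_k^2 + \alpha\mu_k^2)\}_{k=1}^{n-d}$ and $S_o := \mathrm{diag}\{\sigma_k\}_{k=1}^{n-d}$.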

In summary, the utility of a penalty L depends on whether the true coefficient function shares structural properties with this GSVD basis, {wk}. With regard to this, the importance of the parameter α may be reduced by a judicious choice of L since the terms in (7) corresponding to the vectors {wk: μk = 0} are independent of the parameter α [53].

As we’ll see, bias enters the estimate to the extent that the vectors {wk: μk ≠ 0} appear in the expansion (7). The portion of β̃α,L that extends beyond the subspace Null(L) is constrained by a sphere (of radius determined by α); this portion corresponds to bias. Hence, L may be chosen in such a way that the bias and variance of β̃α,L arise from a specific type of structure, potentially decreasing bias without increasing complexity of the model. As a common example, L = 𝒟² introduces a bias toward smoothness with structure imposed by the low-frequency trigonometric functions that correspond to its smallest eigenvalues.

3.2. Bias and variance and the choice of penalty operator

Begin by observing that the penalized estimate β̃α,L in (3) is a linear transformation of any solution to the normal equations. Indeed, define X# := (X′X + αL′L)⁻¹X′, so that β̃α,L = X#y, and note that if β̂ denotes any solution to X′Xβ = X′y, then β̃α,L = X#Xβ̂; in terms of the true coefficient function, β̃α,L = X#Xβ + X#ε. The resolution operator X#X reflects the extent to which the estimate in (7) is linearly transformed relative to an exact solution. In particular, E(β̃α,L) = X#Xβ. Additionally, we have bias(β̃α,L) = (I − X#X)β = α(X′X + αL′L)⁻¹L′Lβ, and so ||bias(β̃α,L)|| ≤ ||α(X′X + αL′L)⁻¹L′|| ||Lβ||. Hence bias can be controlled by the choice of L, with an estimate being unbiased whenever Lβ = 0. There is a tradeoff, of course, and equation (11) below quantifies the effect on the variance as determined by Wφ (i.e., Null(L)) if Null(L) is chosen to be too large.

More generally, the decompositions in (4) lead to an expression for the resolution matrix as X#X = W(S′S + αM′M)⁻¹S′SW⁻¹, and

$$I - X^{\#}X = \alpha\,W(S'S + \alpha M'M)^{-1}M'M\,W^{-1}.$$

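For notational convenience, define W̃ := (W′)⁻¹ (note, W̃ plays a role analogous to V ≡ (V′)⁻¹ in the SVD). The bias of β̃α,L can be expressed as

$$\mathrm{bias}(\tilde\beta_{\alpha,L}) = (I - X^{\#}X)\beta = \sum_{j=1}^{p-n} (\tilde w_{(j)}'\beta)\,w_{(j)} + \sum_{k=1}^{n-d} \frac{\alpha\mu_k^2}{\sigma_k^2+\alpha\mu_k^2}\,(\tilde w_k'\beta)\,w_k, \tag{10}$$

where w̃k is the kth column of W̃, and the w(j)’s (with corresponding columns w̃(j) of W̃) come from the first p − n columns of W; see (8).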

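A counterpart is an expression for the variance in terms of the GSVD. Let Σ denote the covariance for ε. Then var(β̃α,L) = var(X#Xβ + X#ε) = X#Σ(X#)′. Assuming Σ = σε²I, this simplifies to

$$\mathrm{var}(\tilde\beta_{\alpha,L}) = \sigma_\varepsilon^2\,X^{\#}(X^{\#})' = \sigma_\varepsilon^2\left[\,\sum_{k=1}^{n-d} \frac{\sigma_k^2}{(\sigma_k^2+\alpha\mu_k^2)^2}\,w_k w_k' + \sum_{k=n-d+1}^{n} w_k w_k'\right]. \tag{11}$$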
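The bias and variance expressions can be evaluated directly from X and L, without the GSVD. The sketch below uses synthetic data and a second-difference penalty (whose null space contains constant and linear vectors); the sizes, `alpha`, `sigma_eps`, and the two test coefficient vectors are assumptions chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(3)
n, p, alpha, sigma_eps = 15, 40, 0.5, 0.1    # illustrative values
X = rng.standard_normal((n, p))
L = np.diff(np.eye(p), n=2, axis=0)          # second-difference penalty, (p-2) x p
t = np.linspace(0.0, 1.0, p)

G = np.linalg.inv(X.T @ X + alpha * (L.T @ L))
X_sharp = G @ X.T                            # X# = (X'X + alpha L'L)^{-1} X'
R = X_sharp @ X                              # resolution matrix X# X

# Two equivalent bias expressions for a generic coefficient vector.
beta = np.sin(2.0 * np.pi * t)
bias = (np.eye(p) - R) @ beta
bias_alt = alpha * G @ (L.T @ (L @ beta))

# A coefficient vector in Null(L) (linear in t) incurs no bias.
beta_null = 1.0 + 2.0 * t
bias_null = (np.eye(p) - R) @ beta_null

# Covariance of the estimate when the error covariance is sigma_eps^2 I.
cov = sigma_eps**2 * X_sharp @ X_sharp.T
```

The trace of `cov` plus the squared norm of `bias` gives the mean squared error for this choice of β, which makes the tradeoff between the two terms easy to explore as `alpha` or L varies.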

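An interesting perspective of the bias-variance tradeoff is provided by the relationship between the GS-values in (5) and their role in equations (10) and (11). Moreover, these lead to an explicit expression for the mean squared error (MSE) of a PEER estimate. Since E(β̃α,L) = X#Xβ,

$$\mathrm{MSE}(\tilde\beta_{\alpha,L}) = E\|\tilde\beta_{\alpha,L} - \beta\|^2 = \|(I - X^{\#}X)\beta\|^2 + \mathrm{trace}\big(X^{\#}\Sigma\,(X^{\#})'\big).$$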

As a final remark, recall that one perspective on ridge estimation defines fictitious data from an orthogonal “experiment,” represented by an L, and expresses I as I = L′L [29]. Regardless of orthogonality this applies to any penalized estimate and L may similarly be viewed as augmenting the data, influencing the estimation process through its eigenstructure; the response, y, is set to zero for these supplementary “data”. In this view, equation (3) can be written as the least-squares solution of $Z\beta = \mathbf{y}$ where $Z = [X' \;\; \sqrt{\alpha}\,L']'$ and $\mathbf{y} = [y' \;\; 0']'$. This formulation proves useful in section 5.3 when assuring that the estimation process is stable with respect to perturbations in X and the choice of L.
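The augmented-data view translates directly into an ordinary least-squares computation. In this sketch (synthetic data; sizes, `alpha`, and the second-difference penalty are illustrative assumptions), the rows √α·L are stacked beneath X with zero responses, and the result is compared with the closed form in (3).

```python
import numpy as np

rng = np.random.default_rng(4)
n, p, alpha = 15, 40, 0.5                # illustrative sizes and tuning parameter
X = rng.standard_normal((n, p))
y = rng.standard_normal(n)
L = np.diff(np.eye(p), n=2, axis=0)      # second-difference penalty, (p-2) x p

# Augment the data: penalty rows act as extra "observations" with response 0.
Z = np.vstack([X, np.sqrt(alpha) * L])
y_aug = np.concatenate([y, np.zeros(L.shape[0])])

# Ordinary least squares on the augmented system.
beta_aug, *_ = np.linalg.lstsq(Z, y_aug, rcond=None)

# Closed form (X'X + alpha L'L)^{-1} X'y for comparison.
beta_direct = np.linalg.solve(X.T @ X + alpha * (L.T @ L), X.T @ y)
```

Solving the stacked system with an orthogonalization-based least-squares routine avoids forming X′X + αL′L explicitly, which is often preferable numerically when that matrix is poorly conditioned.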

4. Structured penalties

A structured penalty refers to a second term in (1) that involves an operator chosen to encourage certain functional properties in the estimate. A prototypical example is a derivative operator which imposes smoothness via its eigenstructure. Here we describe several examples of structured penalties, including two that were motivated heuristically and implemented without regard to the spectral properties that define their performance. Sections 3.2 and 5.3 provide a complete formulation of their properties as revealed by the GSVD.

4.1. The penalty of C. Goutis

The concept of using a penalty operator whose eigenstructure is targeted toward specific properties in the predictors appears implicitly in the work of C. Goutis [14]. This method aimed to account for the “functional nature of the predictors” without oversmoothing and, in essence, considered the inverse of a smoothing penalty. Specifically, if Δ denotes a discretized second-derivative operator (with some specified boundary conditions), the minimization in (1) was replaced by $\min_\beta\{\|y - X\Delta'\Delta\beta\|^2 + \alpha\|\Delta\beta\|^2\}$. Here, the term XΔ′Δβ can be viewed as the product of XΔ′ (derivatives of the predictor curves) and Δβ (derivative of β). Defining γ := Δ′Δβ and seeking a penalized estimate of γ leads to

$$\tilde\gamma = \arg\min_{\gamma}\left\{\|y - X\gamma\|^2 + \alpha\,\gamma'(\Delta'\Delta)^{\dagger}\gamma\right\}. \tag{12}$$

In [14], the properties of γ̃ were conjectured to result from the eigenproperties of (Δ′Δ)†. This was explored by ignoring X and plotting some eigenvectors of (Δ′Δ)†. The properties of this method become transparent, however, when formulated in terms of the GSVD. That is, let L := ((Δ′Δ)†)^{1/2} and note that the functions that define γ̃ are influenced most by the highly oscillatory eigenvectors of L, which correspond to its smallest eigenvalues; see equations (5) and (7).

This approach was applied in [14] only for prediction and has drawbacks in producing an interpretable estimate, especially for non-smooth predictor curves. The general insight is valid, however, and modifications of this penalty can be used to produce more stable results. The operator (Δ′Δ)† essentially reverses the frequency properties of the eigenvectors of Δ and is an extreme alternative to this smoothing penalty. An eigenanalysis of the pair (X, L), however, suggests penalties that may be more suited to the problem. This is illustrated in Section 7.

4.2. Targeted penalties

Given some knowledge about the relevant structure, a penalty can be defined in terms of a subspace containing this structure. For example, suppose β ∈ 𝒬 := span{q1, …, qd} for some qj in L2(I). Set Q = Σj qj ⊗ qj and consider the orthogonal projection P_𝒬 = QQ†. (Here, q ⊗ q denotes the rank-one operator f ↦ 〈f, q〉q, for f ∈ L2(I).) For L = I − P_𝒬, β ∈ Null(L) and β̃α,L is unbiased. The problem may still be underdetermined so, more pragmatically, define a decomposition-based penalty

$$L_{\mathcal{Q}} = a\,(I - P_{\mathcal{Q}}) + b\,P_{\mathcal{Q}} \tag{13}$$

for some a, b ≥ 0. Heuristically, when a > b > 0 the effect is to move the estimate towards 𝒬 by preferentially penalizing components orthogonal to 𝒬; i.e., assign a prior favoring structure contained in the subspace 𝒬. To implement the tradeoff between the two subspaces, we view a and b as inversely related, ab = const. The analytical properties of estimates that arise from this are developed in the next section and illustrated numerically in Section 7. For example, bias is substantially reduced when β ∈ 𝒬, and equation (18) quantifies the tradeoff with respect to variance when the prior 𝒬 is chosen poorly.
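A decomposition-based penalty of the form (13) is straightforward to build once a candidate subspace is chosen. The sketch below is illustrative only: the Gaussian “bump” templates, their centers and width, the weights `a` and `b`, and the noiseless synthetic data are all hypothetical choices, not those used in the paper's simulations.

```python
import numpy as np

rng = np.random.default_rng(5)
n, p, alpha = 15, 40, 1.0                # illustrative sizes and tuning parameter
t = np.arange(p)

# Hypothetical templates q_j: Gaussian bumps at assumed locations.
centers = [8, 20, 31]
Q = np.stack([np.exp(-0.5 * ((t - c) / 2.0) ** 2) for c in centers], axis=1)

# Orthogonal projection onto span{q_j}: P = Q Q^+.
P = Q @ np.linalg.pinv(Q)

# Targeted penalty: heavy weight a off the subspace, light weight b on it.
a, b = 10.0, 0.1
L = a * (np.eye(p) - P) + b * P

# Synthetic check with a true beta inside the targeted subspace (no noise).
X = rng.standard_normal((n, p))
beta = Q @ np.array([1.0, -0.5, 2.0])
y = X @ beta

beta_target = np.linalg.solve(X.T @ X + alpha * (L.T @ L), X.T @ y)
beta_ridge = np.linalg.solve(X.T @ X + alpha * np.eye(p), X.T @ y)

err_target = np.linalg.norm(beta_target - beta)
err_ridge = np.linalg.norm(beta_ridge - beta)
```

Since L acts as multiplication by b on the subspace and by a on its orthogonal complement, swapping in a poorly chosen set of templates is an easy way to see how the benefit depends on the prior subspace actually containing β.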

More generally, one may penalize each subspace differently by defining L = α1(I − P_𝒬)ℒ1(I − P_𝒬) + α2 P_𝒬 ℒ2 P_𝒬, for some operators ℒ1 and ℒ2. This idea could be carried further: for any orthogonal decomposition of L2(I) by subspaces 𝒬1, …, 𝒬J, let Pj be the projection onto 𝒬j. Then the multi-space penalty L = Σj αj Pj leads to the estimate

$$\tilde\beta = \arg\min_{\beta}\Big\{\|y - X\beta\|^2 + \sum_{j=1}^{J}\alpha_j^2\,\|P_j\beta\|^2\Big\}.$$

This concept was applied in the context of image recovery (where X represents a linear distortion model for a degraded image y) by Belge et al. [1].

The examples here illustrate ways in which assumptions about the structure of a coefficient function can be incorporated directly into the estimation process. In general, any estimation of β imposes assumptions about its structure (either implicitly or explicitly) and section 3.2 shows that the bias-variance tradeoff involves a choice on the type of bias (spatial structure) as well as the extent of bias (regularization parameter(s)).

5. Some analytical properties

Any direct comparison between estimates using different penalty operators is confounded by the fact that there is no simple connection between the generalized singular values/vectors and the ordinary singular values/vectors. Therefore, we first consider the case of targeted or projection-based penalties (13). Within this class, we introduce a parameterized family of estimates that are composed of ordinary singular values/vectors. Since the ridge and PCR estimates are contained in (or a limit of) this family, a comparison with some targeted PEER estimates is possible. For more general penalized estimates, properties of perturbations provide some less precise relationships; see Proposition 5.6.

5.1. Transformation to standard form

We have reason to consider decomposition-based penalties (13) in which L is invertible. In this case, an expression for the estimate does not involve the second term in (9), and decomposing the first term into two parts will be useful. For this, we find it convenient to use the standard-form transformation due to Elden [11] in which the penalty L is absorbed into X. This transformation also provides a computational alternative to the GSVD which, for projection-based penalties in particular, can be less computationally expensive; see, e.g., [25]. By this transformation of X, a general PEER estimate (L ≠ I) can be expressed via a ridge-regression process.

Define the X-weighted generalized inverse of L and the corresponding transformed X as

see [11, 19]. In terms of the GSVD components (4), the transformed X is UΓV ′. In particular, the diagonal elements of Γ are the ordinary singular values of the transformed X, but in reversed order.

Now define β̃φ := [X(I − L†L)]†y, the component of the regularized solution β̃α,L that is in Null(L). The PEER estimate can be obtained from a ridge-like penalization process with respect to the transformed X as follows. Defining a ridge estimate in the transformed space as

| (14) |

then the PEER estimate is recovered as

Note that the transformed estimate, as given in terms of the GSVD factors, is V FΓ†U′y, where F is the diagonal matrix of ridge filter factors.

In what follows we consider invertible L, in which case the X-weighted generalized inverse of L is L−1, [X(I − L†L)]† = 0, and the response y is left unchanged by the transformation. In particular, β̃α,L is L−1 applied to the transformed ridge estimate (14). For the penalty (13) of the form L = a(I − $P_{\mathcal{Q}}$) + b $P_{\mathcal{Q}}$, we have $L^{-1} = a^{-1}(I - P_{\mathcal{Q}}) + b^{-1}P_{\mathcal{Q}}$, and so the transformed X is $XL^{-1} = a^{-1}X(I - P_{\mathcal{Q}}) + b^{-1}XP_{\mathcal{Q}}$. The regularization parameter, previously denoted by α, can be absorbed into the values a and b, so we will denote this PEER estimate β̃α,L simply as β̃a,b.
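The standard-form transformation for invertible L can be checked numerically. The sketch below, on hypothetical random data with α absorbed into a and b, compares the direct penalized estimate with the one obtained by ridge regression on XL−1 followed by multiplication by L−1.

```python
import numpy as np

rng = np.random.default_rng(1)
n, p, d = 15, 30, 4
X = rng.standard_normal((n, p))
y = rng.standard_normal(n)
Q, _ = np.linalg.qr(rng.standard_normal((p, d)))   # orthonormal basis, so P = Q Q'
P = Q @ Q.T
a, b = 5.0, 0.2
L = a * (np.eye(p) - P) + b * P

# Direct penalized estimate (alpha absorbed into a and b).
beta_direct = np.linalg.solve(X.T @ X + L.T @ L, X.T @ y)

# Standard form: ordinary ridge on X L^{-1}, then map back with L^{-1}.
L_inv = (np.eye(p) - P) / a + P / b
X_star = X @ L_inv
beta_star = np.linalg.solve(X_star.T @ X_star + np.eye(p), X_star.T @ y)
beta_std = L_inv @ beta_star
```

The closed form used for `L_inv` relies on P being an orthogonal projection; for a general invertible L one would call a linear solver instead.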

Remark 5.1

When a = b, this is simply a ridge estimate: β̃a,b = β̃α,I. Therefore, the best performance among this family of estimates is at least as good as the performance of ridge, regardless of the choice of $\mathcal{Q}$.

5.2. SVD targeted penalties

Consider the special case in which $\mathcal{Q}$ is the span of the right singular vectors corresponding to the d largest singular values of an n × p matrix X of rank n. Let X = UDV ′ be an ordinary singular value decomposition where D is a diagonal matrix of singular values. For consistency with the GSVD notation, these will be ordered as 0 ≤ σ1 ≤ · · · ≤ σn. Hence the last d columns of V correspond to the d largest singular values of X. For the rest of this section, we adopt the convention for indexing the columns of V as used for W in (8). In particular, Q = Vφ.

We are interested in the penalty L = a(I − $P_{\mathcal{Q}}$) + b $P_{\mathcal{Q}}$, where d = dim(Null(I − $P_{\mathcal{Q}}$)). Similar to before, set o = n − d and define o × o and d × d submatrices, Do and Dφ, of D as

| (15) |

Here, $P_{\mathcal{Q}}$ = VφVφ′ and (I − $P_{\mathcal{Q}}$) = VoVo′ and so,

This decomposition implies that the ridge estimate in (14) in the transformed space is of the following form: setting G = DΛ−1, denoting its diagonal entries by {γk}, and defining

gives the transformed ridge estimate V FG†U′y. Now, L−1V = VΛ−1 and G† = ΛD−1, and so β̃a,b = L−1(V FG†U′y) = VΛ−1FΛD−1U′y. By the decomposition (15),

This shows that the estimate decomposes as follows.

Theorem 5.2

Let $\mathcal{Q}$ be the span of the right singular vectors of X corresponding to the d largest singular values. Set L = a(I − $P_{\mathcal{Q}}$) + b $P_{\mathcal{Q}}$. Then, in terms of the notation above, the estimate β̃a,b decomposes as

| (16) |

where the left and right terms are independent of b and a, respectively.
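A numerical sketch of this kind of decomposition, on hypothetical random data: with α absorbed into a and b, the penalized estimate splits into one ridge-type sum over the least-dominant singular directions (depending only on a) and one over the d dominant directions (depending only on b). The explicit weights σk/(σk² + a²) and σk/(σk² + b²) are derived here from the normal equations and are an assumption about the form of the two terms. Note that NumPy returns singular values in decreasing order, the reverse of the ordering used in the text.

```python
import numpy as np

rng = np.random.default_rng(2)
n, p, d = 12, 25, 3
X = rng.standard_normal((n, p))
y = rng.standard_normal(n)

U, s, Vt = np.linalg.svd(X, full_matrices=False)   # s is in decreasing order
V = Vt.T
V_phi, V_o = V[:, :d], V[:, d:]    # d dominant directions / remaining n - d directions
P = V_phi @ V_phi.T

a, b = 3.0, 0.5
L = a * (np.eye(p) - P) + b * P
beta_ab = np.linalg.solve(X.T @ X + L.T @ L, X.T @ y)

coef = U.T @ y
term_o = V_o @ (s[d:] / (s[d:] ** 2 + a ** 2) * coef[d:])       # depends on a only
term_phi = V_phi @ (s[:d] / (s[:d] ** 2 + b ** 2) * coef[:d])   # depends on b only
```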

Similar arguments can be used to decompose an estimate for arbitrary $\mathcal{Q}$:

| (17) |

In this case, however, all terms are dependent on both a and b. Indeed, using notation as in (9) one can decompose

and obtain the transformed estimate V FΓ†U′y. However, L−1V = WM†, and the non-orthogonal terms provided by W do not decompose the estimate into terms from the orthogonal sum $\mathcal{Q} \oplus \mathcal{Q}^{\perp}$.

The following corollary, along with Remark 5.1, records the manner in which (16) is a family of penalized estimates, parameterized by a, b ≥ 0 and d ∈ {1, …, n}, that extends some standard estimates.

Corollary 5.3

Under the conditions in Theorem 5.2,

1. when a > b > 0, β̃a,b is a sum of weighted ridge estimates on $\mathcal{Q}$ and $\mathcal{Q}^{\perp}$;
2. when a > 0 and b = 0, β̃a,0 is given by (9), which is a sum of PCR and ridge estimates on $\mathcal{Q}$ and $\mathcal{Q}^{\perp}$, respectively;
3. for each d, the PCR estimate is the limit of β̃a,0 as a → ∞.
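The limiting statement about PCR can be illustrated with a short numerical sketch on hypothetical random data: with b = 0, the penalized estimate approaches the d-component PCR estimate as a grows. Singular values are taken in NumPy's decreasing order.

```python
import numpy as np

rng = np.random.default_rng(3)
n, p, d = 12, 25, 3
X = rng.standard_normal((n, p))
y = rng.standard_normal(n)
U, s, Vt = np.linalg.svd(X, full_matrices=False)
V_phi = Vt[:d].T
P = V_phi @ V_phi.T

# PCR with d components: least squares restricted to the d dominant directions.
beta_pcr = V_phi @ ((U[:, :d].T @ y) / s[:d])

def beta_a0(a):
    # b = 0: no penalty on span(V_phi), penalty weight a on its complement.
    L = a * (np.eye(p) - P)
    return np.linalg.solve(X.T @ X + L.T @ L, X.T @ y)

# Distance to the PCR estimate for increasing a.
gaps = [np.linalg.norm(beta_a0(a) - beta_pcr) for a in (1.0, 10.0, 100.0, 1000.0)]
```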

In item 2, this estimate is similar to PCR except that a ridge penalty is placed on the least-dominant singular vectors. Under the assumptions here, wk ≡ vk are the ordinary singular vectors of X and the ordinary singular values appear as γk = σk/μk, for μk > 0. In the second term of (9), the singular vectors are in the null space of L (since b = 0), and so μk = 0 and σk = 1, for k = n − d + 1, …, p. Regarding item 3, although a PCR estimate is not obtained from equation (3) for any L, it is a limit of such estimates.

Other decompositions may be obtained simply by using a permutation, such as Q = ΠV, for some n × n permutation matrix Π. Stein’s estimate, β̃α,S, also fits into this framework as follows. When X′X is nonsingular, then β̃α,S = (X′X+αX′X)−1X′y (see, e.g., the class ‘STEIN’ in [10]), and X′X = V D′DV ′. Hence this estimate arises from the penalty LS = DV ′. This is a re-weighted version of L = a(I − $P_{\mathcal{Q}}$) where d = n, Q = V and the parameter a is replaced by the matrix D. The result is a constant filter factor F = diag{1/(1 + α)}. Using d < n and Q = Vd is a natural extension of this idea. More generally, $\mathcal{Q}$ may be enriched with functions that span a wider range of structure potentially relevant to the estimate. This concept is illustrated in Section 7.3 where instead of Vd, we use a d-dimensional set of experimentally-derived “template” spectra supplemented with their derivatives to define $\mathcal{Q}$.

As an aside, we note that in a different approach to regularization one can define a general family of estimates arising from the SVD by way of β̃h,ϕ = VΣhU ′y, where

, and ϕ:

→

is an arbitrary continuous function [33]. A ridge estimate is obtained for ϕ(t) = 1/(1 + t), and PCR obtained for ϕ(t) = 1/t if t > 1, ϕ(t) = 0 if t ≤ 1 (an L2-limit of continuous functions). This is similar to item 3 in Corollary 5.3, but the family of estimates β̃h,ϕ is formulated in terms of functional filter factors rather than explicit penalty operators. Related to this is the fact that the optimal (with respect to MSE) estimate using SVD filter factors is, in the case C = σεI, expressed as β̃OH = V FD†V ′y, where

; see the “ideal filter” of O’Brien and Holt [34]. In fact, it’s easy to check that this optimal estimate can be obtained as β̃OH = β̃α,L for some L ≠ I. Since β̃OH involves knowledge of β, it is not directly obtainable but it points to the optimality of a PEER estimate.

5.3. The MSE of some penalized estimates

Theorem 5.2 is used here to show that β̃a,b can have smaller MSE than the ridge or PCR estimates for a wide range of values of a and/or b. The MSE is potentially decreased further when L is defined by a more general $\mathcal{Q}$. In that case, a general statement is difficult to formulate but Proposition 5.6 confirms that any improvement in MSE is robust to perturbations in L (e.g., general $\mathcal{Q}$) and errors in x.

An immediate consequence of Theorem 5.2 is that the mean squared error for an estimate in this family (16) decomposes into easily-identifiable terms for the bias and variance:

| (18) |

The influence of b = 0 on the estimate is now clear: when the numerical rank of X is small relative to d, the σk’s in the last term decrease and the contribution to the variance from this term increases; the estimate fails for the same reason that ordinary least-squares fails. Any nonzero b stabilizes the estimate in the same way that a nonzero α stabilizes a standard ridge estimate; the decomposition (16) merely re-focuses the penalty. This is illustrated in Section 7 (Table 1) and in the Appendix (Table 4). Although there are three parameters to consider, the MSE of β̃a,b is relatively insensitive to b > 0 for sufficiently large d. The value of d could be optimized (similar to efforts to optimize the number of principal components) but here we assume approximate knowledge regarding $\mathcal{Q}$, hence d. Relationships between ridge, PCR and PEER estimates in this family {β̃a,b}a,b>0 can be quantified more specifically as follows.

Table 1.

Estimation errors (MSE) for simulation with selected bump locations. Sample size is n = 50

| R2 | S/N | LV | LU | PCR | ridge | second-derivative | second-derivative + a I |
|---|---|---|---|---|---|---|---|
| 0.8 | 10 | 4.00 | 13.81 | 9.38 | 34.39 | 359.83 | 76.31 |
| 0.8 | 5 | 3.72 | 15.46 | 21.50 | 40.02 | 246.17 | 72.64 |
| 0.8 | 2 | 4.40 | 12.96 | 57.89 | 58.22 | 126.75 | 59.35 |
| 0.6 | 10 | 9.60 | 21.60 | 14.10 | 50.50 | 497.70 | 113.60 |
| 0.6 | 5 | 10.22 | 21.65 | 26.02 | 50.68 | 338.70 | 87.58 |
| 0.6 | 2 | 11.75 | 23.18 | 63.50 | 67.94 | 181.75 | 78.45 |

Table 4.

Estimation errors (MSE) for the simulation with localized frequencies

| n | R2 | S/N | LF | LG | PCR | ridge | second-derivative | second-derivative + a I |
|---|---|---|---|---|---|---|---|---|
| 50 | 0.8 | 10 | 42.42 | 77.31 | 123.60 | 132.50 | 1051.20 | 568.45 |
| 50 | 0.8 | 5 | 41.55 | 75.41 | 128.75 | 143.48 | 447.64 | 184.07 |
| 200 | 0.8 | 10 | 8.28 | 13.48 | 33.44 | 65.78 | 453.54 | 169.41 |
| 200 | 0.8 | 5 | 8.56 | 13.08 | 36.36 | 87.59 | 100.15 | 76.24 |
| 50 | 0.6 | 10 | 106.89 | 200.08 | 173.56 | 173.94 | 1098.20 | 631.05 |
| 50 | 0.6 | 5 | 109.51 | 178.05 | 178.15 | 196.62 | 612.12 | 259.92 |
| 200 | 0.6 | 10 | 25.30 | 38.73 | 58.90 | 98.13 | 847.59 | 350.46 |
| 200 | 0.6 | 5 | 22.08 | 33.79 | 59.92 | 119.48 | 240.09 | 127.52 |

Proposition 5.4

Suppose β ∈ $\mathcal{Q}$ and fix α > 0. Then for any

, the ridge estimate satisfies

Proof

This follows from the fact that if β ∈ $\mathcal{Q}$, then Vo′β = 0 and so the first term in (18) is zero. Therefore, the contribution to the MSE by the fourth term is decreased whenever

.
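The comparison with ridge can be explored numerically. The sketch below uses the generic bias and variance formulas for a linear estimate Ay with uncorrelated errors of variance σ², which is an assumption standing in for the specific terms of (18). The data, the helper name `mse_terms`, and the values a = 10, b = 1 are hypothetical. When β lies in the span of the d dominant right singular vectors, raising a above b leaves the bias unchanged and lowers the variance.

```python
import numpy as np

def mse_terms(X, L, beta, sigma2):
    """Squared bias and total variance of the estimate (X'X + L'L)^{-1} X'y."""
    A = np.linalg.solve(X.T @ X + L.T @ L, X.T)   # estimate is A @ y
    bias = A @ X @ beta - beta
    return float(bias @ bias), float(sigma2 * np.sum(A ** 2))

rng = np.random.default_rng(4)
n, p, d = 12, 25, 3
X = rng.standard_normal((n, p))
_, s, Vt = np.linalg.svd(X, full_matrices=False)
V_phi = Vt[:d].T                              # d dominant right singular vectors
P = V_phi @ V_phi.T
beta = V_phi @ np.array([2.0, -1.0, 1.0])     # beta lies in the favored subspace
sigma2 = 1.0

b = 1.0
ridge = mse_terms(X, b * np.eye(p), beta, sigma2)                        # a = b
targeted = mse_terms(X, 10.0 * (np.eye(p) - P) + b * P, beta, sigma2)    # a > b
```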

If β is exactly a sum of the d dominant right singular vectors, a PCR estimate using d terms may perform well, but in general it is not optimal:

Proposition 5.5

If β ∈ $\mathcal{Q}$, a sufficient condition for the PCR estimate to satisfy

is

| (19) |

Note that the left side of (19) increases without bound as σk → 0. Since , and since the premise of PCR is that decreases with decreasing σk, this sufficient condition is entirely plausible.

Proof

If β ∈ $\mathcal{Q}$, then the first and third terms in (18) are zero and the MSE of

consists of the second and last terms of (18):

In particular, a sufficient condition for this to exceed MSE(β̃∞,b) is for the variance term to exceed the second and last terms of (18):

One can check that this is satisfied when (19) holds.

A comment by Bingham and Larntz [3] on Dempster et al.’s intensive simulation study of ridge regression in [10] notes that “it is not at all clear that ridge methods offer a clear-cut improvement over [ordinary] least squares except for particular orientations of β relative to the eigenvectors of X′X.” Equation (18) repeats this observation relating these two classical methods as well as the minor extensions contained in (16). If, on the other hand, the orientation of β relative to the vk’s is not favorable, i.e., if β is nowhere near the range of V, then a PEER estimate as in (17) is more desirable than the estimate in (16) (assuming sufficient information is available to form $\mathcal{Q}$).

In summary, the family of estimates {β̃a,b}a,b>0 in (16) represents a hybrid of ridge and PCR estimation. This family, based on the ordinary singular vectors of X, is introduced here to provide a framework within which these two familiar estimates can be compared to (slightly) more general PEER estimates. Direct analytical comparison between general PEER estimates is more difficult since there’s no simple relationship between the generalized singular vectors for two different L (including L = I versus L ≠ I). However, it is important that the estimation process be stable with respect to changes in L and/or X; i.e., in going from an estimate in (16) to one in (17), the performance of the estimate should be predictably altered. Given an estimate in Proposition 5.4, if $\mathcal{Q}$ is modified and/or X is observed with error, the MSE of the corresponding estimate,

, should be controlled: for sufficiently small perturbation E, the corresponding estimate

should be close to MSE(β̃α,I). This “stability” is true in general. To see this recall

, (of rank p) and

. Then another way to represent the estimate (3) is β̃α,L = Z†y. Let

for some n×p and m×p matrices E1 and E2. Set ZE = Z + E and denote the perturbed estimate by

. By continuity of the generalized inverse (e.g., [4], Section 1.4),

if and only if lim||E||→0 rank(ZE) = rank(Z). Therefore, provided the rank of Z is not changed by E,

and hence as ||E|| → 0. A more specific bound on the difference of estimates can be obtained under the condition ||Z†||||E||< 1 which implies that . This can be used to obtain the following bound.

Proposition 5.6

Assume ||Z†||||E|| < 1 and let r = y − Zβ̃α,L. Then

6. Tuning parameter selection

Despite our focus on the GSVD, the computation of a PEER estimate in (1) does not, of course, require that this decomposition be computed. Rather, the role of the GSVD has been to provide analytical insight into the role a penalty operator plays in the estimation process. For computation, on the other hand, we have chosen to use a method in which the tuning parameter, α, is estimated as part of the coefficient-function estimation process.

Because the choice of tuning parameter is so important, many selection criteria have been proposed, including generalized cross-validation (GCV) [9], AIC and its finite sample corrections [55]. As an alternative to GCV and AIC, a recently-proven equivalence between the penalized least squares estimation and a linear mixed model (LMM) representation [6] can be used. In particular, the best linear unbiased predictor (BLUP) of the response y is composed of the best linear unbiased estimator of the fixed effects and BLUP of the random effects for the given values of the random component variances (see [47] and [6]). Within the LMM framework, restricted maximum likelihood (REML) can be used to estimate the variance components and thus the choice of the tuning parameter, α, which is equal to the ratio of the error variance and the random effects variance [42]. REML-based estimation of the tuning parameter has been shown to perform at least as well as the other criteria and, under certain conditions, it is seen to be less variable than GCV-based estimation [41]. In our case, the penalized least-squares criterion (1) is equivalent to

| (20) |

where , the Xunp corresponds to the unpenalized part of the design matrix, and Xpen to the penalized part.

For simplicity of presentation, we describe the transformation with an invertible L. However, a generalized inverse can be used in case L is not of full rank; see equation (14). Also, to facilitate a straightforward use of existing linear mixed model routines in widely available software packages (e.g., R [37] or SAS software [43]), we transform the coefficient vector β using the inverse of the matrix L. Let X★ = XL−1 and β★ = Lβ. Then equation (20) can be modified as follows

This REML-based estimation of tuning parameters is used in the application of Section 7.3.

For estimation of the parameters a, b and α involved in the decomposition-based penalty of equation (16), we view a and b as weights in a tradeoff between the subspaces and assume ab = const. In the current implementation, we use REML to estimate α for a fixed value of a, and do a grid search over the a values to jointly select the tuning parameters which maximize the REML criterion.
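The grid search described above might be sketched as follows. This is a simplified stand-in, not the implementation used for the results reported here: it assumes an invertible L with no unpenalized fixed effects (so that the REML and ML criteria coincide), treats the transformed coefficients as i.i.d. random effects, and replaces the numerical REML optimization of α by a second grid. The function names, the grids and the synthetic data are hypothetical.

```python
import numpy as np

def neg2_profile_loglik(X_star, y, alpha):
    """-2 x profile log-likelihood (up to a constant) for
    y ~ N(0, s2 * (I + X_star X_star' / alpha)), with s2 profiled out."""
    n = y.size
    V = np.eye(n) + (X_star @ X_star.T) / alpha
    _, logdet = np.linalg.slogdet(V)
    quad = y @ np.linalg.solve(V, y)
    return n * np.log(quad / n) + logdet

def select_a_alpha(X, y, Q, a_grid, alpha_grid, const=1.0):
    """Grid search over a (with b = const / a) and alpha.
    Q is assumed to have orthonormal columns."""
    p = X.shape[1]
    P = Q @ Q.T
    best = None
    for a in a_grid:
        b = const / a
        L_inv = (np.eye(p) - P) / a + P / b
        X_star = X @ L_inv                    # transformed design, X L^{-1}
        for alpha in alpha_grid:
            crit = neg2_profile_loglik(X_star, y, alpha)
            if best is None or crit < best[0]:
                best = (crit, a, alpha)
    return best

rng = np.random.default_rng(6)
n, p, d = 30, 40, 3
X = rng.standard_normal((n, p))
Q, _ = np.linalg.qr(rng.standard_normal((p, d)))
y = X @ (Q @ np.array([1.0, -1.0, 2.0])) + 0.5 * rng.standard_normal(n)
a_grid = [0.5, 1.0, 2.0, 4.0, 8.0]
alpha_grid = [0.01, 0.1, 1.0, 10.0, 100.0]
crit, a_hat, alpha_hat = select_a_alpha(X, y, Q, a_grid, alpha_grid)
```

In practice one would hand the transformed design to an existing mixed-model routine rather than evaluate the criterion on a grid.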

7. Numerical examples

To illustrate algebraic properties given in Section 5, we consider PEER estimation alongside some familiar methods in several numerical examples. Section 7.1 elaborates on the simple example in Section 2.1. These mass spectrometry-like predictors are mathematically synthesized in a manner similar to the study of Reiss and Ogden [40] (see also a numerical study in [48]). Here, β is also synthesized to represent a spectrum, or specific set of bumps. In contrast, Section 7.3 presents a real application to Raman spectroscopy data in which a set of spectra {xi} and nanoparticle concentrations {yi} are obtained from sets of laboratory mixtures. This laboratory-based application is preceded in Section 7.2 by a simulation that uses these same Raman spectra. In both Raman examples, targeted penalties (13) are defined using discretized functions qj chosen to span specific subspaces, $\mathcal{Q}$. As before, let Q = col[q1, …, qd] and $P_{\mathcal{Q}}$ = QQ†.

Each section displays the results from several methods, including derivative-based penalties. Implementing these requires a choice of discretization scheme and boundary conditions which define the operator. We use

where

= [di,j] is a square matrix with entries di,i = 1, di,i+1 = −1 and di,j = 0 otherwise. In addition to some standard estimates, Sections 7.2 and 7.3 also consider FPCRR, a functional PCR estimate described in [40]. This approach extends the penalized B-spline estimates of [8] and assumes β = Bη where B is a p × K matrix whose columns consist of K B-spline functions and η is a vector of B-spline coefficients. The estimation process takes place in the coefficient space using the penalty L =

applied to η. The FPCRR estimate further assumes β = BVd η (Vd as defined in Section 2).
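The difference matrix with entries di,i = 1 and di,i+1 = −1 is easy to construct. In the sketch below, forming a second-derivative operator as the product of two such matrices is an assumption made for illustration; other discretizations and boundary conditions are possible, and the names `first_difference`, `D1` and `D2` are hypothetical.

```python
import numpy as np

def first_difference(p):
    """Square matrix with d[i, i] = 1, d[i, i+1] = -1 and zeros elsewhere."""
    return np.eye(p) - np.eye(p, k=1)

p = 8
D1 = first_difference(p)
D2 = D1 @ D1                     # assumed second-difference operator
t = np.arange(p, dtype=float)    # a linear "function" on the grid
```

Applied to the linear sequence `t`, `D1` returns a constant in all but its last row and `D2` returns zero in all but its last two rows; those remaining rows reflect the implicit boundary condition of the square matrix.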

Estimation error is defined as mean squared error (MSE) ||β − β̃α,L||2, and the prediction error defined similarly as Σi |yi − ỹi|2, where ỹi = 〈xi, β̃〉. Each simulation incorporates response random errors, , added to the ith true response, . Letting denote the sample variance in the set , the response random errors, εi, are chosen such that (the squared multiple correlation coefficient of the true model) takes values 0.6 and 0.8. In sections 7.1 and 7.2, tuning parameters are chosen by a grid search. In section 7.3, tuning parameters are chosen using REML, as described in section 6.

7.1. Bumps simulation

Here we elaborate on the simple example of section 2.1. This simulation involves bumpy predictor curves xi(t) with a response yi that depends on the amplitudes xi(t) at some of the bump locations, t = ck, via the regression function β. In particular,

for t ∈ [0, 1], where JX = {2, 6, 10, 14, 20, 26, 30} and Jβ = {6, 14, 26}; a★ are magnitudes, b★ are spreads, and c★ are the locations of the bumps. In the first simulation, we set bj = 10000 and cj = 0.004(8j − 1), the same for each curve xi. This mimics, for instance, curves seen in mass spectrometry data. The assumption Jβ ⊂ JX simulates a setting in which the response is associated with a subset of metabolite or protein features in a collection of spectra. The aij’s are from a uniform distribution, and aj = 3, 5, 2 for j = 6, 14, 26, respectively. We consider discretized curves, xi(t), evaluated at p = 250 points, tj, j = 1, …, p. The sample size is fixed at n = 50 in each case.
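A minimal sketch of how such bumpy curves could be generated is given below. Several details are assumptions rather than taken from the text: the bumps are given a Gaussian shape exp[−b(t − c)²], the magnitudes aij are drawn from a uniform distribution on [0, 1], the inner product is discretized as a plain sum, and the noise variance is set from R2 as var(true response)(1 − R2)/R2.

```python
import numpy as np

rng = np.random.default_rng(5)
n, p = 50, 250
t = np.linspace(0.0, 1.0, p)
J_X = [2, 6, 10, 14, 20, 26, 30]            # bump indices present in the predictors
J_beta = {6: 3.0, 14: 5.0, 26: 2.0}         # bump index -> magnitude a_j in beta
spread = 10000.0                            # b_j, common to all bumps

def bump(j):
    c = 0.004 * (8 * j - 1)                 # bump location c_j
    return np.exp(-spread * (t - c) ** 2)   # assumed Gaussian bump shape

B = np.column_stack([bump(j) for j in J_X])       # p x 7 dictionary of bumps
A = rng.uniform(0.0, 1.0, size=(n, len(J_X)))     # assumed range for a_ij
X = A @ B.T                                       # n x p predictor curves
beta = sum(a_j * bump(j) for j, a_j in J_beta.items())

y_true = X @ beta                                 # discretized <x_i, beta>
R2 = 0.8
noise_var = np.var(y_true) * (1.0 - R2) / R2      # assumed link between R2 and noise
y = y_true + rng.normal(0.0, np.sqrt(noise_var), size=n)
```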

Penalties

We consider a variety of estimation procedures: ridge (L = I), a second-derivative penalty, a more general derivative operator (the second-derivative penalty + a I) and PCR. We also define two decomposition-based penalties (13) formed by specific subspaces $\mathcal{Q}$ = span{qj}j∈J for qj of the form qj(t) = aj exp[bj(t−cj)], with cj at all locations seen in the predictors, JV = {2, 6, 10, 14, 20, 26, 30}, or at uniformly-spaced locations, JU = {2, 4, …, 30}; denote these penalties by LV and LU, respectively.

Simulation results

The simulation incorporates two sources of noise: (i) response random errors, , added to the ith true response so that R2 = 0.6, 0.8; (ii) measurement error, , added to the ith predictor, xi. To define a signal-to-noise ratio, S/N, set , where μi is the mean value of xi, and set . The ei are chosen so that S/N: = SX/σe = 2, 5, 10.

Figure 1 shows a few partial sums of (7) for estimates arising from three penalties: the second-derivative penalty, L = I and LV, when R2 = 0.8 and S/N = 2. Table 1 gives a summary of estimation errors. The penalty LV, exploiting known structure, performs well in terms of estimation error. Not surprisingly, a penalty that encourages low-frequency singular vectors, the second-derivative penalty, is a poor choice, although adding a I to it easily improves on it since the GSVs are more compatible with the relevant structure. PCR performs well with estimation errors that can be several times smaller than those of ridge. The number of terms used in PCR ranges here from 8 (S/N = 10) to 25 (S/N = 2).

Predictably, PCR performance degrades with decreasing S/N, a property that is less pronounced, or not shared, by other estimates. Performances of LV and LU illustrate properties described in Section 5.3. As S/N → 0, the ordinary singular vectors of X (on which ridge and PCR rely) decreasingly represent the structure in β. The GS vectors of (X, LV) and (X, LU), however, retain structure relevant for representing β.

Table 2 summarizes prediction errors. When S/N is large, performance of PCR is comparable with LV and LU, but degrades for low S/N. Here, even the second-derivative penalty + a I provides smaller prediction errors, in most cases, than ridge, the second-derivative penalty or PCR. This illustrates the GS vectors’ role in (12) and reiterates observations in [14].

Table 2.

Prediction errors for simulation with selected bump locations. Sample size is n = 50. Errors are multiplied by 1000 for display

| R2 | S/N | LV | LU | PCR | ridge | second-derivative | second-derivative + a I |
|---|---|---|---|---|---|---|---|
| 0.8 | 10 | 9.0 | 10.5 | 10.8 | 16.6 | 19.3 | 12.9 |
| 0.8 | 5 | 8.4 | 11.0 | 12.2 | 26.7 | 27.9 | 17.8 |
| 0.8 | 2 | 12.9 | 19.0 | 53.2 | 55.7 | 50.3 | 40.1 |
| 0.6 | 10 | 21.4 | 23.0 | 23.9 | 33.0 | 39.0 | 26.2 |
| 0.6 | 5 | 23.9 | 25.0 | 29.5 | 49.2 | 54.6 | 34.4 |
| 0.6 | 2 | 34.4 | 42.5 | 90.4 | 110.4 | 104.4 | 77.9 |

7.2. Raman simulation

We consider Raman spectroscopy curves which represent a vibrational response of laser-excited co-organic/inorganic nanoparticles (COINs). Each COIN has a unique signature spectrum and serves as a sensitive nanotag for immunoassays; see [27, 44]. Each spectrum consists of absorbance values measured at p = 600 wavenumbers. By the Beer-Lambert law, light absorbance increases linearly with a COIN’s concentration and so a spectrum from a mixture of COINs is reasonably modeled by a linear combination of pure COIN spectra. The data here come from experiments that were designed to establish the ability of these COINs to measure the existence and abundance of antigens in single-cell assays.

Let P1, …, P10 denote spectra from nine pure COINs and one “blank” (no biochemical material), each normalized to norm one. We form in-silico mixtures as follows: , i = 1, …, n, with coefficients {ci,k} generated from a uniform distribution. Figure 2 shows representative spectra from all nine COINs superimposed on a collection of mixture spectra, . Included in Figure 2 is the β (dashed curve) used to define the simulation: yi = 〈xi, β〉 + ε, ε ~ N(0, σ2).

In this simulation, we have created a coefficient function which, instead of being modeled mathematically, is a curve that exhibits structure of the type found in Raman spectra. Details on the construction of this β are in Appendix 9.1 so here we simply note that it arises as a ridge estimate from a set of in-silico mixtures of Raman spectra in which one COIN, P9, is varied prominently relative to the others. See Figure 2. Motivation for defining β in this way is based on a view that it seems implausible for us to predict the structure of realistic signal in these data and recreate it using polynomials, Gaussians or other analytic functions.

Regardless of its construction, β defines signal that allows us to compute estimation and prediction error. The performances of five methods are summarized in Table 3. Note that although β was constructed as a ridge estimate (using a different set of in-silico mixtures; see Appendix 9.1), the ridge penalty is not necessarily optimal for recovering β. This is because the strictly empirical eigenvectors associated with the new spectra may contain structure not informative regarding y. Also, in these data, the performance of FPCRR is adversely affected by a tendency for the estimate to be smooth; cf. Figure 3. The PEER penalty used here is defined by a decomposition-based operator (16) in which $\mathcal{Q}$ is spanned by a 10-dimensional set of pure-COIN spectra (including a blank). The success of such an estimate obviously depends on an informed formation of $\mathcal{Q}$, but as long as the parameter-selection procedure allows for a = b, the set of possible estimates includes ridge as well as estimates with potentially lower MSE than ridge; see Proposition 5.4.

Table 3.

Estimation (MSE) and prediction (PE) errors of several penalization methods for the simulation described in Figure 2. Numbers represent the average error from 100 runs. PE errors are multiplied by 1000 for display

| | LQ | PCR | ridge | second-derivative + a I | FPCRR |
|---|---|---|---|---|---|
| MSE | 8.91 | 12.34 | 13.87 | 41.69 | 15.29 |
| PE | 0.0071 | 0.0179 | 0.0139 | 0.0131 | 0.0175 |

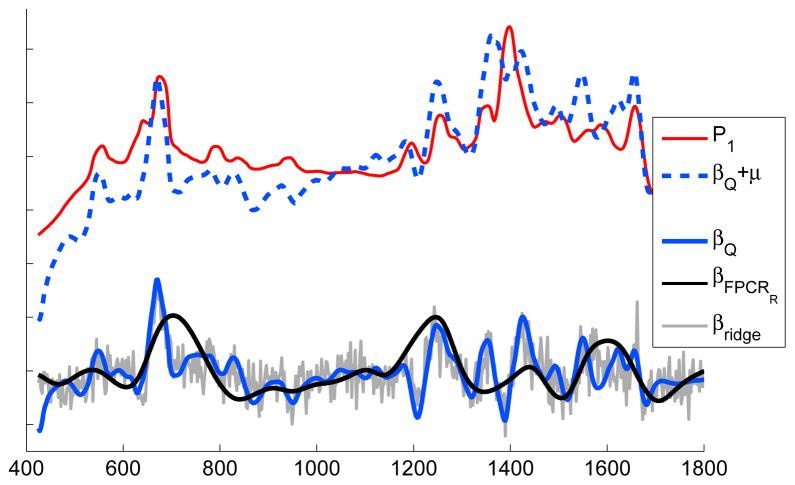

Fig 3.

Three estimates for a coefficient function that relates concentrations of P1 to its signal in 8-COIN laboratory mixtures. Estimates shown: ridge (β̃ridge; gray), FPCRR (β̃FPCRR; black) and PEER (β̃Q; blue). For perspective, P1 is plotted (in red) and the mean-adjusted PEER estimate, β̃Q + μ (dashed blue); μ is the mean of the mixture spectra (not shown).

We note that this simulation may be viewed as inherently unfair since the PEER estimate uses knowledge about the relevant structure. However, this is a point worth reemphasizing: when prior knowledge about the structure of the data is available, it can be incorporated naturally into the regression problem.

7.3. Raman application

We now consider spectra representing true antibody-conjugated COINs from nine laboratory mixtures. These mixtures contain various concentrations of eight COINs (of the nine shown in Figure 2). Spectra from four technical replicates in each mixture are included to create a set of n = 36 spectra. We designate P1 as the COIN whose concentration within each mixture defines y. Assuming a linear relationship between the spectra, {xi}, and the P1-concentrations, {yi}, we estimate P1. More precisely, we estimate the structure in P1 that correlates most with its concentrations, as manifest in this set of mixtures. The fLM is a simplistic model of this relationship between the concentration of P1 and its functional structure, but the physics of this technology imply it is a reasonable starting point.

We present the results of three estimation methods: ridge, FPCRR and PEER. In constructing a PEER penalty, we note that the informative structure in Raman spectra is not that of low-frequency or other easily modeled features, but it may be obtainable experimentally. Therefore, we define L as in (13) in which $\mathcal{Q}$ contains the span of COIN template spectra: $\mathcal{Q}_1 = \mathrm{span}\{P_k\}$. However, since a single set of templates may not faithfully represent signal in subsequent experiments (with new measurement errors, background and baseline noise, etc.), we enlarge $\mathcal{Q}$ by adding additional structure related to these templates. For this, set $\mathcal{Q}_2 = \mathrm{span}\{P_k'\}$, where $P_k'$ denotes the derivative of spectrum $P_k$. (Note, to form $\mathcal{Q}_2$, scale-based approximations to these derivatives are used since raw differencing of non-smooth spectra introduces noise.) Then set $\mathcal{Q} = \mathrm{span}\{\mathcal{Q}_1 \cup \mathcal{Q}_2\}$ and define $L = a(I - P_{\mathcal{Q}}) + b P_{\mathcal{Q}}$.
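The construction just described (templates, smoothed derivatives, their joint span, and the resulting operator) can be sketched as follows. This is only an illustration under stated assumptions: the "templates" are synthetic sums of Gaussian peaks rather than COIN spectra, the derivative is approximated by convolution with the derivative of a Gaussian (one possible scale-based approximation, not necessarily the one used in the paper), and the values of a, b and the smoothing scale are arbitrary.

```python
import numpy as np

def gaussian_derivative(spectrum, scale):
    """Smoothed first derivative (per sample): convolve with a Gaussian-derivative kernel."""
    half = int(4 * scale)
    u = np.arange(-half, half + 1)
    g = np.exp(-u**2 / (2.0 * scale**2))
    g /= g.sum()
    dg = -u / scale**2 * g                       # derivative of the Gaussian kernel
    padded = np.pad(spectrum, half, mode="edge") # avoid shrinking the output
    return np.convolve(padded, dg, mode="valid")

rng = np.random.default_rng(1)
p = 300
t = np.linspace(400.0, 1800.0, p)

# Hypothetical template spectra: four narrow peaks each (NOT real COIN data).
centers = rng.uniform(500.0, 1700.0, size=(8, 4))
templates = np.array([
    np.exp(-(t[None, :] - row[:, None]) ** 2 / (2 * 15.0**2)).sum(axis=0)
    for row in centers
])                                               # 8 x p
derivs = np.array([gaussian_derivative(P, scale=3.0) for P in templates])

# Orthonormal basis for the span of templates and derivatives, then the projector.
basis = np.vstack([templates, derivs]).T         # p x 16
U, s, _ = np.linalg.svd(basis, full_matrices=False)
U = U[:, s > 1e-10 * s[0]]
P_Q = U @ U.T

a, b = 1.0, 0.1                                  # illustrative weights
L = a * (np.eye(p) - P_Q) + b * P_Q
```

By construction, L multiplies anything in the enlarged subspace by b and anything orthogonal to it by a, so choosing b < a favors estimates whose structure resembles the templates or their derivatives.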

The regularization parameters in the PEER and ridge estimation processes were chosen using REML, as described in Section 6. For the FPCRR estimate, we used the R-package refund [39] as implemented in [40].

Since β is not known (the model y = Xβ + ε is only approximate), we cannot report MSEs for these three methods. However, the structure of P1 is qualitatively known and by experimental design, y is directly associated with P1. The goal here is that of extracting structure of the constituent spectral components as manifest in a linear model. This application is similar to the classic problem of multivariate calibration [5, 31] which essentially leads to a regression model using an experimentally-designed set of spectra from laboratory mixes.

The structure in the estimate here is expected to reflect the structure in P1 that is correlated with P1’s concentrations, y. The estimate is not, however, expected to precisely reconstruct P1 since P1 shares structure with the other COIN spectra not associated with y. See Figure 2 where P1 is plotted alongside the other COIN spectra. Now, Figure 3 shows plots of the PEER, FPCRR and ridge estimates of the fLM coefficient function. The PEER estimate, β̃Q, provides an interpretable compromise between ridge, which involves no smoothing, and FPCRR, which appears to oversmooth. For reference, the P1 spectrum is also plotted along with a mean-adjusted version of β̃Q, β̃Q + μ (dashed line), where μ(t) = (1/36) Σi xi(t), t ∈ [400, 1800].

Finally, we consider prediction for these methods by forming a new set of spectra from different mixture compositions (different concentrations of each COIN) and, additionally, taken from different batches. This “test” set consists of spectra from four technical replicates in each of 15 mixtures, forming a set of n = 60 spectra. As before, P1 is the COIN whose concentration within each mixture defines the response values. For the estimates from each of the three methods (shown in Figure 3) we compute the prediction error. The errors for the PEER, ridge, and FPCRR estimates are 0.770, 0.752 and 2.139, respectively. The ridge estimate here illustrates how low prediction error is not necessarily accompanied by interpretable structure in the estimate (or low MSE) [7].

8. Discussion

As high-dimensional regression problems become more common, methods that exploit a priori information are increasingly popular. In this regard, many approaches to penalized regression are now founded on the idea of “structured” penalties which impose constraints based on prior knowledge about the problem’s scientific setting. There are many ways in which such constraints may be imposed, and we have focused on the algebraic aspects of a penalization process that imposes spatial structure directly into a regularized estimation. This approach fits into the classic framework of L2-penalized regression but with an emphasis on the algebraic role that a penalty operator plays to impart structure on the estimate.

The interplay between a structured regularization term and the coefficient-function estimate may not be well understood, in part because it is not typically viewed in terms of the generalized singular vectors/values, which are fundamental to this investigation. In particular, any penalized estimate of the form (1) with L ≠ I is intrinsically based on GSVD factors in the same way that many common regression methods (such as PCR, ridge, James-Stein, or partial least squares) are intrinsically based on SVD factors. Just as the basics of the ubiquitous SVD are important to understanding these methods, we have aspired to establish the basics of the GSVD as it applies to this general penalized regression setting and to illustrate how the theory underlying this approach can be used to inform the choice of penalty operator.

Toward this goal, the presentation emphasizes the transparency provided by the partially-empirical eigenvector expansion (7). Properties of the estimate’s variance and bias are determined explicitly by the generalized singular vectors whose structure is determined by the penalty operator. We have restricted attention to additive constraints defined by penalty operators on L2 in order to retain the direct algebraic connection between the eigenproperties of the operator pair (X, L) and the spatial structure of β̃α,L. Intuitively, the structure of the penalty’s least-dominant singular vectors should be commensurate with the informative structure of β. The actual effect a penalty has on the properties of the estimate can be quantified in terms of the GSVD vectors/values.
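To make the link between the operator pair (X, L) and the bias and variance concrete, the sketch below uses a shortcut that applies only when L is invertible: the penalized problem is transformed to standard form, so the relevant factors come from an ordinary SVD of XL⁻¹ and the expansion vectors are L⁻¹vₖ. A singular L would require a genuine GSVD routine, which is not shown. The estimate is assumed to minimize ‖y − Xβ‖² + α‖Lβ‖²; the function names, the random data and the particular L are hypothetical.

```python
import numpy as np

def standard_form_factors(X, L, alpha):
    """SVD of X L^{-1}; valid only for invertible L (an assumption of this sketch)."""
    A = X @ np.linalg.inv(L)
    U, s, Vt = np.linalg.svd(A, full_matrices=False)
    W = np.linalg.solve(L, Vt.T)       # columns: expansion vectors L^{-1} v_k
    f = s / (s**2 + alpha)             # filter applied to each component
    return U, s, Vt, W, f

def estimate(X, y, L, alpha):
    U, s, Vt, W, f = standard_form_factors(X, L, alpha)
    return W @ (f * (U.T @ y))

def bias_sq_and_variance(X, L, alpha, beta, sigma2):
    """Squared bias and total variance of the estimate for a given true beta."""
    U, s, Vt, W, f = standard_form_factors(X, L, alpha)
    mean = W @ (f * s * (Vt @ (L @ beta)))          # expected value of the estimate
    bias_sq = float(np.sum((mean - beta) ** 2))
    variance = float(sigma2 * np.sum(f**2 * np.sum(W**2, axis=0)))
    return bias_sq, variance

rng = np.random.default_rng(3)
n, p, alpha, sigma2 = 15, 40, 0.3, 0.25
X = rng.normal(size=(n, p))
beta = rng.normal(size=p)
y = X @ beta + np.sqrt(sigma2) * rng.normal(size=n)

# Hypothetical invertible penalty: light weight on a 5-dimensional subspace.
Qmat, _ = np.linalg.qr(rng.normal(size=(p, 5)))
P = Qmat @ Qmat.T
L = 1.0 * (np.eye(p) - P) + 0.1 * P

beta_hat = estimate(X, y, L, alpha)
bias_sq, variance = bias_sq_and_variance(X, L, alpha, beta, sigma2)
```

Both the bias and the variance are sums over the same expansion vectors, weighted by the filter factors. That is the sense in which the penalty's influence on the estimate can be read off component by component.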

This perspective differs from popular two-stage signal regression methods in which estimation is either preceded by fitting the predictors to a set of (external) basis functions or is followed by a step that smooths the estimate [8, 21, 30, 38, 40]. Instead, structure (smoothness or otherwise) is imposed directly into the estimation process. The implementation of a penalty that incorporates structure less generic than smoothness (or sparseness) requires some qualitative knowledge about spatial structure that is informative. Clearly this is not possible in all situations, but our presentation has focused on how a functional linear model may provide a rigorous and analytically tractable way to take advantage of such knowledge when it exists.

Acknowledgments

The authors wish to thank three anonymous referees and the associate editor for several corrections and suggestions that greatly improved the paper. We also gratefully acknowledge the patience, insight and assistance from Dr. C. Schachaf who provided the Raman spectroscopy data for the examples in Sections 7.2 and 7.3. Madan Gopal Kundu carefully read the manuscript and corrected several errors. Research support was provided by the National Institutes of Health grants R01-CA126205 (TR), P01-CA053996 (TR, ZF), U01-CA086368 (TR, ZF), R01-NS036524 (JH) and U01-MH083545 (JH).

9. Appendix

9.1. Defining β for the simulation in Section 7.2

This simulation is motivated by an interest in constructing a plausibly realistic β whose structure is naturally derived from the scientific setting involving Raman signatures of nanoparticles. Although one could model a β mathematically using, say, polynomials or Gaussian bumps (cf. Appendix A.2), such a simulation would be detached from the physical nature of this problem. Instead, we construct a coefficient function that genuinely comes from a functional linear model with Raman spectra as predictors.

We first generate in-silico mixtures of COIN spectra as , where ci,k ~ unif[0, 1]. Designating P9 as the COIN of interest, we define response values that correspond to the “concentration” of P9 by setting , i = 1, …, n. The factor of 3 imposes a strong association between P9 and the response.

Now, the example in Section 7.2 aims to estimate a coefficient function that truly comes from a solution to a linear model. However, the equation yo = Xoβ has infinitely many solutions (where Xo is the matrix whose ith row is the ith mixture spectrum), so we must regularize the problem to obtain a specific β. For this, we simply use a ridge penalty and designate the resulting solution to be β. This is shown by the dotted curve in Figure 2 and is qualitatively similar to P9.
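The non-uniqueness mentioned above, and the way a ridge penalty resolves it, can be illustrated with a small sketch. Everything here is a stand-in: the "pure spectra" are synthetic Gaussian peaks, the sizes n, p and the ridge parameter are arbitrary, and the response is simply taken to be the mixing coefficient of the last component, which is an assumption and not the paper's exact scaling of the response.

```python
import numpy as np

rng = np.random.default_rng(2)
n, p, K = 30, 120, 9
t = np.linspace(0.0, 1.0, p)

# Hypothetical "pure spectra": each a sum of three narrow peaks (not real COIN data).
centers = rng.uniform(0.1, 0.9, size=(K, 3))
pure = np.array([
    np.exp(-(t[None, :] - row[:, None]) ** 2 / (2 * 0.02**2)).sum(axis=0)
    for row in centers
])                                        # K x p

C = rng.uniform(0.0, 1.0, size=(n, K))    # mixing coefficients c_{i,k}
Xo = C @ pure                             # n x p matrix of in-silico mixture spectra
yo = C[:, -1]                             # assumed response: weight of the last component

# Two different exact solutions of yo = Xo beta.
Xo_pinv = np.linalg.pinv(Xo, rcond=1e-10) # explicit cutoff: Xo has rank K < n
beta_min = Xo_pinv @ yo                   # minimum-norm solution
z = rng.normal(size=p)
beta_alt = beta_min + (z - Xo_pinv @ (Xo @ z))   # add a null-space component

# A ridge penalty selects a single, slightly shrunken solution.
alpha = 1e-3
beta_ridge = Xo.T @ np.linalg.solve(Xo @ Xo.T + alpha * np.eye(n), yo)
```

Both `beta_min` and `beta_alt` reproduce `yo` exactly, which is why some regularization is needed before any one of them can be called "the" coefficient function. The ridge solution is one defensible choice among many.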

We note that the simulation in section 7.2 uses the same set of COINs, but a new set of in-silico mixture spectra (i.e., a new set of {ci,k} ~ Unif[0, 1]). In addition, a small amount of measurement error was added, as in section 7.1, to each spectrum during the simulation.

9.2. Frequency domain simulation

We display results from a study that mimics the scenario of simulations studied by Hall and Horowitz [16]. We illustrate, in particular, properties of the MSE discussed following equation (18) in Section 5.3 relating to b = 0. In fact, we consider the more general scenario in which $\mathcal{Q}$ is not constructed from empirical eigenvectors (as in PCR and ridge), but is defined by a prespecified envelope of frequencies.

In this simulation both β and xi, i = 1, …, n, are generated as sums of the cosine functions $\varphi_j(t)$, t ∈ [0, 1]; here $\gamma_j = (-1)^{j+1} j^{-0.75}$, $Z_{ij}$ is uniformly distributed on $[-3^{1/2}, 3^{1/2}]$ (so that $E(Z_{ij}) = 0$ and $\mathrm{var}(Z_{ij}) = 1$), $\varphi_1 \equiv 1$ and $\varphi_j(t) = 2^{1/2}\cos(j\pi t)$ for j ≥ 1, and $\mathrm{cov}(e_i(t), e_{i'}(t')) = 0$ for either i ≠ i′ or t ≠ t′. The response yi is defined as $y_i = \langle \beta, x_i \rangle + \varepsilon_i$, where $\varepsilon_i \sim N(0, \sigma^2)$, i.i.d. The simulations involve discretizations of these curves evaluated at p = 100 equally spaced time points, tj, j = 1, …, p, that are common to all curves.
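A sketch of how predictor curves of this kind could be generated is given below. Several details are assumptions rather than the paper's specification: the number of cosine terms (J = 50), the coefficients used for β (a hypothetical 1/j² decay), the basis convention (φ₁ ≡ 1 and the cosine formula applied for j ≥ 2), the omission of the measurement-error process eᵢ(t), and the Riemann-sum approximation of the inner product.

```python
import numpy as np

def cosine_basis(t, J):
    """Rows are phi_1..phi_J on grid t: phi_1 = 1, phi_j = sqrt(2) cos(j pi t) for j >= 2."""
    Phi = np.ones((J, t.size))
    for j in range(2, J + 1):
        Phi[j - 1] = np.sqrt(2.0) * np.cos(j * np.pi * t)
    return Phi

def simulate(n, p, J, beta_coef, sigma, rng):
    """Generate predictors, a coefficient function and responses on a common grid."""
    t = np.linspace(0.0, 1.0, p)
    Phi = cosine_basis(t, J)
    j = np.arange(1, J + 1)
    gamma = (-1.0) ** (j + 1) * j ** (-0.75)       # gamma_j = (-1)^(j+1) j^(-0.75)
    Z = rng.uniform(-np.sqrt(3.0), np.sqrt(3.0), size=(n, J))  # mean 0, variance 1
    X = (Z * gamma) @ Phi                           # x_i(t) = sum_j gamma_j Z_ij phi_j(t)
    beta = beta_coef @ Phi
    y = X @ beta / p + sigma * rng.normal(size=n)   # <beta, x_i> via a Riemann sum
    return t, X, beta, y, Z

rng = np.random.default_rng(4)
J = 50                                              # assumed number of terms
beta_coef = 1.0 / np.arange(1, J + 1) ** 2          # hypothetical coefficients for beta
t, X, beta, y, Z = simulate(n=50, p=100, J=J, beta_coef=beta_coef, sigma=0.1, rng=rng)
```

The uniform range of ±√3 is what gives each Zᵢⱼ unit variance, so the decay of the predictor's spectrum is controlled entirely by γⱼ.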

Penalties

We consider properties of estimates from a variety of penalties: ridge (L = I), […], […] + aI, and PCR¹. In addition, targeted penalties of the form $L = I - P_{\mathcal{Q}}$ are defined by the specified subspaces $\mathcal{Q} = \mathrm{span}\{\varphi_j\}_{j \in J}$, for φj defined above. Specifically, we use J = JF = {j = 5, …, 17} (a tight envelope of frequencies) to define LF, and J = JG = {j = 4, …, 20} (a less focused span of frequencies) to define LG. The operator […] + aI simply serves to illustrate the role of higher-frequency singular vectors as discussed in Section 4.1. In the simulations, the coefficient a in […] + aI was chosen simultaneously with α via a two-dimensional grid search.
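The two targeted penalties can be built directly from the cosine functions on the sampling grid. The sketch below assumes the basis convention φ₁ ≡ 1 and φⱼ(t) = √2 cos(jπt) for j ≥ 2, a grid of 100 equally spaced points, and a QR step to orthonormalize the sampled cosines (they are only approximately orthogonal once discretized). The helper names are hypothetical.

```python
import numpy as np

def cosine_basis(t, J):
    """Rows are phi_1..phi_J on grid t: phi_1 = 1, phi_j = sqrt(2) cos(j pi t) for j >= 2."""
    Phi = np.ones((J, t.size))
    for j in range(2, J + 1):
        Phi[j - 1] = np.sqrt(2.0) * np.cos(j * np.pi * t)
    return Phi

def targeted_penalty(t, index_set, J_max=50):
    """L = I - P, with P the projector onto span{phi_j : j in index_set}."""
    Phi = cosine_basis(t, J_max)
    B = Phi[[j - 1 for j in index_set]].T   # p x |J| matrix of sampled cosines
    Qmat, _ = np.linalg.qr(B)               # orthonormalize on the grid
    return np.eye(t.size) - Qmat @ Qmat.T

t = np.linspace(0.0, 1.0, 100)
L_F = targeted_penalty(t, range(5, 18))     # J_F = {5, ..., 17}
L_G = targeted_penalty(t, range(4, 21))     # J_G = {4, ..., 20}
```

Each operator leaves the frequencies in its envelope unpenalized and penalizes everything else fully. Because the tighter envelope is contained in the wider one, applying `L_F` and then `L_G` has the same effect as applying `L_G` alone.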

Simulation results

Table 4 summarizes estimation results for all six penalties and two sample sizes, n = 50, 200. The prediction results for these estimates are in Table 5. These are reported for S/N = 10, 5 and R² = 0.8, 0.6. The number of terms in the PCR estimate was optimized and ranged from 19 to 25 when R² = 0.8 and decreased with decreasing R². Analogously, one could optimize over the dimension of $\mathcal{Q}$ (to implement a truncated GSVD), but the purpose here is illustrative, while in practice a more robust approach would employ a penalty of the form (13).