Abstract

Sorting objects and events into categories and concepts is a fundamental cognitive capacity that reduces the cost of learning every particular situation encountered in our daily lives. Relational concepts such as “same,” “different,” “better than,” or “larger than” (among others) are essential in human cognition because they allow events to be classified highly efficiently, irrespective of physical similarity. Mastering a relational concept requires the brain to encode a relationship independently of the physical objects it links and is, therefore, consistent with a capacity for abstraction. Processing several concepts at a time presupposes an even higher level of cognitive sophistication that is not expected in an invertebrate. We found that the miniature brains of honey bees rapidly learn to master two abstract concepts simultaneously, one based on spatial relationships (above/below and right/left) and another based on the perception of difference. Bees that learned to classify visual targets by using this dual concept transferred their choices to unknown stimuli that offered the best match in terms of dual-concept availability: their components presented the appropriate spatial relationship and differed from one another. This study reveals a surprising facility of brains to extract abstract concepts from a set of complex pictures and to combine them in a rule for subsequent choices. This finding thus provides excellent opportunities for understanding how cognitive processing is achieved by relatively simple neural architectures.

Keywords: concept learning, vision, insect, Apis mellifera

Concepts and categories promote cognitive economy by sparing us the learning of every particular instance encountered in our daily lives (1–5). Instead, we group specific instances into broad classes that allow responses to novel situations deemed equivalent to the classified items. Stimulus classification can be governed by perceptual similarity: membership in a given class is then defined by common physical features predictive of the category (6). Alternative classifications that are independent of perceptual similarity can be achieved by using relations as the grouping criterion (6). Relations such as “same” or “different” and “better than” or “worse than” can serve as the basis for a form of classification that can be considered abstract because it involves learning beyond perceptual generalization. Given that the terms “category” and “concept” are often used interchangeably (7), we use the former here to refer to perceptual classification and the latter to relational classification. Manipulating concepts presupposes a high level of cognitive sophistication because the relational concept has to be coded by the brain as a specific entity, independently of the physical nature of the objects linked by this relation (8). Such ability takes time to develop during human infancy and is not necessarily expected to be possible in an insect brain (3, 5).

Honey bees are a powerful model for studies of visual cognition because they can be reliably trained to associate visual stimuli with sucrose reward (9–11). In this experimental framework, bees can learn, through experience, to navigate complex mazes (12), to categorize objects based on coincident visual features (11, 13–15), and to master discriminations based on identity (16, 17) and numerosity (18–20). Bees can also learn the concept of above/below with respect to a constant reference (e.g., a horizontal line) (21). Whether bees can reach a level of abstraction that allows them to master multiple concepts simultaneously, however, remains an open question that goes beyond all previous attempts to characterize the cognitive power of insect brains (22). Here we ask whether bees can learn to choose variable complex stimuli that simultaneously fulfill two conceptual requirements: their components are always placed in a consistent spatial relationship and, in addition, are always perceptually different.

Results

To answer this question, marked honey bees were individually trained to fly into a Y-shaped maze where they had to discriminate between two stimuli presented vertically on the back walls of the maze. Each stimulus was composed of two different elements arranged in one of two spatial configurations: above/below (one above the other) or left/right (one to the right of the other). Importantly, stimulus components were always different from one another, whether they were achromatic patterns or chromatic discs (Figs. 1A, 2A, and 3A, training). Bees were trained to enter the Y maze and were rewarded with sucrose solution for choosing either the above/below stimuli or the left/right stimuli. A drop of quinine solution was delivered for any incorrect choice because this penalty significantly enhances visual attention during the learning of perceptually difficult tasks (23). Thus, during 30 learning trials (i.e., 30 foraging bouts between the hive and the Y maze), half of the bees (n = 14) were rewarded for choosing the above/below relationship (and penalized for choosing the left/right relationship), with the conditions reversed for the other half (n = 14) (i.e., reward: right/left; punishment: above/below). Within each group, half of the bees were trained with achromatic patterns and the other half with color discs (Fig. S1). Stimulus features and positions were systematically varied between trials (Fig. S1 and Figs. 1A, 2A, and 3A), so that the above/below or left/right relationship remained the only predictor of reward, while simultaneously presented stimuli always contained different elements. To uncover the nature of the solutions acquired through training, we performed nonrewarded transfer tests in three different experiments in which bees were presented with novel stimuli (Figs. 1A, 2A, and 3A, transfer tests).
To exclude the possibility that bees used the global center of gravity of the stimuli as a cue to solve the task (24), all stimuli used in the nonrewarded tests were centered identically, so that their centers of gravity lay at exactly the same position.
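The center-of-gravity control can be made concrete with a small sketch. It computes the centroid of the foreground pixels for two toy binary images, one above/below pair and one left/right pair, placed symmetrically on the same grid. The binary-mask representation, the 9 × 9 grid, and the helper names are assumptions for illustration; for real stimuli on a gray background, pixel weights would reflect contrast rather than a 0/1 mask.

```python
# Hypothetical check of the center-of-gravity control: two toy binary images
# (1 = stimulus pixel, 0 = background) drawn on the same 9 x 9 grid.


def centre_of_gravity(image):
    """Return the (row, col) centroid of the foreground pixels of a 2-D grid."""
    total = row_sum = col_sum = 0.0
    for r, row in enumerate(image):
        for c, value in enumerate(row):
            total += value
            row_sum += r * value
            col_sum += c * value
    if total == 0:
        raise ValueError("image has no foreground pixels")
    return row_sum / total, col_sum / total


def blank(size):
    """Empty square grid of the given size."""
    return [[0] * size for _ in range(size)]


def draw_square(image, top, left, side):
    """Fill a side x side square whose top-left corner is (top, left)."""
    for r in range(top, top + side):
        for c in range(left, left + side):
            image[r][c] = 1
    return image


# Two 3 x 3 squares stacked vertically (above/below) or side by side
# (left/right), both placed symmetrically about the grid center.
above_below = draw_square(draw_square(blank(9), 1, 3, 3), 5, 3, 3)
left_right = draw_square(draw_square(blank(9), 3, 1, 3), 3, 5, 3)

print(centre_of_gravity(above_below))  # (4.0, 4.0)
print(centre_of_gravity(left_right))   # (4.0, 4.0)
```

Because both arrangements share the same centroid, a bee relying on the center of gravity alone would have no basis for preferring either option.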

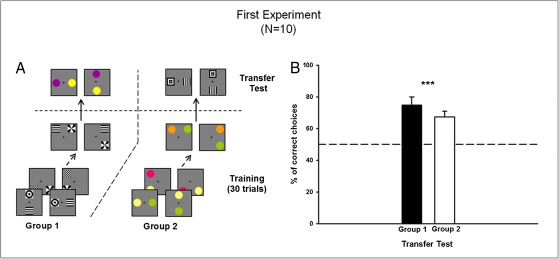

Fig. 1.

Experiment 1. (A) Example of conditioning and testing procedure for two groups of bees: group 1 trained with achromatic patterns and group 2 trained with color discs. Within each group, half of the bees were rewarded on the above/below relation, whereas the other half were rewarded on the right/left relation. Novel stimuli were presented in the transfer test. (B) Performance in the transfer test (percentage of correct choices) of groups 1 and 2. Bees preferred the novel stimuli arranged in the trained spatial relationship. Data shown are mean + SEM (n = 5 for each bar; ***P < 0.001).

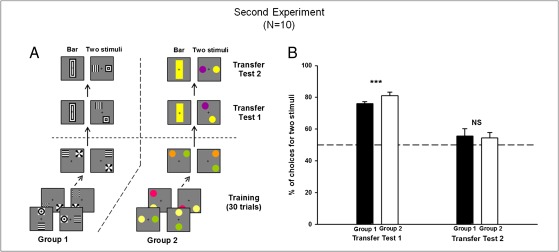

Fig. 2.

Experiment 2. (A) Example of conditioning and testing procedure for group 1 trained with achromatic patterns and group 2 trained with color discs. Within each group, half of the bees were rewarded on the above/below relation, whereas the other half were rewarded on the right/left relation. Transfer tests shown correspond to bees rewarded on the above/below relation. (B) Performance in the transfer tests (percentage of choices for the option displaying two stimuli). Bees preferred the two stimuli to the bar if they were linked by the appropriate relationship (transfer test 1) but not if they displayed the inappropriate relationship (transfer test 2). Data shown are mean + SEM (n = 5 for each bar; ***P < 0.001; NS, P > 0.05).

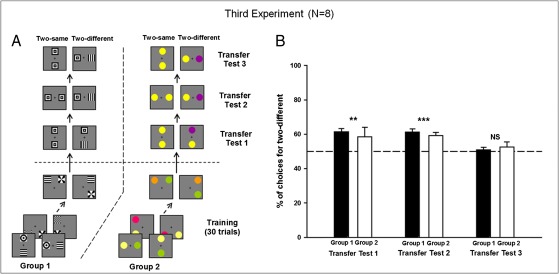

Fig. 3.

Experiment 3. (A) Example of conditioning and testing procedure for group 1 trained with achromatic patterns and group 2 trained with color discs. Within each group, half of the bees were rewarded on the above/below relation, whereas the other half were rewarded on the right/left relation. Transfer tests shown correspond to bees rewarded on the above/below relation. (B) Performance in the transfer tests (percentage of choices for the option displaying two different stimuli). Bees preferred two different to two identical stimuli both in the appropriate (transfer test 1) and the inappropriate (transfer test 2) relationships and chose randomly in a conflict situation (transfer test 3). Data shown are mean + SEM (n = 4 for each bar; **P < 0.01; ***P < 0.001; NS, P > 0.05).

During training, bees learned to choose the rewarded spatial relationship between stimuli, irrespective of the specific relationship (above/below or left/right) or the type of visual stimulus (achromatic patterns or chromatic discs) (P < 0.01 in all cases; see acquisition curves in Fig. S2). Learning was robust despite a systematic trial-to-trial variation of both stimulus features and the absolute position of stimuli in the bees’ visual field.

First Experiment: Bees Transferred the Learned Spatial Relation to Novel Stimuli.

We assessed the bees’ ability to transfer the learned spatial relationship to novel stimuli that differed perceptually from those used during training (Fig. 1A, transfer test). This test determined whether bees relied on stimulus similarity or on a true relational concept. Bees trained with achromatic patterns (group 1; n = 5) were tested with color discs, whereas those trained with color discs (group 2; n = 5) were tested with achromatic patterns. In both cases, bees chose the appropriate spatial relationship in the respective transfer tests (Fig. 1B; P < 0.001 for both groups 1 and 2), and transfer performance did not differ significantly between groups 1 and 2. This result shows that the bees learned to use the relational spatial concept (above/below or left/right) irrespective of the perceptual similarity between the stimuli defining this concept.

Second Experiment: Bees Did Not Use the Global Orientation of the Stimuli as a Lower-Level Cue to Resolve the Task.

We then tested whether bees did indeed use a spatial concept or alternatively responded to broad orientation cues (25, 26). Specifically, we aimed to distinguish between learning that two stimuli were connected by a specific relationship (e.g., one above the other or one on the right of the other) and learning that a global cue (e.g., vertical or horizontal, respectively) was associated with sucrose reward. Two groups of bees (n = 5 each) were trained either with achromatic patterns (group 1) or colors (group 2) to choose a specific spatial relationship (above/below or left/right). In two transfer tests (Fig. 2A, transfer tests), bees were confronted with novel stimuli that were not used during the training. In transfer test 1, they had to choose between a large bar (Fig. S1C), vertical for above/below-trained animals and horizontal for left/right-trained animals, and two stimuli that were placed in the appropriate spatial relationship but which were shifted with respect to each other (Fig. 2A, transfer test 1) to avoid the global vertical or horizontal visual stimulation. In transfer test 2, the same large bar was presented versus two stimuli exhibiting the nonrewarded spatial relationship (left/right for above/below-trained animals and vice versa) (Fig. 2A, transfer test 2).

In transfer test 1, bees of both groups significantly preferred the two stimuli over the large bar (Fig. 2B; P < 0.001 for both groups 1 and 2; transfer test 1), thus showing that they were guided by the presence of two stimuli in a specific spatial relationship (even if the stimuli were slightly shifted with respect to each other) rather than by a strong orientation cue. Transfer performance did not differ significantly between groups 1 and 2 or between bees trained on above/below and bees trained on left/right [not significant (NS) in all cases]. In transfer test 2, bees from both groups exhibited no significant preference for either stimulus (Fig. 2B; NS for both groups 1 and 2; transfer test 2), showing that bees did not perceive the bar providing the salient orientation cue as representing the rewarded stimuli. This finding thus allows us to exclude this potential low-level explanation of the bees’ behavior. These results confirm that bees learned to choose two stimuli arranged in a specific spatial relationship, irrespective of the particular stimuli used to build this relationship.

Third Experiment: Bees Extracted an Additional Relational Concept from Training and Used It in Combination with the Spatial Concept.

Did bees also learn from training that the stimulus elements had to be different? To answer this question, we performed a third experiment to determine whether bees could additionally learn the concept that stimulus elements must always be different while also choosing on the basis of specific spatial relationships. Bees were trained with either achromatic patterns (group 1; n = 4) or color discs (group 2; n = 4) and then presented with three transfer tests (Fig. 3A) in which they had to choose between two different stimuli and the same stimulus repeated. In transfer test 1, both kinds of stimuli were arranged in the correct spatial relationship, whereas in transfer test 2, both were arranged in the incorrect spatial relationship. Finally, transfer test 3 presented conflicting information: the correct spatial relationship linked two identical stimuli, whereas the incorrect spatial relationship linked two different stimuli.

In transfer test 1, bees from both groups preferred two different stimuli arranged in the appropriate spatial relationship over two identical stimuli arranged in the same relationship (Fig. 3B; P < 0.01 for both groups 1 and 2; transfer test 1). No significant differences were observed between the transfer performances of groups 1 and 2 or between bees trained on above/below and bees trained on left/right (NS in all cases). In transfer test 2, bees presented with stimuli in the incorrect spatial relationship also showed a significant preference for stimuli with two different components over stimuli with two identical components (Fig. 3B; P < 0.01 for both groups 1 and 2; transfer test 2). Again, no significant differences were found between groups 1 and 2 or between bees trained on the above/below or left/right configurations (NS in all cases). These results confirm that, in addition to the specific spatial relationship, bees learned to attend to the requirement for different stimulus components. Note that the preference for difference over sameness was so strong that, in transfer test 2, it even led the bees to choose the spatial arrangement that had been explicitly associated with a quinine penalty during training. This result admits two interpretations: either difference dominates over the spatial relationship (or is at least treated separately from it) in the definition of the rewarded category, or the negative stimulus was not learned per se during training. The latter possibility can be excluded because studies on visual discrimination learning in the same setup and with identical reinforcements show that bees learn both the positive and the negative stimulus (23). Finally, in transfer test 3, bees presented with the dilemma of choosing between the correct relationship linking two identical stimuli and the incorrect relationship linking two different stimuli showed no preference for either option (Fig. 3B; NS for both groups 1 and 2; transfer test 3). No differences were found between the transfer performances of groups 1 and 2 or between bees trained on above/below and bees trained on left/right (NS in all cases). Thus, because the bees had learned both that stimulus elements had to be different and that they had to be in the correct spatial arrangement, the rule guiding their decisions consisted of two combined concepts.
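The combined rule inferred from experiment 3 can be illustrated with a toy additive scoring model. This is a hypothetical sketch, not a model proposed or fitted by the authors: each option earns one point for matching the trained spatial relation and one point for having different components, and the higher-scoring option is predicted to be preferred. The names `Option`, `score`, `predicted_choice`, and the weights `w_spatial` and `w_difference` are all illustrative. With equal weights, the sketch reproduces the qualitative pattern of the three transfer tests of experiment 3.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Option:
    relation: str            # "above/below" or "left/right"
    components_differ: bool  # True if the two elements are different


def score(option, trained, w_spatial=1.0, w_difference=1.0):
    """Hypothetical additive score: one term per concept."""
    spatial = 1.0 if option.relation == trained else 0.0
    difference = 1.0 if option.components_differ else 0.0
    return w_spatial * spatial + w_difference * difference


def predicted_choice(a, b, trained, w_spatial=1.0, w_difference=1.0):
    """Return 'A', 'B' or 'no preference' for options a and b."""
    sa = score(a, trained, w_spatial, w_difference)
    sb = score(b, trained, w_spatial, w_difference)
    if sa > sb:
        return "A"
    if sb > sa:
        return "B"
    return "no preference"


trained = "above/below"
tests = {
    # Test 1: correct relation; different (A) vs identical (B) elements.
    "test 1": (Option("above/below", True), Option("above/below", False)),
    # Test 2: incorrect relation; different (A) vs identical (B) elements.
    "test 2": (Option("left/right", True), Option("left/right", False)),
    # Test 3: correct relation + identical (A) vs incorrect relation + different (B).
    "test 3": (Option("above/below", False), Option("left/right", True)),
}
for name, (a, b) in tests.items():
    print(name, predicted_choice(a, b, trained))
# test 1 A
# test 2 A
# test 3 no preference
```

In this toy model the tie in test 3 arises only when the two weights are equal; giving either concept a larger weight would predict a preference in the conflict situation.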

Discussion

These results demonstrate that the miniature brain of honey bees is capable of extracting at least two relational concepts from experience with complex stimuli and that these concepts can be combined to produce sophisticated performance that is independent of the physical nature of the stimuli. Irrespective of whether stimuli were chromatic or achromatic, bees learned that they had to choose stimuli arranged in a specific spatial relationship and that every stimulus was composed of different visual elements. Thus, a dual concept based on two distinct relational rules, rather than perceptual similarity, guided the honey bees’ choices in these experiments.

Sophisticated multiple-concept processing is not necessarily expected in an insect brain given its small size and small number of neurons relative to larger organisms (e.g., 960,000 neurons in a honey bee brain vs. 100 billion in a human brain). It seems, therefore, that views emphasizing relational thinking as a cornerstone of human perception and cognition, underlying analogy, language, and mathematical abilities among others (8), need to be reconsidered and extended to relatively simpler brains (22).

Notably, honey bees extracted the concept of difference even though they were not specifically trained to do so: both rewarded and punished stimuli were composed of two different elements, so difference alone did not predict reward. Moreover, bees required only 30 training trials (i.e., ∼3 h of training) to extract and master both relational concepts, whereas several thousand trials are generally required for similar concepts to develop in some primates (27). However, comparisons between the performances of bees and primates are not necessarily pertinent: whereas bees could move freely in our experiments and their constant returns to the experimental setup revealed high foraging motivation, conceptual learning in primates is usually tested in artificial laboratory environments in which animals are individually immobilized, far from biologically relevant situations. Alternative research strategies that study primates’ cognitive abilities in seminatural environments and preserve social groups (28) may substantially change the ease with which these animals solve conceptual problems. Nevertheless, the relative facility with which bees master relational concepts should prompt a reassessment of the assumed requirement for high brain complexity, involving multiple processing steps, to solve concept learning tasks (22).

Low-level explanations should be considered as potential accounts of some visual performances of insects (22). Our experiments explicitly excluded explanations based on generalization along a perceptual dimension such as color or achromatic pattern properties (11), differences in the center of gravity of images (24), or broad orientation cues (25). Template matching has been proposed as a simple mechanism by which insects compare learned images with currently perceived images (29–32). A strict pixel-by-pixel comparison (32) can be excluded in our experiments because bees appropriately transferred their choices to novel visual images that differed in spatial details and/or color. However, template matching can adopt more sophisticated forms compatible with generalization to novel stimuli. Correlation analysis can underlie flexible forms of template matching because it allows statistical quantification of the similarity between two or more different images (33). To determine whether this type of visual strategy could account for the bees’ performance in our experiments, we computed average left/right and above/below stimuli, both for all stimuli displayed to the bees during training and for those whose position during training matched the position of the test stimuli (Fig. S3). In both cases, coefficients of correlation between average training stimuli and actual test stimuli were calculated by using fast normalized cross-correlation, which identifies the best template match (33). Fig. S3 shows that, although template matching could potentially account for transfer performance in experiment 1, it can be ruled out for experiments 2 and 3, in which bees chose against the predictions of this simple visual strategy. The simultaneous mastering of two visual concepts by bees therefore reflects a higher level of complexity than mere peripheral image coincidence.
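The template-matching analysis rests on two operations: averaging training images into a template and computing a normalized cross-correlation (NCC) coefficient between that template and a test image. The sketch below shows both on toy 3 × 3 grids; the grids, function names, and data are illustrative assumptions, not the stimuli or code behind Fig. S3. It evaluates the coefficient at a single alignment only; the "fast" variant named in the text evaluates this same coefficient at every relative offset of template and image using precomputed sums, which is not implemented here.

```python
import math


def average_image(images):
    """Pixel-wise mean of equally sized 2-D grids (the 'average stimulus')."""
    n = len(images)
    rows, cols = len(images[0]), len(images[0][0])
    return [[sum(img[r][c] for img in images) / n for c in range(cols)]
            for r in range(rows)]


def ncc(template, image):
    """Normalized cross-correlation coefficient at a single alignment (-1..1)."""
    t = [v for row in template for v in row]
    f = [v for row in image for v in row]
    mt, mf = sum(t) / len(t), sum(f) / len(f)
    num = sum((a - mt) * (b - mf) for a, b in zip(t, f))
    den = math.sqrt(sum((a - mt) ** 2 for a in t) * sum((b - mf) ** 2 for b in f))
    if den == 0:
        raise ValueError("coefficient undefined for a uniform image")
    return num / den


# Toy 3 x 3 "stimuli" (1 = element, 0 = background); illustrative only.
train_above_below = [
    [[0, 1, 0], [0, 0, 0], [0, 1, 0]],
    [[0, 1, 0], [0, 1, 0], [0, 0, 0]],
]
template = average_image(train_above_below)
test_above_below = [[0, 1, 0], [0, 0, 0], [0, 1, 0]]
test_left_right = [[0, 0, 0], [1, 0, 1], [0, 0, 0]]
print(round(ncc(template, test_above_below), 3))
print(round(ncc(template, test_left_right), 3))
```

A pure template matcher would prefer whichever test image yields the higher coefficient; the argument in the text is that, in experiments 2 and 3, the bees' choices departed from such predictions.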

What do bees gain from extracting and combining concepts of different nature? Identifying spatial relationships between objects is useful for learning about generic configurations that underlie specific stimulus categories in naturalistic environments. For example, bilaterally symmetric flowers share a common layout with upper and lower petals and left/right symmetry, so spatial relationships may facilitate the construction of a general configuration corresponding to what such a flower should look like (34–36). Furthermore, honey bees are central-place foragers, navigating between a fixed place (the hive) and surrounding food sources (flowers). The navigational strategies of these insects are extremely sophisticated and may even rely on map-like representations of space (37). In this navigational context, dealing with abstract spatial relationships such as left/right or above/below may be extremely helpful for identifying expected landmarks en route to the goal in a complex environment containing a large amount of distracting information that must be ignored (38). If, in addition, the concept of “difference” is available, an abstract but rich representation of space can be achieved even by a miniature brain. For instance, a bee may learn that a food source is located between two landmarks that are adjacent either in the horizontal (i.e., left/right) or in the vertical (i.e., above/below) dimension and that, besides meeting this spatial requirement, are also sufficiently different to be discriminated from other potential distracter stimuli present in the environment. Learning to combine the spatial and difference concepts then allows a bee to quickly apply the acquired rules to new scenarios as foraging conditions change.
These findings are thus likely to be of considerable value for understanding how brains can learn complex cognitive tasks and how modeling of visual processing in miniature brains may provide useful solutions for the development of artificial visual systems (39, 40).

Materials and Methods

Free-flying honey bees were marked with a color spot on the thorax and individually trained to collect 1 M sucrose solution within a Y maze (41). The maze was covered with a UV-transparent Plexiglas ceiling and illuminated by natural daylight. During both training and tests, only one bee was present in the apparatus. The entrance of the maze led to a decision chamber defined by the intersection of the maze arms. Once in the decision chamber, a bee could choose between the stimuli presented on the back walls of both arms. The back walls (20 × 20 cm) were placed at a distance of 15 cm from the decision chamber.

To improve visual learning, a differential-conditioning procedure was used (41): one stimulus was rewarded with 1 M sucrose solution, whereas the other was penalized with 60 mM quinine hydrochloride dihydrate solution (23). Solutions were offered in a micropipette inserted in the center of each back wall. Each bee was trained for 30 trials (i.e., 30 foraging bouts between the hive and the laboratory), during which the sides (left or right) of the rewarded and penalized stimuli were interchanged in a pseudorandom sequence. In each trial, the bee’s first choice after entering the maze was recorded. Tests lasted 45 s, during which the number of contacts with the surface of the stimuli was recorded. Each test was performed twice, with the sides of the stimuli interchanged. Neither sucrose nor quinine was offered during tests. Three to six refresher training trials were interspersed between tests. The order of tests was randomized from bee to bee.
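The text states only that the sides of the rewarded and penalized stimuli were interchanged in a pseudorandom sequence. One common way to build such a sequence is rejection sampling of shuffled sequences, sketched here under assumptions that the source does not state: equal numbers of left and right trials, and at most three consecutive trials with the rewarded stimulus on the same side (in the spirit of Gellermann-type schedules). The function name and parameters are hypothetical.

```python
import random


def side_sequence(n_trials=30, max_run=3, seed=None):
    """Balanced left/right sequence for the rewarded stimulus.

    Assumptions (not from the source): equal numbers of 'L' and 'R',
    and no more than max_run consecutive trials on the same side.
    """
    if n_trials % 2:
        raise ValueError("n_trials must be even for a balanced sequence")
    rng = random.Random(seed)
    sides = ["L"] * (n_trials // 2) + ["R"] * (n_trials // 2)
    while True:
        rng.shuffle(sides)
        # Length of the longest run of identical consecutive sides.
        run = longest = 1
        for prev, cur in zip(sides, sides[1:]):
            run = run + 1 if cur == prev else 1
            longest = max(longest, run)
        if longest <= max_run:
            return list(sides)


seq = side_sequence(seed=1)
print("".join(seq))
```

The run limit prevents a bee from being rewarded for a simple side bias over several consecutive trials while keeping both sides equally frequent overall.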

Stimuli.

Stimuli were presented on a gray background that covered the back walls of the maze. The background was cut from a neutral gray HKS-92N paper (K+E Stuttgart). Pairs of vertically or horizontally aligned achromatic black patterns or color discs were used to establish above/below or left/right relationships (Fig. S1).

Six different achromatic patterns printed with a high-resolution laser printer on UV-reflecting white paper of constant quality (a checkerboard, a radial four-sectored pattern, a horizontal grating, a vertical grating, and two concentric patterns; Fig. S1A) and six different color discs (cut from HKS papers 1N, 3N, 29N, 32N, 68N, and 71N; Fig. S1B) were used. Patterns were 7 × 7 cm and subtended a visual angle of 26° to the eyes of a bee located in the decision chamber of the maze. Grating stripes, checkerboard squares, and concentric squares and rings were all 1 cm wide, corresponding to a visual angle of 4° from the decision chamber. Sectors in the radial pattern measured 2.5 cm at their widest, thus subtending a visual angle of 10° to the bees’ eyes when deciding between stimuli. Experiments using horizontal and vertical black-and-white gratings of varying spatial frequencies have shown that single stripes can be resolved if they subtend a threshold angle of 2.3° (42). Thus, all achromatic patterns could be readily resolved by honey bees. Color discs were 7 cm in diameter and subtended a visual angle of 26° to the eyes of a bee in the decision chamber. An angular threshold of 15° has been reported for color-disc detectability, so our color discs could be readily detected and discriminated on the basis of their color information (43). Colors were also well discriminable from each other according to both the color opponent coding space (44) and the color hexagon (45), two perceptual spaces proposed for honey bee color vision. Large achromatic and chromatic bars (7 × 18 cm), presented with their main axis either vertical or horizontal, were used in the transfer tests of the second experiment. Achromatic bars were extensions of the training patterns (Fig. S1C). Chromatic bars (Fig. S1C) were cut from the same HKS-N papers used for the training color discs.
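The visual angles quoted above follow from the stimulus sizes and the 15-cm distance between the back wall and the decision chamber. A minimal check, assuming each object is centered on the bee's line of sight (off-axis elements subtend slightly smaller angles), uses θ = 2·arctan(s / 2d), where s is the object size and d the viewing distance:

```python
import math

DISTANCE_CM = 15.0  # back wall to decision chamber, from the text


def visual_angle_deg(size_cm, distance_cm=DISTANCE_CM):
    """Full angle subtended by an object centered on the line of sight."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))


for label, size in [("7 cm pattern or disc", 7.0),
                    ("1 cm stripe", 1.0),
                    ("2.5 cm sector", 2.5)]:
    print(f"{label}: {visual_angle_deg(size):.1f} deg")
# 7 cm pattern or disc: 26.3 deg
# 1 cm stripe: 3.8 deg
# 2.5 cm sector: 9.5 deg

# Compare with the resolution (2.3 deg) and color-detection (15 deg) thresholds.
print(visual_angle_deg(1.0) > 2.3, visual_angle_deg(7.0) > 15.0)  # True True
```

The text rounds these values to 26°, 4°, and 10°; in each case the angles exceed the corresponding thresholds cited above.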

During training, two groups of bees were trained in parallel in each experiment, one with achromatic patterns and the other with color discs. Of the six stimuli available within each category, four were randomly selected as training stimuli, leaving the other two for the transfer tests. The four training stimuli were arranged in pairs to define above/below and left/right relationships. All six possible pairs of the four training stimuli were used during training, each pair being presented five times (30 learning trials in total). Additionally, vertically (above/below) and horizontally (left/right) aligned stimuli were presented in three different positions: on the central axis or on either of the two distal axes of the back wall (Fig. S1D). Stimulus pairs and their positions were randomly varied from trial to trial. In this way, bees were prevented from using absolute spatial locations or the center of gravity of patterns (24) to solve the discrimination task. This training procedure has been shown to promote concept formation in bees and offers the advantage of training and testing multiple stimulus combinations (21). Only bees that completed the entire training and test sequences were included in the results; no other selection criterion was applied.
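The arithmetic of the training design (four of six stimuli, C(4, 2) = 6 pairs, 6 × 5 = 30 trials, three positions) can be sketched as a schedule generator. This is an illustration under stated assumptions, not the authors' procedure: the source does not say how pairs were allocated between the two options of a trial, so the sketch assumes that one pair supplies both the above/below and the left/right option, with independently drawn positions. The position labels and function name are hypothetical.

```python
import itertools
import random

# Hypothetical labels for the three axes of Fig. S1D.
POSITIONS = ("central", "distal-1", "distal-2")


def training_schedule(stimuli, n_training=4, n_repeats=5, seed=None):
    """Pick training/transfer stimuli and build a shuffled list of trials.

    Assumption: in each trial one stimulus pair supplies both options, and
    the above/below and left/right options get independently drawn positions.
    """
    rng = random.Random(seed)
    training = rng.sample(stimuli, n_training)
    transfer = [s for s in stimuli if s not in training]
    pairs = list(itertools.combinations(training, 2))  # 6 pairs for 4 stimuli
    trials = [
        {
            "pair": pair,
            "above_below_position": rng.choice(POSITIONS),
            "left_right_position": rng.choice(POSITIONS),
        }
        for pair in pairs * n_repeats
    ]
    rng.shuffle(trials)
    return training, transfer, trials


patterns = ["checkerboard", "radial", "horizontal grating",
            "vertical grating", "concentric 1", "concentric 2"]
training, transfer, trials = training_schedule(patterns, seed=0)
print("training:", training)
print("transfer:", transfer)
print(len(trials), "trials; first:", trials[0])
```

Shuffling the full list, rather than cycling through the pairs in order, keeps each pair's five presentations spread unpredictably across the 30 trials.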

Statistics.

Acquisition was measured as the percentage of correct choices in three blocks of training trials. Performance of balanced groups during training was compared by using repeated-measures ANOVA with group and trial block as factors. During the tests, both the first choice and the cumulative contacts with the surface of the targets were recorded for 45 s, and the choice proportion for each of the two test stimuli was then calculated. Test performance was analyzed as the proportion of correct choices per test, producing a single value per bee to avoid pseudoreplication. A one-sample t test was used to test the null hypothesis that the proportion of correct choices did not differ from the chance level of 50%. A binomial test was used to analyze whether first choices were randomly distributed between test stimuli. Test analyses reported here refer to the cumulative contacts recorded during 45 s; similar results were obtained when the first choice was considered.
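The per-bee measures and tests described above can be sketched with the Python standard library. This is a minimal illustration, not the authors' analysis code: blocks of 10 trials are assumed (30 trials in three blocks), the one-sample t test p-value is obtained by Simpson integration of the Student t density, and the two-sided binomial p-value sums all outcomes no more likely than the observed one. These are implementation choices; a statistics package would normally be used. All function names and example numbers are hypothetical.

```python
import math


def block_percentages(first_choices, block_size=10):
    """Percent correct first choices (1 = correct, 0 = incorrect) per block."""
    blocks = [first_choices[i:i + block_size]
              for i in range(0, len(first_choices), block_size)]
    return [100 * sum(b) / len(b) for b in blocks]


def percent_correct(contacts_correct, contacts_incorrect):
    """One value per bee and test: share of contacts on the correct stimulus."""
    total = contacts_correct + contacts_incorrect
    if total == 0:
        raise ValueError("no contacts recorded")
    return 100 * contacts_correct / total


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    c = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return c * (1 + x * x / df) ** (-(df + 1) / 2)


def one_sample_t(values, mu=50.0, steps=2000):
    """Two-sided one-sample t test against mu (steps must be even)."""
    n = len(values)
    mean = sum(values) / n
    sd = math.sqrt(sum((v - mean) ** 2 for v in values) / (n - 1))
    t = (mean - mu) / (sd / math.sqrt(n))
    df = n - 1
    # Simpson's rule for P(0 <= T <= |t|); the two-sided p is 1 - 2 * area.
    h = abs(t) / steps
    s = t_pdf(0.0, df) + t_pdf(abs(t), df)
    s += sum((4 if k % 2 else 2) * t_pdf(k * h, df) for k in range(1, steps))
    area = s * h / 3
    return t, df, min(1.0, max(0.0, 1 - 2 * area))


def binomial_two_sided(k, n, p=0.5):
    """Exact two-sided binomial p: sum of outcomes no more likely than k."""
    pmf = [math.comb(n, i) * p ** i * (1 - p) ** (n - i) for i in range(n + 1)]
    cutoff = pmf[k] * (1 + 1e-7)  # small tolerance for floating-point ties
    return min(1.0, sum(q for q in pmf if q <= cutoff))


# Hypothetical data for illustration only (not results from this study).
print(block_percentages([0, 1, 0, 1, 1, 0, 1, 0, 1, 1] + [1] * 20))
scores = [percent_correct(c, i) for c, i in [(30, 10), (25, 12), (28, 9), (22, 14), (27, 8)]]
print(one_sample_t(scores))
print(binomial_two_sided(9, 10))
```

Reducing each bee's contacts to a single percentage before testing is what keeps the analysis free of pseudoreplication: the sample size for the t test is the number of bees, not the number of contacts.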

Data of all bees (above/below- and left/right-trained bees as well as color- and achromatic pattern-trained bees) were pooled for the analysis of test performance, as no significant differences were found between these conditions. Between-group and between-test comparisons were made by using t tests for independent and paired samples, respectively. The α level for all analyses was 0.05.
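The between-group and between-test comparisons rely on the independent-samples and paired-samples t statistics. A minimal sketch using Python's `statistics` module follows; the source does not say whether a pooled-variance (Student) or unequal-variance (Welch) version was used, so the pooled-variance form here is an assumption. The functions return the t statistic and its degrees of freedom; the p-value would then be read from the t distribution with those degrees of freedom, for example with a statistics package. The data are hypothetical.

```python
import math
from statistics import mean, stdev


def independent_t(x, y):
    """Student's t for two independent samples with pooled variance."""
    nx, ny = len(x), len(y)
    pooled = ((nx - 1) * stdev(x) ** 2 + (ny - 1) * stdev(y) ** 2) / (nx + ny - 2)
    t = (mean(x) - mean(y)) / math.sqrt(pooled * (1 / nx + 1 / ny))
    return t, nx + ny - 2


def paired_t(x, y):
    """t for paired samples, e.g. the same bees scored in two transfer tests."""
    if len(x) != len(y):
        raise ValueError("paired samples must have equal length")
    d = [a - b for a, b in zip(x, y)]
    return mean(d) / (stdev(d) / math.sqrt(len(d))), len(d) - 1


# Hypothetical per-bee percentages of correct choices (illustration only).
group_1 = [75.0, 68.0, 80.0, 72.0, 70.0]
group_2 = [71.0, 77.0, 69.0, 74.0, 73.0]
print(independent_t(group_1, group_2))
test_1 = [75.0, 68.0, 80.0, 72.0]
test_2 = [70.0, 66.0, 74.0, 71.0]
print(paired_t(test_1, test_2))
```

The paired version is appropriate for between-test comparisons because each bee contributes a score to both tests, so the analysis works on within-bee differences.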

Supplementary Material

Acknowledgments

We thank Tom Collett, Jochen Zeil, Lars Chittka, Lalina Muir, David Reser, and Shao-Wu Zhang for helpful criticisms and suggestions on previous versions of the manuscript. A.A.-W. and M.G. thank the French Research Council (Centre National de la Recherche Scientifique), the University Paul Sabatier (Project APIGENE), and the National Research Agency (Project Apicolor) for generous support. A.A.-W. was supported by a Travelling Fellowship of The Journal of Experimental Biology and by the fellowship “Actions Thématiques” of the University Paul Sabatier (ATUPS). A.G.D. acknowledges the Australian Research Council for Grants DP0878968 and DP0987989.

Footnotes

The authors declare no conflict of interest.

*This Direct Submission article had a prearranged editor.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1202576109/-/DCSupplemental.

References

- 1. Lamberts K, Shanks D. Knowledge, Concepts, and Categories. Cambridge, UK: Psychology Press; 1997.

- 2. Zayan R, Vauclair J. Categories as paradigms for comparative cognition. Behav Processes. 1998;42:87–99. doi: 10.1016/s0376-6357(97)00064-8.

- 3. Murphy GL. The Big Book of Concepts. Cambridge, MA: MIT Press; 2002.

- 4. Zentall TR, Wasserman EA, Lazareva OF, Thompson RKR, Rattermann MJ. Concept learning in animals. Comp Cogn Behav Rev. 2008;3:13–45.

- 5. Mareschal D, Quinn PC, Lea SEG. The Making of Human Concepts. New York: Oxford Univ Press; 2010.

- 6. Zentall TR, Galizio M, Critchfield TS. Categorization, concept learning, and behavior analysis: An introduction. J Exp Anal Behav. 2002;78:237–248. doi: 10.1901/jeab.2002.78-237.

- 7. Murphy GL. In: The Making of Human Concepts. Mareschal D, Quinn PC, Lea SEG, editors. New York: Oxford Univ Press; 2010. pp. 11–28.

- 8. Doumas LAA, Hummel JE, Sandhofer CM. A theory of the discovery and predication of relational concepts. Psychol Rev. 2008;115:1–43. doi: 10.1037/0033-295X.115.1.1.

- 9. Giurfa M. Behavioral and neural analysis of associative learning in the honeybee: A taste from the magic well. J Comp Physiol A. 2007;193:801–824. doi: 10.1007/s00359-007-0235-9.

- 10. Srinivasan MV. Honey bees as a model for vision, perception, and cognition. Annu Rev Entomol. 2010;55:267–284. doi: 10.1146/annurev.ento.010908.164537.

- 11. Avarguès-Weber A, Deisig N, Giurfa M. Visual cognition in social insects. Annu Rev Entomol. 2011;56:423–443. doi: 10.1146/annurev-ento-120709-144855.

- 12. Zhang S, Mizutani A, Srinivasan MV. Maze navigation by honeybees: Learning path regularity. Learn Mem. 2000;7:363–374. doi: 10.1101/lm.32900.

- 13. Zhang S, Srinivasan MV, Zhu H, Wong J. Grouping of visual objects by honeybees. J Exp Biol. 2004;207:3289–3298. doi: 10.1242/jeb.01155.

- 14. Benard J, Stach S, Giurfa M. Categorization of visual stimuli in the honeybee Apis mellifera. Anim Cogn. 2006;9:257–270. doi: 10.1007/s10071-006-0032-9.

- 15. Avarguès-Weber A, Portelli G, Benard J, Dyer AG, Giurfa M. Configural processing enables discrimination and categorization of face-like stimuli in honeybees. J Exp Biol. 2010;213:593–601. doi: 10.1242/jeb.039263.

- 16. Giurfa M, Zhang S, Jenett A, Menzel R, Srinivasan MV. The concepts of ‘sameness’ and ‘difference’ in an insect. Nature. 2001;410:930–933. doi: 10.1038/35073582.

- 17. Zhang S, Bock F, Si A, Tautz J, Srinivasan MV. Visual working memory in decision making by honey bees. Proc Natl Acad Sci USA. 2005;102:5250–5255. doi: 10.1073/pnas.0501440102.

- 18. Chittka L, Geiger K. Can honey bees count landmarks? Anim Behav. 1995;49:159–164.

- 19. Dacke M, Srinivasan MV. Evidence for counting in insects. Anim Cogn. 2008;11:683–689. doi: 10.1007/s10071-008-0159-y.

- 20. Gross HJ, et al. Number-based visual generalisation in the honeybee. PLoS ONE. 2009;4:e4263. doi: 10.1371/journal.pone.0004263.

- 21. Avarguès-Weber A, Dyer AG, Giurfa M. Conceptualization of above and below relationships by an insect. Proc Biol Sci. 2011;278:898–905. doi: 10.1098/rspb.2010.1891.

- 22. Chittka L, Niven J. Are bigger brains better? Curr Biol. 2009;19:R995–R1008. doi: 10.1016/j.cub.2009.08.023.

- 23. Avarguès-Weber A, de Brito Sanchez MG, Giurfa M, Dyer AG. Aversive reinforcement improves visual discrimination learning in free-flying honeybees. PLoS ONE. 2010;5:e15370. doi: 10.1371/journal.pone.0015370.

- 24. Ernst R, Heisenberg M. The memory template in Drosophila pattern vision at the flight simulator. Vision Res. 1999;39:3920–3933. doi: 10.1016/s0042-6989(99)00114-5.

- 25. van Hateren JH, Srinivasan MV, Wait PB. Pattern recognition in bees: Orientation discrimination. J Comp Physiol A. 1990;167:649–654.

- 26. Yang EC, Maddess T. Orientation-sensitive neurons in the brain of the honey bee (Apis mellifera). J Insect Physiol. 1997;43:329–336. doi: 10.1016/s0022-1910(96)00111-4.

- 27. Dépy D, Fagot J, Vauclair J. Processing of above/below categorical spatial relations by baboons (Papio papio). Behav Processes. 1999;48:1–9. doi: 10.1016/s0376-6357(99)00055-8.

- 28. Fagot J, Paleressompoulle D. Automatic testing of cognitive performance in baboons maintained in social groups. Behav Res Methods. 2009;41:396–404. doi: 10.3758/BRM.41.2.396.

- 29. Collett TS, Cartwright BA. Eidetic images in insects: Their role in navigation. Trends Neurosci. 1983;6:101–105.

- 30. Gould JL. How bees remember flower shapes. Science. 1985;227:1492–1494. doi: 10.1126/science.227.4693.1492.

- 31. Gould JL. Pattern learning by honey bees. Anim Behav. 1986;34:990–997.

- 32. Dill M, Wolf R, Heisenberg M. Visual pattern recognition in Drosophila involves retinotopic matching. Nature. 1993;365:751–753. doi: 10.1038/365751a0.

- 33. Gonzalez RC, Woods RE. Digital Image Processing. International Ed. Upper Saddle River, NJ: Prentice Hall; 2008.

- 34. Biederman I. Recognition-by-components: A theory of human image understanding. Psychol Rev. 1987;94:115–147. doi: 10.1037/0033-295X.94.2.115.

- 35. Cave CB, Kosslyn SM. The role of parts and spatial relations in object identification. Perception. 1993;22:229–248. doi: 10.1068/p220229.

- 36. Kirkpatrick K. Object recognition. In: Cook RG, editor. Avian visual cognition. 2001. Available at www.pigeon.psy.tufts.edu/avc/kirkpatrick.

- 37. Menzel R, et al. Honey bees navigate according to a map-like spatial memory. Proc Natl Acad Sci USA. 2005;102:3040–3045. doi: 10.1073/pnas.0408550102.

- 38. Chittka L, Jensen K. Animal cognition: Concepts from apes to bees. Curr Biol. 2011;21:R116–R119. doi: 10.1016/j.cub.2010.12.045.

- 39. Rind FC. In: Methods in Insect Sensory Neurosciences. Christensen TA, editor. Boca Raton, FL: CRC Press; 2004. pp. 213–235.

- 40. Srinivasan MV. Honeybees as a model for the study of visually guided flight, navigation, and biologically inspired robotics. Physiol Rev. 2011;91:413–460. doi: 10.1152/physrev.00005.2010.

- 41. Giurfa M, et al. Pattern learning by honeybees: Conditioning procedure and recognition strategy. Anim Behav. 1999;57:315–324. doi: 10.1006/anbe.1998.0957.

- 42. Srinivasan MV, Lehrer M. Spatial acuity of honeybee vision and its spectral properties. J Comp Physiol A. 1988;162:159–172.

- 43. Giurfa M, Vorobyev M, Kevan P, Menzel R. Detection of coloured stimuli by honeybees: Minimum visual angles and receptor specific contrasts. J Comp Physiol A. 1996;178:699–709.

- 44. Backhaus W. Color opponent coding in the visual system of the honeybee. Vision Res. 1991;31:1381–1397. doi: 10.1016/0042-6989(91)90059-e.

- 45. Chittka L. The colour hexagon: A chromaticity diagram based on photoreceptor excitations as a generalized representation of colour opponency. J Comp Physiol A. 1992;170:533–543.
