Abstract

We consider the problem of dichotomizing a continuous covariate when performing a regression analysis based on a generalized estimation approach. The problem involves estimation of the cutpoint for the covariate and testing the hypothesis that the binary covariate constructed from the continuous covariate has a significant impact on the outcome. Due to the multiple testing used to find the optimal cutpoint, we need to make an adjustment to the usual significance test to preserve the type-I error rates. We illustrate the techniques on one data set of patients given unrelated hematopoietic stem cell transplantation. Here the question is whether the CD34 cell dose given to patient affects the outcome of the transplant and what is the smallest cell dose which is needed for good outcomes.

Keywords: Dichotomized outcomes, Generalized estimating equations, Generalized linear model, Pseudo-values, Survival analysis

1. Introduction

In many medical studies it is of interest to investigate the relationship between explanatory variables, such as prognostic factors, treatment factors or patient characteristics, and the outcome. The outcome may be continuous, such as the time to some event or the level of some assayed enzyme, or it may be a discrete outcome, such as an indicator of relapse or death. We may also be interested in state occupation probabilities in multistate processes modeled via a pseudo-observation approach applied to censored data as discussed in, for example, Andersen et al. (2003). In any case, regression models based on generalized estimating equations (GEEs) (McCullagh and Nelder, 1989; Liang and Zeger, 1986) can be applied to examine the effects of covariates on the outcome.

In many cases the covariates of interest are continuous in nature. While they could be modeled as such, many clinicians find the interpretation of such covariates difficult and they prefer to model these effects as categorical or binary covariates reflecting different prognostic groups of patients based on the measured value of the continuous covariate. In other cases, the covariate may represent the dose of some drug or some other therapeutic agent, and the clinician is interested in identification of a therapeutic threshold. Even though evaluation of a variable’s prognostic value is best done with the variable in its continuous form and there is a possible loss of information when categorizing a continuous covariate, the need for thresholds for clinical use and treatment decisions justifies the development of appropriate statistical methods for finding optimal cutpoints. Methodologies for finding optimal cutpoints are also needed and used in various tree building algorithms (Lausen et al., 2004).

When discretizing a continuous covariate and then testing for the covariate effect, a number of techniques can be used. One group of techniques relies on the investigator to provide cutpoints based on historical data or it uses cutpoints based on a split into groups at a predetermined percentile of the continuous covariate. Another approach is to let the data decide on the cutpoint and then perform the test of the covariate effect on the two resulting groups. In most cases the continuous covariate in this approach is split into groups based on either the largest value of the likelihood or the largest value of some two-sample test statistic after a search of possible cutpoints. This selection of the largest likelihood or test statistic leads to an inflated type-I error due to multiple testing, so some correction needs to be applied to obtain the correct type-I error.

A data-dependent cutpoint selection and test adjustment has been proposed for survival data by a number of authors. The approach is based on a Cox proportional hazards model with a single covariate defined as 1 or 0 depending on the value of the continuous covariate. Jespersen (1986) based an adjusted test on the maximum value of the score statistic from the Cox model which suitably standardized converges to a Brownian bridge under the null hypothesis. Contal and O’Quigley (1999) modify the log rank test statistic and show that the process consisting of the score statistic using cutpoints at the order statistics of the continuous covariates converges to a Brownian bridge process. Lausen and Schumacher (1992, 1996) showed that for any standardized test statistic C (δ) for the two-sample problem with groups defined by a threshold parameter, δ, the following convergence result holds:

Here X(m) is the mth-order statistic of the continuous covariate and W0 is a standard Brownian bridge process. Klein and Wu (2004) compare these estimators and extend the Contal and O’Quigley approach to the accelerated failure time model and the Cox model with additional covariates.

In this note we examine these data-driven methods in a generalized linear model framework. We are particularly interested in using these techniques in pseudo-observation regression problems. The pseudo-observation approach has been suggested as a method for direct censored data regression modeling for the survival function (Logan et al., 2008), for the cumulative incidence function (Klein and Andersen, 2005), for the mean survival time (Andersen et al., 2004), for multistate probabilities (Andersen et al., 2003) and for mean quality of life (Andrei and Murray, 2007). In this approach, pseudo-observations are formed as the difference between the full sample and leave-one-out estimator based on an approximately unbiased estimator of the parameter of interest. These pseudo-observations are then used in a GEE model.

The methods we developed will be illustrated using data from a study from the Center for Blood and Marrow Transplantation Registry. In this study 709 patients with Acute Myeloid Leukemia (n = 395) or Acute Lymphocytic Leukemia (n = 397) in first (n = 204) or second (n = 488) complete remission were given a Bone Marrow Transplant (BMT) from an unrelated donor transplant. The cell source for all the transplants was bone marrow. The research question of interest was: “What level of CD34 cells in the graft is needed to lower the death in remission rates and is there a threshold dose of CD34 cells to affect the relapse rates?”. The questions require an estimation of the threshold dose level of CD34 cells and a comparison of the “high” and “low” CD34 dose groups for death in remission and relapse cumulative incidence probabilities.

2. Techniques for the generalized linear model and generalized estimating equations with a single covariate

In this section we show two approaches to discretizing a continuous covariate and testing for its significance based on the generalized estimating equation approach. Here we assume that the response for individual i, i = 1, …, n, is possibly multivariate and is denoted by Yi = (Yi1, …, YiJ)t. We allow for the Y to be continuous or discrete outcomes. Let μi = E[Yi] = (μi1, …, μiJ)t be the mean vector for individual i. We assume a generalized linear model (GLM) framework, so that the distribution of the Yij belongs to the same family with mean μij and common dispersion parameter ϕ (Liang and Zeger, 1986). Also, let Xi be the continuous covariate associated with individual i. We assume that the mean and the dichotomized covariate, Zδ, are related by

where g(·) is a known link function, αj for j = 1, …,J, δ and γ are unknown parameters and is the dichotomized covariate obtained from X, namely

| (1) |

We are interested in estimating the threshold parameter δ as well as the inference on the parameter γ. For a given δ, estimates of parameters can be based on the generalized estimating equations (GEEs) suggested by Liang and Zeger (1986):

| (2) |

where β = (γ, α1, …, αj)T and Vi is a working covariance matrix. GEE estimates of β, β̂ are found by solving Eq. (2). A sandwich estimator can be used to estimate the covariance matrix of β:

where

and

Standard packages such as GEESE in R or PROC GENMOD in SAS can be used to obtain estimates and their variances for a given δ.

In our setup, we are interested on the hypothesis H0 : γ = 0. One test that can be used is the Wald test given by , which has a standard normal distribution when H0 is true and δ is known a priori. A second test is the generalized score test (Boos, 1992). For the two-sample problem with no covariates, Liu et al. (2008) show that the score statistic for the test H0 : γ = 0 is given by

where n0 is the number of observations with , n1 is the number of observations with is the estimator of αj under the null hypothesis, . Under the null hypothesis, has a chi-square distribution with one degree of freedom when δ is known a priori.

In order to estimate δ, one common approach is to compute either for values of δ in some range and pick up the value that maximizes . This procedure is appropriate for estimating δ, but an adjustment must be made in order to make an inference for γ and preserve the type-I error rate.

The first adjustment that can be made is a GEE version of the approach in Lausen and Schumacher (1992, 1996). Both the generalized score statistic and the Wald statistic can be used. In order to apply this approach, the possible range of threshold values must be restricted to [X(nε); X(n(1−ε))], where X(k) is the kth-order statistic of the continuous variable and 0 < ε < 0.5. Let C (δ) be the test statistic for the two-sample problem with groups defined by δ. It can be shown that

as n → ∞, where W0 is a Brownian bridge. Details of the proof can be found in Lausen and Schumacher (1992) and Billingsley (1968, chap. 4). Miller and Siegmund (1982) show that, for b > 0,

where φ(·) is the standard normal density function. This result motivates the following approximation for a corrected p-value:

where z = Φ−1(1 − p/2), Φ(·) is the standard normal cumulative distribution function and p is the unadjusted p-value computed at the maximum of the test statistic. Notice that the approximation is valid for p < 0.5. Inferences for γ can be made by computing the corrected p-value for the maximum value of the test statistic.

The second approach considered here is a modification of the method in Contal and O’Quigley (1999). To construct the test statistic, recall that, for the two-sample problem without covariates, assuming that the working covariance matrix is the identity matrix, the score function can be written as

where is the linear predictor and μij = g−1(ηij). Let

| (3) |

be the score evaluated under the null hypothesis.

Billingsley (1999, pg. 196) proved the following theorem.

Theorem 1. Let {ξ1, ξ2, …} be a stationary and ergodic process for which its conditional expected value E(ξi|ξ1, …, ξi−1) is equal to zero with probability 1 and for which the expected value is positive and finite. Define

If

for p ∈ [0, 1]; then Sn(p) ⇒ W(p) as n → ∞, where W(p) is a Brownian motion process.

In our setup, in order to apply this result, we must first put the data in increasing order with respect to the continuous covariate X. Now, with the ordered data, define

and assume that Yi⊥Yi′. With this assumption, we can then see that the condition E(ξi|ξ1, …, ξi−1) = 0 is verified. It is important to notice that we are assuming that individuals are independent, but observations within the same individual may be correlated. Also, under the null hypothesis H0 : γ = 0, recalling that we are assuming a common dispersion parameter for distribution of the responses, we have that the Yi are independent and identically distributed (i.i.d.), so the ξi are also independent and identically distributed. Therefore, the ξi are stationary and ergodic. Finally, under the null hypothesis, we have

where VY is the covariance matrix of Yi and ġ is the vector of elements , which is finite. With the ordered data, it is clear that there is a value δp for which . In fact, any value of δp between X([np]) and X([np]+1) satisfies , so the midpoint can be used. If we define

we have Sn(p) ⇒ W(p) as n → ∞. Also, the process defined by Sn(p) − pSn(1) converges to a Brownian bridge (O’Quigley, 2008, pg. 245).

In practice, we replace the unknown quantities in the ξi by consistent estimators under the null, so we work with Ŝn(p). Following O’Quigley (2008, pg. 236), also based on Slutsky’s theorem, the large sample properties of the resulting test statistic will not be affected. Also, in general, the values of αj and υ2 are not known and we substitute by their estimators. The parameters αj can be estimated using the estimating equations under the null hypothesis, and for υ2 we can use the sample variance . Hence

| (4) |

Therefore, because Ŝn(1) = 0, we have that Ŝn(p) = Ŝn(p) − pŜn(1) (Klein and Wu, 2004) and, finally, Ŝn(p) ⇒ W0(p) as n → ∞, where W0(p) is a Brownian bridge. It has been shown that the distribution of the extreme value of the Brownian bridge (Billingsley, 1999, pg. 103) is given by

| (5) |

If we order the ξ by the continuous covariate for which we want to find a cutpoint, under the null hypothesis this sequence is formed by i.i.d. observations and the sequence of Ŝn(p) constructed with the ξ ordered converges to a Brownian bridge. We can then use as test statistic the maximum of Ŝn(p), p ∈ [0, 1]. If the null hypothesis is rejected, we estimate as cutpoint any value between X(m) and X(m+1), where X(m) is the mth value of the ordered Z and m = np*, where p* is the value of p for which |Ŝn(p)| is maximum. An important remark that should be made here is regarding the use of pseudo-observations. Although the pseudo-observations are computed based on a jackknife technique (and, therefore, they are computed based on (n − 1) observations), it can be shown that under some conditions they can be approximated by independent and identically distributed variables (Graw et al., 2009), so the results for the ξ remain valid.

3. The multiple covariate case

In the previous section, we considered the situation with only a single covariate in the model. However, in most practical applications there are other covariates, and we now discuss the extension to allow for many covariates in the model. The test statistic remains the same, so we discuss it here briefly.

As before, assume that Yij is the outcome for the jth observation on individual i, j = 1, …,J and i = 1, …, n. Also, let Xi be the continuous covariate associated with individual i and be a vector of other covariates. We assume that the mean and the covariates are related by

where g(·) is a known link function, αj for j = 1, …,J, γ and β* are the unknown parameters and is the categorized covariate given by (1).

As before, we are interested in finding the cutpoint δ and testing the hypothesis about γ. For a given δ, denote by β = (γ, α1, α2, …, αJ, β*T)T the vector of all unknown parameters. The estimating equations are given by (2) and the variance of estimates can be estimated by the sandwich estimator.

In order to test the hypothesis H0 : γ = 0, the usual Wald test or the generalized score test (Boos, 1992) can be used. The Wald test can be obtained easily using any standard package for GEEs. In order to derive an expression for the generalized score test, using an independence working covariance matrix Vi = I, we rewrite the score Eq. (2) as

where Zi is the (J × p) matrix of covariates (design matrix) of the ith observation, i.e., it is the augmented matrix given by , p is the number of rows of the parameter vector β, 1J is the vector with J rows of 1s, IJ×J is the identity matrix and Ġi = diag(ġ(ηij)) is the diagonal matrix of derivatives . The score test for H0 : γ = 0 is based on the first element of U(β) and the expression is exactly the same as that obtained for the single covariate case. As before, we must order the data increasing on the continuous covariate X and let be defined as in (3). We assume that the Yi are independent random variables with mean μi and the same covariance matrix Σ, so the (Yi − μi) are also independent with mean zero and common covariance matrix Σ. Now suppose we have covariates which constitute an i.i.d. sample from a distribution P(·). The random vectors Ġi(Yi − μi) are, therefore, i.i.d. with zero mean and variance EP(ĠiΣĠi). This allows us to apply the same results as in the situation without any covariates. Therefore, we can compute the sequence of Ŝn(p) given by (4) and the test statistic is given by supp∈[0, 1] |Ŝn(p)|, with distribution given by (5).

4. Monte Carlo study

A simulation study was designed to compare the performance of the different test procedures in terms of their type-I error rate and power. We constructed the simulation for correlated observations, and J-variate normal random variables were generated for each subject, J = 2 or 4. We took the means to be

| (6) |

for i = 1, …, n, j = 1, …, J. Here the J variables had a common correlation of ρ = 0, 0.25, or 0.5. The z-values used in generating the T-values were obtained by dichotomizing a continuous covariate X taken to be uniform [−1, 1]. Under the alternative hypothesis we used cutpoints of zero and 0.2. Total samples of size n = 50, 100, 200 and 400 were examined. Ten thousand samples were generated for each combination of γ, δ, ρ, J and n.

We computed the Wald and score test statistics along with both uncorrected and corrected P-values, and the sup score test statistic. We used ε = 0, 0.1, 0.15, 0.2 and 0.25 to restrict the range of possible values for the cutpoint. Although this restriction is not required for the new test statistic, we also computed the new test statistic using this range restriction.

Since we have a large number of scenarios, we applied analysis of variance (ANOVA) techniques to summarize the results. For the type-I error rate, we defined the outcome, R, as the percentage rejection rate minus the nominal rate of 5%, so good performance is indicated by values of the expectation of R near 0. Negative values indicate a conservative test procedure and positive vales indicate that the test procedure inflates the type-I error. The first model fit to our results has the following effects:

| (7) |

When fitting the model without an intercept and normalizing the effects of the other factors to have a sum of zero, the estimates for the interaction terms have the interpretation as average deviations from the nominal level of 5% adjusted for the effects of the other factors. Table 1 compares the size of the tests by values of ε. The results on Table 1 show clearly that an adjustment must be made because there is an enormous inflation of type-I error rate for both the uncorrected Wald and score test statistics. The adjusted Wald statistic also inflates the type-I error rate a little bit, but the inflation gets smaller when we increase the value of ε. The score test statistic is conservative, and we can also see that the type-I error gets closer to 5% when we move ε towards 0.5. The sup score test statistic is still conservative, but has a type-I error closer to the nominal one when compared to the other test statistic.

Table 1.

Average deviations from nominal 5% level for four tests adjusted using the ANOVA model (7).

| Test statistic | Value of ε | ||||

|---|---|---|---|---|---|

| 0 (entire range) | 0.1 | 0.15 | 0.2 | 0.25 | |

| Wald | 94.48 | 44.18 | 36.10 | 29.92 | 24.80 |

| Adjusted Wald | – | 3.17 | 1.46 | 0.64 | 0.28 |

| Score | 44.36 | 35.71 | 30.76 | 26.23 | 22.11 |

| Adjusted score | – | −2.46 | −2.06 | −1.72 | −1.49 |

| Sup score | −1.39 | −1.39 | −1.39 | −1.42 | −1.52 |

Table 2 examines, using appropriate ANOVA models, the effect of sample size, number of points and correlation on the size of the three adjusted tests. In Table 2, in the first model fitted we see that the Wald test is anti-conservative for small samples while the other two tests are conservative. For moderate samples the test based on the sup scores seems to perform the best. The models for the number of time points and the correlation ρ in Table 2 suggest that the results hold regardless of the dimension of Y or the correlation.

Table 2.

Average deviations from nominal 5% level for three tests adjusted using ANOVA models.

| Factor | Test statistic | |||

|---|---|---|---|---|

| Adjusted Wald | Adjusted score | Sup score | ||

| Sample size | 50 | 4.01 | −3.07 | −2.14 |

| 100 | 1.36 | −2.17 | −1.64 | |

| 200 | 0.31 | −1.46 | −1.12 | |

| 400 | −0.13 | −1.04 | −0.82 | |

| Number of time points | 2 | 1.36 | −1.96 | −1.44 |

| 4 | 1.41 | −1.91 | −1.42 | |

| Correlation ρ | 0.00 | 1.42 | −1.87 | −1.37 |

| 0.25 | 1.29 | −2.01 | −1.52 | |

| 0.50 | 1.49 | −1.94 | −1.41 | |

| 0.75 | 1.34 | −1.92 | −1.43 | |

To study the power of the tests we simulated as described above with γ = 0.25 or 0.5 and δ = 0 or 0.5. ANOVA methods on the percent rejections, R, are used to summarize the data. Table 3, based on the ANOVA model

| (8) |

shows that the power of the tests is not affected by the choice of ε. We see in Table 4 that the power increases as the sample size increases and as the dimensionality of Y increases. Also in Table 4, we see no difference in power for the two true cutpoints. In all tables the adjusted Wald statistic has the highest power. However, since it was anti-conservative this does not suggest that it is the best statistic to use. The new sup score statistic performs quite well and we suggest its use.

Table 3.

Power results for three tests adjusted using the ANOVA model (8).

| True β | Test statistic | Value of ε | ||||

|---|---|---|---|---|---|---|

| 0 (entire range) | 0.1 | 0.15 | 0.2 | 0.25 | ||

| 0.25 | Adjusted Wald | – | 43.26 | 43.29 | 44.01 | 44.85 |

| Adjusted score | – | 34.42 | 36.66 | 38.67 | 40.47 | |

| Sup score | 41.98 | 41.98 | 41.97 | 41.94 | 41.79 | |

| 0.50 | Adjusted Wald | – | 80.51 | 80.72 | 81.24 | 81.81 |

| Adjusted score | – | 72.37 | 74.27 | 75.91 | 77.40 | |

| Sup score | 78.77 | 78.77 | 78.77 | 78.76 | 78.69 | |

Table 4.

Power results for three tests adjusted using the ANOVA models.

| Effect | True β = 0.25 | True β = 0.50 | |||||

|---|---|---|---|---|---|---|---|

| Adjusted Wald | Adjusted score | Sup score | Adjusted Wald | Adjusted score | Sup score | ||

| Sample size | 50 | 20.45 | 8.57 | 12.06 | 51.87 | 34.88 | 42.89 |

| 100 | 29.05 | 21.39 | 26.04 | 76.70 | 70.27 | 75.76 | |

| 200 | 49.21 | 45.04 | 50.29 | 95.80 | 94.91 | 96.40 | |

| 400 | 76.70 | 75.23 | 79.29 | 99.90 | 82.91 | 90.92 | |

| Number of time points | 2 | 39.10 | 32.95 | 37.25 | 78.00 | 71.41 | 75.47 |

| 4 | 48.61 | 42.16 | 46.59 | 84.14 | 78.57 | 82.03 | |

| True cutpoint | 0.0 | 44.47 | 38.31 | 43.08 | 81.54 | 75.72 | 79.63 |

| 0.2 | 43.23 | 36.80 | 40.75 | 80.60 | 74.26 | 77.86 | |

| Correlation ρ | 0 | 57.49 | 50.82 | 55.27 | 90.41 | 85.65 | 88.57 |

| 0.25 | 46.59 | 40.00 | 44.60 | 83.79 | 77.68 | 81.42 | |

| 0.5 | 38.34 | 32.25 | 36.62 | 77.72 | 71.08 | 75.17 | |

| 0.75 | 32.98 | 27.15 | 31.19 | 72.36 | 65.55 | 69.83 | |

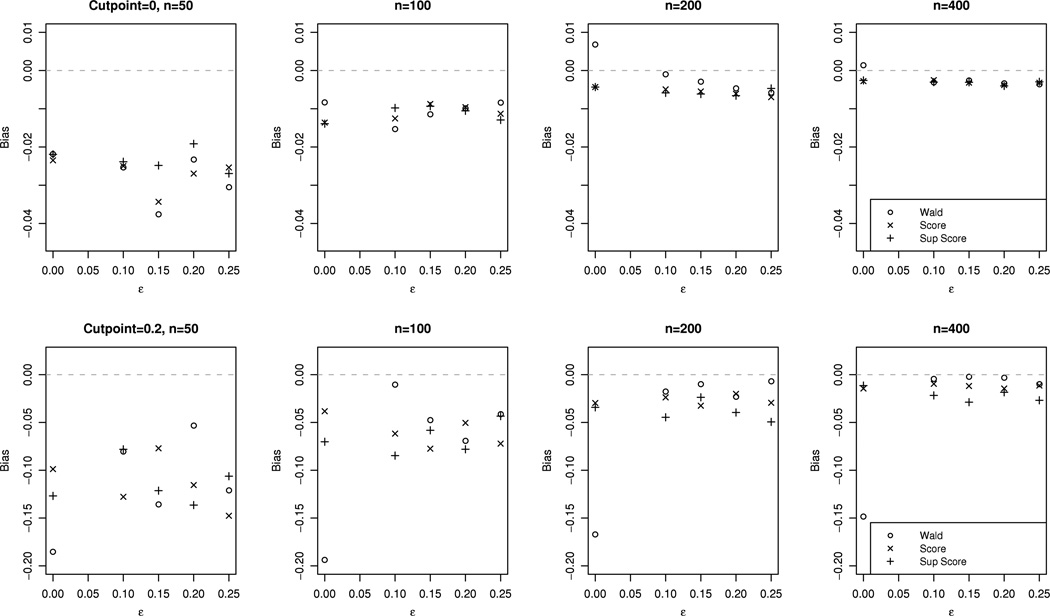

Finally, we show some results for the cutpoint estimates. Fig. 1 shows the mean bias for different sample sizes and different cutpoints. The results suggest small bias for all three procedures, with a smaller standard error for the sup score method. We also conducted a simulation study with data generated with a different link function and different values for the β in the linear predictor of the mean function and different cutpoints. The conclusions are similar to the ones shown here, so we do not include the results here.

Fig. 1.

Mean bias for cutpoint estimates.

5. An application to analysis of survival data based on pseudo-values

We illustrate the use of these techniques using data on 708 patients given an unrelated donor bone marrow transplant for acute leukemia. The question of interest is the effect of the number of CD34 cells in the donor marrow on the incidence of relapse and death in remission. Clinicians would like to know if there is a minimum cell dose beyond which patients have either lower relapse or less death in remission.

To examine the threshold CD34 count question for these two competing risks we use the pseudo-observation method of Klein and Andersen (2005). In this approach for relapse we compute at a grid of time points τj and the pseudo-observations, Yij, are based on the difference between the full sample estimate of the cumulative incidence of relapse and the leave-one-out estimator of the cumulative incidence. This difference or pseudo-observation is used in a generalized estimating equation to study the covariate effects on the relapse cumulative incidence function. In the example we use j = 0.5, 1, 2 and 3 years post transplant and a complementary log–log link function. Note that once the pseudo-observations are computed the censored data competing risks problem has been reduced to a GEE problem. Of course, we have similar pseudo-observations for the death in remission probability.

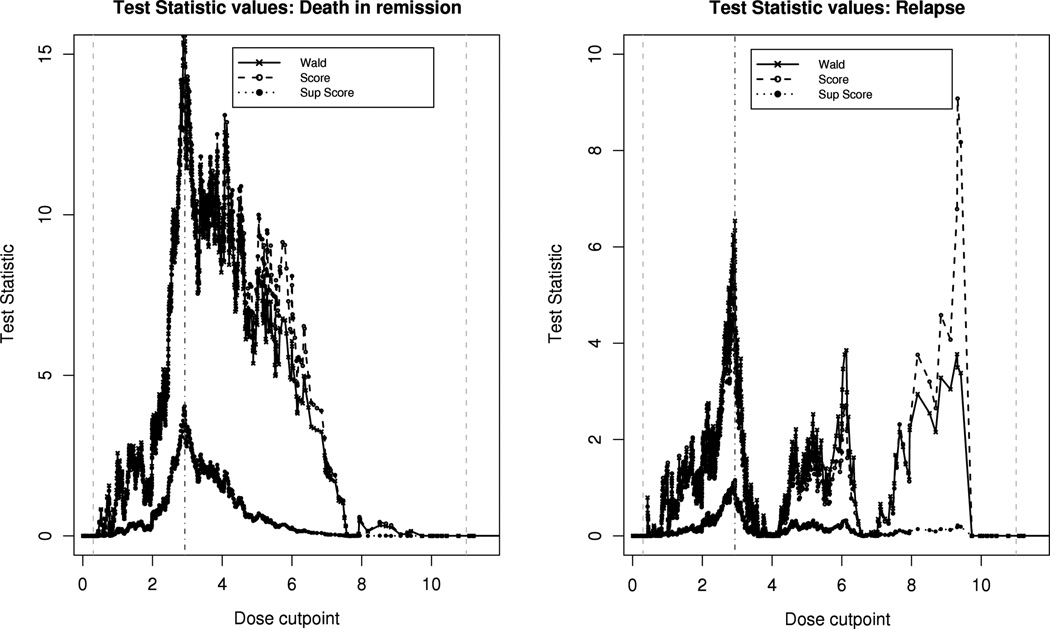

Fig. 2 shows the value of the three statistics. All statistics are adjusted for the patient’s age, Karnofsky performance score and the degree of HLA matching. Here we see that the optimal cutpoint for both relapse and death in remission is at 2.93 × 106 CD34 cells. Table 5 shows the unadjusted and adjusted tests for the effect of CD34 count. We see that if no adjustment is made for the estimation of the cutpoint that all tests are significant at the 5% level, while the more appropriate adjusted tests suggest that the CD34 cell count is only important for death in remission. In Table 6 we present the parameter estimates which shows that patients with low CD34 counts have significantly more death in remission.

Fig. 2.

Test statistic values for the bone marrow data example.

Table 5.

Cutpoints for the bone marrow data example, model for death in remission and relapse with covariates age, group and KPS.

| Outcome | Test statistic | Cutpoint selected | Value of test statistic | Unadjusted P-value | Adjusted P-value |

|---|---|---|---|---|---|

| Death in remission | Wald | 2.93 | 16.40 | < 0.0001 | 0.0019 |

| Score | 2.93 | 16.75 | < 0.0001 | 0.0016 | |

| Sup score | 2.93 | 3.88 | – | 0.0006 | |

| Relapse | Wald | 2.93 | 6.54 | 0.0105 | 0.1680 |

| Score | 2.93 | 5.11 | 0.0237 | 0.3017 | |

| Sup score | 2.93 | 1.16 | – | 0.1962 |

Table 6.

Parameters estimates for the bone marrow data example, death in remission and relapse.

| Parameter | N | Death in remission | Relapse | |||||||

|---|---|---|---|---|---|---|---|---|---|---|

| Estimative | Standard error |

Test statistic |

P- value |

Estimative | Standard error |

Test statistic |

P-value | |||

| Intercept | −2.18 | 0.27 | 66.26a | <0.0001 | −0.63 | 0.22 | 8.35a | 0.0039 | ||

| 9.69b | 0.0079 | 1.81b | 0.4037 | |||||||

| Match | 8/8 | 442 | – | – | – | – | – | – | – | – |

| 7/8 | 234 | 0.13 | 0.14 | 0.86a | 0.3502 | −0.04 | 0.17 | 0.06a | 0.8046 | |

| 6/8 | 113 | 0.51 | 0.17 | 9.67a | 0.0019 | −0.33 | 0.25 | 1.82a | 0.1786 | |

| 41.85c | <0.0001 | 13.35c | 0.0203 | |||||||

| Age | <10 years | 170 | – | – | – | – | – | – | – | – |

| 10–19 years | 161 | 1.37 | 0.26 | 26.83a | <0.0001 | −0.36 | 0.23 | 2.53a | 0.1123 | |

| 20–29 years | 154 | 1.18 | 0.27 | 19.18a | <0.0001 | −0.29 | 0.23 | 1.64a | 0.2004 | |

| 30–39 years | 102 | 1.45 | 0.28 | 27.67a | <0.0001 | −0.01 | 0.25 | 0.00a | 0.9568 | |

| 40–49 years | 146 | 1.64 | 0.26 | 39.44a | <0.0001 | −0.68 | 0.27 | 6.25a | 0.0123 | |

| ≥ 50 years | 56 | 1.39 | 0.31 | 20.25a | <0.0001 | −1.07 | 0.40 | 6.97a | 0.0082 | |

| 3.01b | 0.2220 | 2.22b | 0.3928 | |||||||

| Karnofsky score | 0–90 | 162 | – | – | – | – | – | – | – | – |

| > 90 | 579 | −0.17 | 0.15 | 1.35a | 0.2449 | −0.26 | 0.18 | 2.04a | 0.1524 | |

| Missing | 48 | −0.46 | 0.28 | 2.69a | 0.1009 | −0.07 | 0.34 | 0.04a | 0.8472 | |

| Dose | > 2.93 | 407 | – | – | – | – | – | – | – | – |

| ≤ 2.93 | 382 | 0.47 | – | 3.88d | 0.0006 | −0.43 | – | 1.16d | 0.1962 | |

χ2 distributed, 1 degree of freedom.

χ2 distributed, 2 degrees of freedom.

χ2 distributed, 5 degrees of freedom.

Sup score test.

6. Discussion

We have proposed a new test statistic that can be used to estimate a threshold value and also to make inference about the regression coefficient associated with the categorized covariate for generalized linear models and generalized estimating equations. We compared our test statistic with the Wald and score tests statistics as well as their corrected versions. Our simulations show clearly that adjustments must be made when making inference for the regression parameter after a cutpoint is selected because the type-I error rates are extremely high when no correction is used. The adjusted Wald test still inflates the type-I error a little while the adjusted score test statistic is more conservative. Our proposed test statistic is conservative, but with type-I error probability closer to the desired one. Also, the method provides good estimates of the true cutpoint when a threshold model is correct.

Supplementary Material

Acknowledgements

Professor Tunes-da-Silva’s work was supported by Fundação de Amparo à Pesquisa do Estado de São Paulo – FAPESP, Brazil, grant number 2007/02823-3. Professor Klein’s work was supported by a grant from the National Cancer Institute (R01 CA54706-14).

Footnotes

Appendix. Supplementary data

Supplementary data associated with this article can be found, in the online version, at doi:10.1016/j.csda.2010.02.016.

References

- Andersen PK, Hansen MG, Klein JP. Regression analysis of restricted mean survival time based on pseudo-observations. Lifetime Data Analysis. 2004;10:335–350. doi: 10.1007/s10985-004-4771-0. [DOI] [PubMed] [Google Scholar]

- Andersen PK, Klein JP, Rosthoj S. Generalized linear models for correlated pseudo-observations, with applications to multi-state models. Biometrika. 2003;90:15–27. [Google Scholar]

- Andrei A-C, Murray S. Regression models for the mean of the quality-of-life-adjusted restricted survival time using pseudo-observations. Biometrics. 2007;63:398–404. doi: 10.1111/j.1541-0420.2006.00723.x. [DOI] [PubMed] [Google Scholar]

- Billingsley P. Convergence of Probability Measures. New York: Wiley and Sons; 1968. [Google Scholar]

- Billingsley P. Convergence of Probability Measures. 2nd ed. New York: Wiley and Sons; 1999. [Google Scholar]

- Boos D. On generalized score tests. The American Statistician. 1992;46(4):327–333. [Google Scholar]

- Contal C, O’Quigley J. An application of changepoint methods in studying the effect of age on survival in breast cancer. Computational Statistics and Data Analysis. 1999;30:253–270. [Google Scholar]

- Graw F, Gerds TA, Schumacher M. On pseudo-values for regression analysis in competing risks models. Lifetime Data Analysis. 2009;15:241–255. doi: 10.1007/s10985-008-9107-z. [DOI] [PubMed] [Google Scholar]

- Jespersen NCB. Dichotomizing a continuous covariate in the Cox regression model. Statistical Research Unit of University of Copenhagen, Research Report. 1986;86(2) [Google Scholar]

- Klein JP, Andersen PK. Regression modeling of competing risks data based on pseudovalues of the cumulative incidence function. Biometrics. 2005;61:223–229. doi: 10.1111/j.0006-341X.2005.031209.x. [DOI] [PubMed] [Google Scholar]

- Klein JP, Wu J-T. Discretizing a continuous covariate in survival studies. In: Balakrishnan N, Rao CR, editors. Handbook of Statistics 23: Advances in Survival Analysis. New York: Elsevier; 2004. pp. 27–42. [Google Scholar]

- Lausen B, Hothorn T, Bretz F, Schumacher M. Assessment of optimal selected prognostic factors. Biometrical Journal. 2004;46(3):364–374. [Google Scholar]

- Lausen B, Schumacher M. Maximally selected rank statistics. Biometrics. 1992;48:73–85. [Google Scholar]

- Lausen B, Schumacher M. Evaluating the effect of optimized cutoff values in the assessment of prognostic factors. Computational Statistics and Data Analysis. 1996;21:307–326. [Google Scholar]

- Liang K-Y, Zeger SL. Longitudinal data analysis using generalized linear models. Biometrika. 1986;78:13–22. [Google Scholar]

- Liu L, Logan B, Klein JP. Inference for current leukemia free survival. Lifetime Data Analysis. 2008;14:432–446. doi: 10.1007/s10985-008-9093-1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Logan BR, Klein JP, Zhang MJ. Comparing treatments in the presence of crossing survival curves: an application to bone marrow transplantation. Biometrics. 2008;64(3):733–740. doi: 10.1111/j.1541-0420.2007.00975.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- McCullagh P, Nelder J. Generalized Linear Models. London: Chapman and Hall; 1989. [Google Scholar]

- Miller R, Siegmund D. Maximally selected chi square statistics. Biometrics. 1982;38:1011–1016. [Google Scholar]

- O’Quigley J. Proportional Hazards Regression. New York: Springer; 2008. [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.