Abstract

Benchmarking, a management approach for implementing best practices at best cost, is a recent concept in the healthcare system. The objectives of this paper are to better understand the concept and its evolution in the healthcare sector, to propose an operational definition, and to describe some French and international experiences of benchmarking in the healthcare sector. To this end, we reviewed the literature on this approach's emergence in the industrial sector, its evolution, its fields of application and examples of how it has been used in the healthcare sector.

Benchmarking is often thought to consist simply of comparing indicators and is not perceived in its entirety, that is, as a tool based on voluntary and active collaboration among several organizations to create a spirit of competition and to apply best practices. The key feature of benchmarking is its integration within a comprehensive and participatory policy of continuous quality improvement (CQI). Conditions for successful benchmarking focus essentially on careful preparation of the process, monitoring of the relevant indicators, staff involvement and inter-organizational visits.

Compared to methods previously implemented in France (CQI and collaborative projects), benchmarking has specific features that set it apart as a healthcare innovation. This is especially true for healthcare or medical–social organizations, as the principle of inter-organizational visiting is not part of their culture. Thus, this approach will need to be assessed for feasibility and acceptability before it is more widely promoted.


Benchmarking is usually considered to be a process of seeking out and implementing best practices at best cost. This pursuit of performance is based on collaboration among several organizations. The basic principle of benchmarking consists of identifying a point of comparison, called the benchmark, against which everything else can be compared.

Introduced by the Xerox company in an effort to reduce its production costs, benchmarking methods spread throughout the industrial sector in the 1980s and underwent several changes that, upon analysis, are highly instructive. First used as a method for comparing production costs with those of competitors in the same sector, benchmarking later became conceptualized and used as a method for continuous quality improvement (CQI) in any sector.

For more than 10 years now, performance has been a major issue for the healthcare system. This is due to three factors: the imperative to control healthcare costs; the need to structure the management of risk and of quality of care; and the need to satisfy patients' expectations. These demands have spurred many national and international projects to develop and compare indicators. The term benchmarking emerged within the context of this comparison process. Subsequently, though not necessarily as a continuous development, the concept of benchmarking was defined more narrowly as the analysis of processes and of the success factors that produce higher levels of performance. Finally, benchmarking was directed towards the pursuit of best practices in order to satisfy patients' expectations (Ellis 2006). Today, however, the term is often diluted by being limited to a simple comparison of outcomes, whereas benchmarking should go further and promote discussion among front-line professionals about their practices, so as to stimulate cultural and organizational change within the organizations being compared.

As part of the BELIEVE research project (BEnchmarking, LIens Visites Établissements de santé – benchmarking, links, visits, healthcare institutions) (CCECQA 2010) funded by France's Haute autorité de santé (HAS – National Health Authority), the Comité de coordination de l'évaluation clinique et de la qualité en Aquitaine (CCECQA – Coordinating Committee for Clinical and Quality Evaluation in Aquitaine, a regional quality and safety centre) has developed a benchmarking method that it is currently testing in 32 organizations in the Aquitaine region. In that context, it conducted this literature review with three objectives in mind:

- to describe the concept of benchmarking and its evolution;
- to propose an operational definition of benchmarking in healthcare as well as its key stages;
- to describe some experiences illustrating how benchmarking has been used in healthcare.

Methods

Documentary search

To better understand how the concept has evolved and how it is currently defined, we decided to extend the boundaries of the literature review to encompass all sectors. For the analysis of experiences, our survey of the healthcare sector literature was non-exhaustive, given the abundance of literature and the fact that the term benchmarking is used differently in different activity sectors and even within a single sector. This documentary search was carried out between December 2009 and January 2010.

In the first phase of this search, our aim was to identify concepts, models and definitions of benchmarking and its fields of application. We used the Google search engine with the following keywords: benchmarking, benchmarking methods, benchmarking models, benchmarking techniques, utilization of benchmarking, types of benchmarking, benchmarking in health, benchmarking in medicine, comparative evaluation and parangonnage (French term for benchmarking).

In a second phase, we focused our search on healthcare benchmarking in the Medline, Science Direct and Scopus bibliographic databases, as well as in the Google Scholar search engine. This in-depth search targeted articles that identified benchmarking as a structured quality improvement method in healthcare and articles in which benchmarking was used to analyze and improve healthcare processes.

Two search strategies were applied, depending on the database. The first used only keywords from the Medical Subject Headings (MeSH) thesaurus. The second used keywords that were not exclusively from MeSH to identify articles when searching in Scopus, Science Direct and Google Scholar. The search equations and the numbers of articles identified and selected in each search are summarized in Table 1.

TABLE 1.

Search strategy (2009–2010)

| Search Strategies by Database | Number of Responses | Number of References Read (title, abstract) | Number of References Selected |
|---|---|---|---|
| Medline | | | |
| (“Benchmarking/methods”[Majr] OR “Benchmarking/organization and administration”[Majr] OR “Benchmarking/utilization”[Majr]) AND “Review”[Publication Type] | 159 | 159 | 35 |
| “Benchmarking”[Majr] AND “Physician's Practice Patterns”[Majr] | 68 | 68 | 1 |
| (“Benchmarking”[Majr] AND (“Professional Practice”[Majr] OR “Institutional Practice”[Majr])) AND “Review”[Publication Type] | 23 | 23 | 2 |
| “Benchmarking”[Majr] AND “Methods”[Majr] | 17 | 17 | 0 |
| “Benchmarking”[Majr] AND “Process Assessment (Health Care)”[Majr] | 21 | 21 | 2 |
| “Benchmarking”[Majr] AND “Outcome and Process Assessment (Health Care)”[Majr] | 189 | 189 | 23 |
| “Benchmarking”[Majr] AND “Quality Indicators, Health Care”[Majr] | 255 | 100 | 11 |
| (“Benchmarking”[Majr] AND “Quality Assurance, Health Care”[Majr]) AND “Review”[Publication Type] | 254 | 100 | 11 |
| Science Direct | | | |
| Benchmarking AND healthcare process | 1,488 | 100 | 24 |
| Scopus | | | |
| TITLE (benchmarking) AND ALL (quality health care improvement) | 225 | 100 | 22 |
| (TITLE (benchmarking) AND ALL (health care)) AND DOCTYPE(re) | 86 | 86 | 67 |

This documentary search, as well as the reading and selection of the articles, was carried out by the first author (AE-T).

Selection criteria for articles

A first selection was made by reading the titles and abstracts of articles. When a search equation returned more than 200 references, we limited our reading to the first 100, in the search engine's order of relevance. We included articles written in English or French and published between 1990 and 2010. We (AE-T) then eliminated duplicates and articles whose full text could not be found in the subscriptions of the library of Université Bordeaux Segalen. We included all article types (original articles, opinion pieces) and all journals, as well as relevant articles found in the reference lists of the source articles. The critical analysis of the articles was done by AE-T.
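The screening cap described above can be written as a one-line rule and checked against Table 1. The sketch below is our own illustration, not part of the original protocol: the function name `titles_to_screen` is hypothetical, while the threshold of 200 and the cap of 100 are taken from the text and the response counts from Table 1.

```python
# Minimal sketch of the screening cap described in the text: when a search
# equation returned more than 200 references, only the first 100 (in the
# engine's relevance order) were read. The function name is hypothetical;
# the two thresholds come from the text.

CAP_THRESHOLD = 200  # above this many responses, the cap applies
CAP = 100            # number of top-ranked references read when capped


def titles_to_screen(n_responses: int) -> int:
    """Return how many titles/abstracts were read for one search equation."""
    if n_responses > CAP_THRESHOLD:
        return CAP
    return n_responses


# Number of responses per search equation, in Table 1 order
# (eight Medline equations, one Science Direct, two Scopus).
TABLE_1_RESPONSES = [159, 68, 23, 17, 21, 189, 255, 254, 1488, 225, 86]

read = [titles_to_screen(n) for n in TABLE_1_RESPONSES]
print(read)  # per-equation counts, matching the "read" column of Table 1
print(sum(TABLE_1_RESPONSES), sum(read))  # → 2785 963
```

Applying the rule to each row reproduces the "read" column, and the totals match the 2,785 references identified and 963 titles and abstracts read reported in the Results.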

To be included in the literature review, articles had to meet the following two inclusion criteria:

- the primary subject of the article was benchmarking; and
- the article contained at least one of the following types of information: history of benchmarking; its concept, definition, models or types; the method of benchmarking used; its impact on quality improvement in the healthcare field studied.

Selection criteria for other documents

The exploratory search yielded articles, reports and personal web pages published on the Internet. These documents were selected using the article selection criteria presented above. This search also led us to explore the work of various organizations involved in quality improvement in healthcare, such as the Haute autorité de santé (HAS), the Agence nationale d'appui à la performance (ANAP – National Agency to Support Performance), the World Health Organization (WHO) and the Organisation for Economic Co-operation and Development (OECD).

Results

Articles and documents selected

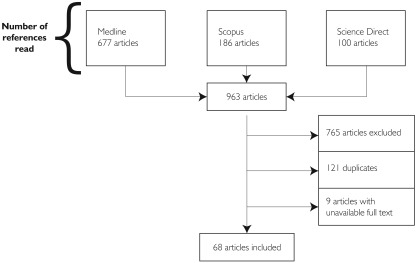

The various search strategies in the three databases identified 2,785 articles, of which 963 titles and abstracts were read. Of these, we excluded 765 articles that did not meet the inclusion criteria, 121 duplicates and nine articles whose full text could not be retrieved, leaving 68 articles retained for full reading (Figure 1). The complete list of references and the articles are available on the CCECQA website (www.ccecqa.asso.fr).

FIGURE 1.

Selection of articles for the literature review (2009–2010)
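The selection flow reported for the three databases can be checked arithmetically. The short sketch below only re-adds the counts given in the text and in the last column of Table 1; the variable and function names are ours and purely illustrative.

```python
# Re-computes the article-selection flow from the counts reported in the
# text and in the "Number of References Selected" column of Table 1.

# Selected references per search equation, in Table 1 order.
SELECTED_PER_EQUATION = [35, 1, 2, 0, 2, 23, 11, 11, 24, 22, 67]

READ = 963               # titles and abstracts read
EXCLUDED_CRITERIA = 765  # did not meet the inclusion criteria
DUPLICATES = 121         # duplicates removed
NOT_RETRIEVED = 9        # full text not available


def retained_for_full_reading() -> int:
    """Walk the selection flow step by step and return the final count."""
    after_screening = READ - EXCLUDED_CRITERIA
    # The screening step should equal the total of the Table 1 "selected" column.
    assert after_screening == sum(SELECTED_PER_EQUATION)
    after_deduplication = after_screening - DUPLICATES
    return after_deduplication - NOT_RETRIEVED


print(retained_for_full_reading())  # → 68
```

Screening leaves 198 references (the sum of the "selected" column), removing duplicates leaves 77, and removing unretrievable texts leaves the 68 articles read in full.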

From the search using Google Scholar and other Internet sites, we selected 35 documents in the form of reports or articles published online.

Critical analysis

In the first phase of the search, we identified literature on mechanisms for comparative evaluation or standardization of performance, better known as benchmarking, in different sectors of activity (education, employment, environment, finance, social protection, research and healthcare) and at all levels of public action (international, European, national, regional, local). The French-language equivalents used in Canada (parangonnage) and in France (évaluation comparative, or comparative evaluation) did not appear sufficiently explicit or discriminating, and are therefore not used in this paper.

The search targeting the healthcare sector showed that, depending on the author, the term benchmarking could mean comparing practices against norms and standards; comparing the practices of several teams and/or organizations in order to set national standards (recommendations), for example by comparing surgical techniques or therapeutic approaches; or developing and comparing indicators between organizations, or even between countries. The term was also used to describe comparative epidemiological studies. All these articles were excluded because they did not meet our selection criteria.

Of the 68 articles retained, 12 studies dealt with benchmarking as a structured quality improvement method (Ellis 2006; Bayney 2005; Bonnet et al. 2008; Braillon et al. 2008; Collins-Fulea et al. 2005; Ellershaw et al. 2008; Francis et al. 2008; Hermann et al. 2006a,b; Meissner 2006; Meissner et al. 2008; Reintjes et al. 2006; Schwappach et al. 2003) and only three explicitly described the method used (Ellis 2006; Bonnet et al. 2008; Reintjes et al. 2006).

Structural modifications to the concept of benchmarking over time

Developed in industry in the early 1930s, benchmarking was conceptualized in a competitive environment at the end of the 1970s by the Xerox Corporation. In 1979, the Fuji–Xerox division in Japan analyzed the features and quality of its products and those of its Japanese competitors. It found that manufacturing costs were higher in the United States: competitors were selling their products at Xerox's production cost. Xerox then initiated a process it called competitive benchmarking. In 1981, benchmarking was adopted in all of Xerox's business units, and in 1989 Xerox won the US national quality award, the Malcolm Baldrige National Quality Award (Fedor et al. 1996).

The criteria used in quality awards competitions encourage intensive use of benchmarking (Fedor et al. 1996). It has become primarily a self-assessment and decision support tool designed by management science for organizational rationalization (Barber 2004; Bruno 2008). For a business, it consists of setting progress goals by identifying best practices. Data collection is done by means of open and reciprocal exchange over the long term. As such, benchmarking has been popular in the business world since the 1990s; it has been the subject of manuals, specialized journals, institutes, clubs, associations and more. According to a 2007 survey of 6,323 companies in 40 countries conducted by the strategic consulting firm Bain & Company, benchmarking was their second most-used tool in 2001 and 2003 (right after strategic planning) (Bruno 2008). Another survey conducted in 2009 showed that benchmarking had reached the top spot among the 25 tools used (Rigby and Bilodeau 2009).

In the healthcare sector, the comparison of outcome indicators dates back to the 17th century, with the comparison of hospital mortality (Braillon et al. 2008). Its use as a structured method began only in the mid-1990s, in the United States and the United Kingdom, driven by the need to compare hospital outcomes in order to rationalize hospital funding (Camp 1998; Dewan et al. 2000).

Benchmarking in the healthcare sector has also undergone several modifications (Ellis 2006). Initially, benchmarking was essentially the comparison of performance outcomes to identify disparities. Then it expanded to include the analysis of processes and success factors for producing higher levels of performance (Bayney 2005; Collins-Fulea et al. 2005; Ellershaw et al. 2008; Meissner et al. 2008). The most recent modifications to the concept of benchmarking relate to the need to meet patients' expectations (Ellis 2006). The United Kingdom's Essence of Care program is certainly one of the most advanced in this respect (NHS 2003, 2006, 2007).

Thus, from its beginnings as a quantitative approach, benchmarking has evolved towards a qualitative approach. Initially, competitive benchmarking measured an organization's performance against the competition. Then comparative benchmarking focused on comparing similar functions in different organizations, the advantage of this approach being that it moderated the competitive aspect and provided opportunities for learning. Collaborative benchmarking involves sharing knowledge about a particular activity with the goal of improving the field being studied. Clinical practice benchmarking involves structured comparisons of processes and the sharing of best practices in clinical care; it is based on quality assessment and is integrated within a CQI approach. Finally, Essence of Care is a sophisticated approach to clinical practice benchmarking aimed at becoming an integral and effective component of healthcare services standardization to support CQI in services and increase patient satisfaction (Ellis 2006; Kay 2007). The various experiences of benchmarking applications in the healthcare sector described later in this article reflect the diversity of benchmarking practices.

The definition of benchmarking

Benchmarking's evolution over time and in different fields of application explains the multiplicity and heterogeneity of its definitions, which are found mainly in the industrial sector (Table 2).

TABLE 2.

Definitions of benchmarking

| Authors | Definitions |
|---|---|
| David Kearns, Chief Executive Officer, Xerox Corporation (1980s) | Benchmarking is the continuous process of measuring products, services and practices against the toughest competitors or those companies recognized as industry leaders |
| Robert C. Camp (1989) | Benchmarking is the search for best practices for a given activity that will ensure superiority. |
| Geber (1990) | A process of finding the world-class examples of a product, service or operational system and then adjusting [one's] own products, services or systems to meet or beat those standards. |
| Vaziri (1992) | A continuous process comparing an organization's performance against that of the best in the industry considering critical consumer needs and determining what should be improved. |
| Watson (1993) | The continuous input of new information to an organization. |
| Gerald J. Balm (1992) | The ongoing activity of comparing one's own process, product or service against the best known similar activity, so that challenging but attainable goals can be set and a realistic course of action implemented to efficiently become and remain best of the best in a reasonable time. |
| Kleine (1994) | An excellent tool to use in order to identify a performance goal for improvement, identify partners who have accomplished these goals and identify applicable practices to incorporate into a redesign effort. |
| Cook (1995) | A kind of performance improvement process by identifying, understanding and adopting outstanding practices from within the same organization or from other businesses. |
| APQC (American Productivity and Quality Center) (1999) | The process of continuously comparing and measuring an organization against business leaders anywhere in the world to gain information that will help the organization take action to improve its performance. |
| Vlăsceanu et al. (2007) | A standardized method for collecting and reporting critical operational data in a way that enables relevant comparisons among the performances of different organizations or programs, usually with a view to establishing good practice, diagnosing problems in performance and identifying areas of strength. Benchmarking gives the organization (or the program) the external references and the best practices on which to base its evaluation and to design its working processes. |
| EFQM – European Benchmarking Code of Conduct (2009) | The process of identifying and learning from good practices in other organizations. |
| Jac Fitz-enz (1993) | Benchmarking is a systematic approach to identifying the benchmark, comparing yourself to the benchmark and identifying practices that enable you to become the new best-in-class. Benchmarking is not an exercise in imitation. It yields data, not solutions. |

Sources: Balm 1992; Camp 1989; EFQM 2009; Kay 2007; Fitz-enz 1993; Vlăsceanu et al. 2007.

BENCHMARKING IN THE INDUSTRIAL SECTOR

In the early 1990s, benchmarking referred to comparing products, services and methods against those of the best organizations in the sector. In fact, Rank Xerox, a pioneer in this field, defined benchmarking as “the continuous process of measuring products, services and practices against the toughest competitors or those companies recognized as industry leaders” (Camp 1989: 10).

Benchmarking referred mainly to competitive analysis or industrial analysis. These methods are still considered to be benchmarking, but numerous other elements have been added (Pitarelli and Monnier 2000).

In 1992, G.J. Balm was the first to define benchmarking as a CQI approach. Going beyond the simple collection of information and comparison with competitors, benchmarking came to rest on an exchange that allowed organizations to understand how the best performances were achieved, so that they could adapt the best ideas to their own practices. This expanded approach involved, on the one hand, standardizing all key processes and, on the other, measuring one's organization not only against direct competitors but also against non-competing businesses recognized as being “best in class” (BIC). Finally, it involved focusing on comparative measures that matter to the organization's users (Balm 1992; Pitarelli and Monnier 2000).

BENCHMARKING IN THE HEALTHCARE SYSTEM

Benchmarking made its first appearance in the healthcare system in 1990 with the requirements of the Joint Commission on Accreditation of Healthcare Organizations (JCAHO) in the United States, which defined it as a measurement tool for monitoring the impact of governance, management and clinical and logistical functions (Braillon et al. 2008).

Few definitions have been adapted to the healthcare sector. Among them, Ellis (2006) summarizes benchmarking in healthcare as a process of comparative evaluation and of identifying the underlying causes of high levels of performance. In this view, benchmarking must respond to patients' expectations. It involves a sustained effort to measure outcomes, to compare these outcomes against those of other organizations in order to learn how they were achieved, and to apply the lessons learned in order to improve.

To implement benchmarking, all authors stress the need for useful, reliable and up-to-date information. This ongoing process of information management is called surveillance. Information surveillance, the first foundation of benchmarking, facilitates and accelerates the benchmarking process. The second foundation consists of learning, sharing information and adopting best practices in order to improve performance.

In practice, benchmarking also encompasses:

- regularly comparing indicators (structure, activities, processes and outcomes) against best practitioners;
- identifying differences in outcomes through inter-organizational visits;
- seeking out new approaches in order to make improvements that will have the greatest impact on outcomes; and
- monitoring indicators.
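To make the comparison of indicators against best practitioners more concrete, the sketch below identifies, for a single indicator, the best-performing organization (the benchmark) and each organization's gap to it. It is a minimal illustration under stated assumptions: the organization names, indicator values and the `higher_is_better` flag are hypothetical and do not come from the studies reviewed here.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class IndicatorResult:
    organization: str
    value: float  # e.g., a compliance rate in percent (hypothetical)


def benchmark_and_gaps(
    results: List[IndicatorResult], higher_is_better: bool = True
) -> Tuple[str, Dict[str, float]]:
    """Identify the benchmark (best performer) and each organization's gap to it.

    A gap of 0 means "at the benchmark"; a positive gap measures how far
    behind the benchmark an organization is, whatever the indicator's direction.
    """
    if not results:
        raise ValueError("at least one result is required")
    pick = max if higher_is_better else min
    best = pick(results, key=lambda r: r.value)
    gaps = {}
    for r in results:
        if higher_is_better:
            gaps[r.organization] = best.value - r.value
        else:
            gaps[r.organization] = r.value - best.value
    return best.organization, gaps


# Hypothetical example: share of patient records with a documented
# pain assessment, where a higher value is better.
results = [
    IndicatorResult("Hospital A", 72.0),
    IndicatorResult("Hospital B", 88.5),
    IndicatorResult("Hospital C", 80.0),
]
best, gaps = benchmark_and_gaps(results)
print(best)  # → Hospital B
print(gaps)  # → {'Hospital A': 16.5, 'Hospital B': 0.0, 'Hospital C': 8.5}
```

The gap only shows where an organization stands; in the approach described here, the reasons behind it are explored through inter-organizational visits rather than computed.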

Like all continuous improvement methods, benchmarking fits within the conceptual framework of Deming's wheel of quality (Bonnet et al. 2008; Ellis 2006; Reintjes et al. 2006). The different descriptions vary in the number of steps, depending on how the steps are grouped, and each approach has its own value. The literature offers several examples of step groupings. In France, the Agence nationale pour l'accréditation et l'évaluation en santé (ANAES – National Agency for Healthcare Accreditation and Evaluation) published a reference document in 2000 made up of leaflets presenting methods and tools for effectively conducting quality improvement projects, including an eight-step benchmarking method (ANAES 2000). For one organization, Bonnet and colleagues (2008) proposed a benchmarking method adapted for an anaesthesia–resuscitation service that consisted of 12 steps grouped into four phases.

In this paper we describe the example of Pitarelli and Monnier (2000), which has nine steps:

1. Select the object of the benchmarking (the service or activity to be improved).
2. Identify benchmarking partners (reference points).
3. Collect and organize data internally.
4. Identify the competitive gap by comparing against external data.
5. Set future performance targets (objectives).
6. Communicate the benchmarking results.
7. Develop action plans.
8. Take concrete action (project management).
9. Monitor progress.

The authors recommend not starting the analysis before the process has been prepared: determine which products are important for the organization (what), decide whom to compare yourself against (who) and give careful consideration to data collection (how) (Pitarelli and Monnier 2000; Woodhouse et al. 2009).
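The steps "Set future performance targets" and "Monitor progress" in the sequence above involve simple arithmetic that is easy to get wrong when an indicator improves by going down rather than up. The sketch below assumes, purely for illustration, that a target is set by closing a chosen fraction of the gap to the benchmark; Pitarelli and Monnier (2000) do not prescribe this rule, and the function names and figures are hypothetical.

```python
def set_target(baseline: float, benchmark: float, fraction_of_gap: float) -> float:
    """Hypothetical rule: aim to close a given fraction of the gap to the benchmark.

    Works in both directions: if the benchmark is below the baseline
    (lower is better), the target also moves downwards.
    """
    if not 0.0 < fraction_of_gap <= 1.0:
        raise ValueError("fraction_of_gap must be in (0, 1]")
    return baseline + fraction_of_gap * (benchmark - baseline)


def progress(baseline: float, target: float, current: float) -> float:
    """Share of the planned improvement achieved so far (1.0 = target reached)."""
    if target == baseline:
        raise ValueError("target must differ from baseline")
    return (current - baseline) / (target - baseline)


# Hypothetical example: a compliance rate of 70% against a benchmark of 90%,
# aiming to close half of the gap in the first cycle.
target = set_target(baseline=70.0, benchmark=90.0, fraction_of_gap=0.5)
print(target)                                                # → 80.0
print(progress(baseline=70.0, target=target, current=75.0))  # → 0.5
```

Expressing progress as a share of the planned improvement keeps the monitoring step comparable across indicators with different units and directions.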

Benchmarking can be carried out internally in very large organizations (e.g., hospitals), where outcomes can readily be compared across similar services. Internal benchmarking has the advantage of being quick and relatively inexpensive, and inter-service visits can be carried out without any confidentiality issues between facilities. It is also useful for learning the method. For external benchmarking of clinical practices, the medical specificity of the indicators makes it difficult to see how these practices could be compared with those of other sectors. For non-clinical processes (billing, inventory management, traceability of products used and so on), however, comparison with other sectors is possible. For example, comparisons with sectors in which traceability is crucial, such as the pharmaceutical industry or any other sector with strong quality assurance, could be worthwhile (Gift and Mosel 1994).

Experiences of comparative evaluation based on indicators in healthcare

The healthcare system performance improvement movement of the early 1990s saw the emergence of several national and international projects to develop indicators and evaluate performance (Wait and Nolte 2005). Deliberations about the value of measuring these indicators led to the first initiatives of comparison in the healthcare sector. Thus began the development of indicator-based comparative evaluation of hospital performance.

The aims of the PATH (Performance Assessment Tool for Quality Improvement in Hospitals) project designed by WHO were to evaluate and compare hospitals' performance at the international level using an innovative multidimensional approach, to promote voluntary inter-organization benchmarking projects and to encourage hospitals' sustained commitment to quality improvement processes (Groene et al. 2008).

The HCQI (Health Care Quality Indicators) project launched in 2001 by the OECD focused on two broad questions: what aspects of healthcare quality should be evaluated, and how? Its long-term objective was to create a set of indicators that could be used to identify new avenues of research on healthcare quality in OECD countries. These indicators would essentially serve as the starting point for understanding why there were differences and what means could be used to reduce them and improve healthcare in all the countries (Arah et al. 2006; Marshall et al. 2006; Mattke et al. 2006a,b; McLoughlin et al. 2006).

There were also other international projects based on comparison of performance indicators. One of these, for example, was a project in the Nordic countries on healthcare quality indicators. The aim of this project of the Nordic Council of Ministers was to describe and analyze the quality of services for major illnesses in the Nordic countries (Denmark, Finland, Greenland, Iceland, Norway and Sweden) (Mainz et al. 2009c).

In the United States, since the 1990s, the AHRQ (Agency for Healthcare Research and Quality) has been developing and expanding a series of indicators, or QIs (quality indicators), using a conceptual model with four dimensions to measure the quality, safety, effectiveness and efficiency of services provided both within and outside hospitals. These indicators are produced using only hospitals' clinical and administrative data (AHRQ 2009, 2010).
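Indicators of this kind are typically expressed as rates: a numerator (patients with the event of interest) over a denominator (eligible patients), both drawn from routinely collected records. The sketch below shows only that observed-rate step, using hypothetical discharge records and field names; the actual AHRQ QI specifications add detailed inclusion and exclusion rules and risk adjustment, which are not reproduced here.

```python
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass
class Discharge:
    """One hospital discharge record (hypothetical, simplified fields)."""
    hospital: str
    eligible: bool   # meets the indicator's denominator definition
    had_event: bool  # meets the numerator definition (e.g., an adverse event)


def observed_rates(records: Iterable[Discharge], per: int = 1000) -> Dict[str, float]:
    """Observed event rate per `per` eligible discharges, by hospital."""
    counts: Dict[str, list] = {}  # hospital -> [numerator, denominator]
    for rec in records:
        if not rec.eligible:
            continue  # outside the denominator: ignored
        num_den = counts.setdefault(rec.hospital, [0, 0])
        num_den[1] += 1
        if rec.had_event:
            num_den[0] += 1
    return {h: per * num / den for h, (num, den) in counts.items()}


records = (
    [Discharge("H1", True, True)] * 3
    + [Discharge("H1", True, False)] * 997
    + [Discharge("H2", True, True)] * 1
    + [Discharge("H2", True, False)] * 499
    + [Discharge("H2", False, True)] * 10  # not eligible: excluded
)
print(observed_rates(records))  # → {'H1': 3.0, 'H2': 2.0}
```

Raw observed rates like these are only a starting point for comparison; differences in case mix between hospitals are one reason published indicator sets apply risk adjustment before results are compared.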

Several other studies have targeted the comparison of healthcare indicators in a given area. For example, Earle and colleagues (2005) compared the intensity of end-of-life care for patients with cancer by using Medicare administrative data. Two other studies, one American and the other Australian, looked at comparative analyses of mental health indicators among several healthcare organizations (Hermann et al. 2006a; Meehan et al. 2007).

In France, the nationwide roll-out of the Indicateurs pour l'amélioration de la qualité et de la sécurité des soins (IPAQSS – Indicators for Improving the Quality and Safety of Care) (HAS 2009) and of the Tableau de bord des infections nosocomiales (Nosocomial Infections Dashboard) was also aimed at comparing indicators among healthcare organizations (MTES 2011).

Other local and regional comparative indicator-based initiatives were developed in France:

- In October 2009, the Fédération nationale des centres de lutte contre le cancer (FNCLCC – National Federation of Cancer Centres) published the first comparative analysis of professional practices in radiotherapy in 20 cancer centres (CLCCs), together with a comparison of the activities of all CLCCs against other players in the hospital landscape (university hospitals and the public hospital system of Paris, the AP–HP).
- To improve the internal organizational efficiency of surgical suites, the Mission nationale d'expertise et d'audit hospitaliers (MEAH – Mission for Hospital Evaluation and Improvement) and the efficiency managers of 10 Agences régionales de l'hospitalisation (ARH – Regional Hospitalization Agencies) conducted a benchmarking process between 2007 and 2009 covering 850 surgical suites of all sizes in 352 institutions of every status (MEAH 2008).
- In 2006 and 2008, the ARH of Aquitaine and the CCECQA carried out a region-wide collection of quality indicators, called GINQA-MédINA, in all public and private medical–surgical–obstetrical (MCO) institutions and physical rehabilitation centres (SSR) in the region (CCECQA 2008).

These projects made it possible to develop indicators and to begin doing comparisons in the healthcare sector.

Some experiences of incorporating benchmarking into the healthcare sector

In the literature, few articles described benchmarking as a quality improvement process carried out in successive stages and/or involving the structured exchange of information based on dialogue or on site visits in order to share best practices.

In Denmark, the national indicator project was created in 2000. Between 2000 and 2008, clinicians appointed by scientific societies developed evidence-based quality indicators for the management of various illnesses. The objective of this project was to document and develop the quality of care for the benefit of the patient. Another aim was to conduct benchmarking through regular dialogue between the agency collecting the indicators and the representatives of a region's institutions about the indicator results, as well as through structured dialogue with institutions whose results were atypical. This approach lies midway between internal improvement and external monitoring, in the sense that the agency would conduct a visit if the dialogue did not produce satisfactory explanations (Mainz et al. 2009b).

Since 2001, the German Bundesgeschäftsstelle Qualitätssicherung (BQS – Federal Agency for Quality and Patient Safety) has run a similar benchmarking process, also called Structured Dialogue, in 2,000 healthcare institutions (BQS 2011). It is based on indicators (190 indicators in 26 healthcare domains in 2007). Hospitals receive their own results as well as those of the other hospitals. As in Denmark, hospitals whose results fall outside the reference range analyze these atypical results (outliers) as part of the structured dialogue; in addition, professionals from the different healthcare institutions hold discussions to identify the reasons for the performance disparities. The results for several specialties, and the reasons for differing trends between regions, are tracked from year to year; finally, the indicators themselves are analyzed and discussed from a methodological standpoint.
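The outlier step that triggers a structured dialogue can be illustrated with a minimal sketch. It assumes, purely for illustration, that each indicator has a fixed reference range (lower and upper bound, inclusive) and that any hospital whose value falls outside that range is flagged for discussion. The indicator name, hospital names and bounds are hypothetical; the actual BQS reference ranges and statistical rules are not described in this article.

```python
from dataclasses import dataclass


@dataclass
class IndicatorResult:
    hospital: str    # hypothetical hospital identifier
    indicator: str   # hypothetical indicator name
    value: float     # observed rate, e.g. a proportion between 0 and 1


def flag_outliers(results, reference_ranges):
    """Return the results whose value lies outside the (inclusive)
    reference range of their indicator; in this sketch these are the
    candidates for a structured dialogue."""
    flagged = []
    for r in results:
        low, high = reference_ranges[r.indicator]
        if not (low <= r.value <= high):
            flagged.append(r)
    return flagged


if __name__ == "__main__":
    # Hypothetical reference range and results, for illustration only.
    ranges = {"wound_infection_rate": (0.0, 0.05)}
    results = [
        IndicatorResult("Hospital A", "wound_infection_rate", 0.03),
        IndicatorResult("Hospital B", "wound_infection_rate", 0.08),
    ]
    for r in flag_outliers(results, ranges):
        print(f"{r.hospital}: {r.indicator} = {r.value:.2f} (outside reference range)")
```

Flagging is only the entry point: in the process described above, the flagged results are then explained and discussed by the professionals concerned rather than treated as a verdict on performance.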

In the United Kingdom, Essence of Care, launched in 2001, is an approach to healthcare services that aims to improve the quality of the fundamental components of nursing care. It uses clinical best-practice evidence to structure a patient-centred approach to care and to inform clinical governance, a generic term for the managerial policy of making care teams directly responsible for improving clinical performance. Benchmarking, as described in Essence of Care, helps practitioners take a structured approach to sharing and comparing practices so that they can identify best practices and develop action plans (NHS 2003, 2006, 2007; Nursing Times 2007). Several publications have examined this strategy and its application in various care settings. Butler (2008) analyzed the political, professional, social and economic factors that contributed to the development of this approach, focusing particularly on the pressure ulcer benchmark.

It will also be interesting to follow the European Union's (EU) initiative that used a structured, seven-step benchmarking process as a new tool to evaluate national communicable disease surveillance systems in six member states and to identify their strengths and weaknesses. The objective was to make recommendations to decision-makers for improving the quality of these systems (Reintjes et al. 2006).

In Switzerland, the Office fédéral des assurances sociales (OFAS – Federal Social Insurance Bureau) launched the Emerge Project in November 2000 to improve the quality of medical treatments covered by mandatory health insurance. The project aims to identify ways to create strong links between quality measurement and a hospital's internal management. Coached by a team of advisers, hospitals learn how to analyze their current emergency department treatment processes and identify improvement measures, as well as how to interpret their outcomes by comparing them with those of other hospitals (OFAS 2005; Schwappach et al. 2003).

Discussion

The literature review highlighted how benchmarking approaches have evolved in the healthcare sector. This evolution has produced numerous definitions whose common theme is the continuous measurement of one's own performance and its comparison with the best performers, in order to learn about the latest work methods and practices in other organizations. We recommend adopting Ellis's (2006) definition, which clearly reflects the benchmarking process and has the advantage of focusing particularly on the use of indicators and on the functions of learning and of sharing methods. Likewise, Pitarelli and Monnier (2000) set out the key elements of a benchmarking process, i.e., the importance of fully understanding every step of the process to be improved and of collecting reliable data (surveillance) to support decision-making. Their model clearly shows that comparing indicators is only one step in the benchmarking process: a fundamental step, certainly, yet not enough in itself to count as benchmarking.

Benchmarking in healthcare has not, to our knowledge, been studied in a systematic and standardized way. Our review is therefore based on multiple sources that often mix facts and opinions, and we could not summarize the various experiences in as structured a grid as a classical review of studies using similar methods would allow. Another limitation of our review is that the literature search was not exhaustive: we focused instead on identifying the articles that best illustrated our point of view, and the articles were read by a single author. Finally, we examined the socio-economic context that encouraged the use of benchmarking in the healthcare sector, but not the motives for undertaking and funding the benchmarking projects in the experiences selected for this article.

At the international level, while most projects for developing and disseminating performance indicators have benchmarking-type objectives, these projects remain restricted to comparing indicators. The original intent of developing improvement action plans and reducing disparities often runs up against the difficulty of reaching consensus on the validity of the data used for interorganizational comparisons. The choice and validity of the indicators used for internal and external comparisons between healthcare services and systems remain a matter of debate (Mainz et al. 2009c). At both the national and international levels, there is a need to invest in quality measurement systems and in better international collaboration (Mainz et al. 2009a).

Comparing data within or between healthcare systems also raises the question of how such comparisons affect performance improvement and how they should be incorporated into existing policies. Benchmarking is put forward as a way to strengthen the use of indicators. This is undoubtedly one of the reasons why benchmarking in healthcare is always based on indicators, which is not systematically the case in industry, where qualitative approaches are also used.

The literature review showed that benchmarking as practised in industry is rarely implemented in healthcare. Other terms have been used for approaches that are conceptually similar to benchmarking, such as the Breakthrough Series and Quality Improvement Collaboratives. These are all collective improvement methods. While these processes are claimed to differ from one another, the actual extent of these differences is questionable. Or are they simply riding current trends or communication strategies, using supposedly new terms to revive interest in what are actually old approaches?

The first, the Breakthrough Series, was originally developed by the Institute for Healthcare Improvement (IHI; Massachusetts, United States) (IHI 2003); in France, it was applied in a form closely related to the IHI's in the Programmes d'amélioration continue (PAC – Continuous Improvement Programs) funded by the ANAES in the late 1990s. This approach is built on the notion of significant advances and breakthroughs. The Breakthrough Series can be described as a series of rapid quality advances based on innovation, the use of the latest available scientific evidence, accelerated testing of changes and the sharing of experience among many organizations. Although this method cannot be carried out without monitoring outcomes, indicators do not feature prominently in it, and inter-site visits are not systematic.

Quality Improvement Collaboratives are carried out by multidisciplinary teams from different healthcare services and organizations who decide to work together, using a structured method, for a limited time (a few months) to improve their practices (Schouten et al. 2008). This approach has been increasingly used in the United States, Canada, Australia and several European countries. In the United Kingdom and the Netherlands, the public authorities support the development of such programs. In France, regional projects developed by regional evaluation and support agencies also follow this approach (Saillour-Glénisson and Michel 2009), as do current research projects such as the BELIEVE project coordinated by the CCECQA (2010). Since December 2009, the ANAP has piloted a national benchmarking process, Imagerie 2010: scanner et imagerie par résonance magnétique (Imaging 2010: CT Scanning and Magnetic Resonance Imaging), which could come very close to standard benchmarking practice (ANAP 2009).

All these approaches rest on the same elements: a multidisciplinary, multi-site character, the implementation of improvement initiatives, and measurement. They cannot, however, be considered fully equivalent, because each emphasizes a different element, which necessarily shapes its implementation strategy. In particular, the Breakthrough Series emphasizes the speed of interventions and the Collaboratives the time-limited nature of the exercise, whereas benchmarking emphasizes gathering indicators for long-term monitoring, which makes it a true CQI approach.

Possible lines of research on benchmarking are numerous and varied, because the subject has so far received little attention. At the strategic level, it is important to ensure that healthcare benchmarking achieves its objective, which is to delineate more clearly the areas where policy efforts should be concentrated to improve healthcare system performance (Wait and Nolte 2005). At the technical level, the success factors for benchmarking are generally close to those of the main improvement approaches (management involvement, planning and project management, tools to support group work, an appropriate training policy), but very likely also include specific elements such as a culture receptive to transparent exchanges. At the sociological level, we need a better understanding of how benchmarking processes, and greater involvement of front-line professionals, can help indicators be more widely adopted in healthcare organizations.

Of course, benchmarking is primarily a management tool; nevertheless, it requires care team involvement, at least in the analysis of practices and in comparisons with other care teams. The upcoming implementation of a structured benchmarking process in more than 30 healthcare organizations in Aquitaine will make it possible to study the factors that best support the adoption of this type of process.

Conclusion

Benchmarking is often taken to mean the comparison of indicators in a time-limited approach. It is not yet widely perceived as a tool for continuous improvement and support for change. Benchmarking's key characteristic is that it is part of a comprehensive and participatory policy of continuous quality improvement. Indeed, benchmarking is based on voluntary and active collaboration among several organizations to create a spirit of competition and to apply best practices. Conditions for successful benchmarking focus essentially on careful preparation of the process, monitoring of the relevant indicators, staff involvement and inter-organizational visits.

Compared with methods previously implemented in France (the Breakthrough Series, implemented by the ANAES as Programmes d'amélioration continue in the late 1990s, and collaborative projects run by regional evaluation and support agencies), benchmarking has specific features that set it apart as a healthcare innovation. This is especially true for healthcare and medical–social organizations, as the principle of inter-organizational visiting is not part of their culture. This approach will therefore need to be assessed for feasibility and acceptability.

Contributor Information

Amina Ettorchi-Tardy, Public Health Physician, Comité de coordination de l'évaluation clinique et de la qualité en Aquitaine (CCECQA), Hôpital Xavier Arnozan (CHU de Bordeaux), Bordeaux, France.

Marie Levif, Graduate Student, Sociology, Comité de coordination de l'évaluation clinique et de la qualité en Aquitaine (CCECQA), EA 495 Laboratoire d'analyse des problèmes sociaux et de l'action collective (LAPSAC), Université Bordeaux Segalen, Bordeaux, France.

Philippe Michel, Public Health Physician & Director, Comité de coordination de l'évaluation clinique et de la qualité en Aquitaine (CCECQA), Bordeaux, France.

REFERENCES

- Agence nationale d'accréditation et d'évaluation en santé (ANAES) 2000. Méthodes et outils des démarches qualité pour les établissements de santé. Paris: Author. Retrieved February 26, 2012. <http://www.has-sante.fr/portail/upload/docs/application/pdf/2009-10/methodes.pdf>.

- Agence nationale d'appui à la performance des établissements de santé et médico-sociaux (ANAP) 2009. Benchmark Imagerie 2010: Scanner et IRM. Paris: Author. Retrieved February 26, 2012. <http://www.parhtage.sante.fr/re7/idf/doc.nsf/VDoc/9F47838712B5DA73C1257685004572D0/$FILE/Imagerie-Bench-Lct-091207-V1.pdf>.

- Agency for Healthcare Research and Quality (AHRQ) 2009. National Healthcare Quality Report 2008. Rockville, MD: US Department of Health and Human Services. Retrieved February 26, 2012. <http://www.ahrq.gov/qual/nhqr08/nhqr08.pdf>.

- Agency for Healthcare Research and Quality (AHRQ) 2010. Quality Indicators. Rockville, MD: US Department of Health and Human Services. Retrieved February 26, 2012. <http://www.qualityindicators.ahrq.gov>.

- American Productivity and Quality Center (APQC) 1999. “What Is Best Practice?” Houston, TX: Author.

- Arah O., Westert G., Hurst J., Klazinga N. 2006. “A Conceptual Framework for the OECD Health Care Quality Indicators Project.” International Journal for Quality in Health Care 18(1 Suppl): 5–13.

- Balm G.J. 1992. Benchmarking: A Practitioner's Guide for Becoming and Staying Best of the Best. Schaumburg, IL: QPMA Press.

- Barber E. 2004. “Benchmarking the Management of Projects: A Review of Current Thinking.” International Journal of Project Management 22: 301–07.

- Bayney R. 2005. “Benchmarking in Mental Health: An Introduction for Psychiatrists.” Advances in Psychiatric Treatment 11: 305–14.

- Bonnet F., Solignac S., Marty J. 2008. “Vous avez dit benchmarking?” Annales françaises d'anesthésie et de réanimation 27: 222–29.

- BQS-Institut (BQS) 2011. Benchmarking und Qualitätsvergleiche. Retrieved February 26, 2012. <http://www.bqs-institut.de/produkte/benchmarkingundqualitaetsvergleiche.html>.

- Braillon A., Chaine F., Gignon M. 2008. “Le Benchmarking, une histoire exemplaire pour la qualité des soins.” Annales françaises d'anesthésie et de réanimation 27: 467–69.

- Bruno I. 2008. “La Recherche scientifique au crible du benchmarking. Petite histoire d'une technologie de gouvernement.” Revue d'histoire moderne et contemporaine 5(55-4bis): 28–45.

- Butler F. 2008. “Essence of Care and the Pressure Ulcer Benchmark – An Evaluation.” Journal of Tissue Viability 17: 44–59.

- Camp R.C. 1989. Benchmarking: The Search for Industry Best Practices That Lead to Superior Performance. Milwaukee: American Society for Quality Control Quality Press.

- Camp R.C. 1998. Global Cases in Benchmarking: Best Practices from Organizations Around the World. Milwaukee: American Society for Quality Control Quality Press.

- Collins-Fulea C., Mohr J.J., Tillett J. 2005. “Improving Midwifery Practice: The American College of Nurse-Midwives' Benchmarking Project.” Journal of Midwifery & Women's Health 50(6): 461–71.

- Comité de coordination de l'évaluation clinique et de la qualité en Aquitaine (CCECQA) 2008. Ginqa – MédInA: Généralisation d'indicateurs de qualité des soins en Aquitaine – 2e mesure. Bordeaux: Author. Retrieved February 26, 2012. <http://www.ccecqa.asso.fr/page/ginqa-medina>.

- Comité de coordination de l'évaluation clinique et de la qualité en Aquitaine (CCECQA) 2010. BELIEVE – Protocole. Bordeaux: Author. Retrieved February 26, 2012. <http://www.ccecqa.asso.fr/documents/believe-protocole>.

- Cook S. 1995. Practical Benchmarking: A Manager's Guide to Creating a Competitive Advantage. London: Kogan Page.

- Dewan N.A., Daniels A., Zieman G., Kramer T. 2000. “The National Outcomes Management Project: A Benchmarking Collaborative.” Journal of Behavioral Health Services & Research 27(4): 431–36.

- Earle C.C., Neville B.A., Landrum M.B., Souza J.M., Weeks J.C., Block S.D., Grunfeld E., Ayanian J.Z. 2005. “Evaluating Claims-Based Indicators of the Intensity of End-of-life Cancer Care.” International Journal for Quality in Health Care 17(6): 505–09.

- EFQM 2009. “European Benchmarking Code of Conduct.” Brussels: Author. Retrieved February 26, 2012. <http://www.efqm.org/en/PdfResources/Benchmarking%20Code%20of%20Conduct%202009.pdf>.

- Ellershaw J., Gambles M., McGlinchey T. 2008. “Benchmarking: A Useful Tool for Informing and Improving Care of the Dying?” Supportive Care in Cancer 16: 813–19.

- Ellis J. 2006. “All Inclusive Benchmarking.” Journal of Nursing Management 14(5): 377–83.

- Fedor D., Parsons C., Shalley C. 1996. “Organizational Comparison Processes: Investigating the Adoption and Impact of Benchmarking-Related Activities.” Journal of Quality Management 1(2): 161–92.

- Fitz-enz J. 1993. Benchmarking Staff Performance: How Staff Departments Can Enhance Their Value to the Customer. San Francisco: Jossey Bass.

- Francis R., Spies C., Kerner T. 2008. “Quality Management and Benchmarking in Emergency Medicine.” Current Opinion in Anaesthesiology 21(2): 233–39.

- Geber B. 1990. (November). “Benchmarking: Measuring Yourself Against the Best.” Training: 36–44.

- Gift R., Mosel D. 1994. Benchmarking in Health Care: A Collaborative Approach. Chicago: American Hospital Association.

- Groene O., Klazinga N., Kazandjian V., Lombrail P., Bartels P. 2008. “The World Health Organization Performance Assessment Tool for Quality Improvement in Hospitals (PATH): An Analysis of the Pilot Implementation in 37 Hospitals.” International Journal for Quality in Health Care 20(3): 155–61.

- Haute Autorité de santé (HAS) 2009. IPAQSS: Indicateurs pour l'amélioration de la qualité et de la sécurité des soins. Paris: Author. Retrieved February 26, 2012. <http://www.has-sante.fr/portail/jcms/c_493937/ipaqss-indicateurs-pour-l-amelioration-de-la-qualite-et-de-la-securite-des-soins>.

- Hermann R.C., Chan J.A., Provost S.E., Chiu W.T. 2006a. “Statistical Benchmarks for Process Measures of Quality of Care for Mental and Substance Use Disorders.” Psychiatric Services 57(10): 1461–67.

- Hermann R.C., Mattke S., Somekh D., Silfverhielm H., Goldner E., Glover G., Pirkis J., Mainz J., Chan J.A. 2006b. “Quality Indicators for International Benchmarking of Mental Health Care.” International Journal for Quality in Health Care 18(1 Suppl): 31–38.

- Institute for Healthcare Improvement (IHI) 2003. The Breakthrough Series: IHI's Collaborative Model for Achieving Breakthrough Improvement. Boston: Author. Retrieved February 26, 2012. <http://www.ihi.org/knowledge/Pages/IHIWhitePapers/TheBreakthroughSeriesIHIsCollaborativeModelforAchievingBreakthroughImprovement.aspx>.

- Kay J.F.L. 2007. “Health Care Benchmarking.” Hong Kong Medical Diary 12(2): 22–27.

- Kleine B. 1994. (Spring). “Benchmarking for Continuous Performance Improvement: Tactics for Success.” Total Quality Environmental Management: 283–95.

- Mainz J., Bartels P., Rutberg H., Kelley E. 2009a. “International Benchmarking. Option or Illusion?” International Journal for Quality in Health Care 21(3): 151–52.

- Mainz J., Hansen A., Palshof T., Bartels P. 2009b. “National Quality Measurement Using Clinical Indicators: The Danish National Indicator Project.” Journal of Surgical Oncology 99(8): 500–04.

- Mainz J., Hjulsager M., Thorup-Eriksen M., Burgaard J. 2009c. “National Benchmarking Between the Nordic Countries on the Quality of Care.” Journal of Surgical Oncology 99(8): 505–07.

- Marshall M., Klazinga N., Leatherman S., Hardy C., Bergmann E., Pisco L., Mattke S., Mainz J. 2006. “OECD Health Care Quality Indicator Project. The Expert Panel on Primary Care Prevention and Health Promotion.” International Journal for Quality in Health Care 18(1 Suppl): 21–25.

- Mattke S., Epstein A., Leatherman S. 2006a. “The OECD Health Care Quality Indicators Project: History and Background.” International Journal for Quality in Health Care 18(1 Suppl): 1–4.

- Mattke S., Kelley E., Scherer P., Hurst J., Lapetra M.L.G. and HCQI Expert Group Members 2006b. Health Care Quality Indicators Project: Initial Indicators Report. OECD Health Working Papers No. 22. Paris: Organisation for Economic Co-operation and Development. Retrieved February 26, 2012. <http://www.oecd.org/dataoecd/1/34/36262514.pdf>.

- McLoughlin V., Millar J., Mattke S., Franca M., Jonsson P., Somekh D., Bates D. 2006. “Selecting Indicators for Patient Safety at the Health System Level in OECD Countries.” International Journal for Quality in Health Care 18(1 Suppl): 14–20.

- Meehan T., Stedman T., Neuendorf K., Francisco I., Neilson M. 2007. “Benchmarking Australia's Mental Health Services: Is It Possible and Useful?” Australian Health Review 31(4): 623–27.

- Meissner W. 2006. “Continuous Quality Improvement in Postoperative Pain Management – Benchmarking Postoperative Pain.” Acute Pain 8: 43–44.

- Meissner W., Mescha S., Rothaug J., Zwacka S., Göttermann A., Ulrich K., Schleppers A. 2008. “Quality Improvement in Postoperative Pain Management.” Deutsches Ärzteblatt International 105(50): 865–70.

- Ministère du travail, de l'emploi et de la santé (MTES) 2011. Tableau de bord des infections nosocomiales dans les établissements de santé – campagne 2011. Paris: Author. Retrieved February 26, 2012. <http://www.sante-sports.gouv.fr/tableau-de-bord-des-infections-nosocomiales-dans-les-etablissements-de-sante-campagne-2011.html>.

- Mission nationale d'expertise et d'audit hospitaliers (MEAH) 2008. Benchmark des blocs opératoires dans dix régions pilotes – synthèse nationale. Paris: Author. Retrieved February 26, 2012. <http://www.anap.fr/uploads/tx_sabasedocu/Rapport_Benchmark_Blocs_Operatoires.pdf>.

- National Health Service (NHS) 2003. Essence of Care – Patient-Focused Benchmarks for Clinical Governance. London: Author. Retrieved February 26, 2012. <http://www.dh.gov.uk/prod_consum_dh/groups/dh_digitalassets/@dh/@en/documents/digitalasset/dh_4127915.pdf>.

- National Health Service (NHS) 2006. “Essence of Care – Benchmarks for Promoting Health.” London: Author. Retrieved February 26, 2012. <http://www.dh.gov.uk/prod_consum_dh/groups/dh_digitalassets/@dh/@en/documents/digitalasset/dh_4134454.pdf>.

- National Health Service (NHS) 2007. “Essence of Care – Benchmarks for the Care Environment.” London: Author. Retrieved February 26, 2012. <http://www.dh.gov.uk/prod_consum_dh/groups/dh_digitalassets/@dh/@en/documents/digitalasset/dh_080059.pdf>.

- Nursing Times 2007. (December 13). “Essence of Care.” Retrieved February 26, 2012. <http://www.nursingtimes.net/whats-new-in-nursing/essence-of-care/361112.article>.

- Office fédéral des assurances sociales (OFAS) 2005. (September 22). “Assurance-maladie: accent mis sur la sécurité des patients.” Press release. Berne, Switzerland: Author. Retrieved February 26, 2012. <http://www.bsv.admin.ch/dokumentation/medieninformationen/archiv/index.html?msgsrc=/2000/f/00092201.htm&lang=fr>.

- Pitarelli E., Monnier E. 2000. “Benchmarking: The Missing Link Between Evaluation and Management?” Geneva: University of Geneva and Centre for European Expertise and Evaluation. Retrieved February 26, 2012. <http://www.eureval.fr/IMG/pdf/Article_Benchmarking_EM.pdf>.

- Reintjes R., Thelen M., Reiche R., Csohán A. 2006. “Infectious Diseases – Benchmarking National Surveillance Systems: A New Tool for the Comparison of Communicable Disease Surveillance and Control in Europe.” European Journal of Public Health 17(4): 375–80.

- Rigby D., Bilodeau B. 2009. Management Tools 2009: An Executive's Guide. Boston: Bain & Company. Retrieved February 26, 2012. <http://www.bain.com/Images/Management_Tools_2009_Executive_Guide.pdf>.

- Saillour-Glénisson F., Michel P. 2009. “Le Pilotage régional de la qualité et de la sécurité des soins: leçons issues d'une expérience aquitaine.” Pratique et Organisation des Soins 40(4): 297–308.

- Schouten L., Hulscher M., Everdingen J. van, Huijsman R., Grol R. 2008. “Evidence for the Impact of Quality Improvement Collaboratives: Systematic Review.” British Medical Journal 336: 1491–94.

- Schwappach D.L., Blaudszun A., Conen D., Ebner H., Eichler K., Hochreutener M.A. 2003. “‘Emerge’: Benchmarking of Clinical Performance and Patients' Experiences with Emergency Care in Switzerland.” International Journal for Quality in Health Care 15(6): 473–85.

- Vaziri H.K. 1992. (October). “Using Competitive Benchmarking to Set Goals.” Quality Progress 8: 1–5.

- Vlăsceanu L., Grünberg L., Pârlea D. 2007. Quality Assurance and Accreditation: A Glossary of Basic Terms and Definitions. Bucharest: UNESCO-CEPES.

- Wait S., Nolte E. 2005. “Benchmarking Health Systems: Trends, Conceptual Issues and Future Perspectives.” Benchmarking: An International Journal 12(5): 436–48.

- Watson G. 1993. Strategic Benchmarking: How to Rate Your Company's Performance Against the World's Best. Toronto: Wiley Canada.

- Woodhouse D., Berg M., van der Putten J., Houtepen J. 2009. “Will Benchmarking ICUs Improve Outcome?” Current Opinion in Critical Care 15(5): 450–55.