Abstract

Although multisensory integration has been well modeled at the behavioral level, the link between these behavioral models and the underlying neural circuits is still not clear. This gap is even greater for the problem of sensory integration during movement planning and execution. The difficulty lies in applying simple models of sensory integration to the complex computations that are required for movement control and to the large networks of brain areas that perform these computations. Here I review psychophysical, computational, and physiological work on multisensory integration during movement planning, with an emphasis on goal-directed reaching. I argue that sensory transformations must play a central role in any modeling effort. In particular the statistical properties of these transformations factor heavily into the way in which downstream signals are combined. As a result, our models of optimal integration are only expected to apply “locally”, i.e. independently for each brain area. I suggest that local optimality can be reconciled with globally optimal behavior if one views the collection of parietal sensorimotor areas not as a set of task-specific domains, but rather as a palette of complex, sensorimotor representations that are flexibly combined to drive downstream activity and behavior.

Keywords: Sensory integration, reaching, neurophysiology, parietal cortex, computational models, vision, proprioception

Introduction

Multiple sensory modalities often provide “redundant” information about the same stimulus parameter, for example when one can feel and see an object touching one’s arm. Understanding how the brain combines these signals has been an active area of research. As described below, models of optimal integration have been successful at capturing psychophysical performance in a variety of tasks. Furthermore, network models have shown how optimal integration could be instantiated in neural circuits. However, strong links have yet to be made between these bodies of work and neurophysiological data.

Here we address how models of optimal integration apply to the context of a sensory-guided movement and its underlying neural circuitry. This paper focuses on the sensory integration required for goal-directed reaching and how that integration is implemented in the parietal cortex. We show that the models developed for perceptual tasks and simple neural networks cannot, on their own, explain behavioral and physiological observations. These principles may nonetheless apply at a “local level” within each neuronal population. Lastly, the link between local optimality and globally optimal behavior is considered in the context of the broad network of sensorimotor areas in parietal cortex.

Modeling the psychophysics of sensory integration

The principal hallmark of sensory integration should be the improvement of performance when multiple sensory signals are combined. In order to test this concept, we must choose a performance criterion by which to judge improvement. In the case of perception for action, the goal is often to estimate a spatial variable from the sensory input, e.g. the location of the hand or an object in the world. In this case, the simplest and most commonly employed measure of performance is the variability of the estimate. It is not difficult to show that the minimum variance combination of two unbiased estimates of a variable x is given by the expression:

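$$\hat{x}_{integ} = \frac{\sigma_2^2}{\sigma_1^2 + \sigma_2^2}\,\hat{x}_1 + \frac{\sigma_1^2}{\sigma_1^2 + \sigma_2^2}\,\hat{x}_2, \qquad \frac{1}{\sigma_{integ}^2} = \frac{1}{\sigma_1^2} + \frac{1}{\sigma_2^2} \qquad \text{(Equation 1)}$$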

where $\hat{x}_i$, $i = 1,2$, are the unimodal estimates and $\sigma_i^2$ are their variances. In other words, the integrated estimate $\hat{x}_{integ}$ is the weighted sum of the two unimodal estimates, with weights inversely proportional to the respective variances. Importantly, the variance of the integrated estimate, $\sigma_{integ}^2$, is always less than either of the unimodal variances. While Equation 1 assumes that the unimodal estimates $\hat{x}_i$ are scalar and independent (given $x$), the solution is easily extended to correlated or multidimensional signals. Furthermore, since the unimodal estimates are often well approximated by independent, normally distributed random variables, $\hat{x}_{integ}$ can also be viewed as the Maximum Likelihood (ML) integrated estimate (Ernst and Banks, 2002; Ghahramani et al., 1997). This model has been tested psychophysically by measuring performance variability with unimodal sensory cues and then predicting either variability or bias with bimodal cues. Numerous studies have reported ML-optimal or near-optimal sensory integration in human subjects performing perceptual tasks (for example, Ernst and Banks, 2002; Ghahramani et al., 1997; Jacobs, 1999; Knill and Saunders, 2003; van Beers et al., 1999).
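As a numerical illustration of Equation 1, the following minimal sketch combines two unimodal estimates with inverse-variance weights. The function name and the example values are hypothetical, chosen only to make the arithmetic easy to follow.

```python
def integrate_min_variance(x1, var1, x2, var2):
    """Combine two unbiased, independent estimates with inverse-variance weights."""
    precision = 1.0 / var1 + 1.0 / var2
    w1 = (1.0 / var1) / precision          # weight on the first estimate
    w2 = 1.0 - w1                          # weight on the second estimate
    x_integ = w1 * x1 + w2 * x2
    var_integ = 1.0 / precision            # variance of the integrated estimate
    return x_integ, var_integ

# Hypothetical example: a visual estimate of 10.0 (variance 1.0) and a
# proprioceptive estimate of 14.0 (variance 4.0) of the same location.
x_integ, var_integ = integrate_min_variance(10.0, 1.0, 14.0, 4.0)
```

With these values the integrated estimate (10.8) lies closer to the more reliable cue, and its variance (0.8) is smaller than either unimodal variance.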

Sensory integration during reach behavior

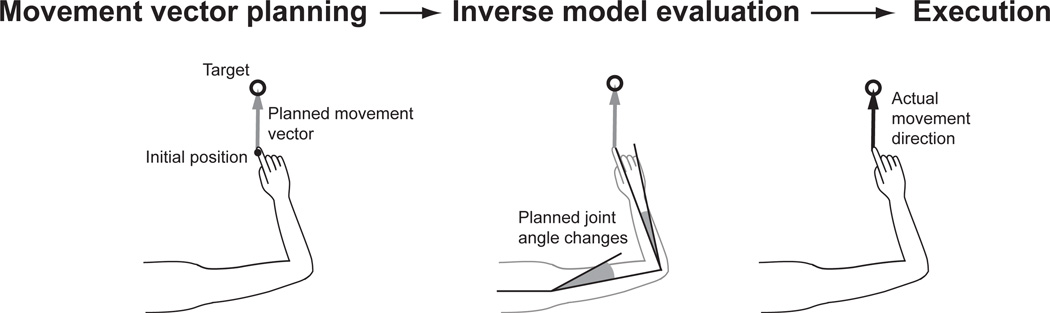

Sensory integration is more complicated for movement planning than for a simple perceptual task. The problem is that movement planning and execution rely on a number of different computations, and estimates of the same spatial variable may be needed for several of these. For example, there is both psychophysical (Rossetti et al., 1995) and physiological (Batista et al., 1999; Buneo et al., 2002; Kakei et al., 1999, 2001) evidence for two separate stages of movement planning, as illustrated in Figure 1. First, the movement vector is computed as the difference between the target location and the initial position of the hand. Next, the initial velocity along the planned movement vector must be converted into joint angle velocities (or other intrinsic variables such as muscle activations), which amounts to evaluating an inverse kinematic or dynamic model. This evaluation also requires knowing the initial position of the arm.

Figure 1. Two separate computations required for reach planning.

Adapted from Sober and Sabes (2005).
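The two computations in Figure 1 can be sketched for a planar two-joint arm. This is an illustrative assumption rather than the model used in the cited studies: the link lengths, joint angles, and function names below are hypothetical, and the inverse model is reduced to inverting the kinematic Jacobian.

```python
import math

UPPER_ARM = 0.30  # hypothetical upper-arm length (m)
FOREARM = 0.33    # hypothetical forearm length (m)

def hand_position(shoulder, elbow):
    """Forward kinematics of a planar two-joint arm (angles in radians)."""
    x = UPPER_ARM * math.cos(shoulder) + FOREARM * math.cos(shoulder + elbow)
    y = UPPER_ARM * math.sin(shoulder) + FOREARM * math.sin(shoulder + elbow)
    return x, y

def movement_vector(target, hand):
    """Stage 1: target location minus initial hand position."""
    return target[0] - hand[0], target[1] - hand[1]

def jacobian(shoulder, elbow):
    """Partial derivatives of hand position with respect to the joint angles."""
    s1, c1 = math.sin(shoulder), math.cos(shoulder)
    s12, c12 = math.sin(shoulder + elbow), math.cos(shoulder + elbow)
    return ((-UPPER_ARM * s1 - FOREARM * s12, -FOREARM * s12),
            (UPPER_ARM * c1 + FOREARM * c12, FOREARM * c12))

def joint_velocities(shoulder, elbow, velocity):
    """Stage 2: convert a hand velocity into joint velocities by inverting J."""
    (a, b), (c, d) = jacobian(shoulder, elbow)
    det = a * d - b * c  # zero only when the arm is fully extended or folded
    vx, vy = velocity
    return (d * vx - b * vy) / det, (-c * vx + a * vy) / det

# Both stages depend on the initial arm configuration.
shoulder, elbow = math.radians(45), math.radians(90)
hand = hand_position(shoulder, elbow)
target = (hand[0] + 0.10, hand[1] + 0.05)
vector = movement_vector(target, hand)
# Take the initial hand velocity to point along the movement vector.
dq = joint_velocities(shoulder, elbow, vector)
```

The point of the sketch is that an estimate of the initial arm state enters twice: once as a hand position in the space of the target, and once as a joint configuration in the inverse model.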

When planning a reaching movement, humans can often both see and feel the location of their hand. The ML model of sensory integration would seem to predict that the same weighting of vision and proprioception should be used for both of the computations illustrated in Figure 1. However, we have previously shown that when reaching to visual targets, the relative weighting of these signals was quite different for the two computations: movement vector planning relied almost entirely on vision of the hand, and the inverse model evaluation relied more strongly on proprioception (Sober and Sabes, 2003). We hypothesized that the difference was due to the nature of the computations. Movement vector planning requires comparing the visual target location to the initial hand position. Since proprioceptive signals would first have to be transformed, this computation favors vision. Conversely, evaluation of the inverse model deals with intrinsic properties of the arm, favoring proprioception. Indeed, when subjects are asked to reach to a proprioceptive target (their other hand), the weighting of vision is significantly reduced in the movement vector calculation (Sober and Sabes, 2005). We hypothesized that these results are consistent with “local” ML integration, performed separately for each computation, if sensory transformations inject variability into the transformed signal.

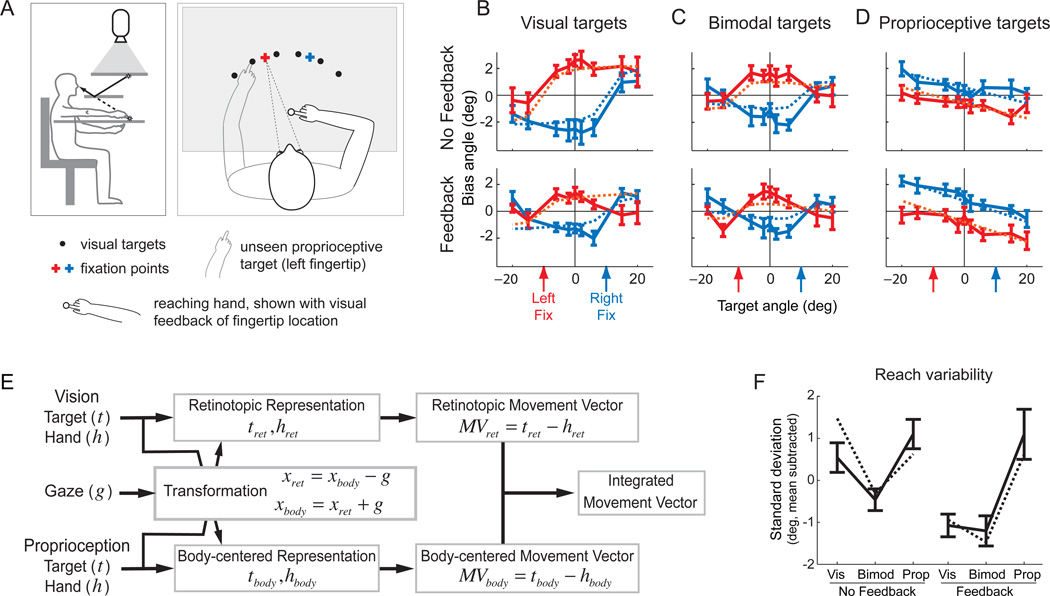

In order to make this hypothesis quantitative, we must understand the role of sensory transformations during reach planning and their statistical properties. We developed and tested a model for these transformations by studying patterns of reach errors (McGuire and Sabes, 2009). Subjects made a series of interleaved reaches to visual targets, proprioceptive targets (the other hand, unseen), or bimodal targets (the other hand, visible), as illustrated in Figure 2A. These reaches were made either with or without visual feedback of the hand prior to reach onset, and in particular during an enforced delay period after target presentation (after movement onset, feedback was extinguished in all trials). We took advantage of a bias in reaching that naturally occurs when subjects fixate a location distinct from the reach target. In particular, when subjects reach to a visual target in the peripheral visual field, reaches tend to be biased further from the fixation point (Bock, 1993; Enright, 1995). This pattern of reach errors is illustrated in the left-hand panels of Figure 2B: when reaching left of the fixation point a leftward bias is observed, and similarly for the right. Thus, these errors follow a retinotopic pattern, i.e. the bias curves shift with the fixation point. The bias pattern changes, but remains retinotopic, when reaching to bimodal targets (Figure 2C) or proprioceptive targets (Figure 2D). Most notably, the sign of the bias switches for proprioceptive reaches: subjects tend to reach closer to the point of fixation. Finally, the magnitude of these errors depends on whether visual feedback of the reaching hand is available prior to movement onset (compare the top and bottom panels of Figures 2B–D; see also Beurze et al. (2007)).

Figure 2.

A. Experimental setup. Left subpanel. Subjects sat in a simple virtual reality apparatus with a mirror reflecting images presented on a rear projection screen (Simani et al., 2007). View of both arms was blocked, but artificial feedback of either arm could be given in the form of a disk of light that moved with the fingertip. The right and left arms were separated by a thin table, allowing subjects to reach to their left hand without tactile feedback. We were thus able to manipulate both the sensory modality of the target (visual, proprioceptive, or bimodal) and the presence or absence of visual feedback of the reaching hand. Right subpanel. For each target and feedback condition, reaches were made to an array of targets (displayed individually during the experiment) with the eyes fixated on one of two fixation points. B–D. Reach biases. Average reach angular errors are plotted as a function of target and fixation location, separately for each trial condition (target modality and presence of visual feedback). Target modalities were randomly interleaved across two sessions, one with visual feedback and one without. Solid lines: average reach errors (with standard errors) across eight subjects for each trial condition. Dashed lines: model fits to the data. The color of the line indicates the gaze location. E. Schematic of the Bayesian parallel representations model of reach planning. See text for details. F. Reach variability. Average variability of reach angle plotted for each trial condition. Solid lines: average standard deviation of reach error across subjects for each trial condition. Dashed lines: model predictions. Adapted from McGuire and Sabes (2009).

While these bias patterns might seem arbitrary, they suggest an underlying mechanism. First, the difference in the sign of errors for visual and proprioceptive targets suggests that the bias arises in the transformation from a retinotopic (or eye-centered) representation to a body-centered representation. To see why, consider that in its simplified one-dimensional form, the transformation requires only adding or subtracting the gaze location (see the box labeled “Transformation” in Figure 2E). This might appear to be a trivial computation. However, the internal estimate of gaze location is itself an uncertain quantity. We argued that this estimate relies on current sensory signals (proprioception or efference copy) as well as an internal prior that “expects” gaze to be coincident with the target. Thus, the estimate of gaze would be biased toward a retinally peripheral target. Since visual and proprioceptive information about target location travels in different directions through this transformation, a biased estimate of gaze location results in oppositely signed errors for the two signals, as observed in Figure 2B,D. Furthermore, because the internal estimate of gaze location is uncertain, the transformation adds variability to the signal (see also Schlicht and Schrater, 2007), even if the addition or subtraction operation itself can be performed without error (not necessarily the case for neural computations, Shadlen and Newsome, 1994). One consequence of this variability is that access to visual feedback of the hand would improve the reliability of an eye-centered representation (upper pathway in Figure 2E) more than it would improve the reliability of a body-centered representation (lower pathway in Figure 2E), since the latter receives a transformed, and thus more variable, version of the signal. Therefore, if the final movement plan were constructed from the optimal combination of an eye-centered and body-centered plan (rightmost box in Figure 2E), the presence of visual feedback of the reaching hand should favor the eye-centered representation. This logic explains why the visual feedback of the reaching hand decreases the magnitude of the bias for visual targets (when the eye-centered space is unbiased; Figure 2B) but increases the magnitude of the bias for proprioceptive targets (when the eye-centered space is biased; Figure 2D).

Together, these ideas form the Bayesian integration model of reach planning with ‘parallel representations’, illustrated in Figure 2E. In this model, all sensory inputs related to a given spatial variable are combined with weights inversely proportional to their local variability (Equation 1), and a movement vector is then computed. This computation occurs simultaneously in an eye-centered and a body-centered representation. The two resultant movement vectors have different uncertainties, depending on the availability and reliability of the sensory signals they receive in a given experimental condition. The final output of the network is itself a weighted sum of these two representations. We fit the four free parameters of the model (corresponding to values of sensory variability) to the reach error data shown in solid lines in Figure 2B–D. The model captures those error patterns (dashed lines in Figure 2B–D), and predicts the error patterns from two similar studies described above (Beurze et al., 2007; Sober and Sabes, 2005). In addition, the model predicts the differences we observed in reach variability across experimental conditions (Figure 2F).
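The weighting logic of the parallel representations model can be illustrated with a one-dimensional sketch for a visual target. This is not the fitted model from the study: the variances are hypothetical, vision of the target and of the hand are assumed equally reliable, and correlations between the two representations (which share sensory signals) are ignored for simplicity.

```python
def combine(*variances):
    """Variance after inverse-variance integration of independent signals."""
    return 1.0 / sum(1.0 / v for v in variances)

def eye_centered_weight(var_vision, var_proprio, var_transform, hand_visible):
    """Weight given to the eye-centered movement vector for a visual target."""
    # A signal that crosses the gaze-dependent transformation gains var_transform.
    if hand_visible:
        hand_eye = combine(var_vision, var_proprio + var_transform)
        hand_body = combine(var_proprio, var_vision + var_transform)
    else:
        hand_eye = var_proprio + var_transform
        hand_body = var_proprio
    target_eye = var_vision
    target_body = var_vision + var_transform
    # Movement vector = target - hand, so the variances add in each representation.
    mv_eye = target_eye + hand_eye
    mv_body = target_body + hand_body
    # The final plan weights each representation by its inverse variance.
    return (1.0 / mv_eye) / (1.0 / mv_eye + 1.0 / mv_body)

# Hypothetical variances: vision 1.0, proprioception 4.0, transformation 2.0.
w_without = eye_centered_weight(1.0, 4.0, 2.0, hand_visible=False)
w_with = eye_centered_weight(1.0, 4.0, 2.0, hand_visible=True)
```

With these values the eye-centered weight rises from 0.5 without visual feedback to about 0.72 with it, in line with the argument that visual feedback of the hand favors the eye-centered representation.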

These results challenge the idea that movement planning should begin by mapping the relevant sensory signals into a single common reference frame (Batista et al., 1999; Buneo et al., 2002; Cohen et al., 2002). The model shows that the use of two parallel representations of the movement plan yields a less variable output in the face of variable and sometimes missing sensory signals and noisy internal transformations. It is not clear whether or how this model can be mapped onto the real neural circuits that underlie reach planning. For example, the two parallel representations could be implemented by a single neuronal population (Pouget et al., 2002; Xing and Andersen, 2000; Zipser and Andersen, 1988). Before addressing this issue, though, we consider the question of how single neurons or populations of neurons should integrate their afferent signals.

Modeling sensory integration in neural populations

Stein and colleagues have studied multimodal responses in single neurons in the deep layers of cat superior colliculus, and have found both enhancement and suppression of multimodal responses (Meredith and Stein, 1983; Meredith and Stein, 1986; Stein and Stanford, 2008). Based on this work, they suggest that the definition of sensory integration at the level of the single unit is for the responses to be significantly enhanced or suppressed relative to the preferred unimodal stimulus (Stein et al., 2009). However, this definition is overly broad, and includes computations that are not typically thought of as integration. For example, Kadunce et al. (1997) showed that cross-modal suppressive effects in the superior colliculus often mimic those observed for paired within-modality stimuli. These effects are most likely due not to integration, but rather to competition within the spatial map of the superior colliculus, similar to the process seen during saccade planning in primate superior colliculus (Dorris et al., 2007; Trappenberg et al., 2001).

The criterion for signal integration should be the presence of a shared representation that offers improved performance (e.g., reduced variability) when multimodal inputs are available. Here, a “shared” representation is one that encodes all sensory inputs similarly. Using the notation of Equation 1, the strongest form of a shared representation is one in which neural activity is a function only of $\hat{x}_{integ}$ and $\sigma_{integ}^2$, rather than being a function of the independent inputs, $\hat{x}_1$, $\sigma_1^2$ and $\hat{x}_2$, $\sigma_2^2$. The advantage of such a representation is that downstream areas need not know which sensory signals were available in order to use the information.

Ma et al. (2006) suggest a relatively simple approach to achieving such an integrated representation. They show that a population of neurons that simply adds the firing rates of independent input populations (or their linear transformations) effectively implements ML integration, at least when firing rates have Poisson-like distributions. This result can be understood intuitively for Poisson firing rates. The variance of the ML decode from each population is inversely proportional to its gain. Therefore, summing the inputs yields a representation with the summed gains, and thus with variance that matches the optimum defined in Equation 1 above. Furthermore, because addition preserves information about variability, this operation can be repeated hierarchically, a desirable feature for building more complex circuits like those required for sensory-guided movement.
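The intuition for Poisson firing can be checked numerically. The sketch below is a simplified illustration, not the network of Ma et al. (2006): it assumes independent Poisson neurons, two input populations with identical Gaussian tuning that differ only in gain, and it approximates the variance of the ML decode by the inverse Fisher information. All parameter values and names are hypothetical.

```python
import math

CENTERS = [float(c) for c in range(-60, 61, 5)]  # hypothetical preferred locations

def fisher_information(x, gain, width=10.0):
    """Fisher information of independent Poisson neurons: sum of f'(x)^2 / f(x)."""
    total = 0.0
    for center in CENTERS:
        rate = gain * math.exp(-0.5 * ((x - center) / width) ** 2)
        slope = -rate * (x - center) / width ** 2
        total += slope ** 2 / rate
    return total

x = 3.0                       # stimulus location
gain_1, gain_2 = 20.0, 5.0    # gains of the two input populations

var_1 = 1.0 / fisher_information(x, gain_1)
var_2 = 1.0 / fisher_information(x, gain_2)

# Adding the two populations neuron by neuron gives Poisson rates with summed gain.
var_sum = 1.0 / fisher_information(x, gain_1 + gain_2)

# Optimum predicted by Equation 1 from the two unimodal variances.
var_optimal = 1.0 / (1.0 / var_1 + 1.0 / var_2)
```

Under these assumptions `var_sum` equals `var_optimal` up to floating-point error, because the Fisher information scales linearly with gain.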

It remains unknown whether real neural circuits employ such a strategy, or even if they combine their inputs in a statistically optimal manner. In practice, it can be difficult to quantitatively test the predictions of this and similar models. For example, strict additivity of the inputs is not to be expected in many situations, such as in the presence of inputs that are correlated or non-Poisson, or if activity levels are normalized within a given brain area (Ma et al., 2006; Ma and Pouget, 2008). These difficulties are compounded for recordings from single neurons. In this case, biases in the unimodal representations of space would lead to changes in firing rates across modalities even in the absence of integration-related changes in gain.

Nonetheless, several hallmarks of optimal integration have been observed in the responses of bimodal (visual and vestibular) motion encoding neurons in macaque area MST: bimodal activity is well-modeled as a weighted linear sum of unimodal responses; the visual weighting decreases when the visual stimulus is degraded; and the variability of the decoding improves in the bimodal condition (Morgan et al., 2008). Similarly, neurons in macaque Area 5 appear to integrate proprioceptive and visual cues of arm location (Graziano et al., 2000). In particular, the activity for a mismatched bimodal stimulus is between that observed for location-matched unimodal stimuli (weighting), and the activity for matched bimodal stimuli is greater than that observed for proprioception alone (variance reduction).

Sensory integration in the cortical circuits for reaching

In the context of the neural circuits for reach planning, locally optimal integration of incoming signals could be sufficient to explain behavior, as illustrated in the parallel representations model of Figure 2. Here we ask whether the principles in this model can be mapped onto the primate cortical reach network.

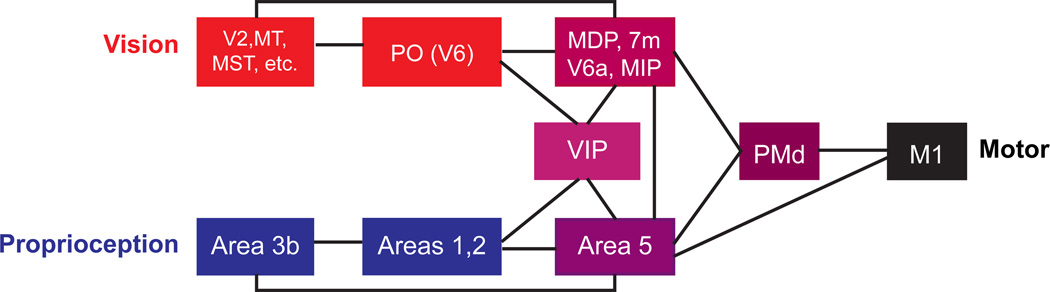

Volitional arm movements in primates involve a large network of brain areas with a rich pattern of inter-area connectivity. Within this larger circuit, there is a subnetwork of areas, illustrated in Figure 3, that appear to be responsible for the complex sensorimotor transformations required for goal-directed reaches under multisensory guidance. Visual information primarily enters this network via the parietal-occipital area, particularly area V6 (Galletti et al., 1996; Shipp et al., 1998). Proprioceptive information primarily enters via Area 5, which receives direct projections from primary somatosensory cortex (Crammond and Kalaska, 1989; Kalaska et al., 1983; Pearson and Powell, 1985). These visual and proprioceptive signals converge on a group of parietal sensorimotor areas in or near the intraparietal sulcus (IPS): MDP and 7m (Ferraina et al., 1997a; Ferraina et al., 1997b; Johnson et al., 1996), V6a (Galletti et al., 2001; Shipp et al., 1998), and MIP and VIP (Colby et al., 1993; Duhamel et al., 1998). The parietal reach region (PRR), characterized physiologically by Andersen and colleagues (Batista et al., 1999; Snyder et al., 1997), includes portions of MIP, V6a, and MDP (Snyder et al., 2000a). These parietal areas project forward to the dorsal premotor cortex (PMd) and, in some cases, the primary motor cortex (M1), and they all exhibit some degree of activity related to visual and proprioceptive movement cues, the pending movement plan (“set” or “delay” activity), and the ongoing movement kinematics or dynamics.

Figure 3.

Schematic illustration of the cortical reach circuit.

While the network illustrated in Figure 3 is clearly more complex than the simple computational schematic of Figure 2E, there is a suggestive parallel. While both Area 5 and MIP integrate multimodal signals and project extensively to the rest of the reach circuit, they differ in their anatomical proximity to their visual versus proprioceptive inputs: Area 5 is closer to somatosensory cortex, and MIP is closer to the visual inputs to the reach circuit. Furthermore, Area 5 uses more body- or hand-centered representations compared to the eye-centered representations reported in MIP (Batista et al., 1999; Buneo et al., 2002; Chang and Snyder, 2010; Colby and Duhamel, 1996; Ferraina et al., 2009; Kalaska, 1996; Lacquaniti et al., 1995; Marconi et al., 2001; Scott et al., 1997). Thus, these areas are potential candidates for the parallel representations predicted in the behavioral model.

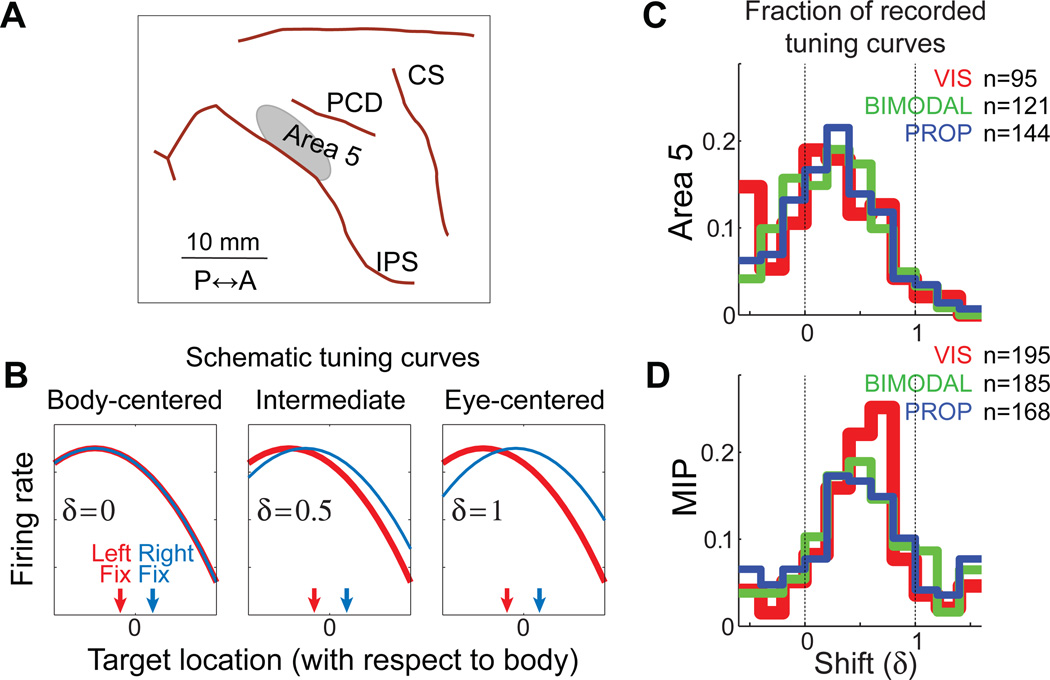

To test this possibility, we recorded from Area 5 and MIP (Figure 4A) as macaque monkeys performed the same psychophysical task that was illustrated in Figure 2A for human subjects (McGuire and Sabes, 2011). One of the questions we addressed in this study is whether there is evidence for parallel representations of the movement plan in body- and eye-centered reference frames. We performed several different analyses to characterize neural reference frames; here we focus on the tuning-curve approach illustrated in Figure 4B. Briefly, we fit a tuning curve to the neural responses for a range of targets with two different fixation points (illustrated schematically as the red and blue curves in Figure 4B). Tuning was assumed to be a function of T – δE, where T and E are the target and eye locations in absolute (or body-centered) space and δ is a dimensionless quantity. If δ = 0, firing rate depends on the body-centered location of the target (left panel of Figure 4B), and if δ = 1, firing rate depends on the eye-centered location of the target (right panel of Figure 4B).
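The role of the shift parameter can be illustrated with a small sketch. It is not the fitting procedure used in the study: the Gaussian tuning shape, its parameters, the noiseless synthetic responses, and the grid search over δ are all assumptions made for illustration, and the tuning-curve parameters are treated as known rather than fitted.

```python
import math

def firing_rate(target, eye, delta, preferred=0.0, width=15.0, peak=20.0):
    """Idealized tuning curve that depends on T - delta * E."""
    u = target - delta * eye
    return peak * math.exp(-0.5 * ((u - preferred) / width) ** 2)

def estimate_shift(targets, eyes, rates):
    """Grid search for the shift that minimizes squared error (steps of 0.01)."""
    best_delta, best_error = None, float("inf")
    for k in range(-50, 151):
        delta = k / 100.0
        error = sum((firing_rate(t, e, delta) - r) ** 2
                    for t, e, r in zip(targets, eyes, rates))
        if error < best_error:
            best_delta, best_error = delta, error
    return best_delta

# Synthetic responses of a hypothetical cell with an intermediate shift of 0.5,
# sampled at nine target locations for each of two fixation points.
true_delta = 0.5
targets, eyes, rates = [], [], []
for eye in (-10.0, 10.0):
    for target in range(-40, 41, 10):
        targets.append(float(target))
        eyes.append(eye)
        rates.append(firing_rate(float(target), eye, true_delta))

estimated_delta = estimate_shift(targets, eyes, rates)
```

For δ = 0 the two tuning curves coincide in body-centered coordinates, for δ = 1 they are displaced by the full difference in fixation, and intermediate values produce partial displacement.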

Figure 4.

A: Recording locations. Approximate location of neural recordings in Area 5 with respect to sulcal anatomy. Recordings in MIP were located in the same region of cortex, but at a deeper penetration depth. The boundary between Area 5 and MIP was set to a nominal value of 2000 µm below the dura. This value was chosen based on anatomical MR images and the stereotactic coordinates of the recording cylinder. B: Schematic illustration of the tuning curve shift, δ. Each curve represents the firing rate for an idealized cell as a function of target for either the left (red) or right (blue) fixation points. Three idealized cells are illustrated, with shift values of δ = 0, 0.5, and 1. See text for more details. C,D: Distribution of shift values estimated from neural recordings in Area 5 (C) and MIP (D). Each cell may be included up to three times, once for the delay, reaction time, and movement epoch tuning curves. The number of tuning curves varies across modalities because tuning curves were only included when the confidence limit on the best-fit value of δ had a range of less than 1.5, a conservative value that excluded untuned cells. Adapted from McGuire and Sabes (2011).

We found that there is no difference in the mean or distribution of shift values across target modality for either cortical area (Figure 4C,D), i.e. these are shared (modality-invariant) representations. Although some evidence for other shared movement-related representations has been found in the parietal cortex (Cohen et al., 2002), many studies of multisensory areas in the parietal cortex and elsewhere have found that representations are determined, at least in part, by the representation of the current sensory input (Avillac et al., 2005; Fetsch et al., 2007; Jay and Sparks, 1987; Mullette-Gillman et al., 2005; Stricanne et al., 1996). Shared representations such as these have the advantage that downstream areas do not need to know which sensory signals are available in order to use the representation.

We also observed significant differences in the mean and distribution of shift values across cortical areas, with MIP exhibiting a more eye-centered representation (mean δ = 0.51), while Area 5 has a more body-centered representation (mean δ = 0.25). In a separate analysis, we showed that more MIP cells encode target location alone, compared to Area 5, where more cells encode both target and hand location (McGuire and Sabes, 2011). These inter-area differences parallel observations from Andersen and colleagues of eye-centered target coding in PRR (Batista et al., 1999) and eye-centered movement vector representation for Area 5 (Buneo et al., 2002). However, where those papers report consistent, eye-centered reference frames, we observed a great deal of heterogeneity in representations within each area, with most cells exhibiting “intermediate” shifts between 0 and 1. We think this discrepancy lies primarily in the analyses used: the shift analysis does not force a choice between alternative reference frames, but rather allows for a continuum of intermediate reference frames. When an approach very similar to ours was applied to recordings from a more posterior region of the IPS, a similar spread of shift values was obtained, although the mean shift value was somewhat closer to unity (Chang and Snyder, 2010).

While we did not find the simple eye- and body-centered representations that were built into the parallel representations model of Figure 2E, the results can nonetheless be interpreted in light of that model. We found that both Area 5 and MIP use modality-invariant representations of the movement plan, an important feature of the model. Furthermore, there are multiple integrated representations of the movement plan within the superior parietal lobe, with an anterior to posterior gradient in the magnitude of gaze-dependent shifts (Chang and Snyder, 2010; McGuire and Sabes, 2011). A statistically optimal combination of these representations, dynamically changing with the current sensory inputs, would likely provide a close match to the output of the model. The physiological recordings also revealed a great deal of heterogeneity in shift values, suggesting an alternate implementation of the model. Xing and Andersen (2000) have observed that a network with a broad distribution of reference frame shifts can be used to compute multiple simultaneous readouts, each in a different reference frame. Indeed, a broad distribution of gaze-dependent tuning shifts has been observed within many parietal areas (Avillac et al., 2005; Chang and Snyder, 2010; Duhamel et al., 1998; Mullette-Gillman et al., 2005; Stricanne et al., 1996). Thus, parallel representations of movement planning could also be implemented within a single heterogeneous population of neurons.

From local to global optimality

We have adopted a simple definition of sensory integration, namely, improved performance when multiple sensory modalities are available – whether in a behavioral task or with respect to the variability of neural representations. This definition leads naturally to criteria for optimal integration such as the minimum variance/ML model of Equation 1, and a candidate mechanism for achieving such optimality was discussed above (Ma et al., 2006). In the context of a complex sensorimotor circuit, a mechanism such as this could be applied at the local level to integrate the afferent signals at each cortical area, independently across areas. However, these afferent signals will include the effects of the specific combination of upstream transformations, and so such a model would only appear to be optimal at the local level. It remains an open question as to how locally optimal (or near-optimal) integration could lead to globally optimal (or near-optimal) behavior.

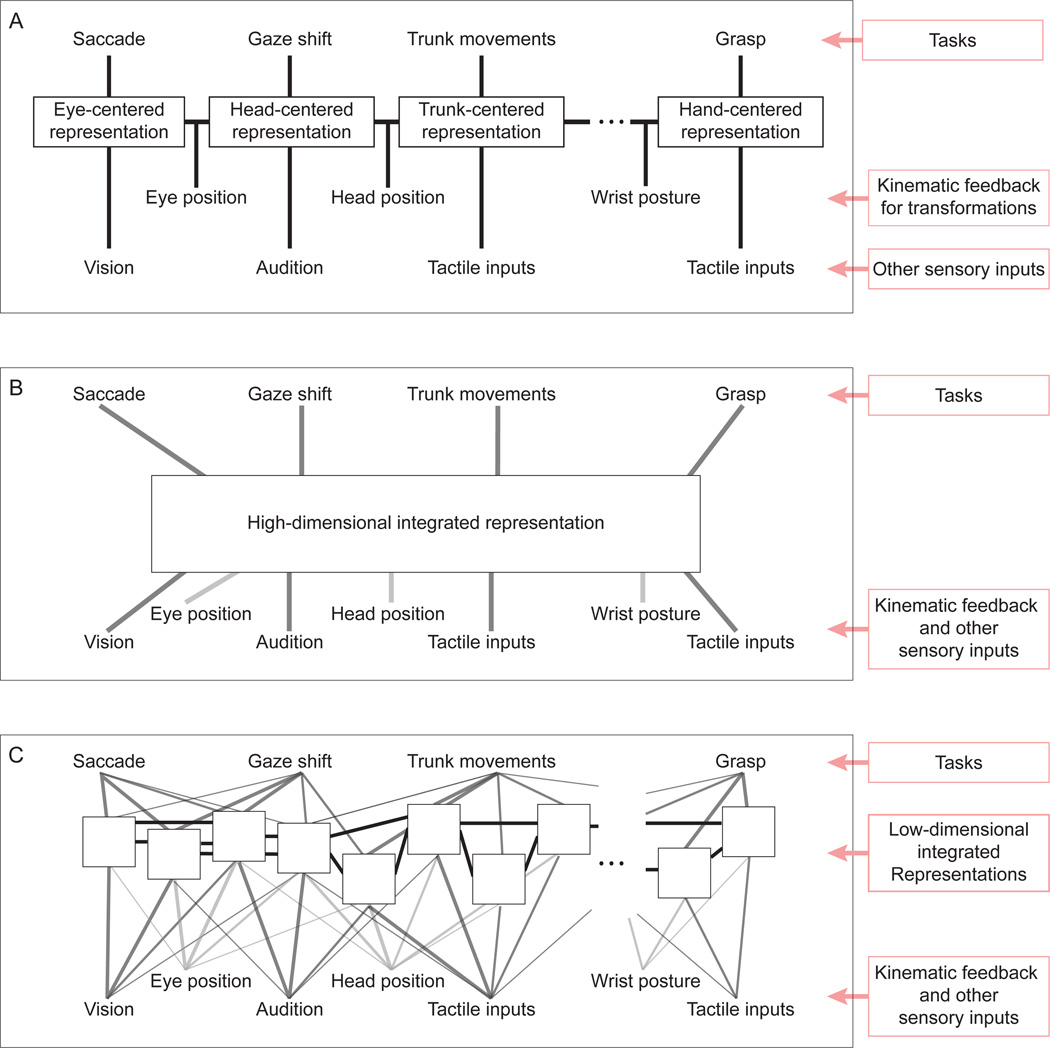

The parietal network that underlies reaching is part of a larger region along the IPS that subserves a wide range of sensorimotor tasks (reviewed, e.g., in Andersen and Buneo, 2002; Burnod et al., 1999; Colby and Goldberg, 1999; Grefkes and Fink, 2005; Rizzolatti et al., 1997). These tasks make use of many sensory inputs, each naturally linked to a particular reference frame (e.g. visual signals originate in a retinotopic reference frame), as well as an array of kinematic feedback signals needed to transform from one reference frame to another. In this context, it seems logical to suggest a series of representations and transformations, e.g., from eye-centered to hand-centered space, as illustrated in Figure 5A. This schema offers a great degree of flexibility, since the “right” representation would be available for any given task. An attractive hypothesis is that a schema such as this could be mapped onto the series of sensorimotor representations that lie along the IPS, e.g., from the retinotopic visual maps in area V6 (Fattori et al., 2009; Galletti et al., 1996) to the hand-centered grasp-related activity in AIP.

Figure 5. Three schematic models of the parietal representations of sensorimotor space.

A: A sequence of transformations that follows the kinematics of the body. Each behavior uses the representation that most closely matches the space of the task. B: A single high-dimensional representation that integrates all of the relevant sensorimotor variables and subserves the downstream computations for all tasks. C: A large collection of low-dimensional integrated representations with overlapping sensory inputs and a high degree of interconnectivity. Each white box represents a different representation of sensorimotor space. The nature of these representations is determined by their inputs, and their statistical properties (e.g. variability, gain) will depend on the sensory signals available at the time. The computations performed for any given task make use of several of these representations, with the relative weighting dynamically determined by their statistical properties.

The pure reference frame representations illustrated in the schema of Figure 5A are not consistent with the evidence for heterogeneous “intermediate” representations. However, the general schema of a sequence of transformations and representations might still be correct, since the neural circuits implementing these transformations need not represent these variables in the reference frames of their inputs, as illustrated by several network models of reference-frame transformations (Deneve et al., 2001; Salinas and Sejnowski, 2001; Xing and Andersen, 2000; Zipser and Andersen, 1988). The use of network models such as these could reconcile the schema of Figure 5A with the physiological data (Pouget et al., 2002; Salinas and Sejnowski, 2001).

While this schema is conceptually attractive, it has disadvantages. As described above, each transformation will inject variability into the circuit. This variability would accrue along the sequence of transformations, a problem that could potentially be avoided by “direct” sensorimotor transformations such as those proposed by Buneo et al. (2002). Furthermore, in order not to lose fidelity along this sequence, all intermediate representations require comparably sized neuronal populations, even representations that are rarely directly used for behavior. Ideally, one would be able to allocate more resources to a retinotopic representation, for example, than an elbow-centered representation.

An alternative schema is to combine many sensory signals into each of a small number of representations; in the limit, a single complex representational network could be used (Figure 5B). It has been shown that multiple reference frames can be read out from a single network of neurons when those neurons use “gain-field” representations, i.e. when their responses are multiplicative in the various input signals (Salinas and Abbott, 1995; Salinas and Sejnowski, 2001; Xing and Andersen, 2000). More generally, non-linear basis functions create general-purpose representations that can be used to compute (at least approximately) a wide range of task-relevant variables (Pouget and Sejnowski, 1997; Pouget and Snyder, 2000). In particular, this approach would allow “direct” transformations from sensory to motor variables (Buneo et al., 2002) without the need for intervening sequences of transformations. However, this schema also has limitations. In order to represent all possible combinations of variables, the number of required neurons increases exponentially with the number of input variables (the “curse of dimensionality”). Indeed, computational models of such generic networks show a rapid increase in errors as the number of input variables grows (Salinas and Abbott, 1995). This limitation becomes prohibitive when the number of sensorimotor variables approaches a realistic value.
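The exponential growth described above can be illustrated with a small counting sketch. It assumes, hypothetically, that each input variable is covered by a fixed number of tuning curves and that the network needs one unit per combination of tuning curves. The function name `units_for_full_tiling` and the default of 10 units per variable are illustrative choices, not values taken from the cited models.

```python
def units_for_full_tiling(n_inputs: int, units_per_dim: int = 10) -> int:
    """Count the units needed to tile every combination of n_inputs variables.

    Assumption (illustrative only): each variable is covered by
    `units_per_dim` tuning curves, and the network holds one unit for
    each combination of tuning curves across all variables.
    """
    if n_inputs < 0 or units_per_dim < 1:
        raise ValueError("n_inputs must be >= 0 and units_per_dim >= 1")
    # One unit per point on a regular grid with units_per_dim points per axis.
    return units_per_dim ** n_inputs


if __name__ == "__main__":
    # The count grows by a factor of units_per_dim for each added variable.
    for n in (1, 2, 4, 8):
        print(n, units_for_full_tiling(n))
```

With these assumed numbers, every additional input variable multiplies the required population by ten, which is the sense in which a single all-purpose representation becomes impractical.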

A solution to this problem, illustrated in Figure 5C, is to have a large number of networks, each with only a few inputs or encoding only a small subspace of the possible outputs. These representations would likely have complex or “intermediate” representations of sensorimotor space that would not directly map either to particular stages in the kinematic sequence (5A) or to the “right” reference frames for a set of tasks. Instead, the downstream circuits for behavior would draw upon several of these representations. This schema is consistent with the large continuum of representations seen along the IPS (reviewed, for example, in Burnod et al., 1999), and the fact that the anatomical distinctions between nominal cortical areas in this region are unclear and remain a matter of debate (Cavada, 2001; Lewis and Van Essen, 2000). It is also consistent with the fact that there is a great deal of overlap in the pattern of cortical areas that are active during any given task, e.g. saccade and reach activity have been observed in overlapping cortical areas (Snyder et al., 1997, 2000b) and grasp-related activity can be observed in nominally reach-related areas (Fattori et al., 2009). This suggests that brain areas around the IPS should not be thought of as a set of task-specific domains (e.g., Andersen and Buneo, 2002; Colby and Goldberg, 1999; Grefkes and Fink, 2005), but rather as a palette of complex, sensorimotor representations.

This schema suggests a mechanism by which locally optimal integration could yield globally optimal behavior, essentially a generalization of the parallel representations model of Figure 4D. In both the parallel representations model and the schema of Figure 5C, downstream motor circuits integrate overlapping information from multiple sensorimotor representations of space. For any specific instance of a behavior, the weighting of these representations should depend on their relative variability, perhaps determined by gain (Ma et al., 2006), and this variability would depend on the sensory and motor signals available at that time. If each of the representations in this palette contains a locally optimal mixture of its input signals, optimal weighting of the downstream projections from this palette could drive statistically efficient behavior.
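The downstream weighting described above can be sketched with the standard inverse-variance (minimum-variance) rule for combining estimates. This is a simplified illustration, not the circuit proposed in the text: it assumes each representation supplies a scalar estimate with a known variance and that the noise is independent across representations, an assumption that the overlapping inputs of Figure 5C would violate. The function name `combine_estimates` is hypothetical.

```python
from typing import List, Sequence, Tuple


def combine_estimates(
    estimates: Sequence[float], variances: Sequence[float]
) -> Tuple[float, float, List[float]]:
    """Combine scalar estimates by inverse-variance weighting.

    Assumptions (illustrative only): the estimates are unbiased and their
    noise is independent across representations.
    Returns (combined estimate, combined variance, weights).
    """
    if len(estimates) != len(variances) or len(estimates) == 0:
        raise ValueError("need equally many estimates and variances")
    if any(v <= 0 for v in variances):
        raise ValueError("variances must be positive")

    # Precision (inverse variance) of each representation.
    precisions = [1.0 / v for v in variances]
    total_precision = sum(precisions)

    # Each weight is that representation's share of the total precision,
    # so less variable representations contribute more.
    weights = [p / total_precision for p in precisions]
    combined = sum(w * e for w, e in zip(weights, estimates))

    # Under independence, precisions add, so the combined variance is
    # never larger than the smallest input variance.
    return combined, 1.0 / total_precision, weights


if __name__ == "__main__":
    # Two hypothetical representations of the same variable.
    print(combine_estimates([10.0, 20.0], [1.0, 4.0]))
```

In this sketch, changing the variances (for example, when a sensory signal becomes unavailable or less reliable) shifts the weights without any change to the representations themselves, which is the flexibility the schema relies on.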

Acknowledgements

This work was supported by the National Eye Institute (R01 EY-015679) and the National Institute of Mental Health (P50 MH77970). I thank John Kalaska, Joseph Makin, and Matthew Fellows for reading and commenting on earlier drafts of this manuscript.

Abbreviations

- ML: Maximum likelihood
- MST: Medial superior temporal area
- MDP: Medial dorsal parietal area
- MIP: Medial intraparietal area
- VIP: Ventral intraparietal area
- PMd: Dorsal premotor cortex
- M1: Primary motor cortex
- PRR: Parietal reach region
- IPS: Intraparietal sulcus

References

- Andersen RA, Buneo CA. Intentional maps in posterior parietal cortex. Annu Rev Neurosci. 2002:189–220. doi: 10.1146/annurev.neuro.25.112701.142922.

- Avillac M, Deneve S, Olivier E, Pouget A, Duhamel JR. Reference frames for representing visual and tactile locations in parietal cortex. Nat Neurosci. 2005;8:941–949. doi: 10.1038/nn1480.

- Batista AP, Buneo CA, Snyder LH, Andersen RA. Reach plans in eye-centered coordinates. Science. 1999;285:257–260. doi: 10.1126/science.285.5425.257.

- Beurze SM, de Lange FP, Toni I, Medendorp WP. Integration of target and effector information in the human brain during reach planning. J Neurophysiol. 2007;97:188–199. doi: 10.1152/jn.00456.2006.

- Bock O. Localization of objects in the peripheral visual field. Behav Brain Res. 1993;56:77–84. doi: 10.1016/0166-4328(93)90023-j.

- Buneo CA, Jarvis MR, Batista AP, Andersen RA. Direct visuomotor transformations for reaching. Nature. 2002:632–636. doi: 10.1038/416632a.

- Burnod Y, Baraduc P, Battaglia-Mayer A, Guigon E, Koechlin E, Ferraina S, Lacquaniti F, Caminiti R. Parieto-frontal coding of reaching: an integrated framework. Exp Brain Res. 1999:325–346. doi: 10.1007/s002210050902.

- Cavada C. The visual parietal areas in the macaque monkey: current structural knowledge and ignorance. Neuroimage. 2001:S21–S26. doi: 10.1006/nimg.2001.0818.

- Chang SW, Snyder LH. Idiosyncratic and systematic aspects of spatial representations in the macaque parietal cortex. Proc Natl Acad Sci U S A. 2010. doi: 10.1073/pnas.0913209107.

- Cohen YE, Batista AP, Andersen RA. Comparison of neural activity preceding reaches to auditory and visual stimuli in the parietal reach region. Neuroreport. 2002;13:891–894. doi: 10.1097/00001756-200205070-00031.

- Colby CL, Duhamel JR. Spatial representations for action in parietal cortex. Brain Res Cogn Brain Res. 1996;5:105–115. doi: 10.1016/s0926-6410(96)00046-8.

- Colby CL, Duhamel JR, Goldberg ME. Ventral intraparietal area of the macaque: anatomic location and visual response properties. J Neurophysiol. 1993;69:902–914. doi: 10.1152/jn.1993.69.3.902.

- Colby CL, Goldberg ME. Space and attention in parietal cortex. Annu Rev Neurosci. 1999:319–349. doi: 10.1146/annurev.neuro.22.1.319.

- Crammond DJ, Kalaska JF. Neuronal activity in primate parietal cortex area 5 varies with intended movement direction during an instructed-delay period. Exp Brain Res. 1989:458–462. doi: 10.1007/BF00247902.

- Deneve S, Latham PE, Pouget A. Efficient computation and cue integration with noisy population codes. Nat Neurosci. 2001;4:826–831. doi: 10.1038/90541.

- Dorris MC, Olivier E, Munoz DP. Competitive integration of visual and preparatory signals in the superior colliculus during saccadic programming. J Neurosci. 2007;27:5053–5062. doi: 10.1523/JNEUROSCI.4212-06.2007.

- Duhamel JR, Colby CL, Goldberg ME. Ventral intraparietal area of the macaque: congruent visual and somatic response properties. J Neurophysiol. 1998;79:126–136. doi: 10.1152/jn.1998.79.1.126.

- Enright JT. The non-visual impact of eye orientation on eye-hand coordination. Vision Res. 1995;35:1611–1618. doi: 10.1016/0042-6989(94)00260-s.

- Ernst MO, Banks MS. Humans integrate visual and haptic information in a statistically optimal fashion. Nature. 2002;415:429–433. doi: 10.1038/415429a.

- Fattori P, Pitzalis S, Galletti C. The cortical visual area V6 in macaque and human brains. J Physiol Paris. 2009:88–97. doi: 10.1016/j.jphysparis.2009.05.012.

- Ferraina S, Brunamonti E, Giusti MA, Costa S, Genovesio A, Caminiti R. Reaching in depth: hand position dominates over binocular eye position in the rostral superior parietal lobule. J Neurosci. 2009;29:11461–11470. doi: 10.1523/JNEUROSCI.1305-09.2009.

- Ferraina S, Garasto MR, Battaglia-Mayer A, Ferraresi P, Johnson PB, Lacquaniti F, Caminiti R. Visual control of hand-reaching movement: activity in parietal area 7m. Eur J Neurosci. 1997a;9:1090–1095. doi: 10.1111/j.1460-9568.1997.tb01460.x.

- Ferraina S, Johnson PB, Garasto MR, Battaglia-Mayer A, Ercolani L, Bianchi L, Lacquaniti F, Caminiti R. Combination of hand and gaze signals during reaching: activity in parietal area 7m of the monkey. J Neurophysiol. 1997b;77:1034–1038. doi: 10.1152/jn.1997.77.2.1034.

- Fetsch CR, Wang S, Gu Y, Deangelis GC, Angelaki DE. Spatial reference frames of visual, vestibular, and multimodal heading signals in the dorsal subdivision of the medial superior temporal area. J Neurosci. 2007:700–712. doi: 10.1523/JNEUROSCI.3553-06.2007.

- Galletti C, Fattori P, Battaglini P, Shipp S, Zeki S. Functional demarcation of a border between areas V6 and V6A in the superior parietal gyrus of the macaque monkey. Eur J Neurosci. 1996:30–52. doi: 10.1111/j.1460-9568.1996.tb01165.x.

- Galletti C, Gamberini M, Kutz DF, Fattori P, Luppino G, Matelli M. The cortical connections of area V6: an occipito-parietal network processing visual information. Eur J Neurosci. 2001;13:1572–1588. doi: 10.1046/j.0953-816x.2001.01538.x.

- Ghahramani Z, Wolpert DM, Jordan MI. Computational Models of Sensorimotor Integration. In: Morasso PG, Sanguineti V, editors. Self-Organization, Computational Maps and Motor Control. Oxford: Elsevier; 1997. pp. 117–147.

- Graziano MS, Cooke DF, Taylor CS. Coding the location of the arm by sight. Science. 2000:1782–1786. doi: 10.1126/science.290.5497.1782.

- Grefkes C, Fink GR. The functional organization of the intraparietal sulcus in humans and monkeys. J Anat. 2005:3–17. doi: 10.1111/j.1469-7580.2005.00426.x.

- Jacobs RA. Optimal integration of texture and motion cues to depth. Vision Res. 1999;39:3621–3629. doi: 10.1016/s0042-6989(99)00088-7.

- Jay MF, Sparks DL. Sensorimotor integration in the primate superior colliculus. II. Coordinates of auditory signals. J Neurophysiol. 1987;57:35–55. doi: 10.1152/jn.1987.57.1.35.

- Johnson PB, Ferraina S, Bianchi L, Caminiti R. Cortical networks for visual reaching: physiological and anatomical organization of frontal and parietal lobe arm regions. Cereb Cortex. 1996;6:102–119. doi: 10.1093/cercor/6.2.102.

- Kadunce DC, Vaughan JW, Wallace MT, Benedek G, Stein BE. Mechanisms of within- and cross-modality suppression in the superior colliculus. J Neurophysiol. 1997:2834–2847. doi: 10.1152/jn.1997.78.6.2834.

- Kakei S, Hoffman DS, Strick PL. Muscle and movement representations in the primary motor cortex. Science. 1999;285:2136–2139. doi: 10.1126/science.285.5436.2136.

- Kakei S, Hoffman DS, Strick PL. Direction of action is represented in the ventral premotor cortex. Nat Neurosci. 2001;4:1020–1025. doi: 10.1038/nn726.

- Kalaska JF. Parietal cortex area 5 and visuomotor behavior. Can J Physiol Pharmacol. 1996;74:483–498.

- Kalaska JF, Caminiti R, Georgopoulos AP. Cortical mechanisms related to the direction of two-dimensional arm movements: relations in parietal area 5 and comparison with motor cortex. Exp Brain Res. 1983:247–260. doi: 10.1007/BF00237200.

- Knill DC, Saunders JA. Do humans optimally integrate stereo and texture information for judgments of surface slant? Vision Res. 2003;43:2539–2558. doi: 10.1016/s0042-6989(03)00458-9.

- Lacquaniti F, Guigon E, Bianchi L, Ferraina S, Caminiti R. Representing spatial information for limb movement: role of area 5 in the monkey. Cereb Cortex. 1995;5:391–409. doi: 10.1093/cercor/5.5.391.

- Lewis JW, Van Essen DC. Mapping of architectonic subdivisions in the macaque monkey, with emphasis on parieto-occipital cortex. J Comp Neurol. 2000:79–111. doi: 10.1002/1096-9861(20001204)428:1<79::aid-cne7>3.0.co;2-q.

- Ma WJ, Beck JM, Latham PE, Pouget A. Bayesian inference with probabilistic population codes. Nat Neurosci. 2006;9:1432–1438. doi: 10.1038/nn1790.

- Ma WJ, Pouget A. Linking neurons to behavior in multisensory perception: a computational review. Brain Res. 2008;1242:4–12. doi: 10.1016/j.brainres.2008.04.082.

- Marconi B, Genovesio A, Battaglia-Mayer A, Ferraina S, Squatrito S, Molinari M, Lacquaniti F, Caminiti R. Eye-hand coordination during reaching. I. Anatomical relationships between parietal and frontal cortex. Cereb Cortex. 2001;11:513–527. doi: 10.1093/cercor/11.6.513.

- McGuire LM, Sabes PN. Sensory transformations and the use of multiple reference frames for reach planning. Nat Neurosci. 2009;12:1056–1061. doi: 10.1038/nn.2357.

- McGuire LMM, Sabes PN. Heterogeneous representations in the superior parietal lobule are common across reaches to visual and proprioceptive targets. J Neurosci. 2011. doi: 10.1523/JNEUROSCI.2921-10.2011. In review.

- Meredith MA, Stein BE. Interactions among converging sensory inputs in the superior colliculus. Science. 1983;221:389–391. doi: 10.1126/science.6867718.

- Meredith MA, Stein BE. Visual, auditory, and somatosensory convergence on cells in superior colliculus results in multisensory integration. J Neurophysiol. 1986:640–662. doi: 10.1152/jn.1986.56.3.640.

- Morgan ML, Deangelis GC, Angelaki DE. Multisensory integration in macaque visual cortex depends on cue reliability. Neuron. 2008;59:662–673. doi: 10.1016/j.neuron.2008.06.024.

- Mullette-Gillman OA, Cohen YE, Groh JM. Eye-centered, head-centered, and complex coding of visual and auditory targets in the intraparietal sulcus. J Neurophysiol. 2005;94:2331–2352. doi: 10.1152/jn.00021.2005.

- Pearson RC, Powell TP. The projection of the primary somatic sensory cortex upon area 5 in the monkey. Brain Res. 1985;356:89–107. doi: 10.1016/0165-0173(85)90020-7.

- Pouget A, Deneve S, Duhamel JR. A computational perspective on the neural basis of multisensory spatial representations. Nat Rev Neurosci. 2002;3:741–747. doi: 10.1038/nrn914.

- Pouget A, Sejnowski TJ. Spatial transformations in the parietal cortex using basis functions. J Cogn Neurosci. 1997:222–237. doi: 10.1162/jocn.1997.9.2.222.

- Pouget A, Snyder LH. Computational approaches to sensorimotor transformations. Nat Neurosci. 2000:1192–1198. doi: 10.1038/81469.

- Rizzolatti G, Fogassi L, Gallese V. Parietal cortex: from sight to action. Curr Opin Neurobiol. 1997:562–567. doi: 10.1016/s0959-4388(97)80037-2.

- Rossetti Y, Desmurget M, Prablanc C. Vectorial coding of movement: vision, proprioception, or both? J Neurophysiol. 1995;74:457–463. doi: 10.1152/jn.1995.74.1.457.

- Salinas E, Abbott LF. Transfer of coded information from sensory to motor networks. J Neurosci. 1995:6461–6474. doi: 10.1523/JNEUROSCI.15-10-06461.1995.

- Salinas E, Sejnowski TJ. Gain modulation in the central nervous system: where behavior, neurophysiology, and computation meet. Neuroscientist. 2001;7:430–440. doi: 10.1177/107385840100700512.

- Schlicht EJ, Schrater PR. Impact of coordinate transformation uncertainty on human sensorimotor control. J Neurophysiol. 2007;97:4203–4214. doi: 10.1152/jn.00160.2007.

- Scott SH, Sergio LE, Kalaska JF. Reaching movements with similar hand paths but different arm orientations. II. Activity of individual cells in dorsal premotor cortex and parietal area 5. J Neurophysiol. 1997;78:2413–2426. doi: 10.1152/jn.1997.78.5.2413.

- Shadlen MN, Newsome WT. Noise, neural codes and cortical organization. Curr Opin Neurobiol. 1994;4:569–579. doi: 10.1016/0959-4388(94)90059-0.

- Shipp S, Blanton M, Zeki S. A visuo-somatomotor pathway through superior parietal cortex in the macaque monkey: cortical connections of areas V6 and V6A. Eur J Neurosci. 1998;10:3171–3193. doi: 10.1046/j.1460-9568.1998.00327.x.

- Simani MC, McGuire LM, Sabes PN. Visual-shift adaptation is composed of separable sensory and task-dependent effects. J Neurophysiol. 2007;98:2827–2841. doi: 10.1152/jn.00290.2007.

- Snyder LH, Batista AP, Andersen RA. Coding of intention in the posterior parietal cortex. Nature. 1997;386:167–170. doi: 10.1038/386167a0.

- Snyder LH, Batista AP, Andersen RA. Intention-related activity in the posterior parietal cortex: a review. Vision Res. 2000a;40:1433–1441. doi: 10.1016/s0042-6989(00)00052-3.

- Snyder LH, Batista AP, Andersen RA. Saccade-related activity in the parietal reach region. J Neurophysiol. 2000b;83:1099–1102. doi: 10.1152/jn.2000.83.2.1099.

- Sober SJ, Sabes PN. Multisensory integration during motor planning. J Neurosci. 2003;23:6982–6992. doi: 10.1523/JNEUROSCI.23-18-06982.2003.

- Sober SJ, Sabes PN. Flexible strategies for sensory integration during motor planning. Nat Neurosci. 2005;8:490–497. doi: 10.1038/nn1427.

- Stein BE, Stanford TR. Multisensory integration: current issues from the perspective of the single neuron. Nat Rev Neurosci. 2008;9:255–266. doi: 10.1038/nrn2331.

- Stein BE, Stanford TR, Ramachandran R, Perrault TJ, Jr, Rowland BA. Challenges in quantifying multisensory integration: alternative criteria, models, and inverse effectiveness. Exp Brain Res. 2009;198:113–126. doi: 10.1007/s00221-009-1880-8.

- Stricanne B, Andersen RA, Mazzoni P. Eye-centered, head-centered, and intermediate coding of remembered sound locations in area LIP. J Neurophysiol. 1996:2071–2076. doi: 10.1152/jn.1996.76.3.2071.

- Trappenberg TP, Dorris MC, Munoz DP, Klein RM. A model of saccade initiation based on the competitive integration of exogenous and endogenous signals in the superior colliculus. J Cogn Neurosci. 2001;13:256–271. doi: 10.1162/089892901564306.

- van Beers RJ, Sittig AC, Denier van der Gon JJ. In: Integration of proprioceptive and visual position-information: An experimentally supported model. Sittig Ac GJJ, editor. 1999. pp. 1355–1365.

- Xing J, Andersen RA. Models of the posterior parietal cortex which perform multimodal integration and represent space in several coordinate frames. J Cogn Neurosci. 2000:601–614. doi: 10.1162/089892900562363.

- Zipser D, Andersen RA. A back-propagation programmed network that simulates response properties of a subset of posterior parietal neurons. Nature. 1988;331:679–684. doi: 10.1038/331679a0.