Abstract

The variance-covariance matrix plays a central role in the inferential theories of high dimensional factor models in finance and economics. Popular regularization methods that directly exploit sparsity are not directly applicable to many financial problems. Classical methods of estimating the covariance matrices are based on strict factor models, which assume independent idiosyncratic components. This assumption, however, is restrictive in practical applications. By assuming a sparse error covariance matrix, we allow for cross-sectional correlation even after taking out the common factors, which enables us to combine the merits of both methods. We estimate the sparse covariance using the adaptive thresholding technique as in Cai and Liu (2011), taking into account the fact that direct observations of the idiosyncratic components are unavailable. The impact of high dimensionality on the covariance matrix estimation based on the factor structure is then studied.

Keywords and phrases: sparse estimation, thresholding, cross-sectional correlation, common factors, idiosyncratic, seemingly unrelated regression

1. Introduction

We consider a factor model defined as follows:

$$y_{it} = b_i' f_t + u_{it}, \tag{1.1}$$

where yit is the observed datum for the ith (i = 1, …, p) asset at time t = 1, …, T; bi is a K × 1 vector of factor loadings; ft is a K × 1 vector of common factors; and uit is the idiosyncratic error component of yit. Classical factor analysis assumes that both p and K are fixed, while T is allowed to grow. However, in recent decades, both economic and financial applications have encountered very large data sets which contain high dimensional variables. For example, the World Bank has data for about two hundred countries over forty years; in portfolio allocation, the number of stocks can be in the thousands, which is larger than or of the same order as the sample size. In modeling housing prices in each zip code, the number of regions can be of the order of thousands, yet the sample size can be 240 months, or twenty years. A covariance matrix of dimension several thousand is critical for understanding the co-movement of housing price indices over these zip codes.

Inferential theory of factor analysis relies on estimating Σu, the variance-covariance matrix of the error term, and Σ, the variance-covariance matrix of yt = (y1t, …, ypt)′. In the literature, Σ = cov(yt) was traditionally estimated by the sample covariance matrix of yt:

$$\hat{\Sigma}_{sam} = \frac{1}{T-1}\sum_{t=1}^{T}(y_t - \bar{y})(y_t - \bar{y})', \qquad \bar{y} = \frac{1}{T}\sum_{t=1}^{T} y_t,$$

which was always assumed to be pointwise root-T consistent. However, the sample covariance matrix is an inappropriate estimator in high dimensional settings. For example, when p is larger than T, $\hat{\Sigma}_{sam}$ becomes singular while Σ is always strictly positive definite. Even if p < T, Fan, Fan and Lv (2008) showed that this estimator has a very slow convergence rate under the Frobenius norm. Realizing the limitation of the sample covariance estimator in high dimensional factor models, Fan, Fan and Lv (2008) considered a more refined estimation of Σ that incorporates the common factor structure. One of the key assumptions they made was cross-sectional independence among the idiosyncratic components, which results in a diagonal matrix Σu. Cross-sectional independence, however, is restrictive in many applications, as it rules out the approximate factor structure of Chamberlain and Rothschild (1983). In this paper, we relax this assumption, and investigate the impact of the cross-sectional correlations of idiosyncratic noises on the estimation of Σ and Σu, when both p and T are allowed to diverge. We show that the estimated covariance matrices are still invertible with probability approaching one, even if p > T. In particular, when estimating $\Sigma^{-1}$ and $\Sigma_u^{-1}$, we allow p to increase much faster than T, say, $p = O(\exp(T^{\alpha}))$, for some α ∈ (0, 1).

Sparsity is one of the commonly used assumptions in the estimation of high dimensional covariance matrices, which assumes that many of the off-diagonal entries are zero, and that the number of nonzero off-diagonal entries is restricted to grow slowly. Imposing the sparsity assumption directly on the covariance of yt, however, is inappropriate for many applications in finance and economics. In this paper we use the factor model and assume that Σu is sparse, and estimate both Σu and $\Sigma_u^{-1}$ using the thresholding method (Bickel and Levina (2008a), Cai and Liu (2011)) based on the estimated residuals in the factor model. It is assumed that the factors ft are observable, as in Fama and French (1992), Fan, Fan and Lv (2008), and many other empirical applications. We derive the convergence rates of both the estimated Σ and its inverse under various norms which are to be defined later. In addition, we achieve better convergence rates than those in Fan, Fan and Lv (2008).

Various approaches have been proposed to estimate a large covariance matrix: Bickel and Levina (2008a, 2008b) constructed the estimators based on regularization and thresholding respectively. Rothman, Levina and Zhu (2009) considered thresholding the sample covariance matrix with more general thresholding functions. Lam and Fan (2009) proposed a penalized quasi-likelihood method to achieve both the consistency and sparsistency of the estimation. More recently, Cai and Zhou (2010) derived the minimax rate for sparse matrix estimation, and showed that the thresholding estimator attains this optimal rate under the operator norm. Cai and Liu (2011) proposed a thresholding procedure which is adaptive to the variability of individual entries, and unveiled its improved rate of convergence.

The rest of the paper is organized as follows. Section 2 provides the asymptotic theory for estimating the error covariance matrix and its inverse. Section 3 considers estimating the covariance matrix of yt. Section 4 extends the results to the seemingly unrelated regression model, a set of linear equations with correlated error terms in which the covariates are different across equations. Section 5 reports the simulation results. Finally, Section 6 concludes with discussions. All proofs are given in the appendix. Throughout the paper, we use λmin(A) and λmax(A) to denote the minimum and maximum eigenvalues of a matrix A. We also denote by ‖A‖F, ‖A‖ and ‖A‖∞ the Frobenius norm, operator norm and elementwise norm of a matrix A, defined respectively as $\|A\|_F = \mathrm{tr}^{1/2}(A'A)$, $\|A\| = \lambda_{\max}^{1/2}(A'A)$, and $\|A\|_\infty = \max_{i,j}|A_{ij}|$. Note that, when A is a vector, both ‖A‖ and ‖A‖F are equal to the Euclidean norm.

2. Estimation of Error Covariance Matrix

2.1. Adaptive thresholding

Consider the following approximate factor model, in which the cross-sectional correlation among the idiosyncratic error components is allowed:

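$$y_{it} = b_i' f_t + u_{it}, \tag{2.1}$$

where i = 1, …, p and t = 1, …, T; bi is a K × 1 vector of factor loadings; ft is a K × 1 vector of observable common factors, uncorrelated with uit. Write

$$B = (b_1, \ldots, b_p)', \qquad y_t = (y_{1t}, \ldots, y_{pt})', \qquad u_t = (u_{1t}, \ldots, u_{pt})';$$

then model (2.1) can be written in a more compact form:

$$y_t = B f_t + u_t, \tag{2.2}$$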

with E(ut|ft) = 0.

In practical applications, p can be thought of as the number of assets or stocks, or the number of regions in spatial and temporal problems such as home price indices or sales of drugs, and in practice can be of the same order as, or even larger than, T. For example, an asset pricing model may contain hundreds of assets while the sample size of daily returns is less than several hundred. In the estimation of the optimal portfolio allocation, it was observed by Fan, Fan and Lv (2008) that the effect of large p on the convergence rate can be quite severe. In contrast, the number of common factors, K, can be much smaller. For example, the rank theory of consumer demand systems implies no more than three factors (e.g., Gorman (1981) and Lewbel (1991)).

The error covariance matrix

$$\Sigma_u = \mathrm{cov}(u_t)$$

itself is of interest for the inferential theory of factor models. For example, the asymptotic covariance of the least square estimator of B depends on , and in simulating home price indices over a certain time horizon for mortgage based securities, a good estimate of Σu is needed. When p is close to or larger than T, estimating Σu is very challenging. Therefore, following the literature of high dimensional covariance matrix estimation, we assume it is sparse, i.e., many of its off-diagonal entries are zeros. Specifically, let Σu = (σij)p×p. Define

$$m_T = \max_{i \le p} \sum_{j \le p} I(\sigma_{ij} \neq 0). \tag{2.3}$$

The sparsity assumption puts an upper bound restriction on mT. Specifically, we assume:

| (2.4) |

In this formulation, we even allow the number of factors K to be large, possibly growing with T.

A more general treatment (e.g., Bickel and Levina (2008a) and Cai and Liu (2011)) is to assume that the lq norm of the row vectors of Σu are uniformly bounded across rows by a slowly growing sequence, for some q ∈ [0, 1). In contrast, the assumption we make in this paper, i.e., q = 0, has clearer economic interpretation. For example, the firm returns can be modeled by the factor model, where uit represents a firm’s individual shock at time t. Driven by the industry-specific components, these shocks are correlated among the firms in the same industry, but can be assumed to be uncorrelated across industries, since the industry-specific components are not pervasive for the whole economy (Connor and Korajczyk (1993)).

We estimate Σu using the thresholding technique introduced and studied by Bickel and Levina (2008a), Rothman, Levina and Zhu (2009), Cai and Liu (2011), etc, which is summarized as follows: Suppose we observe data (X1, …, XT) of a p × 1 vector X, which follows a multivariate Gaussian distribution N (0, ΣX). The sample covariance matrix of X is thus given by:

Define the thresholding operator by 𝒯t(M) = (MijI (|Mij| ≥ t)) for any symmetric matrix M. Then 𝒯t preserves the symmetry of M. Let . Bickel and Levina (2008a) then showed that:

In factor models, however, we do not observe the error term directly. Hence when estimating the error covariance matrix of a factor model, we need to construct a sample covariance matrix based on the residuals ûit before thresholding. The residuals are obtained using the plug-in method, by estimating the factor loadings first. Let b̂i be the ordinary least squares (OLS) estimator of bi, and

$$\hat{u}_{it} = y_{it} - \hat{b}_i' f_t.$$

Denote by ût = (û1t, …, ûpt)′. We then construct the residual covariance matrix as:

$$\hat{\Sigma}_u = \frac{1}{T}\sum_{t=1}^{T} \hat{u}_t \hat{u}_t' = (\hat{\sigma}_{ij}).$$

Note that the thresholding value in Bickel and Levina (2008a) is in fact obtained from the rate of convergence of maxij |sij − ΣX,ij |. This rate changes when sij is replaced with the residual ûij, which will be slower if the number of common factors K increases with T. Therefore, the thresholding value ωT used in this paper is adjusted to account for the effect of the estimation of the residuals.
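The plug-in construction described above can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names `ols_residual_covariance` and `hard_threshold` are hypothetical, the OLS fit has no intercept, the residual covariance is normalized by 1/T, and the threshold `t` is left to the caller.

```python
import numpy as np

def ols_residual_covariance(Y, F):
    """Y: (T, p) observations, F: (T, K) observable factors.

    Returns the OLS loadings B_hat (p, K), the residuals U_hat (T, p)
    and the residual covariance matrix (assumed normalization: 1/T).
    """
    # Equation-by-equation OLS; column i of the solution is b_hat_i.
    coef, *_ = np.linalg.lstsq(F, Y, rcond=None)   # shape (K, p)
    U_hat = Y - F @ coef                            # u_hat_it = y_it - b_hat_i' f_t
    Sigma_u_hat = U_hat.T @ U_hat / Y.shape[0]
    return coef.T, U_hat, Sigma_u_hat

def hard_threshold(M, t):
    """Thresholding operator: keep M_ij when |M_ij| >= t, else set it to 0."""
    return np.where(np.abs(M) >= t, M, 0.0)
```

Because the same scalar threshold is applied to every entry, `hard_threshold` preserves the symmetry of its input, in line with the remark on the operator 𝒯t.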

2.2. Asymptotic properties of the thresholding estimator

Bickel and Levina (2008a) used a universal constant as the thresholding value. As pointed out by Rothman, Levina and Zhu (2009) and Cai and Liu (2011), when the variances of the entries of the sample covariance matrix vary over a wide range, it is more desirable to use thresholds that capture the variability of individual estimation. For this purpose, in this paper, we apply the adaptive thresholding estimator (Cai and Liu (2011)) to estimate the error covariance matrix, which is given by

| (2.5) |

for some ωT to be specified later.
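As an illustration of entry-adaptive thresholding in the spirit of Cai and Liu (2011), the sketch below keeps an off-diagonal entry σ̂ij only if its magnitude is at least ωT times the square root of an estimate θ̂ij of the variance of ûit ûjt. The exact form used here, the function name `adaptive_threshold`, and the choice to always keep the diagonal are assumptions made for the example; ωT is supplied by the caller.

```python
import numpy as np

def adaptive_threshold(U_hat, omega_T):
    """U_hat: (T, p) residuals. Returns an entry-adaptive thresholded covariance.

    sigma_hat_ij = (1/T) sum_t u_it u_jt
    theta_hat_ij = (1/T) sum_t (u_it u_jt - sigma_hat_ij)^2
    Entry (i, j) is kept when |sigma_hat_ij| >= omega_T * sqrt(theta_hat_ij).
    """
    T, p = U_hat.shape
    S = U_hat.T @ U_hat / T
    # All pairwise products u_it * u_jt, shape (T, p, p); fine for moderate p.
    prod = U_hat[:, :, None] * U_hat[:, None, :]
    theta = ((prod - S) ** 2).mean(axis=0)
    keep = np.abs(S) >= omega_T * np.sqrt(theta)
    np.fill_diagonal(keep, True)   # assumption: variances are never thresholded
    return np.where(keep, S, 0.0)
```

Setting `omega_T = 0` returns the residual covariance unchanged, while a very large value leaves only the diagonal, which mirrors the two extremes of no thresholding and a strict factor model.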

We impose the following assumptions:

Assumption 2.1. (i) {ut}t≥1 is stationary and ergodic such that each ut has zero mean vector and covariance matrix Σu. In addition, the strong mixing condition in Assumption 3.2 holds.

(ii) There exist constants c1, c2 > 0 such that c1 < λmin(Σu) ≤ λmax(Σu) < c2, and c1 < var(uitujt) < c2 for all i ≤ p, j ≤ p.

(iii) There exist r1 > 0 and b1 > 0, such that for any s > 0 and i ≤ p,

| (2.6) |

Condition (i) allows the idiosyncratic components to be weakly dependent. We will formally present the strong mixing condition in the next section. In order for the main results in this section to hold, it suffices to impose the strong mixing condition marginally on ut only. Roughly speaking, we require the mixing coefficient

to decrease exponentially fast as T → ∞, where () are the σ-algebras generated by respectively.

Condition (ii) requires the nonsingularity of Σu. Note that Cai and Liu (2011) allowed maxj σjj to diverge when direct observations are available. Condition (ii), however, requires that σjj be uniformly bounded. In factor models, a uniform upper bound on the variance of uit is needed when we estimate the covariance matrix of yt later. This assumption is satisfied by most applications of factor models. Condition (iii) requires the distributions of (u1t, …, upt) to have exponential-type tails, which allows us to apply the large deviation theory to .

Assumption 2.2. There exist positive sequences κ1(p, T) = o(1), κ2(p, T) = o(1) and aT = o(1), and a constant M > 0, such that for all C > M,

This assumption allows us to apply thresholding to the estimated error covariance matrix when direct observations are not available, without introducing too much extra estimation error. Note that it permits a general case in which the original “data” are contaminated, including any type of estimate of the data when direct observations are not available, as well as the case in which the data are subject to measurement errors. We will show in the next section that in a linear factor model when {uit}i≤p,t≤T are estimated using the OLS estimator, the rate of convergence .

The following theorem establishes the asymptotic properties of the thresholding estimator , based on observations with estimation errors. Let , where r1 and r2 are defined in Assumptions 2.1, 3.2 respectively.

Theorem 2.1. Suppose γ < 1 and (log p)6/γ−1 = o(T). Then under Assumptions 2.1 and 2.2, there exist C1 > 0 and C2 > 0 such that for defined in (2.5) with

we have,

| (2.7) |

In addition, if ωTmT = o(1), then with probability at least ,

and

Note that we derive the result (2.7) without assuming the sparsity on Σu, i.e., no restriction is imposed on mT. When ωTmT ≠ o(1), (2.7) still holds, but does not converge to zero in probability. On the other hand, the condition ωTmT = o(1) is required to preserve the nonsingularity of asymptotically and to consistently estimate .

The rate of convergence also depends on the averaged estimation error of the residual terms. We will see in the next section that when the number of common factors K increases slowly, the convergence rate in Theorem 2.1 is close to the minimax optimal rate as in Cai and Zhou (2010).

3. Estimation of Covariance Matrix Using Factors

We now investigate the estimation of the covariance matrix Σ in the approximate factor model:

$$y_t = B f_t + u_t,$$

where Σ = cov(yt). This covariance matrix is of particular interest in many applications of factor models as well as in the corresponding inferential theories. When estimating a large dimensional covariance matrix, sparsity and banding are two commonly used assumptions for regularization (e.g., Bickel and Levina (2008a, 2008b)). In most applications in finance and economics, however, these two assumptions are inappropriate for Σ. For instance, US housing prices at the county level are generally associated with a few national indices, and there is no natural ordering among the counties. Hence neither sparsity nor banding is realistic for such a problem. On the other hand, it is natural to assume that Σu is sparse after controlling for the common factors. Therefore, our approach combines the merits of both the sparsity and factor structures.

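Note that

$$\Sigma = B\,\mathrm{cov}(f_t)\,B' + \Sigma_u.$$

By the Sherman-Morrison-Woodbury formula,

$$\Sigma^{-1} = \Sigma_u^{-1} - \Sigma_u^{-1} B \left[\mathrm{cov}(f_t)^{-1} + B'\Sigma_u^{-1}B\right]^{-1} B'\Sigma_u^{-1}.$$

When the factors are observable, one can estimate B by the least squares method: B̂ = (b̂1, …, b̂p)′, where

$$\hat{b}_i = \arg\min_{b_i} \frac{1}{T}\sum_{t=1}^{T} (y_{it} - b_i' f_t)^2.$$

The covariance matrix cov(ft) can be estimated by the sample covariance matrix

$$\widehat{\mathrm{cov}}(f_t) = \frac{1}{T-1} XX' - \frac{1}{T(T-1)} X\mathbf{1}\mathbf{1}'X',$$

where X = (f1, …, fT), and 1 is a T-dimensional column vector of ones. Therefore, by employing the thresholding estimator in (2.5), we obtain the substitution estimators

$$\hat{\Sigma}^{\mathcal{T}} = \hat{B}\,\widehat{\mathrm{cov}}(f_t)\,\hat{B}' + \hat{\Sigma}_u^{\mathcal{T}} \tag{3.1}$$

and

$$(\hat{\Sigma}^{\mathcal{T}})^{-1} = (\hat{\Sigma}_u^{\mathcal{T}})^{-1} - (\hat{\Sigma}_u^{\mathcal{T}})^{-1}\hat{B}\left[\widehat{\mathrm{cov}}(f_t)^{-1} + \hat{B}'(\hat{\Sigma}_u^{\mathcal{T}})^{-1}\hat{B}\right]^{-1}\hat{B}'(\hat{\Sigma}_u^{\mathcal{T}})^{-1}. \tag{3.2}$$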
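The substitution estimators can be sketched numerically as follows. This is an illustrative NumPy sketch, not the authors' code: the function names are hypothetical, and any positive definite estimate of the error covariance (for instance a thresholded one) may be passed as `Sigma_u`. The point of the Sherman-Morrison-Woodbury form is that, beyond the error covariance itself, only a K × K system has to be solved.

```python
import numpy as np

def factor_sample_cov(X):
    """Sample covariance of the factors; X is K x T with columns f_1, ..., f_T."""
    T = X.shape[1]
    ones = np.ones((T, 1))
    return X @ X.T / (T - 1) - X @ ones @ ones.T @ X.T / (T * (T - 1))

def factor_covariance(B_hat, cov_f, Sigma_u):
    """Substitution estimator B cov(f) B' + Sigma_u."""
    return B_hat @ cov_f @ B_hat.T + Sigma_u

def factor_precision(B_hat, cov_f, Sigma_u):
    """Inverse of B cov(f) B' + Sigma_u via Sherman-Morrison-Woodbury."""
    Su_inv = np.linalg.inv(Sigma_u)
    SB = Su_inv @ B_hat                              # p x K
    core = np.linalg.inv(cov_f) + B_hat.T @ SB       # K x K
    return Su_inv - SB @ np.linalg.solve(core, SB.T)
```

When the error covariance estimate is sparse, the explicit inverse of `Sigma_u` could be replaced by a sparse solver; the dense version is kept here only for brevity.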

In practice, one may apply a common thresholding λ to the correlation matrix of Σ̂u, and then use the substitution estimator similar to (3.1). When λ = 0 (no thresholding), the resulting estimator is the sample covariance, whereas when λ = 1 (all off-diagonals are thresholded), the resulting estimator is an estimator based on the strict factor model (Fan, Fan and Lv (2008)). Thus we have created a path (indexed by λ) which connects the nonparametric estimate of covariance matrix to the parametric estimate.
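A minimal sketch of this path, assuming hard thresholding of the residual correlation matrix with a common level λ (the function name `threshold_correlation` and the strict inequality are choices made for the example):

```python
import numpy as np

def threshold_correlation(Sigma_u_hat, lam):
    """Threshold the correlation matrix of Sigma_u_hat at a common level lam in [0, 1].

    lam = 0 leaves the matrix unchanged; lam = 1 removes every off-diagonal entry.
    """
    d = np.sqrt(np.diag(Sigma_u_hat))
    R = Sigma_u_hat / np.outer(d, d)            # residual correlation matrix
    R_thr = np.where(np.abs(R) > lam, R, 0.0)   # keep correlations above lam
    np.fill_diagonal(R_thr, 1.0)                # variances are always kept
    return R_thr * np.outer(d, d)               # back to the covariance scale
```

The output can then be used in place of the thresholded error covariance in the substitution estimator, giving one covariance estimate for each value of λ along the path.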

The following assumptions are made.

Assumption 3.1. (i) {ft}t≥1 is stationary and ergodic.

(ii) {ut}t≥1 and {ft}t≥1 are independent.

In addition to the conditions above, we introduce the strong mixing conditions to conduct asymptotic analysis of the least square estimates. Let denote the σ-algebras generated by {(ft, ut) : −∞ ≤ t ≤ 0} and {(ft, ut) : T ≤ t ≤ ∞} respectively. In addition, define the mixing coefficient

The following strong mixing assumption enables us to apply the Bernstein’s inequality in the technical proofs.

Assumption 3.2. There exist positive constants r2 and C such that for all t ∈ ℤ+,

In addition, we impose the following regularity conditions.

Assumption 3.3. (i) There exists a constant M > 0 such that for all i, j and t, , and |bij| < M.

(ii) There exists a constant r3 > 0 with , and b2 > 0 such that for any s > 0 and i ≤ K,

| (3.3) |

Condition (ii) allows us to apply the Bernstein type inequality for the weakly dependent data.

Assumption 3.4. There exists a constant C > 0 such that λmin(cov(ft)) > C.

Assumptions 3.4 and 2.1 ensure that both λmin(cov(ft)) and λmin(Σ) are bounded away from zero, which is needed to derive the convergence rate of ‖(Σ̂𝒯)−1 − Σ−1‖ below.

The following lemma verifies Assumption 2.2, which derives the rate of convergence of the OLS estimator as well as the estimated residuals.

Let .

Lemma 3.1. Suppose K = o(p), K4(log p)2 = o(T) and (log p)2/γ2−1 = o(T). Then under the assumptions of Theorem 2.1 and Assumptions 3.1–3.4, there exists C > 0, such that

By Lemma 3.1 and Assumption 2.2, , and κ1(p, T) = κ2(p, T) = p−2 + T−2. Therefore in the linear approximate factor model, the thresholding parameter ωT defined in Theorem 2.1 is simplified to: for some positive constant ,

| (3.4) |

Now we can apply Theorem 2.1 to obtain the following theorem:

Theorem 3.1. Under the Assumptions of Lemma 3.1, there exist such that the adaptive thresholding estimator defined in (2.5) with satisfies

- If , then with probability at least ,

and

Remark 3.1. We briefly comment on the terms in the convergence rate above.

The term K appears as an effect of using the estimated residuals to construct the thresholding covariance estimator, which is typically small compared to p and T in many applications. For instance, the famous Fama-French three-factor model shows that K = 3 factors are adequate for the US equity market. In an empirical study on asset returns, Bai and Ng (2002) used the monthly data which contains the returns of 4883 stocks for sixty months. For their data set, T = 60, p = 4883. Bai and Ng (2002) determined K = 2 common factors.

As in Bickel and Levina (2008a) and Cai and Liu (2011), mT, the maximum number of nonzero components across the rows of Σu, also plays a role in the convergence rate. Note that when K is bounded, the convergence rate reduces to , the same as the minimax rate derived by Cai and Zhou (2010).

One of our main objectives is to estimate Σ, which is the p × p dimensional covariance matrix of yt, assumed to be time invariant. We can achieve better accuracy in estimating both Σ and Σ−1 by incorporating the factor structure than by using the sample covariance matrix, as shown by Fan et al. (2008) in the strict factor model case. When cross-sectional correlations among the idiosyncratic components (u1t, …, upt) are present, we can still take advantage of the factor structure. This is particularly essential when a direct sparsity assumption on Σ is inappropriate.

Assumption 3.5. ‖p−1B′B−Ω‖ = o(1) for some K × K symmetric positive definite matrix Ω such that λmin(Ω) is bounded away from zero.

Assumption 3.5 requires that the factors be pervasive, i.e., that they impact every individual time series (Harding (2009)). It was imposed by Fan et al. (2008) only when they established the asymptotic normality of the covariance estimator. However, it also turns out to be helpful for obtaining a good upper bound on ‖(Σ̂𝒯)−1 − Σ−1‖, as it ensures that λmax((B′Σ−1B)−1) = O(p−1).

Fan et al. (2008) obtained an upper bound of ‖Σ̂𝒯 − Σ‖F under the Frobenius norm when Σu is diagonal, i.e., there was no cross-sectional correlation among the idiosyncratic errors. In order for their upper bound to decrease to zero, $p^2 < T$ is required. Even with this restrictive assumption, they showed that the convergence rate is the same as that of the usual sample covariance matrix of yt, though the latter does not take the factor structure into account. Alternatively, they considered the entropy loss norm, proposed by James and Stein (1961):

$$\|A\|_{\Sigma} = p^{-1/2}\,\|\Sigma^{-1/2} A\, \Sigma^{-1/2}\|_F.$$

Here the factor p−1/2 is used for normalization, such that ‖Σ‖Σ = 1. Under this norm, Fan et al. (2008) showed that the substitution estimator has a better convergence rate than the usual sample covariance matrix. Note that the normalization factor p−1/2 in the definition results in an averaged estimation error, which also cancels out the diverging dimensionality introduced by p. In addition, for any two p × p matrices A1 and A2:

Combining with the estimated low-rank matrix Bcov(ft)B′, Theorem 3.1 implies the main theorem in this section:

Theorem 3.2. Suppose log T = o(p). Under the assumptions of Theorem 3.1 and Assumption 3.5, we have

- If , with probability at least ,

and

Note that we have derived a better convergence rate of (Σ̂𝒯)−1 than that in Fan et al. (2008). When the operator norm is considered, p is allowed to grow exponentially fast in T while (Σ̂𝒯)−1 remains consistent.

We have also derived the maximum elementwise estimation error ‖Σ̂𝒯 − Σ‖∞. This quantity appears in risk assessment as in Fan, Zhang and Yu (2008). For any portfolio with allocation vector w, the true portfolio variance and the estimated one are given by w′Σw and w′Σ̂𝒯 w respectively. The estimation error is bounded by

$$|w'\hat{\Sigma}^{\mathcal{T}} w - w'\Sigma w| \le \|\hat{\Sigma}^{\mathcal{T}} - \Sigma\|_\infty \,\|w\|_1^2,$$

where ‖w‖1, the l1 norm of w, is the gross exposure of the portfolio.
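The bound follows from |Σi,j wi wj (Σ̂ij − Σij)| ≤ maxi,j |Σ̂ij − Σij| · (Σi |wi|)². A small numerical check can be sketched as follows; the function name `risk_error_bound` is hypothetical and the inputs are arbitrary illustrative matrices.

```python
import numpy as np

def risk_error_bound(Sigma_hat, Sigma, w):
    """Return (actual error, upper bound) for the estimated portfolio variance.

    error = |w' Sigma_hat w - w' Sigma w|
    bound = max_ij |Sigma_hat_ij - Sigma_ij| * ||w||_1^2
    """
    err = abs(w @ Sigma_hat @ w - w @ Sigma @ w)
    bound = np.max(np.abs(Sigma_hat - Sigma)) * np.sum(np.abs(w)) ** 2
    return err, bound
```

For a long-only portfolio whose weights sum to one, the gross exposure ‖w‖1 equals 1, so the error in the portfolio variance is no larger than the elementwise estimation error itself.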

4. Extension: Seemingly Unrelated Regression

A seemingly unrelated regression model (Kmenta and Gilbert (1970)) is a set of linear equations in which the disturbances are correlated across equations. Specifically, we have

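$$y_{it} = b_i' f_{it} + u_{it}, \tag{4.1}$$

where bi and fit are both Ki × 1 vectors. The p linear equations (4.1) are related because their error terms uit are correlated, i.e., the covariance matrix

$$\Sigma_u = \mathrm{cov}(u_t), \qquad u_t = (u_{1t}, \ldots, u_{pt})',$$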

is not diagonal.

Model (4.1) allows each variable yit to have its own factors. This is important for many applications. In financial applications, the returns of individual stock depend on common market factors and sector-specific factors. In housing price index modeling, housing price appreciations depend on both national factors and local economy. When fit = ft for each i ≤ p, model (4.1) reduces to the approximate factor model (1.1) with common factors ft.

Under mild conditions, running OLS on each equation separately produces an unbiased and consistent estimator of bi. However, since OLS does not take into account the cross-sectional correlation among the noises, it is not efficient. Instead, statisticians obtain the best linear unbiased estimator (BLUE) via generalized least squares (GLS). Write

The GLS estimator of B is given by Zellner (1962):

| (4.2) |

where IT denotes a T × T identity matrix, ⊗ represents the Kronecker product operation, and Σ̂u is a consistent estimator of Σu.
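For illustration, the standard Zellner-type GLS computation can be sketched as below, assuming the observations are stacked equation by equation and the regressor matrix is block diagonal. The function name `sur_gls` and the data layout are assumptions for the example, and the dense Kronecker product is used only for readability, so the sketch is suitable for small p and T.

```python
import numpy as np

def sur_gls(y_list, X_list, Sigma_u):
    """GLS for seemingly unrelated regressions.

    y_list[i]: (T,) responses of equation i; X_list[i]: (T, K_i) regressors.
    Sigma_u: (p, p) error covariance (or a consistent, invertible estimate).
    Returns a list with the K_i coefficients of each equation.
    """
    p, T = len(y_list), y_list[0].shape[0]
    ks = [X.shape[1] for X in X_list]
    X = np.zeros((p * T, sum(ks)))            # block-diagonal design matrix
    col = 0
    for i, Xi in enumerate(X_list):
        X[i * T:(i + 1) * T, col:col + ks[i]] = Xi
        col += ks[i]
    y = np.concatenate(y_list)                # stacked by equation
    W = np.kron(np.linalg.inv(Sigma_u), np.eye(T))
    beta = np.linalg.solve(X.T @ W @ X, X.T @ W @ y)
    return np.split(beta, np.cumsum(ks)[:-1])
```

When `Sigma_u` is diagonal the weights factor out equation by equation and the result coincides with OLS, which is one way to sanity-check an implementation.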

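In classical seemingly unrelated regression in which p does not grow with T, Σu is estimated by a two-stage procedure (Kmenta and Gilbert (1970)). In the first stage, estimate B via OLS, and obtain the residuals

$$\hat{u}_{it} = y_{it} - \hat{b}_i' f_{it}. \tag{4.3}$$

In the second stage, estimate Σu by

$$\hat{\Sigma}_u = \frac{1}{T}\sum_{t=1}^{T} \hat{u}_t \hat{u}_t', \qquad \hat{u}_t = (\hat{u}_{1t}, \ldots, \hat{u}_{pt})'. \tag{4.4}$$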

In high dimensional seemingly unrelated regression in which p > T, however, Σ̂u is not invertible, and hence the GLS estimator (4.2) is infeasible.

By the sparsity assumption of Σu, we can deal with this singularity problem by using the adaptive thresholding estimator, and produce a consistent nonsingular estimator of Σu:

| (4.5) |

To pursue this goal, we impose the following assumptions:

Assumption 4.1. For each i ≤ p,

{fit}t≥1 is stationary and ergodic.

{ut}t≥1 and {fit}t≥1 are independent.

Assumption 4.2. There exists positive constants C and r2 such that for each i ≤ p, the strong mixing condition

is satisfied by (fit, ut):

Assumption 4.3. There exist constants M and C > 0 such that for all i ≤ p, j ≤ Ki, t ≤ T

, |bij| < M, and .

mini≤p λmin(cov(fit)) > C.

Assumption 4.4. There exists a constant r4 > 0 with , and b3 > 0 such that for any s > 0 and i, j,

These assumptions are similar to those made in Section 3, except that here they are imposed on the sector-specific factors. The main theorem in this section is a direct application of Theorem 2.1, which shows that adaptive thresholding produces a consistent nonsingular estimator of Σu.

Theorem 4.1. Let K = maxi≤p Ki and ; suppose K = o(p), K4(log p)2 = o(T) and (log p)2/γ3−1 = o(T). Under Assumptions 2.1, 4.1–4.4, there exist constants C1 > 0 and C2 > 0 such that the adaptive thresholding estimator defined in (4.5) with satisfies

- If , then with probability at least ,

and

Therefore, in the case when p > T, Theorem 4.1 enables us to efficiently estimate B via feasible GLS:

5. Monte Carlo Experiments

In this section, we use simulation to demonstrate the rates of convergence of the estimators Σ̂𝒯 and (Σ̂𝒯)−1 that we have obtained so far. The simulation model is a modified version of the Fama-French three-factor model described in Fan, Fan and Lv (2008). We fix the number of factors, K = 3, and the length of time, T = 500, and let the dimensionality p gradually increase.

The Fama-French three-factor model (Fama and French (1992)) is given by

$$y_{it} = b_{i1} f_{1t} + b_{i2} f_{2t} + b_{i3} f_{3t} + u_{it},$$

which models the excess return (real rate of return minus risk-free rate) of the ith stock of a portfolio, yit, with respect to 3 factors. The first factor is the excess return of the whole stock market, and the weighted excess return on all NASDAQ, AMEX and NYSE stocks is a commonly used proxy. The model extends the capital asset pricing model (CAPM) by adding two new factors: SMB (“small minus big” cap) and HML (“high minus low” book/price). These two were added to the model after the observation that two types of stocks, small caps and stocks with a high book-value-to-price ratio, tend to outperform the stock market as a whole.

We separate this section into three parts, calibration, simulation and results. Similar to Section 5 of Fan, Fan and Lv (2008), in the calibration part we want to calculate realistic multivariate distributions from which we can generate the factor loadings B, idiosyncratic noises and the observable factors . The data was obtained from the data library of Kenneth French’s website.

5.1. Calibration

To estimate the parameters in the Fama-French model, we will use the two-year daily data (ỹt, f̃t) from Jan 1st, 2009 to Dec 31st, 2010 (T=500) of 30 industry portfolios.

Calculate the least squares estimator B̃ of ỹt = Bf̃t + ut, and take the rows of B̃, namely b̃1 = (b11, b12, b13), …, b̃30 = (b30,1, b30,2, b30,3), to calculate the sample mean vector μB and sample covariance matrix ΣB. The results are depicted in Table 1. We then create a multivariate normal distribution N3(μB, ΣB), from which the factor loadings are drawn.


For each fixed p, create the sparse matrix in the following way. Let ût = ỹt − B̃f̃t. For i = 1, …, 30, let σ̂i denote the standard deviation of the residuals of the ith portfolio. We find min(σ̂i) = 0.3533, max(σ̂i) = 1.5222, and calculate the mean and the standard deviation of the σ̂i’s, namely σ̄ = 0.6055 and σSD = 0.2621.

Let , where σ1, …, σp are generated independently from the Gamma distribution G(α, β), with mean αβ and standard deviation α1/2β. We match these values to σ̄ = 0.6055 and σSD = 0.2621, to get α = 5.6840 and β = 0.1503. Further, we create a loop that only accepts the value of σi if it is between min(σ̂i) = 0.3533 and max(σ̂i) = 1.5222.

Create s = (s1, …, sp)′ to be a sparse vector. We set each si ~ N(0, 1) with probability , and si = 0 otherwise. This leads to an average of nonzero elements per each row of the error covariance matrix.

Create a loop that generates Σu multiple times until it is positive definite.

Assume the factors follow the vector autoregressive (VAR(1)) model ft = μ + Φft−1 + εt for some 3 × 3 matrix Φ, where εt’s are i.i.d. N3(0, Σε). We estimate Φ, μ and Σε from the data, and obtain cov(ft). They are summarized in Table 2.
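The factor-generating step can be sketched as follows. The values of μ, Φ and cov(ft) are those reported in Table 2; the innovation covariance Σε is not reported, so as an assumption for this example it is backed out from the stationarity relation cov(ft) = Φ cov(ft) Φ′ + Σε. The function names and the burn-in length are likewise hypothetical.

```python
import numpy as np

# Values reported in Table 2.
MU = np.array([0.1074, 0.0357, 0.0033])
COV_F = np.array([[2.2540, 0.2735, 0.9197],
                  [0.2735, 0.3767, 0.0430],
                  [0.9197, 0.0430, 0.6822]])
PHI = np.array([[-0.1149, 0.0024, 0.0776],
                [0.0016, -0.0162, 0.0387],
                [-0.0399, 0.0218, 0.0351]])

# Assumption: innovation covariance implied by stationarity, symmetrized.
_S = COV_F - PHI @ COV_F @ PHI.T
SIGMA_EPS = (_S + _S.T) / 2

def var1_stationary_cov(Phi, Sigma_eps):
    """Solve C = Phi C Phi' + Sigma_eps via vec(C) = (I - Phi kron Phi)^{-1} vec(Sigma_eps)."""
    K = Phi.shape[0]
    vec = np.linalg.solve(np.eye(K * K) - np.kron(Phi, Phi), Sigma_eps.reshape(-1))
    return vec.reshape(K, K)

def simulate_var1(mu, Phi, Sigma_eps, T, rng, burn_in=200):
    """Simulate f_t = mu + Phi f_{t-1} + eps_t and return the last T draws, shape (T, K)."""
    K = len(mu)
    eps = rng.multivariate_normal(np.zeros(K), Sigma_eps, size=T + burn_in)
    f = np.zeros(K)
    out = np.empty((T + burn_in, K))
    for t in range(T + burn_in):
        f = mu + Phi @ f + eps[t]
        out[t] = f
    return out[burn_in:]   # drop the burn-in so draws are close to stationary
```

A call such as `simulate_var1(MU, PHI, SIGMA_EPS, T=500, rng=np.random.default_rng(0))` produces one 500 × 3 path of factors.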

Table 1.

Mean and covariance matrix used to generate b

| μB | ΣB | ||

|---|---|---|---|

| 1.0641 | 0.0475 | 0.0218 | 0.0488 |

| 0.1233 | 0.0218 | 0.0945 | 0.0215 |

| −0.0119 | 0.0488 | 0.0215 | 0.1261 |

Table 2.

Parameters of ft generating process

| μ | cov(ft) | Φ | ||||

|---|---|---|---|---|---|---|

| 0.1074 | 2.2540 | 0.2735 | 0.9197 | −0.1149 | 0.0024 | 0.0776 |

| 0.0357 | 0.2735 | 0.3767 | 0.0430 | 0.0016 | −0.0162 | 0.0387 |

| 0.0033 | 0.9197 | 0.0430 | 0.6822 | −0.0399 | 0.0218 | 0.0351 |

5.2. Simulation

For each fixed p, we generate (b1, …, bp) independently from N3(μB, ΣB), and generate independently. We keep T = 500 fixed, and gradually increase p from 20 to 600 in multiples of 20 to illustrate the rates of convergence when the number of variables diverges with respect to the sample size.

Repeat the following steps N = 200 times for each fixed p:

Generate independently from N3(μB, ΣB), and set B = (b1, …, bp)′.

Generate independently from Np(0, Σu).

Generate independently from the VAR(1) model ft = μ + Φft−1 + εt.

Calculate yt = Bft + ut for t = 1, …, T.

Set to obtain the thresholding estimator (2.5) and the sample covariance matrices .

We graph the convergence of Σ̂𝒯 and Σ̂y to Σ, the covariance matrix of y, under the entropy-loss norm ‖·‖Σ and the elementwise norm ‖·‖∞. We also graph the convergence of the inverses (Σ̂𝒯)−1 and (Σ̂y)−1 to Σ−1 under the operator norm. Note that we graph the latter only for p from 20 to 300. Since T = 500, for p > 500 the sample covariance matrix is singular. Also, for p close to 500, Σ̂y is nearly singular, which leads to abnormally large values of the operator norm. Lastly, we record the standard deviations of these norms.
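The error measures used in the graphs can be computed as in the following sketch, which follows the definitions of the Frobenius, operator, elementwise and entropy-loss norms given earlier. The function names are hypothetical, and the inverse square root of Σ is obtained from its eigendecomposition, which assumes Σ is symmetric positive definite.

```python
import numpy as np

def frobenius_norm(A):
    return np.linalg.norm(A, "fro")

def operator_norm(A):
    # Largest singular value of A.
    return np.linalg.norm(A, 2)

def elementwise_norm(A):
    return np.max(np.abs(A))

def entropy_loss_norm(A, Sigma):
    """p^{-1/2} * || Sigma^{-1/2} A Sigma^{-1/2} ||_F for symmetric positive definite Sigma."""
    vals, vecs = np.linalg.eigh(Sigma)
    S_inv_half = (vecs / np.sqrt(vals)) @ vecs.T   # Sigma^{-1/2}
    p = Sigma.shape[0]
    return np.linalg.norm(S_inv_half @ A @ S_inv_half, "fro") / np.sqrt(p)
```

For example, `entropy_loss_norm(Sigma_hat - Sigma, Sigma)` gives the normalized error of an estimate `Sigma_hat`, and `entropy_loss_norm(Sigma, Sigma)` equals 1 by construction.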

5.3. Results

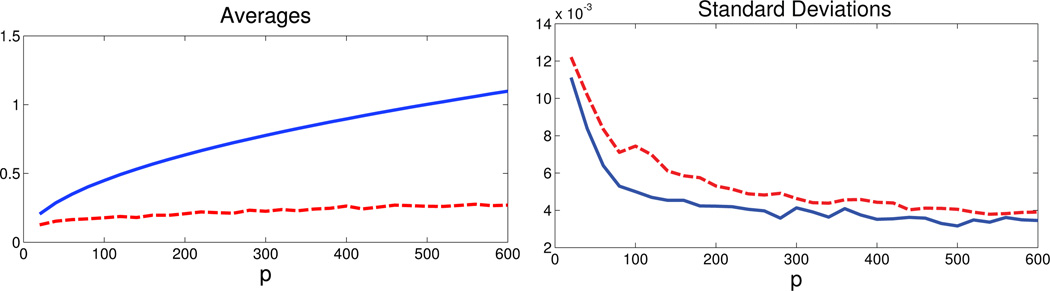

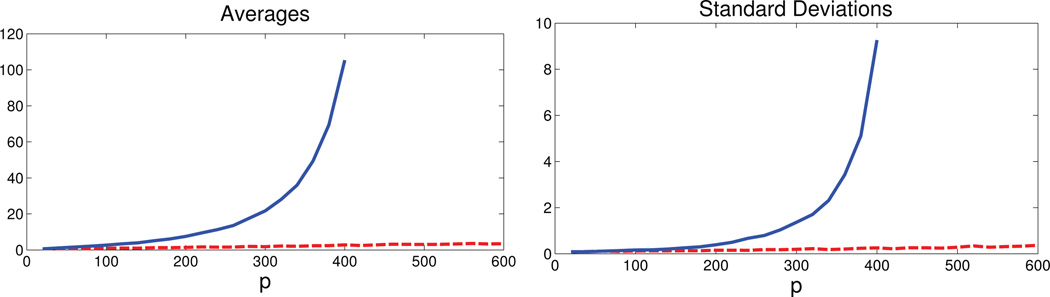

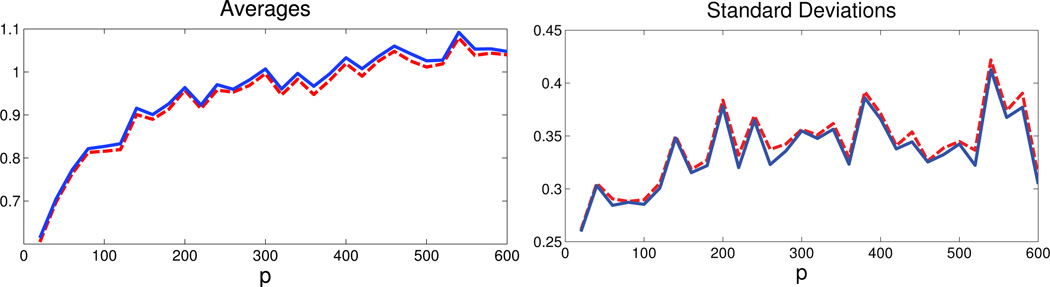

In Figures 1–3, the dashed curves correspond to Σ̂𝒯 and the solid curves correspond to the sample covariance matrix Σ̂y. Figures 1 and 2 present the averages and standard deviations of the estimation errors of both of these matrices with respect to the Σ-norm and the infinity norm, respectively. Figure 3 presents the averages and standard deviations of the estimation errors of the inverses with respect to the operator norm. Based on the simulation results, we can make the following observations:

The standard deviations of the norms are negligible when compared to their corresponding averages.

Under the ‖·‖Σ norm, our estimate of the covariance matrix of y, Σ̂𝒯, performs much better than the sample covariance matrix Σ̂y. Note that, in the proof of Theorem 2 in Fan, Fan and Lv (2008), it was shown that:

| (5.1) |

For a small fixed value of K, such as K = 3, the dominating term in (5.1) is . From Theorem 4.1, and given that $m_T = o(p^{1/4})$, the dominating term in the convergence of . So, we would expect our estimator to perform better, and the simulation results are consistent with the theory.

Under the infinity norm, both estimators perform roughly the same. This is to be expected, given that the thresholding affects mainly the elements of the covariance matrix that are closest to 0, and the infinity norm depicts the magnitude of the largest elementwise absolute error.

Under the operator norm, the inverse of our estimator, (Σ̂𝒯)−1 also performs significantly better than the inverse of the sample covariance matrix.

Finally, when p > 500, the thresholding estimators and Σ̂𝒯 are still nonsingular.

Fig 1.

Averages and standard deviations of ‖Σ̂𝒯 − Σ‖Σ (dashed curve) and ‖Σ̂y − Σ‖Σ (solid curve) over N = 200 iterations, as a function of the dimensionality p.

Fig 2.

Averages and standard deviations of ‖Σ̂𝒯 − Σ‖∞ (dashed curve) and ‖Σ̂y − Σ‖∞ (solid curve) over N = 200 iterations, as a function of the dimensionality p.

Fig 3.

Averages and standard deviations of ‖(Σ̂𝒯)−1 − Σ−1‖ (dashed curve) and ‖(Σ̂y)−1 − Σ−1‖ (solid curve) over N = 200 iterations, as a function of the dimensionality p.

In conclusion, even after imposing less restrictive assumptions on the error covariance matrix, we still reach an estimator Σ̂𝒯 that significantly outperforms the standard sample covariance matrix.

6. Conclusions and Discussions

We studied the rate of convergence of high dimensional covariance matrix estimators for approximate factor models under various norms. By assuming a sparse error covariance matrix, we allow for the presence of cross-sectional correlation even after taking out the common factors. Since direct observations of the noises are not available, we first constructed the error sample covariance matrix from the estimation residuals, and then estimated the error covariance matrix using the adaptive thresholding method. We then constructed the covariance matrix of yt using the factor model, assuming that the factors follow a stationary and ergodic process, but can be weakly dependent. It was shown that after thresholding, the estimated covariance matrices are still invertible even if p > T, and the rate of convergence of (Σ̂𝒯)−1 and is of order , where K comes from the impact of estimating the unobservable noise terms. This demonstrates that, when estimating the inverse covariance matrix, p is allowed to be much larger than T.
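The procedure summarized above (OLS residuals, thresholded residual covariance, then the factor-based covariance) can be sketched as follows. This is an illustration under stated assumptions rather than the implementation used in the paper: the threshold C·sqrt(θ̂ij log p / T) follows the entry-adaptive form of Cai and Liu (2011) with a hypothetical tuning constant C, the diagonal is left unthresholded by choice, and the function name and the simulated design are invented for the example.

```python
import numpy as np

def factor_threshold_cov(Y, F, C=2.0):
    """Y: p x T observations, F: K x T observed factors.
    Returns (Sigma_hat, S_u_thr): the factor-based covariance estimate
    and the thresholded residual covariance."""
    p, T = Y.shape
    Yc = Y - Y.mean(axis=1, keepdims=True)
    Fc = F - F.mean(axis=1, keepdims=True)
    B_hat = Yc @ Fc.T @ np.linalg.inv(Fc @ Fc.T)   # OLS loadings, p x K
    U_hat = Yc - B_hat @ Fc                        # residuals, p x T
    S_u = U_hat @ U_hat.T / T                      # residual sample covariance
    # Entry-wise variability estimate: theta_ij = mean_t (u_it u_jt - s_ij)^2.
    prod = U_hat[:, None, :] * U_hat[None, :, :]   # p x p x T
    theta = np.mean((prod - S_u[:, :, None]) ** 2, axis=2)
    thr = C * np.sqrt(theta * np.log(p) / T)       # assumed threshold form
    keep = np.abs(S_u) > thr                       # hard thresholding
    np.fill_diagonal(keep, True)                   # never zero out variances
    S_u_thr = np.where(keep, S_u, 0.0)
    cov_f = Fc @ Fc.T / T
    Sigma_hat = B_hat @ cov_f @ B_hat.T + S_u_thr
    return Sigma_hat, S_u_thr

# Toy design (hypothetical): 3 factors, tridiagonal error covariance.
rng = np.random.default_rng(0)
p, K, T = 30, 3, 200
B = rng.standard_normal((p, K))
F = rng.standard_normal((K, T))
Sigma_u = np.eye(p) + 0.3 * (np.eye(p, k=1) + np.eye(p, k=-1))
U = np.linalg.cholesky(Sigma_u) @ rng.standard_normal((p, T))
Y = B @ F + U
Sigma_hat, S_u_thr = factor_threshold_cov(Y, F)
```

The p × p × T array used for θ̂ij is convenient for small p but memory-hungry for large p; a loop over rows would be the obvious alternative.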

In fact, the rate of convergence in Theorem 2.1 reflects the impact of the unobservable idiosyncratic components on the thresholding method. More generally, whether this rate is minimax when the idiosyncratic components cannot be observed directly and have to be estimated is an important question, which we leave as a direction for future research.

Moreover, this paper uses the hard-thresholding technique, which takes the form of σ̂ij(σij) = σijI(|σij| > θij) for some pre-determined threshold θij. Recently, Rothman et al (2009) and Cai and Liu (2011) studied a more general thresholding function of Antoniadis and Fan (2001), which admits the form σ̂ij(θij) = s(σij), and also allows for soft-thresholding. It is easy to apply the more general thresholding here as well, and the rate of convergence of the resulting covariance matrix estimators should be straightforward to derive.
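The difference between the two rules is easy to see on a small matrix. The sketch below applies entry-wise hard and soft thresholding with an entry-dependent threshold matrix; the function names, the example matrix and the threshold values are hypothetical and chosen only for illustration.

```python
import numpy as np

def hard_threshold(S, theta):
    """Keep s_ij when |s_ij| > theta_ij, otherwise set it to zero."""
    return S * (np.abs(S) > theta)

def soft_threshold(S, theta):
    """Shrink each s_ij toward zero by theta_ij, truncating at zero."""
    return np.sign(S) * np.maximum(np.abs(S) - theta, 0.0)

S = np.array([[1.00, 0.30, -0.05],
              [0.30, 1.00, 0.12],
              [-0.05, 0.12, 1.00]])
theta = np.full_like(S, 0.1)   # illustrative threshold for off-diagonal entries
np.fill_diagonal(theta, 0.0)   # leave the variances untouched

S_hard = hard_threshold(S, theta)
S_soft = soft_threshold(S, theta)
```

Hard thresholding keeps the surviving entries at their original values (0.30 and 0.12 here), whereas soft thresholding also shrinks them by the threshold (to 0.20 and 0.02); both rules set the −0.05 entries to zero.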

Finally, we considered the case when common factors are observable, as in Fama and French (1992). In some applications, the common factors are unobservable and need to be estimated (Bai (2003)). In that case, it is still possible to consistently estimate the covariance matrices using similar techniques as those in this paper. However, the impact of high dimensionality on the rate of convergence comes also from the estimation error of the unobservable factors. We plan to address this problem in a separate paper.

Acknowledgments

The research was partially supported by NIH Grant R01-GM072611, NSF Grant DMS-0704337, and NIH grant R01GM100474.

APPENDIX A: PROOFS FOR SECTION 2

A.1. Lemmas. We first prove the following lemmas, in which ‖·‖ denotes the operator norm, ‖A‖^2 = λmax(A′A).

Lemma A.1. Let A be an m × m random matrix and B an m × m deterministic matrix, both positive semidefinite. If there exists a positive sequence {cT} such that λmin(B) > cT for all large enough T, then

Proof. For any v ∈ ℝm such that ‖v‖ = 1, under the event ‖A − B‖ ≤ 0.5cT,

Hence λmin(A) ≥ 0.5cT.

In addition, still under the event ‖A − B‖ ≤ 0.5cT,

Q.E.D.
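The two facts used in this proof can be checked numerically. The sketch below assumes, since the displays are not reproduced here, that the second conclusion is the bound ‖A−1 − B−1‖ ≤ 2cT^(−2)‖A − B‖ on the event ‖A − B‖ ≤ 0.5cT; this follows from the identity A−1 − B−1 = A−1(B − A)B−1 together with λmin(A) ≥ 0.5cT. The matrices and the constant c are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(1)
m, c = 8, 1.0

# Deterministic-looking B with lambda_min(B) > c.
G = rng.standard_normal((m, m))
B = G @ G.T + 1.01 * c * np.eye(m)

# Symmetric perturbation scaled so that ||A - B|| = 0.5 * c.
E = rng.standard_normal((m, m))
E = (E + E.T) / 2
E *= 0.5 * c / np.linalg.norm(E, 2)
A = B + E

lam_min_A = np.linalg.eigvalsh(A)[0]   # smallest eigenvalue of A
lhs = np.linalg.norm(np.linalg.inv(A) - np.linalg.inv(B), 2)
rhs = 2.0 / c**2 * np.linalg.norm(A - B, 2)
```

By Weyl's inequality λmin(A) ≥ λmin(B) − ‖A − B‖, so `lam_min_A` is at least 0.5c, and `lhs` does not exceed `rhs`.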

Lemma A.2. Suppose that the random variables Z1, Z2 both satisfy the exponential-type tail condition: There exist r1, r2 ∈ (0, 1) and b1, b2 > 0, such that ∀s > 0,

Then for some r3 and b3 > 0, and any s > 0,

| (A.1) |

Proof. We have, for any s > 0, , b = b1b2, and r = r1r2/(r1 + r2),

Pick an r3 ∈ (0, r) and b3 > max{(r3/r)^(1/r) b, (1 + log 2)^(1/r) b}; then it can be shown that F(s) = (s/b)^r − (s/b3)^(r3) is increasing when s > b3. Hence F(s) > F(b3) > log 2 when s > b3, which implies that when s > b3,

When s ≤ b3,

Q.E.D.
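Since the displays of this proof are not reproduced above, the following is a sketch of one way the argument can be completed. It assumes that the tail condition has the form P(|Zi| > s) ≤ exp(−(s/bi)^(ri)) for i = 1, 2 and that (A.1) is a bound of the form exp(1 − (s/b3)^(r3)); the auxiliary level a is introduced here for illustration and need not match the authors' notation.

```latex
% Step 1: split the product event at a level a > 0.
\[
P(|Z_1 Z_2| > s) \le P(|Z_1| > a) + P(|Z_2| > s/a)
  \le \exp\{-(a/b_1)^{r_1}\} + \exp\{-(s/(a b_2))^{r_2}\}.
\]
% Step 2: balance the two exponents by taking
% a = (b_1^{r_1} s^{r_2} b_2^{-r_2})^{1/(r_1+r_2)}, which gives
\[
(a/b_1)^{r_1} = (s/(a b_2))^{r_2} = (s/b)^{r},
\qquad b = b_1 b_2,\quad r = \frac{r_1 r_2}{r_1 + r_2},
\]
\[
\text{so that}\quad P(|Z_1 Z_2| > s) \le 2\exp\{-(s/b)^{r}\}.
\]
% Step 3: for s > b_3, F(s) = (s/b)^r - (s/b_3)^{r_3} > \log 2, hence
\[
2\exp\{-(s/b)^{r}\} = \exp\{\log 2 - (s/b)^{r}\}
  \le \exp\{-(s/b_3)^{r_3}\} \le \exp\{1 - (s/b_3)^{r_3}\}.
\]
% Step 4: for s \le b_3, (s/b_3)^{r_3} \le 1, hence
\[
P(|Z_1 Z_2| > s) \le 1 \le \exp\{1 - (s/b_3)^{r_3}\}.
\]
```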

Lemma A.3. Under the Assumptions of Theorem 2.1, there exists a constant Cr > 0 that does not depend on (p, T), such that when C > Cr,

Proof. (i) By Assumption 2.1 and Lemma A.2, uitujt satisfies the exponential tail condition, with parameter r1/3 as shown in the proof of Lemma A.2. Therefore, by Bernstein's inequality (Theorem 1 of Merlevède (2009)), there exist constants C1, C2, C3, C4 and C5 > 0 that depend only on b1, r1 and r2 such that for any i, j ≤ p, and ,

Using Bonferroni’s method, we have

Let for some C > 0. It is not hard to check that when (log p)^(2/γ−1) = o(T) (by assumption), for large enough C,

and

This proves (i).

(ii) For some such that

| (A.2) |

under the event , by Cauchy-Schwarz inequality,

Since aT = o(1), when , we have, for all large T,

and

By part (i) and (A.2), P(Z ≤ CaT) ≥ 1 − O(p−2 + κ1(p, T)).

(iii) By (i) and (ii), there exists Cr > 0 such that, when C > Cr, the displayed inequalities in (i) and (ii) hold. Under the event , by the triangle inequality,

Hence the desired result follows from part (i) and part (ii) of the lemma.

Q.E.D.
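Part (i) of the lemma controls the largest entry-wise deviation of the error sample covariance from its population counterpart. The small experiment below illustrates the scale of that deviation under the assumption, not restated in this appendix, that the relevant rate is sqrt(log p / T). It uses independent standard normal errors, which is far more restrictive than the mixing and tail conditions of the paper; the sample sizes are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(2)
T = 400
ratios = {}
for p in (25, 50, 100):
    U = rng.standard_normal((p, T))              # i.i.d. N(0, 1) errors, Sigma_u = I
    S = U @ U.T / T                              # sample second-moment matrix
    max_dev = np.max(np.abs(S - np.eye(p)))      # max_ij |s_ij - sigma_ij|
    ratios[p] = max_dev / np.sqrt(np.log(p) / T) # deviation relative to the assumed rate
```

If the assumed rate is the right scale, the entries of `ratios` should stay of the same order as p grows rather than increase with p.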

Lemma A.4. Under Assumptions 2.1, 2.2,

where

Proof. (i) Using Bernstein's inequality and the same argument as in the proof of Lemma A.3(i), we have, there exists , and (log p)^(6/γ−1) = o(T),

For some C > 0, under the event , where

we have, for any i, j, by adding and subtracting terms,

where the O(.) and o(.) terms are uniform in p and T. Hence under , for all large enough T, p, uniformly in i, j, we have

Still by adding and subtracting terms, we obtain

Under the event , we have

Hence for all large T, p, uniformly in i, j, we have minij var(uitujt).

Finally, by Lemma A.3 and Assumption 2.2,

which completes the proof.

Q.E.D.

A.2. Proof of Theorem 2.1.

Proof. (i) For the operator norm, we have

By Lemma A.3 (iii), there exists C1 > 0 such that the event

occurs with probability . Let C > 0 be such that , where CL is defined in Lemma A.4. Let , and by Lemma A.4,

Define the following events

where CU is defined in Lemma A.4. Under , the event implies |σij| ≥ bT, and the event implies . We thus have, uniformly in i ≤ p, under ,

By Lemmas A.3(iii) and A.4, , which proves the result. Q.E.D.

(ii) By part (i) of the theorem, there exists some C > 0,

By Lemma A.1,

In addition, when ωTmT = o(1),

where the third inequality follows from Lemma A.1 as well.

Q.E.D.

APPENDIX B: PROOFS FOR SECTION 3

B.1. Proof of Theorem 3.1.

Lemma B.1. There exists C1 > 0 such that,

Proof. (i) Let . We bound maxij |Zij| using Bernstein type inequality. Lemma A.2 implies that for any i and j ≤ K, fitfjt satisfies the exponential tail condition (3.3) with parameter r3/3. Let , where r2 > 0 is the parameter in the strong mixing condition. By Assumption 3.3, r4 < 1, and by the Bernstein inequality for weakly dependent data in Merlevède (2009, Theorem 1), there exist Ci > 0, i = 1, …, 5, for any s > 0

| (B.1) |

Using the Bonferroni inequality,

Let . For all large enough C, since K2 = o(T),

This proves part (i).

(ii) By Lemma A.2, and Assumptions 2.1(iii) and 3.3(ii), satisfies the exponential tail condition (2.6) for the tail parameter 2r1r3/(3r1 + 3r3), as well as the strong mixing condition with parameter r2. Hence again we can apply the Bernstein inequality for weakly dependent data in Merlevède (2009, Theorem 1) and the Bonferroni’s method on similar to (B.1) with the parameter . It follows from that γ2 < 1. Thus when for large enough C, the term

and the remaining terms on the right-hand side of the inequality, multiplied by pK, are of order o(p−2). Hence when (log p)^(2/γ2−1) = o(T) (which is implied by the theorem's assumption) and K = o(p), there exists C′ > 0 such that

| (B.2) |

Q.E.D.

Proof of Lemma 3.1

(i) Since is bounded away from zero, for large enough T, by Lemma B.1(i),

| (B.3) |

Hence by Lemma A.1,

| (B.4) |

The desired result then follows from the fact that .

(ii) For C > maxi≤K , we have, by Lemma B.1(i),

The result then follows from

and part (i).

(iii) By Assumption 3.3, for any s > 0,

When for large enough C, i.e., ,

The result then follows from

and Lemma 3.1(i). Q.E.D.

Proof of Theorem 3.1 Theorem 3.1 follows immediately from Theorem 2.1 and Lemma 3.1. Q.E.D.

B.2. Proof of Theorem 3.2 Part (i). Define

We have,

| (B.5) |

We bound the terms on the right hand side in the following lemmas.

Lemma B.2. There exists C > 0, such that

Proof. (i) Similar to the proof of Lemma B.1(i), it can be shown that there exists C1 > 0,

Hence supK maxi≤K E|fit| < ∞ implies that there exists C > 0 such that

The result then follows from Lemma B.1(i) and that

(ii) We have CT = EX′(XX′)−1. By Lemma B.1 (ii), there exists C′ > 0 such that

Under the event

, which proves the result since is bounded away from zero and P(A) ≥ 1 − O (T−2 + p−2) due to (B.4).

Q.E.D.
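The expression in part (ii) is the usual OLS error identity. Assuming that CT denotes B̂ − B, that the model is written in matrix form as Y = BX + E with X the K × T matrix of factors, and that B̂ = YX′(XX′)−1 (none of which is restated in this appendix), the identity can be verified numerically. The dimensions and the simulated data below are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(3)
p, K, T = 10, 3, 200
B = rng.standard_normal((p, K))         # true loadings
X = rng.standard_normal((K, T))         # observed factors
E = 0.5 * rng.standard_normal((p, T))   # idiosyncratic errors
Y = B @ X + E

XXt_inv = np.linalg.inv(X @ X.T)
B_hat = Y @ X.T @ XXt_inv               # OLS estimator of the loadings
C_T = E @ X.T @ XXt_inv                 # claimed form of the estimation error

max_gap = np.max(np.abs((B_hat - B) - C_T))
```

Substituting Y = BX + E into B̂ gives B̂ = B + EX′(XX′)−1 exactly, so `max_gap` is zero up to floating-point error.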

Lemma B.3. There exists C > 0 such that

Proof. (i) The same argument in Fan, Fan and Lv (2008), proof of Theorem 2 implies that

Hence

| (B.6) |

On the other hand,

| (B.7) |

Respectively,

| (B.8) |

By Lemma B.1(i), and , P(‖XX′‖ > TC) = O(T−2) for some C > 0. Hence, Lemma B.2 (ii) implies

| (B.9) |

for some C′ > 0. In addition, the eigenvalues of are all bounded away from both zero and infinity with probability at least 1 − O(T−2) (implied by Lemmas B.1(i), A.1, and Assumption 3.4). Hence for some C1 > 0, with probability at least 1 − O(T−2),

| (B.10) |

The result then follows from the combination of (B.6)–(B.10), and Lemma B.2.

(ii) Straightforward calculation yields:

Since ‖cov(ft)‖ is bounded, by Lemma B.1(i), is bounded with probability at least 1 − O(T−2). The result again follows from Lemma B.2(ii).

Proof of Theorem 3.2 Part (i)

- We have

| (B.11) |

- For the infinity norm, it is straightforward to find that

| (B.12) |

By Assumption, both ‖B‖∞ and ‖cov(ft)‖∞ are bounded uniformly in (p, K, T). In addition, let ei be a p-dimensional column vector whose ith component is one with the remaining components being zeros. Then under the events , we have, for some C′ > 0,

| (B.13) |

| (B.14) |

| (B.15) |

| (B.16) |

| (B.17) |

and

| (B.18) |

Moreover, the (i, j)th entry of is given by

Hence , which implies that with probability at least 1 − O(p−2 + T−2),

| (B.19) |

The result then follows from the combination of (B.12)–(B.19), (B.4), and Lemmas 3.1,B.1.

Q.E.D.

B.3. Proof of Theorem 3.2 Part (ii). We first prove two technical lemmas to be used below.

Lemma B.4. (i) for some c > 0.

(ii) .

Proof. (i) We have

It then follows from Assumption 3.5 that λmin(B′B) > cp for some c > 0 and all large p. The result follows since ‖Σu‖ is bounded away from infinity.

(ii) It follows immediately from

Q.E.D.

Lemma B.5. There exists C > 0 such that,

- for ,

Proof. (i) Let .

The same argument of Fan, Fan and Lv (2008) (eq. 14) implies that . Therefore, by Theorem 3.1 and Lemma B.2(ii), it is straightforward to verify the result.

(ii) Since ‖DT ‖F ≥ ‖DT‖, according to Lemma B.2(i), there exists C′ > 0 such that with probability at least 1 − O(T−2), . Thus by Lemma A.1, for some C″ > 0,

which implies

| (B.20) |

Now let . Then part (i) and (B.20) imply

| (B.21) |

In addition, . Hence by Lemmas A.1, B.4(ii), for some C > 0,

which implies the desired result.

(iii) By the triangle inequality, . Hence Lemma B.2(ii) implies, for some C > 0,

| (B.22) |

In addition, since is bounded, it then follows from Theorem 3.1 that is bounded with probability at least 1 − O(p−2+T−2). The result then follows from the fact that

which is shown in part (ii).

Q.E.D.

To complete the proof of Theorem 3.2 Part (ii), we follow similar lines of proof as in Fan, Fan and Lv (2008). Using the Sherman-Morrison-Woodbury formula, we have

| (B.23) |

The bound of L1 is given in Theorem 3.1.

For , then

| (B.24) |

It follows from Theorem 3.1 and Lemma B.5(iii) that

The same bound can be obtained in the same way for L3. For L4, we have

It follows from Lemmas B.2, B.5(ii), and inequality (B.22) that

The same bound also applies to L5. Finally,

where both  and A are defined after inequality (B.20). By Lemma B.4(ii), ‖A−1‖ = O(p−1). Lemma B.5(ii) implies P(‖Â−1 ‖ > Cp−1) = O(p−2 + T−2). Combining with (B.21), we obtain

The proof is completed by combining L1 ~ L6. Q.E.D.
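The Sherman-Morrison-Woodbury step that starts this argument can be checked numerically. The sketch below assumes the factor structure Σ = B cov(ft) B′ + Σu and writes the K × K inner matrix as cov(ft)−1 + B′Σu−1B; whether this coincides with the matrix A defined after (B.20) is an assumption, and the dimensions and matrices are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(4)
p, K = 12, 3
B = rng.standard_normal((p, K))
G = rng.standard_normal((K, K))
cov_f = G @ G.T + np.eye(K)                       # positive definite factor covariance
Sigma_u = np.diag(rng.uniform(0.5, 1.5, size=p))  # sparse error covariance
Sigma_u[0, 1] = Sigma_u[1, 0] = 0.2               # one nonzero off-diagonal pair

Sigma = B @ cov_f @ B.T + Sigma_u

# Woodbury form: only Sigma_u and a K x K matrix need to be inverted.
Su_inv = np.linalg.inv(Sigma_u)
A = np.linalg.inv(cov_f) + B.T @ Su_inv @ B
Sigma_inv_smw = Su_inv - Su_inv @ B @ np.linalg.inv(A) @ B.T @ Su_inv

max_gap = np.max(np.abs(Sigma_inv_smw - np.linalg.inv(Sigma)))
```

The formula explains why the inverse of the factor-based estimator can be analyzed through the inverse of the thresholded error covariance and a K × K matrix whose eigenvalues grow with p.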

APPENDIX C: PROOFS FOR SECTION 4

The proof is similar to that of Lemma 3.1. Thus we sketch it very briefly. The OLS is given by

The same arguments in the proof of Lemma B.1 can yield, for large enough C > 0,

which then implies the rate of

The result then follows from a straightforward application of Theorem 2.1. Q.E.D.

Contributor Information

Jianqing Fan, Email: jqfan@princeton.edu.

Yuan Liao, Email: yuanliao@princeton.edu.

Martina Mincheva, Email: mincheva@princeton.edu.

REFERENCES

- 1. Antoniadis A, Fan J. Regularized wavelet approximations. J. Amer. Statist. Assoc. 2001;96:939–967.

- 2. Bai J. Inferential theory for factor models of large dimensions. Econometrica. 2003;71:135–171.

- 3. Bai J, Ng S. Determining the number of factors in approximate factor models. Econometrica. 2002;70:191–221.

- 4. Bickel P, Levina E. Covariance regularization by thresholding. Ann. Statist. 2008a;36:2577–2604.

- 5. Bickel P, Levina E. Regularized estimation of large covariance matrices. Ann. Statist. 2008b;36:199–227.

- 6. Cai T, Liu W. Adaptive thresholding for sparse covariance matrix estimation. J. Amer. Statist. Assoc. 2011;106:672–684.

- 7. Cai T, Zhou H. Manuscript. University of Pennsylvania; 2010. Optimal rates of convergence for sparse covariance matrix estimation.

- 8. Chamberlain G, Rothschild M. Arbitrage, factor structure and mean-variance analysis in large asset markets. Econometrica. 1983;51:1305–1324.

- 9. Connor G, Korajczyk R. A Test for the number of factors in an approximate factor model. Journal of Finance. 1993;48:1263–1291.

- 10. Fama E, French K. The cross-section of expected stock returns. Journal of Finance. 1992;47:427–465.

- 11. Lam C, Fan J. Sparsistency and rates of convergence in large covariance matrix estimation. Ann. Statist. 2009;37:4254–4278. doi: 10.1214/09-AOS720.

- 12. Fan J, Fan Y, Lv J. High dimensional covariance matrix estimation using a factor model. J. Econometrics. 2008;147:186–197.

- 13. Fan J, Zhang J, Yu K. Manuscript. Princeton University; 2008. Asset location and risk assessment with gross exposure constraints for vast portfolios.

- 14. Gorman M. Some Engel curves. In: Deaton A, editor. Essays in the Theory and Measurement of Consumer Behavior in Honor of Sir Richard Stone. New York: Cambridge University Press; 1981.

- 15. Harding M. Manuscript. Stanford University; 2009. Structural estimation of high-dimensional factor models.

- 16. James W, Stein C. Estimation with quadratic loss. Proc. Fourth Berkeley Symp. Math. Statist. Probab; Univ. California Press; Berkeley. 1961. pp. 361–379.

- 17. Kmenta J, Gilbert R. Estimation of seemingly unrelated regressions with autoregressive disturbances. J. Amer. Statist. Assoc. 1970;65:186–196.

- 18. Lewbel A. The rank of demand systems: theory and nonparametric estimation. Econometrica. 1991;59:711–730.

- 19. Merlevède F, Peligrad M, Rio E. Manuscript. Université Paris Est.; 2009. A Bernstein type inequality and moderate deviations for weakly dependent sequences.

- 20. Rothman A, Levina E, Zhu J. Generalized thresholding of large covariance matrices. J. Amer. Statist. Assoc. 2009;104:177–186.

- 21. Zellner A. An efficient method of estimating seemingly unrelated regressions and tests for aggregation bias. J. Amer. Statist. Assoc. 1962;57:348–368.