Abstract

We evaluated the effects of differential reinforcement and accurate verbal rules with feedback on the preference for choice and the verbal reports of 6 adults. Participants earned points on a probabilistic schedule by completing the terminal links of a concurrent-chains arrangement in a computer-based game of chance. In free-choice terminal links, participants selected 3 numbers from an 8-number array; in restricted-choice terminal links, participants selected the order of 3 numbers preselected by a computer program. A pop-up box then informed the participants whether the numbers they selected or ordered matched numbers generated (but not displayed) by the computer; a match on a trial earned one point. In baseline sessions, schedules of reinforcement were equal across free- and restricted-choice arrangements, and a running tally of points earned was shown on each trial. The effects of differentially reinforcing restricted-choice selections were evaluated using a reversal design. For 4 participants, the effects of providing a running tally of points won by arrangement and verbal rules regarding the schedule of reinforcement were also evaluated using a nonconcurrent multiple-baseline-across-participants design. Results varied across participants but generally demonstrated that (a) preference for choice corresponded more closely to verbal reports of the odds of winning than to reinforcement schedules, (b) rules and feedback were correlated with more accurate verbal reports, and (c) preference for choice corresponded more closely to the relative number of reinforcers obtained across free- and restricted-choice arrangements in a session than to the obtained probability of reinforcement or to verbal reports of the odds of winning.

Keywords: choice, preference, differential reinforcement, concurrent-chains arrangement, rule-governed behavior, self-rules, probabilistic, verbal reports

A number of studies have shown that humans and nonhumans often prefer choice arrangements to no-choice arrangements (e.g., Catania, 1975; Catania & Sagvolden, 1980; Fisher, Thompson, Piazza, Crosland, & Gotjen, 1997; Schmidt, Hanley, & Layer, 2009; Thompson, Fisher, & Contrucci, 1998; Tiger, Hanley, & Hernandez, 2006; Voss & Homzie, 1970). This preference has been demonstrated when researchers have controlled qualitative and quantitative differences between choice and no-choice arrangements by, among other procedures: (a) yoking reinforcers in the no-choice arrangement to selections in the choice arrangement (e.g., Fisher et al., 1997; but see Lerman et al., 1997); (b) using a concurrent-chains arrangement to test preference between one item (no-choice arrangement) versus several items identical to one another and to the no-choice item (choice arrangement), but allowing access to only one item in each arrangement (e.g., Tiger et al., 2006); and (c) using the same number of identical items in the no-choice and choice arrangements, but allowing the participant to select one item in the choice arrangement versus the experimenter selecting one item in the no-choice arrangement (e.g., Schmidt et al., 2009).

Whether preference for choice is relatively small but persistent (e.g., Catania & Sagvolden, 1980) or robust (e.g., Thompson et al., 1998), the cause or causes of this preference remain undetermined. Several studies have found evidence suggesting that preference for choice in humans may be influenced by stimulus generalization (Tiger et al., 2006) or reinforcement history (Karsina, Thompson, & Rodriguez, 2011). In these accounts, preference for choice in the absence of differential reinforcement for choice is explained, at least in part, by some relation to extra-experimental situations in which choice has produced differential reinforcement.

Verbal rules might also be a source of extra-experimental influence on the preference for choice. The literature on rule-governed behavior has shown that rules in the form of instructions can affect behavior in settings inside and outside the laboratory (for a brief review, see Baron & Galizio, 1983). In some cases, contingencies that alone were ineffective in changing behavior were found to be effective when instructions were added (e.g., Ayllon & Azrin, 1964), and in other cases temporal patterns of responding were found to match schedules of reinforcement more closely when instructions were provided (e.g., Baron, Kaufman, & Stauber, 1969; Galizio, 1979; Weiner, 1970). Other studies have found that incomplete or inaccurate instructions may produce some degree of insensitivity to schedules of reinforcement; that is, responding may not change, or may change only slowly and minimally, when contingencies change (e.g., Bicard & Neef, 2002; Hackenberg & Joker, 1994; Hayes, Brownstein, Zettle, Rosenfarb, & Korn, 1986; Joyce & Chase, 1990; Kaufman, Baron, & Kopp, 1966; Lippman & Meyer, 1967; Matthews, Shimoff, Catania, & Sagvolden, 1977; Shimoff, Catania, & Matthews, 1981; Weiner, 1970).

Dixon (2000) measured the quantity of chips wagered by five female undergraduates when they picked the location of a bet on a roulette wheel and when the experimenter picked the location of the bet for them. At the conclusion of each of two sessions, the undergraduates were asked to estimate their actual winnings and their odds of winning. During a no-rules baseline and an inaccurate-rules condition (in which participants were individually given statements suggesting that picking their own numbers would increase their chances of winning), most participants wagered more, and overestimated their winnings and odds of winning, when they placed their own bets. When provided with accurate rules (e.g., statements that the odds of winning were independent of who picked the numbers), the participants still wagered more chips when they placed their own bets, though to a lesser extent than when provided with inaccurate rules, and they estimated their winnings and odds of winning more accurately. This study suggests that rules may play a role in the preference for choice.

However, Dixon's (2000) study has several limitations. Each participant underwent the same sequence of conditions (no rules, inaccurate rules, and accurate rules); therefore, sequence and practice effects cannot be ruled out. In addition, although the participants wagered individually and rules were introduced privately, all of the participants were present at the same time and witnessed one another's wagers and winnings, and this group arrangement may have influenced responding. Also, although the amount wagered per bet was measured repeatedly, verbal reports of the odds of winning were assessed only twice, once after each session. Thus, this important variable was measured only sparsely.

In the current study, we examined the effects of differential reinforcement and rules with feedback on the preference for choice and on verbal reports related to the experimental task. Accordingly, we retained for continued participation only those participants who, at least initially, demonstrated a preference for a free-choice arrangement over a restricted-choice arrangement and who reported that the odds of winning were better when they selected the free-choice arrangement. We then measured preference for free choice and verbal reports of the odds of winning under parametric manipulations of the differential reinforcement of restricted-choice selections. These manipulations tested the durability of both the preference for free choice and the verbal reports that the odds of winning were better when the free-choice arrangement was selected.

METHOD

Participants

Eleven undergraduates enrolled in an introductory psychology course taught by the third author at a small New England college and three adult employees of a school for children with autism and related disorders participated in the study. Seven of the undergraduates and one of the employees did not display a preference for free choice or did not report that the odds of winning were better when they selected the free-choice arrangement in initial sessions, and were therefore dropped from the study. The remaining undergraduates were three males (Edmond, Robert, and Xavier) and one female (Lucy), 18 to 20 years of age, who volunteered for this study, selecting it over at least one other equivalent activity, to receive extra credit. The employees, Alysa, a 45-year-old female speech and language pathologist with an advanced degree in speech pathology, and Chloe, a 42-year-old female administrative assistant with a bachelor's degree, were selected on the basis of their willingness to participate and their availability. Alysa and Chloe received a small amount of money each session (1 to 4 cents per point earned) and tickets for a raffle to be held at the end of the study (the tickets and raffle were not offered to the undergraduates). Raffle tickets were won by earning at least a prespecified number of points in a session; Alysa and Chloe typically, but not always, won a ticket. Alysa and Chloe were told that there would be more than one prize and that the prizes would be of small monetary value (the prizes were $10 and $20 iTunes gift cards, and Alysa and Chloe each received one card). Approval was obtained from the local Institutional Review Board.

Setting and Materials

Sessions were conducted in a room equipped with one or two tables, several chairs, and laptop computers, each with a computer mouse and a game programmed in Microsoft PowerPoint. Between one and six participants were in the room during sessions. When more than one participant was present, headphones were used to restrict auditory feedback from the game to the intended participant. Participants were instructed not to interact with one another during sessions and not to discuss the experiment with anyone except the experimenters. An experimenter sat in the room, away from the participant(s), and read or worked quietly while the participant(s) played the game.

Procedure

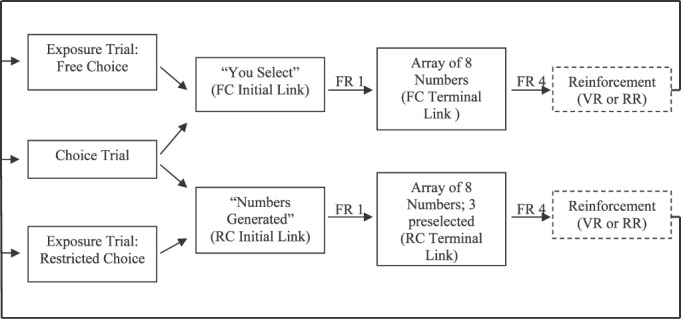

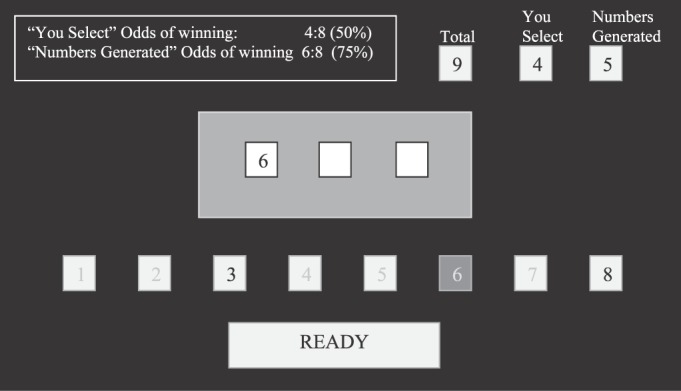

A concurrent-chains arrangement in the context of a computer-based game was used to measure preference for free choice (see Figure 1). The game is described in detail in Karsina et al. (2011) and is summarized briefly here. Participants were instructed that the goal of the game was to earn points by matching numbers to random numbers generated by the computer. Trials were presented in three sets of 40 trials; each set consisted of 32 exposure trials followed by 8 choice trials.

Figure 1. Schematic representing the concurrent-chains arrangement. During exposure trials, only one initial link was presented (FC = free choice; RC = restricted choice); during choice trials both initial links were presented. One click of the computer mouse (FR = fixed ratio) within one of the initial links brought up the corresponding terminal link. Clicking on three numbers and a “Ready” button (FR 4) completed the terminal link, resulting in a point delivery on a variable-ratio (VR; exposure trials) or random-ratio (RR; choice trials) schedule. Following completion of the terminal link, the cycle started over.

During exposure trials, the participant was presented with a gray message box with the words “You Select” (free-choice initial link) or “Numbers Generated” (restricted-choice initial link). The participant advanced to the terminal link on a fixed-ratio (FR) 1 schedule by clicking on the message box with the computer mouse. During choice trials, both message boxes were presented simultaneously, and the participant clicked one of the boxes (also an FR 1 schedule) to advance to the corresponding terminal link. In the free-choice terminal link, the participant selected three numbers from a row of eight numbers (1–8, in ascending order) by clicking on them with the mouse. In the restricted-choice terminal link, the participant was presented with the same row of eight numbers, but five of the numbers (randomly determined on each presentation) were dimmed and inoperative; the participant selected the order of the three operative numbers by clicking on them. After the numbers were selected (free-choice terminal link) or ordered (restricted-choice terminal link) and the participant clicked “Ready” (a total of four clicks for each terminal link, or an FR 4 schedule), a pop-up box appeared with a written message stating either that the numbers matched and a point had been earned or that the numbers did not match and no point was earned. The participant then clicked an “OK” button in the pop-up box and advanced to the next initial-link screen. In both the free- and restricted-choice terminal links, a square with a running points tally was present in the upper right corner of the screen; this tally was updated with each new presentation of the terminal links. Additionally, in the Rules-with-Feedback Condition, a statement of the odds of winning for each choice arrangement and the number of points won in each terminal link appeared at the top of the screen.

Reinforcement in the form of points was delivered on a variable-ratio (VR) schedule during exposure trials and on a random-ratio (RR) schedule during choice trials; the specific schedules varied across phases. RR rather than VR schedules were used during choice trials because the participant, not the program, determined which terminal link was entered on each choice trial, so the number of exposures to each terminal link could not be controlled. Figure 2 provides a schematic of the restricted-choice terminal link in the Rules-with-Feedback Condition; see Karsina et al. (2011) for additional schematics.
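The practical difference between the two schedule types can be illustrated with a short simulation. This is a hypothetical sketch: the source does not describe how the game program implemented its schedules, so here a VR-like schedule is assumed to deliver a fixed number of points within each block of exposures in shuffled order, whereas an RR schedule reinforces each terminal-link completion independently with a fixed probability. The function names `vr_block` and `rr_outcome` are illustrative only.

```python
import random
from typing import List


def vr_block(n_exposures: int, n_reinforcers: int, rng: random.Random) -> List[bool]:
    """Hypothetical VR-like block: exactly n_reinforcers of n_exposures are
    reinforced, in a randomly shuffled order."""
    if not 0 <= n_reinforcers <= n_exposures:
        raise ValueError("n_reinforcers must be between 0 and n_exposures")
    outcomes = [True] * n_reinforcers + [False] * (n_exposures - n_reinforcers)
    rng.shuffle(outcomes)
    return outcomes


def rr_outcome(p: float, rng: random.Random) -> bool:
    """RR schedule: each completion is reinforced independently with probability p."""
    return rng.random() < p


if __name__ == "__main__":
    rng = random.Random(2011)  # arbitrary seed for reproducibility
    vr = vr_block(8, 4, rng)                       # always exactly 4 points
    rr = [rr_outcome(0.5, rng) for _ in range(8)]  # 4 points on average, but varies
    print("VR-like block:", sum(vr), "of 8 reinforced")
    print("RR trials:    ", sum(rr), "of 8 reinforced")
```

Because RR outcomes are independent, the obtained probability of reinforcement within a session can depart from the programmed probability, which is why obtained measures were computed separately from the programmed schedules.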

Figure 2. Schematic representing the restricted-choice terminal link in the Rules-with-Feedback Condition. In this schematic, the participant has clicked on the number 6 and has two more numbers to enter (“3” and “8,” in any order). The participant's point total entering the trial is 9; 4 of these points were delivered following free-choice terminal link completions and 5 following restricted-choice terminal link completions.

There were two differences between the procedures for the students and the employees. First, the employees (Alysa and Chloe) exchanged the points they earned for money following each session and could earn a raffle ticket each session if they earned at least a prespecified number of points. This criterion was calculated as the number of points programmed during exposure trials plus 12 (i.e., points on 50% of the 24 choice trials). The undergraduates (Edmond, Lucy, Robert, and Xavier) received extra credit for participating in the sessions, but the amount of extra credit did not depend on the points earned, and they could not earn raffle tickets. Second, Alysa and Chloe had an additional question on their end-of-session questionnaires, which asked whether they wanted to roll a 4-sided die to determine the monetary value (from 1 to 4 cents) of each point delivered during the session or wanted the experimenter to roll the die. This question was intended to measure generalization across stimulus arrangements, but the results were inconclusive and are omitted from this report. After the questionnaire was completed, either the participant or the experimenter rolled the 4-sided die, depending on the participant's answer to this question. The money earned for the session was then calculated and delivered to the participant, along with a raffle ticket (if earned). Occasionally, Alysa and Chloe chose to save their earnings until the next session in order to have enough money to use in a nearby vending machine. Otherwise, the procedures were the same for all participants.
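The raffle criterion is simple arithmetic, sketched below. The sketch assumes that the programmed number of exposure-trial points equals each arrangement's number of exposures multiplied by its programmed probability of a point; the function names and the 48/48 split of the 96 exposure trials (3 sets × 32) are hypothetical. Under the equal VR 2 schedules of NC sessions, any split yields the same 48 programmed points.

```python
from fractions import Fraction


def raffle_threshold(exposures_fc: int, p_fc: Fraction,
                     exposures_rc: int, p_rc: Fraction,
                     n_choice_trials: int = 24) -> Fraction:
    """Points needed for a raffle ticket: programmed exposure-trial points
    plus points on 50% of the choice trials."""
    programmed_exposure_points = exposures_fc * p_fc + exposures_rc * p_rc
    return programmed_exposure_points + Fraction(n_choice_trials, 2)


def earned_ticket(points_earned: int, threshold: Fraction) -> bool:
    """A ticket was earned at or above the criterion."""
    return points_earned >= threshold


# NC session: VR 2 (p = 1/2) on both links, with a hypothetical 48/48 split.
threshold = raffle_threshold(48, Fraction(1, 2), 48, Fraction(1, 2))
print(threshold)  # 60
```

`Fraction` keeps the arithmetic exact, which matters when schedules such as 5∶8 do not divide the exposure count evenly.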

Response Measurement

The computer program recorded all mouse clicks during the initial and terminal links and all points delivered during each trial. In addition, the program recorded latency (in seconds) from presentation to termination of each terminal link, the numbers selected or generated on each trial, and the order of selection. All data were saved on a Microsoft Excel spreadsheet. There were no relevant findings related to latency, numbers selected or generated, or the order of selections, so those data are not reported here.

Each participant's performance was analyzed via visual inspection for patterns consistent with preference for free choice and a self-rule of the efficacy of choice. The critical measures for these analyses were the choice quotient (see below) and the verbal report regarding the choice arrangement with the better “odds of winning” (as measured on the post-session questionnaire). Choice quotients indicating a preference for free choice, followed by verbal reports that over-estimated the probability of earning points in the free-choice arrangement relative to the restricted-choice arrangement, were consistent with—but not proof of—the possibility that preference for free choice was influenced by a self-rule of the efficacy of free choice; all other combinations were inconsistent with this possibility.

Choice quotient

The choice quotient expresses the preference for free choice versus restricted choice and was calculated for the 24 choice trials within a session as well as for the 8 choice trials in each set (each set consisted of 32 exposure trials followed by 8 choice trials and there were 3 sets per session). The choice quotient was calculated by subtracting the proportion of selections for restricted choice from the proportion of selections for free choice during choice trials. This resulted in a number from −1 to 1 (inclusive), with 1 indicating exclusive selections for free choice, 0 indicating equal free- and restricted-choice selections (i.e., indifference), and −1 indicating exclusive selections for restricted choice.
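A minimal sketch of the choice-quotient calculation, assuming each choice-trial selection is coded as the hypothetical string "FC" or "RC". Because every set has the same number of choice trials, the session quotient equals the mean of the three set quotients.

```python
from typing import List, Sequence, Tuple


def choice_quotient(selections: Sequence[str]) -> float:
    """Proportion of free-choice ("FC") selections minus proportion of
    restricted-choice ("RC") selections; 1 = exclusive FC, -1 = exclusive RC."""
    n = len(selections)
    if n == 0:
        raise ValueError("choice quotient is undefined for zero choice trials")
    p_fc = sum(s == "FC" for s in selections) / n
    p_rc = sum(s == "RC" for s in selections) / n
    return p_fc - p_rc


def session_choice_quotients(selections: Sequence[str],
                             set_size: int = 8) -> Tuple[List[float], float]:
    """Per-set quotients (8 choice trials each) and the quotient for all choice trials."""
    sets = [selections[i:i + set_size] for i in range(0, len(selections), set_size)]
    return [choice_quotient(s) for s in sets], choice_quotient(selections)


# Hypothetical session: all FC in set 1, all RC in set 2, 6 FC / 2 RC in set 3.
session = ["FC"] * 8 + ["RC"] * 8 + ["FC"] * 6 + ["RC"] * 2
per_set, overall = session_choice_quotients(session)
print(per_set)            # [1.0, -1.0, 0.5]
print(round(overall, 3))  # 0.167
```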

Points quotient

Because the participant determined which terminal link was entered on each of the 8 choice trials per set, a preference for one terminal link resulted in unequal exposure to the two terminal links within the set. As a result, the number of points earned could differ across choice arrangements in sessions with no programmed differential reinforcement of free or restricted choice, even though the probability of earning a point was equal (or nearly equal) across arrangements. To measure this potential discrepancy, we calculated a points quotient for each session and for each set (32 exposure trials and 8 choice trials) within sessions by dividing the number of points delivered in the free-choice arrangement by the total number of points delivered and subtracting from this value the number of points delivered in the restricted-choice arrangement divided by the same total. The resulting number ranged from −1 to 1 (inclusive), with −1 indicating that all of the points were delivered in the restricted-choice arrangement, 0 indicating equal point distribution across choice arrangements, and 1 indicating that all of the points were delivered in the free-choice arrangement.
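A minimal sketch of the points quotient, assuming the free- and restricted-choice point totals for a session or set have already been tallied (the function name is hypothetical). Because both shares use the same denominator, the quotient reduces to the difference in points divided by the total.

```python
def points_quotient(fc_points: int, rc_points: int) -> float:
    """FC share of points minus RC share of points for a session or set.
    1 = all points in FC, 0 = equal split, -1 = all points in RC."""
    total = fc_points + rc_points
    if total == 0:
        raise ValueError("points quotient is undefined when no points were delivered")
    return fc_points / total - rc_points / total


# Point split from the Figure 2 example: 4 FC points and 5 RC points.
print(round(points_quotient(4, 5), 3))  # -0.111
```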

Odds quotient

The use of RR schedules in choice trials also allowed obtained probabilities of reinforcement to differ from programmed probabilities of reinforcement. Therefore, we calculated the obtained probability of point delivery in free- and restricted-choice arrangements each session, and subtracted the probability of point delivery in restricted-choice arrangements from the probability of point delivery in free-choice arrangements. As with the previously described quotients, the resulting quotient (henceforth referred to as the “odds quotient”) was a number from −1 to 1 (inclusive). A −1 indicated that all of the restricted-choice terminal links and only the restricted-choice links were followed by a point, 0 indicated that the probability of point delivery following restricted- and free-choice terminal links was equal, and 1 indicated all of the free-choice terminal links and only the free-choice terminal links were followed by a point.
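The odds quotient can be sketched as a difference of two obtained proportions. The sketch assumes counts of terminal-link completions and points for each arrangement, tallied over whichever trials the analysis covers; the function name and the example counts are hypothetical.

```python
def odds_quotient(fc_points: int, fc_completions: int,
                  rc_points: int, rc_completions: int) -> float:
    """Obtained P(point | FC terminal link) minus obtained P(point | RC terminal link)."""
    if fc_completions == 0 or rc_completions == 0:
        raise ValueError("each arrangement needs at least one terminal-link completion")
    return fc_points / fc_completions - rc_points / rc_completions


# Hypothetical counts: 26 of 50 FC links and 27 of 50 RC links reinforced.
print(round(odds_quotient(26, 50, 27, 50), 2))  # -0.02
```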

Correspondence of preference to reinforcement schedules

Our experiment was designed to measure the effects of differential reinforcement and verbal rules with feedback on preference for free choice and verbal reports of the relative odds of winning across free- and restricted-choice arrangements. Therefore, having a measure of how well responding during choice trials (i.e., preference) conformed to the different schedules of reinforcement allowed us to examine effects of reinforcement and rules with feedback. Schedules of reinforcement during choice trials were always RR schedules; thus, when free-choice selections and restricted-choice selections had the same RR schedules, any pattern of responding was optimal in terms of maximizing reinforcement and therefore correspondence of preference to this reinforcement schedule could not be calculated. However, when the RR schedules were not equal, exclusive responding to the terminal link with the higher probability of reinforcement maximized reinforcement, even if the difference in schedule values was relatively small.

We calculated correspondence of preference to reinforcement schedules by subtracting the proportion of selections for the choice arrangement with the lower probability of reinforcement during choice trials from the proportion of selections for the choice arrangement with the higher probability of reinforcement during choice trials. This resulted in a number from −1 to 1 (inclusive), with 1 indicating exclusive selections of the choice arrangement with the higher probability of reinforcement (i.e., responding that was consistent with the best chance for the most reinforcement), 0 indicating that half of the responding was for the choice arrangement with the higher probability of reinforcement, but half was for the choice arrangement with the lower probability of reinforcement, and −1 indicating exclusive selections of the choice arrangement with the lower probability of reinforcement. Thus, if a participant displayed a preference for the arrangement with the higher probability of reinforcement, the choice quotient and the measure of correspondence of preference to the schedules of reinforcement were the same, and if a participant displayed a preference for the arrangement with the lower probability of reinforcement, the choice quotient and the measure of correspondence of preference to the schedules of reinforcement were additive inverses of one another.
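The correspondence measure and its relation to the choice quotient can be sketched as follows, assuming selections coded as the hypothetical strings "FC" and "RC" and programmed choice-trial probabilities `p_fc` and `p_rc` (e.g., RC 5∶8 FC 4∶8 gives `p_rc = 5/8`, `p_fc = 4/8`). The function returns `None` when the schedules are equal, because the measure is not calculated in that case.

```python
from typing import Optional, Sequence


def schedule_correspondence(selections: Sequence[str],
                            p_fc: float, p_rc: float) -> Optional[float]:
    """Proportion of choices for the richer arrangement minus proportion for the
    leaner one. Returns None when programmed probabilities are equal (undefined)."""
    if p_fc == p_rc:
        return None
    n = len(selections)
    if n == 0:
        raise ValueError("no choice trials")
    choice_q = (sum(s == "FC" for s in selections) - sum(s == "RC" for s in selections)) / n
    # Same as the choice quotient when FC is richer; its additive inverse when RC is richer.
    return choice_q if p_fc > p_rc else -choice_q


# RC 5:8 FC 4:8 choice set in which 6 of 8 choices were FC:
print(schedule_correspondence(["FC"] * 6 + ["RC"] * 2, p_fc=4 / 8, p_rc=5 / 8))  # -0.5
```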

Correspondence of preference to verbal reports of the odds of winning

Another measure of interest was how well responding during choice trials (i.e., preference) corresponded with verbal reports of the choice arrangement (free or restricted) with the better odds of winning. High correspondence between preference and these verbal reports was consistent with, but not proof of, responding influenced by a self-rule of the efficacy of choice. We calculated this correspondence by subtracting the proportion of selections for the choice arrangement not identified as having the better odds of winning from the proportion of selections for the choice arrangement identified as having the better odds of winning. This resulted in a number from −1 to 1 (inclusive), with 1 indicating exclusive selections of the choice arrangement reported to have the better odds of winning (i.e., responding consistent with the verbal report), 0 indicating that half of the selections were for the choice arrangement reported as having the better odds of winning and half were for the other arrangement, and −1 indicating exclusive selections of the choice arrangement reported as having the lower odds of winning. When a participant reported that the odds of winning were about the same, any pattern of responding would be consistent with the verbal report in terms of optimizing reinforcement; therefore, this calculation was not conducted for sessions in which the participant reported that the odds of winning were about the same.
Thus, if a participant displayed a preference for the arrangement that he or she reported to have the higher probability of reinforcement, the choice quotient and the measure of correspondence of preference to verbal reports of the odds of winning were the same, and if a participant displayed a preference for the arrangement that he or she reported to have the lower probability of reinforcement, the choice quotient and the measure of correspondence of preference to verbal reports of the odds of winning were additive inverses of one another.
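A minimal sketch of this measure, assuming the questionnaire answer is coded as the hypothetical strings "FC", "RC", or "same". As the text describes, the result equals the choice quotient when the reported arrangement is free choice and its additive inverse when it is restricted choice.

```python
from typing import Optional, Sequence


def report_correspondence(selections: Sequence[str], reported_better: str) -> Optional[float]:
    """reported_better: "FC", "RC", or "same" (hypothetical coding of the
    questionnaire answer). Returns None for "same" (measure not calculated)."""
    if reported_better == "same":
        return None
    if reported_better not in ("FC", "RC"):
        raise ValueError("reported_better must be 'FC', 'RC', or 'same'")
    n = len(selections)
    if n == 0:
        raise ValueError("no choice trials")
    choice_q = (sum(s == "FC" for s in selections) - sum(s == "RC" for s in selections)) / n
    return choice_q if reported_better == "FC" else -choice_q


selections = ["FC"] * 18 + ["RC"] * 6          # hypothetical 24 choice trials
print(report_correspondence(selections, "FC"))  # 0.5
print(report_correspondence(selections, "RC"))  # -0.5
```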

Verbal reports

We scored the correspondence between each participant's end-of-session verbal reports and the corresponding session measures for three types of report: the relative odds of winning, preference, and points won. Correspondence between verbal reports of which choice arrangement had the “better odds of winning” and the programmed odds of winning was scored if the participant correctly identified the choice arrangement (free or restricted) with the higher programmed percentage of point delivery or if the participant reported that the odds were “about the same” in the absence of programmed differential reinforcement. A “marginal correspondence” was scored if the participant indicated that the odds of winning were better following free-choice or restricted-choice trials when the programmed odds were equivalent but, because of the RR schedules, the obtained odds slightly favored the choice arrangement the participant indicated. For example, if the programmed probability of reinforcement for both the free- and restricted-choice arrangements was 50%, but the obtained probability was 52% for the free-choice arrangement and 54% for the restricted-choice arrangement, a correspondence would be scored for a verbal report that the odds were “about the same,” a marginal correspondence would be scored for a report that the odds were better in the restricted-choice arrangement, and no correspondence would be scored for a report that the odds were better in the free-choice arrangement.
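The scoring rules for reports of the better odds can be sketched as a small decision function. The answer coding ("FC", "RC", "same") and the function name are hypothetical, and because the text does not quantify "slightly favored," the sketch assumes that any obtained difference qualifies for a marginal correspondence.

```python
def score_odds_report(report: str, programmed_fc: float, programmed_rc: float,
                      obtained_fc: float, obtained_rc: float) -> str:
    """Score a report of the arrangement with the better odds ("FC", "RC", or "same").
    Returns "correspondence", "marginal", or "none"."""
    if programmed_fc != programmed_rc:
        richer = "FC" if programmed_fc > programmed_rc else "RC"
        return "correspondence" if report == richer else "none"
    # Programmed odds equal: "about the same" is the correct report.
    if report == "same":
        return "correspondence"
    # Assumption: any obtained difference counts as "slightly favored".
    if obtained_fc != obtained_rc:
        obtained_richer = "FC" if obtained_fc > obtained_rc else "RC"
        if report == obtained_richer:
            return "marginal"
    return "none"


# The worked example in the text: programmed 50% / 50%, obtained 52% (FC) / 54% (RC).
for answer in ("same", "RC", "FC"):
    print(answer, score_odds_report(answer, 0.50, 0.50, 0.52, 0.54))
```

Run on the worked example, the function reproduces the three outcomes described in the text: correspondence, marginal correspondence, and no correspondence.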

We calculated correspondence between verbal reports of preference for free and restricted choice and preference as indicated by the choice quotient as follows: (a) if the participant reported more enjoyment of the free-choice arrangement, a correspondence was scored if the choice quotient for the session was greater than 0; (b) if the participant reported more enjoyment of restricted-choice trials, a correspondence was scored if the choice quotient for the session was less than 0; and (c) if the participant reported about the same enjoyment, a correspondence was scored if the choice quotient for the session was greater than −.28 but less than .28. These criteria were selected to be relatively lenient in scoring correspondences.
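The preference criteria can be sketched as below, using the same hypothetical answer coding. The example also makes the leniency of the ±.28 band concrete: with all 24 choice trials completed, a session choice quotient is (2k − 24)/24 for k free-choice selections, so an “about the same” report corresponds only when k is between 9 and 15.

```python
def score_preference_report(report: str, session_cq: float, band: float = 0.28) -> bool:
    """Correspondence between reported enjoyment ("FC", "RC", or "same") and the
    session choice quotient, using the lenient criteria described in the text."""
    if report == "FC":
        return session_cq > 0
    if report == "RC":
        return session_cq < 0
    if report == "same":
        return -band < session_cq < band
    raise ValueError("report must be 'FC', 'RC', or 'same'")


# With 24 choice trials, which free-choice counts satisfy an "about the same" report?
ok = [k for k in range(25) if score_preference_report("same", (2 * k - 24) / 24)]
print(ok)  # [9, 10, 11, 12, 13, 14, 15]
```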

For correspondence between verbal reports of which choice arrangement was followed by the most point deliveries, we scored a correspondence if the verbal report identified the choice arrangement followed by the most point deliveries, or if the participant indicated that the points delivered were “about the same” and the total points for each choice arrangement were within 10 points at the end of the session. For each verbal measure, the total number of correspondences was then divided by the total number of sessions for an overall proportion of correspondence.
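A sketch of the points-report scoring and the overall proportion of correspondence, again with hypothetical names and answer coding. The text does not say whether a 10-point difference counts as “within 10 points,” so the sketch assumes a difference of 10 or fewer; the four-session record is invented for illustration.

```python
from typing import Sequence


def score_points_report(report: str, fc_points: int, rc_points: int,
                        tolerance: int = 10) -> bool:
    """Correspondence for the report of which arrangement earned more points.
    Assumption: "within 10 points" is read as a difference of 10 or fewer."""
    if report == "same":
        return abs(fc_points - rc_points) <= tolerance
    if report == "FC":
        return fc_points > rc_points
    if report == "RC":
        return rc_points > fc_points
    raise ValueError("report must be 'FC', 'RC', or 'same'")


def proportion_correspondence(scores: Sequence[bool]) -> float:
    """Sessions with a correspondence divided by total sessions."""
    if not scores:
        raise ValueError("no sessions scored")
    return sum(scores) / len(scores)


# Hypothetical four-session record for one participant.
scores = [score_points_report("FC", 34, 29),
          score_points_report("same", 31, 25),
          score_points_report("same", 40, 22),
          score_points_report("RC", 27, 30)]
print(proportion_correspondence(scores))  # 0.75
```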

No-Rules Condition

Before each session, an experimenter loaded and opened the designated version of the program on the computer used by the participant. The experimenter then led the participant to the computer and instructed the participant to begin whenever he or she was ready. Each version of the program contained written directions on the opening screen; the experimenter read the directions aloud with the participant in the first session but not in subsequent sessions. The written directions instructed the participant to select three numbers when “you select” appeared on the screen and to click on the numbers highlighted by the computer when “numbers generated” appeared. Participants were instructed that numbers could be entered in any order but that each number could be entered only once per turn. The participants were told that the numbers they selected or entered were matched to three unseen numbers generated by the computer and that as soon as any of the numbers matched they would hear a “pleasant” tone and receive a point. If none of the numbers matched, they would hear a different tone and would not receive a point for that turn. The participants were instructed to “win points and have fun.” After the last trial, more directions appeared on the screen instructing the participant to notify the experimenter that she or he was done. Sessions typically lasted 10 to 20 min, with one or two sessions run per day and at least 5 min separating sessions.

No-differential-reinforcement (NC) sessions

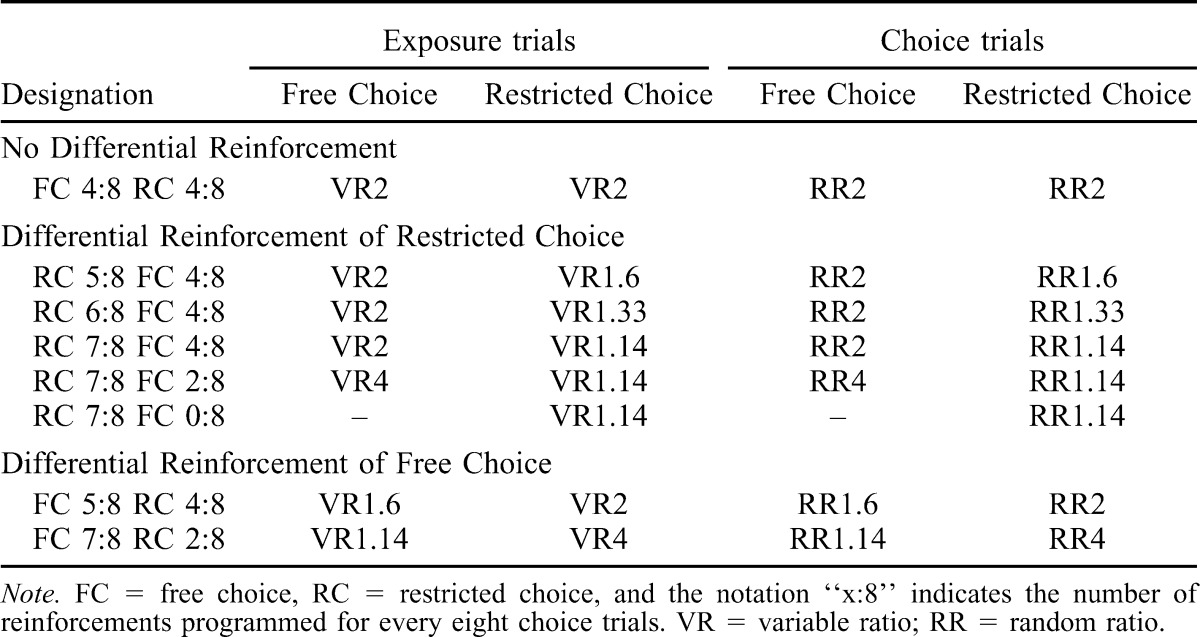

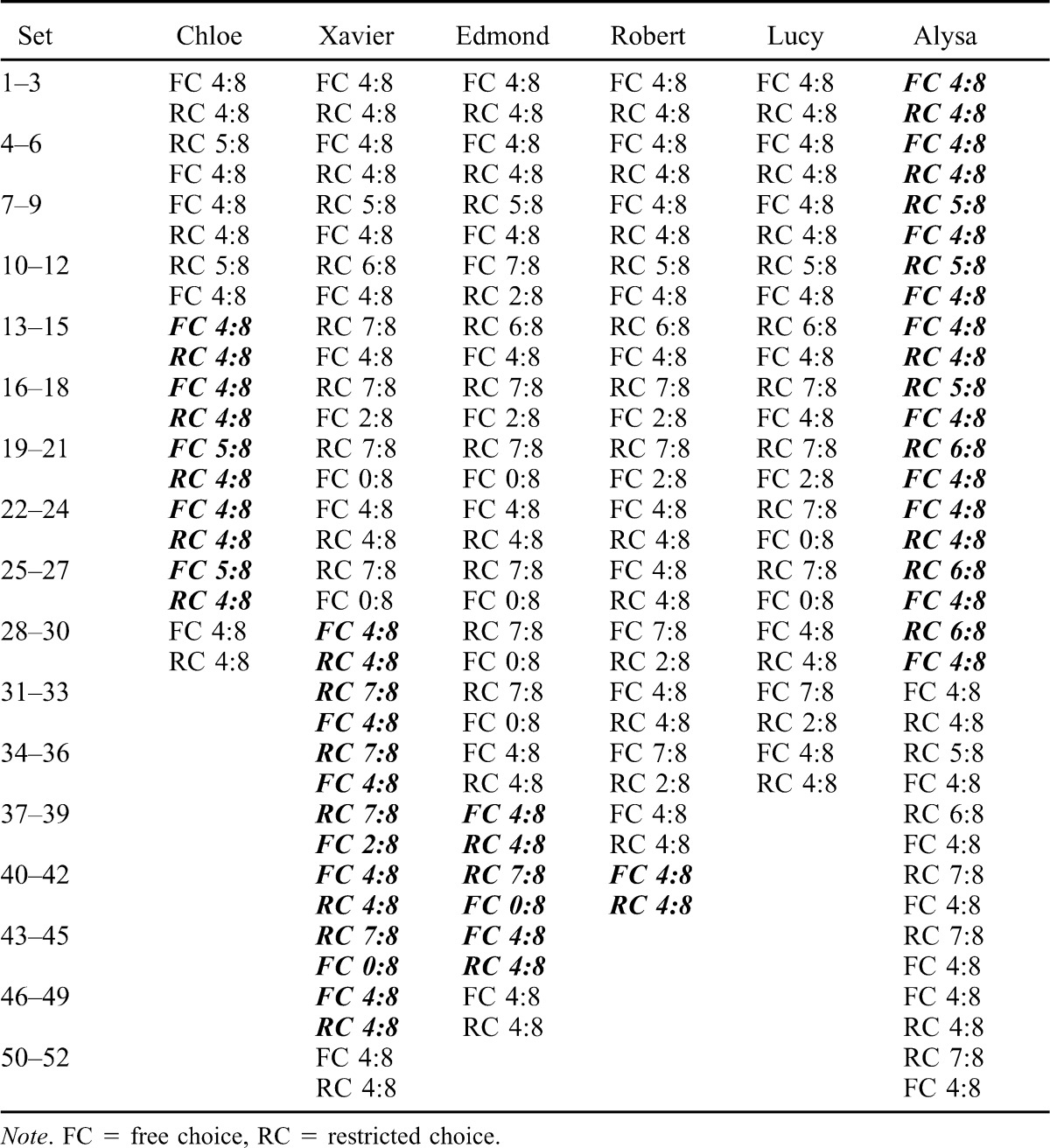

Each participant completed one to three initial NC sessions. The number of sessions was varied in order to set up different exposure histories and was determined by visual inspection of the data and participant availability. NC sessions were used to measure preference for free choice and verbal reports of the choice arrangement with the better odds of winning before and after differential reinforcement of free- or restricted-choice selections. During NC sessions, the schedule of reinforcement for both the free-choice and restricted-choice terminal links was VR 2 during exposure trials and RR 2 during choice trials. For ease of comparison across different schedules, the following convention is used: each choice arrangement (FC for free choice, RC for restricted choice) is followed by the mean number of reinforcers per eight exposures (the number of choice trials per set) to the terminal link of that arrangement. The choice arrangement with the more favorable ratio of reinforcement is presented first; FC is presented first when schedules are equal. Using this convention, the schedule of reinforcement during NC sessions was FC 4∶8 RC 4∶8 (see Table 1 for a list of schedules used).
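The labeling convention can be expressed as a small formatting function. This sketch assumes each schedule is given as a probability of reinforcement per terminal-link completion (e.g., VR 2 or RR 2 corresponds to p = 1/2); the function names are hypothetical.

```python
from fractions import Fraction

RATIO = "\u2236"  # the ratio sign used in the text's schedule labels


def per_eight(p: Fraction) -> Fraction:
    """Mean reinforcers per eight exposures for a probability of reinforcement p."""
    return 8 * p


def schedule_label(p_fc: Fraction, p_rc: Fraction) -> str:
    """Richer arrangement first; FC first when the schedules are equal."""
    parts = [("FC", per_eight(p_fc)), ("RC", per_eight(p_rc))]
    if p_rc > p_fc:
        parts.reverse()
    return " ".join(f"{name} {count!s}{RATIO}8" for name, count in parts)


# VR 2 / RR 2 corresponds to p = 1/2, i.e., 4 reinforcers per 8 exposures.
nc_label = schedule_label(Fraction(1, 2), Fraction(1, 2))  # "FC 4∶8 RC 4∶8"
rc_label = schedule_label(Fraction(1, 2), Fraction(5, 8))  # "RC 5∶8 FC 4∶8"
```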

Table 1.

Schedules of Reinforcement for Exposure and Choice Trials

Differential-reinforcement-of-restricted-choice (RC) sessions

In RC sessions, the reinforcement schedules for free- and restricted-choice selections were adjusted in order to measure the durability of the preference for choice and of verbal reports of the choice arrangement with the better odds of winning. Schedules for the initial RC session for each participant were set at RC 5∶8 FC 4∶8, and the degree of differential reinforcement of restricted choice was increased incrementally, up to a maximum of RC 7∶8 FC 0∶8, following each session in which a participant displayed a preference for free choice. When participants returned to RC sessions after the first series of RC sessions and subsequent exposure to NC sessions, the schedule was set at that of the last RC session (see Table 2). Preference for free choice or restricted choice was determined by visual inspection, with the general guideline that preference for free choice was demonstrated when 2 of the 3 choice sets within a session produced positive choice quotients and the mean choice quotient for the session was positive, whereas preference for restricted choice was demonstrated when 2 of the 3 choice sets within a session produced negative choice quotients and the mean choice quotient for the session was negative.
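The general guideline (not the full visual-inspection judgment) can be sketched as a classifier over the three set quotients. The sketch reads “2 of the 3 choice sets” as at least 2, which is an assumption; the function name and labels are hypothetical.

```python
from typing import Sequence


def classify_preference(set_quotients: Sequence[float]) -> str:
    """Guideline from the text: at least 2 of 3 sets with positive (negative)
    choice quotients AND a positive (negative) session mean."""
    if len(set_quotients) != 3:
        raise ValueError("expected one choice quotient per set (3 sets per session)")
    mean_q = sum(set_quotients) / len(set_quotients)
    n_pos = sum(q > 0 for q in set_quotients)
    n_neg = sum(q < 0 for q in set_quotients)
    if n_pos >= 2 and mean_q > 0:
        return "free choice"
    if n_neg >= 2 and mean_q < 0:
        return "restricted choice"
    return "no clear preference"


print(classify_preference([0.5, 0.25, -0.25]))   # free choice
print(classify_preference([-0.5, -0.25, 0.75]))  # no clear preference
```

The second example shows why both parts of the guideline matter: two sets favor restricted choice, but the session mean is exactly 0.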

Table 2.

Schedules of Reinforcement for Each Participant Across Sets (3 Sets per Session in the No-Rules Condition and the Rules-with-Feedback Condition) (bold and italics)

Differential-reinforcement-of-free-choice (FC) sessions

FC sessions were conducted with participants who did not demonstrate a preference for free choice in the NC sessions that followed RC sessions, to determine whether preference for free choice could be established in subsequent NC sessions. All FC schedules in the No-Rules Condition were set at FC 7∶8 RC 2∶8, a relatively dense schedule in the free-choice arrangement compared to the restricted-choice arrangement, in order to change preference quickly. Preference for free and restricted choice was determined as in the RC sessions.

Rules-with-Feedback Condition

The Rules-with-Feedback Condition was designed to evaluate the effects of rules regarding the odds of winning and feedback depicting the points won by each choice arrangement on responding and verbal reports under varying schedules of reinforcement. All sessions in this condition included statements in the initial instructions concerning the odds of winning for each choice arrangement (free or restricted) and a display of points won by each choice arrangement on the game screen (see Figure 2). An example of the additional statements included in the initial instructions is provided below, though the specific numbers and percentages changed according to the schedule of point delivery in effect.

For this part of the session, your mathematical odds of winning when you select your own numbers will be 4∶8 (you should win about 4 of every 8 turns, or 50% of the time).

Your odds of winning when the numbers are generated for you will be 6∶8 (you should win about 6 of every 8 turns, or about 75% of the time).

That is, your odds of winning whether you select your own numbers or whether your numbers are generated for you are as follows:

You Select 4∶8 (50%)

Numbers Generated 6∶8 (75%)
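The rule statements above follow directly from the programmed schedule, with each ratio converted to a percentage. A minimal sketch, assuming 8-trial ratios as in the examples; the function names are hypothetical and are not part of the original game program.

```python
RATIO = "\u2236"  # the ratio sign used in the written rules


def odds_statement(label, wins, trials=8):
    """Format one summary line, e.g. 'You Select 4∶8 (50%)'."""
    percent = 100 * wins / trials
    return f"{label} {wins}{RATIO}{trials} ({percent:g}%)"


def rule_lines(free_wins, restricted_wins, trials=8):
    """Hypothetical helper: summary lines for the free- and restricted-choice odds."""
    return [
        odds_statement("You Select", free_wins, trials),
        odds_statement("Numbers Generated", restricted_wins, trials),
    ]


for line in rule_lines(4, 6):
    print(line)
# You Select 4∶8 (50%)
# Numbers Generated 6∶8 (75%)
```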

The three types of sessions described under the No-Rules Condition (NC, RC, and FC) were also used during the Rules-with-Feedback Condition with the same general rationales, except as described below. See Table 2 for the order of schedule presentations for each participant.

NC sessions

NC sessions were used to assess preference for free choice and verbal reports of the odds of winning in the presence of accurate rules and feedback.

RC sessions

RC sessions were conducted following NC sessions in which the participant demonstrated a preference for free choice. For Alysa, the only participant to begin the experiment with the Rules-with-Feedback Condition, schedules for the initial RC sessions were set at RC 5∶8 FC 4∶8 and were incrementally increased until a preference for free choice was no longer demonstrated. For the remaining participants, schedules in this condition were set to either (a) determine if leaner schedules of differential reinforcement of restricted choice compared to schedules in the No-Rules Condition would result in no preference or a preference for restricted choice, or (b) replicate changes in preference for free choice demonstrated earlier. For Xavier and Edmond, time constraints precluded a more parametric analysis.

FC sessions

FC sessions were conducted if participants did not demonstrate a preference for free choice during NC sessions. This occurred for only one participant (Chloe), and the schedule for her FC sessions in the Rules-with-Feedback Condition was set at FC 5∶8 RC 4∶8 because relatively small changes in the schedules had been effective in changing preference for this participant.

Program variations

We created multiple variations of the program for each schedule of reinforcement and counterbalanced presentations of these variations across sessions for each participant. These variations were designed to facilitate the appearance that reinforcement delivery during exposure trials was not programmed and to control against the effects of one choice arrangement inadvertently being correlated with higher probabilities of reinforcement in initial or later exposure trials or being correlated with more consecutive “wins” than the other. These factors were also controlled to some extent within sessions by programming reinforcement delivery in the third set of exposure trials to be the mirror image of reinforcement delivery in the first set of exposure trials. For example, if the first two free-choice exposure trials resulted in reinforcement delivery in the first set of exposure trials, then the first two restricted-choice trials resulted in reinforcement delivery in the third set of exposure trials, and so on.
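One reading of the mirror-image example is that the win/loss pattern programmed for one arrangement in the first exposure set is reused for the other arrangement in the third set. The sketch below encodes that reading under stated assumptions: each trial is a boolean (True means a point was delivered), the 4-trial sets are hypothetical, and because the swap exchanges the two arrangements' patterns, it preserves the programmed schedule only when both arrangements share the same schedule, as in NC sessions. The text does not say how mirroring was handled under differential schedules.

```python
def mirror_set(first_set):
    """Third exposure set as the mirror image of the first (one reading).

    first_set maps "free" and "restricted" to lists of booleans, one per
    exposure trial of that arrangement, True meaning a point was delivered.
    The free-choice pattern from set 1 becomes the restricted-choice
    pattern in set 3, and vice versa.
    """
    return {
        "free": list(first_set["restricted"]),
        "restricted": list(first_set["free"]),
    }


# Hypothetical NC-session first set: 2 of 4 wins in each arrangement.
set1 = {
    "free": [True, True, False, False],
    "restricted": [False, True, False, True],
}
set3 = mirror_set(set1)
print(set3["restricted"])  # [True, True, False, False]
```

Across sets 1 and 3 combined, each arrangement experiences both win patterns once, so neither arrangement is consistently paired with early wins or with longer runs of consecutive wins.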

Experimental Design

Three participants (Chloe, Edmond, and Xavier) participated in the No-Rules Condition first, followed by the Rules-with-Feedback Condition, and then a return to the No-Rules Condition. Within the first two conditions, a reversal design was used to assess preference and verbal reports under equal and differential schedules of reinforcement while controlling for history or testing effects (e.g., the development of more efficient responding in one choice arrangement than the other). The last exposure to the No-Rules Condition was implemented to assess the effects of a history of exposure to rules and feedback on responding and verbal reports under equal schedules of reinforcement in the absence of rules and feedback. A nonconcurrent multiple-baseline-across-participants design (including data from Robert, below) was used to determine if exposure to accurate rules with feedback, rather than continued exposure to differential reinforcement, was responsible for changes in responding and reporting.

Two other participants, Robert and Lucy, were exposed to the No-Rules Condition in which a reversal design was used to assess preference and verbal reports of the choice arrangement with the better odds of winning under equal and differential schedules of reinforcement while controlling for history and testing effects. Following the No-Rules Condition, Robert participated in one NC session under the Rules-with-Feedback Condition to probe the effects of rules on preference and verbal reports of the choice arrangement with the better odds of winning following a history of differential reinforcement of both restricted-choice and free-choice arrangements.

Finally, Alysa participated in the Rules-with-Feedback Condition followed by the No-Rules Condition, and a reversal design was used to assess preference and verbal reports under equal and differential schedules of reinforcement within both of these conditions. With this design, the effects of a history of exposure to rules regarding schedules of reinforcement cannot be separated from the effects of prolonged exposure to differential reinforcement of the restricted-choice arrangement.

RESULTS

Results for the two main dependent variables, preference and verbal reports of the odds of winning, are presented below by condition, as are the correspondences of preference to schedules of reinforcement and of preference to verbal reports of the odds of winning. The points and odds quotients and the accuracy of the verbal reports are reported separately. For all of the participants except Alysa, the No-Rules Condition was conducted first. Results for all participants during the No-Rules Condition are reported below. Lucy did not participate in the Rules-with-Feedback Condition because of time constraints and because of the variability in her verbal reports during the No-Rules Condition.

No-Rules Condition

Preference and verbal reports of the choice arrangement with the better odds of winning

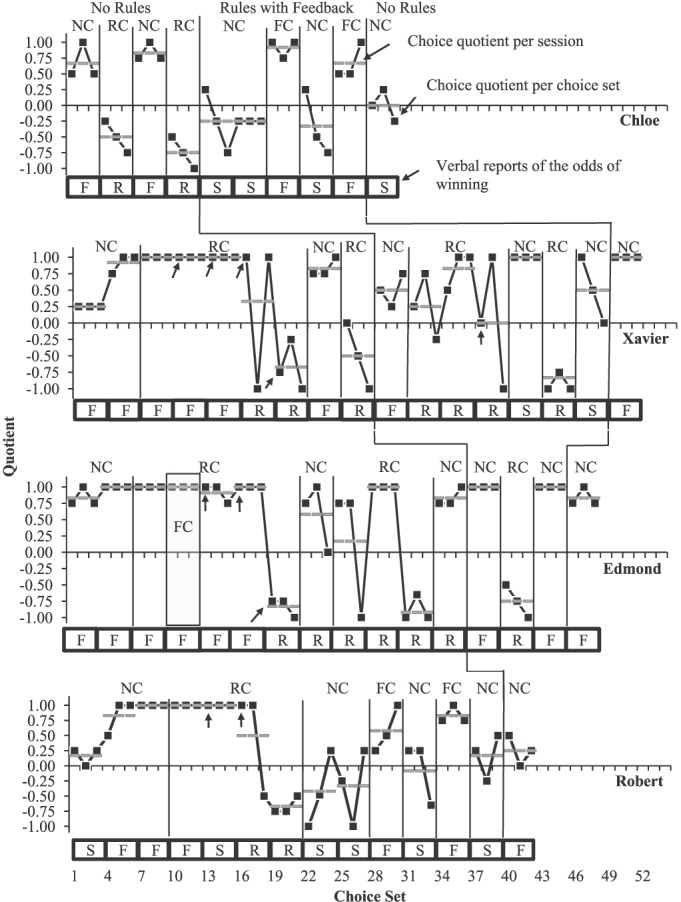

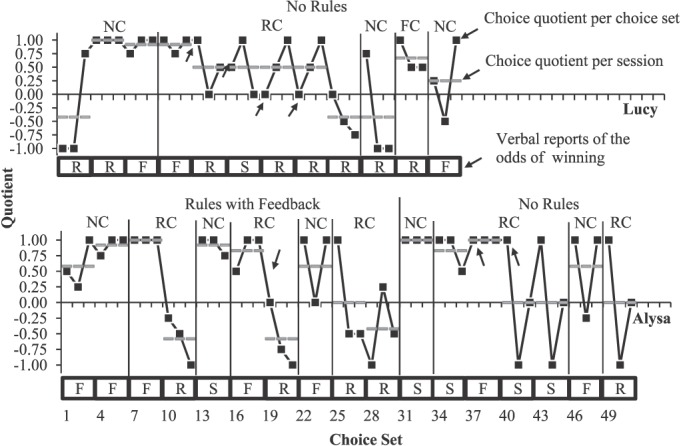

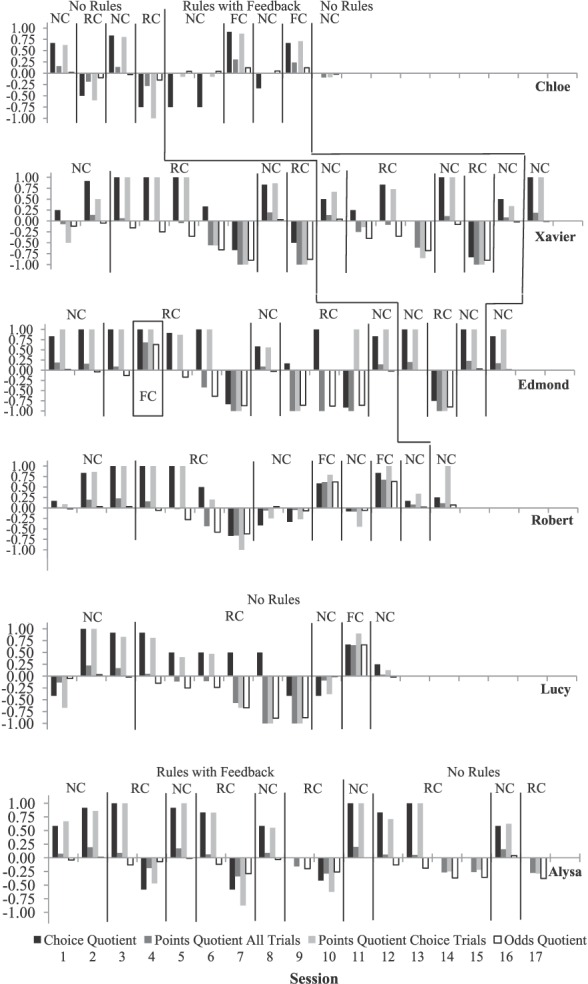

Preference was determined by visual inspection with the general guideline that preference for free choice was demonstrated when the choice quotients for at least two of the three choice sets within a session were positive, and the overall choice quotient for the session was also positive. Similarly, preference for restricted choice was demonstrated when the choice quotients for at least two of the three choice sets within a session were negative, and the overall choice quotient for the session was also negative. Figure 3 displays the choice quotients measured across choice sets and sessions and the verbal reports of the choice arrangement with the better odds of winning for each session for Chloe, Xavier, Edmond, and Robert; Figure 4 shows these same measures for Lucy and Alysa. All participants except Lucy exhibited a preference for free choice during all of the initial NC sessions in the No-Rules Condition; Lucy exhibited a preference for free choice in two of three initial NC sessions. Additionally, the 5 participants without a prior history with the Rules-with-Feedback Condition reported that the odds of winning were better in the free-choice arrangement in all (Chloe, Xavier, and Edmond) or at least some (Robert and Lucy) of these sessions. Alysa reported that her odds of winning were about the same in both choice arrangements in her initial NC session in the No-Rules Condition.

Figure 3. Choice quotient measured per choice set (black squares) and per session (grey lines) and verbal reports of the “odds of winning” (white squares) for Chloe, Xavier, Edmond, and Robert (from top panel to bottom panel, respectively). For verbal reports, F, R, and S indicate that the participant reported the odds of winning were better following free choice (F), restricted choice (R), or “about the same” (S). The unlabeled arrows indicate increases in the programmed differential reinforcement. For Edmond, during choice sets 10–12, free-choice selections were differentially reinforced due to experimenter error. (NC = No Differential Reinforcement; FC = Differential Reinforcement of Free Choice; RC = Differential Reinforcement of Restricted Choice).

Figure 4. Choice quotient measured per choice set (black squares) and per session (grey lines) and verbal reports of the “odds of winning” (white squares) for Lucy (top panel) and Alysa (bottom panel). For verbal reports, F, R, and S indicate that the participant reported the odds of winning were better following free choice (F), restricted choice (R), or “about the same” (S). The unlabeled arrows indicate increases in the programmed differential reinforcement. (NC = No Differential Reinforcement; FC = Differential Reinforcement of Free Choice; RC = Differential Reinforcement of Restricted Choice).

During the first set of RC sessions following NC sessions in the No-Rules Condition, participants exhibited a preference for restricted choice within one to six sessions as follows: Chloe, one session; Xavier, five sessions; Edmond, four sessions (not including one session of FC presented due to experimenter error); Robert, four sessions; Lucy, six sessions; and Alysa, four sessions. The schedule of reinforcement for the last RC session for each participant during this phase was as follows: Chloe, RC 5∶8 FC 4∶8; Alysa, RC 7∶8 FC 4∶8; Robert, RC 7∶8 FC 2∶8; and Xavier, Edmond, and Lucy, RC 7∶8 FC 0∶8. Despite some variability in verbal reports of the odds of winning in early RC sessions, all participants reported that the odds of winning were better in the restricted-choice arrangement in the last session of this phase, with the exception of Alysa, who reported the odds of winning were about the same across the choice arrangements.

During the return to NC sessions, Chloe, Xavier, Edmond, and Alysa displayed a preference for free choice within the first session, and Chloe, Xavier, and Alysa verbally reported that the odds of winning were better in the free-choice arrangement. Robert and Lucy displayed a preference for restricted choice in their first NC session, but an increasing trend in the choice quotients across sets was noted for Robert, and a second NC session was conducted. During this session, Robert also displayed a preference for restricted choice. Robert reported that his odds of winning were about the same across choice arrangements following each session, and Lucy reported her odds of winning were better in the restricted-choice arrangement.

For Chloe, Xavier, and Alysa, a return to RC sessions at the last reinforcement schedule of the previous RC sessions (RC 5∶8 FC 4∶8, RC 7∶8 FC 0∶8, and RC 7∶8 FC 4∶8, respectively) resulted in a return to a preference for restricted choice, or to no preference, within one session; for Edmond, three sessions at RC 7∶8 FC 0∶8 were conducted before he no longer demonstrated a preference for free choice. Chloe, Xavier, Alysa, and Edmond all reported that their odds of winning were better following restricted-choice terminal links during these RC sessions.

Lucy and Robert did not display a preference for free choice during the return to NC sessions; therefore, FC sessions were conducted with them next. Both participants exhibited a preference for free choice in the first FC session, with Robert verbally reporting that the odds of winning were better in the free-choice arrangement but Lucy reporting that the odds of winning were better in the restricted-choice arrangement. Robert did not demonstrate a preference for free choice in the next NC session, and a second FC session was conducted. Robert demonstrated a preference for free choice in this second session and in the NC session immediately following it. Robert reported that the odds of winning were better in the free-choice arrangement during FC sessions, and about the same across choice arrangements during NC sessions. Lucy demonstrated a preference for free choice in the NC session immediately following her first (and only) FC session; she reported that the odds of winning were better in the restricted-choice arrangement in the FC session and better in the free-choice arrangement in the NC session.

Chloe, Xavier, and Edmond all began the experiment with the No-Rules Condition, followed by the Rules-with-Feedback Condition, and ended with a return to the No-Rules Condition for a final NC session. In this final session, Chloe exhibited no preference and reported that the odds of winning were the same across free- and restricted-choice arrangements. In contrast, Xavier and Edmond exhibited a preference for free choice and reported that the odds of winning were better in the free-choice arrangement. Because preference and verbal reports of the odds of winning during these sessions were similar to those during NC sessions before exposure to the Rules-with-Feedback Condition for 2 of the 3 participants (Xavier and Edmond), these three sessions are reported with the other NC sessions in the No-Rules Condition below.

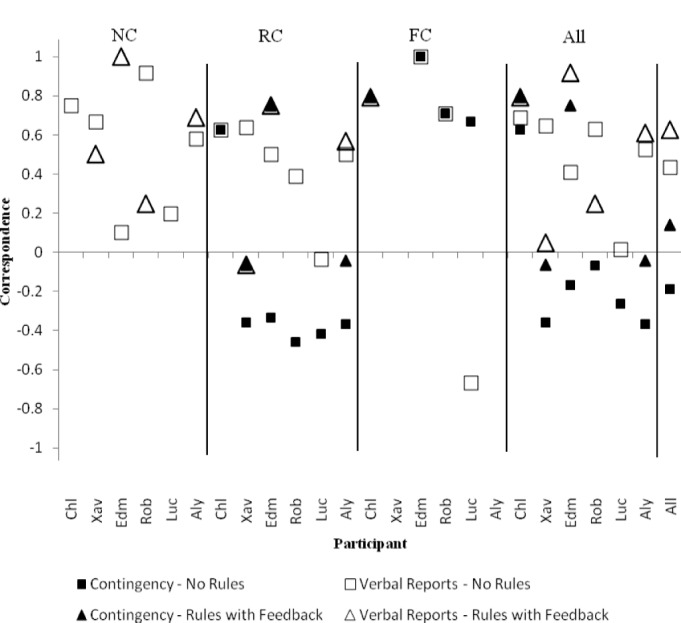

Correspondence of preference to schedules of reinforcement

With the exception of Chloe, correspondence between preference and the schedules of reinforcement was low, and in fact negative, during RC sessions in the No-Rules Condition (see Figure 5). Mean correspondence during these sessions was as follows: .63 (Chloe), −.36 (Xavier), −.33 (Edmond), −.46 (Robert), −.42 (Lucy), and −.37 (Alysa). Mean correspondence during FC sessions in the No-Rules Condition was 1.0, .71, and .67 for Edmond, Robert, and Lucy, respectively. Correspondence was not calculated for NC sessions because any pattern of responding, and thus any demonstrated preference, is consistent with optimal responding when the schedules are equal.

Figure 5. Mean correspondence of preference to schedules of reinforcement during No-Rules sessions (black squares) and Rules-with-Feedback sessions (black triangles) and mean correspondence of preference to verbal reports of the choice arrangement with the better odds of winning during No-Rules sessions (white squares) and Rules-with-Feedback sessions (white triangles) for each participant measured across NC, RC, and FC sessions and across all sessions for each participant. (NC = No Differential Reinforcement; FC = Differential Reinforcement of Free Choice; RC = Differential Reinforcement of Restricted Choice).

Correspondence of preference to verbal reports of the odds of winning

Mean correspondence of preference to verbal reports of the choice arrangement with the better odds of winning during NC sessions was .75 for Chloe, .67 for Xavier, .10 for Edmond, .92 for Robert, .20 for Lucy, and .58 for Alysa. During RC sessions, mean correspondence of preference to verbal reports of the choice arrangement with the better odds of winning was as follows: .63 (Chloe), .64 (Xavier), .50 (Edmond), .39 (Robert), −.03 (Lucy), and .50 (Alysa). Mean correspondence during FC sessions in the No-Rules Condition was 1.0 for Edmond, .71 for Robert, and −.67 for Lucy. See Figure 5 for a summary of these correspondences.

Rules-with-Feedback Condition

Preference and verbal reports of the choice arrangement with the better odds of winning

All participants except Chloe exhibited a preference for free choice and reported that the odds of winning were better in the free-choice arrangement during the initial NC sessions in the Rules-with-Feedback Condition. Chloe exhibited a preference for restricted choice and reported that the odds of winning were about the same across choice arrangements in her first NC session in this condition, and replicated this performance in a second NC session immediately following the first (see Figure 3 for a summary of these measures for Chloe, Xavier, Edmond, and Robert and Figure 4 for a summary of these measures for Alysa).

During the first set of RC sessions following NC sessions in the Rules-with-Feedback Condition, participants exhibited a preference for restricted choice within 3 (Xavier), 1 (Edmond), or 2 (Alysa) sessions. The schedule of reinforcement for the last RC session for each participant during this phase was as follows: Alysa, RC 5∶8 FC 4∶8; Xavier, RC 7∶8 FC 2∶8; and Edmond, RC 7∶8 FC 0∶8. Alysa reported that the odds of winning were better in the free-choice arrangement in her first RC session, but that the odds of winning were better in the restricted-choice arrangement in her second RC session in this condition. Xavier and Edmond reported that the odds of winning were better in the restricted-choice arrangement during all RC sessions in the Rules-with-Feedback Condition.

During the NC session following RC sessions, Xavier, Edmond, and Alysa displayed a preference for free choice and Xavier and Edmond reported that the odds of winning were better in the free-choice arrangement whereas Alysa reported the odds of winning were the same across choice arrangements. At this point Edmond returned to the No-Rules Condition.

Xavier and Alysa demonstrated a preference for restricted choice in 1 (Xavier) or 2 (Alysa) RC sessions following the return to NC sessions at schedules of RC 7∶8 FC 0∶8 (Xavier) and RC 6∶8 FC 4∶8 (Alysa). Xavier reported that the odds of winning were better in the restricted-choice arrangement. Alysa reported that the odds of winning were better in the free-choice arrangement in her first RC session, but reported that the odds of winning were better in the restricted-choice arrangement in her second RC session.

Given her relatively variable performance in earlier RC sessions, Alysa participated in one more round of NC sessions followed by RC sessions. During the NC session, Alysa demonstrated a preference for free choice and reported that the odds of winning were better in the free-choice arrangement. During the RC sessions, Alysa demonstrated no preference in the first session and a preference for restricted choice in the second session, displaying a negative choice quotient in the last 5 choice sets. Alysa reported that her odds of winning were better in the restricted-choice arrangement in both sessions.

Because Chloe demonstrated a preference for restricted choice during the initial NC sessions, an FC session was conducted next, during which Chloe exhibited a preference for free choice and reported the odds of winning were better in the free-choice arrangement. During the subsequent NC session, Chloe demonstrated a preference for restricted choice and reported the odds of winning were about the same across choice arrangements. During a second FC session, Chloe again demonstrated a preference for free choice and reported that the odds of winning were better in the free-choice arrangement.

Correspondence of preference to schedules of reinforcement

Mean correspondences of preference to the schedules of reinforcement during RC sessions in the Rules-with-Feedback Condition were −.06 (Xavier), .75 (Edmond), and −.04 (Alysa). Mean correspondence of preference to schedules of reinforcement during FC sessions in the Rules-with-Feedback Condition was .79 for the one participant exposed to these sessions (Chloe). Correspondence was not calculated for NC sessions as any pattern of responding, and thus any demonstrated preference, is consistent with optimal responding in NC sessions. See Figure 5 for a summary of these correspondences.

Correspondence of preference to verbal reports of the odds of winning

Mean correspondences of preference to verbal reports of the choice arrangement with the better odds of winning during NC sessions were 1.0 for Edmond, .25 for Robert, and .67 for Alysa. During RC sessions, mean correspondences of preference to verbal reports of the odds of winning were −.06 (Xavier), .75 (Edmond), and .57 (Alysa). Mean correspondence during FC sessions in the Rules-with-Feedback Condition was .79 for the one participant exposed to these sessions (Chloe). See Figure 5 for a summary of the correspondences.

Comparison of correspondence of preference to schedules of reinforcement and of preference to verbal reports of the odds of winning across the No-Rules and Rules-with-Feedback Conditions

The specific schedules of reinforcement within the RC and FC sessions were typically not matched across the No-Rules and Rules-with-Feedback Conditions. This presents a potential confound when evaluating if preference was more or less sensitive to reinforcement schedules or to reports of the choice arrangement with the better odds of winning in the Rules-with-Feedback Condition compared to the No-Rules Condition. In order to address this confound, only sessions with identical schedules of reinforcement in both the No-Rules and the Rules-with-Feedback Conditions for each participant were used to compare sensitivity to schedules of reinforcement. For five of the six different matched schedules across participants examined, the correspondences of preference to schedules of reinforcement were slightly greater in the Rules-with-Feedback Condition than in the No-Rules Condition. For three of the five comparisons, the correspondences of preference to verbal reports of the choice arrangement with the better odds of winning were slightly greater in the Rules-with-Feedback Condition than in the No-Rules Condition. These data are presented in Table 3.

Table 3.

Correspondences of Preference to Schedules of Reinforcement (Contingencies) and of Preference to Verbal Reports of the Odds of Winning on Matched Schedules Across the No-Rules and Rules-with-Feedback Conditions

Points and Odds Quotients

Points quotients were calculated for each participant across all points earned within a session and separately for points earned during choice trials (see Figure 6). Reinforcement following exposure trials was preprogrammed; thus, an equal number of points was won following free- and restricted-choice arrangements during exposure trials in NC sessions, more points were won following restricted-choice arrangements than following free-choice arrangements during exposure trials in all RC sessions, and more points were won following free-choice arrangements than following restricted-choice arrangements during exposure trials in all FC sessions. However, points were delivered on an RR schedule during choice trials, and exposure to the free- and restricted-choice arrangements was not controlled during these trials. Therefore, the points quotient was used to determine how the amount of obtained reinforcement corresponded to programmed schedules of reinforcement. Across the 41 RC sessions, the points quotient measured across all trials within a session was negative during 30 sessions, and the points quotient measured across choice trials only was negative during 20 sessions. Thus, in 21 of 41 sessions, more points were won in the free-choice arrangement than in the restricted-choice arrangement during choice trials, and in 11 of these sessions the difference in points won during choice trials was sufficient to offset the additional points won in the restricted-choice arrangement during exposure trials. In contrast, in all 6 FC sessions, the points quotient measured across all trials within a session and the points quotient measured across choice trials only were both positive.
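The quotients are not defined in this section. The sketch below assumes the points quotient is a normalized difference, (free − restricted) / (free + restricted), so that positive values favor free choice; this assumed form is consistent with the ranges reported below but should be checked against the Method. With hypothetical point counts, it shows how choice-trial points can offset the extra restricted-choice points preprogrammed on exposure trials. The last lines reproduce the session counts reported above, which equal the number of sessions with more free-choice points only if no quotient was exactly 0.

```python
def quotient(free, restricted):
    """Assumed form: (free - restricted) / (free + restricted), 0 if both are 0.

    Positive values favor the free-choice arrangement.
    """
    total = free + restricted
    return 0.0 if total == 0 else (free - restricted) / total


# Hypothetical RC session: exposure trials favor restricted choice (preprogrammed),
# but the participant wins more points after free choice on choice trials.
exposure = {"free": 4, "restricted": 5}
choice = {"free": 6, "restricted": 3}

all_trials = quotient(exposure["free"] + choice["free"],
                      exposure["restricted"] + choice["restricted"])
choice_only = quotient(choice["free"], choice["restricted"])
print(round(all_trials, 3), round(choice_only, 3))  # 0.111 0.333

# Session counts reported above: non-negative quotients = 41 minus negative ones.
rc_sessions, neg_all, neg_choice = 41, 30, 20
print(rc_sessions - neg_choice, rc_sessions - neg_all)  # 21 11
```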

Figure 6. Choice quotient, point quotient for all trials, point quotient for choice trials, and odds quotient per session for Chloe, Xavier, Edmond, Robert, Lucy, and Alysa (from top to bottom panel, respectively). (NC = No Differential Reinforcement; FC = Differential Reinforcement of Free Choice; RC = Differential Reinforcement of Restricted Choice).

The points quotients measured across all trials were small in magnitude (range, −.13 to .23), whereas points quotients measured across choice trials only were much more variable (range, −.75 to 1). Visual inspection of Figure 6 shows that for 38 of the 39 NC sessions across all participants, the points quotient measured across all trials within a session and the choice quotient were both positive, both negative, or one or both were zero. The one exception was the first NC session for Xavier, in which he selected the free-choice arrangement more often than the restricted-choice arrangement in choice trials but earned slightly more points in the restricted-choice arrangement. For all 6 FC sessions across participants, the choice quotient and points quotient were both positive. Results for the RC sessions were more variable, with the choice and points quotients both positive, both negative, or one or both zero in 28 of 41 sessions.
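The agreement criterion used above (both positive, both negative, or one or both zero) amounts to requiring that the product of the two quotients is not negative. A minimal sketch with hypothetical session values; the function name is illustrative only.

```python
def signs_consistent(choice_q, points_q):
    """Lenient rule: both positive, both negative, or either one is 0.

    Equivalent to the product of the two quotients being non-negative.
    """
    return choice_q * points_q >= 0


# Hypothetical (choice quotient, all-trials points quotient) pairs.
sessions = [(0.4, 0.05), (-0.2, -0.1), (0.0, 0.12), (0.3, -0.02)]
print([signs_consistent(c, p) for c, p in sessions])
# [True, True, True, False]
```

The last pair resembles the single NC exception described above: more free-choice selections, but slightly more points in the restricted-choice arrangement.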

Additionally, for all participants, the points quotient measured across choice trials generally corresponded to the choice quotient for the same session. This was the case for 8 of 10 sessions (Chloe), 13 of 18 sessions (Xavier), 13 of 16 sessions (Edmond), 14 of 14 sessions (Robert), 10 of 12 sessions (Lucy), and 15 of 17 sessions (Alysa). In 6 of the 14 sessions that did not display this pattern, either the points quotient measured across choice trials or the choice quotient (or both) was 0. Thus, in only 8 of 87 sessions was the points quotient measured across choice trials positive and the choice quotient negative, or vice versa.
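Unlike the criterion in the previous paragraph, the count of corresponding sessions here treats a quotient of 0 as a non-match. The sketch below restates that stricter rule and tallies the per-participant counts reported above; `signs_match` is a hypothetical helper, and the counts are taken directly from the text.

```python
def signs_match(choice_q, points_q):
    """Strict rule: both positive or both negative; a 0 is a non-match."""
    return (choice_q > 0 and points_q > 0) or (choice_q < 0 and points_q < 0)


# (sessions in which the quotients corresponded, total sessions), from the text
counts = {
    "Chloe": (8, 10), "Xavier": (13, 18), "Edmond": (13, 16),
    "Robert": (14, 14), "Lucy": (10, 12), "Alysa": (15, 17),
}
matched = sum(m for m, _ in counts.values())
total = sum(t for _, t in counts.values())
not_matched = total - matched
with_zero = 6  # non-matching sessions in which at least one quotient was 0
opposite = not_matched - with_zero  # quotients with strictly opposite signs
print(matched, total, not_matched, opposite)  # 73 87 14 8
```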

The odds quotient was calculated across each session for all participants to determine if obtained schedules of reinforcement were consistent with programmed schedules of reinforcement. For all 41 RC sessions, the odds quotient was negative, and for all FC sessions the odds quotient was positive (see Figure 6). During NC sessions, the mean odds quotient calculated across all participants was −.01 with a range of −.12 to .07. Thus, the obtained odds of winning during NC sessions were very close to the programmed odds of winning.

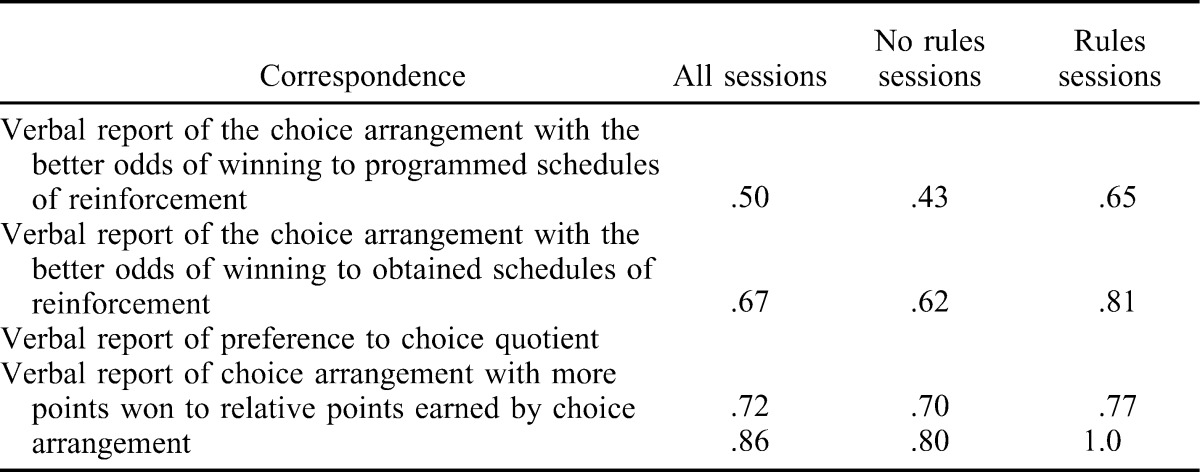

Correspondence Between Verbal Reports and Programmed or Obtained Measures

The correspondence between each of three verbal reports (relative odds of winning, preference for a choice arrangement, and relative points won in each choice arrangement) and the corresponding programmed or obtained measures was calculated for each participant every session. Overall, the correspondence of verbal reports of the choice arrangement with the better odds of winning to programmed and obtained probabilities of reinforcement was low and variable, as was the correspondence of verbal reports of preference to the choice quotient (see Table 4). Because relatively liberal criteria were used in calculating these correspondences, the low values are unlikely to be an artifact of overly strict measurement. Across all sessions, the correspondence of verbal reports of the choice arrangement with the better odds of winning to programmed schedules of reinforcement was .50, and the correspondence of verbal reports of the choice arrangement with the better odds of winning to obtained schedules of reinforcement (including marginal correspondences) was .67. The overall correspondence of verbal reports of preference to the choice quotients each session was .72. Finally, the overall correspondence of verbal reports of relative points won per choice arrangement to points won per choice arrangement was .86.

Table 4.

Correspondence Across All Participants of Verbal Reports to Obtained or Programmed Outcomes

Overall correspondence was higher in the Rules-with-Feedback Condition than in the No-Rules Condition. The correspondence between verbal reports of the choice arrangement with the most points won and obtained measures was 1.0 in the Rules-with-Feedback Condition, compared to .80 in the No-Rules Condition. This finding suggests that participants accepted the displayed information regarding points won in each choice arrangement as accurate. The correspondence between verbal reports of preference and choice quotients across all participants in the Rules-with-Feedback Condition was .77, compared to .70 in the No-Rules Condition. It is not clear why correspondence between reports of preference and the choice quotient would be higher in the Rules-with-Feedback Condition.

The correspondence of verbal reports of the choice arrangement with the better odds of winning to obtained relative odds of winning across all participants in the Rules-with-Feedback sessions was .65 compared to .43 in the No-Rules sessions, and .81 in Rules-with-Feedback sessions compared to .62 in No-Rules sessions when marginal correspondences were scored as correspondences.

DISCUSSION

In this study, we were interested in examining how preference and verbal reports consistent with a self-rule of the efficacy of choice (i.e., that choice selections produced better reinforcement in terms of quantity or quality than non-choice selections) would be affected by differential reinforcement of restricted choice and accurate rules with feedback. Although there was considerable variability in responding across participants, a few general conclusions from the results of this study can be offered. First, for all participants, differential reinforcement of restricted choice resulted in preference for restricted choice, though the durability of preference for free choice during differential reinforcement of restricted choice varied considerably across participants. Second, preference corresponded to the relative amount of points earned in each choice arrangement (free or restricted) in a session. Third, verbal reports of the choice arrangement with the better odds of winning, relative points earned, and preference were more accurate when rules and feedback were provided. Fourth, responding during sessions with rules and feedback corresponded slightly more to reinforcement schedules than responding during sessions with no rules, although the difference was small and the number of matched comparisons was limited. Fifth, correspondences of preference to verbal reports of the choice arrangement with the better odds of winning were generally greater than correspondences of preference to reinforcement schedules. These findings are discussed below, as are several relevant considerations.

All of the participants except Chloe displayed at least some insensitivity to the schedules of reinforcement during RC sessions. This finding replicates previous research on preference for choice (Tiger et al., 2006; Thompson et al., 1998). During the initial RC sessions, Xavier, Edmond, and Lucy continued to display a preference for free choice until 7 of 8 restricted-choice selections were reinforced and free-choice selections were placed on extinction, and Robert did not display a preference for restricted choice until restricted-choice selections were reinforced, on average, 3.5 times as often as free-choice selections. During the first set of RC sessions in the No-Rules Condition, even after prior exposure to RC sessions with rules and feedback, Alysa displayed a preference for free choice until restricted-choice selections were reinforced, on average, 1.75 times as often as free-choice selections, and even at this schedule of reinforcement she displayed no preference rather than a preference for restricted choice.
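The relative rates quoted above follow from the programmed ratios: with both arrangements scored out of 8, the ratio of reinforcement probabilities reduces to the ratio of the numerators. A short sketch of that arithmetic using exact fractions; `relative_rate` is a hypothetical helper, and the ratio is undefined when free-choice selections are on extinction (FC 0∶8).

```python
from fractions import Fraction


def relative_rate(rc_wins, fc_wins, trials=8):
    """Hypothetical helper: how many times as often restricted-choice selections
    are reinforced as free-choice selections under RC rc_wins:trials FC fc_wins:trials.
    Returns None when free choice is on extinction (ratio undefined)."""
    if fc_wins == 0:
        return None
    return Fraction(rc_wins, trials) / Fraction(fc_wins, trials)


for rc, fc in [(7, 2), (7, 4), (5, 4), (7, 0)]:
    rate = relative_rate(rc, fc)
    shown = "undefined" if rate is None else float(rate)
    print(f"RC {rc}:8 FC {fc}:8 -> {shown}")
# RC 7:8 FC 2:8 -> 3.5
# RC 7:8 FC 4:8 -> 1.75
# RC 5:8 FC 4:8 -> 1.25
# RC 7:8 FC 0:8 -> undefined
```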

One possible account for the relative insensitivity of preference to the schedules of reinforcement in the initial set of RC sessions in the No-Rules Condition is that the participants developed a self-rule of the efficacy of choice, and that this self-rule decreased sensitivity to schedules of reinforcement. This account would be consistent with previous findings that rules decrease sensitivity to contingencies (Bicard & Neef, 2002; Hackenberg & Joker, 1994; Hayes et al., 1986; Joyce & Chase, 1990; Kaufman et al., 1966; Lippman & Meyer, 1967; Matthews et al., 1977; Shimoff et al., 1981; Weiner, 1970) and that self-rules affect responding similarly to rules (Rosenfarb, Newland, Brannon, & Howey, 1992). The possibility that the participants developed a self-rule of the efficacy of choice is further supported by verbal reports that the odds of winning were higher in the free-choice arrangement than in the restricted-choice arrangement during the first several RC sessions in the No-Rules Condition. Three of the participants (Xavier, Robert, and Lucy) reported that the odds of winning were better in the restricted-choice arrangement in the session or two prior to the session in which they demonstrated preference for restricted choice. Thus, for these 3 participants verbal reports of the odds of winning changed before preference changed, suggesting that responding might have been influenced by a self-rule.

Although the preference for free choice and the verbal reports of the odds of winning in the RC sessions discussed above are consistent with the presence of a self-rule of the efficacy of choice, these data are also consistent with other explanations. In those RC sessions, in which Xavier, Edmond, Robert, Lucy, and Alysa demonstrated a preference for free choice and reported that the odds of winning were better in the free-choice arrangement, the points quotient measured across all trials in each session was positive or near zero; that is, the number of points won in the free-choice arrangement was close to or greater than the number won in the restricted-choice arrangement. Thus, rather than reflecting self-rules, the verbal reports that the odds of winning were better in the free-choice arrangement may have been inaccurate descriptions of the reinforcement schedules, influenced more by the relative amount of reinforcement across choice arrangements than by the probability of reinforcement in each arrangement. In the current experiment, we provided no programmed reinforcement for verbal reports; however, it is possible that verbal reports were influenced by unidentified social consequences (e.g., experimenter approval; see Schwartz & Baer, 1991; Shimoff, 1986). The use of written questionnaires and limited interaction with the experimenters reduces, but does not eliminate, this possibility.

As noted, the durability of the preference for free choice displayed by 5 of the participants is consistent with a self-rule of the efficacy of choice influencing preference for free choice. However, other variables may have contributed to the relative insensitivity to the reinforcement schedules displayed by most of the participants. Matthews et al. (1977) identified four common factors that may decrease sensitivity to schedules of reinforcement in experiments with humans: minimal or incomplete instructions, low response effort, ineffective reinforcers, and nonconsumable reinforcers. Any or all of these factors may have contributed to schedule insensitivity in the current experiment. The instructions in the No-Rules Condition were minimal, the response effort required to complete each terminal link was very low, the reinforcers may have exerted only weak control over responding (although points were shown to control responding for all participants), and nonconsumable reinforcers were used. It may be noteworthy in this regard that the 2 participants who displayed the greatest schedule sensitivity, Chloe and Alysa, both exchanged points earned in the experiment for money, whereas the other participants earned points for extra credit but did not engage in exchanges.

To summarize the discussion to this point, 5 of the participants showed some durability of preference for free choice during the initial RC sessions in the No-Rules Condition. This durability may have been influenced by a self-rule of the efficacy of choice, or by other factors, including the relative number of points earned in each choice arrangement rather than the probability of winning in each arrangement. But what of the participants' responding following differential reinforcement of restricted choice? Did they return to displaying a preference for free choice and reporting that the odds of winning were better following free-choice selections, or did one or both of these measures change? Based on previous research (Karsina et al., 2011; Mazur, 1996), it might be expected that previously reinforced selections of restricted choice would persist during the NC sessions following RC sessions. For example, Karsina and colleagues (2011) found that providing differential reinforcement for free choice resulted in a durable preference for free choice during sessions without differential reinforcement for 5 of their 7 participants. In contrast, only 2 of 6 participants in the current study (Lucy and Robert) demonstrated a preference for restricted choice in sessions without programmed differential reinforcement following sessions with differential reinforcement of restricted choice. The gradual schedule changes in the current experiment, as compared with the schedule changes in the Karsina et al. study, might be responsible for the different findings. Interestingly, Robert's and Lucy's data in NC sessions following FC sessions in the No-Rules Condition (sessions 11 and 13 for Robert, session 12 for Lucy) were also somewhat consistent with Karsina and colleagues' previous findings. In these sessions, Lucy displayed a preference for free choice, and Robert displayed a preference for restricted choice in one session and a preference for free choice in the other; however, none of these preferences was very strong, and responding across choice sets in these sessions was variable. Future research should examine the effects of a history of differential reinforcement for free choice versus a comparable history of differential reinforcement for restricted choice, within and across participants.

In contrast to Robert and Lucy, the other 4 participants (Chloe, Xavier, Edmond, and Alysa) all demonstrated a preference for free choice during NC sessions immediately following the first block of RC sessions in the No-Rules Condition. Chloe, Xavier, and Alysa also reported that their odds of winning were better in the free-choice arrangement. Thus, 3 of the 6 participants (Chloe, Xavier, and Alysa) demonstrated preference and verbal reports consistent with the efficacy of choice in the absence of programmed differential reinforcement following differential reinforcement of restricted choice, but 3 (Edmond, Robert, and Lucy) did not. In comparison, during the first NC session following RC sessions in the No-Rules Condition, all 6 participants demonstrated preference and verbal reports of the odds of winning consistent with the points quotient measured across all trials. However, the current experimental arrangement cannot determine whether the relative number of points won in each terminal link determined preference or whether preference determined the number of points won in each terminal link. In measuring preference for free choice, we allowed differential exposure to the terminal links during choice trials. We attempted to mitigate this differential exposure with a high ratio of exposure trials to choice trials (4:1), but at equal or near-equal schedules of reinforcement, a strong preference displayed during choice trials often resulted in more points won in one choice arrangement than in the other, and sometimes even in more points won in the arrangement with the lower probability of reinforcement. Future researchers could use higher ratios of exposure trials to choice trials and more distinct schedules of differential reinforcement to further reduce this potential confound. Other approaches that have been used to keep exposure to the choice arrangements relatively equal include programming variable-interval schedules in the initial links of the concurrent-chains arrangement (e.g., Catania, 1975); with this arrangement, higher rates of responding on one initial link do not necessarily result in more exposures to the corresponding terminal link.
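The exposure confound described above can be made concrete with an expected-value calculation. The sketch below is illustrative only: the trial counts, the allocation of choice trials, and the probabilities are hypothetical values rather than parameters or data from the current experiment, and exposure trials are assumed to be divided equally between the two arrangements. Points are treated as expected values (presentations multiplied by the programmed probability of a point) rather than as simulated outcomes.

```python
from dataclasses import dataclass


@dataclass
class TerminalLink:
    """A hypothetical terminal link (free- or restricted-choice) within one session."""
    name: str
    p_win: float          # programmed probability that a completed terminal link earns 1 point
    exposure_trials: int  # forced presentations (assumed split equally across arrangements)
    chosen_trials: int    # choice trials on which the participant selected this arrangement

    def presentations(self) -> int:
        """Total number of times this terminal link was completed in the session."""
        return self.exposure_trials + self.chosen_trials

    def expected_points(self) -> float:
        """Expected points = presentations x programmed probability of a point."""
        return self.presentations() * self.p_win


# Hypothetical 4:1 session: 80 exposure trials (40 per arrangement) and 20 choice trials,
# 18 of which go to free choice. Free choice has the LOWER programmed probability.
free = TerminalLink("free", p_win=0.45, exposure_trials=40, chosen_trials=18)
restricted = TerminalLink("restricted", p_win=0.50, exposure_trials=40, chosen_trials=2)

for link in (free, restricted):
    print(f"{link.name}: {link.presentations()} presentations, "
          f"{link.expected_points():.1f} expected points")
# free: 58 presentations, 26.1 expected points
# restricted: 42 presentations, 21.0 expected points
```

Under the same assumptions, raising the ratio to 8:1 (80 exposure trials per arrangement, still 20 choice trials) gives 44.1 versus 41.0 expected points: a narrower gap, but still more points in the arrangement with the lower probability of reinforcement, which is why higher ratios might best be combined with more distinct schedules.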

In addition to examining the effects of differential reinforcement on preference and verbal reports of the odds of winning, we examined the effects of accurate rules and feedback on these measures. Although rules and feedback were correlated with more accurate verbal reports of the choice arrangement with the better odds of winning, of relative points won, and of preference (see Table 4), we were specifically interested in preference and verbal reports of the odds of winning during NC sessions in the Rules-with-Feedback Condition. Of the 5 participants who completed the Rules-with-Feedback Condition, only Chloe displayed a preference for restricted choice and reported that the odds of winning were the same across choice arrangements in NC sessions in this condition. Xavier, Edmond, Robert, and Alysa all displayed a preference for free choice in all NC sessions in the Rules-with-Feedback Condition, and Robert and Edmond reported that their odds of winning were better in the free-choice arrangement in all of these NC sessions. Xavier and Alysa reported that their odds of winning were better in the free-choice arrangement in some NC sessions in the Rules-with-Feedback Condition but the same across choice arrangements in others. Therefore, providing accurate rules and feedback did not consistently change preference or verbal reports of the odds of winning. This finding is consistent with those of Dixon (2000), who found that after accurate rules about winning were provided, participants still wagered more chips when they placed their own bets than when the experimenter placed bets for them; they simply wagered relatively fewer chips when placing their own bets than they had following inaccurate rules.

The more accurate verbal reports of the odds of winning in the Rules-with-Feedback Condition could be a function of the additional information provided in this condition. But if this is the case, how should we interpret the inaccurate verbal reports of the odds of winning during NC sessions for Xavier, Edmond, Robert, and Alysa? These participants were provided with the stated odds of winning for each terminal link at the beginning of each session and on every terminal-link screen throughout the session (i.e., 121 exposures to the stated odds of winning each session), yet they still provided inaccurate verbal reports of the odds of winning in at least some NC sessions. One possibility is that the rules (stated odds of winning across choice arrangements) and the feedback (points won in each choice arrangement) appeared to conflict. The feedback in the Rules-with-Feedback Condition was intended to verify for the participant that the stated odds for the choice arrangements were accurate, at least relative to one another; however, no indication of the number of exposures to each terminal link was provided. Thus, because of differential exposure to the choice arrangements, a participant could read that the odds were better in the restricted-choice arrangement, for example, but also see that more points had been earned in the free-choice arrangement. Although accurate, then, the feedback may have functioned as incomplete feedback, indicating the number of points won in each choice arrangement but not the odds of winning in each arrangement. Research suggests that incomplete rules may lead to insensitive performance (Hayes et al., 1986; Hefferline, Keenan, & Harford, 1959) and that inaccurate rules may lead to less compliance with the rule (Torgrud & Holborn, 1990). Thus, in the current study, providing both the number of points won in each terminal link and the number of presentations of each terminal link might have led to more accurate verbal reports of the choice arrangement with the better odds of winning. Future research should compare the effects of different types of information, such as providing only the points won per choice arrangement (as in this study) versus providing both the points won per choice arrangement and the number of presentations of each choice arrangement.
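The possible conflict between stated odds and points-only feedback can be illustrated with a short calculation. The counts below are hypothetical, chosen so that stated odds favoring restricted choice would be accurate while the participant selects free choice on most trials; they are not data from this study. The sketch compares the arrangement favored by raw points (the type of feedback provided here) with the arrangement favored by points per presentation (the type of feedback suggested for future research).

```python
# Hypothetical end-of-session counts (illustrative only, not data from this study).
points = {"free": 28, "restricted": 18}
presentations = {"free": 70, "restricted": 30}


def favored(values: dict[str, float]) -> str:
    """Return the arrangement with the larger value."""
    return max(values, key=values.get)


# Obtained probability of a point = points won / presentations of that terminal link.
obtained_p = {name: points[name] / presentations[name] for name in points}

print("favored by points only:", favored(points))
print("favored by points per presentation:", favored(obtained_p))
print("obtained probabilities:", obtained_p)
# favored by points only: free
# favored by points per presentation: restricted
# obtained probabilities: {'free': 0.4, 'restricted': 0.6}
```

With these counts, a points-only summary favors free choice (28 vs. 18 points), whereas points per presentation (.40 vs. .60) agree with stated odds favoring restricted choice, which is the kind of apparent conflict the paragraph above describes.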