Abstract

A polynomial spline estimator is proposed for the mean function of dense functional data, together with a simultaneous confidence band which is asymptotically correct. In addition, the spline estimator and its accompanying confidence band enjoy oracle efficiency in the sense that they are asymptotically the same as if all random trajectories were observed entirely and without errors. The confidence band is also extended to the difference of mean functions of two populations of functional data. Simulation experiments provide strong evidence that corroborates the asymptotic theory, while computing is efficient. The confidence band procedure is illustrated by analyzing near-infrared spectroscopy data.

Keywords: B-spline, confidence band, functional data, Karhunen-Loève L2 representation, oracle efficiency

AMS Subject Classification: Primary 62M10, Secondary 62G08

1. Introduction

In functional data analysis problems, estimation of mean functions is the fundamental first step; see, for example, Cardot (2000); Rice and Wu (2001); Cuevas, Febrero and Fraiman (2006); Ferraty and Vieu (2006); Degras (2011) and Ma, Yang and Carroll (2011). According to Ramsay and Silverman (2005), functional data consist of a collection of iid realizations $\{\eta_i(x)\}_{i=1}^{n}$ of a smooth random function η(x), with unknown mean function Eη(x) = m(x) and covariance function G(x, x′) = cov {η(x), η(x′)}. Although the domain of η(·) is an entire interval 𝒳, each random curve ηi(x) is recorded only at a finite number Ni of points in 𝒳, and is contaminated with measurement errors. Without loss of generality, we take 𝒳 = [0, 1].

Denote by Yij the j-th observation of the random curve ηi(·) at time point Xij, 1 ≤ i ≤ n, 1 ≤ j ≤ Ni. Although we refer to the variable Xij as time, it could also be another numerical measure, such as wavelength in Section 6. In this paper, we examine the equally spaced dense design, in other words, Xij = j/N, 1 ≤ i ≤ n, 1 ≤ j ≤ N with N going to infinity. For the i-th subject, i = 1, 2, …, n, its sample path {j/N, Yij} is the noisy realization of the continuous time stochastic process ηi(x) in the sense that Yij = ηi(j/N) + σ(j/N) εij, with errors εij satisfying $E(\varepsilon_{ij}) = 0$, $E(\varepsilon_{ij}^2) = 1$, and {ηi(x), x ∈ [0, 1]} are iid copies of the process {η(x), x ∈ [0, 1]} which is L2, i.e., $E\int_{[0,1]} \eta^2(x)\,dx < +\infty$.

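For the standard process {η(x), x ∈ [0, 1]}, let sequences $\{\lambda_k\}_{k=1}^{\infty}$, $\{\psi_k(x)\}_{k=1}^{\infty}$ be the eigenvalues and eigenfunctions of G(x, x′) respectively, in which λ1 ≥ λ2 ≥ ⋯ ≥ 0, $\sum_{k=1}^{\infty}\lambda_k < \infty$, $\{\psi_k\}_{k=1}^{\infty}$ form an orthonormal basis of L2([0, 1]) and $G(x,x') = \sum_{k=1}^{\infty}\lambda_k\psi_k(x)\psi_k(x')$, which implies that ∫ G(x, x′) ψk(x′) dx′ = λkψk(x). The process {ηi(x), x ∈ [0, 1]} allows the Karhunen-Loève L2 representation $\eta_i(x) = m(x) + \sum_{k=1}^{\infty}\xi_{ik}\phi_k(x)$, where the random coefficients ξik are uncorrelated with mean 0 and variance 1, and $\phi_k = \lambda_k^{1/2}\psi_k$. In what follows, we assume that λk = 0 for k > κ, where κ is a positive integer or ∞, thus $G(x,x') = \sum_{k=1}^{\kappa}\phi_k(x)\phi_k(x')$ and the model that we consider is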

$$Y_{ij} = m(j/N) + \sum_{k=1}^{\kappa}\xi_{ik}\phi_k(j/N) + \sigma(j/N)\,\varepsilon_{ij}. \tag{1}$$

Although the sequences $\{\lambda_k\}_{k=1}^{\kappa}$, $\{\phi_k(\cdot)\}_{k=1}^{\kappa}$ and the random coefficients ξik exist mathematically, they are unknown or unobservable respectively.

The existing literature focuses on two data types. Yao, Müller and Wang (2005) studied sparse longitudinal data for which Ni, i.e. the number of observations for the i-th curve, is bounded and follows a given distribution, in which case Ma, Yang and Carroll (2011) obtained an asymptotically simultaneous confidence band for the mean function of the functional data, using piecewise constant spline estimation. Li and Hsing (2010a) established the uniform convergence rate for local linear estimation of the mean and covariance functions of dense functional data, where $\min_{1\le i\le n} N_i \gg (n/\log n)^{1/4}$ as n → ∞, similar to our Assumption (A3), but did not provide the asymptotic distribution of the maximal deviation or a simultaneous confidence band. Degras (2011) built an asymptotically correct simultaneous confidence band for dense functional data using a local linear estimator. Bunea, Ivanescu and Wegkamp (2011) proposed an asymptotically conservative rather than correct confidence set for the mean function of Gaussian functional data.

In this paper, we propose a polynomial spline confidence band for the mean function based on dense functional data. In function estimation problems, a simultaneous confidence band is an important tool to address the variability in the mean curve, see Zhao and Wu (2008); Zhou, Shen and Wolfe (1998) and Zhou and Wu (2010) for related theory and applications. The fact that simultaneous confidence bands have not been widely used for functional data analysis is certainly not due to a lack of interesting applications, but to the greater technical difficulty of formulating such bands for functional data and establishing their theoretical properties. In this work, we have established asymptotic correctness of the proposed confidence band using various properties of spline smoothing. The spline estimator and the accompanying confidence band are asymptotically the same as if all the n random curves were recorded over the entire interval, without measurement errors. They are oracally efficient despite the use of spline smoothing, see Remark 1. This provides partial theoretical justification for treating functional data as perfectly recorded random curves over the entire data range, as in Ferraty and Vieu (2006). Theorem 3 of Hall, Müller and Wang (2006) stated mean square (rather than the stronger uniform) oracle efficiency for local linear estimation of eigenfunctions and eigenvalues (rather than the mean function), under assumptions similar to ours, but provided only an outline of proof. Among the existing works on functional data analysis, Ma, Yang and Carroll (2011) proposed the simultaneous confidence band for sparse functional data. However, their result does not enjoy the oracle efficiency stated in Theorem 2.1, since there are not enough observations for each subject to obtain a good estimate of the individual trajectories. As a result, it has the slow nonparametric convergence rate of $n^{-1/3}\log n$, instead of the parametric rate of $n^{-1/2}$ as in this paper. This essential difference completely separates dense functional data from sparse ones.

The aforementioned confidence band is also extended to the difference of two regression functions. This is motivated by Li and Yu (2008), which applied functional segment discriminant analysis to a Tecator data set, see Figure 3. In this data set, each observation (a meat sample) consists of a 100-channel absorbance spectrum over a range of wavelengths, together with the fat, water and protein percentages. Li and Yu (2008) used the spectra to predict whether the fat percentage is greater than 20%. Conversely, we are interested in building a 100 (1 − α) % confidence band for the difference between the regression functions of the spectra from the less than 20% fat group and the higher than 20% fat group. If this 100 (1 − α) % confidence band covers the zero line, one does not reject the null hypothesis of no difference between the two groups at level α, i.e., the p-value is no less than α. Tests for equality between two groups of curves based on the adaptive Neyman test and wavelet thresholding techniques were proposed in Fan and Lin (1998), which did not provide an estimator of the difference of the two mean functions nor a simultaneous confidence band for such an estimator. As a result, their test did not extend to testing other important hypotheses on the difference of the two mean functions, while our Theorem 2.3 provides a benchmark for all such testing. More recently, Benko, Härdle and Kneip (2009) developed two-sample bootstrap tests for the equality of eigenfunctions, eigenvalues and mean functions by using common functional principal components.

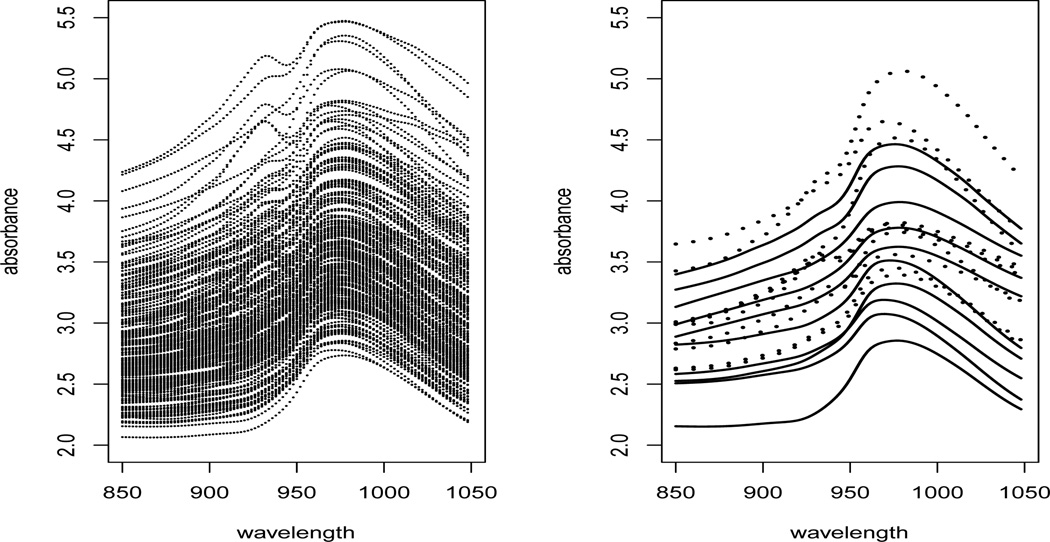

Figure 3.

Left: Plot of Tecator data. Right: Sample curves for the Tecator data. Each class has 10 sample curves. Dashed lines represent spectra with fat > 20% and solid lines represent spectra with fat < 20%.

The paper is organized as follows. Section 2 states main theoretical results on confidence bands constructed from polynomial splines. Section 3 provides further insights into the error structure of spline estimators. The actual steps to implement the confidence bands are provided in Section 4. A simulation study is presented in Section 5, and an empirical illustration on how to use the proposed spline confidence band for inference is reported in Section 6. Technical proofs are collected in the Appendix.

2. Main results

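For any Lebesgue measurable function ϕ on [0, 1], denote ‖ϕ‖∞ = supx∈[0,1] |ϕ(x)|. For any ν ∈ (0, 1] and nonnegative integer q, let $C^{q,\nu}[0,1]$ be the space of functions with ν-Hölder continuous q-th order derivatives on [0, 1], i.e.

$$C^{q,\nu}[0,1] = \Big\{\phi : \|\phi\|_{q,\nu} = \sup_{x \neq x',\ x,x'\in[0,1]} \frac{|\phi^{(q)}(x) - \phi^{(q)}(x')|}{|x-x'|^{\nu}} < +\infty\Big\}.$$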

To describe the spline functions, we first introduce a sequence of equally-spaced points $\{t_J\}_{J=1}^{N_m}$, called interior knots, which divide the interval [0, 1] into (Nm + 1) equal subintervals IJ = [tJ, tJ+1), J = 0, …, Nm − 1, INm = [tNm, 1]. For any positive integer p, introduce left boundary knots t1−p, …, t0, and right boundary knots tNm+1, …, tNm+p,

$$t_{1-p} = \cdots = t_0 = 0 < t_1 < \cdots < t_{N_m} < 1 = t_{N_m+1} = \cdots = t_{N_m+p}, \quad t_J = J h_m,\ 0 \le J \le N_m + 1,\ h_m = 1/(N_m+1),$$

in which hm is the distance between neighboring knots. Denote by ℋ(p−2) the p-th order spline space, i.e., the space of p − 2 times continuously differentiable functions on [0, 1] that are polynomials of degree p − 1 on [tJ, tJ+1], J = 0, …, Nm. Then $\mathcal{H}^{(p-2)} = \big\{\sum_{J=1-p}^{N_m} b_{J,p}B_{J,p}(x),\ b_{J,p} \in R,\ x \in [0,1]\big\}$, where BJ,p is the J-th B-spline basis of order p as defined in de Boor (2001).
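As a rough illustration of the knot layout and B-spline basis described above, the following sketch evaluates the Nm + p basis functions of order p at a point by the Cox-de Boor recursion. It uses only the Python standard library. The function name `bspline_basis` and the convention of repeating the boundary knots p times at 0 and at 1 are assumptions made for this example.

```python
def bspline_basis(x, n_interior, order):
    """Values at x in [0, 1] of the n_interior + order B-splines of the given order.

    Assumed knot layout: `order` copies of 0, then the equally spaced interior
    knots J * h with h = 1 / (n_interior + 1), then `order` copies of 1.
    """
    h = 1.0 / (n_interior + 1)
    knots = ([0.0] * order
             + [j * h for j in range(1, n_interior + 1)]
             + [1.0] * order)

    # Order 1: indicator functions of [t_i, t_{i+1}); the last interval is closed at 1.
    n_int = len(knots) - 1
    vals = [0.0] * n_int
    for i in range(n_int):
        in_half_open = knots[i] <= x < knots[i + 1]
        at_right_end = x == 1.0 and knots[i] < knots[i + 1] == 1.0
        if in_half_open or at_right_end:
            vals[i] = 1.0
            break

    # Cox-de Boor recursion from order k - 1 to order k; 0/0 terms are treated as 0.
    for k in range(2, order + 1):
        new = [0.0] * (len(knots) - k)
        for i in range(len(new)):
            left_den = knots[i + k - 1] - knots[i]
            right_den = knots[i + k] - knots[i + 1]
            left = (x - knots[i]) / left_den * vals[i] if left_den > 0 else 0.0
            right = (knots[i + k] - x) / right_den * vals[i + 1] if right_den > 0 else 0.0
            new[i] = left + right
        vals = new
    return vals


# Cubic splines (order 4) with 4 interior knots give 8 basis functions.
cubic_values = bspline_basis(0.37, n_interior=4, order=4)
```

The basis values are nonnegative and sum to one at every point of [0, 1], which gives a quick sanity check on any implementation.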

We propose to estimate the mean function m(x) by

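$$\hat m_p(x) = \operatorname*{argmin}_{g(\cdot)\in\mathcal{H}^{(p-2)}} \sum_{i=1}^{n}\sum_{j=1}^{N}\{Y_{ij} - g(j/N)\}^2. \tag{2}$$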

The technical assumptions we need are as follows:

(A1) The regression function $m \in C^{p-1,1}[0,1]$, i.e., $m^{(p-1)} \in C^{0,1}[0,1]$.

(A2) The standard deviation function $\sigma(x) \in C^{0,\mu}[0,1]$ for some μ ∈ (0, 1].

(A3) As n → ∞, $N^{-1}n^{1/(2p)} \to 0$ and $N = O(n^{\theta})$ for some θ > 1/(2p); the number of interior knots Nm satisfies or equivalently $N h_m \to \infty$, .

(A4) There exists CG > 0 such that G(x, x) ≥ CG, x ∈ [0, 1], for k ∈ {1, …, κ}, $\phi_k(x) \in C^{0,\mu}[0,1]$, for a sequence {κn} of increasing integers, with limn→∞ κn = κ and the constant μ ∈ (0, 1] as in Assumption (A2). In particular, .

(A5) There are constants C1, C2 ∈ (0, +∞), γ1, γ2 ∈ (1, +∞), β ∈ (0, 1/2) and iid N(0, 1) variables such that

(3) (4)

Assumptions (A1)–(A2) are typical for spline smoothing, see Huang and Yang (2004), Xue and Yang (2006) and Wang and Yang (2009a). Assumption (A3) concerns the number of observations for each subject, and the number of knots of B-splines. Assumption (A4) ensures that the principal components have collectively bounded smoothness. Assumption (A5) provides Gaussian approximation of estimation error process, and is ensured by the following elementary assumption:

(A5’) There exist η1 > 4, η2 > 4 + 2θ such that $E|\xi_{ik}|^{\eta_1} + E|\varepsilon_{ij}|^{\eta_2} < +\infty$, for 1 ≤ i < ∞, 1 ≤ k ≤ κ, 1 ≤ j < ∞. The number κ of nonzero eigenvalues is finite, or κ is infinite while the variables {ξik}1≤i<∞,1≤k<∞ are iid.

Degras (2011) makes a restrictive assumption (A.2) on the Hölder continuity of the stochastic process η(x). It is elementary to construct examples where our Assumptions (A4) and (A5) are satisfied while assumption (A.2) of Degras (2011) is not.

The part of Assumption (A4) on the ϕk’s holds trivially if κ is finite and all $\phi_k(x) \in C^{0,\mu}[0,1]$. Note also that by definition, $\phi_k = \lambda_k^{1/2}\psi_k$, in which $\{\psi_k\}$ form an orthonormal basis of L2([0, 1]), hence Assumption (A4) is fulfilled for κ = ∞ as long as λk decreases to zero sufficiently fast. Following one referee’s suggestion, we provide the following example. One takes $\lambda_k = \rho^{2[k/2]}$, k = 1, 2, … for any ρ ∈ (0, 1), with the canonical orthonormal Fourier basis of L2([0, 1])

In this case, , while for any with κn increasing, odd and κn → ∞, and Lipschitz order μ = 1

Denote by ζ(x), x ∈ [0, 1] a standardized Gaussian process such that Eζ(x) ≡ 0, Eζ²(x) ≡ 1, x ∈ [0, 1] with covariance function

$$E\zeta(x)\zeta(x') = G(x,x')\{G(x,x)\,G(x',x')\}^{-1/2}, \quad x, x' \in [0,1],$$

and define Q1−α as the 100(1 − α)-th percentile of the absolute maxima distribution of ζ(x), x ∈ [0, 1], i.e., P[supx∈[0,1] |ζ(x)| ≤ Q1−α] = 1 − α, ∀α ∈ (0, 1). Denote by z1−α/2 the 100(1 − α/2)-th percentile of the standard normal distribution. Define also the following “infeasible estimator” of the function m

$$\bar m(x) = \bar\eta(x) = n^{-1}\sum_{i=1}^{n}\eta_i(x), \quad x \in [0,1]. \tag{5}$$

The term “infeasible” refers to the fact that m̄(x) is computed from unknown quantity ηi(x), x ∈ [0, 1], and it would be the natural estimator of m(x) if all the iid random curves ηi(x), x ∈ [0, 1] were observed, a view taken in Ferraty and Vieu (2006).

We now state our main results in the following theorem.

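Theorem 2.1 : Under Assumptions (A1)–(A5), for any α ∈ (0, 1), as n → ∞, the “infeasible estimator” m̄(x) converges at the $n^{-1/2}$ rate

$$P\Big\{\sup_{x\in[0,1]} n^{1/2}\,|\bar m(x) - m(x)|\,G(x,x)^{-1/2} \le Q_{1-\alpha}\Big\} \to 1-\alpha,$$

while the spline estimator m̂p is asymptotically equivalent to m̄ up to order $n^{1/2}$, i.e.

$$\sup_{x\in[0,1]} n^{1/2}\,|\bar m(x) - \hat m_p(x)| = o_P(1).$$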

Remark 1 : The significance of Theorem 2.1 lies in the fact that one does not need to distinguish between the spline estimator m̂p and the “infeasible estimator” m̄ in (5), which converges at the $n^{-1/2}$ rate like a parametric estimator. We have therefore established oracle efficiency of the nonparametric estimator m̂p.

Corollary 2.2: Under Assumptions (A1)–(A5), as n → ∞, an asymptotic 100 (1 − α) % correct confidence band for m(x), x ∈ [0, 1] is

$$\hat m_p(x) \pm G(x,x)^{1/2}\,Q_{1-\alpha}\,n^{-1/2},$$

while an asymptotic 100 (1 − α) % pointwise confidence interval for m(x), x ∈ [0, 1], is $\hat m_p(x) \pm G(x,x)^{1/2}\,z_{1-\alpha/2}\,n^{-1/2}$.

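We next describe a two-sample extension of Theorem 2.1. Denote two samples indicated by d = 1, 2, which satisfy

$$Y_{dij} = m_d(j/N) + \sum_{k=1}^{\kappa_d}\xi_{dik}\phi_{dk}(j/N) + \sigma_d(j/N)\,\varepsilon_{dij}, \quad 1 \le i \le n_d,\ 1 \le j \le N,$$

with covariance functions $G_d(x,x') = \sum_{k=1}^{\kappa_d}\phi_{dk}(x)\phi_{dk}(x')$ respectively. We denote the ratio of the two sample sizes as r̂ = n1/n2 and assume that limn1→∞ r̂ = r > 0.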

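For both groups, let m̂1p(x) and m̂2p(x) be the order p spline estimates of the mean functions m1(x) and m2(x) by (2). Also denote by ζ12(x), x ∈ [0, 1] a standardized Gaussian process such that Eζ12(x) ≡ 0, $E\zeta_{12}^2(x) \equiv 1$, x ∈ [0, 1] with covariance function

$$E\zeta_{12}(x)\zeta_{12}(x') = \frac{G_1(x,x') + rG_2(x,x')}{\{G_1(x,x) + rG_2(x,x)\}^{1/2}\{G_1(x',x') + rG_2(x',x')\}^{1/2}}, \quad x, x' \in [0,1].$$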

Denote by Q12,1−α the (1 − α)-th quantile of the absolute maxima deviation of ζ12 (x), x ∈ [0, 1] as above. We mimic the two sample t-test and state the following theorem whose proof is analogous to that of Theorem 2.1.

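Theorem 2.3 : If Assumptions (A1)–(A5) are modified for each group accordingly, then for any α ∈ (0, 1), as n1 → ∞, r̂ → r > 0,

$$P\Big\{\sup_{x\in[0,1]} \frac{n_1^{1/2}\,|(\hat m_{1p} - \hat m_{2p} - m_1 + m_2)(x)|}{\{G_1(x,x) + rG_2(x,x)\}^{1/2}} \le Q_{12,1-\alpha}\Big\} \to 1-\alpha.$$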

Theorem 2.3 yields a uniform asymptotic confidence band for m1(x) − m2(x), x ∈ [0, 1].

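Corollary 2.4: If Assumptions (A1)–(A5) are modified for each group accordingly, as n1 → ∞, r̂ → r > 0, a 100 × (1 − α) % asymptotically correct confidence band for m1(x) − m2(x), x ∈ [0, 1] is $(\hat m_{1p} - \hat m_{2p})(x) \pm n_1^{-1/2}\,Q_{12,1-\alpha}\,\{G_1(x,x) + \hat r\,G_2(x,x)\}^{1/2}$, ∀α ∈ (0, 1).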

If the confidence band in Corollary 2.2 is used to test the hypothesis

$$H_0: m(x) = m_0(x),\ \forall x \in [0,1] \quad \text{versus} \quad H_1: m(x) \neq m_0(x),\ \text{for some } x \in [0,1],$$

for some given function m0(x), as one referee pointed out, the test has asymptotic size α under H0 and asymptotic power 1 under H1, due to Theorem 2.1. The same can be said for testing hypotheses about m1(x) − m2(x) using the confidence band in Corollary 2.4.

3. Error Decomposition For the Spline Estimators

In this section, we break the estimation error m̂p(x) − m(x) into three terms. We begin by discussing the representation of the spline estimator m̂p(x) in (2).

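The definition of m̂p(x) in (2) means that

$$\hat m_p(x) \equiv \sum_{J=1-p}^{N_m}\hat\beta_{J,p}B_{J,p}(x),$$

with coefficients {β̂1−p,p, …, β̂Nm,p}T solving the following least squares problem

$$\{\hat\beta_{1-p,p},\dots,\hat\beta_{N_m,p}\}^{T} = \operatorname*{argmin}_{\{\beta_{1-p,p},\dots,\beta_{N_m,p}\}\in R^{N_m+p}} \sum_{i=1}^{n}\sum_{j=1}^{N}\Big\{Y_{ij} - \sum_{J=1-p}^{N_m}\beta_{J,p}B_{J,p}(j/N)\Big\}^2. \tag{6}$$

Applying elementary algebra, one obtains

$$\hat m_p(x) = \{B_{1-p,p}(x),\dots,B_{N_m,p}(x)\}\,(X^{T}X)^{-1}X^{T}Y, \tag{7}$$

where Y = (Ȳ.1, …, Ȳ.N)T, $\bar Y_{\cdot j} = n^{-1}\sum_{i=1}^{n}Y_{ij}$, 1 ≤ j ≤ N, and the design matrix X is

$$X = \{B_{J,p}(j/N)\}_{1\le j\le N,\ 1-p\le J\le N_m} \in R^{N\times(N_m+p)}.$$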

Projecting via (7) the relationship in model (1) onto the linear subspace of $R^{N_m+p}$ spanned by $\{B_{J,p}(j/N)\}_{1\le j\le N,\ 1-p\le J\le N_m}$, we obtain the following crucial decomposition in the space ℋ(p−2) of spline functions:

| (8) |

where

| (9) |

The vectors {β̃1−p, …,β̃Nm}T, {ã1−p, …, ãNm}T and {τ̃k,1−p, …,τ̃k,Nm}T in (9) are solutions to (6) with Yij replaced by m(j/N), σ (j/N) εij and ξikϕk (j/N) respectively.

Alternatively,

in which m = (m(1/N),…m(N/N))T is the signal vector, is the noise vector and ϕk = (ϕk (1/N),…, ϕk (N/N))T are the eigenfunction vectors, and .
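The least squares representation in (6) and (7) can be sketched numerically as follows: average the n curves at each design point j/N, build the B-spline design matrix, and solve the normal equations. This is a minimal standard-library sketch and not the authors' implementation; the names `bspline_basis`, `solve_linear` and `fit_spline_mean`, the repeated boundary knots, and the use of plain Gaussian elimination are all assumptions made for illustration.

```python
def bspline_basis(x, n_interior, order):
    """B-spline basis values at x (Cox-de Boor), with boundary knots repeated `order` times."""
    h = 1.0 / (n_interior + 1)
    knots = [0.0] * order + [j * h for j in range(1, n_interior + 1)] + [1.0] * order
    vals = [0.0] * (len(knots) - 1)
    for i in range(len(vals)):
        if knots[i] <= x < knots[i + 1] or (x == 1.0 and knots[i] < knots[i + 1] == 1.0):
            vals[i] = 1.0
            break
    for k in range(2, order + 1):
        new = [0.0] * (len(knots) - k)
        for i in range(len(new)):
            ld = knots[i + k - 1] - knots[i]
            rd = knots[i + k] - knots[i + 1]
            new[i] = ((x - knots[i]) / ld * vals[i] if ld > 0 else 0.0) + \
                     ((knots[i + k] - x) / rd * vals[i + 1] if rd > 0 else 0.0)
        vals = new
    return vals


def solve_linear(A, b):
    """Solve A z = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    z = [0.0] * n
    for r in range(n - 1, -1, -1):
        z[r] = (M[r][n] - sum(M[r][c] * z[c] for c in range(r + 1, n))) / M[r][r]
    return z


def fit_spline_mean(Y, n_interior, order):
    """Return x -> m_hat(x) from an n-by-N data matrix Y observed at x = j/N, j = 1..N."""
    n, N = len(Y), len(Y[0])
    ybar = [sum(Y[i][j] for i in range(n)) / n for j in range(N)]       # column means
    X = [bspline_basis((j + 1) / N, n_interior, order) for j in range(N)]
    K = n_interior + order
    XtX = [[sum(X[j][a] * X[j][b] for j in range(N)) for b in range(K)] for a in range(K)]
    XtY = [sum(X[j][a] * ybar[j] for j in range(N)) for a in range(K)]
    beta = solve_linear(XtX, XtY)                                       # (X'X)^{-1} X'Y
    return lambda x: sum(b * v for b, v in zip(beta, bspline_basis(x, n_interior, order)))


# Usage: two noise-free shifted copies of a cubic; their average is the cubic itself,
# which lies in the cubic spline space and is therefore reproduced by the fit.
cubic = lambda x: 1 + 2 * x - 3 * x ** 2 + x ** 3
Y_demo = [[cubic((j + 1) / 50) + shift for j in range(50)] for shift in (-0.5, 0.5)]
m_hat = fit_spline_mean(Y_demo, n_interior=3, order=4)
```

Because the design points are the same for every subject, minimizing the double sum in (6) is equivalent to regressing the column means on the basis, which is why only the averaged vector enters the normal equations.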

We cite next an important result from de Boor (2001), p. 149.

Theorem 3.1 : There is an absolute constant Cp−1,μ > 0 such that for every ϕ ∈ Cp−1,μ [0, 1] for some μ ∈ (0, 1], there exists a function g ∈ ℋ(p−1) [0, 1] for which .

The next three propositions concern m̃p(x), ẽp(x) and ζ̃p(x) given in (8).

Proposition 3.2: Under Assumptions (A1) and (A3), as n → ∞

| (10) |

Proposition 3.3: Under Assumptions (A2)–(A4), as n → ∞

| (11) |

Proposition 3.4: Under Assumptions (A2)–(A4), as n → ∞

| (12) |

also for any α ∈ (0, 1)

| (13) |

Equations (10), (11) and (12) yield the asymptotic efficiency of the spline estimator m̂p, i.e. supx∈[0,1] n1/2 |m̄(x) − m̂p(x)| = oP (1). The Appendix contains proofs for the above three propositions, which together with (8), imply Theorem 2.1.

4. Implementation

This section describes procedures to implement the confidence band in Corollary 2.2.

Given any data set from model (1), the spline estimator m̂p(x) is obtained from (7). The number of interior knots in estimating m(x) is taken to be $N_m = [c\,n^{1/(2p)}\log(n)]$, in which [a] denotes the integer part of a. In our experience, the choices c = 0.2, 0.3, 0.5, 1, 2 seem quite adequate, and these are what we recommend. When constructing the confidence bands, one needs to estimate the unknown function G(·, ·) and the quantile Q1−α and then plug in these estimators; the same approach is taken in Ma, Yang and Carroll (2011) and Wang and Yang (2009a).
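The knot-number rule above is simple enough to compute directly. The sketch below uses hypothetical helper names (`num_interior_knots`, `num_covariance_knots`), takes the logarithm to be natural, and implements the integer part by truncation; the second helper applies the analogous rule that this section states for the covariance estimator.

```python
import math


def num_interior_knots(n, order, c=0.5):
    """Integer part of c * n^(1/(2p)) * log(n), with p the spline order."""
    return int(c * n ** (1.0 / (2 * order)) * math.log(n))


def num_covariance_knots(n, order):
    """Integer part of n^(1/(2p)) * log(log(n)), the rule stated for the pilot covariance fit."""
    return int(n ** (1.0 / (2 * order)) * math.log(math.log(n)))


# Sample sizes of the two Tecator groups in Section 6, with c = 0.5.
knots_cubic = (num_interior_knots(155, 4), num_interior_knots(85, 4))
knots_linear = (num_interior_knots(155, 2), num_interior_knots(85, 2))
```

With n = 155 and n = 85 and c = 0.5, this rule gives 4 and 3 interior knots for cubic splines and 8 and 6 for linear splines, matching the values reported for the Tecator groups in Section 6.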

The pilot estimator Ĝp (x, x′) of covariance function G(x, x′) is

with and the tensor product spline space in which NG = [n1/(2p)log(log(n))].

In order to estimate Q1−α, one first does the eigenfunction decomposition of Ĝp(x, x′), i.e. $\int_0^1 \hat G_p(x,x')\,\hat\psi_k(x')\,dx' = \hat\lambda_k\hat\psi_k(x)$, to obtain the estimated eigenvalues λ̂k and eigenfunctions ψ̂k. Next, one chooses the number κ of eigenfunctions by a standard and efficient criterion based on the first T estimated positive eigenvalues $\hat\lambda_1,\dots,\hat\lambda_T$. Finally, one simulates $\hat\zeta_b(x) = \hat G_p(x,x)^{-1/2}\sum_{k=1}^{\kappa}\hat\lambda_k^{1/2}Z_{k,b}\,\hat\psi_k(x)$, where $Z_{k,b}$ are i.i.d. standard normal variables with 1 ≤ k ≤ κ and b = 1, …, bM, where bM is a preset large integer, the default of which is 1000. One takes the maximal absolute value for each copy of ζ̂b(x) and estimates Q1−α by the empirical quantile Q̂1−α of these maximum values. One then uses the following confidence band
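The simulation step for the quantile can be sketched as below. This is an illustrative standard-library version, not the authors' code: it takes eigenvalues and eigenfunctions as given inputs (here the known eigenstructure of the simulation model in Section 5 stands in for the estimates), approximates the supremum by a maximum over a finite grid, and, as a simplifying assumption, uses the sum of the retained components in place of the estimated variance function G(x, x). The names `simulate_quantile`, `psi1`, `psi2` and `q95` are hypothetical.

```python
import math
import random


def simulate_quantile(eigenvalues, eigenfunctions, alpha, grid, n_draws=1000, seed=0):
    """Empirical (1 - alpha) quantile of max_x |zeta_b(x)| over simulated copies."""
    rng = random.Random(seed)
    # Scaled components sqrt(lambda_k) * psi_k(x) evaluated on the grid.
    comps = [[math.sqrt(lam) * psi(x) for x in grid]
             for lam, psi in zip(eigenvalues, eigenfunctions)]
    # Assumed variance function: G(x, x) = sum_k lambda_k * psi_k(x)^2.
    sd = [math.sqrt(sum(c[j] ** 2 for c in comps)) for j in range(len(grid))]
    maxima = []
    for _ in range(n_draws):
        z = [rng.gauss(0.0, 1.0) for _ in comps]      # Z_{k,b}, iid N(0, 1)
        maxima.append(max(
            abs(sum(zk * c[j] for zk, c in zip(z, comps))) / sd[j]
            for j in range(len(grid))
        ))
    maxima.sort()
    index = min(n_draws - 1, math.ceil((1 - alpha) * n_draws) - 1)
    return maxima[index]


# Stand-in eigenstructure: lambda = (2, 0.5) with orthonormal eigenfunctions on [0, 1].
psi1 = lambda x: -math.sqrt(2.0) * math.cos(math.pi * (x - 0.5))
psi2 = lambda x: math.sqrt(2.0) * math.sin(math.pi * (x - 0.5))
grid = [j / 100 for j in range(101)]
q95 = simulate_quantile([2.0, 0.5], [psi1, psi2], alpha=0.05, grid=grid, n_draws=2000, seed=1)
```

The simulated quantile necessarily exceeds the pointwise normal percentile, since it controls the maximum over the whole interval rather than the deviation at a single point.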

$$\hat m_p(x) \pm n^{-1/2}\,\hat G_p(x,x)^{1/2}\,\hat Q_{1-\alpha}, \quad x \in [0,1], \tag{14}$$

for the mean function. One estimates Q12,1−α analogous to Q̂1−α and computes

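$$(\hat m_{1p} - \hat m_{2p})(x) \pm n_1^{-1/2}\,\hat Q_{12,1-\alpha}\,\{\hat G_{1p}(x,x) + \hat r\,\hat G_{2p}(x,x)\}^{1/2}, \quad x \in [0,1], \tag{15}$$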

as confidence band for m1(x) − m2(x). Although beyond the scope of this paper, as one referee pointed out, the confidence band in (14) is expected to enjoy the same asymptotic coverage as if true values of Q1−α and G(x, x) were used instead, due to the consistency of Ĝp (x, x) estimating G(x, x). The same holds for the band in (15).
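Once the mean estimate, the variance function estimate and the quantile are available on a grid, assembling the bands is a pointwise computation. The sketch below assumes the one-sample band has half-width Q̂ Ĝ(x, x)^{1/2} n^{−1/2}, in line with Corollary 2.2, and that the two-sample band pools the variances as Ĝ1(x, x) + r̂ Ĝ2(x, x) scaled by n1^{−1/2}, with r̂ = n1/n2; the function names are hypothetical and the inputs are plain lists of grid values.

```python
import math


def confidence_band(m_hat, g_diag, q, n):
    """Lower and upper band limits m_hat(x) -/+ q * sqrt(G(x, x) / n) on a grid."""
    half = [q * math.sqrt(g / n) for g in g_diag]
    lower = [m - h for m, h in zip(m_hat, half)]
    upper = [m + h for m, h in zip(m_hat, half)]
    return lower, upper


def two_sample_band(m1_hat, m2_hat, g1_diag, g2_diag, q12, n1, n2):
    """Band for m1 - m2, using the pooled variance G1 + r_hat * G2 scaled by n1."""
    r_hat = n1 / n2
    diff = [a - b for a, b in zip(m1_hat, m2_hat)]
    pooled = [g1 + r_hat * g2 for g1, g2 in zip(g1_diag, g2_diag)]
    return confidence_band(diff, pooled, q12, n1)


def band_covers(lower, upper, curve):
    """True if the curve lies inside the band at every grid point."""
    return all(lo <= c <= up for lo, c, up in zip(lower, curve, upper))


# Toy numbers: a difference of 1.0 with half-width 0.4 excludes the zero line.
lo, up = two_sample_band([11.0], [10.0], [1.0], [1.5], q12=2.0, n1=100, n2=50)
rejects_equality = not band_covers(lo, up, [0.0])
```

Checking whether the zero line lies inside the two-sample band at every grid point is the discrete analogue of the test of equal mean functions discussed in Section 1.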

5. Simulation

To demonstrate the practical performance of our theoretical results, we perform a set of simulation studies. Data are generated from model

$$Y_{ij} = m(j/N) + \sum_{k=1}^{2}\xi_{ik}\phi_k(j/N) + \sigma\,\varepsilon_{ij}, \tag{16}$$

where ξik ~ N(0, 1), k = 1, 2, εij ~ N(0, 1), for 1 ≤ i ≤ n, 1 ≤ j ≤ N, m(x) = 10 + sin {2π (x − 1/2)}, ϕ1(x) = −2 cos {π (x − 1/2)} and ϕ2(x) = sin {π (x − 1/2)}. This setting implies λ1 = 2 and λ2 = 0.5. The noise levels are set to be σ = 0.5 and 0.3. The number of subjects n is taken to be 60, 100, 200, 300 and 500, and under each sample size the number of observations per curve is taken to be $N = [n^{0.25}\log^{2}(n)]$. This simulated process has a similar design to one of the simulation models in Yao, Müller and Wang (2005), except that each subject is densely observed. We consider both linear and cubic spline estimators, and use confidence levels 1 − α = 0.95 and 0.99 for our simultaneous confidence bands. The constant c in the definition of Nm in Section 4 is taken to be 0.2, 0.3, 0.5, 1 and 2. Each simulation is repeated 500 times.
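A data set from the simulation design just described can be generated along the following lines. This is a sketch using only the standard library; the function name `simulate_dense_data` is hypothetical, the number of observations per curve is read as the integer part of n^{0.25} times the squared natural logarithm of n, and the model is taken to be the mean plus two principal components plus homoscedastic Gaussian noise.

```python
import math
import random


def simulate_dense_data(n, sigma, seed=0):
    """Return an n-by-N list of lists of observations Y[i][j-1] at x = j/N, j = 1..N."""
    rng = random.Random(seed)
    N = int(n ** 0.25 * math.log(n) ** 2)             # observations per curve (assumed reading)
    m = lambda x: 10 + math.sin(2 * math.pi * (x - 0.5))
    phi1 = lambda x: -2 * math.cos(math.pi * (x - 0.5))
    phi2 = lambda x: math.sin(math.pi * (x - 0.5))
    Y = []
    for _ in range(n):
        xi1, xi2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)   # principal component scores
        Y.append([
            m(j / N) + xi1 * phi1(j / N) + xi2 * phi2(j / N) + sigma * rng.gauss(0.0, 1.0)
            for j in range(1, N + 1)
        ])
    return Y


Y_sim = simulate_dense_data(n=100, sigma=0.3, seed=42)
```

Under this reading, n = 100 gives N = 67 observations per curve.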

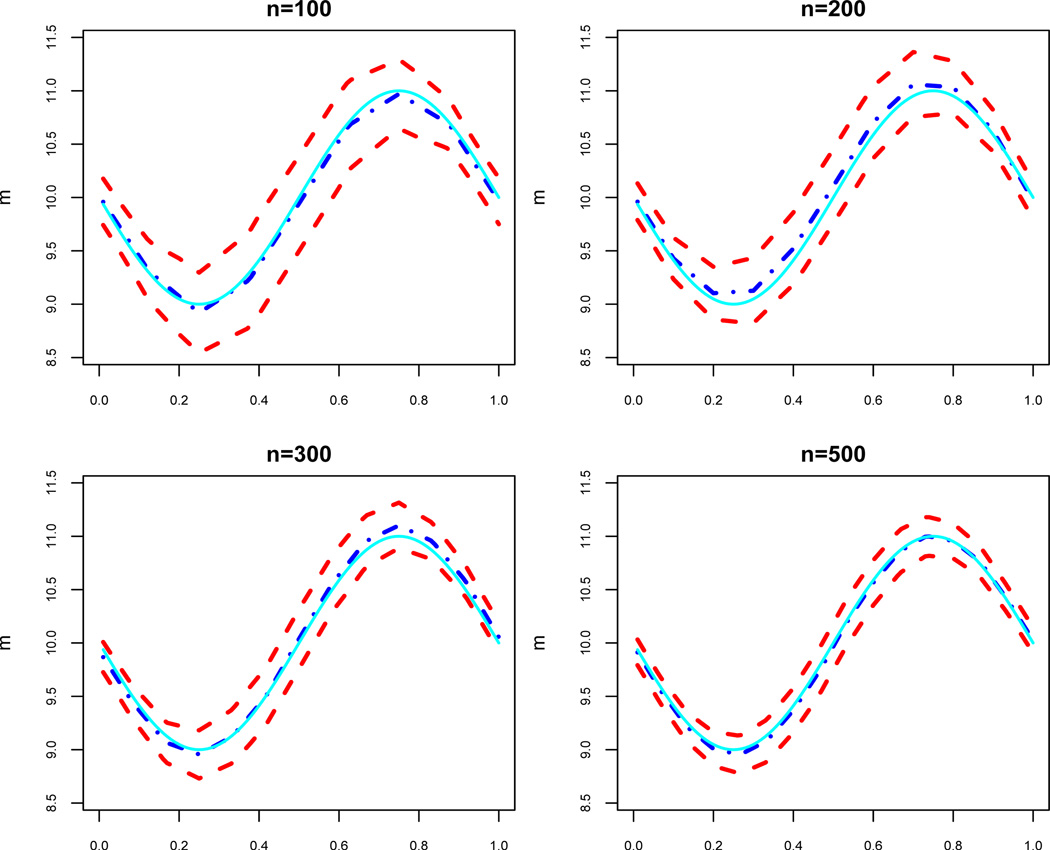

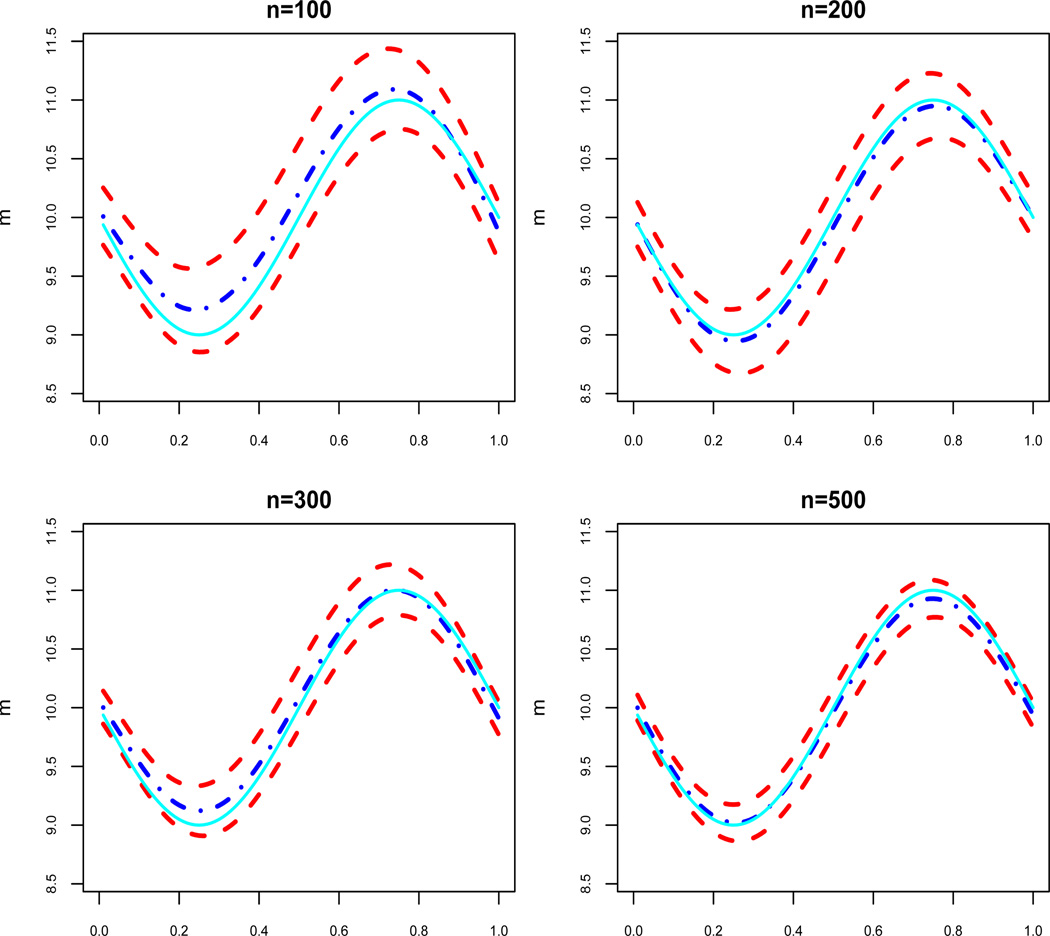

Figures 1 and 2 show the estimated mean functions and their 95% confidence bands for the true curve m(·) in Model (16) with σ = 0.3 and n = 100, 200, 300, 500 respectively. As expected when n increases, the confidence band becomes narrower and the linear and cubic spline estimators are closer to the true curve.

Figure 1.

Plots of the linear spline estimator (2) for simulated data (dashed-dotted line) and 95% confidence bands (14) (upper and lower dashed lines) for m(x) (solid lines). In all panels, σ = 0.3.

Figure 2.

Plots of the cubic spline estimator (2) for simulated data (dashed-dotted line) and 95% confidence bands (14) (upper and lower dashed lines) for m(x) (solid lines). In all panels, σ = 0.3.

Tables 1 and 2 show the empirical frequency that the true curve m(·) is covered by the linear and cubic spline confidence bands (14) at 100 points {1/100, …, 99/100, 1} respectively. At all noise levels, the coverage percentages for the confidence band are close to the nominal confidence levels 0.95 and 0.99 for linear splines with c = 0.5, 1 (Table 1), and cubic splines with c = 0.3, 0.5 (Table 2) but decline slightly for c = 2 and markedly for c = 0.2. The coverage percentages thus depend on the choice of Nm, and the dependency becomes stronger when sample sizes decrease. For large sample sizes n = 300, 500, the effect of the choice of Nm on the coverage percentages is negligible. Although our theory indicates no optimal choice of c, we recommend using c = 0.5 for data analysis as its performance in simulation for both linear and cubic splines is either optimal or near optimal.

Table 1.

Coverage frequencies from 500 replications using linear spline (14) with p = 2 and $N_m = [c\,n^{1/(2p)}\log(n)]$. Columns 3 to 7 are for σ = 0.5 and columns 8 to 12 are for σ = 0.3.

| n | 1 − α | σ = 0.5, c = 0.2 | σ = 0.5, c = 0.3 | σ = 0.5, c = 0.5 | σ = 0.5, c = 1 | σ = 0.5, c = 2 | σ = 0.3, c = 0.2 | σ = 0.3, c = 0.3 | σ = 0.3, c = 0.5 | σ = 0.3, c = 1 | σ = 0.3, c = 2 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 60 | 0.950 | 0.384 | 0.790 | 0.876 | 0.894 | 0.852 | 0.410 | 0.786 | 0.930 | 0.914 | 0.884 |
| | 0.990 | 0.692 | 0.938 | 0.970 | 0.976 | 0.942 | 0.702 | 0.950 | 0.972 | 0.966 | 0.954 |
| 100 | 0.950 | 0.184 | 0.826 | 0.886 | 0.884 | 0.838 | 0.198 | 0.822 | 0.916 | 0.916 | 0.896 |
| | 0.990 | 0.476 | 0.936 | 0.964 | 0.966 | 0.944 | 0.496 | 0.940 | 0.974 | 0.974 | 0.968 |
| 200 | 0.950 | 0.418 | 0.856 | 0.914 | 0.922 | 0.862 | 0.414 | 0.862 | 0.946 | 0.942 | 0.926 |
| | 0.990 | 0.712 | 0.966 | 0.976 | 0.990 | 0.972 | 0.720 | 0.966 | 0.984 | 0.984 | 0.980 |
| 300 | 0.950 | 0.600 | 0.888 | 0.920 | 0.932 | 0.874 | 0.602 | 0.896 | 0.940 | 0.934 | 0.926 |
| | 0.990 | 0.834 | 0.978 | 0.976 | 0.980 | 0.972 | 0.840 | 0.982 | 0.984 | 0.986 | 0.980 |
| 500 | 0.950 | 0.772 | 0.880 | 0.922 | 0.886 | 0.894 | 0.768 | 0.888 | 0.954 | 0.950 | 0.942 |
| | 0.990 | 0.902 | 0.964 | 0.984 | 0.976 | 0.976 | 0.906 | 0.968 | 0.992 | 0.994 | 0.988 |

Table 2.

Coverage frequencies from 500 replications using cubic spline (14) with p = 4 and $N_m = [c\,n^{1/(2p)}\log(n)]$. Columns 3 to 7 are for σ = 0.5 and columns 8 to 12 are for σ = 0.3.

| n | 1 − α | σ = 0.5, c = 0.2 | σ = 0.5, c = 0.3 | σ = 0.5, c = 0.5 | σ = 0.5, c = 1 | σ = 0.5, c = 2 | σ = 0.3, c = 0.2 | σ = 0.3, c = 0.3 | σ = 0.3, c = 0.5 | σ = 0.3, c = 1 | σ = 0.3, c = 2 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 60 | 0.950 | 0.644 | 0.916 | 0.902 | 0.890 | 0.738 | 0.672 | 0.922 | 0.940 | 0.940 | 0.916 |
| | 0.990 | 0.866 | 0.980 | 0.958 | 0.964 | 0.888 | 0.884 | 0.986 | 0.986 | 0.984 | 0.982 |
| 100 | 0.950 | 0.596 | 0.902 | 0.904 | 0.876 | 0.846 | 0.610 | 0.916 | 0.914 | 0.914 | 0.896 |
| | 0.990 | 0.786 | 0.970 | 0.968 | 0.956 | 0.952 | 0.798 | 0.980 | 0.974 | 0.970 | 0.964 |
| 200 | 0.950 | 0.928 | 0.942 | 0.932 | 0.936 | 0.904 | 0.938 | 0.952 | 0.950 | 0.948 | 0.934 |
| | 0.990 | 0.978 | 0.992 | 0.982 | 0.992 | 0.978 | 0.982 | 0.984 | 0.992 | 0.982 | 0.984 |
| 300 | 0.950 | 0.920 | 0.948 | 0.926 | 0.948 | 0.898 | 0.922 | 0.956 | 0.948 | 0.942 | 0.938 |
| | 0.990 | 0.976 | 0.986 | 0.986 | 0.988 | 0.980 | 0.982 | 0.984 | 0.988 | 0.984 | 0.982 |
| 500 | 0.950 | 0.928 | 0.922 | 0.954 | 0.902 | 0.898 | 0.928 | 0.928 | 0.936 | 0.932 | 0.916 |
| | 0.990 | 0.980 | 0.982 | 0.990 | 0.976 | 0.978 | 0.980 | 0.982 | 0.990 | 0.990 | 0.992 |

Following the suggestion of one referee and the Associate Editor, we compare by simulation the proposed spline confidence band to the least squares Bonferroni and least squares bootstrap bands in Bunea, Ivanescu and Wegkamp (2011) (BIW). Table 3 presents the empirical frequency that the true curve m(·) for model (16) is covered by these bands at {1/100, …, 99/100, 1}, as in Table 1. The coverage frequency of the BIW Bonferroni band is much higher than the nominal level, making it too conservative. The coverage frequency of the BIW bootstrap band is consistently lower than the nominal level by at least 10%, so it is not recommended for practical use.

Table 3.

Coverage frequencies from 500 replications using the least squares Bonferroni band and the least squares bootstrap band.

| n | 1 − α | Bonferroni, σ = 0.5 | Bonferroni, σ = 0.3 | Bootstrap, σ = 0.5 | Bootstrap, σ = 0.3 |
|---|---|---|---|---|---|
| 60 | 0.950 | 0.990 | 0.988 | 0.742 | 0.744 |
| | 0.990 | 0.994 | 0.994 | 0.856 | 0.864 |
| 100 | 0.950 | 0.996 | 0.998 | 0.678 | 0.712 |
| | 0.990 | 0.998 | 1.000 | 0.860 | 0.870 |
| 200 | 0.950 | 0.988 | 0.992 | 0.710 | 0.734 |
| | 0.990 | 1.000 | 1.000 | 0.856 | 0.888 |
| 300 | 0.950 | 0.988 | 0.998 | 0.704 | 0.720 |
| | 0.990 | 1.000 | 1.000 | 0.868 | 0.870 |
| 500 | 0.950 | 0.996 | 0.998 | 0.718 | 0.732 |
| | 0.990 | 1.000 | 1.000 | 0.856 | 0.860 |

Following the suggestion of one referee and the Associate Editor, we also compare the widths of the three bands. For each replication, we calculate the ratios of widths of the two BIW bands against the spline band at {1/100, …, 99/100, 1} and then average these 100 ratios. Table 4 shows the five number summary of these 500 averaged ratios for σ = 0.3 and p = 4. The BIW Bonferroni band is much wider than cubic spline band, making it undesirable. While the BIW bootstrap band is narrower, we have mentioned previously that its coverage frequency is too low to be useful in practice. Simulation for other cases (e.g. p = 2, σ = 0.5) leads to the same conclusion.

Table 4.

Five number summary of ratios of confidence band widths. Columns 3 to 7 are for least squares Bonferroni/cubic spline (LSB) and columns 8 to 12 are for least squares bootstrap/cubic spline (LSBoot).

| n | 1 − α | LSB Min. | LSB Q1 | LSB Med. | LSB Q3 | LSB Max. | LSBoot Min. | LSBoot Q1 | LSBoot Med. | LSBoot Q3 | LSBoot Max. |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 60 | 0.950 | 0.964 | 1.219 | 1.299 | 1.397 | 1.845 | 0.522 | 0.667 | 0.716 | 0.770 | 0.967 |
| | 0.990 | 0.907 | 1.114 | 1.188 | 1.285 | 1.730 | 0.527 | 0.662 | 0.715 | 0.770 | 1.048 |
| 100 | 0.950 | 0.995 | 1.263 | 1.331 | 1.415 | 1.684 | 0.565 | 0.675 | 0.714 | 0.754 | 0.888 |
| | 0.990 | 0.910 | 1.148 | 1.219 | 1.295 | 1.603 | 0.536 | 0.665 | 0.708 | 0.752 | 0.925 |
| 200 | 0.950 | 1.169 | 1.326 | 1.383 | 1.433 | 1.653 | 0.600 | 0.683 | 0.715 | 0.743 | 0.855 |
| | 0.990 | 1.045 | 1.197 | 1.250 | 1.300 | 1.507 | 0.557 | 0.668 | 0.702 | 0.740 | 0.888 |
| 300 | 0.950 | 1.169 | 1.363 | 1.412 | 1.462 | 1.663 | 0.574 | 0.690 | 0.717 | 0.742 | 0.838 |
| | 0.990 | 1.067 | 1.228 | 1.277 | 1.322 | 1.509 | 0.587 | 0.676 | 0.707 | 0.739 | 0.850 |
| 500 | 0.950 | 1.273 | 1.395 | 1.432 | 1.476 | 1.601 | 0.620 | 0.691 | 0.714 | 0.737 | 0.818 |
| | 0.990 | 1.132 | 1.243 | 1.288 | 1.334 | 1.465 | 0.607 | 0.674 | 0.707 | 0.734 | 0.839 |

To examine the performance of the two-sample test based on the spline confidence band, Table 5 reports the empirical power and type I error for the proposed two-sample test. The data were generated from (16) with σ = 0.5 and m1(x) = 10 + sin {2π (x − 1/2)} + δ(x), n = n1 for the first group, and m2(x) = 10 + sin {2π (x − 1/2)}, n = n2 for the other group. The remaining parameters, ξik, εij, ϕ1(x) and ϕ2(x), were set to the same values for each group as in (16). In order to mimic the real data in Section 6, we set N = 50, 100 and 200 when n1 = 160, 80 and 40 and n2 = 320, 160 and 80 accordingly. The studied hypotheses are:

$$H_0: m_1(x) = m_2(x),\ \forall x \in [0,1] \quad \text{versus} \quad H_a: m_1(x) \neq m_2(x),\ \text{for some } x \in [0,1].$$

Table 5.

Empirical power and type I error of two-sample test using cubic spline. Column headers give the sample sizes and the nominal test level.

| δ(x) | n1 = 160, n2 = 320, level 0.05 | n1 = 160, n2 = 320, level 0.01 | n1 = 80, n2 = 160, level 0.05 | n1 = 80, n2 = 160, level 0.01 | n1 = 40, n2 = 80, level 0.05 | n1 = 40, n2 = 80, level 0.01 |
|---|---|---|---|---|---|---|
| 0.6t | 1.000 | 1.000 | 0.980 | 0.918 | 0.794 | 0.574 |
| 0.7sin(x) | 1.000 | 1.000 | 0.978 | 0.910 | 0.788 | 0.566 |
| 0 | 0.058 | 0.010 | 0.068 | 0.010 | 0.096 | 0.028 |
| Monte Carlo SE | 0.001 | 0.004 | 0.001 | 0.004 | 0.001 | 0.004 |

Table 5 shows the empirical frequencies of rejecting H0 in this simulation study with nominal test level equal to 0.05 and 0.01. If δ(x) ≠ 0, these empirical powers should be close to 1, and for δ(x) ≡ 0, the nominal levels. Each set of simulations consists of 500 Monte Carlo runs. Asymptotic standard errors (as the number of Monte Carlo iterations tends to infinity) are reported in the last row of the table. Results are listed only for cubic spline confidence bands, as those of the linear spline are similar. Overall, the two-sample test performs well, even with a rather small difference (δ(x) = 0.7 sin(x)), providing a reasonable empirical power. Moreover, the differences between nominal levels and empirical type I error do diminish as the sample size increases.

6. Empirical Example

In this section, we revisit the Tecator data mentioned in Section 1, which can be downloaded at http://lib.stat.cmu.edu/datasets/tecator. In this data set, there are measurements on n = 240 meat samples, where for each sample a N = 100 channel near-infrared spectrum of absorbance measurements was recorded, and contents of moisture (water), fat and protein were also obtained. The Feed Analyzer worked in the wavelength range from 850 nm to 1050 nm. Figure 3 shows the scatter plot of this data set. The spectral data can be naturally considered as functional data, and we will perform a two-sample test to see whether absorbance from the spectrum differs significantly due to difference in fat content.

This data set has been used for comparing four classification methods (Li and Yu, 2008) and for building a regression model to predict the fat content from the spectrum (Li and Hsing, 2010b). Following Li and Yu (2008), we separate samples according to whether their fat content is less than 20% or not. The right panel of Figure 3 shows 10 samples from each group. Here, the hypothesis of interest is:

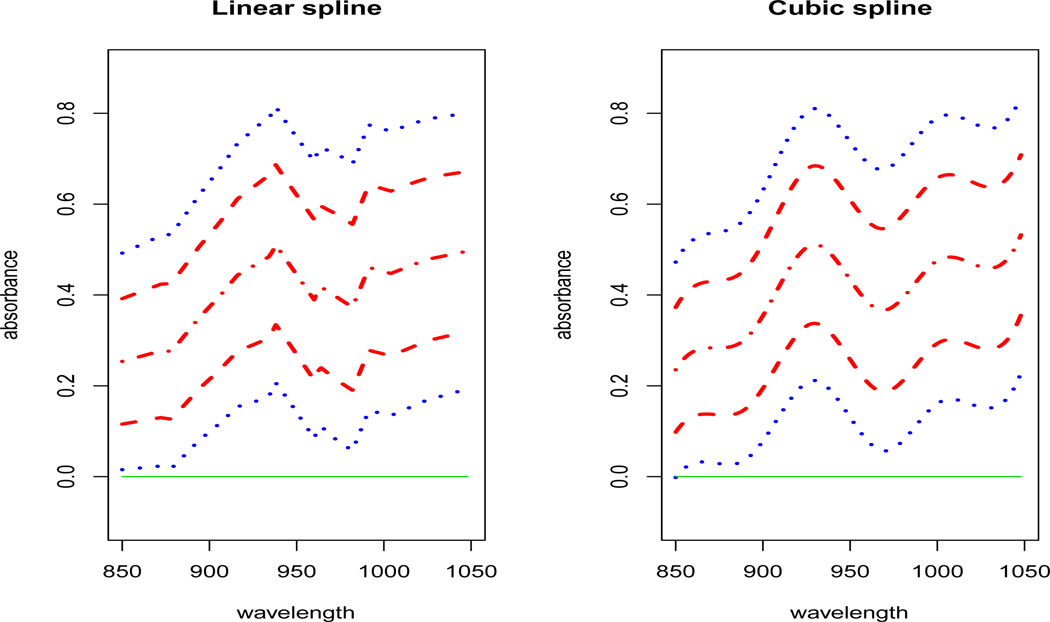

where m1(x) and m2(x) are the regression functions of absorbance on spectrum, for samples with fat content less than 20% and greater than or equal to 20% respectively. Among the 240 samples, there are n1 = 155 with fat content less than 20%, and the remaining n2 = 85 with fat content no less than 20%. The numbers of interior knots in (2) are computed as in Section 4 with c = 0.5 and are N1m = 4 and N2m = 3 for the cubic spline fit and N1m = 8 and N2m = 6 for the linear spline fit. Figure 4 depicts the linear and cubic spline confidence bands according to (15) at confidence levels 0.99 (upper and lower dashed lines) and 0.999995 (upper and lower dotted lines), with the center dashed-dotted line representing the spline estimator m̂1(x) − m̂2(x) and a solid line representing zero. Since even the 99.9995% confidence band does not contain the zero line entirely, the difference between the absorbance of the low fat and high fat populations is extremely significant. In fact, Figure 4 clearly indicates that the lower the fat content, the higher the absorbance.

Figure 4.

Plots of the fitted linear and cubic spline regressions of m1(x)−m2(x) for the Tecator data (dashed-dotted line), 99% confidence bands (15) (upper and lower dashed lines), 99.9995% confidence bands (15) (upper and lower dotted lines) and the zero line (solid line).

Acknowledgment

This work has been supported in part by NSF awards DMS 0706518, 1007594, NCI/NIH K-award, 1K01 CA131259, a Dissertation Continuation Fellowship from Michigan State University, and funding from the Jiangsu Specially-Appointed Professor Program, Jiangsu Province, China. The helpful comments by two referees and the Associate Editor have led to significant improvement of the paper.

APPENDIX

In this appendix, we use C to denote a generic positive constant unless otherwise stated.

A.1. Preliminaries

For any vector ζ = (ζ1, …, ζs) ∈ $R^{s}$, denote the norm $\|\zeta\|_r = (|\zeta_1|^r + \cdots + |\zeta_s|^r)^{1/r}$, 1 ≤ r < +∞, ‖ζ‖∞ = max (|ζ1|, …, |ζs|). For any s × s symmetric matrix A, we define λmin (A) and λmax (A) as its smallest and largest eigenvalues, and its Lr norm as $\|A\|_r = \max_{\zeta\in R^{s},\,\zeta\neq 0}\|A\zeta\|_r\,\|\zeta\|_r^{-1}$. In particular, ‖A‖2 = λmax (A), and if A is also nonsingular, $\|A^{-1}\|_2 = \lambda_{\min}^{-1}(A)$.

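For functions $\phi, \varphi \in L^2[0, 1]$, one denotes the theoretical and empirical inner products as $\langle \phi, \varphi \rangle = \int_0^1 \phi(x)\varphi(x)\,dx$ and $\langle \phi, \varphi \rangle_{2,N} = N^{-1}\sum_{j=1}^{N} \phi(j/N)\varphi(j/N)$. The corresponding norms are $\|\phi\|_2^2 = \langle \phi, \phi \rangle$ and $\|\phi\|_{2,N}^2 = \langle \phi, \phi \rangle_{2,N}$.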

We state a strong approximation result, which is used in the proof of Lemma A.6.

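Lemma A.1: [Theorem 2.6.7 of Csörgő and Révész (1981)] Suppose that $\xi_i$, $1 \le i < \infty$, are iid with $E(\xi_1) = 0$, and $H(x) > 0$ $(x \ge 0)$ is an increasing continuous function such that $x^{-2-\gamma}H(x)$ is increasing for some $\gamma > 0$ and $x^{-1}\log H(x)$ is decreasing with $EH(|\xi_1|) < \infty$. Then there exist constants $C_1, C_2, a > 0$ which depend only on the distribution of $\xi_1$ and a sequence of Brownian motions $\{W_n(t)\}_{n=1}^{\infty}$, such that for any $\{x_n\}_{n=1}^{\infty}$ satisfying $H^{-1}(n) < x_n < C_1(n\log n)^{1/2}$ and $S_t = \sum_{i=1}^{t}\xi_i$,

$$P\left\{\max_{1 \le t \le n}|S_t - W_n(t)| > x_n\right\} \le C_2\, n\,\{H(a x_n)\}^{-1}.$$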

The next lemma is a special case of Theorem 13.4.3, Page 404 of DeVore and Lorentz (1993). Let p be a positive integer. A matrix A = (aij) is said to have bandwidth p if aij = 0 when |i − j| ≥ p, and p is the smallest integer with this property.

Lemma A.2: If a matrix $A$ with bandwidth $p$ has an inverse $A^{-1}$ and $d = \|A\|_2\|A^{-1}\|_2$ is the condition number of $A$, then $\|A^{-1}\|_\infty \le 2c_0(1 - \nu)^{-1}$, with $c_0 = \nu^{-2p}\|A^{-1}\|_2$ and $\nu = \left((d^2 - 1)/(d^2 + 1)\right)^{1/(4p)}$.
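As a numerical illustration of Lemma A.2, the following sketch evaluates both sides of the bound for one small banded matrix. The test matrix (a symmetric tridiagonal Toeplitz matrix with diagonal 4 and off-diagonals 1), its size, and all function names are assumptions chosen for illustration; they do not come from the paper. The decay rate of the lemma, $((d^2-1)/(d^2+1))^{1/(4p)}$, is stored in the variable `nu`. A tridiagonal matrix has bandwidth p = 2 under the definition above, and its eigenvalues are available in closed form, so only the standard library is needed.

```python
import math

def tridiagonal(size, diag, off):
    """Dense symmetric tridiagonal Toeplitz matrix (illustrative test matrix only)."""
    return [[diag if i == j else off if abs(i - j) == 1 else 0.0
             for j in range(size)] for i in range(size)]

def inverse(matrix):
    """Gauss-Jordan inverse with partial pivoting; adequate for small matrices."""
    n = len(matrix)
    aug = [[float(v) for v in row] + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [v / scale for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [v - factor * w for v, w in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]

def sup_norm(matrix):
    """L-infinity operator norm: the largest absolute row sum."""
    return max(sum(abs(v) for v in row) for row in matrix)

size, diag, off = 8, 4.0, 1.0
A = tridiagonal(size, diag, off)

# Closed-form eigenvalues of a symmetric tridiagonal Toeplitz matrix.
eigs = [diag + 2.0 * off * math.cos(k * math.pi / (size + 1))
        for k in range(1, size + 1)]
lam_min, lam_max = min(eigs), max(eigs)

p = 2                                   # a_ij = 0 whenever |i - j| >= 2
d = lam_max / lam_min                   # condition number ||A||_2 ||A^{-1}||_2
nu = ((d ** 2 - 1.0) / (d ** 2 + 1.0)) ** (1.0 / (4 * p))
c0 = nu ** (-2 * p) / lam_min           # ||A^{-1}||_2 = 1 / lambda_min here
bound = 2.0 * c0 / (1.0 - nu)           # right-hand side of Lemma A.2
actual = sup_norm(inverse(A))           # left-hand side of Lemma A.2

print(f"||A^-1||_inf = {actual:.4f}, Lemma A.2 bound = {bound:.4f}")
```

For this well-conditioned example the bound is far from tight; what the appendix uses is only that it depends on A through the bandwidth, the condition number and $\|A^{-1}\|_2$, not through the dimension.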

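One writes $X^TX = N\hat{V}_p$, where the theoretical and empirical inner product matrices of $\{B_{J,p}(x)\}_{J=1-p}^{N_m}$ are denoted as

$$V_p = \left(\int_0^1 B_{J,p}(x)B_{J',p}(x)\,dx\right)_{J,J'=1-p}^{N_m}, \qquad \hat{V}_p = \left(N^{-1}\sum_{j=1}^{N} B_{J,p}(j/N)B_{J',p}(j/N)\right)_{J,J'=1-p}^{N_m}. \tag{A.1}$$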

We establish next that the theoretical inner product matrix Vp defined in (A.1) has an inverse with bounded L∞ norm.

Lemma A.3: For any positive integer $p$, there exists a constant $M_p > 0$ depending only on $p$, such that $\|V_p^{-1}\|_\infty \le M_p h_m^{-1}$, where $h_m = (N_m + 1)^{-1}$.

Proof. According to Lemma A.1 in Wang and Yang (2009b), $V_p$ is invertible since it is a symmetric matrix with all eigenvalues positive, i.e. $c_p h_m \le \lambda_{\min}(V_p) \le \lambda_{\max}(V_p) \le C_p h_m$, where $c_p$ and $C_p$ are positive real numbers. The compact support of the B-spline basis makes $V_p$ of bandwidth $p$, hence one can apply Lemma A.2. Since $d_p = \lambda_{\max}(V_p)/\lambda_{\min}(V_p) \le C_p/c_p$, one has

If p = 1, then $V_p^{-1} = h_m^{-1} I_{N_m+p}$, so the lemma holds with $M_p = 1$. If p > 1, let , then $\|u_{1-p}\|_2 = \|u_0\|_2 = 1$. Also Lemma A.1 in Wang and Yang (2009b) implies that

hence where rp is an absolute constant depending only on p. Thus . Applying Lemma A.2 and putting the above bounds together, one obtains

The lemma is proved.

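For any function $\phi \in C[0, 1]$, denote the vector $\boldsymbol{\phi} = (\phi(1/N), \ldots, \phi(N/N))^T$ and the function

$$\tilde{\phi}(x) = \{B_{1-p,p}(x), \ldots, B_{N_m,p}(x)\}(X^TX)^{-1}X^T\boldsymbol{\phi}.$$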

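Lemma A.4: Under Assumption (A3), for $V_p$ and $\hat{V}_p$ defined in (A.1), $\|V_p - \hat{V}_p\|_\infty = O(N^{-1})$ and $\|\hat{V}_p^{-1}\|_\infty = O(h_m^{-1})$. There exists $c_{\phi,p} \in (0, \infty)$ such that when $n$ is large enough, $\|\tilde{\phi}\|_\infty \le c_{\phi,p}\|\phi\|_\infty$ for any $\phi \in C[0, 1]$. Furthermore, if $\phi \in C^{p-1,\mu}[0, 1]$ for some $\mu \in (0, 1]$, then for $\tilde{C}_{p-1,\mu} = (c_{\phi,p} + 1)C_{p-1,\mu}$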

| (A.2) |

Proof. We first show that $\|V_p - \hat{V}_p\|_\infty = O(N^{-1})$. In the case of p = 1, for any $0 \le J \le N_m$ define $N_J$ as the number of design points $j/N$ in the $J$-th interval $I_J$; then

Clearly max0≤J≤Nm |NJ − Nhm| ≤ 1 and hence

For p > 1, de Boor (2001), Page 96, B-spline property ensures that there exists a constant C1,p > 0 such that

while there exists a constant C2,p > 0 such that max1−p≤J,J′≤Nm NJ,J′≤C2,pNhm where NJ,J′ = #{j : 1 ≤ j ≤ N,BJ,p (j/N)BJ′,p (j/N) > 0}. Hence

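According to Lemma A.3, for any $(N_m + p)$-vector $\gamma$, $\|V_p^{-1}\gamma\|_\infty \le M_p h_m^{-1}\|\gamma\|_\infty$. Hence $\|V_p\gamma\|_\infty \ge M_p^{-1}h_m\|\gamma\|_\infty$. By Assumption (A3), $N^{-1} = o(h_m)$, so if $n$ is large enough, for any $\gamma$, one has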

Hence .

To prove the last statement of the lemma, note that for any x ∈ [0, 1] at most (p + 1) of the numbers B1−p,p (x), …, BNm,p (x) are between 0 and 1, others being 0, so

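in which $I_N = (1, \ldots, 1)^T$. Clearly $|X^T I_N N^{-1}| \le C h_m$ for some $C > 0$, hence $|\tilde{\phi}(x)| \le 2(p + 1)C\|\phi\|_\infty = c_{\phi,p}\|\phi\|_\infty$. Now if $\phi \in C^{p-1,\mu}[0, 1]$ for some $\mu \in (0, 1]$, let $g \in \mathcal{H}^{(p-1)}[0, 1]$ be such that according to Theorem 3.1, then $\tilde{g} \equiv g$ as $g \in \mathcal{H}^{(p-1)}[0, 1]$, hence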

proving (A.2).
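The p = 1 step at the start of this proof can be checked with exact arithmetic. The sketch below counts the design points j/N falling in each of the N_m + 1 equal subintervals of [0, 1] and compares the counts with N h_m. It assumes half-open intervals I_J = [J h_m, (J + 1) h_m) with the right endpoint 1 assigned to the last interval; that convention and the function names are illustrative assumptions. For p = 1 the B-splines are indicators of the I_J, so both inner product matrices are diagonal and the sup-norm gap reduces to max_J |h_m − N_J/N|.

```python
from fractions import Fraction

def interval_counts(N, N_m):
    """Count design points j/N, j = 1..N, in each of the N_m + 1 equal subintervals."""
    counts = [0] * (N_m + 1)
    for j in range(1, N + 1):
        # j/N lies in [J*h_m, (J+1)*h_m) iff J = floor(j*(N_m+1)/N);
        # the endpoint j = N is assigned to the last interval.
        J = min(j * (N_m + 1) // N, N_m)
        counts[J] += 1
    return counts

def max_count_deviation(N, N_m):
    """max_J |N_J - N*h_m|, computed exactly with rational arithmetic."""
    h_m = Fraction(1, N_m + 1)
    return max(abs(c - N * h_m) for c in interval_counts(N, N_m))

def sup_norm_gap_p1(N, N_m):
    """||V_1 - V_1_hat||_inf for p = 1, where V_1 = h_m*I and V_1_hat = diag(N_J/N)."""
    h_m = Fraction(1, N_m + 1)
    return max(abs(h_m - Fraction(c, N)) for c in interval_counts(N, N_m))

for N, N_m in [(100, 4), (97, 6), (200, 8), (1000, 12)]:
    dev = max_count_deviation(N, N_m)
    gap = sup_norm_gap_p1(N, N_m)
    print(N, N_m, float(dev), float(gap), float(gap * N))
```

In each case the count deviation is at most 1 and N times the sup-norm gap is at most 1, which is the O(N^{-1}) rate claimed in Lemma A.4 for p = 1.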

Lemma A.5: Under Assumption (A5), for and n ≥ 1

| (A.3) |

| (A.4) |

where . Also

| (A.5) |

Proof. The proof of (A.4) is trivial. Assumption (A5) entails that F̄n+t,k < C2 (n + t)−γ1, k = 1, …, κ, t = 0, 1, …, ∞, in which . Taking expectation, one has

which proves (A.3) if one divides the above inequalities by n. The fact that $\bar{Z}_{\cdot k,\xi} \sim N(0, 1/n)$ entails that $E|\bar{Z}_{\cdot k,\xi}| = n^{-1/2}(2/\pi)^{1/2}$ and thus $\max_{1 \le k \le \kappa} E|\bar{\xi}_{\cdot k}| \le n^{-1/2}(2/\pi)^{1/2} + C_0 n^{\beta-1}$.
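The half-normal moment used in the last step, E|Z| = σ(2/π)^{1/2} for Z ~ N(0, σ²) with σ² = 1/n, can be confirmed by a small Monte Carlo experiment. The sketch below draws the average directly from N(0, 1/n) rather than averaging n standard normals, which has the same distribution; the sample size n = 25, the number of replications and the seed are arbitrary choices made for illustration.

```python
import math
import random

def mean_abs_of_average(n, replications, seed=0):
    """Monte Carlo estimate of E|Z_bar| for Z_bar ~ N(0, 1/n)."""
    rng = random.Random(seed)           # fixed seed keeps the run reproducible
    sd = 1.0 / math.sqrt(n)
    total = 0.0
    for _ in range(replications):
        total += abs(rng.gauss(0.0, sd))
    return total / replications

n = 25
theory = math.sqrt(2.0 / (math.pi * n))  # n^{-1/2} (2/pi)^{1/2}
estimate = mean_abs_of_average(n, 200_000)
print(f"theory = {theory:.5f}, Monte Carlo = {estimate:.5f}")
```

The two numbers should agree to within Monte Carlo error, whose relative size here is well under one percent.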

Lemma A.6: Assumption (A5) holds under Assumption (A5’).

Proof. Under Assumption (A5’), $E|\xi_{ik}|^{\eta_1} < +\infty$, $\eta_1 > 4$, $E|\varepsilon_{ij}|^{\eta_2} < +\infty$, $\eta_2 > 4 + 2\theta$, so there exists some $\beta \in (0, 1/2)$ such that $\eta_1 > 2/\beta$, $\eta_2 > (2 + \theta)/\beta$.

Now let $H(x) = x^{\eta_1}$; then Lemma A.1 entails that there exist constants $C_{1k}, C_{2k}, a_k$, which depend on the distribution of $\xi_{ik}$, such that for $x_n = n^{\beta}$ there are iid N(0, 1) variables $Z_{ik,\xi}$ such that

Since η1 > 2/β, γ1 = η1β − 1 > 1. If the number κ of k is finite, there are common constants C1,C2 > 0 such that , which entails (3). If κ is infinite but all the ξik's are iid, then C1k, C2k, ak are the same for all k, so the above is again true.

Likewise, under Assumption (A5’), if one lets $H(x) = x^{\eta_2}$, Lemma A.1 entails that there exist constants $C_1, C_2, a$, which depend on the distribution of $\varepsilon_{ij}$, such that for $x_n = n^{\beta}$ there are iid N(0, 1) variables $Z_{ij,\varepsilon}$ such that

Now $\eta_2\beta > 2 + \theta$ implies that there is $\gamma_2 > 1$ such that $\eta_2\beta - 1 > \gamma_2 + \theta$, and (4) follows.
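The arithmetic behind the choice of β in this proof is easy to tabulate. The sketch below, with hypothetical function names and example moment orders that are not taken from the paper, returns the interval of admissible β implied by η1 > 2/β and η2 > (2 + θ)/β together with β < 1/2, and then the resulting exponent γ1 = η1β − 1 and the supremum η2β − 1 − θ of the admissible γ2.

```python
def admissible_beta_range(eta1, eta2, theta):
    """Open interval of beta in (0, 1/2) with eta1 > 2/beta and eta2 > (2 + theta)/beta."""
    if eta1 <= 4 or eta2 <= 4 + 2 * theta:
        raise ValueError("moment conditions eta1 > 4 and eta2 > 4 + 2*theta are required")
    lower = max(2.0 / eta1, (2.0 + theta) / eta2)
    return lower, 0.5

def rate_exponents(eta1, eta2, theta, beta):
    """gamma1 = eta1*beta - 1, and the supremum of admissible gamma2."""
    gamma1 = eta1 * beta - 1.0
    gamma2_sup = eta2 * beta - 1.0 - theta
    return gamma1, gamma2_sup

# Illustrative values only: eta1 = 5 > 4 and eta2 = 7 > 4 + 2*theta with theta = 1.
eta1, eta2, theta = 5.0, 7.0, 1.0
lower, upper = admissible_beta_range(eta1, eta2, theta)
beta = 0.5 * (lower + upper)             # any point of the open interval works
gamma1, gamma2_sup = rate_exponents(eta1, eta2, theta, beta)
print(f"beta in ({lower:.4f}, {upper}), gamma1 = {gamma1:.4f}, gamma2 < {gamma2_sup:.4f}")
```

The moment conditions η1 > 4 and η2 > 4 + 2θ are exactly what makes the lower endpoint smaller than 1/2, so the interval is nonempty, and any β inside it gives γ1 > 1 and leaves room for some γ2 > 1.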

Proof of Proposition 3.2. Applying (A.2), . Since Assumption (A3) implies that , equation (10) is proved.

Proof of Proposition 3.3.

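Denote $\tilde{Z}_{p,\varepsilon}(x) = \{B_{1-p,p}(x), \ldots, B_{N_m,p}(x)\}(X^TX)^{-1}X^T Z$, where $Z = (\sigma(1/N)\bar{Z}_{\cdot 1,\varepsilon}, \ldots, \sigma(N/N)\bar{Z}_{\cdot N,\varepsilon})^T$. By (A.4), one has $\|Z - e\|_\infty = O_{a.s.}(n^{\beta-1})$, while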

Also for any fixed x ∈ [0, 1], one has

Note next that the random vector -dimensional normal with covariance matrix , bounded above by

bounding the tail probabilities of entries of and applying Borel-Cantelli Lemma leads to

Hence, and

Thus (11) holds according to Assumption (A3).

Proof of Proposition 3.4.

We denote ζ̃k(x) = Z̄.k,ξϕk (x), k = 1, …, κ and define

It is clear that $\tilde{\zeta}(x)$ is a Gaussian process with mean 0, variance 1 and covariance $E\tilde{\zeta}(x)\tilde{\zeta}(x') = G(x, x)^{-1/2}G(x', x')^{-1/2}G(x, x')$ for any $x, x' \in [0, 1]$. Thus $\tilde{\zeta}(x)$, $x \in [0, 1]$, has the same distribution as $\zeta(x)$, $x \in [0, 1]$.
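To make the normalization concrete, the sketch below builds a covariance function from a two-term Karhunen-Loève expansion and evaluates the standardized covariance G(x, x)^{-1/2} G(x′, x′)^{-1/2} G(x, x′) on a grid. The eigenvalues (2 and 0.5) and the eigenfunctions (scaled cosine and sine) are hypothetical choices made only for illustration. The check is that the standardized process has unit variance at every x and correlations bounded by 1 in absolute value.

```python
import math

# Hypothetical eigenvalues and orthonormal eigenfunctions on [0, 1] (illustration only).
LAMBDAS = (2.0, 0.5)
PSIS = (
    lambda x: math.sqrt(2.0) * math.cos(math.pi * x),
    lambda x: math.sqrt(2.0) * math.sin(math.pi * x),
)

def G(x, y):
    """Covariance function G(x, y) = sum_k lambda_k * psi_k(x) * psi_k(y)."""
    return sum(lam * psi(x) * psi(y) for lam, psi in zip(LAMBDAS, PSIS))

def correlation(x, y):
    """Standardized covariance G(x,x)^{-1/2} G(y,y)^{-1/2} G(x,y)."""
    return G(x, y) / math.sqrt(G(x, x) * G(y, y))

grid = [i / 20 for i in range(21)]
max_unit_gap = max(abs(correlation(x, x) - 1.0) for x in grid)
max_abs_corr = max(abs(correlation(x, y)) for x in grid for y in grid)
print(f"max |corr(x, x) - 1| = {max_unit_gap:.2e}, max |corr| = {max_abs_corr:.6f}")
```

Both normalizing factors must be evaluated on the diagonal, one at x and one at x′; otherwise the variance at a point would not reduce to 1.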

Using Lemma A.4, one obtains that

| (A.6) |

Applying the above (A.6), (A.5) and Assumptions (A3), (A4), one has

hence

| (A.7) |

In addition, (A.3) and Assumptions (A3), (A4) entail that

hence

| (A.8) |

Note that

hence

according to (A.7) and (A.8), which leads to both (12) and (13).

References

1. Benko M, Härdle W, Kneip A. Common functional principal components. Annals of Statistics. 2009;37:1–34.

2. Bunea F, Ivanescu AE, Wegkamp M. Adaptive inference for the mean of a Gaussian process in functional data. Journal of the Royal Statistical Society, Series B. 2011; forthcoming.

3. Cardot H. Nonparametric estimation of smoothed principal components analysis of sampled noisy functions. Journal of Nonparametric Statistics. 2000;12:503–538.

4. Csörgő M, Révész P. Strong Approximations in Probability and Statistics. New York-London: Academic Press; 1981.

5. Cuevas A, Febrero M, Fraiman R. On the use of the bootstrap for estimating functions with functional data. Computational Statistics and Data Analysis. 2006;51:1063–1074.

6. de Boor C. A Practical Guide to Splines. New York: Springer-Verlag; 2001.

7. Degras DA. Simultaneous confidence bands for nonparametric regression with functional data. Statistica Sinica. 2011;21:1735–1765.

8. DeVore R, Lorentz G. Constructive approximation: polynomials and splines approximation. Berlin: Springer-Verlag; 1993.

9. Fan J, Lin S-K. Tests of significance when data are curves. Journal of the American Statistical Association. 1998;93:1007–1021.

10. Ferraty F, Vieu P. Nonparametric Functional Data Analysis: Theory and Practice. Berlin: Springer Series in Statistics, Springer; 2006.

11. Hall P, Müller HG, Wang JL. Properties of principal component methods for functional and longitudinal data analysis. Annals of Statistics. 2006;34:1493–1517.

12. Huang J, Yang L. Identification of nonlinear additive autoregressive models. Journal of the Royal Statistical Society, Series B. 2004;66:463–477.

13. Li Y, Hsing T. Uniform convergence rates for nonparametric regression and principal component analysis in functional/longitudinal data. Annals of Statistics. 2010a;38:3321–3351.

14. Li Y, Hsing T. Deciding the dimension of effective dimension reduction space for functional and high-dimensional data. Annals of Statistics. 2010b;38:3028–3062.

15. Li B, Yu Q. Classification of functional data: a segmentation approach. Computational Statistics and Data Analysis. 2008;52:4790–4800.

16. Ma S, Yang L, Carroll RJ. A simultaneous confidence band for sparse longitudinal data. Statistica Sinica. 2011; in press. doi: 10.5705/ss.2010.034.

17. Ramsay JO, Silverman BW. Functional Data Analysis. Second Edition. New York: Springer Series in Statistics, Springer; 2005.

18. Rice JA, Wu CO. Nonparametric mixed effects models for unequally sampled noisy curves. Biometrics. 2001;57:253–259. doi: 10.1111/j.0006-341x.2001.00253.x.

19. Wang J, Yang L. Polynomial spline confidence bands for regression curves. Statistica Sinica. 2009a;19:325–342.

20. Wang L, Yang L. Spline estimation of single index model. Statistica Sinica. 2009b;19:765–783.

21. Xue L, Yang L. Additive coefficient modelling via polynomial spline. Statistica Sinica. 2006;16:1423–1446.

22. Yao F, Müller HG, Wang JL. Functional data analysis for sparse longitudinal data. Journal of the American Statistical Association. 2005;100:577–590.

23. Zhao Z, Wu W. Confidence bands in nonparametric time series regression. Annals of Statistics. 2008;36:1854–1878.

24. Zhou S, Shen X, Wolfe DA. Local asymptotics of regression splines and confidence regions. Annals of Statistics. 1998;26:1760–1782.

25. Zhou Z, Wu W. Simultaneous inference of linear models with time varying coefficients. Journal of the Royal Statistical Society, Series B. 2010;72:513–531.