Abstract

Understanding the human capacity to acquire language is an outstanding scientific challenge. Somehow our language capacities arise from the way the human brain processes, develops and learns in interaction with its environment. To set the stage, we begin with a summary of what is known about the neural organization of language and what our artificial grammar learning (AGL) studies have revealed. We then review the Chomsky hierarchy in the context of the theory of computation and formal learning theory. Finally, we outline a neurobiological model of language acquisition and processing based on an adaptive, recurrent, spiking network architecture. This architecture implements an asynchronous, event-driven, parallel system for recursive processing. We conclude that the brain represents grammars (or more precisely, the parser/generator) in its connectivity, and that its ability for syntax is based on neurobiological infrastructure for structured sequence processing. The acquisition of this ability is accounted for in an adaptive dynamical systems framework. Artificial language learning (ALL) paradigms might be used to study the acquisition process within such a framework, as well as the processing properties of the underlying neurobiological infrastructure. However, it is necessary to combine and constrain the interpretation of ALL results by theoretical models and empirical studies on natural language processing. Given that the faculty of language is captured by classical computational models to a significant extent, and that these can be embedded in dynamic network architectures, there is hope that significant progress can be made in understanding the neurobiology of the language faculty.

Keywords: implicit artificial grammar learning, fMRI, repeated transcranial magnetic stimulation, language-related genes, CNTNAP2, spiking neural networks

1. Introduction

Recent years have seen a renewed interest in using artificial grammar learning (AGL) as a window onto the organization of the language system. It has been exploited in cross-species comparisons, but also in studies on the neural architecture for language. Our focus is on the role AGL can play in unravelling the neural basis of human language. For this purpose, its role is relatively limited and mainly restricted to modelling aspects of structured sequence learning and structured sequence processing, uncontaminated by the semantic and phonological sources of information that co-determine the production and comprehension of natural language. Before going into more detail on the neurobiology of syntax and the role of AGL research, we outline what we think are the major conclusions from research on the neurobiology of language:

— The language network is more extensive than the classical language regions (i.e. Broca's and Wernicke's regions). It includes the left inferior frontal gyrus (LIFG), substantial parts of the superior-middle temporal cortex, the inferior parietal cortex and the basal ganglia. Homotopic regions in the right hemisphere are also engaged in language processing [1,2].

— The division of labour between Broca's (frontal cortex) and Wernicke's (temporal cortex) region is not that of production and comprehension [3–6]. The LIFG is at least involved in syntactic and semantic unification during comprehension and the superior-middle temporal cortex is involved in production [7]. Here, unification refers to real-time combinatorial operations (i.e. roughly ŝ = U(s, t), where U is the unification operation, s the current state of the processing memory, t an incoming, retrieved structural primitive (treelet) from the mental lexicon and ŝ the new state of the processing memory (unification space); see [8] for technical details).

— Broca's region plays a central role in what we have labelled unification [8,9]. However, this region's contributions to unification operations are neither syntax- nor language-specific. It plays a role in conceptual unification [10], integration operations in music [11,12] and in integrating language and co-speech gestures [13,14]. The specificity of the contribution of Broca's region in any given context is determined by dynamic connections with posterior (domain-specific) regions as well as other parts of the brain, including sub-cortical regions.

— None of the language-relevant brain regions or neurophysiological effects appear to be language-specific. All language-relevant event-related potential effects (N400, P600, LAN) are also triggered by non-linguistic input (e.g. music, pictures, gestures), and all known language-relevant brain regions seem to be involved in processing other stimulus types as well [1].

— For language, as for other cognitive functions, the function-to-structure mapping as one-area-one-function (as currently conceptualized) is likely to be incorrect. Brain regions typically participate dynamically as nodes in more than one functional network. For instance, the processing of syntactic information depends on dynamic network interactions between Broca's region and the superior-middle temporal cortex, where lexicalized aspects of syntax are stored, while syntactic unification operations are under the control of Broca's region [5,6].
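
To make the notation ŝ = U(s, t) concrete, the toy sketch below treats the state of the processing memory s and an incoming treelet t as feature structures (nested dictionaries) and unifies them, failing on a feature clash. This is an illustrative assumption only, not the unification-space model described in [8]; the feature names (`cat`, `subj`, `agr`, `num`, `per`) and the `unify` helper are hypothetical.

```python
from typing import Any, Optional


def unify(s: Any, t: Any) -> Optional[Any]:
    """Return the unification of two feature structures, or None on a clash.

    Feature structures are nested dicts; leaves are atomic values (strings).
    Neither input is modified.
    """
    if isinstance(s, dict) and isinstance(t, dict):
        result = dict(s)  # shallow copy; nested values are replaced, never mutated
        for feature, t_value in t.items():
            if feature in result:
                merged = unify(result[feature], t_value)
                if merged is None:
                    return None  # incompatible values: unification fails
                result[feature] = merged
            else:
                result[feature] = t_value  # feature only present in t: add it
        return result
    # Atomic values (or a dict versus an atom) unify only if identical
    return s if s == t else None


# Hypothetical state s: a clause with a singular NP subject
state = {"cat": "S", "subj": {"cat": "NP", "agr": {"num": "sg"}}}
# Hypothetical treelet t: a subject NP that also carries person information
treelet = {"subj": {"cat": "NP", "agr": {"num": "sg", "per": "3"}}}

new_state = unify(state, treelet)
print(new_state)
# {'cat': 'S', 'subj': {'cat': 'NP', 'agr': {'num': 'sg', 'per': '3'}}}

clash = unify(state, {"subj": {"agr": {"num": "pl"}}})
print(clash)  # None
```

In this toy version unification is monotonic: the new state only adds information, and incompatible information makes the operation fail rather than overwrite what is already in the processing memory.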

Although language processing combines information at multiple linguistic levels, in the following we focus on syntax. This is somewhat artificial, because syntactic processing never occurs in isolation from the other linguistic levels. Here, we take natural language to be a neurobiological system and, paraphrasing Chomsky [15], two outstanding fundamental questions to be answered are:

— What is the nature of the brain's ability for syntactic processing?

— How does the brain acquire this capacity?

An answer to the first question is that the human brain represents knowledge of syntax in its connectivity (i.e. its parametrized network topology with adaptable characteristics; see §8). This network is closely interwoven with the networks for phonological and semantic/pragmatic processing [3,4,16], all operating in close spatio-temporal contiguity during normal language processing (figure 1). We have therefore used the AGL paradigm as a relatively uncontaminated window onto the neurobiology of structured sequence processing. In this context, we take the view that natural and artificial syntax share a common abstraction—structured sequence processing [19]. AGL was originally implemented to investigate implicit learning mechanisms shared with natural language acquisition [20] and has recently been used in cross-species comparisons to understand the evolutionary origins of language and communication [21–25].

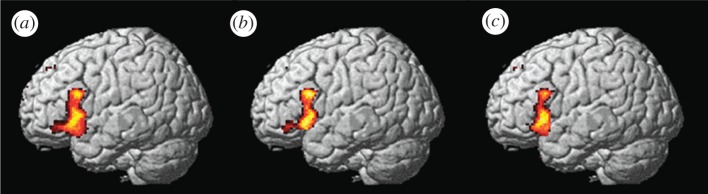

Figure 1.

Left inferior frontal regions related to phonological, syntactic and semantic processing [9]. The spheres are centred on the mean activation coordinate of the natural language fMRI studies reviewed in [17] and the radius indicates the spatial standard deviation. The brain activation displayed is related to artificial syntax processing [18].

The neurobiology of implicit sequence learning, assessed by AGL, has been investigated by means of functional neuroimaging [2,18,26–28], brain stimulation [29–31] and in agrammatic aphasics [32]. Frontostriatal circuits are generally involved [26,33]. The same circuits are also involved in the processing and acquisition of natural language syntax [34]. Moreover, the breakdown of syntax processing in agrammatic aphasia is associated with impairments in AGL [32], and individual variability in implicit sequence learning correlates with language processing [35,36]. Taken together, this supports the idea that AGL taps into implicit sequence learning and processes that are shared with aspects of natural syntax acquisition and processing. However, we stress one caveat relevant to much AGL work. A common assumption in the field is that if participants, after exposure to a grammar, are able to distinguish new grammatical from non-grammatical items, then they have learned some aspects of the underlying grammar. There is sometimes a tendency to assume more, namely that participants process the sequences according to the grammar rules, and strong claims are made about the representation acquired. This need not be the case. The use of a particular grammar does not ensure that subjects have learned and use it, rather than a different and perhaps simpler way of representing the knowledge acquired. Several AGL studies have not sought to determine the minimal machinery needed to account for the observed performance, often leaving open questions about the nature of the acquired knowledge (see [37] for additional remarks).
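
The caveat can be made concrete with a deliberately minimal learner that induces no grammar at all. The hypothetical sketch below stores only the bigrams (adjacent symbol pairs) seen during acquisition and endorses a test item if all of its bigrams are familiar. The acquisition and test strings are toy examples following the a^n b^n pattern, not materials from the studies cited.

```python
def bigrams(seq: str) -> set[str]:
    """Adjacent symbol pairs occurring in a sequence."""
    return {seq[i:i + 2] for i in range(len(seq) - 1)}


def train(acquisition_set: list[str]) -> set[str]:
    """Memorize every bigram seen during acquisition; no grammar is induced."""
    known: set[str] = set()
    for seq in acquisition_set:
        known |= bigrams(seq)
    return known


def endorse(seq: str, known: set[str]) -> bool:
    """Endorse a test item if all of its bigrams were seen during acquisition."""
    return bigrams(seq) <= known


# Hypothetical acquisition items following the a^n b^n pattern
known = train(["ab", "aabb", "aaabbb"])

tests = {
    "aaaabbbb": True,  # grammatical (n = 4), longer than any acquisition item
    "ba": False,       # non-grammatical, contains the novel bigram "ba"
    "abab": False,     # non-grammatical, contains "ba"
    "aab": False,      # non-grammatical, yet every bigram is familiar
}
for item, grammatical in tests.items():
    label = "grammatical" if grammatical else "non-grammatical"
    verdict = "endorsed" if endorse(item, known) else "rejected"
    print(item, label, verdict)
```

This learner classifies three of the four test items correctly without representing a^n b^n at all; only the foil "aab", whose local subsequences are all familiar, exposes it. Whether such minimal machinery suffices therefore depends on how the non-grammatical items are constructed, which is why controlling for local subsequence familiarity matters.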

2. Multiple regular and non-regular dependencies

AGL is typically used to investigate implicit learning [20,38]. However, during the last decade, it has also been used in explicit procedures in which, for instance, participants are instructed to figure out the underlying rules while they receive performance feedback. The implicit version is closer to the conditions under which natural language acquisition takes place ([39], pp. 275–276) [40], and we therefore focus on studies of implicit AGL. The implicit AGL paradigm is based on the structural mere exposure effect and provides a tool to investigate aspects of structural acquisition from exposure to grammatical examples without any type of feedback, teaching instruction or engagement of subjects in explicit problem-solving [41,42]. Generally, AGL paradigms consist of acquisition and classification phases. During acquisition, participants are exposed to a sample generated from a formal grammar. In the standard AGL version [20,38], subjects are informed after acquisition that the sequences were generated according to a complex set of rules and are asked to classify novel items as grammatical or not (grammaticality instruction), based on their immediate impression (guessing based on gut feeling). A well-replicated AGL finding is that subjects perform well above chance after several days of implicit acquisition; they do so on both regular [41,42] and non-regular grammars [43,44].

An alternative way to assess implicit acquisition, structural mere exposure AGL, is to ask the participants to make like/not-like judgements (preference instruction). In this case, it is not necessary to inform them about the presence of a complex rule system before classification, so the classification test can be repeated [41,42]. Moreover, from the subject's point of view, there is no correct or incorrect response, and the motivation to use explicit (problem-solving) strategies is minimized. This version is based on the finding that repeated exposure to a stimulus induces an increased preference for that stimulus compared with novel stimuli [45]. We investigated both grammaticality and preference classification after 5 days of implicit acquisition on sequences generated from a simple right-linear unification grammar [2,41]. The participants performed well above chance on both preference and grammaticality classification. In a follow-up study [43,44], we investigated the acquisition of multiple nested (context-free type) and crossed (context-sensitive type) non-adjacent dependencies in an implicit learning paradigm over nine days, while controlling for local subsequence familiarity. This provided enough time for both abstraction and knowledge consolidation processes to take place. It has recently been suggested that abstraction and consolidation depend on sleep [46], consistent with results showing that naps promote abstraction processes after artificial language learning (ALL) in infants [47].

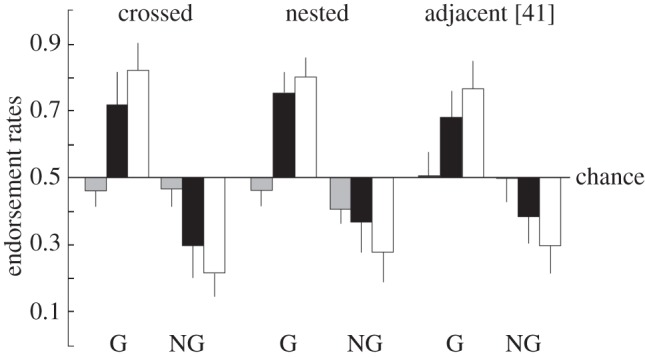

In one experiment [43], we employed a between-subject design to compare the implicit acquisition of context-sensitive, crossed dependencies (e.g. A1A2A3B1B2B3) and the more commonly studied context-free, nested dependencies (e.g. A1A2A3B3B2B1). The results showed robust performance for both types of dependencies, equivalent to the levels observed with regular grammars. Similar findings were reported in [44] (figure 2), which demonstrate the feasibility of acquiring multiple non-adjacent dependencies in implicit AGL without performance feedback. Taken together with additional results on implicit AGL [41,42], we concluded that the acquisition of non-adjacent dependencies showed a quantitative, but little qualitative, difference compared with the acquisition of adjacent dependencies: non-adjacent dependencies took some days longer to acquire [44]. These findings show that humans implicitly acquire knowledge of aspects of the structured regularities captured by complex rule systems through mere exposure. Moreover, the results show that, given enough exposure and time, participants show robust implicit learning of multiple non-adjacent dependencies. However, these results do not answer the question of to what degree AGL recruits the same neural machinery as natural language syntax. For this, we have to turn to neuroimaging and brain stimulation methods, including functional magnetic resonance imaging (fMRI) and transcranial magnetic stimulation (TMS).
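
The two dependency types differ only in the order in which the B elements are matched to the A elements. The sketch below builds both orders and checks whether any two dependency arcs cross; the token names (A1, B1 and so on) and all function names are illustrative.

```python
def dependency_sequence(n: int, crossed: bool) -> list[str]:
    """Build A1..An followed by the matching B elements.

    crossed=True  -> A1 A2 A3 B1 B2 B3 (crossed, context-sensitive type)
    crossed=False -> A1 A2 A3 B3 B2 B1 (nested, context-free type)
    """
    a_part = [f"A{i}" for i in range(1, n + 1)]
    b_order = range(1, n + 1) if crossed else range(n, 0, -1)
    return a_part + [f"B{i}" for i in b_order]


def dependency_arcs(seq: list[str]) -> list[tuple[int, int]]:
    """Pair each Ai with its Bi as (position of Ai, position of Bi)."""
    pos = {tok: k for k, tok in enumerate(seq)}
    return [(pos[f"A{i}"], pos[f"B{i}"]) for i in range(1, len(seq) // 2 + 1)]


def arcs_cross(arcs: list[tuple[int, int]]) -> bool:
    """True if some pair of arcs (i, j), (k, l) satisfies i < k < j < l."""
    return any(i < k < j < l for (i, j) in arcs for (k, l) in arcs)


for crossed in (True, False):
    seq = dependency_sequence(3, crossed)
    print(" ".join(seq), "| arcs cross:", arcs_cross(dependency_arcs(seq)))
# A1 A2 A3 B1 B2 B3 | arcs cross: True
# A1 A2 A3 B3 B2 B1 | arcs cross: False
```

In the nested order, the most recently opened A is always the next one to be closed, the last-in-first-out discipline of a push-down stack; in the crossed order, the first A opened is the first one closed, a first-in-first-out discipline.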

Figure 2.

Classification performance in endorsement rates. Black bars, preference classification, which was also used in the baseline test (grey bars). White bars, grammaticality classification. Error bars indicate standard deviations [41,44].

3. Functional MRI findings

In a recent fMRI study [2], we investigated a simple right-linear unification grammar in an implicit AGL paradigm. In addition, natural language data from a sentence comprehension experiment had been acquired in the same subjects in a factorial design with the factors syntax and semantics (for details see [2,48]). The main results of this study replicate previous findings on implicit AGL [18,26]. Moreover, in contrast to claims that Broca's region is specifically related to syntactic movement in the context of language processing [49–51] or to the processing of nested dependencies [27,28,52], we found left Brodmann areas (BA) 44 and 45 to be active during the processing of well-formed sequences generated by a simple right-linear unification grammar.

Furthermore, Broca's region was engaged to a greater extent for syntactic anomalies and these effects were essentially identical when masked (i.e. the spatial intersection) with activity related to natural syntax processing in the same subjects (figure 3). The results are highly consistent with functional localization of natural language syntax in the LIFG (figure 1) [9,17]. These, and other findings, suggest that the left inferior frontal cortex is a structured sequence processor that unifies information from various sources in an incremental and recursive manner, independent of whether there are requirements for syntactic movement operations or for nested non-adjacent dependency processing [2].

Figure 3.

Brain regions engaged during correct preference classification in an implicit AGL paradigm. Preference classification after 5 days of implicit acquisition on sequences generated by a right-linear unification grammar: (a) main effect non-grammatical versus grammatical sequences in Broca's region BA 44 and 45; (b) when masked (spatial intersection) with the same main effect from grammaticality classification [2]; and (c) masked with the natural language syntax related variability observed [48] in the same subjects. Reproduced with permission from [53].

4. Transcranial magnetic stimulation findings

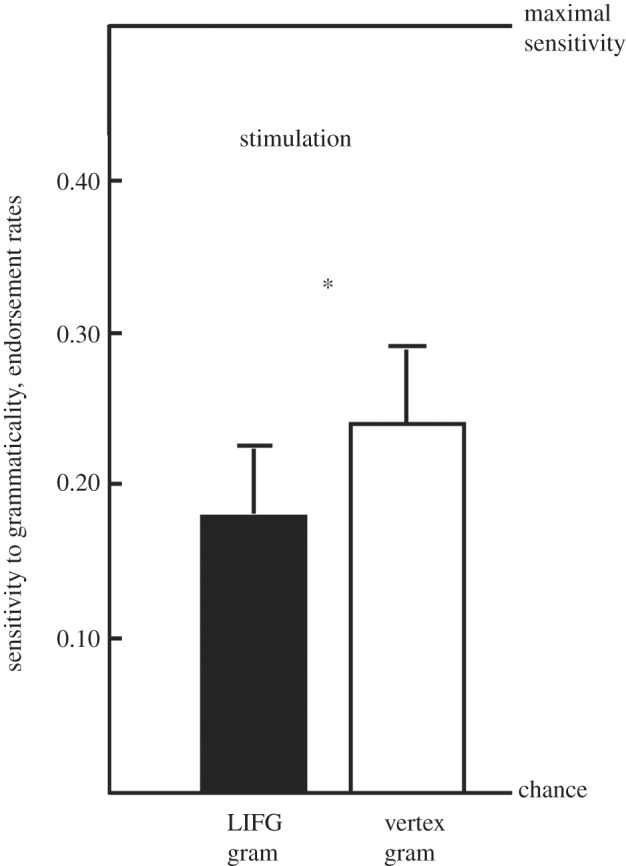

Given that fMRI findings are correlational, one way to test whether Broca's region (BA 44/45) is causally related to artificial syntax processing is to test whether repeated TMS (rTMS) applied to Broca's region modulates classification performance. This approach has been used to investigate natural language processing (for a review, see [29]). Previous results show that Broca's region is causally involved in processing sequences generated from a simple right-linear unification grammar [29]. A recent follow-up [31] showed that after participants had implicitly acquired aspects of a crossed dependency structure (multiple non-adjacent dependencies of a context-sensitive type similar to those described in §2), rTMS applied to Broca's region interfered with subsequent classification (figure 4). Together, these results suggest that Broca's region is causally involved in processing both adjacent and non-adjacent dependencies.

Figure 4.

The difference in endorsement rates between grammatical and non-grammatical items with rTMS applied to the left inferior frontal gyrus (LIFG) or vertex. *rTMS to Broca's region (BA 44/45) leads to significantly impaired classification performance compared with control stimulation at vertex. The zero level on the y-axis = chance performance.

5. Genetic findings

A recent implicit AGL study [53] explored the potential role of the CNTNAP2 gene in artificial syntax acquisition/processing at the behavioural and brain levels. CNTNAP2 codes for a neural trans-membrane protein [54] and is downregulated by FOXP2, a gene that codes for a transcription factor [55]. Transcription factors and their genes make up complex gene regulatory networks, which control many complex biological processes, including ontogenetic development [56–58]. The expression of CNTNAP2 is relatively increased in developing human fronto-temporal-subcortical networks [59]. In particular, CNTNAP2 expression in humans is enriched in frontal brain regions, in contrast to mice or rats [60], and has been linked to specific language impairment [55]. A recent study investigated the effects of a common single nucleotide polymorphism (SNP) RS7794745 in CNTNAP2 (the same as investigated in [53]) on the brain response during language comprehension [61]. This study found both structural and functional brain differences in language comprehension related to the same SNP sub-grouping used in [53].

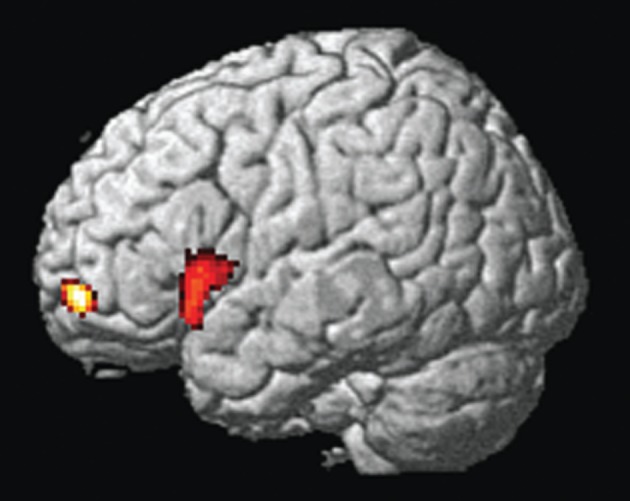

The behavioural findings showed that the T group (AT and TT carriers) was sensitive to the grammaticality of the sequences independent of local subsequence familiarity. This might suggest that individuals with this genotype acquire structural knowledge more rapidly, use the acquired knowledge more effectively or are better at ignoring cues related to local subsequence familiarity than the non-T group (AA carriers). In parallel with these findings, significantly greater activation was observed in Broca's region (BA 44/45), as well as in the left frontopolar region (BA 10), in the non-T group compared with the T group (figure 5). Assuming that the structured sequence learning mechanism investigated by AGL is shared between artificial and natural syntax acquisition, these results suggest that the FOXP2–CNTNAP2 pathway might be related to the development of the neural infrastructure relevant for the acquisition of structured sequence knowledge.

Figure 5.

Brain regions differentiating the T and the non-T groups. Group differences related to grammaticality classification (non-T > T). Reproduced with permission from [53].

In summary, a considerable amount of knowledge has accumulated concerning the neurobiological infrastructure for implicit AGL, and firm evidence shows that the processing of artificial and natural language syntax overlaps largely in Broca's region (BA 44/45). This lends credence to the claim that some aspects of natural language processing and its neurobiological basis can be fruitfully investigated with the help of well-designed artificial language paradigms. Before sketching a neurobiological framework for situating and interpreting results such as those reviewed here, we briefly review and comment on the Chomsky hierarchy, recursion and the competence–performance distinction, to make explicit the connection between neurobiologically inspired dynamical systems and models of language formulated within the classical Turing framework of computation.

6. Recursion, competence grammars and performance models

In this and the following sections, we make explicit that the (extended) Chomsky hierarchy attains its meaning in the context of infinite memory resources. However, any physically realizable, classical computational system is finite with respect to its memory organization. Following Chomsky [62], we call these machines strictly finite¹ (i.e. finite automata or finite-state machines, FSMs). Chomsky states that ‘performance, must necessarily be strictly finite’ ([62], pp. 331–333) and argues (p. 390) that the ‘performance of the speaker or hearer must be representable by a finite automaton of some sort. The speaker–hearer has only a finite memory, a part of which he uses to store the rules of his grammar (a set of rules for a device with unbounded memory), and a part of which he uses for computation…’. We explain this apparent contradiction, between rules for a device with unbounded memory and a strictly finite performance system, in this section. We argue that important issues in the neurobiology of syntax, and of language more generally, are related to the nature of the neural code (i.e. the character of neural representation), the properties of processing memory and finite precision (noisy) neural computation. We suggest that (bounded) recursive processing is a broader phenomenon, not restricted to the language system, and conclude that one central, not yet well-understood, issue in neurobiology is the brain's capacity to process bounded patterns of non-adjacent dependencies.

A grammar G is, roughly, a finite set of rules that specifies how items in a lexicon (alphabet) are combined into well-formed sequences, thus generating a formal language L(G) [39,62–64]. The sequence set L(G) is called G's weak generative capacity, and two grammars G1 and G2 are weakly equivalent if L(G1) = L(G2). To take an example much discussed recently in the AGL literature, the Chomsky hierarchy distinguishes between the regular language L(G1) = {(ab)^n | n a positive natural number} and the context-free language L(G2) = {a^n b^n | n a positive natural number}. These are generated by, for example, the grammars G1 = {S → aB, B → bA, A → aB, B → b} and G2 = {S → aB, B → Ab, A → aB, B → b}. We note two properties, to which we will return in the following: (i) there is little complexity difference between the competence grammars G1 and G2 (they contain the same number of rules, terminal and non-terminal symbols) and (ii) the regular language L(G1) can be described by a grammar G1 that recursively generates hierarchical phrase-structure trees (in this case right-branching); thus neither the concept of recursion nor that of hierarchical structure distinguishes between regular and supra-regular languages (nor does the concept of non-adjacent or long-distance dependencies [65]).
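
The claims about G1 and G2 can be checked by brute-force enumeration. In the sketch below, each grammar is encoded as a Python dict mapping a non-terminal (upper-case letter) to its right-hand sides, an encoding chosen for illustration, and sentential forms are expanded leftmost-first up to a length bound. The two rule sets differ only in one rule (B → bA versus B → Ab), yet they yield (ab)^n and a^n b^n, respectively.

```python
from collections import deque

# Right-hand sides as strings; upper-case letters are non-terminals.
G1 = {"S": ["aB"], "B": ["bA", "b"], "A": ["aB"]}  # regular: (ab)^n
G2 = {"S": ["aB"], "B": ["Ab", "b"], "A": ["aB"]}  # context-free: a^n b^n


def language(grammar: dict[str, list[str]], max_len: int) -> set[str]:
    """All terminal strings of length <= max_len derivable from S."""
    results: set[str] = set()
    queue = deque(["S"])
    seen = {"S"}
    while queue:
        form = queue.popleft()
        # Locate the leftmost non-terminal, if there is one
        idx = next((i for i, c in enumerate(form) if c.isupper()), None)
        if idx is None:
            results.add(form)  # only terminals left: a sentence of L(G)
            continue
        for rhs in grammar[form[idx]]:
            new_form = form[:idx] + rhs + form[idx + 1:]
            # No rule shortens a sentential form, so longer forms can be pruned
            if len(new_form) <= max_len and new_form not in seen:
                seen.add(new_form)
                queue.append(new_form)
    return results


print(sorted(language(G1, 8), key=len))  # ['ab', 'abab', 'ababab', 'abababab']
print(sorted(language(G2, 8), key=len))  # ['ab', 'aabb', 'aaabbb', 'aaaabbbb']
```

Both grammars have four rules over the same terminals and non-terminals; the difference in language class comes from where the recursive non-terminal sits in a single rule, not from any difference in the size of the rule system.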

In the context of natural language grammars, it is important that G generates (at least) one structural description for each sequence in L(G) (e.g. labelled trees or phrase-structure markers; so-called strong generative capacity). A structural description typically represents ‘who-did-what-to-whom, when, how, and why’ relationships between words (lexical items) in a sentence, and these relationships are important to compute in order to interpret the sentence. Thus, the structural descriptions capture that part of sentence-level meaning that is represented in syntax. This information is partly encoded (decoded) in the corresponding word sequence during production (comprehension) with the help of procedures that incorporate, implicitly or explicitly, the knowledge of the underlying grammar. Two grammars G1 and G2 are strongly equivalent if their sets of generated structural descriptions are equal, SD(G1) = SD(G2). Many classes of grammars are described in the literature (see [63] for a review of some normal forms and the (extended) Chomsky hierarchy; grammar/language formalisms are however not restricted to these, [64,66,67]). Some important types of grammars generate classes of sequence (or string) sets that can be placed in a class hierarchy, the (extended) Chomsky hierarchy.
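
Weak and strong equivalence can be separated with a small example. The language (ab)^n is generated not only by the right-branching G1 but also by a left-branching grammar, here called G3 = {S → Ab, A → a, A → Sa} (a hypothetical grammar introduced only for this illustration). The sketch builds each grammar's unique bracketed structural description for (ab)^n and reads off its terminal yield: the yields coincide, so the grammars are weakly equivalent, but the trees differ, so they are not strongly equivalent.

```python
def g1_tree(n: int) -> str:
    """Right-branching description of (ab)^n under G1 = {S→aB, B→bA, A→aB, B→b}."""
    def b_node(k: int) -> str:
        if k == 1:
            return "[B b]"                       # B → b
        return f"[B b [A a {b_node(k - 1)}]]"    # B → bA, then A → aB
    return f"[S a {b_node(n)}]"                  # S → aB


def g3_tree(n: int) -> str:
    """Left-branching description of (ab)^n under hypothetical G3 = {S→Ab, A→a, A→Sa}."""
    def a_node(k: int) -> str:
        if k == 1:
            return "[A a]"                       # A → a
        return f"[A {g3_tree(k - 1)} a]"         # A → Sa
    return f"[S {a_node(n)} b]"                  # S → Ab


def terminal_yield(tree: str) -> str:
    """Read the terminal string off a bracketed tree (terminals are lower case)."""
    tokens = tree.replace("[", " ").replace("]", " ").split()
    return "".join(t for t in tokens if t.islower())


for n in (1, 2):
    t1, t3 = g1_tree(n), g3_tree(n)
    print(terminal_yield(t1), terminal_yield(t3), "same tree:", t1 == t3)
    print("  G1:", t1)
    print("  G3:", t3)
# ab ab same tree: False
#   G1: [S a [B b]]
#   G3: [S [A a] b]
# abab abab same tree: False
#   G1: [S a [B b [A a [B b]]]]
#   G3: [S [A [S [A a] b] a] b]
```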

From a neurobiological point of view (i.e. with a focus on neural processing), it is natural to reformulate the Chomsky hierarchy in terms of equivalent algorithms, or more precisely, computational machine classes [62,64,68], because a central goal is to identify the neurobiological mechanisms that map between ‘meaning and sound’ (generators/transducers/parsers). In these terms, the Chomsky hierarchy corresponds to: finite-state (T3) ⊂ push-down stack (T2) ⊂ linearly bounded (T1) ⊂ unbounded Turing machines (T0; where ⊂ means strict inclusion). Thus, in terms of the theory of computation, the Chomsky hierarchy is a memory hierarchy that specifies the necessary (approx. minimal) memory resources required to process sequences of a formal language from a given class of the hierarchy, typically in a recognition paradigm. It is not a complexity hierarchy for the computational mechanism (approx. algorithm or processing logic) involved; these are all FSMs² ([62], see also [69–74]). However, the distinctions made by the hierarchy in terms of minimal memory requirements, in particular the infinite memory requirements, are of unclear status from a neurobiological implementation point of view. For instance, Miller & Chomsky ([75], p. 472) state that ‘obviously, (finite memory) is beyond question’ (see also [62], pp. 331–333). If memory is finite, all levels in the hierarchy are special cases of the class of Turing machines with finite memory (i.e. strictly finite machines, SFMs). In order to abstract away from the finite memory limitation of real systems, Chomsky [39,62,75] introduced the competence–performance distinction. A competence grammar [76,77] is ‘a device that enumerates […] an infinite class of sentences with structural descriptions’ ([62], device A in fig. 1, pp. 329–330). The competence grammar is taken to be distinct from both the language acquisition and processing (i.e. performance) systems ([62], devices C and B, respectively, in fig. 1, pp. 329–330).
However, Chomsky also suggested that ‘any interesting realization of B [a performance system] that is not completely ad hoc will incorporate A [a competence grammar] as a fundamental component’. Examples include Turing machines with finite tapes and register machines with a finite number of bounded registers.³ In both cases, one can view the finite-state controller (i.e. the processing logic or computational mechanism) as representing the knowledge of a competence grammar with an unbounded recursive potential, a potential that cannot be fully expressed or realized because of memory limitations. Chomsky [62] argued that if hardware constraints are disregarded, then the system can be understood as instantiating the equivalent of a competence grammar. A consequence of focusing on competence grammars is that the Chomsky hierarchy retains its meaning, and this allows, among other things, the theoretical investigation of asymptotic properties of finite rule systems.
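
The memory reading of the hierarchy can be illustrated with recognizers for the two languages L(G1) and L(G2) introduced above. In the minimal sketch below (function and state names are illustrative), both recognizers run a small, fixed control procedure; what differs is the auxiliary memory. The (ab)^n recognizer needs only three control states, whereas the a^n b^n recognizer needs a push-down stack whose size grows with n.

```python
def accepts_ab_n(seq: str) -> bool:
    """Finite-state recognizer for (ab)^n, n >= 1: three states, no extra memory."""
    state = "expect_a"
    for symbol in seq:
        if state == "expect_a" and symbol == "a":
            state = "expect_b"
        elif state == "expect_b" and symbol == "b":
            state = "accept"         # a complete 'ab' block has been read
        elif state == "accept" and symbol == "a":
            state = "expect_b"
        else:
            return False             # no transition defined: reject
    return state == "accept"


def accepts_a_n_b_n(seq: str) -> bool:
    """Push-down recognizer for a^n b^n, n >= 1: push on 'a', pop on 'b'."""
    stack: list[str] = []
    seen_b = False
    for symbol in seq:
        if symbol == "a" and not seen_b:
            stack.append("a")        # memory grows with the number of a's
        elif symbol == "b" and stack:
            seen_b = True
            stack.pop()              # each b cancels one stored a
        else:
            return False             # a after b, unmatched b, or foreign symbol
    return seen_b and not stack


for s in ["abab", "aabb", "aab"]:
    print(s, accepts_ab_n(s), accepts_a_n_b_n(s))
# abab True False
# aabb False True
# aab False False
```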

Formal ideas of hierarchy and recursion, intrinsic to cognition, have been present (at least) since the formalization of these concepts in computational terms [70–72]. Unbounded recursion [78] achieves discrete infinity [62,76]; or in contemporary terms, ‘since merge can apply to its own output, without limit, it generates endlessly many discrete, structured expressions, where ‘generates’ is used in its mathematical sense, as part of an idealization that abstracts away from certain performance limitations of actual biological systems’ ([79], p. 1218). Obviously, infinite recursive capacity is not realizable ([62], pp. 329–333, 390). This is illustrated by empirical results showing that sentences with more than two centre embeddings are read with the same intonation as a list of random words [80], cannot easily be memorized [81,82], are difficult to paraphrase [83,84] and to comprehend [85–88], and are sometimes paradoxically judged ungrammatical [89]. Arguably, over-generation is one consequence of models that support unbounded recursion, a property not shared by the underlying object, the neurobiological faculty of language [90]. Whether this is a problem depends on perspective. The best that can be hoped for is that classical models are in some sense abstractions (or, more realistically, approximations) of the underlying neurobiology.

Another natural view of the competence–performance distinction is simply to consider bounded versions of the memory architectures entailed by, for example, the Chomsky hierarchy (or any other classical computational models). Nothing essential is lost from a neurobiological implementation point of view, and this shift in perspective makes explicit the role of processing memory in computation. To take one example, the unbounded push-down stack (last-in-first-out memory) naturally corresponds to the class of context-free grammars. It is conceivable that neural infrastructure can support, and make use of, bounded stacks during language processing, as suggested by Levelt ([66], vol. III, Psycholinguistic applications) as one possibility.⁴ The point here is that computation is intimately dependent on processing memory. Moreover, the computational capacities of SFMs do not have to be described by a regular (e.g. language/expression) formalism.⁵ Nevertheless, to the extent that classical models are relevant (in the final analysis), SFMs can represent and express all (bounded) relations and recursive types that are relevant from an empirical as well as a theoretical point of view (see ch. 3, Machines with memory, in [91]). However, if one disregards memory bounds, then any SFM can be captured by a finite rule system and investigated as a competence grammar.
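
A bounded stack makes the point concrete. If the stack of an a^n b^n recognizer may hold at most k symbols (k is an illustrative parameter), the recognizer's complete configuration is a pair (phase, stack height) drawn from a finite set, so the device is a strictly finite machine whose transition table can be written out in advance. The sketch below builds that table explicitly and runs it; it accepts a^n b^n exactly for 1 ≤ n ≤ k, a finite subset of L(G2). All names are hypothetical.

```python
State = tuple[str, int]               # (phase, stack height)
Table = dict[State, dict[str, State]]


def bounded_stack_fsm(k: int) -> Table:
    """Explicit transition table for an a^n b^n recognizer with stack depth <= k.

    The table has exactly 2 * (k + 1) entries, fixed before any input is read.
    """
    table: Table = {}
    for h in range(k + 1):
        push_moves: dict[str, State] = {}
        if h < k:
            push_moves["a"] = ("push", h + 1)  # store one more a, within the bound
        if h > 0:
            push_moves["b"] = ("pop", h - 1)   # first b: switch to popping
        table[("push", h)] = push_moves
        table[("pop", h)] = {"b": ("pop", h - 1)} if h > 0 else {}
    return table


def run(table: Table, seq: str) -> bool:
    """Run the finite-state table; accept if all a's have been matched by b's."""
    state: State = ("push", 0)
    for symbol in seq:
        if symbol not in table[state]:
            return False                       # undefined transition: reject
        state = table[state][symbol]
    return state == ("pop", 0)


fsm = bounded_stack_fsm(k=3)
print(len(fsm))                                # 8
print([run(fsm, "a" * n + "b" * n) for n in range(1, 6)])
# [True, True, True, False, False]
```

Raising k enlarges the table without changing the procedure that generates it; disregarding the bound altogether recovers the push-down recognizer for the full language, which is the sense in which a bounded device can be investigated as a competence grammar.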

The properties of memory used during processing are of central importance from a neurobiological perspective. More fundamentally, two factors enter into the notion of computation: (i) processing logic (algorithm) and (ii) processing memory. There can be little interesting (recursive) processing without either of these factors; processing logic and memory are tightly integrated in computation, both in classical ([91], pp. 110–115) and non-classical models (§8). However, the algorithm equivalent to the finite-state controller is of interest and captures the essential aspect of the competence notion. In this context, certain aspects of computational complexity theory might be more useful than the Chomsky hierarchy itself [68,78,91–93], in particular the standard complexity metrics, which are closely related to processing complexity (roughly, the memory use during computation and the time of computation). There are often interesting and complex trade-offs between processing time and memory use in computational tasks, and understanding these might be of importance to neurobiology.

Neurobiological short- and long-term memory is an integral part of neural computation and, given the co-localization of memory and processing in neural infrastructure (§8), it is natural to expect that the characteristics of processing memory will be central to: (i) a characterization of neural computation in general, including the computations supporting natural language processing; (ii) a realistic neural model of the language faculty; and (iii) natural bounds on, and explanations for, human processing limitations (see [94] for an illustration in a spiking network model). What is relevant from a neurobiological perspective are the representational properties of language models (roughly, their capacity to generate internal interpretations) and their capacity to capture neurobiological realities. These issues are orthogonal to issues related to unbounded recursion and memory (which are of little, if any, consequence [65]). Instead, more realistic neural models will shed light on, and explain, errors and other types of breakdown in human performance.

It follows from the reasoning above that we are free to choose a formal framework to work with, as long as it serves its purpose.⁶ Ultimately, it is the object of study that will determine what is visible in any given formalism. This flexibility is useful when addressing the inner workings of syntax, or language, from a neurobiological point of view. Central issues in the neurobiology of syntax, and of language more generally, are related to the nature of the neural code (i.e. the character of representation), the character of human processing memory and finite precision (noisy) neural computation [95,96] (see §8). Finally, we note that recurrent connectivity is a generic brain feature [97]. Therefore, it seems that (bounded) recursive processing is a latent (i.e. not necessarily realized) capacity in almost any neurobiological system, and it would be surprising indeed if it turned out to be unique to the neurobiological faculty of language (see [37], pp. 591–599, for several examples of recursive domains outside language).

7. (Non-)learnability

Results in formal learning theory [98] provide additional reasons to examine the relevance of the Chomsky hierarchy in the context of language acquisition and AGL. For instance, if the class of grammars representable by the brain, M, or the learnable subset, N ⊆ M, is finite, then there is little fundamental connection between these and the Chomsky hierarchy (the classes of which are infinite). Theoretical learnability results are in general negative [99,100]. For example, none of the language classes of the Chomsky hierarchy is learnable in the sense of Gold [101], that is, learnable in finite time from a representative sample of grammatical (positive) examples without performance feedback.7 The same result holds for several other notions of learnability, including notions of statistical approximation [40,98–100,102]. Moreover, only the class of (deterministic) FSMs is tractably learnable (see ch. 8 in [40], which also reviews the role of computational complexity in learnability). This suggests that the distinctions made by the Chomsky hierarchy might not be natural from a learning perspective, whether in AGL or in natural language acquisition. With respect to natural language acquisition, a dominant theoretical position, the principles and parameters model [100,103,104], proposes on the basis of poverty-of-stimulus arguments [40,79,105,106] that natural language grammars are acquired only in a very restricted sense, within a finite model-space defined by principles and learnable (bounded discrete) parameters.

If it is assumed that the brain has at its disposal a fixed number of formats for representing grammars (or alternative computational devices), and assuming a finite storage capacity, then it follows that there is a finite upper bound, m, on the description length of representable grammars.8 The set of representable grammars, M_m, is then finite, and so is the set of learnable grammars, N_m ⊆ M_m. The finiteness of M_m renders the full set M_m learnable in the sense of Gold, as well as in several other learning paradigms [40,98,100]. It is the finite number of grammars representable by the brain that is critical here (see [19] for an argument based on analogue systems that leads to the same conclusion). The point of these remarks is that the class of grammars representable by the human brain, M, or the learnable subset N, might have little fundamental connection to the Chomsky hierarchy, as would be the case if M or N is finite. On independent grounds, based on considerations of the evolutionary origins of the language faculty, Jackendoff argues ([37], p. 616) that ‘what is called for is a hierarchy (or lattice) of grammars—not the familiar Chomsky hierarchy, which involves un-interpreted formal languages, but rather a hierarchy of formal systems that map between sound and meaning’. Finally, Clark & Lappin ([40], p. 94) emphasize that ‘the traditional classes of the Chomsky hierarchy are defined with reference to simple machine models, but we have no grounds for thinking that the human brain operates with these particular models. It is reasonable to expect that a deeper understanding of the nature of neural computation will yield new computational paradigms and corresponding classes of languages’.
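To illustrate why finiteness matters for Gold-style learnability, the following is a minimal sketch of identification in the limit for a finite class of grammars. It assumes, for illustration only, that each candidate grammar in the finite class (called `M_m` here) is represented extensionally by the finite set of strings it generates up to some length bound, and that the candidates denote distinct languages; the function names are hypothetical. The learner sees only positive examples and always conjectures a consistent hypothesis that is minimal with respect to set inclusion.

```python
from typing import FrozenSet, Iterable, List, Optional, Set

Language = FrozenSet[str]  # hypothetical extensional stand-in for a grammar


def conjecture(hypotheses: List[Language], data: Set[str]) -> Optional[int]:
    """Index of the first consistent hypothesis that has no consistent proper subset."""
    consistent = [k for k, lang in enumerate(hypotheses) if data <= lang]
    for k in consistent:
        # Choosing an inclusion-minimal hypothesis avoids over-generalizing
        # from positive data alone.
        if not any(hypotheses[j] < hypotheses[k] for j in consistent):
            return k
    return None  # no hypothesis in the class contains the data


def learn(hypotheses: List[Language], text: Iterable[str]) -> List[Optional[int]]:
    """Feed a positive-only presentation ('text') and record every conjecture."""
    seen: Set[str] = set()
    guesses: List[Optional[int]] = []
    for example in text:
        seen.add(example)
        guesses.append(conjecture(hypotheses, seen))
    return guesses


# A finite class of three (length-bounded) languages over {a, b}.
M_m = [
    frozenset({"ab"}),
    frozenset({"ab", "abab"}),
    frozenset({"ab", "abab", "ababab"}),
]
print(learn(M_m, ["ab", "ab", "abab", "ab", "abab"]))  # [0, 0, 1, 1, 1]
```

Because the class is finite, every hypothesis that fails to contain the target language is eventually refuted by some example; from then on the target is the unique minimal consistent hypothesis and the learner never changes its mind again. The argument relies on finiteness: for the infinite classes of the Chomsky hierarchy, Gold's negative result shows that no learner succeeds from positive data alone.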

8. Neural computations and adaptive dynamical systems

Analogue dynamical systems provide a non-classical alternative to classical computational architectures, and importantly, it is known that any Turing computable process can be embedded in dynamical systems instantiated by recurrent neural networks [107] that are closer in nature to real neurobiological systems. The fact that classical Turing architectures can be formalized as time-discrete dynamical systems provides a bridge between the concepts of classical and non-classical architectures [74,108,109]. The possibility of reducing classical architectures to neurobiological models is crucial, given the scientific challenge to understand how syntactic knowledge is represented in (noisy) spiking neural networks and how such networks come to develop this capacity. This reduction presupposes a neurobiologically informed theory of the language faculty. The adaptive dynamical systems framework, which we outline below, is an attempt to unify formal language theory with neurobiology, similar to the way in which chemistry and physics were unified during the 1920s. The framework represents a neurobiological implementation of the relevant aspects of formal language theory in order to make precise, from a neurobiological point of view, computational issues related to acquisition and processing of language, and structured sequences more generally.

The classical notions of representation and processing are formalized within the framework of time-discrete dynamical systems9 as a state–space of internal states together with a transition mapping, T, that maps a pair consisting of an internal state, s, and an input, i, to a new internal state, ŝ, and (optionally) an output, λ, given by (ŝ, λ) = T(s, i); the transition mapping T thus governs how input is processed in a state-dependent manner. Processing is therefore represented by an input-driven state–space trajectory constrained by T: at time-step n, the system, being in state s(n), receives input i(n) and, as a result of processing, changes state to s(n + 1) = T[s(n), i(n)]. This also captures the idea of incremental recursive processing (cf. the unification operation ŝ = U(s, t) mentioned in §1). In an entirely analogous manner, incremental recursive processing is captured in analogue noisy time-continuous systems by s(t + dt) = s(t) + ds(t), where ds(t) is given by

$$\mathrm{d}s(t) = T\left[s(t), m, i(t)\right]\mathrm{d}t + \mathrm{d}\xi(t) \tag{8.1}$$

where a noise process ξ(t) has been added to the coupled multivariate stochastic differential equation (see e.g. [110]; we return to the role of the parameter m below).
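The discrete-time update s(n + 1) = T[s(n), i(n)], with an optional output λ, can be made concrete for a finite-state device. The sketch below rests on assumptions of our own: T is a hand-written transition table (the names `TRANSITIONS`, `T` and `process` are hypothetical) that recognizes the regular language {(ab)^n | n ≥ 1}, the language also used as an example in the endnotes, and the output λ at each step simply reports whether the prefix read so far is grammatical.

```python
from typing import List, Tuple

# Hypothetical transition table for {(ab)^n | n >= 1}.
# States: 0 = start, 1 = just read 'a', 2 = just completed 'ab', 3 = dead (reject).
TRANSITIONS = {
    (0, "a"): 1,
    (1, "b"): 2,
    (2, "a"): 1,
}
ACCEPTING = {2}
DEAD = 3


def T(s: int, i: str) -> Tuple[int, bool]:
    """(s_hat, lam) = T(s, i): next state plus an output flag (prefix is grammatical)."""
    s_hat = TRANSITIONS.get((s, i), DEAD)  # any unlisted pair leads to the dead state
    return s_hat, s_hat in ACCEPTING


def process(inputs: str, s0: int = 0) -> Tuple[List[int], List[bool]]:
    """Incremental processing, one symbol per time step: s(n + 1) = T[s(n), i(n)]."""
    trajectory, outputs = [s0], []
    s = s0
    for i in inputs:
        s, lam = T(s, i)
        trajectory.append(s)
        outputs.append(lam)
    return trajectory, outputs


def accepts(string: str) -> bool:
    """A string is grammatical if its state-space trajectory ends in an accepting state."""
    trajectory, _ = process(string)
    return trajectory[-1] in ACCEPTING


print(process("abab"))  # ([0, 1, 2, 1, 2], [False, True, False, True])
```

The state–space trajectory returned by `process` is the discrete counterpart of the continuous trajectory generated by equation (8.1).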

Equation (8.1) is a generic noisy dynamical system, C, that interfaces with its (computational) environment through an input interface i = f(u) and an output interface λ = g(s, i). Moreover, the increment ds(t), and thereby s(t + dt), is recursively determined by s(t) through T(s, m, i) (and noise; cf. figure 6). When the noise term dξ(t) is deleted from equation (8.1), the remaining terms (or, more precisely, T) can be understood as the competence of the system, while the full equation specifies its performance. Equation (8.1) also describes a spiking recurrent network, which can be seen as follows: (i) the state s (a vector representing the information in the system) is a finite set of dynamic analogue registers (in the simplest case, membrane potentials, cf. [95,113,114]); (ii) the recurrent network topology is specified by the component equations of (8.1), which is thus naturally an asynchronous, event-driven, parallel architecture (i.e. the coupling pattern between the components of s is specified by T; the notion of a module is captured by the notion of a sub-network) [109]; and finally, (iii) the specifics of the transfer function of the neural processing units, including synaptic characteristics and the spiking mechanism (for instance, membrane resetting), are implicit in T. In other words, the computation of the neural system is essentially determined by T and its processing memory (cf. below and footnote 10), as in the classical case [108,115].
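As a minimal sketch, equation (8.1) can be integrated numerically with the Euler–Maruyama scheme, s(t + dt) = s(t) + T[s, m, i] dt + σ√dt·N(0, 1). All specifics are assumptions made for illustration: T is taken to be a leaky recurrent rate model, (−s + W tanh(s) + U i)/τ, rather than a spiking model; the parameters m are the hypothetical weights W and U; and the noise is additive Gaussian with amplitude `sigma`. Setting `sigma = 0` deletes the noise term (the competence reading of T in the text), whereas `sigma > 0` yields a performance trajectory.

```python
import math
import random
from typing import List, Sequence, Tuple

Vector = List[float]
Params = Tuple[List[List[float]], List[float]]  # m = (W, U): recurrent and input weights


def T(s: Sequence[float], m: Params, i: float, tau: float = 10.0) -> Vector:
    """Hypothetical drift: leaky recurrent rate dynamics (-s + W tanh(s) + U i) / tau."""
    W, U = m
    n = len(s)
    return [
        (-s[k] + sum(W[k][j] * math.tanh(s[j]) for j in range(n)) + U[k] * i) / tau
        for k in range(n)
    ]


def simulate(s0: Sequence[float], m: Params, inputs: Sequence[float],
             dt: float = 0.1, sigma: float = 0.0, seed: int = 0) -> List[Vector]:
    """Euler-Maruyama: s(t + dt) = s(t) + T[s, m, i] dt + sigma * sqrt(dt) * N(0, 1)."""
    rng = random.Random(seed)
    s = list(s0)
    trajectory = [list(s)]
    for i in inputs:
        drift = T(s, m, i)
        s = [s[k] + drift[k] * dt + sigma * math.sqrt(dt) * rng.gauss(0.0, 1.0)
             for k in range(len(s))]
        trajectory.append(list(s))
    return trajectory


# Illustrative parameters: three coupled units driven by a brief input pulse.
W = [[0.0, 0.9, -0.5], [-0.9, 0.0, 0.4], [0.5, -0.4, 0.0]]
U = [1.0, 0.0, -1.0]
inputs = [1.0 if 50 <= n < 150 else 0.0 for n in range(500)]
competence = simulate([0.0, 0.0, 0.0], (W, U), inputs, sigma=0.0)  # noise deleted
performance = simulate([0.0, 0.0, 0.0], (W, U), inputs, sigma=0.05, seed=1)
```

With `sigma = 0` the trajectory is fully determined by T and the input stream, whereas repeated noisy runs scatter around it; a spiking version would replace the rate drift with membrane-potential dynamics and a reset rule inside T.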

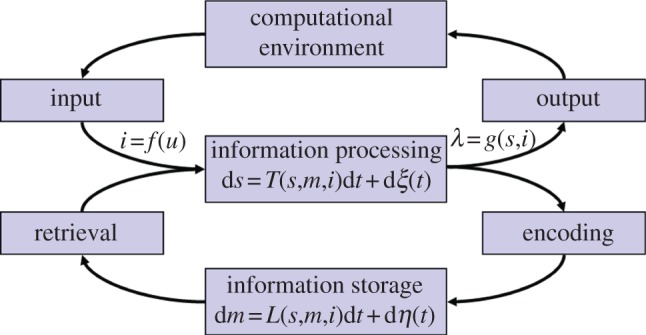

Figure 6.

An adaptive dynamical system framework. A representation of equations (8.1) and (8.2) from the text. Conceptually, the graphical representation shows that learning is a dynamic consequence of information processing [111,112] and, conversely, that information processing is a dynamic consequence of learning/development, typically on different time scales (for details, see [108]).

To incorporate learning and development, the processing dynamics, T, needs to be parametrized with learning parameters, m (e.g. synaptic parameters for development as well as memory formation and retrieval) and a learning/development dynamics L (e.g. spike-timing-dependent plasticity, Hebbian learning, etc., figure 6). The learning parameters, m, live in a model-space M = {m | m can be instantiated by C}. To be concrete, let C be the neurobiological language system and T the parser associated with C. Development of the parsing capacity means that T changes its processing characteristics over time. We conceptualize this as a trajectory in the model-space M, where a given m corresponds to a state of the language system; at any point in time, C is in a model state m(t). If C incorporates an innately specified prior structure, we can capture this in at least four ways: (i) by a structured initial state m(t0) (e.g. a meaningful parsing capacity present from the start); (ii) by constraints on the model-space M (e.g. M is finite or compact; domain-general/specific principles); (iii) by domain specifications incorporated in the learning/developmental dynamics L (e.g. L is only sensitive to structural, and not serial order, relations); and (iv) by constraints on the representational state–space or its dynamics T.

As C develops, it traces out a trajectory in M determined by its learning/development dynamics L according to (figure 6):

$$\mathrm{d}m(t) = L\left[m(t), s(t), i(t), t\right]\mathrm{d}t + \mathrm{d}\eta(t) \tag{8.2}$$

where a noise process η(t) has been added and the explicit dependence on time in L (non-stationarity) captures the idea of an innately specified developmental process (maturation). If the input streams i and the learning/development dynamics L are such that C converges (approximately) on a final model, this characterizes the end-state of the development process (e.g. adult competence).
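A toy instance of the coupled dynamics (8.1)–(8.2) is sketched below. The assumptions are ours, not part of the framework: the fast processing dynamics are assumed to settle instantly, so that s = m·i for a single linear unit; the learning/development dynamics L is Oja's Hebbian rule; the explicit time dependence of L (maturation) is modelled by a learning rate that decays as rate0/(1 + t/τ); and dη is small Gaussian noise. With a stationary, correlated input stream, m converges (approximately) on a final model state, here a unit-norm vector along the principal axis of the input, which plays the role of the developmental end-state.

```python
import math
import random
from typing import List, Sequence


def L(m: Sequence[float], s: float, i: Sequence[float], t: int,
      rate0: float = 0.01, tau_mat: float = 5000.0) -> List[float]:
    """Hypothetical learning dynamics: Oja's rule with a maturation-dependent rate."""
    rate = rate0 / (1.0 + t / tau_mat)  # explicit dependence on t: non-stationary L
    return [rate * s * (i[k] - s * m[k]) for k in range(len(m))]


def develop(steps: int = 20000, sigma_noise: float = 1e-3, seed: int = 0) -> List[float]:
    """Run the coupled processing/learning system and return the final model state m."""
    rng = random.Random(seed)
    m = [0.1, -0.05]  # initial model state m(t0)
    for t in range(steps):
        g1, g2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
        i = [g1, g1 + 0.3 * g2]  # hypothetical correlated input stream
        s = m[0] * i[0] + m[1] * i[1]  # fast dynamics assumed settled: s = m . i
        dm = L(m, s, i, t)
        # dm = L[m, s, i, t] dt + d(eta), with dt = 1 and Gaussian d(eta)
        m = [m[k] + dm[k] + sigma_noise * rng.gauss(0.0, 1.0) for k in range(len(m))]
    return m


m_final = develop()
print(math.hypot(*m_final))  # close to 1: Oja's rule normalizes the weight vector
```

The decaying learning rate is only one simple way to make L non-stationary; other maturation schedules, or spike-timing-dependent rules, would fit the same template.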

In summary, learning and development are the joint result of two coupled dynamical systems, the representation dynamics T and the learning/development dynamics L, which together form an adaptive dynamical system (figure 6). In this analysis, language acquisition is the result of an interaction between two sources of information: (i) innate prior structure, which is likely to be of a pre-linguistic, non-language-specific type and, to some presumably limited extent, language-specific; and (ii) the environment, including both linguistic and extra-linguistic experience. Thus, the underlying conceptualization is similar to that of Chomsky [15,116] and other classical models of acquisition [40,100,104,117], although the formulation in terms of a spiking recurrent network is clearly more natural to neurobiology [109,118]. Finally, we note that a suitable reinterpretation of equation (8.2), added as an analogous equation (8.3),10 would serve as a model for an online processing memory (beyond the memory captured by purely state-dependent effects). Although there are several important differences, the form of equations (8.1)–(8.3) suggests that there is little fundamental distinction between the dynamical variables for information processing (equation (8.1)) and those implementing memory at various time scales (equations (8.2) and (8.3)). This raises the possibility that memory in neurobiological systems might be actively computing.

Several non-standard computational models have been outlined (for reviews, see [107,119–121]). However, their dependence on unbounded or infinite precision processing11 implies that their computations are sensitive to system noise and other forms of perturbation. In addition to system-external noise, there are several brain-internal noise sources [95], and theoretical results show that common noise types put hard limits on the set of formal languages that analogue networks can recognize [120,122,123]. Moreover, the state–space (or configuration space) of any reasonable analogue model of a given brain system will be finite-dimensional and compact (i.e. closed and bounded); compactness [124] is the natural generalization of finiteness in the Turing framework. Qualitatively, it follows from compactness that finite-precision processing or realistic noise levels have the effect of coarse-graining the state–space, effectively discretizing it into a finite number of elements, which then become the relevant computational states. Hence, even if we model a brain system as an analogue dynamical system including noise, it would behave approximately as a finite-state analogue [74]. This is essentially what the technical results of Maass and co-workers [122,123,125] and others [107,120,126] entail: under realistic noise assumptions, the best these systems can achieve is to ‘simulate…any Turing machine with tapes of finite length’ [125]. The insight that the human brain is limited by finite-precision processing, finite processing memory and finite representational capacity is originally Turing's (see [70,71]; for a review, see [72]).
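The coarse-graining argument can be illustrated with a minimal sketch, assuming a one-dimensional compact state–space [0, 1] and modelling finite precision as rounding every state to a grid of spacing `eps`. The dynamics, the logistic map with r = 3.9, is an arbitrary illustrative choice, and the function names are hypothetical. Because the rounded system has at most 1/eps + 1 distinct states and is deterministic, every trajectory must eventually become periodic: the analogue system behaves as a finite-state machine over the grid cells.

```python
from typing import Callable, Dict, Tuple


def coarse_grained_orbit(f: Callable[[float], float], x0: float, eps: float,
                         max_steps: int = 100_000) -> Tuple[int, int, int]:
    """Iterate x -> f(x) on [0, 1], rounding every state to a grid of spacing eps.

    Returns (n_cells, transient, period). Rounding leaves at most n_cells discrete
    states, so a deterministic orbit must revisit a state and become periodic.
    """
    K = int(round(1.0 / eps))
    n_cells = K + 1
    k = int(round(x0 * K))  # index of the grid cell holding the current state
    first_visit: Dict[int, int] = {}
    for step in range(max_steps):
        if k in first_visit:  # a discrete state recurs: transient and cycle found
            return n_cells, first_visit[k], step - first_visit[k]
        first_visit[k] = step
        x = f(k / K)
        k = min(K, max(0, int(round(x * K))))  # finite precision: snap to the grid
    raise RuntimeError("no recurrence within max_steps")  # impossible if max_steps > n_cells


def logistic(x: float, r: float = 3.9) -> float:
    """Logistic map; r = 3.9 lies in its chaotic regime (illustrative choice)."""
    return r * x * (1.0 - x)


n_cells, transient, period = coarse_grained_orbit(logistic, x0=0.123, eps=1e-3)
print(n_cells, transient + period <= n_cells)  # 1001 True
```

Adding bounded noise instead of rounding gives the same qualitative picture: states closer than the noise scale cannot be reliably distinguished, so only finitely many are computationally relevant.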

9. Conclusion

The empirical results reviewed suggest that the brain's ability for syntax is based on neurobiological infrastructure for structured sequence processing. Grammars (or, more precisely, the parser/generator) are represented in the connectivity of the human brain (specified by T). The acquisition of this ability is accounted for, in an adaptive dynamical system framework, by the coupling between the representation dynamics (T) and the learning dynamics (L). The neurobiological implementation of this system is still underspecified. However, given that the faculty of language is captured by classical computational models to a significant extent, and that these can be embedded in dynamic network architectures, there is hope that significant progress can be made in understanding the neurobiology of the language faculty. ALL paradigms might be used to study the acquisition process within such a framework, as well as the processing properties of the underlying neurobiological infrastructure. However, it is necessary to combine and constrain the interpretation of results from ALL paradigms by theoretical models and empirical studies on natural language processing. Only within this context can investigations of ALL make a relevant, albeit limited, contribution to our understanding of the neurobiology of syntax (language).

Acknowledgements

This work was supported by Max Planck Institute for Psycholinguistics, Donders Institute for Brain, Cognition and Behaviour, Fundação para a Ciência e Tecnologia (PTDC/PSI-PCO/110734/2009; IBB/CBME, LA, FEDER/POCI 2010), and Vetenskapsrådet. We are grateful to three anonymous reviewers and in particular Dr Hartmut Fitz of the Neurobiology of Language Group at Max Planck Institute for Psycholinguistics for commenting on an earlier version of this text.

Endnotes

The strictly finite machines (SFMs) are all characterized by the fact that they can attain only a finite number of configurations or states (including the possible states of memory). Thus, independent of any particular finite memory architecture (bounded stacks, finite Turing-tapes, or a finite number of bounded registers), it is always possible to construct a finite-state machine (FSM) that is equivalent in terms of processing trajectories in configuration space (path-equivalence). Conversely, the SFM can be viewed as a particular implementation of the path-equivalent FSM. Thus, the transition graph associated with the FSM specifies how a path-equivalent SFM computes by specifying the processing trajectories in the configuration space of the SFM. This also shows that an FSM has a finite memory (coded for in the states of the transition graph; see the electronic supplementary material for technical details). Finally, path-equivalence implies that path-equivalent systems generalize in identical ways. The representation by computational paths or processing trajectories makes the connection to dynamical systems transparent (cf. §8).
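The construction of a path-equivalent FSM from a strictly finite machine can be sketched as follows, under illustrative assumptions of our own: the SFM is a recognizer for a^n b^n (1 ≤ n ≤ `depth`) whose stack is bounded at depth `depth`, and the FSM is obtained by enumerating the SFM's reachable configurations (control state, stack contents) and numbering each as one FSM state. The helper names are hypothetical. Because each FSM state is exactly one SFM configuration, the two machines trace the same processing trajectories and accept the same strings.

```python
from itertools import product
from typing import Dict, Optional, Set, Tuple

Config = Tuple[str, int]  # (control state, stack depth); the stack only holds one symbol type


def sfm_step(c: Config, sym: str, depth: int) -> Optional[Config]:
    """One step of a bounded-stack machine for a^n b^n; None means reject."""
    phase, d = c
    if sym == "a" and phase == "push" and d < depth:
        return ("push", d + 1)
    if sym == "b" and d > 0:
        return ("pop", d - 1)
    return None


def sfm_accepts(w: str, depth: int) -> bool:
    c: Optional[Config] = ("push", 0)
    for sym in w:
        c = sfm_step(c, sym, depth)
        if c is None:
            return False
    return c == ("pop", 0)


def build_fsm(depth: int, alphabet: str = "ab") -> Tuple[Dict[Tuple[int, str], int], Set[int], int]:
    """Enumerate reachable configurations; each one becomes a state of an explicit FSM."""
    start: Config = ("push", 0)
    index: Dict[Config, int] = {start: 0}
    delta: Dict[Tuple[int, str], int] = {}
    frontier = [start]
    while frontier:
        c = frontier.pop()
        for sym in alphabet:
            nxt = sfm_step(c, sym, depth)
            if nxt is None:
                continue  # a missing transition acts as an implicit dead state
            if nxt not in index:
                index[nxt] = len(index)
                frontier.append(nxt)
            delta[(index[c], sym)] = index[nxt]
    accepting = {index[("pop", 0)]} if ("pop", 0) in index else set()
    return delta, accepting, len(index)


def fsm_accepts(w: str, delta: Dict[Tuple[int, str], int], accepting: Set[int]) -> bool:
    q: Optional[int] = 0
    for sym in w:
        q = delta.get((q, sym))
        if q is None:
            return False
    return q in accepting


delta, accepting, n_states = build_fsm(depth=3)
agree = all(
    sfm_accepts(w, 3) == fsm_accepts(w, delta, accepting)
    for n in range(9)
    for w in ("".join(p) for p in product("ab", repeat=n))
)
print(n_states, agree)  # 7 True
```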

To see this, consider Turing machines (TMs), which, by definition, have their processing logic (i.e. the computational mechanism) implemented as a finite-state machine (finite-state control) that reads from and writes to the tape memory. The hierarchy is then equivalent to finite-memory TMs (T3), whereas T2–T0 are all infinite-memory TMs, with first-in-last-out access (T2), linearly bounded access (T1) and unrestricted access (T0) [64,68,69].

A Turing machine, or any other classical computing device, with finite memory is a strictly finite machine and its weak generative capacity is therefore a regular language (see the electronic supplementary material for technical details).

If the brain makes use of a stack memory, it is likely that the brain can support more than one stack. Two or more stacks entail full Turing (T0) computability, unless the stack memories are bounded [64,69].

The class of strictly finite machines and the class of regular languages are only weakly equivalent. For instance, the rewrite grammar {S → aB, B → bA, A → aB, B → b}, which is in a context-free format, specifies the regular language {(ab)^n | n a positive natural number}. More generally, any finite rule system can be viewed as a competence grammar if memory bounds are disregarded.
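The claim about this grammar can be checked mechanically for short strings. The sketch below, with hypothetical function names, enumerates all leftmost derivations of {S → aB, B → bA, A → aB, B → b} up to a length bound and confirms that only strings of the form (ab)^n are produced; it is a bounded check, not a proof.

```python
import re
from collections import deque
from typing import Dict, List, Set

# The rewrite grammar from the note above; upper-case letters are non-terminals.
RULES: Dict[str, List[str]] = {"S": ["aB"], "B": ["bA", "b"], "A": ["aB"]}


def generate(max_len: int, start: str = "S") -> Set[str]:
    """All terminal strings of length <= max_len derivable from `start` (breadth-first)."""
    results: Set[str] = set()
    queue = deque([start])
    while queue:
        form = queue.popleft()
        nonterminals = [k for k, ch in enumerate(form) if ch.isupper()]
        if not nonterminals:
            results.add(form)
            continue
        k = nonterminals[0]  # expand the leftmost non-terminal
        for rhs in RULES[form[k]]:
            new = form[:k] + rhs + form[k + 1:]
            # Every rule emits exactly one terminal, so pruning by terminal count terminates.
            if sum(ch.islower() for ch in new) <= max_len:
                queue.append(new)
    return results


words = generate(10)
print(sorted(words, key=len))  # ['ab', 'abab', 'ababab', 'abababab', 'ababababab']
print(all(re.fullmatch(r"(ab)+", w) for w in words))  # True
```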

This includes the use of competence grammars in linguistics (e.g. to characterize knowledge abstractly by finite rule systems) and the use of infinite-state machines in the theory of computation (e.g. Turing machines; a state here includes the state of the finite-state controller and the state of the tape memory). Again, in the case of infinite-state machines, the transition graph representation of the computational paths in state–space makes the transition to the dynamical systems framework straightforward.

We use Gold's paradigm as an explicit example of learning-theoretic results because it is relatively simple and well understood, not because it is necessarily a realistic model of language acquisition. For instance, it is possible to ease the acquisition problem by assuming that the child's (language) environment can be modelled appropriately as a structured stochastic input source (see [40] for an extensive discussion).

It is possible to represent an infinite class of grammars implicitly by finite means via, for example, Gödel enumeration and universal machines ([79], ch. 5). This type of scheme depends on the capacity to represent arbitrarily large (natural) numbers and thus runs into the same finiteness barrier as outlined in §6, either when a number that is too large must be decoded or represented, or when a grammar that is too complex must be ‘unpacked’. More precisely, under an injection the inverse image of a finite set is finite; so the effective representational capacity of the brain, if it used such a scheme, would still be a finite set of grammars.
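The finiteness argument can be made quantitative with a simple count. Assuming, for illustration, that grammars are written as strings over an alphabet of k ≥ 2 symbols, the number of distinct descriptions of length at most m is Σ_{ℓ=0}^{m} k^ℓ = (k^{m+1} − 1)/(k − 1), so any injective encoding with description length bounded by m can reach at most that many grammars. The function names below are hypothetical.

```python
def max_grammars(k: int, m: int) -> int:
    """Number of distinct strings of length <= m over k symbols (including the empty one)."""
    return sum(k ** length for length in range(m + 1))


def max_grammars_closed_form(k: int, m: int) -> int:
    """Geometric-series closed form (k^(m+1) - 1) / (k - 1), valid for k >= 2."""
    return (k ** (m + 1) - 1) // (k - 1)


print(max_grammars(2, 3))  # 15 = 1 + 2 + 4 + 8
```

However large, the bound is finite for every fixed k and m, which is all the argument in §7 requires.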

A dynamical system is a computing device if its dynamical variables (which carry numerical values and can therefore be regarded as analogue registers) encode information or representations; the temporal evolution of the dynamical variables (i.e. of their numerical values) is then a reflection of information processing. This conceptualization is identical with, and generalizes, the standard view taken in the Turing framework of classical computational architectures.

To be explicit, a new set of dynamical variables, n, needs to be introduced, and equation (8.3), with corresponding modifications of (8.1) and (8.2), is of the type:

$$\mathrm{d}n(t) = K\left[n(t), s(t), i(t)\right]\mathrm{d}t \tag{8.3}$$

where the vector n instantiates the processing memory (e.g. rapid, short-term synaptic plasticity) and K is its dynamics.

The difference between unbounded and infinite precision computation corresponds to computing with rational and real numbers, respectively. For instance, discrete-time recurrent networks computing with rational and with real numbers (synaptic weights/internal states) correspond to Turing and super-Turing machines, respectively [107].
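The role of precision can be illustrated with a stack stored in a single number, in the spirit of the base-4 encodings used in constructions of rational-weight recurrent networks (e.g. by Siegelmann and Sontag); the functions below are a hypothetical sketch, not a network. A stack of bits b1 (top), b2, … is stored as q = Σ (2b_k + 1)/4^k, so that pushing, reading the top and popping are affine operations. Exact rationals (`fractions.Fraction`) stand in for unbounded precision and round-trip a stack of any depth; 64-bit floats (53-bit significand) stand in for finite precision and, beyond roughly 26 pushed symbols, can no longer hold the encoding exactly in general.

```python
from fractions import Fraction
from typing import List, Tuple, Union

Number = Union[Fraction, float]


def push(q: Number, bit: int, exact: bool) -> Number:
    """Push a bit: prepend base-4 digit 1 (bit 0) or 3 (bit 1) to the encoding q."""
    digit = Fraction(2 * bit + 1, 4) if exact else (2 * bit + 1) / 4
    return q / 4 + digit


def pop(q: Number) -> Tuple[int, Number]:
    """Read the top bit (q >= 3/4 means 1) and remove it by shifting one base-4 digit."""
    top = 1 if q >= 0.75 else 0
    return top, 4 * q - (2 * top + 1)


def round_trip(bits: List[int], exact: bool) -> Tuple[List[int], Number]:
    """Push all bits, then pop as many; return the popped bits (LIFO) and the residue."""
    q: Number = Fraction(0) if exact else 0.0
    for b in bits:
        q = push(q, b, exact)
    popped = []
    for _ in bits:
        top, q = pop(q)
        popped.append(top)
    return popped, q  # a residue of 0 means the stack is empty again


bits = [1] * 100
print(round_trip(bits, exact=True)[1])   # 0
print(round_trip(bits, exact=False)[1])  # 1.0
```

In the float run the stored value rounds to 1.0 after 27 pushes, a fixed point of both `push(·, 1)` and `pop`, so the stack never becomes empty again: finite precision has silently turned a deep stack into a single looping state.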

References

- 1. Hagoort P. 2009. Reflections on the neurobiology of syntax. In Biological foundations and origin of syntax (eds Bickerton D., Szathmáry E.), pp. 279–298. Cambridge, MA: MIT Press

- 2. Petersson K. M., Folia V., Hagoort P. 2010. What artificial grammar learning reveals about the neurobiology of syntax. Brain Lang. 120, 83–95 (doi:10.1016/j.bandl.2010.08.003)

- 3. Segaert K., Menenti L., Weber K., Petersson K. M., Hagoort P. In press. Shared syntax in language production and language comprehension—an fMRI study. Cereb. Cortex (doi:10.1093/cercor/bhr249)

- 4. Menenti L., Gierhan S. M. E., Segaert K., Hagoort P. 2011. Shared language: overlap and segregation of the neuronal infrastructure for speaking and listening revealed by functional MRI. Psychol. Sci. 22, 1173–1182 (doi:10.1177/0956797611418347)

- 5. Snijders T. M., Petersson K. M., Hagoort P. 2010. Effective connectivity of cortical and subcortical regions during unification of sentence structure. NeuroImage 52, 1633–1644 (doi:10.1016/j.neuroimage.2010.05.035)

- 6. Snijders T. M., Vosse T., Kempen G., Van Berkum J. A., Petersson K. M., Hagoort P. 2009. Retrieval and unification in sentence comprehension: an fMRI study using word-category ambiguity. Cereb. Cortex 19, 1493–1503 (doi:10.1093/cercor/bhn187)

- 7. Indefrey P., Levelt W. J. M. 2004. The spatial and temporal signatures of word production components. Cognition 92, 101–144 (doi:10.1016/j.cognition.2002.06.001)

- 8. Vosse T., Kempen G. 2000. Syntactic structure assembly in human parsing: a computational model based on competitive inhibition and a lexicalist grammar. Cognition 75, 105–143 (doi:10.1016/S0010-0277(00)00063-9)

- 9. Hagoort P. 2005. On Broca, brain, and binding: a new framework. Trends Cogn. Sci. 9, 416–423 (doi:10.1016/j.tics.2005.07.004)

- 10. Hagoort P., Hald L., Bastiaansen M., Petersson K. M. 2004. Integration of word meaning and world knowledge in language comprehension. Science 304, 438–441 (doi:10.1126/science.1095455)

- 11. Patel A. D. 2003. Language, music, syntax and the brain. Nat. Neurosci. 6, 674–681 (doi:10.1038/nn1082)

- 12. Patel A. D., Iversen J., Wassenaar M., Hagoort P. 2008. Musical syntactic processing in agrammatic Broca's aphasia. Aphasiology 22, 776–789 (doi:10.1080/02687030701803804)

- 13. Willems R. M., Özyürek A., Hagoort P. 2007. When language meets action: the neural integration of gesture and speech. Cereb. Cortex 17, 2322–2333 (doi:10.1093/cercor/bhl141)

- 14. Özyürek A., Willems R. M., Kita S., Hagoort P. 2007. On-line integration of semantic information from speech and gesture: insights from event-related brain potentials. J. Cogn. Neurosci. 19, 605–616 (doi:10.1162/jocn.2007.19.4.605)

- 15. Chomsky N. 1986. Knowledge of language. New York, NY: Praeger

- 16. Xiang H.-D., Fonteijn H. M., Norris D. G., Hagoort P. 2010. Topographical functional connectivity pattern in the perisylvian language networks. Cereb. Cortex 20, 549–560 (doi:10.1093/cercor/bhp119)

- 17. Bookheimer S. 2002. Functional MRI of language: new approaches to understanding the cortical organization of semantic processing. Annu. Rev. Neurosci. 25, 151–188 (doi:10.1146/annurev.neuro.25.112701.142946)

- 18. Petersson K. M., Forkstam C., Ingvar M. 2004. Artificial syntactic violations activate Broca's region. Cogn. Sci. 28, 383–407 (doi:10.1207/S15516709cog2803_4)

- 19. Folia V., Uddén J., Forkstam C., De Vries M. H., Petersson K. M. 2010. Artificial language learning in adults and children. Lang. Learn. 60, 188–220 (doi:10.1111/j.1467-9922.2010.00606.x)

- 20. Reber A. S. 1967. Implicit learning of artificial grammars. J. Verbal Learn. Verbal Behav. 5, 855–863 (doi:10.1016/S0022-5371(67)80149-X)

- 21. Fitch W. T., Hauser M. D. 2004. Computational constraints on syntactic processing in a nonhuman primate. Science 303, 377–380 (doi:10.1126/science.1089401)

- 22. Gentner T. Q., Fenn K. M., Margoliash D., Nusbaum H. C. 2006. Recursive syntactic pattern learning by songbirds. Nature 440, 1204–1207 (doi:10.1038/nature04675)

- 23. Hauser M. D., Chomsky N., Fitch W. T. 2002. The faculty of language: what is it, who has it, and how did it evolve? Science 298, 1569–1579 (doi:10.1126/science.298.5598.1569)

- 24. O'Donnell T. J., Hauser M. D., Fitch W. T. 2005. Using mathematical models of language experimentally. Trends Cogn. Sci. 9, 284–289 (doi:10.1016/j.tics.2005.04.011)

- 25. Saffran J. R., Hauser M., Seibel R., Kapfhamer J., Tsao F., Cushman F. 2008. Grammatical pattern learning by human infants and cotton-top tamarin monkeys. Cognition 107, 479–500 (doi:10.1016/j.cognition.2007.10.010)

- 26. Forkstam C., Hagoort P., Fernandez G., Ingvar M., Petersson K. M. 2006. Neural correlates of artificial syntactic structure classification. NeuroImage 32, 956–967 (doi:10.1016/j.neuroimage.2006.03.057)

- 27. Friederici A. D., Bahlmann J., Heim S., Schubotz R. I., Anwander A. 2006. The brain differentiates human and non-human grammars: functional localization and structural connectivity. Proc. Natl Acad. Sci. USA 103, 2458–2463 (doi:10.1073/pnas.0509389103)

- 28. Bahlmann J., Schubotz R. I., Friederici A. D. 2008. Hierarchical artificial grammar processing engages Broca's area. NeuroImage 42, 525–534 (doi:10.1016/j.neuroimage.2008.04.249)

- 29. Uddén J., Folia V., Forkstam C., Ingvar M., Fernandez G., Overeem S., van Elswijk G., Hagoort P., Petersson K. M. 2008. The inferior frontal cortex in artificial syntax processing: an rTMS study. Brain Res. 1224, 69–78 (doi:10.1016/j.brainres.2008.05.070)

- 30. de Vries M. H., Barth A. R. C., Maiworm S., Knecht S., Zwitserlood P., Floeel A. 2010. Electrical stimulation of Broca's area enhances implicit learning of an artificial grammar. J. Cogn. Neurosci. 22, 2427–2436 (doi:10.1162/jocn.2009.21385)

- 31. Uddén J., Ingvar M., Hagoort P., Petersson K. M. Submitted. Broca's region: a causal role in implicit processing of grammars with adjacent and non-adjacent dependencies.

- 32. Christiansen M. H., Kelly M. L., Shillcock R., Greenfield K. 2010. Impaired artificial grammar learning in agrammatism. Cognition 116, 382–393 (doi:10.1016/j.cognition.2010.05.015)

- 33.Forkstam C., Petersson K. M. 2005. Towards an explicit account of implicit learning. Curr. Opin. Neurol. 18, 435–441 10.1097/01.wco.0000171951.82995.c4 (doi:10.1097/01.wco.0000171951.82995.c4) [DOI] [PubMed] [Google Scholar]

- 34.Ullman M. T. 2004. Contributions of memory circuits to language: the declarative/procedural model. Cognition 92, 231–270 10.1016/j.cognition.2003.10.008 (doi:10.1016/j.cognition.2003.10.008) [DOI] [PubMed] [Google Scholar]

- 35.Conway C. N., Pisoni D. B. 2008. Neurocognitive basis of implicit learning of sequential structure and its relation to language processing. Ann. N. Y. Acad. Sci. 1145, 113–131 10.1196/annals.1416.009 (doi:10.1196/annals.1416.009) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Misyak J. B., Christiansen M. H., Tomblin J. B. 2010. On-line individual differences in statistical learning predict language processing. Front. Psychol. 1, 31. 10.3389/fpsyg.2010.00031 (doi:10.3389/fpsyg.2010.00031) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Jackendoff R. 2011. What is the human language faculty? Two views. Language 87, 586–624 10.1353/lan.2011.0063 (doi:10.1353/lan.2011.0063) [DOI] [Google Scholar]

- 38.Stadler M. A., Frensch P. A. (eds) 1998. Handbook of implicit learning. London, UK: SAGE [Google Scholar]

- 39.Chomsky N., Miller G. A. 1963. Introduction to the formal analysis of natural languages. In Handbook of mathematical psychology (eds Luce R. D., Bush R. R., Galanter E.), pp. 269–321 New York, NY: John Wiley & Sons [Google Scholar]

- 40.Clark A., Lappin S. 2011. Linguistic nativism and the poverty of the stimulus. Oxford, UK: Wiley-Blackwell [Google Scholar]

- 41.Folia V., Uddén J., Forkstam C., Ingvar M., Hagoort P., Petersson K. M. 2008. Implicit learning and dyslexia. Ann. N Y Acad. Sci. 1145, 132–150 10.1196/annals.1416.012 (doi:10.1196/annals.1416.012) [DOI] [PubMed] [Google Scholar]

- 42.Forkstam C., Elwér Å., Ingvar M., Petersson K. M. 2008. Instruction effects in implicit artificial grammar learning: a preference for grammaticality. Brain Res. 1221, 80–92 10.1016/j.brainres.2008.05.005 (doi:10.1016/j.brainres.2008.05.005) [DOI] [PubMed] [Google Scholar]

- 43.Uddén J., Araujo S., Forkstam C., Ingvar M., Hagoort P., Petersson K. M. 2009. A matter of time: implicit acquisition of recursive sequence structures. Proc. Cogn. Sci. Soc. 2009, 2444–2449 [Google Scholar]

- 44.Uddén J., Araujo S., Ingvar M., Hagoort P., Petersson K. M. In press Implicit acquisition of grammars with crossed and nested non-adjacent dependencies: investigating the push-down stack model. Cogn. Sci. 10.1111/j.1551-6709.2012.01235.x (doi:10.1111/j.1551-6709.2012.01235.x) [DOI] [PubMed] [Google Scholar]

- 45.Zajonc R. B. 1968. Attitudinal effects of mere exposure. J. Pers. Soc. Psychol. Monogr. Suppl. 9 1–27 [Google Scholar]

- 46.Nieuwenhuis I. L. C., Folia V., Forkstam C., Jensen O., Petersson K. M. Submitted Sleep promotes the abstraction of implicit grammatical rules. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 47.Gómez R. L., Bootzin R. R., Nadel L. 2006. Naps promote abstraction in language-learning infants. Psychol. Sci. 17, 670–674 10.1111/j.1467-9280.2006.01764.x (doi:10.1111/j.1467-9280.2006.01764.x) [DOI] [PubMed] [Google Scholar]

- 48.Folia V., Forkstam C., Hagoort P., Petersson K. M. 2009. Language comprehension: the interplay between form and content. Proc. Cogn. Sci. Soc. 2009, 1686–1691 [Google Scholar]

- 49.Grodzinsky Y., Santi A. 2008. The battle for Broca's region. Trends Cogn. Sci. 12, 474–480 10.1016/j.tics.2008.09.001 (doi:10.1016/j.tics.2008.09.001) [DOI] [PubMed] [Google Scholar]

- 50.Santi A., Grodzinsky Y. 2007. Working memory and syntax interact in Broca's area. NeuroImage 37, 8–17 10.1016/j.neuroimage.2007.04.047 (doi:10.1016/j.neuroimage.2007.04.047) [DOI] [PubMed] [Google Scholar]

- 51.Santi A., Grodzinsky Y. 2007. Taxing working memory with syntax: bihemispheric modulation. Hum. Brain Mapp. 28, 1089–1097 10.1002/hbm.20329 (doi:10.1002/hbm.20329) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 52.Makuuchi M., Bahlmann J., Anwander A., Friederici A. D. 2009. Segregating the core computational faculty of human language from working memory. Proc. Natl Acad. Sci. USA 106, 8362–8367 10.1073/pnas.0810928106 (doi:10.1073/pnas.0810928106) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 53.Folia V., Forkstam C., Ingvar M., Hagoort P., Petersson K. M. 2011. Implicit artificial syntax processing: genes, preference, and bounded recursion. Biolinguistics 5, 105–132 [Google Scholar]

- 54. Poliak S., Gollan L., Martinez R., Custer A., Einheber S., Salzer J. L., Trimmer J. S., Shrager P., Peles E. 1999. Caspr2, a new member of the neurexin superfamily, is localized at the juxtaparanodes of myelinated axons and associates with K+ channels. Neuron 24, 1037–1047. (doi:10.1016/S0896-6273(00)81049-1)

- 55. Vernes S. C., et al. 2008. A functional genetic link between distinct developmental language disorders. N. Engl. J. Med. 359, 2337–2345. (doi:10.1056/NEJMoa0802828)

- 56. Davidson E. H. 2006. The regulatory genome: gene regulatory networks in development and evolution. San Diego, CA: Academic Press.

- 57. Davidson E. H., et al. 2002. A genomic regulatory network for development. Science 295, 1669–1678. (doi:10.1126/science.1069883)

- 58. Alberts B., Johnson A., Lewis J., Raff M., Roberts K., Walter P. 2007. Molecular biology of the cell. New York, NY: Garland Science.

- 59. Alarcón M., et al. 2008. Linkage, association, and gene-expression analyses identify CNTNAP2 as an autism-susceptibility gene. Am. J. Hum. Genet. 82, 150–159. (doi:10.1016/j.ajhg.2007.09.005)

- 60. Abrahams B. S., Tentler D., Perederiy J. V., Oldham M. C., Coppola G., Geschwind D. H. 2007. Genome-wide analyses of human perisylvian cerebral cortical patterning. Proc. Natl Acad. Sci. USA 104, 17849–17854. (doi:10.1073/pnas.0706128104)

- 61. Snijders T. M., et al. Submitted. A common CNTNAP2 polymorphism affects functional and structural aspects of language-relevant neuronal infrastructure.

- 62. Chomsky N. 1963. Formal properties of grammars. In Handbook of mathematical psychology (eds Luce R. D., Bush R. R., Galanter E.), pp. 323–418. New York, NY: John Wiley & Sons.

- 63. Jäger G., Rogers J. 2012. Formal language theory: refining the Chomsky hierarchy. Phil. Trans. R. Soc. B 367, 1956–1970. (doi:10.1098/rstb.2012.0077)

- 64. Davis M., Sigal R., Weyuker E. J. 1994. Computability, complexity, and languages: fundamentals of theoretical computer science. San Diego, CA: Academic Press.

- 65. Pullum G. K., Scholz B. C. 2010. Recursion and the infinitude claim. In Recursion in human language (ed. van der Hulst H.), pp. 113–138. Berlin, Germany: Mouton de Gruyter.

- 66. Levelt W. J. M. 1974. Formal grammars in linguistics and psycholinguistics. The Hague, The Netherlands: Mouton.

- 67. Partee B. H., ter Meulen A., Wall R. E. 1990. Mathematical methods in linguistics. Dordrecht, The Netherlands: Kluwer Academic Publishers.

- 68. Hopcroft J. E., Motwani R., Ullman J. D. 2000. Introduction to automata theory, languages, and computation. Reading, MA: Addison-Wesley.

- 69. Minsky M. L. 1967. Computation: finite and infinite machines. Englewood Cliffs, NJ: Prentice-Hall.

- 70. Turing A. 1936. On computable numbers, with an application to the Entscheidungsproblem (part 1). Proc. Lond. Math. Soc. 42, 230–240. (doi:10.1112/plms/s2-42.1.230)

- 71. Turing A. 1936. On computable numbers, with an application to the Entscheidungsproblem (part 2). Proc. Lond. Math. Soc. 42, 241–265.

- 72. Wells A. 2005. Rethinking cognitive computation: Turing and the science of the mind. Hampshire, UK: Palgrave Macmillan.

- 73. Soare R. I. 1996. Computability and recursion. Bull. Symbolic Logic 2, 284–321. (doi:10.2307/420992)

- 74. Petersson K. M. 2005. On the relevance of the neurobiological analogue of the finite-state architecture. Neurocomputing 65–66, 825–832. (doi:10.1016/j.neucom.2004.10.108)

- 75. Savage J. E. 1998. Models of computation. Reading, MA: Addison-Wesley.

- 76. Miller G. A., Chomsky N. 1963. Finitary models of language users. In Handbook of mathematical psychology (eds Luce R. D., Bush R. R., Galanter E.), pp. 419–491. New York, NY: John Wiley & Sons.

- 77. Chomsky N. 1956. Three models for the description of language. IEEE Trans. Inform. Theory 2, 113–124. (doi:10.1109/TIT.1956.1056813)

- 78. Chomsky N. 1957. Syntactic structures. The Hague, The Netherlands: Mouton.

- 79. Cutland N. J. 1980. Computability: an introduction to recursive function theory. Cambridge, UK: Cambridge University Press.

- 80. Berwick R. C., Pietroski P., Yankama B., Chomsky N. 2011. Poverty of the stimulus revisited. Cogn. Sci. 35, 1207–1242. (doi:10.1111/j.1551-6709.2011.01189.x)

- 81. Miller G. A. 1962. Some psychological studies of grammar. Am. Psychol. 17, 748–762. (doi:10.1037/h0044708)

- 82. Foss D. J., Cairns H. S. 1970. Some effects of memory limitations upon sentence comprehension and recall. J. Verbal Learn. Verbal Behav. 9, 541–547. (doi:10.1016/S0022-5371(70)80099-8)

- 83. Miller G. A., Isard S. 1964. Free recall of self-embedded English sentences. Inform. Control 7, 292–303. (doi:10.1016/S0019-9958(64)90310-9)

- 84. Hakes D. T., Cairns H. S. 1970. Sentence comprehension and relative pronouns. Percept. Psychophys. 8, 5–8. (doi:10.3758/BF03208920)

- 85. Larkin W., Burns D. 1977. Sentence comprehension and memory for embedded structure. Mem. Cogn. 5, 17–22. (doi:10.3758/BF03209186)

- 86. Blaubergs M. S., Braine M. D. S. 1974. Short-term memory limitations on decoding self-embedded sentences. J. Exp. Psychol. 102, 745–748. (doi:10.1037/h0036091)

- 87. Hakes D. T., Evans J. S., Brannon L. L. 1976. Understanding sentences with relative clauses. Mem. Cogn. 4, 283–290. (doi:10.3758/BF03213177)

- 88. Hamilton H. W., Deese J. 1971. Comprehensibility and subject-verb relations in complex sentences. J. Verbal Learn. Verbal Behav. 10, 163–170. (doi:10.1016/S0022-5371(71)80008-7)

- 89. Wang M. D. 1970. The role of syntactic complexity as a determiner of comprehensibility. J. Verbal Learn. Verbal Behav. 9, 398–404. (doi:10.1016/S0022-5371(70)80079-2)

- 90. Marks L. E. 1968. Scaling of grammaticalness of self-embedded English sentences. J. Verbal Learn. Verbal Behav. 7, 965–967. (doi:10.1016/S0022-5371(68)80106-9)

- 91. De Vries M. H., Christiansen M. H., Petersson K. M. 2011. Learning recursion: multiple nested and crossed dependencies. Biolinguistics 5, 10–35.

- 92. Arora S., Barak B. 2009. Computational complexity: a modern approach. Cambridge, UK: Cambridge University Press.

- 93. Papadimitriou C. H. 1993. Computational complexity. Upper Saddle River, NJ: Addison-Wesley.

- 94. Duarte R. 2011. Self-organized sequence processing in recurrent neural networks with multiple interacting plasticity mechanisms. Faro, Portugal: Universidade do Algarve.

- 95. Koch C. 1999. Biophysics of computation: information processing in single neurons. New York, NY: Oxford University Press.

- 96. Trappenberg T. P. 2010. Fundamentals of computational neuroscience, 2nd edn. Oxford, UK: Oxford University Press.

- 97. Nieuwenhuys R., Voogd J., van Huijzen C. 1988. The human central nervous system: a synopsis and atlas, 3rd revised edn. Berlin, Germany: Springer.

- 98. Jain S., Osherson D., Royer J. S., Sharma A. 1999. Systems that learn. Cambridge, MA: MIT Press.

- 99. Nowak M. A., Komarova N. L., Niyogi P. 2002. Computational and evolutionary aspects of language. Nature 417, 611–617. (doi:10.1038/nature00771)

- 100. Yang C. D. 2011. Computational models of syntactic acquisition. WIREs Cogn. Sci. (doi:10.1002/wcs.1154)

- 101. Gold E. M. 1967. Language identification in the limit. Inform. Control 10, 447–474. (doi:10.1016/S0019-9958(67)91165-5)

- 102. De la Higuera C. 2010. Grammatical inference: learning automata and grammars. Cambridge, UK: Cambridge University Press.

- 103. Chomsky N., Lasnik H. 1995. The theory of principles and parameters. In The minimalist program (ed. Chomsky N.), pp. 13–128. Cambridge, MA: MIT Press.

- 104. Yang C. D. 2002. Knowledge and learning in natural language. Oxford, UK: Oxford University Press.

- 105. Pullum G. K., Scholz B. C. 2002. Empirical assessment of stimulus poverty arguments. Linguist. Rev. 19, 9–50. (doi:10.1515/tlir.19.1-2.9)

- 106. Scholz B. C., Pullum G. K. 2002. Searching for arguments to support linguistic nativism. Linguist. Rev. 19, 185–223. (doi:10.1515/tlir.19.1-2.185)

- 107. Siegelmann H. T. 1999. Neural networks and analog computation: beyond the Turing limit. Basel, Switzerland: Birkhäuser.

- 108. Petersson K. M. 2005. Learning and memory in the human brain. Stockholm, Sweden: Karolinska University Press.

- 109. Petersson K. M. 2008. On cognition, structured sequence processing and adaptive dynamical systems. Proc. Am. Inst. Phys. Math. Stat. Phys. Subseries 1060, 195–200.

- 110. Øksendal B. 2000. Stochastic differential equations: an introduction with applications, 5th edn. Berlin, Germany: Springer.

- 111. Petersson K. M., Elfgren C., Ingvar M. 1997. A dynamic role of the medial temporal lobe during retrieval of declarative memory in man. NeuroImage 6, 1–11. (doi:10.1006/nimg.1997.0276)

- 112. Petersson K. M., Elfgren C., Ingvar M. 1999. Dynamic changes in the functional anatomy of the human brain during recall of abstract designs related to practice. Neuropsychologia 37, 567–587. (doi:10.1016/S0028-3932(98)00152-3)

- 113. Gerstner W., Kistler W. 2002. Spiking neuron models: single neurons, populations, plasticity. Cambridge, UK: Cambridge University Press.

- 114. Izhikevich E. M. 2010. Dynamical systems in neuroscience: the geometry of excitability and bursting. Cambridge, MA: MIT Press.

- 115. Petersson K. M., Ingvar M., Reis A. 2009. Language and literacy from a cognitive neuroscience perspective. In Cambridge handbook of literacy, pp. 152–182. Cambridge, UK: Cambridge University Press.

- 116. Chomsky N. 2005. Three factors in language design. Linguist. Inq. 36, 1–22. (doi:10.1162/0024389052993655)

- 117. Yang C. D. 2004. Universal grammar, statistics or both? Trends Cogn. Sci. 8, 451–456.

- 118. Petersson K. M., Grenholm P., Forkstam C. 2005. Artificial grammar learning and neural networks. Proc. Cogn. Sci. Soc. 2005, 1726–1731.

- 119. Moore C. 1990. Unpredictability and undecidability in dynamical systems. Phys. Rev. Lett. 64, 2354–2357. (doi:10.1103/PhysRevLett.64.2354)

- 120. Casey M. 1996. The dynamics of discrete-time computation with application to recurrent neural networks and finite state machine extraction. Neural Comput. 8, 1135–1178. (doi:10.1162/neco.1996.8.6.1135)

- 121. Siegelmann H. T., Fishman S. 1998. Analog computation with dynamical systems. Physica D 120, 214–235. (doi:10.1016/S0167-2789(98)00057-8)

- 122. Maass W., Sontag E. 1999. Analog neural nets with Gaussian or other common noise distributions cannot recognize arbitrary regular languages. Neural Comput. 11, 771–782. (doi:10.1162/089976699300016656)

- 123. Maass W., Orponen P. 1998. On the effect of analog noise on discrete-time analog computations. Neural Comput. 10, 1071–1095. (doi:10.1162/089976698300017359)

- 124. Kelley J. L. 2008. General topology. New York, NY: Ishi Press International.

- 125. Maass W., Joshi P., Sontag E. 2007. Computational aspects of feedback in neural circuits. PLoS Comput. Biol. 3, e165. (doi:10.1371/journal.pcbi.0020165)

- 126. Siegelmann H. T. 2003. Neural and super-Turing computing. Minds Mach. 13, 103–114. (doi:10.1023/A:1021376718708)