Abstract

Rhythms at slow (<1 Hz) frequency of alternating Up and Down states occur during slow-wave sleep, under deep anaesthesia and in cortical slices of mammals maintained in vitro. Such spontaneous oscillations result from the interplay between network reverberations, nonlinearly sustained by a strong synaptic coupling, and a fatigue mechanism inhibiting neuronal firing in an activity-dependent manner. By pharmacologically varying the excitability level of brain slices, we explore the network dynamics underlying slow rhythms, uncovering an intrinsic anticorrelation between Up and Down state durations. In addition, we observe a non-monotonic change of Down state duration, which shrinks the distribution of the accessible frequencies of the slow rhythms. Attractor dynamics with activity-dependent self-inhibition predicts a similar trend even when the system excitability is reduced, because of a stability loss of Up and Down states. Hence, such cortical rhythms tend to display a maximal width of the distribution of Up/Down frequencies, suggesting that the system dynamics is located on a critical boundary of the parameter space. This would be an optimal solution for the system in order to display a wide spectrum of dynamical regimes and timescales.

Keywords: Slow oscillations, Mean-field theory, IF neuron networks, Bifurcation analysis, Relaxation oscillators, Up and Down states, Cortical rhythms

Introduction

Our brain needs to continuously collapse into discrete representations the enormous variety of sensory information and potentially associated actions. This is one of the reasons why attractor dynamics has long been proposed as a plausible and appropriate substrate for brain functions (Hopfield 1982; Amit 1989). Computational modeling has proven the versatility of this theoretical framework, accurately describing the evolution of the firing activity recorded during the performance of a wide range of cognitive functions (Zipser et al. 1993; Amit and Brunel 1997; Koulakov et al. 2002; Wang 2002; Machens et al. 2005; Deco and Rolls 2005). In vitro preparations show attractor dynamics as an emergent property of coordinated cell assemblies capable of sustaining, through synaptic reverberation, meta-stable high-firing activity patterns (Cossart et al. 2003; Shu et al. 2003; Lau and Bi 2005; Sasaki et al. 2007). Some of these preparations spontaneously display slow oscillatory patterns of spiking activity in which Up states at high firing rates alternate rather regularly with almost quiescent Down states (Sanchez-Vives and McCormick 2000; Shu et al. 2003). Attractor dynamics, together with a self-inhibition modeling an activity-dependent fatigue mechanism, provides a reliable theoretical framework capable of quantitatively describing the observed features of this phenomenon (Latham et al. 2000; Compte et al. 2003).

Slow rhythms in cortical slices largely share electrophysiological properties with the in vivo recordings during slow wave sleep and ketamine anaesthesia, originally characterized in the neocortex of the cat (Steriade et al. 1993). Furthermore, Up states of spontaneous slow rhythms from the auditory and somatosensory cortices of rats in vivo show stereotyped time courses, whose temporal structure is conserved in the activity patterns produced in response to sensory stimuli (Luczak et al. 2009; Harris et al. 2010). Hence, the study of the neurobiological substrate of slow Up/Down oscillations and the characterization of the underlying network dynamics are directly related to the question of which neuronal machinery is available for information processing in the brain.

Here we explore the footprints left by the nonlinear dynamics of cortical networks during Up/Down oscillations, aiming to strengthen the hypothesis that the nervous tissue, and in particular the cerebral cortex, is an excitable medium with a potentially high computational power (Langton 1990; Beggs 2008). In particular, we investigate the relationship between network excitability and the timescales observable in vitro, such as Up and Down state durations. We report a wide spectrum of such timescales whose distribution largely matches the one predicted by the theoretical framework of attractor dynamics and activity-dependent self-inhibition. The resulting working regime of such cortical networks appears to be supported by a balanced interplay between intrinsic fluctuations of the spiking activity and an eroded landscape of the force field, which drives the system to wander with timescales longer than the ones available at the microscopic level (Tsuda 2002; Durstewitz and Deco 2008; Braun and Mattia 2010).

Methods

Mean-field theory of neuronal network dynamics

Under quite general assumptions, the average firing rate ν of a neuron can be worked out as a function of the instantaneous mean and variance of the ionic currents flowing across its membrane. The same input–output relationship can be used at the population level, provided that the statistics of incoming currents is similar for different neurons. In other words, this is a condition in which all the neurons in the network feel the same mean “field”, a physics concept which here has to be considered as a synonym of input current. Although this hypothesis could appear unlikely to hold, it is fulfilled when a sufficiently large network of neurons is considered (Amit and Tsodyks 1991), like those observed in the cortical tissue (Braitenberg and Schüz 1991). Mean-field theory allows us to predict the population firing rate of a network of spiking neurons under stationary conditions, provided that we are able to compute the input–output gain function Φ(νin), a sigmoidal function like those depicted in Fig. 1a. Φ(νin) returns the output discharge rate νout of a nervous cell when its presynaptic neurons emit spikes at frequency νin. The gain function is shaped both by single-neuron properties, like the absolute refractory period and the spike emission threshold for the membrane potential, and by synaptic features determining how incoming spikes on the dendritic tree are translated into input currents. Neuronal networks with higher or lower amplification gain (like the red and blue curves in Fig. 1a, respectively) are more or less excitable, respectively. Gain functions have been worked out for several integrate-and-fire (IF) neurons and synaptic transmission models (for reviews see Burkitt 2006; La Camera et al. 2008). Here we use a Φ(ν) derived in (Fusi and Mattia 1999) for a simplified IF neuron model.
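As a rough illustration of how such a gain function can be evaluated, the sketch below codes a closed-form expression often quoted for an IF neuron with constant leakage and a reflecting barrier at zero potential, driven by an input with mean μ and variance σ² per unit time. The expression itself, the reset to V = 0, the diffusion-approximation moments in `input_moments` and all function and parameter names are assumptions of this sketch; they should be checked against (Fusi and Mattia 1999) before any quantitative use.

```python
import math


def gain_function(mu, sigma2, theta=1.0, tau_arp=0.010):
    """Output rate (Hz) for input mean mu (theta/s) and variance sigma2 (theta^2/s).

    Assumed form: 1 / (refractory period + mean first-passage time from 0 to
    theta) for a drift-diffusion process with a reflecting barrier at 0.
    """
    if sigma2 <= 0.0:
        raise ValueError("sigma2 must be positive")
    x = 2.0 * mu * theta / sigma2
    if abs(x) < 1e-4:
        passage = theta ** 2 / sigma2          # driftless limit
    elif x < -700.0:
        return 0.0                             # threshold practically unreachable
    else:
        passage = sigma2 / (2.0 * mu ** 2) * (math.expm1(-x) + x)
    return 1.0 / (tau_arp + passage)


def input_moments(nu, n=1000, conn=0.01, j_rec=0.15,
                  nu_ext=1500.0, j_ext=0.0461, beta=73.0):
    """Hypothetical mapping from the presynaptic rate nu to (mu, sigma2).

    The constant leakage beta is absorbed into the mean drift.
    """
    mu = n * conn * j_rec * nu + j_ext * nu_ext - beta
    sigma2 = n * conn * j_rec ** 2 * nu + j_ext ** 2 * nu_ext
    return mu, sigma2


def phi(nu):
    """Gain function expressed in terms of the input rate, Phi(nu_in)."""
    return gain_function(*input_moments(nu))
```

Under these assumptions, `phi(0.0)` gives the rate sustained by the external input alone, and scanning `phi` over a range of input rates traces a sigmoid-like curve of the kind sketched in Fig. 1a.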

Fig. 1.

Mean-field theory of attractor network dynamics. a Input–output gain functions Φ(ν) for different levels of excitability. Neurons in a network respond to the input pre-synaptic firing νin with an output firing νout = Φ(νin), which is higher for excited cells (red) than for dampened ones (blue). In all panels, circles are “fixed points” of the dynamics, where the output firing is the same as the input one: νin = νout. b Restoring forces result from the “distance” between the output and input firing: Φ(νin) − νin. The larger this distance, the faster the firing rate of the network changes. Sigmoid-shaped curves are forces for different levels of excitability: low (blue, as in Panel A), intermediate (green) and high (red, as in Panel A). A null force marks a fixed point of the dynamics (no motion). The integral of the force is a kind of “work” the network can spend: the “energies” sketched on top of the panel. Networks tend to reach a minimum of the energy, a stable fixed point. c Probability distributions of the network firing rates assuming intrinsic fluctuations of the dynamics, directly computed from the energy landscapes in Panel B. A distribution peak corresponds to an energy valley, because the dynamics is “attracted” to such a preferred state. d and e Same as Panels B and C for an intermediate level of excitability, which makes the network bistable (two preferred states corresponding to the energy wells)

Starting from the response properties in Φ(ν), the time evolution of the network activity ν(t) can be roughly obtained as a sequence of discrete steps. At each step, Φ(ν(t)) provides the discharge rate ν(t+1) of the neuronal pool at the next step, which is fed back as input, closing the loop and yielding the mean-field dynamic equation of the network. For reference see (Amit 1989) and (Renart et al. 2003).
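The discrete-step picture can be sketched in a few lines. The logistic `phi` below and its parameters are hypothetical stand-ins for the gain function, chosen only to make the map bistable; they are not the Φ used in this work.

```python
import math


def phi(nu_in, nu_max=50.0, nu_half=25.0, width=4.0):
    """Hypothetical sigmoidal gain function (Hz in, Hz out)."""
    return nu_max / (1.0 + math.exp(-(nu_in - nu_half) / width))


def iterate_mean_field(nu0, n_steps=200):
    """Iterate nu(t+1) = phi(nu(t)) and return the whole trajectory."""
    trajectory = [nu0]
    for _ in range(n_steps):
        trajectory.append(phi(trajectory[-1]))
    return trajectory


low = iterate_mean_field(10.0)    # starts below the unstable fixed point
high = iterate_mean_field(30.0)   # starts above the unstable fixed point
```

With these illustrative parameters the two trajectories settle on different self-consistent rates, one close to silence and one close to the saturation rate, depending only on the starting activity.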

Energy landscape metaphor and firing rate distribution

The difference between the output firing νout = Φ(νin) and the input νin determines the “force” driving the activity ν(t) of the network: a positive Φ(ν)−ν brings the neurons of the network to fire at higher rates. Examples of force profiles from the gain functions in Fig. 1a are shown in Fig. 1b: a steeper Φ(ν) (red curves) from a more excitable network induces more positive driving forces compared to a dampened system (blue curves), where Φ(ν)−ν is mainly negative. If the input activity equals the output one (Φ(ν) = ν), the force is null and the network is stuck in a “fixed point” (circles in Fig. 1). These are special states of the system where the firing rate self-consistently reproduces itself. If forces near such states are “attractive” (curves with negative slopes in Fig. 1b), they are preferred activity levels of the network called attractor states.
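A minimal numerical sketch of this criterion follows: fixed points are located as sign changes of the force Φ(ν) − ν on a grid, and a fixed point is labelled stable when the force decreases through zero. The logistic `phi` and its parameters are hypothetical and serve only as an example of a bistable gain function.

```python
import numpy as np


def phi(nu, nu_max=50.0, nu_half=25.0, width=4.0):
    """Hypothetical sigmoidal gain function (Hz in, Hz out)."""
    return nu_max / (1.0 + np.exp(-(nu - nu_half) / width))


def fixed_points(nu_grid):
    """Return (rate, is_stable) pairs where the force phi(nu) - nu changes sign."""
    force = phi(nu_grid) - nu_grid
    found = []
    for i in range(len(nu_grid) - 1):
        f0, f1 = force[i], force[i + 1]
        crosses = (f0 > 0.0 >= f1) or (f0 < 0.0 <= f1)
        if crosses:
            # Linear interpolation of the zero crossing inside the grid cell.
            nu_star = nu_grid[i] - f0 * (nu_grid[i + 1] - nu_grid[i]) / (f1 - f0)
            # Negative slope of the force means the state is attractive.
            found.append((float(nu_star), bool(f1 < f0)))
    return found


points = fixed_points(np.linspace(0.0, 60.0, 6001))
```

For this example `points` contains three entries: a stable low-rate state, an unstable intermediate state and a stable high-rate state.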

From the concept of force, we can introduce the “work” a network could spend and then the available energy, given by the integral $E(\nu) = -\int^{\nu} [\Phi(\nu') - \nu']\,d\nu'$. The resulting energy landscape, shown on top of Fig. 1b for the above gain functions, can help in recognizing the attractor states of the network as the bottoms of the wells, which the network activity tends to reach. Actually, networks are composed of a finite number of nervous cells, and on very short time scales the whole discharge rate is a fluctuating variable depending on the Poissonian counting statistics of emitted spikes (Brunel and Hakim 1999; Spiridon and Gerstner 1999; Mattia and Del Giudice 2002). Embodying such intrinsic fluctuations in the mean-field description, the energy landscape allows us to work out the distribution of firing rates under stationary conditions, analogous to the Gibbs distribution (Risken 1989): $p(\nu) \propto \exp[-E(\nu)/\kappa T]$, where κT is the parameter related to the fluctuation size of ν(t), inversely proportional to the number of neurons in the network. Figure 1c shows the three distributions for the example networks in Fig. 1a, b.

The energy landscape can have more complex shapes, as in Fig. 1d, where the intermediate excitability of the neurons yields three fixed points (circles): the two corresponding to the valleys of E(ν) are attractor states, while the third one, at the top of the central hill, is an unstable fixed point. The activity distribution in Fig. 1e clearly shows the existence of two preferred states (two separated peaks) at high and low ν, analogously to what has been observed during Up/Down slow oscillations (see Fig. 3c).
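The link between force, energy and stationary distribution can be sketched numerically, assuming that the energy is minus the integral of the force Φ(ν) − ν and that the stationary density is proportional to exp(−E/κT). The logistic `phi`, its parameters and the value of `kT` are hypothetical choices made only to obtain two symmetric wells; they are not fitted to the networks of Fig. 1.

```python
import numpy as np


def phi(nu, nu_max=50.0, nu_half=25.0, width=4.0):
    """Hypothetical sigmoidal gain function (Hz in, Hz out)."""
    return nu_max / (1.0 + np.exp(-(nu - nu_half) / width))


def energy_and_density(nu_grid, kT=30.0):
    """Energy landscape E(nu) and Gibbs-like density on a uniform rate grid."""
    force = phi(nu_grid) - nu_grid
    d_nu = nu_grid[1] - nu_grid[0]
    # Trapezoidal cumulative integral of the force, with E = 0 at the first grid point.
    steps = 0.5 * (force[1:] + force[:-1]) * d_nu
    energy = -np.concatenate(([0.0], np.cumsum(steps)))
    weights = np.exp(-(energy - energy.min()) / kT)
    density = weights / (weights.sum() * d_nu)   # normalised to unit area
    return energy, density


nu = np.linspace(0.0, 50.0, 5001)
energy, density = energy_and_density(nu)
```

In this example the energy has two wells, near the lowest and highest rates, separated by a hill at ν = 25 Hz, and the density is correspondingly bimodal. Lowering `kT`, which mimics a larger network, sharpens the two peaks.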

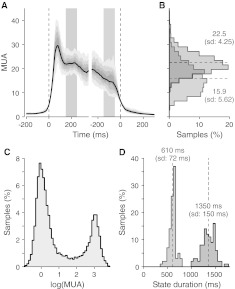

Fig. 3.

Time dependent in vitro MUA variability during Up states behaves as predicted by the attractor framework. a MUA centred around Up state onset (left) and Up-to-Down transition (right) in the same in vitro recording shown in Fig. 2. Solid black line, average MUA. Gray shaded regions depict the percentiles from 10 to 90 of the time-varying MUA distribution around both Up state onset (left dashed vertical line) and downward transitions (right dashed vertical line). b Histogram of MUAs in the intervals depicted in Panel A at the beginning (red vertical strip) and the end (green strip) of the Up state. Dashed lines, average MUA in the two periods (right labels, numeric values of the mean and the SD). c Histogram of log(MUA) showing the existence of two preferred states (Up and Down at high and low MUAs, respectively). d Histograms of Up (red) and Down (blue) state durations observed in this recording. Dashed lines, mean durations

In vitro experiments and data analysis

Results from the experiments reported here have been previously published in (Sanchez-Vives et al. 2010; Reig et al. 2010), where a detailed description of experimental methods and data analysis is given. Briefly, extracellular recordings were obtained by means of tungsten electrodes from 400 μm thick cortical slices from adult ferrets. Slices were placed in an interface-style recording chamber and bathed in ACSF containing (in mM): NaCl, 124; KCl, 3.5; MgSO4, 1; NaHPO4, 1.25; CaCl2, 1.2; NaHCO3, 26; and dextrose, 10. The ACSF was aerated with 95% O2, 5% CO2 to a final pH of 7.4. The bath temperature was maintained at 34–36°C. Under these conditions, slow rhythmic activity appeared in the slice (Sanchez-Vives and McCormick 2000). Here we used only the control conditions from both studies (Sanchez-Vives et al. 2010; Reig et al. 2010), although further pharmacological and bath temperature manipulations were performed afterwards.

We introduced a multi-unit activity (MUA) estimate inspired by the evidence that power spectra of the population firing rate have asymptotic values at very high frequency proportional to the firing rate itself (Mattia and Del Giudice 2002). If a linear transform occurs from spikes to local field potentials (LFP) at high Fourier frequencies, LFP power spectra ratios should provide a reliable estimate of population firing rate changes. MUAs were then estimated as the average power of the relative LFP spectra in the frequency band 0.2–1.5 kHz, sampled every 5 ms. The baseline spectrum was the one corresponding to the average Down state level. Log(MUA) scaling was used to balance the large fluctuations due to nearby spikes. Log(MUA) was furthermore smoothed with a moving window of 80 ms.
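A minimal sketch of such an estimate is given below, using non-overlapping 5 ms windows and a plain FFT. The function name, the windowing scheme and in particular the choice of baseline (the mean spectrum of the quietest 20% of windows, used here as a stand-in for the average Down state spectrum) are assumptions of this sketch, not the published analysis pipeline.

```python
import numpy as np


def estimate_log_mua(lfp, fs, band=(200.0, 1500.0), step_s=0.005,
                     smooth_s=0.080, baseline_fraction=0.2):
    """Smoothed log(MUA), one sample per window of duration step_s."""
    win = int(round(step_s * fs))                 # samples per window
    n_win = len(lfp) // win
    segments = np.asarray(lfp[:n_win * win], dtype=float).reshape(n_win, win)

    # Power spectrum of each window, restricted to the high-frequency band.
    power = np.abs(np.fft.rfft(segments, axis=1)) ** 2
    freqs = np.fft.rfftfreq(win, d=1.0 / fs)
    in_band = (freqs >= band[0]) & (freqs <= band[1])
    band_power = power[:, in_band]

    # Assumed baseline: mean spectrum of the quietest windows.
    total = band_power.mean(axis=1)
    quiet = total <= np.quantile(total, baseline_fraction)
    baseline = band_power[quiet].mean(axis=0)

    # MUA is the band average of the relative spectrum, then log and smoothing.
    log_mua = np.log((band_power / baseline).mean(axis=1))
    n_smooth = max(1, int(round(smooth_s / step_s)))
    kernel = np.ones(n_smooth) / n_smooth
    return np.convolve(log_mua, kernel, mode="same")
```

On a synthetic trace made of white noise whose amplitude is five times larger during a putative Up state, the returned log(MUA) is higher in the Up interval by roughly the logarithm of the power ratio.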

The time of occurrence of an upward or downward transition was estimated as the crossing time of a threshold MUA value taken to be 60% of the distance between the two peaks in the log(MUA) histogram corresponding to Up and Down states. Figure 2a shows a raster plot of the time course of MUA extracted from an example recording around the detected Down-to-Up transitions (left) and Up-to-Down transitions (right). The colour code represents MUA in logarithmic scale, from low (blue) to high (red) firing rates, taking the activity during Down states as reference. In matching theory, simulations and experiments we considered the population firing rate ν and the MUA as the same variable.
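The threshold-crossing rule can be sketched as follows. Two simplifications are assumptions of this sketch: the Down and Up peaks are taken as the most populated histogram bins in the lower and upper half of the log(MUA) range, and the 60% is measured starting from the Down peak.

```python
import numpy as np


def detect_transitions(log_mua, dt=0.005, fraction=0.6, n_bins=50):
    """Return (threshold, Down-to-Up times, Up-to-Down times) in seconds."""
    counts, edges = np.histogram(log_mua, bins=n_bins)
    centers = 0.5 * (edges[:-1] + edges[1:])
    half = n_bins // 2
    down_peak = centers[np.argmax(counts[:half])]
    up_peak = centers[half + np.argmax(counts[half:])]
    threshold = down_peak + fraction * (up_peak - down_peak)

    # A transition is a change of side with respect to the threshold.
    above = np.asarray(log_mua) > threshold
    change = np.diff(above.astype(int))
    upward = (np.where(change == 1)[0] + 1) * dt
    downward = (np.where(change == -1)[0] + 1) * dt
    return threshold, upward, downward
```

When the trace starts in a Down state and ends after a complete cycle, Up state durations follow as `downward - upward`, and Down state durations as `upward[1:] - downward[:-1]`.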

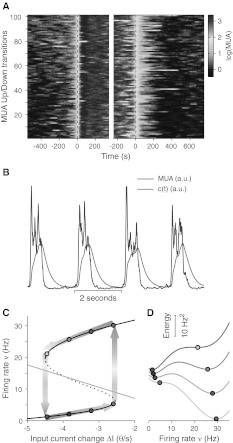

Fig. 2.

Activity-dependent self-inhibition and attractor dynamics yield a “relaxation” oscillator. a Rasterplot of MUA (multi-unit activity) centred around upward (Down-to-Up, left from blue to red) and downward (Up-to-Down, right from red to blue) transitions, from an in vitro experiment with ferret brain slices. b A sample period of MUA time series (blue) and the fatigue level c(t) (red), proportional to the activity-dependent self-inhibition and computed from experimentally accessible MUAs (see text for details). c Black (solid and dotted) curve depicts the firing rates at the fixed points of the attractor dynamics as a function of the changes ΔI in the incoming current to the neurons. Solid and dotted branches correspond to stable or unstable fixed points (valleys or saddles of the energy landscape), respectively. Gray thick line provides the amount of self-inhibition proportional to c(t) expected for a given output ν under stationary conditions. Stable fixed points at four sample ΔIs are shown as circles coloured adopting the code used for MUAs in Panel A. Background coloured thick arrows schematically illustrate the Up/Down cycle (see text for details). d Energy landscapes for the same sampled ΔIs as in C. Darker gray curves are for networks dampened by more negative ΔIs

Activity-dependent fatigue mechanism for Up/Down slow oscillations

The nonlinear dynamics of the network activity cannot by itself describe the full range of experimental evidence collected on Up/Down slow oscillations. Even when a bistable regime of activity like that shown in Fig. 1d, e is obtained, the residence times in one of the two preferred states (Up or Down) have approximately an exponential distribution (Gigante et al. 2007; Martí et al. 2008; Mejias et al. 2010), in analogy to the problem of diffusion over a barrier (Risken 1989). On the other hand, slow oscillations in vitro are quite regular, with a relatively low coefficient of variation (Sanchez-Vives and McCormick 2000; Sanchez-Vives et al. 2008; Sanchez-Vives et al. 2010; Reig et al. 2010), as shown in Fig. 3d for an example recording. An effective theoretical description has to include an activity-dependent modulation of the attractor dynamics. Indeed, if a mechanism of self-inhibition is embodied through an additional hyperpolarizing current or a short-term depression of the excitatory synaptic transmission, the stability of Up and Down states may change with time, introducing a time correlation and hence a regularity of the permanence times (Latham et al. 2000; van Vreeswijk and Hansel 2001; Compte et al. 2003; Giugliano et al. 2004; Holcman and Tsodyks 2006; Gigante et al. 2007; Curto et al. 2009; Ngo et al. 2010). Here we included a self-inhibition proportional to a fatigue level c(t), modelled as an accumulation process with leakage driven by the firing rate ν(t), mimicking the dynamics of the extracellular ionic concentrations which drive the activity-dependent hyperpolarizing potassium current: $dc/dt = -c/\tau + \nu(t)$, where τ is a characteristic decay time. A numerical reconstruction of c(t) from an experimentally estimated MUA is shown in Fig. 2b (an arbitrary τ = 250 ms has been used). High c levels result at the end of Up states, and may be responsible for the spike-frequency adaptation phenomenon, a monotonic decrease of firing rate with time. After Up-to-Down transitions, the absence of spiking activity allows a recovery stage during the Down states.
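A reconstruction of the fatigue level from a sampled rate can be sketched as below, assuming the leaky accumulation dc/dt = −c/τ + ν(t), which is consistent with the stationary level c = τν used for the nullcline in the phase-plane description. The function name and the treatment of ν as piecewise constant within each 5 ms sample are assumptions of this sketch.

```python
import numpy as np


def reconstruct_fatigue(nu, dt=0.005, tau=0.25, c0=0.0):
    """Integrate dc/dt = -c/tau + nu(t) for a rate sampled every dt seconds.

    The update is exact when nu is constant within each sampling interval.
    Returns an array with len(nu) + 1 entries, starting from c0.
    """
    decay = np.exp(-dt / tau)
    c = np.empty(len(nu) + 1)
    c[0] = c0
    for k, rate in enumerate(nu):
        c[k + 1] = c[k] * decay + rate * tau * (1.0 - decay)
    return c


# A 10 s long plateau at 20 Hz followed by 250 ms of silence.
rate = np.concatenate((np.full(2000, 20.0), np.zeros(50)))
fatigue = reconstruct_fatigue(rate)
```

During the plateau the fatigue saturates at τν = 5, and after one decay time of silence it has dropped by a factor e, mirroring the accumulation and recovery stages described above.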

The above mean-field theory can be extended to faithfully describe the network dynamics of IF neurons with spike frequency adaptation (Gigante et al. 2007). A good approximation is to consider Φ as a function of a mean input current I(t) in which both the synaptic feedback due to the local activity ν(t) and the self-inhibition modulated by the fatigue level c(t) are taken into account:

$$I(t) = C_{rec}\,\nu(t) - g\,c(t) + I_{ext}$$

where Crec is the synaptic weight, proportional to the average number of local synapses multiplied by the effectiveness of the synaptic transmission; g is the coupling strength between the hyperpolarizing ionic current and the fatigue level; and Iext is the additional synaptic and ionotropic current due both to the firing activity of the neurons outside the monitored local network and to the ionic flux determining the excitability of the neurons. Setting I0 as the background current in absence of adaptation (c = 0), it is convenient to write $I = I_0 + \Delta I$, where ΔI is the change of a generic input current, used in this work as an alternative way to govern the neuron responsiveness.

The interplay between the nonlinear dynamics of ν(t) and the self-inhibition modulated by c(t) may yield a “relaxation oscillator” behaviour that fits the experimental evidence (Latham et al. 2000; Compte et al. 2003; Giugliano et al. 2004). The relaxation oscillations are orbits (closed trajectories) in a phase plane like that in Fig. 2c. The black curve marks the fixed points Φ(c,ν) = ν found at different c: solid and dotted branches are for stable and unstable fixed points, respectively. As x-axis we used the input current change due to the fatigue level: ΔI = −gc. Under stationary conditions and for a given ν, the asymptotic (dc/dt = 0) fatigue level is c = τν: the gray straight line illustrates this linear “nullcline”, ν = −ΔI/(gτ). For relatively slow c(t), with τ of several hundreds of milliseconds or more, the firing rate approaches the closest stable fixed point available. In the phase plane, the system will then move along the solid branches of the black curve, trying to relax by minimizing the distance from the gray nullcline, as sketched by the coloured thick arrows. The relaxation dynamics on the top and bottom branches correspond, respectively, to the accumulation stage during the Up state and the recovery stage during the Down state in Fig. 2b. Seen from a different perspective, the relaxation oscillator dynamics results from the reshaping of the energy landscape at different fatigue levels (see Fig. 2d). From this it is clear that Up and Down states terminate because the corresponding well disappears and the system moves to the other, strengthened attractor.
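The relaxation cycle can be reproduced with a two-variable rate model. The sketch assumes a fast relaxation of ν towards Φ(I) with a hypothetical time constant `tau_nu`, a logistic Φ of the current, an input current of the form I = Crec ν − g c + Iext, and a fatigue obeying dc/dt = −c/τ + ν; all parameter values are in arbitrary units and were chosen only so that the single intersection of the two nullclines falls on the unstable branch.

```python
import math


def phi(current, nu_max=50.0, width=4.0):
    """Hypothetical sigmoidal gain function of the input current."""
    return nu_max / (1.0 + math.exp(-current / width))


def simulate(t_end=10.0, dt=0.001, c_rec=1.0, g=2.0, i_ext=0.0,
             tau_c=0.5, tau_nu=0.01, nu0=1.0, c0=0.0):
    """Forward-Euler integration of the rate nu(t) and the fatigue c(t)."""
    nu, c = nu0, c0
    nus, cs = [nu], [c]
    for _ in range(int(round(t_end / dt))):
        current = c_rec * nu - g * c + i_ext
        d_nu = (phi(current) - nu) / tau_nu   # fast attractor dynamics
        d_c = -c / tau_c + nu                 # slow fatigue accumulation
        nu += dt * d_nu
        c += dt * d_c
        nus.append(nu)
        cs.append(c)
    return nus, cs


nus, cs = simulate()
```

With these values the rate alternates between a high and an almost silent state about once per second: the fatigue grows during the Up state until the upper branch disappears, and recovers during the Down state until the lower branch disappears. Lowering `g` far enough moves the intersection onto the upper stable branch, and the oscillation gives way to a persistent high-rate state.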

In silico experiments: simulation details

We investigated the dynamical properties of the Up/Down slow oscillations and the distribution of residence times in the high and low ν states, adopting a network model of simplified IF neurons with spike frequency adaptation as in (Fusi and Mattia 1999; Gigante et al. 2007). Briefly, we simulated networks of N = 1,000 excitatory IF neurons whose membrane potential dynamics is $dV/dt = -\beta + I_{syn}(t) + I_{AHP}(t)$, where Isyn(t) is the input synaptic current and IAHP(t) is the self-inhibition, modulated by the fatigue mechanism, which hyperpolarizes V. The unit of potential is the voltage gap θ between the spike emission threshold and the resting potential reached when no spikes are received. V(t) cannot be negative, and the model includes a reflecting barrier at V = 0 θ. The refractory period after a spike emission is 10 ms. The constant leakage is β = 73 θ/s. Every time a spike is emitted the local fatigue level c(t) of a single neuron is increased by 1, while during the inter-spike intervals it decays exponentially with a time constant τ = 500 ms, if not otherwise specified. Self-inhibition is $I_{AHP}(t) = -g\,c(t)$, with g = 1.0 θ/s. Synaptic transmission is instantaneous, and local spikes are transmitted with a delay randomly chosen from an exponential distribution with decay constant of 3.3 ms. Connectivity is sparse and the probability that two neurons are connected is 0.01. The depolarization induced by a local recurrent spike is Jrec = 0.15 θ. Besides, spikes from neurons outside the local network are modelled as Poissonian spike trains at frequency νext = 1,500 Hz, coupled with a synaptic efficacy Jext = 0.0461 θ. Hence, the average recurrent coupling is Crec = 0.01 N Jrec, and in what follows all the modulations of this parameter are expressed as a percentage of this value. An event-based approach described in (Mattia and Del Giudice 2000) has been used to numerically integrate the network dynamics.
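To give a flavour of an event-driven integration, the sketch below follows a single adapting neuron driven only by the external Poissonian input, updating its state at each incoming spike. It is not the network simulator of (Mattia and Del Giudice 2000): recurrent connections and transmission delays are omitted, and the membrane equation dV/dt = −β − g c(t) between input spikes, the reset to V = 0 and the discarding of inputs during refractoriness are assumptions of this sketch.

```python
import math
import random


def simulate_adapting_neuron(t_end=50.0, nu_ext=1500.0, j_ext=0.0461,
                             beta=73.0, g=1.0, tau_c=0.5, t_ref=0.010, seed=0):
    """Return the spike times (s) of one IF neuron; potentials in units of theta."""
    rng = random.Random(seed)
    t, v, c = 0.0, 0.0, 0.0
    ref_until = 0.0
    spike_times = []
    while True:
        t_next = t + rng.expovariate(nu_ext)      # next external input spike
        if t_next > t_end:
            break
        fired = False
        start = max(t, ref_until)                 # V evolves only outside refractoriness
        if t_next > start:
            c_start = c * math.exp(-(start - t) / tau_c)
            span = t_next - start
            # Integral of beta + g*c(t) over the free interval.
            drop = beta * span + g * c_start * tau_c * (1.0 - math.exp(-span / tau_c))
            v = max(0.0, v - drop)                # reflecting barrier at V = 0
            v += j_ext                            # instantaneous synaptic jump
            if v >= 1.0:                          # threshold at V = theta
                fired = True
                spike_times.append(t_next)
                v = 0.0
                ref_until = t_next + t_ref
        c *= math.exp(-(t_next - t) / tau_c)      # fatigue decay between events
        if fired:
            c += 1.0                              # fatigue increment per emitted spike
        t = t_next
    return spike_times


spikes = simulate_adapting_neuron()
```

Because the external drive Jext νext alone is slightly weaker than the leakage β, the isolated neuron fires only occasionally, driven by input fluctuations; in the full network the recurrent excitation adds to this drive.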

Results

Evidence of attractor dynamics during slow Up/Down oscillations

Attractor dynamics has been previously reported as an emergent network property explaining the occurrence of Up states during spontaneous slow oscillations in vitro (Cossart et al. 2003; Shu et al. 2003). Stereotyped activity patterns sharing a conserved temporal structure have also been observed in vivo during spontaneous and sensory evoked Up states, which is suggestive of an attractor mechanism at work (Luczak et al. 2009). Indeed, a first clue of attractor dynamics is the existence of preferred states of the spiking activity, as predicted by the theoretical mean-field framework summarized in the Methods section. By preferred states we mean that, although MUAs widely fluctuate in time, the firing rate is “attracted” to a specific discharge frequency: an almost quiescent Down state at the beginning and the end of the cycles in Fig. 2a from an example recording in vitro, and a high firing Up state between the two transition times. Such preferred states are particularly evident in the bimodal distribution of MUA over time in logarithmic scale in Fig. 3c, usually observed in Up/Down slow oscillation experiments (Sanchez-Vives et al. 2008; Sanchez-Vives et al. 2010; Reig et al. 2010).

However, the narrow distributions of Up and Down state durations, like those shown in Fig. 3d for the example in vitro recording, cannot be explained as resulting only from the bistability of the cortical networks, for which an almost exponential distribution is predicted (see Methods for details). Local synaptic reverberation has to interplay with an activity-dependent fatigue mechanism in order to embody a time correlation which induces state transitions as in a relaxation oscillator (Latham et al. 2000; Compte et al. 2003; Giugliano et al. 2004; Gigante et al. 2007; Curto et al. 2009; Ngo et al. 2010). This additional mechanism can explain the spike frequency adaptation (SFA) observable at the single cycle level in Fig. 2a, where a tiredness and a recovery stage bring MUA from dark red to orange/yellow during Up states and from blue to cyan in the Down states, respectively. In Fig. 3a, the average MUA across Up/Down cycles makes SFA more apparent during the high firing state between the two transitions marked by dashed lines.

According to the mean-field theory, the slow decrease of MUA signals a relaxation due to an increased activity-dependent self-inhibition, which reduces the stability of the high firing attractor, as in Fig. 2c, d. If this is the case, the restoring force that makes the high frequency neuronal activity attractive through synaptic reverberation becomes weaker when the fatigue increases, such that MUA fluctuations around the preferred state become larger. This corresponds to a widening of the firing rate ν distribution like that shown in Fig. 1b, c when the excitability of the model network is reduced from red to green. Such expectation is confirmed in Fig. 3b, where the distribution of experimentally recorded MUAs across cycles in a 100 ms interval after Up state onset (red strip in Fig. 3a) is narrower than the one obtained from the MUAs preceding the Up state offset (green strip). Such changes in fluctuations are super-Poissonian (variance increases while mean decreases) and cannot be simply attributed to the intrinsic variability of the spike counting process. Interestingly, the increasing inter-cycle variability of single-electrode MUAs during Up states is another way to describe the in vivo observed patterns of spike sequences showing a temporal precision which decays as the high firing state progresses (Luczak et al. 2009).

Relaxation oscillators should leave yet another footprint: a fast change in the time derivative of the firing rate ν(t) just before the detection time of a state transition. This behaviour is expected because transitions are the consequence of the stability loss driven by the adaptation slow dynamics, as shown in Fig. 2c, d. Under these conditions the system is driven to move on the steep descent to the only valley available corresponding to the other state. Network activity would next have to start a chain reaction elicited by recurrent self-excitation or a sudden drop to the silent state due to the strong self-inhibition. In Fig. 3a such almost discontinuous changes in the average MUA time course are clearly apparent looking within an interval of 50 ms preceding the two transitions.

Excitability modulation and related change of the network dynamics

The network dynamics of the relaxation oscillator behind the Up/Down oscillations can be widely modulated by shaping the neuronal response properties. The input/output gain function Φ(c,ν) returns the output firing rates of the cortical network when neurons have a fatigue c and receive presynaptic spikes at rate ν. Excitability of neuronal populations is mainly governed by the slope of Φ, the amplification gain, i.e. the steeper is Φ the more excitable the network is. Figure 4 displays how network parameters affect both the shape of Φ and the coupling strength between the single-neuron fatigue mechanism and the neuronal firing. Phase planes in Fig. 4a, c are the same as Fig. 2c: S-shaped curves are where the fixed-point of the dynamics can be found for a given c (ν-nullcline: dν/dt = 0), while gray lines show the change in the input current due to the adaptation level c reached when neurons fire at constant rate ν (c-nullcline: dc/dt = 0, see Methods for details).

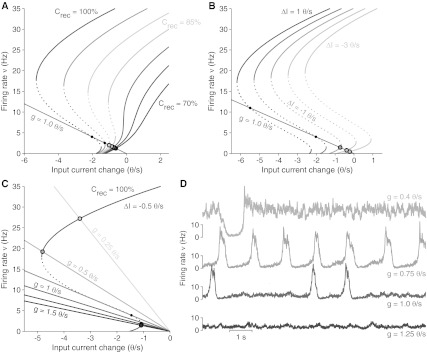

Fig. 4.

Modulation of the excitability of neuronal networks. a Shape change of the fixed-point curve (see Fig. 2c) by varying the strength of the recurrent synaptic coupling Crec, i.e. the self-excitation degree. At fixed self-inhibition feedback g and without any additional current ΔI, the network has stable stationary states at low firing rate (open coloured circles). Intersections marked by black dots are unstable fixed points, and Up/Down oscillations are expected. Larger Crec (red) are those with higher ν. b Fixed-point curves changing the incoming current ΔI. Crec = 100% and g = 1 θ/s. c Fixed-point curves at different self-inhibition feedback g. Crec = 100% and ΔI = 0 θ/s. Larger gs are those inducing a larger range of input current change (flatter gray lines). Smaller gs make the network more excitable such that states at high ν are preferred. d Spontaneous activity in simulated network of 1,000 excitatory IF neurons for Crec = 100%, ΔI = 0 θ/s changing g. From larger self-inhibition feedback (darker, bottom) to smaller gs (lighter, top) the network shifts from an asynchronous low firing state to the random occurrence of short Up states, to a periodic Up/Down oscillation, finally showing a persistent asynchronous high firing state only occasionally interleaved by short Down states

Increasing the average strength of the recurrent excitatory synaptic feedback Crec reshapes Φ such that the available fixed points of the dynamics are shifted to higher firing rates. For Crec > 80%, the ν-nullcline is divided into three branches because, for a subset of c values, the network is expected to be bistable with three fixed points as in Fig. 1d, e: the unstable ones are marked by the dotted branch. Intersections between c- and ν-nullclines are stable (coloured circles) or unstable (black dots) fixed points of the whole c-ν mean-field dynamics. Where stable intersections occur, trajectories in the phase plane are attracted as converging spirals. On the other hand, unstable intersections between gray lines and dotted branches give rise to global oscillations (limit cycles) of the network activity: the Up/Down slow oscillations.
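The distinction between stable and unstable intersections can be made explicit with a short linearisation. The block below is only a sketch for a generic two-variable rate model assumed here for illustration: the relaxation time τ_ν of the rate and the normalised form of the equation for c are assumptions, and need not coincide with the equations given in Methods.

```latex
\begin{aligned}
\tau_\nu\,\dot{\nu} &= \Phi(c,\nu) - \nu, \qquad \tau\,\dot{c} = -c + \nu, \\[4pt]
J &= \begin{pmatrix}
(\partial_\nu\Phi - 1)/\tau_\nu & \partial_c\Phi/\tau_\nu \\
1/\tau & -1/\tau
\end{pmatrix}, \\[4pt]
\operatorname{tr} J &= \frac{\partial_\nu\Phi - 1}{\tau_\nu} - \frac{1}{\tau}, \qquad
\det J = \frac{1 - \partial_\nu\Phi - \partial_c\Phi}{\tau_\nu\,\tau}.
\end{aligned}
```

In this sketch a fixed point is stable when tr J < 0 and det J > 0. Because fatigue lowers the output rate (∂_cΦ < 0), an intersection with det J > 0 loses stability once ∂_νΦ > 1 + τ_ν/τ, which for τ_ν ≪ τ is essentially the condition that the gain slope exceeds one, as happens on the dotted branch of the ν-nullcline.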

Another way to make the cortical network more or less excitable is to change the amount of incoming synaptic current, either through the spiking activity of neurons outside the local network or by pharmacologically modulating the permeability of ionic channels on the cell membranes. As shown in Fig. 4b, such input current changes ΔI shift the ν-nullcline almost rigidly, shaping the gain function Φ in an additive way, as expected. Finally, instead of increasing the excitatory input and/or the strength of self-excitation, the excitability of the system can be enhanced by reducing the self-inhibition due to the activity-dependent adaptation mechanism. In Fig. 4c, changes in the self-inhibition coupling term g are directly related to the slope of the gray c-nullcline: the smaller the feedback g, the steeper the nullcline, such that stable intersections with the high-ν stable branch of the ν-nullcline may occur (see for instance g = 0.25 θ/s).

Each of the parameters Crec, ΔI and g affects the dynamics of the cortical network in its own way. Nevertheless, all of them are capable of inducing phase transitions that drive the system from a stable asynchronous firing regime to Up/Down oscillations. Besides, such parameters may strongly affect the timescales of the dynamics without any change in the time constants determining the microscopic dynamics of the single neurons, like the decay time τ for c(t). In Fig. 4d we show the firing activity ν(t) sampled from four different simulations of simplified IF neuron networks with the same parameters as in Fig. 4c. Quantitative predictions of the dynamic phase and of the expected ν are faithfully reproduced by the in silico experiments. In particular, decreasing g drives the population firing from a low-ν asynchronous state (bottom, dark gray), to irregular and regular Up/Down oscillations (centre, two intermediate grays), and eventually to an almost stable high-ν asynchronous state (top, light gray). Interestingly, in the intermediate cases, changing g from 0.75 to 1.0 θ/s widens the Down states to durations much longer than the largest microscopic timescale available, which in these examples is τ = 500 ms.
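The sequence of regimes described above can be reproduced qualitatively with a two-variable rate model. The sketch below is not the IF network simulation used in this work: it assumes a logistic gain function, a rate ν normalised to [0, 1], and hypothetical values of `w` (standing in for Crec), `dI` and `g`, chosen only so that the three deterministic regimes appear. Noise-driven irregular Up states are outside its scope.

```python
import numpy as np


def phi(x):
    """Logistic gain function (an assumed stand-in for the network gain)."""
    return 1.0 / (1.0 + np.exp(-x))


def simulate(g, w=8.0, dI=-1.0, tau_nu=0.010, tau_c=0.500, T=10.0, dt=0.001):
    """Euler integration of the toy model
        tau_nu * dnu/dt = phi(w*nu - g*c + dI) - nu
        tau_c  * dc/dt  = nu - c
    All parameter values are illustrative assumptions (times in seconds)."""
    n = int(round(T / dt))
    nu = np.empty(n)
    c = np.empty(n)
    nu[0], c[0] = 0.1, 0.1
    for k in range(n - 1):
        drive = w * nu[k] - g * c[k] + dI
        nu[k + 1] = nu[k] + dt * (phi(drive) - nu[k]) / tau_nu
        c[k + 1] = c[k] + dt * (nu[k] - c[k]) / tau_c
    return nu, c


def regime(nu):
    """Label the second half of a trace by its amplitude and mean rate."""
    tail = nu[len(nu) // 2:]
    if tail.max() - tail.min() > 0.3:
        return "Up/Down oscillation"
    return "low-rate state" if tail.mean() < 0.5 else "high-rate state"


if __name__ == "__main__":
    # Strong, intermediate and weak self-inhibition feedback.
    for g in (20.0, 6.0, 1.0):
        nu, _ = simulate(g)
        print(f"g = {g:4.1f}: {regime(nu)}")
```

In this toy setting the slow variable c has a fixed time constant of 500 ms, yet the regime and the cycle duration change with g alone, in line with the point that timescales can be modulated without touching the microscopic time constants.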

A spontaneously emerging wide spectrum of timescales

Hence, mean-field theory suggests qualitatively different ways to manipulate the excitability of cortical networks. Both an increase of the synaptic self-excitation Crec and a positive change ΔI of the external current lead the network to respond at higher firing rates, although they shape the gain function Φ differently. On the other hand, the loss of excitability obtained by augmenting the self-inhibition feedback g could, at least in principle, be compensated by an increase of Crec, although this is not guaranteed because the effects induced on the network dynamics may combine nonlinearly. In order to investigate this issue we simulated a large set of networks composed of 1,000 simplified IF neurons (see “Methods”), sampling randomly for each of them the parameters Crec, ΔI and g from a uniform distribution. We selected the networks with ν(t) alternating between Up and Down states during a time span of 200 s (n = 93), and discarded the others, which showed a stable asynchronous activity at low or high firing rate. The average state durations have the wide distribution shown in Fig. 5a. Each simulated network is a coloured circle, changing from blue for very long Down states, to white for relatively short Up/Down cycles, and finally to red for long Up state durations.
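Selecting networks that alternate between Up and Down states, and measuring their average state durations, requires some state-detection step. A minimal sketch is given below, assuming a simple fixed threshold on ν(t). The function name, the threshold and the rule of discarding the incomplete runs at both ends of the trace are illustrative assumptions, not the procedure described in Methods.

```python
import numpy as np


def mean_state_durations(nu, threshold, dt):
    """Return (mean Up duration, mean Down duration) in the units of dt,
    or None when the trace does not alternate between the two states.

    A sample is labelled Up when nu > threshold (assumed criterion).
    The first and last runs are dropped because their true length is unknown.
    """
    up = np.asarray(nu) > threshold
    # Indices at which a new run (Up or Down) begins.
    change = np.flatnonzero(np.diff(up.astype(int)) != 0) + 1
    if change.size < 2:
        return None
    starts = change[:-1]
    lengths = np.diff(change) * dt
    is_up = up[starts]
    if is_up.all() or not is_up.any():
        return None
    return lengths[is_up].mean(), lengths[~is_up].mean()


if __name__ == "__main__":
    # Synthetic square wave: 5 Down samples followed by 3 Up samples, repeated.
    trace = np.tile([0, 0, 0, 0, 0, 1, 1, 1], 4)
    print(mean_state_durations(trace, threshold=0.5, dt=0.1))
```

A trace that never crosses the threshold returns `None`, which plays the role of discarding networks with a stable asynchronous state.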

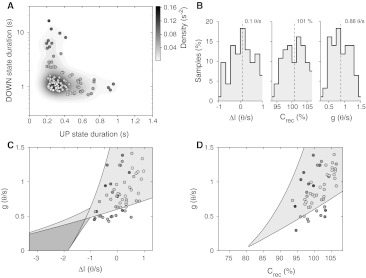

Fig. 5.

Anticorrelation of Up and Down state durations in simulated networks of IF neurons. a Average Up and Down state durations of n = 93 different simulated networks of 1,000 excitatory IF neurons with randomly chosen ΔI, Crec and g. Each circle is a different network and the colour is blue and red for long Down and long Up states, respectively. White filling is for relatively short state durations. Gray shaded regions represent the circle density in the plane smoothed by a convolution with a bivariate Gaussian kernel. b Histograms of the randomly chosen parameters: from left to right ΔI, Crec and g. Dashed lines, mean values. c Planar section of the bifurcation diagram at Crec = 100%. Coloured circles are from the subset of simulations shown in panel a with Crec in [96.75,103.25]%. Different coloured patches delimit regions with qualitatively different network dynamics: yellow, regime of Up and Down oscillations; orange, two simultaneously stable Up and Down states without oscillations; white, only one stable state at low (top left) and high (bottom right) firing frequencies; green and cyan, regions in which Up/Down oscillations coexist with one stable state at low and high firing rate, respectively. d Another section of the bifurcation diagram at ΔI = 0 θ/s. Circles, the subset of simulations shown in panel a with ΔI in [−0.5,0.5] θ/s. Patch colours as in panel c

The distribution has a non-trivial hyperbolic shape representing an apparent anticorrelation between Up and Down state durations, such that a cortical model network with long Up states will almost certainly have short Down states, and vice versa. This is a direct consequence of the rigid modelling of the function Φ, whose sigmoidal shape is not changed. Hence, if an intersection between the nullclines in Fig. 4 occurs close to the high-ν stable fixed-point branch, it will be far from the bottom stable branch of the nullcline, such that the recovery stage during the Down state will necessarily be fast. Another interesting feature of the distribution is its asymmetry: Down state durations extend to intervals of more than 10 s, while Up states are unlikely to last longer than one second. This is mainly due to the non-uniform distribution of the slope of the c-nullclines, which is proportional to 1/g and implies a denser sampling for large self-inhibition feedback g. Nullcline intersections are then more likely to fall close to the bottom stable branch of the ν-nullclines, as in Fig. 4c. Indeed, such asymmetry cannot be attributed to an asymmetric sampling of the parameters, since their distributions in Fig. 5b are bell-shaped and symmetric: the asymmetry is then the result of the generic nonlinearity of the network dynamics.

In Fig. 5c, d, we mapped the simulated networks onto two representative sections of the bifurcation diagram, computed as in Gigante et al. (2007) from the mean-field description of the activity dynamics (see Methods for details). The dynamical regimes available by changing Crec, ΔI and g span from simple asynchronous stable states (white regions) to more complex dynamics in which two asynchronous states coexist (orange area) or relaxation oscillations occur (yellow). Smaller areas in which global oscillations and an asynchronous state coexist are also visible (green and cyan triangles). The reliability of the mean-field description is shown by the almost complete overlap between the yellow corners and the positions of the circles representing the same model networks as in Fig. 5a. Their positioning close to the stability boundary of the relaxation oscillator regime explains why heterogeneous networks have such a widespread timescale distribution.
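How a section of such a diagram can be assembled from fixed points and their stability is sketched below for a toy rate model, τ_ν dν/dt = φ(Wν − gc + ΔI) − ν and τ_c dc/dt = ν − c with a logistic φ. This is an assumption made for illustration, not the mean-field computation of Gigante et al. (2007); the constants `W`, `TAU_NU`, `TAU_C` and all parameter values are hypothetical. The sketch does not detect the regions where oscillations coexist with a stable state, and it takes the absence of any stable fixed point as the signature of oscillations.

```python
import numpy as np

W, TAU_NU, TAU_C = 8.0, 0.010, 0.500  # hypothetical toy constants


def phi(x):
    """Logistic gain function (assumed)."""
    return 1.0 / (1.0 + np.exp(-x))


def fixed_points(dI, g, n_grid=4000):
    """Rates nu* with nu* = phi((W - g) * nu* + dI), using c* = nu*.
    Roots are bracketed by sign changes on a grid and refined by bisection."""
    def f(nu):
        return phi((W - g) * nu + dI) - nu

    grid = np.linspace(0.0, 1.0, n_grid)
    vals = f(grid)
    roots = []
    for a, b, fa, fb in zip(grid[:-1], grid[1:], vals[:-1], vals[1:]):
        if fa == 0.0:
            roots.append(a)
        elif fa * fb < 0.0:
            for _ in range(60):
                m = 0.5 * (a + b)
                fm = f(m)
                if fa * fm <= 0.0:
                    b = m
                else:
                    a, fa = m, fm
            roots.append(0.5 * (a + b))
    return roots


def is_stable(nu, g):
    """Linear stability from the Jacobian of the toy model at (nu, c = nu)."""
    slope = nu * (1.0 - nu)  # derivative of the logistic at the fixed point
    jac = np.array([[(W * slope - 1.0) / TAU_NU, -g * slope / TAU_NU],
                    [1.0 / TAU_C, -1.0 / TAU_C]])
    return np.linalg.eigvals(jac).real.max() < 0.0


def classify(dI, g):
    stable = [nu for nu in fixed_points(dI, g) if is_stable(nu, g)]
    if len(stable) >= 2:
        return "bistable"
    if len(stable) == 1:
        return "low" if stable[0] < 0.5 else "high"
    return "oscillation"


if __name__ == "__main__":
    for dI, g in [(-1.0, 20.0), (-1.0, 6.0), (-1.0, 1.0), (-3.0, 2.0)]:
        print(f"dI = {dI:5.1f}, g = {g:5.1f}: {classify(dI, g)}")
```

Calling `classify` on a grid of (ΔI, g) pairs and colouring each point by its label would give a coarse analogue of the patches in a planar section of the diagram.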

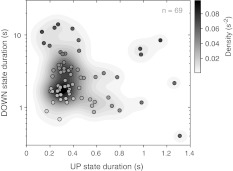

From an experimental point of view, a similar exploration of the parameter space can be performed by exploiting the intrinsic variability of the biological preparation. Cortical slices with spontaneous slow oscillations display a wide heterogeneity in the detailed MUA dynamics and in the average duration of the Up and Down states (Sanchez-Vives et al. 2010; Reig et al. 2010). Figure 6 shows the average Up and Down durations for the recorded slices with spontaneous slow oscillations (n = 69) collected under control conditions (see Methods). The experimental evidence confirms the theoretical scenario predicted in Fig. 5. With few exceptions (the dark circles with relatively long durations of both Up and Down states), in vitro slow oscillations display an anticorrelation and an asymmetric distribution of Up and Down state durations.

Fig. 6.

Anticorrelation of Up and Down state durations in ferret brain slices. Each circle shows the average Up and Down state durations of one of the n = 69 brain slices spontaneously showing Up/Down slow oscillations. Circles are gradually shaded blue, red and white depending on the duration of the Up and Down states, as in Fig. 5a. Gray shaded areas depict different densities of slices in the plane, resulting from a smoothed version of the distribution (bivariate Gaussian kernel convolution as in Fig. 5a)

State-dependent shrinking of timescale distribution

In Sanchez-Vives et al. (2010) we probed the properties of the spontaneous Up/Down oscillations by pharmacologically changing the excitability of the cortical slices. We injected antagonists of the GABAA receptors, which reduce the efficacy of the synaptic transmission of inhibitory neurons. A progressive blockade of synaptic inhibition on average shortened Up state duration and elongated Down states, lowering the frequency of oscillations. Here we look at those data at the single-slice level, in order to understand how timescales and dynamical regimes are affected by a direct modulation of the excitability. In Fig. 7a the average Up state duration for each cortical slice is plotted versus the duration change observed after the injection of 0.2 μM of a GABAA antagonist. Although Up states shorten, the duration change depends on the initial length of the state: longer durations display larger variations. More interestingly, the Down state durations in Fig. 7b have a non-monotonic change: slices with Down states shorter than 2.35 s display an increase of duration, while the opposite occurs for longer average durations. In this case too, the change in the state duration depends on the initial length of the state itself. In summary, the distribution of timescales in Fig. 6 shrinks when the cortical network excitability is increased by injecting a GABAA antagonist, and it does so in a non-trivial state-dependent manner.

Fig. 7.

Shrinkage of the distribution of Up and Down durations by increasing the slice excitability. a Average Up state duration versus the duration change when a GABAA antagonist (making the inhibitory synaptic transmission less effective) was injected (n = 31, 0.2 μM of BMI; n = 11, 0.2 μM of SR). Red dashed curve, quadratic polynomial fit: y = −0.71 x² + 0.37 x − 0.053. Red circle is the maximum of the parabola, occurring at 260 ms of average Up state duration. b Same as panel a for average Down state durations, plotted versus their duration changes when the drug is injected. Red circle is where the quadratic fit (red dashed line) y = −0.007 x² − 0.46 x + 1.1 has a vanishing duration change, which occurs at a Down duration of 2.4 s
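The two landmarks quoted in the caption of Fig. 7 can be checked from the fitted parabolas. The short sketch below uses only the rounded coefficients printed in the caption, with x and y assumed to be in seconds; the variable names are illustrative.

```python
import numpy as np

# Rounded coefficients as quoted in the caption of Fig. 7 (y = a x^2 + b x + c).
up_a, up_b, up_c = -0.71, 0.37, -0.053
down_a, down_b, down_c = -0.007, -0.46, 1.1

# Up states: position and height of the maximum of the parabola.
up_vertex = -up_b / (2.0 * up_a)
up_max_change = up_a * up_vertex**2 + up_b * up_vertex + up_c
up_discriminant = up_b**2 - 4.0 * up_a * up_c

# Down states: positive duration at which the fitted change vanishes.
down_roots = np.roots([down_a, down_b, down_c])
down_zero = float(max(down_roots.real))

if __name__ == "__main__":
    print(f"Up fit: maximum at {up_vertex * 1e3:.0f} ms, change {up_max_change:.4f} s")
    print(f"Up fit: discriminant {up_discriminant:.4f}")
    print(f"Down fit: zero duration change at {down_zero:.2f} s")
```

With these rounded coefficients the maximum falls at about 0.26 s, matching the 260 ms in the caption, and the negative discriminant means the fitted Up duration change stays below zero for every initial duration, consistent with Up states always shortening. The Down fit crosses zero near 2.3 s, close to the reported 2.35 to 2.4 s; the small gap is presumably an effect of coefficient rounding.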

Discussion

Slow Up/Down oscillations are a network phenomenon which emerges in isolated cortical tissue (Sanchez-Vives and McCormick 2000; Cossart et al. 2003) from the interplay between a strong synaptic self-excitation sustaining the reverberation of local spikes, and an activity-dependent self-inhibition associated with a fatigue mechanism (Latham et al. 2000; van Vreeswijk and Hansel 2001; Compte et al. 2003; Holcman and Tsodyks 2006; Curto et al. 2009; Ngo et al. 2010). Mean-field theory of IF neuronal networks provides a quantitatively reliable description of the dynamical regimes and timescales of such relaxation oscillators (Gigante et al. 2007). Here we tested several predictions of this theoretical framework on recordings from in vitro cortical slices with spontaneous oscillations (Sanchez-Vives et al. 2010; Reig et al. 2010), finding further evidence that attractor dynamics with adaptation are at work. In particular, we reported an inter-cycle variability of MUA which increases during Up states while spike frequency progressively decays, possibly adapting to an augmented fatigue level. Such a trend was observed in those recordings with relatively long Up state durations, which allowed the data analysis illustrated in the Results section. This modulation of variability in time is not expected when high firing states do not display spike frequency adaptation. This would explain why in some of the eligible recordings we did not find a change in the fluctuation size of the activity during Up states.

Matching simulations of IF neuron networks, mean-field theory and in vitro experiments, we further found a wide spectrum of average Up and Down state durations with a non-trivial distribution. Its shape highlights a theoretically expected anticorrelation of the permanence times, together with an asymmetry in the maximum state durations: Down states are one order of magnitude longer than Up states. This asymmetry and the wide range of timescales seem to be naturally emerging features of the proposed theoretical description, provided that the usually observed heterogeneity in the excitability of the cortical tissue is taken into account. Network parameters for in silico experiments have been sampled from random uniform distributions, but only the simulated networks displaying an alternation of firing rates between Up and Down states are taken into account. The resulting cloud of cell assemblies (the circles in the bifurcation diagram of Fig. 5c, d) is distributed close to the bifurcation boundaries delimiting the corner where relaxation oscillations become unstable and asynchronous firing regimes take place. Hence, networks with Up/Down oscillations are those on the verge of losing stability. This is reminiscent of criticality, which emerges when nonlinear systems are placed at “the edge of chaos” (Langton 1990), where long-range activity correlations in space and time are expected to be maximal. A wide spectrum of dynamical scales is the basis for complex computations and information processing in neuronal networks (Bertschinger and Natschläger 2004; Beggs 2008), and here we speculate that the emergence of slow Up/Down oscillations may reflect how cortical networks spontaneously settle in order to make a high computational power available.

Inspecting the behaviour of single brain slices when their excitability is enhanced through the progressive blockade of the inhibitory synaptic transmission, we observed changes in the state durations which depended on the permanence times under control conditions. As a result, the wide spectrum of timescales is shrunk, yielding an increase of the slow oscillation frequency and a shortening of Up state durations. From a mean-field perspective, this excitability enhancement implies a multivariate change of the key parameters Crec, ΔI and g. If the cloud of networks in the parameter space is shifted far from the critical boundaries delimiting the stability of the relaxation oscillations, shorter state durations can be obtained, in agreement with experimental observations. Hence, even when only a single network parameter like synaptic feedback is modulated, a kind of homeostatic process may lead the whole system to adapt the other parameters accordingly.

Symmetrically, an excitability loss can be modelled by shifting the network cloud in the opposite direction of the bifurcation diagram. Critical boundaries will be crossed and, for different reasons, yet another compression of the distribution of the state durations is expected. Indeed, the loss of excitability dampens the gain function Φ, which is reflected in a smaller gap between the firing rates in the Up and Down states. Under these conditions, the energy landscape has shallower valleys which eventually merge into a single attractor well associated with a stable asynchronous state. The excitability dampening ruins the force field (Tsuda 2002; Durstewitz and Deco 2008), allowing the system to wander while only mildly constrained by what remains of the barriers and the valleys of the energy landscape. The permanence times in the almost overlapping Up and Down states are consequently shortened. Such a transition from relaxation oscillations to stationary asynchronous states well represents the change in the dynamical regimes of the activity recorded in vivo when the brain shifts from deep sleep states to resting wakefulness (Destexhe et al. 2007; Curto et al. 2009; Deco et al. 2010).

In summary, cortical networks seem to have slow Up/Down oscillations when set in a region of the parameter space maximizing the range of available timescales, which corresponds to a subspace close to the stability boundary of the oscillatory activity regimes. Finally, it should not be neglected that the theoretical framework adopted here is well suited to describe not only spontaneous slow oscillations but also other physiological and pathological brain states like epilepsy, identifiable as different dynamical regimes in the parameter space.

Acknowledgments

We want to thank M. Perez-Zabalza, V.F. Descalzo and R. Reig for their contribution. This work was supported by a Ministerio de Ciencia e Innovación (MICINN) grant (BFU2008-01371/BFI) to MVSV.

References

- Amit DJ. Modeling brain function: the world of attractor neural networks. Cambridge: Cambridge University Press; 1989.

- Amit DJ, Brunel N. Model of global spontaneous activity and local structured activity during delay periods in the cerebral cortex. Cereb Cortex. 1997;7(3):237–252. doi: 10.1093/cercor/7.3.237.

- Amit DJ, Tsodyks M. Quantitative study of attractor neural network retrieving at low spike rates: I. substrate—spikes, rates and neuronal gain. Network. 1991;2:259–273. doi: 10.1088/0954-898X/2/3/003.

- Beggs JM. The criticality hypothesis: how local cortical networks might optimize information processing. Philos Transact A Math Phys Eng Sci. 2008;366(1864):329–343. doi: 10.1098/rsta.2007.2092.

- Bertschinger N, Natschläger T. Real-time computation at the edge of chaos in recurrent neural networks. Neural Comput. 2004;16(7):1413–1436. doi: 10.1162/089976604323057443.

- Braitenberg V, Schüz A. Anatomy of the cortex: statistics and geometry. Berlin: Springer; 1991.

- Braun J, Mattia M. Attractors and noise: twin drivers of decisions and multistability. Neuroimage. 2010;52(3):740–751. doi: 10.1016/j.neuroimage.2009.12.126.

- Brunel N, Hakim V. Fast global oscillations in networks of integrate-and-fire neurons with low firing rates. Neural Comput. 1999;11:1621–1671. doi: 10.1162/089976699300016179.

- Burkitt AN. A review of the integrate-and-fire neuron model: I. Homogeneous synaptic input. Biol Cybern. 2006;95:1–19. doi: 10.1007/s00422-006-0068-6.

- Compte A, Sanchez-Vives MV, McCormick DA, Wang X-J. Cellular and network mechanisms of slow oscillatory activity (<1 Hz) and wave propagations in a cortical network model. J Neurophysiol. 2003;89(5):2707–2725. doi: 10.1152/jn.00845.2002.

- Cossart R, Aronov D, Yuste R. Attractor dynamics of network UP states in the neocortex. Nature. 2003;423(6937):283–288. doi: 10.1038/nature01614.

- Curto C, Sakata S, Marguet S, Itskov V, Harris KD. A simple model of cortical dynamics explains variability and state dependence of sensory responses in urethane-anesthetized auditory cortex. J Neurosci. 2009;29(34):10600–10612. doi: 10.1523/JNEUROSCI.2053-09.2009.

- Deco G, Rolls ET. Synaptic and spiking dynamics underlying reward reversal in the orbitofrontal cortex. Cereb Cortex. 2005;15(1):15–30. doi: 10.1093/cercor/bhh103.

- Deco G, Martí D, Ledberg A, Reig R, Sanchez-Vives MV. Effective reduced diffusion-models: a data driven approach to the analysis of neuronal dynamics. PLoS Comput Biol. 2010;5(12):e1000587. doi: 10.1371/journal.pcbi.1000587.

- Destexhe A, Hughes SW, Rudolph M, Crunelli V. Are corticothalamic ‘up’ states fragments of wakefulness? Trends Neurosci. 2007;30(7):334–342. doi: 10.1016/j.tins.2007.04.006.

- Durstewitz D, Deco G. Computational significance of transient dynamics in cortical networks. Eur J Neurosci. 2008;27(1):217–227. doi: 10.1111/j.1460-9568.2007.05976.x.

- Fusi S, Mattia M. Collective behaviour of networks with linear (VLSI) integrate-and-fire neurons. Neural Comput. 1999;11:633–652. doi: 10.1162/089976699300016601.

- Gigante G, Mattia M, Del Giudice P. Diverse population-bursting modes of adapting spiking neurons. Phys Rev Lett. 2007;98:148101. doi: 10.1103/PhysRevLett.98.148101.

- Giugliano M, Darbon P, Arsiero M, Lüscher HR, Streit J. Single-neuron discharge properties and network activity in dissociated cultures of neocortex. J Neurophysiol. 2004;92(2):977–996. doi: 10.1152/jn.00067.2004.

- Harris KD, Bartho P, Chadderton P, Curto C, la Rocha J, Hollender L, Itskov V, Luczak A, Marguet SL, Renart A, Sakata S. How do neurons work together? Lessons from auditory cortex. Hear Res. 2010;271(1–2):37–53. doi: 10.1016/j.heares.2010.06.006.

- Holcman D, Tsodyks M. The emergence of Up and Down states in cortical networks. PLoS Comput Biol. 2006;2(3):e23. doi: 10.1371/journal.pcbi.0020023.

- Hopfield JJ. Neural networks and physical systems with emergent collective computational abilities. Proc Natl Acad Sci USA. 1982;79(8):2554–2558. doi: 10.1073/pnas.79.8.2554.

- Koulakov AA, Raghavachari S, Kepecs A, Lisman JE. Model for a robust neural integrator. Nat Neurosci. 2002;5(8):775–782. doi: 10.1038/nn893.

- La Camera G, Giugliano M, Senn W, Fusi S. The response of cortical neurons to in vivo-like input current: theory and experiment: I. Noisy inputs with stationary statistics. Biol Cybern. 2008;99:279–301. doi: 10.1007/s00422-008-0272-7.

- Langton C. Computation at the edge of chaos: phase transitions and emergent computation. Physica D. 1990;42(1–3):12–37. doi: 10.1016/0167-2789(90)90064-V.

- Latham PE, Richmond BJ, Nelson PG, Nirenberg S. Intrinsic dynamics in neuronal networks. I. Theory. J Neurophysiol. 2000;83(2):808–827. doi: 10.1152/jn.2000.83.2.808.

- Lau P-M, Bi G-Q. Synaptic mechanisms of persistent reverberatory activity in neuronal networks. Proc Natl Acad Sci USA. 2005;102(29):10333–10338. doi: 10.1073/pnas.0500717102.

- Luczak A, Barthó P, Harris KD. Spontaneous events outline the realm of possible sensory responses in neocortical populations. Neuron. 2009;62(3):413–425. doi: 10.1016/j.neuron.2009.03.014.

- Machens CK, Romo R, Brody CD. Flexible control of mutual inhibition: a neural model of two-interval discrimination. Science. 2005;307(5712):1121–1124. doi: 10.1126/science.1104171.

- Martí D, Deco G, Mattia M, Gigante G, Giudice P. A fluctuation-driven mechanism for slow decision processes in reverberant networks. PLoS One. 2008;3(7):e2534. doi: 10.1371/journal.pone.0002534.

- Mattia M, Giudice P. Efficient event-driven simulation of large networks of spiking neurons and dynamical synapses. Neural Comput. 2000;12(10):2305–2329. doi: 10.1162/089976600300014953.

- Mattia M, Del Giudice P. Population dynamics of interacting spiking neurons. Phys Rev E. 2002;66:051917. doi: 10.1103/PhysRevE.66.051917.

- Mejias JF, Kappen HJ, Torres JJ. Irregular dynamics in up and down cortical states. PLoS One. 2010;5(11):e13651. doi: 10.1371/journal.pone.0013651.

- Ngo H-VV, Köhler J, Mayer J, Claussen JC, Schuster HG. Triggering up states in all-to-all coupled neurons. Europhys Lett. 2010;89:68002. doi: 10.1209/0295-5075/89/68002.

- Reig R, Mattia M, Compte A, Belmonte C, Sanchez-Vives MV. Temperature modulation of slow and fast cortical rhythms. J Neurophysiol. 2010;103:1253–1261. doi: 10.1152/jn.00890.2009.

- Renart A, Brunel N, Wang X-J. Mean-field theory of irregularly spiking neuronal populations and working memory in recurrent cortical networks. In: Feng J, editor. Computational neuroscience: a comprehensive approach. Boca Raton: CRC Press; 2003.

- Risken H. The Fokker-Planck equation: methods of solution and applications. 2nd ed. Berlin: Springer; 1989.

- Sanchez-Vives MV, McCormick DA. Cellular and network mechanisms of rhythmic recurrent activity in neocortex. Nat Neurosci. 2000;3:1027–1034. doi: 10.1038/79848.

- Sanchez-Vives MV, Descalzo VF, Reig R, Figueroa NA, Compte A, Gallego R. Rhythmic spontaneous activity in the piriform cortex. Cereb Cortex. 2008;18(5):1179–1192. doi: 10.1093/cercor/bhm152.

- Sanchez-Vives MV, Mattia M, Compte A, Perez-Zabalza M, Winograd M, Descalzo VF, Reig R. Inhibitory modulation of cortical up states. J Neurophysiol. 2010;104:1314–1324. doi: 10.1152/jn.00178.2010.

- Sasaki T, Matsuki N, Ikegaya Y. Metastability of active CA3 networks. J Neurosci. 2007;27(3):517–528. doi: 10.1523/JNEUROSCI.4514-06.2007.

- Shu Y, Hasenstaub A, McCormick DA. Turning on and off recurrent balanced cortical activity. Nature. 2003;423(6937):288–293. doi: 10.1038/nature01616.

- Spiridon M, Gerstner W. Noise spectrum and signal transmission through a population of spiking neurons. Network. 1999;12:409–421.

- Steriade M, Nunez A, Amzica F. A novel slow (<1 Hz) oscillation of neocortical neurons in vivo: depolarizing and hyperpolarizing components. J Neurosci. 1993;13(8):3252–3265. doi: 10.1523/JNEUROSCI.13-08-03252.1993.

- Tsuda I. Toward an interpretation of dynamic neural activity in terms of chaotic dynamical systems. Behav Brain Sci. 2002;24(5):793–810. doi: 10.1017/S0140525X01000097.

- van Vreeswijk C, Hansel D. Patterns of synchrony in neural networks with spike adaptation. Neural Comput. 2001;13(5):959–992. doi: 10.1162/08997660151134280.

- Wang XJ. Probabilistic decision making by slow reverberation in cortical circuits. Neuron. 2002;36(5):955–968. doi: 10.1016/S0896-6273(02)01092-9.

- Zipser D, Kehoe B, Littlewort G, Fuster J. A spiking network model of short-term active memory. J Neurosci. 1993;13(8):3406–3420. doi: 10.1523/JNEUROSCI.13-08-03406.1993.