Abstract

Spoken languages use one set of articulators – the vocal tract, whereas signed languages use multiple articulators, including both manual and facial actions. How sensitive are the cortical circuits for language processing to the particular articulators that are observed? This question can only be addressed with participants who use both speech and a signed language. In this study, we used fMRI to compare speechreading and sign processing in deaf native signers of British Sign Language (BSL) who were also proficient speechreaders. The following questions were addressed: To what extent do these different language types rely on a common brain network? To what extent do the patterns of activation differ? How are these networks affected by the articulators that languages use?

Common perisylvian regions were activated both for speechreading English words and for BSL signs. Distinctive activation was also observed reflecting the language form. Speechreading elicited greater activation in the left mid-superior temporal cortex than BSL, whereas BSL processing generated greater activation at the parieto-occipito-temporal junction in both hemispheres.

We probed this distinction further within BSL, where manual signs can be accompanied by different sorts of mouth action. BSL signs with speech-like mouth actions showed greater superior temporal activation, while signs made with non-speech-like mouth actions showed more activation in posterior and inferior temporal regions. Distinct regions within the temporal cortex are not only differentially sensitive to perception of the distinctive articulators for speech and for sign, but also show sensitivity to the different articulators within the (signed) language.

Keywords: Functional MRI, Linguistics, Semantics, Neuroimaging, Temporal cortex

Introduction

A key attribute of language is that it is symbolic. It is essentially amodal and abstract, capturing referential distinctions using arbitrary features (‘phonemes’) at a segmental level within the language system (Hockett, 1960). Yet language is realised through human agency: it is produced and perceived by humans, using specifically human systems for production and perception. This report addresses the question: to what extent might the perception of items within the language be embodied with respect to their cortical correlates? That is, how might the patterns of activation for language processing be affected by the perceived articulators? This question is difficult to address by comparisons across different spoken languages, since they all use one articulatory system – the vocal tract. However in this, as in other ways, signed languages can cast a new perspective on the roots of language.

Signed languages (SLs) are natural human languages, containing all the linguistic features found in spoken languages (see, for example, Emmorey, 2002; Sandler & Lillo-Martin, 2001; Poizner, Klima, & Bellugi, 1987; Klima & Bellugi, 1979). However, SLs and spoken languages utilise different modalities. SLs use visible gestural actions, but do not use the auditory modality. The grammar makes use of systematic changes in manual and facial actions, and space is used for syntactic purposes (Johnston, 2005; Emmorey, 2002; Sutton-Spence & Woll, 1999). At the segmental level, SLs make use of distinctions that are realised as visible actions of the hands, head and trunk. The principal parameters of phonological structure for SLs include hand configuration, hand location and movement (Brentari, 1998; Stokoe, 1960). However, the hands are not the only articulators in SLs (e.g., Sutton-Spence & Woll, 1999; Liddell, 1978). Some signs require mouth as well as hand actions, and some of these can be considered to function phonologically, since the mouth action alone can lexically distinguish signs. The current study investigates the functional organisation of signed language and seen speech in deaf adults who were proficient both in speechreading English and in British Sign Language (BSL) in order to explore the extent to which the perception of the different articulators may impact on the pattern of activation for language processing.

The neural organisation of language processing is remarkably similar for sign and for speech, despite the differences in modality and form of the two language systems. Studies of patients with brain lesions reliably show that SL processing is supported by perisylvian regions of the left hemisphere (e.g., Atkinson, Marshall, Woll, & Thacker, 2005). Similarly, neuroimaging studies show similar patterns of activation for processing SL and spoken language when acquired as native languages. In particular, SL processing elicits activation in the superior temporal plane, posterior portions of the superior temporal gyrus and inferior frontal cortex of the left hemisphere, including Broca’s area (BA 44/45) (e.g., Lambertz, Gizewski, de Greiff, & Forsting, 2005; Sakai, Tatsuno, Suzuki, Kimura, & Ichida, 2005; MacSweeney, Woll, Campbell, McGuire, David et al., 2002b; Newman, Bavelier, Corina, Jezzard, & Neville, 2002; Petitto, Zatorre, Guana, Nikelski, Dostie et al., 2000; Neville, Bavelier, Corina, Rauschecker, Karni et al., 1998) just as for spoken language presented aurally (e.g., Davis & Johnsrude, 2003; Schlosser, Aoyagi, Fulbright, Gore, & McCarthy, 1998; Blumstein, 1994), visually (i.e., silent speechreading) (e.g., MacSweeney, Calvert, Campbell, McGuire, David et al., 2002a; Calvert, Bullmore, Brammer, Campbell, Williams et al., 1997) or audio-visually (Capek, Bavelier, Corina, Newman, Jezzard et al., 2004; MacSweeney et al., 2002b). Additionally, right hemisphere activation has been reported in superior temporal regions for SL processing (e.g., MacSweeney et al., 2002b; Newman et al., 2002; Neville et al., 1998) and also, for spoken language presented aurally (e.g., Davis & Johnsrude, 2003; Schlosser et al., 1998), visually (Pekkola, Ojanen, Autti, Jaaskelainen, Mottonen et al., 2005; Campbell, MacSweeney, Surguladze, Calvert, McGuire et al., 2001; Calvert et al., 1997) or audio-visually (Capek et al., 2004; MacSweeney et al., 2002b).

Speech and sign, however, do not appear to rely on identical brain networks. Neuroimaging research by Neville and colleagues has shown that, in hearing speakers of English, processing written sentences elicits a left-lateralised pattern of activation in perisylvian regions whereas, in deaf native signers of American Sign Language (ASL), signed sentence processing elicits a bilateral pattern of activation (Neville et al., 1998). One reason proposed for the differences in activation was that since the written language was a learned, visual code based on speech, and lacked prosody and other natural language features, it might generate left-dominant activation. However, Capek and colleagues (2004) showed that for monolingual speakers of English, audio-visual English sentence processing elicited left-dominant activation in the perisylvian regions. The implication is that differential right hemisphere recruitment found for ASL sentence processing by Neville and colleagues need not be restricted to comparisons between a signed and a written language. However, in another study, directly contrasting BSL (deaf native signers) and audio-visual English (hearing monolingual speakers), MacSweeney and colleagues (2002b) did not find laterality differences. They found differences between SL and audio-visual speech which they attributed to the modality of the input rather than to linguistic processes. Regions that showed greater activation for sign than audio-visual speech included the middle occipital gyri, bilaterally, and the left inferior parietal lobule (BA 40). These regions are particularly involved in visual and visuo-spatial processing. In contrast, audio-visual English sentences elicited greater activation in superior temporal regions than signed sentences. They suggested that activation in these superior temporal regions (hearing > deaf) was driven by hearing status.
Superior temporal cortex contains primary and secondary auditory processing regions (for a review, see Rauschecker, 1998), and, in clear audio-visual speech, audition may dominate (Schwartz, Robert-Ribes, & Escudier, 1998).

Another way to approach the question of similarities and differences as a function of language form is to examine a group with access to both sign and speech. Using PET, Söderfeldt and colleagues (1997; 1994) contrasted Swedish Sign Language (SSL) and audio-visual spoken Swedish in hearing native signers. They found differences as a function of language modality. In particular, SL generated greater activation in posterior and inferior temporal and occipital regions, bilaterally, while speech generated greater activation in auditory cortex in the superior temporal lobe, bilaterally (Söderfeldt et al., 1997). However, as with MacSweeney et al.’s finding, it is possible that the greater superior temporal activation for speech than for sign in this hearing group simply reflected its auditory component. On its own, Söderfeldt et al.’s studies cannot inform us whether the different language forms necessarily activated distinctive brain regions, since modality (auditory/visual) was confounded with language form (speech/sign). The current study is the first to examine the functional organisation for sign and seen speech processing within the same group of deaf participants. In these people, activation in superior temporal regions cannot be ascribed to auditory processing, since, by definition, their hearing is unlikely to support such processes to the same extent as in hearing people. These considerations are particularly important in trying to determine the extent to which language processing may rely on the processing of specific perceived articulatory actions.

For speech, a single articulatory system is used (the vocal tract). For signed languages, the hands are the primary articulators. Activation in lateral temporal regions has been shown to be sensitive to observation of non-linguistic hand and mouth movements in hearing people who do not use a signed language. In particular, Pelphrey and colleagues (2005) found that watching an avatar’s mouth opening and closing activated the middle and posterior STS, whereas watching the opening and closing of its hand additionally activated more posterior inferior temporo-occipital regions (Pelphrey et al., 2005). If this pattern extends to the observation of linguistically meaningful seen speech and signed manual actions, that would suggest that the language processing system retains sensitivity to its articulatory sources. One prediction, then, is that in deaf people who use both seen speech and BSL, the regions that show distinctive patterns of activation will resemble those reported for non-linguistic action observation: speechreading should activate relatively more superior and anterior temporal regions; BSL more inferior and posterior ones.

Mouthings and mouth gestures

If differences emerge between speechreading and SL processing that reflect the pattern observed by Pelphrey and colleagues (2005) (anterior superior temporal activation for mouths, posterior inferior for hands) that could simply be due to the distinctive effects on the visual input system of the different articulators. Manual gestures can be larger and ‘free-er’ (see Discussion) than mouth movements, and this may be sufficient to differentially activate those perceptual systems responsible for performing the task in each of the different linguistic modes. That is, differences between speechreading and SL perception may be extrinsic to language processing proper.

However, it is possible to explore the effects of the articulators within the SL, since, in addition to manual actions, SLs make use of non-manual articulators, including actions of the head, face and trunk (e.g., Sutton-Spence & Woll, 1999; Liddell, 1978). Moreover, two types of mouth action accompanying manual signs can be distinguished – speech-like and non-speech-like. If signs with different types of mouth pattern, but similar types and extent of hand action show distinctive activation, and if that resembles the pattern observed when speech-reading and sign are contrasted, we may be more confident that the differences observed, while possibly originating in specialized circuits for interpreting different body actions, nevertheless can reach deep into the language system.

Mouthings are speech-derived mouth actions accompanying manual signs (Boyes Braem & Sutton-Spence, 2001). Although SLs are unrelated to the spoken languages used in the surrounding hearing community, mouthings resemble spoken forms. They can disambiguate signs with similar or identical manual forms. In BSL, mouthings are relatively common, and minimal pairs of items can be identified which are only disambiguated by the mouth action (Sutton-Spence & Woll, 1999). In such disambiguating mouth (DM) signs, mouthings can serve the linguistic function of a phonological feature.

A different class of mouth action, unrelated to spoken language, is mouth gesture (Boyes Braem & Sutton-Spence, 2001). These include actions that reflect some of the dynamic properties of the manual action. The mouth gesture ‘follows’ the hand actions in terms of onset and offset, dynamic characteristics (speed and acceleration) and direction and type of movement (opening, closing, or internal movement). This type of non-speech-like mouth gesture has been termed ‘echo phonology’ (EP), since the mouth action is considered secondary to that of the hands (Woll, 2001). Thus, these gestures illustrate a condition where “the hands are the head of the mouth” (Boyes Braem & Sutton-Spence, 2001). EP mouth gestures are not derived from or influenced by the forms of spoken words borrowed into sign; rather, they are an obligatory, intrinsic component of this subgroup of signs, their patterning presumably constrained by common motor control mechanisms for hands and mouth (Woll, 2001).

While these patterns do not exhaust the types of mouth action that accompany manual acts in a SL (see for example, McCullough, Emmorey, & Sereno, 2005), they are critical for the purposes of the present study. Three sets of contrasts: (1) between speechreading (i.e., spoken English) and sign (BSL), (2) between signs with and without meaningful mouth actions, and (3) between signs with speech-like and non-speech-like mouth actions, will allow us to probe in increasingly specific detail the extent to which sight of specific articulators affects the networks activated in the language system. The following conditions, all comprising lists of single items, were presented to deaf participants in the fMRI scanner: (1) silent speechreading of English (SR); (2) BSL signs with no mouth action (manual-only, or Man); (3) BSL signs with mouthings (disambiguating mouth, or DM) and (4) BSL signs with mouth gestures (echo phonology, or EP).

Predictions

1 Similarities for speech and sign

Since our deaf volunteers were native signers of BSL and skilled speechreaders of English, we predicted that processing BSL signs and silently spoken English words would elicit activation in classical language regions of the left perisylvian cortex and their right hemisphere homologues.

2 Differences for speech and sign

We predicted that sign would elicit greater activation than speech in posterior temporal regions, possibly reflecting a greater motion processing component for manual action perception. For the contrast speech > sign, however, predictions remained open. If the pattern described for non-linguistic gestures (Pelphrey et al., 2005) is recapitulated, then we may expect greater activation in the middle portion of the superior temporal cortex. Whether these differences are lateralized remained an open question.

3 Sign: manual-with-mouth (DM and EP) vs. manual-only (Man)

The null hypothesis is that the sign language processing system is insensitive to the nature of the articulators, so similar patterns of activation will obtain for signs comprising manual-only (Man) or manual-with-mouth (DM, EP) actions. However, if the processing circuits for SL are sensitive to the perception of specific articulators, then a similar pattern to that reported by Pelphrey and colleagues (2005) for non-linguistic stimuli may be obtained for manual-with-oral actions compared with manual actions alone. In particular, we predicted that signs that include mouth actions (DM and EP) would elicit activation in the middle portion of the superior temporal gyrus (STG) more than signs that rely solely on manual articulators (Man). In contrast, manual-only signs (Man) would elicit greater activation in temporal-occipital regions than signs that include mouth actions (DM and EP).

4 Sign: mouthings (DM) vs. mouth gestures (EP)

To examine whether different types of mouth actions within SL rely on non-identical brain regions, we compared DM and EP – two types of BSL signs both of which utilise manual and mouth actions. The null hypothesis is that EP and DM conditions will not differ systematically. This would suggest that the sight of any BSL mouth movements may be sufficient to generate activation in middle and posterior superior temporal regions. However, following Woll’s description that, for EP signs, it is the hands that drive mouth actions (Woll, 2001), we predicted that the EP activation pattern may be more similar to that for manual-only (Man) signs, while the pattern of activation for DM signs may more closely resemble speechreading. Such differences should recapitulate those predicted above: that is, EP signs may elicit greater activation in temporo-occipital cortex than DM signs, whereas DM signs may elicit greater activation in mid-superior temporal cortex than EP signs.

Methods

Participants

Thirteen (6 female; mean age: 27.4; age range: 18–49) right-handed participants were tested. Volunteers were congenitally, severely or profoundly deaf (81 dB mean loss or greater in the better ear over 4 octaves, spanning 500-4000 Hz). Across the group, the mean hearing loss in the better ear was 103 dB. All participants had had some experience with hearing aids, since their use is required in UK schools for the deaf. Seven only used hearing aids at school. The remaining six participants continue to wear them. The participants were native signers, having acquired BSL from their deaf parents. None of the participants had any known neurological or behavioural abnormalities and they performed at or above average on NVIQ (centile range = 50-99), as measured by the Block Design subtest of the WAIS-R. In addition to being native signers, these participants were also skilled speechreaders, with a mean score of 33.5 (range 26.9 – 39 out of 45) on the Test of Adult Speechreading. All these scores were above the mean of tested deaf speechreaders of this age and educational range (Mohammed, MacSweeney, & Campbell, 2003).

All participants gave written informed consent to participate in the study which was approved by the Institute of Psychiatry/South London and Maudsley NHS Trust Research Ethics Committee.

Stimuli

There were 4 experimental conditions, each comprising a list of unconnected items (a lexical list): (1) speechreading (SR), (2) manual-only signs (Man), (3) signs with disambiguating mouthings (DM) and (4) signs with echo phonology mouth gestures (EP). Each condition consisted of 24 stimulus items (96 items in total). The main aim in selecting stimuli was to present naturalistic stimuli whose meanings were easily accessible to the participants. For the speechreading condition, words that were visually (i.e., visemically) distinctive were chosen. Since there are no lexical norms for BSL, and those for English (written or spoken) are unlikely to be appropriate for native users of BSL, full control of selection of these items could not be assured. Moreover, several EP signs cannot be easily glossed as single English words. However, where possible, the psycholinguistic parameters that pertain to the referent of the items, rather than their form, were taken into account. The items in the SR, Man and DM conditions were matched in word category and concreteness (p-values > 0.2); however, there were two marginally significant differences between conditions. Man items tended to be more familiar than DM or SR items (F(2,41) = 2.944, p = 0.064) and SR items tended to be more imageable than Man and DM items (F(2,40) = 2.691, p = 0.080).

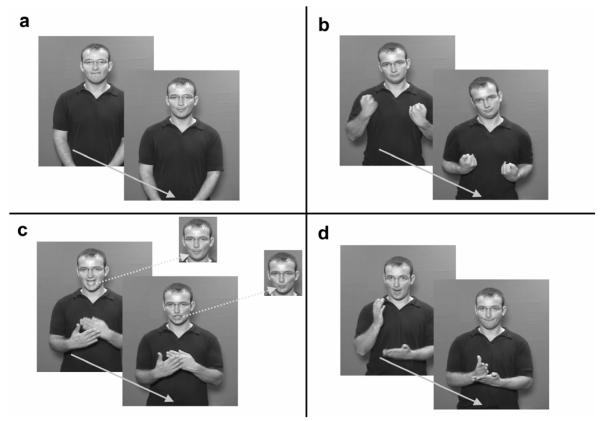

Figure 1 shows stopped-frame examples of the four types of stimuli, which were shown as videoclip sequences. In the SR condition, stimuli were silently mouthed English words with no manual component. In the Man condition, manual signs were not accompanied by any kind of mouth action (neither mouthings nor mouth gestures). In the DM condition, stimuli were BSL signs in which English-derived mouthings disambiguate between manual homonyms (Sutton-Spence & Woll, 1999). However, only one item of a homonym pair was included in the stimulus list. In the EP condition, items were signs that comprised a manual action accompanied by the appropriate (echo) mouth action.

Figure 1.

Selected images from representative videoclips illustrating each of the four experimental conditions. The arrow indicates the timeline sequence.

(a) Speechreading (SR). Two images from the speech pattern for “football”. The fricative /f/ (‘foot..’) and the semi-open vowel /ɔ:/ (‘..ball’) are clearly visible.

(b) Manual-Only (Man). The sign ILL is conveyed by the hands, accompanied by head drop.

(c) Disambiguating Mouth (DM). The sign ASIAN, in the main image, uses the same manual actions as that for BLUE. The signs are distinguished by English-derived mouthings. For ASIAN, the mouthing of /eɪ/ and /ʒ/ is shown. The face insets show the corresponding parts of the mouthings for BLUE, where /b/ and /u/ can be clearly seen.

(d) Echo Phonology (EP). Mouth gestures which are not speech-like accompany manual actions, ‘echoing’ the dynamic patterning of the hands. Here, the manual sequence for TRUE requires abrupt movement from an open to a closed contact gesture. As this occurs, the model’s mouth closes abruptly.

Signed and spoken stimuli were modelled by a deaf native signer of BSL, who also spoke English fluently. Between each sign, the model’s hands came to rest at his waist.

Experimental design and task

Stimuli were presented in alternating blocks of each of the experimental and a baseline condition lasting 30 s and 15 s, respectively. The total run duration was 15 min. Participants were instructed to understand the signs and words and they performed a target-detection task in all conditions, to encourage lexical processing. During the experimental conditions, participants were directed to make a push-button response whenever the stimulus item contained the meaning ‘yes’. This ‘yes’ target was presented in an appropriate form across all 4 conditions, specifically: as an English word with no manual component in the SR condition, as a BSL sign with no mouth action (but BSL-appropriate facial affect) in the Man condition, as a BSL sign with an English mouth pattern in the DM condition and as a BSL sign with a motoric mouth echo in the EP condition.

Over the course of the experiment, each stimulus item was seen 3 times (except for 1 item in each condition, which was seen 4 times, and the target item, which was seen 5 times). Items were not repeated within the same block and were pseudo-randomized to ensure that repeats were not clustered at the end of the experiment. Stimuli in the experimental conditions appeared at a rate of 15 items per block. The rate of articulation across experimental conditions approximates to 1 item every 2 seconds.
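The presentation counts given above can be cross-checked against the block structure. The short sketch below is illustrative only: it assumes that the ‘yes’ target counts as one of the 24 items in each condition and that every experimental block is followed by exactly one baseline block, neither of which is stated explicitly in the text. Variable names are our own. Under these assumptions the counts reproduce the stated 15 items per block, the rate of 1 item every 2 s and the 15 min run.

```python
# Cross-check of the stimulus counts (a sketch; names are illustrative).
ITEMS_PER_CONDITION = 24       # stated under Stimuli
N_CONDITIONS = 4               # SR, Man, DM, EP
ITEMS_PER_BLOCK = 15           # stated above
BLOCK_S, BASELINE_S = 30, 15   # block durations in seconds

# Assumption: the 'yes' target is one of the 24 items in each condition.
regular_items = ITEMS_PER_CONDITION - 2   # all but the 4x item and the target
presentations = regular_items * 3 + 1 * 4 + 1 * 5   # per condition

blocks_per_condition, remainder = divmod(presentations, ITEMS_PER_BLOCK)
seconds_per_item = BLOCK_S / ITEMS_PER_BLOCK

# Assumption: each experimental block is paired with one baseline block.
n_blocks = blocks_per_condition * N_CONDITIONS
run_minutes = n_blocks * (BLOCK_S + BASELINE_S) / 60

print(presentations, blocks_per_condition, remainder, seconds_per_item, run_minutes)
```

If the target were instead an additional, 25th item, the presentations per condition would not divide evenly into blocks of 15, which is why the first assumption was chosen.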

The baseline condition comprised video of the model at rest. The model’s face, trunk and hands were shown, as in the experimental conditions. During the baseline condition, participants were directed to press a button when a grey fixation cross, digitally superimposed on the face region of the resting model, turned red. To maintain vigilance, targets in both the experimental and baseline conditions occurred at a rate of 1 per block at a random position. All participants practiced the tasks outside the scanner.

All stimuli were projected onto a screen located at the base of the scanner table via a Sanyo XU40 LCD projector and then projected to a mirror angled above the participant’s head in the scanner.

Imaging parameters

Gradient echoplanar MRI data were acquired with a 1.5-T General Electric Signa Excite (Milwaukee, WI, USA) with TwinSpeed gradients and fitted with an 8-channel quadrature head coil. Three hundred T2*-weighted images depicting BOLD contrast were acquired at each of the 40 near-axial 3-mm thick planes parallel to the intercommissural (AC-PC) line (0.3 mm interslice gap; TR = 3 s, TE = 40 ms, flip angle = 90°). The field of view for the fMRI runs was 240 mm, and the matrix size was 64 × 64, with a resultant in-plane voxel size of 3.75 mm. High-resolution EPI scans were acquired to facilitate registration of individual fMRI datasets to Talairach space (Talairach & Tournoux, 1988). These comprised 40 near-axial 3-mm slices (0.3-mm gap), which were acquired parallel to the AC-PC line. The field of view for these scans matched that of the fMRI scans, but the matrix size was increased to 128 × 128, resulting in an in-plane voxel size of 1.875 mm. Other scan parameters (TR = 3 s, TE = 40 ms, flip angle = 90°) were, where possible, matched to those of the main EPI run, resulting in similar image contrast.
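The voxel sizes and run length follow directly from the acquisition parameters. The sketch below recomputes them; it assumes that the 300 volumes were acquired back to back with no discarded dummy scans and that interslice gaps occur only between adjacent slices, and the function and variable names are illustrative.

```python
# Recompute derived acquisition quantities (a sketch; names are illustrative).
FOV_MM = 240.0
N_SLICES, SLICE_MM, GAP_MM = 40, 3.0, 0.3
TR_S, N_VOLUMES = 3.0, 300


def in_plane_voxel_mm(fov_mm, matrix_size):
    """In-plane voxel edge length for a square field of view and matrix."""
    return fov_mm / matrix_size


functional_voxel = in_plane_voxel_mm(FOV_MM, 64)    # main fMRI run
structural_voxel = in_plane_voxel_mm(FOV_MM, 128)   # high-resolution EPI

# Assumption: gaps lie only between neighbouring slices (39 gaps for 40 slices).
coverage_mm = N_SLICES * SLICE_MM + (N_SLICES - 1) * GAP_MM

# Assumption: no dummy volumes, so the run lasts N_VOLUMES * TR.
run_minutes = N_VOLUMES * TR_S / 60

print(functional_voxel, structural_voxel, round(coverage_mm, 1), run_minutes)
```

The resulting run length agrees with the 15 min duration given under Experimental design and task.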

Data Analysis

The fMRI data were first corrected for motion artefact, then smoothed using a Gaussian filter (FWHM 7.2 mm) to improve the signal to noise ratio over each voxel and its immediate neighbors prior to data analysis. In addition, low frequency trends were removed by a wavelet-based procedure in which the time series at each voxel was first transformed into the wavelet domain and the wavelet coefficients of the three levels corresponding to the lowest temporal frequencies of the data were set to zero. The wavelet transform was then inverted to give the detrended time-series. The least-squares fit was computed between the observed time series at each voxel and the convolutions of two gamma variate functions (peak responses at 4 and 8 sec) with the experimental design (Friston, Josephs, Rees, & Turner, 1998). The best fit between the weighted sum of these convolutions and the time series at each voxel was computed using the constrained BOLD effect model suggested by Friman and colleagues (2003) in order to constrain the range of fits to those that reflect the physiological features of the BOLD response. Following computation of the model fit, a goodness of fit statistic was derived by calculating the ratio between the sum of squares due to the model fit and the residual sum of squares (SSQ ratio) at each voxel. The data were permuted by the wavelet-based method described by Bullmore and colleagues (2001) modified by removal, prior to permutation, of any wavelet coefficients exceeding the calculated threshold as described by Donoho and Johnstone (1994). These were replaced by the threshold value. This step reduces the likelihood of refitting large, experimentally-related components of the signal following permutation. Significant values of the SSQ were identified by comparing this statistic with the null distribution, determined by repeating the fitting procedure 20 times at each voxel. 
This procedure preserves the noise characteristics of the time-series during the permutation process and provides good control of Type I error rates. The voxel-wise SSQ ratios were calculated for each subject from the observed data and, following time series permutation, were transformed into Talairach and Tournoux’s standard space (1988) as described previously (Brammer, Bullmore, Simmons, Williams, Grasby et al., 1997; Bullmore, Brammer, Williams, Rabe-Hesketh, Janot et al., 1996). The Talairach transformation stage was performed in two parts. First, the fMRI data were transformed to high-resolution T2*-weighted image of each participant’s own brain using a rigid body transformation. Second, an affine transformation to the Talairach template was computed. The cost function for both transformations was the maximization of the correlation between the images. Voxel size in Talairach space was 3 × 3 × 3 mm.
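The voxel-wise model fit described above can be illustrated with a simplified sketch. It is not the analysis software used in the study: it uses ordinary (unconstrained) least squares rather than the constrained model of Friman and colleagues, models a single condition against baseline, and uses a gamma-shaped function with an arbitrary shape parameter. All function names, the shape value and the synthetic data are assumptions made for illustration.

```python
import math
import random

TR = 3.0          # s, from Imaging parameters
N_VOLUMES = 300   # from Imaging parameters


def gamma_variate(t, peak, shape=6.0):
    """Gamma-shaped response with unit height at t == peak (shape is assumed)."""
    if t <= 0:
        return 0.0
    r = t / peak
    return (r ** shape) * math.exp(shape * (1.0 - r))


def boxcar(n_volumes, tr, on_s=30.0, off_s=15.0):
    """1 during experimental blocks, 0 during baseline (one-condition simplification)."""
    period = on_s + off_s
    return [1.0 if (i * tr) % period < on_s else 0.0 for i in range(n_volumes)]


def convolve(design, peak, tr, kernel_s=30.0):
    """Causal convolution of the design with a gamma variate sampled every TR."""
    kernel = [gamma_variate(k * tr, peak) for k in range(int(kernel_s / tr) + 1)]
    return [sum(kernel[k] * design[i - k] for k in range(len(kernel)) if i - k >= 0)
            for i in range(len(design))]


def demean(v):
    m = sum(v) / len(v)
    return [x - m for x in v]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def fit_ssq_ratio(y, x1, x2):
    """Least-squares fit of two regressors plus a mean term; returns (b1, b2, SSQ ratio)."""
    y, x1, x2 = demean(y), demean(x1), demean(x2)
    s11, s22, s12 = dot(x1, x1), dot(x2, x2), dot(x1, x2)
    det = s11 * s22 - s12 * s12
    b1 = (s22 * dot(x1, y) - s12 * dot(x2, y)) / det
    b2 = (s11 * dot(x2, y) - s12 * dot(x1, y)) / det
    fitted = [b1 * u + b2 * v for u, v in zip(x1, x2)]
    ss_model = dot(fitted, fitted)                            # SS due to model fit
    ss_resid = sum((a - f) ** 2 for a, f in zip(y, fitted))   # residual SS
    return b1, b2, ss_model / ss_resid


# Synthetic demonstration: one responsive voxel and one noise-only voxel.
random.seed(0)
design = boxcar(N_VOLUMES, TR)
x1, x2 = convolve(design, 4.0, TR), convolve(design, 8.0, TR)
active = [0.7 * u + 0.3 * v + random.gauss(0, 0.5) for u, v in zip(x1, x2)]
noise_only = [random.gauss(0, 0.5) for _ in design]
ssq_active = fit_ssq_ratio(active, x1, x2)[2]
ssq_noise = fit_ssq_ratio(noise_only, x1, x2)[2]
print(ssq_active > ssq_noise)
```

In the study itself, the significance of each SSQ ratio was assessed against a null distribution obtained by wavelet-based permutation of the time series, a step this sketch omits.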

Group Analysis

Identification of active 3-D clusters was performed by first thresholding the median voxel-level SSQ ratio maps at the false positive probability of 0.05. The activated voxels were assembled into 3-D connected clusters and the sum of the SSQ ratios (statistical cluster mass) determined for each cluster. This procedure was repeated for the median SSQ ratio maps obtained from the wavelet-permuted data to compute the null distribution of statistical cluster masses under the null hypothesis. The cluster-wise false positive threshold was then set using this distribution to give an expected false positive rate of <1 cluster per brain (Bullmore, Suckling, Overmeyer, Rabe-Hesketh, Taylor et al., 1999).
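The cluster-mass logic can be sketched as follows. This is a minimal illustration, not the implementation used in the study: face connectivity (6 neighbours) is an assumption, since the text does not specify the connectivity rule, and the map values, null masses and function names are hypothetical.

```python
def cluster_masses(ssq, threshold):
    """Group supra-threshold voxels into face-connected 3-D clusters.

    ssq maps (x, y, z) -> SSQ ratio. Returns (mass, voxels) pairs, largest mass first.
    """
    active = {v for v, s in ssq.items() if s > threshold}
    neighbours = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
    clusters = []
    while active:
        seed = active.pop()
        stack, members = [seed], [seed]
        while stack:                      # flood fill from the seed voxel
            x, y, z = stack.pop()
            for dx, dy, dz in neighbours:
                n = (x + dx, y + dy, z + dz)
                if n in active:
                    active.remove(n)
                    stack.append(n)
                    members.append(n)
        clusters.append((sum(ssq[m] for m in members), sorted(members)))
    return sorted(clusters, reverse=True)


def cluster_mass_threshold(null_masses, n_null_maps):
    """Mass T such that fewer than one null cluster per permuted map exceeds T."""
    ranked = sorted(null_masses, reverse=True)
    if len(ranked) < n_null_maps:
        return 0.0
    return ranked[n_null_maps - 1]


# Hypothetical observed map: one 3-voxel cluster, one isolated voxel, one sub-threshold voxel.
observed = {(0, 0, 0): 2.0, (1, 0, 0): 3.0, (1, 1, 0): 1.5, (5, 5, 5): 4.0, (9, 9, 9): 0.2}
clusters = cluster_masses(observed, threshold=1.0)

# Hypothetical cluster masses pooled from 3 permuted (null) maps.
threshold_mass = cluster_mass_threshold([5.0, 1.0, 0.5, 4.5, 2.0, 6.0], n_null_maps=3)
significant = [c for c in clusters if c[0] > threshold_mass]
print([mass for mass, _ in clusters], threshold_mass, len(significant))
```

Because the statistic is the summed SSQ ratio rather than the voxel count, a small cluster of strongly activated voxels can survive where a larger but weaker cluster does not.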

ANOVA

Separate ANOVAs comparing differences between experimental conditions were calculated by fitting the data at each voxel at which all subjects had non-zero data using the following linear model: Y = a + bX + e, where Y is the vector of BOLD effect sizes for each individual, X is the contrast matrix for the particular inter-condition/group contrasts required, a is the mean effect across all individuals in the various conditions/groups, b is the computed group/condition difference and e is a vector of residual errors. The model is fitted by minimizing the sum of absolute deviations rather than the sums of squares to reduce outlier effects. The null distribution of b is computed by permuting data between conditions (assuming the null hypothesis of no effect of experimental condition) and refitting the above model. Group difference maps are computed as described above at voxel or cluster level by appropriate thresholding of the null distribution of b. This permutation method thus gives an exact test (for this set of data) of the probability of the value of b in the unpermuted data under the null hypothesis. The permutation process permits estimation of the distribution of b under the null hypothesis of no mean difference. Identification of significantly activated clusters was performed by using the cluster-wise false positive threshold that yielded an expected false positive rate of <1 cluster per brain (Bullmore et al., 1999).
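For a single contrast between two conditions, with X coded 0 and 1, the model Y = a + bX + e has a simple least-absolute-deviations solution: one minimiser sets a to the median of the first condition and a + b to the median of the second, because the two sums of absolute deviations separate. The sketch below uses this fact together with a permutation test. It assumes that permutation is done by swapping the two condition values within each participant, which is one plausible reading of "permuting data between conditions"; the effect sizes are hypothetical and the function names are our own.

```python
import random
from statistics import median


def lad_fit(y0, y1):
    """Least-absolute-deviations fit of Y = a + bX + e for X in {0, 1}."""
    a = median(y0)            # minimises sum |y - a| over condition X = 0
    b = median(y1) - a        # a + b minimises sum |y - (a + b)| over X = 1
    return a, b


def permutation_p(y0, y1, n_perm=2000, seed=0):
    """Two-sided permutation p-value for b (assumed within-participant swaps)."""
    rng = random.Random(seed)
    b_obs = lad_fit(y0, y1)[1]
    exceed = 0
    for _ in range(n_perm):
        p0, p1 = [], []
        for u, v in zip(y0, y1):
            if rng.random() < 0.5:    # swap this participant's condition labels
                u, v = v, u
            p0.append(u)
            p1.append(v)
        if abs(lad_fit(p0, p1)[1]) >= abs(b_obs):
            exceed += 1
    return b_obs, (exceed + 1) / (n_perm + 1)


# Hypothetical BOLD effect sizes at one voxel for 13 participants in two conditions.
cond_a = [0.10, 0.32, 0.25, 0.41, 0.18, 0.29, 0.35, 0.22, 0.27, 0.15, 0.38, 0.30, 0.20]
cond_b = [0.55, 0.84, 0.73, 0.95, 0.66, 0.80, 0.88, 0.70, 0.77, 0.61, 0.91, 0.82, 0.68]

b_obs, p_value = permutation_p(cond_a, cond_b)
b_null, p_null = permutation_p(cond_a, cond_a)   # identical conditions: no effect
print(round(b_obs, 3), p_value < 0.05, b_null, p_null)
```

Using medians rather than means is what makes the fit robust to a single participant with an extreme effect size, which is the motivation given in the text for minimising absolute deviations.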

Conjunction Analysis

Common areas of activation across experimental conditions were analyzed using a permutation-based conjunction analysis. This assessed whether the minimum mean activation at each voxel across all conditions of interest differed significantly from zero. The null distribution (distribution around zero response) was computed by using the wavelet-permuted SSQ ratio data for each individual following transformation into standard space (i.e., as described above for group activation maps but using the combined data for all conditions of interest). This procedure is the exact test (permutation) equivalent of the minimum t statistic test used when conjunction is performed parametrically. Identification of significantly activated clusters was performed by using the cluster-wise false positive threshold that yielded an expected false positive rate of <1 cluster per brain (Bullmore et al., 1999).
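The minimum-statistic idea can be illustrated with a small sketch. Note that it substitutes a simpler null model for the one used in the study: instead of wavelet-permuted SSQ ratio data, it builds the null distribution by randomly flipping the sign of each participant's effects, which assumes effects are symmetric about zero under the null hypothesis. The data and function names are hypothetical.

```python
import random


def min_mean(effects):
    """Smallest across-participant mean over conditions; effects[s][c] = participant s, condition c."""
    n_subj, n_cond = len(effects), len(effects[0])
    return min(sum(row[c] for row in effects) / n_subj for c in range(n_cond))


def conjunction_p(effects, n_perm=2000, seed=0):
    """One-sided p-value for the minimum mean (sign-flip null; an assumed substitute)."""
    rng = random.Random(seed)
    observed = min_mean(effects)
    exceed = 0
    for _ in range(n_perm):
        flipped = []
        for row in effects:
            sign = 1.0 if rng.random() < 0.5 else -1.0   # one sign per participant
            flipped.append([sign * v for v in row])
        if min_mean(flipped) >= observed:
            exceed += 1
    return observed, (exceed + 1) / (n_perm + 1)


# Hypothetical voxel activated in both conditions by all 12 participants.
common = [[1.2, 0.9], [0.8, 1.1], [1.0, 0.7], [1.4, 1.0], [0.9, 0.8], [1.1, 1.3],
          [0.7, 0.9], [1.3, 1.2], [1.0, 1.0], [0.6, 0.8], [1.2, 1.1], [0.9, 0.7]]
# Hypothetical voxel activated in the first condition only (second averages to zero).
mixed = [[1.0, 1.0], [1.0, -1.0]] * 6

stat_common, p_common = conjunction_p(common)
stat_mixed, p_mixed = conjunction_p(mixed)
print(p_common < 0.05, p_mixed > 0.05)
```

A voxel passes the conjunction only if its weakest condition is reliably above zero, so strong activation in one condition cannot compensate for absent activation in another.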

Results

Behavioural results

Due to a technical fault, responses for the Man condition were not recorded for ten volunteers. Repeated-measures ANOVA for accuracy and reaction time, performed on the remaining three conditions, showed that participants responded accurately to the targets across the experimental conditions (mean percent correct: DM and EP = 100, SR = 94; F(2,24) = 3.097, p = 0.064). An ANOVA showed that response reaction time was significantly different across conditions (F(2,24) = 32.426, p < 0.001). Post-hoc pair-wise comparisons exploring the difference in reaction time showed that participants took longer to respond to targets in the EP condition than in the DM (p < 0.001) and SR (p = 0.001) conditions. Overall, participants performed well on the task, suggesting that they were attending to the stimuli¹.

Neuroimaging results

Speechreading (SR) vs. Baseline

Extensive activation was observed in frontotemporal cortices bilaterally including a large cluster of activation (916 voxels) with its focus in the left superior temporal gyrus (BA 42/41). This large cluster of activation extended inferiorly to the middle (BA 21) and inferior (BA 37, 19) temporal gyri and superiorly to the inferior portion of supramarginal gyrus (BA 40). Activation also extended to inferior (BA 44, 45) and middle (BA 6, 9) frontal gyri and precentral sulcus (BA 4). In the right hemisphere, a cluster of activation with its focus in the middle/superior temporal gyri (BA 21/22) extended to posterior inferior temporal gyrus (BA 37, 19) and to superior temporal gyrus (BA 22, 42, 41). Additional activation was observed in the right frontal cortex, focused in the precentral gyrus (BA 6). This cluster extended to the inferior (BA 44, 45) and middle (BA 46, 9 and inferior 8) frontal gyri. Finally, activation was also observed in the medial frontal cortex including medial frontal gyrus (BA 6, border of 8) and the dorsal anterior cingulate gyrus (BA 32) (see Table 1).

Table 1.

Activated regions for the perception of speech and sign compared to baseline (static model) in deaf native signers

| Region | Hemisphere | Size (voxels) | x, y, z | BA |
|---|---|---|---|---|
| Speechreading (SR) | | | | |
| Middle/Superior Temporal Gyrus | R | 246 | 51, −7, −3 | 21/22 |
| Superior Temporal Gyrus | L | 916 | −54, −22, 10 | 42/41 |
| Precentral Gyrus | R | 237 | 47, −4, 40 | 6 |
| Medial Frontal Lobe / Anterior Cingulate | L | 211 | −4, 15, 43 | 6/32 |
| Manual-Only (Man) | | | | |
| Posterior Inferior/Middle Temporal Gyrus | R | 332 | 43, −59, 0 | 37 |
| Inferior Temporal Gyrus | L | 395 | −47, −63, −7 | 37 |
| Precentral Sulcus / Middle Frontal Gyrus | R | 147 | 51, 0, 40 | 6/9 |
| Middle/Inferior Frontal Gyrus | L | 299 | −43, 15, 26 | 9/44 |
| Disambiguating Mouth (DM) | | | | |
| Posterior Inferior/Middle Temporal Gyrus | R | 206 | 43, −59, 0 | 37 |
| Precentral Gyrus | L | 340 | −43, −4, 46 | 4 |
| Echo Phonology (EP) | | | | |
| Post Inferior Temporal Gyrus | R | 355 | 43, −56, −7 | 37 |
| Middle Temporal Gyrus | L | 428 | −54, −37, 3 | 22 |
| Middle Frontal Gyrus | R | 220 | 47, 4, 43 | 6/9 |
| Inferior/Middle Frontal Gyri | L | 394 | −40, 11, 23 | 44/9 |
| Medial Premotor Cortex & SMA | L/R | 213 | 0, 0, 59 | 6 |

Voxel-wise p-value = 0.05, cluster-wise p-value = 0.0025. Foci correspond to the most activated voxel in each 3-D cluster.

Manual-Only Signs (Man) vs. Baseline

Activation was observed in a bilateral frontotemporal network. In temporal cortices, activation was focused in the posterior inferior temporal gyrus (BA 37) and extended to middle and posterior portions of middle (BA 21) and superior (BA 22, 42, 41) temporal gyri, and superiorly to supramarginal gyrus (BA 40). In the frontal cortex, activation was observed in the middle portion of the middle frontal gyrus (BA 6, 4, border of 9) bilaterally. In the left hemisphere, this cluster of activation extended rostrally to dorsolateral prefrontal cortex (BA 46) and inferiorly to Broca’s area (BA 44, 45), whereas in the right hemisphere, frontal activation extended posteriorly and medially to the border of the dorsal posterior cingulate gyrus (BA 31, 24/32 border).

Disambiguating Mouth Signs (DM) vs. Baseline

Activation was observed in left perisylvian regions and in the right temporal cortex. Activation in bilateral temporal cortex was focused in the inferior temporal gyri (BA 37, 19) and extended superiorly to middle and posterior portions of the middle (BA 21) and superior (BA 22, 42, 41) temporal gyri. In the left hemisphere, activation extended superiorly to include the inferior parietal lobule (BA 39, 40). Activation in the left frontal cortex was focused in the precentral gyrus (BA 4) and extended inferiorly to Broca’s area (BA 44, 45) and superiorly to dorsolateral prefrontal cortex (BA 46) and middle frontal gyrus (BA 6, 9, inferior 8).

Echo Phonology Signs (EP) vs. Baseline

Extensive activation was observed in bilateral fronto-temporal cortices. Activations in the frontal cortices were focused in the inferior/middle gyri (BA 44/9) in the left hemisphere and in the middle frontal gyrus (BA 6/9) in the right hemisphere. In both hemispheres, the frontal clusters included the inferior (BA 44/45), middle (BA 6, 9, 46) and precentral (BA 4) gyri. Bilateral activation within the posterior inferior temporal cortex (BA 19/37) extended superiorly to the middle and posterior portions of middle (BA 21) and superior (BA 22/42) temporal gyri and to the inferior portion of supramarginal gyrus (BA 40) of the left hemisphere. Additional activation was observed in superior medial frontal cortex including supplementary motor area (SMA) (BAs 9, 8 and 6). This cluster extended inferiorly to the border of dorsal anterior cingulate gyrus (BA 32).

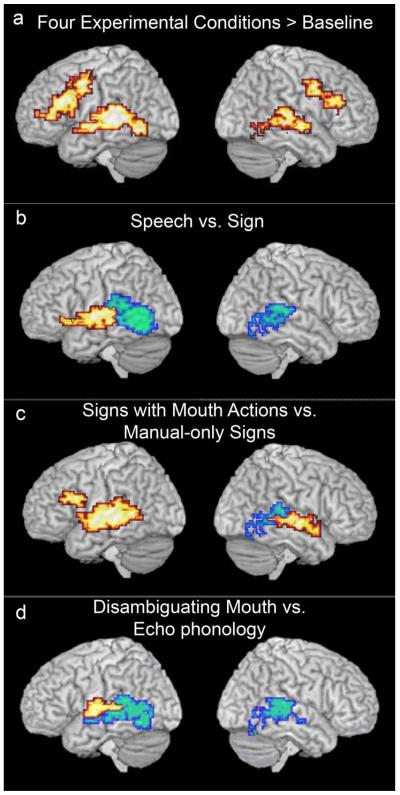

Regions common to the four linguistic conditions

The conjunction analysis (Figure 2a; Table 2) revealed that the four experimental conditions elicited greater activation in fronto-temporal cortices bilaterally than viewing the model at rest. Activation in the frontal cortices was focused in the middle frontal gyrus (BA 9/46) in the left hemisphere and in the precentral gyrus (BA 6) in the right hemisphere. In both hemispheres, the frontal activation included inferior (BA 44/45) and middle (BA 46, 6, 9, 4) frontal gyri. In the left hemisphere, activation extended ventrally to the border of lateral BA 10 and rostrally to posterior precentral gyrus. Activation in the temporal cortices was focused in the middle temporal gyri (BA 21) and extended superiorly to the middle and posterior portions of superior temporal gyrus (BA 22, 42) and the border of SMG (BA 40), and inferiorly to the posterior portion of inferior temporal gyrus (BA 19) including fusiform gyrus (BA 37).

Figure 2.

Regions showing significant activation a) across all four language conditions compared to the baseline condition and active regions for ANOVA contrasts: b) Speech (SR) greater than Signs (EP, DM & Man) are in red, Signs (EP, DM & Man) greater than Speech (SR) are in blue, c) Signs with mouth actions (EP, DM) greater than Manual-only (Man) signs are in red, Manual-only (Man) signs greater than Signs with mouth actions (EP, DM) are in blue, d) Disambiguating Mouth (DM) signs greater than Echo Phonology (EP) signs are in red, Echo Phonology (EP) signs greater than Disambiguating Mouth (DM) signs are in blue. (Voxel-wise p-value = 0.05, cluster-wise p-value = 0.01.) Activations are displayed up to 15 mm beneath the cortical surface.

Table 2.

Regions showing significant activation across all four language conditions (SR, Man, DM and EP)

| Region | Hemisphere | Size (voxels) | x, y, z | BA |
|---|---|---|---|---|
| Conjunction Analysis across 4 conditions (SR, Man, DM and EP) | | | | |
| Superior / Middle Temporal Gyrus | R | 231 | 43, −30, 0 | 22/21 |
| Middle Temporal Gyrus | L | 321 | −54, −37, 3 | 21 |
| Precentral Gyrus | R | 127 | 47, 0, 43 | 6 |
| Middle Frontal Gyrus | L | 317 | −43, 22, 26 | 9/46 |

Voxel-wise p-value = 0.05, cluster-wise p-value = 0.01. Foci correspond to the most activated voxel in each 3-D cluster.

Signs (EP, DM & Man) vs. Speechreading (SR)

Signs (EP, DM & Man) elicited greater activation in temporo-occipital cortices, bilaterally, than SR. Activation was focused in the posterior portions of inferior temporal gyri (BA 37) and extended to BA 19 and middle (BA 21) and superior temporal (BA 22, 42) gyri. In the left hemisphere, the activation extended superiorly to the supramarginal gyrus (BA 40) and the border of angular gyrus (BA 39). SR elicited greater activation in the left lateral transverse temporal gyrus (BA 41) than signs (EP, DM & Man). This cluster of activation extended to the middle portion of superior and middle temporal gyri (BA 22, 42 and 21, respectively), the inferior portion of precentral sulcus (BA 6) and the border of the pars orbitalis and pars triangularis of the inferior frontal cortex (BA 47, 45) (see Table 3, Figure 2b).

Signs with Mouth Actions (EP & DM) vs. Manual-Only Signs (Man)

Compared to Man, signs with mouth actions (EP & DM) elicited activation in the middle and posterior portions of the superior and middle temporal gyri (BA 42/22/41 and 21, respectively), which extended superiorly to the inferior portion of precentral gyrus (BA 6) in both hemispheres. In the left hemisphere, this cluster of activation extended posteriorly into SMG (BA 40). In the right hemisphere, the cluster of activation extended to the anterior portion of STG. In addition, signs with mouth actions also elicited activation in the left inferior (BA 44/45) and middle (BA 9/46/6) frontal gyri. Man, as compared to signs with mouth actions, elicited activation in the right occipito-temporal cortex, with its focus of activation in the fusiform gyrus (BA 37). The cluster of activation extended into the posterior portion of inferior (BA 19 border), middle (BA 21) and superior (BA 22/42) temporal gyri (see Table 3, Figure 2c).

Disambiguating Mouth (DM) vs. Echo Phonology (EP)

DM elicited greater activation in the left middle and posterior portions of the superior temporal gyrus (BA 22, 42, border of 41) than EP. This cluster of activation extended superiorly to the border of postcentral gyrus (BA 43) and inferiorly to the middle temporal gyrus (BA 21) and was focused in the STS (BA 22/21). EP elicited greater activation in bilateral posterior temporal cortices than DM. In the right hemisphere, the focus of activation was in the superior temporal gyrus (BA 22) and extended to BA 42, the middle and posterior portions of the middle temporal gyrus (BA 21) and the posterior inferior temporal gyrus (BA 19, 37). In the left hemisphere, the focus of activation was in the inferior temporal gyrus (BA 37) and extended to BA 19 and superiorly to the middle and posterior portions of the middle (BA 21) and superior (BA 22) temporal gyri and the angular gyrus (BA 39) (see Table 3, Figure 2d).

Discussion

Signed languages (SLs) offer a unique perspective on the relationship between the perception of language and its articulation, since the major articulators – that is the hands and the face – are displaced in space and are under somewhat independent cortical control. Perhaps surprisingly, eye-tracking studies report that signers fixate the face of the model, with gaze only rarely deviating to the hands (e.g., Muir & Richardson, 2005). The present study focused on the following questions: In deaf people who are skilled in both language types: (1) to what extent are English speechreading and BSL perception subserved by the same brain regions? (2) how do the patterns of activation elicited by sign and seen speech differ? (3) how are the patterns for SL processing affected by the number and type of articulators upon which signs rely? and (4) how is the cortical circuitry engaged by SL affected by mouth actions which are speech-like compared with those that are not speech-like? While answers to these questions will deepen knowledge of the cortical bases for signed language processing, their implications extend further. If sensitivity to the perception of particular articulators can be seen within as well as across the tested languages, that would suggest that the cortical basis for a language shows sensitivity to the perceived articulators that produce it – whatever form that language takes.

Similarities for sign and speech

There was extensive activation in perisylvian regions when watching either spoken words or signs, congruent with the interpretation that language systems were engaged in this task. In particular, the conjunction analysis (Figure 2a, Table 2) showed that the four linguistic conditions elicited activation in the frontal cortex, including the operculum, and in the superior temporal regions, bilaterally. Within the frontal cortex, extensive inferior and dorsolateral activation was observed, consistent with the findings of previous studies of signed (e.g., MacSweeney et al., 2002b; Newman et al., 2002; Petitto et al., 2000; Neville et al., 1998) and spoken (e.g., Schlosser et al., 1998; Blumstein, 1994) language processing. In addition, compared to watching a model at rest (baseline condition), several regions in these deaf native signers showed extensive activation whether seen speech or sign was presented. These included posterior regions including inferior temporal and fusiform regions, which have been reliably identified as sensitive to body, face and hand perception, and temporo-parieto-occipital regions sensitive to visual movement, especially biological movement (e.g., Puce & Perrett, 2003; Downing, Jiang, Shuman, & Kanwisher, 2001).

Speechreading vs. sign

While sign and speech elicited similar patterns of activation, these patterns were not identical; differences were focused primarily within the temporal lobe (Figure 2b). As predicted, sign activated more posterior and inferior regions of the temporal lobe, bilaterally, than speech (including p-STS and MT). This differential activation may reflect the greater motion processing demands of sign than speech and supports our previous findings (MacSweeney et al., 2002b) and those of Söderfeldt (1997). In contrast, speech activated anterior and superior regions of the temporal cortices to a greater extent than sign (including mid-STS). The fact that this included the analogue of auditory cortices in deaf participants is discussed in greater detail below. It should also be noted that this differential activation, for speech greater than sign, was located only in the left hemisphere. Previous studies comparing deaf and hearing participants found greater activation in superior temporal regions for (audio-visual) speech than sign bilaterally (MacSweeney et al., 2002b). The fact that speechreading > sign differences were left lateralised in the current study while sign > speech differences were bilateral may lend support to Neville et al.’s suggestion that there may be greater recruitment of the right hemisphere for SL processing than for the processing of spoken language (e.g., Bavelier, Corina, Jezzard, Clark, Karni et al., 1998; Neville et al., 1998), at least in deaf bilinguals who use both language forms. However, taken together with previous findings these data also highlight that differences in laterality observed between signed and spoken languages may depend on the groups (e.g., deaf native signers/ hearing native signers/ deaf vs. hearing non-signers) and conditions (e.g., audiovisual speech/ silent speechreading) tested.

The present data show clearly that SL processing recruits posterior temporal regions of both hemispheres to a greater extent than silent speechreading, under similar processing conditions. This could reflect the contribution of observed manual actions independent of language knowledge (we did not include a non-linguistic manual control condition in this study) or sensitivity to these visual events that is intrinsic to SL perception. Interestingly, when the same material was presented to hearing non-signers (not reported here), the only region which was activated more in non-signers than deaf signers was in the left occipito-temporo-parietal boundary. However, under those conditions, differential activation was not as extensive as that reported here for the SL/speechreading contrast in deaf native signers. In particular, only the most inferior, posterior parts of the temporo-occipital cortex were activated (hearing > deaf). We therefore infer that while some of the activation in these posterior temporal regions reflects ‘purely’ visual processing of seen manual actions, some of it does not, and is probably intrinsic to SL processing. Further findings relevant to this interpretation are indicated below, where contrasts within the SL are explored.

Signs with mouth actions vs. manual-only signs

Concerning the speechreading/SL comparisons, an argument could be mounted that these deaf children of deaf parents learned speechreading later and less efficiently than their native SL. That is, the differential patterns of activation might reflect properties of later compared with earlier language learning, rather than properties relating to the perceptibility of the articulators. This argument could be addressed by reference to studies of second (spoken) language processing. The regions identified as more active for speechreading than for SL processing do coincide with some of those which are more active for the later learned language in hearing speakers (for a review, see Perani & Abutalebi, 2005). For example, perisylvian activation can be greater for translation into the second language from the first than vice-versa (Klein, Zatorre, Chen, Milner, Crane et al., 2006). However, those studies tend to find far more extensive activation (2nd > 1st language) in inferior frontal regions than we observed in our study. Moreover, we can address this question directly through the contrasts internal to SL processing. We compared signs within BSL that rely solely on their manual patterning for lexical processing (Man) with those that used both hands and mouth actions (DM and EP). In the absence of data concerning the developmental course of acquisition of these different signs, we cannot rule out the possibility that the distinctive contrastive patterns reflected factors other than the sight of different articulators – for example, that signs with mouth actions are acquired later than signs with no mouth actions (see Footnote 2). However, the data are compelling when viewed together with those that contrasted speechreading and SL. Signs which used mouth actions generated greater activation than manual-only signs in superior temporal sulci of both hemispheres. Additional activation was observed in the left inferior frontal gyrus. Thus, a plausible interpretation of this pattern is that these classical language regions of the perisylvian cortex are sensitive to the type and number of perceived articulators, when mouth actions accompany manual ones. In contrast, regions activated more by manual alone (Man) than manual-with-mouth actions included the right posterior temporo-occipital boundary, suggesting that, when a signed language is being processed, this region is specialized for the perception of hand actions, quite specifically. This is consistent with previous observations showing this region’s involvement in the perception of non-linguistic manual movements (Pelphrey et al., 2005), but extends it to SL. Crucially, this was not due to deactivation in this region when mouth actions are available.

Different types of mouth action within signed language

The contrast between mouthings (DM) and mouth gestures (EP) provides us with information concerning the nature of the mouth movements themselves, and their role within SL processing. Differential activation between these conditions was confined to temporal regions. Moreover, the pattern observed reiterates that above – speech-derived mouthings (DM) generated relatively greater activation in a somewhat circumscribed region of the left middle and posterior portions of the superior temporal gyrus, while for mouth gestures (EP), which are not speech-derived, there was relatively greater posterior activation in both hemispheres. This provides a reasonable cortical correlate of the proposal that, for mouth gestures, “the hands are the head of the mouth” (Boyes Braem & Sutton-Spence, 2001), as proposed by Woll (2001). While mouth actions can be of many different sorts, DM and EP show systematic differences in terms of their functional cortical correlates; DM resembles speechreading more closely, while EP resembles manual-only signs.

Implications for understanding the cortical bases of natural language processing

This study has found evidence for a common substrate for the perception of two very different languages – BSL and English (via speechreading). These regions are essentially perisylvian, although additional regions, including middle frontal and posterior temporo-parieto-occipital were also activated across all four experimental conditions, when compared with a baseline condition comprising a still head and torso of the model. However, we have also identified neural regions that are sensitive, firstly, to the language (speech or sign) presented to the deaf participants, and secondly, to articulatory patterns within SL. No studies of spoken language can offer analogues to these findings, since spoken languages use a single articulatory system, in contrast to SLs, which use manual and non-manual actions systematically and synthetically.

With respect to the language type distinction, we have shown that speechreading and SL processing rely differentially on different parts of the perisylvian system, with left inferior frontal and superior temporal activation dominant for speech, and posterior superior, middle and inferior temporal activation dominant for sign. Could the circuitry for speechreading – in particular, the finding that silent speechreading elicited activation in superior temporal cortex, including Heschl’s gyrus – reflect associations of seen speech with rudimentary hearing in these deaf speechreaders? It has been proposed that, in hearing people, superior temporal regions are specifically activated by speechreading because speech is generally both seen and heard. That is, p-STS serves as a ‘binding site’ (Calvert, Campbell, & Brammer, 2000) for such long-term and reliable associations. Since our participants had some experience using hearing aids, a level of potentially functional (aided) hearing has been available to them. However, it seems unlikely that the extensive activation in STS reflected sound-vision associations alone. Even if activation in superior temporal regions reflected some remnants of a circuit that was originally driven by auditory speech, this is unlikely to account for the mouth/hand distinctions that were observed within SL, experienced without systematic vocalization. In viewing SL, manual actions that required mouth movements could be distinguished from those that did not. Regions that showed relatively more activation for mouth movements (whether in BSL, where they accompanied manual actions, or in speech), may be specialized for the perception of mouth actions. This would hold whether the mouth actions are only seen or heard-and-seen and whether they are accompanied by manual actions (as they are in BSL) or not. It is well established that STS is activated in hearing people while observing mouth opening and closing (Puce, Allison, Bentin, Gore, & McCarthy, 1998).

The final contrast, showing that DM signs elicited greater activation in left mid-superior temporal gyrus than EP, suggests that this region is particularly involved in processing mouth actions that are speech-like in form, that is, mouth actions comprising regular opening and closing, with changes in shape and some variation in the place of articulation of visible articulators. It is possible that this activation reflects associations, albeit fairly unreliable and fragmentary ones, between these mouth movements and their corresponding speech sounds, since DM mouth movements typically resemble the phonemes of the sign’s English translations (see Footnote 3). Another (not exclusive) possibility is that attention may need to be directed to the mouth for it to generate greater activation in these regions: it has been noted that gaze is usually directed to the face in the perception of connected sign discourse (e.g., Muir & Richardson, 2005). In the processing of the material in the present study we have no information on gaze or attention allocation, but it is likely that attention was focused more closely on the mouth in the SR and DM conditions. However, a final plausible interpretation for the similar activation for SR and DM is that in processing DM signs, deaf participants may use language mixing (speechread English and BSL). Interestingly, the middle portion of the superior temporal gyrus was not differentially activated when DM and SR were contrasted (not reported here).

Hands and mouths: some general considerations

Perhaps the most impressive aspect of these findings is that they show internal consistency across the different contrasts. For example, greater activation was observed at the temporo-parieto-occipital boundary for sign than speech, for manual-only signs than signs with mouth actions, and for signs with echo phonology than signs with disambiguating mouth actions. That is, in each of these contrasts, the more active region was that which was more involved in processing hand movements than mouth movements. These regions are specifically activated in the perception of simple hand gestures (Pelphrey et al., 2005). Neuroimaging studies of apraxic patients are consistent with this observation. For example, Goldenberg and Karnath (2006) found lesions in this region in patients whose hand posture imitations were poorer than their imitations of finger actions. These authors suggest that this region is implicated in the perception of biological actions that require discrimination of the action in relation to a specific spatial framework (i.e., the body), and that this may be contrasted with other biological actions (in their study, finger imitation) where discrimination may be organized in terms of number or seriality of body parts (i.e., how many fingers are seen, in what order – the hand is the spatial framework and is unchanging).

This insight can be extended to the contrasts explored here. Watching speech differs from watching sign in that, for speech, the spatial framework is fixed: the visible mouth boundaries constrain the actions of the tongue, teeth and lips that can be identified. By contrast, in manual signs, both hand shape and orientation vary quite freely, and both vary with respect to position (head, face, trunk, left or right). A similar proposal can be mounted with respect to the dynamics of speech and sign. Speech movements are seen in relation to the head, and have relatively few degrees of perceptible freedom of action; sign actions vary in speed and manner, may engage one or both hands and elements of the head, face and trunk, and have altogether more degrees of freedom. Such general principles may help to determine why these regions come to have their particular specializations.

To conclude, this study has shown that the cortical organization for language processing can be differentially and systematically sensitive to the perception of different articulators that deliver language. Oral actions processed by eye generate relatively greater activation in the middle portion of the superior temporal cortex, whereas manual actions rely on more posterior and inferior parts of the lateral temporal cortex. This pattern was observed between languages (spoken English and BSL) and also within the (signed) language of deaf participants. This suggests that the perception of a linguistic utterance ‘shows its roots’ in terms of the recruitment of regions specialized for the perception of specific articulators.

Table 3.

Regions showing significant activation for planned comparisons (ANOVAs)

| Region | Hemisphere | Size (voxels) | x, y, z | BA |
|---|---|---|---|---|
| Signs vs. Speechreading (SR) | | | | |
| Signs > SR | | | | |
| Inferior Temporal Gyrus | R | 236 | 43, −63, 0 | 37 |
| Inferior Temporal Gyrus | L | 329 | −47, −63, −7 | 37 |
| SR > Signs | | | | |
| Transverse Temporal Gyrus | L | 188 | −54, −15, 7 | 41 |
| Signs with Mouth Actions vs. Manual-only signs (Man) | | | | |
| Signs with Mouth Actions > Man | | | | |
| Middle Temporal Gyrus | R | 156 | 54, −15, −7 | 21 |
| Superior Temporal Gyrus | L | 341 | −54, −22, 10 | 42 |
| Inferior Frontal Gyrus | L | 83 | −40, 11, 23 | 44 |
| Man > Signs with Mouth Actions | | | | |
| Fusiform Gyrus | R | 147 | 43, −52, −10 | 37 |
| Disambiguating Mouth Signs vs. Echo Phonology Signs | | | | |
| DM > EP | | | | |
| Superior/Middle Temporal Gyrus | L | 110 | −54, −11, 0 | 22/21 |
| EP > DM | | | | |
| Superior Temporal Gyrus | R | 290 | 47, −37, 7 | 22 |
| Inferior Temporal Gyrus | L | 239 | −47, −59, −10 | 37 |

Voxel-wise p-value = 0.05, cluster-wise p-value = 0.01. Foci correspond to the most activated voxel in each 3-D cluster. (Signs = three SL conditions (Man, DM and EP), Signs with Mouth Actions = DM and EP).

Acknowledgements

This work was supported by the Wellcome Trust (068607/Z/02/Z; including a Career Development Fellowship supporting M.M.) and the Economic and Social Research Council of Great Britain (RES-620-28-6001) (B.W. and R.C.). We thank Jordan Fenlon, Tyron Woolfe, Mark Seal, Cathie Green, Zoë Hunter, Maartje Kouwenberg and Karine Gazarian for their assistance. We also thank all of the volunteers for their participation.

Footnotes

1. Nevertheless, since we did not test their lexical processing directly in the scanner, a behavioral post-test, using different volunteers, was run to determine the depth of processing of these items. In that study, four deaf signers (including three native signers), who were not scanned, viewed the stimuli used in the present study while performing the same target detection task. Following a 10-minute delay, in which they performed a slider puzzle, they were given a surprise recognition test in which they viewed the familiar stimuli randomly mixed with stimuli they had not seen. Four hearing non-signers, matched for age, education and gender, were given the same task. The deaf native signers were better than hearing sign-naïve participants at correctly identifying whether a sign was seen in the previous presentation or not (mean d’ for deaf signers = 2.26, for hearing non-signers = 0.92; t(6) = 4.63, p = 0.004). From this, we infer that the deaf native signers who participated in the imaging study were processing the signed material to a linguistically meaningful level.

2. As noted in the Methods section, the EP condition included items whose linguistic properties were not as closely matched as those of the other groups. In particular, some EP items could be glossed not only as single words, but had multiple meanings. Could this have accounted for some part of the patterns observed when EP items were included in various contrasts? We re-analyzed both the speech vs. sign and the manual-alone vs. manual-with-mouth contrasts, but this time excluding EP. The outcomes of both analyses did not differ in any significant way from those reported here for the analyses which included EP. We conclude therefore that word-class differences could not account for the effects reported in this study.

3. We thank one of the Reviewers for this suggestion.

References

- Atkinson J, Marshall J, Woll B, Thacker A. Testing comprehension abilities in users of British Sign Language following CVA. Brain & Language. 2005;94:233–248. doi: 10.1016/j.bandl.2004.12.008.

- Bavelier D, Corina D, Jezzard P, Clark V, Karni A, Lalwani A, et al. Hemispheric specialization for English and ASL: Left invariance right variability. NeuroReport. 1998;9:1537–1542. doi: 10.1097/00001756-199805110-00054.

- Blumstein SE. Impairments of speech production and speech perception in aphasia. Philosophical Transactions of the Royal Society of London. Series B, Biological Sciences. 1994;346:29–36. doi: 10.1098/rstb.1994.0125.

- Boyes Braem P, Sutton-Spence R, editors. The hands are the head of the mouth: The mouth as articulator in sign language. Signum; Hamburg: 2001.

- Brammer MJ, Bullmore ET, Simmons A, Williams SC, Grasby PM, Howard RJ, et al. Generic brain activation mapping in functional magnetic resonance imaging: A nonparametric approach. Magnetic Resonance Imaging. 1997;15:763–770. doi: 10.1016/s0730-725x(97)00135-5.

- Brentari D. A Prosodic Model of Sign Language Phonology. MIT Press; Cambridge, MA: 1998.

- Bullmore ET, Brammer M, Williams SC, Rabe-Hesketh S, Janot N, David A, et al. Statistical methods of estimation and inference for functional MR image analysis. Magnetic Resonance in Medicine. 1996;35:261–277. doi: 10.1002/mrm.1910350219.

- Bullmore ET, Long C, Suckling J, Fadili J, Calvert G, Zelaya F, et al. Colored noise and computational inference in neurophysiological (fMRI) time series analysis: Resampling methods in time and wavelet domains. Human Brain Mapping. 2001;12:61–78. doi: 10.1002/1097-0193(200102)12:2<61::AID-HBM1004>3.0.CO;2-W.

- Bullmore ET, Suckling J, Overmeyer S, Rabe-Hesketh S, Taylor E, Brammer MJ. Global, voxel, and cluster tests, by theory and permutation, for a difference between two groups of structural MR images of the brain. IEEE Transactions on Medical Imaging. 1999;18:32–42. doi: 10.1109/42.750253.

- Calvert GA, Bullmore ET, Brammer MJ, Campbell R, Williams SCR, McGuire PK, et al. Activation of auditory cortex during silent lipreading. Science. 1997;276:593–596. doi: 10.1126/science.276.5312.593.

- Calvert GA, Campbell R, Brammer MJ. Evidence from functional magnetic resonance imaging of crossmodal binding in the human heteromodal cortex. Current Biology. 2000;10:649–657. doi: 10.1016/s0960-9822(00)00513-3.

- Campbell R, MacSweeney M, Surguladze S, Calvert G, McGuire P, Suckling J, et al. Cortical substrates for the perception of face actions: An fMRI study of the specificity of activation for seen speech and for meaningless lower-face acts (gurning). Brain Research. Cognitive Brain Research. 2001;12:233–243. doi: 10.1016/s0926-6410(01)00054-4.

- Capek CM, Bavelier D, Corina D, Newman AJ, Jezzard P, Neville HJ. The cortical organization of audio-visual sentence comprehension: An fMRI study at 4 Tesla. Brain Research. Cognitive Brain Research. 2004;20:111–119. doi: 10.1016/j.cogbrainres.2003.10.014.

- Davis MH, Johnsrude IS. Hierarchical processing in spoken language comprehension. Journal of Neuroscience. 2003;23:3423–3431. doi: 10.1523/JNEUROSCI.23-08-03423.2003.

- Donoho DL, Johnstone IM. Ideal spatial adaptation by wavelet shrinkage. Biometrika. 1994;81:425–455.

- Downing PE, Jiang Y, Shuman M, Kanwisher N. A cortical area selective for visual processing of the human body. Science. 2001;293:2470–2473. doi: 10.1126/science.1063414.

- Emmorey K. Language, cognition, and the brain: Insights from sign language research. Lawrence Erlbaum Associates, Inc., Publishers; Mahwah, NJ, US: 2002.

- Friman O, Borga M, Lundberg P, Knutsson H. Adaptive analysis of fMRI data. NeuroImage. 2003;19:837–845. doi: 10.1016/s1053-8119(03)00077-6.

- Friston KJ, Josephs O, Rees G, Turner R. Nonlinear event-related responses in fMRI. Magnetic Resonance in Medicine. 1998;39:41–52. doi: 10.1002/mrm.1910390109.

- Goldenberg G, Karnath HO. The neural basis of imitation is body part specific. Journal of Neuroscience. 2006;26:6282–6287. doi: 10.1523/JNEUROSCI.0638-06.2006.

- Hockett CF. The origin of speech. Scientific American. 1960;203:89–96.

- Johnston T. Sign Language: Morphology. In: Brown K, editor. Encyclopedia of Language & Linguistics. Second ed. Vol. 11. University of Cambridge; Cambridge, UK: 2005. pp. 324–328.

- Klein D, Zatorre RJ, Chen JK, Milner B, Crane J, Belin P, et al. Bilingual brain organization: a functional magnetic resonance adaptation study. NeuroImage. 2006;31:366–375. doi: 10.1016/j.neuroimage.2005.12.012.

- Klima E, Bellugi U. The signs of language. Harvard University Press; Cambridge: 1979.

- Lambertz N, Gizewski ER, de Greiff A, Forsting M. Cross-modal plasticity in deaf subjects dependent on the extent of hearing loss. Brain Research. Cognitive Brain Research. 2005;25:884–890. doi: 10.1016/j.cogbrainres.2005.09.010.

- Liddell SK. Nonmanual signs and relative clauses in American Sign Language. In: Siple P, editor. Understanding Language through Sign Language Research. Academic Press; New York, NY: 1978. pp. 59–90. (Perspectives in Neurolinguistics and Psycholinguistics).

- MacSweeney M, Calvert GA, Campbell R, McGuire PK, David AS, Williams SC, et al. Speechreading circuits in people born deaf. Neuropsychologia. 2002a;40:801–807. doi: 10.1016/s0028-3932(01)00180-4.

- MacSweeney M, Woll B, Campbell R, McGuire PK, David AS, Williams SCR, et al. Neural systems underlying British Sign Language and audio-visual English processing in native users. Brain. 2002b;125:1583–1593. doi: 10.1093/brain/awf153.

- McCullough S, Emmorey K, Sereno M. Neural organization for recognition of grammatical and emotional facial expressions in deaf ASL signers and hearing nonsigners. Brain Research. Cognitive Brain Research. 2005;22:193–203. doi: 10.1016/j.cogbrainres.2004.08.012.

- Mohammed T, MacSweeney M, Campbell R. Developing the TAS: Individual differences in silent speechreading, reading and phonological awareness in deaf and hearing speechreaders. Paper presented at the Auditory-Visual Speech Processing (AVSP); St Jorioz, France. 2003.

- Muir LJ, Richardson IE. Perception of sign language and its application to visual communications for deaf people. Journal of Deaf Studies and Deaf Education. 2005;10:390–401. doi: 10.1093/deafed/eni037.

- Neville HJ, Bavelier D, Corina D, Rauschecker J, Karni A, Lalwani A, et al. Cerebral organization for language in deaf and hearing subjects: Biological constraints and effects of experience. Proceedings of the National Academy of Sciences of the United States of America. 1998;95:922–929. doi: 10.1073/pnas.95.3.922.

- Newman AJ, Bavelier D, Corina D, Jezzard P, Neville HJ. A critical period for right hemisphere recruitment in American Sign Language processing. Nature Neuroscience. 2002;5:76–80. doi: 10.1038/nn775.

- Pekkola J, Ojanen V, Autti T, Jaaskelainen IP, Mottonen R, Tarkiainen A, et al. Primary auditory cortex activation by visual speech: an fMRI study at 3 T. NeuroReport. 2005;16:125–128. doi: 10.1097/00001756-200502080-00010.

- Pelphrey KA, Morris JP, Michelich CR, Allison T, McCarthy G. Functional anatomy of biological motion perception in posterior temporal cortex: An FMRI study of eye, mouth and hand movements. Cerebral Cortex. 2005;15:1866–1876. doi: 10.1093/cercor/bhi064.

- Perani D, Abutalebi J. The neural basis of first and second language processing. Current Opinion in Neurobiology. 2005;15:202–206. doi: 10.1016/j.conb.2005.03.007.

- Petitto LA, Zatorre RJ, Gauna K, Nikelski EJ, Dostie D, Evans AC. Speech-like cerebral activity in profoundly deaf people while processing signed languages: Implications for the neural basis of all human language. Proceedings of the National Academy of Sciences of the United States of America. 2000;97:13961–13966. doi: 10.1073/pnas.97.25.13961.

- Poizner H, Klima ES, Bellugi U. What the hands reveal about the brain. MIT Press; Cambridge, MA: 1987.

- Puce A, Allison T, Bentin S, Gore JC, McCarthy G. Temporal cortex activation in humans viewing eye and mouth movements. Journal of Neuroscience. 1998;18:2188–2199. doi: 10.1523/JNEUROSCI.18-06-02188.1998.

- Puce A, Perrett D. Electrophysiology and brain imaging of biological motion. Philosophical Transactions of the Royal Society of London. Series B, Biological Sciences. 2003;358:435–445. doi: 10.1098/rstb.2002.1221.

- Rauschecker JP. Cortical processing of complex sounds. Current Opinion in Neurobiology. 1998;8:516–521. doi: 10.1016/s0959-4388(98)80040-8.

- Sakai KL, Tatsuno Y, Suzuki K, Kimura H, Ichida Y. Sign and speech: Amodal commonality in left hemisphere dominance for comprehension of sentences. Brain. 2005;128:1407–1417. doi: 10.1093/brain/awh465.

- Sandler W, Lillo-Martin D. Natural Sign Languages. In: Aronoff M, Rees-Miller J, editors. The Handbook of Linguistics. Blackwell; Malden, MA: 2001. pp. 533–562.

- Schlosser MJ, Aoyagi N, Fulbright RK, Gore JC, McCarthy G. Functional MRI studies of auditory comprehension. Human Brain Mapping. 1998;6:1–13. doi: 10.1002/(SICI)1097-0193(1998)6:1<1::AID-HBM1>3.0.CO;2-7.

- Schwartz J-L, Robert-Ribes J, Escudier P. Ten years after Summerfield: a taxonomy of models for audio-visual fusion in speech perception. In: Campbell R, Dodd B, Burnham D, editors. Hearing by eye II: Advances in the psychology of speechreading and auditory-visual speech. Psychology Press Ltd; Hove, UK: 1998. pp. 85–109.

- Söderfeldt B, Ingvar M, Ronnberg J, Eriksson L, Serrander M, Stone-Elander S. Signed and spoken language perception studied by positron emission tomography. Neurology. 1997;49:82–87. doi: 10.1212/wnl.49.1.82.

- Söderfeldt B, Ronnberg J, Risberg J. Regional cerebral blood flow in sign language users. Brain & Language. 1994;46:59–68. doi: 10.1006/brln.1994.1004.

- Stokoe WC. Sign language structure: An outline of the visual communication systems of the American deaf. University of Buffalo; Buffalo, NY: 1960. p. 78. (Studies in Linguistics: Occasional Papers Vol. 8).

- Sutton-Spence R, Woll B. The Linguistics of British Sign Language. Cambridge University Press; Cambridge, UK: 1999.

- Talairach J, Tournoux P. Co-planar stereotaxic atlas of the human brain. Rayport M, translator. Thieme Medical Publishers, Inc; New York: 1988.

- Woll B. The sign that dares to speak its name: Echo phonology in British Sign Language (BSL). In: Boyes Braem P, Sutton-Spence R, editors. The hands are the head of the mouth: The mouth as articulator in sign language. Signum; Hamburg: 2001. pp. 87–98.